
- Number of slides: 18

DØ Grid Computing

Gavin Davies, Frédéric Villeneuve-Séguier, Imperial College London, on behalf of the DØ Collaboration and the SAMGrid team

The 2007 Europhysics Conference on High Energy Physics, Manchester, England, 19–25 July 2007

EPS-HEP 2007 Manchester


Outline
- Introduction
  - DØ Computing Model
  - SAMGrid Components
  - Interoperability
- Activities
  - Monte Carlo Generation
  - Data Processing
- Conclusion
  - Next Steps / Issues
  - Summary


Introduction
- Tevatron
  - Running experiments (less data than the LHC, but still PBs per experiment)
  - Growing: great physics, and better still to come
    - Have >3 fb⁻¹ of data and expect up to 5 fb⁻¹ more by the end of 2009
- Computing model: data grid (SAM) for all data handling, originally with distributed computing, evolving to automated use of common tools/solutions on the grid (SAMGrid) for all tasks
  - Started with production tasks, e.g. MC generation and data processing
    - Greatest need and easiest to ‘gridify’: ahead of the wave, and a running experiment
  - Based on SAMGrid, but with a programme of interoperability from very early on
    - Initially LCG, then OSG
  - Increased automation; user analysis considered last
    - SAM gives remote data analysis


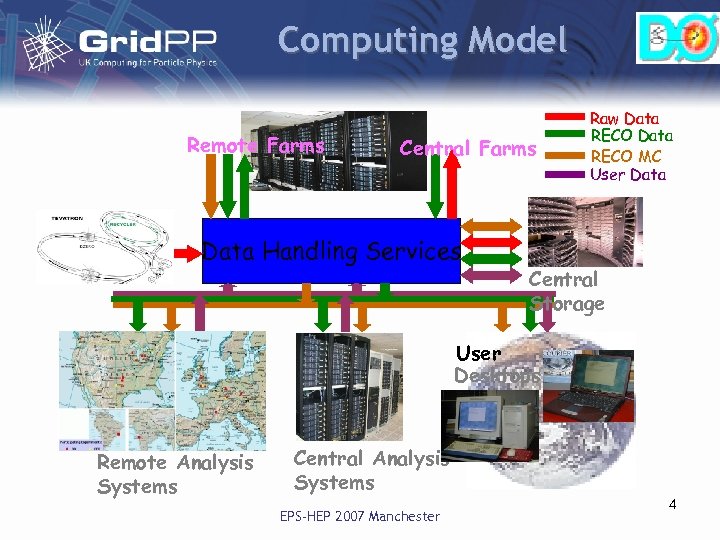

Computing Model

(Diagram. Components: Central Storage, Data Handling Services, Central Farms, Remote Farms, Central Analysis Systems, Remote Analysis Systems, User Desktops. Data types: Raw Data, RECO, MC, User Data.)


Components: Terminology
- SAM (Sequential Access via Metadata)
  - Well-developed metadata and distributed data replication system
  - Originally developed by DØ and FNAL-CD, now also used by CDF and MINOS
- JIM (Job Information and Monitoring)
  - Handles job submission and monitoring (everything except data handling)
  - SAM + JIM → SAMGrid, a computational grid
- Runjob: handles job workflow management
- Automation: d0repro tools, automc
- (UK role: project leadership, key technology and operations)
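
The SAM/JIM division of labour can be illustrated with a toy model. This is a minimal sketch under stated assumptions and does not reproduce the real SAM or JIM interfaces. `ToyCatalog`, `ToyJobManager`, `samgrid_submit`, the metadata keys and the site names are all hypothetical. The catalogue selects files by metadata and records where replicas live (SAM's role). The job manager only submits and tracks jobs (JIM's role). The helper combines the two, mirroring SAM + JIM → SAMGrid.

```python
# Toy model of the SAM / JIM split. All names are hypothetical illustrations;
# this is not the real SAM or JIM interface.
from dataclasses import dataclass
from itertools import count


@dataclass
class FileRecord:
    name: str
    metadata: dict


class ToyCatalog:
    """SAM-like role: select files by metadata and track where replicas live."""

    def __init__(self) -> None:
        self.files: list[FileRecord] = []
        self.replicas: dict[str, set[str]] = {}

    def declare(self, record: FileRecord, site: str) -> None:
        self.files.append(record)
        self.replicas.setdefault(record.name, set()).add(site)

    def select(self, **criteria) -> list[str]:
        # A file matches when every requested metadata key has the requested value.
        return [f.name for f in self.files
                if all(f.metadata.get(k) == v for k, v in criteria.items())]

    def deliver(self, names: list[str], site: str) -> None:
        # Replication step: ensure each selected file has a copy at the site.
        for name in names:
            self.replicas[name].add(site)


@dataclass
class Job:
    job_id: int
    site: str
    files: list[str]
    status: str = "submitted"


class ToyJobManager:
    """JIM-like role: job submission and monitoring, no data handling."""

    def __init__(self) -> None:
        self.jobs: dict[int, Job] = {}
        self._ids = count(1)

    def submit(self, site: str, files: list[str]) -> Job:
        job = Job(next(self._ids), site, files)
        self.jobs[job.job_id] = job
        return job

    def update(self, job_id: int, status: str) -> None:
        self.jobs[job_id].status = status


def samgrid_submit(catalog: ToyCatalog, jim: ToyJobManager, site: str, **criteria) -> Job:
    """SAM + JIM: resolve the dataset from metadata, stage it, then submit."""
    files = catalog.select(**criteria)
    catalog.deliver(files, site)
    return jim.submit(site, files)


# Usage with made-up files and site names.
catalog = ToyCatalog()
catalog.declare(FileRecord("raw_001", {"tier": "raw", "run": 1}), "site_a")
catalog.declare(FileRecord("raw_002", {"tier": "raw", "run": 2}), "site_a")
catalog.declare(FileRecord("mc_001", {"tier": "mc", "run": 1}), "site_b")

jim = ToyJobManager()
job = samgrid_submit(catalog, jim, "site_c", tier="raw")
jim.update(job.job_id, "running")
print(job)
```

Keeping the two roles in separate objects mirrors the slide's point that JIM handles "all but data handling". In this sketch, only the catalogue ever touches file locations.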


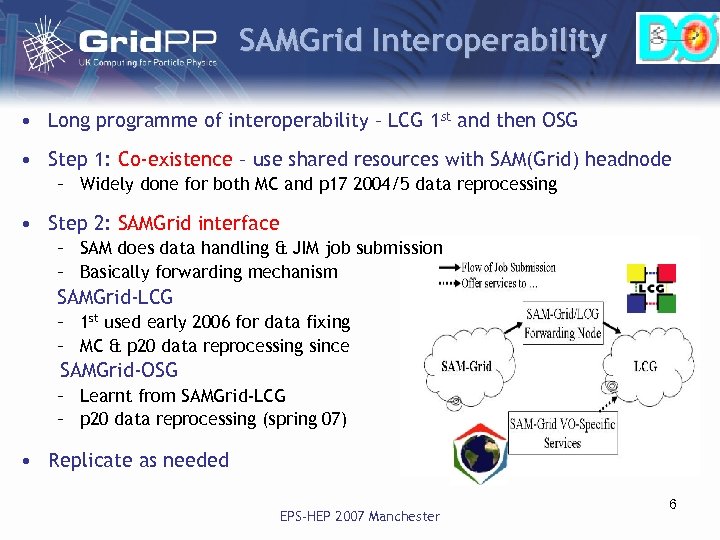

SAMGrid Interoperability
- Long programme of interoperability: LCG first, then OSG
- Step 1: Co-existence
  - Use shared resources with a SAM(Grid) head node
  - Widely done for both MC and the p17 2004/5 data reprocessing
- Step 2: SAMGrid interface
  - SAM does data handling and JIM does job submission
  - Basically a forwarding mechanism
  - SAMGrid-LCG
    - First used in early 2006 for data fixing
    - MC and p20 data reprocessing since then
  - SAMGrid-OSG
    - Learnt from SAMGrid-LCG
    - p20 data reprocessing (spring 2007)
- Replicate as needed
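
The Step 2 forwarding mechanism can be pictured as a node that receives SAMGrid jobs and re-submits each one to the grid it targets, while data handling stays with SAM. The Python below is a hypothetical sketch of that routing idea only. `ForwardingNode`, `Backend`, `GridJob` and the dataset names are invented for illustration and do not reflect the real SAMGrid, LCG or OSG middleware.

```python
# Toy forwarding node: accepts SAMGrid-style jobs and re-submits each one to
# the grid it targets. Names are hypothetical; real forwarding goes through
# grid middleware, not Python objects.
from dataclasses import dataclass


@dataclass
class GridJob:
    job_id: int
    target_grid: str  # e.g. "LCG" or "OSG"
    dataset: str      # files still come from SAM; only the name is modelled here


class Backend:
    """Stand-in for a foreign grid's job submission interface."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.accepted: list[int] = []

    def submit(self, job: GridJob) -> str:
        self.accepted.append(job.job_id)
        return f"{self.name}-{job.job_id}"


class ForwardingNode:
    """Routes each job to its target grid and remembers the remote job id."""

    def __init__(self, backends: dict[str, Backend]) -> None:
        self.backends = backends
        self.forwarded: dict[int, str] = {}

    def forward(self, job: GridJob) -> str:
        backend = self.backends.get(job.target_grid)
        if backend is None:
            raise ValueError(f"no forwarding route to {job.target_grid!r}")
        remote_id = backend.submit(job)
        self.forwarded[job.job_id] = remote_id
        return remote_id


# Usage with made-up dataset names.
node = ForwardingNode({"LCG": Backend("LCG"), "OSG": Backend("OSG")})
node.forward(GridJob(1, "LCG", "mc_request_a"))
node.forward(GridJob(2, "OSG", "p20_reprocessing_b"))
print(node.forwarded)  # → {1: 'LCG-1', 2: 'OSG-2'}
```

The `forwarded` map stands in for the bookkeeping that would let a SAMGrid job be followed after it has been handed to another grid.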


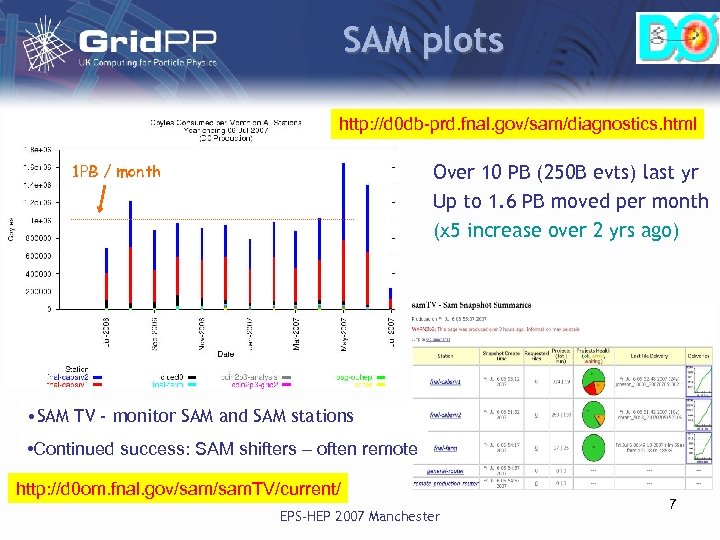

SAM plots (http://d0db-prd.fnal.gov/sam/diagnostics.html)
- Plot annotation: 1 PB/month
- Over 10 PB (250B events) last year
- Up to 1.6 PB moved per month (a ×5 increase over two years ago)
- SAM TV: monitors SAM and SAM stations (http://d0om.fnal.gov/sam.TV/current/)
- Continued success: SAM shifters, often remote
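
The headline SAM numbers imply a couple of derived figures. The small Python check below assumes decimal units (1 PB = 10^15 bytes) and takes "over 10 PB" and "250B events" at face value. The per-event size is therefore only a rough average over everything SAM delivered.

```python
# Back-of-envelope arithmetic on the SAM data-movement figures.
# Assumes decimal units and treats the slide's round numbers as exact,
# so results are rough estimates only.
PB = 10**15

volume_last_year = 10 * PB          # "over 10 PB" last year
events_last_year = 250 * 10**9      # "250B events"

avg_bytes_per_event = volume_last_year / events_last_year
print(f"average size per delivered event ~ {avg_bytes_per_event / 1e3:.0f} kB")

peak_monthly_now = 1.6 * PB         # "up to 1.6 PB moved per month"
growth_factor = 5                   # "x5 increase over 2 yrs ago"
peak_monthly_then = peak_monthly_now / growth_factor
print(f"implied monthly peak two years earlier ~ {peak_monthly_then / PB:.2f} PB")
```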


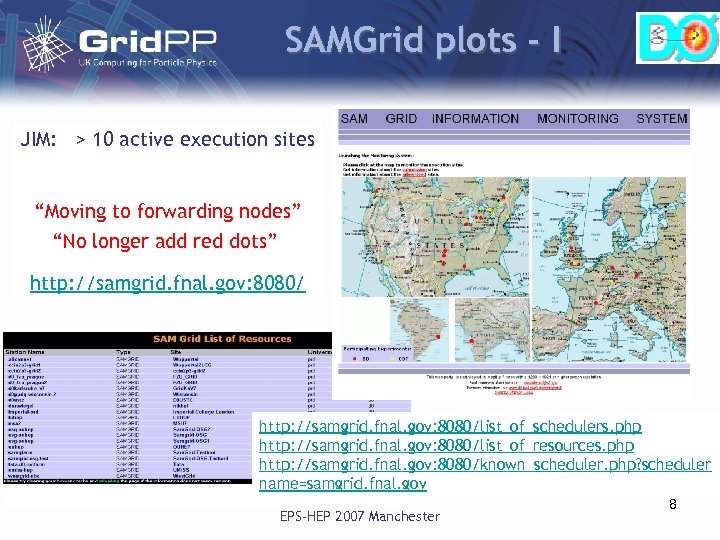

SAMGrid plots (I)
- JIM: >10 active execution sites
- “Moving to forwarding nodes”; “No longer add red dots”
- http://samgrid.fnal.gov:8080/list_of_schedulers.php
- http://samgrid.fnal.gov:8080/list_of_resources.php
- http://samgrid.fnal.gov:8080/known_scheduler.php?scheduler name=samgrid.fnal.gov


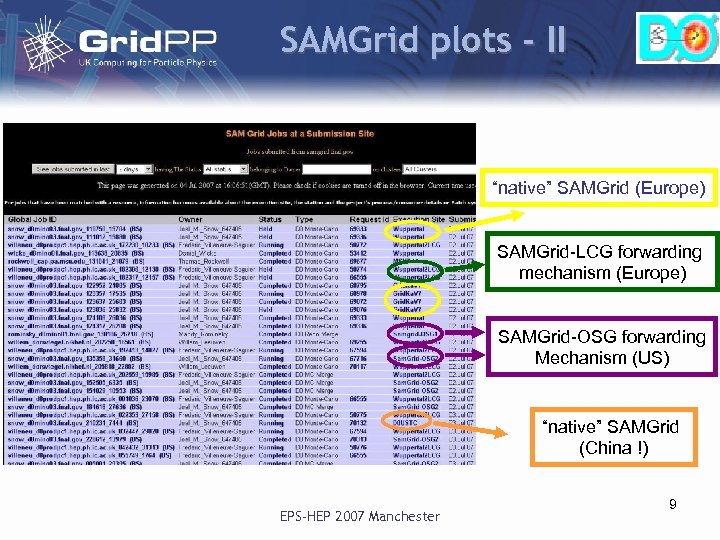

SAMGrid plots (II)
- “Native” SAMGrid (Europe)
- SAMGrid-LCG forwarding mechanism (Europe)
- SAMGrid-OSG forwarding mechanism (US)
- “Native” SAMGrid (China!)


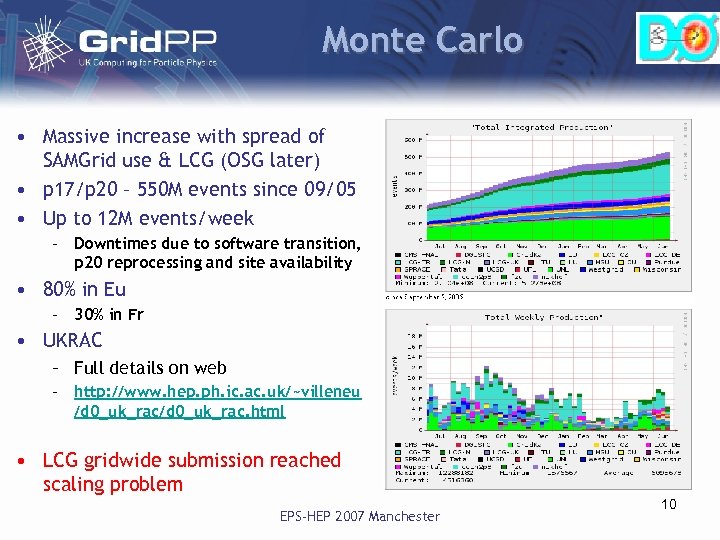

Monte Carlo
- Massive increase with the spread of SAMGrid use and LCG (OSG later)
- p17/p20: 550M events since 09/05
- Up to 12M events/week
  - Downtimes due to the software transition, p20 reprocessing and site availability
- 80% in Europe
  - 30% in France
- UKRAC: full details on the web
  - http://www.hep.ph.ic.ac.uk/~villeneu/d0_uk_rac.html
- LCG grid-wide submission reached a scaling problem
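
The average MC rate implied by these figures can be compared with the quoted peak. This is a sketch only. The end of the production window is not given on the slide, so the conference start date (19 July 2007) is assumed, and "09/05" is read as 1 September 2005.

```python
from datetime import date

# Assumed window (not on the slide): 1 Sep 2005 to 19 Jul 2007.
start = date(2005, 9, 1)
end = date(2007, 7, 19)
weeks = (end - start).days / 7

total_events = 550e6    # "550M events since 09/05"
peak_per_week = 12e6    # "up to 12M events/week"

avg_per_week = total_events / weeks
print(f"window: {weeks:.1f} weeks")
print(f"average: {avg_per_week / 1e6:.1f}M events/week")
print(f"average/peak: {avg_per_week / peak_per_week:.0%}")
```

Under these assumptions, the sustained average is a little under half the peak weekly rate. A different end date would shift this figure.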


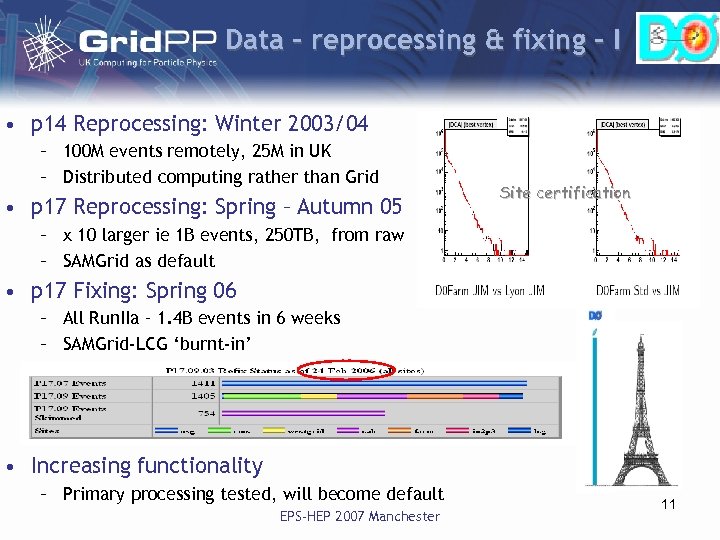

Data: reprocessing and fixing (I)
- p14 reprocessing: winter 2003/04
  - 100M events remotely, 25M in the UK
  - Distributed computing rather than grid
- p17 reprocessing: spring to autumn 2005
  - Site certification
  - ×10 larger, i.e. 1B events (250 TB) from raw
  - SAMGrid as default
- p17 fixing: spring 2006
  - All Run IIa: 1.4B events in 6 weeks
  - SAMGrid-LCG ‘burnt in’
- Increasing functionality
  - Primary processing tested; will become the default
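
A few rough figures follow directly from these campaigns. The sketch below assumes decimal units (1 TB = 10^12 bytes) and a 7-day week. It also reads "25M in UK" as 25M of the 100M remotely processed p14 events.

```python
# Rough per-event and throughput figures from the slide's numbers.
# Decimal units and a 7-day week are assumptions; inputs are rounded.
TB = 10**12

# p17 reprocessing: 1B events, 250 TB, from raw
p17_bytes_per_event = 250 * TB / 1e9

# p17 fixing: all Run IIa, 1.4B events in 6 weeks
p17_fix_events_per_day = 1.4e9 / (6 * 7)

# p14 reprocessing: 25M of the 100M remote events (assumed reading) in the UK
p14_uk_fraction = 25e6 / 100e6

print(f"p17 reprocessing: ~{p17_bytes_per_event / 1e3:.0f} kB/event")
print(f"p17 fixing: ~{p17_fix_events_per_day / 1e6:.1f}M events/day")
print(f"p14 UK share of remote events: {p14_uk_fraction:.0%}")
```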


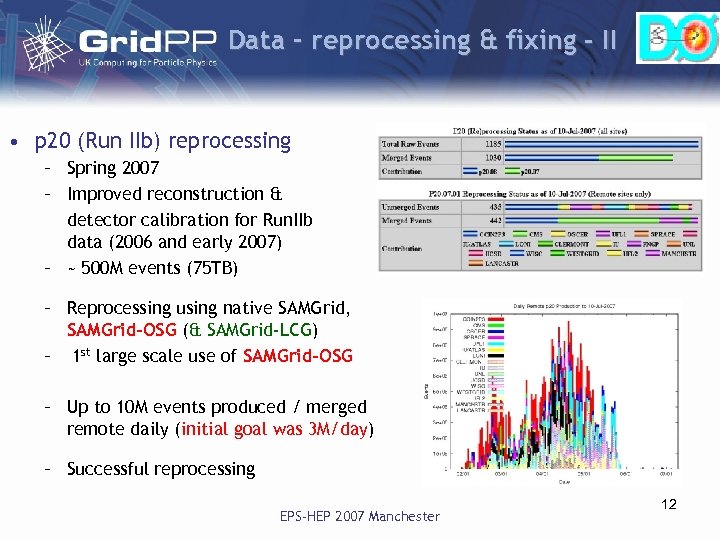

Data: reprocessing and fixing (II)
- p20 (Run IIb) reprocessing: spring 2007
  - Improved reconstruction and detector calibration for Run IIb data (2006 and early 2007)
  - ~500M events (75 TB)
  - Reprocessing using native SAMGrid, SAMGrid-OSG (and SAMGrid-LCG)
  - First large-scale use of SAMGrid-OSG
  - Up to 10M events produced/merged remotely per day (initial goal was 3M/day)
  - Successful reprocessing
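
To put the p20 rates in perspective, the calculation below estimates how long ~500M events would take at the initial 3M/day goal and at the 10M/day peak. It assumes a constant rate with no downtime and decimal units. The day counts are therefore idealised figures, not the actual campaign duration. `days_to_process` is a hypothetical helper.

```python
import math


def days_to_process(n_events: float, events_per_day: float) -> int:
    """Whole days needed at a constant rate (rounded up), ignoring downtime."""
    return math.ceil(n_events / events_per_day)


total_events = 500e6     # "~500M events"
dataset_bytes = 75e12    # "75 TB", decimal units assumed

print(f"average size: {dataset_bytes / total_events / 1e3:.0f} kB/event")
print("days at the 3M/day goal:", days_to_process(total_events, 3e6))
print("days at the 10M/day peak:", days_to_process(total_events, 10e6))
```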


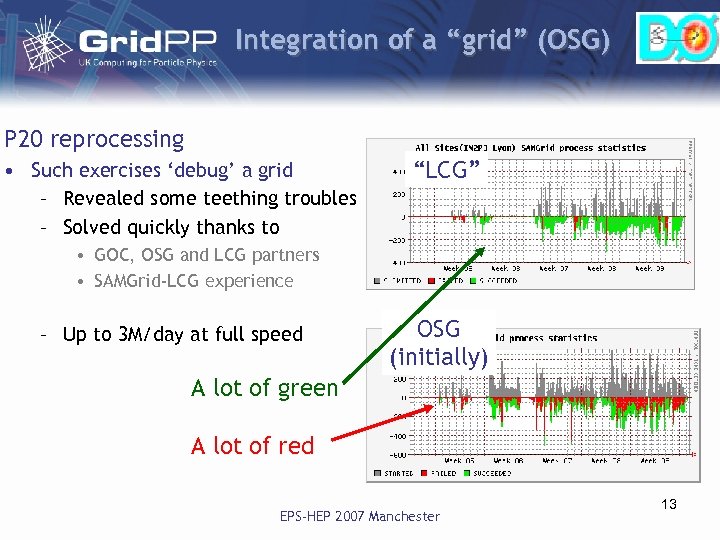

Integration of a “grid” (OSG): p20 reprocessing
- Such exercises ‘debug’ a grid
  - Revealed some teething troubles
  - Solved quickly thanks to:
    - GOC, OSG and LCG partners
    - SAMGrid-LCG experience
  - Up to 3M/day at full speed
- (Plot labels: “LCG”; “OSG (initially)”; “A lot of green”; “A lot of red”)


Next steps / issues
- Complete endgame development
  - Additional functionality/usage: skimming, primary processing on the grid as default (and at multiple sites?)
  - Additional resources: completing the forwarding nodes
    - Full data/MC functionality for both LCG and OSG
    - Scaling issues to access the full LCG and OSG worlds
  - Data analysis: how gridified do we go? An open issue
    - Need to be ‘interoperable’ (FermiGrid, LCG sites, OSG, …)
    - Will need development, deployment and operations effort
- “Steady” state: goal to reach by the end of CY07 (≥2 years running)
  - Maintenance of existing functionality
  - Continued experimental requests
  - Continued evolution as grid standards evolve
- Manpower
  - Development, integration and operation handled by the dedicated few


Summary / plans
- Tevatron and DØ performing very well
  - A lot of data and physics, with more to come
- SAM and SAMGrid are critical to DØ
  - The grid computing model is as important as any sub-detector
- Without the LCG and OSG partners, it would not have worked either
  - Largest grid ‘data challenges’ in HEP (I believe)
  - Learnt a lot about the technology, and especially how it scales
  - Learnt a lot about the organisation/operation of such projects
  - Some of these lessons can be abstracted and be of benefit to others…
  - Accounting model evolved in parallel (~$4M/yr)
- Baseline: ensure (scaling for) production tasks
  - Further improvements to operational robustness/efficiency underway
- In parallel, the open question of data analysis: will need to go part way


Back-ups


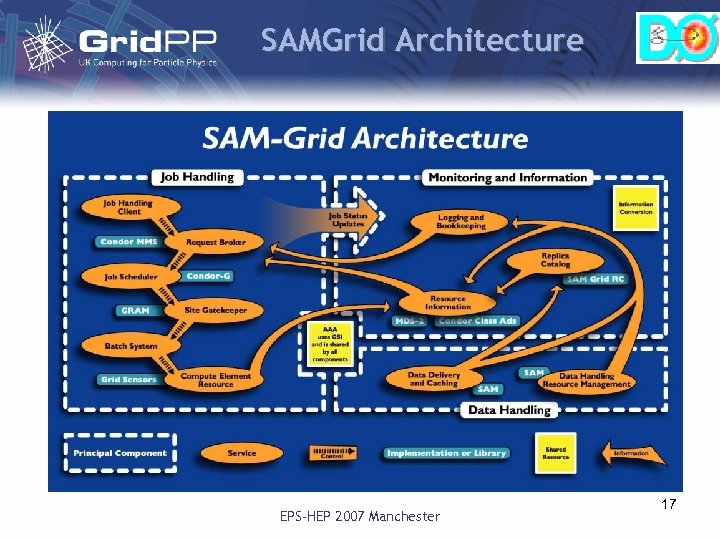

SAMGrid Architecture


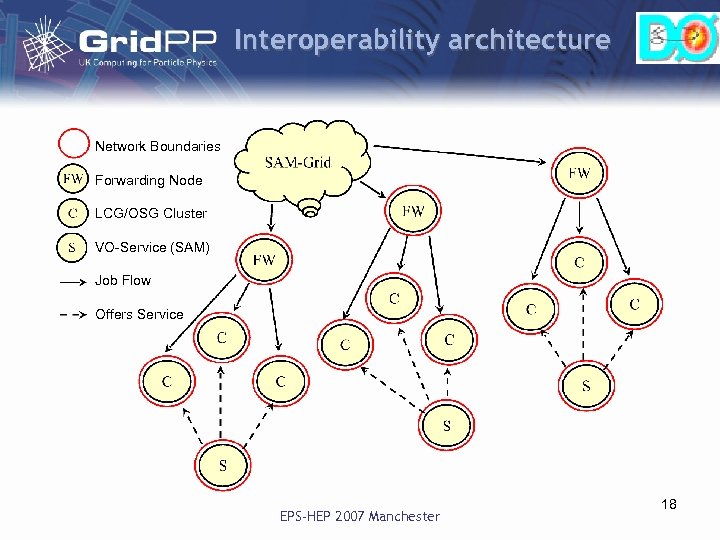

Interoperability architecture

(Diagram labels: Network Boundaries; Forwarding Node; LCG/OSG Cluster; VO-Service (SAM); Job Flow; Offers Service.)
