aef186f0b9c810c8a814636770060ff4.ppt

- Number of slides: 76

DISTRIBUTED SYSTEMS: Principles and Paradigms, Second Edition. ANDREW S. TANENBAUM, MAARTEN VAN STEEN. Chapter 6: Synchronization

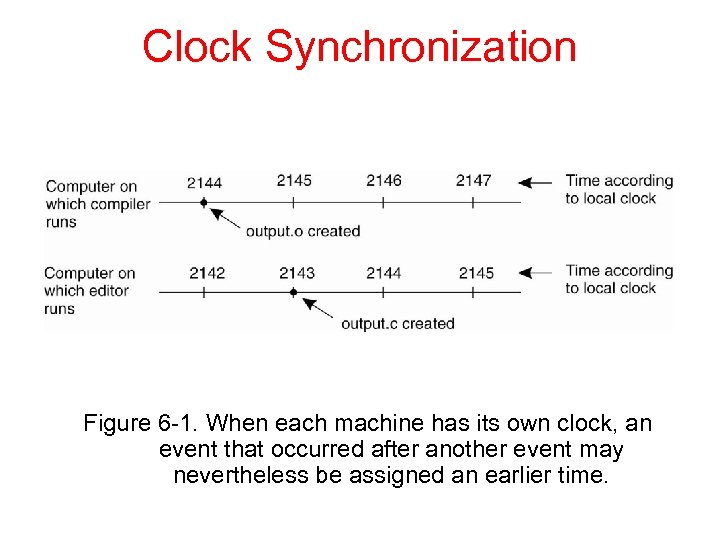

Clock Synchronization Figure 6 -1. When each machine has its own clock, an event that occurred after another event may nevertheless be assigned an earlier time.

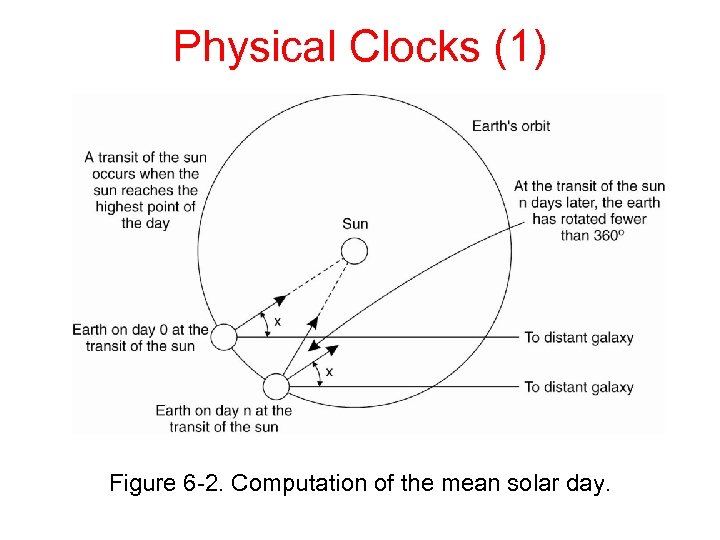

Physical Clocks (1) Figure 6 -2. Computation of the mean solar day.

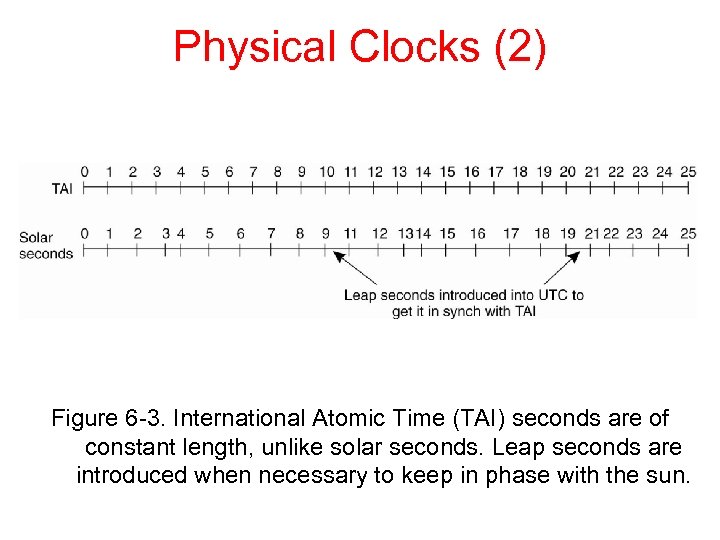

Physical Clocks (2) Figure 6 -3. International Atomic Time (TAI) seconds are of constant length, unlike solar seconds. Leap seconds are introduced when necessary to keep in phase with the sun.

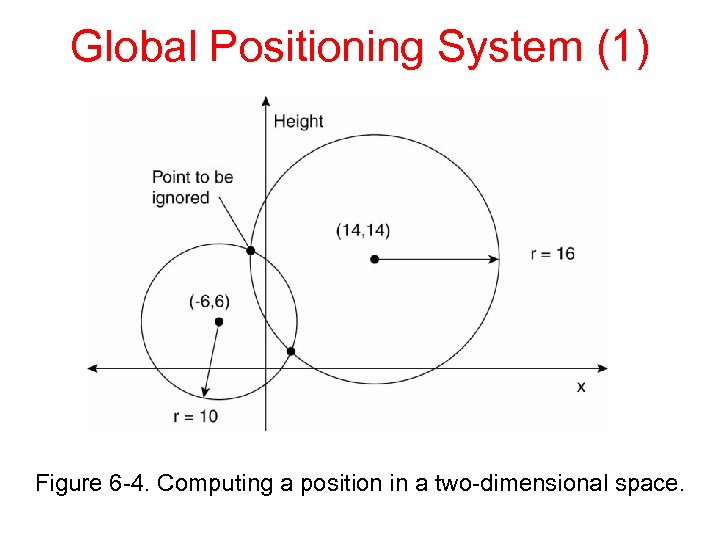

Global Positioning System (1) Figure 6 -4. Computing a position in a two-dimensional space.

Global Positioning System (2) Real world facts that complicate GPS 1. It takes a while before data on a satellite’s position reaches the receiver. 2. The receiver’s clock is generally not in synch with that of a satellite.

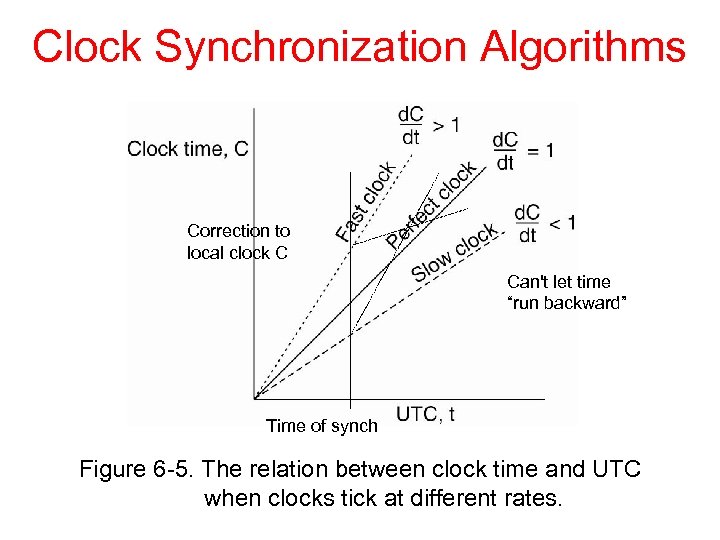

Clock Synchronization Algorithms Figure 6-5. The relation between clock time and UTC when clocks tick at different rates. Figure annotations: a correction is applied to the local clock C at the time of synchronization, and time cannot be allowed to “run backward”.

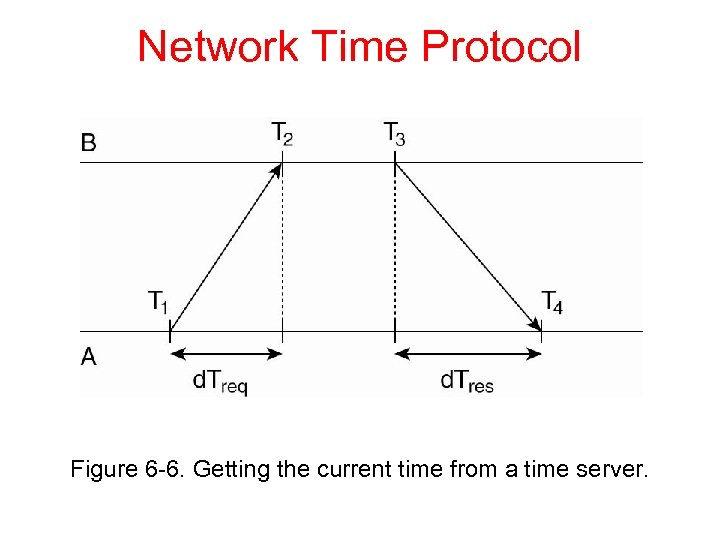

Network Time Protocol Figure 6-6. Getting the current time from a time server.

NTP (2) Assume the delay is approximately symmetric.
1. Skew (offset) = [(T2 − T1) + (T3 − T4)]/2.
2. Take several (8) pairs of (skew, delay) and use the one with the smallest delay.
3. Clocks have “strata”: the lowest stratum is best. Only adjust your clock if your stratum is higher than the server's.
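The skew formula and the "keep the sample with the least delay" rule can be sketched in a few lines. This is only an illustration: the function names are made up here, the meaning assigned to T1 through T4 (client send, server receive, server send, client receive) is an assumption about the figure, and the delay expression is the usual round-trip estimate rather than something stated on the slide.

```python
def offset_and_delay(t1, t2, t3, t4):
    """Estimate clock offset and round-trip delay from one exchange.

    Assumed meaning of the timestamps:
    t1: request sent (client clock)    t2: request received (server clock)
    t3: reply sent (server clock)      t4: reply received (client clock)
    """
    offset = ((t2 - t1) + (t3 - t4)) / 2   # skew formula from the slide
    delay = (t4 - t1) - (t3 - t2)          # round trip minus server processing time
    return offset, delay


def best_estimate(exchanges):
    """Return the (offset, delay) pair with the smallest delay."""
    return min((offset_and_delay(*e) for e in exchanges), key=lambda pair: pair[1])


# Illustrative numbers: the server clock is 50 units ahead of the client.
samples = [
    (100, 160, 165, 125),   # symmetric 10-unit delay each way
    (200, 270, 272, 262),   # slow, asymmetric exchange
]
offset, delay = best_estimate(samples)
```

With these made-up samples the first exchange is chosen, because its round trip is shorter and its offset estimate is therefore less distorted by asymmetry.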

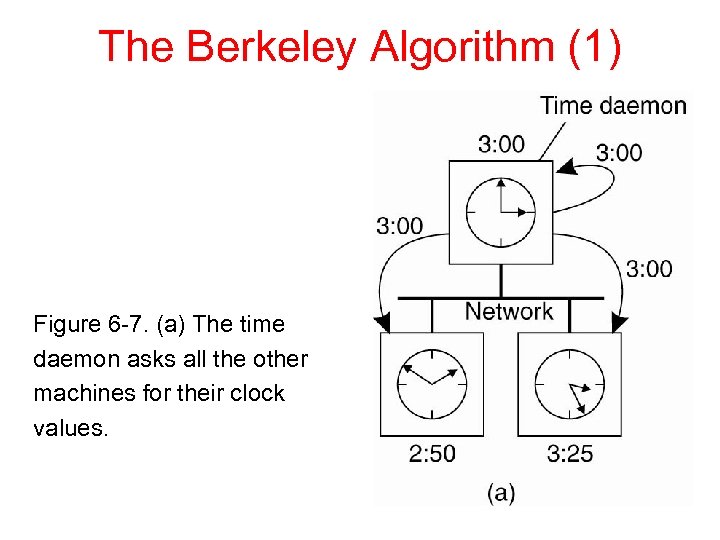

The Berkeley Algorithm (1) Figure 6 -7. (a) The time daemon asks all the other machines for their clock values.

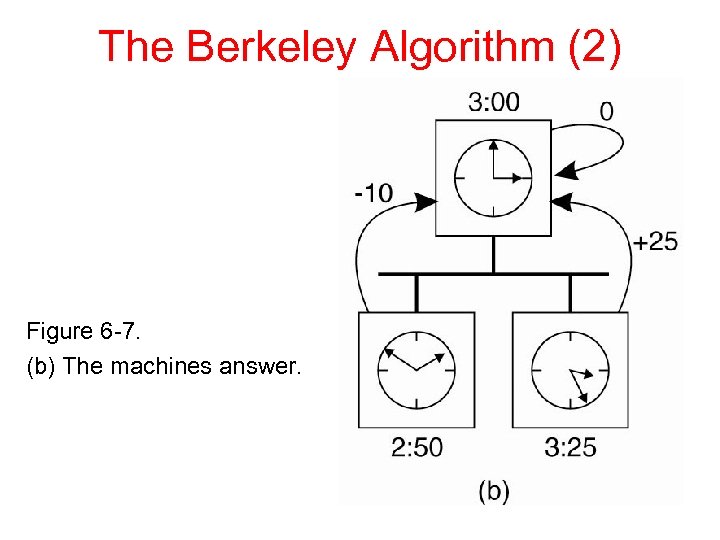

The Berkeley Algorithm (2) Figure 6 -7. (b) The machines answer.

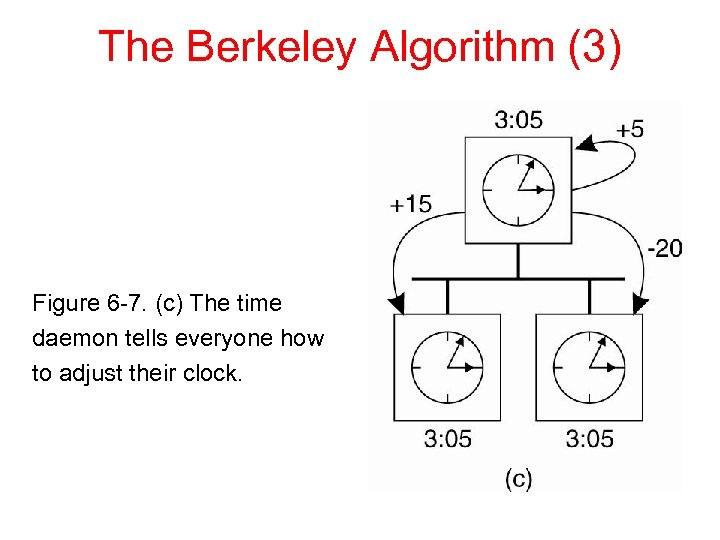

The Berkeley Algorithm (3) Figure 6 -7. (c) The time daemon tells everyone how to adjust their clock.
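The three steps (poll, answer, adjust) amount to averaging the reported clocks and telling each machine how far it is from the average. A minimal sketch, assuming times are plain numbers in minutes and that the daemon includes its own clock in the average; the function name and the sample values are illustrative, not taken from the slides.

```python
def berkeley_adjustments(daemon_time, other_times):
    """Return (daemon_adjustment, [adjustment for each other machine]).

    Every adjustment is the amount a machine should add to its own clock.
    """
    # Offsets relative to the daemon; the daemon's own offset is 0.
    offsets = [0] + [t - daemon_time for t in other_times]
    average = sum(offsets) / len(offsets)
    target = daemon_time + average
    return average, [target - t for t in other_times]


# Illustrative values in minutes: daemon at 3:00, others at 3:25 and 2:50.
daemon_adj, other_adj = berkeley_adjustments(180, [205, 170])
```

In practice a machine that is ahead would slow its clock down gradually rather than jump backward by the negative adjustment.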

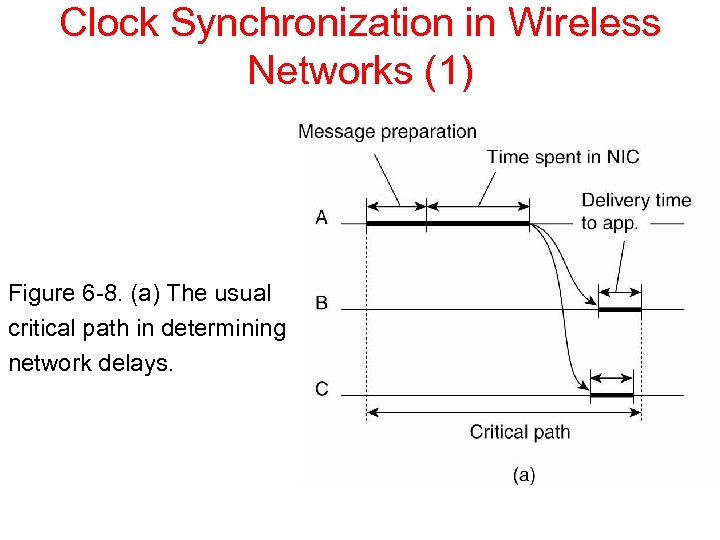

Clock Synchronization in Wireless Networks (1) Figure 6 -8. (a) The usual critical path in determining network delays.

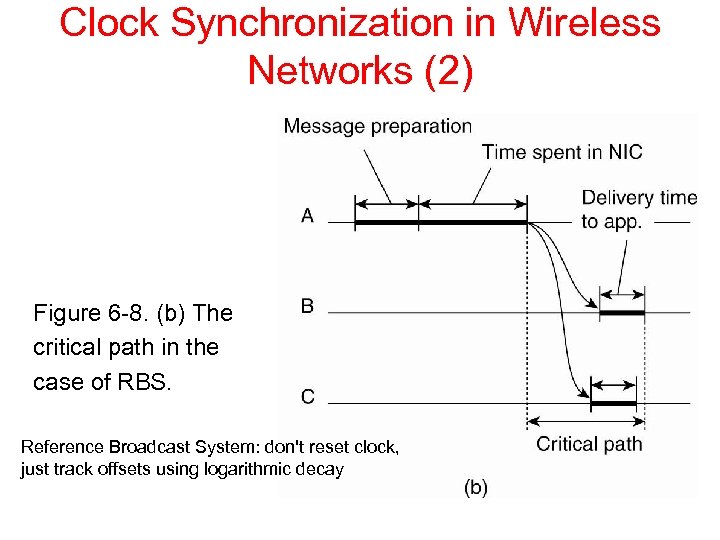

Clock Synchronization in Wireless Networks (2) Figure 6 -8. (b) The critical path in the case of RBS. Reference Broadcast System: don't reset clock, just track offsets using logarithmic decay

Clock Types Time-of-day (wall) • Local clock (oscillator) • Global reference • Distributed clock Logical (event ordering) • Lamport clock • Vector clock • Matrix clock

Lamport’s Logical Clocks (1) The "happens-before" relation → can be observed directly in two situations: • If a and b are events in the same process, and a occurs before b, then a → b is true. • If a is the event of a message being sent by one process, and b is the event of the message being received by another process, then a → b

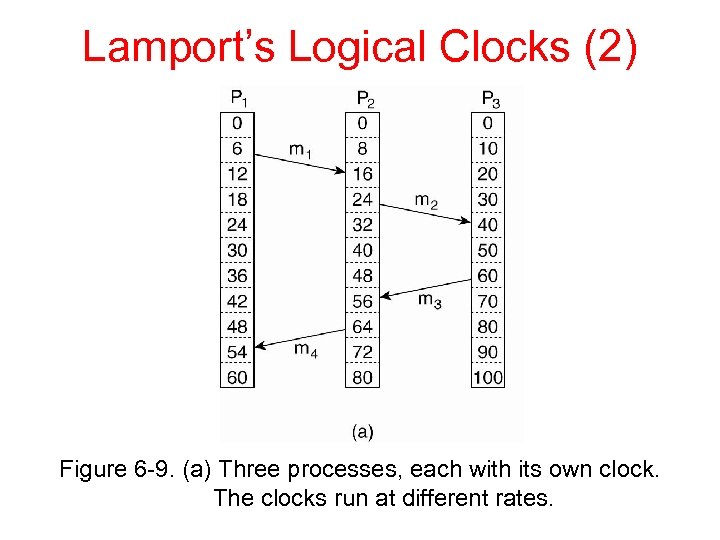

Lamport’s Logical Clocks (2) Figure 6 -9. (a) Three processes, each with its own clock. The clocks run at different rates.

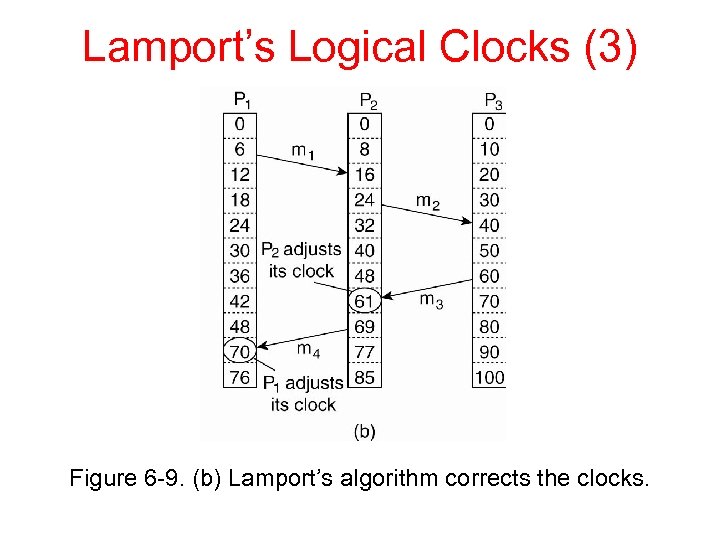

Lamport’s Logical Clocks (3) Figure 6 -9. (b) Lamport’s algorithm corrects the clocks.

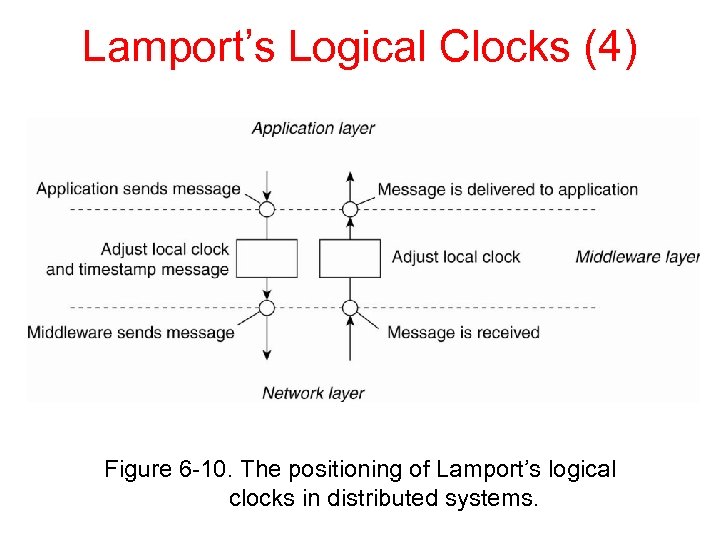

Lamport’s Logical Clocks (4) Figure 6 -10. The positioning of Lamport’s logical clocks in distributed systems.

Lamport’s Logical Clocks (5) Updating counter Ci for process Pi 1. Before executing an event Pi executes Ci ← Ci + 1. (or +Δi in general) 2. When process Pi sends a message m to Pj, it sets m’s timestamp ts (m) equal to Ci after having executed the previous step. 3. Upon the receipt of a message m, process Pj adjusts its own local counter as Cj ← max{Cj , ts (m)}, after which it then executes the first step and delivers the message to the application. Use process ID to break ties if necessary.
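The three update rules map directly onto a small class. This is a sketch under simple assumptions: the increment is fixed at 1, messages are represented only by their timestamps, and the class and method names are invented for illustration.

```python
class LamportClock:
    def __init__(self, pid):
        self.pid = pid        # process ID, used only to break ties
        self.counter = 0

    def tick(self):
        """Rule 1: increment before executing any event."""
        self.counter += 1
        return self.counter

    def send(self):
        """Rule 2: a send is an event; the message carries the new counter."""
        return self.tick()

    def receive(self, ts):
        """Rule 3: take the max with the message timestamp, then apply rule 1."""
        self.counter = max(self.counter, ts)
        return self.tick()

    def stamp(self):
        """Totally ordered timestamp: counter first, process ID as tie-breaker."""
        return (self.counter, self.pid)


p1, p2 = LamportClock(1), LamportClock(2)
p1.tick()
p1.tick()
ts = p1.send()                 # p1's counter is now 3
received_at = p2.receive(ts)   # p2 jumps from 0 to max(0, 3) + 1 = 4
```

Comparing `stamp()` tuples gives the total order: Python compares the counters first and falls back to the process ID only when they are equal.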

Lamport’s Logical Clocks (6)
- Do we obtain a total order on events? Yes: timestamps are single integers, and ties are broken using the process ID.
- What information does a Lamport clock convey?
  - Does ei → ej imply ts(ei) < ts(ej)? Yes: the timestamp of a causally dependent event must be larger because of how the protocol works.
  - Does ts(ei) < ts(ej) imply ei → ej? No: unrelated events can have timestamps in either order.

Group Messaging Properties Two related properties of interest 1. Ordering – FIFO (per sender) – Causal – Total 2. Reliability – Best effort – Duplicate detection – Omission detection/recovery per receiver – Atomic to all receivers (all or none) Digital Fountain – fountain codes

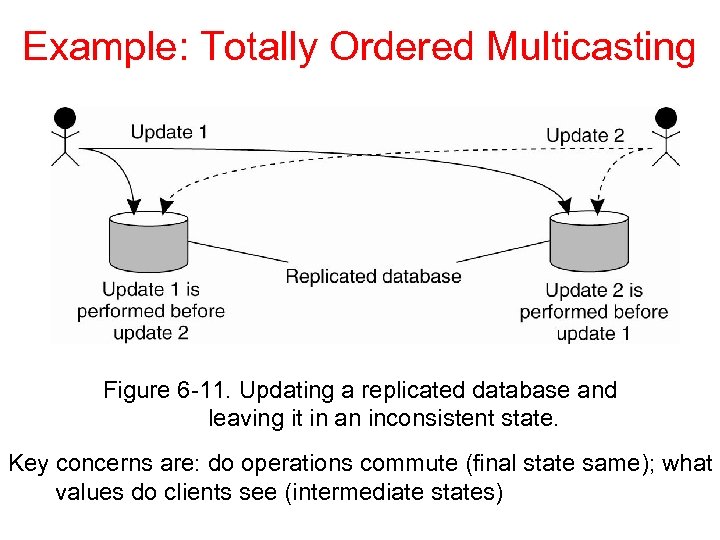

Example: Totally Ordered Multicasting Figure 6 -11. Updating a replicated database and leaving it in an inconsistent state. Key concerns are: do operations commute (final state same); what values do clients see (intermediate states)

Causal Message Delivery Want a method by which messages can be delivered in causal order, not necessarily in some total order across all processes Lamport logical clocks impose a total order that obeys causal order, but this is too restrictive (messages that are not causally related have a delivery order imposed on them unnecessarily) Vector clocks can capture causality information precisely

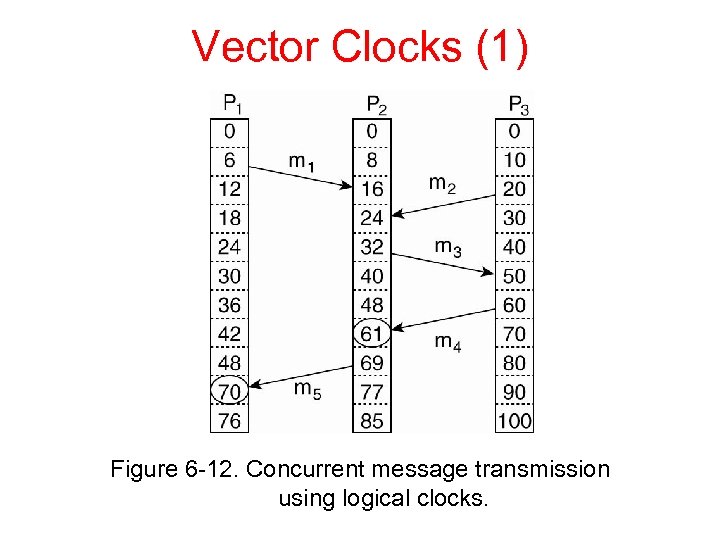

Vector Clocks (1) Figure 6 -12. Concurrent message transmission using logical clocks.

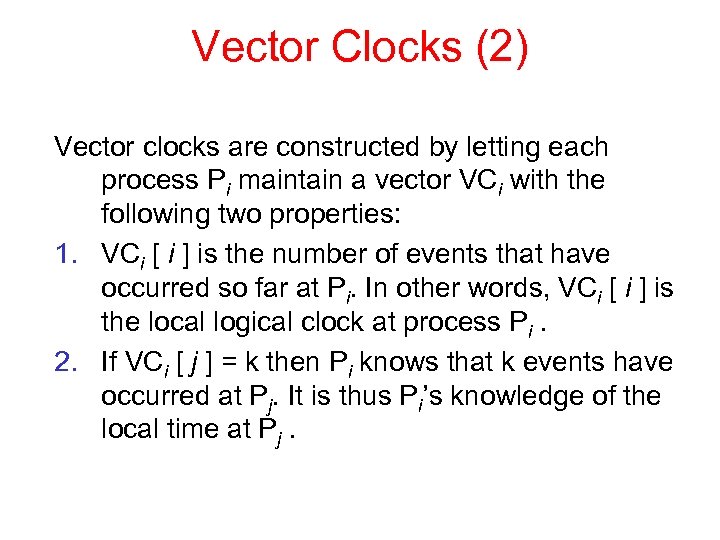

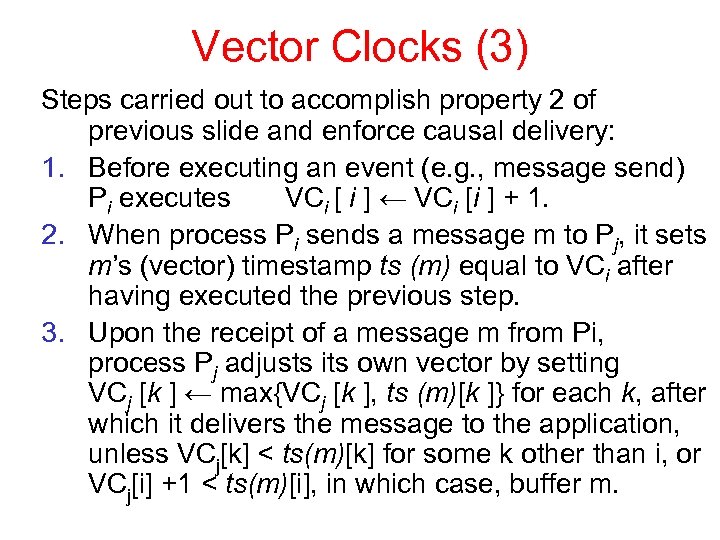

Vector Clocks (2) Vector clocks are constructed by letting each process Pi maintain a vector VCi with the following two properties: 1. VCi [ i ] is the number of events that have occurred so far at Pi. In other words, VCi [ i ] is the local logical clock at process Pi. 2. If VCi [ j ] = k then Pi knows that k events have occurred at Pj. It is thus Pi’s knowledge of the local time at Pj.

Vector Clocks (3) Steps carried out to accomplish property 2 of previous slide and enforce causal delivery: 1. Before executing an event (e. g. , message send) Pi executes VCi [ i ] ← VCi [i ] + 1. 2. When process Pi sends a message m to Pj, it sets m’s (vector) timestamp ts (m) equal to VCi after having executed the previous step. 3. Upon the receipt of a message m from Pi, process Pj adjusts its own vector by setting VCj [k ] ← max{VCj [k ], ts (m)[k ]} for each k, after which it delivers the message to the application, unless VCj[k] < ts(m)[k] for some k other than i, or VCj[i] +1 < ts(m)[i], in which case, buffer m.
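The buffering rule is easiest to see in a small simulation. The sketch below makes several assumptions that are not on the slide: only message sends count as events, every message is multicast to all processes, delivery is reliable with no duplicates, and the class name `CausalProcess` is invented for illustration. The delivery test is applied to the receiver's vector before it is merged with the message timestamp.

```python
class CausalProcess:
    def __init__(self, pid, n):
        self.pid = pid
        self.vc = [0] * n
        self.buffer = []       # messages received but not yet deliverable
        self.delivered = []    # payloads in delivery order

    def send(self, payload):
        """Count the send as an event and stamp the message with a copy of VC."""
        self.vc[self.pid] += 1
        return (self.pid, list(self.vc), payload)

    def _deliverable(self, sender, ts):
        # m must be the next message from the sender...
        if ts[sender] != self.vc[sender] + 1:
            return False
        # ...and we must already have seen everything the sender had seen.
        return all(ts[k] <= self.vc[k] for k in range(len(ts)) if k != sender)

    def receive(self, message):
        """Buffer the message, then deliver whatever has become deliverable."""
        self.buffer.append(message)
        progress = True
        while progress:
            progress = False
            for msg in list(self.buffer):
                sender, ts, payload = msg
                if self._deliverable(sender, ts):
                    self.buffer.remove(msg)
                    self.vc = [max(a, b) for a, b in zip(self.vc, ts)]
                    self.delivered.append(payload)
                    progress = True


p0, p1, p2 = (CausalProcess(i, 3) for i in range(3))
m1 = p0.send("m1")
p1.receive(m1)
m2 = p1.send("m2")    # m2 causally depends on m1
p2.receive(m2)        # arrives first: buffered, because p2 has not seen m1
p2.receive(m1)        # now m1 is delivered, which unblocks m2
```

Here `p2` ends up delivering `m1` before `m2` even though the network handed them over in the opposite order.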

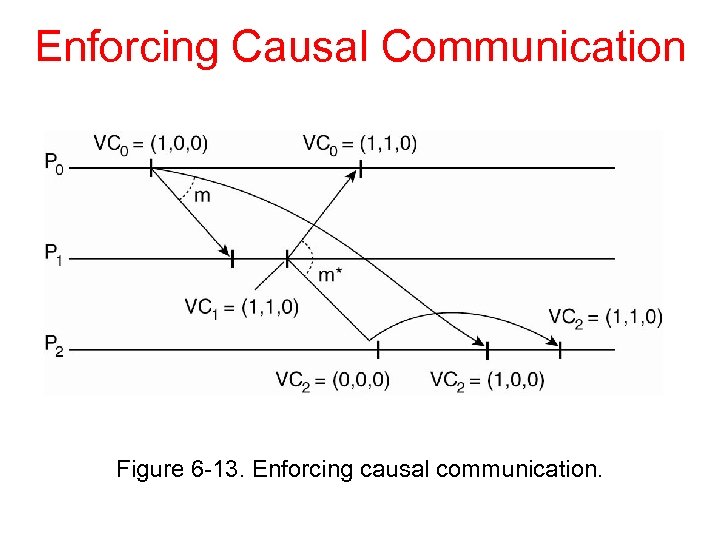

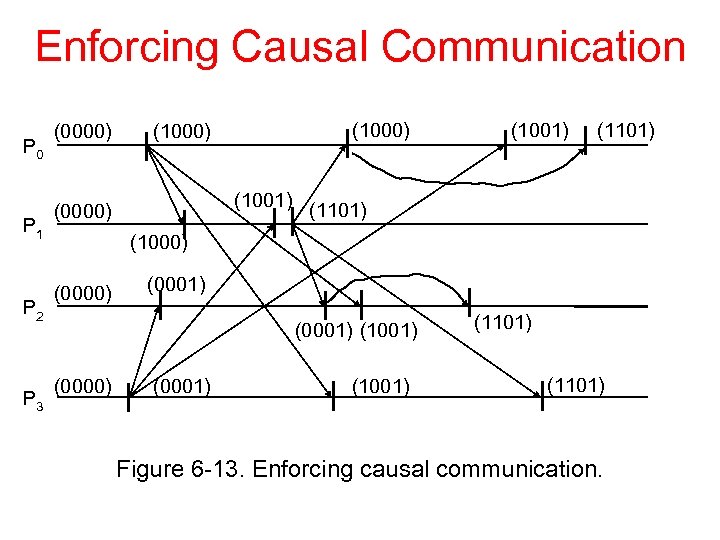

Enforcing Causal Communication Figure 6-13. Enforcing causal communication.

Enforcing Causal Communication Figure 6-13. Enforcing causal communication. The diagram shows four processes, P0 to P3, annotated with vector clock values such as (0000), (1000), (0001), (1001), and (1101).

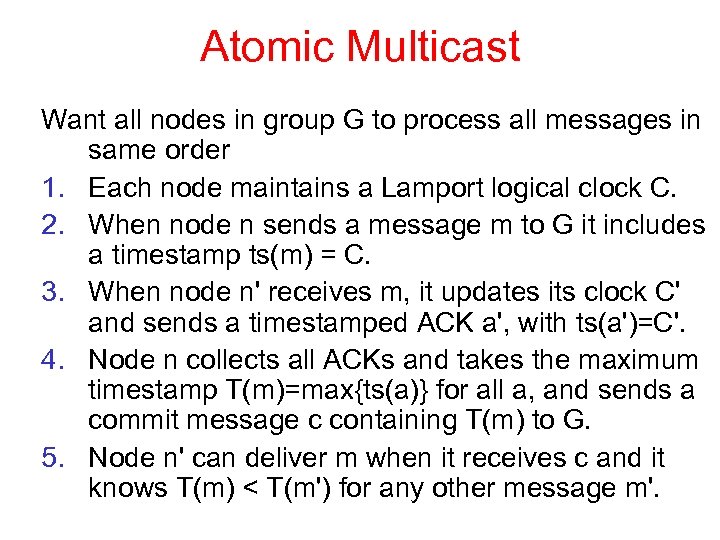

Atomic Multicast We want all nodes in group G to process all messages in the same order:
1. Each node maintains a Lamport logical clock C.
2. When node n sends a message m to G, it includes a timestamp ts(m) = C.
3. When node n' receives m, it updates its clock C' and sends a timestamped ACK a', with ts(a') = C'.
4. Node n collects all ACKs, takes the maximum timestamp T(m) = max{ts(a)} over all ACKs a, and sends a commit message c containing T(m) to G.
5. Node n' can deliver m when it receives c and knows that T(m) < T(m') for any other message m'.

Mutual Exclusion
- Need for synchronization
  - Access to shared variables
  - Access to serially reusable resources
- Approaches
  - Centralized
  - Distributed
  - Voting
  - Token-passing
- Characteristics
  - Correctness (safety)
  - Efficiency (messages passed)
  - Fault tolerance
  - Fairness (liveness)

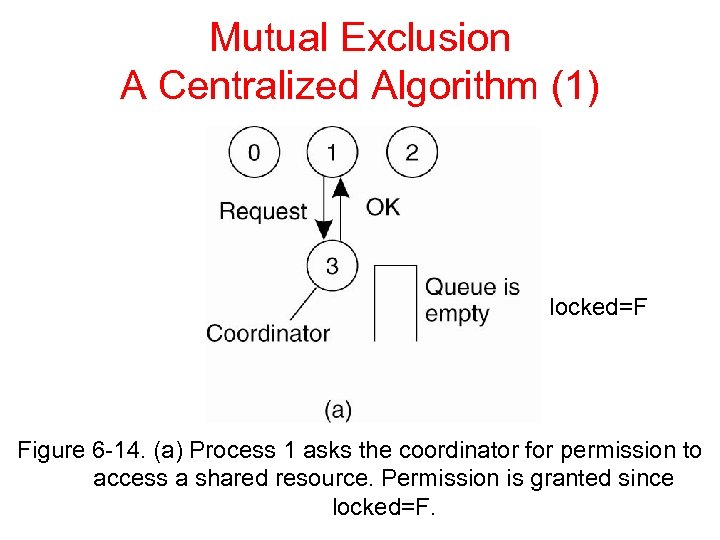

Mutual Exclusion A Centralized Algorithm (1) locked=F Figure 6 -14. (a) Process 1 asks the coordinator for permission to access a shared resource. Permission is granted since locked=F.

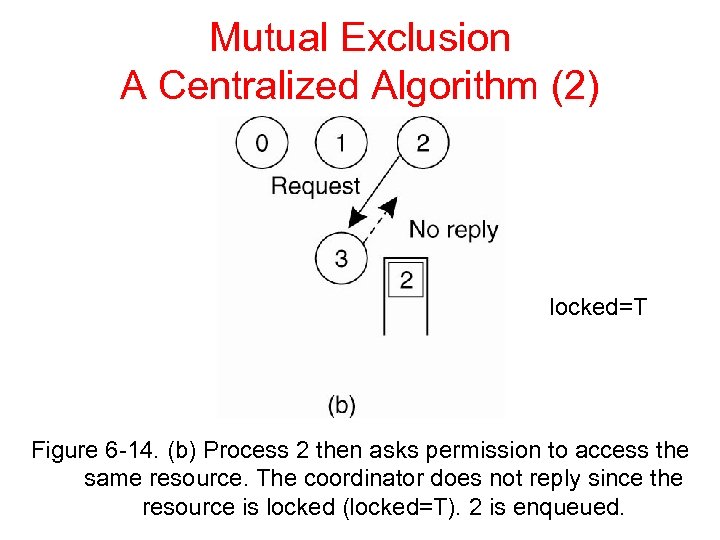

Mutual Exclusion A Centralized Algorithm (2) locked=T Figure 6 -14. (b) Process 2 then asks permission to access the same resource. The coordinator does not reply since the resource is locked (locked=T). 2 is enqueued.

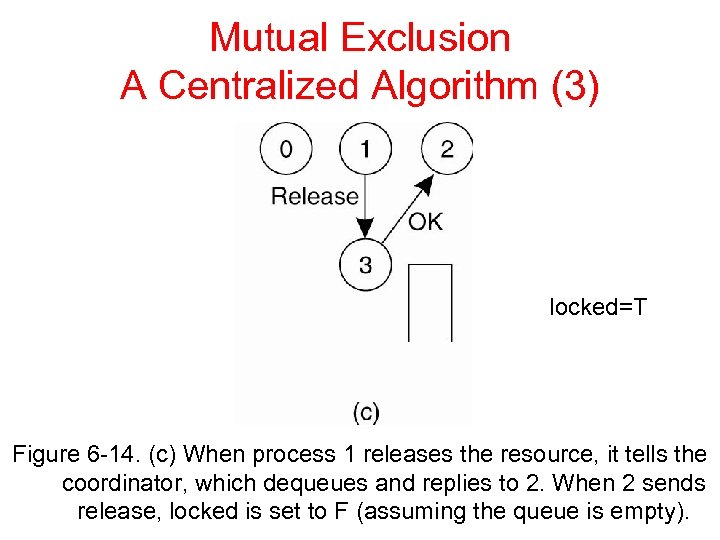

Mutual Exclusion A Centralized Algorithm (3) locked=T Figure 6 -14. (c) When process 1 releases the resource, it tells the coordinator, which dequeues and replies to 2. When 2 sends release, locked is set to F (assuming the queue is empty).
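The coordinator's state is just the `locked` flag and a FIFO queue. A minimal single-threaded sketch, with message passing replaced by method calls; the class and method names are illustrative.

```python
from collections import deque


class Coordinator:
    def __init__(self):
        self.locked = False
        self.queue = deque()   # waiting process IDs, in arrival order

    def request(self, pid):
        """Return True if permission is granted at once; otherwise enqueue
        the requester and return False (the coordinator sends no reply)."""
        if not self.locked:
            self.locked = True
            return True
        self.queue.append(pid)
        return False

    def release(self):
        """Handle a release: return the next process to be granted the
        resource, or None if nobody is waiting and the lock is now free."""
        if self.queue:
            return self.queue.popleft()   # lock stays held, by the new owner
        self.locked = False
        return None


c = Coordinator()
granted_1 = c.request(1)    # granted, locked becomes True
granted_2 = c.request(2)    # not granted, 2 is enqueued
next_owner = c.release()    # process 1 releases, process 2 gets the reply
last = c.release()          # process 2 releases, queue empty, locked=F
```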

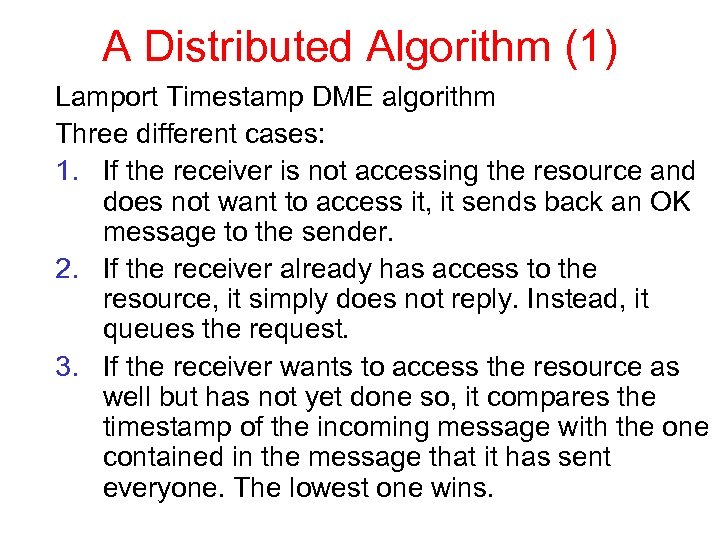

A Distributed Algorithm (1) Lamport Timestamp DME (distributed mutual exclusion) algorithm. When a request arrives, there are three different cases:
1. If the receiver is not accessing the resource and does not want to access it, it sends back an OK message to the sender.
2. If the receiver already has access to the resource, it simply does not reply. Instead, it queues the request.
3. If the receiver wants to access the resource as well but has not yet done so, it compares the timestamp of the incoming message with the one contained in the message that it has sent everyone. The lowest one wins.
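The three cases reduce to one small decision function on the receiver's side. In this sketch the state names, the function name, and the (timestamp, process ID) tuple representation are assumptions made for illustration; the process ID serves as a tie-breaker when timestamps are equal.

```python
RELEASED, WANTED, HELD = "released", "wanted", "held"


def on_request(state, my_request, incoming):
    """Decide how a receiver reacts to an incoming request.

    state:      RELEASED, WANTED or HELD
    my_request: (timestamp, pid) of the receiver's own pending request, or None
    incoming:   (timestamp, pid) of the request that just arrived
    Returns "OK" (reply at once) or "QUEUE" (defer the reply).
    """
    if state == RELEASED:      # case 1: not using it, not interested
        return "OK"
    if state == HELD:          # case 2: currently has access
        return "QUEUE"
    # case 3: both want the resource, the lowest timestamp wins
    return "OK" if incoming < my_request else "QUEUE"


# Illustrative timestamps: process 0 requests at 8, process 2 at 12.
reply_from_2 = on_request(WANTED, (12, 2), (8, 0))   # 2 yields to 0
reply_from_0 = on_request(WANTED, (8, 0), (12, 2))   # 0 defers its reply to 2
```

A process enters the critical section once it has collected an OK from every other process, and sends its deferred OK replies when it leaves.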

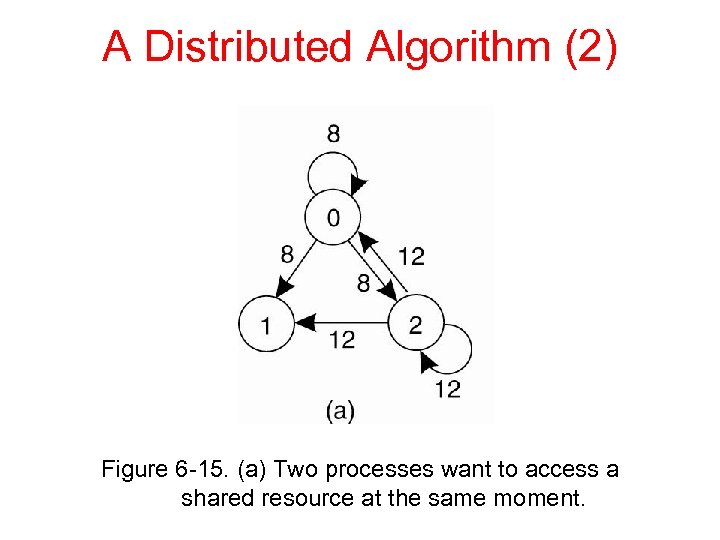

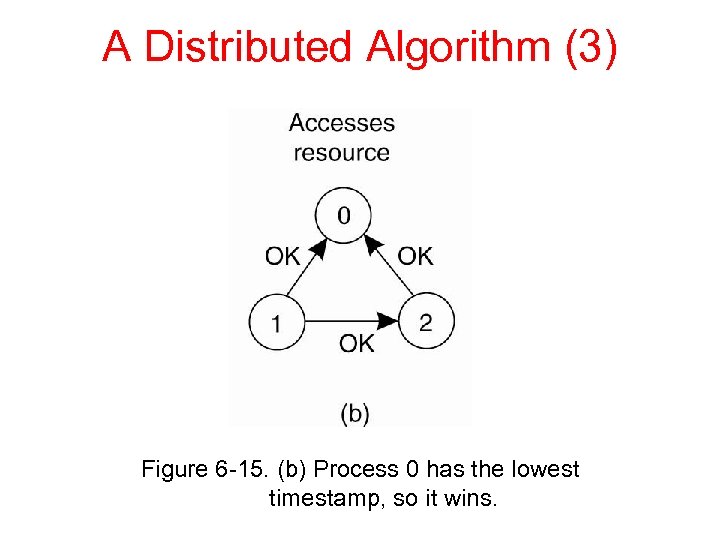

A Distributed Algorithm (2) Figure 6 -15. (a) Two processes want to access a shared resource at the same moment.

A Distributed Algorithm (3) Figure 6 -15. (b) Process 0 has the lowest timestamp, so it wins.

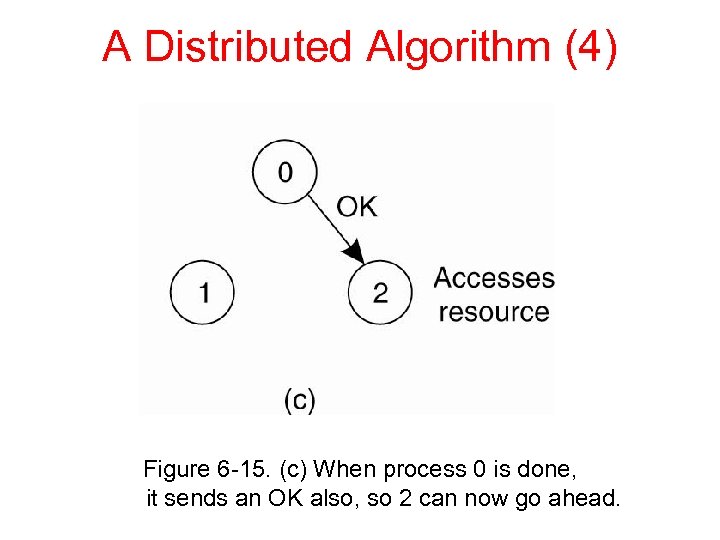

A Distributed Algorithm (4) Figure 6 -15. (c) When process 0 is done, it sends an OK also, so 2 can now go ahead.

A Distributed Algorithm (5) Lamport Timestamp DME algorithm Observations:
1. No deadlock: the lowest timestamp wins.
2. No starvation: eventually your request has the lowest timestamp (all later ones will “see” yours and have a larger timestamp).
3. Replaces one overloaded node with N of them.
4. Replaces one point of failure with N of them. “Fix” failures by requiring a “Deny” response to denied requests: the sender can then resend until it gets a reply, and so distinguish failures from denials.

Voting Algorithms (1) Lamport Voting DME algorithm
1. Send the request as before.
2. Give your vote if it has not already been given to someone else.
3. Queue requests in timestamp order.
4. Win when a majority of votes is collected.
5. Return each vote to its grantor when done with the resource.
6. Give a returned vote to the head of the queue if the queue is not empty.

Voting Algorithms (2) Lamport Voting DME algorithm Observations:
1. Can tolerate N/2 − 1 failures.
2. Don't have to send messages to all nodes, just enough to collect the votes needed.
3. Problem: multiple nodes may collect votes and none get a majority, which is deadlock!
4. Fix this with a “Rescind” message, used if a grantor gets a request with a smaller timestamp. The grantor gives its vote to the priority request when the vote is returned.
5. If a node receives a Rescind message and is not in the CS, it must return the vote and wait for a majority.
6. If a node receives a Rescind message while in the CS, it returns the vote when done.

Voting Algorithms (3) Quorum Voting DME algorithm (coteries)
1. Don't need a majority, only need to break ties.
2. Each node n has its own quorum Q(n) whose votes it must get to obtain the lock.
3. For all n and n', Q(n) intersects Q(n').
4. Node(s) in the intersection of Q(n) and Q(n') decide between the requests of n and n' (safety).
5. Can make |Q(n)| about sqrt(N) for N nodes.
6. Can also make the number of quorums of which a node n is a member about sqrt(N), so no node is overloaded with requests.
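One simple way to obtain pairwise-intersecting quorums of size proportional to sqrt(N) is to place the nodes in a square grid and let Q(n) be the row and column of n. This is a sketch of that grid construction only, not necessarily the construction the slides have in mind: it assumes N is a perfect square, gives quorums of size 2·sqrt(N) − 1 rather than the minimum, and the function name is invented.

```python
import math


def grid_quorum(node, n):
    """Quorum of `node` among nodes 0..n-1 laid out row by row in a k x k grid."""
    k = math.isqrt(n)
    if k * k != n:
        raise ValueError("this sketch assumes n is a perfect square")
    row, col = divmod(node, k)
    same_row = {row * k + c for c in range(k)}
    same_col = {r * k + col for r in range(k)}
    return same_row | same_col


n = 16
quorums = [grid_quorum(node, n) for node in range(n)]
# Any two quorums share at least one node: the row of one crosses the column of the other.
all_intersect = all(qa & qb for qa in quorums for qb in quorums)
# Each node belongs to the same number of quorums, so the load is spread evenly.
memberships = [sum(node in q for q in quorums) for node in range(n)]
```

For 16 nodes every quorum has 7 members and every node sits in 7 quorums, so both quantities grow with sqrt(N) rather than N.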

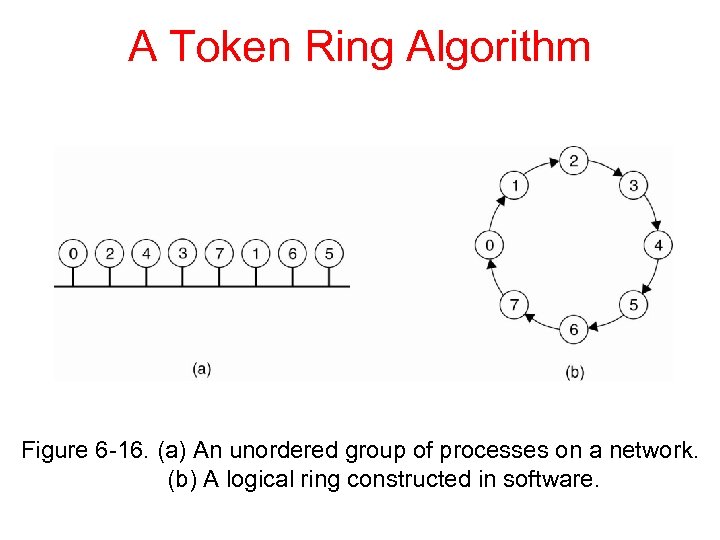

A Token Ring Algorithm Figure 6-16. (a) An unordered group of processes on a network. (b) A logical ring constructed in software.

Tree Algorithm (1) Form a tree of all nodes with the node currently holding the token as the root.
1. Each node maintains a pointer to its current parent and a list of children, along with a queue of requests (initially empty) and a state (holding the token or not).
2. If node n wants to get the lock, it checks whether it has the token. If so, it enters the CS. Otherwise it checks whether its queue is empty; if so, it sends a request to its parent. It then puts itself on the queue.
3. If a node receives a request, has the token, and is not in the CS, it sends the token to the requester and makes the requester its parent. Otherwise it sends a request to its parent (if the parent is not itself) and enqueues the request.
4. When a node is done with the CS or receives the token, it sends the token to the first node in its queue and makes that node its new parent.

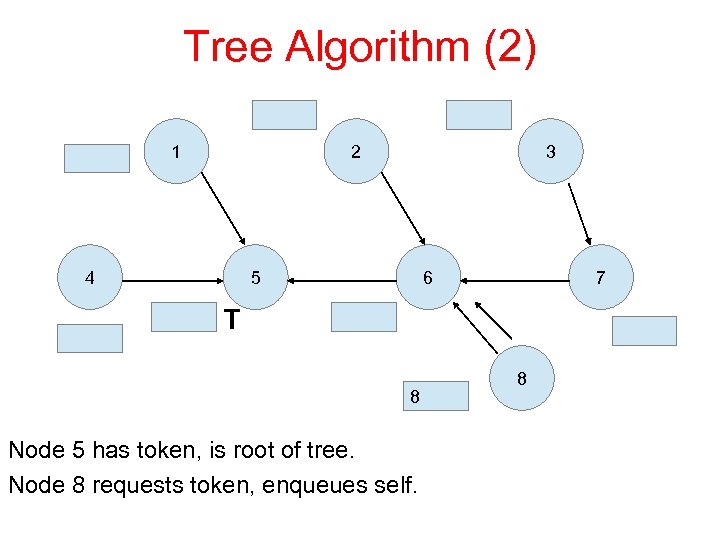

Tree Algorithm (2) 1 2 4 3 5 6 7 T 8 Node 5 has token, is root of tree. Node 8 requests token, enqueues self. 8

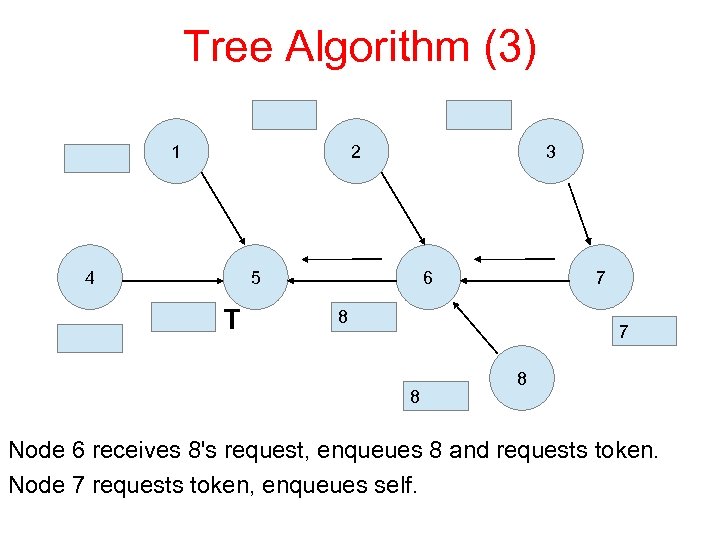

Tree Algorithm (3) 1 2 4 3 5 T 6 7 8 8 Node 6 receives 8's request, enqueues 8 and requests token. Node 7 requests token, enqueues self.

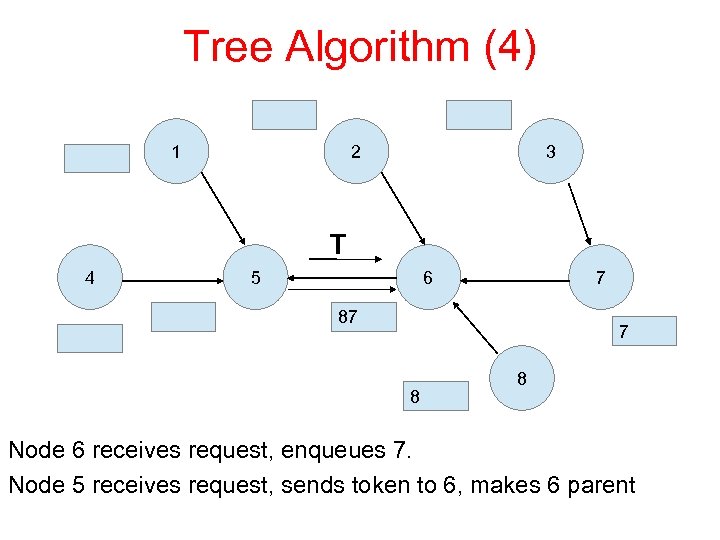

Tree Algorithm (4) 1 2 3 T 4 5 6 7 87 7 8 8 Node 6 receives request, enqueues 7. Node 5 receives request, sends token to 6, makes 6 parent

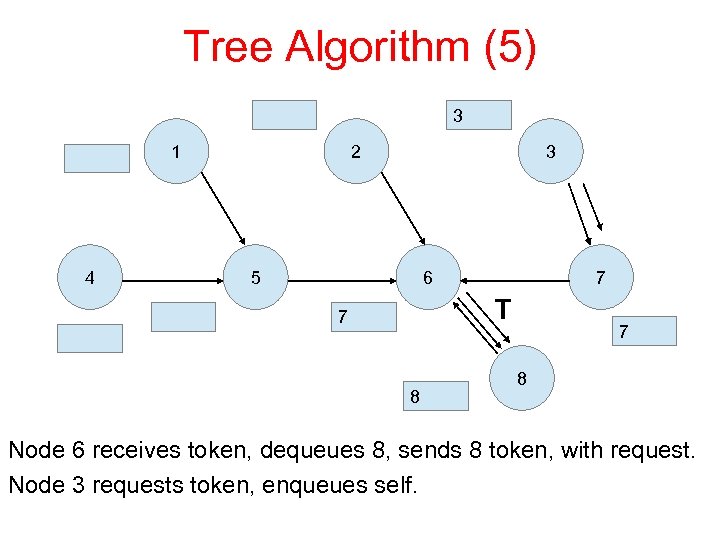

Tree Algorithm (5) 3 1 4 2 3 5 6 7 T 7 8 Node 6 receives token, dequeues 8, sends 8 token, with request. Node 3 requests token, enqueues self.

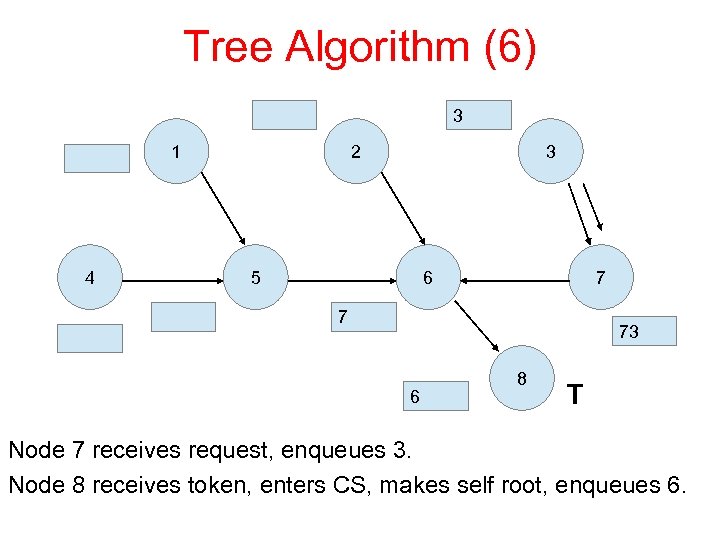

Tree Algorithm (6) 3 1 4 2 3 5 6 7 7 73 6 8 T Node 7 receives request, enqueues 3. Node 8 receives token, enters CS, makes self root, enqueues 6.

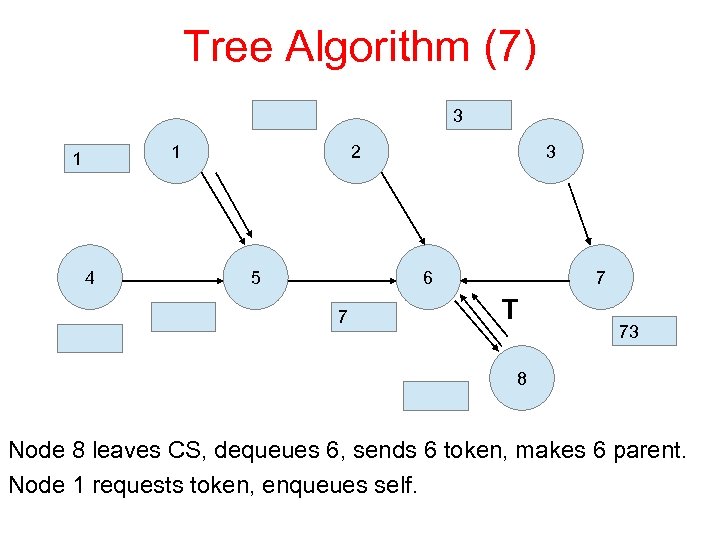

Tree Algorithm (7) 3 1 1 4 2 5 3 6 7 7 T 73 8 Node 8 leaves CS, dequeues 6, sends 6 token, makes 6 parent. Node 1 requests token, enqueues self.

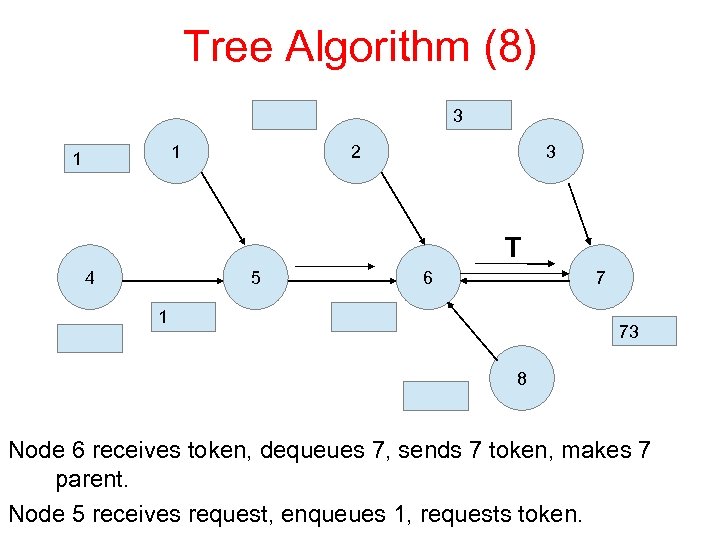

Tree Algorithm (8) 3 1 1 2 3 T 4 5 6 7 1 73 8 Node 6 receives token, dequeues 7, sends 7 token, makes 7 parent. Node 5 receives request, enqueues 1, requests token.

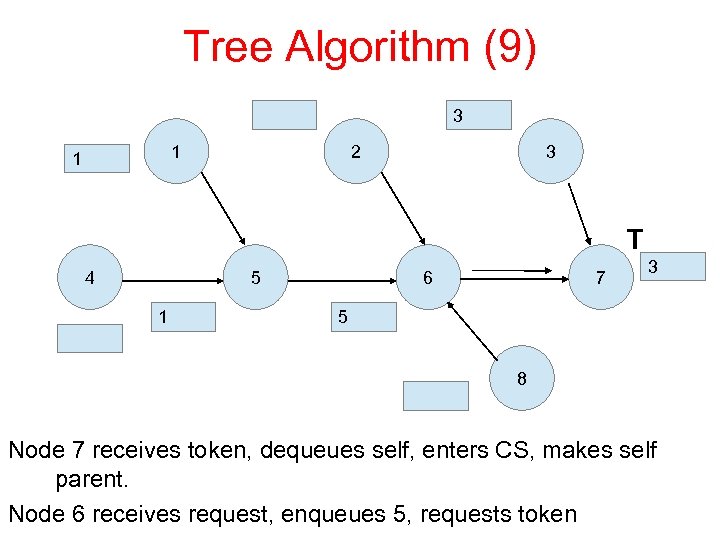

Tree Algorithm (9) 3 1 1 2 3 T 4 5 1 6 7 3 5 8 Node 7 receives token, dequeues self, enters CS, makes self parent. Node 6 receives request, enqueues 5, requests token

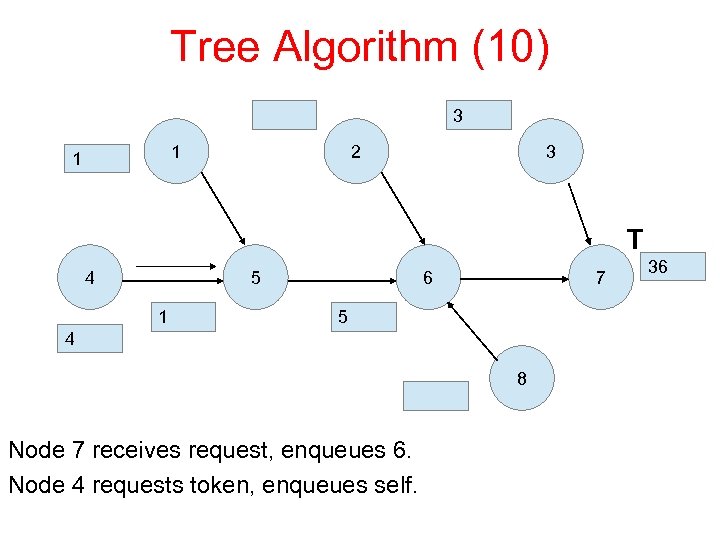

Tree Algorithm (10) 3 1 1 2 3 T 4 5 1 6 7 5 4 8 Node 7 receives request, enqueues 6. Node 4 requests token, enqueues self. 36

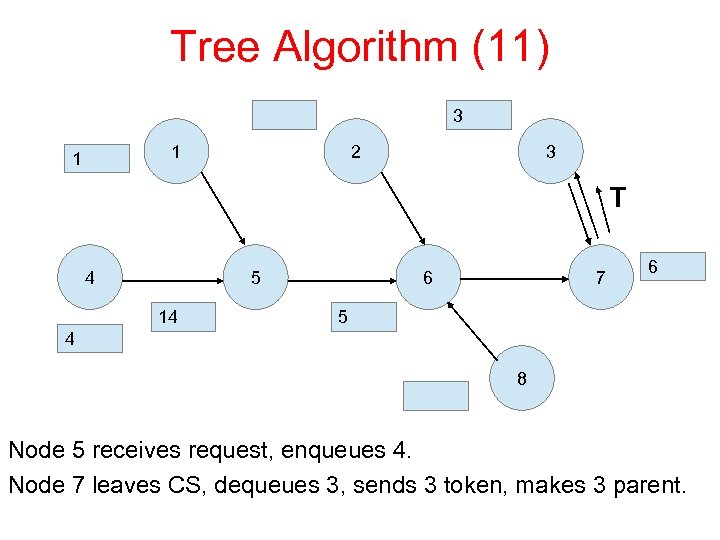

Tree Algorithm (11) 3 1 1 2 3 T 4 5 14 6 7 6 5 4 8 Node 5 receives request, enqueues 4. Node 7 leaves CS, dequeues 3, sends 3 token, makes 3 parent.

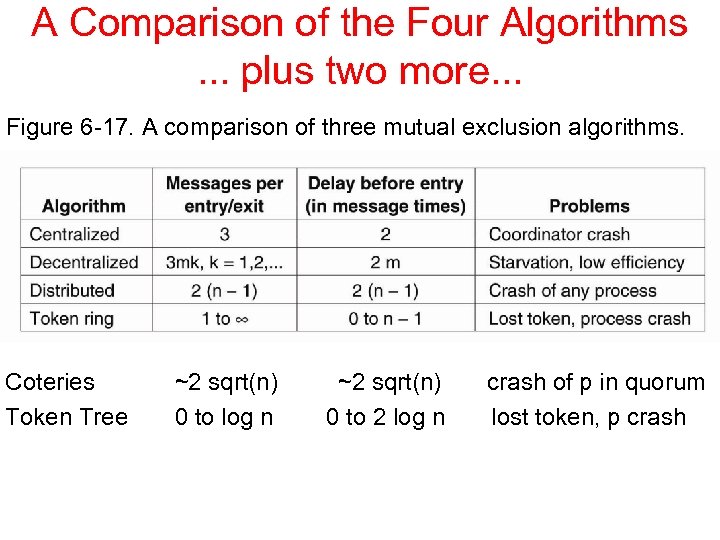

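A Comparison of the Four Algorithms... plus two more... Figure 6-17. A comparison of three mutual exclusion algorithms. The two rows added on the slide, in the column order of the figure:

| Algorithm | Messages per entry/exit | Delay before entry (in message times) | Problems |
|---|---|---|---|
| Coteries | ~2 sqrt(n) | ~2 sqrt(n) | Crash of p in quorum |
| Token tree | 0 to log n | 0 to 2 log n | Lost token, p crash |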

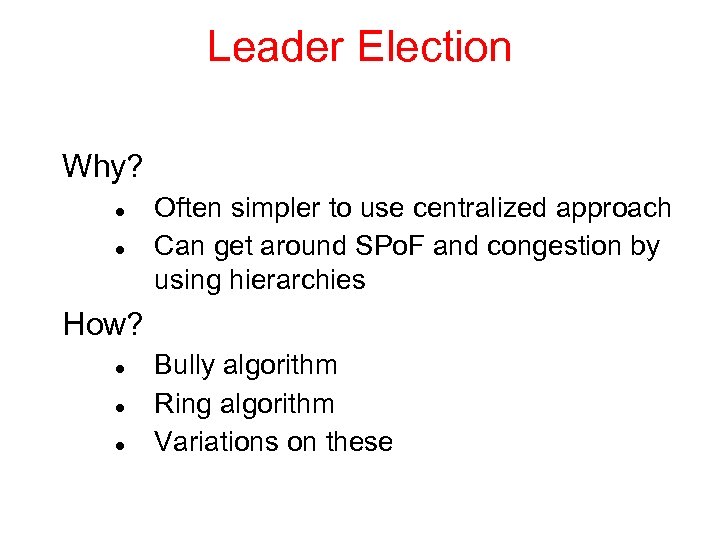

Leader Election
- Why?
  - Often simpler to use a centralized approach
  - Can get around the single point of failure (SPoF) and congestion by using hierarchies
- How?
  - Bully algorithm
  - Ring algorithm
  - Variations on these

Election Algorithms The Bully Algorithm
1. P sends an ELECTION message to all processes with higher numbers.
2. If no one responds, P wins the election and becomes coordinator.
3. If one of the higher-ups answers, it takes over. P’s job is done.
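The three rules can be traced with a small simulation. This sketch abstracts away real messages and timeouts: "responding" simply means being in the `alive` set, every responder goes on to hold its own election, and the function name and the eight-process setup are assumptions for illustration.

```python
def bully_election(initiator, all_ids, alive):
    """Return the coordinator chosen when `initiator` starts an election.

    all_ids: IDs of every process in the group
    alive:   set of IDs that are currently up (must contain the initiator)
    """
    pending = [initiator]     # processes that still have to hold an election
    held = set()              # processes that have already held one
    coordinator = None
    while pending:
        p = pending.pop()
        if p in held:
            continue
        held.add(p)
        # Step 1: p sends ELECTION to every higher-numbered process.
        responders = [q for q in all_ids if q > p and q in alive]
        if not responders:
            coordinator = p             # step 2: nobody answered, p wins
        else:
            pending.extend(responders)  # step 3: the higher-ups take over
    return coordinator


# Processes 0..7, with process 7 (assumed to be the old coordinator) down.
winner = bully_election(4, range(8), {0, 1, 2, 3, 4, 5, 6})
```

Only the highest-numbered live process can find no responders, so whichever process starts the election, the same coordinator is chosen.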

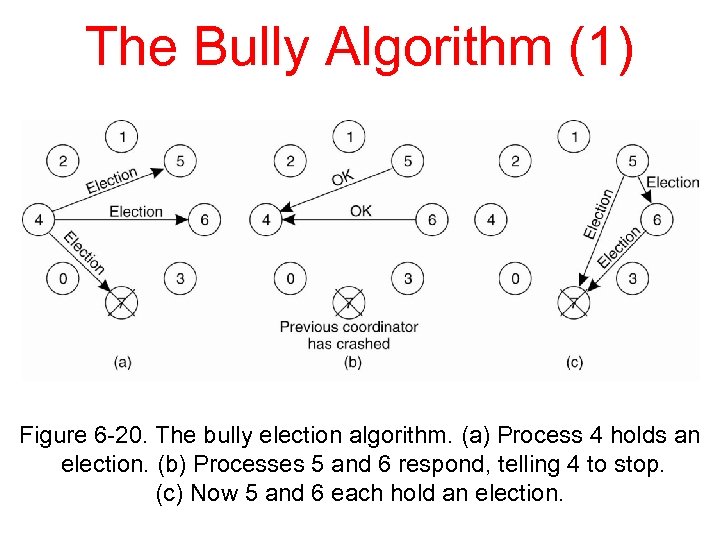

The Bully Algorithm (1) Figure 6 -20. The bully election algorithm. (a) Process 4 holds an election. (b) Processes 5 and 6 respond, telling 4 to stop. (c) Now 5 and 6 each hold an election.

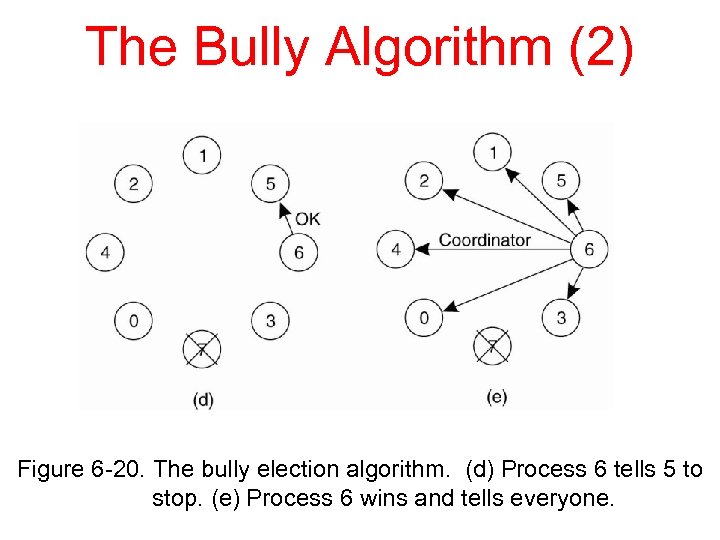

The Bully Algorithm (2) Figure 6 -20. The bully election algorithm. (d) Process 6 tells 5 to stop. (e) Process 6 wins and tells everyone.

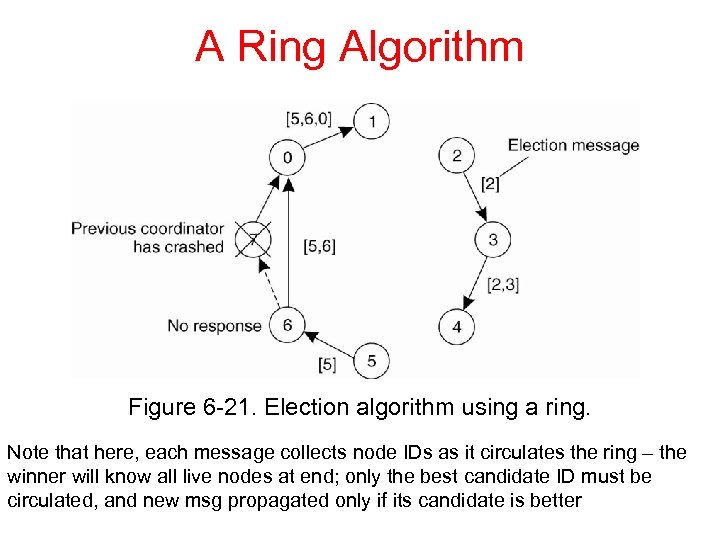

A Ring Algorithm Figure 6 -21. Election algorithm using a ring. Note that here, each message collects node IDs as it circulates the ring – the winner will know all live nodes at end; only the best candidate ID must be circulated, and new msg propagated only if its candidate is better
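The variant in which only the best candidate ID circulates can be simulated with a message queue. The sketch below assumes that a higher ID is a better candidate, that the ring contains only live nodes, and that messages are processed one at a time in FIFO order; the function name is invented, and the final "results" message that announces the winner is not modeled.

```python
from collections import deque


def ring_election(ring, initiators):
    """Simulate the election; return (winner, election messages sent).

    ring:       live node IDs in ring order (each node sends to the next entry)
    initiators: nodes that start an election on their own
    """
    n = len(ring)
    best = {}        # node -> best candidate seen, for nodes already in the election
    inbox = deque()  # (index of receiving node, candidate ID)
    for node in initiators:
        best[node] = node
        inbox.append(((ring.index(node) + 1) % n, node))
    messages = len(inbox)
    while inbox:
        idx, cand = inbox.popleft()
        node = ring[idx]
        if cand == node:
            return node, messages        # own ID came back: this node has won
        if node not in best:
            best[node] = max(node, cand) # enter the election on first message
        elif cand > best[node]:
            best[node] = cand            # better candidate: pass it on
        else:
            continue                     # no improvement: suppress the message
        inbox.append(((idx + 1) % n, best[node]))
        messages += 1
    return None, messages


# Nodes 0..6 are alive; nodes 6 and 2 both start an election.
winner, sent = ring_election([0, 1, 2, 3, 4, 5, 6], [6, 2])
```

Messages carrying weaker candidates die out as soon as they reach a node that has already seen a better one, which is what keeps the message count low when several nodes start elections at once.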

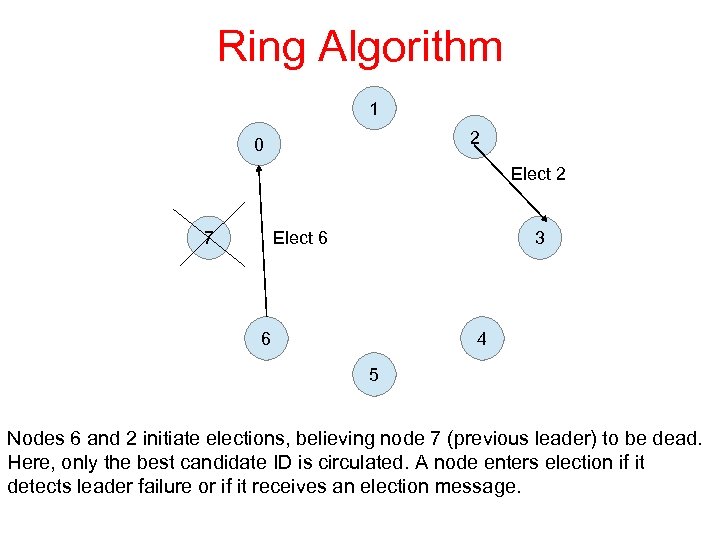

Ring Algorithm 1 2 0 Elect 2 Elect 6 7 3 6 4 5 Nodes 6 and 2 initiate elections, believing node 7 (previous leader) to be dead. Here, only the best candidate ID is circulated. A node enters election if it detects leader failure or if it receives an election message.

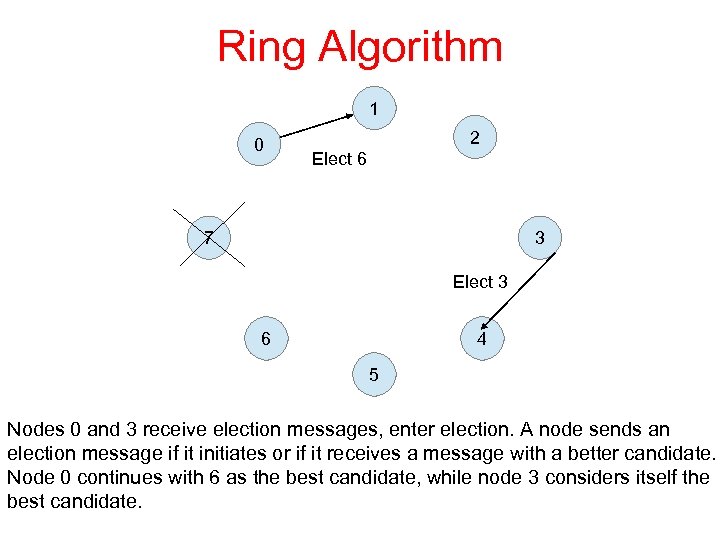

Ring Algorithm 1 0 2 Elect 6 7 3 Elect 3 6 4 5 Nodes 0 and 3 receive election messages, enter election. A node sends an election message if it initiates or if it receives a message with a better candidate. Node 0 continues with 6 as the best candidate, while node 3 considers itself the best candidate.

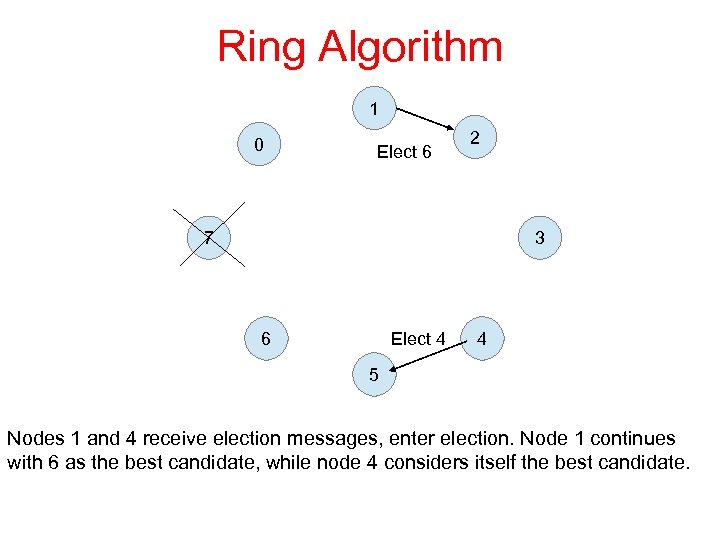

Ring Algorithm 1 0 Elect 6 2 7 3 Elect 4 6 4 5 Nodes 1 and 4 receive election messages, enter election. Node 1 continues with 6 as the best candidate, while node 4 considers itself the best candidate.

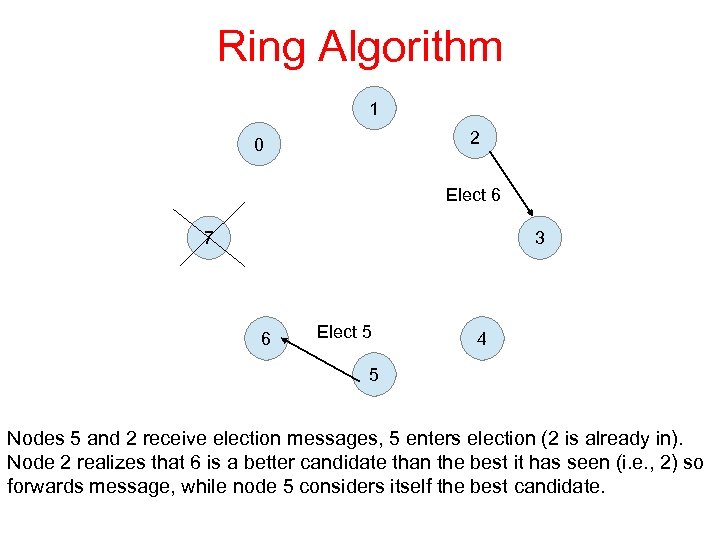

Ring Algorithm 1 2 0 Elect 6 7 3 6 Elect 5 4 5 Nodes 5 and 2 receive election messages, 5 enters election (2 is already in). Node 2 realizes that 6 is a better candidate than the best it has seen (i. e. , 2) so forwards message, while node 5 considers itself the best candidate.

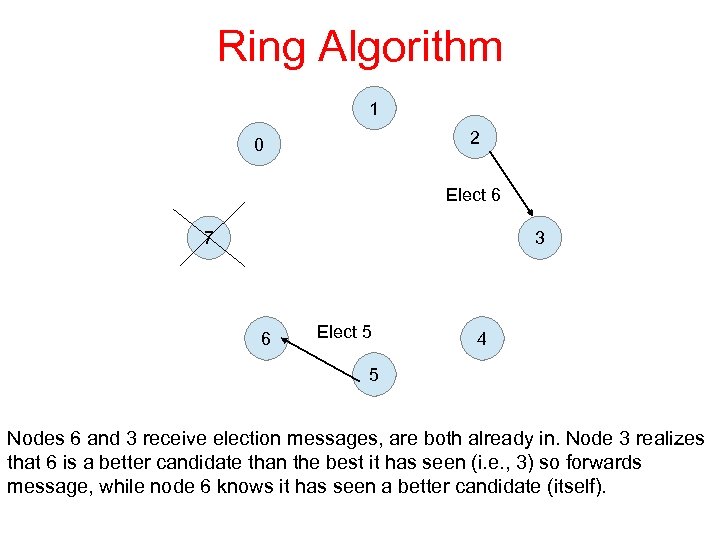

Ring Algorithm 1 2 0 Elect 6 7 3 6 Elect 5 4 5 Nodes 6 and 3 receive election messages, are both already in. Node 3 realizes that 6 is a better candidate than the best it has seen (i. e. , 3) so forwards message, while node 6 knows it has seen a better candidate (itself).

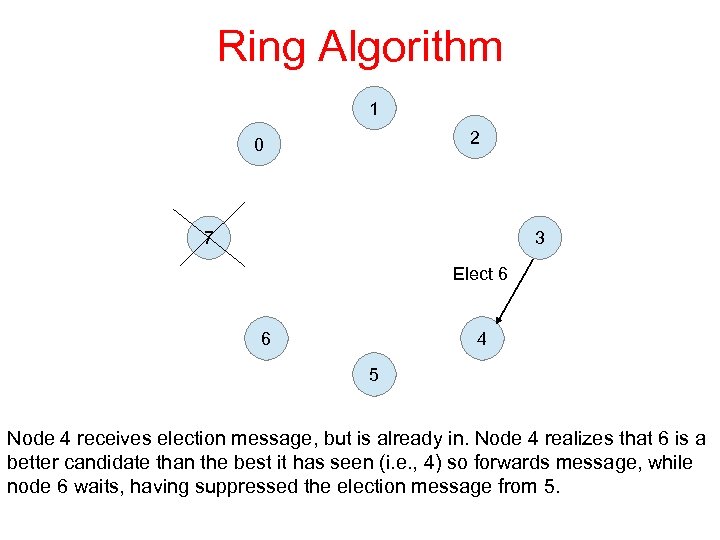

Ring Algorithm 1 2 0 7 3 Elect 6 6 4 5 Node 4 receives election message, but is already in. Node 4 realizes that 6 is a better candidate than the best it has seen (i. e. , 4) so forwards message, while node 6 waits, having suppressed the election message from 5.

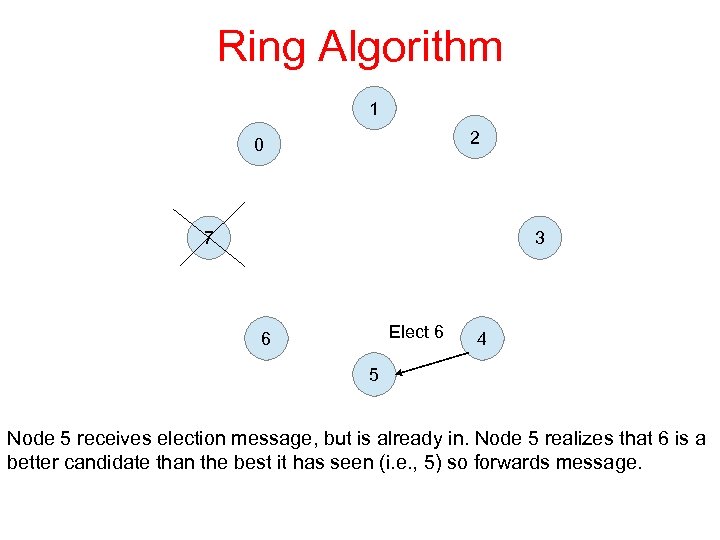

Ring Algorithm 1 2 0 7 3 Elect 6 6 4 5 Node 5 receives election message, but is already in. Node 5 realizes that 6 is a better candidate than the best it has seen (i. e. , 5) so forwards message.

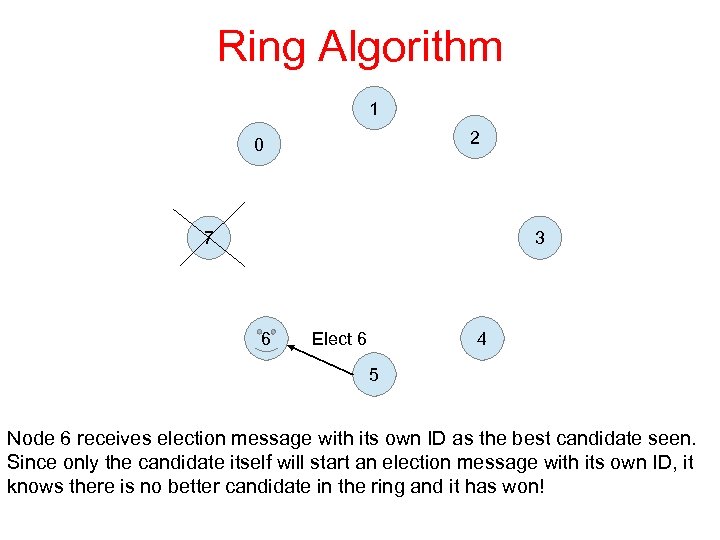

Ring Algorithm 1 2 0 7 3 6 4 Elect 6 5 Node 6 receives election message with its own ID as the best candidate seen. Since only the candidate itself will start an election message with its own ID, it knows there is no better candidate in the ring and it has won!

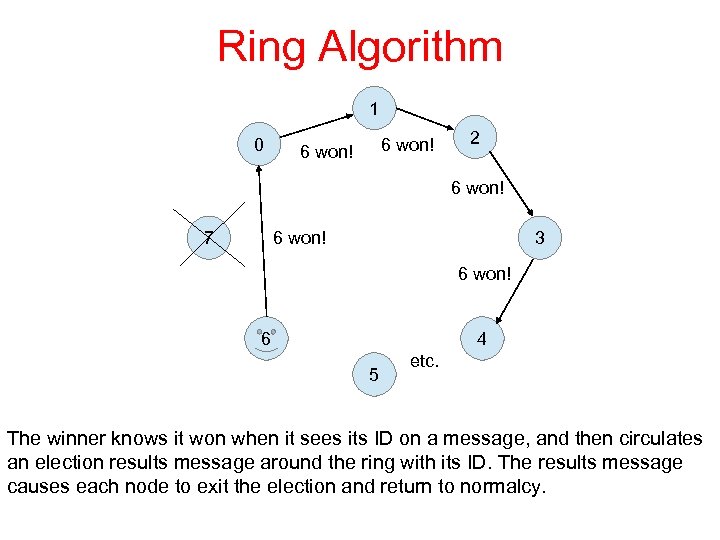

Ring Algorithm 1 0 6 won! 2 6 won! 7 3 6 won! 6 4 5 etc. The winner knows it won when it sees its ID on a message, and then circulates an election results message around the ring with its ID. The results message causes each node to exit the election and return to normalcy.

Improving Elections are generally held when a node fails.
1. Use heartbeats to detect failure: make “better” candidates more sensitive (i.e., time out sooner) so only one node starts an election.
2. The node starting an election can just contact the best candidate it believes to be alive, plus the better ones it thinks have failed (to be sure).
3. To avoid lengthy delays, the initiator can contact the K best candidates, or
4. If the best “live” candidate(s) don't respond, it can escalate to contact more, lower-tier candidates.

Elections vs. DME While both types of protocol end up selecting a single winner, there are some important differences:
1. A DME participant keeps trying until it wins; this is not needed in leader election (any winner will do).
2. There is no issue of fairness in leader election.
3. All nodes need to know the winner when leader election is done; this is not needed in DME.
4. Hence DME can be more message-efficient (i.e., sublinear) compared to leader election.

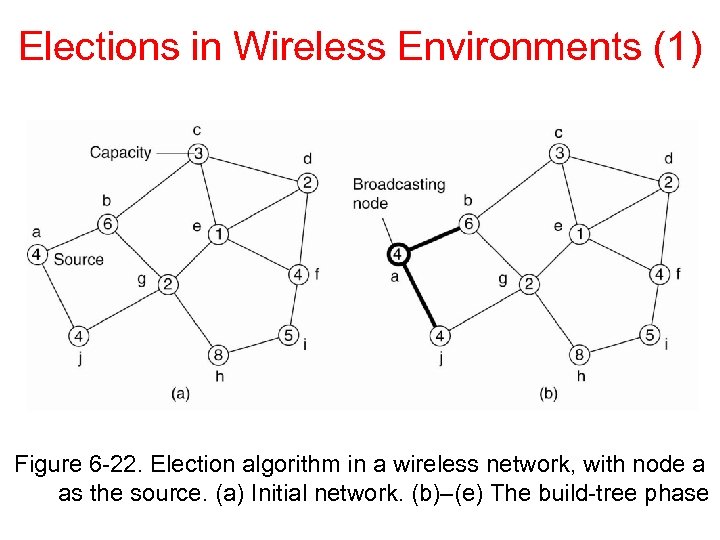

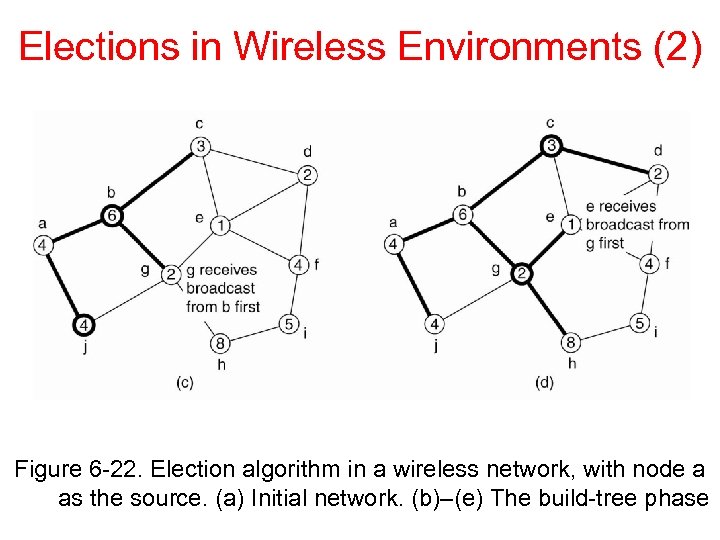

Elections in Wireless Environments (1) Figure 6-22. Election algorithm in a wireless network, with node a as the source. (a) Initial network. (b)–(e) The build-tree phase.

Elections in Wireless Environments (2) Figure 6-22. Election algorithm in a wireless network, with node a as the source. (a) Initial network. (b)–(e) The build-tree phase.

Elections in Wireless Environments (3) Figure 6-22. (e) The build-tree phase. (f) Reporting of best node to source.

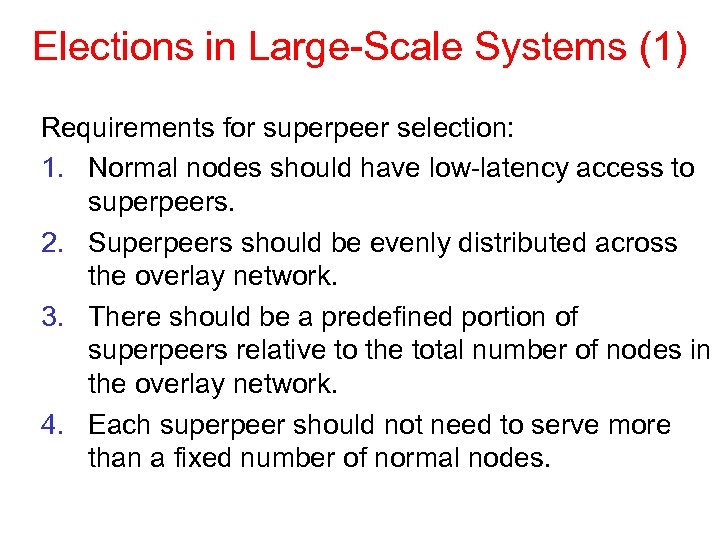

Elections in Large-Scale Systems (1) Requirements for superpeer selection: 1. Normal nodes should have low-latency access to superpeers. 2. Superpeers should be evenly distributed across the overlay network. 3. There should be a predefined portion of superpeers relative to the total number of nodes in the overlay network. 4. Each superpeer should not need to serve more than a fixed number of normal nodes.

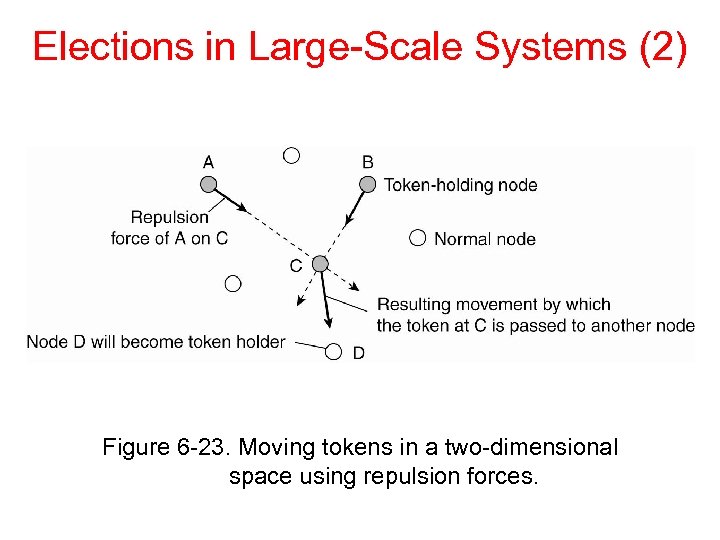

Elections in Large-Scale Systems (2) Figure 6 -23. Moving tokens in a two-dimensional space using repulsion forces.
