
- Number of slides: 29

Distributed Self-Propelled Instrumentation

Alex Mirgorodskiy (VMware, Inc.) and Barton P. Miller (University of Wisconsin-Madison)

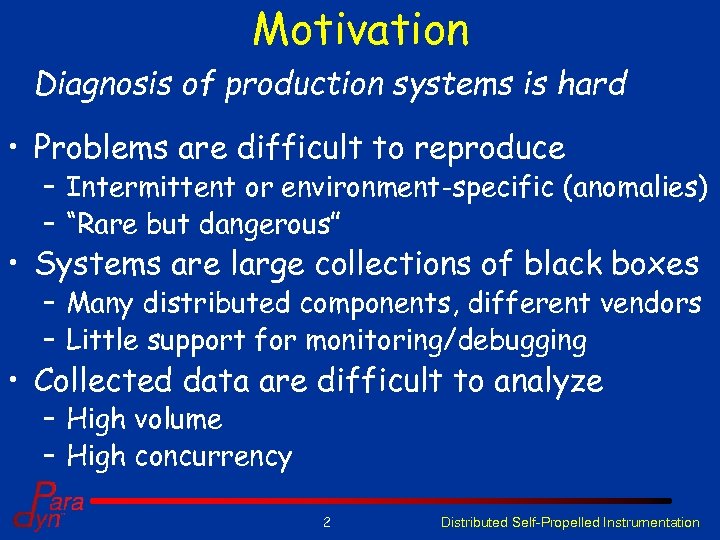

Motivation

Diagnosis of production systems is hard

- Problems are difficult to reproduce
  - Intermittent or environment-specific (anomalies)
  - “Rare but dangerous”
- Systems are large collections of black boxes
  - Many distributed components, different vendors
  - Little support for monitoring/debugging
- Collected data are difficult to analyze
  - High volume
  - High concurrency

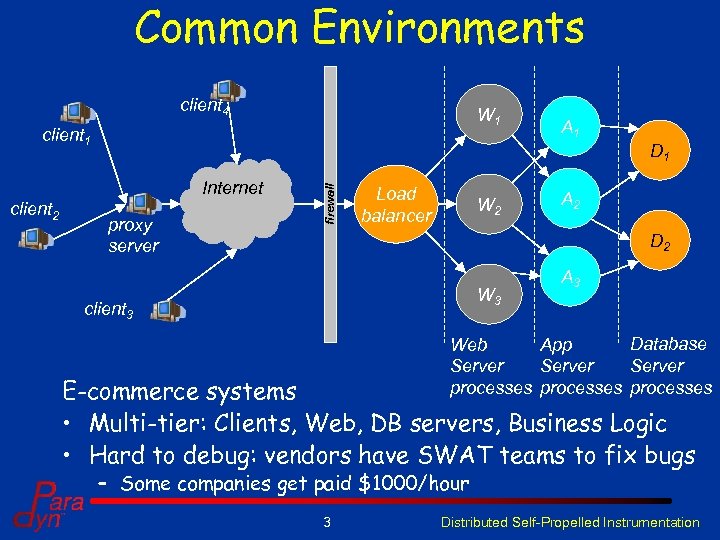

Common Environments

[Diagram: clients 1-4, a proxy server, a firewall, the Internet, a load balancer, and Web (W1-W3), App (A1-A3), and Database (D1-D2) server processes]

E-commerce systems

- Multi-tier: Clients, Web, DB servers, Business Logic
- Hard to debug: vendors have SWAT teams to fix bugs
  - Some companies get paid $1000/hour

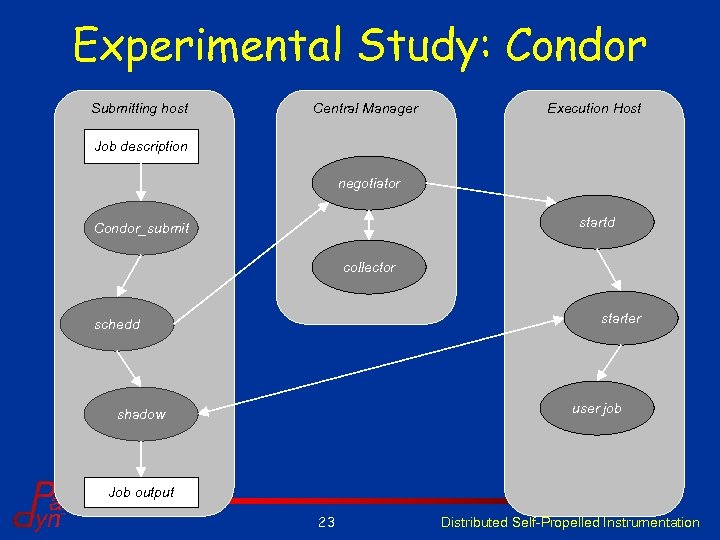

Common Environments

[Diagram: a submitting host, a central manager, and an execution host running the processes submit, schedd, shadow, negotiator, collector, startd, starter, and the user job; the input is a job description]

- Clusters and HPC systems
  - Large-scale: failures happen often (MTTF: 30-150 hours)
  - Complex: processing a Condor job involves 10+ processes
- The Grid: beyond a single supercomputer
  - Decentralized
  - Heterogeneous: different schedulers, architectures
- Hard to detect failures, let alone debug them

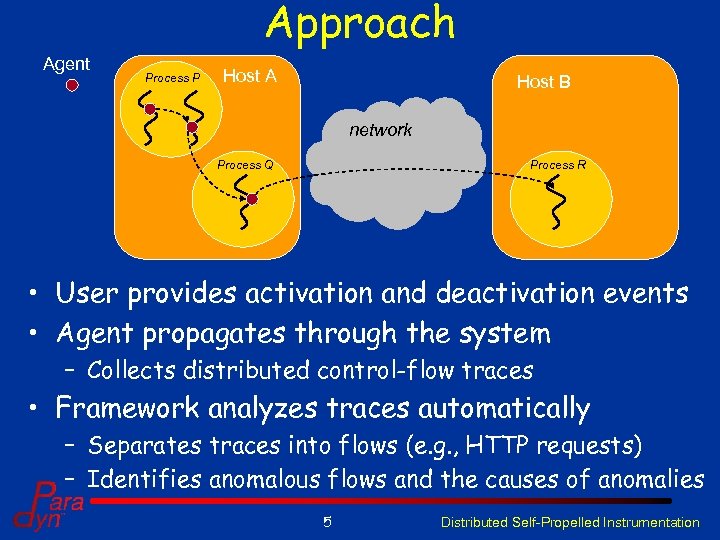

Approach

[Diagram: the agent in Process P on Host A, connected over the network to Process Q and Process R on Host B]

- User provides activation and deactivation events
- Agent propagates through the system
  - Collects distributed control-flow traces
- Framework analyzes traces automatically
  - Separates traces into flows (e.g., HTTP requests)
  - Identifies anomalous flows and the causes of anomalies

Self-Propelled Instrumentation: Overview

- The agent sits inside the process
  - Agent = small code fragment
- The agent propagates through the code
  - Receives control
  - Inserts calls to itself ahead of the control flow
  - Crosses process, host, and kernel boundaries
  - Returns control to the application
- Key features
  - On-demand distributed deployment
  - Application-agnostic distributed deployment

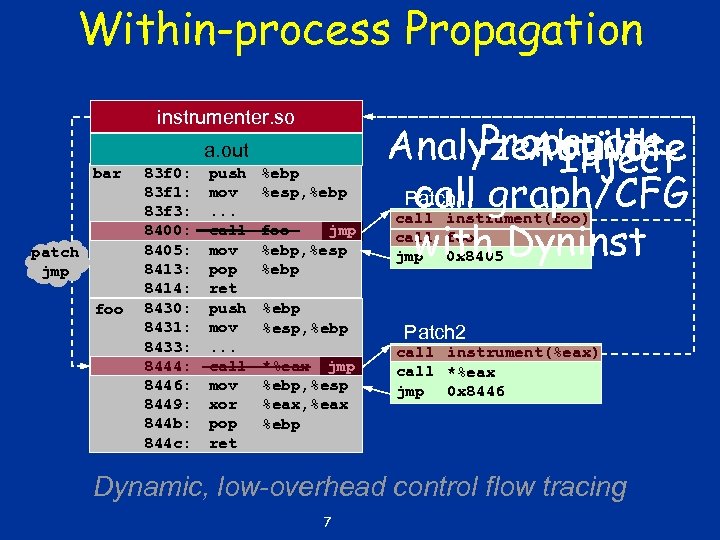

Within-process Propagation

[Figure: disassembly of a.out showing function bar (0x83f0-0x8414), which contains "call foo" at 0x8400, and function foo (0x8430-0x844c), which contains "call *%eax" at 0x8444. Each call is replaced by a jmp to a patch in instrumenter.so]

- Steps labeled in the figure: Inject, Activate, Analyze (build call graph/CFG with Dyninst), Patch, Propagate
- Patch 1: call instrument(foo); call foo; jmp 0x8405
- Patch 2: call instrument(%eax); call *%eax; jmp 0x8446

Dynamic, low-overhead control flow tracing
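
The propagation idea on this slide can be illustrated with a toy simulation. This is only a sketch under strong assumptions: the program is modeled as a made-up call graph in a Python dict, and "patching" is modeled by adding a function name to a set, whereas the real agent rewrites machine code. The names `PROGRAM`, `Agent`, `instrument`, and `run` are hypothetical.

```python
# Toy model: each function maps to the callees it invokes, in order.
# The functions and the call graph are invented for illustration.
PROGRAM = {
    "main": ["bar", "baz"],
    "bar": ["foo"],
    "foo": [],
    "baz": ["foo"],
    "unused": [],  # never reached, so it is never instrumented
}


class Agent:
    """Simulated agent that instruments code just ahead of control flow."""

    def __init__(self, program):
        self.program = program
        self.patched = set()  # functions whose call sites were patched
        self.trace = []       # recorded control-flow events

    def instrument(self, func):
        """Invoked on entry to func: patch its call sites once, then trace."""
        if func not in self.patched:
            # Stand-in for analyzing func and patching each call site so
            # the agent regains control before every callee executes.
            self.patched.add(func)
        self.trace.append(("enter", func))

    def run(self, func):
        """Execute func; each patched call site calls the agent first."""
        self.instrument(func)
        for callee in self.program[func]:
            self.run(callee)
        self.trace.append(("exit", func))


agent = Agent(PROGRAM)
agent.run("main")
print(agent.trace)
print(sorted(agent.patched))
```

Only functions that control flow actually reaches end up in `patched`, which is the on-demand property the slide emphasizes.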

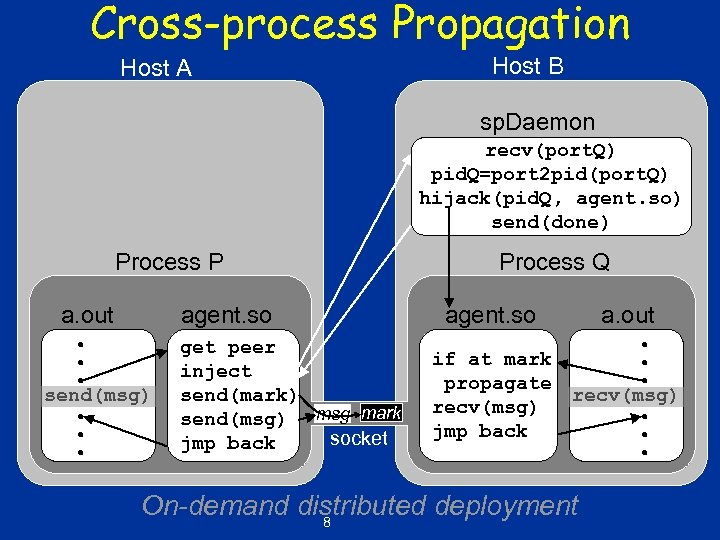

Cross-process Propagation

[Diagram: Process P on Host A sends a message over a socket to Process Q on Host B; each process consists of a.out and agent.so, and spDaemon runs on Host B]

- Process P (agent.so), on send(msg): get peer, inject, send(mark), send(msg), jmp back
- spDaemon: recv(portQ); pidQ = port2pid(portQ); hijack(pidQ, agent.so); send(done)
- Process Q (agent.so), on recv(msg): if at mark, propagate; jmp back

On-demand distributed deployment

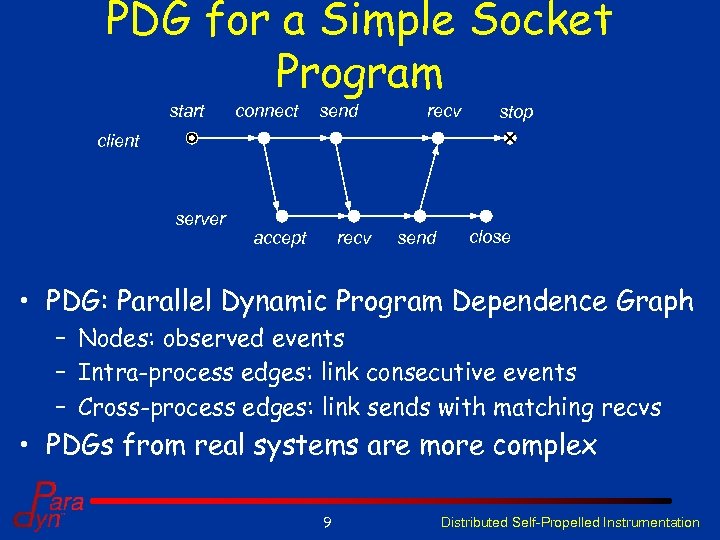

PDG for a Simple Socket Program

[Diagram: client events start, connect, send, recv, stop; server events accept, recv, send, close]

- PDG: Parallel Dynamic Program Dependence Graph
  - Nodes: observed events
  - Intra-process edges: link consecutive events
  - Cross-process edges: link sends with matching recvs
- PDGs from real systems are more complex
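
A minimal sketch of how such a graph could be assembled from per-process traces. The trace format, the message ids used to match a send with its recv, and the names `TRACES` and `build_pdg` are assumptions made for illustration; the slides do not specify how sends and recvs are matched.

```python
# Hypothetical per-process traces: (event name, message id or None).
# A shared message id ties a send to its matching recv.
TRACES = {
    "client": [("start", None), ("connect", None), ("send", "m1"),
               ("recv", "m2"), ("stop", None)],
    "server": [("accept", None), ("recv", "m1"), ("send", "m2"),
               ("close", None)],
}


def build_pdg(traces):
    """Return a list of edges (src, dst, kind); nodes are (process, index)."""
    edges = []
    sends, recvs = {}, {}
    for proc, events in traces.items():
        for i, (name, msg) in enumerate(events):
            if i > 0:
                # Intra-process edge: link consecutive events.
                edges.append(((proc, i - 1), (proc, i), "intra"))
            if name == "send":
                sends[msg] = (proc, i)
            elif name == "recv":
                recvs[msg] = (proc, i)
    # Cross-process edges: link each send with its matching recv.
    for msg, src in sends.items():
        if msg in recvs:
            edges.append((src, recvs[msg], "cross"))
    return edges


pdg = build_pdg(TRACES)
for edge in pdg:
    print(edge)
```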

PDG for One Condor Job

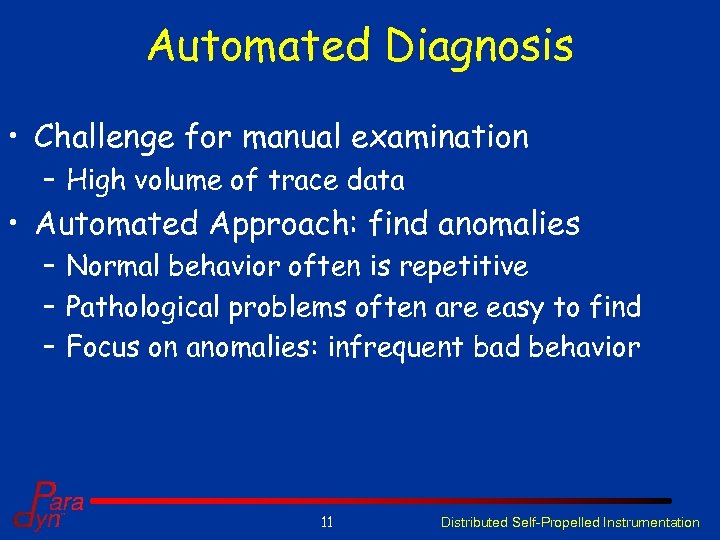

Automated Diagnosis

- Challenge for manual examination
  - High volume of trace data
- Automated approach: find anomalies
  - Normal behavior often is repetitive
  - Pathological problems often are easy to find
  - Focus on anomalies: infrequent bad behavior

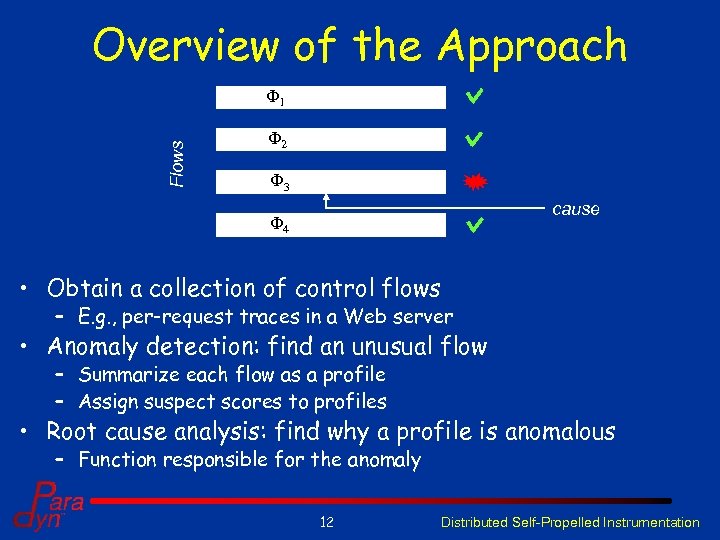

Overview of the Approach

[Diagram: flows Φ1, Φ2, Φ3, Φ4, with the cause marked]

- Obtain a collection of control flows
  - E.g., per-request traces in a Web server
- Anomaly detection: find an unusual flow
  - Summarize each flow as a profile
  - Assign suspect scores to profiles
- Root cause analysis: find why a profile is anomalous
  - Function responsible for the anomaly

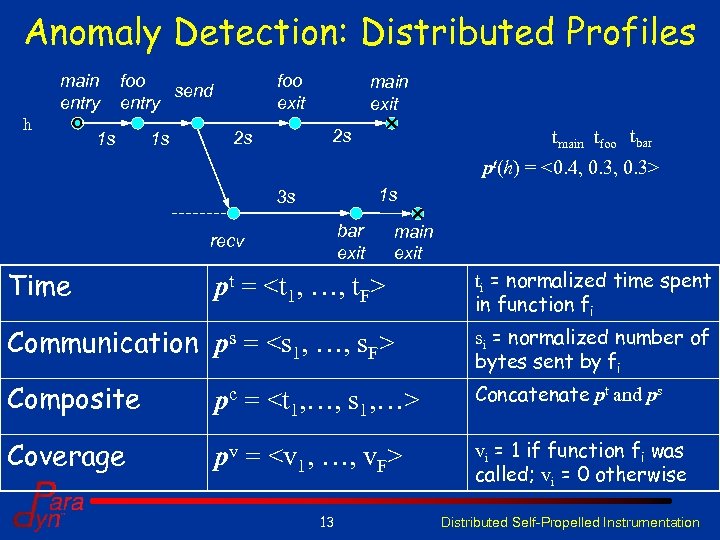

Anomaly Detection: Distributed Profiles

[Diagram: timeline of a flow through main, foo, and bar with entry, exit, send, and recv events and per-function times $t_{main}$, $t_{foo}$, $t_{bar}$; example profile $p_t(h) = \langle 0.4, 0.3 \rangle$]

- Time profile: $p_t = \langle t_1, \ldots, t_F \rangle$, where $t_i$ is the normalized time spent in function $f_i$
- Communication profile: $p_s = \langle s_1, \ldots, s_F \rangle$, where $s_i$ is the normalized number of bytes sent by $f_i$
- Composite profile: $p_c = \langle t_1, \ldots, t_F, s_1, \ldots, s_F \rangle$, the concatenation of $p_t$ and $p_s$
- Coverage profile: $p_v = \langle v_1, \ldots, v_F \rangle$, where $v_i = 1$ if function $f_i$ was called and $v_i = 0$ otherwise
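
The four profiles can be sketched in a few lines. This assumes "normalized" means dividing each entry by the sum over all functions, which the slide does not state explicitly; the function list and the measurements are made up.

```python
def normalize(values):
    """Scale values so they sum to 1 (all zeros if the total is zero)."""
    total = sum(values)
    return [v / total if total else 0.0 for v in values]


def time_profile(times, functions):
    """p_t: normalized time spent in each function."""
    return normalize([times.get(f, 0.0) for f in functions])


def comm_profile(bytes_sent, functions):
    """p_s: normalized number of bytes sent by each function."""
    return normalize([bytes_sent.get(f, 0.0) for f in functions])


def coverage_profile(called, functions):
    """p_v: 1 if the function was called in this flow, 0 otherwise."""
    return [1 if f in called else 0 for f in functions]


def composite_profile(p_t, p_s):
    """p_c: concatenation of the time and communication profiles."""
    return p_t + p_s


# Hypothetical measurements for one flow.
FUNCTIONS = ["main", "foo", "bar", "baz"]
times = {"main": 1.0, "foo": 2.0, "bar": 1.0}   # seconds
sent = {"foo": 300, "bar": 100}                  # bytes

p_t = time_profile(times, FUNCTIONS)
p_s = comm_profile(sent, FUNCTIONS)
p_v = coverage_profile(set(times), FUNCTIONS)
p_c = composite_profile(p_t, p_s)
print(p_t, p_s, p_v, p_c, sep="\n")
```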

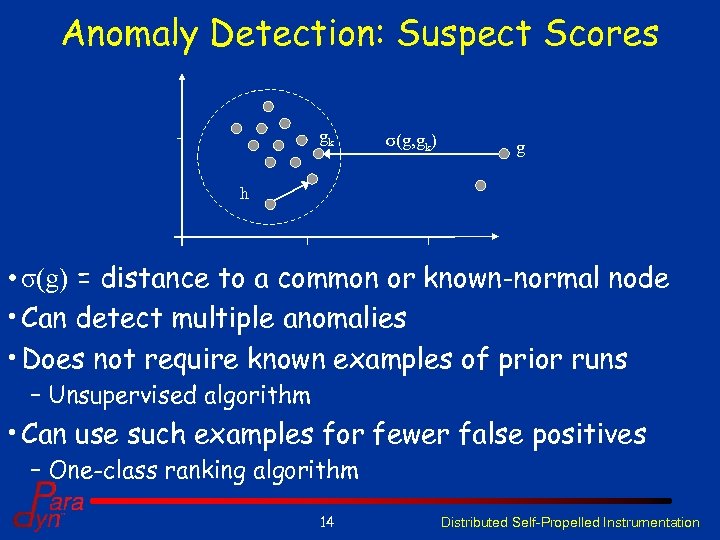

Anomaly Detection: Suspect Scores

[Diagram: profiles $g$, $g_k$, and $h$, with the distance $\sigma(g, g_k)$]

- $\sigma(g)$ = distance to a common or known-normal node
- Can detect multiple anomalies
- Does not require known examples of prior runs
  - Unsupervised algorithm
- Can use such examples for fewer false positives
  - One-class ranking algorithm
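
A hedged sketch of the scoring idea. The slide gives neither the distance metric nor a precise definition of "common", so this example assumes Manhattan distance and takes "common" to mean the k-th nearest other profile. The profile values and the names `manhattan` and `suspect_scores` are illustrative only.

```python
def manhattan(p, q):
    """Assumed distance metric between two profiles of equal length."""
    return sum(abs(a - b) for a, b in zip(p, q))


def suspect_scores(profiles, normals=(), k=1):
    """Score each profile by its distance to the k-th nearest other
    profile, or to the nearest known-normal profile if that is closer."""
    scores = {}
    for name, g in profiles.items():
        dists = sorted(manhattan(g, h)
                       for other, h in profiles.items() if other != name)
        score = dists[k - 1]
        for n in normals:
            score = min(score, manhattan(g, n))
        scores[name] = score
    return scores


# Hypothetical two-function profiles for four flows.
PROFILES = {
    "flow1": [0.5, 0.5],
    "flow2": [0.5, 0.5],
    "flow3": [0.0, 1.0],
    "flow4": [1.0, 0.0],
}

unsupervised = suspect_scores(PROFILES)
one_class = suspect_scores(PROFILES, normals=[[1.0, 0.0]])
print(unsupervised)
print(one_class)
```

Without prior knowledge, flow3 and flow4 both look unusual. Supplying a known-normal profile that resembles flow4 lowers its score to zero, leaving flow3 as the only suspect.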

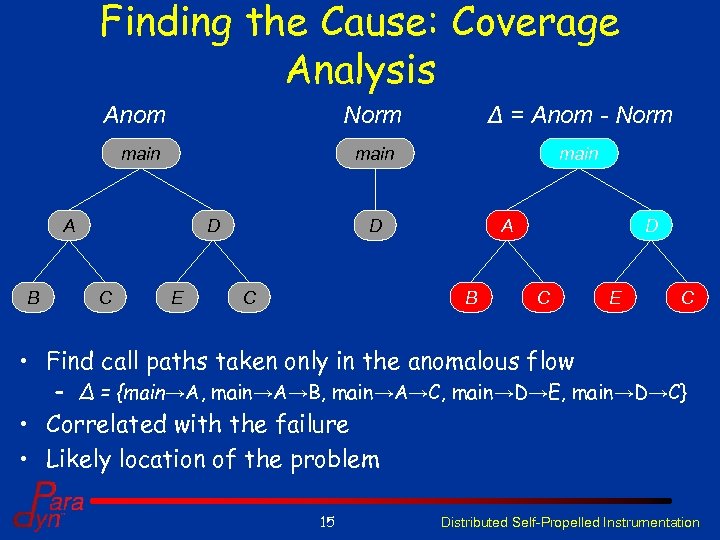

Finding the Cause: Coverage Analysis

[Diagram: call trees of the anomalous flow (Anom) and the normal flow (Norm), and their difference Δ = Anom - Norm]

- Find call paths taken only in the anomalous flow
  - Δ = {main→A, main→A→B, main→A→C, main→D→E, main→D→C}
- Correlated with the failure
- Likely location of the problem
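
The set difference can be sketched as follows. The anomalous tree is chosen to be consistent with the Δ listed on the slide, but the normal tree is an assumption, since its exact shape is not recoverable from the extracted text. The nested-dict representation and the `paths` helper are hypothetical.

```python
def paths(tree, prefix=()):
    """Yield every call path (as a tuple) in a nested-dict call tree."""
    for func, children in tree.items():
        path = prefix + (func,)
        yield path
        yield from paths(children, path)


# Call tree of the anomalous flow, consistent with the slide's Δ.
ANOM = {"main": {"A": {"B": {}, "C": {}}, "D": {"E": {}, "C": {}}}}
# Assumed call tree of the normal flow (not recoverable from the slide).
NORM = {"main": {"D": {}}}

# Δ = call paths present only in the anomalous flow.
delta = set(paths(ANOM)) - set(paths(NORM))
for p in sorted(delta):
    print("→".join(p))
```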

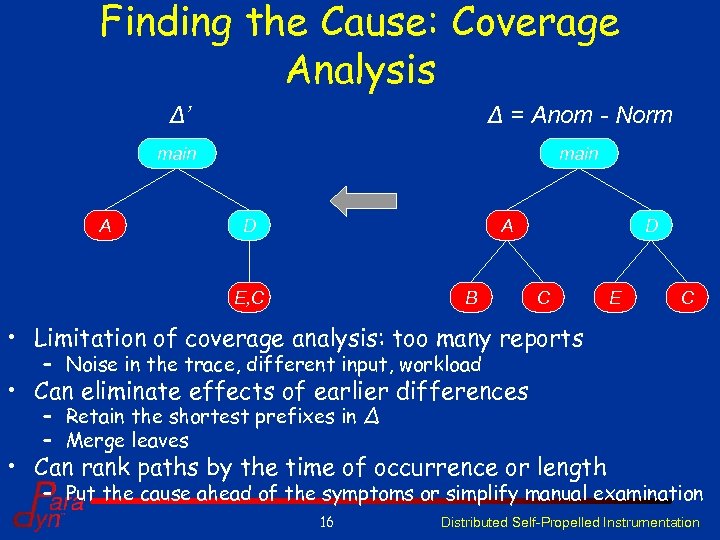

Finding the Cause: Coverage Analysis

[Diagram: Δ = Anom - Norm and its filtered form Δ′, with nodes main, A, D, and the merged leaf "E, C"]

- Limitation of coverage analysis: too many reports
  - Noise in the trace, different input, workload
- Can eliminate effects of earlier differences
  - Retain the shortest prefixes in Δ
  - Merge leaves
- Can rank paths by the time of occurrence or length
  - Put the cause ahead of the symptoms or simplify manual examination
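
The two filtering steps might look like this in code. The sketch starts from the Δ given on the previous slide; the helper names `shortest_prefixes` and `merge_leaves` are hypothetical, and "merge leaves" is interpreted here as grouping paths that differ only in their last function.

```python
from collections import defaultdict

# Δ from the coverage-analysis slide, as tuples of function names.
DELTA = {
    ("main", "A"), ("main", "A", "B"), ("main", "A", "C"),
    ("main", "D", "E"), ("main", "D", "C"),
}


def shortest_prefixes(delta):
    """Drop every path that extends another path already in delta."""
    return {p for p in delta
            if not any(p[:n] in delta for n in range(1, len(p)))}


def merge_leaves(paths):
    """Group paths that share a parent and differ only in the leaf."""
    groups = defaultdict(set)
    for p in paths:
        groups[p[:-1]].add(p[-1])
    return dict(groups)


filtered = shortest_prefixes(DELTA)
merged = merge_leaves(filtered)
for parent, leaves in sorted(merged.items()):
    print("→".join(parent), "→", sorted(leaves))
```

Under this interpretation the five paths reduce to two reports: main→A, and main→D with the merged leaves E and C.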

PDG for One Condor Job

PDG for Two Condor Jobs

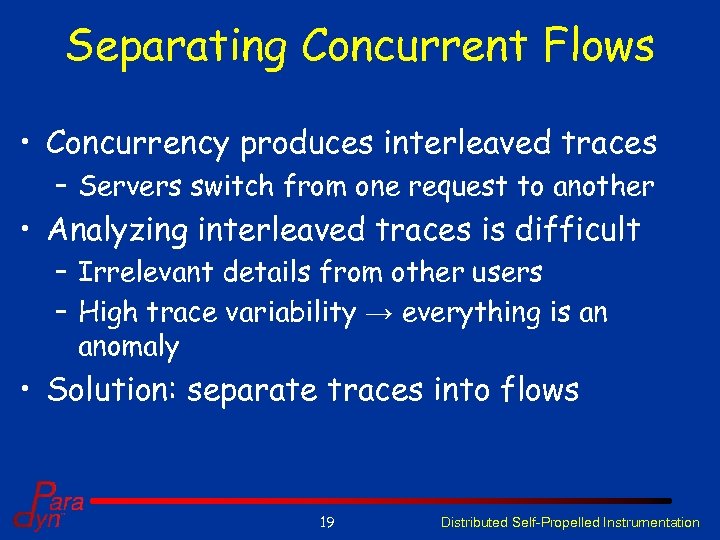

Separating Concurrent Flows

- Concurrency produces interleaved traces
  - Servers switch from one request to another
- Analyzing interleaved traces is difficult
  - Irrelevant details from other users
  - High trace variability → everything is an anomaly
- Solution: separate traces into flows

Flow-Separation Algorithm

[Diagram: browser 1 and browser 2 each click, connect, send a URL, receive a page, and show it; the Web server interleaves select, accept, recv URL, and send page events for both requests]

- Decide when two events are in the same flow
  - (send → recv) and (local → non-recv)
- Remove all other edges
- Flow = events reachable from a start event
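
A small sketch of these rules applied to a simplified two-browser trace. It reads "(local → non-recv)" as "keep the edge between consecutive events in a process unless the later event is a recv", which is an interpretation of the slide. The trace format, event names, and message ids are invented.

```python
from collections import defaultdict, deque

# Hypothetical traces: (event id, kind, message id or None).
TRACES = {
    "browser1": [("click1", "local", None), ("send_url1", "send", "m1"),
                 ("recv_page1", "recv", "m2"), ("show_page1", "local", None)],
    "server":   [("recv_url1", "recv", "m1"), ("send_page1", "send", "m2"),
                 ("recv_url2", "recv", "m3"), ("send_page2", "send", "m4")],
    "browser2": [("click2", "local", None), ("send_url2", "send", "m3"),
                 ("recv_page2", "recv", "m4"), ("show_page2", "local", None)],
}


def same_flow_edges(traces):
    """Keep only the edges that join two events of the same flow."""
    edges = defaultdict(list)
    sends, recvs = {}, {}
    for events in traces.values():
        for i, (eid, kind, msg) in enumerate(events):
            if kind == "send":
                sends[msg] = eid
            elif kind == "recv":
                recvs[msg] = eid
            # Local rule: consecutive events stay together unless the
            # later one is a recv (which may start serving another flow).
            if i + 1 < len(events) and events[i + 1][1] != "recv":
                edges[eid].append(events[i + 1][0])
    # Message rule: a send and its matching recv are in the same flow.
    for msg, src in sends.items():
        if msg in recvs:
            edges[src].append(recvs[msg])
    return edges


def flow(edges, start):
    """Return all events reachable from the start event."""
    seen, queue = {start}, deque([start])
    while queue:
        for nxt in edges.get(queue.popleft(), []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


edges = same_flow_edges(TRACES)
flow1 = flow(edges, "click1")
flow2 = flow(edges, "click2")
print(sorted(flow1))
print(sorted(flow2))
```

The server's edge from send_page1 to recv_url2 is dropped because the later event is a recv, so the two requests end up in disjoint flows.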

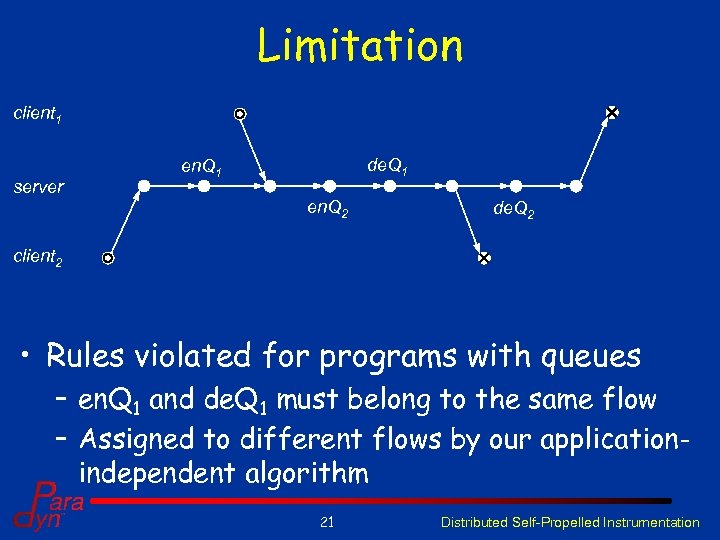

Limitation

[Diagram: client 1, client 2, and a server with queue events deQ1, enQ2, deQ2]

- Rules violated for programs with queues
  - enQ1 and deQ1 must belong to the same flow
  - Assigned to different flows by our application-independent algorithm

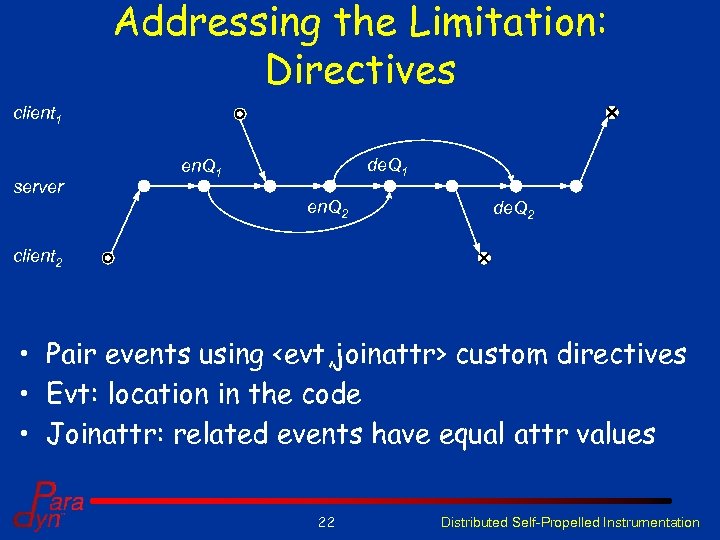

Addressing the Limitation: Directives

[Diagram: client 1, client 2, and a server with queue events deQ1, enQ2, deQ2]

- Pair events using custom <evt, joinattr> directives
- evt: location in the code
- joinattr: related events have equal attr values
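
One way to picture such directives is as a join on an attribute value. The sketch below is illustrative only: the event tuples, the `req` attribute, and the `join_events` helper are hypothetical, and the slides do not describe the actual directive syntax beyond the <evt, joinattr> pair.

```python
# Hypothetical observed events: (event id, code location, attributes).
EVENTS = [
    ("e1", "enQ", {"req": 1}),
    ("e2", "enQ", {"req": 2}),
    ("e3", "deQ", {"req": 1}),
    ("e4", "deQ", {"req": 2}),
]

# Hypothetical directives in the <evt, joinattr> form.
DIRECTIVES = [("enQ", "req"), ("deQ", "req")]


def join_events(events, directives):
    """Group events named by a directive by their join-attribute value."""
    attr_for = dict(directives)
    groups = {}
    for eid, loc, attrs in events:
        attr = attr_for.get(loc)
        if attr is not None and attr in attrs:
            groups.setdefault(attrs[attr], []).append(eid)
    return groups


paired = join_events(EVENTS, DIRECTIVES)
print(paired)
```

Events that land in the same group would then be connected by an extra same-flow edge, so that an enqueue and its matching dequeue stay in one flow.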

Experimental Study: Condor

[Diagram: a submitting host, a central manager, and an execution host running condor_submit, schedd, shadow, negotiator, collector, startd, starter, and the user job; the input is a job description and the result is the job output]

Job-run-twice Problem

- Fault handling in Condor
  - Any component can fail
  - Detect the failure
  - Restart the component
- Bug in the shadow daemon
  - Symptoms: user job ran twice
  - Cause: intermittent crash after shadow reported successful job completion

Debugging Approach

- Insert an intermittent fault into shadow
- Submit a cluster of several jobs
  - Start tracing condor_submit
  - Propagate into schedd, shadow, collector, negotiator, startd, starter, mail, the user job
- Separate the trace into flows
  - Processing each job is a separate flow
- Identify anomalous flow
  - Use unsupervised and one-class algorithms
- Find the cause of the anomaly

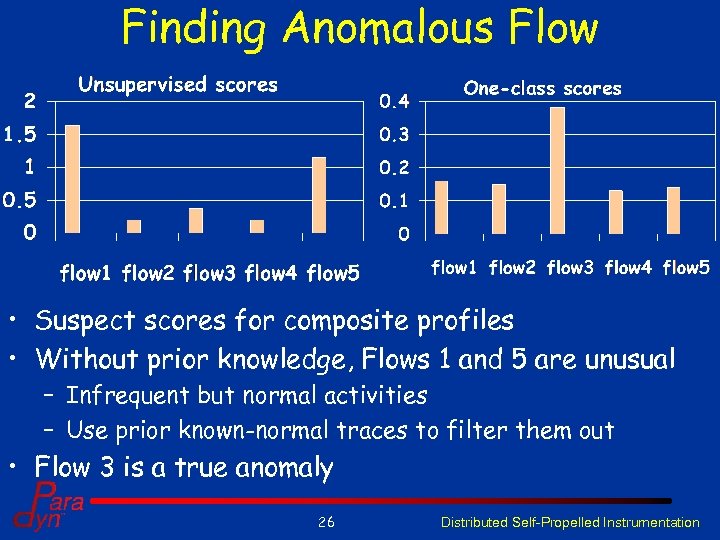

Finding Anomalous Flow

- Suspect scores for composite profiles
- Without prior knowledge, Flows 1 and 5 are unusual
  - Infrequent but normal activities
  - Use prior known-normal traces to filter them out
- Flow 3 is a true anomaly

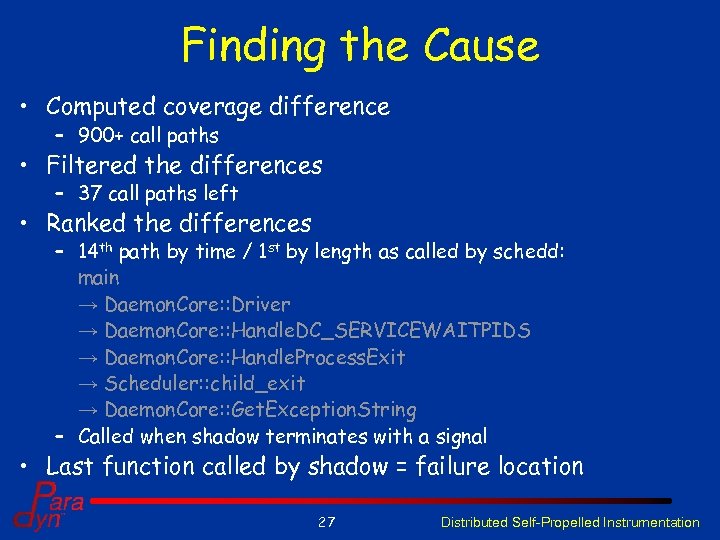

Finding the Cause

- Computed coverage difference
  - 900+ call paths
- Filtered the differences
  - 37 call paths left
- Ranked the differences
  - 14th path by time / 1st by length, as called by schedd: main → DaemonCore::Driver → DaemonCore::HandleDC_SERVICEWAITPIDS → DaemonCore::HandleProcessExit → Scheduler::child_exit → DaemonCore::GetExceptionString
  - Called when shadow terminates with a signal
- Last function called by shadow = failure location

Conclusion

- Self-propelled instrumentation
  - On-demand, low-overhead control-flow tracing
  - Across process and host boundaries
- Automated root cause analysis
  - Finds anomalous control flows
  - Finds the causes of anomalies
- Separation of concurrent flows
  - Little application-specific knowledge

Related Publications

- A. V. Mirgorodskiy and B. P. Miller, "Diagnosing Distributed Systems with Self-Propelled Instrumentation", under submission. ftp://ftp.cs.wisc.edu/paradyn/papers/Mirgorodskiy07DistDiagnosis.pdf
- A. V. Mirgorodskiy, N. Maruyama, and B. P. Miller, "Problem Diagnosis in Large-Scale Computing Environments", SC'06, Tampa, FL, November 2006. ftp://ftp.cs.wisc.edu/paradyn/papers/Mirgorodskiy06ProblemDiagnosis.pdf
- A. V. Mirgorodskiy and B. P. Miller, "Autonomous Analysis of Interactive Systems with Self-Propelled Instrumentation", 12th Multimedia Computing and Networking (MMCN 2005), San Jose, CA, January 2005. ftp://ftp.cs.wisc.edu/paradyn/papers/Mirgorodskiy04SelfProp.pdf
