a9c7403867eb454ba6dbb9e8aac0752e.ppt

- Number of slides: 26

Distributed Scientific Workflows in Heterogeneous Network Environments

Mengxia Zhu, Assistant Professor, Department of Computer Science, Southern Illinois University, Carbondale, mzhu@cs.siu.edu

Qishi Wu, Assistant Professor, Department of Computer Science, The University of Memphis, qishiwu@memphis.edu

September 28-29, 2009, Fermilab, Chicago

Sponsored by Department of Energy

Outline
· Introduction
· Challenges & Objectives & Tasks
  - Workflow Assembly
  - Workflow Execution
  - Workflow Status Monitoring and Management
· In-depth Performance Issues
  - Optimization
  - Reliability
  - Stability
· Workflow Simulation Program
· Preliminary Experiments
· Conclusion

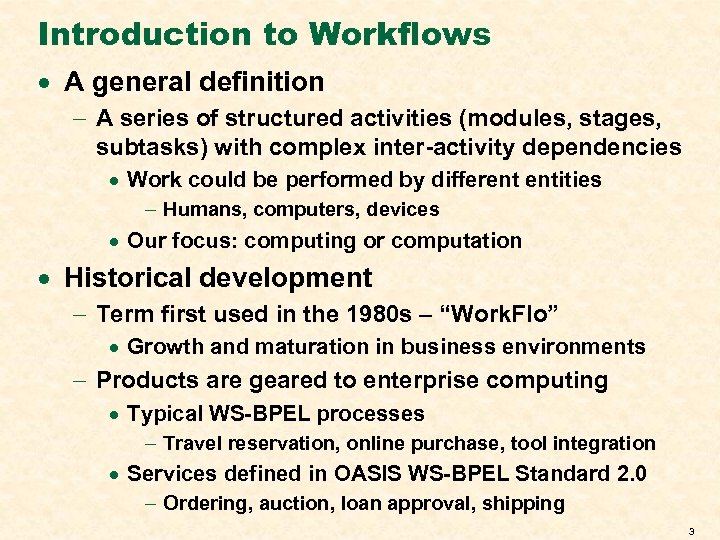

Introduction to Workflows
· A general definition
  - A series of structured activities (modules, stages, subtasks) with complex inter-activity dependencies
· Work could be performed by different entities
  - Humans, computers, devices
· Our focus: computing or computation
· Historical development
  - Term first used in the 1980s: “WorkFlo”
· Growth and maturation in business environments
  - Products are geared to enterprise computing
· Typical WS-BPEL processes
  - Travel reservation, online purchase, tool integration
· Services defined in OASIS WS-BPEL Standard 2.0
  - Ordering, auction, loan approval, shipping

Introduction to Workflows
· Scientific workflows
  - An even more important area of research
    · Sciences are increasingly computation-intensive
  - Heaviest use of computing
  - Variety of distributed resources
  - Process automation in scientific settings
    · Data generation, transfer, processing, synthesis, analysis
    · Knowledge discovery
  - Share many features with business workflows
    · Leveraging existing results and techniques
  - Go far beyond them
    · Large data size
    · Long-term process
    · Complex structures
    · Network- and computing-intensive activities
    · Application-dependent analysis tools

Introduction to Workflows
· Large-scale scientific applications
  - Simulation-based using HPC
    · Simulate large and complex physical, chemical, biological, and climatic processes
      - Astrophysics, combustion, computational biology, climate research
  - Experiment-based using special facilities
    · High energy experimental sciences
      - Spallation Neutron Source (SNS)
      - Large Hadron Collider (LHC)

Introduction
· Supercomputing for large-scale scientific applications
  - Astrophysics
  - Computational biology
  - Nanoscience
  - Climate research
  - Neutron sciences
  - Flow dynamics
  - Fusion simulation
  - Computational materials

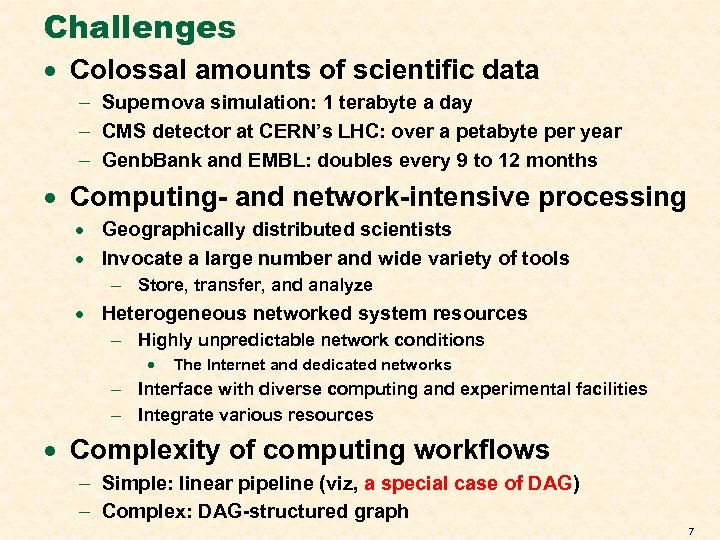

Challenges
· Colossal amounts of scientific data
  - Supernova simulation: 1 terabyte a day
  - CMS detector at CERN’s LHC: over a petabyte per year
  - GenBank and EMBL: doubles every 9 to 12 months
· Computing- and network-intensive processing
· Geographically distributed scientists
· Invoke a large number and wide variety of tools
  - Store, transfer, and analyze
· Heterogeneous networked system resources
  - Highly unpredictable network conditions
    · The Internet and dedicated networks
  - Interface with diverse computing and experimental facilities
  - Integrate various resources
· Complexity of computing workflows
  - Simple: linear pipeline (viz., a special case of a DAG)
  - Complex: DAG-structured graph
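
To make the pipeline-versus-DAG distinction concrete, here is a minimal Python sketch. It assumes a workflow is given as a dictionary mapping each module to the set of modules it depends on; the module names and both helper functions are hypothetical and not part of the presented system. It needs Python 3.9 or later for `graphlib`.

```python
from graphlib import CycleError, TopologicalSorter


def is_dag(preds):
    """Return True if the dependency map contains no cycle."""
    try:
        # static_order() is lazy, so list() forces the cycle check.
        list(TopologicalSorter(preds).static_order())
        return True
    except CycleError:
        return False


def is_linear_pipeline(preds):
    """Return True if the workflow is a single chain of modules."""
    nodes = set(preds) | {p for ps in preds.values() for p in ps}
    succ_count = {n: 0 for n in nodes}
    for ps in preds.values():
        for p in ps:
            succ_count[p] += 1
    sources = [n for n in nodes if not preds.get(n)]
    return (
        is_dag(preds)
        and all(len(preds.get(n, ())) <= 1 for n in nodes)  # one input at most
        and all(c <= 1 for c in succ_count.values())        # one output at most
        and len(sources) == 1                               # one starting module
    )


# Hypothetical module names, chosen only for illustration.
pipeline = {"filter": {"acquire"}, "render": {"filter"}}
dag = {
    "filter": {"acquire"},
    "extract": {"acquire"},
    "render": {"filter", "extract"},
}

print(is_dag(pipeline), is_linear_pipeline(pipeline))
print(is_dag(dag), is_linear_pipeline(dag))
```

Both example workflows are DAGs, but only the first is a linear pipeline, because `acquire` feeds two modules in the second.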

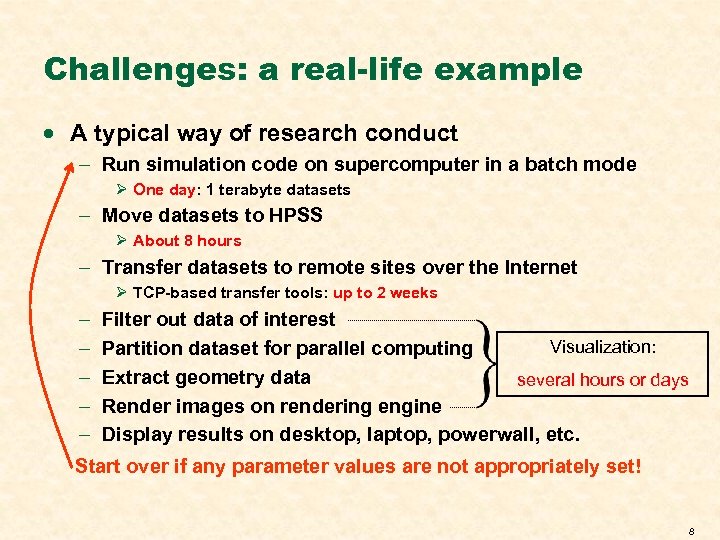

Challenges: a real-life example
· A typical way of conducting research
  - Run simulation code on a supercomputer in batch mode
    · One day: 1 terabyte of datasets
  - Move datasets to HPSS
    · About 8 hours
  - Transfer datasets to remote sites over the Internet
    · TCP-based transfer tools: up to 2 weeks
  - Filter out data of interest
  - Visualization: several hours or days
    · Partition dataset for parallel computing
    · Extract geometry data
    · Render images on rendering engine
    · Display results on desktop, laptop, powerwall, etc.
· Start over if any parameter values are not appropriately set!
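
As a rough check on the durations quoted in this example, the sketch below converts them into average throughput. It assumes a decimal terabyte (10^12 bytes) and reads "up to 2 weeks" as exactly 14 days; the function name is illustrative only.

```python
def effective_throughput_mbps(num_bytes: float, seconds: float) -> float:
    """Average throughput in megabits per second."""
    return num_bytes * 8 / seconds / 1e6


TERABYTE = 1e12  # assumption: decimal terabyte

# Moving one day's output to HPSS in about 8 hours.
hpss_mbps = effective_throughput_mbps(TERABYTE, 8 * 3600)

# TCP-based transfer over the Internet taking the full 2 weeks.
wan_mbps = effective_throughput_mbps(TERABYTE, 14 * 24 * 3600)

print(f"HPSS move:         {hpss_mbps:.1f} Mbit/s")
print(f"Internet transfer: {wan_mbps:.1f} Mbit/s")
```

Under these assumptions the HPSS move averages about 277.8 Mbit/s, while the two-week Internet transfer averages only about 6.6 Mbit/s, so the simulation produces data roughly 14 times faster than the wide-area transfer can deliver it.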

Challenges
· Many large-scale scientific applications feature a similar or more complex workflow
· Strategic goals
  - Automate the entire computing process
    · Hide operation complexity and implementation details from users
· Technical objectives
  - Assemble, execute, monitor, and manage scientific workflows in heterogeneous network environments
    · A generic integrated application-support platform
  - Meet different application requirements
    · Viz, online computational monitoring and steering
  - Optimize workflow performance
    · Minimum end-to-end delay (MED), maximum frame rate (MFR), stability, reliability

The State of the Art
· Considerable progress has been made in workflow specification, scheduling, and management
  - DAGMan (www.cs.wisc.edu/condor/dagman)
    · In grid environments, a workflow engine that manages Condor jobs as a DAG and schedules and executes long-running jobs
  - Taverna
    · Open source system for bioinformatics based on XML
  - Kepler
    · Built on the Ptolemy II system: a visual modeling tool written in Java
  - Triana
    · GUI-based system for coordinating and executing a collection of services
· Shared network infrastructure
  - TeraGrid, Open Science Grid
  - Grid is used for loosely coupled workflows
    · Due to the flexibility and granularity of resource management
    · Not an ideal platform for scientific workflows
· No unified theory or system yet formalizes scientific workflows across all science areas

Objectives & Tasks
· A general-purpose scientific workflow management system with performance guarantee and optimization
  - Workflow assembly
  - Workflow execution
  - Workflow monitoring and management

Workflow Assembly
· Data-centric workflow
  - Catalog, store, and move large volumes of data from supercomputers or experimental facilities to a group of geographically distributed users
    · Dedicated channels (OSCARS)
    · New transport methods
· Service-centric workflow
  - Process, visualize, synthesize, and analyze data
    · Application-dependent
    · Model and implement different workflow components/modules

Scientific Workflow Components
· Data acquisition
  - Simulation (parameter setting) or experimental control (device setting)
  - Data collection
    · Coupled with scheduling and management, data catalog, and storage function
· Data filtering/reduction
  - Extract data of interest from hierarchical standard data format
    · HDF, NetCDF, etc.
  - Reduce data size
· Data analysis
  - Statistical methods
  - Clustering tools
  - PCA
  - Many other domain-specific tools
· Data visualization
  - Volume viz
    · Marching cubes, ray casting, streamline, LIC
  - Information viz

Workflow Implementation & Execution
· A black box design
  - A general module template to fit any computing routine
  - Implemented as an autonomous agent with multiple concurrent threads
    · RecvThread
    · ProcThread
    · SendThread
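
The module template can be pictured with the following Python sketch of one agent with three concurrent threads. The slides name only the three threads; the class name, the queue-based hand-off, the stop sentinel, and the use of an iterable and a list in place of real network links are all assumptions made for illustration.

```python
import queue
import threading

_STOP = object()  # sentinel telling the next thread to shut down


class ModuleAgent:
    """One workflow module: receive, process, and send on separate threads."""

    def __init__(self, routine, source, sink):
        self.routine = routine       # the black-box computing routine
        self.source = source         # iterable standing in for the upstream link
        self.sink = sink             # list standing in for the downstream link
        self.inbox = queue.Queue()   # RecvThread -> ProcThread
        self.outbox = queue.Queue()  # ProcThread -> SendThread

    def _recv(self):
        for item in self.source:
            self.inbox.put(item)
        self.inbox.put(_STOP)

    def _proc(self):
        while (item := self.inbox.get()) is not _STOP:
            self.outbox.put(self.routine(item))
        self.outbox.put(_STOP)

    def _send(self):
        while (item := self.outbox.get()) is not _STOP:
            self.sink.append(item)

    def run(self):
        threads = [
            threading.Thread(target=target)
            for target in (self._recv, self._proc, self._send)
        ]
        for t in threads:
            t.start()
        for t in threads:
            t.join()


results = []
ModuleAgent(lambda x: x * x, range(5), results).run()
print(results)  # [0, 1, 4, 9, 16]
```

Because the routine is passed in as a plain callable, the same template wraps any computing step, which is the point of the black box design. The FIFO queues keep items in arrival order while letting receiving, processing, and sending overlap.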

Workflow Monitoring and Management
· Monitoring daemon
  - Monitor network and host conditions
    · Available bandwidth, link delay, node power, memory size
· Network daemon
  - Request, establish, and tear down network paths
  - Transfer data at a target rate
  - Make bandwidth reservation request in dedicated networks
· Prediction daemon
  - Dynamically predict time cost for data generation, module processing, and message transfer at each stage along the workflow
· Recovering from exceptions and node or link failures
  - Checkpoint mechanism
· Security issues
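
One simple way a prediction daemon could turn monitored quantities into time estimates is sketched below. The linear cost model (work proportional to input size, transfer time equal to size over bandwidth plus link delay) is an assumption for illustration, not necessarily the model used in the presented system; all names, units, and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    power: float  # operations per second (hypothetical unit)


@dataclass
class Link:
    bandwidth: float  # bytes per second
    delay: float      # seconds


def processing_time(ops_per_byte: float, input_bytes: float, node: Node) -> float:
    """Estimated time for a module to process its input on a node."""
    return ops_per_byte * input_bytes / node.power


def transfer_time(message_bytes: float, link: Link) -> float:
    """Estimated time to move a message across a link."""
    return message_bytes / link.bandwidth + link.delay


# Made-up measurements, as a monitoring daemon might report them.
node = Node(power=1e9)
link = Link(bandwidth=1.25e7, delay=0.05)  # 100 Mbit/s, 50 ms

compute_s = processing_time(ops_per_byte=10, input_bytes=1e8, node=node)
move_s = transfer_time(message_bytes=2.5e7, link=link)
print(f"processing: {compute_s:.2f} s, transfer: {move_s:.2f} s")
```

With these made-up numbers the module takes about 1 second to compute and about 2.05 seconds to ship its output, which already shows the link, not the node, dominating this stage.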

In-depth Performance Issues
· End-to-end performance optimization
· Two mapping optimization goals
  - Interactive applications
    · An update on the parameter in visualization
    · Minimum end-to-end delay (MED) for fast response
  - Streaming applications
    · A series of data sets (time steps) as input
    · Maximum frame rate (MFR) for smooth data flow
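
The two goals can be contrasted with a small Python sketch. It assumes a mapped linear pipeline whose per-stage times (computing and transfer) are already known, and that stages run on distinct resources so that they overlap fully when streaming; the stage times are made up.

```python
def end_to_end_delay(stage_times):
    """Delay for one data set to traverse every stage in sequence."""
    return sum(stage_times)


def frame_rate(stage_times):
    """Steady-state output rate when stages overlap: set by the slowest stage."""
    return 1.0 / max(stage_times)


# Made-up times in seconds: compute, transfer, compute, transfer, compute.
times = [0.5, 2.0, 0.25, 1.0, 0.25]

delay = end_to_end_delay(times)
rate = frame_rate(times)
print(f"end-to-end delay: {delay} s")
print(f"frame rate: {rate} frames/s")
```

Here the delay is 4.0 s and the frame rate is 0.5 frames/s. Minimizing the first means shrinking the sum over all stages, while maximizing the second means shrinking only the bottleneck (the 2.0 s transfer), so a mapping that is best for one goal need not be best for the other.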

In-depth Performance Issues
· On-node optimization
  - Workflow module scheduling
  - Buffer minimization
· Reliability for fault tolerance
· Stability in streaming applications
  - Last module of the workflow produces output at a constant rate
  - Pipelines always stabilize
  - DAG-like workflows may or may not stabilize
    · No node reuse: stable
    · Arbitrary node reuse: open question

SDEDS: Simulation of Dynamic Execution of Distributed Systems
· Motivation
  - Many distributed workflow optimization algorithms exist for large-scale scientific applications
  - Appropriate systems for evaluating mapping/scheduling algorithms are lacking
  - Deployment in real networks is expensive and time-consuming
· SDEDS
  - Visually illustrate dynamic execution of distributed systems
  - Evaluate network performance of mapping/scheduling algorithms

Pipeline Workflow Simulation

Graph Workflow Simulation

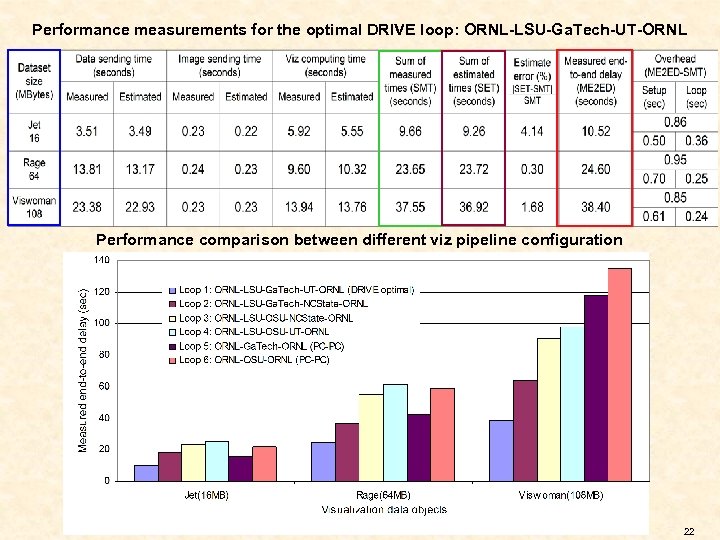

Preliminary Experiments
· Implementation
  - C++, GTK, OpenGL, Fortran
· Functionalities
  - Isosurface extraction
  - Volume rendering
  - Streamline
  - Line integral convolution
· Deployed at
  - ORNL (client), LSU (CM), GaTech & OSU (DS), UTK & NCState (CS)
  - UTK & NCState: clusters
  - Others: Linux PC boxes
· Experiment objective: visualize 3 datasets (Jet, Rage, Viswoman) duplicated at GaTech & OSU using the clusters deployed at UTK and NCState

· Performance measurements for the optimal DRIVE loop: ORNL-LSU-GaTech-UT-ORNL
· Performance comparison between different viz pipeline configurations

Two Examples in Visualizing Large-scale Scientific Applications
· TSI explosion (density, raycasting)
· Jet air flow dynamics (pressure, raycasting)

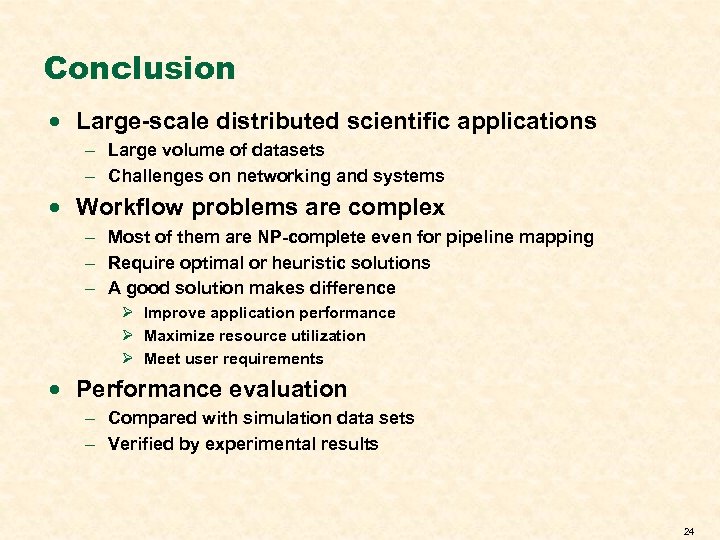

Conclusion
· Large-scale distributed scientific applications
  - Large volume of datasets
  - Challenges on networking and systems
· Workflow problems are complex
  - Most of them are NP-complete even for pipeline mapping
  - Require optimal or heuristic solutions
  - A good solution makes a difference
    · Improve application performance
    · Maximize resource utilization
    · Meet user requirements
· Performance evaluation
  - Compared with simulation data sets
  - Verified by experimental results

References
· Y. Zhao, I. Raicu, and I. Foster. Scientific workflow systems for 21st century, new bottle or new wine? 2008 IEEE Congress on Services.
· http://www.cs.wayne.edu/~shiyong/swf/ijbpim09.html
· www.cs.wisc.edu/condor/dagman
· http://pegasus.isi.edu/
· http://taverna.sourceforge.net/
· Q. Wu, M. Zhu, Y. Gu, and N. S. V. Rao. System design and algorithmic development for computational steering in distributed environments. IEEE Transactions on Parallel and Distributed Systems, vol. 99, no. 1, May 2009.
· Q. Wu, J. Gao, Z. Chen, and M. Zhu. Pipelining parallel image compositing and delivery for efficient remote visualization. Journal of Parallel and Distributed Computing, vol. 69, no. 3, pp. 230-238, 2009.
· Q. Wu, J. Gao, M. Zhu, N. S. V. Rao, J. Huang, and S. S. Iyengar. On optimal resource utilization for distributed remote visualization. IEEE Transactions on Computers, vol. 57, no. 1, pp. 55-68, January 2008.
· M. Zhu, Q. Wu, N. S. V. Rao, and S. S. Iyengar. Optimal visualization pipeline decomposition and adaptive network mapping to support remote visualization. Journal of Parallel and Distributed Computing, vol. 67, no. 8, pp. 947-956, August 2007.
· Y. Gu and Q. Wu. Optimizing distributed computing workflows in heterogeneous network environments. To appear in Proceedings of the 11th International Conference on Distributed Computing and Networking, Kolkata, India, January 3-6, 2010.
· Y. Gu, Q. Wu, A. Benoit, and Y. Robert. Optimizing end-to-end performance of distributed applications with linear computing pipelines. To appear in Proceedings of the 15th International Conference on Parallel and Distributed Systems, Shenzhen, China, December 8-11, 2009 (ICPADS 09, acceptance rate: 29%).
· Q. Wu, Y. Gu, L. Bao, P. Chen, H. Dai, and W. Jia. Optimizing distributed execution of WS-BPEL processes in heterogeneous computing environments. To appear in Proceedings of the 3rd International Workshop on Advanced Architectures and Algorithms for Internet DElivery and Applications, Las Palmas de Gran Canaria, Spain, November 26, 2009 (invited paper, AAA-IDEA 09).
· Q. Wu and Y. Gu. Supporting distributed application workflows in heterogeneous computing environments. In Proceedings of the 14th IEEE International Conference on Parallel and Distributed Systems, Melbourne, Victoria, Australia, December 8-10, 2008 (ICPADS 08).
· Q. Wu, Y. Gu, M. Zhu, and N. S. V. Rao. Optimizing network performance of computing pipelines in distributed environments. In Proceedings of the 22nd IEEE International Parallel and Distributed Processing Symposium, Miami, Florida, April 14-18, 2008.

Thanks! Questions?
