
- Number of slides: 42

Distributed File Systems (DFS)

Matei Ripeanu, UBC
EECE 571R: Data-intensive computing (Spring ’07)

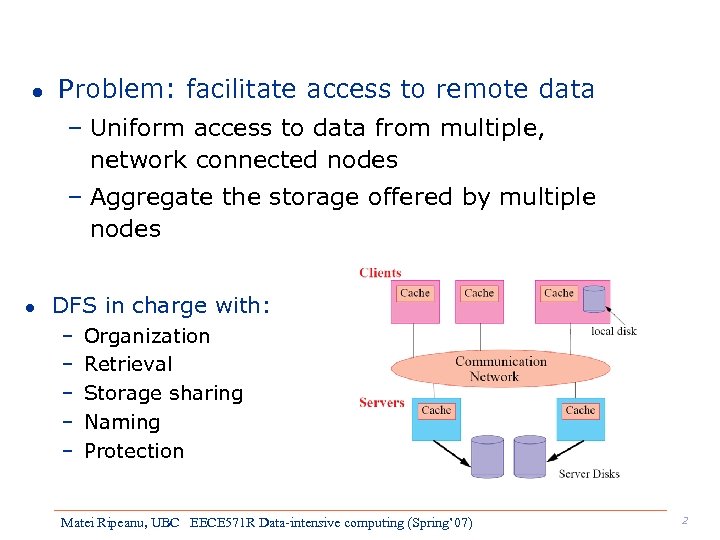

- Problem: facilitate access to remote data
  - Uniform access to data from multiple network-connected nodes
  - Aggregate the storage offered by multiple nodes
- The DFS is in charge of:
  - Organization
  - Retrieval
  - Storage
  - Sharing
  - Naming
  - Protection

Distributed File System Goals

- Access transparency
  - Clients are unaware that files are remote
- Location transparency
  - Consistent name space (local and remote)
- Concurrency transparency
  - Modifications are coherent
- Failure transparency
  - Clients and client programs should operate correctly after a server failure
  - One client’s failure should not impact the others
- Heterogeneity
  - File service should be provided across different hardware and software platforms

Distributed File System Goals

- Scalability
  - Scale from a few machines to many (tens of thousands?)
- Replication transparency
  - Clients are unaware of data replication
  - Coherence is maintained
- Migration transparency
  - Files should be able to move around without clients’ knowledge
- Fine-grained distribution of data
  - Locate objects near the processes that use them

A few terms

- File service
  - Specification of what the file system offers to clients
- File
  - Name, data, attributes
- Immutable file
  - Cannot be changed once created
  - Easier to cache and replicate
- Protection
  - Capabilities
  - Access control lists

File service types

- Upload/download model
  - Read file: copy the file from server to client
  - Write file: copy the file from client to server
- Advantage
  - Simple
- Problems
  - Wasteful: what if the client needs only a small piece?
  - Problematic: what if the client doesn’t have enough space?
  - Consistency: what if others need to modify the same file?

File service types

- Remote access model
  - File service provides a functional interface: create, delete, read bytes, write bytes, etc.
- Advantages
  - Client gets only what’s needed
  - Server can manage a coherent view of the file system
- Problems
  - Possible server and network congestion
  - Servers are accessed for the duration of the file access
  - Same data may be requested repeatedly

File service types

- Data caching model
  - File access: local file access, the client caches a local copy
  - Advantage: reduces communication overhead
  - Problem: data consistency

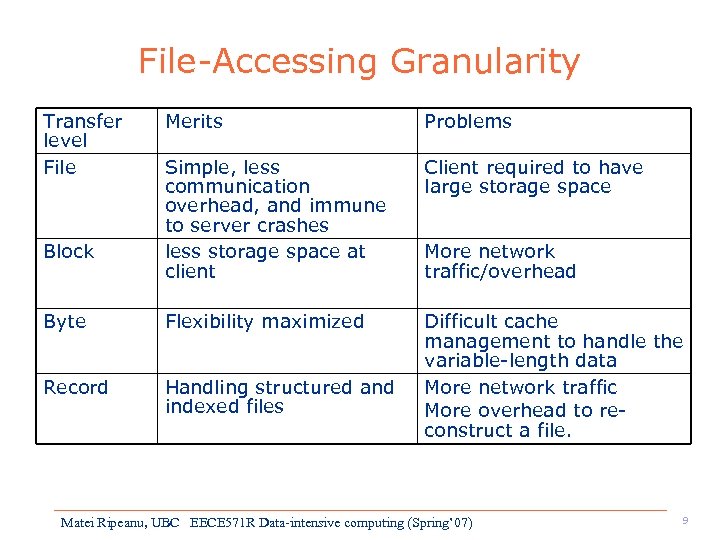

File-Accessing Granularity

| Transfer level | Merits | Problems |
| --- | --- | --- |
| File | Simple, less communication overhead, and immune to server crashes | Client required to have large storage space |
| Block | Less storage space at client | More network traffic/overhead |
| Byte | Flexibility maximized | Difficult cache management to handle the variable-length data |
| Record | Handling structured and indexed files | More network traffic; more overhead to reconstruct a file |

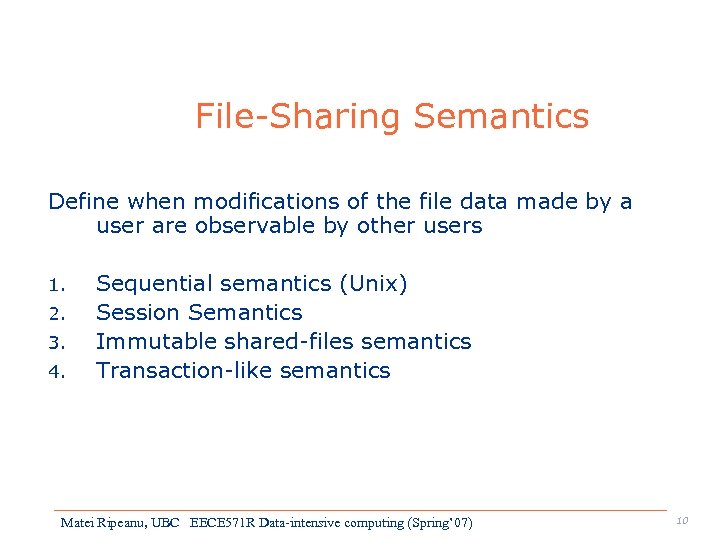

File-Sharing Semantics

Define when modifications of the file data made by a user are observable by other users:

1. Sequential semantics (Unix)
2. Session semantics
3. Immutable shared-files semantics
4. Transaction-like semantics

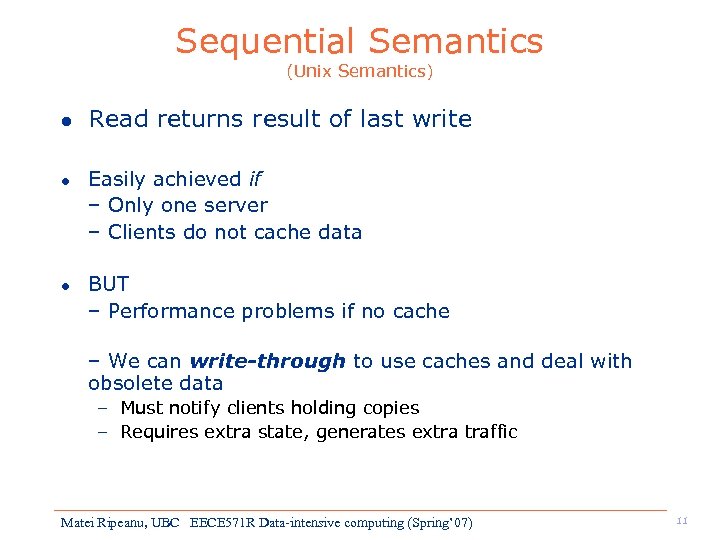

Sequential Semantics (Unix Semantics)

- Read returns the result of the last write
- Easily achieved if
  - There is only one server
  - Clients do not cache data
- BUT
  - Performance problems if there is no cache
  - With client caches, we can use write-through, but then have to deal with obsolete cached data
  - Must notify clients holding copies
  - Requires extra state, generates extra traffic
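The write-through-plus-notification idea on this slide can be sketched in a few lines. This is only an illustration under assumptions that are not in the slides: a single in-process server, and hypothetical class and method names (`Server`, `Client`, `invalidate`). It shows the extra state (who caches what) and the extra traffic (one invalidation per cached copy) that the slide mentions.

```python
class Server:
    """Single file server that remembers which clients cache each file."""

    def __init__(self):
        self.files = {}    # file name -> contents
        self.cachers = {}  # file name -> set of clients holding a copy (extra state)

    def read(self, client, name):
        self.cachers.setdefault(name, set()).add(client)
        return self.files.get(name, b"")

    def write(self, writer, name, data):
        self.files[name] = data
        # Extra traffic: notify every other client that holds a copy.
        for client in self.cachers.get(name, set()) - {writer}:
            client.invalidate(name)
        self.cachers[name] = {writer}


class Client:
    def __init__(self, server):
        self.server = server
        self.cache = {}

    def read(self, name):
        if name not in self.cache:  # cache miss: go to the server
            self.cache[name] = self.server.read(self, name)
        return self.cache[name]

    def write(self, name, data):
        self.cache[name] = data
        self.server.write(self, name, data)  # write-through: no delay

    def invalidate(self, name):
        self.cache.pop(name, None)  # drop the obsolete copy


server = Server()
a, b = Client(server), Client(server)
a.write("f", b"v1")
first = b.read("f")    # b now caches v1
a.write("f", b"v2")    # server invalidates b's copy
second = b.read("f")   # b re-fetches and sees the last write
```

A real system would also need to order concurrent writes and survive lost notifications; the sketch ignores both.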

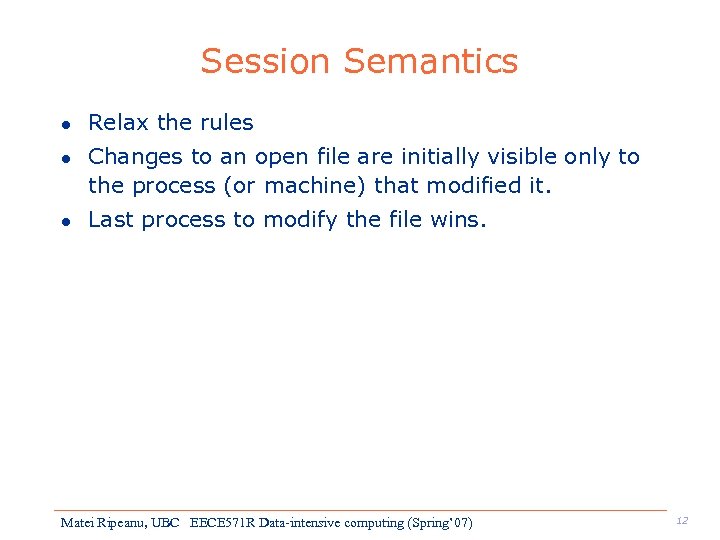

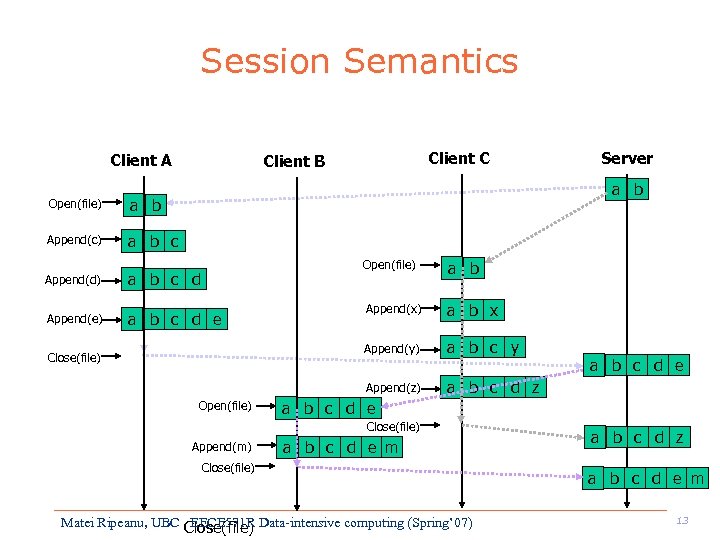

Session Semantics

- Relax the rules
- Changes to an open file are initially visible only to the process (or machine) that modified it
- Last process to modify the file wins

Session Semantics

[Figure: timelines for Client A, Client B, Client C, and the Server. The file starts as "a b". Each client calls Open(file), performs Append operations on its own copy (c, d, e, m, x, y, z), and calls Close(file); the server's copy changes only at a close, with states such as "a b c d z" and "a b c d e m".]
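A minimal sketch of session semantics, assuming that "last to modify wins" means the last session to close overwrites the server copy. The class names (`SessionServer`, `Session`) are hypothetical and the file is modeled as a list of appended items, as in the figure.

```python
class SessionServer:
    def __init__(self):
        self.files = {}  # file name -> list of items

    def open(self, name):
        # Each open gets a private snapshot of the current contents.
        return Session(self, name, list(self.files.get(name, [])))


class Session:
    def __init__(self, server, name, data):
        self.server, self.name, self.data = server, name, data

    def append(self, item):
        self.data.append(item)  # visible only inside this session

    def close(self):
        # Changes become visible to others only now; this overwrites
        # whatever an earlier close wrote.
        self.server.files[self.name] = list(self.data)


server = SessionServer()
server.files["file"] = ["a", "b"]

sa = server.open("file")
sb = server.open("file")
sa.append("c")
sb.append("x")
sa.close()                               # server copy: a b c
after_a = list(server.files["file"])
sb.close()                               # server copy: a b x (A's append is lost)
after_b = list(server.files["file"])
```

Note that client A's append silently disappears; that is the price of relaxing the rules.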

Other solutions

- Make files immutable
  - Aids in replication
  - Does not help with detecting modification

Or...

- Use atomic transactions
  - Each file access is an atomic transaction
  - If multiple transactions start concurrently, the resulting modifications are serialized

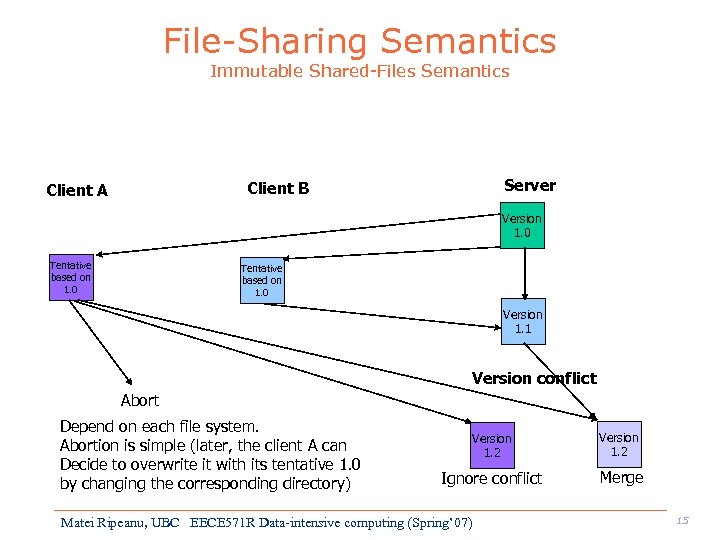

File-Sharing Semantics: Immutable Shared-Files Semantics

[Figure: timelines for the Server, Client A, and Client B, labeled Version 1.0, Tentative based on 1.0, Version 1.1, Version conflict, and Version 1.2.]

How a version conflict is handled depends on the file system:

- Abort: aborting is simple (later, Client A can decide to overwrite the file with its tentative version based on 1.0 by changing the corresponding directory)
- Ignore conflict
- Merge

File usage patterns

- We can’t have the best of all worlds
- Where to compromise? Semantics vs. efficiency
  - Efficiency = client performance, network traffic, server load
  - Modified semantics: break transparency, reduce functionality, etc.
- To help decide: understand how files are used
  - 1981 study by Satyanarayanan

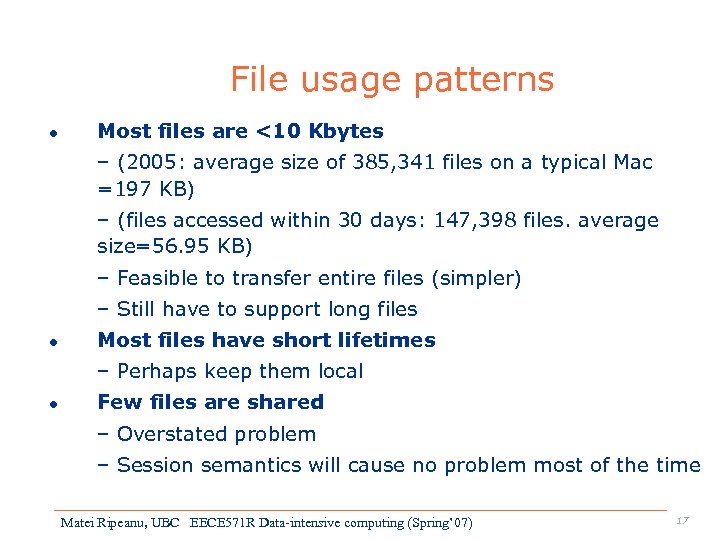

File usage patterns

- Most files are <10 Kbytes
  - (2005: average size of 385,341 files on a typical Mac = 197 KB)
  - (Files accessed within 30 days: 147,398 files, average size = 56.95 KB)
  - Feasible to transfer entire files (simpler)
  - Still have to support long files
- Most files have short lifetimes
  - Perhaps keep them local
- Few files are shared
  - Overstated problem
  - Session semantics will cause no problem most of the time

Design issues

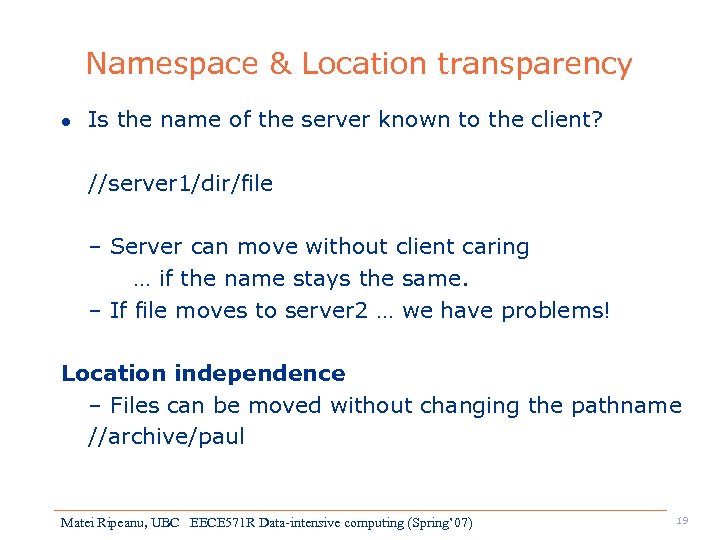

Namespace & Location transparency

- Is the name of the server known to the client? //server1/dir/file
  - The server can move without the client caring, if the name stays the same
  - If the file moves to server2, we have problems!
- Location independence: //archive/paul
  - Files can be moved without changing the pathname

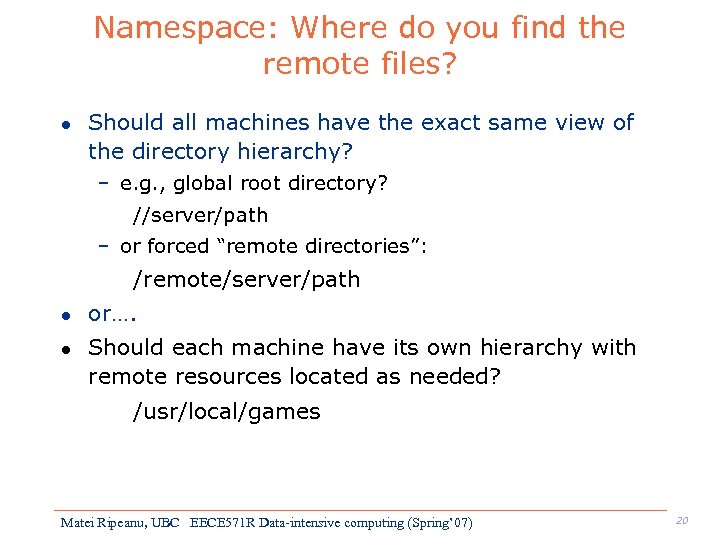

Namespace: Where do you find the remote files?

- Should all machines have the exact same view of the directory hierarchy?
  - e.g., a global root directory: //server/path
  - or forced “remote directories”: /remote/server/path
- or...
- Should each machine have its own hierarchy, with remote resources located as needed? /usr/local/games

Access: How do you access files?

- Requirement: access remote files as local files
- The remote FS name space should be syntactically consistent with the local name space

1. Redefine the way all files are named and provide a syntax for specifying remote files
   - e.g., //server/dir/file
   - Can cause legacy applications to fail
2. Use a file system mounting mechanism
   - Overlay portions of another FS name space over the local name space
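The mounting option can be illustrated with a small mount table and a longest-prefix match. This is a sketch under assumptions of my own: the table layout, the `resolve` helper, and the server and export names are hypothetical, not taken from any particular file system.

```python
def resolve(mounts, path):
    """Map a local path to (server, remote_path), or (None, path) if it is local.

    `mounts` maps a local mount point to a (server, exported_directory) pair.
    The longest matching mount point wins, so nested mounts behave as expected.
    """
    best = None
    for mount_point in mounts:
        prefix = mount_point.rstrip("/")
        if path == mount_point or path.startswith(prefix + "/"):
            if best is None or len(mount_point) > len(best):
                best = mount_point
    if best is None:
        return None, path
    server, export = mounts[best]
    rest = path[len(best.rstrip("/")):]
    return server, (export.rstrip("/") + rest) or "/"


# Hypothetical mount table: part of server1's name space is overlaid
# on the local directory /usr/local/games.
mounts = {"/usr/local/games": ("server1", "/export/games")}

remote = resolve(mounts, "/usr/local/games/chess")
local = resolve(mounts, "/usr/local/bin/ls")
```

Applications keep using ordinary local path names, which is why this option does not break legacy code the way a new `//server/...` syntax can.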

Name resolution: how to handle...

- Parse:
  - (a) one component at a time
  - (b) the entire path at once
- (b) is more efficient but...
  - ... offers less flexibility (e.g., naming as indirection)
- Perhaps use (a) and cache bindings to increase performance
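Option (a) with cached bindings might look like the following sketch. The `Resolver` class, the `lookup` callback, and the handle strings are hypothetical; `lookup(directory_handle, component)` stands in for one remote request to a server.

```python
class Resolver:
    """Resolve a path one component at a time, caching each binding."""

    def __init__(self, lookup):
        self.lookup = lookup      # callable(dir_handle, component) -> handle
        self.cache = {}           # (dir_handle, component) -> handle
        self.remote_lookups = 0   # counts requests that actually reach a server

    def resolve(self, root, path):
        handle = root
        for component in filter(None, path.split("/")):
            key = (handle, component)
            if key not in self.cache:
                self.cache[key] = self.lookup(handle, component)
                self.remote_lookups += 1
            handle = self.cache[key]
        return handle


# A toy directory tree standing in for the servers.
tree = {
    ("root", "usr"): "h1",
    ("h1", "local"): "h2",
    ("h2", "games"): "h3",
    ("h2", "bin"): "h4",
}
resolver = Resolver(lambda handle, name: tree[(handle, name)])

games = resolver.resolve("root", "/usr/local/games")  # three remote lookups
lookups_after_first = resolver.remote_lookups
tools = resolver.resolve("root", "/usr/local/bin")    # only one new lookup
lookups_after_second = resolver.remote_lookups
```

Because each component is resolved separately, any directory along the path can redirect to a different server, which is the flexibility option (b) gives up. The cache, of course, reintroduces a consistency question if bindings change.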

Stateful or stateless design?

- Stateful: the server maintains client-specific state
  - Shorter requests
  - Better performance in processing requests
  - Cache coherence is possible
    - Server can know who’s accessing what
  - File locking is possible

Stateful or stateless design?

- Stateless: the server maintains no information on client accesses
  - Each request must identify the file and offsets
  - Server can crash and recover
    - No state to lose
  - Client can crash and recover
  - No open/close needed
    - They only establish state
  - No server space used for state
    - Don’t worry about supporting many clients (with low activity)
  - Problems with consistency
    - E.g., if a file is deleted on the server
  - File locking not possible
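The difference between the two designs shows up directly in the shape of a read request. The following sketch uses hypothetical class names and an in-memory dictionary as the "disk"; a crash is simulated by discarding the server's table of open files.

```python
class StatelessServer:
    """Every request carries the file name and offset; nothing is remembered."""

    def __init__(self, files):
        self.files = files  # name -> bytes

    def read(self, name, offset, count):
        return self.files[name][offset:offset + count]


class StatefulServer:
    """The server tracks open files and positions, so requests are shorter."""

    def __init__(self, files):
        self.files = files
        self.open_files = {}  # fd -> [name, position]; lost if the server crashes
        self.next_fd = 0

    def open(self, name):
        fd = self.next_fd
        self.next_fd += 1
        self.open_files[fd] = [name, 0]
        return fd

    def read(self, fd, count):
        name, position = self.open_files[fd]
        data = self.files[name][position:position + count]
        self.open_files[fd][1] = position + len(data)
        return data


files = {"f": b"abcdef"}

stateless = StatelessServer(files)
chunk = stateless.read("f", 2, 3)   # client supplies name and offset itself

stateful = StatefulServer(files)
fd = stateful.open("f")
first = stateful.read(fd, 2)        # server remembers the position
second = stateful.read(fd, 2)

stateful.open_files.clear()         # simulated crash and restart
try:
    stateful.read(fd, 2)
    survived_crash = True
except KeyError:
    survived_crash = False          # the client's fd is now meaningless
```

The stateless read works unchanged after a restart, because the client holds everything needed to repeat the request.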

Caching

Caching

- Goal: hide latency to improve performance for repeated accesses
- Four places to place data
  - Server’s disk
  - Server’s buffer cache
  - Client’s buffer cache
  - Client’s disk

(The last two introduce cache consistency problems!)

Approaches to caching

- Write-through
  - What if another client reads its cached copy?
  - Consistency
    - All accesses will require checking with the server
    - Or the server maintains state and sends invalidations
  - Performance overheads
- Delayed writes
  - Write data can be buffered locally: overwriting does not produce additional overhead
  - Decide when to perform writes (when the cache is full or periodically, and on close)
  - One bulk write is more efficient than lots of little writes
  - Problem: semantics become ambiguous
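A delayed-write cache can be sketched as a dictionary of dirty blocks that is flushed in bulk. The names (`DelayedWriteCache`, `server_write`, `capacity`) are hypothetical, and the flush policy here covers only "cache full" and "on close"; a periodic timer is left out to keep the sketch short.

```python
class DelayedWriteCache:
    """Buffer writes locally and send them to the server in one bulk request."""

    def __init__(self, server_write, capacity):
        self.server_write = server_write  # callable taking {block_number: data}
        self.capacity = capacity          # flush when this many blocks are dirty
        self.dirty = {}

    def write(self, block, data):
        self.dirty[block] = data          # overwriting a dirty block costs nothing extra
        if len(self.dirty) >= self.capacity:
            self.flush()

    def flush(self):
        if self.dirty:
            self.server_write(dict(self.dirty))  # one bulk write
            self.dirty.clear()

    def close(self):
        self.flush()


sent = []  # records each bulk request the "server" receives
cache = DelayedWriteCache(sent.append, capacity=3)
cache.write(0, b"a")
cache.write(0, b"b")   # overwrites block 0 locally, no traffic
cache.write(1, b"c")
before_close = list(sent)
cache.close()
after_close = list(sent)
```

Until the flush happens, other clients cannot see the new data, which is exactly why the slide says the semantics become ambiguous.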

Approaches to caching

- Write on close
  - Admit that we have session semantics
- Centralized control
  - Keep track of who has what open on each node
  - Stateful file system with signaling traffic

Striping

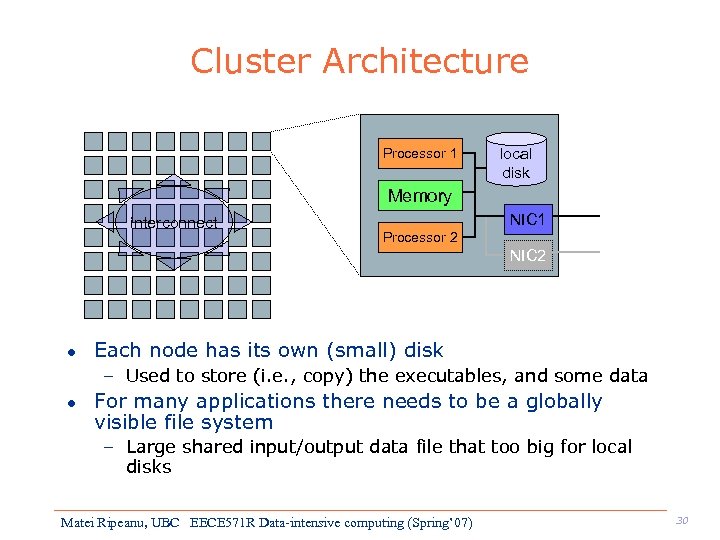

Cluster Architecture

[Figure: Processor 1 and Processor 2, each with memory, a local disk, and a NIC (NIC 1, NIC 2), joined by an interconnect.]

- Each node has its own (small) disk
  - Used to store (i.e., copy) the executables and some data
- For many applications there needs to be a globally visible file system
  - Large shared input/output data files that are too big for local disks

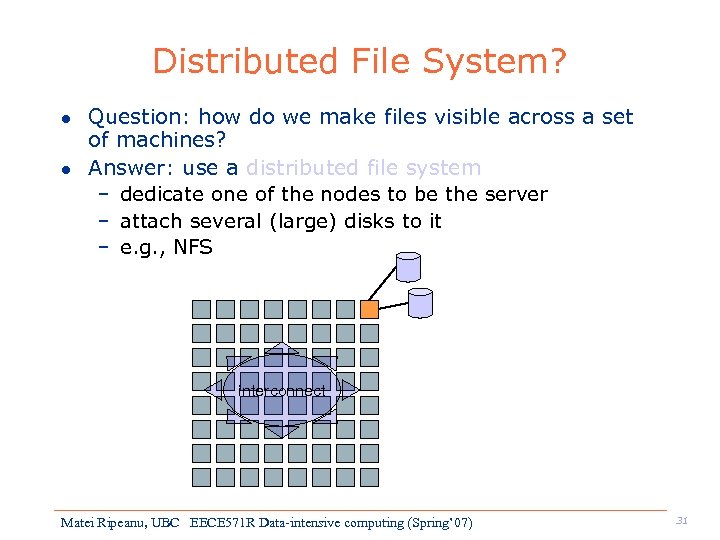

Distributed File System?

- Question: how do we make files visible across a set of machines?
- Answer: use a distributed file system
  - Dedicate one of the nodes to be the server
  - Attach several (large) disks to it
  - e.g., NFS

[Figure: nodes and the file server connected by the interconnect.]

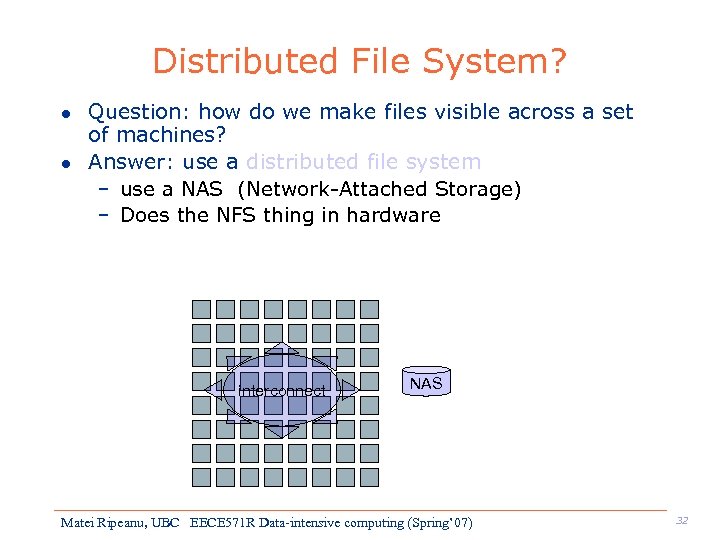

Distributed File System?

- Question: how do we make files visible across a set of machines?
- Answer: use a distributed file system
  - Use a NAS (Network-Attached Storage)
  - Does the NFS thing in hardware

[Figure: nodes and the NAS connected by the interconnect.]

Distributed File System?

- Advantages
  - Simple and well understood
- Disadvantages
  - The file server can be a bottleneck
    - Especially for a cluster that runs many scientific applications at once
  - The intended usage is that a single process reads/writes a file at a time
    - But parallel applications would most likely prefer concurrent reads and concurrent writes
  - Often not built for top performance (NFS)

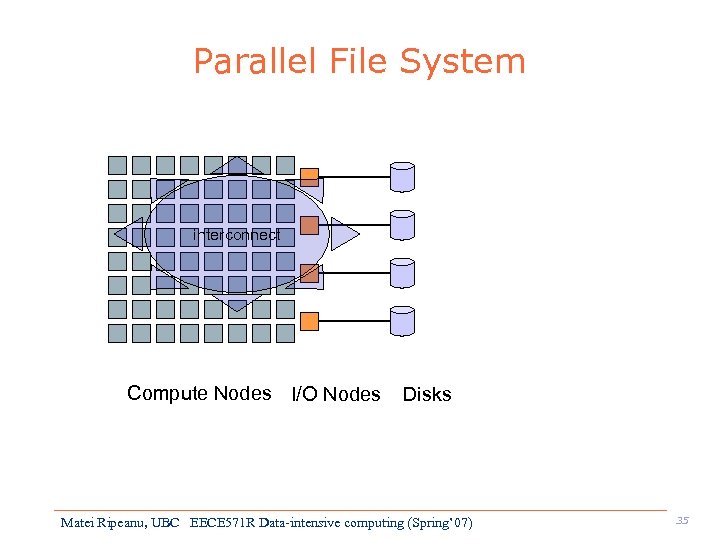

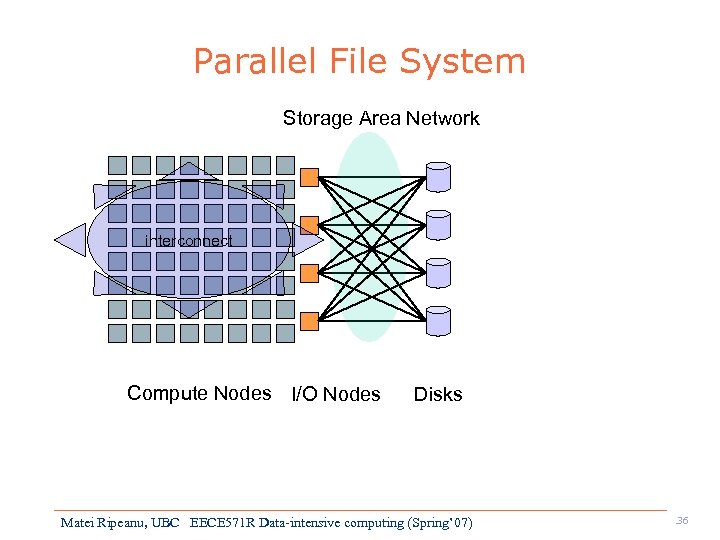

Parallel File System

- Addresses the drawbacks of distributed file systems
  - Multiple disks
    - Each disk has its own I/O channel
    - Disks can be used simultaneously
  - I/O is parallel at both ends
    - Multiple processes writing/reading
    - Multiple disks writing/reading
    - Not necessarily matching numbers

Parallel File System

[Figure: Compute Nodes, I/O Nodes, and Disks, connected by the interconnect.]

Parallel File System

[Figure: Compute Nodes, I/O Nodes, and Disks, connected by the interconnect and a Storage Area Network.]

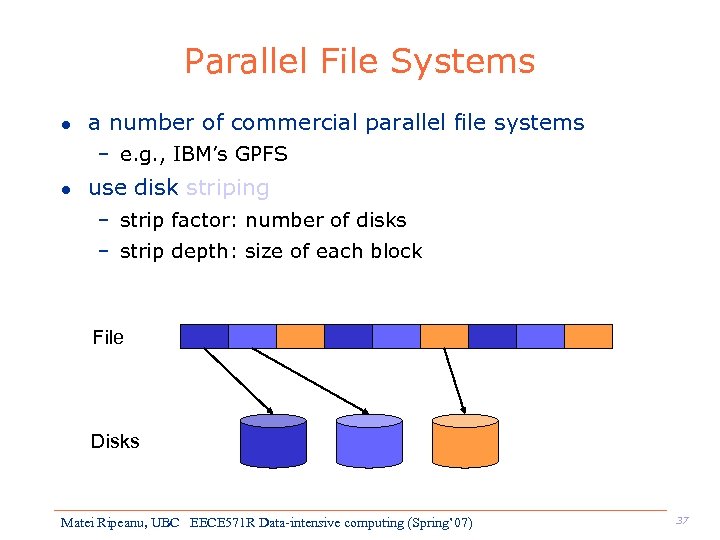

Parallel File Systems

- A number of commercial parallel file systems exist
  - e.g., IBM’s GPFS
- Use disk striping
  - Strip factor: number of disks
  - Strip depth: size of each block

[Figure: a File divided into blocks spread across Disks.]
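Given the strip factor and strip depth defined above, a byte offset in the file maps to a disk and a position on that disk. The sketch below assumes simple round-robin placement with no parity blocks, which is an assumption of this example and not necessarily what GPFS or any RAID level does; the function name `locate` is hypothetical.

```python
def locate(offset, strip_factor, strip_depth):
    """Map a file byte offset to (disk, block_on_disk, offset_in_block).

    Assumes blocks of `strip_depth` bytes are laid out round-robin
    across `strip_factor` disks.
    """
    block = offset // strip_depth          # logical block number in the file
    disk = block % strip_factor            # round-robin disk choice
    block_on_disk = block // strip_factor  # how far down that disk
    return disk, block_on_disk, offset % strip_depth


# Four disks, 64 KiB blocks: logical block 5 lands on disk 1, second block there.
example = locate(5 * 65536 + 10, strip_factor=4, strip_depth=65536)
```

A request that spans `strip_factor` consecutive blocks touches every disk once, which is why large requests benefit most from striping.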

Striping

- Multiple physical disks + separate I/O channels + striping = parallel access to a single file
- Typically implements some form of RAID to combine striping with fault tolerance
  - e.g., RAID 5
- The file system needs to figure out where blocks are located
  - Each I/O node maintains some directory
  - There is a global name service
- Concurrent writes: locking of blocks, not files!

Application view: Parallel Applications and I/O

- Option 1: A single node does all I/O
  - Amdahl’s law says: if your data is large, forget parallel speedup (B = proportion of the program that is sequential)
- Option 2: Before the application runs, split the input data and store it on the nodes’ local disks, then gather the output at the end
  - Cumbersome
  - Storage may not be sufficient anyway
- Option 3: Do parallel I/O with a parallel file system
  - Allows access to non-contiguous pieces of data in parallel
    - e.g., interleaved pieces of a matrix for a cyclic data distribution
  - But the UNIX API is not convenient for writing parallel applications and accessing a parallel file system
    - No complex access patterns
    - No collective I/O
    - Different APIs make code non-portable
  - Solution: use MPI I/O (part of MPI-2)
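To see why Option 1 limits speedup, it helps to put numbers into Amdahl's law. With B the sequential proportion of the program (the slide's symbol) and n processors (my notation), the standard form is speedup = 1 / (B + (1 - B) / n). The sketch below treats single-node I/O as the sequential part, which is a simplifying assumption.

```python
def amdahl_speedup(sequential_fraction, processors):
    """Speedup predicted by Amdahl's law: 1 / (B + (1 - B) / n)."""
    b = sequential_fraction
    return 1.0 / (b + (1.0 - b) / processors)


# If half the run time is sequential I/O, even a huge machine
# cannot make the program twice as fast.
half_io = amdahl_speedup(0.5, 1_000_000)

# With 10% sequential I/O, the speedup can never exceed 10,
# no matter how many processors are added.
tenth_io = amdahl_speedup(0.1, 1024)
```

The bound is 1 / B, so the larger the share of time spent in single-node I/O, the less the remaining processors can help.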

Striping Summary (from an application/app developer viewpoint)

- If your application is stuck doing I/O for most of its time
  - Buy I/O hardware; do not use NFS but rather some parallel file system
  - Write code using MPI I/O
    - All processes should do the same amount of I/O
    - Make I/O requests as large as possible to benefit from striping
  - Performance benefits compared to the naive solution can be orders of magnitude
- Other striping solutions
  - Striping FTP server

Next

- Case Study: Freeloader
- Case Study on data access patterns: small worlds and data sharing graph

Next classes

* Volunteers: discussion leader for Thursday.

Tuesday: DFS

- Scale and Performance in a Distributed File System, J. H. Howard et al., ACM Transactions on Computer Systems, Feb. 1988, Vol. 6, No. 1, pp. 51-81. [pdf]
- The Google File System, Ghemawat et al., SOSP 2003. [pdf]

Thursday: Data replication

- Efficient Replica Maintenance for Distributed Storage Systems, Byung-Gon Chun et al., NSDI ’06. [pdf]
- Drafting Behind Akamai (Travelocity-Based Detouring), Ao-Jan Su et al., SIGCOMM ’06. [pdf]
