7ca6938889172bc94cdc647156b1114b.ppt

- Number of slides: 37

Distributed Analysis System in the ATLAS Experiment
Minsuk Kim (University of Alberta)
KISTI Seminar, 24 Jun 2008

Outline
- Large Hadron Collider (LHC)
  - ATLAS Experiment and Computing
- Distributed Analysis Model in ATLAS
  - Grid Infrastructure
- Distributed Analysis Tools
  - Ganga and Pathena
- Conclusions

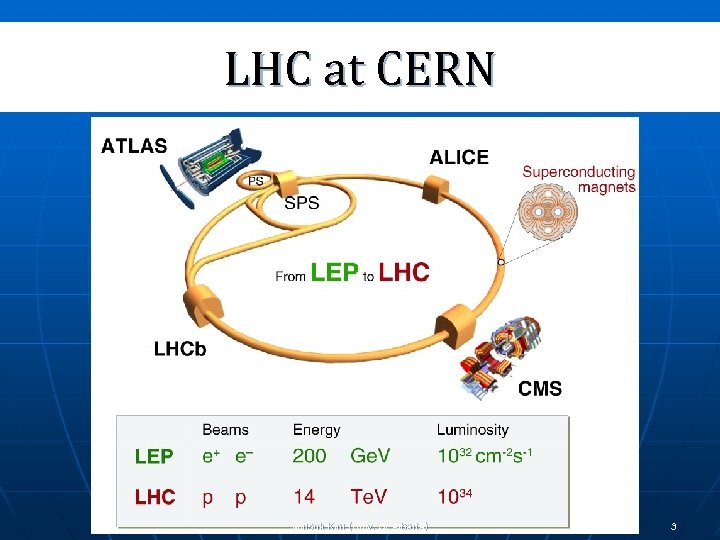

LHC at CERN

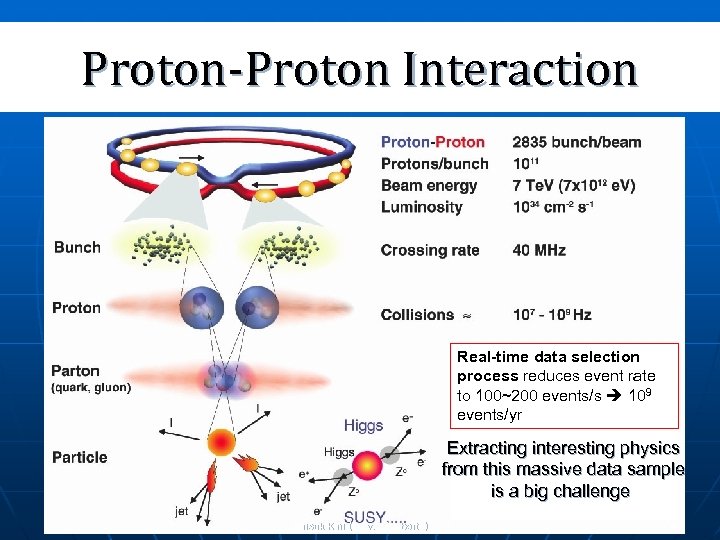

Proton-Proton Interaction
- The real-time data selection process reduces the event rate to 100~200 events/s (about 10^9 events/yr)
- Extracting interesting physics from this massive data sample is a big challenge

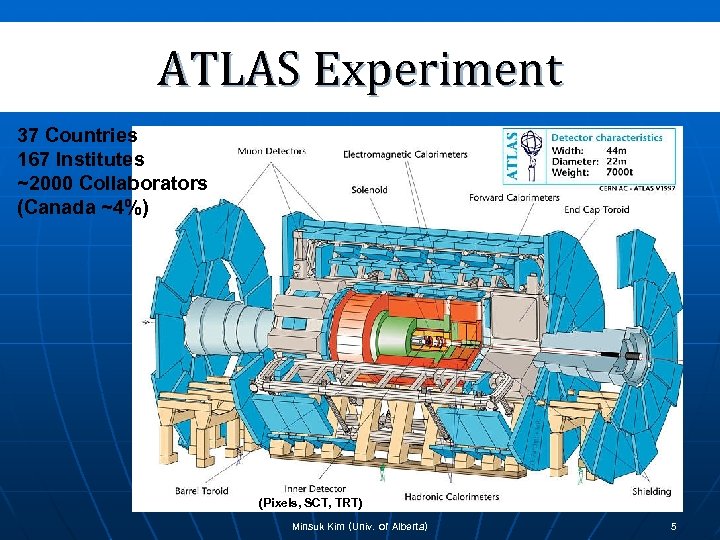

ATLAS Experiment
- 37 countries, 167 institutes, ~2000 collaborators (Canada ~4%)
- Detector figure labels: Pixels, SCT, TRT

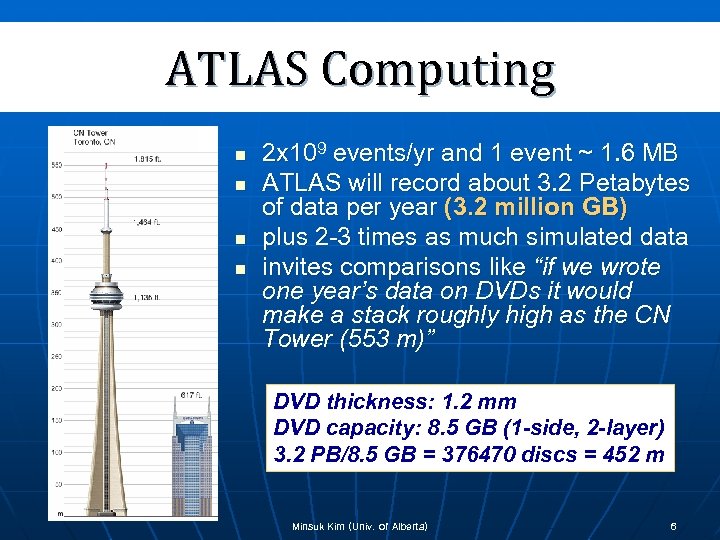

ATLAS Computing
- 2 x 10^9 events/yr and 1 event ~ 1.6 MB
- ATLAS will record about 3.2 Petabytes of data per year (3.2 million GB), plus 2-3 times as much simulated data
- This invites comparisons like "if we wrote one year's data on DVDs it would make a stack roughly as high as the CN Tower (553 m)"
  - DVD thickness: 1.2 mm
  - DVD capacity: 8.5 GB (1-side, 2-layer)
  - 3.2 PB / 8.5 GB = 376470 discs = 452 m
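The arithmetic on this slide can be checked with a few lines of Python. This is only a back-of-envelope sketch using the slide's own inputs and decimal units (1 PB = 10^6 GB = 10^9 MB); the function names are made up for illustration, and the disc count is truncated to a whole number as on the slide.

```python
# Back-of-envelope check of the data-volume numbers quoted on the slide.
# Decimal units are assumed: 1 PB = 1e6 GB = 1e9 MB.

EVENTS_PER_YEAR = 2e9      # events recorded per year (from the slide)
EVENT_SIZE_MB = 1.6        # size of one event in MB (from the slide)
DVD_CAPACITY_GB = 8.5      # single-sided, dual-layer DVD
DVD_THICKNESS_MM = 1.2
CN_TOWER_HEIGHT_M = 553


def yearly_volume_pb(events=EVENTS_PER_YEAR, event_size_mb=EVENT_SIZE_MB):
    """Raw data volume per year in petabytes."""
    return events * event_size_mb / 1e9  # MB -> PB


def dvd_stack(volume_pb, capacity_gb=DVD_CAPACITY_GB,
              thickness_mm=DVD_THICKNESS_MM):
    """Return (number of whole discs, stack height in metres)."""
    discs = int(volume_pb * 1e6 / capacity_gb)  # truncated, as on the slide
    height_m = discs * thickness_mm / 1000.0
    return discs, height_m


if __name__ == "__main__":
    volume = yearly_volume_pb()
    discs, height = dvd_stack(volume)
    print(f"{volume:.1f} PB/yr -> {discs} discs -> {height:.0f} m "
          f"(CN Tower: {CN_TOWER_HEIGHT_M} m)")
```

The stack comes out somewhat shorter than the CN Tower, which matches the slide's "roughly as high as" wording.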

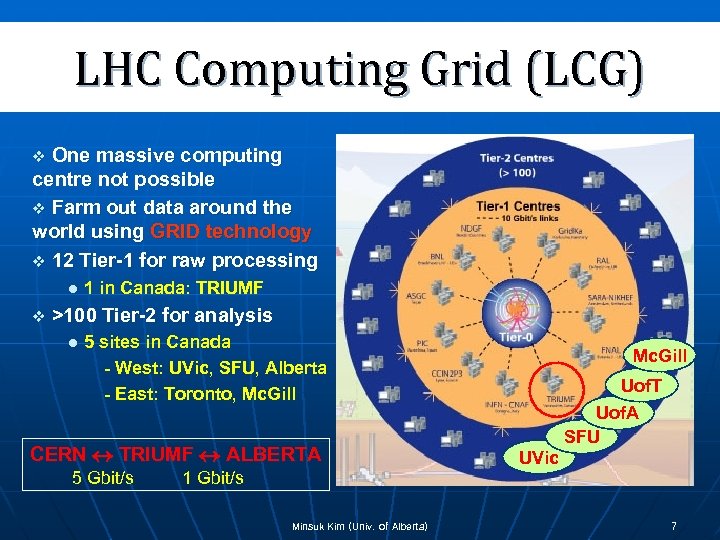

LHC Computing Grid (LCG)
- One massive computing centre is not possible
- Farm out data around the world using Grid technology
- 12 Tier-1 for raw processing
  - 1 in Canada: TRIUMF
- >100 Tier-2 for analysis
  - 5 sites in Canada (West: UVic, SFU, Alberta; East: Toronto, McGill)
- Network diagram labels: CERN, TRIUMF, ALBERTA, McGill, UofT, UofA, SFU, UVic; links of 5 Gbit/s and 1 Gbit/s

Distributed Analysis Model in ATLAS

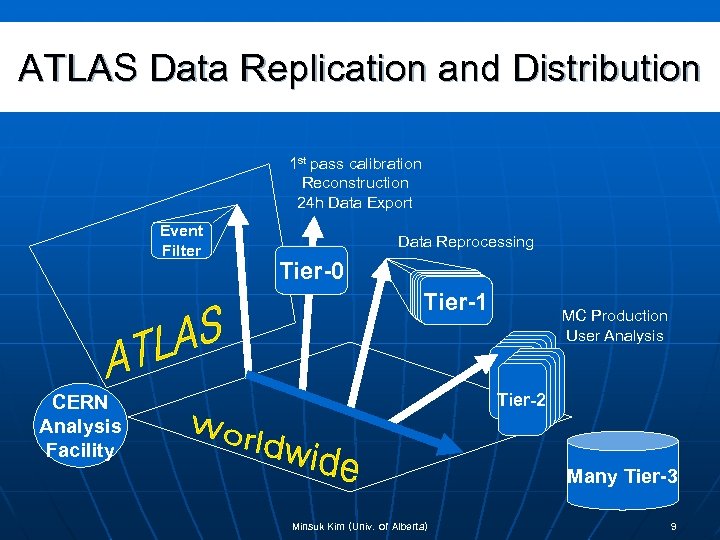

ATLAS Data Replication and Distribution
- Diagram labels: Event Filter, Tier-0, 1st-pass calibration, Reconstruction, 24 h, Data Export, Tier-1, Data Reprocessing, Tier-2, MC Production, User Analysis, CERN Analysis Facility, many Tier-3

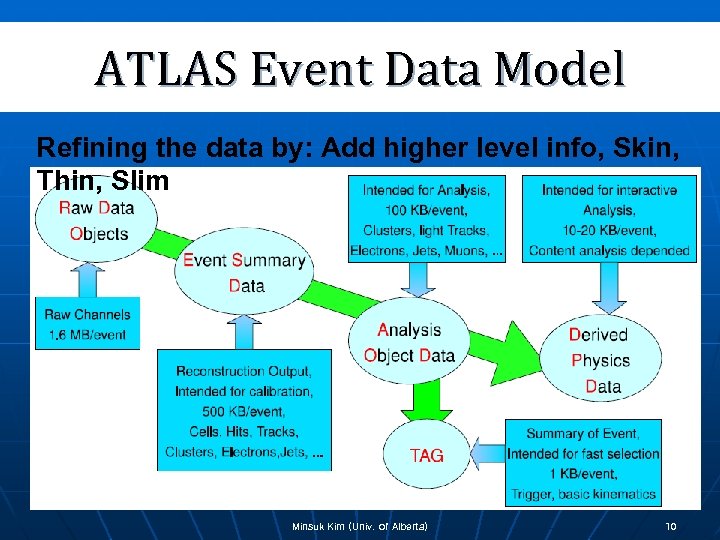

ATLAS Event Data Model
- Refining the data by: adding higher-level info, skimming, thinning, slimming

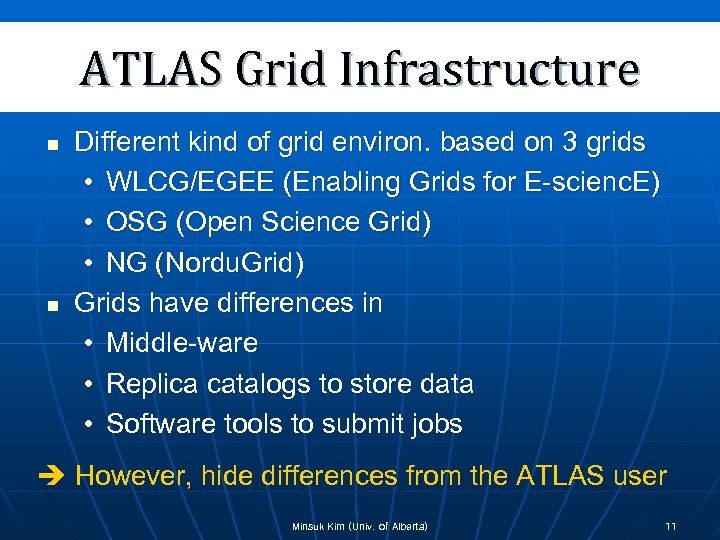

ATLAS Grid Infrastructure
- Different kinds of grid environment, based on 3 grids
  - WLCG/EGEE (Enabling Grids for E-sciencE)
  - OSG (Open Science Grid)
  - NG (NorduGrid)
- The grids differ in
  - Middleware
  - Replica catalogs to store data
  - Software tools to submit jobs
- However: hide these differences from the ATLAS user

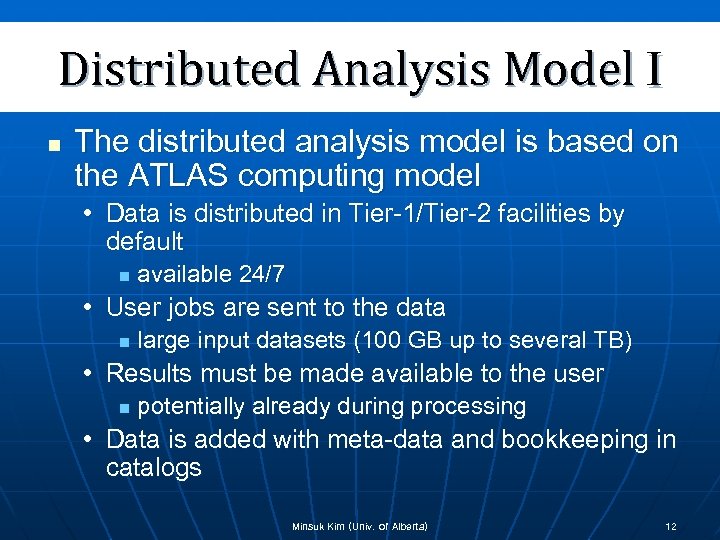

Distributed Analysis Model I
- The distributed analysis model is based on the ATLAS computing model
  - Data is distributed to Tier-1/Tier-2 facilities by default
    - available 24/7
  - User jobs are sent to the data
    - large input datasets (100 GB up to several TB)
  - Results must be made available to the user
    - potentially already during processing
  - Data is annotated with metadata and tracked by bookkeeping in catalogs

Distributed Analysis Model II
- Need for: Distributed Data Management (DDM)
  - Managed by the DDM system DQ2 (Don-Quijote 2)
  - System based on datasets, which are collections of files
    - a file exists in the context of datasets
  - Automated file management, distribution and archiving throughout the whole grid using a Central Catalog, FTS, LFCs
  - Random access needs a pre-filtering of the data of interest
    - e.g. trigger or ID streams, or TAGs (event-level metadata)

Distributed Data Management
- DQ2: data management system for all distributed ATLAS data
  - Supports all three ATLAS Grid flavors
  - Manages all data flows (EF -> Tier-0 -> Grid Tiers -> Institutes -> users)
  - Moves data between grid sites; query and retrieval of data
  - Data is grouped into datasets, based on metadata such as run period
    - dataset name: Project.NNNN.PhRef.ProductionStep.Format.Version
    - user-defined datasets should have the prefix user.FirstnameLastname
- DQ2 end-user tools (the DQ2 dataset browser)
  - dq2_ls to list datasets matching a given pattern
  - dq2_get to copy data from local storage or over the grid
  - dq2_put to create user-defined datasets
    - dq2 can see only Tier-1/Tier-2 SEs (castor, dCache, DPM), so files need to be copied to an SE first and then registered in the DQ2 system
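To make the six-field naming convention concrete, here is a small Python sketch that splits a dataset name into its fields and filters names by a shell-style pattern. It is an illustration only and is not part of DQ2: the `DatasetName` type and the helper functions are hypothetical, and the example names are the ones quoted in the pathena and Ganga examples of this talk.

```python
# Illustrative parser for the dataset naming convention
#   Project.NNNN.PhRef.ProductionStep.Format.Version
# Hypothetical helpers; this is not the DQ2 client API.
from fnmatch import fnmatch
from typing import List, NamedTuple


class DatasetName(NamedTuple):
    project: str
    number: str
    phys_ref: str
    production_step: str
    data_format: str
    version: str


def parse_dataset_name(name: str) -> DatasetName:
    """Split a dataset name into its six dot-separated fields."""
    parts = name.split(".")
    if len(parts) != 6:
        raise ValueError(f"expected 6 dot-separated fields, got {len(parts)}")
    return DatasetName(*parts)


def is_user_dataset(name: str) -> bool:
    """User-defined datasets carry the 'user.' prefix."""
    return name.startswith("user.")


def match_datasets(names: List[str], pattern: str) -> List[str]:
    """Shell-style pattern filter, similar in spirit to a listing tool."""
    return [n for n in names if fnmatch(n, pattern)]


if __name__ == "__main__":
    name = "fdr08_run1.0003067.StreamJet.merge.AOD.o1_r8_t1"
    print(parse_dataset_name(name))
    print(is_user_dataset("user.MinsukKim.fdr08_run1"))
```

Production datasets follow the six-field scheme, while user datasets only need the prefix, so the two checks are kept separate.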

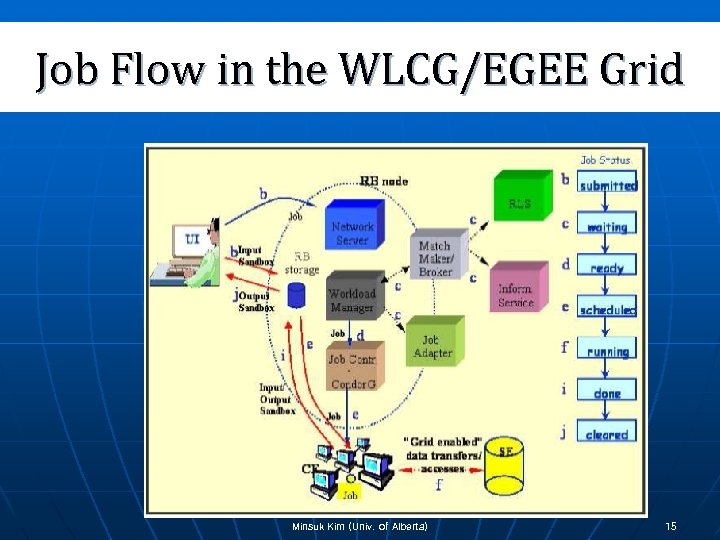

Job Flow in the WLCG/EGEE Grid

Grid Job Submission
- Naive assumption: Grid ≈ large batch system
  - Provide a complicated job configuration (JDL file)
  - Find suitable Athena software, installed as distribution kits in the Grid
  - Locate the data on different storage elements
  - Job splitting, monitoring and book-keeping
  - etc.
- Need for automation and integration of the various components

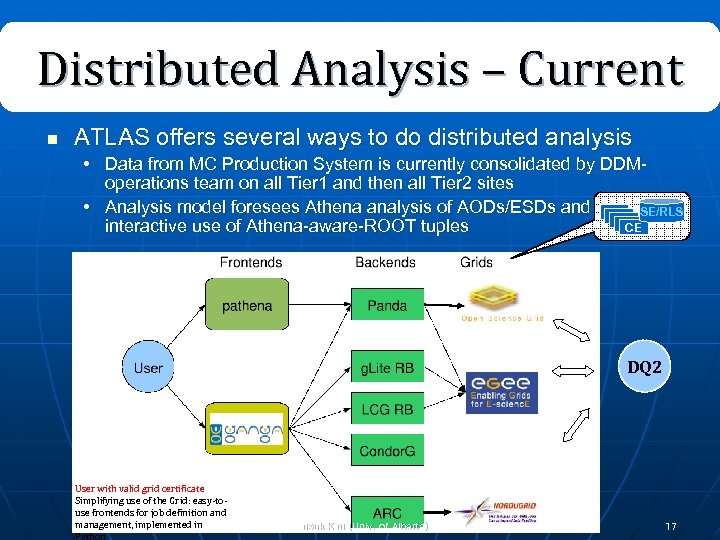

Distributed Analysis: Current
- ATLAS offers several ways to do distributed analysis
  - Data from the MC Production System is currently consolidated by the DDM operations team on all Tier-1 and then all Tier-2 sites
  - The analysis model foresees Athena analysis of AODs/ESDs and interactive use of Athena-aware-ROOT tuples
- Simplifying use of the Grid: easy-to-use frontends for job definition and management, implemented in
- Diagram labels: SE/RLS, CE, DQ2, User with valid grid certificate

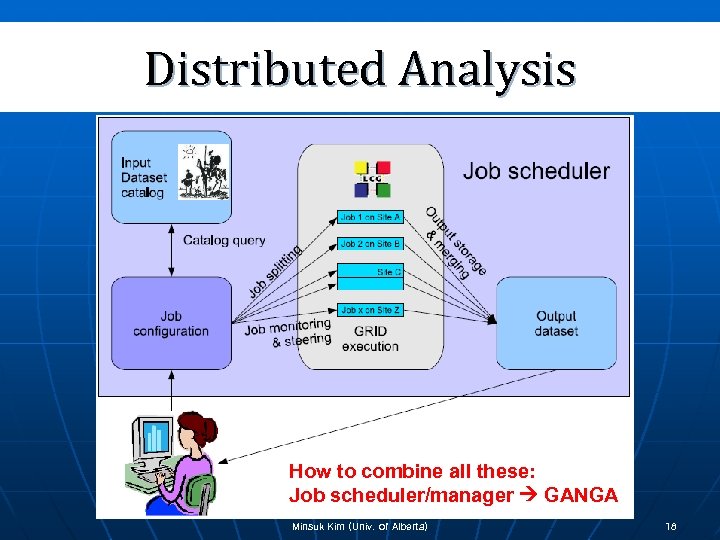

Distributed Analysis
- How to combine all these: job scheduler/manager GANGA

Distributed Analysis with Ganga & Pathena

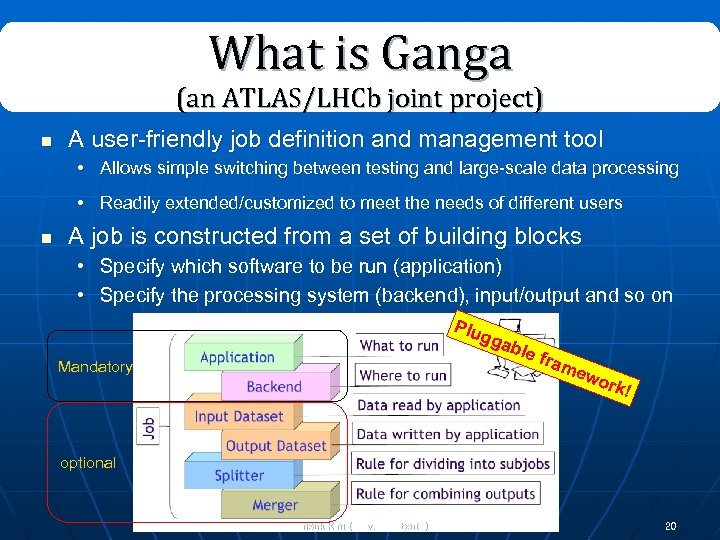

What is Ganga (an ATLAS/LHCb joint project)
- A user-friendly job definition and management tool
  - Allows simple switching between testing and large-scale data processing
  - Readily extended/customized to meet the needs of different users
- A job is constructed from a set of building blocks
  - Specify which software is to be run (application)
  - Specify the processing system (backend), input/output and so on
- Pluggable framework! (diagram labels: mandatory, optional)

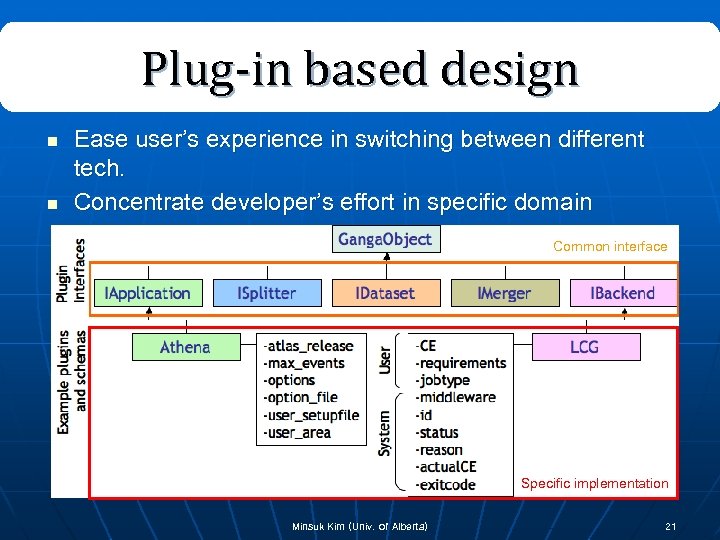

Plug-in based design
- Eases the user's experience in switching between different technologies
- Concentrates the developer's effort in a specific domain
- Diagram labels: common interface, specific implementation
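The "common interface, specific implementation" idea can be sketched in plain Python. The classes below are a toy model written for this illustration: they are not Ganga's actual classes, and the command strings they produce are placeholders. The point is only that a job is assembled from an application block and a backend block, and that swapping the backend does not change the rest of the job.

```python
# Toy model of a plug-in based job definition (hypothetical classes,
# not the real Ganga implementation).
from abc import ABC, abstractmethod
from typing import Iterable


class Application(ABC):
    """Common interface: what should be run."""

    @abstractmethod
    def command(self) -> str:
        ...


class Backend(ABC):
    """Common interface: where it should be run."""

    @abstractmethod
    def submit(self, command: str) -> str:
        ...


class ToyAthena(Application):
    def __init__(self, option_file: str, max_events: int):
        self.option_file = option_file
        self.max_events = max_events

    def command(self) -> str:
        # Placeholder string, not a real Athena invocation.
        return f"run {self.option_file} max_events={self.max_events}"


class ToyLocal(Backend):
    def submit(self, command: str) -> str:
        return f"local: {command}"


class ToyGrid(Backend):
    def __init__(self, sites: Iterable[str]):
        self.sites = list(sites)

    def submit(self, command: str) -> str:
        return f"grid[{','.join(self.sites)}]: {command}"


class Job:
    """A job is built from interchangeable building blocks."""

    def __init__(self, application: Application, backend: Backend):
        self.application = application
        self.backend = backend

    def submit(self) -> str:
        return self.backend.submit(self.application.command())


if __name__ == "__main__":
    job = Job(ToyAthena("testJetReco.py", 1000), ToyLocal())
    print(job.submit())                        # test locally first
    job.backend = ToyGrid(["TRIUMF", "ALBERTA"])
    print(job.submit())                        # then switch to the grid
```

Only the backend assignment changes between the local test and the grid run, which is the switching behaviour the slide describes.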

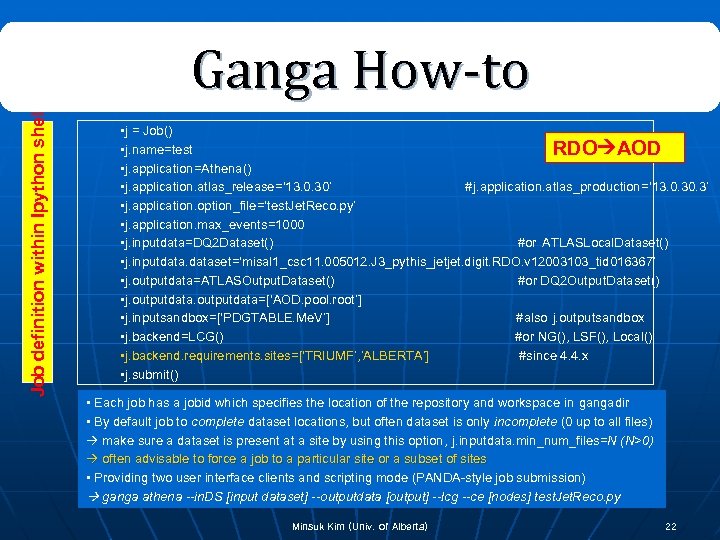

Ganga How-to: job definition within the IPython shell
- `j = Job()`
- `j.name = 'test RDO AOD'`
- `j.application = Athena()`
- `j.application.atlas_release = '13.0.30'` (`#j.application.atlas_production = '13.0.3'`)
- `j.application.option_file = 'testJetReco.py'`
- `j.application.max_events = 1000`
- `j.inputdata = DQ2Dataset()` (or `ATLASLocalDataset()`)
- `j.inputdataset = 'misal1_csc11.005012.J3_pythis_jetjet.digit.RDO.v12003103_tid016367'`
- `j.outputdata = ATLASOutputDataset()` (or `DQ2OutputDataset()`)
- `j.outputdata = ['AOD.pool.root']`
- `j.inputsandbox = ['PDGTABLE.MeV']` (also `j.outputsandbox`)
- `j.backend = LCG()` (or `NG()`, `LSF()`, `Local()`)
- `j.backend.requirements.sites = ['TRIUMF', 'ALBERTA']` (since 4.4.x)
- `j.submit()`

Notes
- Each job has a jobid, which specifies the location of its repository and workspace in gangadir
- By default a job is sent to complete dataset locations, but often a dataset is only incomplete at a site (0 up to all files). Make sure a dataset is present at a site with `j.inputdata.min_num_files=N` (N>0). It is often advisable to force a job to a particular site or a subset of sites
- Two user interface clients and a scripting mode (PANDA-style job submission) are provided: `ganga athena --inDS [input dataset] --outputdata [output] --lcg --ce [nodes] testJetReco.py`
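The effect of a minimum-file requirement combined with a site restriction can be illustrated with a short, self-contained Python sketch. This is not how Ganga implements `min_num_files`; the function, the replica map and the file counts are invented for the example, and it simply keeps the sites that hold at least N files of the dataset, with the sites holding the most data listed first.

```python
# Hypothetical sketch of site selection for an incompletely replicated
# dataset. The replica counts below are invented example data.
from typing import Dict, Iterable, List, Optional


def eligible_sites(files_at_site: Dict[str, int],
                   min_num_files: int = 1,
                   allowed_sites: Optional[Iterable[str]] = None) -> List[str]:
    """Sites holding at least min_num_files files, most data first."""
    allowed = None if allowed_sites is None else set(allowed_sites)
    candidates = [
        (count, site)
        for site, count in files_at_site.items()
        if count >= min_num_files and (allowed is None or site in allowed)
    ]
    # Sort by descending file count, then by name for a stable order.
    candidates.sort(key=lambda item: (-item[0], item[1]))
    return [site for _, site in candidates]


if __name__ == "__main__":
    replicas = {"CERN": 40, "TRIUMF": 40, "ALBERTA": 12, "SFU": 0}
    print(eligible_sites(replicas, min_num_files=10))
    print(eligible_sites(replicas, min_num_files=10,
                         allowed_sites=["TRIUMF", "ALBERTA"]))
```

A site with zero files is dropped as soon as N is at least 1, which is why the slide asks for N>0.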

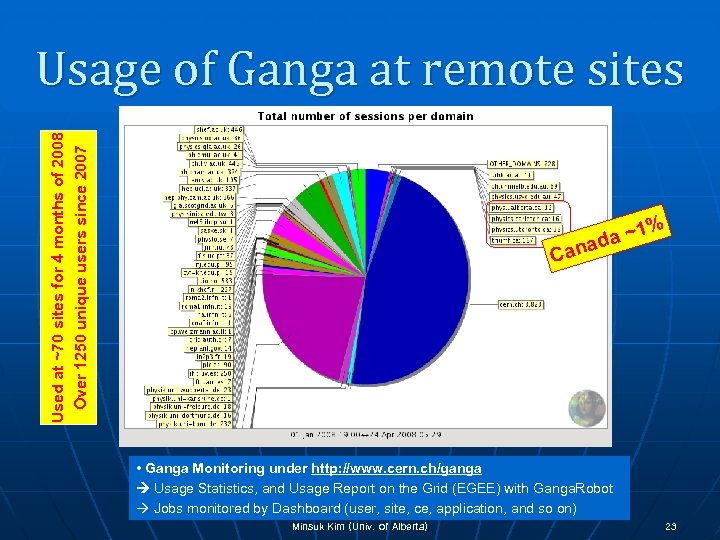

Usage of Ganga at remote sites
- Used at ~70 sites during 4 months of 2008
- Over 1250 unique users since 2007
- Canada ~1%
- Ganga monitoring under http://www.cern.ch/ganga: usage statistics, and usage report on the Grid (EGEE) with GangaRobot
- Jobs monitored by the Dashboard (user, site, CE, application, and so on)

Ganga Activities
- Main users
- Other activities (figure labels: HARP, Garfield)

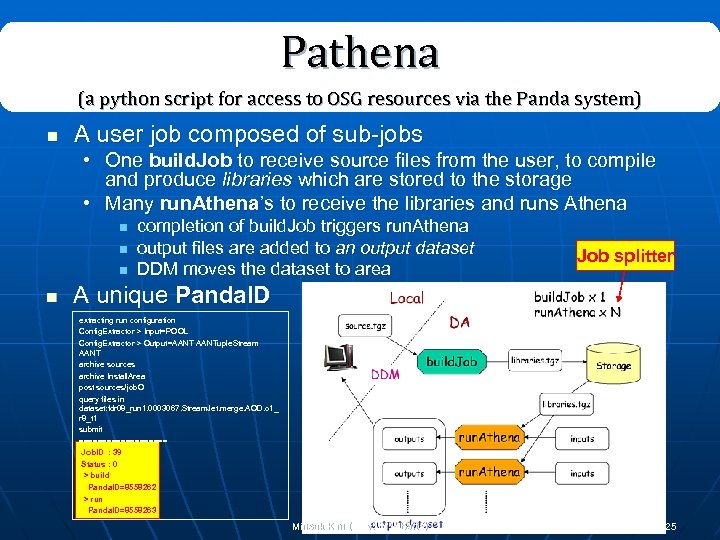

Pathena (a Python script for access to OSG resources via the Panda system)
- A user job is composed of sub-jobs
  - One buildJob receives source files from the user, compiles them and produces libraries, which are stored on the storage
  - Many runAthena jobs receive the libraries and run Athena
- Completion of buildJob triggers runAthena
- Output files are added to an output dataset
- DDM moves the dataset to area
- Diagram labels: job splitter, a unique PandaID
- Example pathena output:
  - extracting run configuration
  - ConfigExtractor > Input=POOL
  - ConfigExtractor > Output=AANTupleStream AANT
  - archive sources
  - archive InstallArea
  - post sources/jobO
  - query files in dataset: fdr08_run1.0003067.StreamJet.merge.AOD.o1_r8_t1
  - submit
  - JobID : 39
  - Status : 0
  - > build PandaID=8558262
  - > run PandaID=8558263

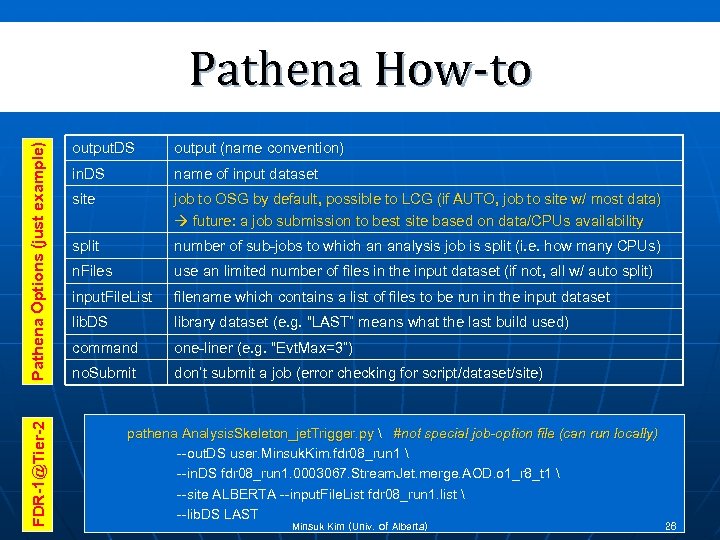

Pathena How-to: Pathena options (just an example; figure label: FDR-1@Tier-2)
- outputDS: output dataset (follows the naming convention)
- inDS: name of the input dataset
- site: jobs go to OSG by default, LCG is also possible (if AUTO, the job goes to the site with the most data); future: job submission to the best site based on data/CPU availability
- split: number of sub-jobs into which an analysis job is split (i.e. how many CPUs)
- nFiles: use a limited number of files from the input dataset (if not given, all files with automatic splitting)
- inputFileList: name of a file which contains a list of the files to be run over in the input dataset
- libDS: library dataset (e.g. "LAST" means what the last build used)
- command: one-liner (e.g. "EvtMax=3")
- noSubmit: don't submit a job (error checking for script/dataset/site)

Example (the job-option file is not special and can also run locally):
`pathena AnalysisSkeleton_jetTrigger.py --outDS user.MinsukKim.fdr08_run1 --inDS fdr08_run1.0003067.StreamJet.merge.AOD.o1_r8_t1 --site ALBERTA --inputFileList fdr08_run1.list --libDS LAST`
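How a file limit and a split count interact can be shown with a small Python sketch. This is an assumption-laden illustration, not pathena's actual splitting code: the function name is hypothetical, the file names are invented, and files are simply cut into contiguous chunks of nearly equal size.

```python
# Hypothetical illustration of splitting an input file list into sub-jobs.
# Not pathena's real algorithm; contiguous near-equal chunks are assumed.
from typing import List, Optional, Sequence


def split_files(files: Sequence[str], n_subjobs: int,
                n_files: Optional[int] = None) -> List[List[str]]:
    """Limit the input to n_files (if given), then split into sub-jobs."""
    if n_subjobs < 1:
        raise ValueError("n_subjobs must be at least 1")
    selected = list(files) if n_files is None else list(files)[:n_files]
    n = min(n_subjobs, len(selected))  # never create empty sub-jobs
    if n == 0:
        return []
    base, extra = divmod(len(selected), n)
    chunks, start = [], 0
    for i in range(n):
        size = base + (1 if i < extra else 0)  # first 'extra' chunks get one more
        chunks.append(selected[start:start + size])
        start += size
    return chunks


if __name__ == "__main__":
    files = [f"file_{i}.root" for i in range(7)]  # invented file names
    for k, chunk in enumerate(split_files(files, n_subjobs=3)):
        print(f"sub-job {k}: {chunk}")
```

Each chunk would correspond to one run job, all of them started once the single build job has finished.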

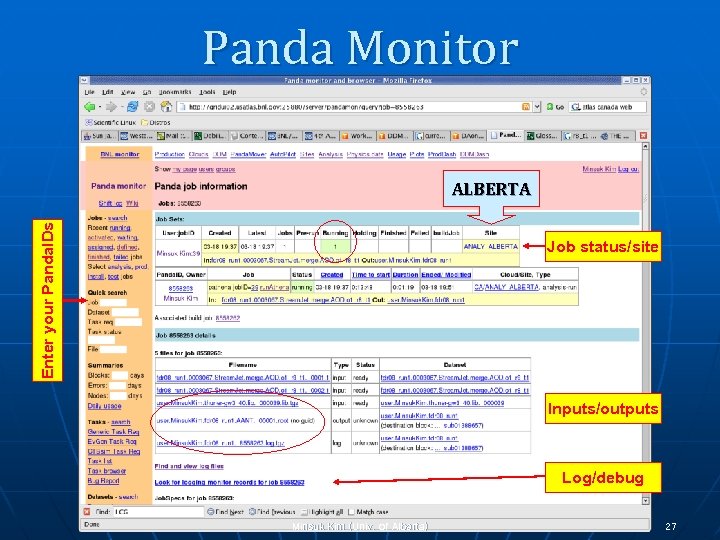

Panda Monitor
- Screenshot labels: enter your PandaIDs, ALBERTA, job status/site, inputs/outputs, log/debug

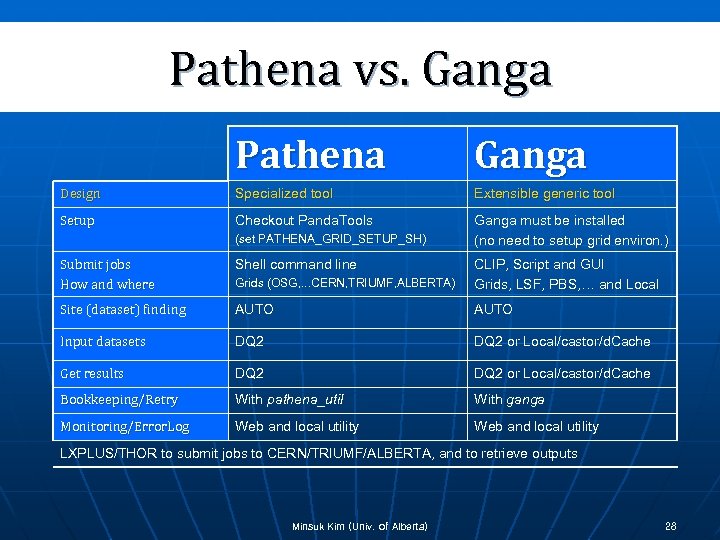

Pathena vs. Ganga

| | Pathena | Ganga |
|---|---|---|
| Design | Specialized tool | Extensible generic tool |
| Setup | Checkout PandaTools (no need to set up the grid environment) | Ganga must be installed (set PATHENA_GRID_SETUP_SH) |
| Submit jobs: how | Shell command line | CLIP, script and GUI |
| Submit jobs: where | Grids (OSG, ... CERN, TRIUMF, ALBERTA) | Grids, LSF, PBS, ... and Local |
| Site (dataset) finding | AUTO | AUTO |
| Input datasets | DQ2 or Local/castor/dCache | DQ2 or Local/castor/dCache |
| Get results | DQ2 or Local/castor/dCache | DQ2 or Local/castor/dCache |
| Bookkeeping/Retry | With pathena_util | With ganga |
| Monitoring/ErrorLog | Web and local utility | Web and local utility |

LXPLUS/THOR are used to submit jobs to CERN/TRIUMF/ALBERTA and to retrieve outputs.

Conclusion I
- The generic grid job submission frameworks can be used with DDM/DQ2 to perform distributed analysis
  - Use both Pathena and Ganga at the LXPLUS and THOR clusters
  - Submit jobs to CERN, TRIUMF, ALBERTA and OSG/NG sites
  - Ganga is available with Local, Batch, and Grid systems
- Distributed analysis in ATLAS is evolving rapidly
  - Many key components, like the DDM system, have come online (data management is a central issue)
  - The multi-pronged approach to distributed analysis has encouraged one submission system to learn from another and has ultimately produced a more robust and feature-rich distributed analysis system

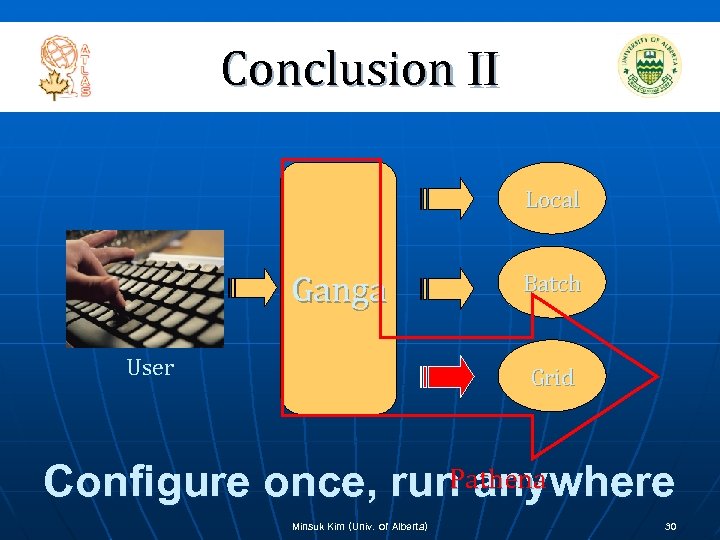

Conclusion II
- Configure once, run anywhere
- Diagram labels: User, Ganga, Pathena, Local, Batch, Grid

Tier-2@ALBERTA
- THOR Linux Computing Cluster
  - Began around 1998 with 42 dual Pentium II/III machines (100 Mb/s Ethernet)
  - Beowulf-type, cheaper than S-computers by more than a factor of ten
- Current hardware/software configuration (high-speed Gigabit link)
  - 3 head-nodes for cluster/interactive use, 1 Torque/Maui server node
  - Many server nodes for the Grid Compute Element
  - 74 dual-processor compute nodes, 250 work nodes (200 AMD Opteron)
  - 4 data storage nodes (~6 TB of RAID disk storage)
  - 4 iSCSI storage arrays (~22 TB) and 2 mass storage tape systems
  - Scientific Linux 3, 4 (and Fedora Core 2, 3), various applications and tools
- Multi-purpose computing facility
  - Prototype of a PC-based Event Filter sub-farm (high-level trigger system)
  - Multiple-serial Monte Carlo production (Tier-2)
  - Fully integrated with the LCG and Grid Canada projects for distributed computing

Backup

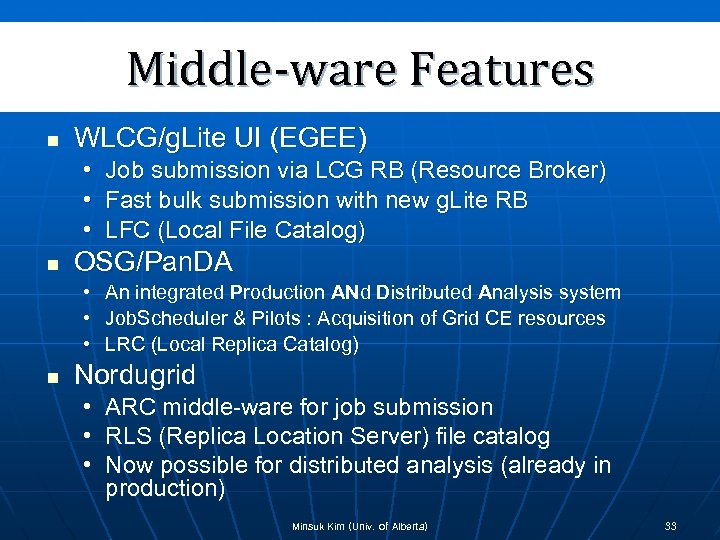

Middleware Features
- WLCG/gLite UI (EGEE)
  - Job submission via the LCG RB (Resource Broker)
  - Fast bulk submission with the new gLite RB
  - LFC (LCG File Catalog)
- OSG/PanDA
  - An integrated Production ANd Distributed Analysis system
  - JobScheduler and pilots: acquisition of Grid CE resources
  - LRC (Local Replica Catalog)
- NorduGrid
  - ARC middleware for job submission
  - RLS (Replica Location Service) file catalog
  - Now possible for distributed analysis (already in production)

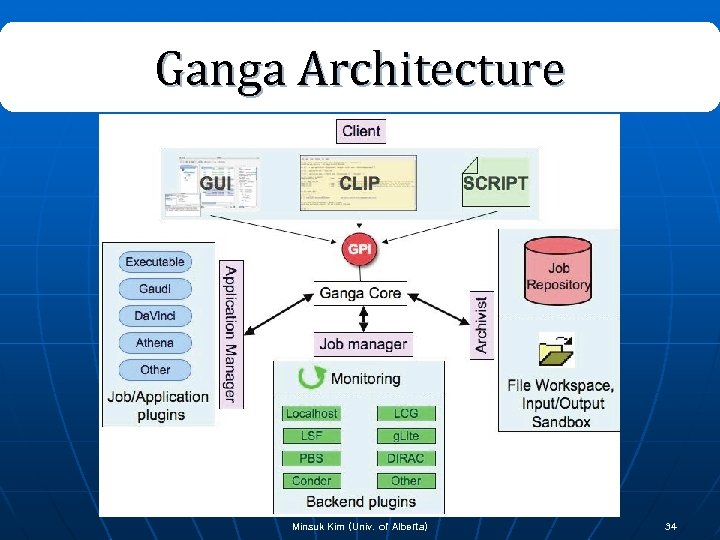

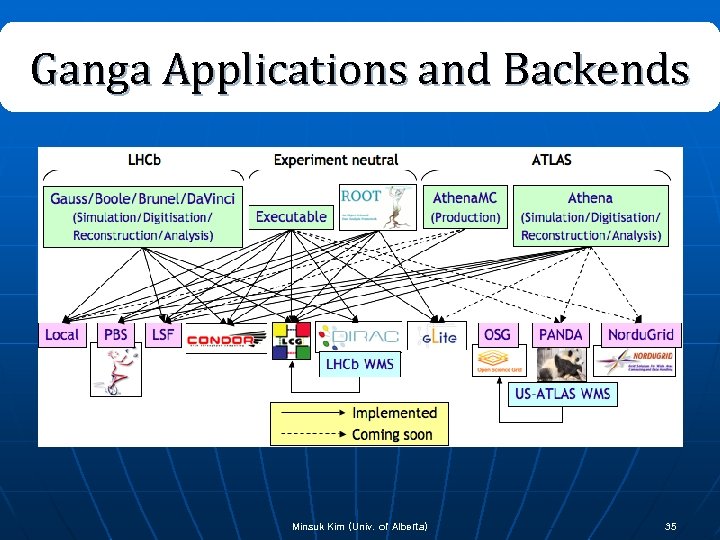

Ganga Architecture

Ganga Applications and Backends

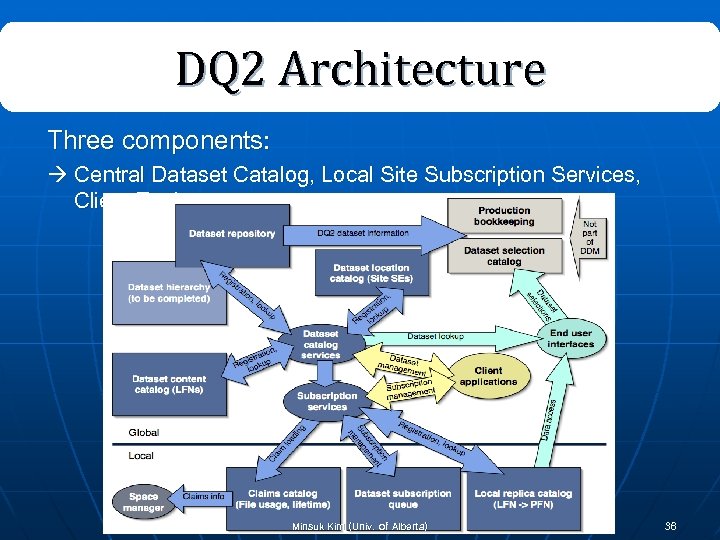

DQ2 Architecture
- Three components: Central Dataset Catalog, Local Site Subscription Services, Client Tools

What is a Grid?
- The key criteria:
  - Coordinates distributed resources ...
  - Uses standard, open, general-purpose protocols and interfaces ...
  - Delivers non-trivial qualities of service
- What is not a Grid?
  - A cluster, a network-attached storage device, a scientific instrument, a network, etc.
  - Each is an important component of a Grid, but by itself does not constitute a Grid
