fa22a7bdab52953b6e41b92112f017ee.ppt

- Number of slides: 19

Discriminative Topic Modeling based on Manifold Learning. Seungil Huh and Stephen E. Fienberg. July 26, 2010, SIGKDD '10.

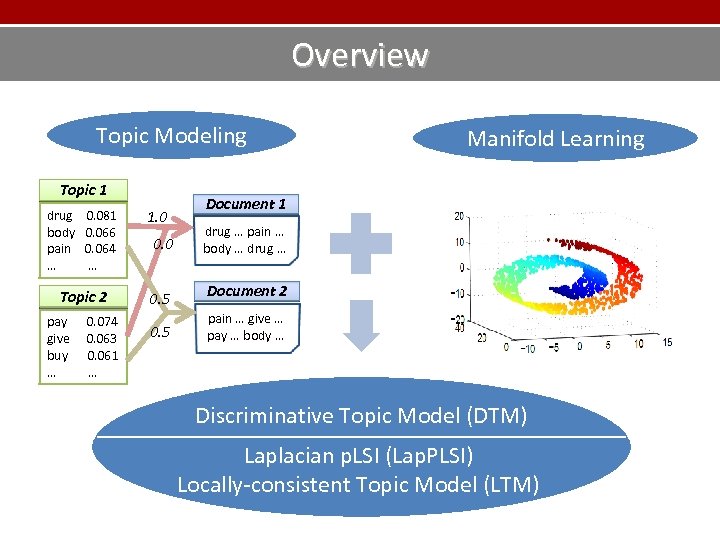

Overview

- Topic Modeling: Topic 1: drug (0.081), body (0.066), pain (0.064), …; Topic 2: pay (0.074), give (0.063), buy (0.061), …
- Manifold Learning: Document 1: drug … pain … body … drug …; Document 2: pain … give … pay … body …
- Models combining the two: Discriminative Topic Model (DTM), Laplacian pLSI (LapPLSI), Locally-consistent Topic Model (LTM)

Content

1. Background and Notations
   - Probabilistic Latent Semantic Analysis (pLSA)
   - Laplacian Eigenmaps (LE)
2. Previous models
3. Discriminative Topic Model
4. Experiments

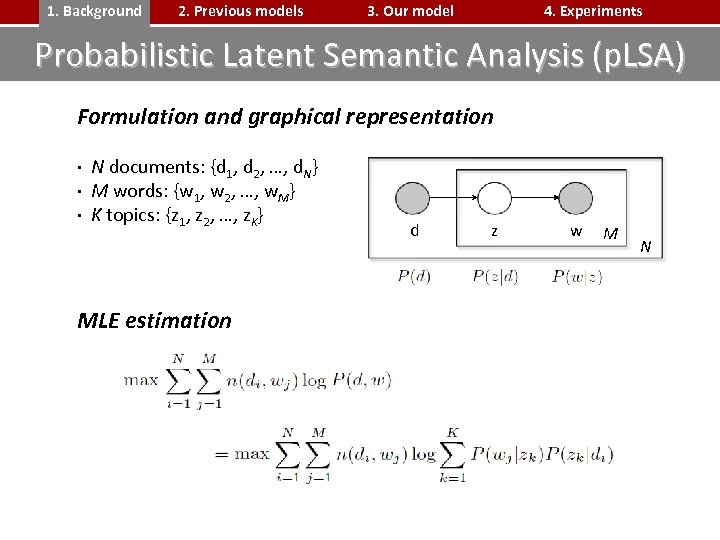

1. Background 2. Previous models 3. Our model 4. Experiments

Probabilistic Latent Semantic Analysis (pLSA): formulation and graphical representation

- N documents: $\{d_1, d_2, \ldots, d_N\}$
- M words: $\{w_1, w_2, \ldots, w_M\}$
- K topics: $\{z_1, z_2, \ldots, z_K\}$
- Parameters are fit by maximum likelihood estimation (MLE).
- Graphical representation: d → z → w, with plates labeled M and N.
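As a reminder, the standard pLSA model (Hofmann 99) can be written in the notation of this slide roughly as follows. This is a sketch rather than a copy of the slide's formula; the count symbol $n(d_i, w_j)$ (occurrences of word $w_j$ in document $d_i$) and the name $\mathcal{L}$ for the log-likelihood are assumptions introduced here.

```latex
P(w_j \mid d_i) = \sum_{k=1}^{K} P(w_j \mid z_k)\, P(z_k \mid d_i),
\qquad
\mathcal{L} = \sum_{i=1}^{N} \sum_{j=1}^{M} n(d_i, w_j)\,
              \log \sum_{k=1}^{K} P(w_j \mid z_k)\, P(z_k \mid d_i)
```

MLE then means choosing $P(w_j \mid z_k)$ and $P(z_k \mid d_i)$ to maximize $\mathcal{L}$.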

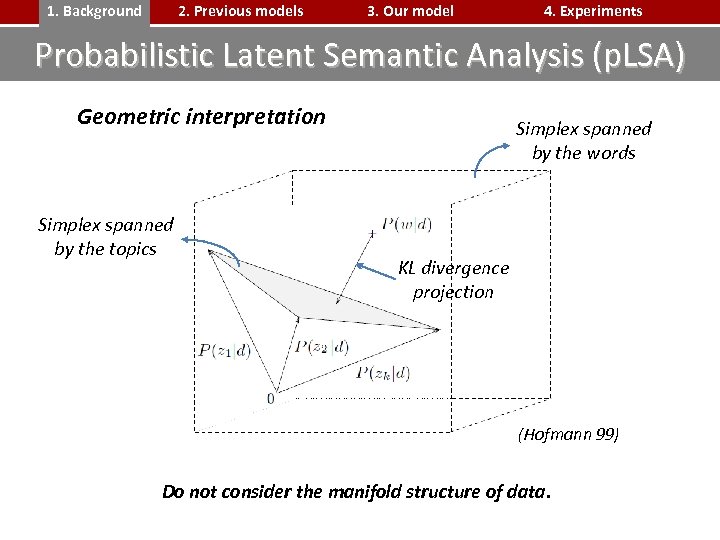

Probabilistic Latent Semantic Analysis (pLSA): geometric interpretation

- Simplex spanned by the words
- Simplex spanned by the topics
- KL divergence projection (Hofmann 99)
- pLSA does not consider the manifold structure of the data.

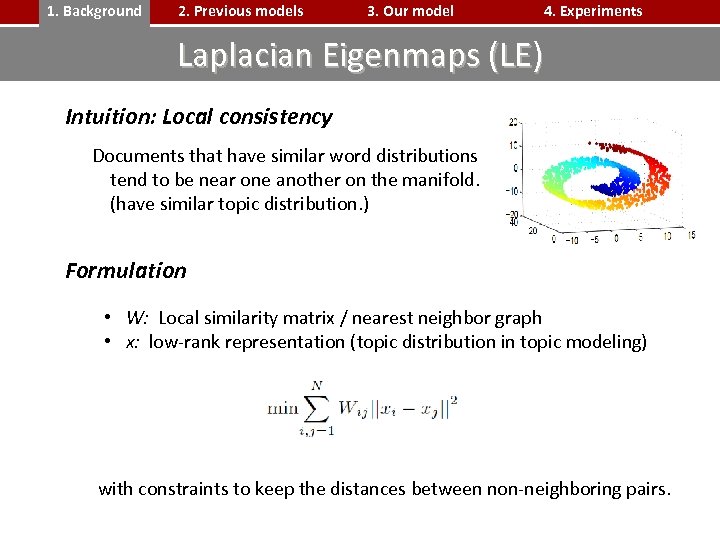

Laplacian Eigenmaps (LE)

Intuition (local consistency): documents that have similar word distributions tend to be near one another on the manifold, and so should have similar topic distributions.

Formulation:

- W: local similarity matrix (nearest-neighbor graph)
- x: low-rank representation (the topic distribution in topic modeling)
- The objective is subject to constraints that preserve the distances between non-neighboring pairs.
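The local-consistency idea can be sketched in a few lines of Python. This is a minimal illustration, not the formulation from the slide: it assumes a symmetric 0/1 nearest-neighbor graph built from cosine similarity and a squared Euclidean penalty, and the function names `knn_graph` and `local_consistency` are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def knn_graph(docs, p):
    """Symmetric 0/1 matrix W (assumed form): W[i][j] = 1 if j is among
    the p most similar documents to i, or i is among those of j."""
    n = len(docs)
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        others = sorted((j for j in range(n) if j != i),
                        key=lambda j: cosine(docs[i], docs[j]),
                        reverse=True)
        for j in others[:p]:
            W[i][j] = W[j][i] = 1.0
    return W


def local_consistency(W, X):
    """0.5 * sum_ij W_ij * ||x_i - x_j||^2; small when neighboring
    documents have similar low-rank representations."""
    total = 0.0
    for i, xi in enumerate(X):
        for j, xj in enumerate(X):
            total += W[i][j] * sum((a - b) ** 2 for a, b in zip(xi, xj))
    return 0.5 * total


# Toy word counts: documents 0 and 1 share words, as do documents 2 and 3.
docs = [[3, 1, 0, 0], [2, 2, 0, 0], [0, 0, 2, 3], [0, 0, 3, 1]]
W = knn_graph(docs, p=1)

consistent = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8]]
inconsistent = [[0.9, 0.1], [0.1, 0.9], [0.8, 0.2], [0.2, 0.8]]
print(local_consistency(W, consistent) < local_consistency(W, inconsistent))
```

The penalty only looks at pairs with a nonzero entry in W, which is why a separate constraint is needed to handle non-neighboring pairs.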

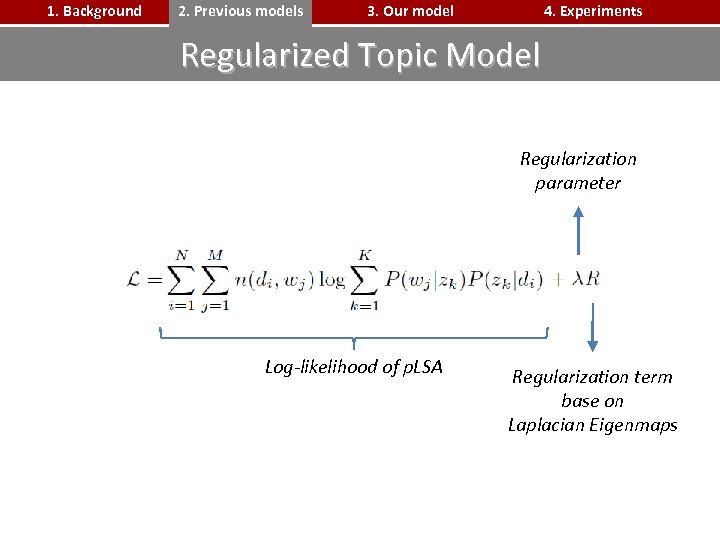

Regularized Topic Model: the objective combines the log-likelihood of pLSA with a regularization term based on Laplacian Eigenmaps, weighted by a regularization parameter.
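In symbols, an objective of this kind can be sketched as below. The names $\mathcal{L}$ (pLSA log-likelihood), $\mathcal{R}$ (regularization term), $\lambda \ge 0$ (regularization parameter), $\Theta$ (all model parameters) and $\mathrm{dist}$ (a distance between topic distributions) are assumptions for illustration, as is the sign convention; the slide's own formula may differ in detail.

```latex
\max_{\Theta}\; \mathcal{L}(\Theta) \;-\; \lambda\, \mathcal{R}(\Theta),
\qquad
\mathcal{R}(\Theta) = \frac{1}{2} \sum_{i,j=1}^{N} W_{ij}\,
    \mathrm{dist}\big(P(z \mid d_i),\; P(z \mid d_j)\big)
```

Setting $\lambda = 0$ recovers plain pLSA, while larger $\lambda$ pushes neighboring documents toward similar topic distributions.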

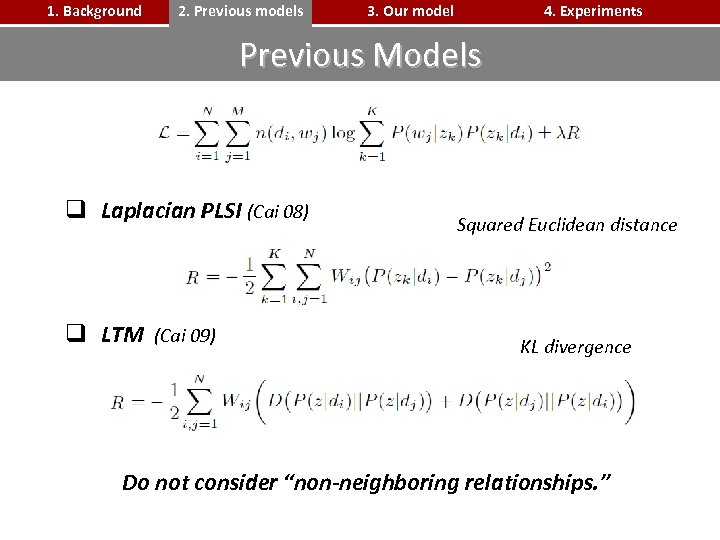

Previous Models

- Laplacian PLSI (Cai 08): squared Euclidean distance
- LTM (Cai 09): KL divergence
- Neither model considers non-neighboring relationships.
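The difference between the two distance choices, and the fact that non-neighboring pairs are ignored, can be illustrated with a short sketch. It assumes a symmetrized KL divergence and a 0/1 neighbor matrix; the helper `neighbor_regularizer` is hypothetical and is not taken from either paper.

```python
import math


def squared_euclidean(p, q):
    """Squared Euclidean distance between two topic distributions."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def symmetric_kl(p, q, eps=1e-12):
    """Symmetrized KL divergence (assumed form); eps guards against log(0)."""
    def kl(a, b):
        return sum(x * math.log((x + eps) / (y + eps)) for x, y in zip(a, b))
    return 0.5 * (kl(p, q) + kl(q, p))


def neighbor_regularizer(W, topics, dist):
    """0.5 * sum_ij W_ij * dist(topics_i, topics_j), neighbors only."""
    n = len(topics)
    return 0.5 * sum(W[i][j] * dist(topics[i], topics[j])
                     for i in range(n) for j in range(n))


# Documents 0 and 1 are neighbors; document 2 has no neighbors.
W = [[0, 1, 0],
     [1, 0, 0],
     [0, 0, 0]]
topics_a = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9]]
topics_b = [[0.9, 0.1], [0.8, 0.2], [0.9, 0.1]]  # only document 2 changed

for dist in (squared_euclidean, symmetric_kl):
    same = math.isclose(neighbor_regularizer(W, topics_a, dist),
                        neighbor_regularizer(W, topics_b, dist))
    print(dist.__name__, "unchanged when a non-neighbor moves:", same)
```

Because document 2 has no neighbors, moving its topic distribution next to document 0 leaves both regularizers unchanged.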

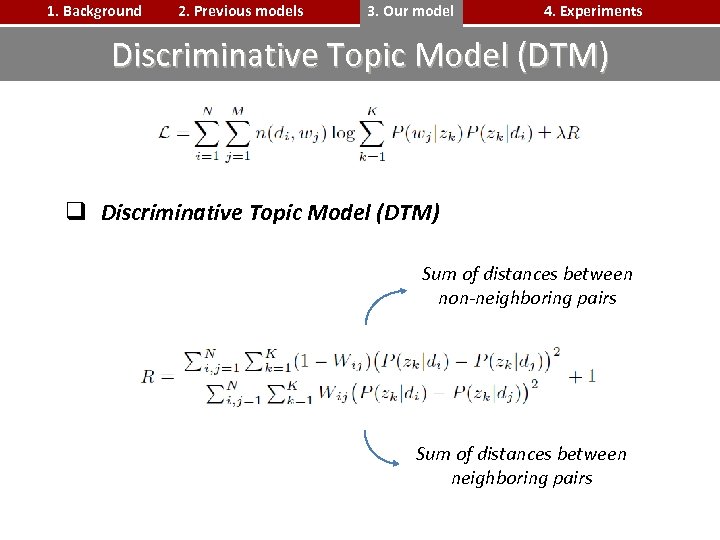

Discriminative Topic Model (DTM): the regularization term involves both the sum of distances between non-neighboring pairs and the sum of distances between neighboring pairs.
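One way to see why both sums matter is the toy sketch below. It assumes, purely for illustration, that the two sums are combined as a ratio (non-neighboring over neighboring) and that distances are squared Euclidean; neither choice is confirmed by the slide text, and the names `pair_sums` and `separation_ratio` are hypothetical.

```python
def squared_euclidean(p, q):
    """Squared Euclidean distance between two topic distributions."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def pair_sums(W, topics):
    """Return (sum over non-neighboring pairs, sum over neighboring pairs)."""
    non_neighbor_sum = 0.0
    neighbor_sum = 0.0
    n = len(topics)
    for i in range(n):
        for j in range(i + 1, n):
            d = squared_euclidean(topics[i], topics[j])
            if W[i][j] > 0:
                neighbor_sum += W[i][j] * d
            else:
                non_neighbor_sum += d
    return non_neighbor_sum, neighbor_sum


def separation_ratio(W, topics, eps=1e-12):
    """ASSUMPTION: combine the two sums as a ratio; larger means neighbors
    are close together while non-neighbors are far apart."""
    non_neighbor_sum, neighbor_sum = pair_sums(W, topics)
    return non_neighbor_sum / (neighbor_sum + eps)


# Documents 0-1 and 2-3 are neighbors; every other pair is a non-neighbor.
W = [[0, 1, 0, 0],
     [1, 0, 0, 0],
     [0, 0, 0, 1],
     [0, 0, 1, 0]]
separated = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8]]
mixed = [[0.9, 0.1], [0.1, 0.9], [0.8, 0.2], [0.2, 0.8]]
print(separation_ratio(W, separated) > separation_ratio(W, mixed))
```

A term built only from the neighboring sum would reward collapsing all documents onto one topic distribution; including the non-neighboring sum penalizes that collapse.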

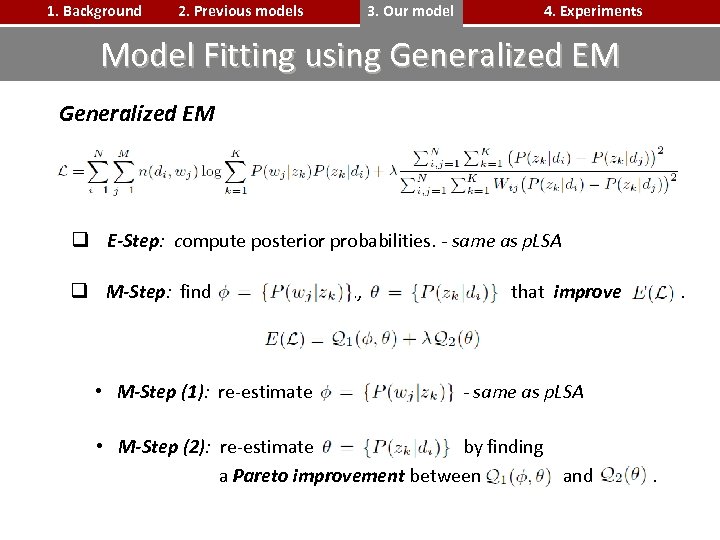

Model Fitting using Generalized EM

- E-step: compute posterior probabilities (same as pLSA).
- M-step: find […]
  - M-step (1): re-estimate […] that improve […] (same as pLSA).
  - M-step (2): re-estimate […] by finding a Pareto improvement between […] and […].
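Since the E-step and M-step (1) are described as the same as in pLSA, a plain pLSA EM iteration is a useful reference point. The sketch below is standard pLSA only and does not implement M-step (2); the array layout and the function names `plsa_em_step` and `log_likelihood` are assumptions.

```python
import math


def plsa_em_step(counts, p_w_z, p_z_d):
    """One EM iteration of plain pLSA.
    counts[i][j] = n(d_i, w_j); p_w_z[k][j] = P(w_j | z_k);
    p_z_d[i][k] = P(z_k | d_i). Returns updated (p_w_z, p_z_d)."""
    N, M, K = len(counts), len(counts[0]), len(p_w_z)
    new_w_z = [[0.0] * M for _ in range(K)]
    new_z_d = [[0.0] * K for _ in range(N)]
    for i in range(N):
        for j in range(M):
            if counts[i][j] == 0:
                continue
            # E-step: posterior P(z_k | d_i, w_j), up to normalization.
            joint = [p_w_z[k][j] * p_z_d[i][k] for k in range(K)]
            total = sum(joint)
            if total == 0.0:
                continue
            for k in range(K):
                r = counts[i][j] * joint[k] / total
                # M-step: accumulate expected counts.
                new_w_z[k][j] += r
                new_z_d[i][k] += r
    for row in new_w_z + new_z_d:
        s = sum(row)
        if s > 0.0:
            row[:] = [v / s for v in row]  # normalize to a distribution
    return new_w_z, new_z_d


def log_likelihood(counts, p_w_z, p_z_d):
    """sum_ij n(d_i, w_j) * log sum_k P(w_j | z_k) P(z_k | d_i)."""
    ll = 0.0
    for i, row in enumerate(counts):
        for j, n_ij in enumerate(row):
            if n_ij:
                ll += n_ij * math.log(sum(p_w_z[k][j] * p_z_d[i][k]
                                          for k in range(len(p_w_z))))
    return ll


counts = [[3, 1, 0, 0], [2, 2, 0, 0], [0, 0, 2, 3], [0, 0, 3, 1]]
p_w_z = [[0.4, 0.3, 0.2, 0.1], [0.1, 0.2, 0.3, 0.4]]
p_z_d = [[0.6, 0.4] for _ in counts]
before = log_likelihood(counts, p_w_z, p_z_d)
p_w_z, p_z_d = plsa_em_step(counts, p_w_z, p_z_d)
print(log_likelihood(counts, p_w_z, p_z_d) >= before)
```

In a generalized EM scheme, the M-step only needs to improve the objective rather than maximize it, which is what leaves room for a different update in M-step (2).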

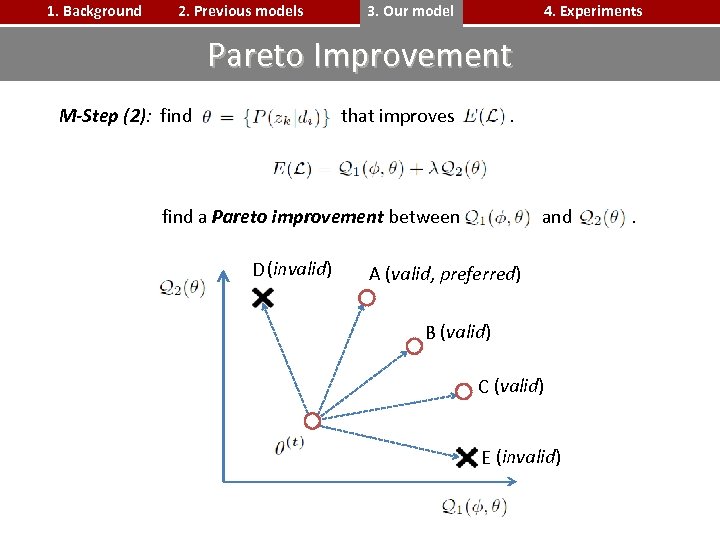

Pareto Improvement

M-step (2): find […] that improves […]; that is, find a Pareto improvement between […] and […].

Candidate points in the figure:

- A: valid, preferred
- B: valid
- C: valid
- D: invalid
- E: invalid
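The usual definition of a Pareto improvement (no objective gets worse and at least one gets strictly better) can be written as a small check. This is a generic sketch under that textbook definition; which two quantities are compared, and how the points A to E map onto it, are not recoverable from the slide text, so the example values are made up.

```python
def is_pareto_improvement(old, new, tol=0.0):
    """True if `new` is at least as good as `old` in every objective and
    strictly better in at least one. Higher values are better."""
    no_worse = all(n >= o - tol for o, n in zip(old, new))
    strictly_better = any(n > o + tol for o, n in zip(old, new))
    return no_worse and strictly_better


current = (1.0, 1.0)  # hypothetical values of two objectives
print(is_pareto_improvement(current, (1.2, 1.1)))  # both improve
print(is_pareto_improvement(current, (1.2, 1.0)))  # one improves, one ties
print(is_pareto_improvement(current, (1.2, 0.9)))  # one gets worse
```

The first two candidates qualify and the third does not, since it trades one objective against the other.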

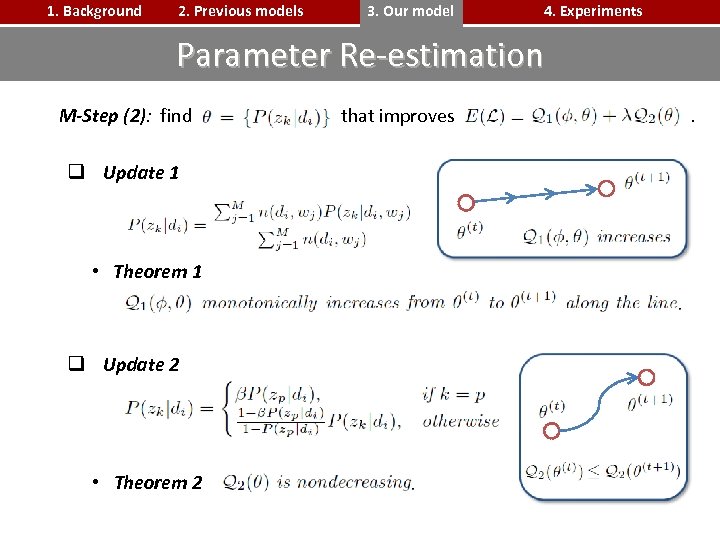

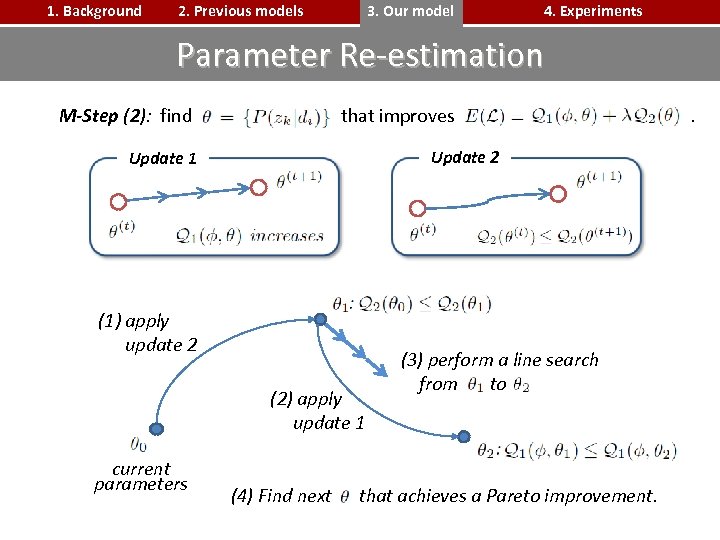

Parameter Re-estimation

M-step (2): find […] that improves […].

- Update 1 (Theorem 1)
- Update 2 (Theorem 2)

Parameter Re-estimation

M-step (2): find […] that improves […]. Starting from the current parameters:

1. Apply update 2.
2. Apply update 1.
3. Perform a line search from […] to […] that achieves a Pareto improvement.
4. Find the next […].
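The line-search step can be sketched as a backtracking search along the segment between the current parameters and a candidate produced by the updates. This is only an illustration under assumptions: the step-halving schedule, the flat-list parameter layout, and the names `pareto_line_search` and `objectives` are hypothetical, and the two updates themselves (Theorems 1 and 2) are not reproduced.

```python
def is_pareto_improvement(old, new):
    """No objective worse and at least one strictly better (higher is better)."""
    return (all(n >= o for o, n in zip(old, new))
            and any(n > o for o, n in zip(old, new)))


def pareto_line_search(current, target, objectives, max_halvings=20):
    """Try points current + t * (target - current) for t = 1, 1/2, 1/4, ...
    and return the first that is a Pareto improvement over `current`.
    `objectives(params)` returns a tuple of values to be increased.
    Falls back to `current` if no such point is found."""
    base = objectives(current)
    t = 1.0
    for _ in range(max_halvings):
        candidate = [c + t * (g - c) for c, g in zip(current, target)]
        if is_pareto_improvement(base, objectives(candidate)):
            return candidate
        t *= 0.5
    return list(current)


# Toy problem with one parameter and two made-up objectives.
def toy_objectives(x):
    return (-(x[0] - 1.0) ** 2, -(x[0] - 0.5) ** 2)


# The full step to 2.0 hurts the second objective; the half step is accepted.
print(pareto_line_search([0.0], [2.0], toy_objectives))
```

When the parameters are probability distributions, every point on the segment is a convex combination of two distributions and therefore stays a valid distribution.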

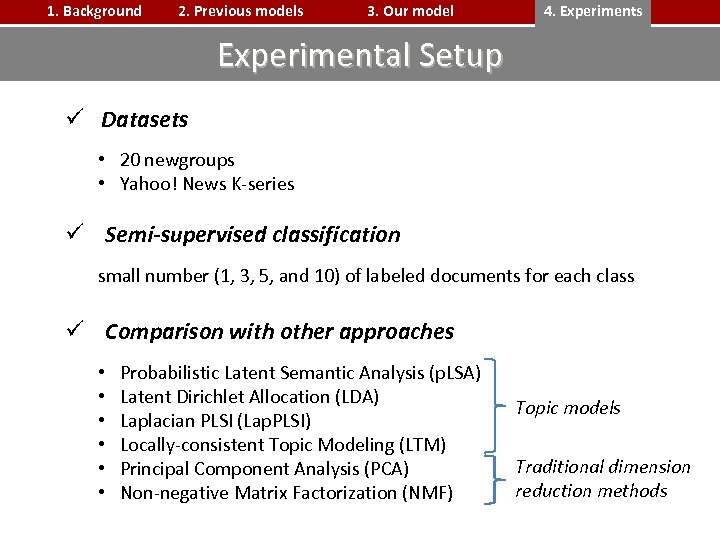

Experimental Setup

- Datasets:
  - 20 newsgroups
  - Yahoo! News K-series
- Task: semi-supervised classification with a small number (1, 3, 5, or 10) of labeled documents for each class
- Comparison with other approaches:
  - Topic models: Probabilistic Latent Semantic Analysis (pLSA), Latent Dirichlet Allocation (LDA), Laplacian PLSI (LapPLSI), Locally-consistent Topic Modeling (LTM)
  - Traditional dimension reduction methods: Principal Component Analysis (PCA), Non-negative Matrix Factorization (NMF)

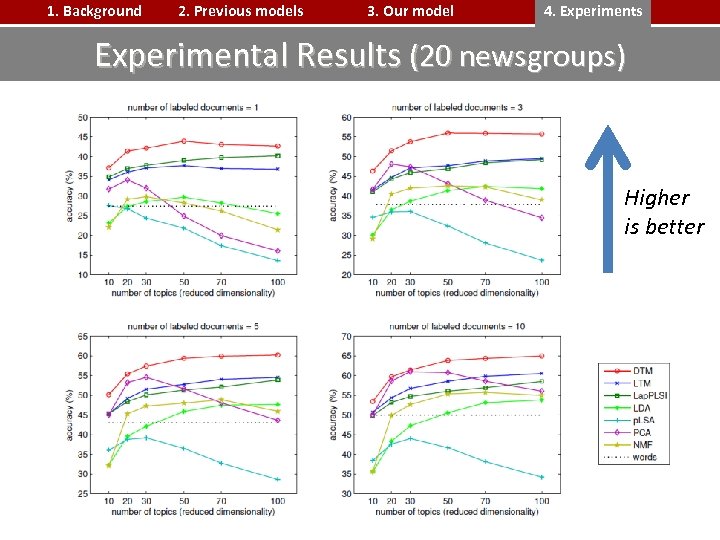

Experimental Results (20 newsgroups): higher is better.

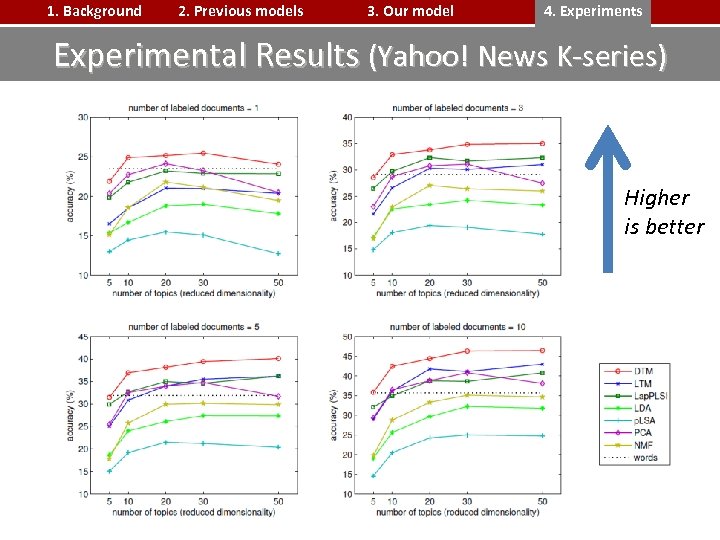

Experimental Results (Yahoo! News K-series): higher is better.

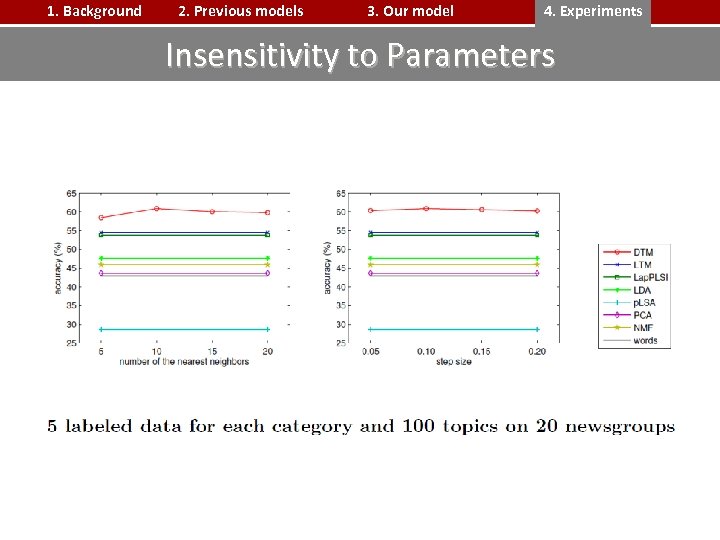

Insensitivity to Parameters

Summary

- A topic model incorporating the complete manifold learning formulation
- Effective in semi-supervised classification
- Model fitting using generalized EM and Pareto improvement
- Minimizes the sensitivity to parameters

Thank you!
