
- Number of slides: 41

Digital Information Technology Testbed: Conformity Assessment Activities (http://ditt.org)

US Army, Center For Army Lessons Learned (CALL), Ft. Leavenworth, KS (http://call.army.mil)

Presentation by Frank Farance, Farance Inc., +1 212 486 4700, frank@farance.com

2000-03-16, Digital Information Technology Testbed, © 2000 Farance Inc.

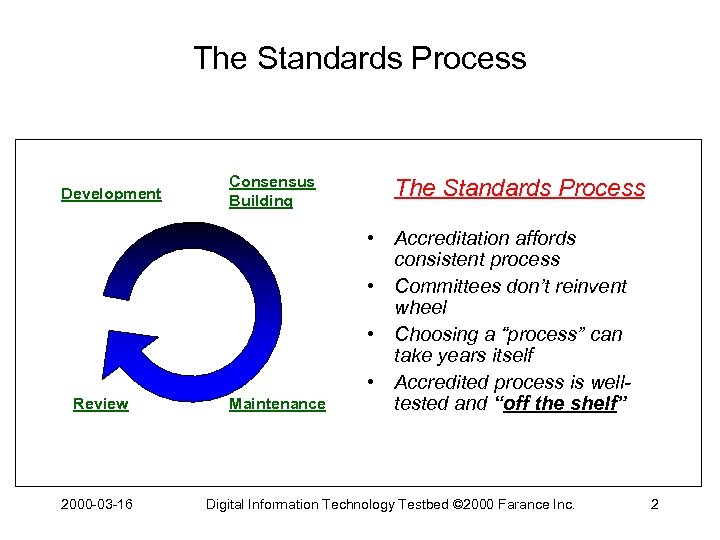

The Standards Process

Diagram labels: Development, Consensus Building, Maintenance, Review.

- Accreditation affords a consistent process
- Committees don’t reinvent the wheel
- Choosing a “process” can itself take years
- An accredited process is well-tested and “off the shelf”

Goals of Standards Process

- Standards that are well-defined:
  - Consistent implementations, high interoperability
  - Coherent functionality
- Commercial viability:
  - Standards allow a range of implementations
  - Commercial products are possible:
    - All conforming products interoperate, yet...
    - Different “bells and whistles”
    - Consumer can choose from a range of conforming systems
- Wide acceptance and adoption
- Few bugs:
  - Consistent requests for interpretation (RFIs)
  - Low number of defect reports (DRs)

Developing Standards: Standards Are Developed in Working Groups

Diagram label: Development.

- Source: “from scratch” or “base documents”
- Create “standards wording” (normative and informative), rationale for decisions
- Technical committee: in-person or electronic collaboration

![Development: Technical Specification [1/4]](https://present5.com/presentation/134e1aeb001d471af8e8f73d0b0ebbd6/image-5.jpg)

Development: Technical Specification [1/4]

- Assertions:
  - Sentences using “shall”
  - Weaker assertions: “should”, “may”
- Inquiries/Ranges:
  - Implementations have varying limitations
  - Interoperability: tolerances vs. minimums
  - Allows implementation-defined and unspecified behavior
- Negotiations:
  - Adaptation to conformance levels
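As a rough illustration of how assertion keywords can be picked out of standards wording, here is a minimal Python sketch. The names `Strength`, `classify`, and `extract_assertions` are hypothetical and not part of any DITT tool, and plain keyword matching is an assumption: it ignores context such as “shall not” or the month “May”.

```python
import re
from enum import Enum
from typing import List, Optional, Tuple


class Strength(Enum):
    """Assertion strength, keyed by the keyword that signals it."""
    REQUIRED = "shall"
    RECOMMENDED = "should"
    OPTIONAL = "may"


# Match an assertion keyword as a whole word, ignoring case.
_KEYWORD = re.compile(r"\b(shall|should|may)\b", re.IGNORECASE)


def classify(sentence: str) -> Optional[Strength]:
    """Return the strength of the first keyword found, or None if there is none."""
    match = _KEYWORD.search(sentence)
    return Strength(match.group(1).lower()) if match else None


def extract_assertions(text: str) -> List[Tuple[Strength, str]]:
    """Split text into sentences and keep those containing an assertion keyword."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    found = []
    for sentence in sentences:
        strength = classify(sentence)
        if strength is not None:
            found.append((strength, sentence))
    return found
```

Sentences without a keyword are treated as informative text and dropped, which mirrors the normative/informative split only loosely.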

![Development: Technical Specification [2/4]](https://present5.com/presentation/134e1aeb001d471af8e8f73d0b0ebbd6/image-6.jpg)

Development: Technical Specification [2/4]

- Conformance:
  - Complies with all assertions
  - Performs in range allowed within inquiries/ranges and negotiations
  - Minimize implementation-defined, unspecified, and undefined behavior
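One way to picture inquiries and negotiations is sketched below in Python. It assumes that an inquiry returns a table of numeric limits and that conformance levels are plain integers. Both are illustrative assumptions, as are the function names and limit names.

```python
from typing import Dict, List, Optional, Set


def limits_below_minimum(reported: Dict[str, int], minimums: Dict[str, int]) -> List[str]:
    """Return the names of limits that are missing or below the required minimum."""
    return [
        name
        for name, minimum in minimums.items()
        if name not in reported or reported[name] < minimum
    ]


def negotiate_level(ours: Set[int], theirs: Set[int]) -> Optional[int]:
    """Pick the highest conformance level both parties support, or None."""
    common = ours & theirs
    return max(common) if common else None
```

For example, an implementation reporting `{"max_array_elements": 512}` against a hypothetical minimum of 1024 would be flagged, while two systems supporting levels `{1, 2, 3}` and `{2, 3, 4}` would settle on level 3.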

![Development: Technical Specification [3/4]](https://present5.com/presentation/134e1aeb001d471af8e8f73d0b0ebbd6/image-7.jpg)

Development: Technical Specification [3/4]

- Applications and standards use:
  - Strictly conforming: uses features that exist in all implementations
  - Conforming: uses features in some conforming implementations
  - Conformance levels vs. interoperability
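The distinction between strictly conforming and conforming use can be expressed with sets of features. The Python sketch below assumes each implementation is described by the set of feature names it supports, and it reads “conforming” as “every feature used is available in at least one implementation”. That reading, and all the names, are assumptions made for illustration.

```python
from typing import Dict, Set


def common_features(implementations: Dict[str, Set[str]]) -> Set[str]:
    """Features supported by every implementation (empty if there are none)."""
    feature_sets = list(implementations.values())
    if not feature_sets:
        return set()
    return set.intersection(*feature_sets)


def classify_application(used: Set[str], implementations: Dict[str, Set[str]]) -> str:
    """Classify an application by the features it relies on."""
    if used <= common_features(implementations):
        return "strictly conforming"
    if any(used <= features for features in implementations.values()):
        return "conforming"
    return "non-conforming"
```

An application that stays within the common subset runs everywhere, while one that uses an optional feature trades interoperability for functionality.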

![Development: Technical Specification [4/4]](https://present5.com/presentation/134e1aeb001d471af8e8f73d0b0ebbd6/image-8.jpg)

Development: Technical Specification [4/4]

- Rationale:
  - Explanation of important committee decisions
  - Features considered/rejected
  - Allows for change in membership
  - Helps with Requests for Interpretation

![Development: Engineering Process [1/5]](https://present5.com/presentation/134e1aeb001d471af8e8f73d0b0ebbd6/image-9.jpg)

Development: Engineering Process [1/5]

- Identify scope
- Use engineering methodology
- Preference for existing commercial practice
- Don’t standardize implementations
- Standardize specifications

![Development: Engineering Process [2/5]](https://present5.com/presentation/134e1aeb001d471af8e8f73d0b0ebbd6/image-10.jpg)

Development: Engineering Process [2/5]

- Can new technology be incorporated?
- Technology horizon:
  - Which technology should be incorporated? Feasible/commercial, up to 1-2 years from now
  - How long should the standard be useful? At least 5-10 years from now

![Development: Engineering Process [3/5]](https://present5.com/presentation/134e1aeb001d471af8e8f73d0b0ebbd6/image-11.jpg)

Development: Engineering Process [3/5]

- Risk management: scope, prior art, schedule
- Determining requirements
- Asking the right questions (1-3 years?)
- Document organization and phasing
- Base documents -- starting points
- Proposals and papers -- additions

![Development: Engineering Process [4/5]](https://present5.com/presentation/134e1aeb001d471af8e8f73d0b0ebbd6/image-12.jpg)

Development: Engineering Process [4/5]

- Developing standards wording...
  - Step #0: Requirements identification (optional)
  - Step #1: Functionality: what it does
  - Step #2: Conceptual model: how it works
  - Step #3: Semantics: precise description of features
  - Step #4: Bindings: transform into target, e.g., application programming interfaces (APIs), syntax, commands, protocol definition, file format, codings
  - Step #5: Encodings: bit/octet formats (optional)
  - Step #6: Standards words: “legal” wording

![Development: Engineering Process [5/5]](https://present5.com/presentation/134e1aeb001d471af8e8f73d0b0ebbd6/image-13.jpg)

Development: Engineering Process [5/5]

- Let bake -- probably 1-2 years
  - Baking is very important
  - Shakes out subtle bugs
  - Greatly improves quality!
  - Usually, vendors fight “baking”... they want to announce “conforming” products ASAP

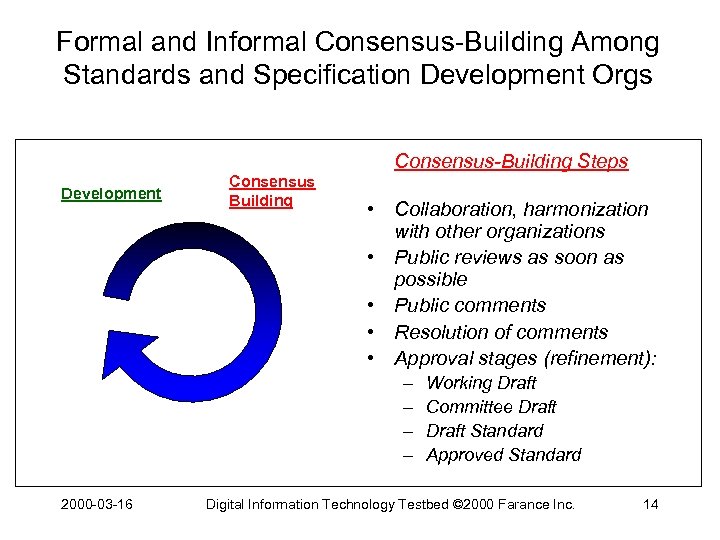

Consensus-Building Steps: Formal and Informal Consensus-Building Among Standards and Specification Development Orgs

Diagram labels: Development, Consensus Building.

- Collaboration, harmonization with other organizations
- Public reviews as soon as possible
- Public comments
- Resolution of comments
- Approval stages (refinement):
  - Working Draft Committee Draft Standard Approved Standard

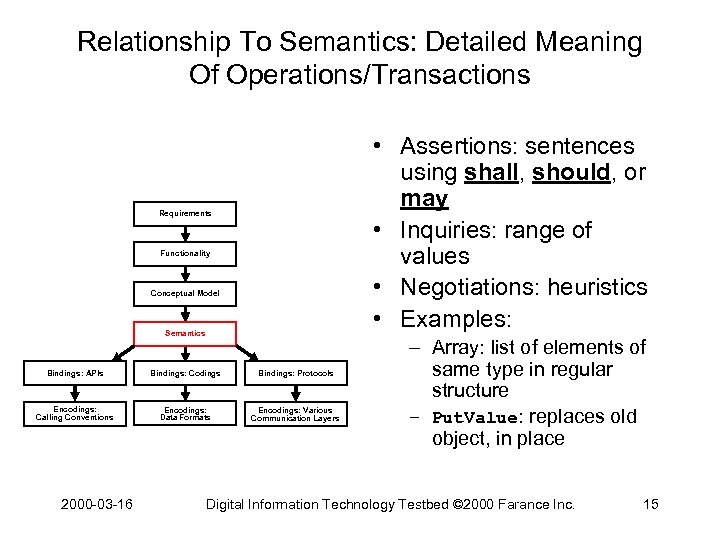

Relationship To Semantics: Detailed Meaning Of Operations/Transactions

- Assertions: sentences using shall, should, or may
- Inquiries: range of values
- Negotiations: heuristics
- Examples:
  - Array: list of elements of same type in regular structure
  - PutValue: replaces old object, in place

Diagram layers: Requirements; Functionality; Conceptual Model; Semantics; Bindings: APIs; Bindings: Codings; Bindings: Protocols; Encodings: Calling Conventions; Encodings: Data Formats; Encodings: Various Communication Layers.

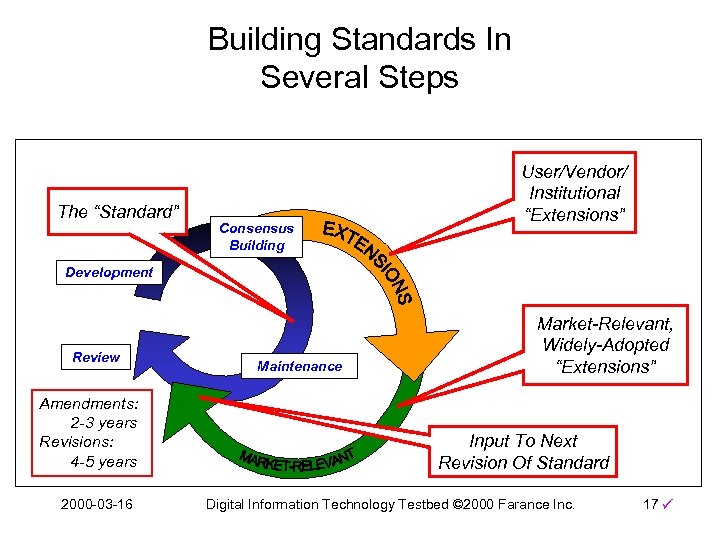

Some Strategies for Standardizing Data Models

- Partition into “application areas”
- Build standards in several steps, for example:
  - Year 1: Create minimal, widely adoptable standard
  - Year 3: Create amendment that represents best and widely implemented practices
  - Year 5: Revise standard, incorporate improvements
- Support extension mechanisms
  - Permits user/vendor/institutional extensions
  - Widely implemented extensions become basis for new standards amendments/revisions
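To make “widely implemented extensions become the basis for amendments” concrete, the following Python sketch counts how many vendors ship each extension and keeps those above a share threshold. The 50% default, the function name, and the extension names used with it are all hypothetical. The slides do not define what “widely implemented” means numerically.

```python
from collections import Counter
from typing import Dict, List, Set


def widely_implemented(
    extensions_by_vendor: Dict[str, Set[str]], min_share: float = 0.5
) -> List[str]:
    """Return extensions shipped by at least min_share of vendors, sorted by name."""
    if not extensions_by_vendor:
        return []
    # Count each extension once per vendor that implements it.
    counts = Counter(
        ext for extensions in extensions_by_vendor.values() for ext in extensions
    )
    threshold = min_share * len(extensions_by_vendor)
    return sorted(ext for ext, n in counts.items() if n >= threshold)
```

A committee could feed the resulting list into the next amendment or revision as candidate features.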

Building Standards In Several Steps

Diagram labels: Development, Consensus Building, Maintenance, Review.

- The “Standard”
- User/Vendor/Institutional “Extensions”
- Amendments: 2-3 years
- Revisions: 4-5 years
- Market-Relevant, Widely-Adopted “Extensions”: Input To Next Revision Of Standard

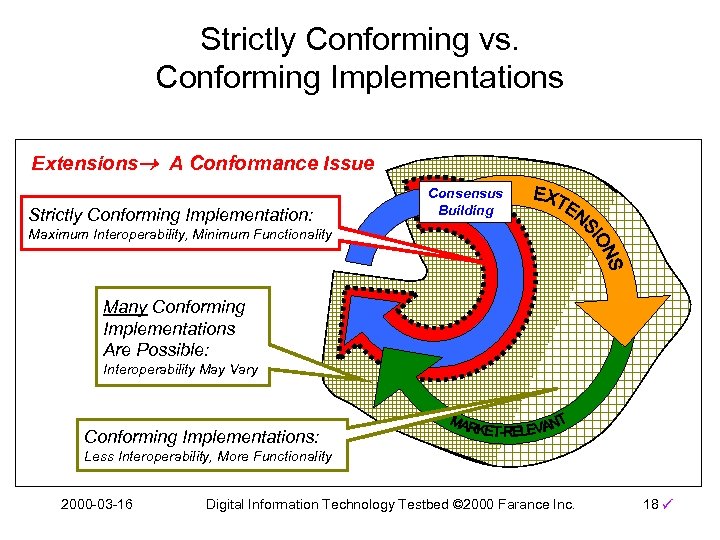

Extensions: A Conformance Issue

Strictly Conforming vs. Conforming Implementations

- Strictly Conforming Implementation: Maximum Interoperability, Minimum Functionality
- Many Conforming Implementations Are Possible: Interoperability May Vary
- Conforming Implementations: Less Interoperability, More Functionality

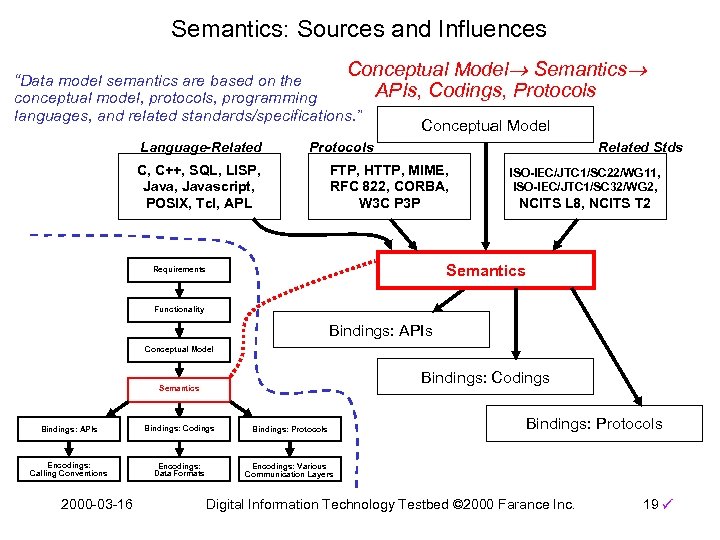

Semantics: Sources and Influences

“Data model semantics are based on the conceptual model, protocols, programming languages, and related standards/specifications.”

- Language-Related: C, C++, SQL, LISP, Javascript, POSIX, Tcl, APL
- Protocols: FTP, HTTP, MIME, RFC 822, CORBA, W3C P3P
- Related Stds: ISO-IEC/JTC 1/SC 22/WG 11, ISO-IEC/JTC 1/SC 32/WG 2, NCITS L8, NCITS T2
- Conceptual Model

Diagram layers: Requirements; Functionality; Conceptual Model; Semantics; Bindings: APIs; Bindings: Codings; Bindings: Protocols; Encodings: Calling Conventions; Encodings: Data Formats; Encodings: Various Communication Layers.

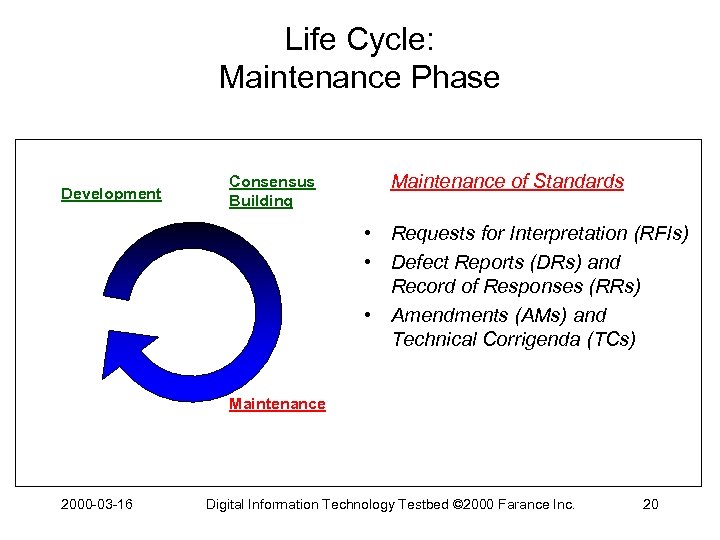

Maintenance of Standards (Life Cycle: Maintenance Phase)

Diagram labels: Development, Consensus Building, Maintenance.

- Requests for Interpretation (RFIs)
- Defect Reports (DRs) and Record of Responses (RRs)
- Amendments (AMs) and Technical Corrigenda (TCs)

![Maintenance [1/3]](https://present5.com/presentation/134e1aeb001d471af8e8f73d0b0ebbd6/image-21.jpg)

Maintenance [1/3]

- Requests for interpretation (RFIs):
  - Questions on ambiguous wording
  - Response time: 3-18 months
  - Interpret questions based on actual standards wording, not what is desired
  - Like the U.S. Supreme Court
  - Consistent responses are highly desirable

![Maintenance [2/3]](https://present5.com/presentation/134e1aeb001d471af8e8f73d0b0ebbd6/image-22.jpg)

Maintenance [2/3]

- Defect reports:
  - Bug reports
  - Fix features, as necessary
  - Many defect reports ==> weak standard
  - Weak standard ==> little acceptance/use
  - Little acceptance/use ==> failure
  - Failure: only recognized years later

![Maintenance [3/3]](https://present5.com/presentation/134e1aeb001d471af8e8f73d0b0ebbd6/image-23.jpg)

Maintenance [3/3]

- Other deliverables:
  - Record of responses (to RFIs)
  - Technical corrigenda (for defect reports)
  - Amendments
- Next revision:
  - Reaffirm
  - Revise
  - Withdraw

![Success Attributes [1/2]](https://present5.com/presentation/134e1aeb001d471af8e8f73d0b0ebbd6/image-24.jpg)

Success Attributes [1/2]

- Participants:
  - expect to be involved 2-5 years
- Schedule:
  - don’t get rushed, don’t get late
- New technology:
  - be conservative
- Scope:
  - stick to it!

![Success Attributes [2/2]](https://present5.com/presentation/134e1aeb001d471af8e8f73d0b0ebbd6/image-25.jpg)

Success Attributes [2/2]

- Conformance:
  - need to measure it
  - should have a working definition ASAP
- Target audience:
  - commercial systems and users
- Quality:
  - fix bugs now
- Process:
  - have faith in the standards process: it works!

![Failure Attributes [1/2]](https://present5.com/presentation/134e1aeb001d471af8e8f73d0b0ebbd6/image-26.jpg)

Failure Attributes [1/2]

- Incorporate new/untried technology
  - Why waste committee time?
- Ignore commercial interests
  - Who will implement the standard?
- Ignore public comments
  - Who will buy standardized products?
- Creeping featurism
  - The schedule killer!

![Failure Attributes [2/2]](https://present5.com/presentation/134e1aeb001d471af8e8f73d0b0ebbd6/image-27.jpg)

Failure Attributes [2/2]

- Poor time estimates
  - Progress is made over quarters, not weeks
- Leave bugs to later
  - Expensive to fix later, like software
- Weak tests of conformance
  - Standard-conforming, but lacks interoperability
- Too much implementation-defined behavior

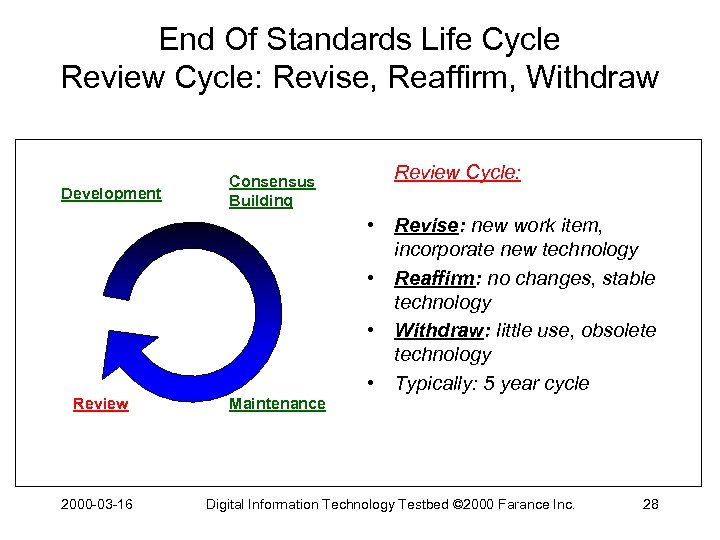

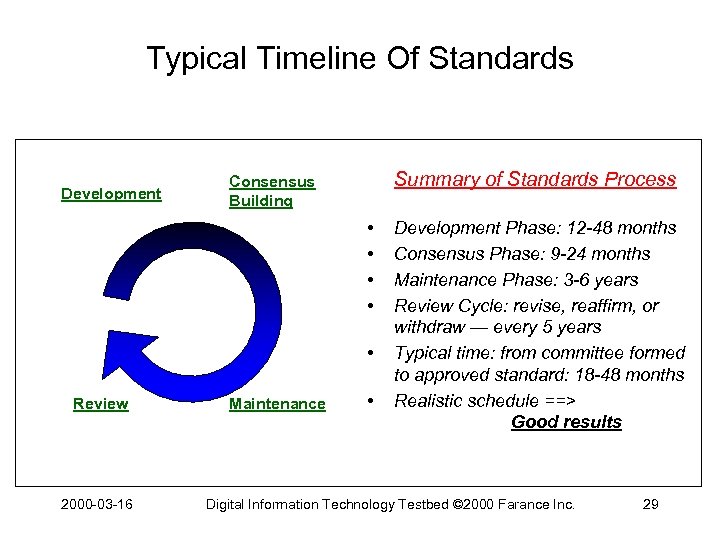

Review Cycle: Revise, Reaffirm, Withdraw (End Of Standards Life Cycle)

Diagram labels: Development, Consensus Building, Maintenance, Review.

- Revise: new work item, incorporate new technology
- Reaffirm: no changes, stable technology
- Withdraw: little use, obsolete technology
- Typically: 5 year cycle

Summary of Standards Process: Typical Timeline Of Standards Development

Diagram labels: Development, Consensus Building, Maintenance, Review.

- Development Phase: 12-48 months
- Consensus Phase: 9-24 months
- Maintenance Phase: 3-6 years
- Review Cycle: revise, reaffirm, or withdraw, every 5 years
- Typical time from committee formed to approved standard: 18-48 months
- Realistic schedule ==> Good results

Conformance Testing

- Testing implementations
- Relationship to standards/specifications
- Relationship to standard/specification development organizations
- Relationship to testing organizations

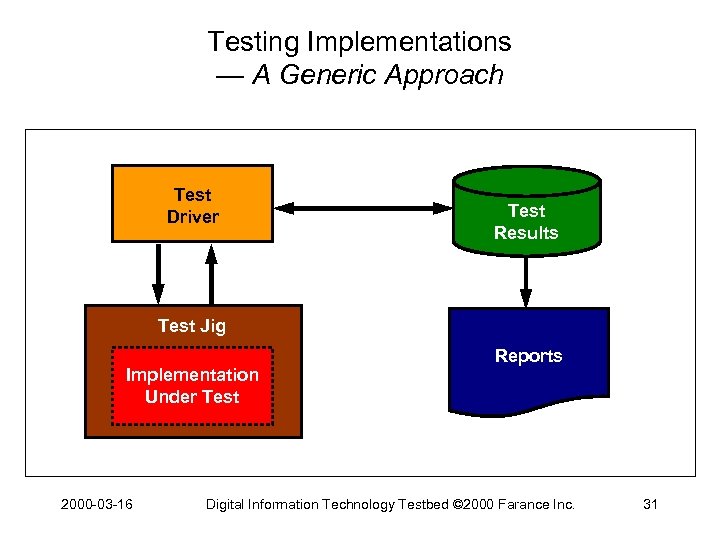

Testing Implementations: A Generic Approach

Diagram components: Test Driver, Test Jig, Implementation Under Test, Test Results, Reports.
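The components named on this slide can be sketched as a small Python harness. This is only one plausible arrangement, assuming the test jig is an adapter around the implementation under test and the driver feeds it test cases. The class and function names are hypothetical and do not describe the actual DITT test suites.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Tuple


@dataclass
class ConformanceCase:
    name: str
    inputs: Tuple[Any, ...]
    expected: Any


@dataclass
class CaseResult:
    name: str
    passed: bool
    detail: str


class Jig:
    """Test jig: gives the driver one uniform way to call the implementation."""

    def __init__(self, implementation: Callable[..., Any]) -> None:
        self._implementation = implementation

    def invoke(self, inputs: Tuple[Any, ...]) -> Any:
        return self._implementation(*inputs)


def run_tests(jig: Jig, cases: List[ConformanceCase]) -> List[CaseResult]:
    """Test driver: run every case through the jig and record the outcome."""
    results = []
    for case in cases:
        try:
            actual = jig.invoke(case.inputs)
            passed = actual == case.expected
            detail = f"expected {case.expected!r}, got {actual!r}"
        except Exception as exc:  # a crash counts as a failed test, not a driver error
            passed = False
            detail = f"raised {type(exc).__name__}: {exc}"
        results.append(CaseResult(case.name, passed, detail))
    return results


def report(results: List[CaseResult]) -> str:
    """Turn test results into a plain-text report with a summary line."""
    lines = [f"{'PASS' if r.passed else 'FAIL'} {r.name}: {r.detail}" for r in results]
    passed = sum(1 for r in results if r.passed)
    lines.append(f"{passed}/{len(results)} tests passed")
    return "\n".join(lines)
```

Keeping the jig separate from the driver means the same test cases can be pointed at a different vendor's implementation by swapping only the adapter.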

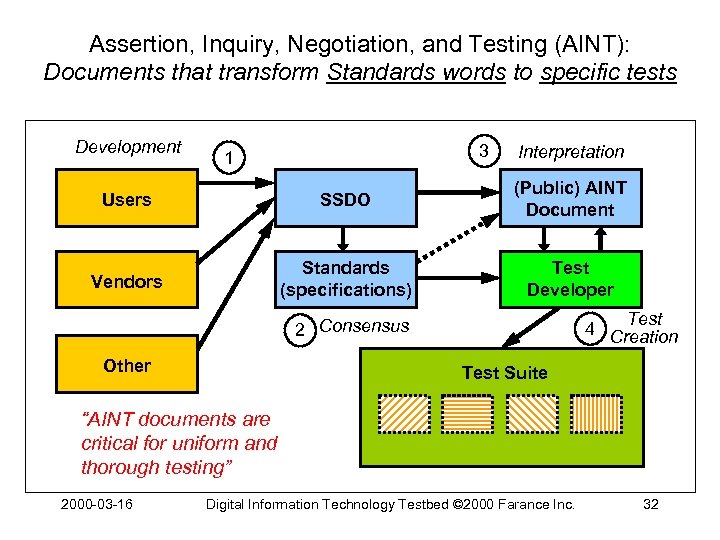

Assertion, Inquiry, Negotiation, and Testing (AINT): Documents that transform standards words to specific tests

Diagram: four numbered steps labeled Development, Consensus, Interpretation, and Test Creation. Parties and artifacts shown: Users, Vendors, Other, SSDO (Public), Standards (specifications), AINT Document, Test Developer, Test Suite.

“AINT documents are critical for uniform and thorough testing”

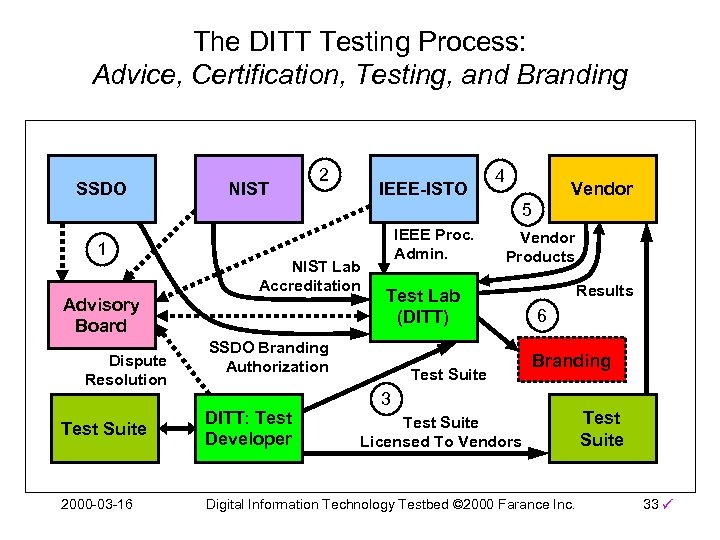

The DITT Testing Process: Advice, Certification, Testing, and Branding

Diagram (six numbered steps). Labels shown: SSDO; NIST; IEEE-ISTO; Vendor; NIST Lab Accreditation; Advisory Board; Dispute Resolution; IEEE Proc. Admin.; Vendor Products; Test Lab (DITT); SSDO Branding Authorization; Test Suite Results; Branding; Test Suite; DITT: Test Developer; Test Suite Licensed To Vendors.

Various Stakeholders’ Perspectives

- User:
  - some person or organization that cares about interoperability
- Vendor:
  - develops products/services that conform to standards/specifications
- Small Developers:
  - develop products, services, content; small testing resources
- Institutions:
  - strong need to reduce business risk
  - need interoperability

Testing vs. Certification

- Users/vendors may “self-test” via publicly available test suites
- Certification implies:
  - Controlled testing
  - Certified lab
  - Issuing a “certificate” or “branding”

Initial Technical Scope of DITT Conformance Testing

- IEEE 1484.2 (PAPI Learner)
- IEEE 1484.12 (Learning Objects Metadata)
- ISO/IEC 11179 (Metadata Registries)

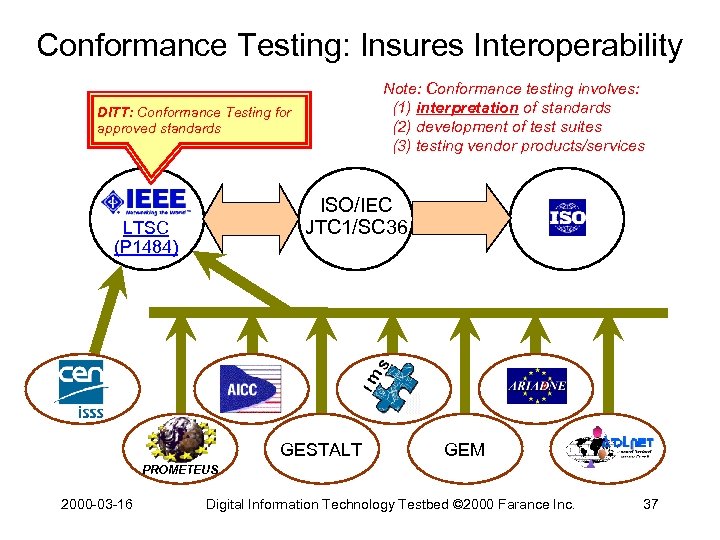

Conformance Testing: Ensures Interoperability

Note: Conformance testing involves:

- (1) interpretation of standards
- (2) development of test suites
- (3) testing vendor products/services

DITT: Conformance Testing for approved standards

Diagram labels: ISO/IEC JTC 1/SC 36, LTSC (P1484), GESTALT, GEM, PROMETEUS.

Possible Follow-On Scope of Conformance Testing

- IEEE 1484.6 (Content Sequencing)
- IEEE 1484.10 (Portable Content)
- IEEE 1484.11 (Computer Managed Instruction)
- IEEE 1484.13 (Simple Identifiers)
- IEEE 1484.17 (Content Packaging)
- IEEE 1484.14 (Semi-Structured Data)
- IEEE 1484.18 (Platform/Browser/Media Profiles)

Conformance Testing Of Institutional Extensions

- Supporting “institutional” extensions
  - Learning objects metadata
  - Institutional extensions
  - Data repositories
  - Data registries

Related Conformance Testing In Healthcare

- ANSI HISB
- X12
- HL7
- Patient identifiers
- Patient records systems
- Security

More Information

- Contact:
  - Roy Carroll, +1 913 684 5992, carrollr@leavenworth.army.mil
  - Frank Farance, +1 212 486 4700, frank@farance.com
- Upcoming events:
  - Demonstration at 2000-05 SC 32/WG 2 meeting
  - Test suites available 2000 Q2-Q3
