- Number of slides: 68

Digital Image Processing Hardware and System Considerations Dr. John R. Jensen Department of Geography University of South Carolina Columbia, SC 29208 Jensen, 2004

Image Processing System Considerations Digital remote sensor data are analyzed using a digital image processing system that consists of computer hardware and special-purpose image processing software. This lecture describes: • fundamental digital image processing system hardware characteristics, • digital image processing software (computer program) requirements, and • public and commercial sources of digital image processing hardware and software. Jensen, 2004

Image Processing System Considerations A digital image processing system should: • have a reasonable learning curve and be easy to use, • have a reputation for producing accurate results (ideally the company has ISO certification), • produce the desired results in an appropriate format (e.g., map products in a standard cartographic data structure compatible with most GIS), and • be within the department’s budget. Jensen, 2004

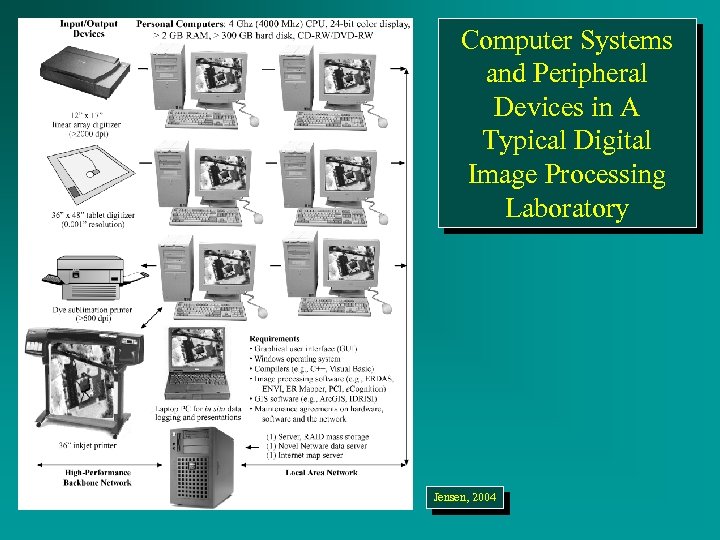

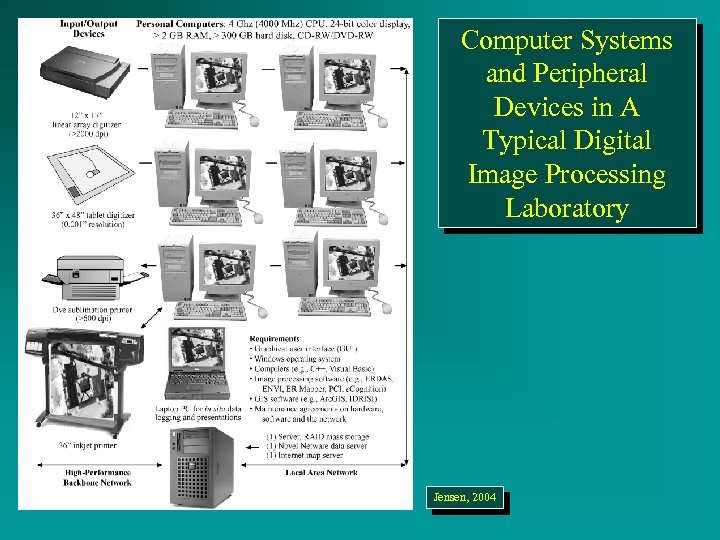

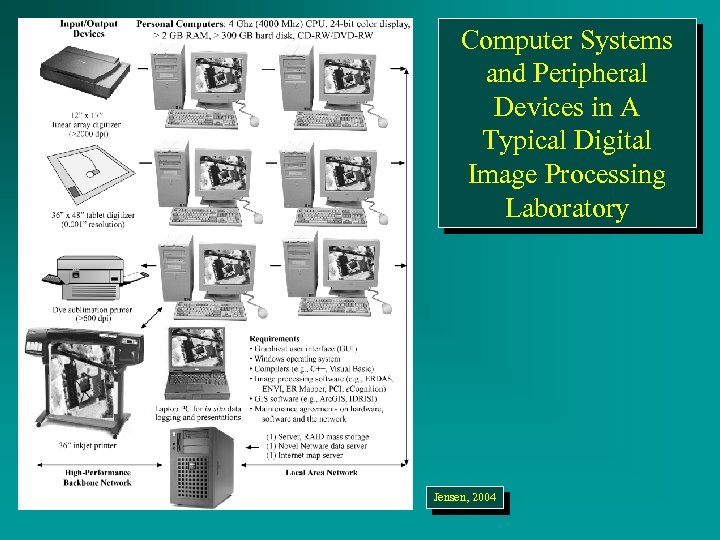

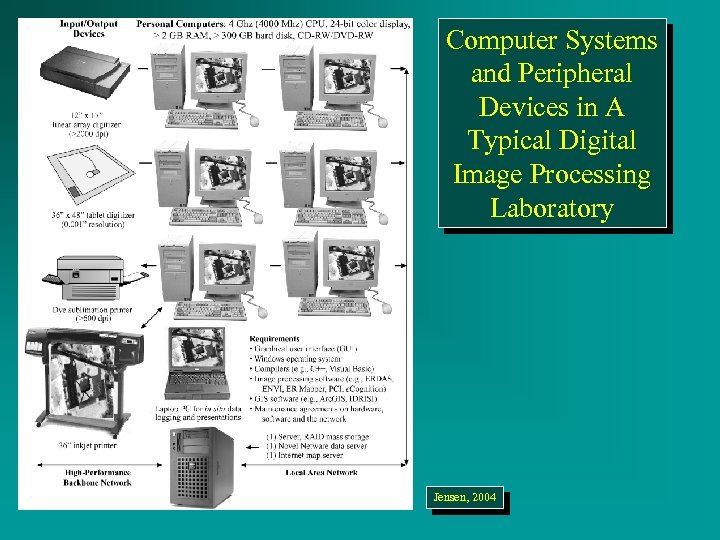

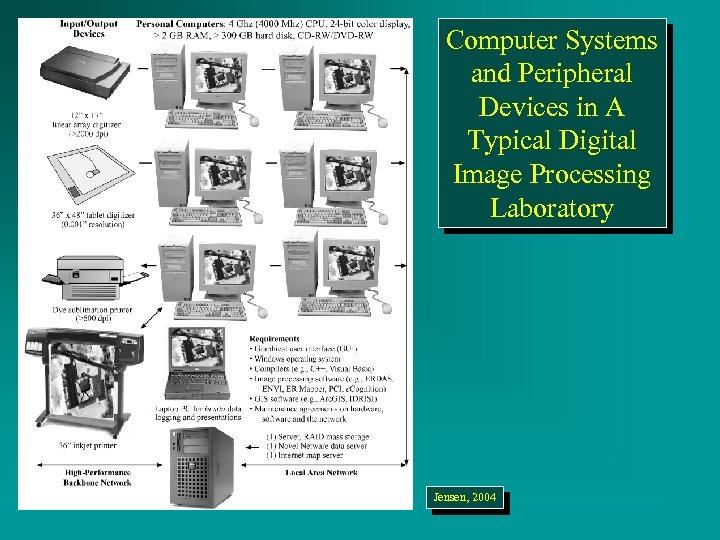

Computer Systems and Peripheral Devices in A Typical Digital Image Processing Laboratory Jensen, 2004

Image Processing System Hardware/Software Considerations • Number and speed of central processing unit(s) (CPU) • Operating system (e.g., Microsoft Windows, UNIX, Linux, Macintosh) • Amount of random access memory (RAM) • Number of image analysts that can use the system at one time and mode of operation (e.g., interactive or batch) • Serial or parallel image processing • Arithmetic coprocessor or array processor • Software compiler(s) (e.g., C++, Visual Basic, Java) Jensen, 2004

Computer Systems and Peripheral Devices in A Typical Digital Image Processing Laboratory Jensen, 2004

Image Processing System Hardware/Software Considerations • Type of mass storage (e.g., hard disk, CD-ROM, DVD) and amount (e.g., gigabytes) • Monitor display spatial resolution (e.g., 1024 × 768 pixels) • Monitor color resolution (e.g., 24 bits of image processing video memory yields 16.7 million displayable colors) • Input devices (e.g., optical-mechanical drum or flatbed scanners, area array digitizers) • Output devices (e.g., CD-ROM, CD-RW, DVD-RW, filmwriters, line plotters, dye sublimation printers) • Networks (e.g., local area, wide area, Internet) Jensen, 2004
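The color-resolution figure on this slide follows from simple arithmetic: n bits per pixel give 2^n displayable colors. The short Python sketch below works through that calculation and, as an added illustration, estimates the video memory needed for one full screen at the example resolution. The function names are hypothetical and chosen only for this example.

```python
def displayable_colors(bits_per_pixel: int) -> int:
    """Number of distinct colors that bits_per_pixel bits can encode."""
    return 2 ** bits_per_pixel


def frame_buffer_bytes(columns: int, rows: int, bits_per_pixel: int) -> int:
    """Bytes of video memory needed to hold one full screen of pixels."""
    return columns * rows * bits_per_pixel // 8


colors = displayable_colors(24)
screen_bytes = frame_buffer_bytes(1024, 768, 24)

print(colors)        # 16777216, i.e. about 16.7 million colors
print(screen_bytes)  # 2359296 bytes, i.e. 2.25 MiB for one 1024 x 768 screen
```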

Central Processing Unit The central processing unit (CPU) is the computing part of the computer. It consists of a control unit and an arithmetic logic unit. The CPU: • performs numerical integer and/or floating-point calculations, and • directs input and output from and to mass storage devices, color monitors, digitizers, plotters, etc. Jensen, 2004

Central Processing Unit A CPU’s efficiency is often measured in terms of how many millions of instructions per second (MIPS) it can process, e.g., 500 MIPS. It is also customary to describe a CPU in terms of the number of cycles it can process in 1 second, measured in megahertz, e.g., 1000 MHz (1 GHz). Manufacturers market computers with CPUs faster than 4 GHz, and this speed will increase. The system bus connects the CPU with the main memory, managing data transfer and instructions between the two. Therefore, another important consideration when purchasing a computer is bus speed.

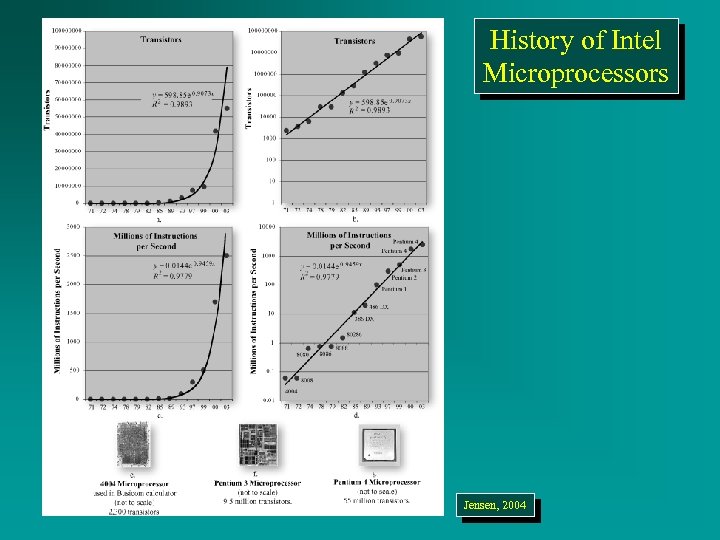

Moore’s Law In 1965, Gordon Moore was preparing a speech and made an observation. He realized that each new computer CPU contained roughly twice as much capacity as its predecessor and each CPU was released within 18 to 24 months of the previous chip. If this trend continued, he reasoned, computing power would rise exponentially over relatively brief periods of time. Moore’s law described a trend that has continued and is still remarkably accurate. It is the basis for many planners’ performance forecasts. MIPS has also increased exponentially.
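The doubling trend described on this slide can be written as capacity(t) = capacity(0) × 2^(t / T), where T is the doubling period. The Python sketch below is an illustration of that formula only, not a forecast; the function name and the six-year horizon are assumptions made for the example.

```python
def projected_capacity(initial: float, years: float, doubling_months: float) -> float:
    """Capacity after `years`, assuming one doubling every `doubling_months` months."""
    doublings = years * 12 / doubling_months
    return initial * 2 ** doublings


# Relative capacity after six years for the two doubling periods on the slide.
slow = projected_capacity(1.0, years=6, doubling_months=24)
fast = projected_capacity(1.0, years=6, doubling_months=18)

print(slow)  # 8.0  (three doublings)
print(fast)  # 16.0 (four doublings)
```

The gap between the two results shows how sensitive a planner's forecast is to the assumed doubling period.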

History of Intel Microprocessors Jensen, 2004

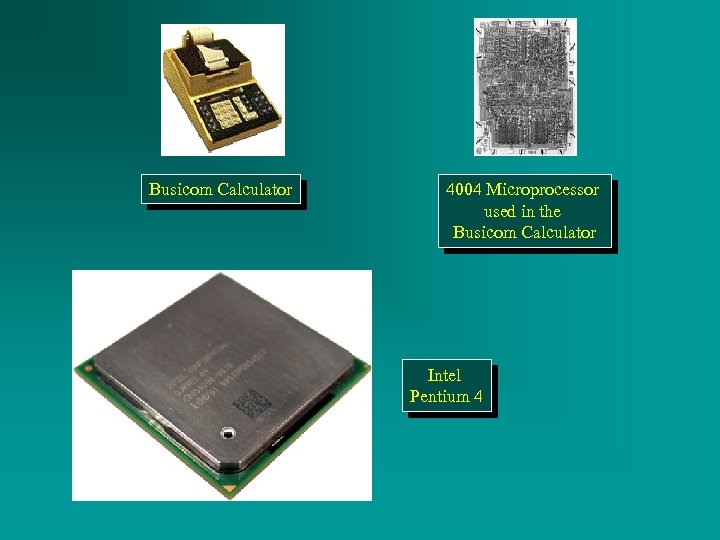

Figure labels: Busicom calculator; 4004 microprocessor used in the Busicom calculator; Intel Pentium 4.

Image Processing System Considerations Type of Computer: * Personal Computers (32- to 64-bit CPU) * Workstation (>64-bit CPU) * Mainframe (>64-bit CPU) Jensen, 2004

Type of Computer Personal computers (16- to 64-bit CPUs) are the workhorses of digital image processing and GIS analysis. Personal computers are based on microprocessor technology where the entire CPU is placed on a single chip. These inexpensive complex-instruction-set computers (CISC) generally have CPUs with 32- to 64-bit registers (word size) that can compute integer arithmetic expressions at greater clock speeds and process significantly more MIPS than their 1980s–1990s 8-bit predecessors. The 32-bit CPUs can process four 8-bit bytes at a time and 64-bit CPUs can process eight bytes at a time. Jensen, 2004

Computer Systems and Peripheral Devices in A Typical Digital Image Processing Laboratory Jensen, 2004

Type of Computer Workstations usually consist of a >64-bit reduced-instruction-set computer (RISC) CPU that can address more random access memory than personal computers. The RISC chip is typically faster than the traditional CISC. RISC workstation application software and hardware maintenance costs are usually higher than those of personal computer-based image processing systems. The most common workstation operating systems are UNIX and various Microsoft Windows products. Jensen, 2004

Type of Computer Mainframe computers (>64-bit CPU) perform calculations more rapidly than PCs or workstations and are able to support hundreds of users simultaneously, especially parallel mainframe computers such as a CRAY. This makes mainframes ideal for intensive, CPU-dependent tasks (e.g., image rectification, mosaicking, filtering, classification, hyperspectral image analysis, and GIS modeling). If desired, the output from intensive mainframe processing can be passed to a workstation or personal computer for subsequent less intensive, inexpensive processing. Mainframe computer systems are expensive to purchase and maintain. Mainframe applications software is more expensive. Jensen, 2004

Operating System The operating system is the first program loaded into random access memory (RAM) when the computer is turned on. It controls the computer’s higher-order functions. The operating system kernel resides in memory at all times. The operating system provides the user interface and controls multitasking. It handles the input and output to the hard disk and all peripheral devices such as compact disks, scanners, printers, plotters, and color displays. All digital image processing application programs must communicate with the operating system. The operating system sets the protocols for the application programs that are executed by it. Jensen, 2004

Operating Systems The difference between a single-user operating system and a network operating system is the latter’s multi-user capability. • Microsoft Windows XP (home edition) and the Macintosh OS are single-user operating systems designed for one person at a desktop computer working independently. • Various Microsoft Windows, UNIX, and Linux network operating systems are designed to manage multiple user requests at the same time and complex network security. Jensen, 2004

Read Only Memory and Random Access Memory Read-only memory (ROM) retains information even after the computer is shut down because power is supplied from a battery that must be replaced occasionally. Most computers have sufficient ROM for digital image processing applications; therefore, it is not a serious consideration. Random access memory (RAM) is the computer’s primary temporary workspace. It requires power to maintain its content. Therefore, all of the information that is temporarily placed in RAM while the CPU is performing digital image processing must be saved to a hard disk (or other media such as a CD) before turning the computer off. Jensen, 2004

Read Only Memory and Random Access Memory Computers should have sufficient RAM for the operating system, image processing applications software, and any remote sensor data that must be held in temporary memory while calculations are performed. Computers with 64-bit CPUs can address more RAM than 32-bit machines. It seems that one can never have too much RAM for image processing applications. RAM prices continue to decline while RAM speed continues to increase. Jensen, 2004
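To make the RAM discussion concrete, the Python sketch below compares the theoretical address space of 32-bit and 64-bit addressing and estimates how much memory an uncompressed image would occupy. The function names are hypothetical, and the 1024 × 1024 × 224-band scene is an assumed example with one byte per brightness value; real systems also need room for the operating system and application software.

```python
def addressable_bytes(address_bits: int) -> int:
    """Theoretical number of bytes reachable with byte addressing of this width."""
    return 2 ** address_bits


def image_bytes(rows: int, cols: int, bands: int, bytes_per_value: int = 1) -> int:
    """Memory needed to hold an uncompressed multiband image."""
    return rows * cols * bands * bytes_per_value


GIB = 2 ** 30  # bytes in one gibibyte
MIB = 2 ** 20  # bytes in one mebibyte

print(addressable_bytes(32) // GIB)         # 4 (GiB) for 32-bit addressing
print(addressable_bytes(64) // GIB)         # 17179869184 (GiB) for 64-bit addressing
print(image_bytes(1024, 1024, 224) // MIB)  # 224 (MiB) for the assumed scene
```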

Interactive Graphical User Interface (GUI) One of the best scientific visualization environments for the analysis of remote sensor data takes place when the analyst communicates with the digital image processing system interactively using a point-and-click graphical user interface (GUI). Most sophisticated image processing systems are now configured with a friendly GUI that allows rapid display of images and the selection of important image processing functions. Jensen, 2004

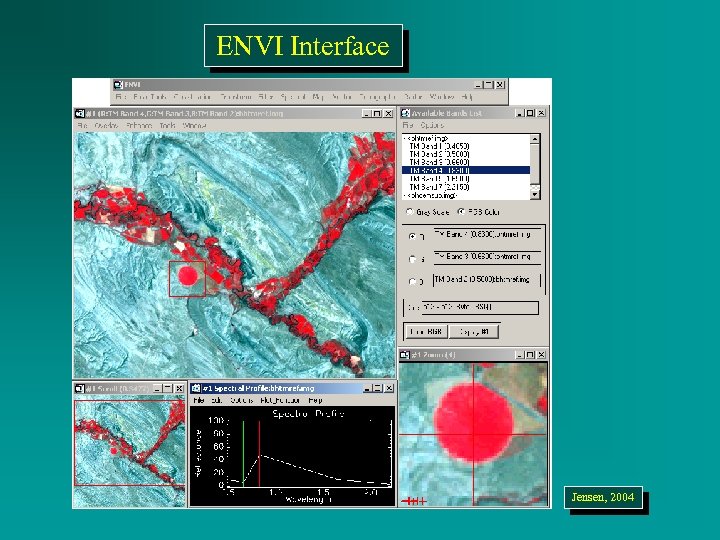

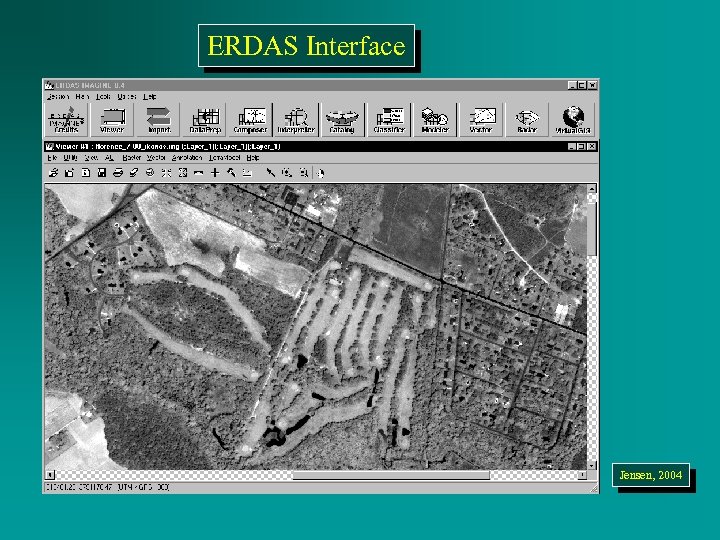

Graphical User Interface Several effective digital image processing graphical user interfaces include: • ERDAS Imagine’s intuitive point-and-click icons, • Research Systems’ Environment for Visualizing Images (ENVI) hyperspectral data analysis interface, • ER Mapper, • IDRISI, • ESRI ArcGIS Image Analyst, and • Adobe Photoshop. Jensen, 2004

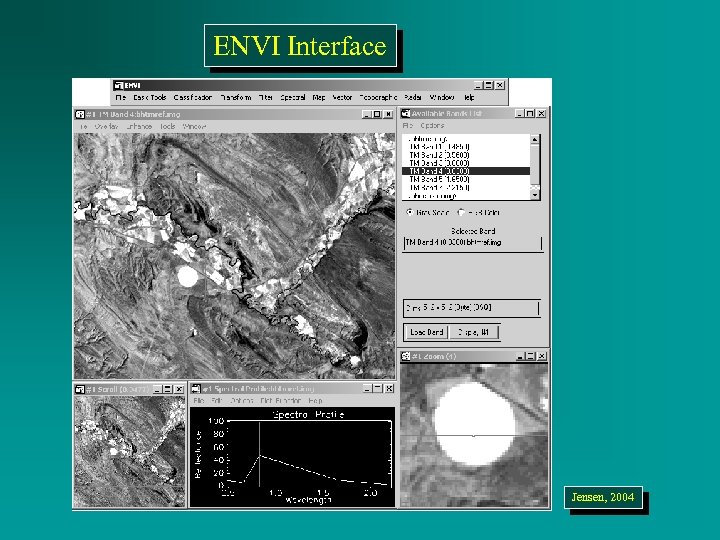

ENVI Interface Jensen, 2004

ENVI Interface Jensen, 2004

ERDAS Interface Jensen, 2004

Interactive versus Batch Processing Non-interactive batch processing is of value for time-consuming processes such as image rectification, mosaicking, orthophoto creation, filtering, etc. • Batch processing frees up lab PCs or workstations during peak demand because the jobs can be stored and executed when the computer is idle (e.g., early morning hours). • Batch processing can also be useful during peak hours because it allows the analyst to set up a series of operations that can be executed in sequence without operator intervention. • Digital image processing also can now be performed interactively over the Internet at selected sites. Jensen, 2004
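The idea of setting up a series of operations that run in sequence without operator intervention can be sketched in a few lines of Python. Everything here is hypothetical: `stretch` and `threshold` are toy stand-ins for real operations such as rectification or filtering, and `run_batch` is not taken from any particular image processing package.

```python
def stretch(image):
    """Toy contrast stretch: double each brightness value, capped at 255."""
    return [[min(255, 2 * value) for value in row] for row in image]


def threshold(image, cutoff=100):
    """Toy threshold: 1 where the value reaches the cutoff, else 0."""
    return [[1 if value >= cutoff else 0 for value in row] for row in image]


def run_batch(image, steps):
    """Apply each (name, function) step in order; return the result and a log."""
    log = []
    for name, step in steps:
        image = step(image)
        log.append(name)
    return image, log


# The analyst defines the job once; it can then run unattended.
job = [("stretch", stretch), ("threshold", threshold)]
result, log = run_batch([[10, 60], [120, 200]], job)

print(result)  # [[0, 1], [1, 1]]
print(log)     # ['stretch', 'threshold']
```

In practice the job list would be stored and handed to a scheduler so that it executes when the computer is idle.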

Storage and Archiving Considerations Digital image processing of remote sensing and related GIS data requires substantial mass storage resources. Mass storage media should: • have rapid access time, • have longevity (i.e., last for a long time), and • be inexpensive. Jensen, 2004

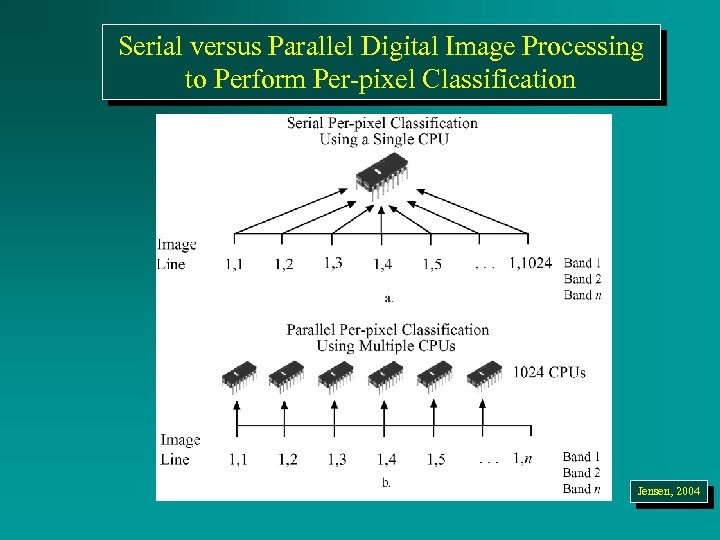

Serial and Parallel Image Processing It is possible to obtain PCs, workstations, and mainframe computers that have multiple CPUs that operate concurrently. Specially written parallel processing software can parse (distribute) the remote sensor data to specific CPUs to perform digital image processing. This can be much more efficient than processing the data serially. Jensen, 2004

Serial Versus Parallel Processing Parallel processing: • requires more than one CPU, and • requires software that can parse (distribute) the digital image processing to the various CPUs by - task, and/or - line, and/or - column. Jensen, 2004

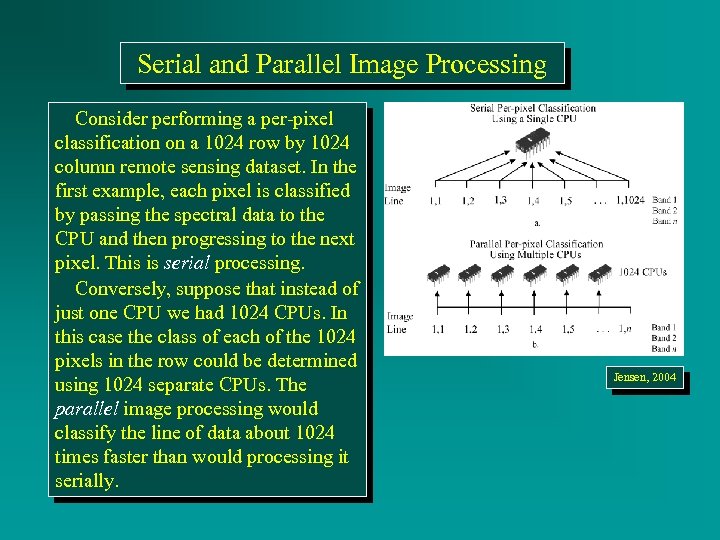

Serial and Parallel Image Processing Consider performing a per-pixel classification on a 1024 row by 1024 column remote sensing dataset. In the first example, each pixel is classified by passing the spectral data to the CPU and then progressing to the next pixel. This is serial processing. Conversely, suppose that instead of just one CPU we had 1024 CPUs. In this case the class of each of the 1024 pixels in the row could be determined using 1024 separate CPUs. The parallel image processing would classify the line of data about 1024 times faster than would processing it serially. Jensen, 2004

Serial and Parallel Image Processing Each of the 1024 CPUs could also be allocated an entire row of the dataset. Finally, each of the CPUs could be allocated a separate band if desired. For example, if 224 bands of AVIRIS hyperspectral data were available, 224 of the 1024 processors could be allocated to evaluate the 224 brightness values associated with each individual pixel with 800 additional CPUs available for other tasks. Jensen, 2004
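The serial and row-wise parallel strategies described on these slides can be sketched in Python as follows. This is a minimal illustration under several assumptions: the two-band pixels, the class means, and the minimum-distance rule are hypothetical, and a thread pool is used only so the sketch runs anywhere. In CPython, a real multi-CPU speedup for CPU-bound work would normally need a process pool (for example `concurrent.futures.ProcessPoolExecutor`) rather than threads.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical class means for a two-band image (band 1, band 2).
CLASS_MEANS = {"water": (20, 10), "vegetation": (40, 120)}


def classify_pixel(pixel):
    """Assign the class whose mean is nearest in spectral space."""
    def squared_distance(mean):
        return sum((p - m) ** 2 for p, m in zip(pixel, mean))
    return min(CLASS_MEANS, key=lambda name: squared_distance(CLASS_MEANS[name]))


def classify_row(row):
    """Classify every pixel in one row (the unit of work given to a worker)."""
    return [classify_pixel(pixel) for pixel in row]


def classify_serial(image):
    """One worker visits every row in turn."""
    return [classify_row(row) for row in image]


def classify_parallel(image, workers=4):
    """Rows are distributed to a pool of workers; row order is preserved."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(classify_row, image))


image = [
    [(22, 12), (38, 115)],
    [(41, 130), (18, 9)],
]

serial_result = classify_serial(image)
parallel_result = classify_parallel(image)
print(parallel_result)  # [['water', 'vegetation'], ['vegetation', 'water']]
```

Because each pixel is classified independently, both strategies give the same map; only the distribution of work differs.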

Serial versus Parallel Digital Image Processing to Perform Per-pixel Classification Jensen, 2004

Compiler A computer software compiler translates instructions programmed in a high-level language such as C++ or Visual Basic into machine language that the CPU can understand. A compiler usually generates assembly language first and then translates the assembly language into machine language. The compilers most often used in the development of digital image processing software are C++, Assembler, and Visual Basic. Many digital image processing systems provide a toolkit that programmers can use to compile their own digital image processing algorithms (e.g., ERDAS, ER Mapper, ENVI). The toolkit consists of subroutines that perform very specific tasks such as reading a line of image data into RAM or modifying a color look-up table to change the color of a pixel (RGB) on the screen. Jensen, 2004
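The two example subroutines mentioned on this slide, reading a line of image data and modifying a color look-up table, can be illustrated with a short Python sketch. These functions are hypothetical and do not reproduce the toolkit API of ERDAS, ER Mapper, ENVI, or any other package; they only show the kind of small, specific task such subroutines perform.

```python
def grayscale_lut():
    """256-entry look-up table: brightness value v is displayed as gray (v, v, v)."""
    return [(v, v, v) for v in range(256)]


def recolor(lut, brightness_value, rgb):
    """Return a copy of the table in which one brightness value maps to a new color."""
    updated = list(lut)
    updated[brightness_value] = rgb
    return updated


def read_line(image, row):
    """Copy one line (row) of brightness values into working memory."""
    return list(image[row])


image = [[0, 128, 255], [255, 64, 0]]

# Display the brightest pixels in red instead of white.
lut = recolor(grayscale_lut(), 255, (255, 0, 0))
line = read_line(image, 0)
displayed = [lut[value] for value in line]

print(displayed)  # [(0, 0, 0), (128, 128, 128), (255, 0, 0)]
```

Note that the stored brightness values never change; only the table that maps them to screen colors is edited.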

Compiler It is often useful for remote sensing analysts to program in one of the high-level languages just listed. Very seldom will a single digital image processing system perform all of the functions needed for a given project. Therefore, the ability to modify existing software or integrate newly developed algorithms with the existing software is important. Jensen, 2004

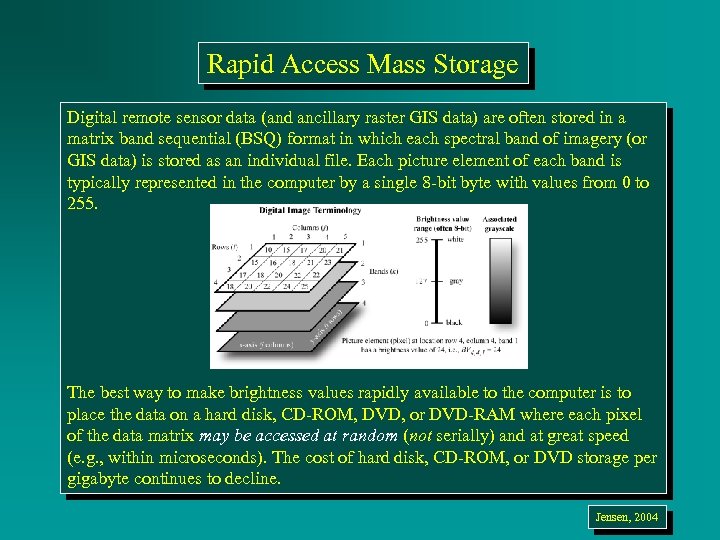

Rapid Access Mass Storage Digital remote sensor data (and ancillary raster GIS data) are often stored in a matrix band sequential (BSQ) format in which each spectral band of imagery (or GIS data) is stored as an individual file. Each picture element of each band is typically represented in the computer by a single 8-bit byte with values from 0 to 255. The best way to make brightness values rapidly available to the computer is to place the data on a hard disk, CD-ROM, DVD, or DVD-RAM where each pixel of the data matrix may be accessed at random (not serially) and at great speed (e.g., within microseconds). The cost of hard disk, CD-ROM, or DVD storage per gigabyte continues to decline. Jensen, 2004
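Random access to a pixel in a band file reduces to computing a byte offset from the row and column. The Python sketch below assumes the layout described on this slide (one file per band, row-major order, one 8-bit byte per pixel, no file header) and uses an in-memory buffer in place of a real file. The function names and the tiny 3 × 4 band are hypothetical.

```python
import io


def pixel_offset(row: int, col: int, n_cols: int, bytes_per_pixel: int = 1) -> int:
    """Byte offset of pixel (row, col) in a headerless, row-major band file."""
    return (row * n_cols + col) * bytes_per_pixel


def read_pixel(band_file, row: int, col: int, n_cols: int) -> int:
    """Seek directly to one pixel and return its 8-bit brightness value (0 to 255)."""
    band_file.seek(pixel_offset(row, col, n_cols))
    return band_file.read(1)[0]


# Stand-in for one band file: 3 rows x 4 columns holding the values 0..11.
band = io.BytesIO(bytes(range(12)))

print(pixel_offset(2, 1, n_cols=4))      # 9
print(read_pixel(band, 2, 1, n_cols=4))  # 9
```

On a random-access medium the seek costs roughly the same for any pixel, whereas a serial medium such as tape would have to pass over all preceding bytes first.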

Rapid Access Mass Storage It is common for digital image processing laboratories to have gigabytes of hard-disk mass storage associated with each workstation. Many image processing labs now use RAID (redundant arrays of inexpensive hard disks) technology in which two or more drives working together provide increased performance and various levels of error recovery and fault tolerance. Other storage media, such as magnetic tapes, are usually too slow for real-time image retrieval, manipulation, and storage because they do not allow random access of data. However, given their large storage capacity, they remain a cost-effective way to archive digital remote sensor data. Jensen, 2004

Rapid Access Mass Storage Companies are now developing new mass storage technologies based on atomic resolution storage (ARS), which holds the promise of storage densities of close to 1 terabit per square inch—the equivalent of nearly 50 DVDs on something the size of a credit card. The technology uses microscopic probes less than one-thousandth the width of a human hair. When the probes are brought near a conducting material, electrons write data on the surface. The same probes can detect and retrieve data and can be used to write over old data. Jensen, 2004

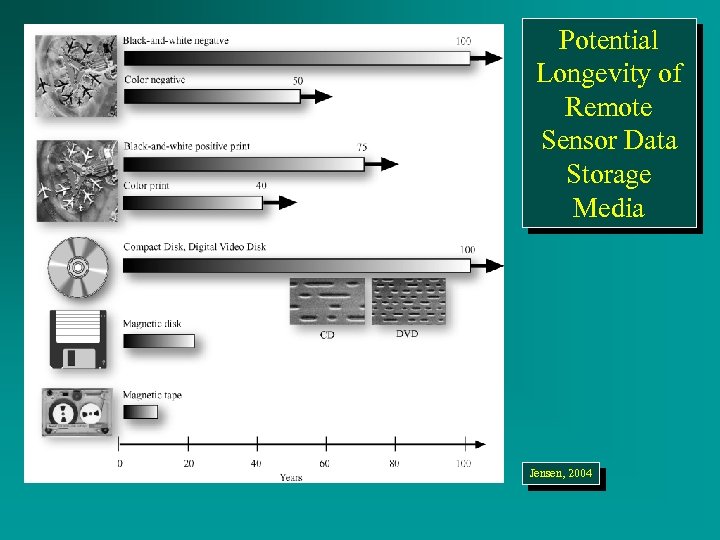

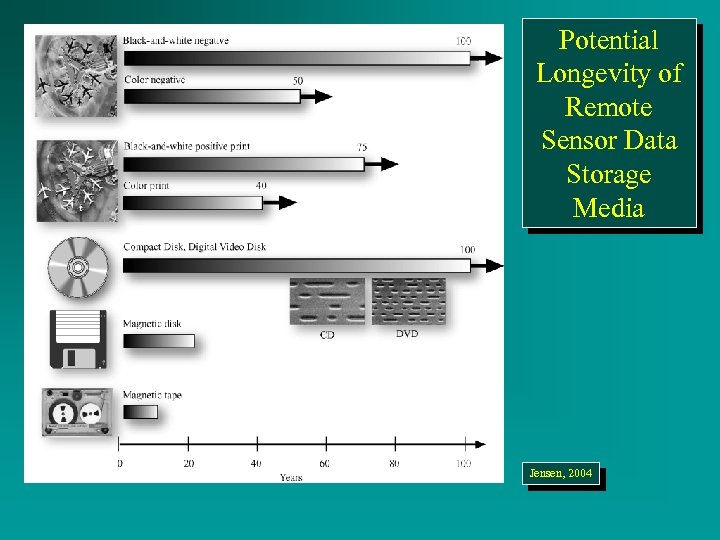

Archiving Considerations and Longevity Storing remote sensor data is no trivial matter. Significant sums of money are spent purchasing remote sensor data by commercial companies, natural resource agencies, and universities. Unfortunately, most of the time not enough attention is given to how the expensive data are stored or archived to protect the long-term investment. The diagram depicts several types of analog and digital remote sensor data mass storage devices and the average time to physical obsolescence, that is, when the media begin to deteriorate and information is lost. Jensen, 2004

Potential Longevity of Remote Sensor Data Storage Media Jensen, 2004

Archiving Considerations and Longevity • Properly exposed, washed, and fixed analog black-and-white aerial photography negatives have considerable longevity, often more than 100 years. • Color negatives, with their respective dye layers, also last, but not as long as black-and-white negatives. • Black-and-white paper prints have greater longevity than color prints (Kodak, 1995). • Hard and floppy magnetic disks have relatively short longevity, often less than 20 years. • Magnetic tape media (e.g., 3/4-in. tape, 8-mm tape) can become unreadable within 10 to 15 years if not rewound and properly stored in a cool, dry environment. Jensen, 2004

Potential Longevity of Remote Sensor Data Storage Media Jensen, 2004

Archiving Considerations and Longevity Optical disks can now be written to, read, and written over again at relatively high speeds and can store much more data than other portable media such as floppy disks. The technology used in rewritable optical systems is magneto-optics, in which data are recorded magnetically, as on disks and tapes, but the bits are much smaller because a laser is used to etch the bit. The laser heats the bit to 150 °C, at which temperature the bit is realigned when subjected to a magnetic field. To record new data, existing bits must first be set to zero. Jensen, 2004

Archiving Considerations and Longevity Only the optical disk provides relatively long-term storage potential (>100 years). In addition, optical disks store large volumes of data on relatively small media. Advances in optical compact disc (CD) technology promise to increase the storage capacity to >17 GB using new rewritable digital video disc (DVD) technology. In most remote sensing laboratories, rewritable CD-RWs or DVD-RWs have supplanted tapes as the backup system of choice. DVD drives are backward compatible and can read data from CDs. Jensen, 2004

Archiving Considerations and Longevity It is important to remember when archiving remote sensor data that sometimes it is the loss of the • read-write software and/or • read-write hardware (the drive mechanism and heads) that is the problem and not the digital media itself. Therefore, as new computers are purchased it is a good idea to set aside a single computer system that is representative of a certain computer era so that one can always read any data stored on archived mass storage media. Jensen, 2004

Computer Display Spatial and Color Resolution The display of remote sensor data on a computer screen is one of the most fundamental elements of digital image analysis. Careful selection of the computer display characteristics will provide the optimum visual image analysis environment for the human interpreter. The two most important characteristics of the computer display are: • spatial resolution, and • color resolution. Jensen, 2004

Computer Screen Display Resolution The image processing system should be able to display at least 1024 rows by 1024 columns on the computer screen at one time. This allows larger geographic areas to be examined and places the terrain of interest in its regional context. Most Earth scientists prefer this regional perspective when performing terrain analysis using remote sensor data. Furthermore, it is disconcerting to have to analyze four 512 × 512 images when a single 1024 × 1024 display provides the information at a glance. An ideal screen display resolution is 1600 × 1200 pixels. Jensen, 2004

Computer Systems and Peripheral Devices in A Typical Digital Image Processing Laboratory Jensen, 2004

Computer Screen Color Resolution The computer screen color resolution is the number of grayscale tones or colors (e.g., 256) that can be displayed on a CRT monitor at one time out of a palette of available colors (e.g., 16.7 million). For many applications, such as high-contrast black-and-white linework cartography, only 1 bit of color is required [i.e., the line is either black or white (0 or 1)]. For more sophisticated computer graphics for which many shades of gray or color combinations are required, up to 8 bits (or 256 colors) may be required. Most thematic mapping and GIS applications may be performed quite well by systems that display just 64 user-selectable colors out of a palette of 256 colors. Jensen, 2004

Computer Screen Color Resolution The analysis and display of remote sensor image data generally requires much higher CRT screen color resolution than cartographic and GIS applications. For example, most relatively sophisticated digital image processing systems can display a tremendous number of unique colors (e.g., 16.7 million) from a large color palette (e.g., 16.7 million). The primary reason for these color requirements is that image analysts must often display a composite of several images at one time on a CRT. This process is called color compositing. Jensen, 2004

Computer Systems and Peripheral Devices in A Typical Digital Image Processing Laboratory Jensen, 2004

Computer Screen Color Resolution To display a color-infrared image of Landsat Thematic Mapper data it is necessary to composite three separate 8-bit images [e.g., green band (TM 2 = 0.52 to 0.60 µm), red band (TM 3 = 0.63 to 0.69 µm), reflective infrared band (TM 4 = 0.76 to 0.90 µm)]. To obtain a true-color composite image that provides every possible color combination for the three 8-bit images requires that 2^24 colors (16,777,216) be available in the palette. Such true-color systems are relatively expensive because every pixel location must be bitmapped. This means that there must be a specific location in memory that keeps track of the exact blue, green, and red color value for every pixel. This requires substantial amounts of computer memory, which are usually collected in what is called an image processor. Given the availability of image processor memory, the question is: what is adequate color resolution? Jensen, 2004
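The palette size and the bitmapped memory requirement follow directly from the bit depths. The sketch below illustrates the arithmetic; the function names are hypothetical, and the band-to-color assignment in the example (TM 4 to red, TM 3 to green, TM 2 to blue) is the conventional color-infrared assignment, assumed here rather than taken from the slide.

```python
def palette_size(bits_per_band: int, bands: int = 3) -> int:
    """Number of distinct colors when each band contributes bits_per_band bits."""
    return 2 ** (bits_per_band * bands)


def frame_memory_bytes(rows: int, cols: int,
                       bits_per_band: int = 8, bands: int = 3) -> int:
    """Memory needed to bitmap every pixel of a rows x cols display."""
    return rows * cols * bits_per_band * bands // 8


def pack_rgb(red: int, green: int, blue: int) -> int:
    """Pack three 8-bit brightness values into one 24-bit color value."""
    for value in (red, green, blue):
        if not 0 <= value <= 255:
            raise ValueError("each band value must fit in 8 bits (0-255)")
    return (red << 16) | (green << 8) | blue


if __name__ == "__main__":
    print(palette_size(8))                 # colors available with 3 x 8 bits
    print(frame_memory_bytes(1024, 1024))  # bytes for a 1024 x 1024 display
    # Assumed color-infrared assignment: TM 4 -> red, TM 3 -> green, TM 2 -> blue
    tm4, tm3, tm2 = 120, 60, 30            # made-up brightness values
    print(hex(pack_rgb(tm4, tm3, tm2)))
```

For a 1024 × 1024 display this works out to 3 bytes per pixel, or 3,145,728 bytes of image processor memory for a single true-color frame.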

Computer Screen Color Resolution Generally, 4096 carefully selected colors out of a very large palette (e.g., 16.7 million) appear to be the minimum acceptable for the creation of remote sensing color composites. This provides 12 bits of color, with 4 bits available for each of the blue, green, and red image planes. For image processing applications other than compositing (e.g., black-and-white image display, color density slicing, pattern recognition classification), the 4096 available colors and large color palette are more than adequate. However, the larger the palette and the greater the number of displayable colors at one time, the better the representation of the remote sensor data on the CRT screen for visual analysis. Jensen, 2004
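The 12-bit arithmetic can be sketched as follows. This is a simplified illustration only: it keeps the 4 most significant bits of each 8-bit value, which is the crudest way to reach 4096 colors, whereas the "carefully selected" colors described above imply a tuned lookup table. The function names are hypothetical.

```python
def quantize_to_4_bits(value_8bit: int) -> int:
    """Keep the 4 most significant bits of an 8-bit brightness value."""
    if not 0 <= value_8bit <= 255:
        raise ValueError("value must fit in 8 bits (0-255)")
    return value_8bit >> 4


def pack_12bit(red: int, green: int, blue: int) -> int:
    """Combine three 8-bit values into one 12-bit color index (4 bits each)."""
    r, g, b = (quantize_to_4_bits(v) for v in (red, green, blue))
    return (r << 8) | (g << 4) | b


if __name__ == "__main__":
    print(2 ** 12)                    # number of displayable colors
    print(pack_12bit(120, 60, 30))    # index for a made-up pixel
```

Each image plane is reduced from 256 to 16 levels, so 16 × 16 × 16 = 4096 colors can be displayed at one time.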

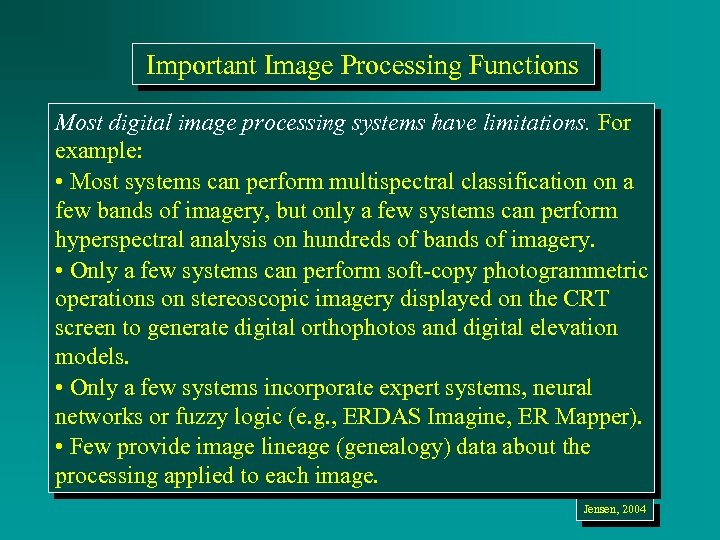

Important Image Processing Functions Many of the most important functions performed using digital image processing systems are summarized in Table 34. Personal computers now have the computing power to perform each of these functions. Jensen, 2004

Image Processing System Functions * Preprocessing (Radiometric and Geometric) * Display and Enhancement * Information Extraction * Photogrammetric Information Extraction * Metadata and Image/Map Lineage Documentation * Image and Map Cartographic Composition * Geographic Information Systems (GIS) * Integrated Image Processing and GIS * Utilities Jensen, 2004

Important Image Processing Functions Remotely sensed data should not be analyzed in a vacuum. Remote sensing information fulfills its promise best when used in conjunction with ancillary data (e.g., soils, elevation, and slope) stored in a geographic information system (GIS). The ideal system should be able to process the digital remote sensor data and perform any necessary GIS processing. It is not efficient to exit the digital image processing system, log into a GIS, perform a required GIS function, and then take the output of the procedure back into the digital image processing system for further analysis. Integrated systems perform both digital image processing and GIS functions and consider map data as image data (or vice versa) and operate on them accordingly. Jensen, 2004
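Treating map data as image data can be illustrated with a simple raster overlay. The sketch below is a hypothetical example, not the method of any particular system: it assumes a classified image and a slope layer that are already registered to the same grid, and the class codes, slope values, and function name are invented for illustration.

```python
from typing import List

Raster = List[List[float]]


def overlay_class_and_slope(class_map: Raster, slope_deg: Raster,
                            target_class: int, min_slope: float) -> List[List[int]]:
    """Flag pixels that belong to target_class AND have slope >= min_slope."""
    if len(class_map) != len(slope_deg) or any(
            len(c_row) != len(s_row) for c_row, s_row in zip(class_map, slope_deg)):
        raise ValueError("both rasters must have the same rows and columns")
    return [
        [1 if c == target_class and s >= min_slope else 0
         for c, s in zip(c_row, s_row)]
        for c_row, s_row in zip(class_map, slope_deg)
    ]


if __name__ == "__main__":
    # Hypothetical inputs: class 1 = forest (from image classification),
    # slope in degrees (ancillary GIS layer).
    classes = [[1, 1, 2],
               [2, 1, 1]]
    slope = [[5, 20, 30],
             [2, 18, 3]]
    print(overlay_class_and_slope(classes, slope, target_class=1, min_slope=15))
```

Because both layers are plain grids, the same per-pixel operation applies whether a layer came from a sensor or from a map, which is the point of an integrated system.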

Important Image Processing Functions Most digital image processing systems have limitations. For example: • Most systems can perform multispectral classification on a few bands of imagery, but only a few systems can perform hyperspectral analysis on hundreds of bands of imagery. • Only a few systems can perform soft-copy photogrammetric operations on stereoscopic imagery displayed on the CRT screen to generate digital orthophotos and digital elevation models. • Only a few systems incorporate expert systems, neural networks, or fuzzy logic (e.g., ERDAS Imagine, ER Mapper). • Few provide image lineage (genealogy) data about the processing applied to each image. Jensen, 2004

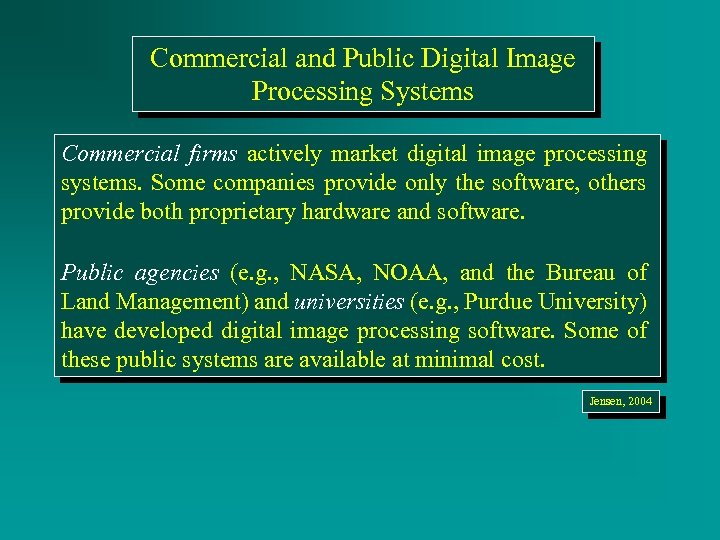

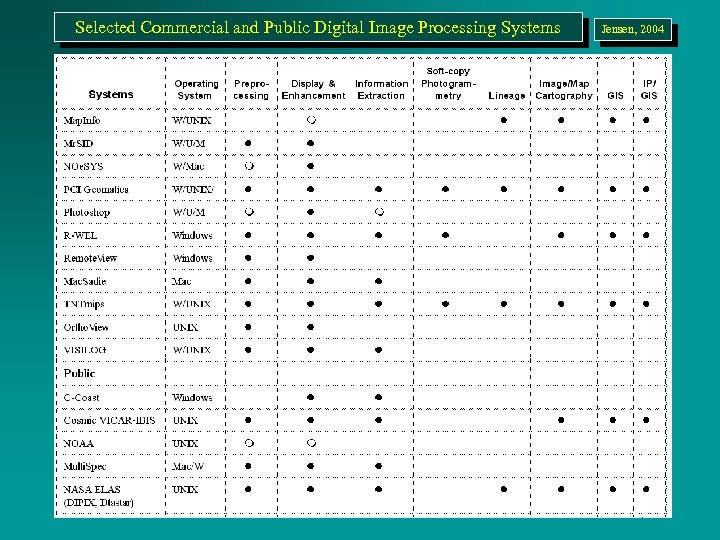

Commercial and Public Digital Image Processing Systems Commercial firms actively market digital image processing systems. Some companies provide only the software; others provide both proprietary hardware and software. Public agencies (e.g., NASA, NOAA, and the Bureau of Land Management) and universities (e.g., Purdue University) have developed digital image processing software. Some of these public systems are available at minimal cost. Jensen, 2004

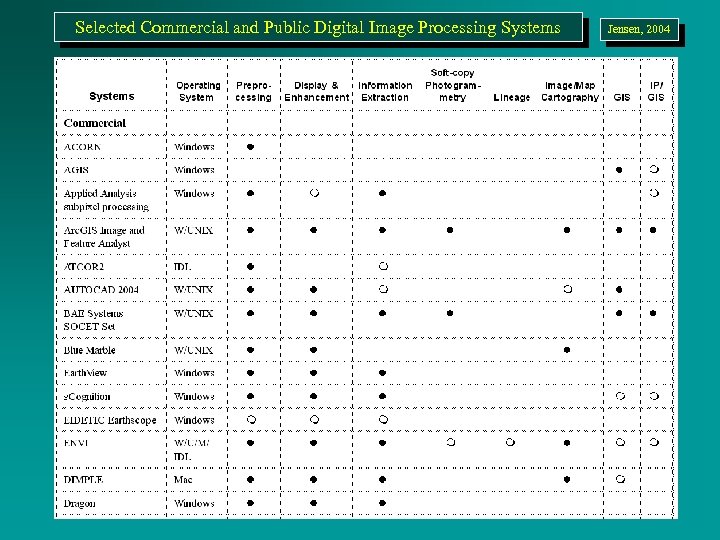

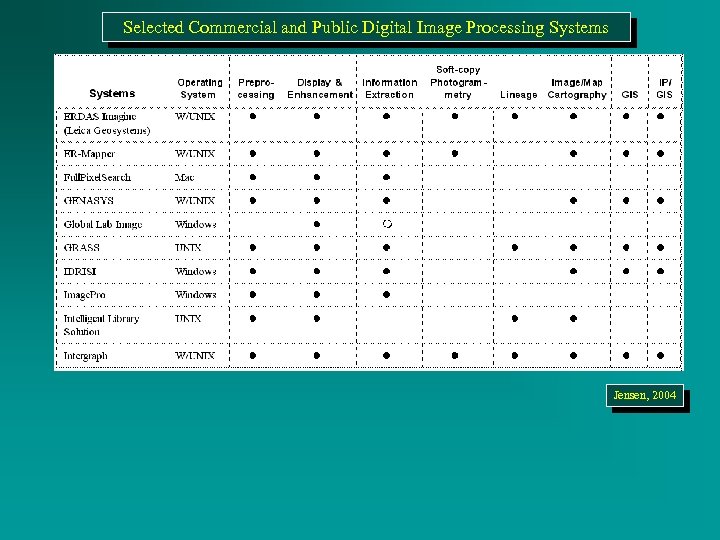

Selected Commercial and Public Digital Image Processing Systems Jensen, 2004

Major Commercial Digital Image Processing Systems - ERDAS - Leica Photogrammetry Suite - ENVI - IDRISI - ER Mapper - PCI Geomatica - eCognition Jensen, 2004

Major Public Digital Image Processing Systems - GRASS - MultiSpec (LARS Purdue University) - C-Coast - Adobe Photoshop Jensen, 2004

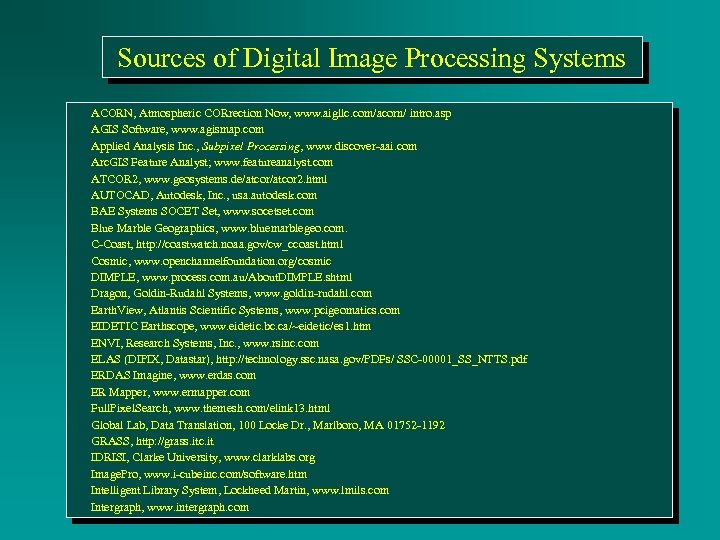

Sources of Digital Image Processing Systems

- ACORN, Atmospheric CORrection Now, www.aigllc.com/acorn/intro.asp
- AGIS Software, www.agismap.com
- Applied Analysis Inc., Subpixel Processing, www.discover-aai.com
- ArcGIS Feature Analyst, www.featureanalyst.com
- ATCOR2, www.geosystems.de/atcor2.html
- AUTOCAD, Autodesk, Inc., usa.autodesk.com
- BAE Systems SOCET Set, www.socetset.com
- Blue Marble Geographics, www.bluemarblegeo.com
- C-Coast, http://coastwatch.noaa.gov/cw_ccoast.html
- Cosmic, www.openchannelfoundation.org/cosmic
- DIMPLE, www.process.com.au/AboutDIMPLE.shtml
- Dragon, Goldin-Rudahl Systems, www.goldin-rudahl.com
- EarthView, Atlantis Scientific Systems, www.pcigeomatics.com
- EIDETIC Earthscope, www.eidetic.bc.ca/~eidetic/es1.htm
- ENVI, Research Systems, Inc., www.rsinc.com
- ELAS (DIPIX, Datastar), http://technology.ssc.nasa.gov/PDFs/SSC-00001_SS_NTTS.pdf
- ERDAS Imagine, www.erdas.com
- ER Mapper, www.ermapper.com
- FullPixelSearch, www.themesh.com/elink13.html
- Global Lab, Data Translation, 100 Locke Dr., Marlboro, MA 01752-1192
- GRASS, http://grass.itc.it
- IDRISI, Clark University, www.clarklabs.org
- ImagePro, www.i-cubeinc.com/software.htm
- Intelligent Library System, Lockheed Martin, www.lmils.com
- Intergraph, www.intergraph.com

Jensen, 2004

Sources of Digital Image Processing Systems

- MapInfo, www.mapinfo.com
- MacSadie, www.ece.arizona.edu/~dial/base_files/software/MacSadie1.2.html
- MrSID, LizardTech, www.lizardtech.com
- MultiSpec, www.ece.purdue.edu/~biehl/MultiSpec/
- NIH-Image, http://rsb.info.nih.gov/nih-image
- NOeSYS, www.rsinc.com/NOESYS/index.cfm
- PCI, www.pcigeomatics.com
- PHOTOSHOP, www.adobe.com
- RemoteView, www.sensor.com/remoteview.html
- R-WEL Inc., www.rwel.com
- TNTmips, MicroImages, www.microimages.com
- VISILOG, www.norpix.com/visilog.htm
- XV image viewer, www.trilon.com/xv

Jensen, 2004

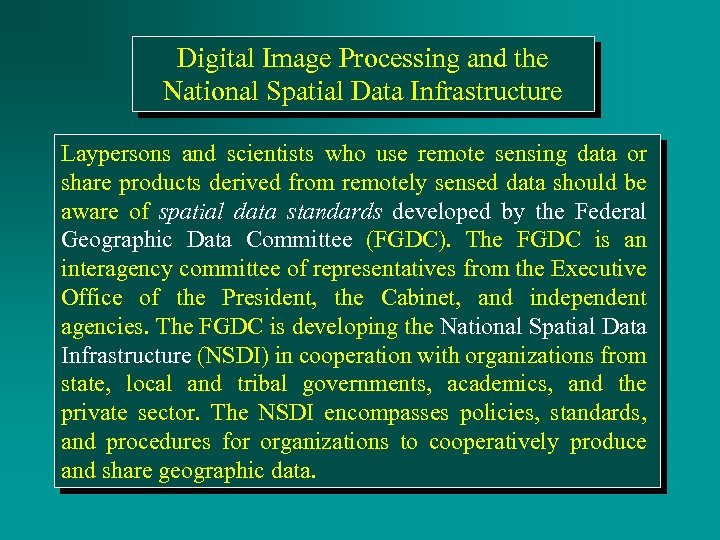

Digital Image Processing and the National Spatial Data Infrastructure Laypersons and scientists who use remote sensing data or share products derived from remotely sensed data should be aware of spatial data standards developed by the Federal Geographic Data Committee (FGDC). The FGDC is an interagency committee of representatives from the Executive Office of the President, the Cabinet, and independent agencies. The FGDC is developing the National Spatial Data Infrastructure (NSDI) in cooperation with organizations from state, local, and tribal governments, academia, and the private sector. The NSDI encompasses policies, standards, and procedures for organizations to cooperatively produce and share geographic data. Jensen, 2004

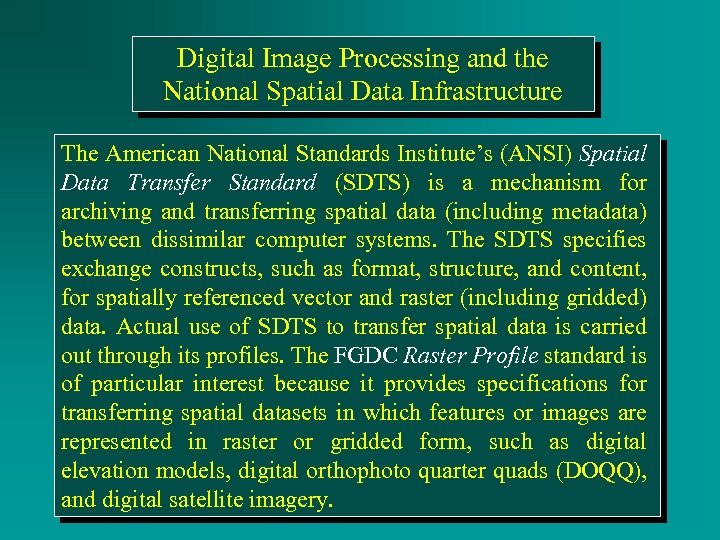

Digital Image Processing and the National Spatial Data Infrastructure The American National Standards Institute’s (ANSI) Spatial Data Transfer Standard (SDTS) is a mechanism for archiving and transferring spatial data (including metadata) between dissimilar computer systems. The SDTS specifies exchange constructs, such as format, structure, and content, for spatially referenced vector and raster (including gridded) data. Actual use of SDTS to transfer spatial data is carried out through its profiles. The FGDC Raster Profile standard is of particular interest because it provides specifications for transferring spatial datasets in which features or images are represented in raster or gridded form, such as digital elevation models, digital orthophoto quarter quads (DOQQ), and digital satellite imagery. Jensen, 2004

Remote Sensing Data Formats * Band interleaved by line (BIL) * Band interleaved by pixel (BIP) * Band sequential (BSQ) * Run-length encoding Jensen, 2004
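The differences among these formats are easiest to see on a tiny example. The sketch below is a minimal illustration in plain Python, not a reader for any real file format: it assumes a small image held as `cube[band][row][col]`, and the function names are hypothetical.

```python
from typing import List, Tuple

Cube = List[List[List[int]]]  # cube[band][row][col]


def to_bsq(cube: Cube) -> List[int]:
    """Band sequential: all of band 1, then all of band 2, and so on."""
    return [v for band in cube for row in band for v in row]


def to_bil(cube: Cube) -> List[int]:
    """Band interleaved by line: row 1 of every band, then row 2 of every band."""
    n_rows = len(cube[0])
    return [v for r in range(n_rows) for band in cube for v in band[r]]


def to_bip(cube: Cube) -> List[int]:
    """Band interleaved by pixel: all band values for pixel 1, then pixel 2."""
    n_rows, n_cols = len(cube[0]), len(cube[0][0])
    return [band[r][c] for r in range(n_rows) for c in range(n_cols) for band in cube]


def run_length_encode(values: List[int]) -> List[Tuple[int, int]]:
    """Collapse consecutive repeats into (value, run length) pairs."""
    runs: List[Tuple[int, int]] = []
    for v in values:
        if runs and runs[-1][0] == v:
            runs[-1] = (v, runs[-1][1] + 1)
        else:
            runs.append((v, 1))
    return runs


if __name__ == "__main__":
    # Two bands, each 2 rows by 2 columns (made-up brightness values).
    cube = [[[1, 2], [3, 4]],
            [[5, 6], [7, 8]]]
    print(to_bsq(cube))   # [1, 2, 3, 4, 5, 6, 7, 8]
    print(to_bil(cube))   # [1, 2, 5, 6, 3, 4, 7, 8]
    print(to_bip(cube))   # [1, 5, 2, 6, 3, 7, 4, 8]
    print(run_length_encode([3, 3, 3, 7, 7, 1]))  # [(3, 3), (7, 2), (1, 1)]
```

The three interleaving schemes store exactly the same values in a different order, while run-length encoding shortens the stream only where neighboring values repeat, as in thematic maps with large homogeneous regions.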
