8cf7c1add0513211b766cb3f4aa8f185.ppt

- Number of slides: 50

DHT* Applications (* and DOLR)

Jeffrey Pang, Carnegie Mellon (CMU NetTalk), Dec. 5, 2003

Brief Review of DHTs
- Many DHTs:
  - PRR Trees, Pastry, Tapestry
  - Chord, Symphony
  - CAN
  - SkipNet, Kademlia, Koorde, Viceroy, etc.
- Good properties:
  - Distributed construction/maintenance
  - Load-balanced with uniform identifiers
  - O(log n) hops / neighbors per node
  - Provides underlying network proximity

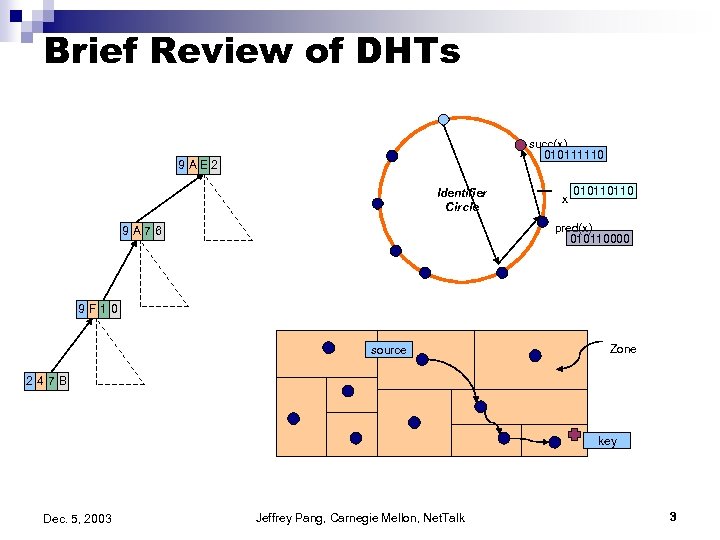

Brief Review of DHTs

(Figure: an identifier circle with key x = 010110110, pred(x) = 010110000, and succ(x) = 010111110; a prefix-routing example from a source toward a key through node IDs 9AE2, 9A76, 9F10, and 247B; and a CAN zone.)
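
The identifier-circle picture can be made concrete with a small consistent-hashing sketch. It assumes a Chord-style ring with 9-bit identifiers, matching the width of the example IDs in the figure, and truncated SHA-1 for placing names on the circle. The names `IdentifierCircle` and `ident` are hypothetical. `succ(x)` is the node responsible for key x.

```python
import bisect
import hashlib

M = 9                      # identifier bits (assumed; the figure's example IDs are 9 bits wide)
RING = 2 ** M

def ident(name: str) -> int:
    """Map a name onto the identifier circle with truncated SHA-1."""
    return int.from_bytes(hashlib.sha1(name.encode()).digest(), "big") % RING

class IdentifierCircle:
    def __init__(self, node_ids):
        self.ids = sorted(set(node_ids))

    def succ(self, x: int) -> int:
        """First node at or clockwise after x: the node responsible for key x."""
        i = bisect.bisect_left(self.ids, x % RING)
        return self.ids[i % len(self.ids)]      # wrap past the top of the circle

    def pred(self, x: int) -> int:
        """Last node strictly before x (counter-clockwise)."""
        i = bisect.bisect_left(self.ids, x % RING)
        return self.ids[i - 1]                  # i == 0 wraps to the last node via index -1

circle = IdentifierCircle([0b010110000, 0b010111110, 0b100000000])
x = 0b010110110
print(bin(circle.pred(x)), bin(circle.succ(x)))   # 0b10110000 0b10111110
owner = circle.succ(ident("song.mp3"))            # node that would store this (hypothetical) key
```

Only the ring lookup is shown here. How a request reaches `succ(x)` in O(log n) hops (finger tables, prefix tables, CAN zones) is outside this sketch.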

Overview of Talk
- Review of DHTs
- DHT vs DOLR
- Storage
- Multicast
- Database
- Misc.
- API and Infrastructure Proposals

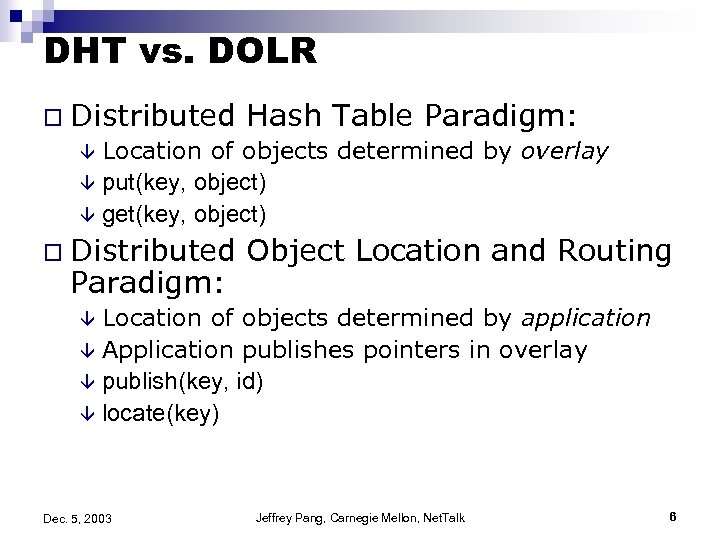

DHT vs. DOLR
- Distributed Hash Table (DHT) paradigm:
  - Location of objects determined by overlay
  - put(key, object)
  - get(key, object)
- Distributed Object Location and Routing (DOLR) paradigm:
  - Location of objects determined by application
  - Application publishes pointers in overlay
  - publish(key, id)
  - locate(key)

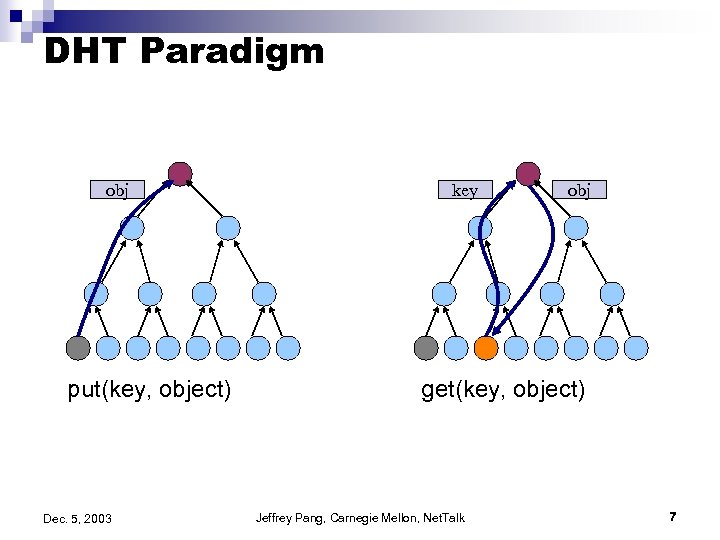

DHT Paradigm

(Figure: put(key, object) stores obj at the node the overlay assigns to key; get(key, object) retrieves it from that node.)

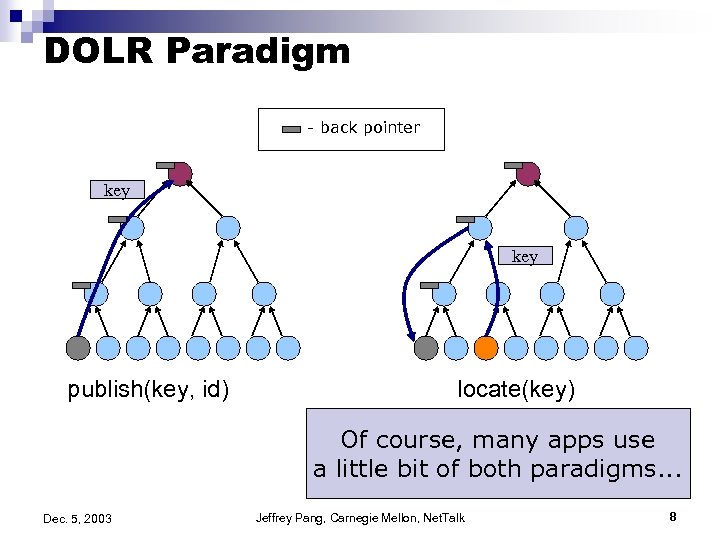

DOLR Paradigm

(Figure: publish(key, id) leaves a back pointer to the object under key; locate(key) follows the pointer to the object.)

Of course, many apps use a little bit of both paradigms...
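
The two paradigms differ in who decides where the object lives. The toy in-memory overlay below contrasts them. `ToyOverlay`, `ToyDHT`, `ToyDOLR`, `node_for`, and the use of SHA-1 for placement are all illustrative assumptions, not an existing library's API. In the DHT, `put` moves the object to the node the overlay picks. In the DOLR, the object stays on the application's chosen node and `publish` stores only a pointer (the figure's back pointer) at the key's root.

```python
import hashlib

class ToyOverlay:
    """A fixed set of nodes; each key is owned by one node chosen by hashing."""
    def __init__(self, nodes):
        self.nodes = list(nodes)
        self.store = {n: {} for n in self.nodes}      # per-node local storage

    def node_for(self, key: str) -> str:
        h = int.from_bytes(hashlib.sha1(key.encode()).digest(), "big")
        return self.nodes[h % len(self.nodes)]

class ToyDHT(ToyOverlay):
    def put(self, key, obj):
        self.store[self.node_for(key)][key] = obj     # the overlay decides placement

    def get(self, key):
        return self.store[self.node_for(key)].get(key)

class ToyDOLR(ToyOverlay):
    def publish(self, key, holder):
        # the object stays on `holder`; only a pointer is placed at the key's root
        self.store[self.node_for(key)].setdefault(key, set()).add(holder)

    def locate(self, key):
        return self.store[self.node_for(key)].get(key, set())

nodes = ["A", "B", "C", "D"]
dht = ToyDHT(nodes)
dht.put("song.mp3", b"...bytes...")
dolr = ToyDOLR(nodes)
dolr.publish("song.mp3", "C")      # the application keeps the object on node C
```

An app that "uses a little of both" might, for example, put small metadata into the DHT and publish pointers to large content it keeps locally.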

Overview of Talk
- Review of DHTs
- DHT vs DOLR
- Storage
- Multicast
- Database
- Misc.
- API and Infrastructure Proposals

Storage Systems
- Mnemosyne [Hand & Roscoe, IPTPS 02]
  - Steganographic storage
- PAST [Rowstron & Druschel, SOSP 01]
  - File-based storage substrate
- CFS [Dabek, et al., SOSP 01]
  - Single-writer cooperative storage
- Ivy [Muthitacharoen, et al., OSDI 02]
  - Small-group read/write storage
- OceanStore [Kubiatowicz, et al., ASPLOS 00; FAST 03]
  - Global-scale persistent storage

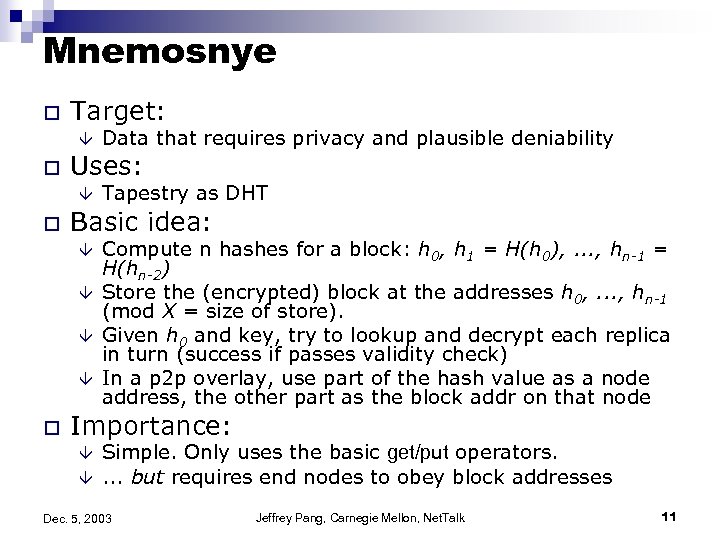

Mnemosyne
- Target:
  - Data that requires privacy and plausible deniability
- Uses:
  - Tapestry as DHT
- Basic idea:
  - Compute n hashes for a block: $h_0$, $h_1 = H(h_0)$, ..., $h_{n-1} = H(h_{n-2})$
  - Store the (encrypted) block at the addresses $h_0, \ldots, h_{n-1} \bmod X$, where X is the size of the store
  - Given $h_0$ and the key, try to look up and decrypt each replica in turn (success if it passes a validity check)
  - In a P2P overlay, use part of the hash value as a node address and the other part as the block address on that node
- Importance:
  - Simple: only uses the basic get/put operators...
  - ...but requires end nodes to obey block addresses
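
The hash-chain placement can be sketched as follows. This minimal illustration makes several assumptions. SHA-256 plays the role of H. There are `num_nodes` nodes, each with `X` block slots. A hypothetical split is used in which the first 4 bytes of each hash pick the node and the remaining bytes pick the slot. Encryption and the validity check are omitted, and the derivation of $h_0$ shown in the usage line is hypothetical as well.

```python
import hashlib

def hash_chain(h0: bytes, n: int):
    """h_0, h_1 = H(h_0), ..., h_{n-1} = H(h_{n-2}), with H = SHA-256 (assumed)."""
    chain = [h0]
    for _ in range(n - 1):
        chain.append(hashlib.sha256(chain[-1]).digest())
    return chain

def placements(h0: bytes, n: int, num_nodes: int, X: int):
    """Map each hash to (node, block address): one part picks the node, the rest the slot."""
    out = []
    for h in hash_chain(h0, n):
        node = int.from_bytes(h[:4], "big") % num_nodes   # hypothetical split: first 4 bytes
        block = int.from_bytes(h[4:], "big") % X          # remaining bytes, mod store size X
        out.append((node, block))
    return out

h0 = hashlib.sha256(b"passphrase|file name").digest()   # hypothetical way to derive h_0
for node, block in placements(h0, n=4, num_nodes=100, X=1 << 20):
    print(node, block)
```

A reader who knows $h_0$ regenerates the same list and tries each location in turn until one block decrypts and passes its check. Writers never coordinate, so some replicas may be overwritten, which is why several are stored.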

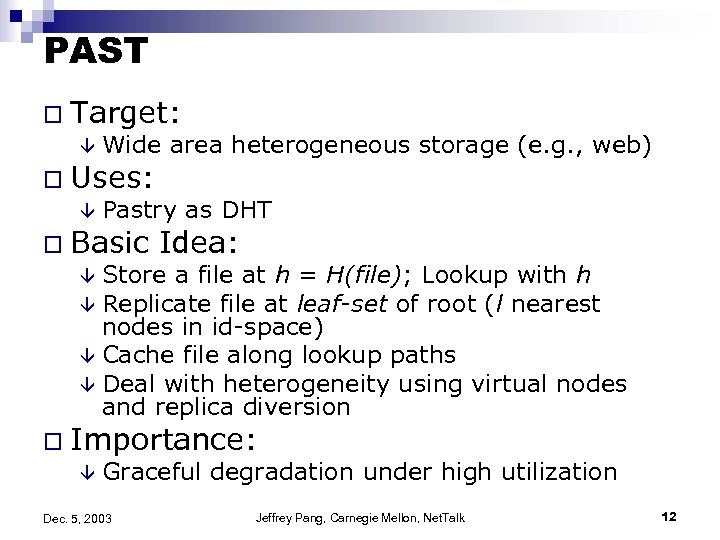

PAST
- Target:
  - Wide-area heterogeneous storage (e.g., the web)
- Uses:
  - Pastry as DHT
- Basic idea:
  - Store a file at h = H(file); look up with h
  - Replicate the file at the leaf set of the root (the l nearest nodes in id space)
  - Cache the file along lookup paths
  - Deal with heterogeneity using virtual nodes and replica diversion
- Importance:
  - Graceful degradation under high utilization
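
Replicating at the root's leaf set amounts to picking the l node IDs numerically closest to the file's ID on the circle. This sketch assumes a 128-bit circular ID space and file IDs made by truncating SHA-1 to 128 bits. PAST's actual fileId derivation, replica diversion, and caching are not modelled, and `replica_set` is a hypothetical helper name.

```python
import hashlib

BITS = 128
SPACE = 2 ** BITS

def file_id(content: bytes) -> int:
    """h = H(file), truncated to the 128-bit id space (assumed)."""
    return int.from_bytes(hashlib.sha1(content).digest()[:16], "big")

def circular_distance(a: int, b: int) -> int:
    d = abs(a - b) % SPACE
    return min(d, SPACE - d)

def replica_set(h: int, node_ids, l: int):
    """The l nodes whose ids are numerically closest to h on the circle (ties by id)."""
    return sorted(node_ids, key=lambda n: (circular_distance(n, h), n))[:l]

nodes = [10, 20, 30, SPACE - 5]
print(replica_set(2, nodes, 2))          # [SPACE - 5, 10]: closeness wraps around the circle
print(replica_set(file_id(b"index.html"), nodes, 3))
```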

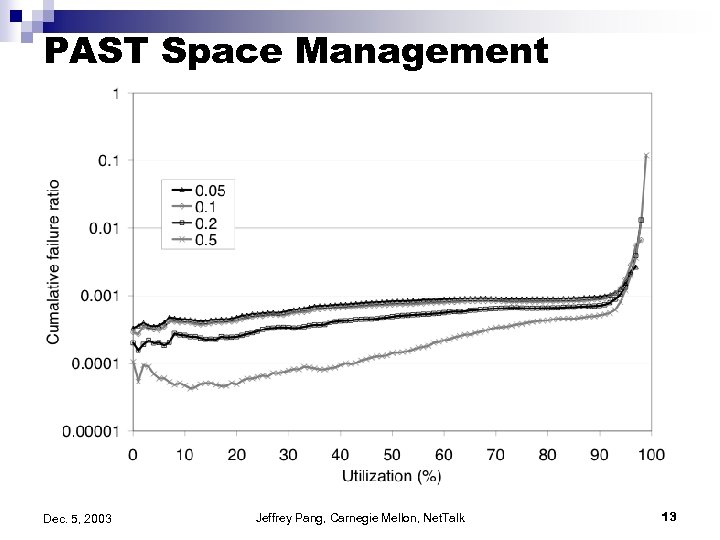

PAST Space Management

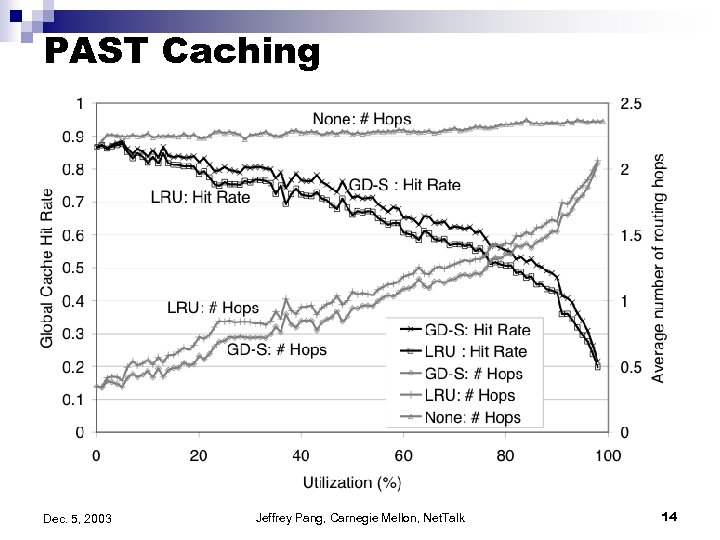

PAST Caching

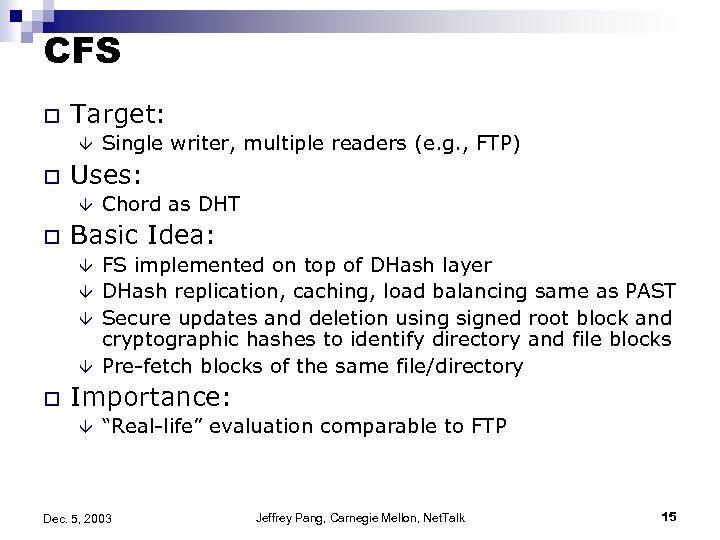

CFS
- Target:
  - Single writer, multiple readers (e.g., FTP)
- Uses:
  - Chord as DHT
- Basic idea:
  - FS implemented on top of the DHash layer
  - DHash replication, caching, and load balancing are the same as in PAST
  - Secure updates and deletion using a signed root block and cryptographic hashes to identify directory and file blocks
  - Pre-fetch blocks of the same file/directory
- Importance:
  - "Real-life" evaluation comparable to FTP

CFS File System Structure

(Figure: the root block, carrying the public key and a signature, points to directory block D via H(D); D points to file block F via H(F); F points to data blocks B1 and B2 via H(B1) and H(B2).)
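
The chain of hashes in the figure can be sketched as a small content-addressed tree. Each block is stored under the hash of its own bytes, so a reader who trusts the root can check every block it fetches. This sketch makes several substitutions. SHA-1 is used for the hashes. A plain dict stands in for DHash. The block encoding (newline-separated hashes, `name hash` directory entries) is hypothetical. The signature on the root block is omitted; in CFS the root block is signed with the publisher's private key.

```python
import hashlib

store = {}   # stand-in for DHash: block id -> bytes

def put_block(data: bytes) -> str:
    bid = hashlib.sha1(data).hexdigest()
    store[bid] = data
    return bid

def get_block(bid: str) -> bytes:
    data = store[bid]
    if hashlib.sha1(data).hexdigest() != bid:    # self-certifying: content must hash to its id
        raise ValueError("block failed integrity check")
    return data

# Build bottom-up: data blocks -> file block -> directory block -> root
b1 = put_block(b"first half of the file ")
b2 = put_block(b"second half of the file")
f = put_block("\n".join([b1, b2]).encode())      # file block lists H(B1), H(B2)
d = put_block(f"notes.txt {f}".encode())         # directory block maps name -> H(F)
root = {"dir": d}                                # root block (signature omitted in this sketch)

def read_file(root, name):
    for line in get_block(root["dir"]).decode().splitlines():
        entry, fid = line.split(" ")
        if entry == name:
            return b"".join(get_block(bid) for bid in get_block(fid).decode().split("\n"))
    raise FileNotFoundError(name)

print(read_file(root, "notes.txt"))
```

Because only the root is mutable and signed, an update rewrites the changed blocks and the path of hashes above them, then re-signs the root.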

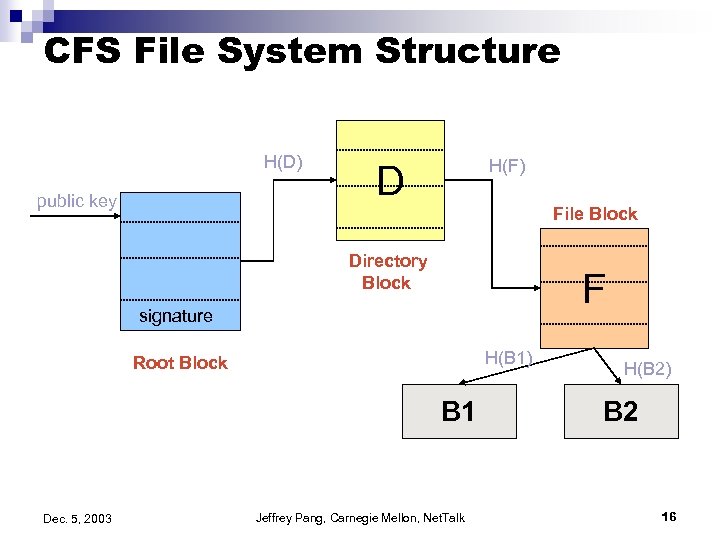

CFS "Real-Life" Evaluation

(Figure panels: CFS; pair-wise TCP.)

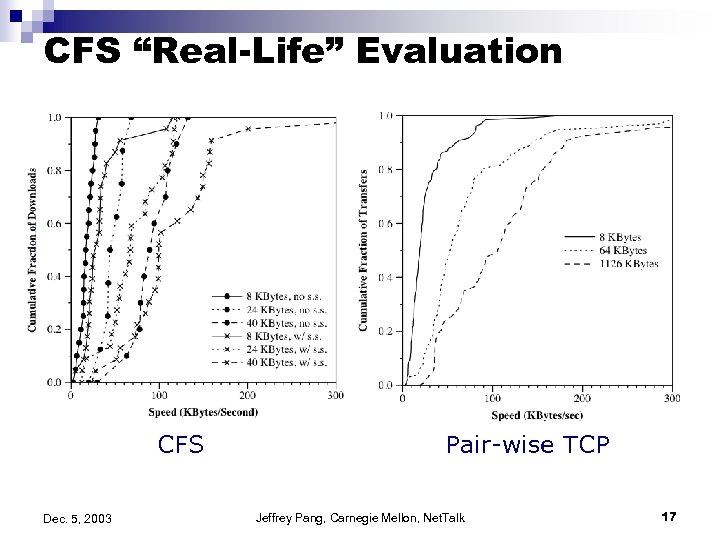

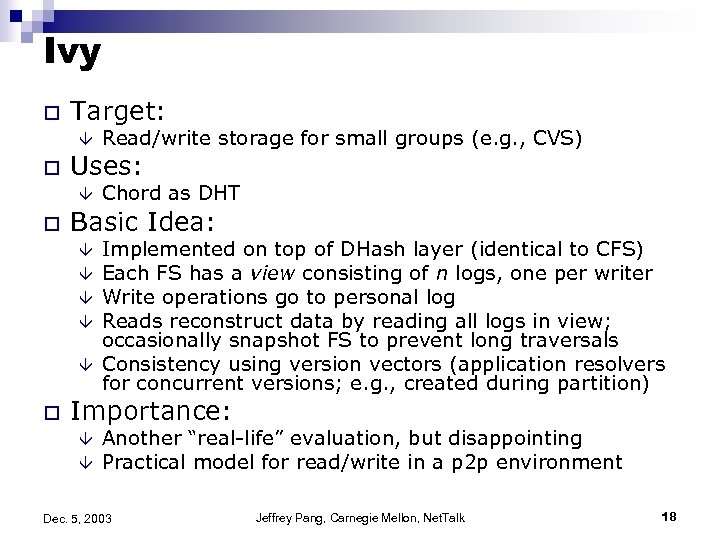

Ivy
- Target:
  - Read/write storage for small groups (e.g., CVS)
- Uses:
  - Chord as DHT
- Basic idea:
  - Implemented on top of the DHash layer (identical to CFS)
  - Each FS has a view consisting of n logs, one per writer
  - Write operations go to the writer's personal log
  - Reads reconstruct data by reading all logs in the view; the FS is occasionally snapshotted to prevent long traversals
  - Consistency using version vectors (application resolvers handle concurrent versions, e.g., those created during a partition)
- Importance:
  - Another "real-life" evaluation, but disappointing
  - Practical model for read/write in a P2P environment
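
Version vectors can be used to detect concurrent updates in just a few lines. In this sketch, a vector maps each writer (one log per writer) to the number of that writer's log records it reflects. Vectors are plain dicts here, an illustrative choice rather than Ivy's actual record format.

```python
def compare(a: dict, b: dict) -> str:
    """Return 'equal', 'before', 'after', or 'concurrent' for version vectors a and b."""
    writers = set(a) | set(b)
    a_le_b = all(a.get(w, 0) <= b.get(w, 0) for w in writers)
    b_le_a = all(b.get(w, 0) <= a.get(w, 0) for w in writers)
    if a_le_b and b_le_a:
        return "equal"
    if a_le_b:
        return "before"
    if b_le_a:
        return "after"
    return "concurrent"   # e.g., both sides wrote during a partition: hand off to a resolver

def merge(a: dict, b: dict) -> dict:
    """Element-wise maximum: the vector that has seen everything a and b have seen."""
    return {w: max(a.get(w, 0), b.get(w, 0)) for w in set(a) | set(b)}

print(compare({"alice": 2, "bob": 1}, {"alice": 3, "bob": 1}))   # before
print(compare({"alice": 3, "bob": 1}, {"alice": 2, "bob": 2}))   # concurrent
```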

Ivy Log Structure

(Figure: a view made of Alice's and Bob's logs; each log head points to a chain of records such as create, write, link, ex-create, and delete.)

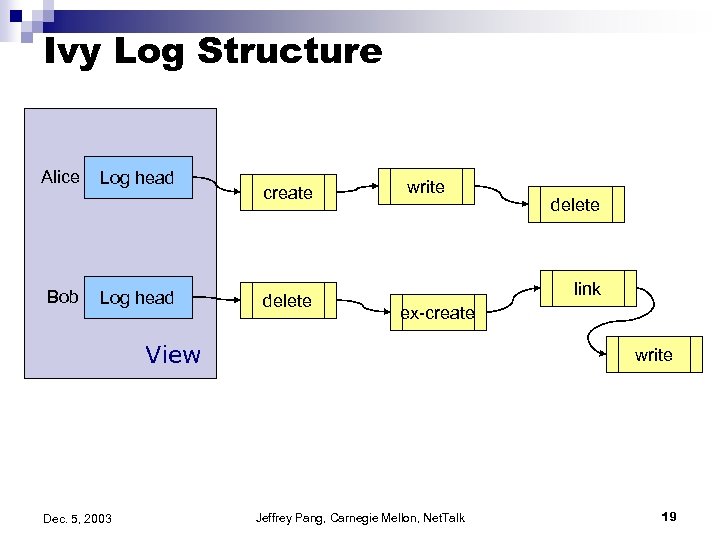

Ivy Wide-Area Performance

(Figure panels: Modified Andrew Benchmark; CVS.)

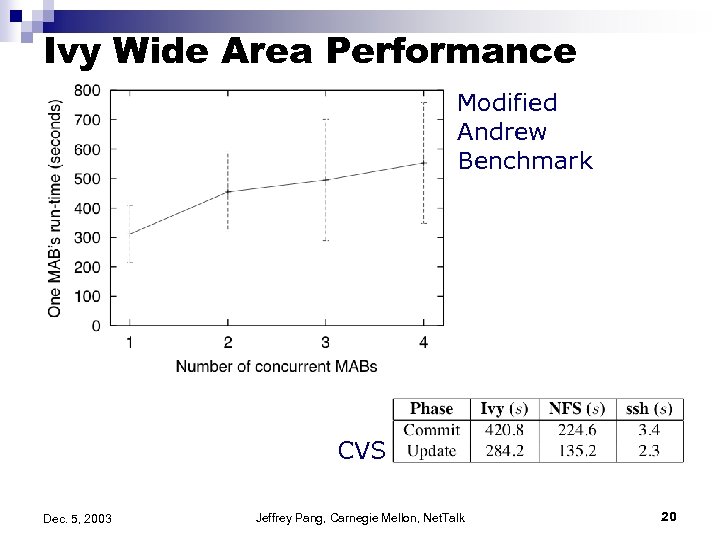

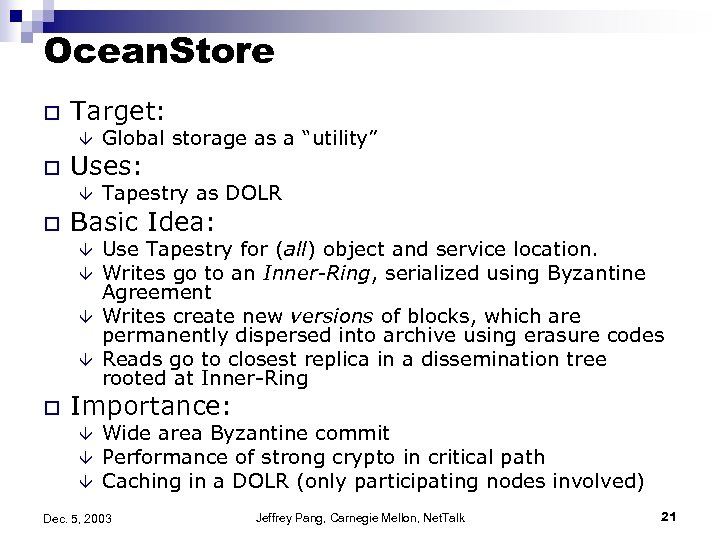

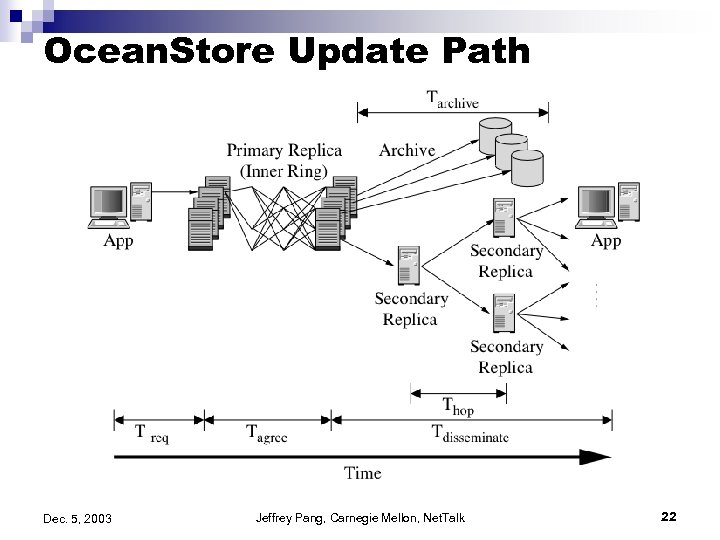

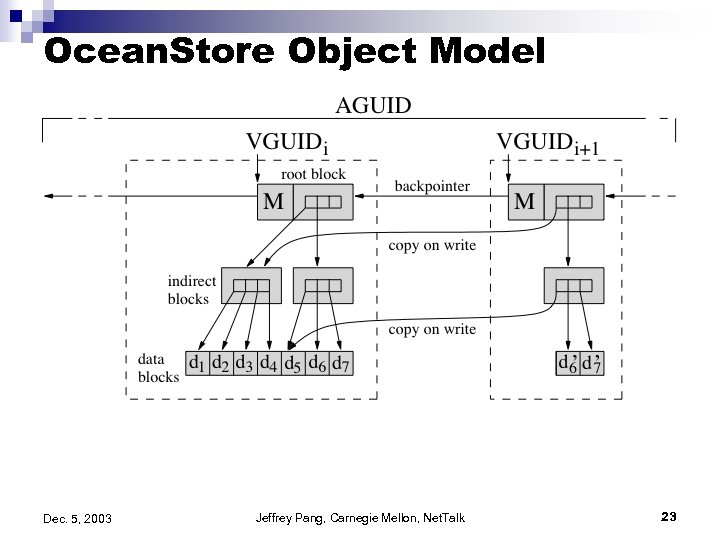

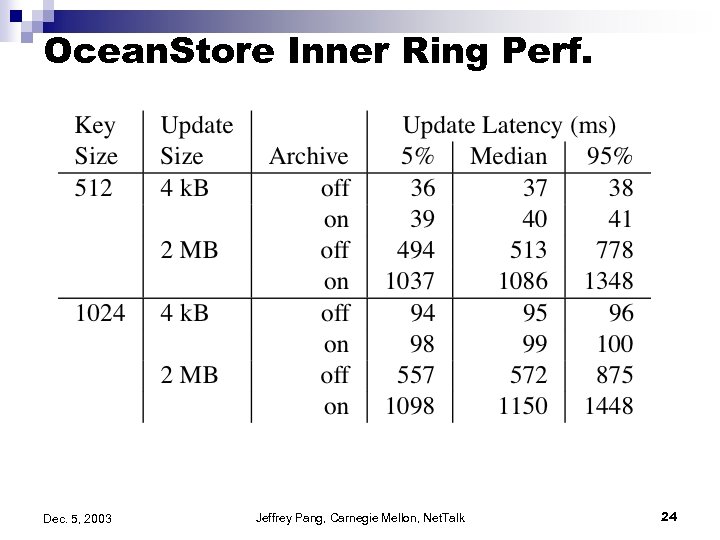

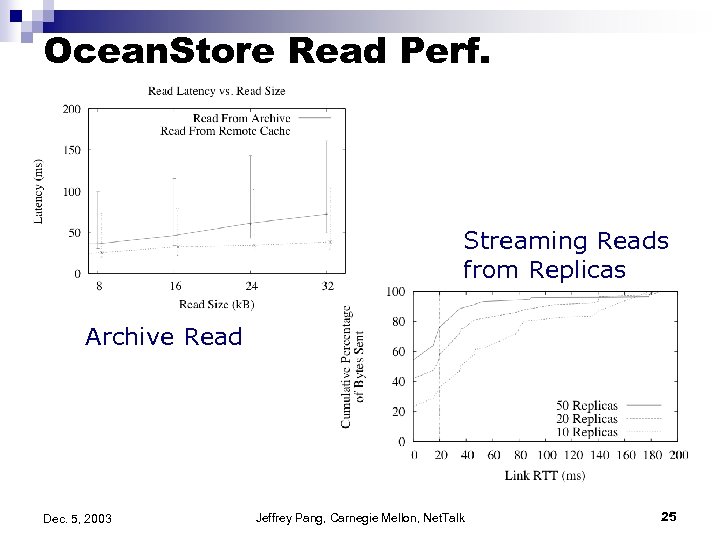

OceanStore
- Target:
  - Global storage as a "utility"
- Uses:
  - Tapestry as DOLR
- Basic idea:
  - Use Tapestry for (all) object and service location
  - Writes go to an Inner Ring and are serialized using Byzantine agreement
  - Writes create new versions of blocks, which are permanently dispersed into the archive using erasure codes
  - Reads go to the closest replica in a dissemination tree rooted at the Inner Ring
- Importance:
  - Wide-area Byzantine commit
  - Performance of strong crypto in the critical path
  - Caching in a DOLR (only participating nodes involved)
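
The simplest erasure code shows what dispersing a block with such a code buys. The block is split into k data fragments, and one XOR parity fragment is added; any single lost fragment can then be rebuilt. This is a stand-in for illustration only. OceanStore's archive uses codes that tolerate the loss of many more fragments, and the function names here are hypothetical.

```python
from functools import reduce

def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))

def encode(block: bytes, k: int):
    """Split block into k equal fragments (zero-padded) and append one XOR parity fragment."""
    size = -(-len(block) // k)                     # ceiling division
    padded = block.ljust(size * k, b"\0")
    frags = [padded[i * size:(i + 1) * size] for i in range(k)]
    return frags + [reduce(xor_bytes, frags)]

def decode(frags, length: int) -> bytes:
    """Rebuild the block when at most one fragment is missing (given as None)."""
    missing = [i for i, f in enumerate(frags) if f is None]
    if len(missing) > 1:
        raise ValueError("single-parity code cannot recover more than one lost fragment")
    if missing:
        frags = list(frags)
        # XOR of all surviving fragments (data + parity) equals the missing one
        frags[missing[0]] = reduce(xor_bytes, [f for f in frags if f is not None])
    return b"".join(frags[:-1])[:length]

block = b"OceanStore archival fragment demo"
frags = encode(block, 4)           # 4 data fragments + 1 parity fragment
frags[2] = None                    # one storage server is lost
print(decode(frags, len(block)))
```

Fragments are spread across many servers, so losing a few servers still leaves enough fragments to rebuild the block. Stronger codes raise that threshold at the same storage overhead.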

OceanStore Update Path

OceanStore Object Model

OceanStore Inner Ring Performance

OceanStore Read Performance

(Figure panels: streaming reads from replicas; archive read.)

Overview of Talk
- Review of DHTs
- DHT vs DOLR
- Storage
- Multicast
- Database
- Misc.
- API and Infrastructure Proposals

Multicast Applications
- Bayeux [Zhuang, et al., NOSSDAV 01]
  - Simple single tree per source on DOLR
- Scribe [Rowstron, et al., NGC 01; INFOCOM 03]
  - Simple single tree per source on DHT
- SplitStream [Castro, et al., SOSP 03]
  - Multiple disjoint trees per source
- i3 [Stoica, et al., SIGCOMM 02]
  - Internet Indirection Infrastructure (mobility, {multi, any}cast, service composition)

Bayeux
- Target:
  - Multimedia streaming
- Uses:
  - Tapestry as DOLR
- Basic idea:
  - Advertise a session with a fake file in Tapestry
  - Clients join by routing a message to the source id (after learning of it by looking up the session)
  - All intermediate routers on the path join the tree
  - Support multiple roots by having multiple sources advertise a session (lookups converge to the "closest")
  - Take advantage of routing redundancy to provide the best performance (shortest link) / tolerate faults (predict link reliability)
- Importance:
  - Relatively simple (no "frills") multicast on a DOLR

Scribe
- Target:
  - Event notification / pub-sub systems (e.g., IM)
- Uses:
  - Pastry or CAN as DHTs
- Basic idea:
  - Publications are routed to the root in Pastry
  - Recursively forwarded to all children in the tree
  - Subscriptions cause all nodes on the path to the root to join the tree
  - When your parent dies, repair by routing to a new parent
  - More complex ways to load balance (e.g., make children into grandchildren) are described in a later JSAC article
- Importance:
  - Another simple multicast on a DHT
  - Building block for more complex applications
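
The join-along-the-route rule is what builds the tree. Each subscriber routes toward the group's root, and every hop becomes the parent of the previous one. The join stops once it reaches a node already in the tree. This sketch assumes 4-hex-digit node IDs and a simplified prefix router. In that router, each hop shares a strictly longer prefix with the group ID, and the root is the node with the longest shared prefix, with ties broken by numeric closeness. It is not Pastry's routing-table logic, and parent repair and load balancing are omitted. Bayeux grows its trees the same way in spirit, routing toward the session's source instead.

```python
def shared_prefix(a: str, b: str) -> int:
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n

def root_of(key, nodes):
    """Node with the longest shared prefix; ties go to the numerically closest id."""
    return min(nodes, key=lambda n: (-shared_prefix(n, key), abs(int(n, 16) - int(key, 16)), n))

def route(src, key, nodes):
    """Simplified prefix routing: every hop shares a strictly longer prefix with key."""
    root, cur, path = root_of(key, nodes), src, [src]
    while cur != root:
        p = shared_prefix(cur, key)
        better = [n for n in nodes if shared_prefix(n, key) > p]
        cur = min(better, key=lambda n: (shared_prefix(n, key), n)) if better else root
        path.append(cur)
    return path

class ScribeGroup:
    def __init__(self, group_id, nodes):
        self.group_id, self.nodes = group_id, nodes
        self.root = root_of(group_id, nodes)
        self.children = {self.root: set()}     # tree membership: node -> set of children
        self.subscribers = set()

    def subscribe(self, node):
        self.subscribers.add(node)
        path = route(node, self.group_id, self.nodes)
        for child, parent in zip(path, path[1:]):
            already_in_tree = parent in self.children
            self.children.setdefault(child, set())
            self.children.setdefault(parent, set()).add(child)
            if already_in_tree:
                break                           # the rest of the path is already grafted

    def publish(self, msg):
        """Forward from the root down the tree; return the subscribers that would get msg."""
        delivered, stack = [], [self.root]
        while stack:
            node = stack.pop()
            if node in self.subscribers:
                delivered.append(node)
            stack.extend(self.children.get(node, ()))
        return delivered

nodes = ["1a2b", "247b", "9ae2", "9a76", "9a70", "9f10", "c3d4"]
group = ScribeGroup("9a7f", nodes)
for n in ["247b", "1a2b", "c3d4"]:
    group.subscribe(n)
print(group.root)                      # 9a76
print(sorted(group.publish("hello")))  # ['1a2b', '247b', 'c3d4']
```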

SplitStream
- Target:
  - P2P streaming / bulk file transfer
- Uses:
  - Pastry
- Basic idea:
  - Split content into k stripes
  - Construct k interior-node-disjoint Scribe trees
  - Distribute one stripe per tree
  - Receivers choose the number of stripes to receive (e.g., trade off quality for inbound capacity)
  - Limit the out-degree of nodes with join heuristics (later)
- Importance:
  - All nodes share in forwarding of data (w.h.p.)
  - Nifty use of Pastry ids to construct the forest (next slide)

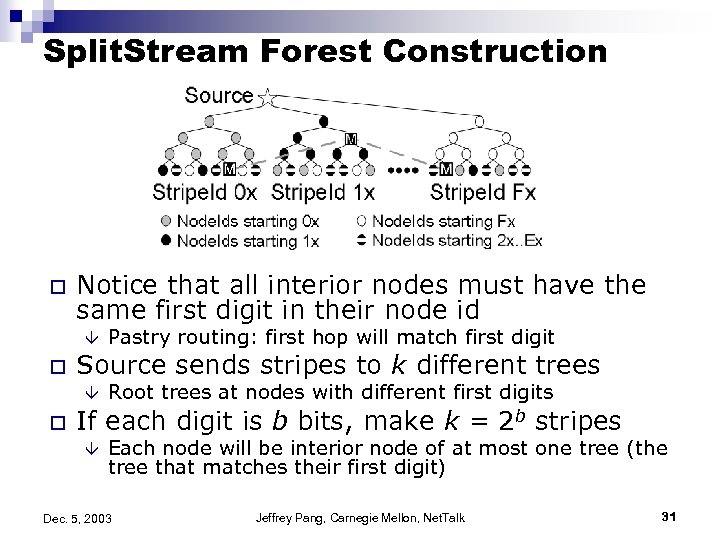

SplitStream Forest Construction
- Notice that all interior nodes of a stripe's tree must have the same first digit in their node id as the stripe id
  - Pastry routing: the first hop will match the first digit
- The source sends stripes to k different trees
  - Root the trees at nodes with different first digits
- If each digit is b bits, make $k = 2^b$ stripes
  - Each node will be an interior node of at most one tree (the tree that matches its first digit)
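
The disjointness argument can be checked with a small simulation. It assumes b = 4, so ids are hex strings, and uses 4-digit ids with randomly drawn nodes plus one node per possible first digit. The stream's key and the stripe-id rule (stripe i is the stream key with its first digit replaced by i) are hypothetical. The simplified prefix router repeats the one sketched for Scribe and is not Pastry's real routing table. Every node subscribes to every stripe, and the code collects the nodes that forward each stripe.

```python
import random

B = 4                   # bits per digit (the slide's b); 4 gives hex digits
K = 2 ** B              # k = 2^b stripes, one per possible first digit
ID_DIGITS = 4           # hex digits per id (assumed, kept short)

def prefix_len(a, b):
    n = 0
    while n < len(a) and a[n] == b[n]:
        n += 1
    return n

def root_of(key, nodes):
    return min(nodes, key=lambda n: (-prefix_len(n, key), abs(int(n, 16) - int(key, 16)), n))

def route(src, key, nodes):
    """Simplified prefix routing: each hop shares a strictly longer prefix with key."""
    root, cur, path = root_of(key, nodes), src, [src]
    while cur != root:
        p = prefix_len(cur, key)
        better = [n for n in nodes if prefix_len(n, key) > p]
        cur = min(better, key=lambda n: (prefix_len(n, key), n)) if better else root
        path.append(cur)
    return path

def interior_nodes(stripe_id, nodes):
    """Nodes that forward the stripe to someone when every node subscribes to it."""
    interior = set()
    for n in nodes:
        interior.update(route(n, stripe_id, nodes)[1:])
    return interior

rng = random.Random(7)
nodes = sorted({format(rng.randrange(16 ** ID_DIGITS), f"0{ID_DIGITS}x") for _ in range(60)}
               | {format(d, "x") + "0" * (ID_DIGITS - 1) for d in range(K)})  # every first digit present
stream_key = "a3c7"     # hypothetical hash of the stream's name
stripe_ids = [format(i, "x") + stream_key[1:] for i in range(K)]   # stripe i's id starts with digit i
forest = {s: interior_nodes(s, nodes) for s in stripe_ids}

for s, interior in forest.items():
    print(s, len(interior))
```

Every hop after the first must share at least the stripe's first digit. A node therefore forwards only in the one tree whose stripe id starts with its own first digit, and is a leaf everywhere else.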

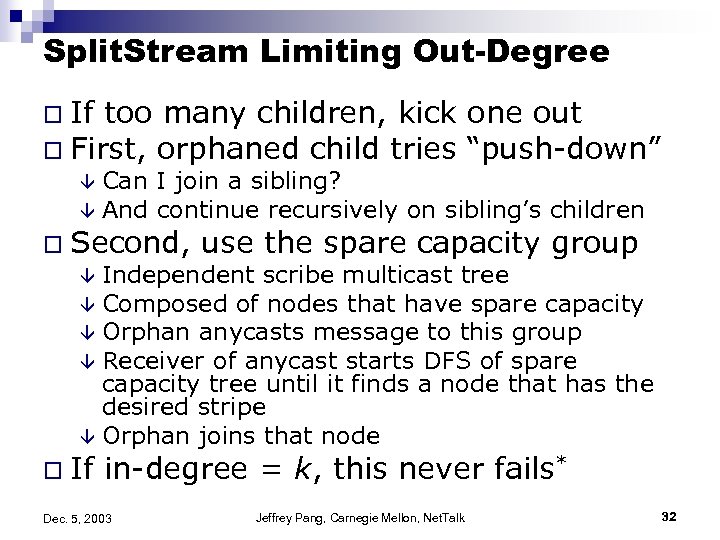

SplitStream Limiting Out-Degree
- If a node has too many children, it kicks one out
- First, the orphaned child tries "push-down"
  - Can I join a sibling?
  - And continue recursively on the sibling's children
- Second, use the spare capacity group
  - An independent Scribe multicast tree
  - Composed of nodes that have spare capacity
  - The orphan anycasts a message to this group
  - The receiver of the anycast starts a DFS of the spare capacity tree until it finds a node that has the desired stripe
  - The orphan joins that node
- If in-degree = k, this never fails*
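
The two-stage recovery can be sketched over plain dicts. The first stage is a breadth-first push-down through the orphan's former siblings and their descendants. The second is a DFS of the spare capacity tree, starting at the node that received the anycast. This is a simplified illustration under stated assumptions. The tree layout, capacities, and all function names are hypothetical. The real protocol's rules for which child to evict and which sibling to try first are not modelled, and cycle checks go no further than excluding the orphan itself.

```python
from collections import deque

def push_down(children, capacity, parent, orphan):
    """Offer the orphan to its former siblings, then their descendants (breadth-first)."""
    queue = deque(c for c in children.get(parent, []) if c != orphan)
    while queue:
        cand = queue.popleft()
        if len(children.get(cand, [])) < capacity.get(cand, 0):
            children.setdefault(cand, []).append(orphan)
            return cand
        queue.extend(children.get(cand, []))
    return None

def spare_capacity_dfs(spare_children, start, stripes_of, children, capacity, stripe, orphan):
    """Depth-first search of the spare capacity tree for a node with `stripe` and a free slot."""
    stack = [start]
    while stack:
        cand = stack.pop()
        if (cand != orphan and stripe in stripes_of.get(cand, set())
                and len(children.get(cand, [])) < capacity.get(cand, 0)):
            return cand
        stack.extend(reversed(spare_children.get(cand, [])))
    return None

def reattach(children, capacity, parent, orphan, stripe, spare_children, spare_root, stripes_of):
    """`parent` exceeded its out-degree limit and kicked `orphan` out; find it a new parent."""
    children[parent].remove(orphan)
    new_parent = push_down(children, capacity, parent, orphan)
    if new_parent is None:
        new_parent = spare_capacity_dfs(spare_children, spare_root, stripes_of,
                                        children, capacity, stripe, orphan)
        if new_parent is not None:
            children.setdefault(new_parent, []).append(orphan)
    return new_parent

# Hypothetical stripe tree: P may forward to 2 children but currently has 3.
children = {"P": ["A", "B", "O"], "A": ["A1"]}
capacity = {"P": 2, "A": 1, "B": 0, "A1": 0, "S": 0, "S1": 2}
spare_children = {"S": ["S1"]}            # spare capacity group, rooted at S
stripes_of = {"S": {0}, "S1": {0}}        # stripes each spare-capacity node receives
print(reattach(children, capacity, "P", "O", 0, spare_children, "S", stripes_of))  # S1
```

Here push-down fails because A, B, and A1 are all full, so the orphan falls back to the spare capacity group and lands on S1.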

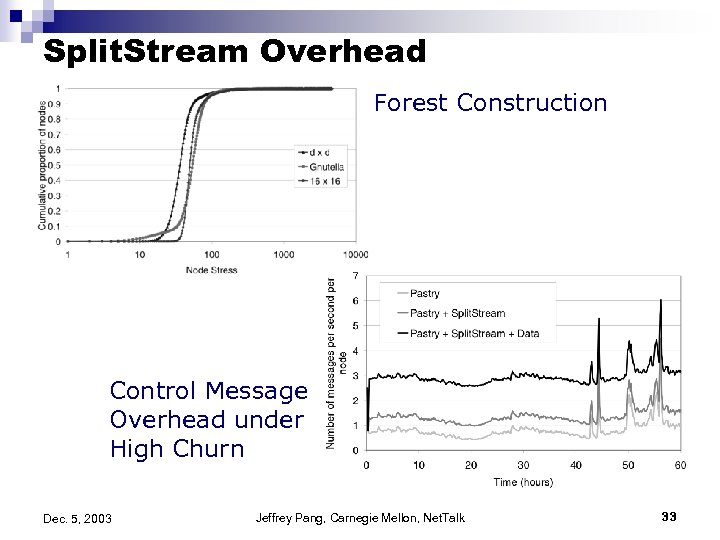

SplitStream Overhead

(Figure panels: forest construction; control message overhead under high churn.)

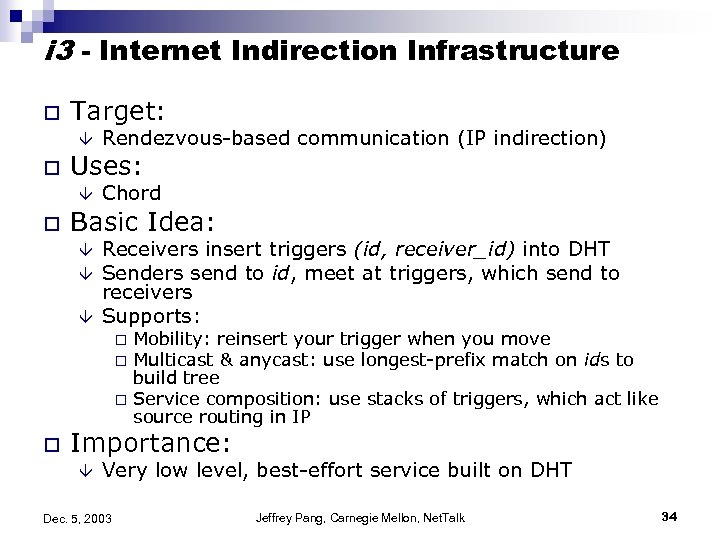

i3 - Internet Indirection Infrastructure
- Target:
  - Rendezvous-based communication (IP indirection)
- Uses:
  - Chord
- Basic idea:
  - Receivers insert triggers (id, receiver_id) into the DHT
  - Senders send to id; packets meet the triggers, which forward them to the receivers
  - Supports:
    - Mobility: reinsert your trigger when you move
    - Multicast & anycast: use longest-prefix match on ids to build trees
    - Service composition: use stacks of triggers, which act like source routing in IP
- Importance:
  - Very low-level, best-effort service built on a DHT
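
Trigger matching can be sketched in a single process. The sketch makes simplifying assumptions. Several triggers with the same id give multicast. When no trigger id matches exactly, the trigger with the longest shared prefix wins, which gives anycast, but only if it shares at least a hypothetical `MIN_MATCH` characters. Trigger storage on Chord nodes and trigger stacks are not modelled. `ToyI3` and its method names are illustrative, not i3's actual API.

```python
from collections import defaultdict

MIN_MATCH = 4   # hypothetical: minimum shared prefix (in characters) for a trigger to match

def common_prefix(a: str, b: str) -> int:
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n

class ToyI3:
    def __init__(self):
        self.triggers = defaultdict(set)      # trigger id -> receiver addresses

    def insert_trigger(self, tid, receiver):
        self.triggers[tid].add(receiver)

    def remove_trigger(self, tid, receiver):
        self.triggers[tid].discard(receiver)
        if not self.triggers[tid]:
            del self.triggers[tid]

    def send(self, pid):
        """Return the receivers a packet addressed to pid is forwarded to."""
        if pid in self.triggers:
            return sorted(self.triggers[pid])          # identical ids: every receiver gets a copy
        best = max(self.triggers, key=lambda t: (common_prefix(t, pid), t), default=None)
        if best is None or common_prefix(best, pid) < MIN_MATCH:
            return []                                  # no trigger matches: packet is dropped
        return [min(self.triggers[best])]              # anycast: closest-matching trigger wins

net = ToyI3()
net.insert_trigger("grp-0001", "10.0.0.1")     # two receivers share one id: multicast
net.insert_trigger("grp-0001", "10.0.0.2")
net.insert_trigger("svc-a100", "10.0.1.1")     # two servers with nearby ids: anycast
net.insert_trigger("svc-a1ff", "10.0.1.2")
print(net.send("grp-0001"))                    # ['10.0.0.1', '10.0.0.2']
print(net.send("svc-a1f0"))                    # ['10.0.1.2']

# Mobility: the second server moves, so it reinserts its trigger with the new address.
net.remove_trigger("svc-a1ff", "10.0.1.2")
net.insert_trigger("svc-a1ff", "10.9.9.9")
```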

Overview of Talk
- Review of DHTs
- DHT vs DOLR
- Storage
- Multicast
- Database
- Misc.
- API and Infrastructure Proposals

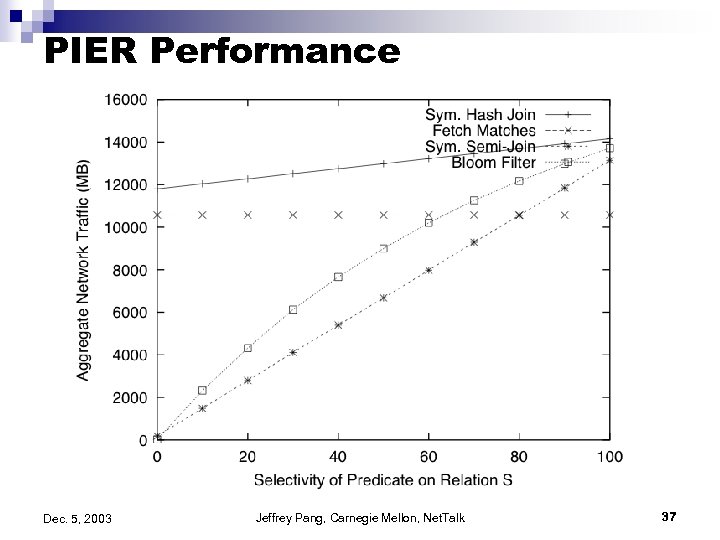

PIER [Huebsch, et al., VLDB 03]
- Target:
  - In situ distributed querying (e.g., network monitoring)
- Uses:
  - CAN as DHT
- Basic idea:
  - Tables named by (namespace, resourceID); e.g., (application, primary_key)
  - Store tables in the DHT keyed by this pair
  - Look up tuples by routing to a table's key(s) and having the end nodes do an lscan for you
  - Join $N_R$ and $N_S$ by creating a new namespace $N_Q$ in the DHT and rehashing tuples to $N_Q$, which determines the matches
- Importance:
  - Another simple multicast on a DHT
  - Building block for more complex applications
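
The rehash join can be sketched on a toy DHT in which each of `NUM_NODES` nodes keeps a list of (namespace, resourceID, tuple) entries. This is a single-process illustration of the idea on the slide. It assumes SHA-1 placement and dict-shaped tuples, and replaces CAN routing with direct indexing. It is not PIER's code or query plan, and the helper names are hypothetical.

```python
import hashlib
from collections import defaultdict

NUM_NODES = 8   # hypothetical overlay size

def node_for(namespace, resource_id):
    """Place an item by hashing its (namespace, resourceID) pair."""
    h = hashlib.sha1(f"{namespace}/{resource_id}".encode()).digest()
    return int.from_bytes(h[:4], "big") % NUM_NODES

def put(dht, namespace, resource_id, tup):
    dht[node_for(namespace, resource_id)].append((namespace, resource_id, tup))

def lscan(dht, node, namespace):
    """Local scan: the tuples of one namespace stored on one node."""
    return [t for ns, _, t in dht[node] if ns == namespace]

def rehash_join(dht, ns_r, ns_s, attr_r, attr_s, ns_q="NQ"):
    # 1. Every node rehashes its local R and S tuples into N_Q, keyed by the join attribute.
    for node in range(NUM_NODES):
        for t in lscan(dht, node, ns_r):
            put(dht, ns_q, t[attr_r], ("R", t))
        for t in lscan(dht, node, ns_s):
            put(dht, ns_q, t[attr_s], ("S", t))
    # 2. Matching tuples now live on the same node, which joins them locally.
    results = []
    for node in range(NUM_NODES):
        groups = defaultdict(lambda: ([], []))
        for ns, rid, payload in dht[node]:
            if ns == ns_q:
                side, t = payload
                groups[rid][0 if side == "R" else 1].append(t)
        for r_tuples, s_tuples in groups.values():
            results.extend((r, s) for r in r_tuples for s in s_tuples)
    return results

dht = defaultdict(list)
for e in [{"name": "alice", "dept": 1}, {"name": "bob", "dept": 2}, {"name": "carol", "dept": 1}]:
    put(dht, "emp", e["name"], e)                 # stored by primary key
for d in [{"dept": 1, "dname": "eng"}, {"dept": 2, "dname": "ops"}, {"dept": 3, "dname": "hr"}]:
    put(dht, "dept", d["dept"], d)
pairs = rehash_join(dht, "emp", "dept", "dept", "dept")
print(sorted((r["name"], s["dname"]) for r, s in pairs))
```

The rehash step is what makes this work when the tables are stored by primary key. Tuples that should join usually sit on different nodes until they are re-keyed by the join attribute.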

PIER Performance

Overview of Talk
- Review of DHTs
- DHT vs DOLR
- Storage
- Multicast
- Database
- Misc.
- API and Infrastructure Proposals

Misc. Applications
- POST [Mislove, et al., HotOS 03]
  - Collaborative applications
- Approximate Object Location [Zhou, et al., Middleware 03]
  - Collaborative spam filtering

POST
- Target:
  - Toolbox for collaborative apps (e.g., email, IM)
- Uses:
  - Pastry as DHT
- Basic idea:
  - Use PAST as the storage substrate
  - Use Scribe as the notification system
  - Assume a certificate authority for assigning user IDs and keys
  - Example: email
    - Insert new mail into PAST (encrypted)
    - Notify the recipient using Scribe (delegate if not online)
- Importance:
  - Use second-level systems as a substrate for more complex applications (see also OceanStore: email, NFS, web cache)
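
The email example composes the two substrates. The message body goes into a content-addressed store (PAST's role), and only a short notification travels over a per-user channel (Scribe's role). The sketch replaces both with in-memory stand-ins and treats the mail as already-encrypted bytes, so encryption and certificates are omitted. It models "delegate if not online" as a hypothetical delegate that queues notifications until the recipient returns. All class and function names are illustrative.

```python
import hashlib
from collections import defaultdict

class ContentStore:
    """Stand-in for PAST: immutable blocks stored under the hash of their content."""
    def __init__(self):
        self.blocks = {}

    def insert(self, data: bytes) -> str:
        key = hashlib.sha1(data).hexdigest()
        self.blocks[key] = data
        return key

    def lookup(self, key: str) -> bytes:
        return self.blocks[key]

class Notifier:
    """Stand-in for a per-user Scribe channel plus a delegate for offline users."""
    def __init__(self):
        self.online = set()
        self.inbox = defaultdict(list)      # notifications the user has received
        self.delegate = defaultdict(list)   # notifications held while the user is offline

    def notify(self, user, mail_key):
        (self.inbox if user in self.online else self.delegate)[user].append(mail_key)

    def come_online(self, user):
        self.online.add(user)
        self.inbox[user].extend(self.delegate.pop(user, []))

def send_mail(store, notifier, recipient, ciphertext: bytes) -> str:
    key = store.insert(ciphertext)          # 1. insert the (encrypted) mail into the store
    notifier.notify(recipient, key)         # 2. tell the recipient where to find it
    return key

store, notifier = ContentStore(), Notifier()
k = send_mail(store, notifier, "bob", b"<ciphertext of the message>")   # bob is offline
notifier.come_online("bob")                 # the delegate hands over the queued notification
print([store.lookup(x) for x in notifier.inbox["bob"]])
```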

Approximate Object Location
- Target:
  - Collaborative filtering (e.g., spam detection)
- Uses:
  - Tapestry as DOLR
- Basic idea:
  - Calculate checksums of all strings of length L in the message; select N of them deterministically (the "feature" vector)
  - Two messages match if enough features match
  - To mark spam, insert my node into Tapestry keyed by each feature
  - To detect spam, look up its features; this returns the set of nodes that marked each feature as spam ("votes")
- Importance:
  - Scary, but looking more and more useful, e.g., given the recent DoS attacks on RBLs
  - They have a plug-in for Outlook that works
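
The feature-vector scheme can be sketched as follows. It makes several assumptions. CRC-32 serves as the checksum. L, N, and the vote `THRESHOLD` are hypothetical values. "Select N deterministically" is implemented as keeping the N smallest checksums, one possible rule that is not necessarily the paper's. A dict from feature to the set of marking nodes stands in for Tapestry publish/locate.

```python
import zlib
from collections import defaultdict

L = 10          # substring length (assumed)
N = 8           # features kept per message (assumed)
THRESHOLD = 6   # features that must carry votes to call a message spam (hypothetical)

def features(msg: str) -> set:
    """Checksums of every length-L substring; keep the N smallest (a deterministic selection)."""
    data = msg.encode()
    sums = {zlib.crc32(data[i:i + L]) for i in range(len(data) - L + 1)}
    return set(sorted(sums)[:N])

class SpamVotes:
    """Stand-in for Tapestry publish/locate: feature -> nodes that marked it as spam."""
    def __init__(self):
        self.pointers = defaultdict(set)

    def mark_spam(self, node, msg):
        for f in features(msg):
            self.pointers[f].add(node)          # "publish" this node under each feature

    def votes(self, msg) -> dict:
        return {f: self.pointers[f] for f in features(msg) if f in self.pointers}

    def is_spam(self, msg) -> bool:
        return len(self.votes(msg)) >= THRESHOLD

votes = SpamVotes()
spam = "Buy cheap watches now!!! Limited offer, click http://example.com/deal today only."
votes.mark_spam("node-17", spam)
print(votes.is_spam(spam))                                                      # True
print(votes.is_spam("Hi Jeff, the slides for the Friday talk are attached."))  # False
```

Selecting the smallest checksums tends to keep many of the same features when only a few characters of a message change. That is what lets "enough features match" catch near-duplicates as well as exact copies.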

Overview of Talk
- Review of DHTs
- DHT vs DOLR
- Storage
- Multicast
- Database
- Misc.
- API and Infrastructure Proposals

API & Infrastructure Proposals
- One Ring to Rule them All [Castro, et al., SIGOPS 02]
  - Bootstrapping multiple overlays
- Common P2P API [Dabek, et al., IPTPS 03]
  - DHT/DOLR as a library
- OpenHash [Karp, et al., IPTPS 04, submitted]
  - DHT as a service

One Ring to Rule them All
- Goal:
  - Bootstrap multiple overlays
- Basic idea:
  - Everyone joins a "universal" Pastry ring
  - This ring implements PAST, Scribe, and distributed search (see Harren, et al., IPTPS 02)
  - Advertise your overlay service in the search engine
  - Store your code and certificates in PAST
  - Upgrades are disseminated through Scribe
- Importance:
  - How one might use an overlay to manage overlays
  - Interesting title for a Microsoft paper :)

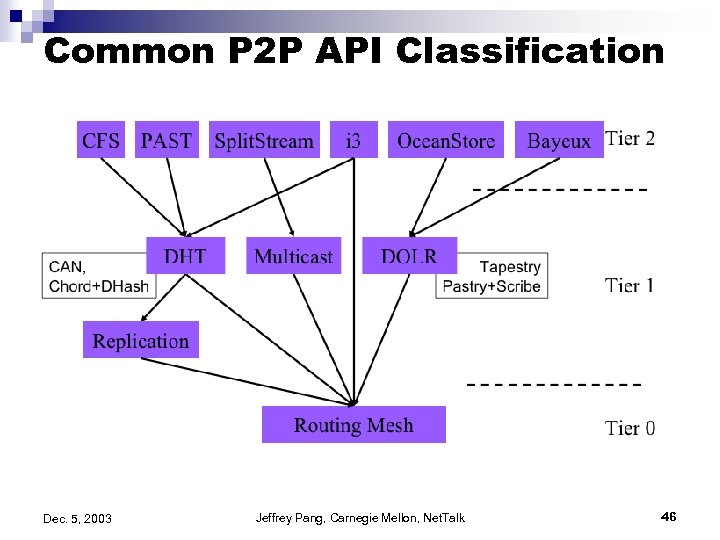

Common P2P API
- Goal:
  - Common API for structured overlays
- Basic idea:
  - First, described a common layer on which both DHT and DOLR could be implemented
  - Second, looked at the applications developed so far
    - See what abstractions can be derived
    - Described what DHT "library" functions might be
- Importance:
  - How much has to be exposed to application developers?
  - Any DHT app can be implemented on any DHT

Common P2P API Classification

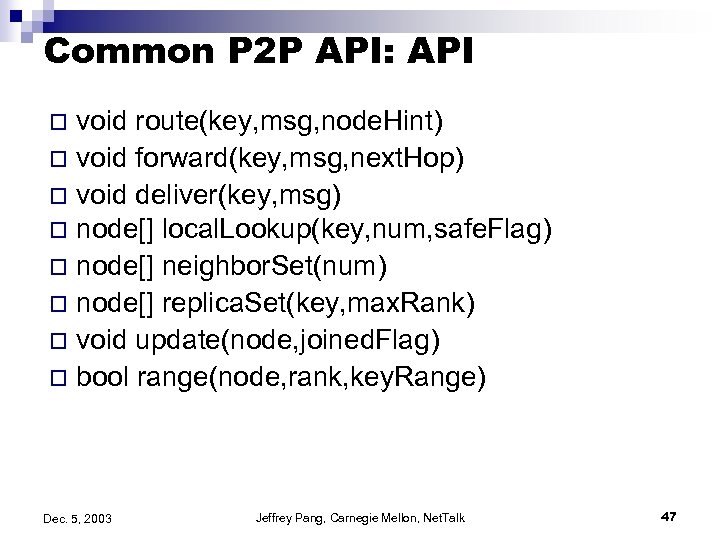

Common P2P API: API
- `void route(key, msg, nodeHint)`
- `void forward(key, msg, nextHop)`
- `void deliver(key, msg)`
- `node[] localLookup(key, num, safeFlag)`
- `node[] neighborSet(num)`
- `node[] replicaSet(key, maxRank)`
- `void update(node, joinedFlag)`
- `bool range(node, rank, keyRange)`
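
The split between downcalls (`route`, `replicaSet`) and upcalls (`forward` at each hop, `deliver` at the key's root) can be sketched in Python. The names are snake_case adaptations of the slide's signatures. `ToyRing`, `Tracer`, and the rule that `forward` returns a possibly modified `(msg, next_hop)` or `None` to stop are illustrative assumptions. The toy walks the ring one node at a time, giving O(n) hops rather than a real overlay's O(log n), and it ignores `node_hint`.

```python
from abc import ABC, abstractmethod

class Application(ABC):
    """Upcalls from the overlay into the application (the slide's forward and deliver)."""
    @abstractmethod
    def forward(self, key, msg, next_hop):
        """Called at each hop; return (msg, next_hop), possibly modified, or None to stop."""

    @abstractmethod
    def deliver(self, key, msg):
        """Called at the node responsible for key."""

class ToyRing:
    """Toy overlay offering route() and replica_set(); hops walk the ring one node at a time."""
    def __init__(self, node_ids, make_app):
        self.ids = sorted(node_ids)
        self.apps = {n: make_app(n) for n in self.ids}

    def _root(self, key):
        return next((n for n in self.ids if n >= key), self.ids[0])

    def route(self, key, msg, node_hint=None, source=None):
        # node_hint is accepted for signature compatibility but ignored by this toy
        cur = self.ids[0] if source is None else source
        root = self._root(key)
        while cur != root:
            nxt = self.ids[(self.ids.index(cur) + 1) % len(self.ids)]
            result = self.apps[cur].forward(key, msg, nxt)
            if result is None:
                return                      # the application chose to stop routing here
            msg, cur = result
        self.apps[root].deliver(key, msg)

    def replica_set(self, key, max_rank):
        """The root of key followed by its successors, up to max_rank nodes."""
        i = self.ids.index(self._root(key))
        return [self.ids[(i + r) % len(self.ids)] for r in range(min(max_rank, len(self.ids)))]

class Tracer(Application):
    def __init__(self, node_id, log):
        self.node_id, self.log = node_id, log

    def forward(self, key, msg, next_hop):
        self.log.append(("forward", self.node_id, next_hop))
        return msg, next_hop

    def deliver(self, key, msg):
        self.log.append(("deliver", self.node_id, msg))

log = []
ring = ToyRing([10, 50, 90, 200], lambda n: Tracer(n, log))
ring.route(60, "hello", source=10)
print(log)   # [('forward', 10, 50), ('forward', 50, 90), ('deliver', 90, 'hello')]
```

Multicast, anycast, and caching apps written against these upcalls need not know which overlay sits underneath. That is the point of the common API.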

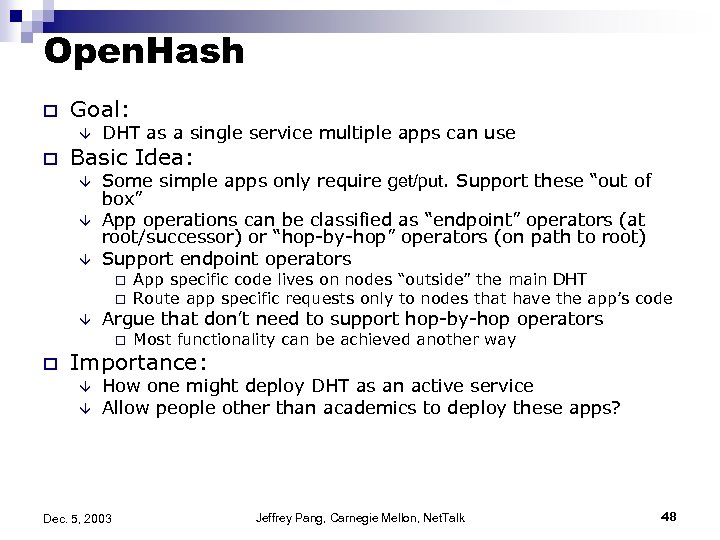

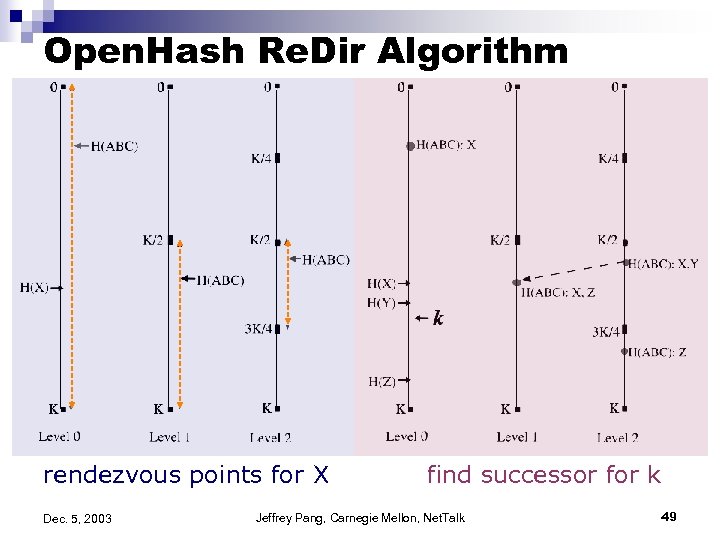

OpenHash
- Goal:
  - DHT as a single service that multiple apps can use
- Basic idea:
  - Some simple apps only require get/put; support these "out of the box"
  - App operations can be classified as "endpoint" operators (at the root/successor) or "hop-by-hop" operators (on the path to the root)
  - Support endpoint operators:
    - App-specific code lives on nodes "outside" the main DHT
    - Route app-specific requests only to nodes that have the app's code
  - Argue that hop-by-hop operators need not be supported:
    - Most functionality can be achieved another way
- Importance:
  - How one might deploy a DHT as an active service
  - Allow people other than academics to deploy these apps?

OpenHash ReDir Algorithm

(Figure labels: rendezvous points for X; find successor for k.)

Conclusion
- DHT apps are not going away
  - Are they still struggling to find a purpose?
  - Would any of these apps be better off not on top of a DHT?
- Using basic apps to build more complex ones
  - CFS and Ivy build on DHash
  - POST and One Ring build on PAST and Scribe
  - SplitStream builds on Scribe
- Starting to notice that no one besides researchers is using DHTs
  - 3+ years of research...
  - How do we make them useful to real people?
