- Number of slides: 22

Development of Indicators for Integrated System Validation

Leena Norros & Maaria Nuutinen & Paula Savioja

VTT Industrial Systems: Work, Organisation and System Usability Research

20.1.2005

Outline of the Presentation

- NPP control room modernizations
- Integrated System Validation (ISV)
- Performance indicators in validation
- Development of the evaluation framework for intelligent environments
- Conclusions

NPP Control Room Modernizations

- Current control and automation systems are being modernized
  - No changes to the degree of automation
- Technological rationale for the change
  - Maintenance costs
  - Lack of spare parts
  - Technological possibilities exist
- Different strategies adopted by the utilities
  - Some human centered design principles implicitly adopted in the projects
- Happening at the same time
  - OL 3
  - Generation change within the personnel of existing NPPs

NPP Control Room Modernizations: The Effective Changes

- Loss of individual data points and controls in the information panels and desks
  - The decrease in peripheral information: tacit knowledge, process feel and awareness
  - Spatial memory: memorability, skill based behavior, response times
  - Co-operation within the crew: communication, group awareness
- Adoption of individual information displays
  - Sequential use of information instead of parallel, "key hole effect": windows and dialogs might hide information
  - Active searching required: understanding of the available resources
  - Secondary tasks from manipulating the interface: possibility of confusion, response times
  - Higher abstraction level in the information: orientation, constraints and possibilities
- Adoption of large screen displays
  - Basis for shared co-operation: group SA
  - Higher abstraction level in the information

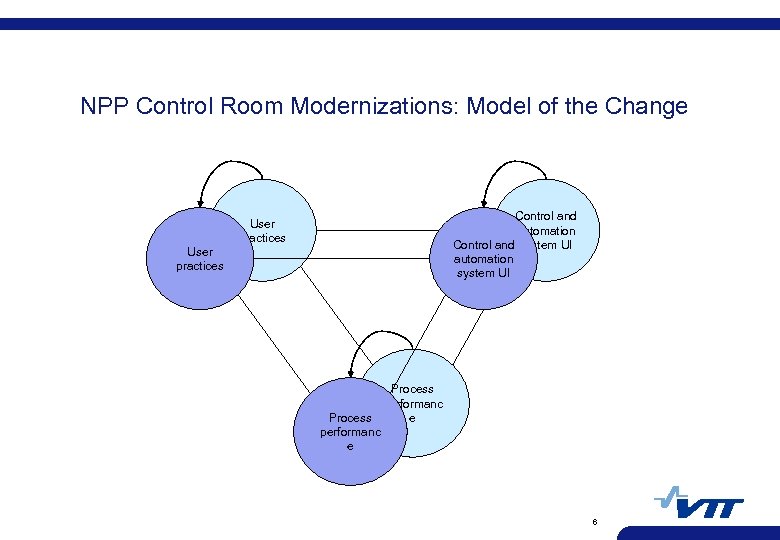

NPP Control Room Modernizations: Model of the Change

[Diagram labels: Control and automation system UI; User practices; Process performance]

How do we know that a complex system can be safely operated?

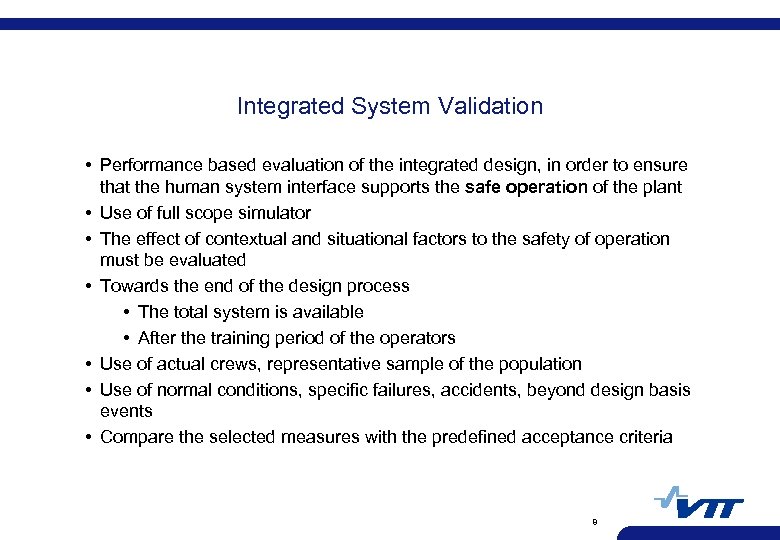

Integrated System Validation

- Performance based evaluation of the integrated design, in order to ensure that the human system interface supports the safe operation of the plant
  - Use of a full scope simulator
  - The effect of contextual and situational factors on the safety of operation must be evaluated
- Towards the end of the design process
  - The total system is available
  - After the training period of the operators
- Use of actual crews, a representative sample of the population
- Use of normal conditions, specific failures, accidents, beyond design basis events
- Compare the selected measures with the predefined acceptance criteria
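The last step, comparing the selected measures with predefined acceptance criteria, can be sketched as below. This is only an illustration: the measure names, comparison directions and thresholds are hypothetical placeholders, not values from the presentation, and a real validation would take them from the plant-specific validation plan.

```python
import operator

# Hypothetical acceptance criteria: measure name -> (comparison, threshold).
# Names and thresholds are illustrative assumptions, not from the source.
ACCEPTANCE_CRITERIA = {
    "operator_errors": (operator.le, 2),
    "procedure_deviations": (operator.le, 1),
    "time_to_stabilise_s": (operator.le, 600.0),
}


def evaluate_run(measures, criteria=ACCEPTANCE_CRITERIA):
    """Return {measure name: True/False} for one simulator run.

    Raises KeyError if a measure required by the criteria was not recorded.
    """
    results = {}
    for name, (compare, threshold) in criteria.items():
        if name not in measures:
            raise KeyError(f"measure not recorded: {name}")
        results[name] = compare(measures[name], threshold)
    return results


def run_passes(measures, criteria=ACCEPTANCE_CRITERIA):
    """A run passes only if every selected measure meets its criterion."""
    return all(evaluate_run(measures, criteria).values())


if __name__ == "__main__":
    run = {
        "operator_errors": 1,
        "procedure_deviations": 0,
        "time_to_stabilise_s": 540.0,
    }
    print(evaluate_run(run))
    print(run_passes(run))
```

Keeping the criteria in a separate table makes it explicit that they are fixed before the simulator runs, which is what "predefined" requires.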

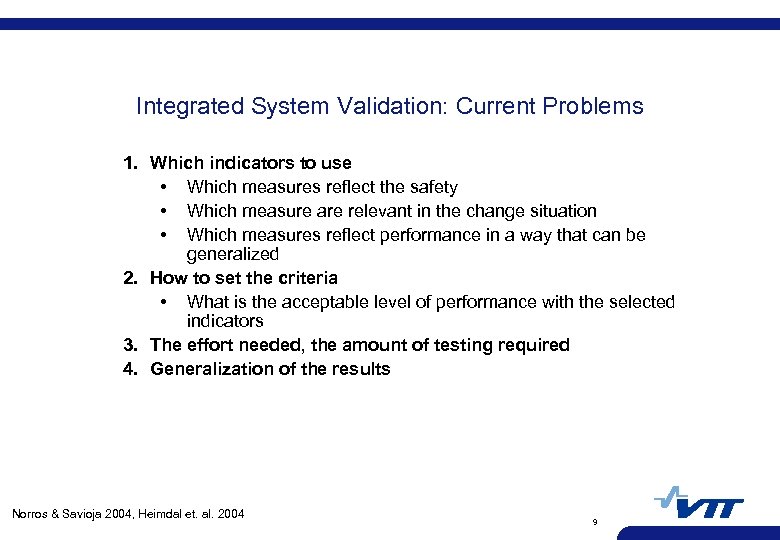

Integrated System Validation: Current Problems

1. Which indicators to use
   - Which measures reflect safety
   - Which measures are relevant in the change situation
   - Which measures reflect performance in a way that can be generalized
2. How to set the criteria
   - What is the acceptable level of performance with the selected indicators
3. The effort needed, the amount of testing required
4. Generalization of the results

Norros & Savioja 2004, Heimdal et al. 2004

Validation: The Problem of the ε-case

How can we predict what will happen in a very rare event that is beyond the design basis, beyond validation possibilities, and that nobody ever predicted would happen?

What are the predictive capabilities of validation procedures?

Performance Indicators: Development Challenges

- Process performance
  - Do not really differentiate enough
    - High degree of automation
    - Complex defenses within the system
    - Thorough training process
  - Difficult to anchor to the HF-related changes taking place in modernization
  - Not predictive of future performance in the conditions not tested
- Human performance
  - Do not describe how and based on what underlying assumptions the crew acts in the situation
  - Not predictive of future performance in the conditions not tested

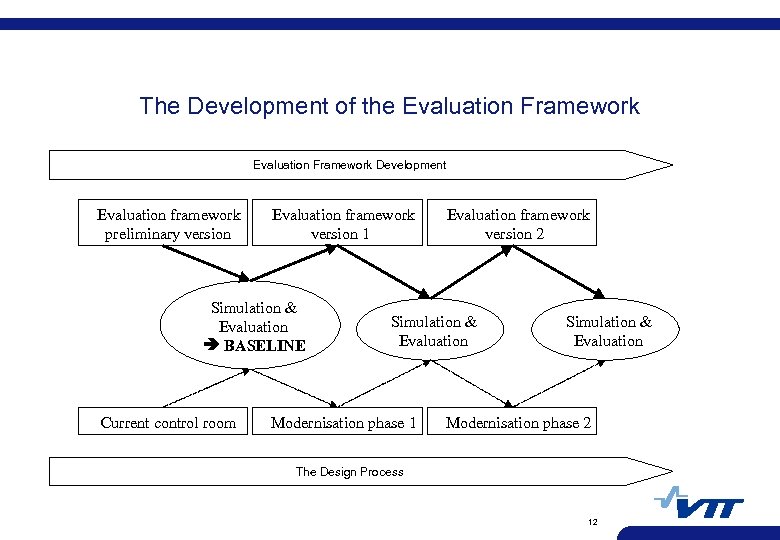

The Development of the Evaluation Framework

[Diagram labels, two parallel tracks]

- Development: Evaluation framework preliminary version; Evaluation framework version 1; Evaluation framework version 2
- The Design Process: BASELINE (current control room); Modernisation phase 1; Modernisation phase 2; each with Simulation & Evaluation

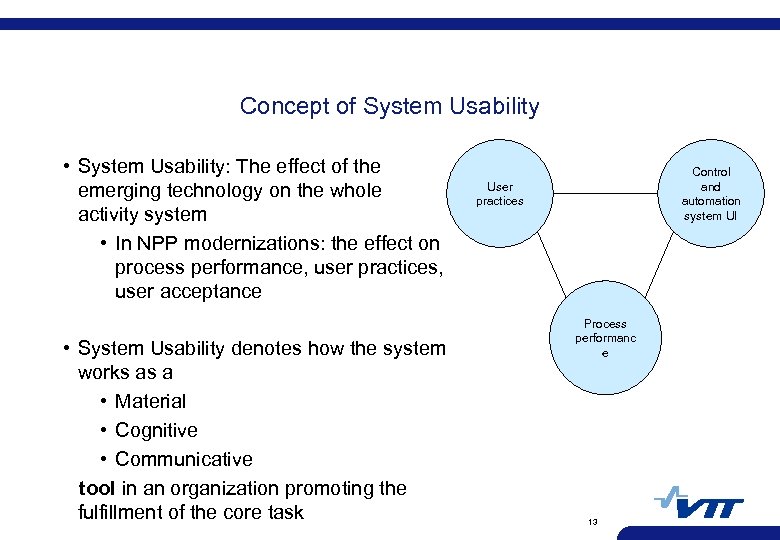

Concept of System Usability

- System Usability: the effect of the emerging technology on the whole activity system
  - In NPP modernizations: the effect on process performance, user practices, user acceptance
- System Usability denotes how the system works as a tool in an organization, promoting the fulfillment of the core task. The tool is
  - Material
  - Cognitive
  - Communicative

[Diagram labels: Control and automation system UI; User practices; Process performance]

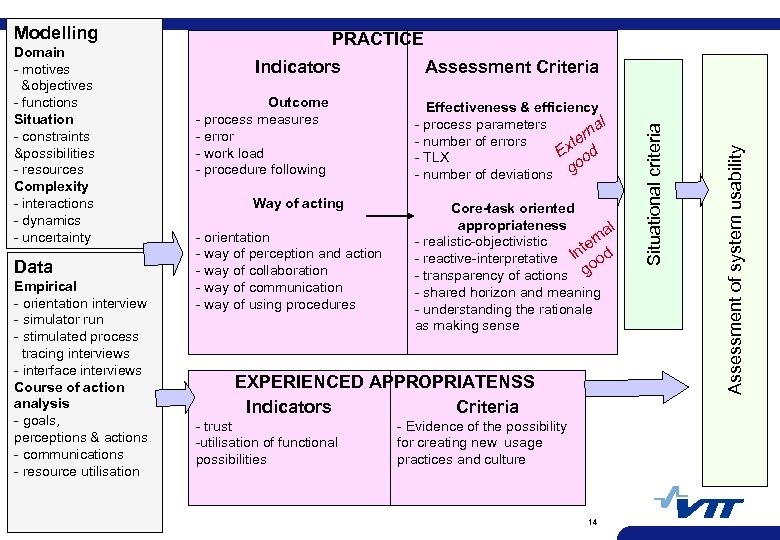

Assessment of system usability

- Data
  - Empirical
    - orientation interview
    - simulator run
    - stimulated process tracing interviews
    - interface interviews
  - Modelling: situational criteria
    - Domain: motives & objectives, functions
    - Situation: constraints & possibilities, resources
    - Complexity: interactions, dynamics, uncertainty
- Course of action analysis
  - goals, perceptions & actions
  - communications
  - resource utilisation
- PRACTICE
  - Indicators: Outcome
    - process measures
    - error
    - work load
    - procedure following
  - Assessment criteria: Effectiveness & efficiency (external good)
    - process parameters
    - number of errors
    - TLX
    - number of deviations
  - Indicators: Way of acting
    - orientation
    - way of perception and action
    - way of collaboration
    - way of communication
    - way of using procedures
  - Assessment criteria: Core-task oriented appropriateness (internal good)
    - realistic-objectivistic
    - reactive-interpretative
    - transparency of actions
    - shared horizon and meaning
    - understanding the rationale as making sense
- EXPERIENCED APPROPRIATENESS (indicators and criteria)
  - trust
  - utilisation of functional possibilities
  - evidence of the possibility for creating new usage practices and culture

Conclusions

- Traditional scientific performance measures do not differentiate between UIs in a highly automated environment; more profound criteria in assessment are needed
- A system with high system usability induces good working practices in the users
- With practices, individual users cope with system uncertainty, which is a critical demand in the NPP environment
- In validation, practices within a new system will be compared to the practices in the baseline evaluation within the valid traditional system
- Further work: connect the practice-driven performance indicators to the changes in the modernization

Thank You!

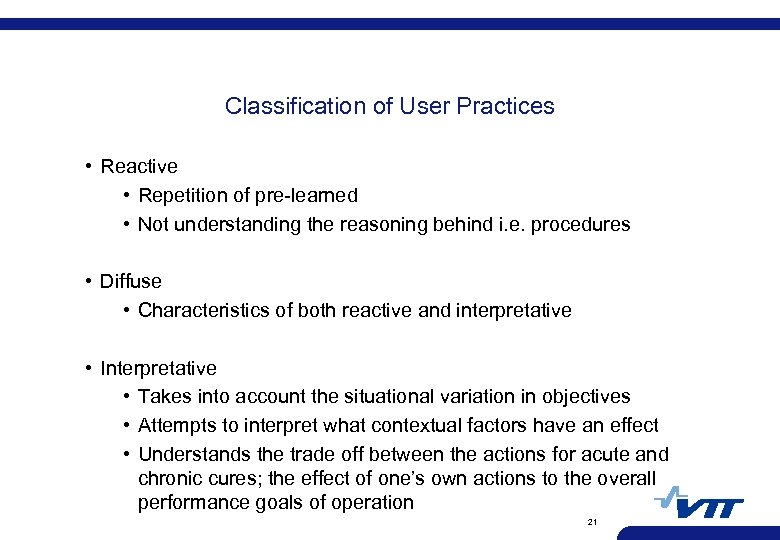

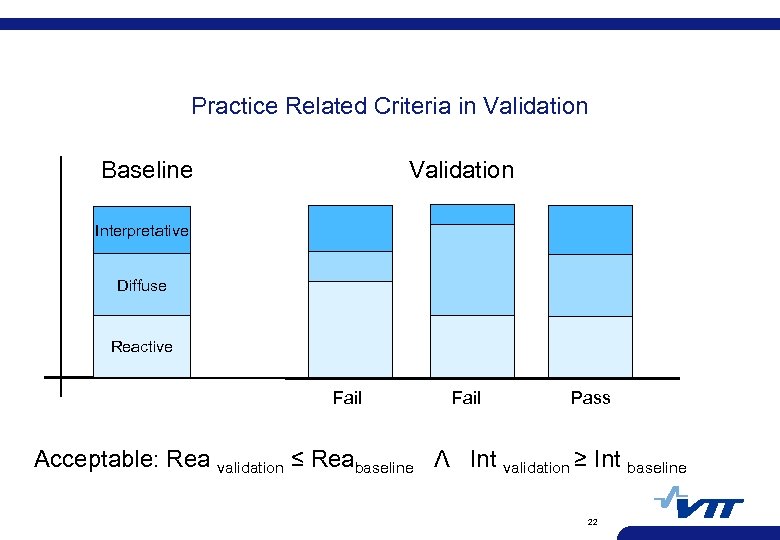

Classification of User Practices

- Reactive
  - Repetition of what has been pre-learned
  - Not understanding the reasoning behind, i.e., procedures
- Diffuse
  - Characteristics of both reactive and interpretative
- Interpretative
  - Takes into account the situational variation in objectives
  - Attempts to interpret what contextual factors have an effect
  - Understands the trade-off between the actions for acute and chronic cures; the effect of one's own actions on the overall performance goals of operation

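Practice Related Criteria in Validation

[Chart labels: Baseline, Validation; Interpretative, Diffuse, Reactive; Fail, Pass]

Acceptable: $\mathrm{Rea}_{\mathrm{validation}} \le \mathrm{Rea}_{\mathrm{baseline}} \land \mathrm{Int}_{\mathrm{validation}} \ge \mathrm{Int}_{\mathrm{baseline}}$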
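The acceptance rule on this slide can be checked mechanically once each observed crew performance has been classified. The sketch below assumes that Rea and Int denote the share of observations classified as reactive and interpretative; the slide does not say whether shares or counts are meant, so this reading and the function names are assumptions made for illustration.

```python
from collections import Counter

PRACTICE_CLASSES = ("reactive", "diffuse", "interpretative")


def practice_shares(labels):
    """Return the share of each practice class in a list of class labels."""
    if not labels:
        raise ValueError("at least one classified observation is required")
    unknown = set(labels) - set(PRACTICE_CLASSES)
    if unknown:
        raise ValueError(f"unknown practice classes: {sorted(unknown)}")
    counts = Counter(labels)
    return {cls: counts[cls] / len(labels) for cls in PRACTICE_CLASSES}


def is_acceptable(baseline_labels, validation_labels):
    """Rea_validation <= Rea_baseline and Int_validation >= Int_baseline."""
    base = practice_shares(baseline_labels)
    val = practice_shares(validation_labels)
    return (
        val["reactive"] <= base["reactive"]
        and val["interpretative"] >= base["interpretative"]
    )


if __name__ == "__main__":
    # Made-up labels, purely to exercise the rule.
    baseline = ["reactive", "reactive", "diffuse", "interpretative"]
    validation = ["reactive", "diffuse", "interpretative", "interpretative"]
    print(is_acceptable(baseline, validation))
```

Note that the rule says nothing about the diffuse class directly: its share may go up or down as long as reactive practice does not increase and interpretative practice does not decrease.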
