- Number of slides: 36

Developing, Measuring, and Improving Program Fidelity: Achieving positive outcomes through high-fidelity implementation
SPDG National Conference, Washington, DC, March 5, 2013
Allison Metz, Ph.D., Associate Director, NIRN
Frank Porter Graham Child Development Institute, University of North Carolina

Goals for Today
• Define fidelity and its link to outcomes
• Identify strategies for developing fidelity measures
• Discuss fidelity within a stage-based context
• Describe the use of Implementation Drivers to promote high fidelity
• Provide case example

“PROGRAM FIDELITY”
“The degree to which the program or practice is implemented ‘as intended’ by the program developers and researchers.”
“Fidelity measures detect the presence and strength of an intervention in practice.”

Definition of Fidelity: Context, Compliance, and Competence
• Three components
– Context: Structural aspects that encompass the framework for service delivery
– Compliance: The extent to which the practitioner uses the core program components
– Competence: Process aspects that encompass the level of skill shown by the practitioner and the “way in which the service is delivered”

Fidelity: Purpose and Importance
• Interpret outcomes: is this an implementation challenge or an intervention challenge?
• Detect variations in implementation
• Replicate consistently
• Ensure compliance and competence
• Develop and refine interventions in the context of practice
• Identify “active ingredients” of program

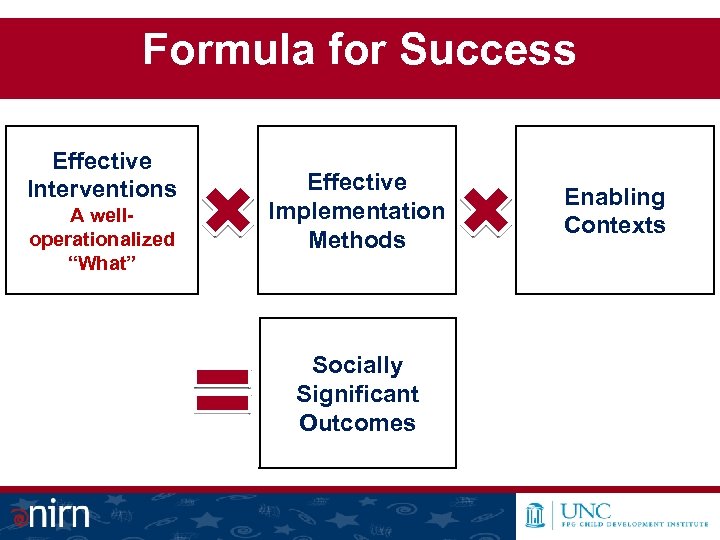

Formula for Success
• Effective Interventions (a well-operationalized “What”)
• Effective Implementation Methods
• Enabling Contexts
• Socially Significant Outcomes

Usable Intervention Criteria
• Clear description of the program
• Clear essential functions that define the program
• Operational definitions of essential functions (practice profiles; do, say)
• Practical performance assessment

Developing Fidelity Measures: Practice Profiles Operationalize the Work
• Describe the essential functions that allow a model to be teachable, learnable, and doable in typical human service settings
• Promote consistency across practitioners at the level of actual service delivery
• Consist of measurable and/or observable, behaviorally based indicators for each essential function
Gene Hall and Shirley Hord (2010), Implementing Change: Patterns, Principles, and Potholes (3rd Edition)

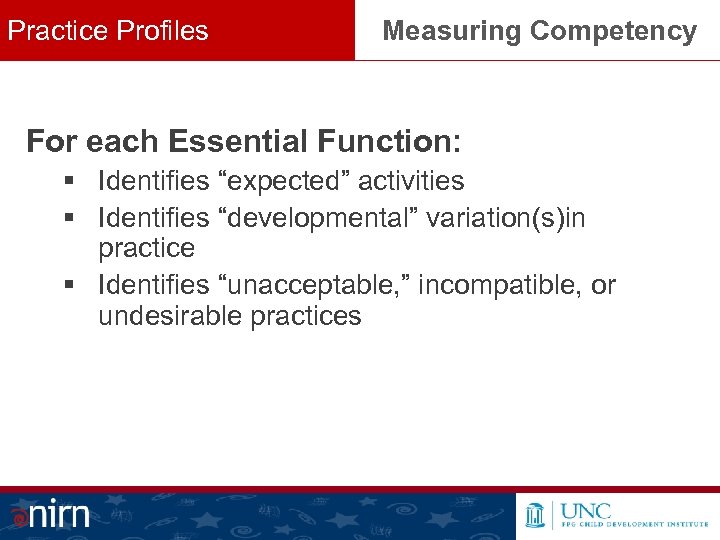

Practice Profiles: Measuring Competency
For each Essential Function:
• Identifies “expected” activities
• Identifies “developmental” variation(s) in practice
• Identifies “unacceptable,” incompatible, or undesirable practices

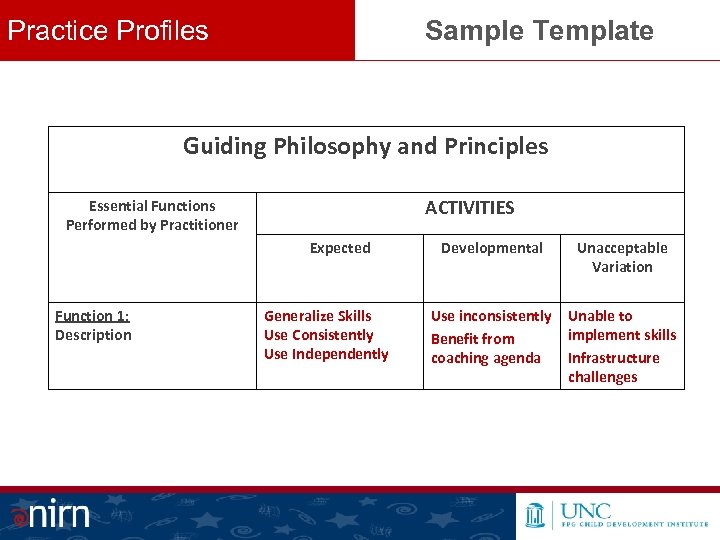

Practice Profiles: Sample Template
Guiding Philosophy and Principles
ACTIVITIES performed by practitioner, by essential function:

| Essential Functions | Expected | Developmental Variation | Unacceptable Variation |
|---|---|---|---|
| Function 1: Description | Generalize skills; use consistently; use independently | Use inconsistently; benefit from coaching agenda | Unable to implement skills; infrastructure challenges |

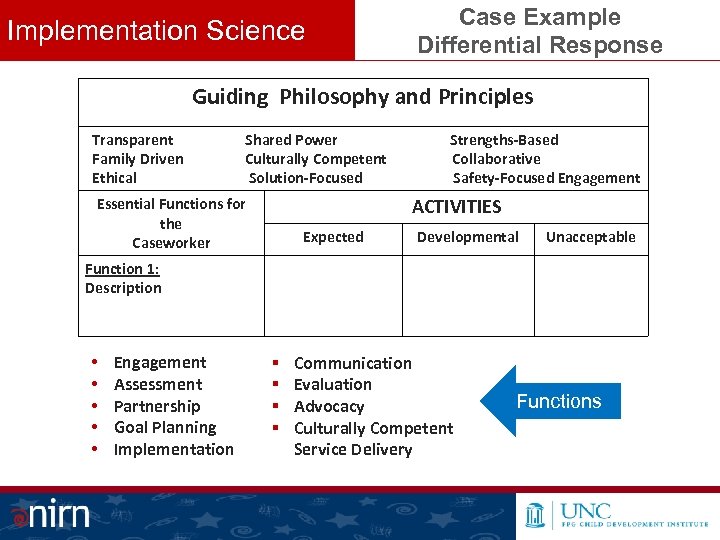

Implementation Science Case Example: Differential Response
Guiding Philosophy and Principles: Transparent; Shared Power; Strengths-Based; Family Driven; Culturally Competent; Collaborative; Ethical; Solution-Focused; Safety-Focused
ACTIVITIES (columns of the profile): Essential Functions for the Caseworker; Expected; Developmental; Unacceptable
Function 1: Description
Functions:
• Engagement
• Assessment
• Partnership
• Goal Planning
• Implementation
• Communication
• Evaluation
• Advocacy
• Culturally Competent Service Delivery

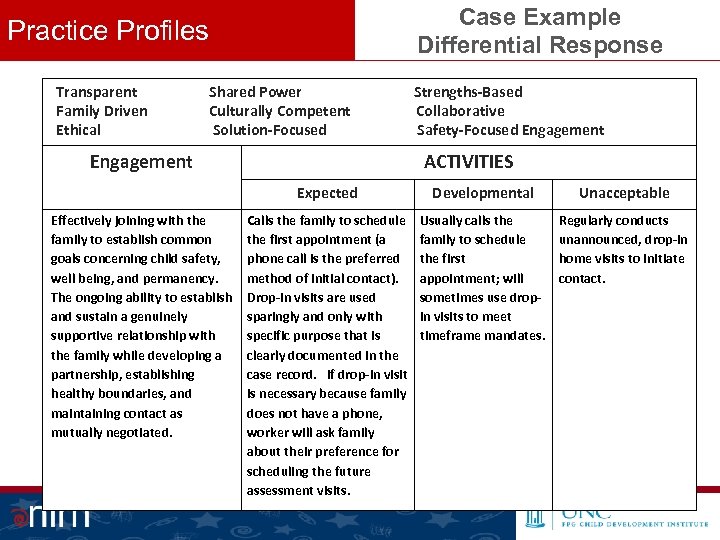

Case Example: Differential Response Practice Profiles
Guiding Philosophy and Principles: Transparent; Shared Power; Strengths-Based; Family Driven; Culturally Competent; Collaborative; Ethical; Solution-Focused; Safety-Focused
Essential Function: Engagement. Effectively joining with the family to establish common goals concerning child safety, well-being, and permanency. The ongoing ability to establish and sustain a genuinely supportive relationship with the family while developing a partnership, establishing healthy boundaries, and maintaining contact as mutually negotiated.
ACTIVITIES
• Expected: Calls the family to schedule the first appointment (a phone call is the preferred method of initial contact). Drop-in visits are used sparingly and only with specific purpose that is clearly documented in the case record. If drop-in visit is necessary because family does not have a phone, worker will ask family about their preference for scheduling the future assessment visits.
• Developmental: Usually calls the family to schedule the first appointment; will sometimes use drop-in visits to meet timeframe mandates.
• Unacceptable: Regularly conducts unannounced, drop-in home visits to initiate contact.

Practice Profiles: Multiple Purposes for Implementation
If you know what “it” is, then:
• You know the practice to be implemented
• You can improve “it”
• Increased ability to effectively develop the Drivers
• Increased ability to replicate “it”
• More likely to deliver high-quality services
• Outcomes can be accurately interpreted
• Common language and deeper understanding

Stages of Implementation: When are we ready to assess fidelity?
Practice profiles are a part of stage-based work. When we are engaged in program development work, practice profiles operationalize the intervention so that installation activities can be effective and fidelity can be measured during initial implementation.

Practice Profiles: When are they developed?
In order to create the necessary conditions for…
• Creating practitioner competence and confidence
• Changing organizations and systems
…we need to define our program and practice adequately so that we can install the Drivers necessary to promote consistent implementation of the specific activities associated with the essential functions of the new service(s).

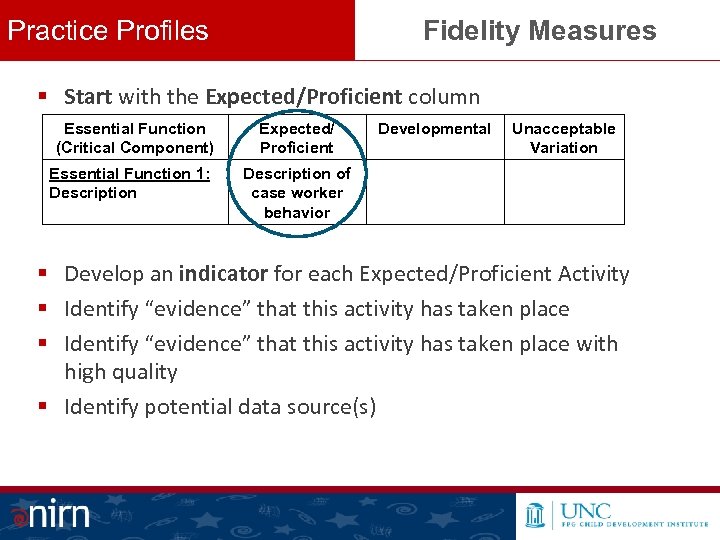

Practice Profiles: Fidelity Measures
Profile columns: Essential Function (Critical Component); Expected/Proficient; Developmental Variation; Unacceptable Variation
Example row: Essential Function 1: Description; Description of case worker behavior
• Start with the Expected/Proficient column
• Develop an indicator for each Expected/Proficient Activity
• Identify “evidence” that this activity has taken place with high quality
• Identify potential data source(s)
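The steps above can be sketched as a small data structure. This is only an illustration, not a tool from the presentation: the class names, the `add_indicator` helper, the evidence wording, and the "case record" data source are all hypothetical. It shows one way to pair each Expected/Proficient activity with an indicator, its evidence, and its data sources, and to see which activities still lack an indicator.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class FidelityIndicator:
    """One indicator derived from an Expected/Proficient activity (hypothetical structure)."""
    activity: str            # the Expected/Proficient activity being measured
    evidence: str            # what would show the activity took place with high quality
    data_sources: List[str]  # where that evidence could be found


@dataclass
class EssentialFunction:
    """An essential function from a practice profile, with its expected activities."""
    name: str
    expected_activities: List[str]
    indicators: List[FidelityIndicator] = field(default_factory=list)

    def add_indicator(self, activity: str, evidence: str, data_sources: List[str]) -> None:
        # Indicators are only developed for activities in the Expected/Proficient column.
        if activity not in self.expected_activities:
            raise ValueError(f"{activity!r} is not an expected activity of {self.name}")
        self.indicators.append(FidelityIndicator(activity, evidence, list(data_sources)))

    def activities_without_indicator(self) -> List[str]:
        # Expected activities that do not yet have any indicator attached.
        covered = {indicator.activity for indicator in self.indicators}
        return [a for a in self.expected_activities if a not in covered]


# Illustrative use; the evidence text and data source are assumptions.
engagement = EssentialFunction(
    name="Engagement",
    expected_activities=[
        "Calls the family to schedule the first appointment",
        "Documents the purpose of any drop-in visit",
    ],
)
engagement.add_indicator(
    activity="Calls the family to schedule the first appointment",
    evidence="First contact is recorded as a scheduled phone call",
    data_sources=["case record"],
)
print(engagement.activities_without_indicator())
```

Listing the uncovered activities keeps the focus on the Expected/Proficient column, which is where the slide suggests starting.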

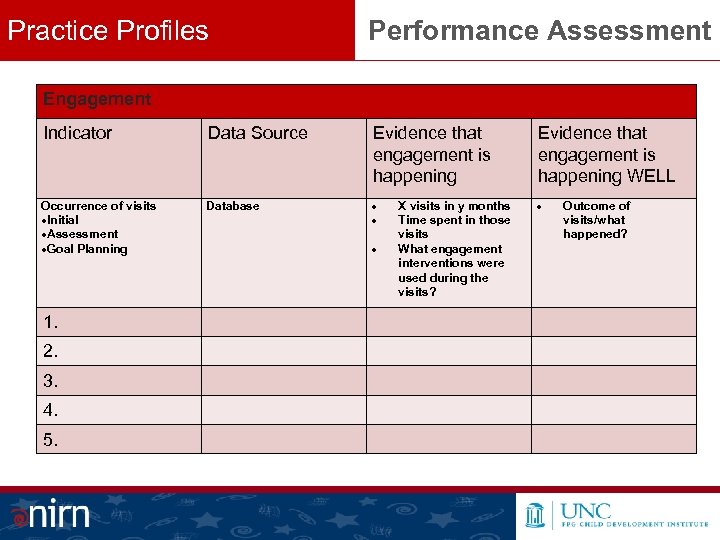

Practice Profiles: Performance Assessment
Essential Function: Engagement
Indicator: Evidence that engagement is happening WELL
• Occurrence of visits
• X visits in y months
• Time spent in those visits
• What engagement interventions were used during the visits?
• Outcome of visits/what happened?
Data Source: Initial Assessment; Goal Planning; Database

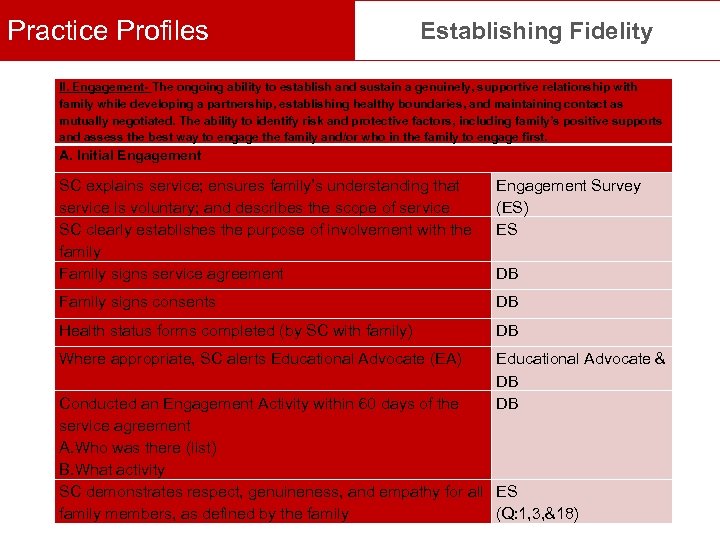

Practice Profiles: Establishing Fidelity
II. Engagement: The ongoing ability to establish and sustain a genuinely supportive relationship with family while developing a partnership, establishing healthy boundaries, and maintaining contact as mutually negotiated. The ability to identify risk and protective factors, including family’s positive supports, and assess the best way to engage the family and/or who in the family to engage first.

A. Initial Engagement

| Indicator | Data source |
|---|---|
| SC explains service; ensures family’s understanding that service is voluntary; and describes the scope of service | Engagement Survey (ES) |
| SC clearly establishes the purpose of involvement with the family | ES |
| Family signs service agreement | |
| Family signs consents | DB |
| Health status forms completed (by SC with family) | DB |
| Where appropriate, SC alerts Educational Advocate (EA) | Educational Advocate & DB |
| Conducted an Engagement Activity within 60 days of the service agreement: A. Who was there (list); B. What activity | DB |
| SC demonstrates respect, genuineness, and empathy for all family members, as defined by the family | ES (Q: 1, 3, & 18) |

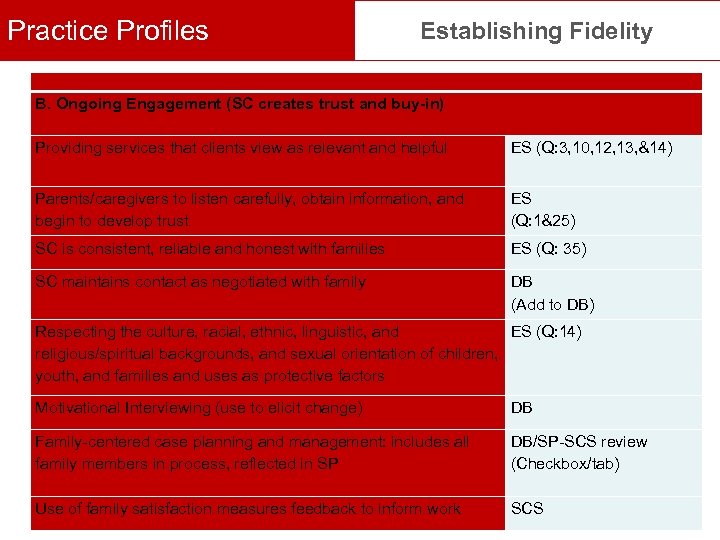

Practice Profiles: Establishing Fidelity
B. Ongoing Engagement (SC creates trust and buy-in)

| Indicator | Data source |
|---|---|
| Providing services that clients view as relevant and helpful | ES (Q: 3, 10, 12, 13, & 14) |
| Parents/caregivers to listen carefully, obtain information, and begin to develop trust | ES (Q: 1 & 25) |
| SC is consistent, reliable and honest with families | ES (Q: 35) |
| SC maintains contact as negotiated with family | DB (Add to DB) |
| Respecting the culture, racial, ethnic, linguistic, and religious/spiritual backgrounds, and sexual orientation of children, youth, and families and uses as protective factors | ES (Q: 14) |
| Motivational Interviewing (use to elicit change) | DB |
| Family-centered case planning and management: includes all family members in process, reflected in SP | DB/SP-SCS review (Checkbox/tab) |
| Use of family satisfaction measures feedback to inform work | SCS |

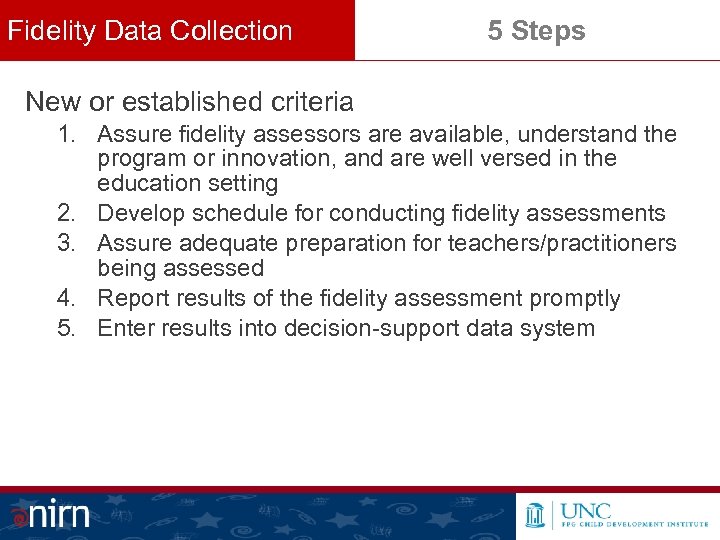

Fidelity Data Collection: 5 Steps
New or established criteria
1. Assure fidelity assessors are available, understand the program or innovation, and are well versed in the education setting
2. Develop schedule for conducting fidelity assessments
3. Assure adequate preparation for teachers/practitioners being assessed
4. Report results of the fidelity assessment promptly
5. Enter results into decision-support data system

Promote High Fidelity: Implementation Supports
Fidelity is an implementation outcome.
How can we create an implementation infrastructure that supports high-fidelity implementation?

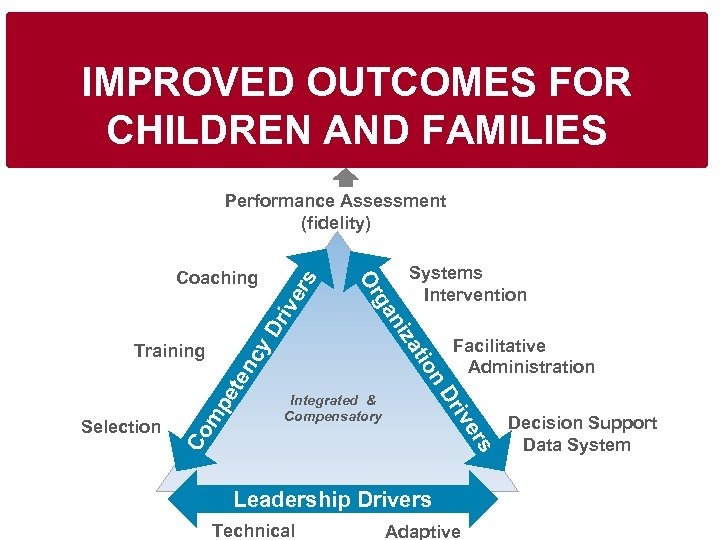

[Figure: Implementation Drivers (integrated and compensatory) leading to IMPROVED OUTCOMES FOR CHILDREN AND FAMILIES. Competency Drivers: Selection, Training, Coaching. Organization Drivers: Systems Intervention, Facilitative Administration, Decision Support Data System. Performance Assessment (fidelity). Leadership Drivers: Technical, Adaptive.]

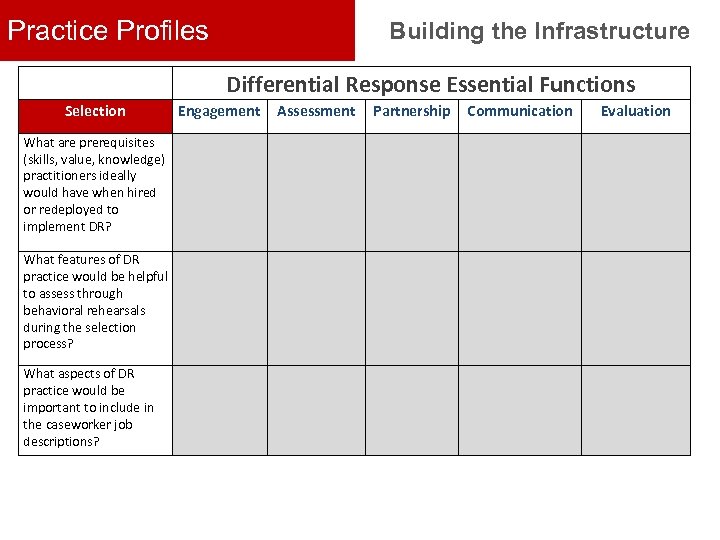

Practice Profiles: Building the Infrastructure
Driver: Selection
• What are prerequisites (skills, value, knowledge) practitioners ideally would have when hired or redeployed to implement DR?
• What features of DR practice would be helpful to assess through behavioral rehearsals during the selection process?
• What aspects of DR practice would be important to include in the caseworker job descriptions?
Differential Response Essential Functions: Engagement; Assessment; Partnership; Communication; Evaluation

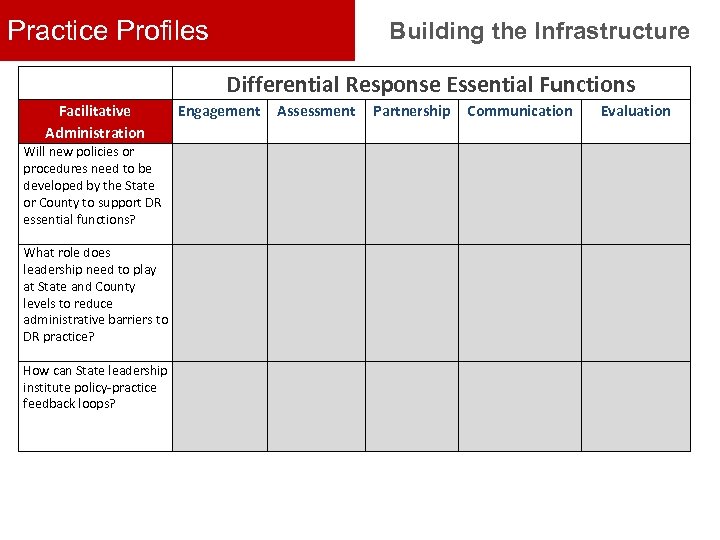

Practice Profiles: Building the Infrastructure
Driver: Facilitative Administration
• Will new policies or procedures need to be developed by the State or County to support DR essential functions?
• What role does leadership need to play at State and County levels to reduce administrative barriers to DR practice?
• How can State leadership institute policy-practice feedback loops?
Differential Response Essential Functions: Engagement; Assessment; Partnership; Communication; Evaluation

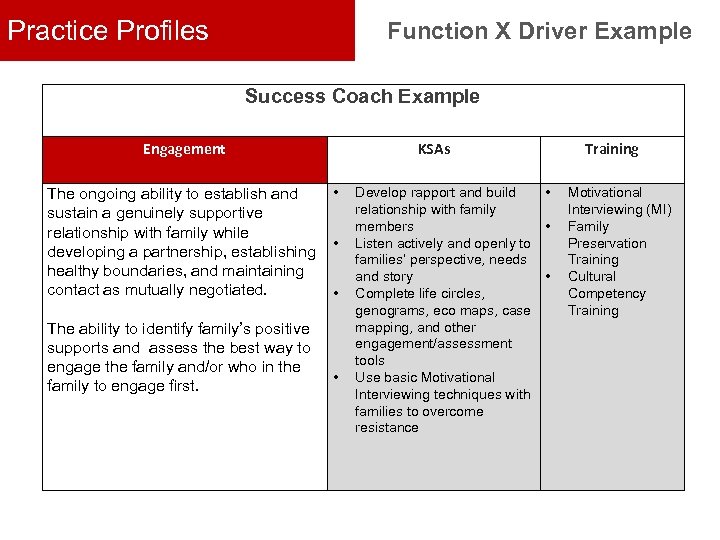

Practice Profiles: Function × Driver Example
Success Coach Example
Engagement
• The ongoing ability to establish and sustain a genuinely supportive relationship with family while developing a partnership, establishing healthy boundaries, and maintaining contact as mutually negotiated.
• The ability to identify family’s positive supports and assess the best way to engage the family and/or who in the family to engage first.
KSAs
• Develop rapport and build relationship with family members
• Listen actively and openly to families’ perspective, needs and story
• Complete life circles, genograms, eco maps, case mapping, and other engagement/assessment tools
• Use basic Motivational Interviewing techniques with families to overcome resistance
Training
• Motivational Interviewing (MI)
• Family Preservation Training
• Cultural Competency Training

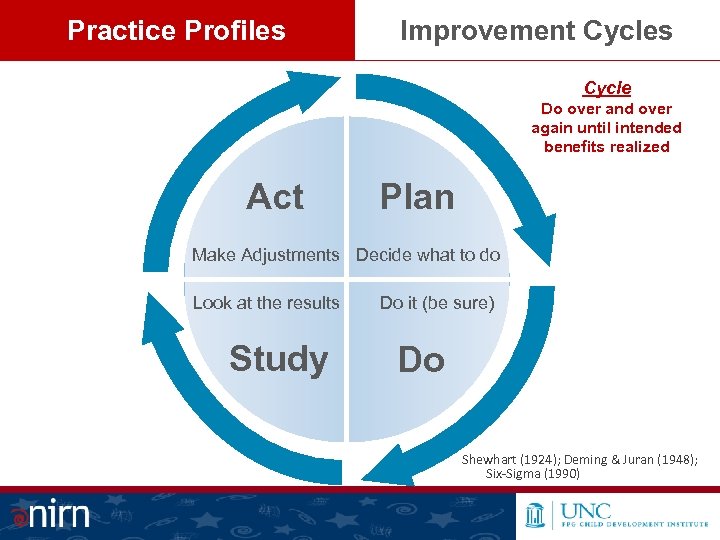

Practice Profiles: Improvement Cycles
• Plan: Decide what to do
• Do: Do it (be sure)
• Study: Look at the results
• Act: Make adjustments
• Cycle: Do over and over again until intended benefits realized
Shewhart (1924); Deming & Juran (1948); Six-Sigma (1990)

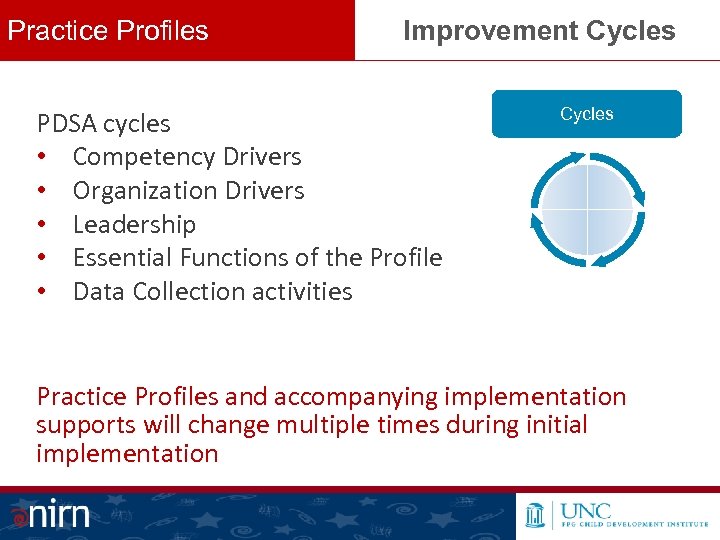

Practice Profiles: Improvement Cycles
PDSA cycles
• Competency Drivers
• Organization Drivers
• Leadership
• Essential Functions of the Profile
• Data Collection activities
Practice Profiles and accompanying implementation supports will change multiple times during initial implementation.

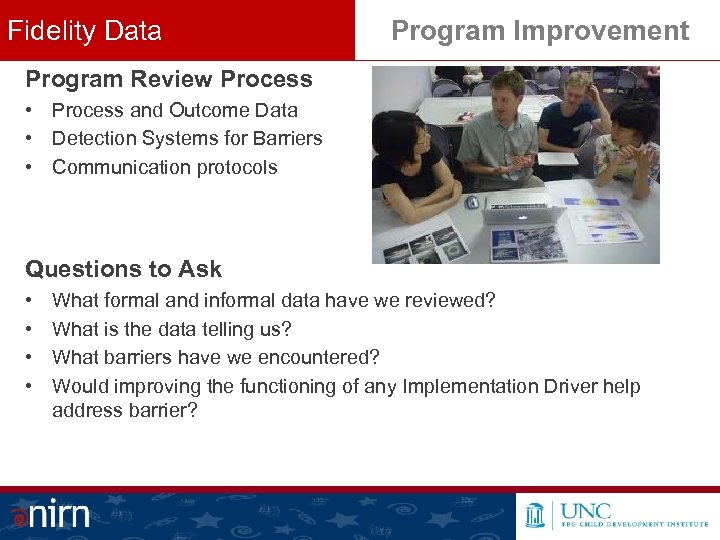

Fidelity Data: Program Improvement
Program Review Process
• Process and Outcome Data
• Detection Systems for Barriers
• Communication protocols
Questions to Ask
• What formal and informal data have we reviewed?
• What is the data telling us?
• What barriers have we encountered?
• Would improving the functioning of any Implementation Driver help address the barrier?

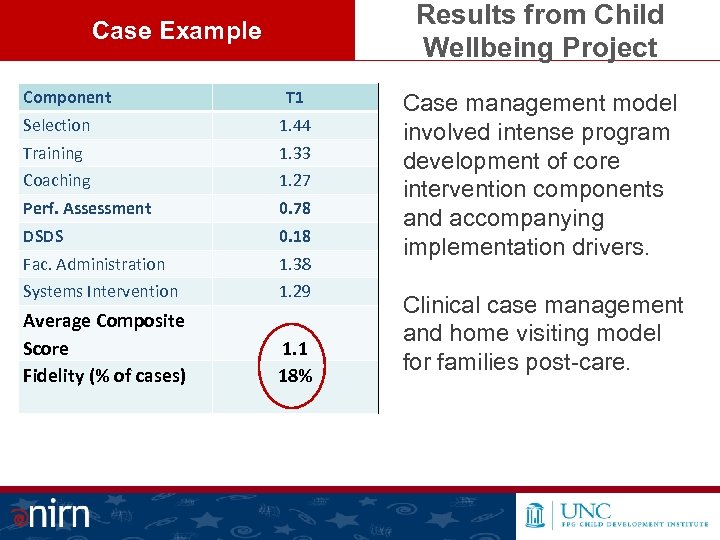

Results from Child Wellbeing Project: Case Example

| Component | T1 |
|---|---|
| Selection | 1.44 |
| Training | 1.33 |
| Coaching | 1.27 |
| Perf. Assessment | 0.78 |
| DSDS | 0.18 |
| Fac. Administration | 1.38 |
| Systems Intervention | 1.29 |
| Average Composite Score | 1.1 |
| Fidelity (% of cases) | 18% |

Case management model involved intense program development of core intervention components and accompanying implementation drivers. Clinical case management and home visiting model for families post-care.

Using Data to Improve Fidelity: Case Example
• How did Implementation Teams improve fidelity?
– Intentional action planning based on implementation drivers assessment data and program data
– Improved coaching, administrative support, and use of data to drive decision-making; adapted model
– Diagnosed adaptive challenges, engaged stakeholders, inspired change

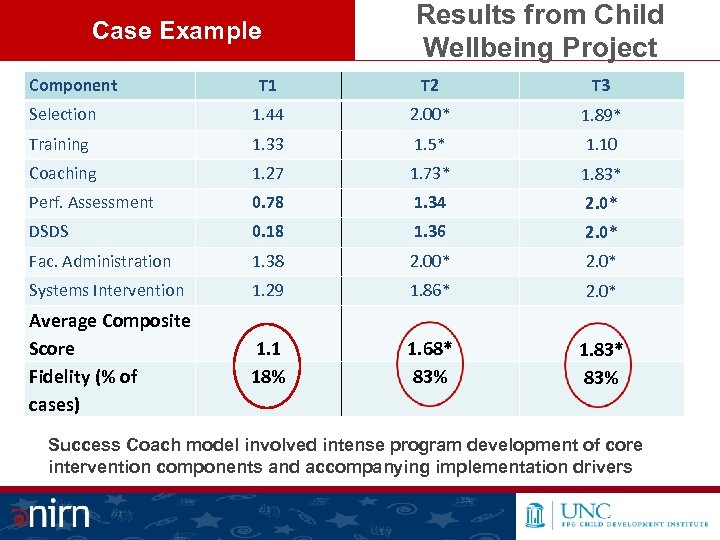

Case Example: Results from Child Wellbeing Project

| Component | T1 | T2 | T3 |
|---|---|---|---|
| Selection | 1.44 | 2.00* | 1.89* |
| Training | 1.33 | 1.5* | 1.10 |
| Coaching | 1.27 | 1.73* | 1.83* |
| Perf. Assessment | 0.78 | 1.34 | 2.0* |
| DSDS | 0.18 | 1.36 | 2.0* |
| Fac. Administration | 1.38 | 2.00* | 2.0* |
| Systems Intervention | 1.29 | 1.86* | 2.0* |
| Average Composite Score | 1.1 | 1.68* | 1.83* |
| Fidelity (% of cases) | 18% | 83% | 83% |

Success Coach model involved intense program development of core intervention components and accompanying implementation drivers.
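The slide does not say how the Average Composite Score was calculated. As a check on the arithmetic, the sketch below assumes it is the unweighted mean of the seven driver scores at each time point; that assumption reproduces the reported 1.1, 1.68, and 1.83. The dictionary and function names are illustrative only.

```python
from statistics import mean

# Driver scores at T1, T2, T3 as reported on the slide (asterisks dropped).
driver_scores = {
    "Selection": (1.44, 2.00, 1.89),
    "Training": (1.33, 1.5, 1.10),
    "Coaching": (1.27, 1.73, 1.83),
    "Perf. Assessment": (0.78, 1.34, 2.0),
    "DSDS": (0.18, 1.36, 2.0),
    "Fac. Administration": (1.38, 2.00, 2.0),
    "Systems Intervention": (1.29, 1.86, 2.0),
}


def composite_scores(scores):
    """Return the unweighted mean across drivers for each time point, rounded to 2 places.

    Assumption: the composite is a simple average; the source does not state the method.
    """
    per_time_point = zip(*scores.values())  # regroup the scores by T1, T2, T3
    return [round(mean(column), 2) for column in per_time_point]


print(composite_scores(driver_scores))  # prints [1.1, 1.68, 1.83]
```

Under this assumption the composite rises from 1.1 to 1.83 across the three time points, while the reported fidelity rate moves from 18% to 83% of cases.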

High Fidelity: Positive Outcomes
Did high-fidelity implementation lead to improved outcomes?
Early outcomes include…
• Stabilized families
• Prevented re-entry of children into out-of-home placements

Fidelity Data Collection: Methods, Resources and Feasibility
If fidelity criteria are already developed
1. Understand reliability and validity of instruments
a. Are we measuring what we thought we were?
b. Is fidelity predictive of outcomes?
c. Does fidelity assessment discriminate between programs?
2. Work with program developers or purveyors to understand the detailed protocols for data collection
a. Who collects the data (expert raters, teachers)
b. How often is data collected
c. How are data scored and analyzed
3. Understand issues (reliability, feasibility, cost) in collecting different kinds of fidelity data
a. Process data vs. structural data

Summary: Program Fidelity
• Fidelity has multiple facets and is critical to achieving outcomes
• Fully operationalized programs are prerequisites for developing fidelity criteria
• Valid and reliable fidelity criteria need to be collected carefully with guidance from program developers or purveyors
• Fidelity is an implementation outcome; effective use of Implementation Drivers can increase our chances of high-fidelity implementation
• Fidelity data can and should be used for program improvement

Resources: Program Fidelity
Examples of fidelity instruments
• Teaching Pyramid Observation Tool for Preschool Classrooms (TPOT), Research Edition, Mary Louise Hemmeter and Lise Fox
• The PBIS fidelity measure (the SET) described at http://www.pbis.org/pbis_resource_detail_page.aspx?Type=4&PBIS_Resource.ID=222
Articles
• Sanetti, L. & Kratochwill, T. (2009). Toward Developing a Science of Treatment Integrity: Introduction to the Special Series. School Psychology Review, 38(4), 445–459.
• Mowbray, C. T., Holter, M. C., Teague, G. B., Bybee, D. (2003). Fidelity Criteria: Development, Measurement and Validation. American Journal of Evaluation, 24(3), 315-340.
• Hall, G. E., & Hord, S. M. (2011). Implementing Change: Patterns, principles and potholes (3rd ed.). Boston: Allyn and Bacon.

Stay Connected!
Allison.metz@unc.edu
nirn.fpg.unc.edu
www.scalingup.org
www.implementationconference.org
