422131119a99a6b3884e9f2132de2152.ppt

- Number of slides: 24

Developing artificial agents worthy of trust: “Would you buy a used car from this artificial agent?”
F. S. Grodzinsky, Sacred Heart University
Research Center for Computing and Society, Southern CT State University

This research is presented on behalf of myself and my co-authors:
- K. W. Miller, University of Illinois Springfield
- M. J. Wolf, Bemidji State University

Roadmap
- Definitions
- Preliminary model
- Analysis of relevant literature
- Trust relationships: role of the developer
- Expanded model
- Our warning

Definitions
- “A TRUSTS B”
- A is the ‘trustor’; B is the ‘trustee’.

“Artificial Agent”, or AA
- An artificial agent is a non-human entity that is (in some sense) autonomous, interacts with its environment, and adapts itself as a function of its internal state and its interactions with the environment.

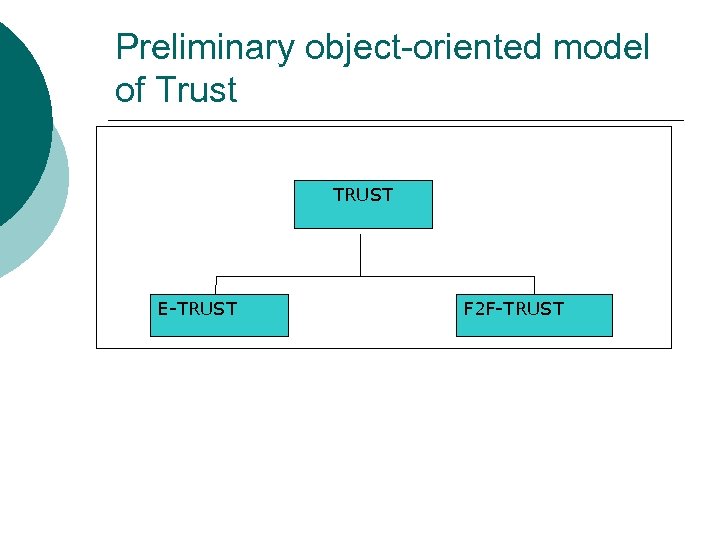

Preliminary object-oriented model of trust
- TRUST (superclass)
  - E-TRUST (subclass)
  - F2F-TRUST (subclass)
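
To make the object-oriented framing concrete, here is a minimal Python sketch of the preliminary model, assuming that “superclass” and “subclass” carry their usual programming meaning. The class names `Trust`, `ETrust`, and `F2FTrust` and the `describe` method are hypothetical illustrations, not code from the authors; the point is only that both kinds of trust inherit what is common to TRUST.

```python
# Hypothetical sketch of the preliminary model: TRUST is the superclass,
# E-TRUST and F2F-TRUST specialize it. Names are illustrative assumptions.


class Trust:
    """Superclass: what every trust relationship shares (A trusts B)."""

    def __init__(self, trustor: str, trustee: str) -> None:
        self.trustor = trustor  # A, the agent that trusts
        self.trustee = trustee  # B, the agent that is trusted

    def describe(self) -> str:
        return f"{self.trustor} trusts {self.trustee}"


class ETrust(Trust):
    """Trust established through electronic mediation."""

    def describe(self) -> str:
        return super().describe() + " (electronically mediated)"


class F2FTrust(Trust):
    """Trust established face to face."""

    def describe(self) -> str:
        return super().describe() + " (face to face)"


for t in (ETrust("Alice", "web bot"), F2FTrust("Alice", "Bob")):
    print(t.describe())
```

Each subclass reuses the superclass constructor and only adds what distinguishes it, which mirrors how the deck later generalizes TRUST into XYZtrust.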

E-Trust
- Physical contact is not required.
- Low-bandwidth communication is possible.
- Social norms are less established.
- “Trust needs touch” does not apply.
- Referential trust, based on recommendations, is often important.

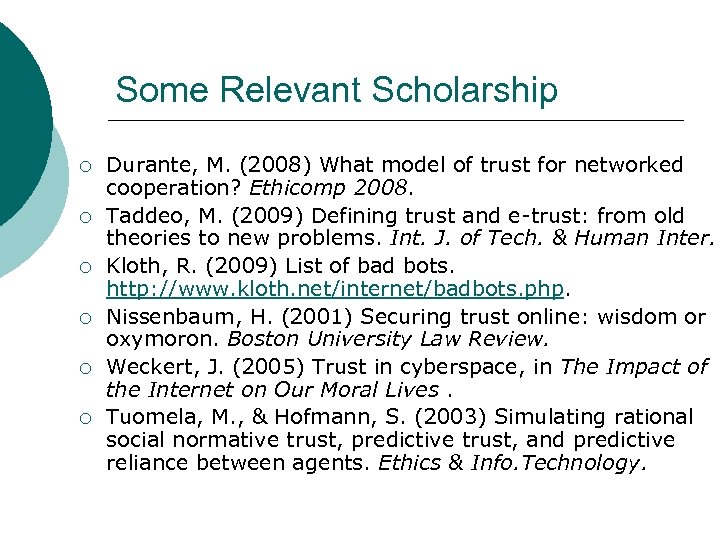

Some Relevant Scholarship
- Durante, M. (2008). What model of trust for networked cooperation? Ethicomp 2008.
- Taddeo, M. (2009). Defining trust and e-trust: from old theories to new problems. International Journal of Technology and Human Interaction.
- Kloth, R. (2009). List of bad bots. http://www.kloth.net/internet/badbots.php
- Nissenbaum, H. (2001). Securing trust online: wisdom or oxymoron? Boston University Law Review.
- Weckert, J. (2005). Trust in cyberspace. In The Impact of the Internet on Our Moral Lives.
- Tuomela, M., & Hofmann, S. (2003). Simulating rational social normative trust, predictive trust, and predictive reliance between agents. Ethics and Information Technology.

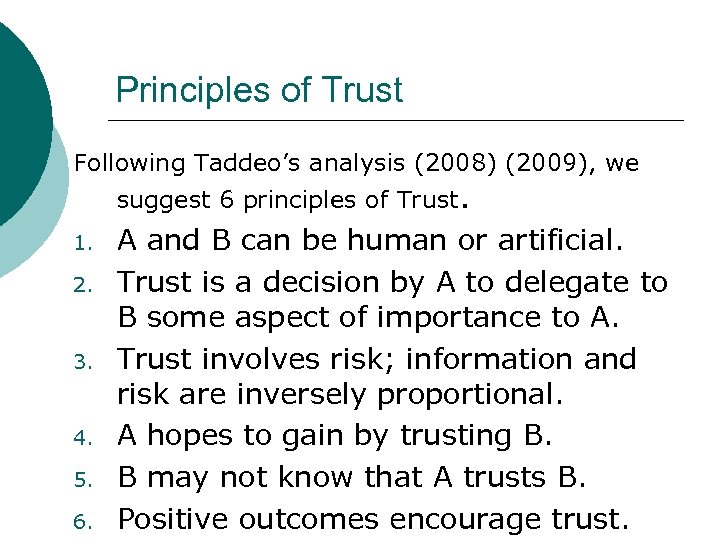

Principles of Trust
Following Taddeo’s analysis (2008, 2009), we suggest six principles of trust:
1. A and B can be human or artificial.
2. Trust is a decision by A to delegate to B some aspect of importance to A.
3. Trust involves risk; information and risk are inversely proportional (the more information A has about B, the less risk A takes).
4. A hopes to gain by trusting B.
5. B may not know that A trusts B.
6. Positive outcomes encourage trust.

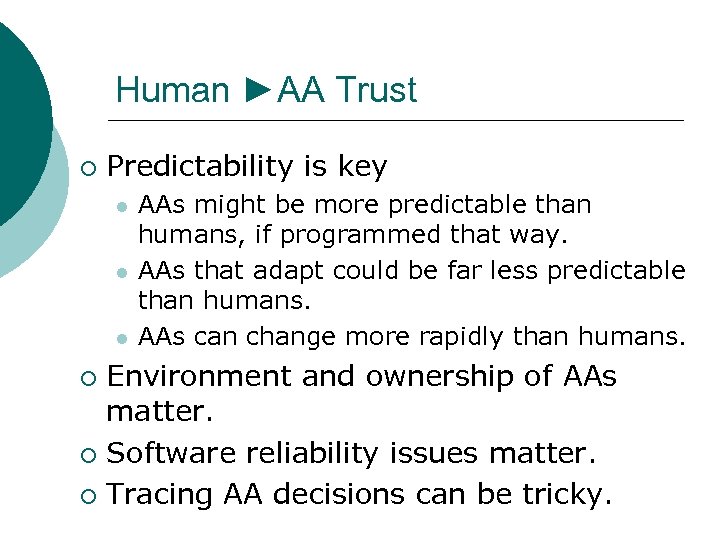

Human ► AA Trust
- Predictability is key:
  - AAs might be more predictable than humans, if programmed that way.
  - AAs that adapt could be far less predictable than humans.
  - AAs can change more rapidly than humans.
- Environment and ownership of AAs matter.
- Software reliability issues matter.
- Tracing AA decisions can be tricky.

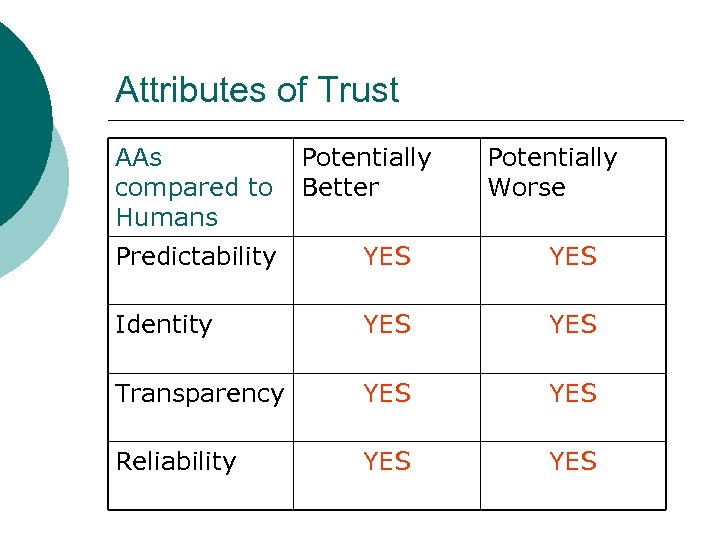

Attributes of Trust: AAs Compared to Humans (Potentially Better / Potentially Worse)
- Predictability: YES
- Identity: YES
- Transparency: YES
- Reliability: YES

AA ► Human “Trust”
- What data will influence an AA that is calculating the risk and benefit of acting out a trust relationship with a human?
- AA developers must make explicit what they value in order to make an AA model trust.
- Records of AA trust decisions and outcomes may be useful in studying human trust decisions and relationships.
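
One way to picture “making explicit what they value” is a hypothetical Python sketch in which the developer's values are separate, named weights and every decision is logged together with its outcome. The `TrustPolicy` and `TrustingAgent` names, the weighted benefit-minus-risk score, and the default weights are all assumptions made for illustration; the slides do not specify how an AA should compute risk and benefit.

```python
from dataclasses import dataclass, field

# Hypothetical sketch: an AA deciding whether to act out a trust relationship
# with a human. The scoring rule and weights are illustrative assumptions.


@dataclass
class TrustPolicy:
    # Developer-chosen values, stated explicitly instead of buried in logic.
    benefit_weight: float = 1.0
    risk_weight: float = 2.0  # this developer weighs avoiding loss above gaining


@dataclass
class TrustingAgent:
    policy: TrustPolicy
    records: list = field(default_factory=list)  # log of decisions and outcomes

    def decide(self, human: str, expected_benefit: float, estimated_risk: float) -> bool:
        # Trust only if weighted benefit exceeds weighted risk.
        score = (self.policy.benefit_weight * expected_benefit
                 - self.policy.risk_weight * estimated_risk)
        trusts = score > 0
        self.records.append(
            {"human": human, "score": score, "trusts": trusts, "outcome": None}
        )
        return trusts

    def record_outcome(self, index: int, positive: bool) -> None:
        # Keep outcomes next to decisions so they can be studied later.
        self.records[index]["outcome"] = positive


agent = TrustingAgent(TrustPolicy())
print(agent.decide("customer-1", expected_benefit=5.0, estimated_risk=1.0))  # True
print(agent.decide("customer-2", expected_benefit=3.0, estimated_risk=2.0))  # False
agent.record_outcome(0, positive=True)
```

Because the weights live in their own object, a reviewer can read off what the developer valued without tracing the decision logic, and `records` provides the kind of decision-and-outcome history the slide suggests could inform studies of human trust.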

AA ◄► AA “Trust”
- Transparency is again important.
- Should an AA “reveal itself” to other AAs in order to encourage trust?
- Trade secrets block transparency and trust.
- AA “third parties” may be called upon to act as intermediaries in AA interactions that require trust.
- The development and testing of AAs involved in trust relationships are difficult.

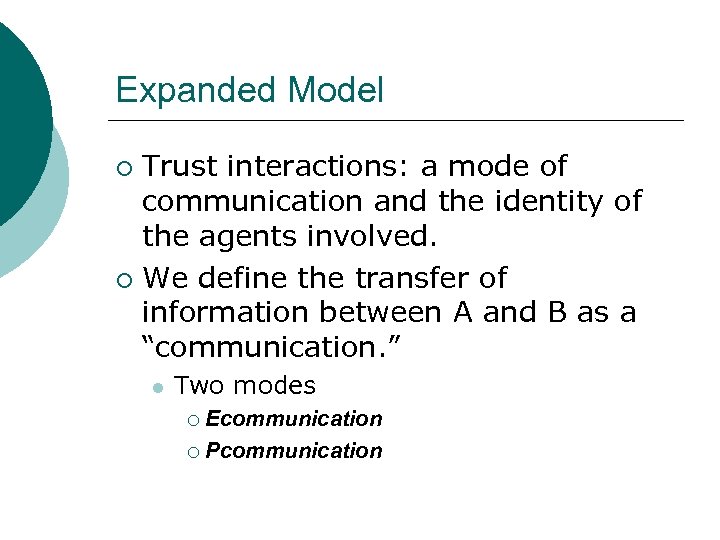

Expanded Model
- Trust interactions involve a mode of communication and the identities of the agents involved.
- We define the transfer of information between A and B as a “communication.”
- Two modes:
  - Ecommunication (electronically mediated)
  - Pcommunication (physical, without electronic mediation)

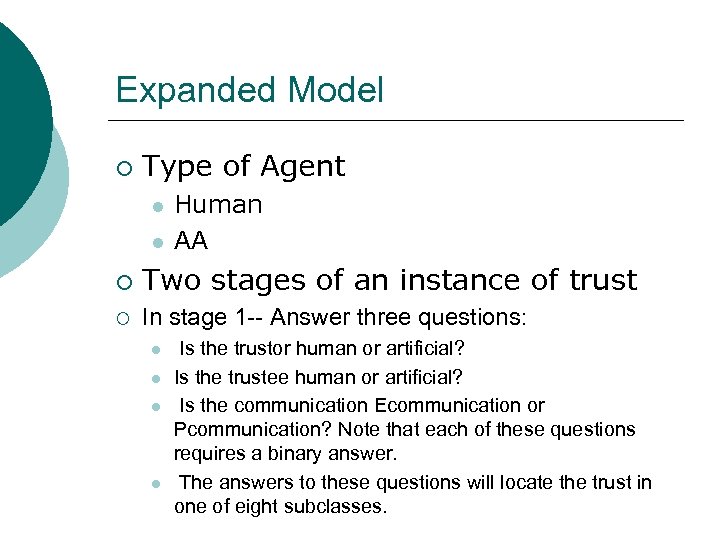

Expanded Model
- Type of agent:
  - Human
  - AA
- Two stages of an instance of trust
- In stage 1, answer three questions:
  - Is the trustor human or artificial?
  - Is the trustee human or artificial?
  - Is the communication Ecommunication or Pcommunication?
Note that each of these questions requires a binary answer. The answers to these questions locate the trust in one of eight (2 × 2 × 2) subclasses.
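
Stage 1 amounts to three binary choices, so it can be sketched as a small Python function. The `Agent` and `Communication` enums and the `classify` function are hypothetical names; the labels follow the HH-Ptrust, HA-Etrust naming the deck uses for its eight subclasses.

```python
from enum import Enum

# Sketch of stage 1: three binary answers locate an instance of trust
# in one of eight subclasses. Names are illustrative assumptions.


class Agent(Enum):
    HUMAN = "H"
    ARTIFICIAL = "A"


class Communication(Enum):
    PHYSICAL = "P"    # Pcommunication
    ELECTRONIC = "E"  # Ecommunication


def classify(trustor: Agent, trustee: Agent, mode: Communication) -> str:
    """Return the subclass label, for example 'HA-Etrust'."""
    return f"{trustor.value}{trustee.value}-{mode.value}trust"


# A human trusting a web bot over the Internet:
print(classify(Agent.HUMAN, Agent.ARTIFICIAL, Communication.ELECTRONIC))  # HA-Etrust
```

Enumerating every combination of the three enums yields exactly eight distinct labels, which is the count the slide derives.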

Expanded Model
- Stage 2:
  - What is the socio-technical context of the relationship between A and B?
  - What is the socio-technical context of the communication between A and B?
- We will use the name “XYZtrust” for a superclass. X and Y classify the trustor and trustee, respectively, as either human (H) or artificial (A); Z specifies whether the trust is established with Pcommunication (P) or Ecommunication (E). Since X, Y, and Z are binary, there are eight possible combinations for XYZ. Each of these eight combinations is a subclass of our superclass.
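
The XYZtrust superclass and its eight subclasses can be sketched in Python by generating one subclass per combination of X, Y, and Z. Creating classes with `type()` is an implementation shortcut assumed here, and the two constructor arguments stand in, hypothetically, for the stage 2 socio-technical contexts.

```python
from itertools import product

# Sketch of the XYZtrust superclass and its eight generated subclasses.
# X, Y, Z each binary gives 2 x 2 x 2 = 8 combinations.


class XYZTrust:
    """Superclass: what is common to all eight kinds of trust relationship."""

    x: str  # trustor: 'H' (human) or 'A' (artificial)
    y: str  # trustee: 'H' or 'A'
    z: str  # mode: 'P' (Pcommunication) or 'E' (Ecommunication)

    def __init__(self, relationship_context: str, communication_context: str) -> None:
        # Stage 2 (assumed representation): free-text socio-technical contexts.
        self.relationship_context = relationship_context
        self.communication_context = communication_context


SUBCLASSES = {}
for x, y, z in product("HA", "HA", "PE"):
    name = f"{x}{y}{z}trust"
    # Each subclass fixes its X, Y, Z values as class attributes.
    SUBCLASSES[name] = type(name, (XYZTrust,), {"x": x, "y": y, "z": z})

print(sorted(SUBCLASSES))
```

Generating the subclasses from `product` keeps the count tied to the binary structure: adding a third value to any of X, Y, or Z would change the number of subclasses automatically.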

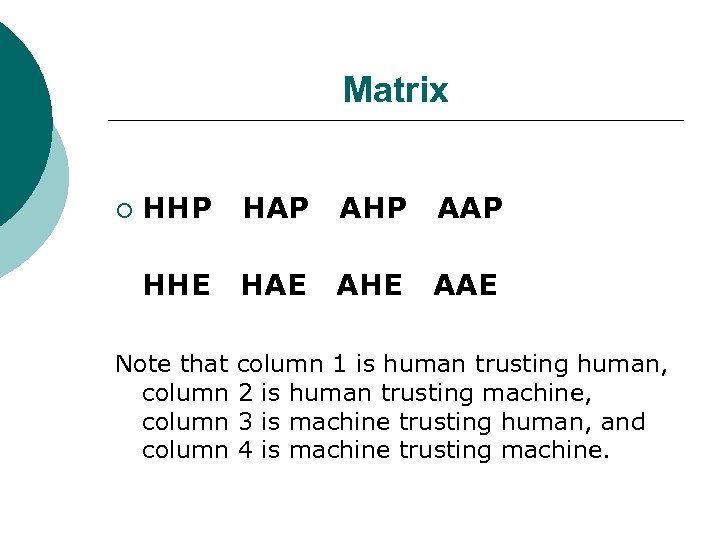

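Matrix

| HH | HA | AH | AA |
|-----|-----|-----|-----|
| HHP | HAP | AHP | AAP |
| HHE | HAE | AHE | AAE |

Note that column 1 is human trusting human, column 2 is human trusting machine, column 3 is machine trusting human, and column 4 is machine trusting machine. The first row is trust established through Pcommunication; the second, through Ecommunication.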

Expanded Model: Eight Subclasses
- HH-Ptrust: the traditional notion of human, “face-to-face” trust
- HH-Etrust: humans trust each other, but mediated by electronic means
- HA-Ptrust: a human trusts a physically present AA, for example, a robot (no electronic mediation)
- HA-Etrust: a human trusts an artificial entity (like a web bot) over the Internet
- AH-Ptrust: an AA, perhaps a robot, trusts a physically present human
- AH-Etrust: an AA, perhaps a web bot, trusts a human based on Internet interactions

Expanded Model
- AA-Ptrust: an AA trusts another AA in a physical encounter; because this is Ptrust, the AAs might, for example, use sign language
- AA-Etrust: an AA trusts another AA electronically, e.g., two web bots communicate via the Internet
- XYZtrust is our superclass, an abstraction that represents what is common to the eight subclasses. Each subclass represents an entire set of possible trust relationships.

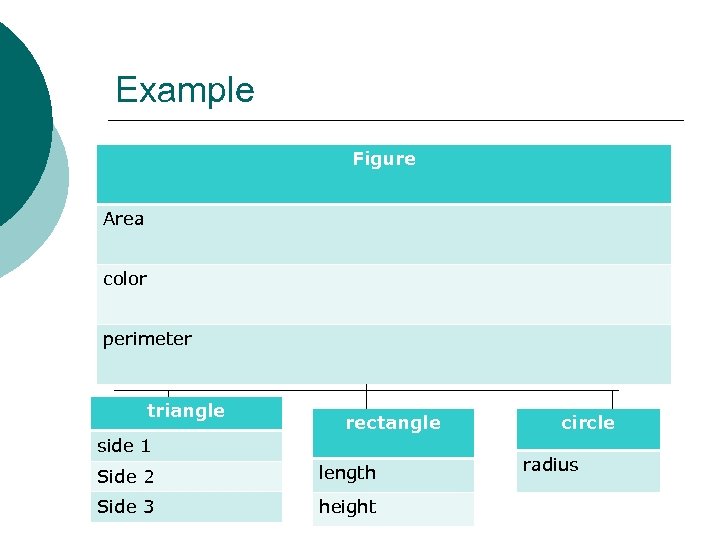

Example
- Figure (superclass): area, color, perimeter
  - triangle: side 1, side 2, side 3
  - rectangle: length, height
  - circle: radius
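
The shape example follows the same superclass/subclass pattern as XYZtrust. A minimal Python version, assuming an abstract base class for Figure and standard geometric formulas (Heron's formula for the triangle), might look like this; the method names are illustrative.

```python
import math
from abc import ABC, abstractmethod

# Sketch of the shape example: Figure holds what all figures share
# (color, area, perimeter); each subclass adds its own attributes.


class Figure(ABC):
    def __init__(self, color: str) -> None:
        self.color = color

    @abstractmethod
    def area(self) -> float: ...

    @abstractmethod
    def perimeter(self) -> float: ...


class Triangle(Figure):
    def __init__(self, color: str, side1: float, side2: float, side3: float) -> None:
        super().__init__(color)
        self.sides = (side1, side2, side3)

    def perimeter(self) -> float:
        return sum(self.sides)

    def area(self) -> float:
        s = self.perimeter() / 2  # semi-perimeter for Heron's formula
        a, b, c = self.sides
        return math.sqrt(s * (s - a) * (s - b) * (s - c))


class Rectangle(Figure):
    def __init__(self, color: str, length: float, height: float) -> None:
        super().__init__(color)
        self.length, self.height = length, height

    def area(self) -> float:
        return self.length * self.height

    def perimeter(self) -> float:
        return 2 * (self.length + self.height)


class Circle(Figure):
    def __init__(self, color: str, radius: float) -> None:
        super().__init__(color)
        self.radius = radius

    def area(self) -> float:
        return math.pi * self.radius ** 2

    def perimeter(self) -> float:
        return 2 * math.pi * self.radius


for f in (Triangle("red", 3, 4, 5), Rectangle("blue", 2, 3), Circle("green", 1)):
    print(type(f).__name__, f.color, round(f.area(), 2), round(f.perimeter(), 2))
```

Code that works with any `Figure` can ask for `area()` without knowing which subclass it holds, just as the superclass XYZtrust captures what all eight trust subclasses have in common.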

Socio-Technical Context
- Any instantiation of an XYZtrust subclass must include a description of the socio-technical contexts of X, Y, and Z.
- Each subclass has characteristics that distinguish it from every other subclass. Each instantiation of that subclass can include a distinctive combination of socio-technical contexts.
- The model does not oversimplify the situations we are discussing; it seeks to organize our thinking about these situations in a coherent fashion.
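
A hypothetical Python sketch of the requirement that every instantiation describe the socio-technical contexts of X, Y, and Z: the constructor refuses to build an instance when any of the three descriptions is blank. The field names and the used-car scenario are illustrative assumptions.

```python
from dataclasses import dataclass

# Sketch: an XYZtrust instance cannot be created without describing the
# socio-technical contexts of X (trustor), Y (trustee), and Z (communication).


@dataclass(frozen=True)
class TrustInstance:
    subclass: str               # e.g. "HAEtrust"
    trustor_context: str        # socio-technical context of X
    trustee_context: str        # socio-technical context of Y
    communication_context: str  # socio-technical context of Z

    def __post_init__(self) -> None:
        # Reject instances whose context descriptions are empty or whitespace.
        for name in ("trustor_context", "trustee_context", "communication_context"):
            if not getattr(self, name).strip():
                raise ValueError(f"{name} must describe a socio-technical context")


buyer = TrustInstance(
    subclass="HAEtrust",
    trustor_context="first-time buyer shopping for a used car",
    trustee_context="sales bot owned and configured by the dealer",
    communication_context="text chat on the dealer's website",
)
```

Two instances of the same subclass can differ entirely in their contexts, which is the sense in which each instantiation carries its own distinctive combination.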

Conclusions
- AAs and humans are distinct.
- Trust relations between humans, between AAs, and between AAs and humans have significant similarities.
- Adaptability in AAs increases risks.
- Predictability, transparency, and traceability will enhance AA trustworthiness.
