57d285fab626d399fdd545c0390bfd75.ppt

- Number of slides: 31

Designing clustering methods for ontology building: The Mo’K workbench

Authors: Gilles Bisson, Claire Nédellec and Dolores Cañamero

Presenter: Ovidiu Fortu

INTRODUCTION

- Paper objectives:
  - Present a workbench for developing and evaluating methods that learn ontologies
  - Report experimental results that illustrate the suitability of the model for characterizing methods that learn semantic classes

INTRODUCTION

- General strategy for ontology building:
  - Define a distance metric (as good an approximation of the semantic distance as possible)
  - Devise or reuse a classification algorithm that uses this distance to build the ontology

Harris’ hypothesis

- Formulation: the study of syntactic regularities leads to the identification of syntactic schemata made of combinations of word classes that reflect specific domain knowledge
- Consequence: similarity can be measured using co-occurrence in syntactic patterns

Conceptual clustering

- Ontologies are organized as acyclic graphs:
  - Nodes represent concepts
  - Links represent inclusion (generality relation)
- The methods considered in this paper rely on bottom-up construction of the graph

The Mo’K model

- Representation of examples:
  - Binary syntactic patterns of the form <head – grammatical relation – modifier head>, where <modifier head> is the object and the rest of the pattern is the attribute
- Example:
  - This causes a decrease in […]
  - <cause Dobj decrease>

Clustering

- Bottom-up clustering by joining classes that are near each other:
  - Join classes of objects (nouns or actions, i.e. tuples <verb, relation>) that are frequently determined by the same attributes
  - Join attribute classes that frequently determine the same objects

Corpora

- Specialized corpora are used for domain-specific ontologies
- Corpora are pruned (rare examples are eliminated); the workbench allows the specification of:
  - Minimum number of occurrences for a pattern to be considered
  - Minimum number of occurrences for an attribute/object to be considered
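
The pruning described on this slide can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `prune_corpus`, the representation of an example as an `(attribute, object)` pair, and the single-pass order (patterns first, then attributes and objects) are hypothetical and are not taken from the Mo’K workbench.

```python
from collections import Counter

def prune_corpus(examples, min_pattern=2, min_item=2):
    """Drop rare patterns, then rare attributes and objects.

    examples: list of (attribute, object) pairs, one per occurrence,
    e.g. (("cause", "Dobj"), "decrease"). Both thresholds are the two
    minimum-occurrence parameters named on the slide; everything else
    (names, data layout, single pass) is an assumption of this sketch.
    """
    # Threshold 1: minimum number of occurrences of a whole pattern.
    pattern_counts = Counter(examples)
    kept = [ex for ex in examples if pattern_counts[ex] >= min_pattern]

    # Threshold 2: minimum number of occurrences of each attribute/object.
    attr_counts = Counter(attr for attr, _ in kept)
    obj_counts = Counter(obj for _, obj in kept)
    return [
        (attr, obj)
        for attr, obj in kept
        if attr_counts[attr] >= min_item and obj_counts[obj] >= min_item
    ]
```

Counting per occurrence rather than per distinct pattern keeps the frequencies available for the later weighting phase.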

Distance modeling

- Consider only distances that:
  - Take syntactic analysis as input
  - Do not use other ontologies (like WordNet)
  - Are based on the distributions of the attributes of an object
- Identify the general steps in the computation of these distances to formulate a general model

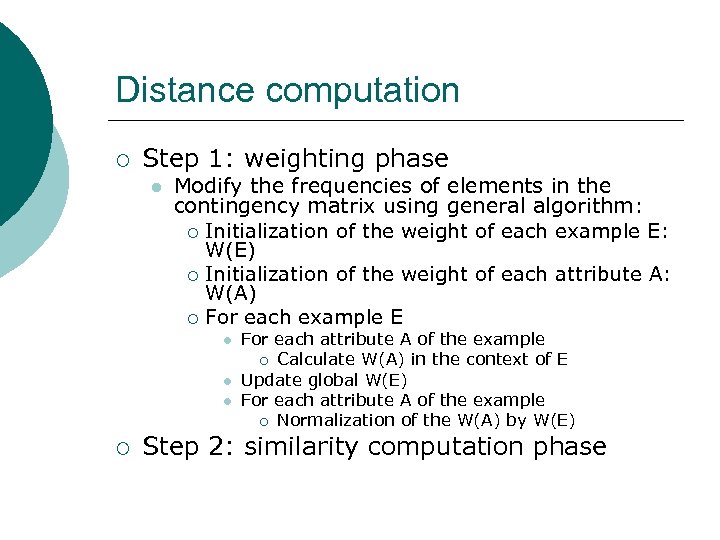

Distance computation

- Step 1: weighting phase
  - Modify the frequencies of elements in the contingency matrix using a general algorithm:
    - Initialize the weight of each example E: W(E)
    - Initialize the weight of each attribute A: W(A)
    - For each example E:
      - For each attribute A of the example: calculate W(A) in the context of E
      - Update the global W(E)
      - For each attribute A of the example: normalize W(A) by W(E)
- Step 2: similarity computation phase
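
A minimal Python sketch of the two steps on this slide. The slide names only the generic loop, so several things here are assumptions made for illustration: the contingency matrix stored as a dict of dicts, the function names `weight_matrix` and `cosine_similarity`, raw frequency as the default weight W(A), and cosine as the Step 2 similarity. Other distances would plug in different functions.

```python
import math

def weight_matrix(contingency, attr_weight=float):
    """Step 1 (weighting): contingency is {example: {attribute: frequency}}.

    attr_weight computes W(A) in the context of E from the raw frequency;
    the default (raw frequency) is an assumption, not the paper's choice.
    """
    weighted = {}
    for example, attrs in contingency.items():
        w_e = 0.0   # W(E), initialised for this example
        w_a = {}    # W(A) for each attribute of this example
        for attr, freq in attrs.items():
            w_a[attr] = attr_weight(freq)   # W(A) in the context of E
            w_e += w_a[attr]                # update the global W(E)
        # Normalise each W(A) by W(E).
        weighted[example] = {
            attr: (w / w_e if w_e else 0.0) for attr, w in w_a.items()
        }
    return weighted

def cosine_similarity(u, v):
    """Step 2 (similarity): cosine between two weighted attribute vectors."""
    dot = sum(u[a] * v[a] for a in u.keys() & v.keys())
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0
```

Passing the weighting function as a parameter mirrors the slide's point that different distances share the same skeleton and differ only in how W(A) and the similarity are computed.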

Distance evaluation

- The workbench provides support for the evaluation of metrics
- The procedure is:
  - Divide the corpus into training and test sets
  - Perform clustering on the training set
  - Use the similarities computed on the training set to classify examples in the test set, and compute precision and recall; negative examples are produced by randomly combining objects and attributes
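
The evaluation procedure can be sketched as follows. This is a hedged illustration, not the workbench's code: the names `make_negatives` and `precision_recall`, the `accepts` predicate standing in for the learned classifier, and the decision to exclude pairs already seen as positives are all assumptions.

```python
import random

def make_negatives(positives, n, seed=0):
    """Build negative examples by randomly combining attributes and objects.

    positives: list of (attribute, object) pairs observed in the corpus.
    Pairs that already occur as positives are skipped (an assumption).
    """
    rng = random.Random(seed)
    known = set(positives)
    attrs = sorted({attr for attr, _ in positives})
    objs = sorted({obj for _, obj in positives})
    # Never ask for more negatives than there are unseen combinations.
    n = min(n, len(attrs) * len(objs) - len(known))
    negatives = set()
    while len(negatives) < n:
        candidate = (rng.choice(attrs), rng.choice(objs))
        if candidate not in known:
            negatives.add(candidate)
    return sorted(negatives)

def precision_recall(accepts, positives, negatives):
    """accepts(example) -> bool is a stand-in for the learned classifier."""
    tp = sum(1 for ex in positives if accepts(ex))
    fp = sum(1 for ex in negatives if accepts(ex))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / len(positives) if positives else 0.0
    return precision, recall
```

In use, `positives` would be the held-out test examples and `accepts` would be derived from the classes learned on the training set.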

Experiments

- Purpose: evaluate Mo’K’s parameterization possibilities and the impact of the parameters on results
- Corpora: two French corpora
  - One with cooking recipes from the Web: nearly 50000 examples
  - One with agricultural data (Agrovoc): 168287 examples

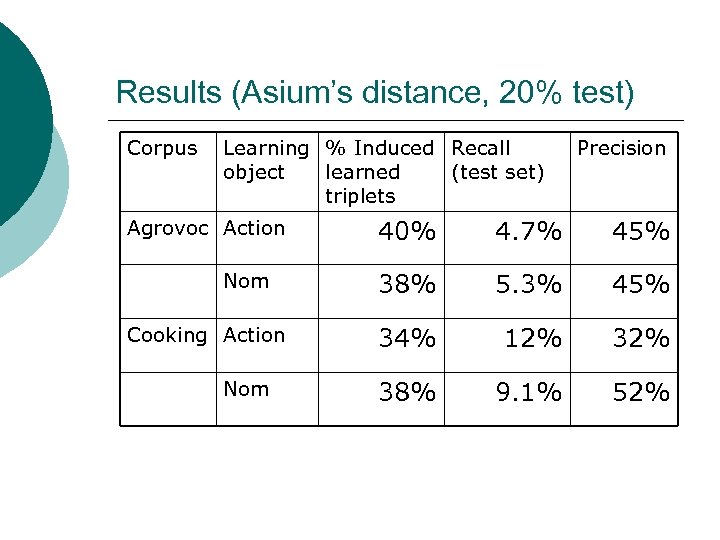

Results (Asium’s distance, 20% test)

Corpus Learning % Induced Recall object learned (test set) triplets Agrovoc Action Nom Cooking Action Nom Precision 40% 4.7% 45% 38% 5.3% 45% 34% 12% 38% 9.1% 52%

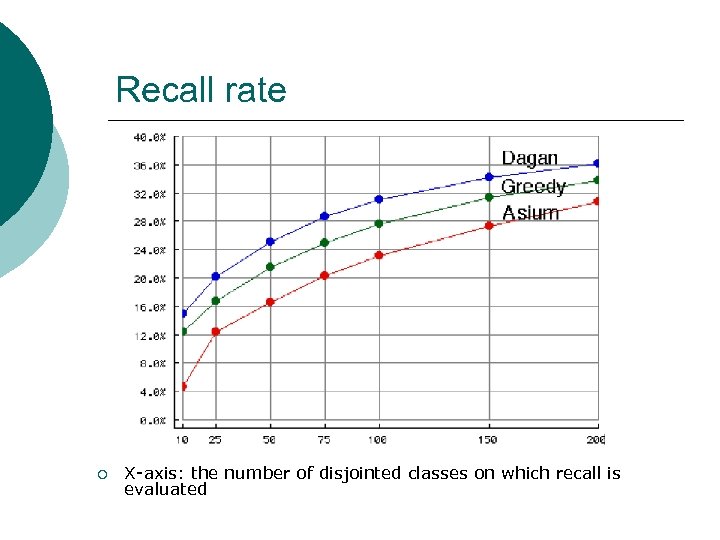

Recall rate

- X-axis: the number of disjoint classes on which recall is evaluated

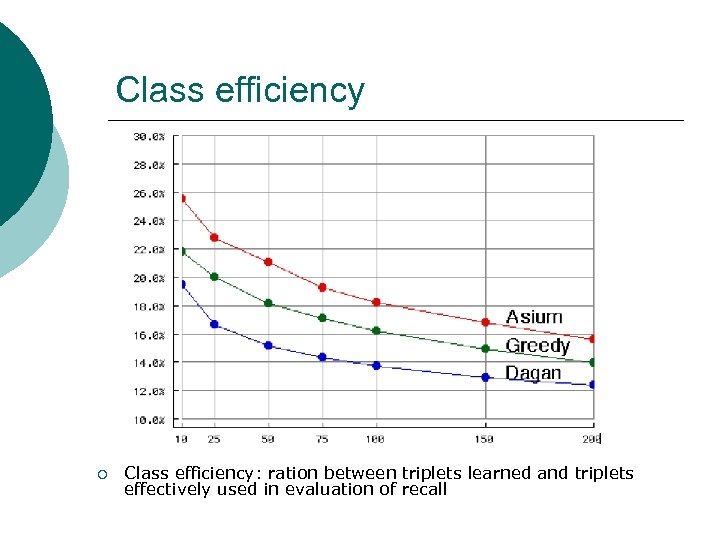

Class efficiency

- Class efficiency: ratio between triplets learned and triplets effectively used in the evaluation of recall

Conclusions

- Comments?
- Questions?

Ontology Learning and Its Application to Automated Terminology Translation

Authors: Roberto Navigli, Paola Velardi and Aldo Gangemi

Presenter: Ovidiu Fortu

Introduction

- Paper objectives:
  - Present OntoLearn, a system for the automated construction of ontologies by extracting relevant domain terms from text corpora
  - Present the use of OntoLearn in the task of translating multiword terms from English to Italian

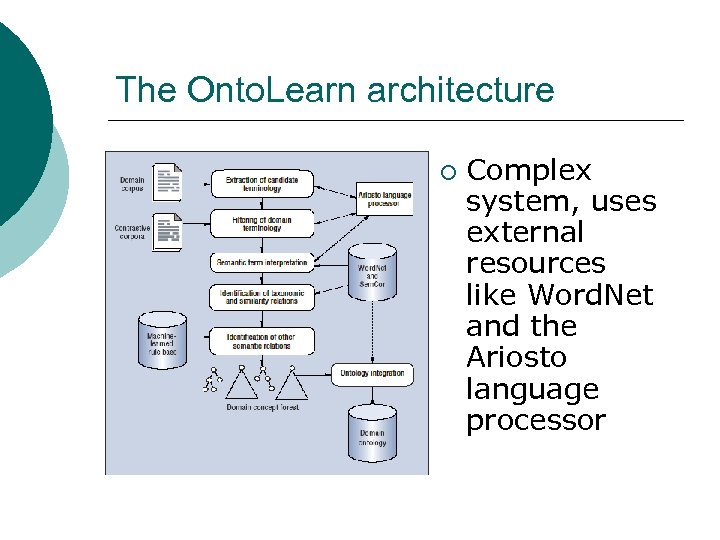

The OntoLearn architecture

- Complex system that uses external resources such as WordNet and the Ariosto language processor

OntoLearn

- Important new feature:
  - Semantic interpretation of terms (word sense disambiguation)
- Three main phases:
  - Terminology extraction
  - Semantic interpretation
  - Creation of a specialized view of WordNet

Terminology extraction

- Terms are selected with shallow stochastic methods
- Quality is better if syntactic features are used
- High frequency in a corpus is not necessarily sufficient:
  - credit card: is a term
  - last week: not a term

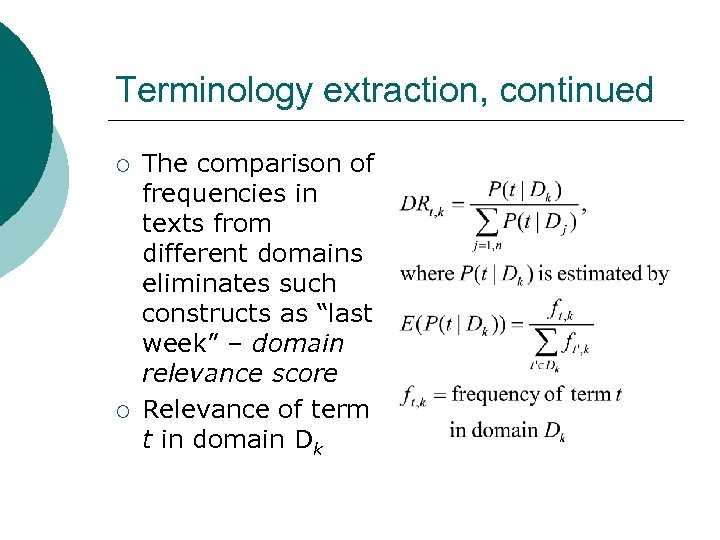

Terminology extraction, continued

- Comparing frequencies in texts from different domains eliminates constructs such as “last week” (domain relevance score)
- Relevance of term t in domain Dk:

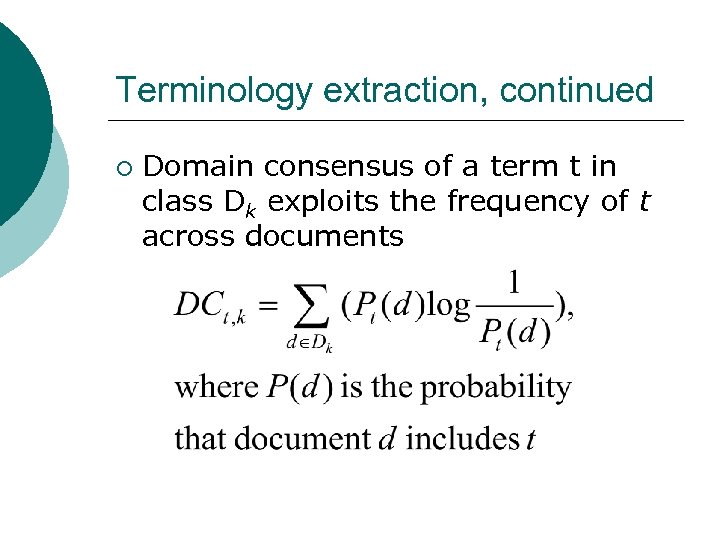
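
The formula on this slide survives only as an image. A formulation commonly given for this score in the OntoLearn literature, and assumed here rather than read from the slide, divides the probability of the term in the target domain by its highest probability in any domain: DR(t, Dk) = P(t | Dk) / max_j P(t | Dj), with probabilities estimated from relative frequencies. The function name, the data layout and the toy counts below are hypothetical.

```python
def domain_relevance(term, domain, freqs):
    """Assumed form: DR(t, Dk) = P(t | Dk) / max_j P(t | Dj).

    freqs: {domain_name: {term: count}}; P(t | D) is estimated as the
    relative frequency of t among all candidate terms counted in D.
    """
    def prob(t, d):
        total = sum(freqs[d].values())
        return freqs[d].get(t, 0) / total if total else 0.0

    best = max(prob(term, d) for d in freqs)
    return prob(term, domain) / best if best else 0.0

# Toy counts, invented for illustration only.
toy_freqs = {
    "tourism": {"credit card": 8, "last week": 2},
    "news": {"credit card": 1, "last week": 9},
}
credit_card_score = domain_relevance("credit card", "tourism", toy_freqs)
last_week_score = domain_relevance("last week", "tourism", toy_freqs)
```

With these toy counts, "credit card" scores higher than "last week" in the tourism domain, because "last week" is far more frequent elsewhere.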

Terminology extraction, continued

- Domain consensus of a term t in class Dk exploits the frequency of t across documents

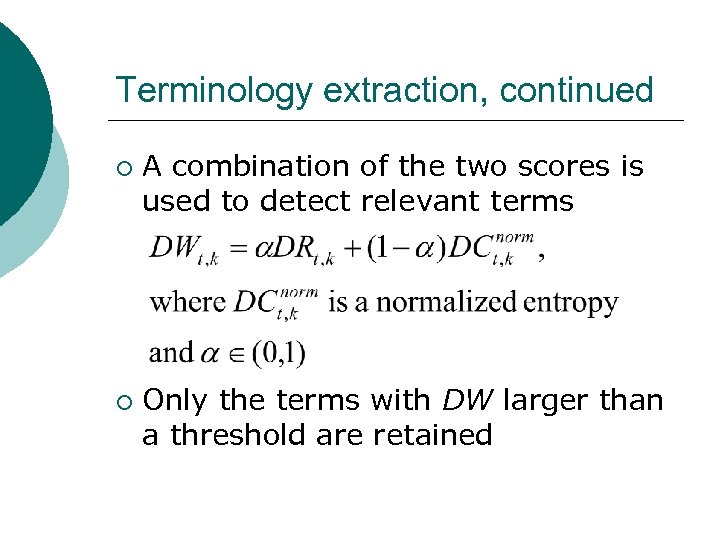
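
The consensus formula is likewise only in the image. One formulation used in the OntoLearn literature, assumed here, is the entropy of the term's distribution over the documents of the domain: DC(t, Dk) = Σ_d P_t(d) · log(1 / P_t(d)). A term spread evenly over many documents scores high; a term concentrated in one document scores zero. The function name and the input layout are hypothetical.

```python
import math

def domain_consensus(doc_freqs):
    """Assumed form: entropy of the term's distribution across documents.

    doc_freqs: occurrences of the term in each document of the domain.
    P_t(d) is estimated as the count in d divided by the total count.
    """
    total = sum(doc_freqs)
    if total == 0:
        return 0.0
    # (f / total) * log(total / f) equals P * log(1 / P).
    return sum((f / total) * math.log(total / f) for f in doc_freqs if f > 0)
```

The natural logarithm is used here; the base only rescales the score, and any normalisation to a fixed range would be a further assumption.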

Terminology extraction, continued

- A combination of the two scores is used to detect relevant terms
- Only the terms with DW larger than a threshold are retained
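
A small sketch of the filtering step. The slide says only that "a combination" of the two scores is used; the linear form DW = α·DR + (1 − α)·DC, the parameter name `alpha`, the default values and the function name are assumptions for illustration.

```python
def select_terms(scores, alpha=0.5, threshold=0.5):
    """Keep the terms whose combined score DW exceeds the threshold.

    scores: {term: (domain_relevance, domain_consensus)}.
    The linear combination below is an assumed form of the combination.
    """
    selected = {}
    for term, (dr, dc) in scores.items():
        dw = alpha * dr + (1 - alpha) * dc
        if dw > threshold:
            selected[term] = dw
    return selected
```

For example, with invented scores `{"credit card": (1.0, 0.8), "last week": (0.2, 0.6)}` and the defaults above, only "credit card" is retained.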

Semantic interpretation

- Step 1: create semantic nets for every word wk ∈ t and any synset of wk by following all WordNet links, but limiting the path length to 3 (after disambiguation of words)
- Step 2: intersect the networks and compute a score based on the number and type of semantic patterns connecting the networks
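
The two steps can be illustrated on a toy graph. This sketch does not call WordNet: the adjacency dict stands in for WordNet links, the node names are placeholders, and the function name is hypothetical. It also only finds the nodes shared by two nets; the actual score depends on the number and type of semantic patterns, which is not modelled here.

```python
from collections import deque

def semantic_net(graph, start, max_depth=3):
    """Collect every node reachable from start in at most max_depth links.

    graph: {node: [linked nodes]}, a stand-in for WordNet relations.
    """
    depth = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if depth[node] == max_depth:
            continue  # path length limit reached, do not expand further
        for neighbour in graph.get(node, ()):
            if neighbour not in depth:
                depth[neighbour] = depth[node] + 1
                queue.append(neighbour)
    return set(depth)

# Placeholder graph: "a" and "x" play the role of two word senses.
toy_graph = {"a": ["b"], "b": ["c"], "c": ["d"], "d": ["e"], "x": ["c"]}
net_a = semantic_net(toy_graph, "a")
net_x = semantic_net(toy_graph, "x")
shared = net_a & net_x  # Step 2: intersect the two networks
```

Breadth-first search guarantees that each node is recorded at its shortest distance from the start, so the depth limit is applied correctly.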

Semantic interpretation, continued

- Semantic patterns are instances of 13 predefined metapatterns
- Example:
  - Topic, as in archeological site
- Compute the score for all possible pairs (Sik is sense k of word i in the term)

Semantic interpretation, continued

- Use the common paths in the semantic networks to detect semantic relations (taxonomic knowledge) between concepts:
  - Select a set of domain-specific semantic relations
  - Use inductive learning to learn semantic relations given ontological knowledge
  - Apply the model to detect semantic relations
- Errors from the disambiguation phase can be corrected here

Creation of a specialized view of WordNet

- In the last phase of the process:
  - Construct the ontology by eliminating from the semantic networks the WordNet nodes that are not domain terms
  - A domain core ontology can also be used as a backbone

Translating multiword terms

- Classic approach: use of parallel corpora
  - Advantage: easy to implement
  - Disadvantage: few such corpora, especially in specific domains
- OntoLearn-based solution:
  - Use EuroWordNet and build ontologies in both languages, associating them to synsets

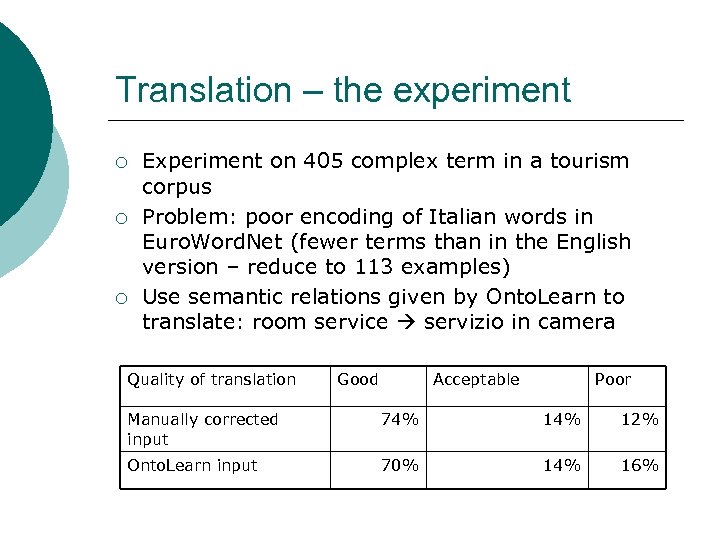

Translation – the experiment

- Experiment on 405 complex terms in a tourism corpus
- Problem: poor encoding of Italian words in EuroWordNet (fewer terms than in the English version), which reduces the set to 113 examples
- Use semantic relations given by OntoLearn to translate: room service → servizio in camera

| Quality of translation | Good | Acceptable | Poor |
|---|---|---|---|
| Manually corrected input | 74% | 12% | |
| OntoLearn input | 70% | 14% | 16% |

Conclusions

- Questions?
- Comments?
