- Number of slides: 30

Design and Implementation of a Generic Resource-Sharing Virtual-Time Dispatcher

Tal Ben-Nun, School of Eng. & CS, Hebrew University
Yoav Etsion, CS Dept., Barcelona Supercomputing Center
Dror Feitelson, School of Eng. & CS, Hebrew University

Supported by the Israel Science Foundation, grant no. 28/09

Design and Implementation of a Generic Resource-Sharing Virtual-Time Dispatcher
• The goal is to control the share of resources, not to optimize performance; this is important in virtualization
• The same module is used for diverse resources
• Mechanism: dispatch the most deserving client at each instant
• The deserving client is selected using the virtual-time formalism
• Implemented and measured in Linux

Motivation
• Context: VMM for server consolidation
  – Multiple legacy servers share a physical platform
  – Improved utilization and easier maintenance
  – Flexibility in allocating resources to virtual machines
• Virtual machines typically run a single application (“appliances”)

Motivation
• Assumed goal: enforce a predefined allocation of resources to different virtual machines (“fair share” scheduling)
  – Based on importance / SLA
  – Can change with time or due to external events
• Problem: what does “30% of the resources” mean when there are many different resources and diverse requirements?

Global Scheduling
• “Fair share” is usually applied to a single resource
• But what if this resource is not a bottleneck?
• Global scheduling idea:
  1) Identify the system bottleneck resource
  2) Apply fair-share scheduling on this resource
  3) This induces appropriate allocations on the other resources
• This paper: how to apply fair-share scheduling on any resource in the system

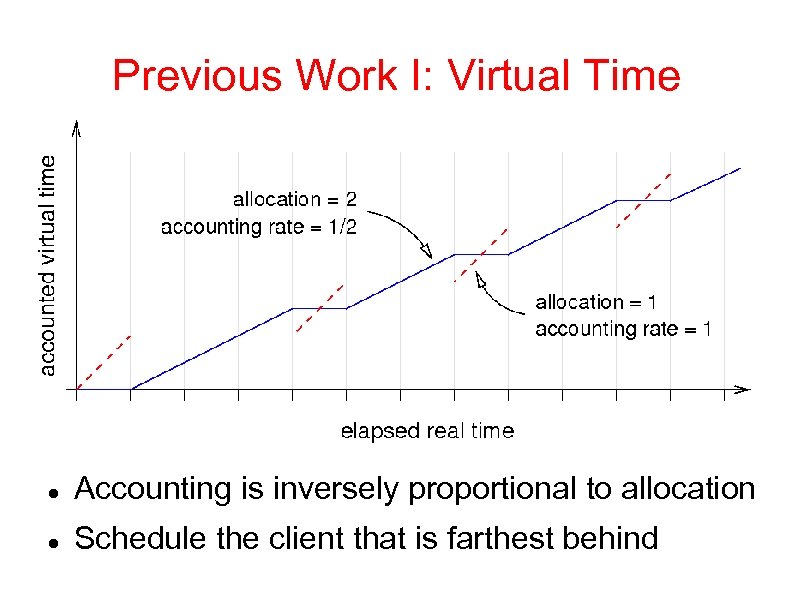

Previous Work I: Virtual Time
• Accounting is inversely proportional to allocation
• Schedule the client that is farthest behind
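The virtual-time idea can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes each client has a fixed share, charges one quantum of consumption to the chosen client, and advances that client's virtual time by the quantum divided by its share. The names `VTClient` and `run_quanta` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VTClient:
    name: str
    share: float           # relative allocation (hypothetical parameter)
    vtime: float = 0.0     # virtual time: consumption scaled by 1 / share
    consumed: float = 0.0  # actual resource time consumed


def run_quanta(clients, n, quantum=1.0):
    """Run n quanta, each time picking the client that is farthest behind."""
    for _ in range(n):
        # Smallest virtual time = farthest behind; ties go to the earlier client.
        c = min(clients, key=lambda cl: cl.vtime)
        c.consumed += quantum
        # Accounting is inversely proportional to allocation.
        c.vtime += quantum / c.share
    return {cl.name: cl.consumed for cl in clients}


usage = run_quanta([VTClient("A", 2.0), VTClient("B", 1.0)], 300)
print(usage)  # {'A': 200.0, 'B': 100.0}
```

With shares in a 2:1 ratio, client A ends up with twice as much resource time as B, because each unit A consumes advances its virtual time only half as fast.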

Previous Work II: Traffic Shaping
• Leaky bucket
  – Variable requests
  – Constant-rate transmission
  – Bucket represents a buffer
• Token bucket
  – Variable requests
  – Constant allocations
  – Bucket represents stored capacity
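The token-bucket variant can be sketched in a few lines. This is a generic illustration under stated assumptions (tokens accrue continuously at `rate` per second, the bucket starts full, and a request of size `n` needs `n` tokens); `TokenBucket` and its parameters are hypothetical names.

```python
class TokenBucket:
    """Tokens accrue at a constant rate up to a capacity (stored capacity);
    a request of size n is admitted only if n tokens are available."""

    def __init__(self, rate, capacity):
        self.rate = rate          # tokens added per second (constant allocation)
        self.capacity = capacity  # maximum stored capacity
        self.tokens = capacity    # assume the bucket starts full
        self.last = 0.0           # time of the previous update

    def allow(self, now, n=1):
        # Accrue tokens for the elapsed time, capped at the bucket capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= n:
            self.tokens -= n
            return True
        return False


tb = TokenBucket(rate=10, capacity=5)
burst = [tb.allow(0.0) for _ in range(7)]  # a burst of 7 requests at t = 0
later = tb.allow(0.1)                      # 0.1 s later, one token has accrued
print(burst, later)  # [True, True, True, True, True, False, False] True
```

The stored capacity absorbs a burst of five requests; after that, admissions are limited to the constant token rate.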

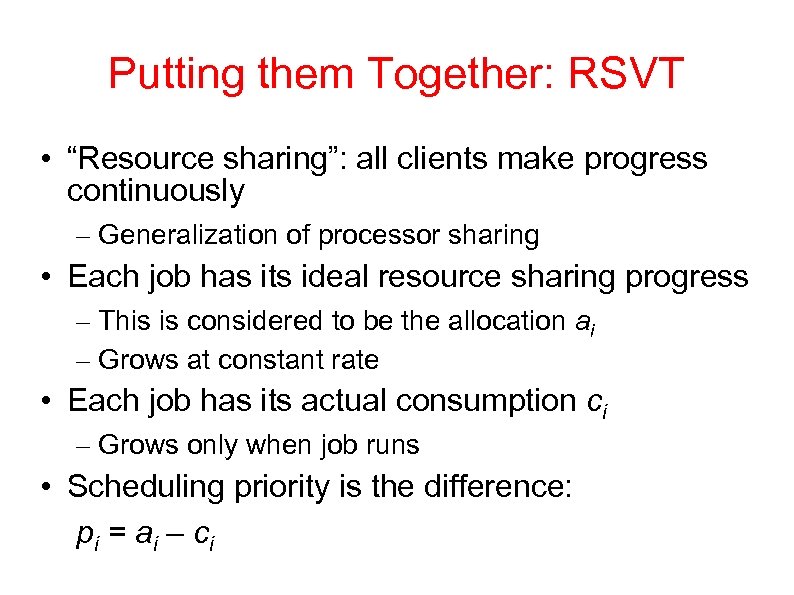

Putting Them Together: RSVT
• “Resource sharing”: all clients make progress continuously
  – Generalization of processor sharing
• Each job has its ideal resource-sharing progress
  – This is considered to be the allocation $a_i$
  – Grows at a constant rate
• Each job has its actual consumption $c_i$
  – Grows only when the job runs
• Scheduling priority is the difference: $p_i = a_i - c_i$
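A discrete-time sketch of the RSVT rule, assuming unit-length quanta, a fixed active set, and relative allocations that sum to 1. At every quantum the job with the largest $p_i = a_i - c_i$ runs. `rsvt_simulate` is a hypothetical helper, not part of the described kernel module.

```python
def rsvt_simulate(shares, ticks, dt=1.0):
    """Discrete-time sketch of resource-sharing virtual time (RSVT).

    shares: dict mapping job -> relative allocation (assumed to sum to 1)
    Returns the resource time consumed by each job.
    """
    a = {j: 0.0 for j in shares}  # allocation: ideal resource-sharing progress
    c = {j: 0.0 for j in shares}  # actual consumption
    for _ in range(ticks):
        # Dispatch the most deserving job: highest priority p = a - c.
        k = max(shares, key=lambda j: a[j] - c[j])
        c[k] += dt  # consumption grows only for the job that runs
        for j in shares:
            a[j] += shares[j] * dt  # allocations grow at a constant rate
    return c


used = rsvt_simulate({"A": 0.5, "B": 0.3, "C": 0.2}, 1000)
fractions = {j: used[j] / 1000 for j in used}
print(fractions)  # each value should be close to 0.5, 0.3 and 0.2
```

With the roughly 50/30/20 split used in the example slide, each job's consumed fraction stays close to its allocation, since any job that falls behind accumulates the largest priority and is dispatched next.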

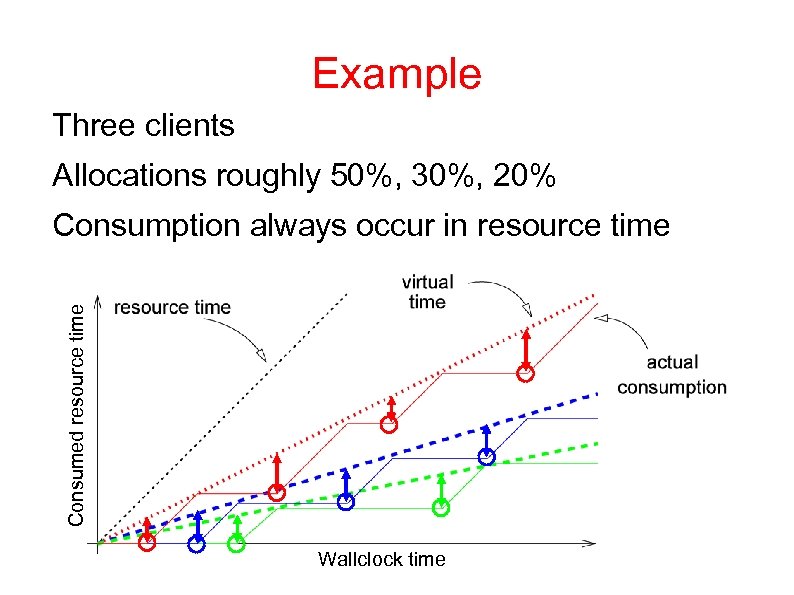

Example
• Three clients, with allocations of roughly 50%, 30%, and 20%
• Consumption always occurs in resource time
(Figure: consumed resource time vs. wallclock time for the three clients)

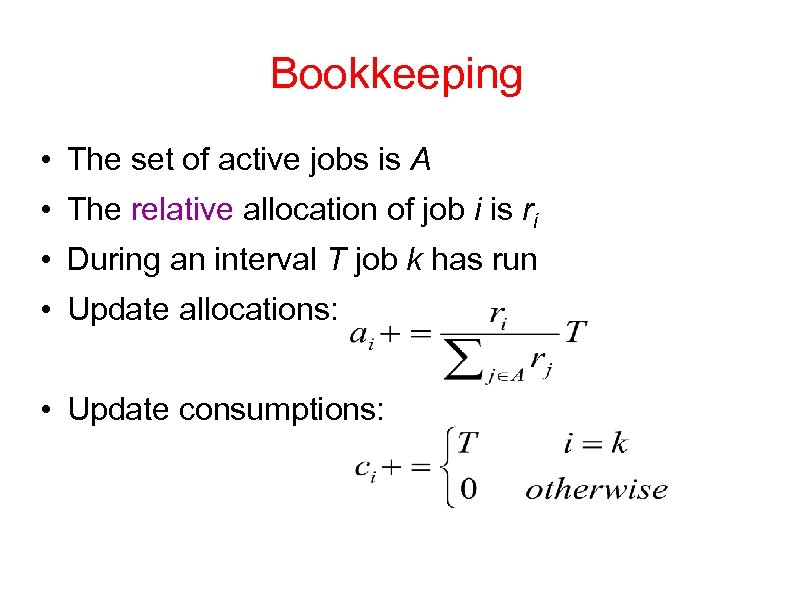

Bookkeeping
• The set of active jobs is $A$
• The relative allocation of job $i$ is $r_i$
• During an interval $T$, job $k$ has run
• Update allocations:
• Update consumptions:
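The update formulas appear only in the slide graphic. A plausible reconstruction from the definitions above, offered as an assumption rather than the authors' exact notation: every active job's allocation grows by its normalized share of the interval, and only the job that ran is charged for it.

$$
a_i \leftarrow a_i + T\,\frac{r_i}{\sum_{j \in A} r_j} \qquad \text{for all } i \in A
$$

$$
c_k \leftarrow c_k + T
$$

If the $r_i$ are already normalized over $A$, the denominator equals 1 and each allocation simply grows by $T r_i$.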

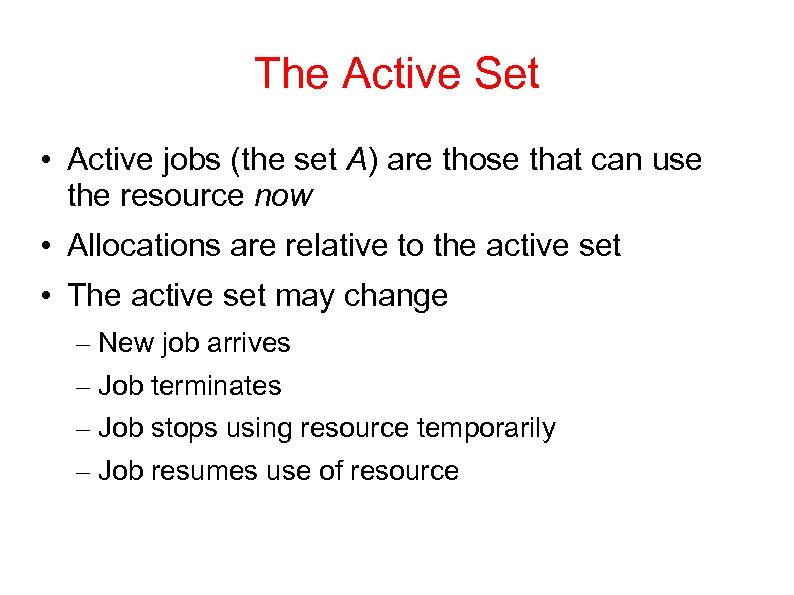

The Active Set
• Active jobs (the set $A$) are those that can use the resource now
• Allocations are relative to the active set
• The active set may change when:
  – A new job arrives
  – A job terminates
  – A job stops using the resource temporarily
  – A job resumes use of the resource
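To illustrate why allocations are relative to the active set, the following sketch assumes that configured weights are renormalized over whichever jobs are currently active (`relative_shares` is a hypothetical helper). It shows how the remaining jobs' shares grow when one job leaves $A$.

```python
def relative_shares(weights, active):
    """Normalize configured weights over the active set only.

    weights: dict mapping job -> configured allocation
    active:  set of jobs that can use the resource now
    """
    total = sum(weights[j] for j in active)
    # Iterate over weights (not the set) so the output order is deterministic.
    return {j: weights[j] / total for j in weights if j in active}


weights = {"A": 50, "B": 30, "C": 20}
all_active = relative_shares(weights, {"A", "B", "C"})
a_stopped = relative_shares(weights, {"B", "C"})  # A stopped using the resource
print(all_active)  # {'A': 0.5, 'B': 0.3, 'C': 0.2}
print(a_stopped)   # {'B': 0.6, 'C': 0.4}
```

B and C keep their 3:2 ratio to each other, but together they now receive the whole resource.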

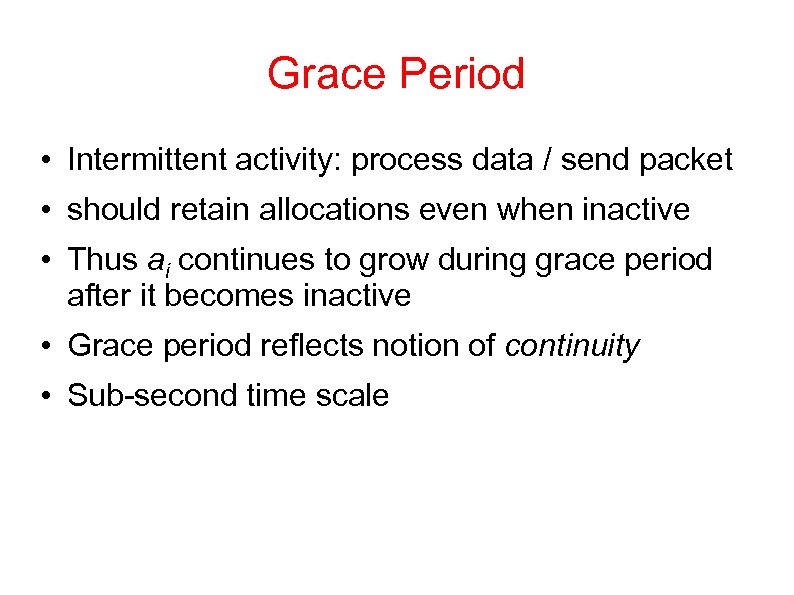

Grace Period
• A job with intermittent activity (e.g., process data, then send a packet) should retain its allocation even while briefly inactive
• Thus $a_i$ continues to grow during a grace period after job $i$ becomes inactive
• The grace period reflects a notion of continuity
• Sub-second time scale

Rebirth
• Resumption after a very long inactive period should be treated as a new arrival
• Due to the grace period, a job that becomes inactive accrues extra allocation
• Forget this extra allocation after the rebirth period (set $a_i = c_i$)
• The rebirth period is two orders of magnitude larger than the grace period
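The grace-period and rebirth rules can be sketched as a per-tick update. The constants are hypothetical values chosen only to respect the stated scales (grace period sub-second, rebirth period two orders of magnitude larger). Times are integer milliseconds to avoid rounding, and each job's share is assumed fixed.

```python
from dataclasses import dataclass
from typing import Optional

GRACE_MS = 100       # hypothetical grace period (sub-second scale)
REBIRTH_MS = 10_000  # hypothetical rebirth period, 100x the grace period
TICK_MS = 4          # one 250 Hz timer tick


@dataclass
class Job:
    share: float                          # relative allocation (assumed fixed)
    a: float = 0.0                        # allocation, ms of resource time
    c: float = 0.0                        # consumption, ms of resource time
    inactive_since: Optional[int] = None  # ms timestamp; None while active


def on_tick(job, now_ms):
    """Per-tick allocation update with grace period and rebirth (sketch)."""
    if job.inactive_since is None:
        job.a += job.share * TICK_MS  # active: allocation grows
        return
    idle = now_ms - job.inactive_since
    if idle < GRACE_MS:
        job.a += job.share * TICK_MS  # within grace period: still accrues
    elif idle >= REBIRTH_MS:
        job.a = job.c                 # rebirth: forget the extra allocation
    # Between the two periods the allocation is simply frozen.


job = Job(share=0.5, a=10.0, c=10.0, inactive_since=0)
for now in range(TICK_MS, 200 + 1, TICK_MS):  # 200 ms of inactivity
    on_tick(job, now)
after_grace = job.a  # 24 ticks fall inside the grace period: 10 + 24 * 2
on_tick(job, 10_000)  # inactive for the whole rebirth period
print(after_grace, job.a)  # 58.0 10.0
```

The 48 ms of extra allocation accrued during the grace period survives short pauses but is discarded once the job has been idle for the rebirth period.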

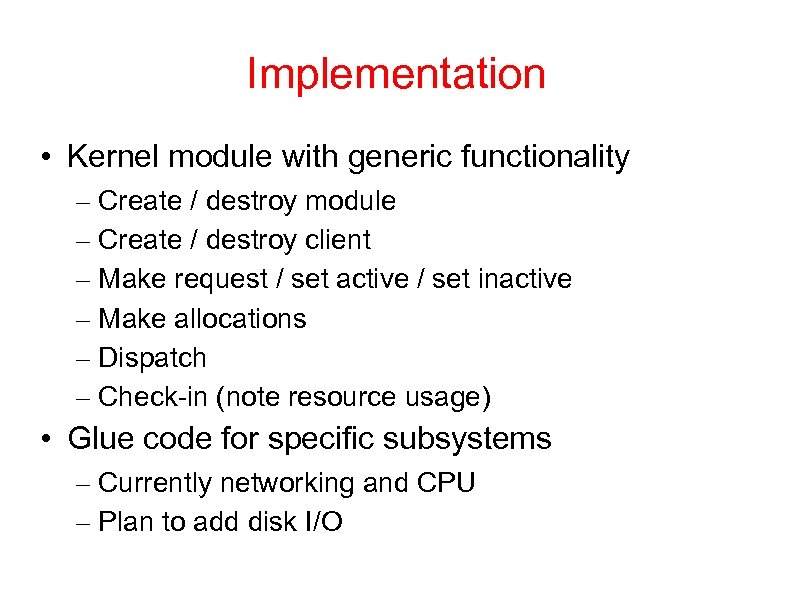

Implementation
• Kernel module with generic functionality
  – Create / destroy module
  – Create / destroy client
  – Make request / set active / set inactive
  – Make allocations
  – Dispatch
  – Check-in (note resource usage)
• Glue code for specific subsystems
  – Currently networking and CPU
  – Plan to add disk I/O
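To show how glue code might drive the generic operations listed above, here is a toy user-space mirror of them. The class and method names are hypothetical stand-ins for the kernel module's interface, which the slides do not spell out. Allocations are divided by relative weight over the active set, and dispatch picks the client with the highest $a_i - c_i$.

```python
class RSVTDispatcher:
    """Toy user-space mirror of the generic module operations (sketch).

    Method names are hypothetical; the real module is a Linux kernel module.
    """

    def __init__(self):
        self.weights = {}    # client -> configured allocation
        self.a = {}          # client -> allocation
        self.c = {}          # client -> consumption
        self.active = set()  # the active set A

    def create_client(self, cid, weight):
        self.weights[cid] = weight
        self.a[cid] = 0.0
        self.c[cid] = 0.0

    def destroy_client(self, cid):
        self.active.discard(cid)
        for table in (self.weights, self.a, self.c):
            del table[cid]

    def set_active(self, cid):
        self.active.add(cid)

    def set_inactive(self, cid):
        self.active.discard(cid)

    def make_allocations(self, elapsed):
        """Divide elapsed resource time among active clients by relative weight."""
        total = sum(self.weights[i] for i in self.active)
        for i in self.active:
            self.a[i] += elapsed * self.weights[i] / total

    def dispatch(self):
        """Return the active client with the highest priority a - c, or None."""
        if not self.active:
            return None
        # sorted() makes tie-breaking deterministic.
        return max(sorted(self.active), key=lambda i: self.a[i] - self.c[i])

    def check_in(self, cid, used):
        """Note resource usage reported by the subsystem glue code."""
        self.c[cid] += used


d = RSVTDispatcher()
d.create_client("web", 2)
d.create_client("db", 1)
d.set_active("web")
d.set_active("db")
runs = {"web": 0, "db": 0}
for _ in range(300):
    d.make_allocations(1.0)  # e.g. one quantum elapsed
    cid = d.dispatch()
    d.check_in(cid, 1.0)
    runs[cid] += 1
print(runs)  # {'web': 200, 'db': 100}
```

Keeping the module generic means the glue code only has to report elapsed time, activity changes, and usage; the choice of who runs next stays in one place.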

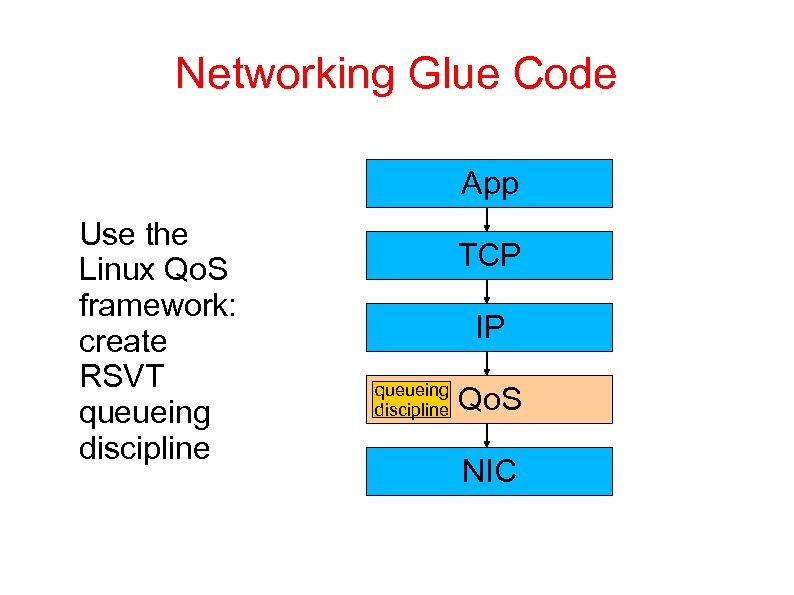

Networking Glue Code
• Use the Linux QoS framework: create an RSVT queueing discipline
(Figure: App → TCP → IP → QoS queueing discipline → NIC)

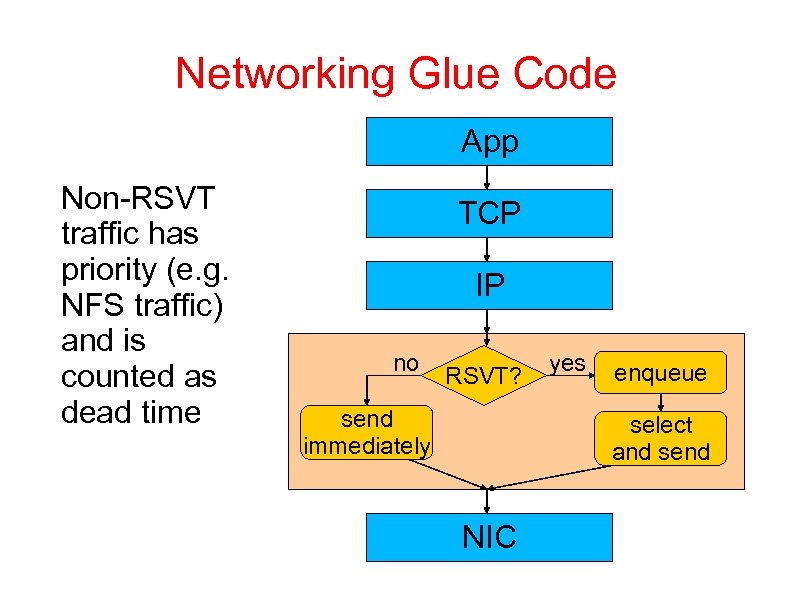

Networking Glue Code
• Non-RSVT traffic (e.g., NFS traffic) has priority and is counted as dead time
(Figure: App → TCP → IP → RSVT traffic? No: send immediately. Yes: enqueue, then select and send → NIC)
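The enqueue/dequeue decision in the flowchart can be modeled in a few lines. This is a toy model, not the Linux qdisc API: `RSVTQdiscSketch` and `pick_client` are hypothetical, the selection callback stands in for the RSVT priority comparison, and dead-time accounting for non-RSVT packets is omitted.

```python
from collections import deque


class RSVTQdiscSketch:
    """Toy model of the networking glue code's enqueue/dequeue decision.

    Not the Linux qdisc API; all names here are hypothetical.
    """

    def __init__(self, pick_client):
        self.queues = {}                # client -> FIFO of queued RSVT packets
        self.pick_client = pick_client  # chooses among backlogged clients
        self.sent = []                  # packets handed to the NIC, in order

    def enqueue(self, pkt, client=None):
        if client is None:
            # Non-RSVT traffic (e.g. NFS) bypasses RSVT and is sent immediately.
            self.sent.append(pkt)
        else:
            self.queues.setdefault(client, deque()).append(pkt)

    def dequeue(self):
        backlogged = [cl for cl, q in self.queues.items() if q]
        if not backlogged:
            return None
        cl = self.pick_client(backlogged)  # RSVT selects the next client
        pkt = self.queues[cl].popleft()
        self.sent.append(pkt)
        return pkt


# min() is only a stand-in for the RSVT priority comparison.
q = RSVTQdiscSketch(pick_client=min)
q.enqueue("rsvt-A1", "A")
q.enqueue("nfs-1")
q.enqueue("rsvt-B1", "B")
q.dequeue()
q.dequeue()
print(q.sent)  # ['nfs-1', 'rsvt-A1', 'rsvt-B1']
```

The NFS packet overtakes the RSVT packets that were enqueued before it, which is why the time it occupies the link has to be treated as dead time rather than charged to any RSVT client.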

CPU Scheduling Glue Code
• Use the Linux modular scheduling core
• Add an RSVT scheduling policy
  – The RSVT module essentially replaces the policy's runqueue
  – Initial implementation only for uniprocessors
• CFS and possibly other policies also exist and have higher priority
  – When they run, this is considered dead time

Timer Interrupts
• Linux employs timer interrupts (250 Hz)
• Allocations are made at these times:
  – Translate time into microseconds
  – Subtract known dead time (unavailable to us)
  – Divide among active clients according to their relative allocations
  – Bound the divergence of allocation from consumption
• Also handles the grace period (mark as inactive)
• Also handles rebirth (set $a_i = c_i$)
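The per-interrupt allocation steps can be sketched as follows. The sketch assumes 250 Hz ticks (4000 µs each) and relative allocations that sum to 1 over the active clients. The slide does not say how the divergence bound is applied, so the hypothetical `bound_us` parameter and the symmetric clamp are assumptions.

```python
HZ = 250
TICK_US = 1_000_000 // HZ  # 4000 microseconds per timer tick


def tick_allocations(a, c, shares, dead_us, bound_us):
    """One timer-interrupt allocation step (sketch).

    a, c:     dicts of allocation / consumption in microseconds
    shares:   relative allocations of the active clients (sum to 1)
    dead_us:  time used this tick by non-RSVT work (dead time)
    bound_us: hypothetical cap on |a[i] - c[i]|
    """
    available = max(0, TICK_US - dead_us)  # subtract known dead time
    for i, r in shares.items():
        a[i] += available * r              # divide by relative allocation
        # Bound the divergence of allocation from consumption.
        a[i] = max(c[i] - bound_us, min(a[i], c[i] + bound_us))
    return available


a = {"A": 0.0, "B": 0.0}
c = {"A": 0.0, "B": 0.0}
avail = tick_allocations(a, c, {"A": 0.75, "B": 0.25}, dead_us=1000, bound_us=2000)
print(avail, a)  # 3000 {'A': 2000.0, 'B': 750.0}
```

Of the 4000 µs tick, 1000 µs went to dead time; client A's 2250 µs share is clamped to the 2000 µs bound because it has not consumed anything yet.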

Multi-Queue
• At dispatch, we need to find the client with the highest priority
• But priorities change at different rates
• Solution: allow only a limited discrete set of relative priorities
• Each priority has a separate queue
• Maintain all clients in each queue in priority order
  – Clients in the same queue gain priority at the same rate, so only a client that has just run needs to be repositioned
• Only the first client in each queue must be checked to find the maximum
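One way to realize the multi-queue idea is sketched below. It assumes each discrete level is a relative allocation, i.e. the rate at which its members' priorities grow. Because members of a level gain priority at the same rate, a single per-level offset can record that growth, and each queue can be a heap keyed on priority minus that offset. The offset trick and all names are illustrative assumptions, not details from the slides.

```python
import heapq
import itertools


class MultiQueue:
    """One max-priority queue per discrete allocation level (sketch).

    Every member of a level receives the same allocation increment, so a
    per-level offset records that growth and stored keys never need updating.
    """

    def __init__(self, levels):
        # level -> [offset, heap]; heap entries are (-relative_priority, seq, client)
        self.queues = {lvl: [0.0, []] for lvl in levels}
        self.seq = itertools.count()  # tie-breaker: earlier insertions first

    def add(self, client, level, priority):
        offset, heap = self.queues[level]
        heapq.heappush(heap, (-(priority - offset), next(self.seq), client))

    def allocate(self, dt):
        for lvl, q in self.queues.items():
            q[0] += lvl * dt  # all members' priorities grow by lvl * dt

    def pop_max(self):
        """Remove and return (client, level, priority) with the highest priority."""
        best = None
        for lvl, (offset, heap) in self.queues.items():
            if heap:  # only the head of each queue needs to be examined
                p = -heap[0][0] + offset
                if best is None or p > best[2]:
                    best = (heap[0][2], lvl, p)
        if best is not None:
            heapq.heappop(self.queues[best[1]][1])
        return best


mq = MultiQueue([0.5, 0.25])
for client, lvl in [("A", 0.5), ("B", 0.25), ("C", 0.25)]:
    mq.add(client, lvl, 0.0)

runs = {"A": 0, "B": 0, "C": 0}
for _ in range(400):
    mq.allocate(1.0)               # one quantum of allocation
    client, lvl, p = mq.pop_max()  # dispatch the most deserving client
    runs[client] += 1
    mq.add(client, lvl, p - 1.0)   # it consumed one quantum
print(runs)
```

Dispatch cost grows with the number of levels rather than the number of clients, which is why restricting priorities to a small discrete set pays off.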

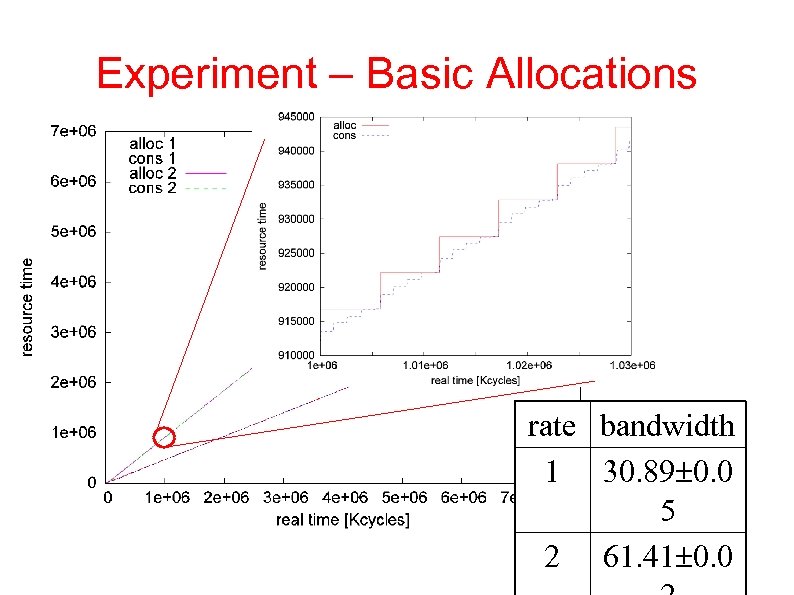

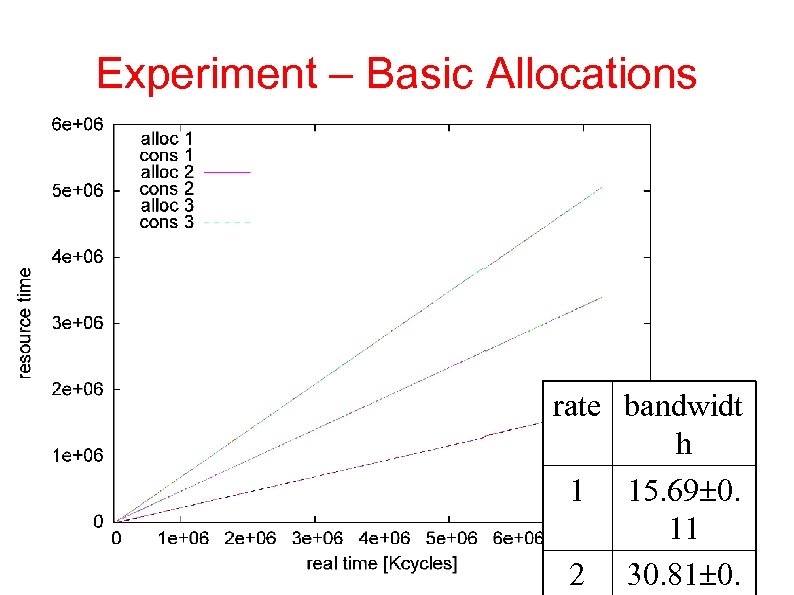

Experiment – Basic Allocations

| rate | bandwidth | |
|------|-----------|---|
| 1 | 30.89 | 0.05 |
| 2 | 61.41 | 0.0 |

Experiment – Basic Allocations

| rate | bandwidth | |
|------|-----------|---|
| 1 | 15.69 | 0.11 |
| 2 | 30.81 | 0. |

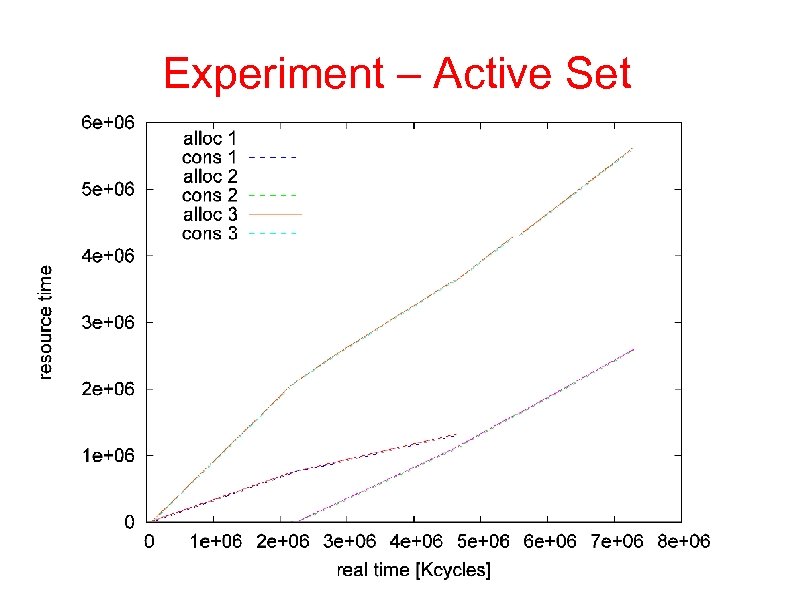

Experiment – Active Set

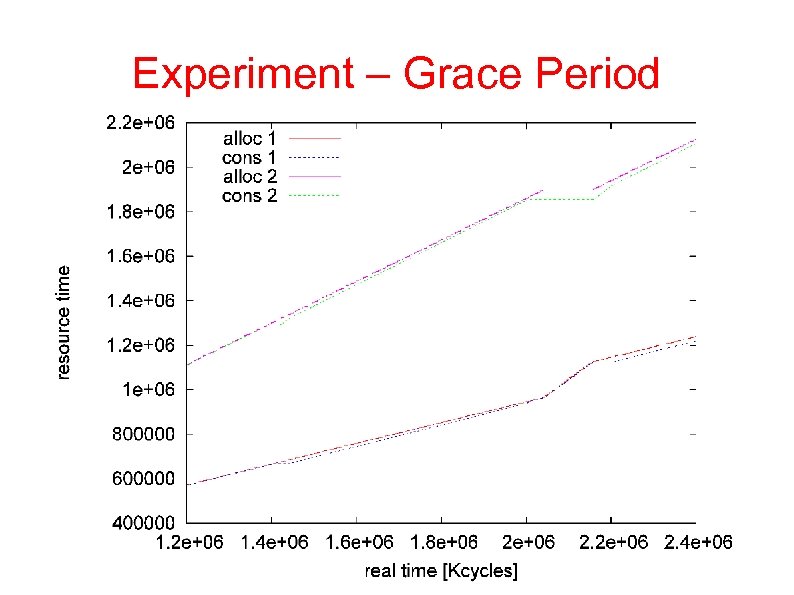

Experiment – Grace Period

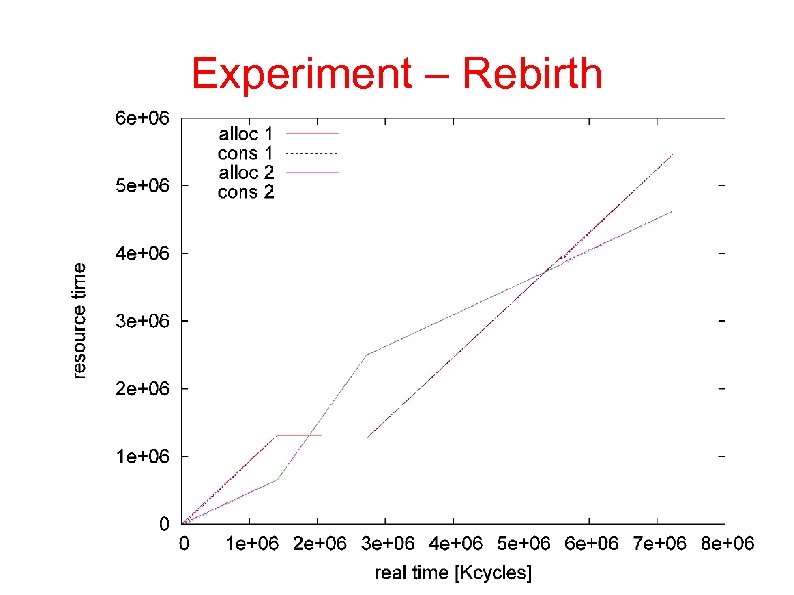

Experiment – Rebirth

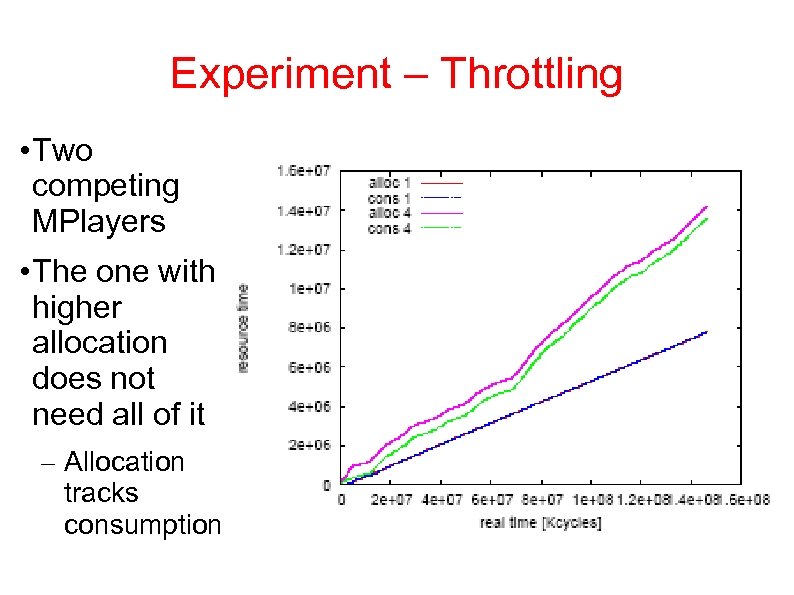

Experiment – Throttling
• Two competing MPlayer instances
• The one with the higher allocation does not need all of it
  – Allocation tracks consumption

Conclusions
• Demonstrated a generic virtual-time-based resource-sharing dispatcher
• Need to complete the implementation
  – Support for I/O scheduling
  – More details, e.g., SMP support
• Building block of the global scheduling vision
