- Number of slides: 16

Deployment of NMI Components on the UT Grid

Shyamal Mitra
Texas Advanced Computing Center

Outline

- TACC Grid Program
- NMI Testbed Activities
- Synergistic Activities
  - Computations on the Grid
  - Grid Portals

TACC Grid Program

- Building Grids
  - UT Campus Grid
  - State Grid (TIGRE)
- Grid Resources
  - NMI Components
  - United Devices
  - LSF Multicluster
- Significantly leveraging NMI Components and experience

Resources at TACC

- IBM Power4 System (224 processors, 512 GB memory, 1.16 TF)
- IBM IA-64 Cluster (40 processors, 80 GB memory, 128 GF)
- IBM IA-32 Cluster (64 processors, 32 GB memory, 64 GF)
- Cray SV1 (16 processors, 16 GB memory, 19.2 GF)
- SGI Origin 2000 (4 processors, 2 GB memory, 1 TB storage)
- SGI Onyx2 (24 processors, 25 GB memory, 6 InfiniteReality2 graphics pipes)
- NMI components Globus and NWS installed on all systems except the Cray SV1

Resources at UT Campus

- Individual clusters belonging to professors in
  - engineering
  - computer sciences
- NMI components Globus and NWS installed on several machines on campus
- Computer laboratories with hundreds of PCs in the engineering and computer sciences departments

Campus Grid Model

- "Hub and Spoke" model
- Researchers build programs on their clusters and migrate bigger jobs to TACC resources
  - Use GSI for authentication
  - Use GridFTP for data migration
  - Use LSF Multicluster for migration of jobs
- Reclaim unused computing cycles on PCs through United Devices infrastructure
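
The three migration steps on this slide (GSI authentication, GridFTP transfer, LSF submission) can be sketched as an ordered list of command lines. This is a minimal illustration, not the procedure actually used on the UT Grid: the host name, paths, and queue name are hypothetical, and whether a job is forwarded to a remote cluster by LSF Multicluster depends on how the site administrator configured the queue.

```python
from typing import List


def campus_to_hub_commands(
    local_file: str,
    hub_host: str,
    remote_path: str,
    queue: str,
    job_cmd: List[str],
) -> List[List[str]]:
    """Return the argv lists for the three steps, in the order they would run.

    local_file and remote_path are assumed to be absolute paths.
    All concrete values passed in are hypothetical examples.
    """
    return [
        # 1. GSI: create a short-lived proxy credential from the user's certificate.
        ["grid-proxy-init"],
        # 2. GridFTP: copy the input data from the spoke cluster to the hub.
        [
            "globus-url-copy",
            f"file://{local_file}",
            f"gsiftp://{hub_host}{remote_path}",
        ],
        # 3. LSF: submit the job to a queue (assumed to be set up for forwarding).
        ["bsub", "-q", queue] + list(job_cmd),
    ]


if __name__ == "__main__":
    for argv in campus_to_hub_commands(
        "/home/user/input.dat",      # hypothetical local file
        "hub.example.org",           # hypothetical hub host
        "/scratch/input.dat",        # hypothetical remote path
        "tacc_forward",              # hypothetical queue name
        ["./seismic", "input.dat"],  # hypothetical job command
    ):
        print(" ".join(argv))
```

The function only builds the commands; passing each list to `subprocess.run` would execute them on a machine where the Globus and LSF clients are installed.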

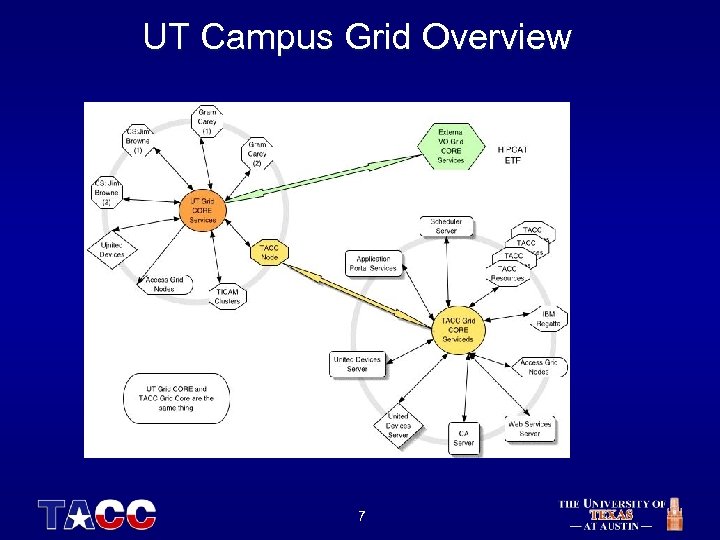

UT Campus Grid Overview (figure label: LSF)

NMI Testbed Activities

- Globus 2.2.2
  - GSI, GRAM, MDS, GridFTP
  - Robust software
  - Standard Grid middleware
  - Need to install from source code to link to other components like MPICH-G2, Simple CA
- Condor-G 6.4.4
  - Submits jobs using GRAM, monitors queues, receives notification, and maintains Globus credentials
  - Lacks
    - scheduling capability of Condor
    - checkpointing

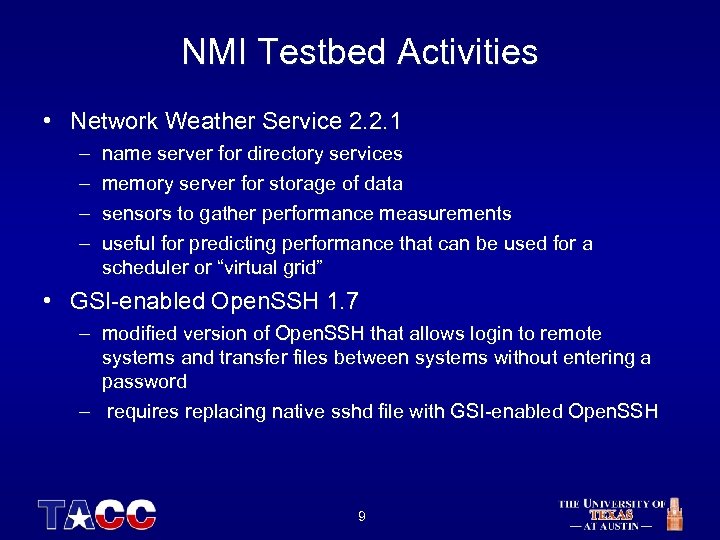

NMI Testbed Activities

- Network Weather Service 2.2.1
  - name server for directory services
  - memory server for storage of data
  - sensors to gather performance measurements
  - useful for predicting performance that can be used for a scheduler or "virtual grid"
- GSI-enabled OpenSSH 1.7
  - modified version of OpenSSH that allows logging in to remote systems and transferring files between systems without entering a password
  - requires replacing the native sshd file with GSI-enabled OpenSSH
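
To illustrate how stored sensor measurements can become a performance prediction, here is a minimal sketch of adaptive forecaster selection: several simple predictors are scored against the history, and the one with the lowest cumulative squared error produces the next forecast. This is a simplified illustration of the general idea, not the NWS implementation; the set of forecasters, the window size, and all names are assumptions.

```python
from statistics import mean, median
from typing import Callable, Dict, Sequence, Tuple

# Candidate forecasters: each maps a non-empty history to a prediction.
# The choice of these three is an assumption made for illustration.
FORECASTERS: Dict[str, Callable[[Sequence[float]], float]] = {
    "last_value": lambda h: h[-1],
    "running_mean": lambda h: mean(h),
    "sliding_median": lambda h: median(h[-5:]),
}


def forecast(measurements: Sequence[float]) -> Tuple[str, float]:
    """Return (forecaster name, prediction) for the next measurement.

    Each forecaster is replayed over the history; the one with the lowest
    cumulative squared error so far is used for the next prediction.
    """
    if len(measurements) < 2:
        raise ValueError("need at least two measurements to score forecasters")

    errors = {name: 0.0 for name in FORECASTERS}
    for i in range(1, len(measurements)):
        history, actual = measurements[:i], measurements[i]
        for name, predict in FORECASTERS.items():
            errors[name] += (predict(history) - actual) ** 2

    best = min(errors, key=errors.get)
    return best, FORECASTERS[best](measurements)


if __name__ == "__main__":
    # Hypothetical bandwidth readings (arbitrary units).
    print(forecast([1, 2, 3, 4, 5]))
    print(forecast([10, 0, 10, 0, 10, 0]))
```

A scheduler could call `forecast` on the recent readings for each candidate machine and prefer the machine with the best predicted value.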

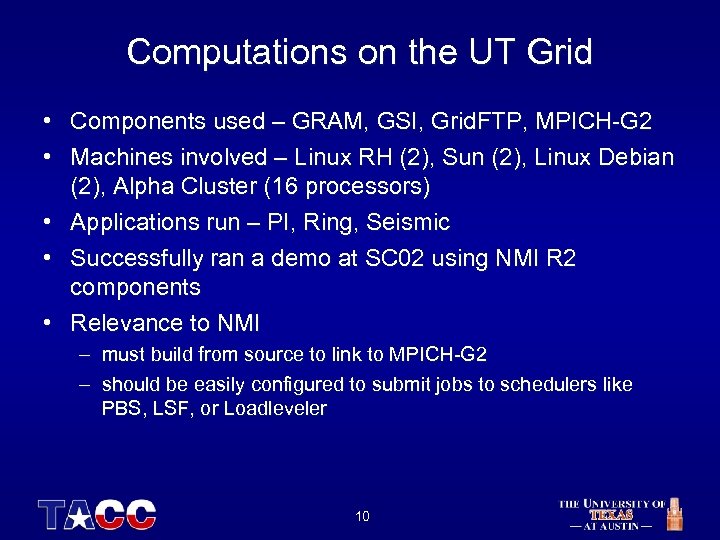

Computations on the UT Grid

- Components used
  - GRAM, GSI, GridFTP, MPICH-G2
- Machines involved
  - Linux RH (2), Sun (2), Linux Debian (2), Alpha Cluster (16 processors)
- Applications run
  - PI, Ring, Seismic
- Successfully ran a demo at SC02 using NMI R2 components
- Relevance to NMI
  - must build from source to link to MPICH-G2
  - should be easily configured to submit jobs to schedulers like PBS, LSF, or LoadLeveler
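
The slide names the PI application without describing it. As a hedged illustration, the sketch below assumes it resembles the classic MPI example that estimates pi by midpoint-rule integration of 4/(1+x^2) over [0, 1], with intervals dealt out cyclically to the processes. The ranks are simulated sequentially here; in an MPICH-G2 run each rank would be a separate process and the partial sums would be combined with a reduction.

```python
import math


def partial_pi(rank: int, size: int, n: int) -> float:
    """Partial midpoint-rule sum over the intervals owned by one rank.

    Rank r handles intervals r, r + size, r + 2*size, ... out of n.
    """
    h = 1.0 / n
    total = 0.0
    for i in range(rank, n, size):
        x = h * (i + 0.5)  # midpoint of interval i
        total += 4.0 / (1.0 + x * x)
    return h * total


def estimate_pi(size: int, n: int = 100_000) -> float:
    """Combine the partial sums of all ranks (stands in for a reduce step)."""
    return sum(partial_pi(rank, size, n) for rank in range(size))


if __name__ == "__main__":
    approx = estimate_pi(size=4)
    print(f"estimate = {approx:.10f}, error = {abs(approx - math.pi):.2e}")
```

Because each rank's work is independent until the final sum, the same decomposition applies whether the ranks sit on one cluster or are spread across several grid machines.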

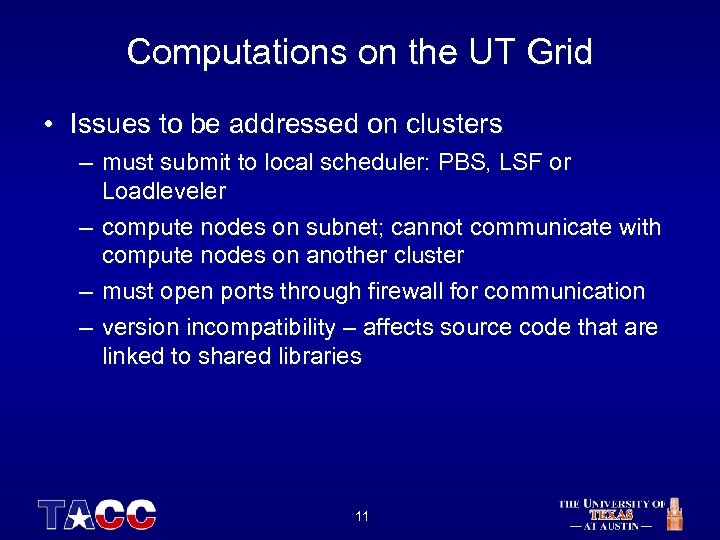

Computations on the UT Grid

- Issues to be addressed on clusters
  - must submit to local scheduler: PBS, LSF, or LoadLeveler
  - compute nodes are on a subnet and cannot communicate with compute nodes on another cluster
  - must open ports through firewall for communication
  - version incompatibility: affects source code that is linked to shared libraries
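
For the firewall issue, one common approach is to restrict Globus to a fixed range of TCP ports and open exactly that range. The sketch below assumes the range is given in the `min,max` form used by the `GLOBUS_TCP_PORT_RANGE` environment variable (check the documentation of the installed Globus version) and reports which ports in that range are missing from a firewall's allow list. The port numbers and function names are hypothetical.

```python
from typing import Iterable, List, Tuple


def parse_port_range(value: str) -> Tuple[int, int]:
    """Parse 'min,max' (or 'min max') into a validated (low, high) tuple."""
    parts = value.replace(",", " ").split()
    if len(parts) != 2:
        raise ValueError(f"expected two ports, got: {value!r}")
    low, high = int(parts[0]), int(parts[1])
    if not 0 < low <= high <= 65535:
        raise ValueError(f"invalid port range: {low}-{high}")
    return low, high


def blocked_ports(port_range: Tuple[int, int], open_ports: Iterable[int]) -> List[int]:
    """Return the ports in the range that the firewall does not allow."""
    allowed = set(open_ports)
    low, high = port_range
    return [port for port in range(low, high + 1) if port not in allowed]


if __name__ == "__main__":
    rng = parse_port_range("40000,40003")   # hypothetical range
    print(blocked_ports(rng, {40000, 40001}))
```

An empty result means every port in the configured range is already open; anything else lists the ports that would still need a firewall rule.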

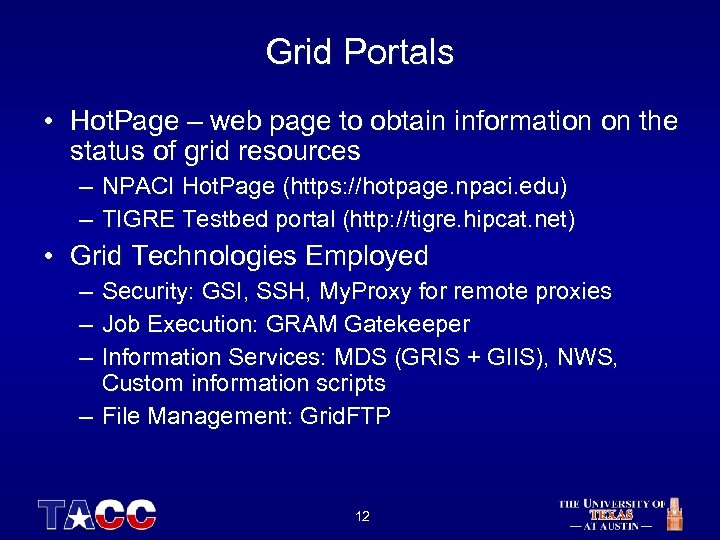

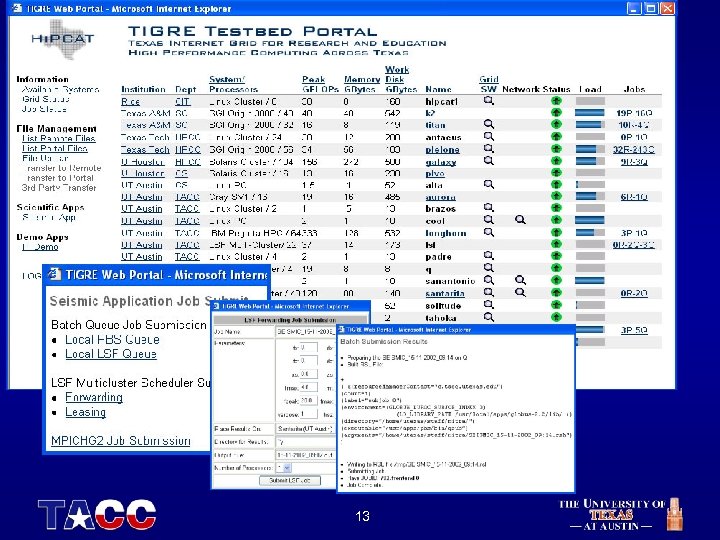

Grid Portals

- HotPage: web page to obtain information on the status of grid resources
  - NPACI HotPage (https://hotpage.npaci.edu)
  - TIGRE Testbed portal (http://tigre.hipcat.net)
- Grid Technologies Employed
  - Security: GSI, SSH, MyProxy for remote proxies
  - Job Execution: GRAM Gatekeeper
  - Information Services: MDS (GRIS + GIIS), NWS, custom information scripts
  - File Management: GridFTP

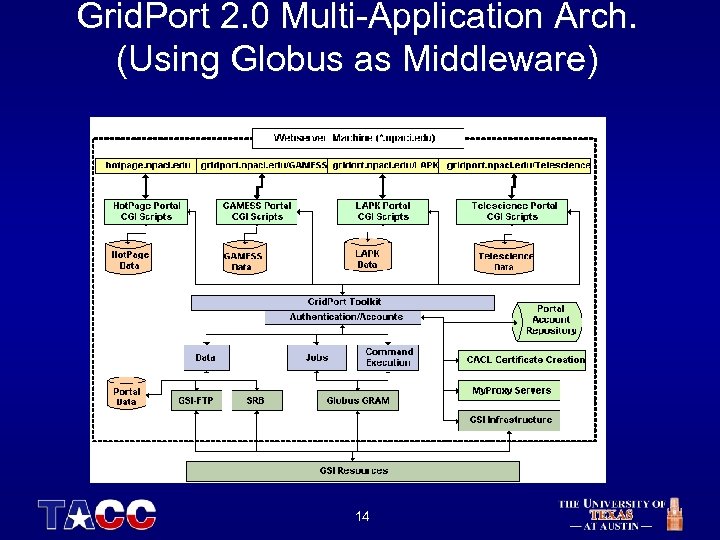

GridPort 2.0 Multi-Application Architecture (Using Globus as Middleware)

Future Work

- Use NMI components where possible in building grids
- Use Lightweight Campus Certificate Policy for instantiating a Certificate Authority at TACC
- Build portals and deploy applications on the UT Grid

Collaborators

- Mary Thomas
- Dr. John Boisseau
- Rich Toscano
- Jeson Martajaya
- Eric Roberts
- Maytal Dahan
- Tom Urban
