c750b9e2dcf14f6aba3d8a40ffec5c1a.ppt

- Number of slides: 36

Deploying HPC Linux Clusters (the LLNL way!)

Robin Goldstone

Presented to ScicomP/SP-XXL, August 10, 2004

UCRL-PRES-206330

This work was performed under the auspices of the U.S. Department of Energy by University of California, Lawrence Livermore National Laboratory under Contract W-7405-Eng-48.

UNCLASSIFIED

Background

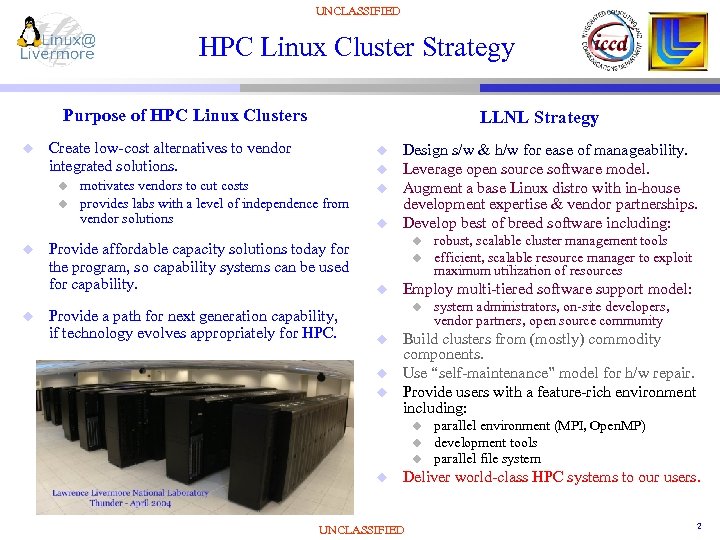

HPC Linux Cluster Strategy

Purpose of HPC Linux Clusters

- Create low-cost alternatives to vendor integrated solutions.
  - motivates vendors to cut costs
  - provides labs with a level of independence from vendor solutions
- Provide affordable capacity solutions today for the program, so capability systems can be used for capability.
- Provide a path for next generation capability, if technology evolves appropriately for HPC.

LLNL Strategy

- Design s/w & h/w for ease of manageability.
- Leverage open source software model.
- Augment a base Linux distro with in-house development expertise & vendor partnerships.
- Develop best of breed software including:
  - robust, scalable cluster management tools
  - efficient, scalable resource manager to exploit maximum utilization of resources
- Employ multi-tiered software support model:
  - system administrators, on-site developers, vendor partners, open source community
- Build clusters from (mostly) commodity components.
- Use "self-maintenance" model for h/w repair.
- Provide users with a feature-rich environment including:
  - parallel environment (MPI, OpenMP)
  - development tools
  - parallel file system
- Deliver world-class HPC systems to our users.

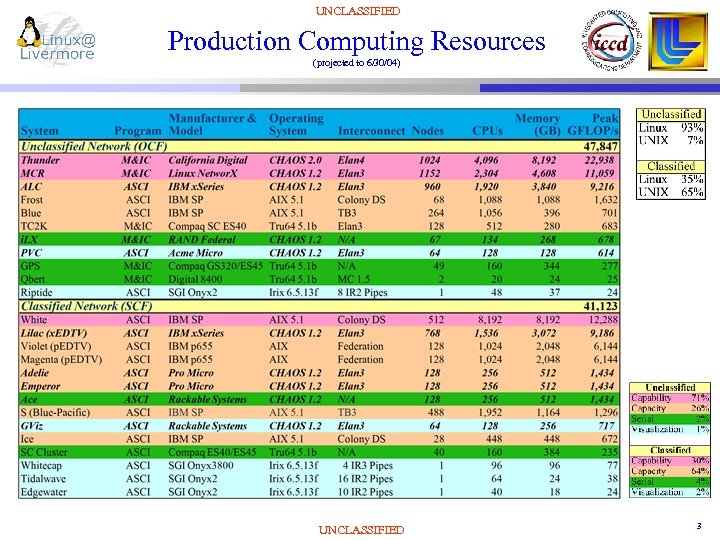

Production Computing Resources (projected to 6/30/04)

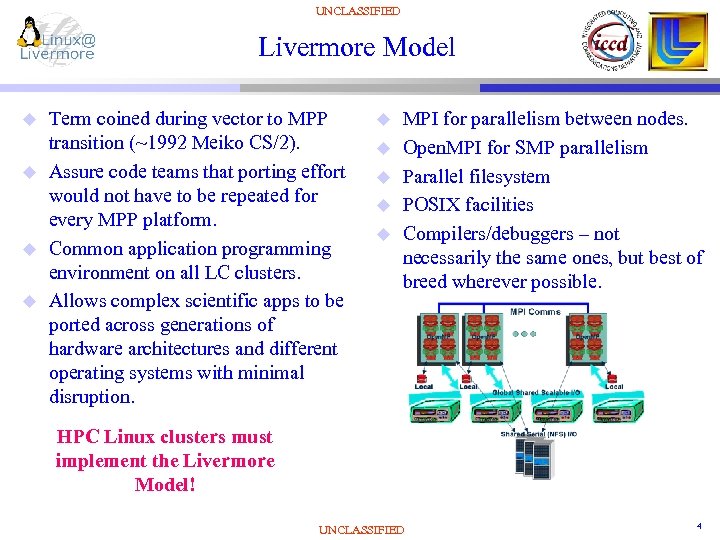

Livermore Model

- Term coined during vector to MPP transition (~1992 Meiko CS/2).
- Assure code teams that porting effort would not have to be repeated for every MPP platform.
- Common application programming environment on all LC clusters.
- Allows complex scientific apps to be ported across generations of hardware architectures and different operating systems with minimal disruption.
  - MPI for parallelism between nodes
  - OpenMP for SMP parallelism
  - Parallel filesystem
  - POSIX facilities
  - Compilers/debuggers: not necessarily the same ones, but best of breed wherever possible.
- HPC Linux clusters must implement the Livermore Model!

Decision-making process

- Principal Investigator for HPC Platforms
  - "Visionary" who tracks HPC trends and innovations
  - Understands scientific apps and customer requirements
  - Very deep and broad understanding of computer architecture
- IO Testbed
  - Evaluate new technologies: nodes, interconnects, storage systems
    - Performance characteristics
    - Manageability aspects, MTBF, etc.
  - Explore integration with existing infrastructure
  - Develop required support in Linux OS stack
- RFP process
  - In response to a specific programmatic need for a new computing resource:
    - determine the best architecture
    - define the requirements
    - solicit bids
  - Best value procurements or sole source where required
  - Bidders typically required to execute a benchmark suite on the proposed HW and submit results
- Integration -> acceptance -> deployment
  - Integration requires close coordination with LLNL staff since our OS stack is used.
  - Often the integrator is not familiar with all of the HPC components (interconnect, etc.), so LLNL must act as the "prime contractor" to coordinate the integration of cluster nodes, interconnect and storage systems.
  - Acceptance testing is critical! Does the system function/perform as expected?
  - For LLNL's large Linux clusters, time from contract award to production status has been ~8 months.

Software Environment

CHAOS Overview

- What is CHAOS?
  - Clustered High Availability Operating System
  - Internal LC HPC Linux Distro
  - Currently derived from Red Hat Enterprise Linux
  - Scalability target: 4K nodes
- The CHAOS "Framework"
  - Configuration Management
  - Release discipline
  - Integration testing
  - Bug tracking
- What is added?
  - Kernel modifications for HPC requirements
  - Cluster system administration tools
  - MPI environment
  - Parallel filesystem (Lustre)
  - Resource manager (SLURM)
- Limitations
  - Assumes LC services structure
  - Assumes LC sys admin culture
  - Tools supported by DEG are not included in CHAOS distro
  - Only supports LC production h/w
  - Assumes cluster architecture

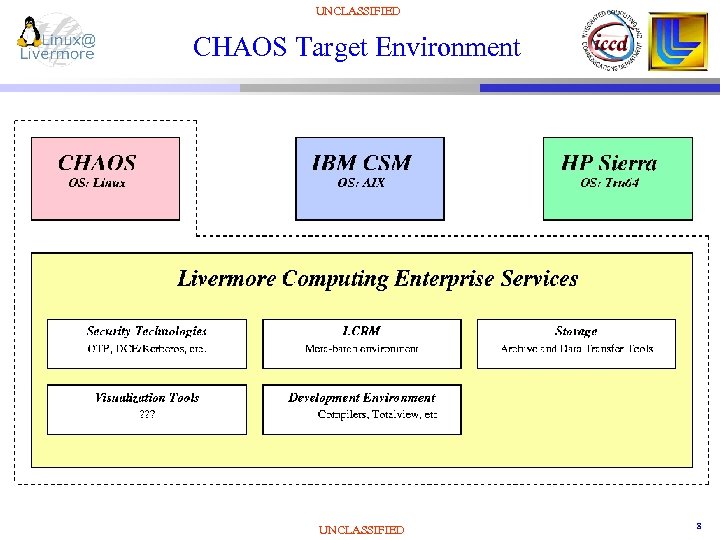

CHAOS Target Environment

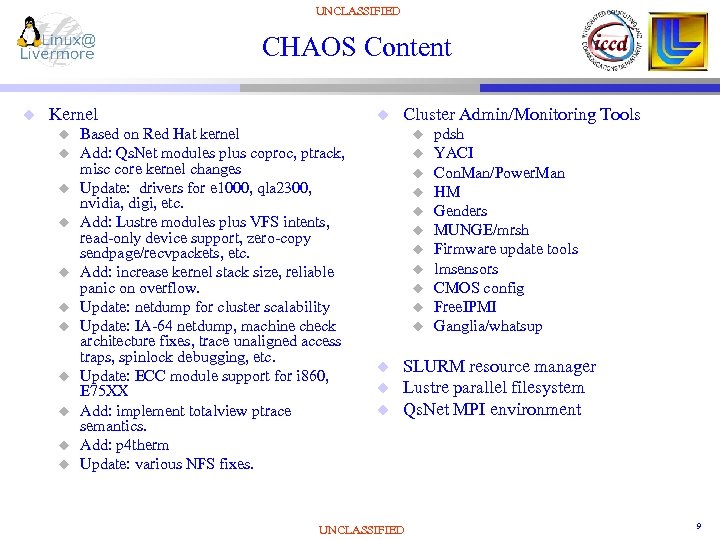

CHAOS Content

- Kernel
  - Based on Red Hat kernel
  - Add: QsNet modules plus coproc, ptrack, misc core kernel changes
  - Update: drivers for e1000, qla2300, nvidia, digi, etc.
  - Add: Lustre modules plus VFS intents, read-only device support, zero-copy sendpage/recvpackets, etc.
  - Add: increase kernel stack size, reliable panic on overflow
  - Update: netdump for cluster scalability
  - Update: IA-64 netdump, machine check architecture fixes, trace unaligned access traps, spinlock debugging, etc.
  - Update: ECC module support for i860, E75XX
  - Add: implement totalview ptrace semantics
  - Add: p4therm
  - Update: various NFS fixes
- Cluster Admin/Monitoring Tools
  - pdsh
  - YACI
  - ConMan/PowerMan
  - HM
  - Genders
  - MUNGE/mrsh
  - Firmware update tools
  - lmsensors
  - CMOS config
  - FreeIPMI
  - Ganglia/whatsup
- SLURM resource manager
- Lustre parallel filesystem
- QsNet MPI environment

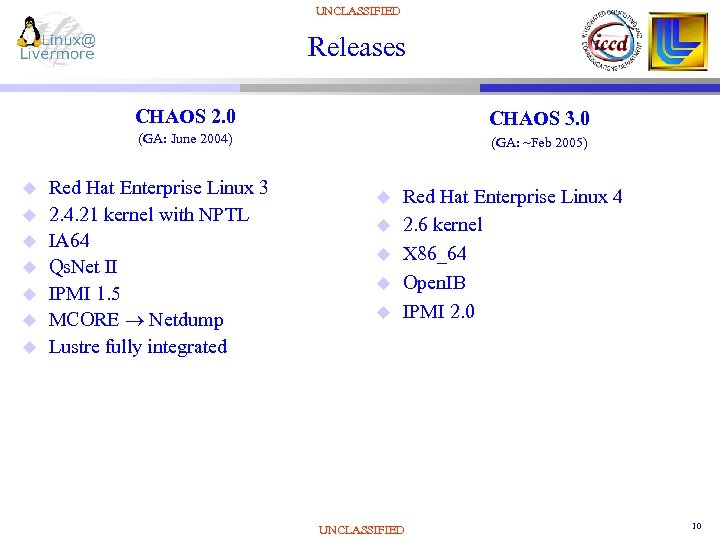

Releases

CHAOS 2.0 (GA: June 2004)

- Red Hat Enterprise Linux 3
- 2.4.21 kernel with NPTL
- IA64
- QsNet II
- IPMI 1.5
- MCORE
- Netdump
- Lustre fully integrated

CHAOS 3.0 (GA: ~Feb 2005)

- Red Hat Enterprise Linux 4
- 2.6 kernel
- x86_64
- OpenIB
- IPMI 2.0

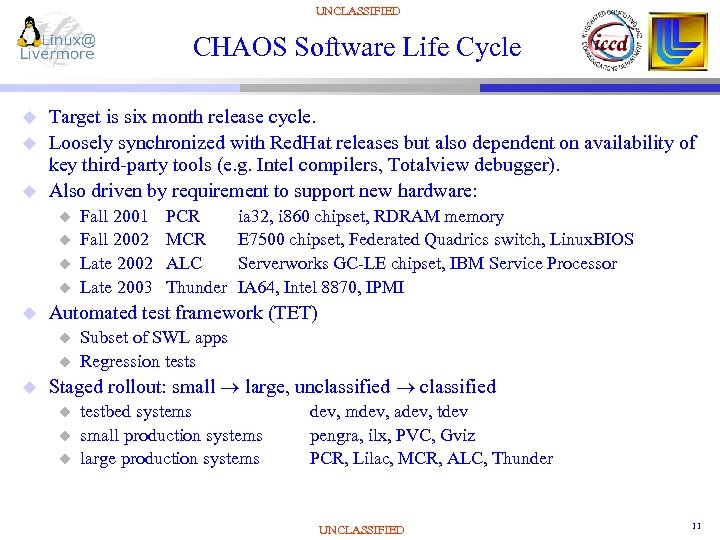

CHAOS Software Life Cycle

- Target is six month release cycle.
- Loosely synchronized with Red Hat releases but also dependent on availability of key third-party tools (e.g. Intel compilers, Totalview debugger).
- Also driven by requirement to support new hardware:
  - PCR: ia32, i860 chipset, RDRAM memory
  - MCR: E7500 chipset, Federated Quadrics switch, LinuxBIOS
  - ALC: Serverworks GC-LE chipset, IBM Service Processor
  - Thunder: IA64, Intel 8870, IPMI
  - Dates: Fall 2001, Fall 2002, Late 2003
- Automated test framework (TET)
  - Subset of SWL apps
  - Regression tests
- Staged rollout: small -> large, unclassified
  - testbed systems: dev, mdev, adev, tdev
  - small production systems: pengra, ilx, PVC, Gviz
  - large production systems: PCR, Lilac, MCR, ALC, Thunder
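
The staged rollout on this slide can be read as a gate: a release moves to the next tier only once every system in the current tier has passed its tests. The following is a minimal Python sketch of that idea, not CHAOS tooling. The function name `staged_rollout` and the `run_tests` callback are hypothetical; only the tier and system names come from the slide.

```python
from typing import Callable, Dict, List

# Rollout tiers in the order given on the slide (smallest first).
STAGES: Dict[str, List[str]] = {
    "testbed": ["dev", "mdev", "adev", "tdev"],
    "small production": ["pengra", "ilx", "PVC", "Gviz"],
    "large production": ["PCR", "Lilac", "MCR", "ALC", "Thunder"],
}


def staged_rollout(run_tests: Callable[[str], bool]) -> List[str]:
    """Return the systems updated successfully, stopping after the first tier with a failure.

    run_tests is a hypothetical callback: it installs the release on one
    system, runs the regression suite there, and returns True on success.
    """
    updated: List[str] = []
    for systems in STAGES.values():
        results = {name: run_tests(name) for name in systems}
        updated.extend(name for name, ok in results.items() if ok)
        if not all(results.values()):
            # A failure in this tier blocks promotion to larger systems.
            break
    return updated
```

With this shape, a regression found on a small production system never reaches the large production clusters, which is the point of rolling out from small to large.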

CHAOS Software Support Model

- System administrators perform first level problem determination.
- If a software defect is suspected, problem is reported to CHAOS development team.
- Bugs are entered and tracked in CHAOS GNATS database.
- Fixes are pursued as appropriate:
  - in open source community
  - through Red Hat
  - through vendor partners (Quadrics, CFS, etc.)
  - locally by CHAOS developers
- Bug fix is incorporated into CHAOS CVS source tree and new RPM(s) built and tested on testbed systems.
- Changes are rolled out in next CHAOS release or sooner depending on severity.

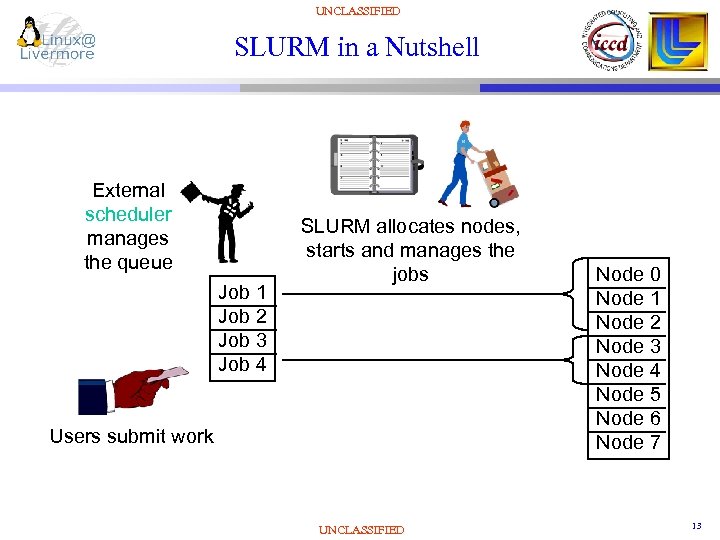

SLURM in a Nutshell

- Users submit work
- External scheduler manages the queue (Job 1, Job 2, Job 3, Job 4)
- SLURM allocates nodes, starts and manages the jobs (Node 0 through Node 7)
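
The division of labor on this slide (an external scheduler orders the queue, the resource manager hands out nodes) can be illustrated with a toy model. This is a sketch under stated assumptions and not SLURM's algorithm or API: the function `allocate`, the strict first-in-first-out policy, and the per-job node counts are all hypothetical. Only the four jobs and eight nodes mirror the diagram.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


def allocate(queue: Deque[Tuple[str, int]], free_nodes: List[int]) -> Dict[str, List[int]]:
    """Start jobs from the head of the queue while enough nodes are free.

    queue holds (job_name, nodes_requested) in the order chosen by the
    external scheduler; free_nodes lists idle node indices. Both are
    consumed in place. Strict FIFO: stop at the first job that does not
    fit (no backfill).
    """
    running: Dict[str, List[int]] = {}
    while queue and queue[0][1] <= len(free_nodes):
        job, count = queue.popleft()
        running[job] = [free_nodes.pop(0) for _ in range(count)]
    return running


# Hypothetical node counts for the four jobs shown in the diagram.
queue = deque([("Job 1", 2), ("Job 2", 4), ("Job 3", 4), ("Job 4", 1)])
nodes = list(range(8))  # Node 0 through Node 7
running = allocate(queue, nodes)
```

In this run Job 1 and Job 2 start, Job 3 waits because only two nodes remain, and Job 4 waits behind it. Whether a small job such as Job 4 may jump ahead is a policy decision that belongs to the scheduler, not to the allocator.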

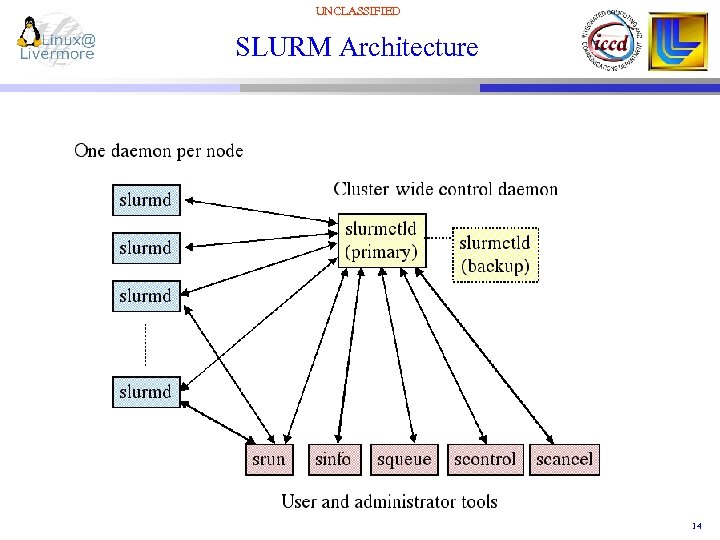

SLURM Architecture

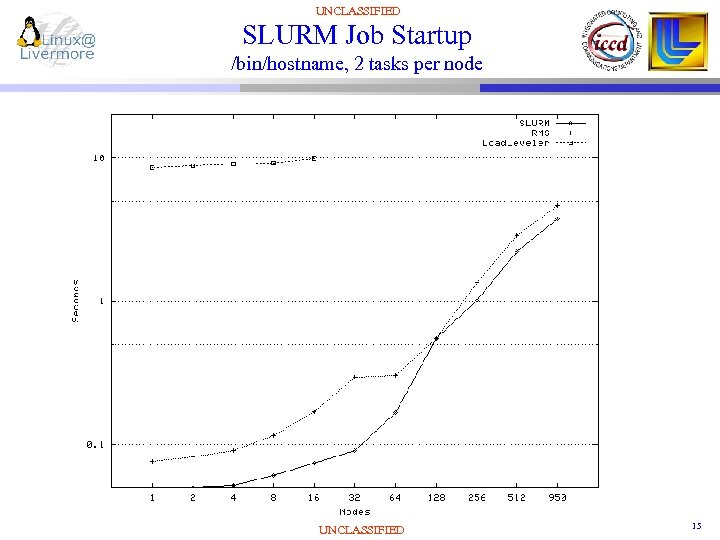

SLURM Job Startup: /bin/hostname, 2 tasks per node
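
To make "2 tasks per node" concrete, the sketch below maps task IDs to nodes for such a launch. It is illustrative only: the function `task_layout`, the node names, and the block layout (filling one node before moving to the next) are assumptions, and the code does not call SLURM.

```python
from typing import Dict, List


def task_layout(nodes: List[str], tasks_per_node: int) -> Dict[int, str]:
    """Map global task IDs to node names, filling each node before the next (block layout)."""
    return {
        node_index * tasks_per_node + slot: node
        for node_index, node in enumerate(nodes)
        for slot in range(tasks_per_node)
    }


# Hypothetical two-node allocation; each node runs two copies of the program.
layout = task_layout(["node0", "node1"], tasks_per_node=2)
for task_id, node in sorted(layout.items()):
    print(f"task {task_id}: /bin/hostname runs on {node}")
```

Each task would print the name of the node it runs on, so every hostname appears twice in the job output.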

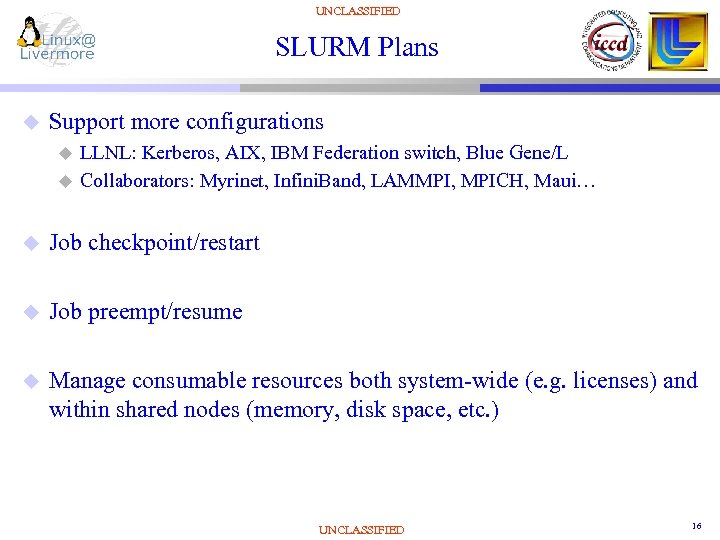

SLURM Plans

- Support more configurations
  - LLNL: Kerberos, AIX, IBM Federation switch, Blue Gene/L
  - Collaborators: Myrinet, InfiniBand, LAM/MPI, MPICH, Maui…
- Job checkpoint/restart
- Job preempt/resume
- Manage consumable resources both system-wide (e.g. licenses) and within shared nodes (memory, disk space, etc.)

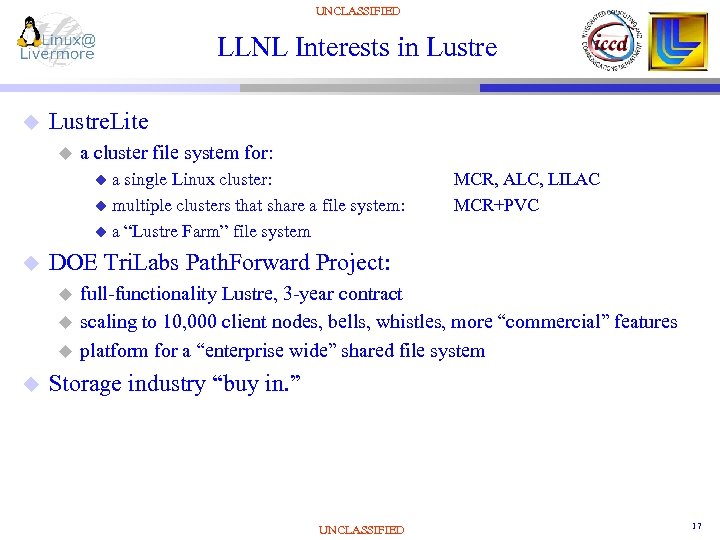

LLNL Interests in Lustre

- LustreLite
  - a cluster file system for:
    - a single Linux cluster: MCR, ALC, LILAC
    - multiple clusters that share a file system: MCR+PVC
  - a "Lustre Farm" file system
- DOE TriLabs PathForward Project:
  - full-functionality Lustre, 3-year contract
  - scaling to 10,000 client nodes
  - bells, whistles, more "commercial" features
  - platform for an "enterprise wide" shared file system
  - Storage industry "buy in."

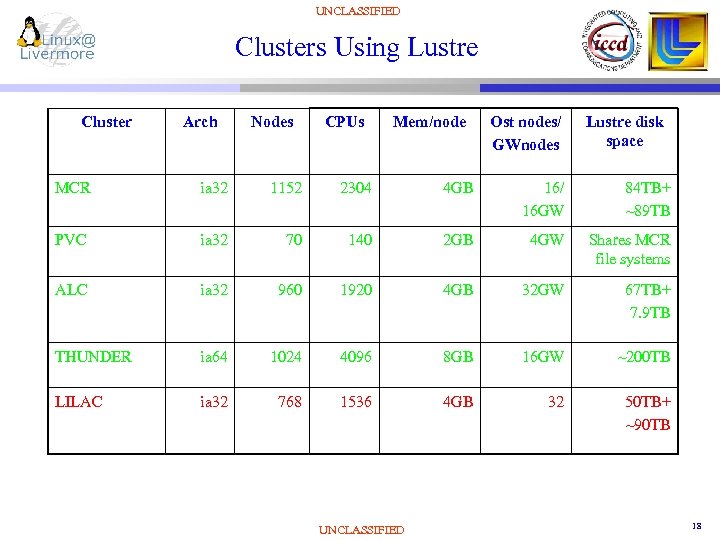

Clusters Using Lustre

| Cluster | Arch | Nodes | CPUs | Mem/node | OST nodes / GW nodes | Lustre disk space |
|---------|------|-------|------|----------|----------------------|-------------------|
| MCR | ia32 | 1152 | 2304 | 4 GB | 16 / 16 GW | 84 TB + ~89 TB |
| PVC | ia32 | 70 | 140 | 2 GB | 4 GW | Shares MCR file systems |
| ALC | ia32 | 960 | 1920 | 4 GB | 32 GW | 67 TB + 7.9 TB |
| THUNDER | ia64 | 1024 | 4096 | 8 GB | 16 GW | ~200 TB |
| LILAC | ia32 | 768 | 1536 | 4 GB | 32 | 50 TB + ~90 TB |
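
The node, CPU and memory columns above imply two derived figures the table does not show: CPUs per node and aggregate memory. The short Python sketch below computes them from the table values. The dictionary layout and the helper name `summarize` are illustrative choices, not something from the talk.

```python
from typing import Dict

# Node count, CPU count and memory per node, copied from the table above.
clusters: Dict[str, Dict[str, int]] = {
    "MCR": {"nodes": 1152, "cpus": 2304, "mem_gb": 4},
    "PVC": {"nodes": 70, "cpus": 140, "mem_gb": 2},
    "ALC": {"nodes": 960, "cpus": 1920, "mem_gb": 4},
    "THUNDER": {"nodes": 1024, "cpus": 4096, "mem_gb": 8},
    "LILAC": {"nodes": 768, "cpus": 1536, "mem_gb": 4},
}


def summarize(spec: Dict[str, int]) -> Dict[str, int]:
    """Derive CPUs per node and total memory (GB) for one cluster."""
    return {
        "cpus_per_node": spec["cpus"] // spec["nodes"],
        "total_mem_gb": spec["nodes"] * spec["mem_gb"],
    }


summary = {name: summarize(spec) for name, spec in clusters.items()}
for name, row in summary.items():
    print(f"{name}: {row['cpus_per_node']} CPUs/node, {row['total_mem_gb']} GB total memory")
```

The ia32 clusters all work out to two CPUs per node, while Thunder has four CPUs per node.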

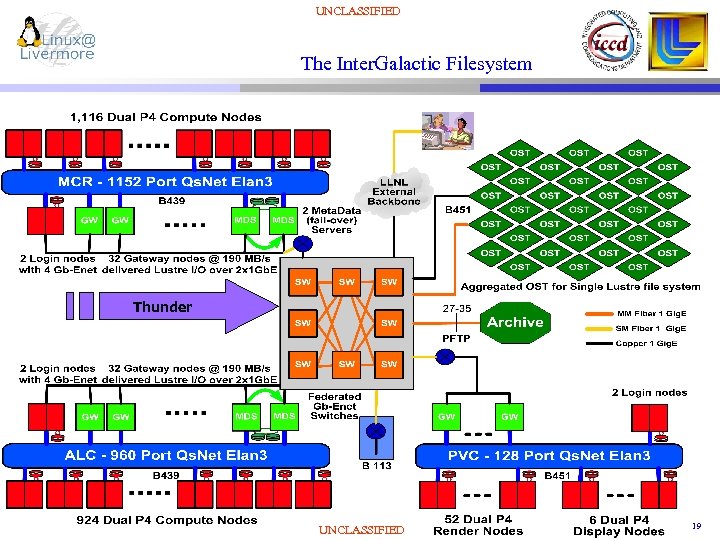

The InterGalactic Filesystem (diagram label: Thunder)

Lustre Issues and Priorities

- Reliability!!!!
  - first and foremost
- Manageability of "Lustre Farm" configurations:
  - OST "pool" notion
  - management tools (config, control, monitor, fault-determination)
- Security of "Lustre Farm" TCP-connected operations.
- Better/quicker fault determination in multi-networked environment.
- Better recovery/operational robustness in the face of failures.
- Improved performance.


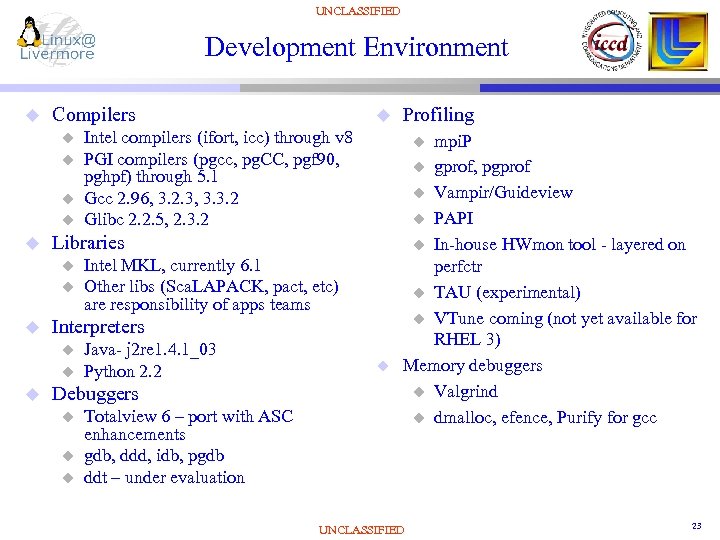

Development Environment

- Compilers
  - Intel compilers (ifort, icc) through v8
  - PGI compilers (pgcc, pgCC, pgf90, pghpf) through 5.1
  - gcc 2.96, 3.2.3, 3.3.2
  - glibc 2.2.5, 2.3.2
- Libraries
  - Intel MKL, currently 6.1
  - Other libs (ScaLAPACK, pact, etc.) are responsibility of apps teams
- Interpreters
  - Java: j2re 1.4.1_03
  - Python 2.2
- Debuggers
  - Totalview 6: port with ASC enhancements
  - gdb, ddd, idb, pgdb
  - ddt: under evaluation
- Profiling
  - mpiP
  - gprof, pgprof
  - Vampir/Guideview
  - PAPI
  - In-house HWmon tool, layered on perfctr
  - TAU (experimental)
  - VTune coming (not yet available for RHEL 3)
- Memory debuggers
  - Valgrind
  - dmalloc, efence, Purify for gcc

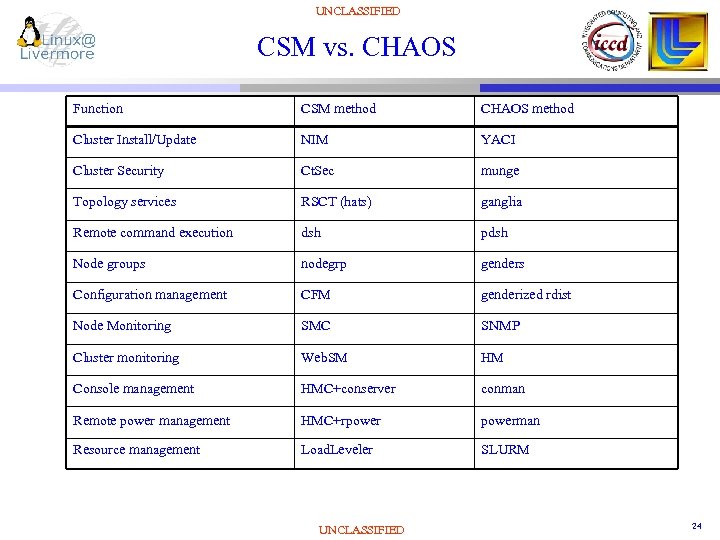

CSM vs. CHAOS

| Function | CSM method | CHAOS method |
|----------|------------|--------------|
| Cluster Install/Update | NIM | YACI |
| Cluster Security | CtSec | munge |
| Topology services | RSCT (hats) | ganglia |
| Remote command execution | dsh | pdsh |
| Node groups | nodegrp | genders |
| Configuration management | CFM | genderized rdist |
| Node Monitoring | SMC | SNMP |
| Cluster monitoring | WebSM | HM |
| Console management | HMC+conserver | conman |
| Remote power management | HMC+rpower | powerman |
| Resource management | LoadLeveler | SLURM |
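
Several rows above (node groups, remote command execution, configuration management) rest on one idea: describe each node by attributes, then select target nodes by attribute rather than by name. The Python sketch below illustrates that idea with a simplified, genders-inspired text format. It is not the genders library or its full file syntax: the parser, the helper names, and the node list are all hypothetical.

```python
from typing import Dict, List, Set


def parse_node_attrs(text: str) -> Dict[str, Set[str]]:
    """Parse lines of the form 'node attr1,attr2' into {node: {attrs}}.

    Simplified format for illustration only: attribute values and host
    ranges are not handled.
    """
    table: Dict[str, Set[str]] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding space
        if not line:
            continue
        parts = line.split(None, 1)
        node = parts[0]
        attrs = parts[1] if len(parts) > 1 else ""
        table.setdefault(node, set()).update(a.strip() for a in attrs.split(",") if a.strip())
    return table


def nodes_with(table: Dict[str, Set[str]], attr: str) -> List[str]:
    """Return the sorted names of all nodes carrying the given attribute."""
    return sorted(node for node, attrs in table.items() if attr in attrs)


# Hypothetical node list; names and attributes are made up for the example.
EXAMPLE = """
# node    attributes
mgmt0     mgmt
login0    login
node1     compute,elan
node2     compute,elan
"""

table = parse_node_attrs(EXAMPLE)
compute_nodes = nodes_with(table, "compute")
```

A parallel shell or a file-distribution tool can then take a list such as `compute_nodes` as its target set, so adding a node to a group means editing one line in one file.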

Linux Development Staffing

- 2 FTE kernel
- 3 FTE cluster tools + misc
- 2 FTE SLURM
- 4 FTE Lustre
- 1 FTE on-site Red Hat analyst

Hardware Environment

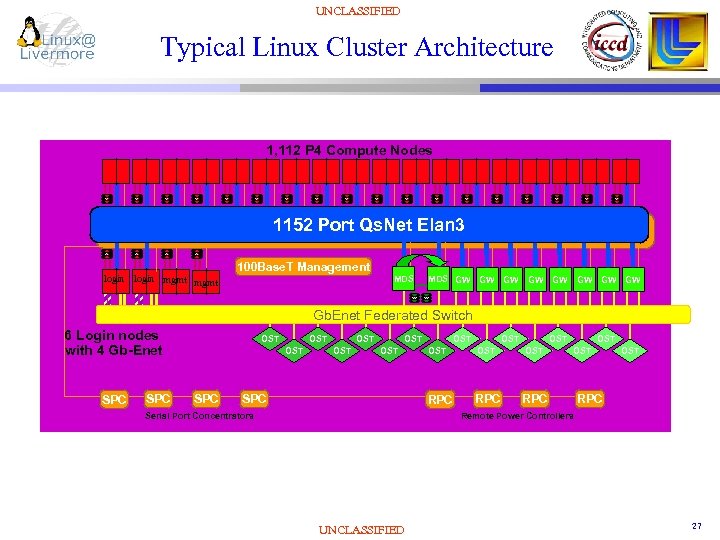

Typical Linux Cluster Architecture

Diagram labels:

- 1,112 P4 compute nodes
- 1152-port QsNet Elan3, 100BaseT control
- 100BaseT management
- login, mgmt
- MDS, GW nodes
- GbEnet
- Federated switch
- 6 login nodes with 4 Gb-Enet
- OST nodes
- SPC: Serial Port Concentrators
- RPC: Remote Power Controllers

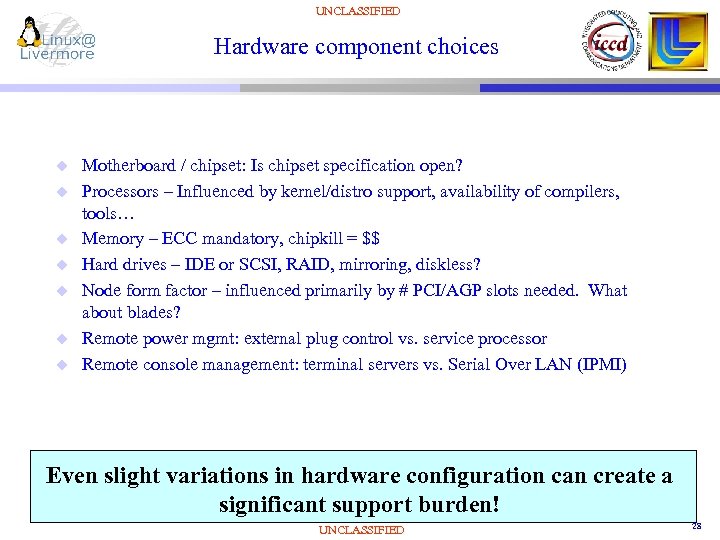

Hardware component choices

- Motherboard / chipset: Is chipset specification open?
- Processors: influenced by kernel/distro support, availability of compilers, tools…
- Memory: ECC mandatory, chipkill = $$
- Hard drives: IDE or SCSI, RAID, mirroring, diskless?
- Node form factor: influenced primarily by # PCI/AGP slots needed. What about blades?
- Remote power mgmt: external plug control vs. service processor
- Remote console management: terminal servers vs. Serial Over LAN (IPMI)
- Even slight variations in hardware configuration can create a significant support burden!

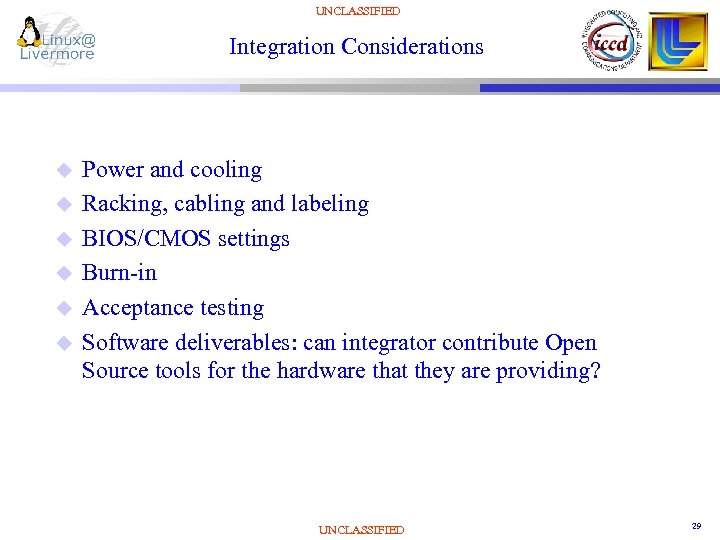

Integration Considerations

- Power and cooling
- Racking, cabling and labeling
- BIOS/CMOS settings
- Burn-in
- Acceptance testing
- Software deliverables: can integrator contribute Open Source tools for the hardware that they are providing?

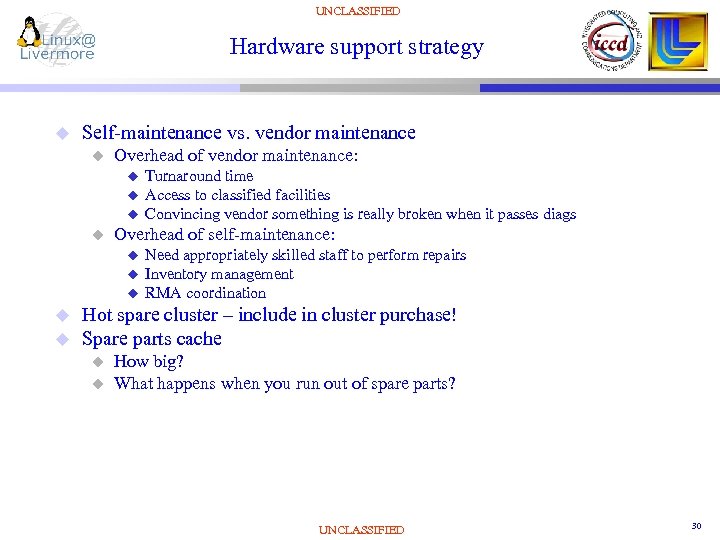

Hardware support strategy

- Self-maintenance vs. vendor maintenance
  - Overhead of vendor maintenance:
    - Turnaround time
    - Access to classified facilities
    - Convincing vendor something is really broken when it passes diags
  - Overhead of self-maintenance:
    - Need appropriately skilled staff to perform repairs
    - Inventory management
    - RMA coordination
- Hot spare cluster: include in cluster purchase!
- Spare parts cache
  - How big?
  - What happens when you run out of spare parts?
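
One hedged way to approach the "How big?" question is a simple Poisson model: if parts fail independently at a constant rate, the number of failures during one replenishment (RMA) cycle follows a Poisson distribution, and the cache should cover that count with a chosen confidence. The sketch below is an illustration under those assumptions, not LLNL's method. The function name, the MTBF, the lead time and the confidence level are all hypothetical.

```python
import math


def spares_needed(units: int, mtbf_hours: float, lead_time_hours: float,
                  confidence: float = 0.95) -> int:
    """Smallest stock k such that P(failures during lead time <= k) >= confidence.

    Assumes independent failures at a constant rate (Poisson model).
    Intended for modest expected counts; for very large expected counts
    exp(-lam) underflows and a normal approximation should be used instead.
    """
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be strictly between 0 and 1")
    lam = units * lead_time_hours / mtbf_hours  # expected failures per lead time
    term = math.exp(-lam)  # P(X = 0)
    cumulative = term
    k = 0
    while cumulative < confidence:
        k += 1
        term *= lam / k  # P(X = k) computed from P(X = k - 1)
        cumulative += term
    return k


# Hypothetical inputs: 1152 parts in service, 500,000 h MTBF per part,
# 14-day (336 h) turnaround on a replacement.
stock = spares_needed(units=1152, mtbf_hours=500_000, lead_time_hours=336)
```

The model also speaks to the second question on the slide: the chance of running out is one minus the chosen confidence per replenishment cycle, so a longer RMA turnaround or a lower MTBF raises the required stock.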

Conclusions

Lessons Learned (SW)

- A "roll your own" software stack like CHAOS takes significant effort and in-house expertise. Is this overkill for your needs?
  - Consider alternatives like OSCAR or NPACI Rocks for small (< 128 node) clusters with common interconnects (Myrinet or GigE).
  - Use LLNL cluster tools to augment or replace deficient components of these stacks as needed.
- Avoid proprietary vendor cluster stacks which will tie you to one vendor's hardware.
- A vendor-neutral Open Source software stack enables a common system management toolset across multiple platforms.
- Even with a vendor-supported software stack, a local resource with kernel/systems expertise is a valuable asset to assist in problem isolation and rapid deployment of bug fixes.

Lessons Learned (HW)

- Buy hardware from vendors who understand Linux and can guarantee compatibility.
  - BIOS pre-configured for serial console or LinuxBIOS!
  - Linux-based tools (preferably Open Source) for hardware management functions.
  - Linux device drivers for all hardware components.
- Who will do integration? The devil is in the details.
  - A rack is NOT just a rack, a rail is not just a rail
  - All cat 5 cables are not created equally
  - Labeling is critical
- Hardware self-maintenance can be a significant burden.
  - Node repair
  - Parts cache maintenance
  - RMA processing
- PC hardware in a rack != HPC Linux cluster

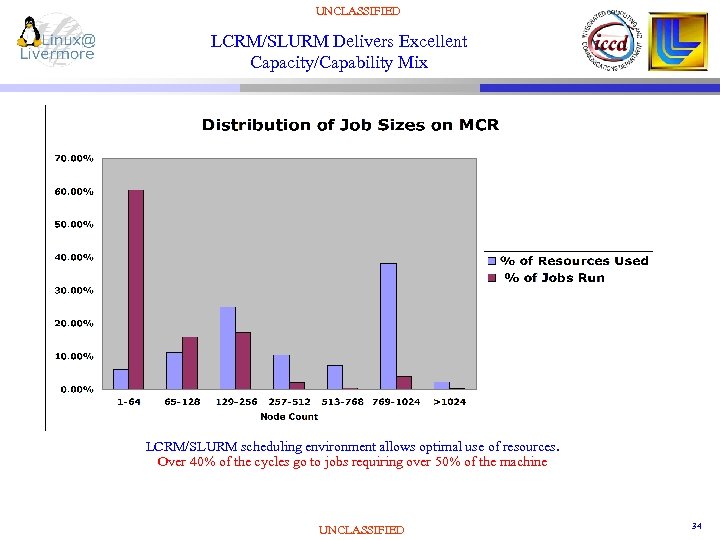

LCRM/SLURM Delivers Excellent Capacity/Capability Mix

- LCRM/SLURM scheduling environment allows optimal use of resources.
- Over 40% of the cycles go to jobs requiring over 50% of the machine.

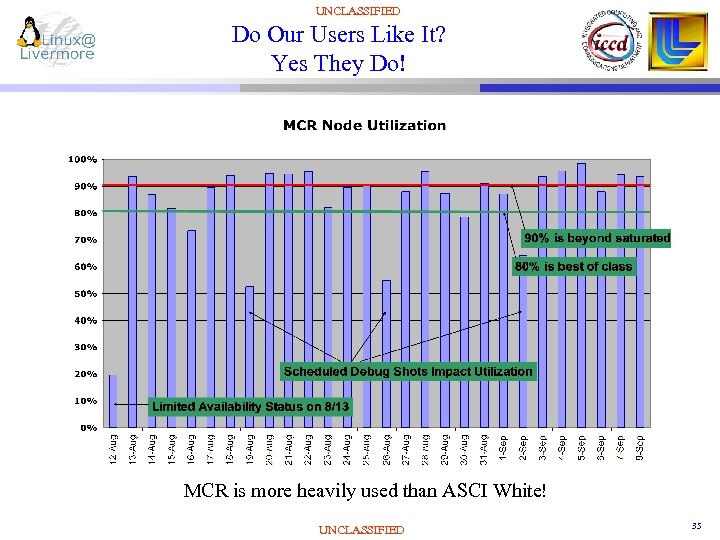

Do Our Users Like It? Yes They Do!

- MCR is more heavily used than ASCI White!
