14d3bd52c89b9baebc0bb88a3265895f.ppt

- Number of slides: 44

DemoSystem 2004
RTES Collaboration (NSF ITR grant ACI-0121658)
7 March 2005, FALSE II Workshop
Real-Time and Embedded Technology & Applications Symposium (IEEE), San Francisco, March 7-10, 2005

Introduction
- Our context: BTeV
  - A 20 TeraHz Real-Time System
- Our Solution
  - MIC, ARMORs, VLAs
- The demonstration system
- Demonstration
- Comments

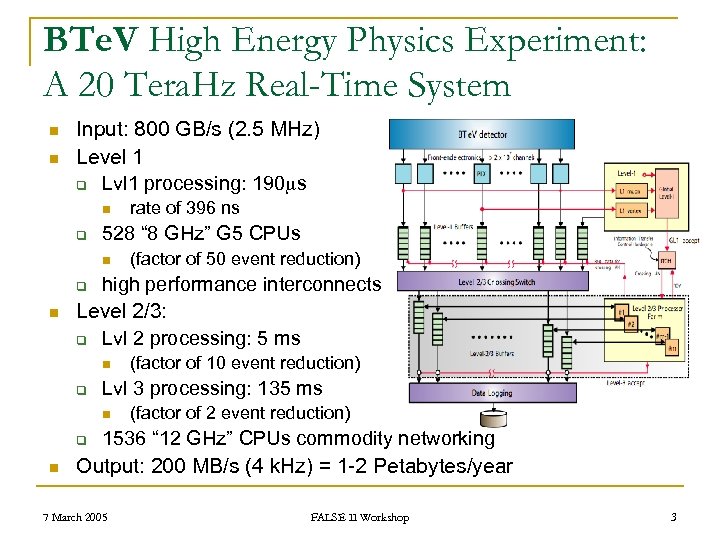

BTeV High Energy Physics Experiment: A 20 TeraHz Real-Time System
- Input: 800 GB/s (2.5 MHz)
- Level 1
  - Lvl 1 processing: 190 µs
    - rate of 396 ns
  - 528 “8 GHz” G5 CPUs
    - (factor of 50 event reduction)
  - high performance interconnects
- Level 2/3:
  - Lvl 2 processing: 5 ms
    - (factor of 10 event reduction)
  - Lvl 3 processing: 135 ms
    - (factor of 2 event reduction)
  - 1536 “12 GHz” CPUs
  - commodity networking
- Output: 200 MB/s (4 kHz) = 1-2 Petabytes/year

The Problem
- Monitoring, Fault Tolerance and Fault Mitigation are crucial
  - In a cluster of this size, processes and daemons are constantly hanging/failing without warning or notice
- Software reliability depends on
  - Physics detector-machine performance
  - Program testing procedures, implementation, and design quality
  - Behavior of the electronics (front-end and within the trigger)
- Hardware failures will occur!
  - one to a few per week

The Problem (continued)
- Given the very complex nature of this system where thousands of events are simultaneously and asynchronously cooking, issues of data integrity, robustness, and monitoring are critically important and have the capacity to cripple a design if not dealt with at the outset… BTeV [needs to] supply the necessary level of “self-awareness” in the trigger system. [June 2000 Project Review]

BTeV’s Response: RTES
- The Real Time Embedded System Group
  - A collaboration of five institutions:
    - University of Illinois
    - University of Pittsburgh
    - Syracuse University
    - Vanderbilt University (PI)
    - Fermilab
- NSF ITR grant ACI-0121658
- Physicists and Computer Scientists/Electrical Engineers at BTeV institutions with expertise in
  - High performance, real-time system software and hardware
  - Reliability and fault tolerance
  - System specification, generation, and modeling tools
- Working on fault management in large computing clusters

RTES Goals for BTeV
- High availability
  - Fault handling infrastructure capable of
    - Accurately identifying problems (where, what, and why)
    - Compensating for problems (shifting the load, changing thresholds)
    - Automated recovery procedures (restart / reconfiguration)
    - Accurate accounting
    - Extensibility (capturing new detection/recovery procedures)
    - Policy driven monitoring and control
- Dynamic reconfiguration
  - adjust to potentially changing resources

RTES Goals for BTeV (continued)
- Faults must be detected/corrected ASAP
  - semi-autonomously
    - with as little human intervention as possible
  - distributed and hierarchical monitoring and control
- Life-cycle maintainability and evolvability
  - to deal with new algorithms, new hardware and new versions of the OS

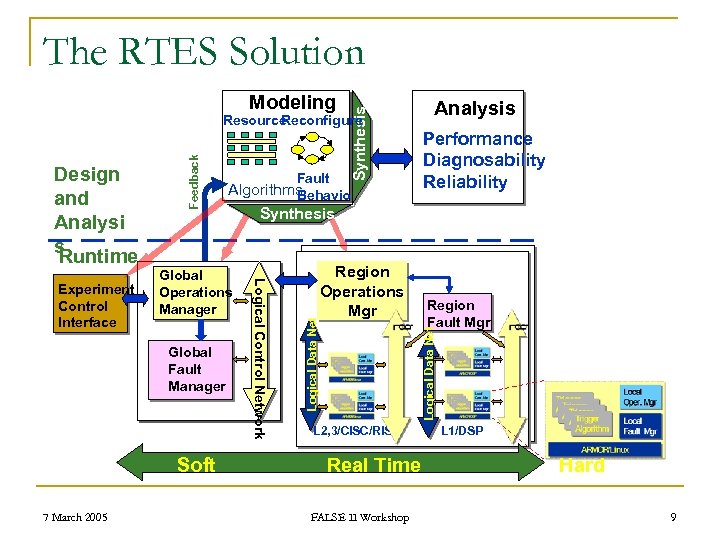

The RTES Solution
[Diagram labels. Design and Analysis: Modeling, Synthesis, Analysis (Performance, Diagnosability, Reliability), Fault Algorithms, Behavior Synthesis. Runtime: Experiment Control Interface, Global Fault Manager, Global Operations Manager, Region Fault Mgr, Region Operations Mgr, Logical Control Network, Logical Data Net, L1/DSP, L2,3/CISC/RISC. Links between the two: Feedback, Resource, Reconfigure. Scale: Soft Real Time to Hard Real Time.]

RTES Deliverables
- A hierarchical fault management system and toolkit:
  - Model Integrated Computing
    - GME (Generic Modeling Environment) system modeling tools
    - and application specific “graphic languages” for modeling system configuration, messaging, fault behaviors, user interface, etc.
  - ARMORs (Adaptive, Reconfigurable, and Mobile Objects for Reliability)
    - Robust framework for detection and reaction to faults in processes
  - VLAs (Very Lightweight Agents for limited resource environments)
    - To monitor/mitigate at every level
      - DSP, Supervisory nodes, Linux farm, etc.

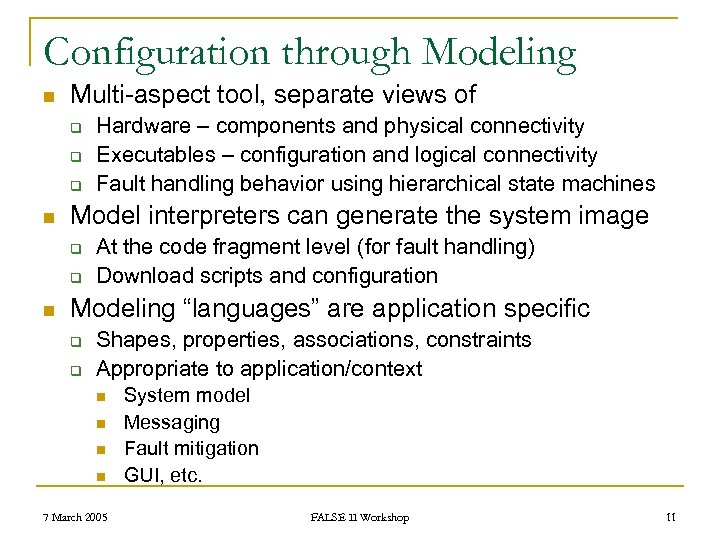

Configuration through Modeling
- Multi-aspect tool, separate views of
  - Hardware: components and physical connectivity
  - Executables: configuration and logical connectivity
  - Fault handling behavior using hierarchical state machines
- Model interpreters can generate the system image
  - At the code fragment level (for fault handling)
  - Download scripts and configuration
- Modeling “languages” are application specific
  - Shapes, properties, associations, constraints
  - Appropriate to application/context
    - System model
    - Messaging
    - Fault mitigation
    - GUI, etc.

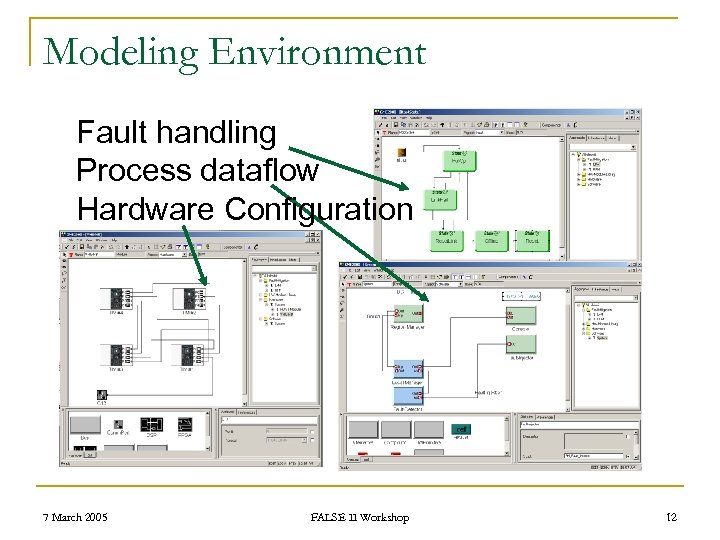

Modeling Environment
- Fault handling
- Process dataflow
- Hardware Configuration

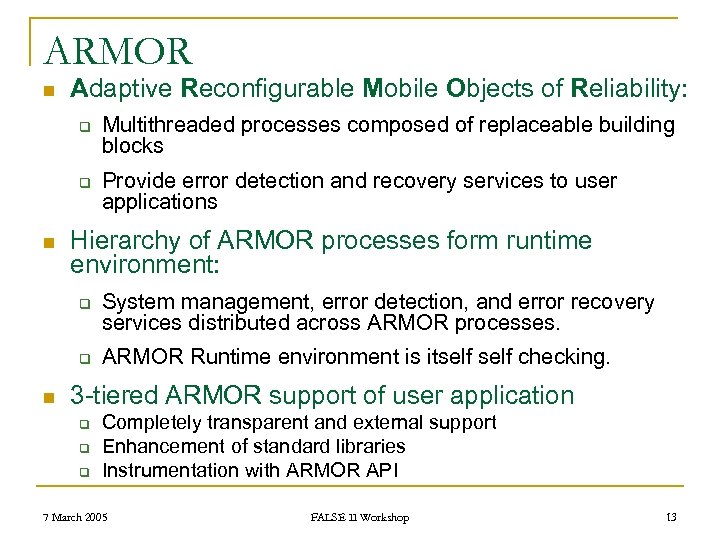

ARMOR
- Adaptive, Reconfigurable, and Mobile Objects for Reliability:
  - Multithreaded processes composed of replaceable building blocks
  - Provide error detection and recovery services to user applications
- Hierarchy of ARMOR processes forms the runtime environment:
  - System management, error detection, and error recovery services distributed across ARMOR processes
  - ARMOR runtime environment is itself self-checking
- 3-tiered ARMOR support of user application
  - Completely transparent and external support
  - Enhancement of standard libraries
  - Instrumentation with ARMOR API

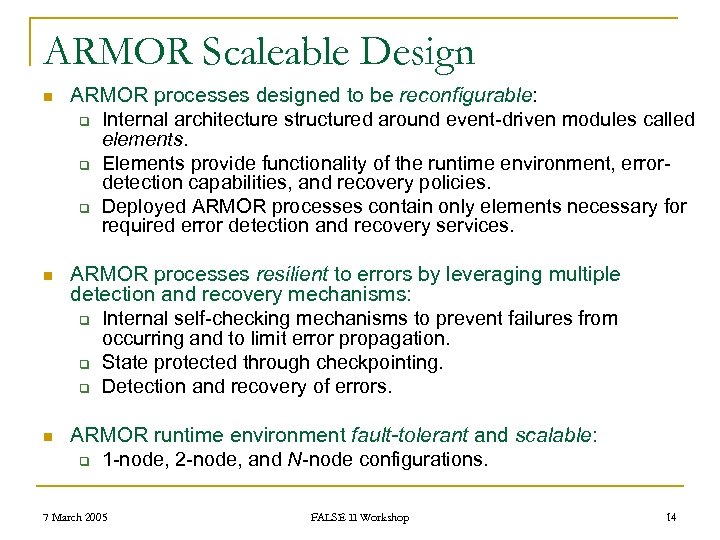

ARMOR Scalable Design
- ARMOR processes designed to be reconfigurable:
  - Internal architecture structured around event-driven modules called elements.
  - Elements provide functionality of the runtime environment, error-detection capabilities, and recovery policies.
  - Deployed ARMOR processes contain only elements necessary for required error detection and recovery services.
- ARMOR processes resilient to errors by leveraging multiple detection and recovery mechanisms:
  - Internal self-checking mechanisms to prevent failures from occurring and to limit error propagation.
  - State protected through checkpointing.
  - Detection and recovery of errors.
- ARMOR runtime environment fault-tolerant and scalable:
  - 1-node, 2-node, and N-node configurations.
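The idea of an ARMOR process built from event-driven elements can be pictured with a small dispatch loop. This is a minimal sketch under stated assumptions, not the ARMOR API: `Message`, `Microkernel`, `subscribe`, and `dispatch` are hypothetical names chosen for illustration.

```cpp
#include <cstddef>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical message: a type tag plus a text payload.
struct Message {
    std::string type;
    std::string payload;
};

// Hypothetical microkernel: elements register handlers for the message
// types they care about, and only registered elements are ever invoked.
class Microkernel {
public:
    using Handler = std::function<void(const Message&)>;

    // An element subscribes one handler to one message type.
    void subscribe(const std::string& type, Handler handler) {
        handlers_[type].push_back(std::move(handler));
    }

    // Deliver a message to every subscribed handler; returns how many ran.
    std::size_t dispatch(const Message& message) const {
        auto it = handlers_.find(message.type);
        if (it == handlers_.end()) return 0;
        for (const auto& handler : it->second) handler(message);
        return it->second.size();
    }

private:
    std::map<std::string, std::vector<Handler>> handlers_;
};
```

A process configured this way carries only the handlers that were subscribed, which mirrors the point that deployed ARMOR processes contain only the elements they need.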

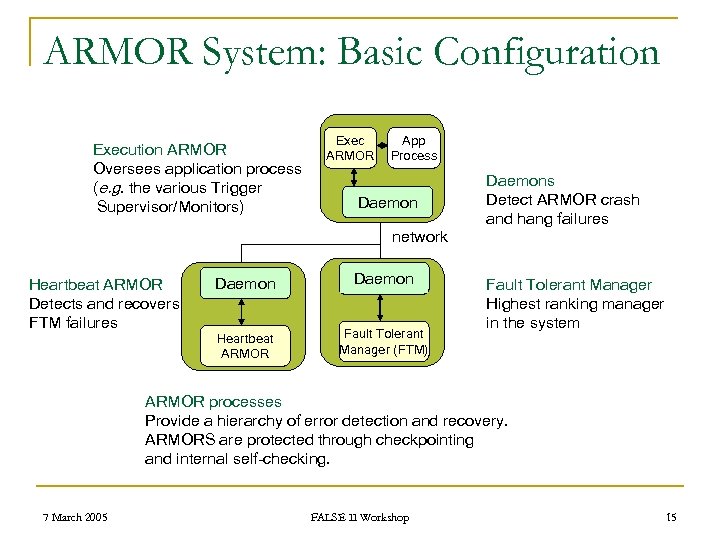

ARMOR System: Basic Configuration
- Execution ARMOR: oversees application process (e.g. the various Trigger Supervisor/Monitors)
- Heartbeat ARMOR: detects and recovers FTM failures
- Daemons: detect ARMOR crash and hang failures
- Fault Tolerant Manager (FTM): highest ranking manager in the system
- ARMOR processes: provide a hierarchy of error detection and recovery. ARMORs are protected through checkpointing and internal self-checking.
[Diagram labels: Exec ARMOR, App Process, Daemon, network, Heartbeat ARMOR, Fault Tolerant Manager (FTM)]
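Hang detection by heartbeat, as used between the daemons and ARMOR processes, can be sketched as a table of last-seen timestamps. The class below is an illustrative assumption, not ARMOR code: `HeartbeatMonitor`, `beat`, `overdue`, and the millisecond timeout are hypothetical.

```cpp
#include <map>
#include <string>
#include <vector>

// Hypothetical heartbeat table: records when each process last reported in.
class HeartbeatMonitor {
public:
    explicit HeartbeatMonitor(long timeout_ms) : timeout_ms_(timeout_ms) {}

    // Called whenever a heartbeat arrives from `name` at time `now_ms`.
    void beat(const std::string& name, long now_ms) {
        last_seen_[name] = now_ms;
    }

    // Names whose last heartbeat is older than the timeout at `now_ms`.
    // These are the candidates for a restart or other recovery action.
    std::vector<std::string> overdue(long now_ms) const {
        std::vector<std::string> late;
        for (const auto& entry : last_seen_) {
            if (now_ms - entry.second > timeout_ms_) late.push_back(entry.first);
        }
        return late;
    }

private:
    long timeout_ms_;
    std::map<std::string, long> last_seen_;
};
```

Passing the current time in explicitly, rather than reading a clock inside the class, keeps the sketch deterministic and easy to test.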

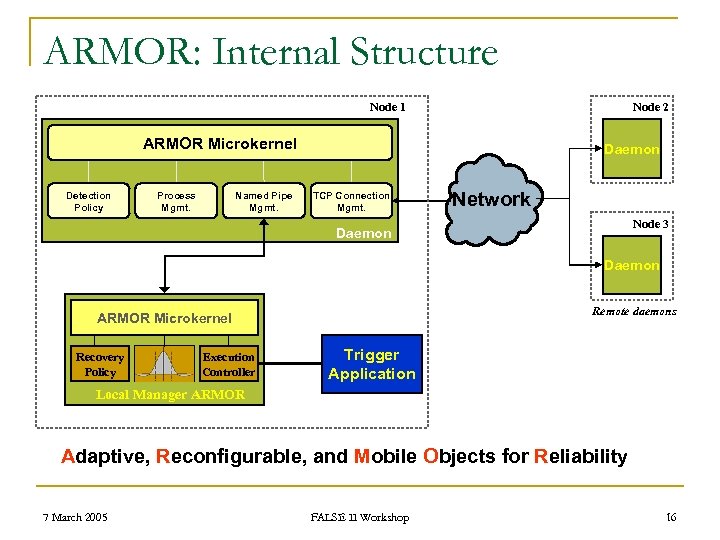

ARMOR: Internal Structure
[Diagram labels. Nodes: Node 1, Node 2, Node 3, each with a Daemon, connected by the Network; Remote daemons. ARMOR Microkernel elements: Detection Policy, Recovery Policy, Process Mgmt., Named Pipe Mgmt., TCP Connection Mgmt. Processes: Execution Controller, Trigger Application, Local Manager. ARMOR: Adaptive, Reconfigurable, and Mobile Objects for Reliability.]

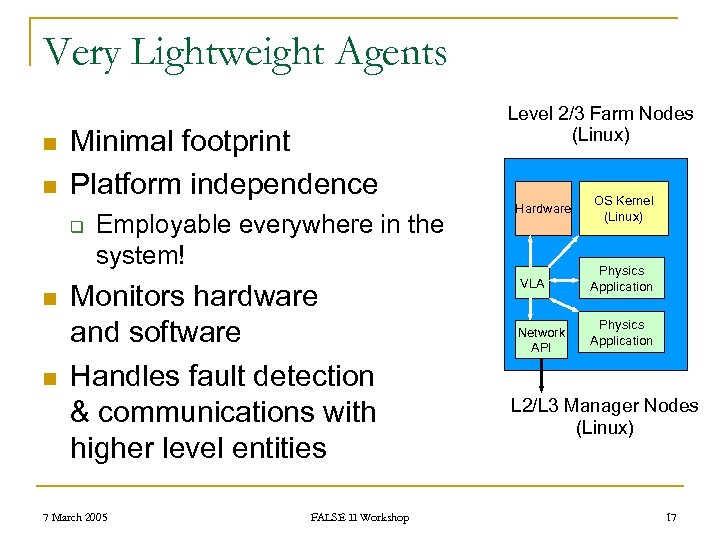

Very Lightweight Agents
- Minimal footprint
- Platform independence
  - Employable everywhere in the system!
- Monitors hardware and software
- Handles fault detection & communications with higher level entities
[Diagram labels: Level 2/3 Farm Nodes (Linux) with Hardware, OS Kernel (Linux), Physics Application, VLA, Network API; L2/L3 Manager Nodes (Linux)]

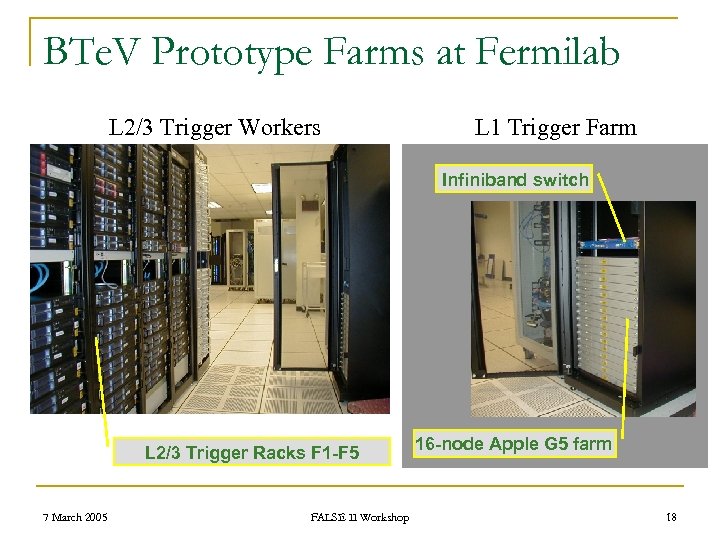

BTeV Prototype Farms at Fermilab
[Photo labels: L1 Trigger Farm (16-node Apple G5 farm, Infiniband switch); L2/3 Trigger Workers; L2/3 Trigger Racks F1-F5]

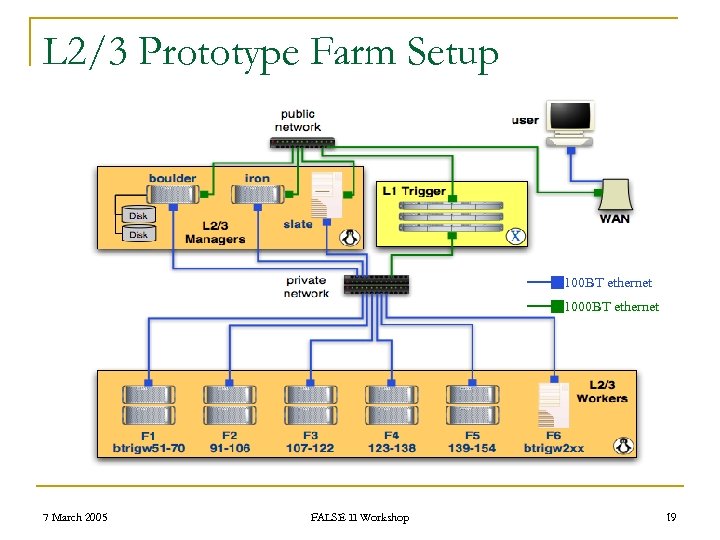

L2/3 Prototype Farm Setup
[Diagram legend: 100BT ethernet, 1000BT ethernet]

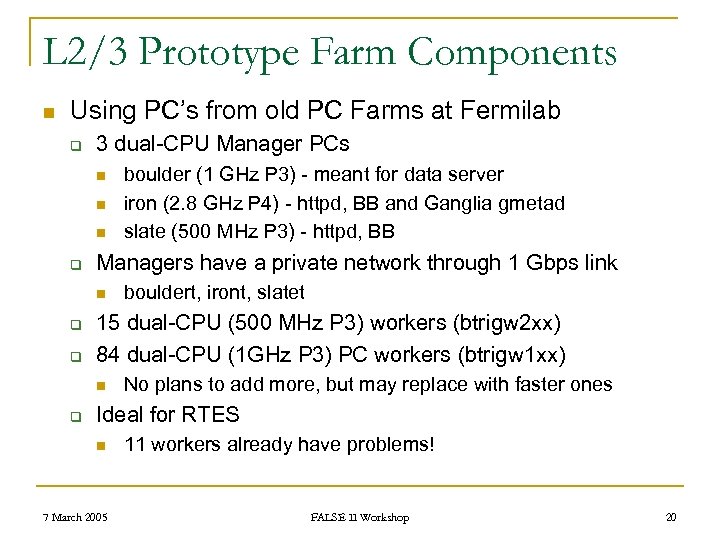

L2/3 Prototype Farm Components
- Using PCs from old PC Farms at Fermilab
  - 3 dual-CPU Manager PCs
    - boulder (1 GHz P3) - meant for data server
    - iron (2.8 GHz P4) - httpd, BB and Ganglia gmetad
    - slate (500 MHz P3) - httpd, BB
  - Managers have a private network through 1 Gbps link
    - bouldert, iront, slatet
  - 15 dual-CPU (500 MHz P3) workers (btrigw2xx)
  - 84 dual-CPU (1 GHz P3) PC workers (btrigw1xx)
    - No plans to add more, but may replace with faster ones
  - Ideal for RTES
    - 11 workers already have problems!

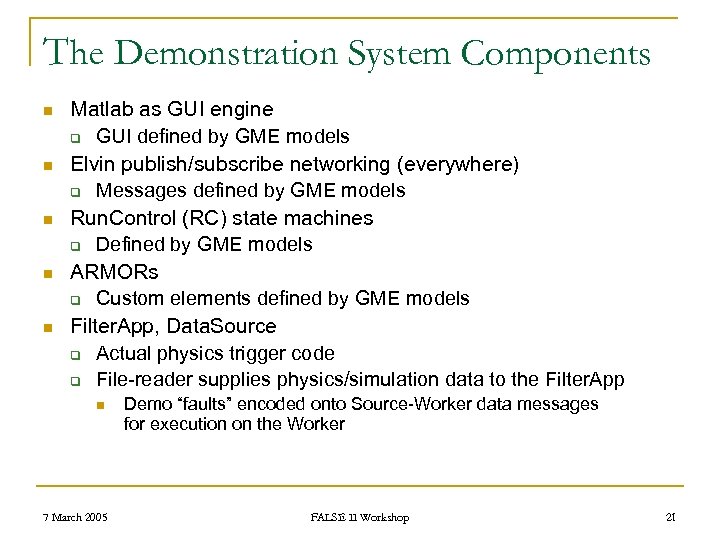

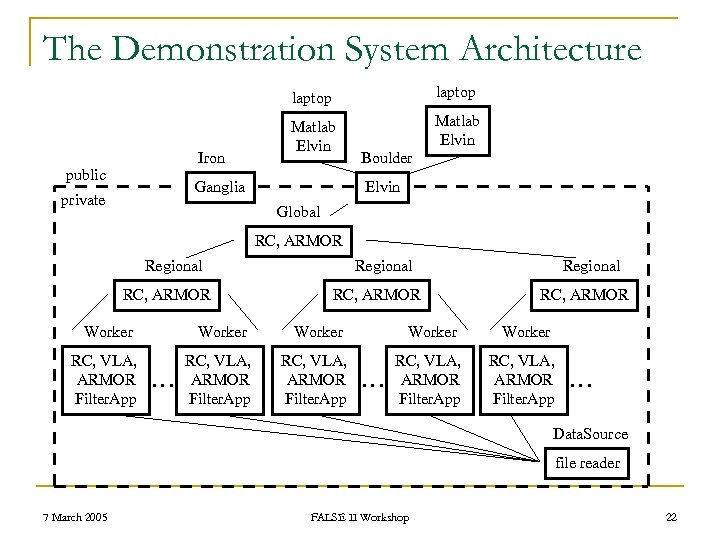

The Demonstration System Components
- Matlab as GUI engine
  - GUI defined by GME models
- Elvin publish/subscribe networking (everywhere)
  - Messages defined by GME models
- RunControl (RC) state machines
  - Defined by GME models
- ARMORs
  - Custom elements defined by GME models
- FilterApp, DataSource
  - Actual physics trigger code
  - File-reader supplies physics/simulation data to the FilterApp
    - Demo “faults” encoded onto Source-Worker data messages for execution on the Worker

The Demonstration System Architecture
[Diagram labels. Public network: laptops running Matlab; Iron (Elvin, Ganglia). Private network: Boulder (Elvin, Global RC, ARMOR, DataSource file reader); Regional (RC, ARMOR); Workers (RC, VLA, ARMOR, FilterApp).]

BB and Ganglia
- For traditional monitoring
- http://iron.fnal.gov/

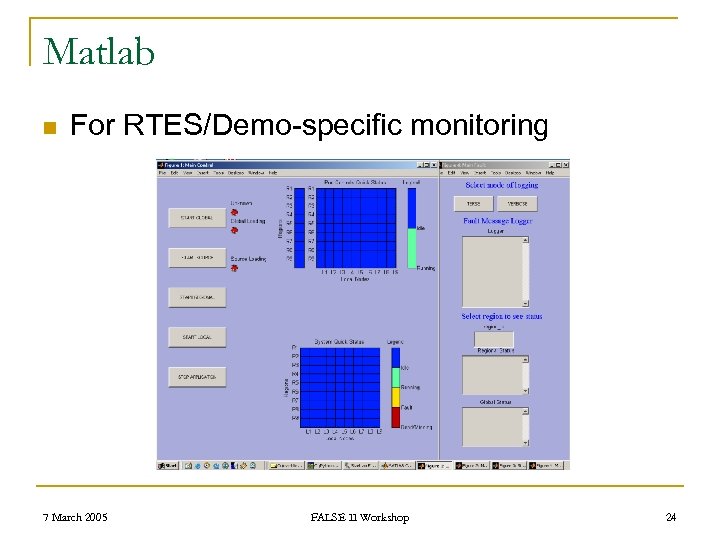

Matlab
- For RTES/Demo-specific monitoring

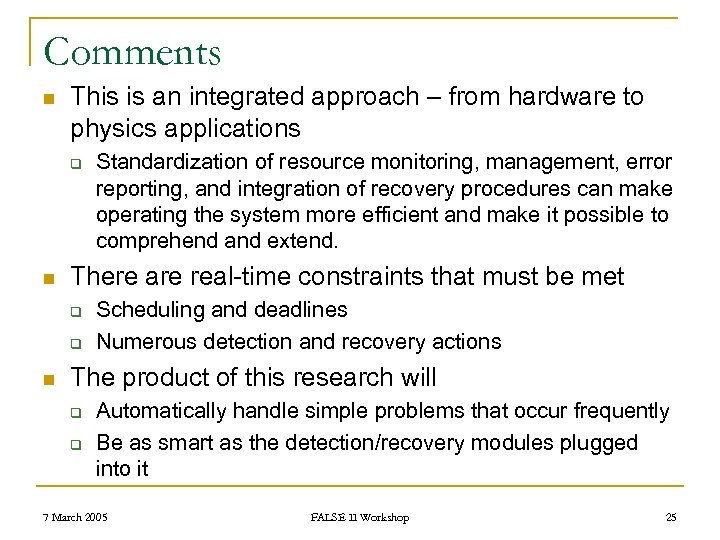

Comments
- This is an integrated approach, from hardware to physics applications
  - Standardization of resource monitoring, management, error reporting, and integration of recovery procedures can make operating the system more efficient and make it possible to comprehend and extend.
- There are real-time constraints that must be met
  - Scheduling and deadlines
  - Numerous detection and recovery actions
- The product of this research will
  - Automatically handle simple problems that occur frequently
  - Be as smart as the detection/recovery modules plugged into it

Comments (continued)
- The product can lead to better or increased
  - System uptime by compensating for problems or predicting them
    - instead of pausing or stopping the experiment
  - Resource utilization
    - the system will use resources that it needs
  - Understanding of the operating characteristics of the software
  - Ability to debug and diagnose difficult problems

Further Information
- General information about RTES
  - http://www-btev.fnal.gov/public/hep/detector/rtes/
- General information about BTeV
  - http://www-btev.fnal.gov/
- Information about GME and the Vanderbilt ISIS group
  - http://www.isis.vanderbilt.edu/

Further Information (continued)
- Information about ARMOR technology
  - http://www.crhc.uiuc.edu/DEPEND/projects.ARMORs.htm
- Talks from our last workshop
  - http://false2002.vanderbilt.edu/program.php
- Wiki (internal, today)
  - whcdf03.fnal.gov/BTeV-wiki/DemoSystem2004
- Elvin publish/subscribe networking
  - www.mantara.com

Demonstration Outline
- GME
  - SIML, DTML, Custom Elements, Matlab GUI
  - Meta language
- Building and Deployment
- Runtime
  - ARMORs, VLAs
  - Fault detection and mitigation
- Reconfigure and Redeploy
  - 2x, 4x

Demonstration Slides

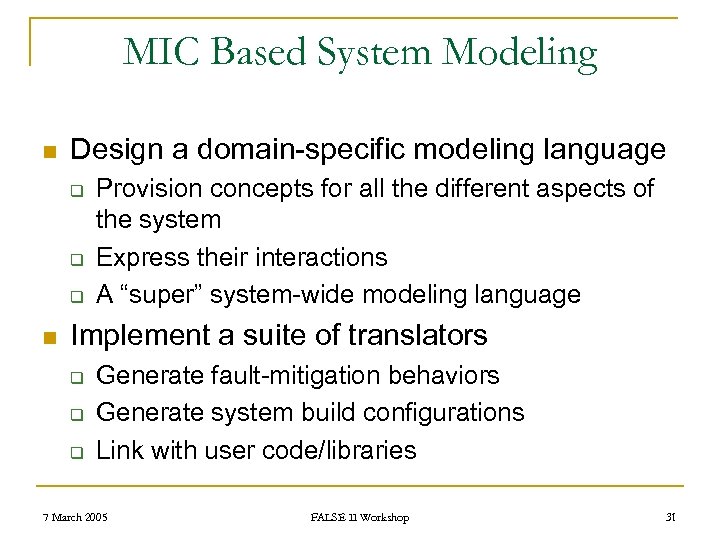

MIC Based System Modeling
- Design a domain-specific modeling language
  - Provision concepts for all the different aspects of the system
  - Express their interactions
  - A “super” system-wide modeling language
- Implement a suite of translators
  - Generate fault-mitigation behaviors
  - Generate system build configurations
  - Link with user code/libraries

SIML: System Integration Modeling Language
- Model Component Hierarchy and Interactions
- Loosely specified model of computation
- Model information relevant for system configuration

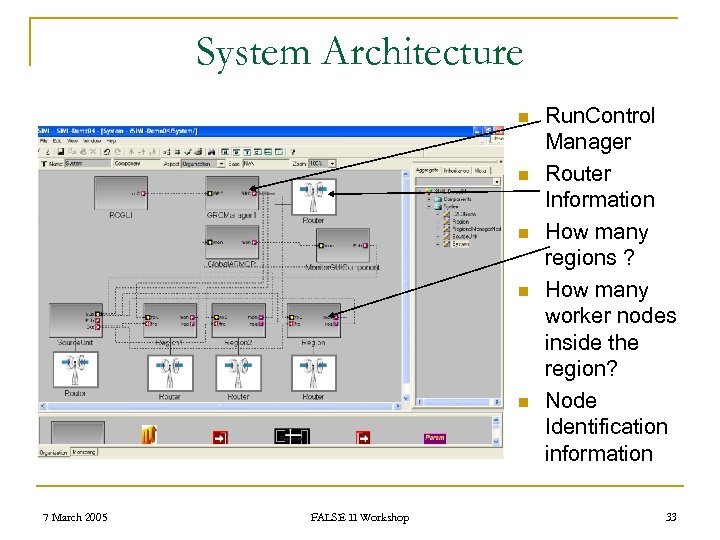

System Architecture
- RunControl Manager
- Router Information
- How many regions?
- How many worker nodes inside the region?
- Node Identification information

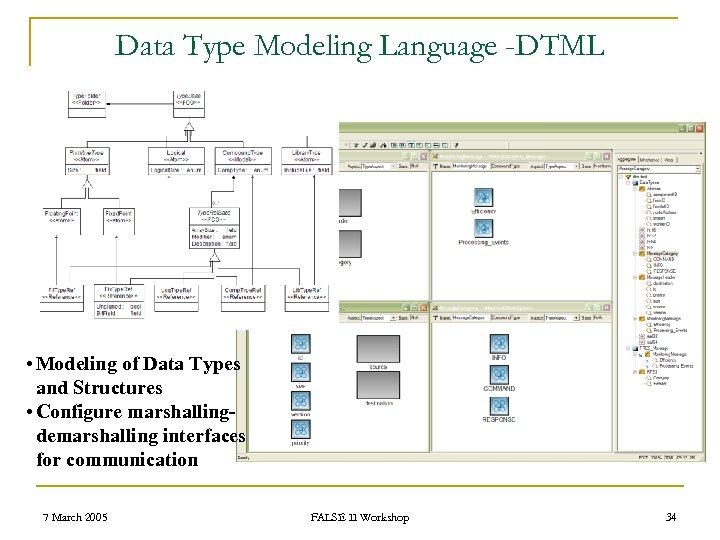

Data Type Modeling Language (DTML)
- Modeling of Data Types and Structures
- Configure marshalling/demarshalling interfaces for communication
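Marshalling turns a typed structure into a byte sequence for the wire, and demarshalling reverses it. The sketch below shows the general technique only. The `NodeStatus` type, its fields, and the big-endian layout are assumptions for illustration and are not taken from DTML or its generated code.

```cpp
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical data type of the kind a data-type model might describe.
struct NodeStatus {
    std::uint32_t node_id;
    std::uint16_t load_percent;
};

// Marshal into 6 bytes, most significant byte first (assumed wire format).
std::vector<std::uint8_t> marshal(const NodeStatus& status) {
    std::vector<std::uint8_t> bytes;
    for (int shift = 24; shift >= 0; shift -= 8)
        bytes.push_back(static_cast<std::uint8_t>(status.node_id >> shift));
    for (int shift = 8; shift >= 0; shift -= 8)
        bytes.push_back(static_cast<std::uint8_t>(status.load_percent >> shift));
    return bytes;
}

// Demarshal the same 6-byte layout; rejects buffers of the wrong size.
NodeStatus demarshal(const std::vector<std::uint8_t>& bytes) {
    if (bytes.size() != 6) throw std::invalid_argument("expected 6 bytes");
    NodeStatus status{0, 0};
    for (std::size_t i = 0; i < 4; ++i)
        status.node_id = (status.node_id << 8) | bytes[i];
    for (std::size_t i = 4; i < 6; ++i)
        status.load_percent =
            static_cast<std::uint16_t>((status.load_percent << 8) | bytes[i]);
    return status;
}
```

Generating this pair of functions from a model, rather than writing it by hand for each message, is what keeps the sender and receiver in agreement about the layout.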

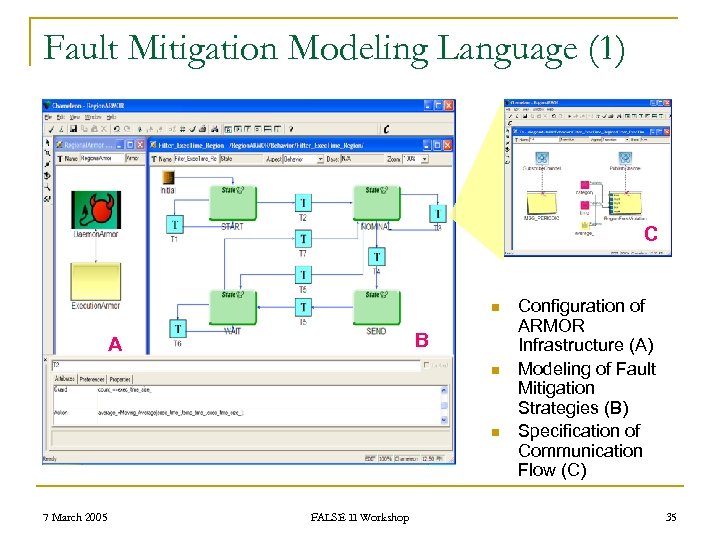

Fault Mitigation Modeling Language (1)
- Configuration of ARMOR Infrastructure (A)
- Modeling of Fault Mitigation Strategies (B)
- Specification of Communication Flow (C)

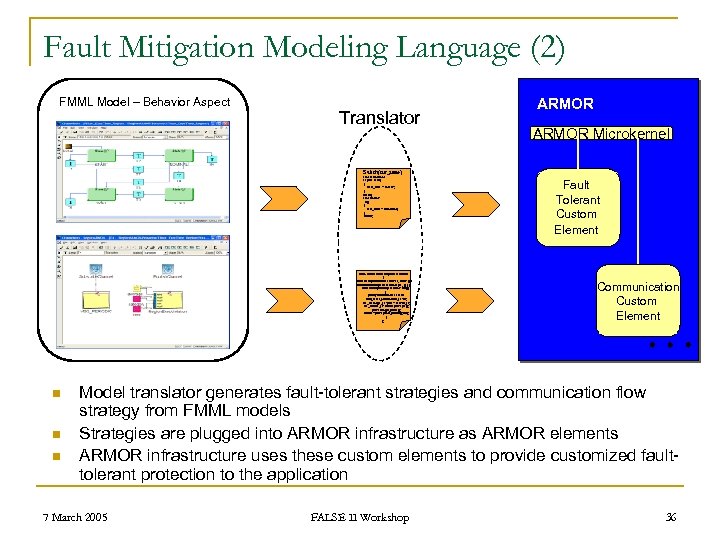

Fault Mitigation Modeling Language (2)
- Model translator generates fault-tolerant strategies and communication flow strategy from FMML models
- Strategies are plugged into ARMOR infrastructure as ARMOR elements
- ARMOR infrastructure uses these custom elements to provide customized fault-tolerant protection to the application
[Figure labels: FMML Model (Behavior Aspect), Translator, ARMOR Microkernel, Fault Tolerant Custom Element, Communication Custom Element]
[Generated-code excerpt shown in the figure, state machine: switch (cur_state) { case NOMINAL: if (time < 100) { next_state = FAULT; } break; case FAULT: if () { next_state = NOMINAL; } break; }]
[Generated-code excerpt shown in the figure, callback class, clipped in the slide: class armorcallback 0: public Callback { public: ack 0(Controls. Cection *cc, void *p) : Callback. Fault. Inject. Tererbose>(cc, p) { } void invoke(Fault. Injecerbose* msg) { printf("Callback. Recievede dtml_rcver_Local. Armor_ct *Lo; mc_message_ct *pmc = new m_ct; mc_bundle_ct *bundlepmc->ple(); pmc->assign_name(); bundle=pmc->push_bundle(); mc); } };]
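The state-machine excerpt on this slide suggests the shape of the behavior code a translator might emit. The sketch below is a hand-written approximation, not translator output. The NOMINAL and FAULT states and the `time < 100` guard come from the slide; the `Inputs` struct and the `recovered` flag are hypothetical, standing in for the FAULT-to-NOMINAL guard that the slide leaves empty.

```cpp
// States named on the slide.
enum class State { NOMINAL, FAULT };

// Hypothetical inputs sampled on each step.
struct Inputs {
    long time;       // quantity tested by the slide's `time < 100` guard
    bool recovered;  // assumed recovery signal; not shown on the slide
};

// One transition step: returns the next state for the current state and inputs.
State step(State current, const Inputs& in) {
    State next = current;
    switch (current) {
    case State::NOMINAL:
        if (in.time < 100) next = State::FAULT;
        break;
    case State::FAULT:
        if (in.recovered) next = State::NOMINAL;
        break;
    }
    return next;
}
```

Keeping the transition logic in a pure function of state and inputs makes each modeled transition straightforward to check in isolation.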

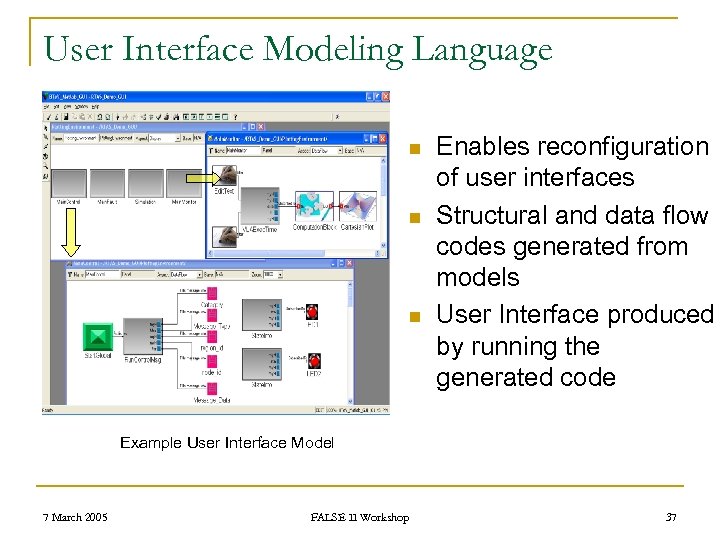

User Interface Modeling Language
- Enables reconfiguration of user interfaces
- Structural and data flow codes generated from models
- User Interface produced by running the generated code
[Figure: Example User Interface Model]

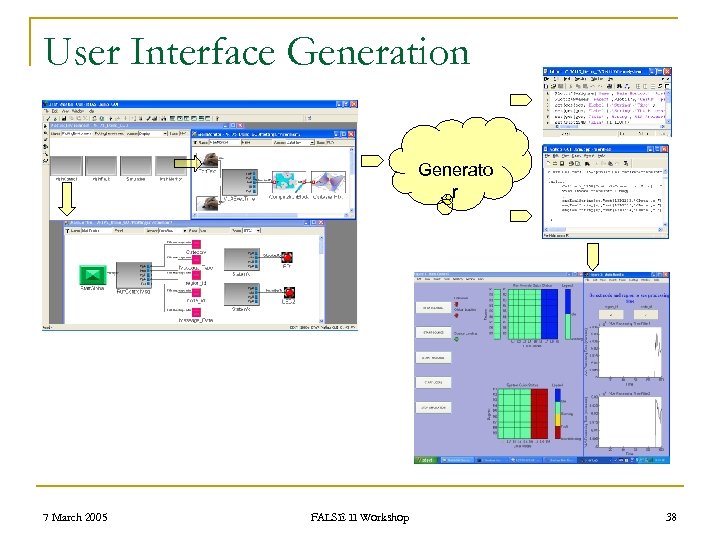

User Interface Generation
[Figure label: Generator]

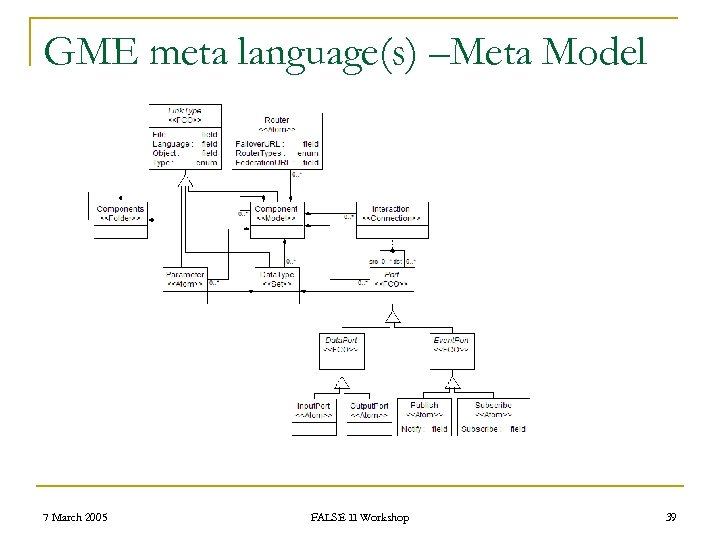

GME meta language(s): Meta Model

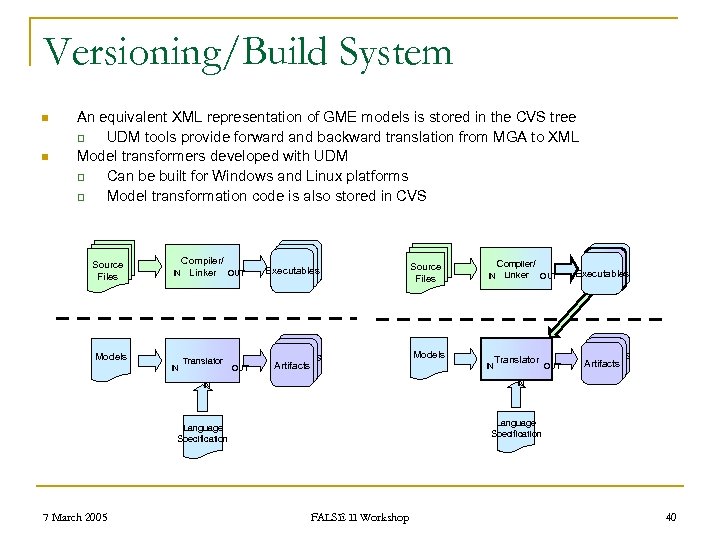

Versioning/Build System
- An equivalent XML representation of GME models is stored in the CVS tree
  - UDM tools provide forward and backward translation from MGA to XML
- Model transformers developed with UDM
  - Can be built for Windows and Linux platforms
  - Model transformation code is also stored in CVS
[Diagram labels: Models and Language Specification IN to Translator, Artifacts OUT; Source Files and Object Artifacts IN to Compiler/Linker, Object Artifacts and Executables OUT]

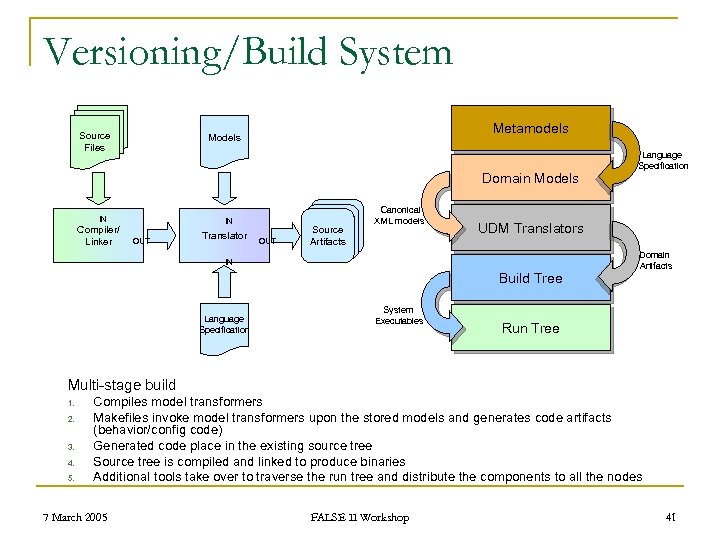

Versioning/Build System
Multi-stage build
1. Compile the model transformers
2. Makefiles invoke the model transformers on the stored models and generate code artifacts (behavior/config code)
3. Generated code is placed in the existing source tree
4. Source tree is compiled and linked to produce binaries
5. Additional tools take over to traverse the run tree and distribute the components to all the nodes
[Diagram labels: Metamodels, Domain Models, Canonical XML models, UDM Translators, Language Specification, Translator, Source Artifacts, Source Files, Compiler/Linker, Object Artifacts, Domain Artifacts, System Executables, Build Tree, Run Tree]

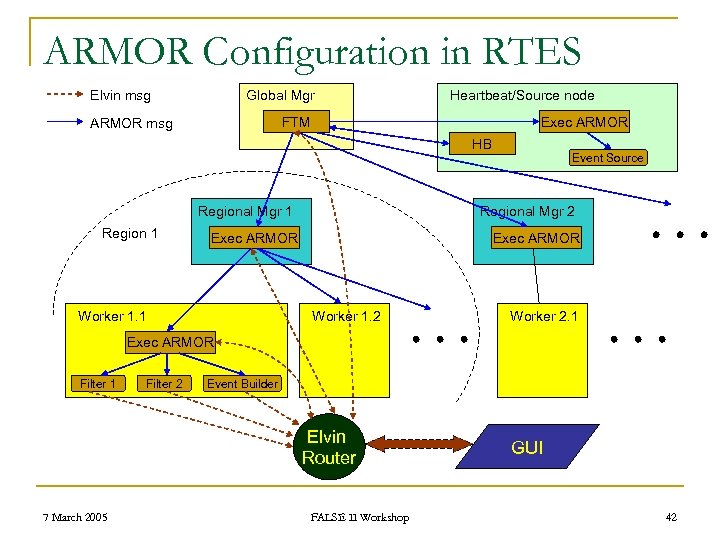

ARMOR Configuration in RTES
[Diagram labels: Heartbeat/Source node (FTM, HB, Event Source); Global Mgr; Regional Mgr 1 and Regional Mgr 2, each with an Exec ARMOR; Worker 1.1, Worker 1.2, Worker 2.1, each with an Exec ARMOR, Filter 1, Filter 2, Event Builder; Elvin Router; GUI. Link types: Elvin msg, ARMOR msg.]

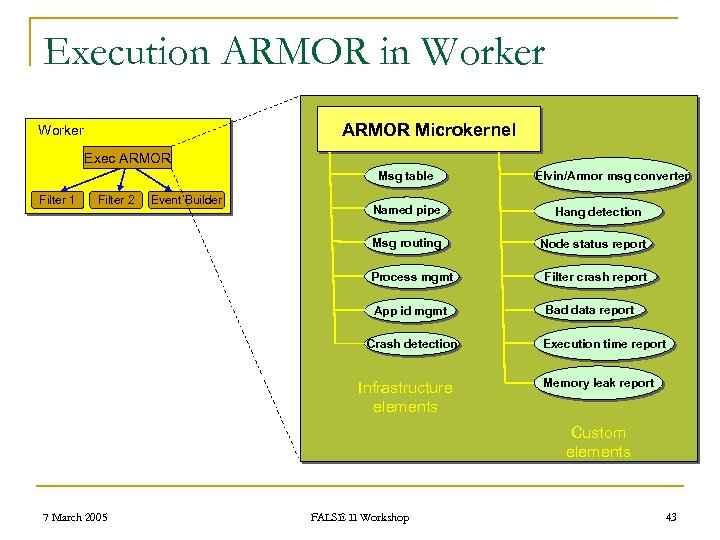

Execution ARMOR in Worker
[Diagram labels. Worker processes: Filter 1, Filter 2, Event Builder, connected to the Exec ARMOR by named pipe. Exec ARMOR: ARMOR Microkernel, Msg table. Elements, grouped in the figure as Infrastructure elements and Custom elements: Named pipe, Msg routing, Elvin/Armor msg converter, Hang detection, Node status report, Process mgmt, Filter crash report, App id mgmt, Bad data report, Crash detection, Execution time report, Memory leak report.]

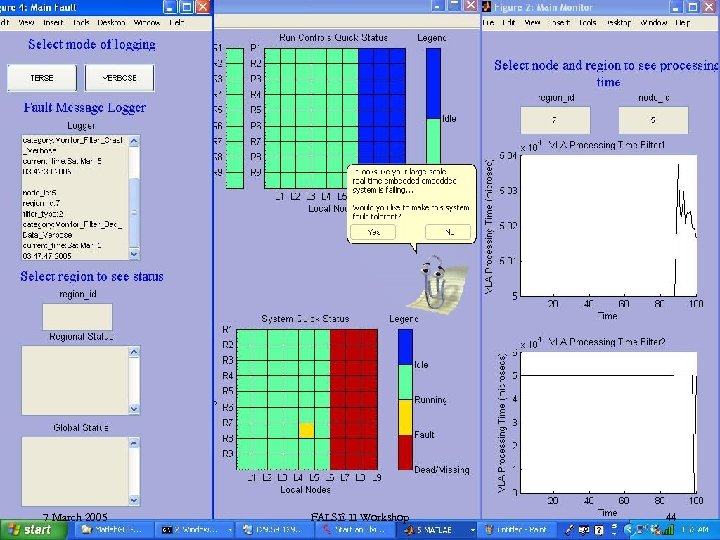

