74650e19eebed13382c747f523d85ffa.ppt

- Number of slides: 10

DEISA security

Jules Wolfrat, SARA

MSWG, Amsterdam, December 15, 2005

DEISA objectives

- To enable Europe’s terascale science by integrating Europe’s most powerful supercomputing systems.
- Enabling scientific discovery across a broad spectrum of science and technology is the only criterion for success.
- DEISA is a European supercomputing service built on top of existing national services. The service is based on the deployment and operation of a persistent, production-quality, distributed supercomputing environment with continental scope.
- The integration of national facilities and services, together with innovative operational models, is expected to add substantial value to existing infrastructures.
- The main focus is High Performance Computing (HPC).

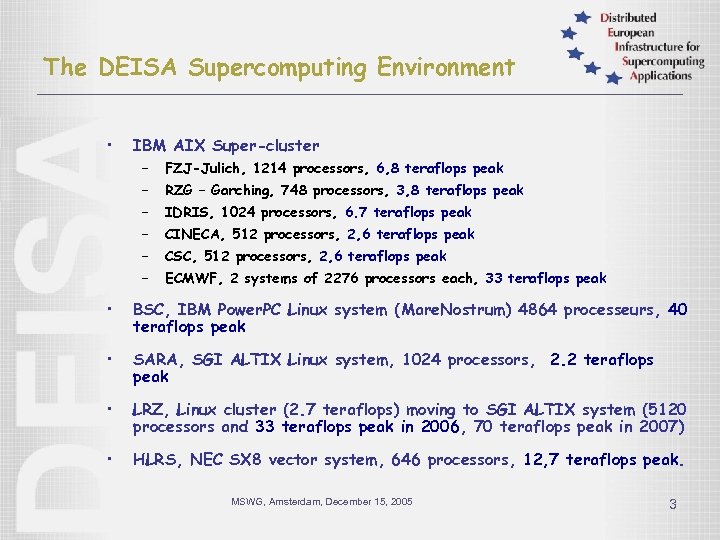

The DEISA Supercomputing Environment

- IBM AIX super-cluster
  - FZJ-Julich, 1214 processors, 6.8 teraflops peak
  - RZG-Garching, 748 processors, 3.8 teraflops peak
  - IDRIS, 1024 processors, 6.7 teraflops peak
  - CINECA, 512 processors, 2.6 teraflops peak
  - CSC, 512 processors, 2.6 teraflops peak
  - ECMWF, 2 systems of 2276 processors each, 33 teraflops peak
- BSC, IBM PowerPC Linux system (MareNostrum), 4864 processors, 40 teraflops peak
- SARA, SGI ALTIX Linux system, 1024 processors, 2.2 teraflops peak
- LRZ, Linux cluster (2.7 teraflops) moving to SGI ALTIX system (5120 processors and 33 teraflops peak in 2006, 70 teraflops peak in 2007)
- HLRS, NEC SX-8 vector system, 646 processors, 12.7 teraflops peak

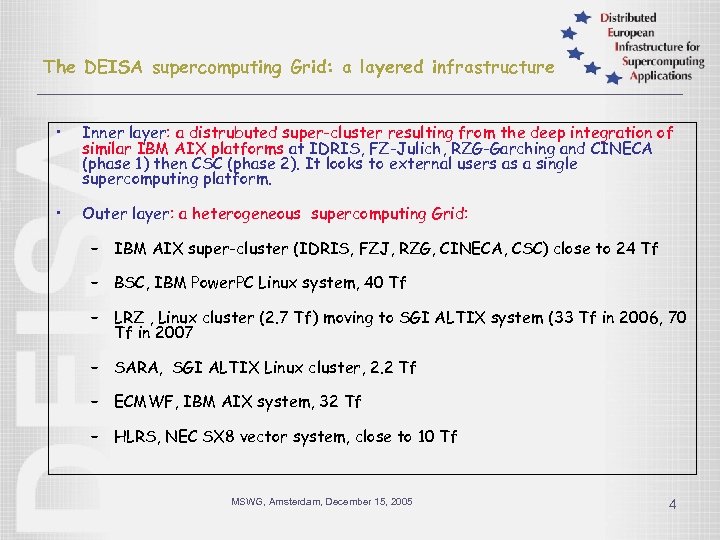

The DEISA supercomputing Grid: a layered infrastructure

- Inner layer: a distributed super-cluster resulting from the deep integration of similar IBM AIX platforms at IDRIS, FZ-Julich, RZG-Garching and CINECA (phase 1), then CSC (phase 2). To external users it looks like a single supercomputing platform.
- Outer layer: a heterogeneous supercomputing Grid:
  - IBM AIX super-cluster (IDRIS, FZJ, RZG, CINECA, CSC), close to 24 Tf
  - BSC, IBM PowerPC Linux system, 40 Tf
  - LRZ, Linux cluster (2.7 Tf) moving to SGI ALTIX system (33 Tf in 2006, 70 Tf in 2007)
  - SARA, SGI ALTIX Linux cluster, 2.2 Tf
  - ECMWF, IBM AIX system, 32 Tf
  - HLRS, NEC SX-8 vector system, close to 10 Tf

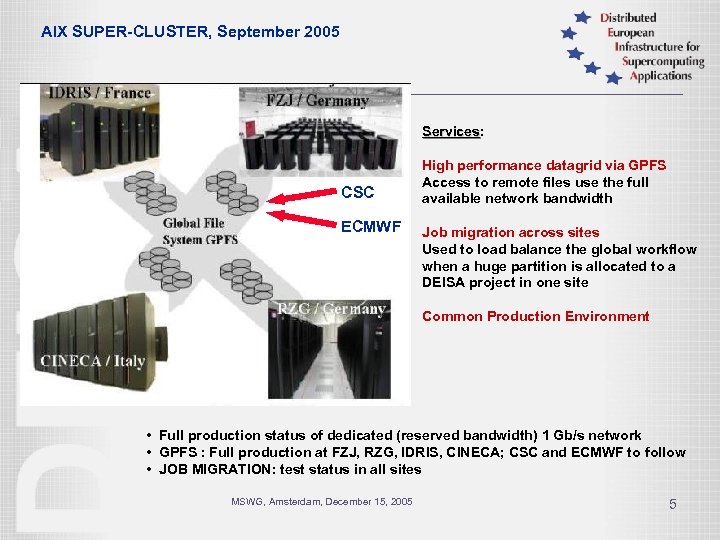

AIX super-cluster, September 2005

Services:

- High-performance datagrid via GPFS: access to remote files uses the full available network bandwidth.
- Job migration across sites: used to load-balance the global workflow when a huge partition is allocated to a DEISA project at one site.
- Common Production Environment

Status:

- Full production status of the dedicated (reserved bandwidth) 1 Gb/s network
- GPFS: full production at FZJ, RZG, IDRIS, CINECA; CSC and ECMWF to follow
- Job migration: test status at all sites

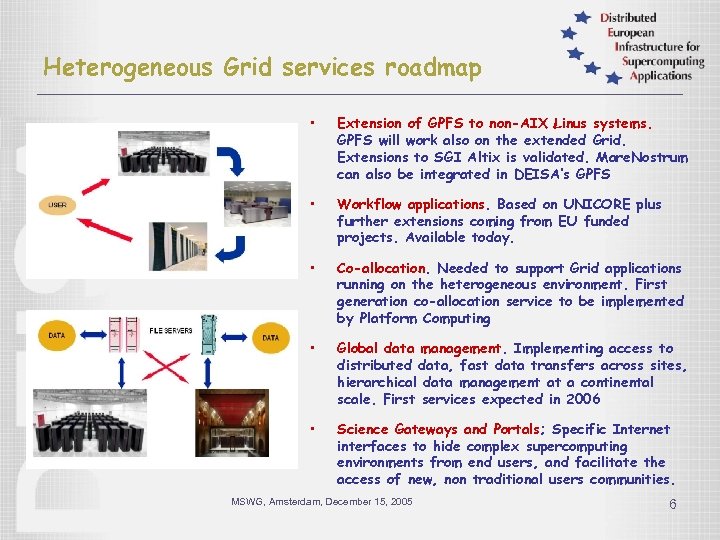

Heterogeneous Grid services roadmap

- Extension of GPFS to non-AIX Linux systems. GPFS will also work on the extended Grid. The extension to SGI Altix has been validated. MareNostrum can also be integrated into DEISA’s GPFS.
- Workflow applications. Based on UNICORE plus further extensions coming from EU-funded projects. Available today.
- Co-allocation. Needed to support Grid applications running on the heterogeneous environment. A first-generation co-allocation service is to be implemented by Platform Computing.
- Global data management. Implementing access to distributed data, fast data transfers across sites, and hierarchical data management at a continental scale. First services expected in 2006.
- Science Gateways and Portals. Specific Internet interfaces to hide complex supercomputing environments from end users and to facilitate access for new, non-traditional user communities.

Technologies deployed

- Batch systems integrated between core sites (LoadLeveler MC)
- Transparent data access
  - Global file system
  - GPFS (MC) on IBM systems
  - High-performance parallel file system; a high-throughput network is needed between sites to achieve performance
  - Dedicated network between sites, currently provided by GEANT and NRENs (1 Gbps)
  - AFS (if GPFS is not available)
- UNICORE for job submission in the heterogeneous environment
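To give a feel for why a high-throughput network matters for remote file access, the sketch below estimates how long a bulk transfer takes on a link of a given speed. The function name and the `efficiency` parameter are illustrative assumptions, not part of GPFS or of any DEISA tool, and the 1 TB data size is only an example.

```python
def transfer_time_seconds(size_bytes: float,
                          link_bits_per_second: float,
                          efficiency: float = 1.0) -> float:
    """Idealised time to move size_bytes over a link.

    efficiency is a hypothetical factor (0 < efficiency <= 1) for the share
    of the nominal link rate that is actually usable for payload data.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return size_bytes * 8 / (link_bits_per_second * efficiency)


ONE_TERABYTE = 1e12   # bytes (decimal terabyte, example size)
ONE_GBPS = 1e9        # bits per second, the link speed named on the slide

seconds = transfer_time_seconds(ONE_TERABYTE, ONE_GBPS)
print(f"{seconds:.0f} s, about {seconds / 3600:.1f} hours")
```

At the full nominal rate, one terabyte needs 8000 seconds, a little over two hours, so data sets of this size quickly make the inter-site link the limiting factor.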

DEISA AA (1)

- For both LL-MC and GPFS, authentication (AuthX) and authorization (AuthZ) are based on POSIX ids.
- DEISA user ids and group ids must be synchronized between sites.
- The user administration system is built on LDAP:
  - Each site adds the DEISA users from its own site to the LDAP system.
  - Other sites extract this information and update their local user administration.
- Duplicate ids are avoided by using reserved ranges for each partner, for both uid and gid; existing users also get a new DEISA user id.
- GPFS also has mapping functionality, e.g. between uid 1 (site A) and uid 2 (site B); not used yet.
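The reserved-range scheme can be sketched as follows. This is a minimal illustration under stated assumptions: the site names, the range boundaries, and the `DeisaUser` record are hypothetical and do not reflect the actual DEISA allocation or LDAP schema.

```python
from dataclasses import dataclass

# Hypothetical reserved UID ranges, one per partner site (illustrative values).
RESERVED_UID_RANGES = {
    "site-a": range(100000, 110000),
    "site-b": range(110000, 120000),
}


@dataclass(frozen=True)
class DeisaUser:
    username: str
    uid: int
    home_site: str


def allocate_uid(site: str, used: set[int]) -> int:
    """Return the lowest free UID inside the site's reserved range."""
    for uid in RESERVED_UID_RANGES[site]:
        if uid not in used:
            return uid
    raise RuntimeError(f"reserved UID range for {site} is exhausted")


def check_import(users: list[DeisaUser]) -> list[str]:
    """Check records extracted from the shared directory before they are
    applied to the local user administration."""
    problems = []
    seen: dict[int, str] = {}
    for user in users:
        allowed = RESERVED_UID_RANGES.get(user.home_site, range(0))
        if user.uid not in allowed:
            problems.append(
                f"{user.username}: uid {user.uid} is outside the range of {user.home_site}")
        if user.uid in seen:
            problems.append(
                f"{user.username}: uid {user.uid} already used by {seen[user.uid]}")
        seen[user.uid] = user.username
    return problems
```

Because each site only ever allocates from its own range, two sites cannot hand out the same id independently; the import check is a second line of defence when records from other sites are merged locally. The same pattern would apply to gids.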

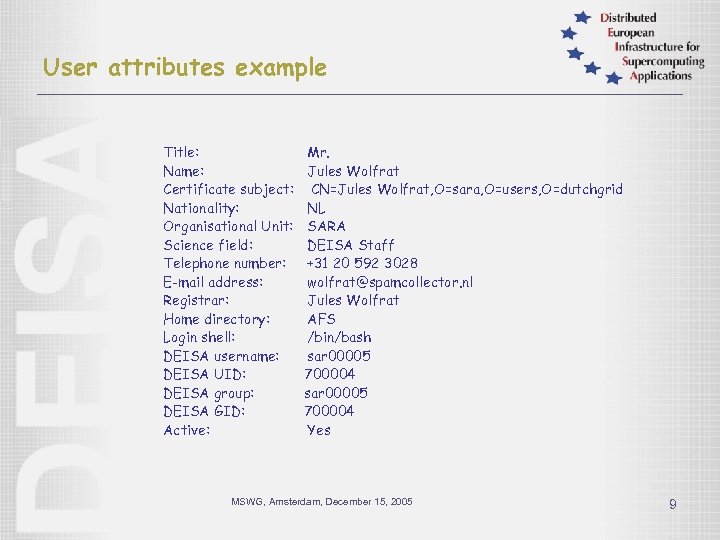

User attributes example

- Title: Mr.
- Name: Jules Wolfrat
- Certificate subject: CN=Jules Wolfrat, O=sara, O=users, O=dutchgrid
- Nationality: NL
- Organisational Unit: SARA
- Science field: DEISA Staff
- Telephone number: +31 20 592 3028
- E-mail address: wolfrat@spamcollector.nl
- Registrar: Jules Wolfrat
- Home directory: AFS
- Login shell: /bin/bash
- DEISA username, DEISA UID, DEISA group, DEISA GID: sar 00005 700004
- Active: Yes

DEISA AA (2)

- For UNICORE, authentication (AuthX) and authorization (AuthZ) are based on X.509 certificates.
- Certificates are accepted from EUGridPMA CAs.
  - Exception: ECMWF, which considers the certificate lifetime too long. ECMWF provides smartcards to users who need access; these users can then request certificates with a lifetime on the order of 2 weeks from the ECMWF CA.
- The LDAP system is used to distribute certificates for addition to the UUDB for UNICORE AuthZ; a transition to DN-based AuthZ is in progress.
- More fine-grained AuthZ is under discussion; for now, access to a site is yes/no.
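DN-based authorization with a yes/no decision per site can be illustrated with a small lookup. This is a sketch only: it does not use the UNICORE UUDB interface, the table contents and the login name are hypothetical, and the DN parsing is simplified (it assumes no escaped commas inside attribute values).

```python
from typing import Optional


def normalize_dn(dn: str) -> tuple:
    """Split a comma-separated DN into (ATTRIBUTE, value) pairs.

    Attribute names are upper-cased and surrounding whitespace is dropped,
    so "cn=A,O=b" and "CN=A, O=b" compare equal. Simplification: commas
    escaped inside values are not handled.
    """
    pairs = []
    for rdn in dn.split(","):
        attribute, _, value = rdn.strip().partition("=")
        pairs.append((attribute.strip().upper(), value.strip()))
    return tuple(pairs)


# Hypothetical DN-to-login table standing in for a site's user database.
DN_TO_LOGIN = {
    normalize_dn("CN=Example User, O=example-site, O=users, O=example-grid"): "exa00001",
}


def authorize(dn: str) -> Optional[str]:
    """Return the local login for a certificate subject, or None.

    None means access to the site is refused: the decision is yes/no only.
    """
    return DN_TO_LOGIN.get(normalize_dn(dn))
```

Keying the table on the subject DN rather than on the full certificate means a renewed certificate with the same subject keeps working without redistributing the certificate itself.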
