0a61521be563cf81bee4c4ca655d749f.ppt

- Number of slides: 25

Deep Web Mining and Learning for Advanced Local Search

CS 8803

Advisor: Prof. Liu

Yu Liu, Dan Hou, Zhigang Hua, Xin Sun, Yanbing Yu

Competitors

- Yahoo! Local
- Yelp
- CitySearch
- Google Local
- Yellow Page

How to beat them?

Research Background

- Deep Web Crawling
- Sentimental Learning
- Sentimental Ranking Model
- Geo-credit Ranking Model
- Social Network for Businesses

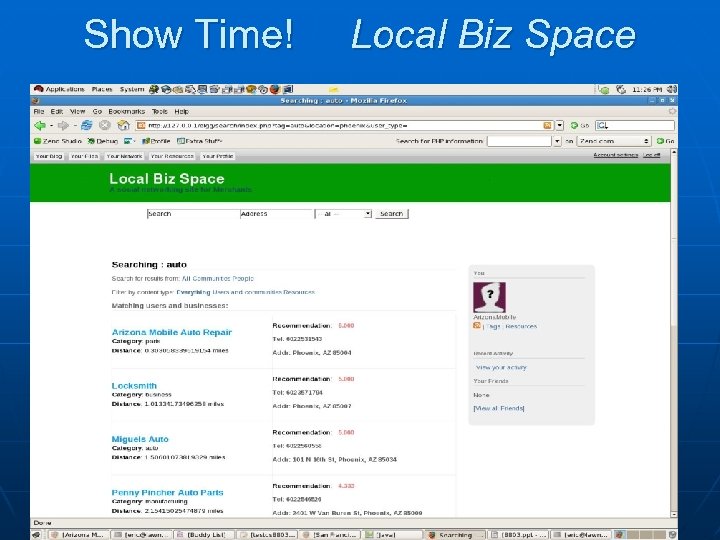

Show Time! Local Biz Space

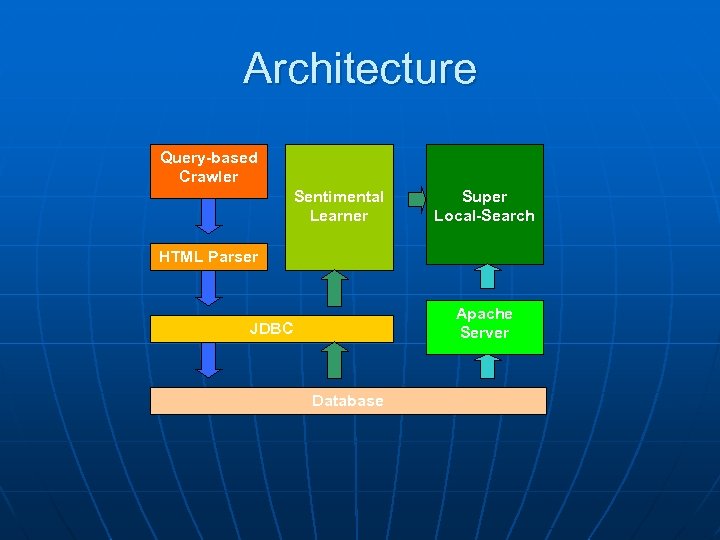

Architecture

- Query-based Crawler
- Sentimental Learner
- Super Local-Search
- HTML Parser
- Apache Server
- JDBC Database

Tools

- Open-source social network platforms: Elgg, OpenSocial
- LAMP server: Linux + Apache + MySQL + PHP
- Google Map API, e.g. Geocode

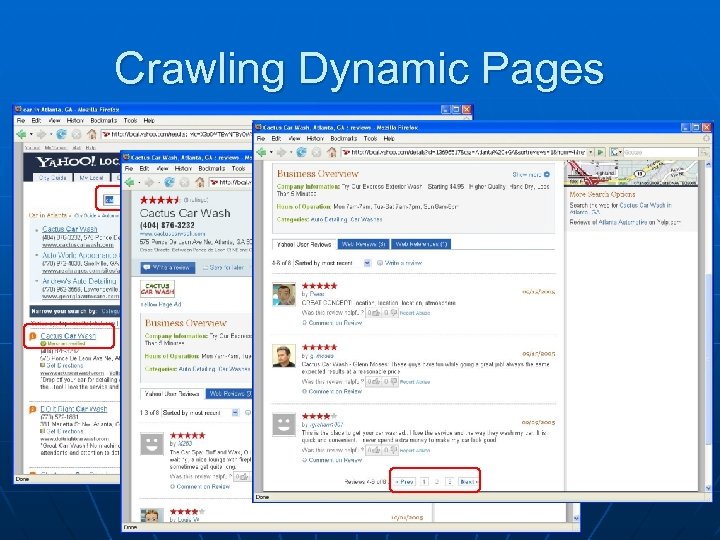

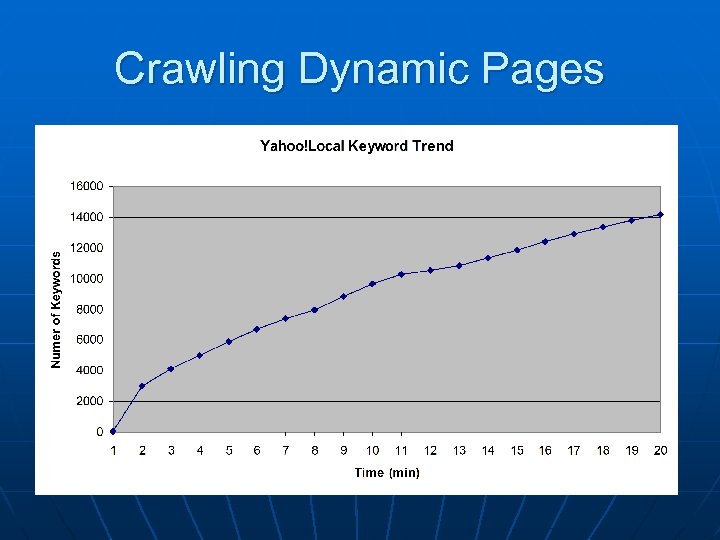

Crawling Dynamic Pages

Crawling Dynamic Pages

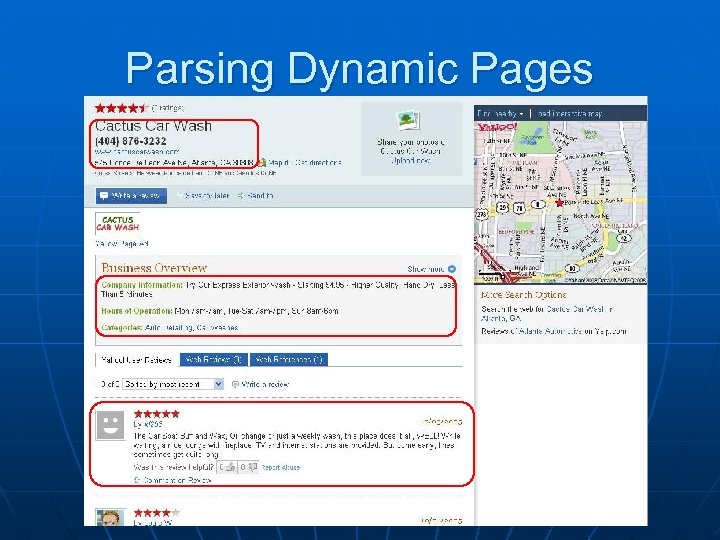

Parsing Dynamic Pages

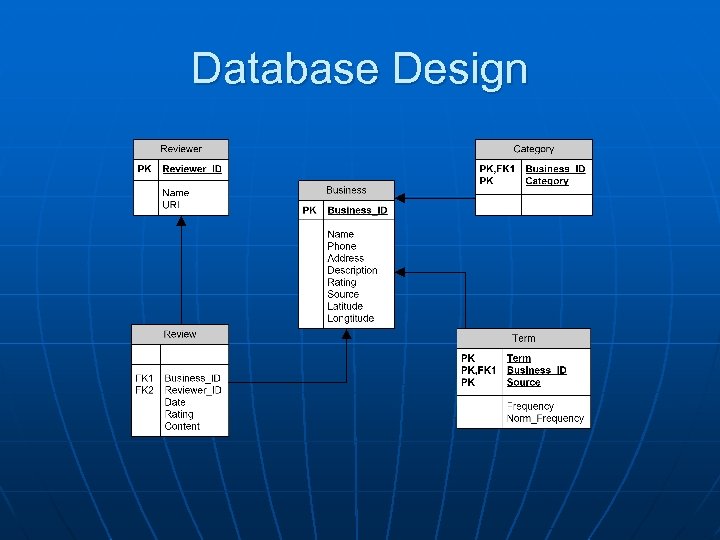

Database Design

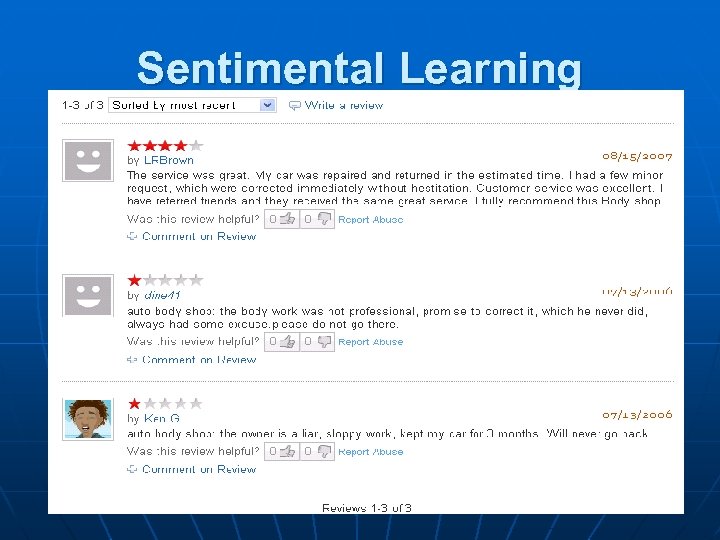

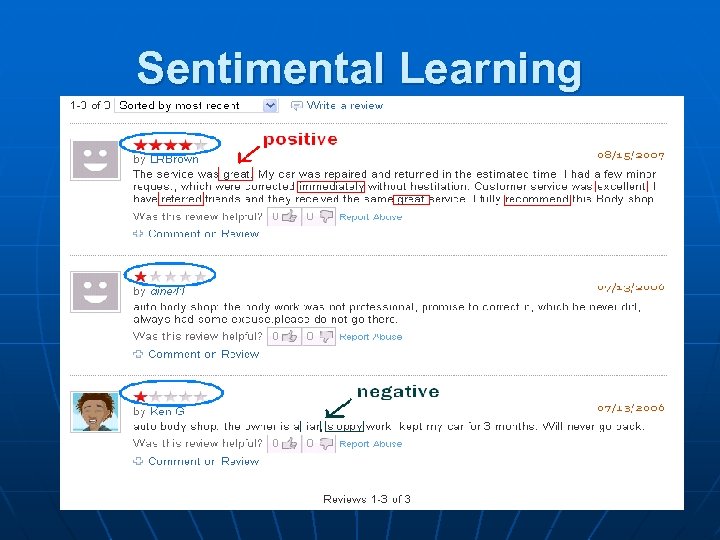

Sentimental Learning

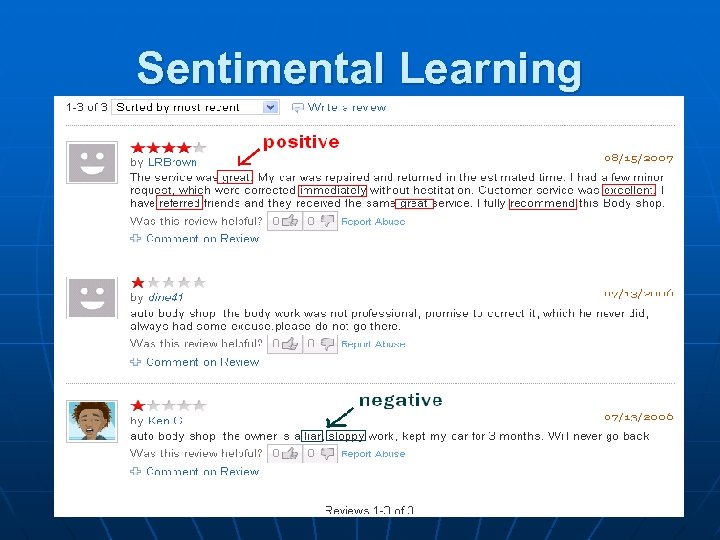

Sentimental Learning

Sentimental Learning

Can we use ONE score to show how good or bad a store is?

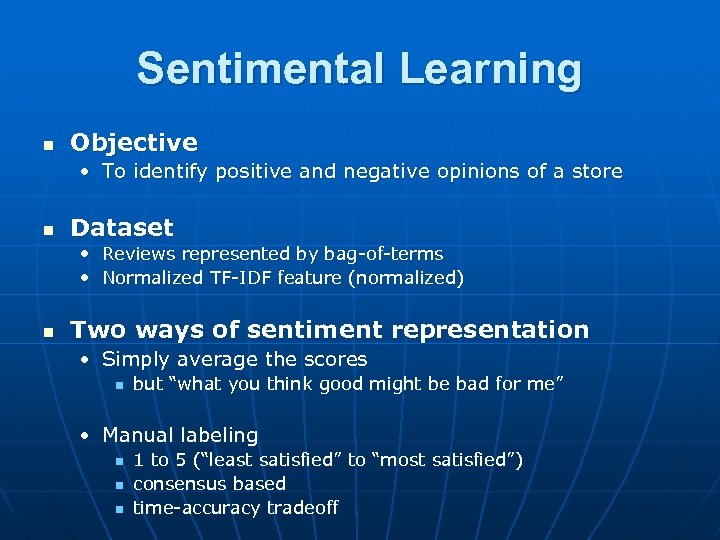

Sentimental Learning

- Objective
  - To identify positive and negative opinions of a store
- Dataset
  - Reviews represented by bag-of-terms
  - Normalized TF-IDF features
- Two ways of sentiment representation
  - Simply average the scores
    - but "what you think good might be bad for me"
  - Manual labeling
    - 1 to 5 ("least satisfied" to "most satisfied")
    - consensus based
    - time-accuracy tradeoff
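The bag-of-terms and normalized TF-IDF representation named above can be sketched as follows. This is a minimal illustration, not the project's code: the whitespace tokenizer, the `tf * log(N / df)` weighting, and the function name `tfidf_vectors` are all assumptions, since the slides do not say which TF-IDF variant or normalization was used.

```python
import math
from collections import Counter


def tfidf_vectors(reviews):
    """Return one L2-normalized TF-IDF dict per review (bag-of-terms).

    Assumed weighting: (term count / review length) * log(N / document frequency).
    """
    # Bag-of-terms: term counts per review, using a naive whitespace tokenizer.
    docs = [Counter(text.lower().split()) for text in reviews]
    n_docs = len(docs)
    # Document frequency: number of reviews that contain each term.
    df = Counter(term for doc in docs for term in doc)

    vectors = []
    for doc in docs:
        total = sum(doc.values())
        weights = {
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in doc.items()
        }
        norm = math.sqrt(sum(w * w for w in weights.values()))
        # An all-zero vector is left as zeros instead of dividing by zero.
        vectors.append({t: (w / norm if norm else 0.0) for t, w in weights.items()})
    return vectors
```

A term that occurs in every review (such as "food" in a set of restaurant reviews) gets weight 0 under this weighting, which is one way the representation discounts words that carry no distinguishing signal.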

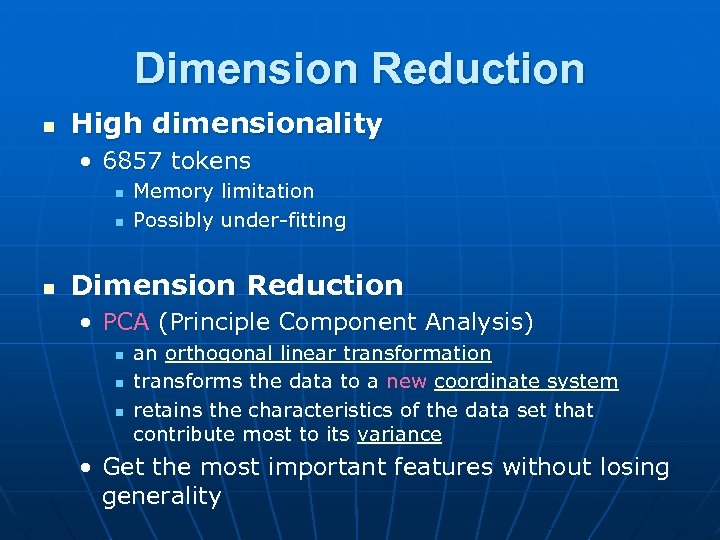

Dimension Reduction

- High dimensionality
  - 6857 tokens
    - Memory limitation
    - Possibly under-fitting
- Dimension reduction
  - PCA (Principal Component Analysis)
    - an orthogonal linear transformation
    - transforms the data to a new coordinate system
    - retains the characteristics of the data set that contribute most to its variance
  - Get the most important features without losing generality

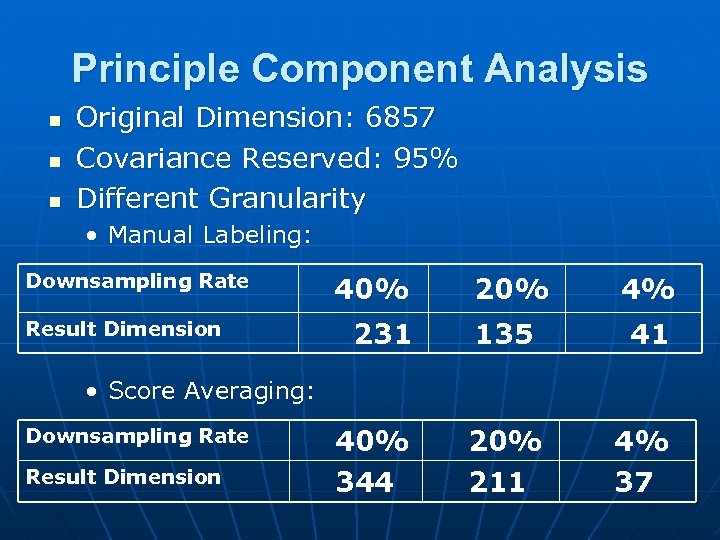

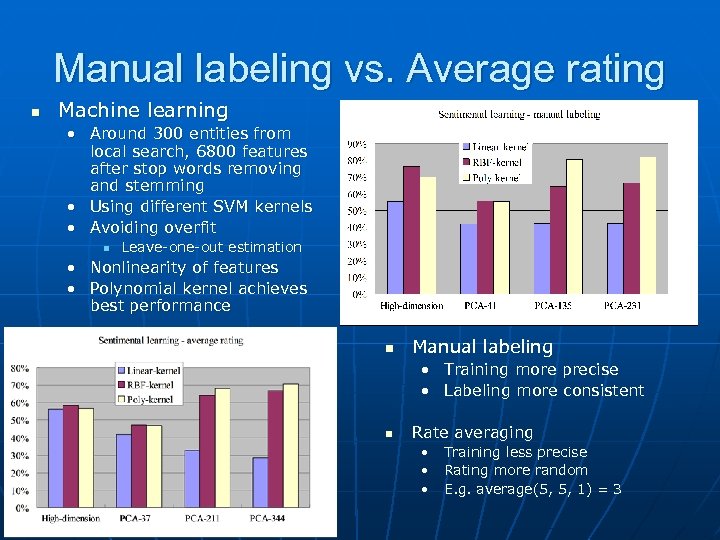

Principal Component Analysis

- Original dimension: 6857
- Variance retained: 95%
- Different granularity

Manual labeling:

| Downsampling rate | Result dimension |
| --- | --- |
| 40% | 231 |
| 20% | 135 |
| 4% | 41 |

Score averaging:

| Downsampling rate | Result dimension |
| --- | --- |
| 40% | 344 |
| 20% | 211 |
| 4% | 37 |
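The 95% criterion determines how many principal components are kept: take the eigenvalues of the covariance matrix in descending order and stop once their cumulative share of the total reaches the threshold. The sketch below shows only that selection step. The function name `components_for_variance` and the eigenvalues in the usage note are hypothetical, not values from the project.

```python
def components_for_variance(eigenvalues, threshold=0.95):
    """Smallest number of leading components whose variance share >= threshold."""
    ordered = sorted(eigenvalues, reverse=True)
    total = sum(ordered)
    if total <= 0:
        raise ValueError("eigenvalues must have a positive sum")
    cumulative = 0.0
    for k, value in enumerate(ordered, start=1):
        cumulative += value
        if cumulative / total >= threshold:
            return k
    # Reached only if rounding keeps the ratio just under the threshold.
    return len(ordered)
```

For the made-up spectrum `[6.0, 3.0, 0.9, 0.1]`, the first two components explain 90% of the variance and the first three explain 99%, so `components_for_variance([6.0, 3.0, 0.9, 0.1])` keeps three.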

Sentimental learning

- Features used for sentimental learning:
  - Vector Space Model (reviews/comments)
- Some keywords related to sentiments:
  - Positive: good, happy, wonderful, excellent, awesome, great, ok, nice, etc.
  - Negative: bad, sad, ugly, outdated, shabby, stupid, wrong, awful, etc.
- Most words unrelated to sentiments:
  - e.g. buy, take, go, iPod, apple, comment, etc.
  - Causing noise for sentimental learning!

What do we do?

- How to learn sentiments from a large set of features with lots of noise?
  - Vector Space Model: M × N (entity-term, e.g. 6,000 × 20,000)
  - Dimensionality reduction (PCA)
  - Using supervised learning for sentimental learning
- Human labeling vs. average rating
  - An online entity always includes many reviews, with each review containing a rating
    - Average rating is an alternative labeling for the entity
  - Manual labeling:
    - 1 (least satisfactory) to 5 (most satisfactory)
    - Three people label each entity; the majority vote is adopted
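The three-person, most-votes labeling rule can be sketched as below. The function name `majority_label` is illustrative, and returning `None` on a tie is an assumption: the slides do not say how a three-way disagreement was resolved.

```python
from collections import Counter


def majority_label(votes):
    """Return the label chosen by most annotators, or None when there is a tie."""
    if not votes:
        raise ValueError("at least one vote is required")
    ranked = Counter(votes).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        # No single winner; tie handling is not specified in the slides.
        return None
    return ranked[0][0]
```

With three annotators, `majority_label([4, 4, 5])` gives 4, while `majority_label([1, 3, 5])` gives `None` and would need a re-label or a discussion.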

Manual labeling vs. Average rating

- Machine learning
  - Around 300 entities from local search; 6800 features after stop-word removal and stemming
  - Using different SVM kernels
  - Avoiding overfitting
    - Leave-one-out estimation
  - Nonlinearity of features
  - Polynomial kernel achieves best performance
- Manual labeling
  - Training more precise
  - Labeling more consistent
- Rate averaging
  - Training less precise
  - Rating more random
  - E.g. average(5, 5, 1) ≈ 3.7, which hides the disagreement between reviewers
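Leave-one-out estimation trains on all entities but one, tests on the held-out entity, and repeats for every entity. The sketch below shows the loop itself. To stay dependency-free it plugs in a 1-nearest-neighbor classifier as a stand-in, which is not the SVM used in the project; all function names here are illustrative.

```python
def leave_one_out_accuracy(samples, labels, fit, predict):
    """Fraction of samples predicted correctly when each is held out in turn."""
    correct = 0
    for i in range(len(samples)):
        # Train on everything except sample i.
        train_x = samples[:i] + samples[i + 1:]
        train_y = labels[:i] + labels[i + 1:]
        model = fit(train_x, train_y)
        if predict(model, samples[i]) == labels[i]:
            correct += 1
    return correct / len(samples)


def fit_nearest_neighbor(xs, ys):
    """Stand-in learner: simply memorize the training pairs."""
    return list(zip(xs, ys))


def predict_nearest_neighbor(model, x):
    """Return the label of the closest memorized sample (squared Euclidean distance)."""
    def sq_dist(a, b):
        return sum((p - q) ** 2 for p, q in zip(a, b))
    return min(model, key=lambda pair: sq_dist(pair[0], x))[1]
```

Because `fit` and `predict` are passed in, the same loop could wrap an SVM with any kernel. With about 300 entities this means about 300 training runs per kernel, which is affordable at this scale.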

What did we learn?

- Dimensionality reduction is necessary
  - The term Vector Space Model (VSM) is huge by nature
- Human labeling is necessary
  - Sentimental learning involves subjective rather than objective judgment
  - Human rating is very random because it is not consistent across different people
  - More labeled data is needed
- Other methods to be used:
  - Unsupervised learning (clustering)
    - Gaussian Mixture Model (an alternative way to learn sentiments, although it is difficult to know the number of hidden sentiments)

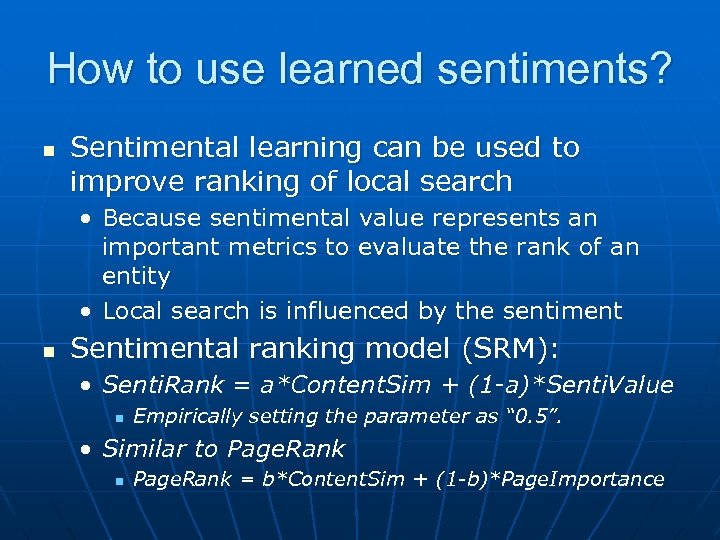

How to use learned sentiments?

- Sentimental learning can be used to improve the ranking of local search
  - Because the sentimental value is an important metric for evaluating the rank of an entity
  - Local search is influenced by the sentiment
- Sentimental ranking model (SRM):
  - SentiRank = a*ContentSim + (1-a)*SentiValue
    - Empirically setting the parameter a to 0.5
  - Similar to PageRank
    - PageRank = b*ContentSim + (1-b)*PageImportance
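The SentiRank combination is a plain weighted sum, so it can be sketched in a few lines. The sketch assumes both inputs are already scaled to the same range (for example 0 to 1), which the slides do not state; the function names and the example entities are hypothetical.

```python
def senti_rank(content_sim, senti_value, a=0.5):
    """SentiRank = a * ContentSim + (1 - a) * SentiValue, with a in [0, 1]."""
    if not 0.0 <= a <= 1.0:
        raise ValueError("a must lie in [0, 1]")
    return a * content_sim + (1.0 - a) * senti_value


def rank_entities(entities, a=0.5):
    """Sort (name, content_sim, senti_value) tuples by SentiRank, best first."""
    ordered = sorted(entities, key=lambda e: senti_rank(e[1], e[2], a), reverse=True)
    return [name for name, _, _ in ordered]
```

With a = 0.5, a store that matches the query well but has poor sentiment, `("A", 0.9, 0.2)`, scores 0.55 and ranks below a weaker match with good sentiment, `("B", 0.6, 0.8)`, which scores 0.7.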

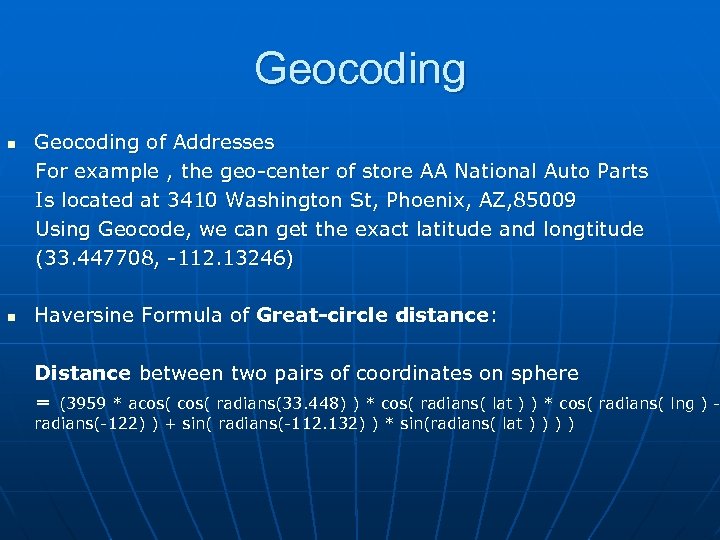

Geocoding

- Geocoding of addresses
- For example, the geo-center of the store AA National Auto Parts is located at 3410 Washington St, Phoenix, AZ, 85009
- Using Geocode, we can get the exact latitude and longitude (33.447708, -112.13246)
- Great-circle distance between two pairs of coordinates on a sphere (presented as the Haversine formula; the expression is its spherical law of cosines form, with 3959 the Earth's radius in miles):
  - Distance = 3959 * acos( cos( radians(33.448) ) * cos( radians(lat) ) * cos( radians(lng) - radians(-112.132) ) + sin( radians(33.448) ) * sin( radians(lat) ) )
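The great-circle distance on this slide can be sketched in Python using the spherical law of cosines and the 3959-mile Earth radius quoted above. The function and constant names are illustrative. The clamp before `acos` is an added safeguard against floating-point rounding and is not part of the slide's formula.

```python
import math

EARTH_RADIUS_MILES = 3959.0  # radius used on the slide


def great_circle_miles(lat1, lng1, lat2, lng2):
    """Great-circle distance in miles between two (latitude, longitude) pairs in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlng = math.radians(lng2 - lng1)
    cos_angle = (math.cos(phi1) * math.cos(phi2) * math.cos(dlng)
                 + math.sin(phi1) * math.sin(phi2))
    # Rounding can push the value slightly outside [-1, 1], where acos is undefined.
    cos_angle = max(-1.0, min(1.0, cos_angle))
    return EARTH_RADIUS_MILES * math.acos(cos_angle)
```

Calling `great_circle_miles(33.447708, -112.13246, lat, lng)` gives the distance from the example store to any other geocoded point, which is the quantity a distance-aware ranking needs.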

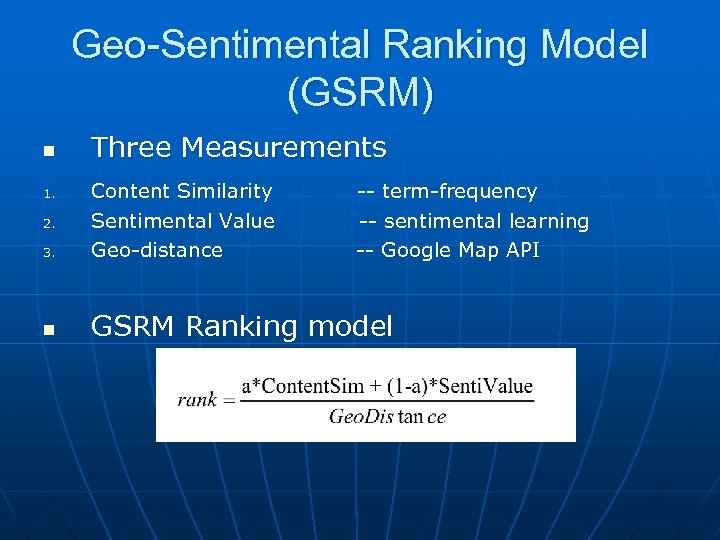

Geo-Sentimental Ranking Model (GSRM)

- Three measurements
  1. Content similarity (term frequency)
  2. Sentimental value (sentimental learning)
  3. Geo-distance (Google Map API)
- GSRM ranking model

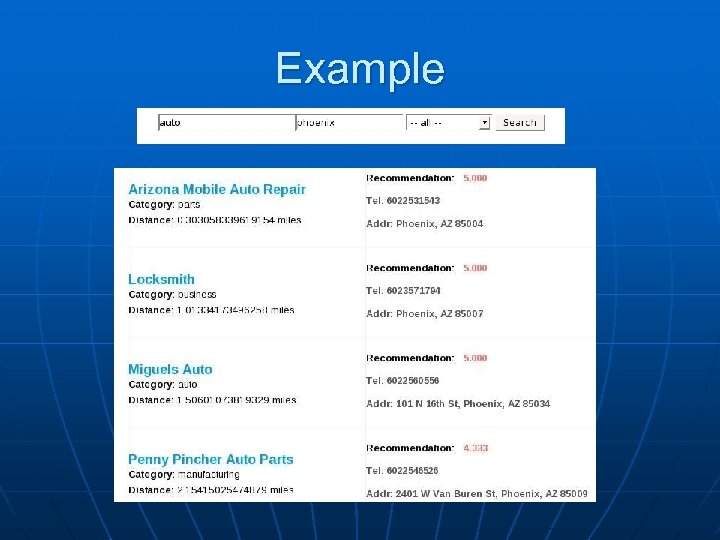

Example

Thank you! Q&A time
