5bca9eb94d272a282eeda52a2bbe13cc.ppt

- Number of slides: 71

Deep Text Understanding with WordNet
Christiane Fellbaum
Princeton University and Berlin-Brandenburg Academy of Sciences

WordNet
• What is WordNet and why is it interesting/useful?
• A bit of history
• WordNet for natural language processing/word sense disambiguation

What is WordNet?
• A large lexical database, or “electronic dictionary,” developed and maintained at Princeton University: http://wordnet.princeton.edu
• Includes most English nouns, verbs, adjectives, and adverbs
• Its electronic format makes it amenable to automatic manipulation
• Used in many Natural Language Processing applications (information retrieval, text mining, question answering, machine translation, AI/reasoning, ...)
• Wordnets are built for many languages (including Danish!)

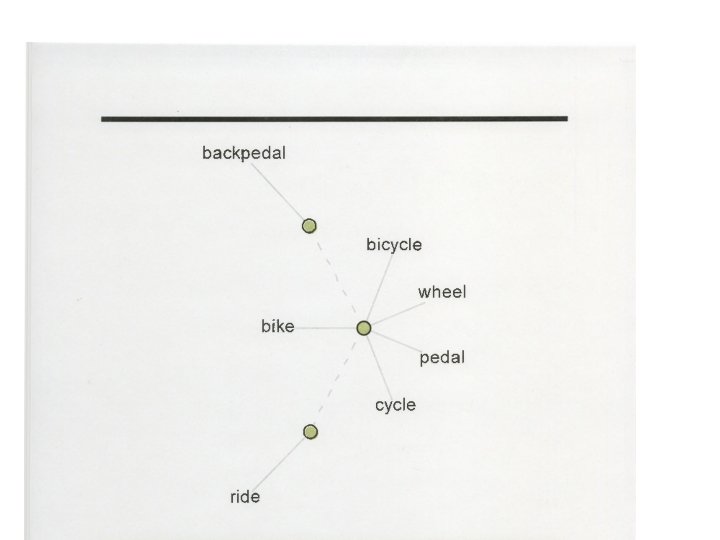

What’s special about WordNet?
• Traditional paper dictionaries are organized alphabetically: words that are found together (on the same page) are not related by meaning
• WordNet is organized by meaning: words in close proximity are semantically similar
• Human users and computers can browse WordNet and find words that are meaningfully related to their queries (somewhat like a hyperdimensional thesaurus)
• Meaning similarity can be measured and quantified to support Natural Language Understanding

A bit of history
Research in Artificial Intelligence (AI): how do humans store and access knowledge about concepts?
Hypothesis: concepts are interconnected via meaningful relations
Knowledge about concepts is huge, so it must be stored in an efficient and economical fashion

A bit of history
Knowledge about concepts is computed “on the fly” via access to general concepts
E.g., we know that “canaries fly” because “birds fly” and “canaries are a kind of bird”

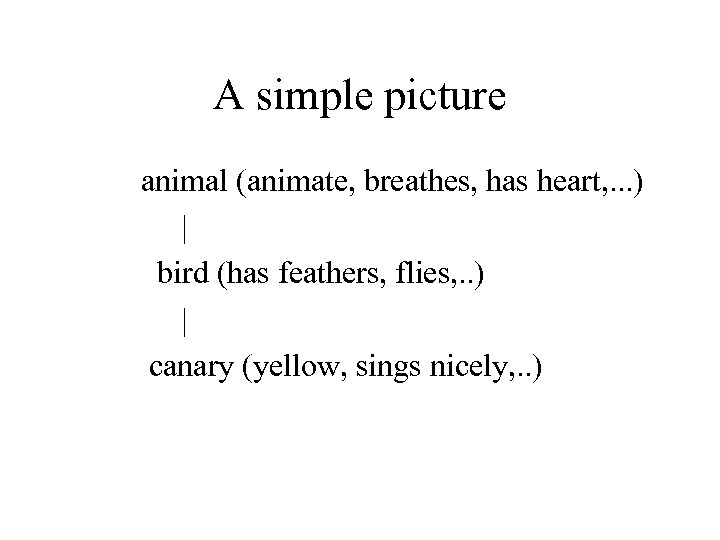

A simple picture
animal (animate, breathes, has heart, ...)
  |
bird (has feathers, flies, ...)
  |
canary (yellow, sings nicely, ...)

Knowledge is stored at the highest possible node and inherited by lower (more specific) concepts, rather than being stored multiple times.
Collins & Quillian (1969) measured reaction times (RT) to statements involving knowledge distributed across different “levels”:

Do birds fly? (short RT)
Do canaries fly? (longer RT)
Do canaries have a heart? (even longer RT)
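The inheritance idea above can be sketched as a lookup that climbs IS-A links until it finds the property, counting the links it crosses; on Collins and Quillian's account, reaction time should grow with that count. A minimal sketch: the three nodes and their properties come from the simple picture, and using the hop count as a stand-in for RT is our simplification.

```python
from __future__ import annotations

# Each concept stores only its own properties plus a link to its parent (IS-A).
NETWORK = {
    "animal": {"parent": None, "props": {"animate", "breathes", "has heart"}},
    "bird": {"parent": "animal", "props": {"has feathers", "flies"}},
    "canary": {"parent": "bird", "props": {"yellow", "sings nicely"}},
}


def lookup(concept: str, prop: str) -> int | None:
    """Number of IS-A links climbed before `prop` is found, or None if never found."""
    hops, node = 0, concept
    while node is not None:
        if prop in NETWORK[node]["props"]:
            return hops
        node = NETWORK[node]["parent"]
        hops += 1
    return None


for concept, prop in [("bird", "flies"), ("canary", "flies"), ("canary", "has heart")]:
    print(concept, prop, lookup(concept, prop))  # 0, 1, 2 links: short, longer, even longer RT
```

Storing "has heart" once at animal keeps the network small; the price is the extra climbing the RT experiment was designed to detect.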

Collins & Quillian’s results are open to criticism: reaction times to statements like “Do canaries move?” are influenced by prototypicality, word frequency, and uneven semantic distance across levels.
But other evidence from psychological experiments confirms that humans organize knowledge about words and concepts by means of meaningful relations.
Access to one concept activates related concepts in an outward-spreading (radial) fashion.

A bit of history
But the idea inspired WordNet (1986), which asked: can most or all of the lexicon be represented as a semantic network where words are interlinked by meaning?
If so, the lexicon itself would form a semantic network (a graph).

WordNet
If the (English) lexicon can be represented as a semantic network, which relations connect the nodes?

Whence the relations?
• Inspection of association norms (stimulus: hand; response: finger, arm)
• Classical ontology (Aristotle): IS-A (maple-tree), HAS-A (maple-leaves)
• Co-occurrence patterns in texts (meaningfully related words are used together)

Relations: Synonymy
One concept is expressed by several different word forms:
{beat, hit, strike}
{car, motorcar, automobile}
{big, large}
Synonymy = one:many mapping of meaning and form

Synonymy in WordNet
WordNet groups (roughly) synonymous, denotationally equivalent words into unordered sets of synonyms (“synsets”):
{hit, beat, strike}
{big, large}
{queue, line}
Each synset expresses a distinct meaning/concept

Polysemy
One word form expresses multiple meanings
Polysemy = one:many mapping of form and meaning
{table, tabular_array}
{table, piece_of_furniture}
{table, mesa}
{table, postpone}
Note: the most frequent word forms are the most polysemous!

Polysemy in WordNet
A word form that appears in n synsets is n-fold polysemous
{table, tabular_array}
{table, piece_of_furniture}
{table, mesa}
{table, postpone}
table is fourfold polysemous, i.e., it has four senses
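Because a synset is just an unordered set of word forms, both synonymy and polysemy can be read off an index from forms to synsets. A toy sketch using the synsets shown above; the in-memory list stands in for the WordNet database and is our simplification.

```python
from __future__ import annotations

from collections import defaultdict

# Each synset is an unordered set of word forms expressing one concept.
SYNSETS = [
    frozenset({"table", "tabular_array"}),
    frozenset({"table", "piece_of_furniture"}),
    frozenset({"table", "mesa"}),
    frozenset({"table", "postpone"}),
    frozenset({"hit", "beat", "strike"}),
    frozenset({"big", "large"}),
]

# Index: word form -> every synset (sense) that contains it.
senses = defaultdict(list)
for synset in SYNSETS:
    for form in synset:
        senses[form].append(synset)


def polysemy(form: str) -> int:
    """A form that appears in n synsets is n-fold polysemous."""
    return len(senses.get(form, []))


def synonyms(form: str) -> set[str]:
    """Every form sharing at least one synset with `form`, excluding `form` itself."""
    return {other for synset in senses.get(form, []) for other in synset} - {form}


print(polysemy("table"))           # 4
print(sorted(synonyms("strike")))  # ['beat', 'hit']
```

Pooling all of table's synsets mixes noun and verb senses ({table, postpone}); a real lookup would usually also filter by part of speech.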

Some WordNet stats

The “Net” part of WordNet
Synsets are the building blocks of the network
Synsets are interconnected via relations
Bi-directional arcs express semantic relations
Result: a large semantic network (graph)

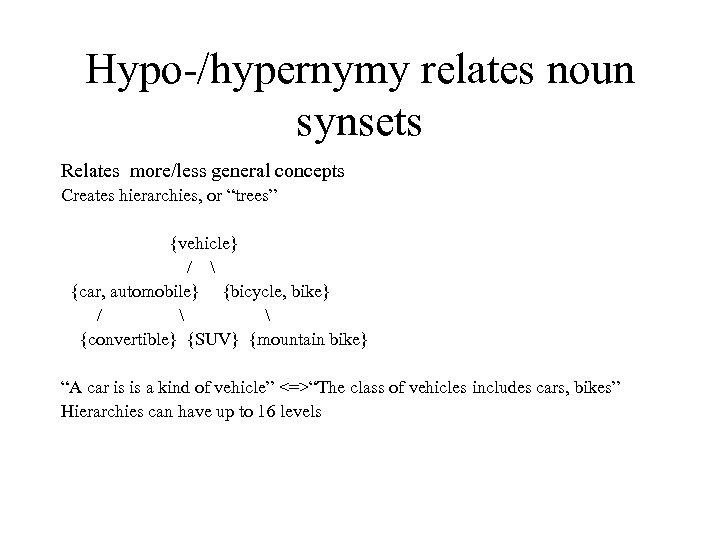

Hypo-/hypernymy relates noun synsets
Relates more/less general concepts
Creates hierarchies, or “trees”
{vehicle}
    {car, automobile}
        {convertible}
        {SUV}
    {bicycle, bike}
        {mountain bike}
“A car is a kind of vehicle” <=> “The class of vehicles includes cars, bikes”
Hierarchies can have up to 16 levels

Hyponymy
Transitivity:
A car is a kind of vehicle
An SUV is a kind of car
=> An SUV is a kind of vehicle
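Transitivity means a system never needs to store “An SUV is a kind of vehicle” explicitly; it can follow hypernym links upward. A minimal sketch over the vehicle hierarchy, naming each synset by one member and assuming a single hypernym per synset (WordNet nouns can have more than one).

```python
from __future__ import annotations

# Direct hypernym links (hyponym -> hypernym); each synset is named by one member.
HYPERNYM = {
    "car": "vehicle",
    "bicycle": "vehicle",
    "convertible": "car",
    "SUV": "car",
    "mountain bike": "bicycle",
}


def hypernym_chain(synset: str) -> list[str]:
    """All ancestors of `synset`, nearest first, following IS-A links transitively."""
    chain = []
    while synset in HYPERNYM:
        synset = HYPERNYM[synset]
        chain.append(synset)
    return chain


def is_a(x: str, y: str) -> bool:
    """True if x is a kind of y, directly or via intermediate levels."""
    return y in hypernym_chain(x)


print(hypernym_chain("SUV"))                           # ['car', 'vehicle']
print(is_a("SUV", "vehicle"), is_a("SUV", "bicycle"))  # True False
```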

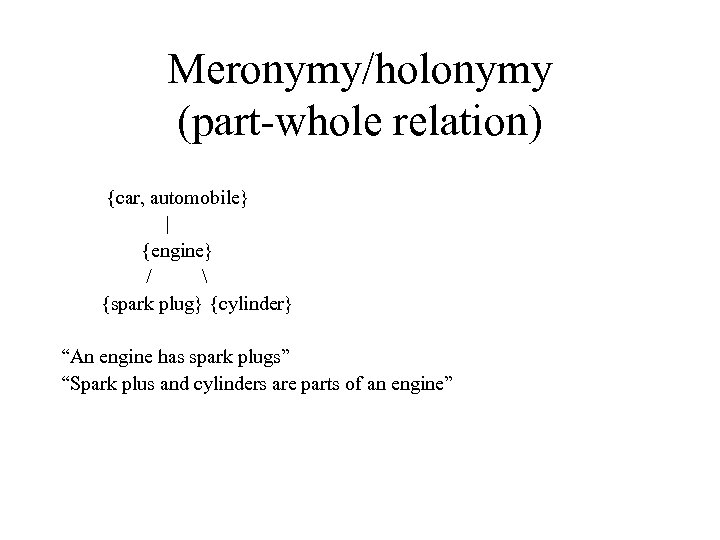

Meronymy/holonymy (part-whole relation)
{car, automobile}
    {engine}
        {spark plug}
        {cylinder}
“An engine has spark plugs”
“Spark plugs and cylinders are parts of an engine”

Meronymy/Holonymy
Inheritance:
A finger is part of a hand
A hand is part of an arm
An arm is part of a body
=> A finger is part of a body
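Part-whole inheritance can be followed the same way, and because arcs are bi-directional, the holonym (has-part) direction can be derived from the stored part-of links instead of being stored twice. A minimal sketch using the finger/hand/arm/body chain and the engine example; allowing only one whole per part is our simplification.

```python
from __future__ import annotations

# Direct part-of links (meronym -> holonym).
PART_OF = {
    "finger": "hand",
    "hand": "arm",
    "arm": "body",
    "spark plug": "engine",
    "cylinder": "engine",
    "engine": "car",
}

# Inverse arcs (holonym -> direct parts), derived from PART_OF rather than stored twice.
HAS_PARTS = {}
for part, whole in PART_OF.items():
    HAS_PARTS.setdefault(whole, set()).add(part)


def wholes(part: str) -> list[str]:
    """Every whole that `part` belongs to, following part-of transitively."""
    result = []
    while part in PART_OF:
        part = PART_OF[part]
        result.append(part)
    return result


def all_parts(whole: str) -> set[str]:
    """Every part of `whole` at any depth, following has-part transitively."""
    found = set()
    stack = [whole]
    while stack:
        for part in HAS_PARTS.get(stack.pop(), ()):
            if part not in found:
                found.add(part)
                stack.append(part)
    return found


print(wholes("finger"))  # ['hand', 'arm', 'body']
```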

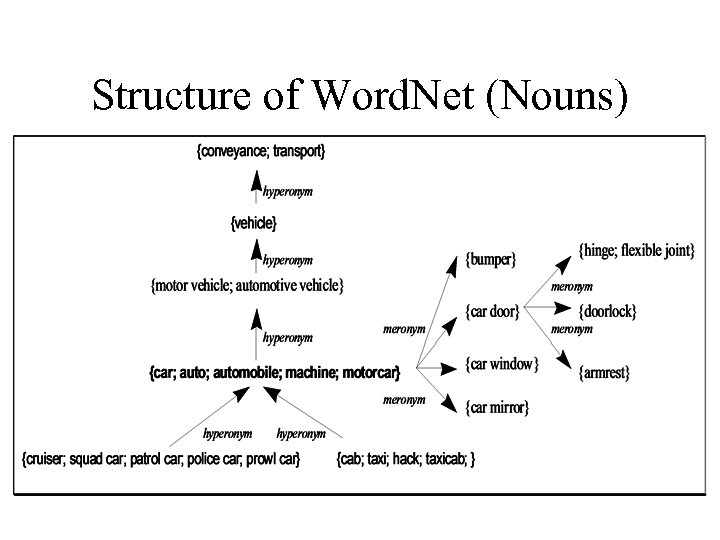

Structure of WordNet (Nouns)

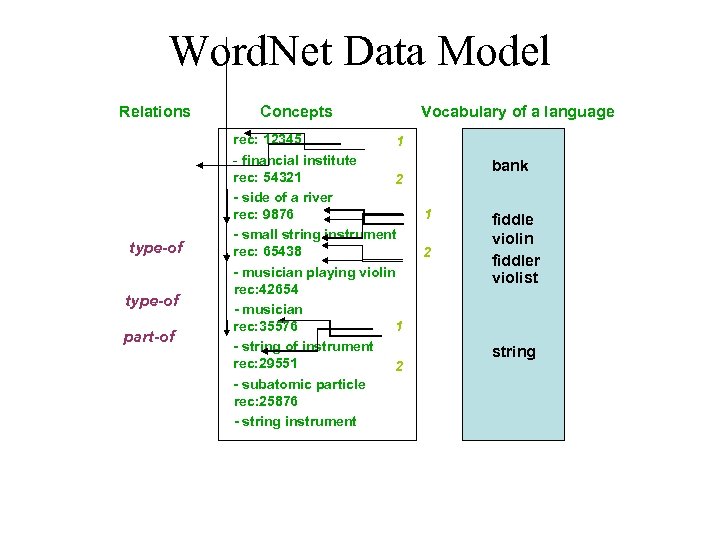

WordNet Data Model (figure)
Vocabulary of a language: bank, fiddle, violin, fiddler, violist, string
Concepts:
rec: 12345 (bank, sense 1): financial institute
rec: 54321 (bank, sense 2): side of a river
rec: 9876: small string instrument
rec: 65438: musician playing violin
rec: 42654: musician
rec: 35576 (string, sense 1): string of instrument
rec: 29551 (string, sense 2): subatomic particle
rec: 25876: string instrument
Relations: type-of, part-of
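The data model in the figure can be sketched as three tables: word forms, concept records, and typed relations between records. The record numbers and glosses come from the figure, as do the two senses each of bank and string; the remaining word-to-record links and the particular type-of and part-of links are assumptions made for illustration (violist is left out because its link is not recoverable from the figure).

```python
from __future__ import annotations

# Concept records from the figure: record number -> gloss.
CONCEPTS = {
    12345: "financial institute",
    54321: "side of a river",
    9876: "small string instrument",
    65438: "musician playing violin",
    42654: "musician",
    35576: "string of instrument",
    29551: "subatomic particle",
    25876: "string instrument",
}

# Vocabulary: word form -> concept records it can express (a many-to-many mapping).
# bank and string follow the figure's sense numbers; the other links are assumed.
LEXICON = {
    "bank": [12345, 54321],
    "string": [35576, 29551],
    "fiddle": [9876],
    "violin": [9876],
    "fiddler": [65438],
}

# Typed relations between concept records (assumed for illustration).
RELATIONS = [
    (9876, "type-of", 25876),   # a violin is a type of string instrument
    (65438, "type-of", 42654),  # a violin player is a type of musician
    (35576, "part-of", 25876),  # an instrument string is part of a string instrument
]


def glosses(word: str) -> list[str]:
    """All meanings of a word form: one entry per sense."""
    return [CONCEPTS[rec] for rec in LEXICON.get(word, [])]


def words_for(rec: int) -> list[str]:
    """Inverse lookup: the word forms expressing one concept, i.e. its synset."""
    return sorted(word for word, recs in LEXICON.items() if rec in recs)


def related(rec: int, relation: str) -> list[str]:
    """Glosses of the records reachable from `rec` by one `relation` arc."""
    return [CONCEPTS[dst] for src, rel, dst in RELATIONS if src == rec and rel == relation]


print(glosses("bank"))  # ['financial institute', 'side of a river']
print(words_for(9876))  # ['fiddle', 'violin']
```

Relations hold between concept records, not word forms, which is why fiddle and violin share every relation without it being stored twice.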

WordNet for Natural Language Processing
Challenge: get a computer to “understand” language
• Information retrieval
• Text mining
• Document sorting
• Machine translation

Natural Language Processing
• Stemming and parsing currently reach >90% accuracy
• Word sense discrimination (lexical disambiguation) is still a major hurdle for successful NLP
• Which sense is intended by the writer (relative to a dictionary)?
• Best systems: ~60% precision, ~60% recall (but human inter-annotator agreement isn’t perfect, either!)
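Precision and recall measure different things when a system may decline to tag a word: precision counts correct answers among the words the system tagged, recall counts them among all words. A minimal sketch; the sense labels and the abstention convention (None) are hypothetical.

```python
from __future__ import annotations


def precision_recall(gold: list[str], predicted: list[str | None]) -> tuple[float, float]:
    """Precision = correct / attempted; recall = correct / all gold tokens.

    predicted[i] is None when the system abstains on token i.
    """
    attempted = sum(p is not None for p in predicted)
    correct = sum(p is not None and p == g for g, p in zip(gold, predicted))
    precision = correct / attempted if attempted else 0.0
    recall = correct / len(gold) if gold else 0.0
    return precision, recall


# Hypothetical sense labels for five tokens; the system abstains on the fourth.
gold = ["table.furniture", "bank.river", "strike.hit", "big.large", "table.postpone"]
pred = ["table.furniture", "bank.finance", "strike.hit", None, "table.postpone"]
print(precision_recall(gold, pred))  # (0.75, 0.6)
```

When a system tags every token, attempted equals the total and precision equals recall, which fits the near-identical ~60% figures above.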

Understanding text beyond the word level (joint work with Peter Clark and Jerry Hobbs)

Knowledge in text
Human language users routinely derive knowledge from text that is NOT expressed on the surface
Perhaps more knowledge is unexpressed than overtly expressed
Graesser (1981) estimates the ratio of explicit to implicit information at 1:8

An example
Text: A soldier was killed in a gun battle
Inferences:
Soldiers were fighting one another
The soldiers had guns with live ammunition
Multiple shots were fired
One soldier shot another soldier
The shot soldier died as a result of the injuries caused by the shot
The time interval between the fatal shot and the death was short

Humans use world knowledge to supplement word knowledge
(How) can such knowledge be encoded and harnessed by automatic systems?
Previous attempts (e.g., Cyc’s microtheories) have had too few theories and uneven coverage of world knowledge

Recognizing Textual Entailment (RTE)
Task: evaluate the truth of a hypothesis H given a text T
(T) A soldier was killed in a gun battle
(H) A soldier died
The answer may be yes/no/probably/...

RTE
Many automatic systems attempt RTE via lexical and syntactic matching algorithms (“Do the same words occur in T and H?” “Do T and H have the same subject/object?”)
This is not “deep” language understanding

Our RTE test suite
250 Text-Hypothesis pairs
For 50% of them, H is entailed by T
For the remaining 50%, H is not (necessarily) entailed
Focus on semantic interpretation

RTE test suite
The core of the T statements came from newspaper texts
H statements were hand-coded
Focus on general world knowledge

RTE test suite
Manually analyzed the pairs
Distinguished and classified 19 types of knowledge among the T-H pairs (with some partial overlap)

Examples: types of knowledge (in increasing order of difficulty)
Lexical: relations among irregular forms of a single lemma; Named Entities vs. proper nouns
Lexical-semantic (paradigmatic): synonyms, hypernyms, meronyms, antonyms, metonymy, derivations
Syntagmatic: selectional preferences, telic roles
Propositional: cause-effect, preconditions
World knowledge/core theories (e.g., ambush entails concealment)

Overall approach (bag of tricks)
• Initial text interpretation with language processing tools (Peter Clark et al.)
• Compute subsumption among text fragments
• WordNet augmentations

Text interpretation
First step: parsing (assigning a structure to a sentence or phrase)
SAPIR parser (Harrison & Maxwell 1986)
SAPIR also produces a Logical Form (LF)

LFs
LF structures are trees generated by rules parallel to the grammar rules
They contain logic elements
Nouns, verbs, adjectives, and prepositions are represented as variables
LFs are parsed and have part-of-speech tags
LFs generate ground logical assertions

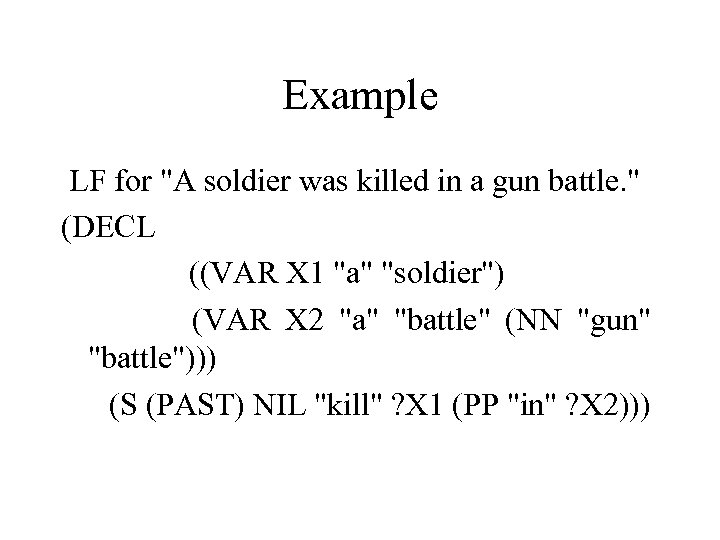

Example LF for "A soldier was killed in a gun battle."
(DECL ((VAR X1 "a" "soldier") (VAR X2 "a" "battle" (NN "gun" "battle")))
      (S (PAST) NIL "kill" ?X1 (PP "in" ?X2)))

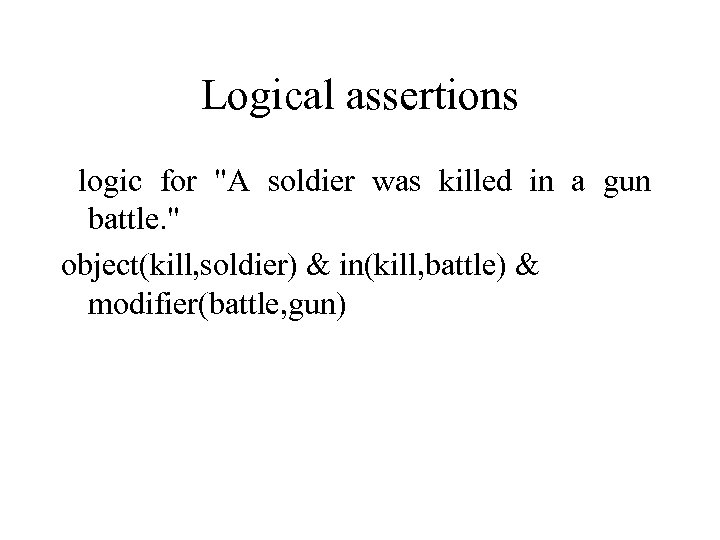

Logical assertions for "A soldier was killed in a gun battle."
object(kill, soldier) & in(kill, battle) & modifier(battle, gun)
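The step from the example LF to its ground assertions can be sketched for this one sentence: each VAR binds a variable to its head noun, an NN compound becomes a modifier assertion, the NIL subject slot of the agentless passive produces no subject assertion, and each PP becomes an assertion named after its preposition. This is a toy reconstruction covering only the shapes in this example, not SAPIR's actual rules; the nested-tuple encoding of the LF and the reading of NIL as the subject slot are our assumptions.

```python
# The example LF as nested tuples; strings starting with "?" refer to a VAR.
LF = ("DECL",
      (("VAR", "X1", "a", "soldier"),
       ("VAR", "X2", "a", "battle", ("NN", "gun", "battle"))),
      ("S", ("PAST",), None, "kill", "?X1", ("PP", "in", "?X2")))


def assertions(lf):
    """Turn this toy LF into (predicate, arg1, arg2) ground assertions."""
    _, variables, (_, _tense, subj, verb, obj, *pps) = lf
    heads = {}
    facts = []
    for var in variables:
        name, head = var[1], var[3]
        heads[name] = head
        for mod in var[4:]:
            if mod[0] == "NN":  # noun-noun compound: ("NN", modifier, head)
                facts.append(("modifier", head, mod[1]))

    def resolve(ref):
        return heads[ref.lstrip("?")]

    if subj is not None:  # NIL subject (agentless passive): no subject assertion
        facts.append(("subject", verb, resolve(subj)))
    if obj is not None:
        facts.append(("object", verb, resolve(obj)))
    for pp in pps:  # a PP becomes an assertion named after its preposition
        facts.append((pp[1], verb, resolve(pp[2])))
    return facts


print(" & ".join(f"{p}({a}, {b})" for p, a, b in assertions(LF)))
```

The assertions come out in a different order than on the slide, but the conjunction is the same.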

Result: T, H in Logical Form

Matching sentences/fragments with subsumption
A basic reasoning operation: “A person loves a person” subsumes “A man loves a woman”
A set S1 of clauses subsumes another set S2 of clauses if each clause in S1 subsumes some member of S2
Similarly, a clause subsumes another clause if the arguments of the first subsume or match the arguments of the second
Argument (word) subsumption as in WordNet (X is a Y)
Matching = synonyms
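The subsumption test can be sketched directly from the definition: a clause set S1 subsumes S2 if every clause in S1 subsumes some clause in S2, and a clause subsumes another if each argument is identical to, synonymous with, or a hypernym of the corresponding argument. A minimal sketch; the tiny IS-A and synonym tables are illustrative assumptions, and requiring identical predicates ignores the extra syntactic matching rules listed next.

```python
from __future__ import annotations

# Illustrative WordNet-style knowledge (assumed; a real system would query WordNet).
HYPERNYM = {"man": "person", "woman": "person", "person": "organism"}
SYNONYMS = [{"car", "automobile"}, {"person", "individual"}]


def word_subsumes(general: str, specific: str) -> bool:
    """True if the words match (equal or synonymous) or general is an IS-A ancestor."""
    if general == specific or any({general, specific} <= s for s in SYNONYMS):
        return True
    node = specific
    while node in HYPERNYM:
        node = HYPERNYM[node]
        if node == general:
            return True
    return False


def clause_subsumes(c1: tuple[str, ...], c2: tuple[str, ...]) -> bool:
    """Same predicate and arity, and each argument of c1 subsumes or matches c2's."""
    return (c1[0] == c2[0] and len(c1) == len(c2)
            and all(word_subsumes(a, b) for a, b in zip(c1[1:], c2[1:])))


def set_subsumes(s1: set, s2: set) -> bool:
    """S1 subsumes S2 if each clause in S1 subsumes some clause in S2."""
    return all(any(clause_subsumes(c1, c2) for c2 in s2) for c1 in s1)


general = {("subject", "love", "person"), ("object", "love", "person")}
specific = {("subject", "love", "man"), ("object", "love", "woman")}
print(set_subsumes(general, specific))  # True
print(set_subsumes(specific, general))  # False
```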

Syntactic matching of predicates
• both are the same
• one is the predicate “of” and the other a modifier (my friend’s car, the car of my friend)
• the predicates “subject” and “by” match (passives)

Lexical (word) matching
Words related by derivational morphology (destroy, destruction) are considered matches in conjunction with syntactic matches

Recognize as equivalent:
the bomb destroyed the shrine = the destruction of the shrine by the bomb
(but not: the destruction of the bomb by the shrine)
a person attacks with a bomb = there is a bomb attack by a person
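Derivational and syntactic matching have to work together here: mapping destruction to destroy is not enough by itself, since the prepositions must also be mapped to roles, and that role mapping is what keeps the swapped phrase from matching. A minimal sketch over clause triples in the style of the logical assertions; the one-entry derivation table is hand-written (in practice such pairs could come from WordNet's derivationally related forms), and the of-to-object, by-to-subject rule is a simplification that ignores, e.g., the possessive “of” in “the car of my friend”.

```python
# Derivationally related forms (nominalization -> verb); hand-written for illustration.
DERIVATION = {"destruction": "destroy"}

# With a nominalized event, "of" marks the object and "by" the subject (agent).
ROLE_FOR_PREP = {"of": "object", "by": "subject"}


def normalize(clauses):
    """Rewrite nominal clauses into verbal ones so the two phrasings can be compared."""
    out = set()
    for pred, head, arg in clauses:
        if head in DERIVATION:
            pred = ROLE_FOR_PREP.get(pred, pred)
            head = DERIVATION[head]
        out.add((pred, head, arg))
    return out


verbal = {("subject", "destroy", "bomb"), ("object", "destroy", "shrine")}
nominal = {("of", "destruction", "shrine"), ("by", "destruction", "bomb")}
swapped = {("of", "destruction", "bomb"), ("by", "destruction", "shrine")}
print(normalize(nominal) == normalize(verbal))  # True
print(normalize(swapped) == normalize(verbal))  # False
```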

Benefits for text understanding/RTE
(T) Moore is a prolific writer
(H) Moore writes many books
Moore is the Agent of write

Exploiting word and world knowledge encoded in WordNet

Use of WordNet glosses
Glosses = definitions of the “concept” expressed by the synset members
{airplane, plane (an aircraft that has fixed wings and is powered by propellers or jets)}
Glosses contain syntagmatic information and world knowledge

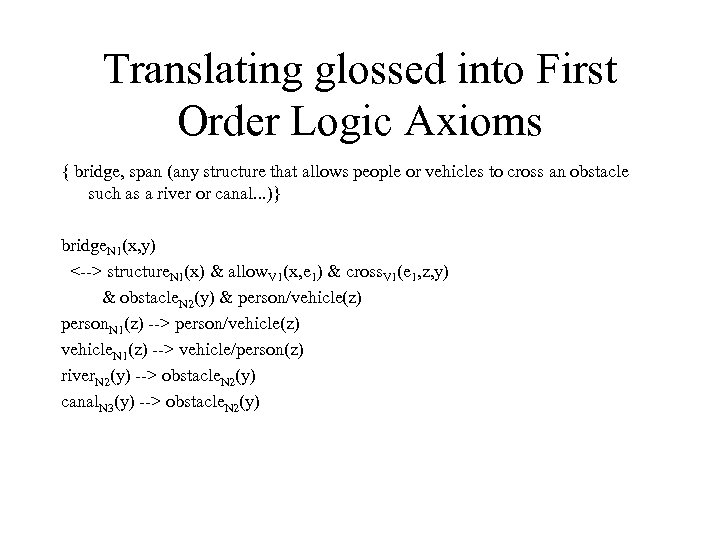

Translating glosses into First Order Logic axioms
{bridge, span (any structure that allows people or vehicles to cross an obstacle such as a river or canal...)}
bridge.N1(x, y) <--> structure.N1(x) & allow.V1(x, e1) & cross.V1(e1, z, y) & obstacle.N2(y) & person/vehicle(z)
person.N1(z) --> person/vehicle(z)
vehicle.N1(z) --> person/vehicle(z)
river.N2(y) --> obstacle.N2(y)
canal.N3(y) --> obstacle.N2(y)
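The one-directional axioms that accompany the bridge definition can be applied by simple forward chaining over ground facts, for example to establish that a particular river counts as an obstacle in the sense the gloss requires. A minimal sketch covering only these unary implications; the constants r1 and c1 are hypothetical, and the full biconditional for bridge.N1 (with its existentially quantified e1 and z) would need a real theorem prover.

```python
# Unary implications from the gloss axioms: premise predicate -> conclusion predicate.
# vehicle/person and person/vehicle are read as one predicate, written person/vehicle.
RULES = [
    ("person.N1", "person/vehicle"),
    ("vehicle.N1", "person/vehicle"),
    ("river.N2", "obstacle.N2"),
    ("canal.N3", "obstacle.N2"),
]


def forward_chain(facts):
    """Apply RULES to (predicate, constant) facts until no new fact is derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in RULES:
            for pred, const in list(derived):
                if pred == premise and (conclusion, const) not in derived:
                    derived.add((conclusion, const))
                    changed = True
    return derived


# Hypothetical constants: r1 is some river, c1 some vehicle.
facts = forward_chain({("river.N2", "r1"), ("vehicle.N1", "c1")})
print(sorted(facts))
```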

The nouns, verbs, adjectives, and adverbs in the LF glosses were manually disambiguated
Thus, each variable in the LFs was identified not just with a word form but with a form-meaning pair (sense) in WordNet
LFs were generated for 110K glosses
Particular emphasis on Core WordNet

How well do our tricks perform?

An example that works
Exploiting formally related words in WN:
(T) …go through licensing procedures
(H) …go through licensing processes
Exploiting hyponymy (IS-A relation):
(T) Beverley served at WEDCOR
(H) Beverley worked at WEDCOR

More complex example that works
(T) Britain puts curbs on immigrant labor from Bulgaria
(H) Britain restricted workers from Bulgaria

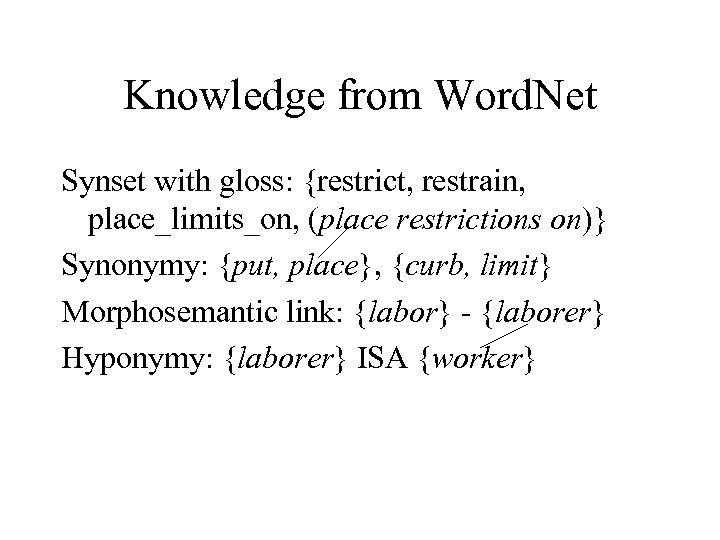

Knowledge from WordNet
Synset with gloss: {restrict, restrain, place_limits_on (place restrictions on)}
Synonymy: {put, place}, {curb, limit}
Morphosemantic link: {labor} - {laborer}
Hyponymy: {laborer} ISA {worker}
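The chain of WordNet facts linking T to H can be replayed as successive rewrites of T toward H, followed by a check that H only drops material from the rewritten T (dropping the modifier immigrant yields a more general statement). A minimal sketch over pre-lemmatized word sequences; plain phrase rewriting and the subsequence test are our simplifications of matching the LFs.

```python
from __future__ import annotations

# Each step restates one WordNet fact from the slide as a phrase rewrite on lemmas.
STEPS = [
    (["put"], ["place"], "synonymy {put, place}"),
    (["curb"], ["limit"], "synonymy {curb, limit}"),
    (["place", "limit", "on"], ["restrict"], "synset {restrict, ..., place_limits_on}"),
    (["labor"], ["laborer"], "morphosemantic link labor - laborer"),
    (["laborer"], ["worker"], "hyponymy: laborer ISA worker"),
]


def replace(seq: list[str], old: list[str], new: list[str]) -> list[str]:
    """Replace every occurrence of the phrase `old` in `seq` with `new`."""
    out, i = [], 0
    while i < len(seq):
        if seq[i:i + len(old)] == old:
            out.extend(new)
            i += len(old)
        else:
            out.append(seq[i])
            i += 1
    return out


def is_subsequence(short: list[str], long: list[str]) -> bool:
    """True if `short` is obtained from `long` by deleting words (e.g. a modifier)."""
    remaining = iter(long)
    return all(word in remaining for word in short)


# T and H as lemma sequences (lemmatization assumed to have happened already).
t = ["Britain", "put", "curb", "on", "immigrant", "labor", "from", "Bulgaria"]
h = ["Britain", "restrict", "worker", "from", "Bulgaria"]
for old, new, why in STEPS:
    t = replace(t, old, new)
    print(f"{why}: {' '.join(t)}")
print(is_subsequence(h, t))  # True
```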

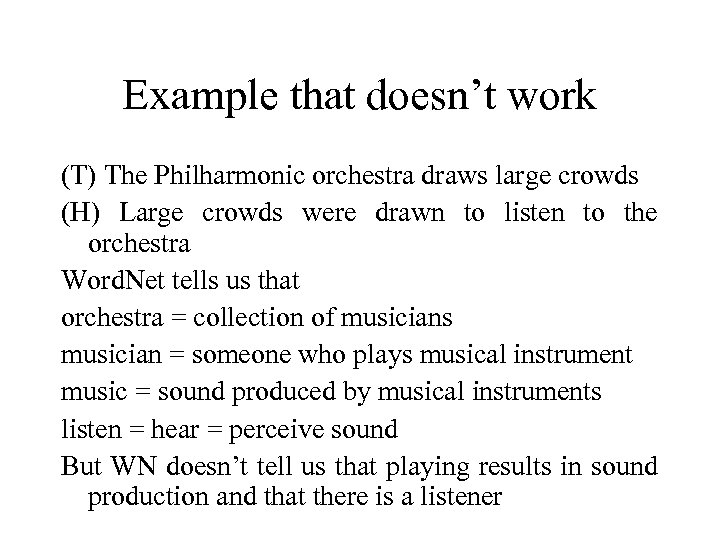

Example that doesn’t work
(T) The Philharmonic orchestra draws large crowds
(H) Large crowds were drawn to listen to the orchestra
WordNet tells us that
orchestra = collection of musicians
musician = someone who plays a musical instrument
music = sound produced by musical instruments
listen = hear = perceive sound
But WN doesn’t tell us that playing results in sound production and that there is a listener

Examples that don’t work
The most fundamental knowledge, which humans take for granted, trips up automatic systems
Such knowledge is not explicitly taught to children
But it must be “taught” to machines!

Core theories (Jerry Hobbs)
• Attempt to encode fundamental knowledge: space, time, causality, ...
• Essential for reasoning
• Not encoded in WordNet glosses

Core theories
• Manually encoded
• Axiomatized

Core theories
• Composite entities (things made of other things, stuff)
• Scalar notions (time, space, ...)
• Change of state
• Causality

Core theories
Example of predications: change(e1, e2), changeFrom(e1), changeTo(e2)

Core theories and WordNet
Map core theories to Core WordNet synsets
Encode the meanings of synsets denoting events, and their event structure, in terms of core-theory predications

Examples
let(x, e) <--> not(cause(x, not(e)))
{go, become, get (“he went wild”)}
go(x, e) <--> changeTo(e)
free(x, y) <--> cause(x, changeTo(free(y)))
(All words are linked to WN senses)

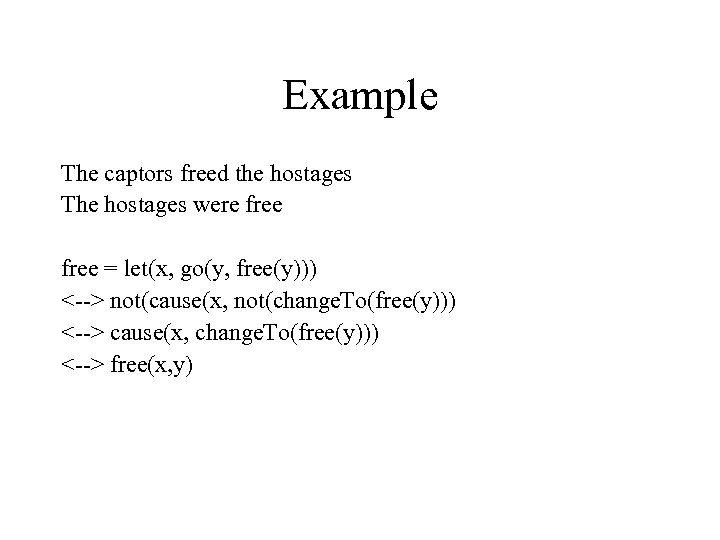

Example
The captors freed the hostages
The hostages were free
= let(x, go(y, free(y)))
<--> not(cause(x, not(changeTo(free(y)))))
<--> cause(x, changeTo(free(y)))
<--> free(x, y)
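The chain of equivalences in the captors/hostages example can be replayed mechanically by treating each definition as a rewrite rule on terms. A sketch with terms as nested tuples (x = the captors, y = the hostages); note that the step from not(cause(x, not(e))) to cause(x, e) is taken from the slide's derivation and encoded as a rule here, not derived from the other definitions.

```python
# Terms are nested tuples (functor, arg1, ...); variables are plain strings.


def transform(term, rule):
    """Rewrite bottom-up: children first, then try `rule` at this node."""
    if isinstance(term, tuple):
        term = (term[0],) + tuple(transform(arg, rule) for arg in term[1:])
        new = rule(term)
        if new is not None:
            return new
    return term


def unfold(t):
    if t[0] == "let" and len(t) == 3:  # let(x, e) <--> not(cause(x, not(e)))
        return ("not", ("cause", t[1], ("not", t[2])))
    if t[0] == "go" and len(t) == 3:   # go(x, e) <--> changeTo(e)
        return ("changeTo", t[2])
    return None


def simplify(t):
    # not(cause(x, not(e))) --> cause(x, e): the slide's step, taken as given
    if (t[0] == "not" and isinstance(t[1], tuple) and t[1][0] == "cause"
            and len(t[1]) == 3 and isinstance(t[1][2], tuple) and t[1][2][0] == "not"):
        return ("cause", t[1][1], t[1][2][1])
    return None


def fold_free(t):
    # cause(x, changeTo(free(y))) <--> free(x, y)
    if (t[0] == "cause" and isinstance(t[2], tuple) and t[2][0] == "changeTo"
            and isinstance(t[2][1], tuple) and t[2][1][0] == "free" and len(t[2][1]) == 2):
        return ("free", t[1], t[2][1][1])
    return None


def show(t):
    return t if isinstance(t, str) else f"{t[0]}({', '.join(show(a) for a in t[1:])})"


term = ("let", "x", ("go", "y", ("free", "y")))
steps = [term]
for rule in (unfold, simplify, fold_free):
    term = transform(term, rule)
    steps.append(term)
for t in steps:
    print(show(t))
```

The four printed lines reproduce the four lines of the derivation, from let(x, go(y, free(y))) down to free(x, y).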

Preliminary evaluation
(What) does each component contribute to RTE?
For the 250 Text-Hypothesis pairs in our test suite:

Conclusion
• Still a way to go!
• We deliberately exclude statistical similarity measures (this hurts our results)
• Symbolic approach: aim at deep-level understanding

WordNet for Deeper Text Understanding
• Axioms in Logical Form are useful for many other NL Understanding applications
• E.g., automated question answering: translate Qs and As into a logic representation
• Logic representations enable reasoning (axioms can be fed into a reasoner/logic prover)

Thanks for your attention
