Nets_compression_and_speedup.pptx

- Number of slides: 26

Deep neural networks compression

Alexander Chigorin
Head of research projects, VisionLabs
a.chigorin@visionlabs.ru

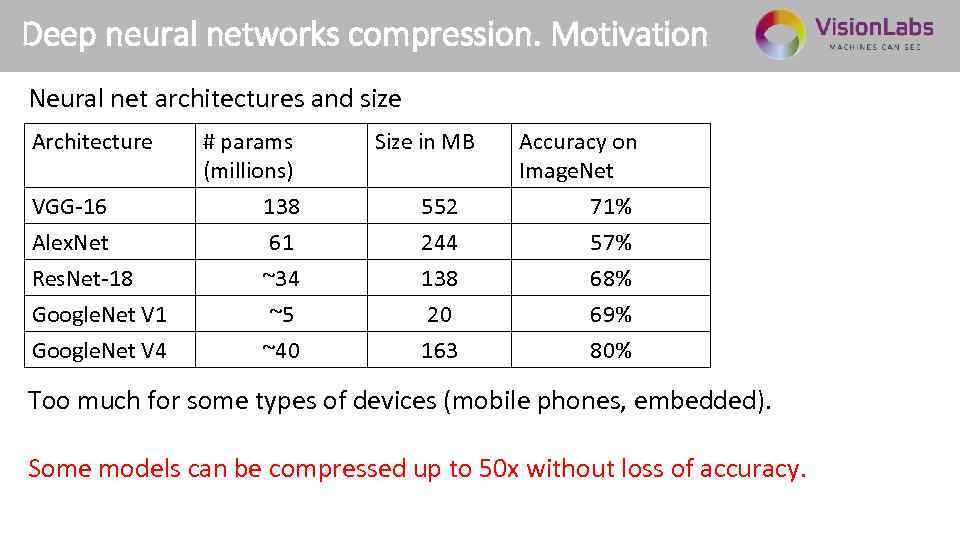

Deep neural networks compression. Motivation

Neural net architectures and size

| Architecture | # params (millions) | Size in MB | Accuracy on ImageNet |
|---|---|---|---|
| VGG-16 | 138 | 552 | 71% |
| AlexNet | 61 | 244 | 57% |
| ResNet-18 | ~34 | 138 | 68% |
| GoogleNet V1 | ~5 | 20 | 69% |
| GoogleNet V4 | ~40 | 163 | 80% |

Too much for some types of devices (mobile phones, embedded). Some models can be compressed up to 50x without loss of accuracy.
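
The sizes listed above are consistent with storing every parameter as a 32-bit float. A minimal sketch of that arithmetic, assuming float32 storage and 1 MB = 10^6 bytes (neither is stated on the slide; `model_size_mb` is a name made up for this example):

```python
# Parameter counts (millions) taken from the slide.
PARAMS_MILLIONS = {
    "VGG-16": 138,
    "AlexNet": 61,
    "ResNet-18": 34,
    "GoogleNet V1": 5,
    "GoogleNet V4": 40,
}

BYTES_PER_FLOAT32 = 4  # assumption: weights are stored as 32-bit floats


def model_size_mb(params_millions, bytes_per_weight=BYTES_PER_FLOAT32):
    """Approximate size in MB: millions of parameters times bytes per weight."""
    return params_millions * bytes_per_weight


for name, params in PARAMS_MILLIONS.items():
    print(f"{name}: ~{model_size_mb(params)} MB")
```

The parameter counts marked with ~ on the slide are rounded, so the result for those rows only approximates the listed size.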

Deep neural networks compression. Overview

Methods to review:
- Learning both Weights and Connections for Efficient Neural Networks
- Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding
- Incremental Network Quantization: Towards Lossless CNNs with Low-Precision Weights

Networks Pruning

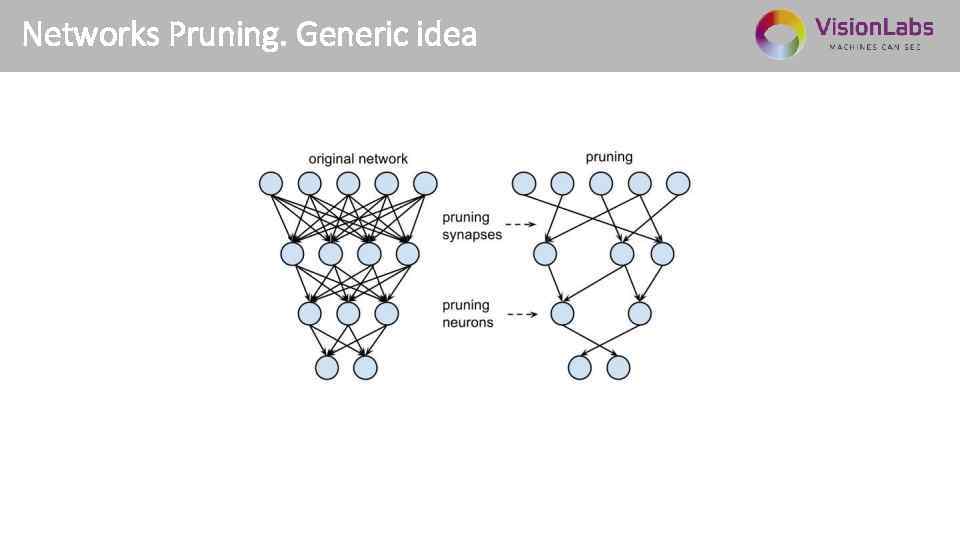

Networks Pruning. Generic idea

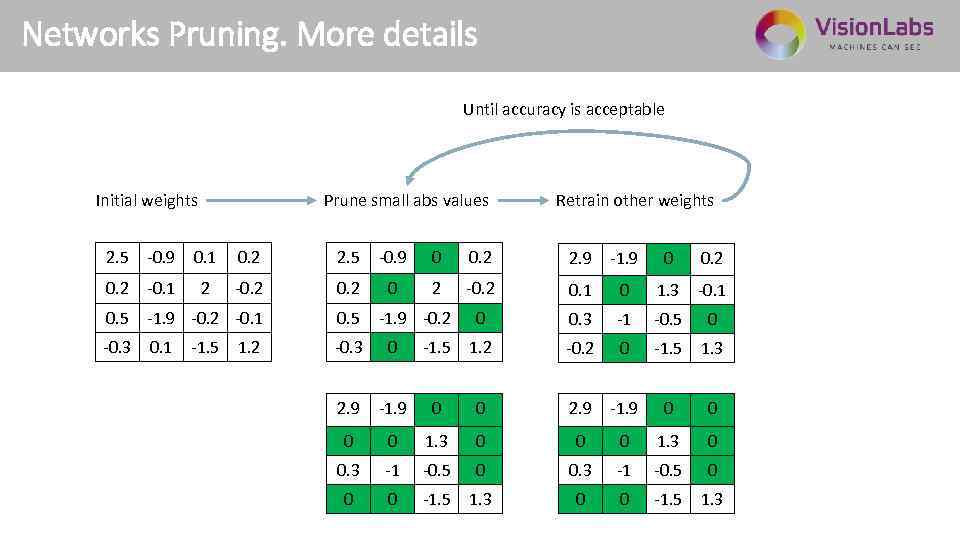

Networks Pruning. More details

Loop until accuracy is acceptable:
- Initial weights
- Prune small abs values
- Retrain other weights

Initial weights (row by row): [2.5, -0.9, 0.1, 0.2], [0.2, -0.1, 2, -0.2], [0.5, -1.9, -0.2, -0.1], [-0.3, 0.1, -1.5, 1.2]

Weights after pruning and retraining (row by row): [2.9, -1.9, 0, 0], [0, 0, 1.3, 0], [0.3, -1, -0.5, 0], [0, 0, -1.5, 1.3]
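
The "prune small abs values" step can be sketched as follows. This is a minimal illustration on the slide's initial weights, not the authors' implementation: the threshold of 0.15 is a hypothetical value (the slide does not give one), and the retraining step is left out.

```python
def prune_small_weights(weights, threshold):
    """Zero every weight whose absolute value is below `threshold`."""
    return [[0.0 if abs(w) < threshold else w for w in row] for row in weights]


def sparsity(weights):
    """Fraction of weights that are exactly zero."""
    flat = [w for row in weights for w in row]
    return sum(1 for w in flat if w == 0.0) / len(flat)


# Initial weights from the slide's 4x4 example.
initial = [
    [2.5, -0.9, 0.1, 0.2],
    [0.2, -0.1, 2.0, -0.2],
    [0.5, -1.9, -0.2, -0.1],
    [-0.3, 0.1, -1.5, 1.2],
]

# Hypothetical threshold; the slide does not state one.
pruned = prune_small_weights(initial, threshold=0.15)
print(pruned[0])         # [2.5, -0.9, 0.0, 0.2]
print(sparsity(pruned))  # 0.25
```

In a full loop, the surviving weights would be retrained while the pruned positions stay at zero, and the threshold would be raised on the next pass.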

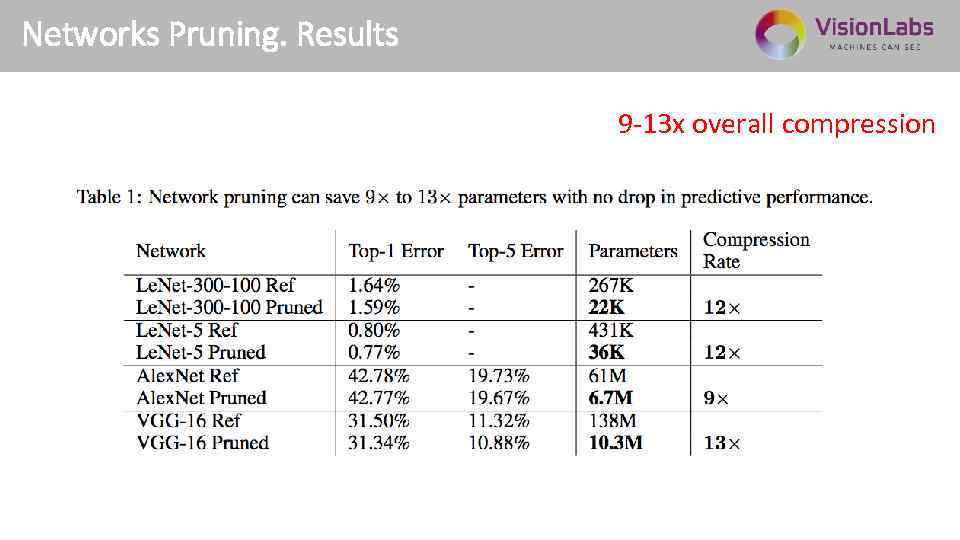

Networks Pruning. Results

9-13x overall compression

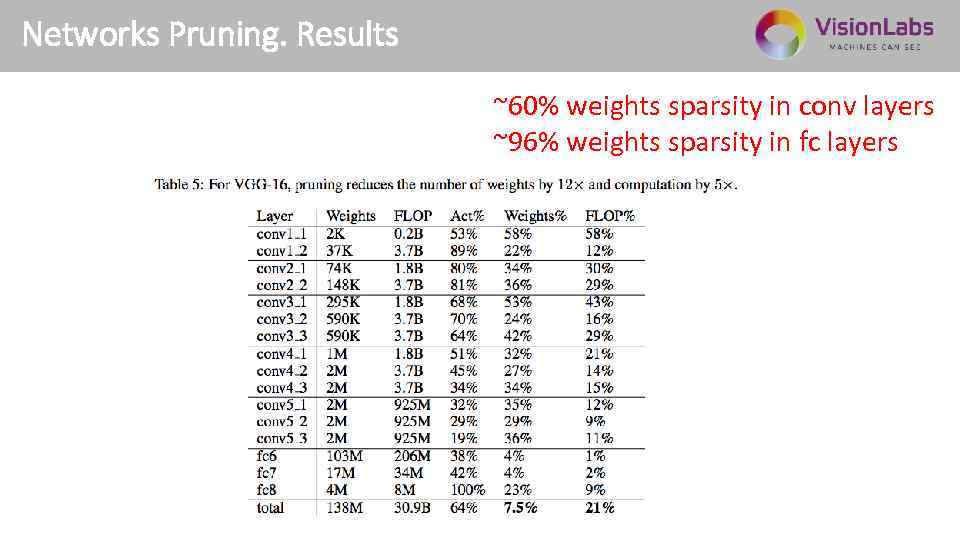

Networks Pruning. Results

- ~60% weights sparsity in conv layers
- ~96% weights sparsity in fc layers

Deep Compression

ICLR 2016 Best Paper

Deep Compression. Overview

Algorithm:
- Iterative weights pruning
- Weights quantization
- Huffman encoding

Deep Compression. Weights pruning

Already discussed

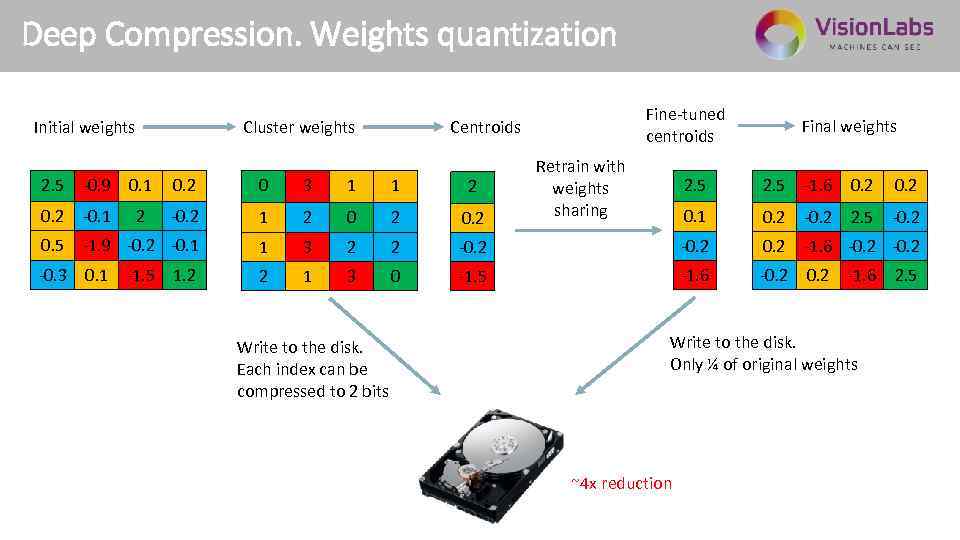

Deep Compression. Weights quantization

- Cluster weights: each initial weight is replaced by the index of its cluster (indexes 0 to 3 in the example). Write the indexes to the disk. Each index can be compressed to 2 bits.
- Retrain with weights sharing: the centroids are fine-tuned, and the final weights take the fine-tuned centroid values. Write the centroids to the disk. Only ¼ of original weights, ~4x reduction.

Initial weights (row by row): [2.5, -0.9, 0.1, 0.2], [0.2, -0.1, 2, -0.2], [0.5, -1.9, -0.2, -0.1], [-0.3, 0.1, -1.5, 1.2]
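
The clustering step can be sketched with a one-dimensional k-means over the slide's initial weights. This is an illustration under assumptions: the linear centroid initialisation and the iteration count are choices made for this example, the fine-tuning of centroids during retraining is not shown, and the clusters it finds need not match the ones drawn on the slide.

```python
def kmeans_1d(values, k, iterations=20):
    """Cluster scalar values into k groups (k >= 2); returns (centroids, indices)."""
    lo, hi = min(values), max(values)
    # Assumed linear initialisation: k centroids evenly spaced over [lo, hi].
    centroids = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    indices = [0] * len(values)
    for _ in range(iterations):
        # Assignment step: index of the nearest centroid for each weight.
        indices = [min(range(k), key=lambda j: abs(v - centroids[j])) for v in values]
        # Update step: each centroid becomes the mean of its members.
        for j in range(k):
            members = [v for v, idx in zip(values, indices) if idx == j]
            if members:
                centroids[j] = sum(members) / len(members)
    return centroids, indices


# Initial weights from the slide, flattened row by row.
weights = [2.5, -0.9, 0.1, 0.2, 0.2, -0.1, 2.0, -0.2,
           0.5, -1.9, -0.2, -0.1, -0.3, 0.1, -1.5, 1.2]

centroids, indices = kmeans_1d(weights, k=4)
shared = [centroids[i] for i in indices]          # weights after sharing
bits_per_index = (len(centroids) - 1).bit_length()
print(indices)
print(bits_per_index)  # 2
```

Only `indices` (2 bits each) and the 4 values in `centroids` need to be stored; `shared` is rebuilt from them at load time.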

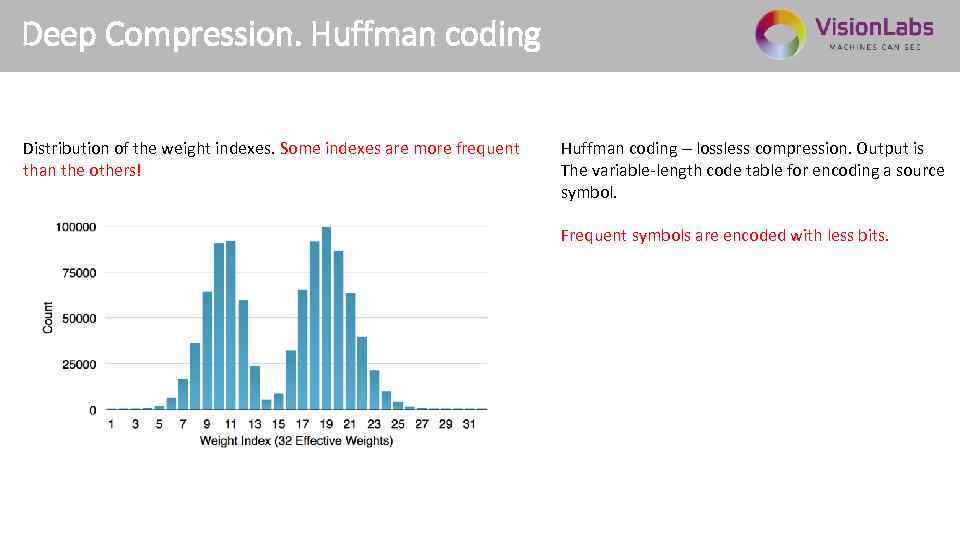

Deep Compression. Huffman coding

Distribution of the weight indexes: some indexes are more frequent than others.

Huffman coding is lossless compression. Its output is a variable-length code table for encoding the source symbols. Frequent symbols are encoded with fewer bits.
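
A minimal sketch of building such a code table with the standard-library `heapq` module. The index sequence is hypothetical (a skewed distribution made up for this example, not data from the slide), and `huffman_code` is a helper written for this illustration.

```python
import heapq
from collections import Counter


def huffman_code(symbols):
    """Return {symbol: bitstring} built from the symbol frequencies."""
    freq = Counter(symbols)
    if len(freq) == 1:
        return {next(iter(freq)): "0"}
    # Heap entries: (count, tie_breaker, {symbol: code_so_far}).
    # The unique tie_breaker keeps the dicts from ever being compared.
    heap = [(n, i, {s: ""}) for i, (s, n) in enumerate(freq.items())]
    heapq.heapify(heap)
    tie = len(heap)
    while len(heap) > 1:
        n1, _, left = heapq.heappop(heap)
        n2, _, right = heapq.heappop(heap)
        # Merge the two rarest subtrees, prefixing one bit to every code.
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (n1 + n2, tie, merged))
        tie += 1
    return heap[0][2]


# Hypothetical 2-bit cluster indexes with a skewed distribution.
indices = [2] * 8 + [1] * 5 + [0] * 2 + [3] * 1
codes = huffman_code(indices)
total_bits = sum(len(codes[i]) for i in indices)
print(codes)
print(total_bits, "bits vs", 2 * len(indices), "bits at a fixed 2 bits per index")
# 27 bits vs 32 bits at a fixed 2 bits per index
```

The most frequent index gets a 1-bit code and the two rarest get 3-bit codes, which is where the saving comes from.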

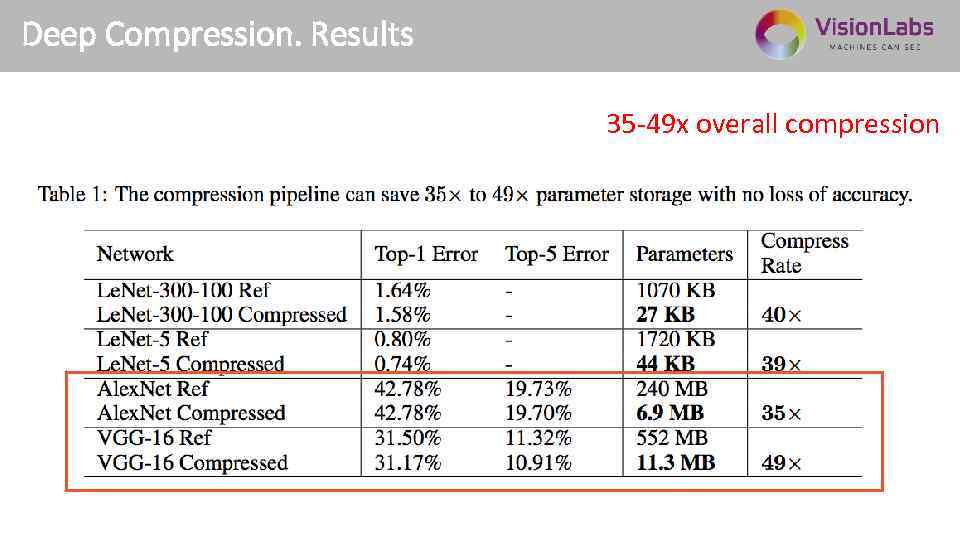

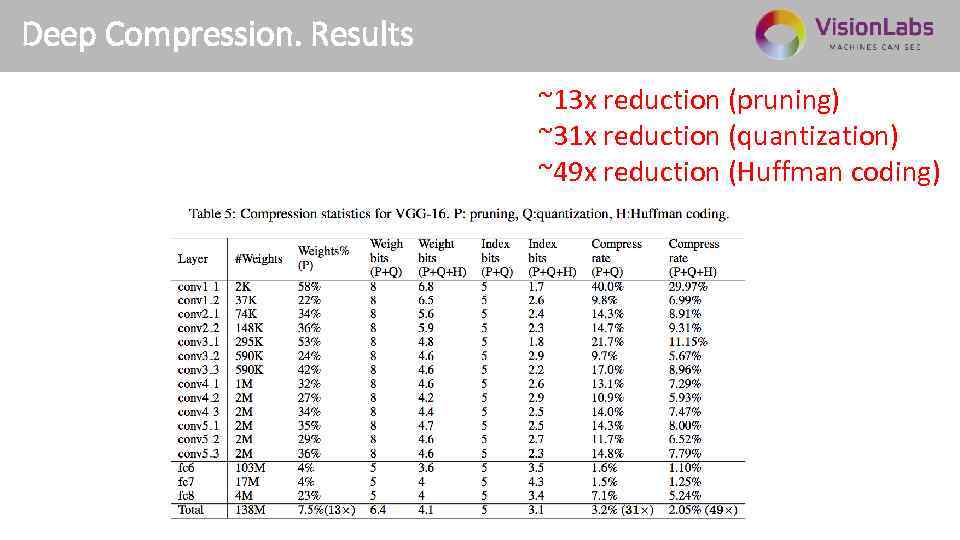

Deep Compression. Results

35-49x overall compression

Deep Compression. Results

- ~13x reduction (pruning)
- ~31x reduction (quantization)
- ~49x reduction (Huffman coding)
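
If the three figures are read as cumulative (the last one matches the upper end of the overall 35-49x result), the contribution of each stage on its own can be worked out by division. A small sketch of that arithmetic, with `stage_gains` as a helper name made up for this example:

```python
def stage_gains(cumulative):
    """Turn cumulative compression factors into per-stage factors."""
    gains, previous = [], 1.0
    for stage, total in cumulative:
        gains.append((stage, total / previous))
        previous = total
    return gains


# Cumulative factors from the slide (assumed to be cumulative).
CUMULATIVE = [("pruning", 13), ("quantization", 31), ("Huffman coding", 49)]

for stage, gain in stage_gains(CUMULATIVE):
    print(f"{stage}: ~{gain:.1f}x")
# pruning: ~13.0x
# quantization: ~2.4x
# Huffman coding: ~1.6x
```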

Incremental Network Quantization

Incremental Network Quantization. Idea:
- Let’s quantize weights incrementally (as we do during pruning)
- Let’s quantize to powers of 2

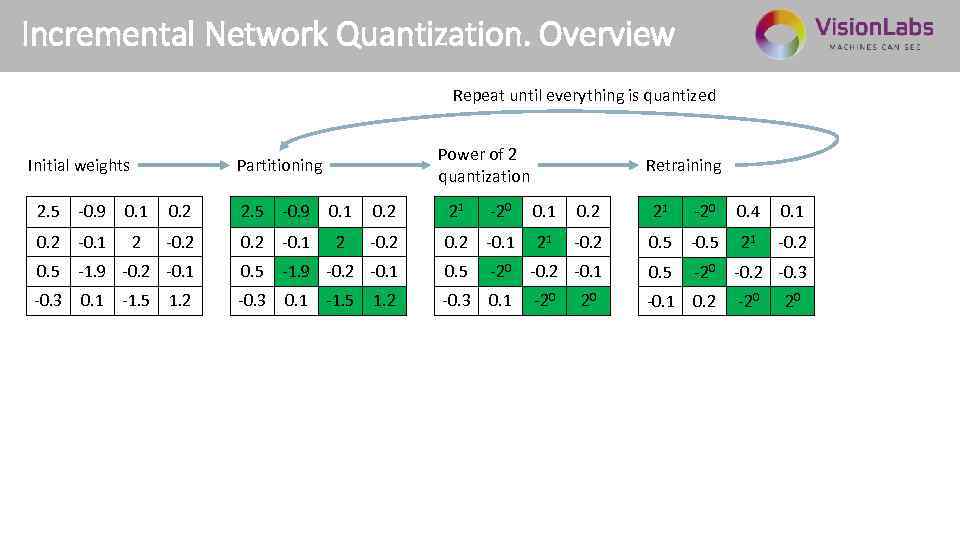

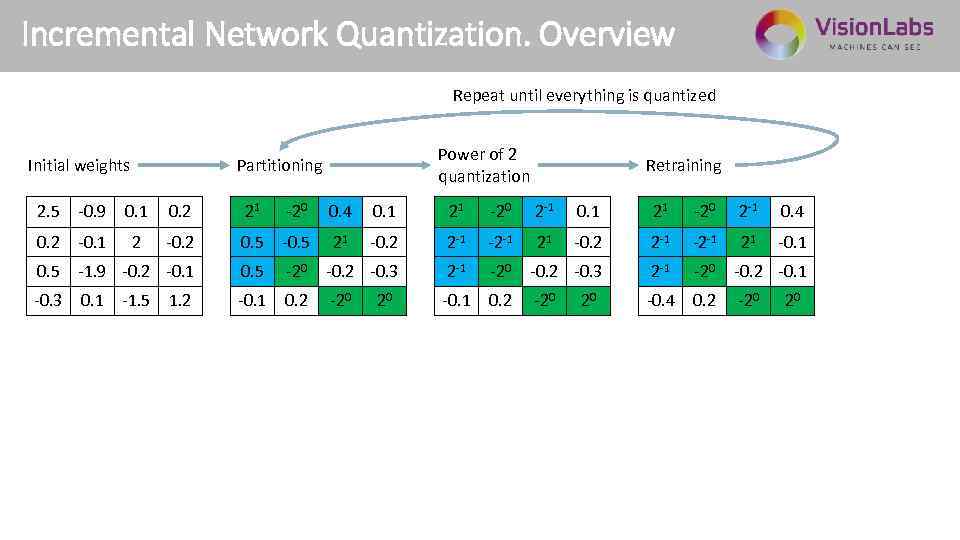

Incremental Network Quantization. Overview

Repeat until everything is quantized:
- Partitioning: choose which of the remaining weights to quantize in this iteration
- Power of 2 quantization: replace the chosen weights with powers of 2 (in the example, 2.5 becomes 2^1 and -0.9 becomes -2^0)
- Retraining: retrain the weights that are not yet quantized

Initial weights (row by row): [2.5, -0.9, 0.1, 0.2], [0.2, -0.1, 2, -0.2], [0.5, -1.9, -0.2, -0.1], [-0.3, 0.1, -1.5, 1.2]
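
The loop can be sketched as below. Several things here are assumptions made for illustration rather than details from the slides: weights are rounded to the nearest power of 2 with exponents clamped to -3..1, the largest-magnitude weights are quantized first, the schedule of fractions is hypothetical, and `retrain` is a placeholder that does nothing.

```python
import math


def quantize_pow2(w, min_exp=-3, max_exp=1):
    """Round w to the nearest +/- 2**e with e in [min_exp, max_exp]."""
    if w == 0:
        return 0.0
    e = min(range(min_exp, max_exp + 1), key=lambda k: abs(abs(w) - 2.0 ** k))
    return math.copysign(2.0 ** e, w)


def inq_step(weights, frozen, fraction):
    """Quantize and freeze the largest `fraction` of the still-free weights."""
    free = [i for i in range(len(weights)) if not frozen[i]]
    free.sort(key=lambda i: abs(weights[i]), reverse=True)
    count = math.ceil(len(free) * fraction)
    for i in free[:count]:
        weights[i] = quantize_pow2(weights[i])
        frozen[i] = True


def retrain(weights, frozen):
    """Placeholder: a real run would update only the weights not yet frozen."""
    return weights


# Initial weights from the slide, flattened row by row.
weights = [2.5, -0.9, 0.1, 0.2, 0.2, -0.1, 2.0, -0.2,
           0.5, -1.9, -0.2, -0.1, -0.3, 0.1, -1.5, 1.2]
frozen = [False] * len(weights)

for fraction in (0.5, 0.5, 1.0):  # hypothetical schedule
    inq_step(weights, frozen, fraction)
    retrain(weights, frozen)

print(weights[:4])  # [2.0, -1.0, 0.125, 0.25]
```

Because `retrain` is a no-op here, the small weights are quantized from their initial values; with real retraining they would first move to compensate for the weights already frozen.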

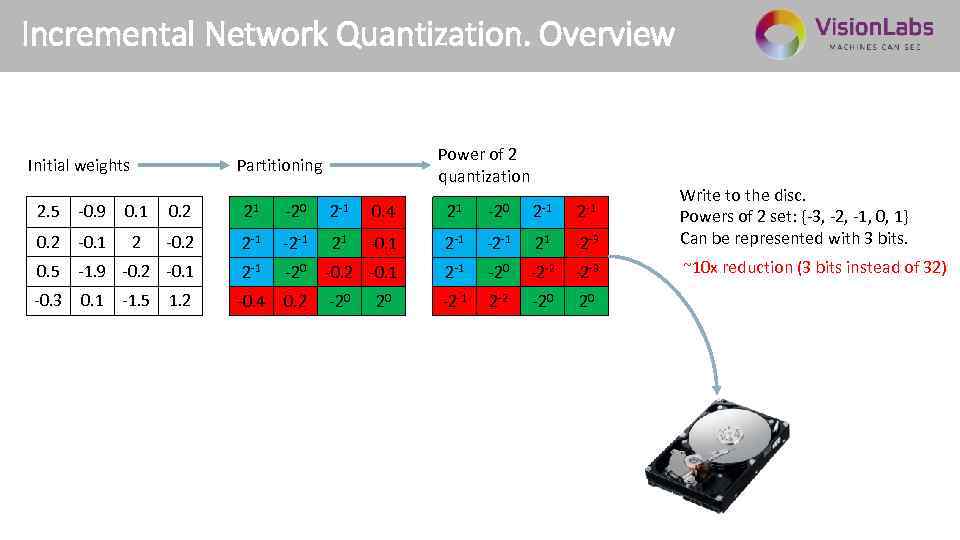

Incremental Network Quantization. Overview

Once every weight is a power of 2, write the exponents to the disk. Powers of 2 set: {-3, -2, -1, 0, 1}. Can be represented with 3 bits. ~10x reduction (3 bits instead of 32).
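
The bit count follows from the size of the exponent set. A short sketch of the arithmetic, with `bits_needed`, `encode` and `decode` as helper names made up for this example; the slide counts only the exponent, so how the sign is stored is not covered here.

```python
EXPONENTS = [-3, -2, -1, 0, 1]  # exponent set from the slide


def bits_needed(n_values):
    """Smallest number of bits that can index n_values distinct values."""
    return max(1, (n_values - 1).bit_length())


def encode(exponent):
    """Map an exponent to a small non-negative code (assumed layout)."""
    return exponent - min(EXPONENTS)


def decode(code):
    """Inverse of encode."""
    return code + min(EXPONENTS)


bits = bits_needed(len(EXPONENTS))
print(bits)                 # 3
print(round(32 / bits, 1))  # 10.7
print([encode(e) for e in EXPONENTS])  # [0, 1, 2, 3, 4]
```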

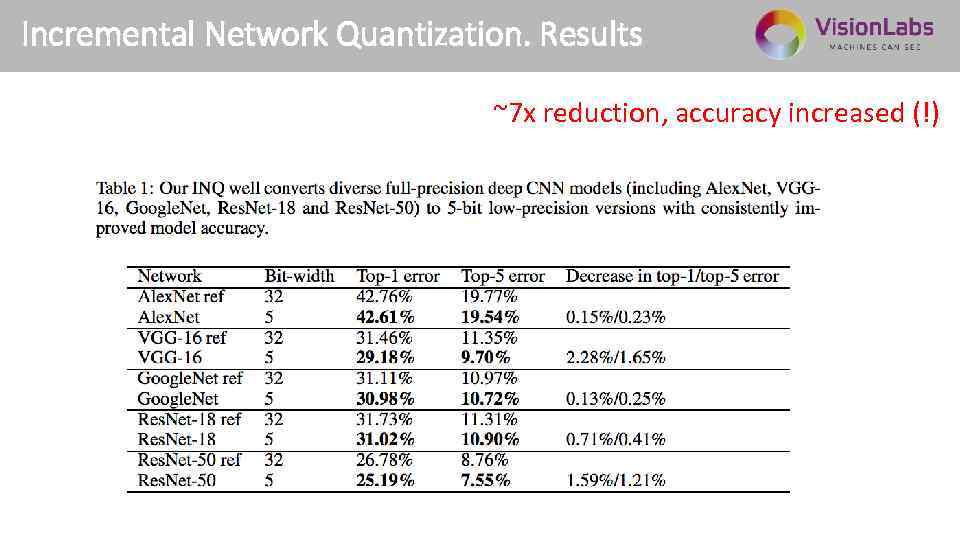

Incremental Network Quantization. Results

~7x reduction, accuracy increased (!)

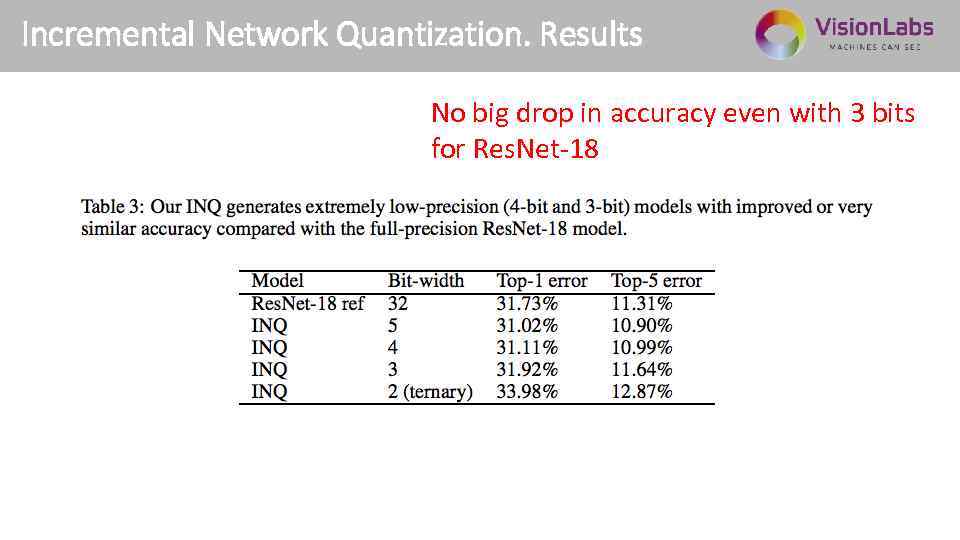

Incremental Network Quantization. Results

No big drop in accuracy even with 3 bits for ResNet-18

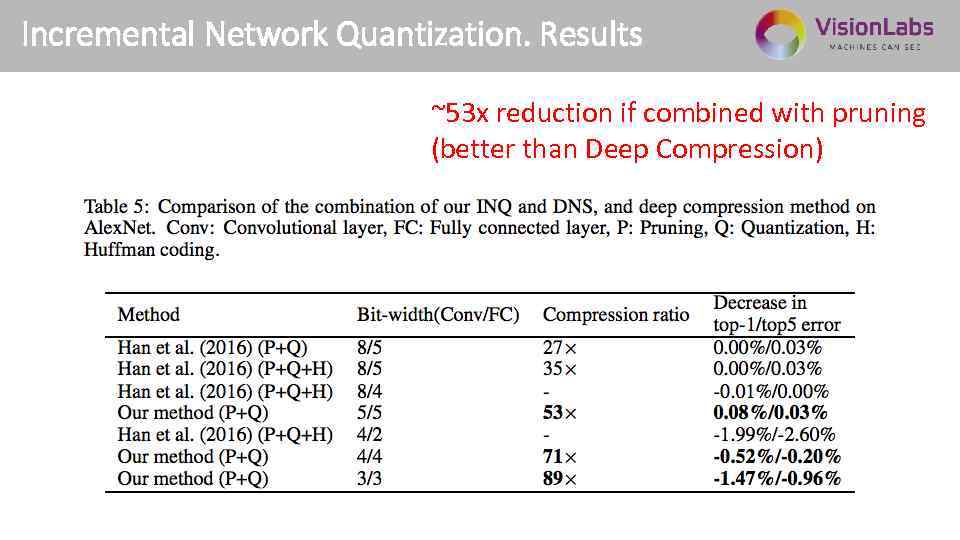

Incremental Network Quantization. Results

~53x reduction if combined with pruning (better than Deep Compression)

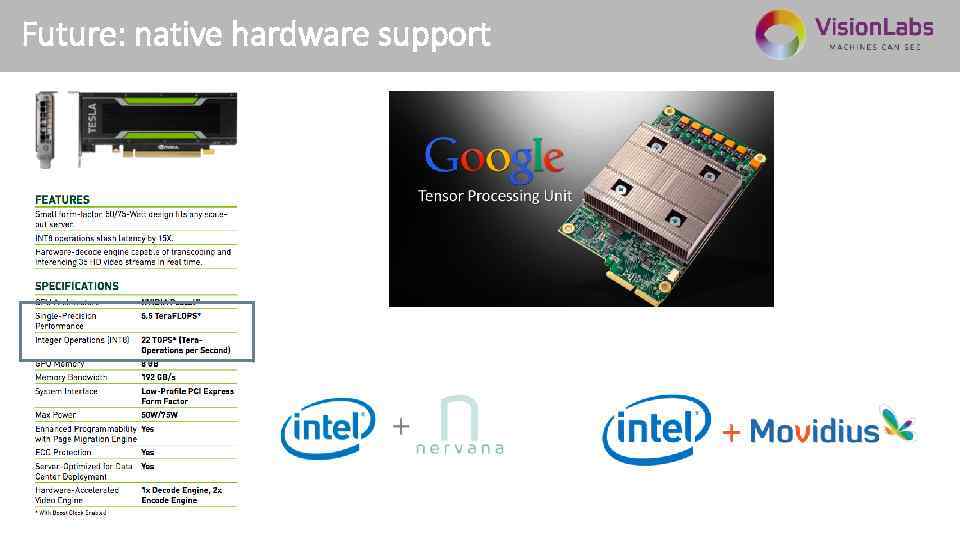

Future: native hardware support

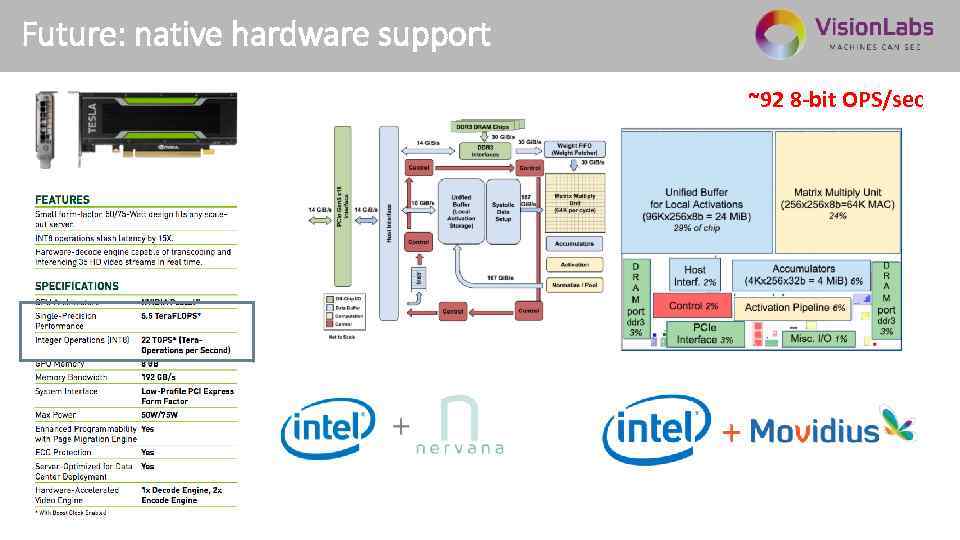

Future: native hardware support

~92 8-bit OPS/sec

Alexander Chigorin
Head of research projects, VisionLabs
a.chigorin@visionlabs.ru