HPC 2015 Deep Learning (3).pptx

- Number of slides: 51

Deep Learning. Deep Learning Methods. Sergey Zherzdev, Itseez, 2015

Agenda:
- Motivation & History
- Supervised, unsupervised and RL
- Sparse coding
- Autoencoders
- RBM, DBN, DBM
- CNN
- RNN & LSTM
- Reinforcement Learning
- Need more!

Current results:
- Image recognition (try it at http://deeplearning.cs.toronto.edu)
- Speech recognition (Android)
- NLP (translation, Siri, Google Now)
- Multimodal models: image captioning
- Image translation (try Google Translate mobile)
- Speech translation (Skype)
- Emotion recognition
- VQA (visual question answering)
- Reinforcement learning (robotics)
- …

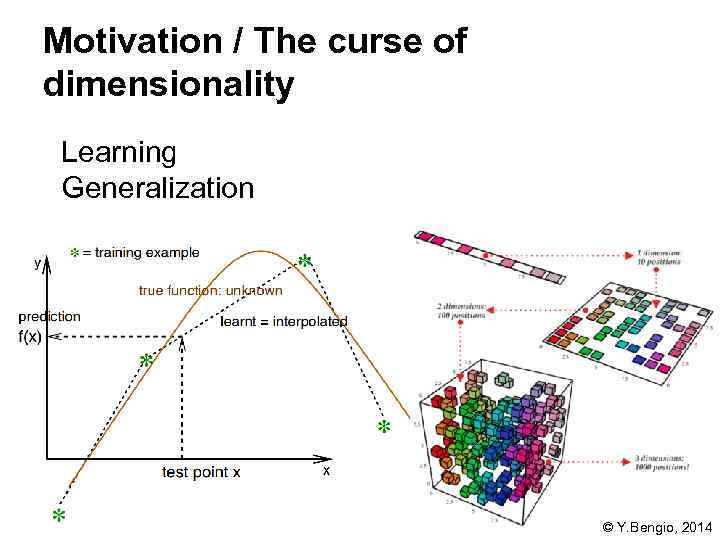

Motivation / The curse of dimensionality (learning, generalization) © Y. Bengio, 2014

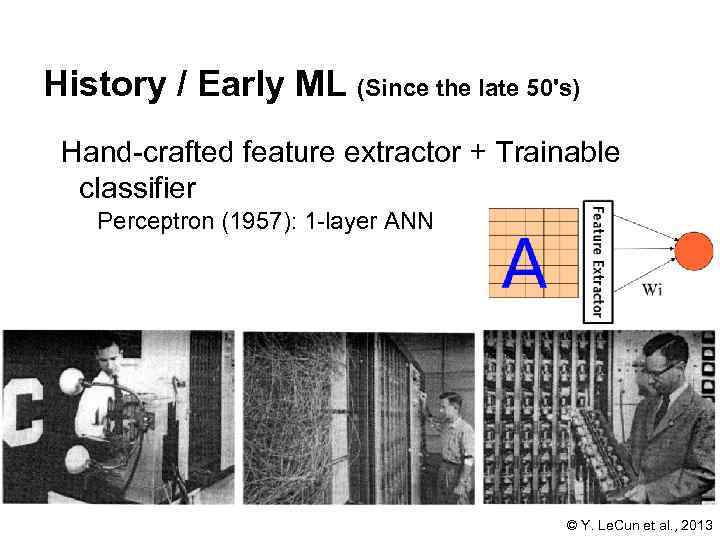

History / Early ML (since the late 1950s): hand-crafted feature extractor + trainable classifier. Perceptron (1957): 1-layer ANN © Y. LeCun et al., 2013
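The slide names the perceptron only as a 1-layer ANN. The sketch below shows, under our own assumptions, the classic error-correction rule on a toy OR dataset; the function names and data are illustrative, and the hand-crafted feature extractor is omitted (the raw inputs serve directly as features).

```python
def predict(w, b, x):
    """Threshold unit: output 1 if w.x + b > 0, else 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron error-correction rule; samples is a list of (features, label in {0, 1})."""
    w = [0.0] * len(samples[0][0])
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in samples:
            delta = lr * (y - predict(w, b, x))  # 0 when the prediction is correct
            if delta:
                # Shift the decision boundary toward the misclassified sample.
                w = [wi + delta * xi for wi, xi in zip(w, x)]
                b += delta
                errors += 1
        if errors == 0:  # every sample classified correctly: stop early
            break
    return w, b


# Logical OR is linearly separable, so the rule converges on it.
or_data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
w, b = train_perceptron(or_data)
print([predict(w, b, x) for x, _ in or_data])  # [0, 1, 1, 1]
```

The same rule never converges on XOR, which is not linearly separable; that limitation is what motivates hidden layers.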

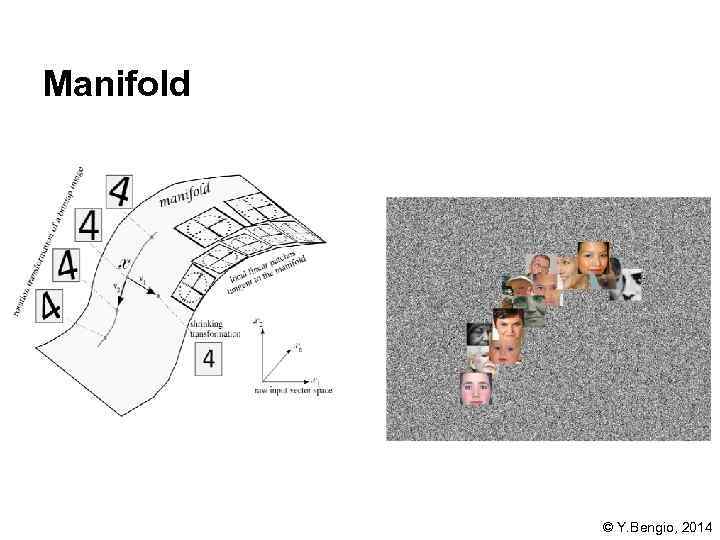

Manifold © Y. Bengio, 2014

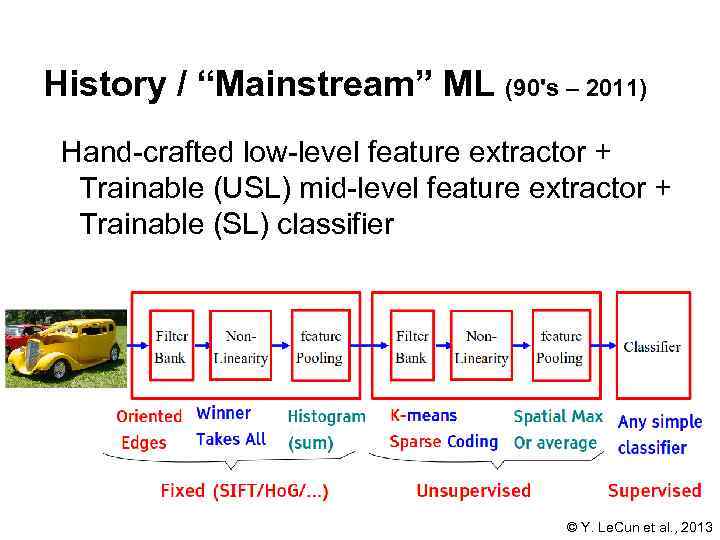

History / “Mainstream” ML (1990s-2011): hand-crafted low-level feature extractor + trainable (unsupervised) mid-level feature extractor + trainable (supervised) classifier © Y. LeCun et al., 2013

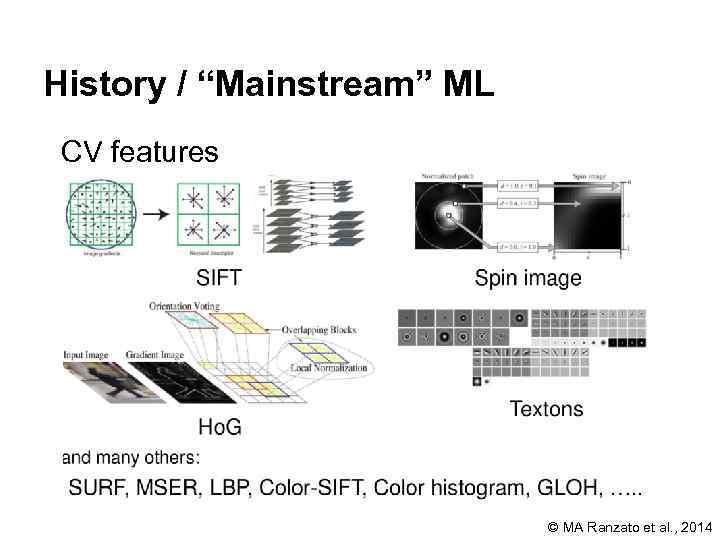

History / “Mainstream” ML: CV features © MA Ranzato et al., 2014

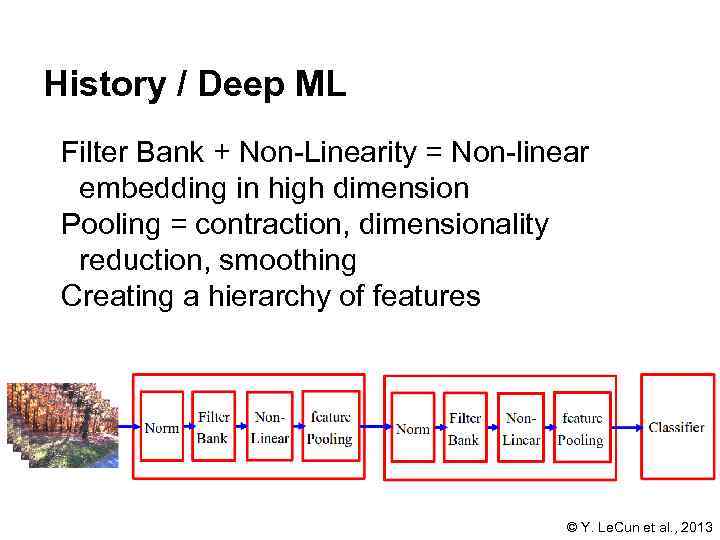

History / Deep ML: filter bank + non-linearity = non-linear embedding in high dimension; pooling = contraction, dimensionality reduction, smoothing; creating a hierarchy of features © Y. LeCun et al., 2013
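A minimal sketch of one feature-extraction stage (filter bank, non-linearity, pooling), in 1-D for brevity. The filters are hand-picked here for readability, whereas a deep network would learn them; ReLU and non-overlapping max pooling are assumed choices that the slide does not specify, and all names are illustrative.

```python
def conv1d(signal, kernel):
    """'Valid' 1-D cross-correlation, which is what CNN layers usually call convolution."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def relu(xs):
    return [max(0.0, x) for x in xs]


def max_pool(xs, size=2):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(xs[i:i + size]) for i in range(0, len(xs) - size + 1, size)]


def feature_stage(signal, filter_bank, pool=2):
    """One stage: filter bank -> point-wise non-linearity -> pooling, one map per filter."""
    return [max_pool(relu(conv1d(signal, f)), pool) for f in filter_bank]


signal = [0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0, 0.0]
filters = [[-1.0, 1.0], [1.0, -1.0]]  # hand-picked rising-edge and falling-edge detectors
print(feature_stage(signal, filters))  # [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
```

Each filter yields its own map, and pooling halves its length; stacking such stages on top of each other gives the hierarchy of features.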

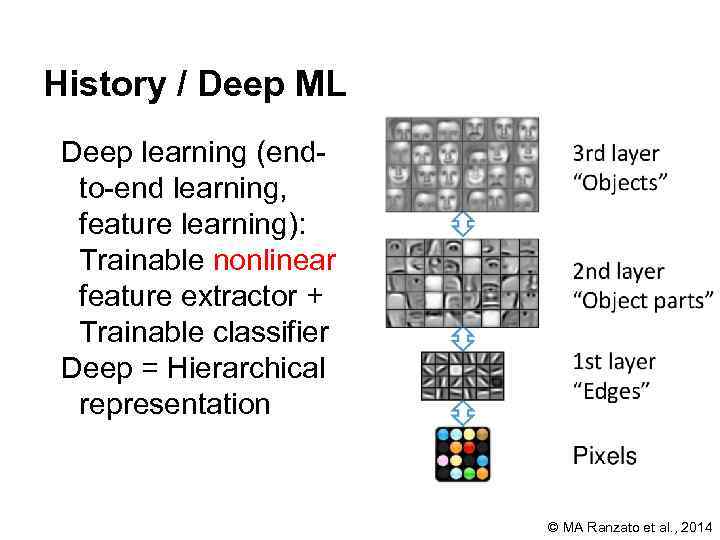

History / Deep ML: deep learning (end-to-end learning, feature learning) = trainable non-linear feature extractor + trainable classifier. Deep = hierarchical representation © MA Ranzato et al., 2014

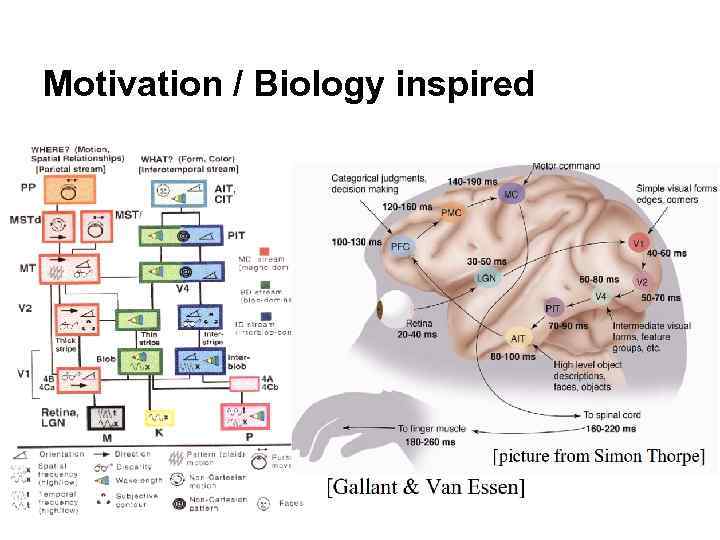

Motivation / Biology inspired

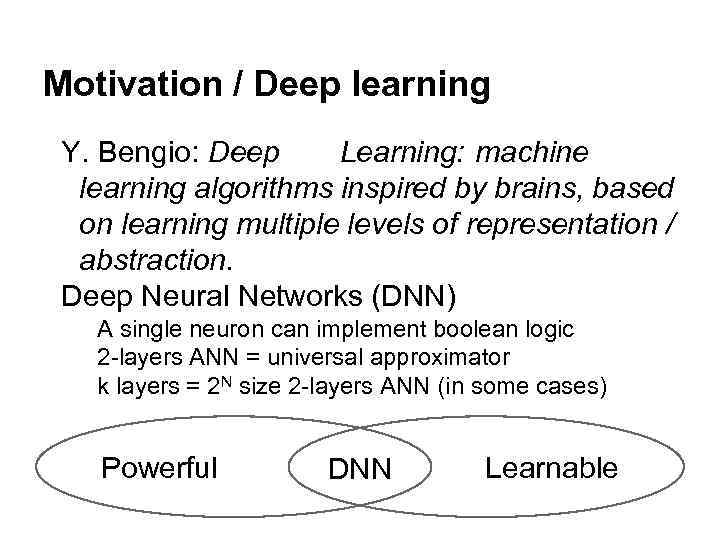

Motivation / Deep learning. Y. Bengio: “Deep learning: machine learning algorithms inspired by brains, based on learning multiple levels of representation / abstraction.” Deep neural networks (DNN): a single neuron can implement basic boolean gates (AND, OR, NOT); a 2-layer ANN is a universal approximator; a function computed by a k-layer network may require an exponentially large (2^N-unit) 2-layer ANN (in some cases). DNNs are powerful and learnable.
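To illustrate the first two claims, here is a sketch with hand-set weights (our own choice, not from the slide): single threshold units compute AND, OR and NAND, while XOR, which is not linearly separable, needs a second layer.

```python
def neuron(weights, bias, inputs):
    """Threshold unit: fires (1) when the weighted sum plus bias is positive."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0


def and_gate(a, b):
    return neuron([1, 1], -1.5, [a, b])


def or_gate(a, b):
    return neuron([1, 1], -0.5, [a, b])


def nand_gate(a, b):
    return neuron([-1, -1], 1.5, [a, b])


def xor_net(a, b):
    # Not linearly separable, so no single threshold unit computes it;
    # a hidden layer (OR, NAND) feeding an output AND unit does.
    return and_gate(or_gate(a, b), nand_gate(a, b))


pairs = [(0, 0), (0, 1), (1, 0), (1, 1)]
print([xor_net(a, b) for a, b in pairs])  # [0, 1, 1, 0]
```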

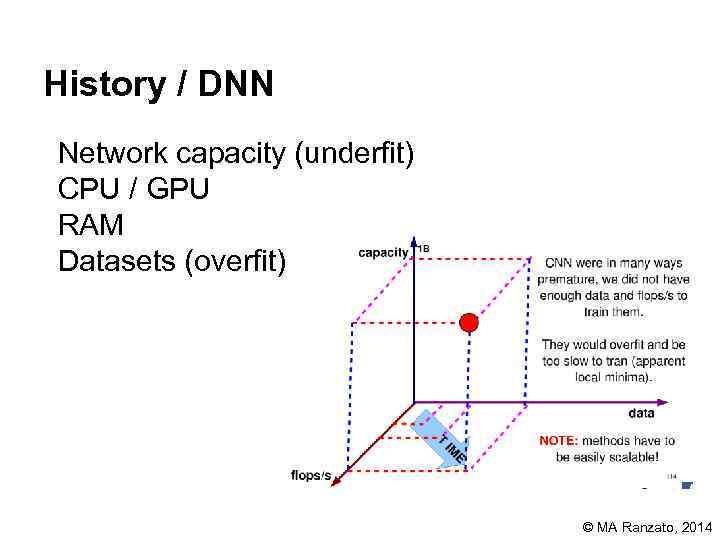

History / DNN: network capacity (underfitting); CPU / GPU; RAM; datasets (overfitting) © MA Ranzato, 2014

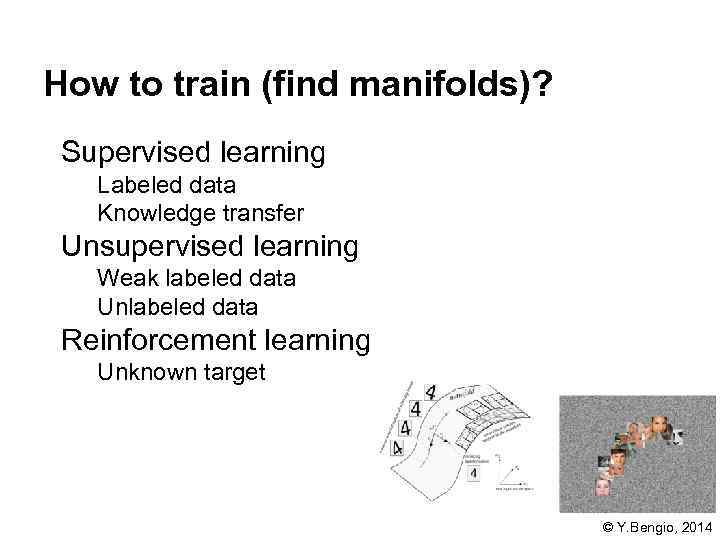

How to train (find manifolds)?
- Supervised learning: labeled data; knowledge transfer
- Unsupervised learning: weakly labeled data; unlabeled data
- Reinforcement learning: unknown target

© Y. Bengio, 2014

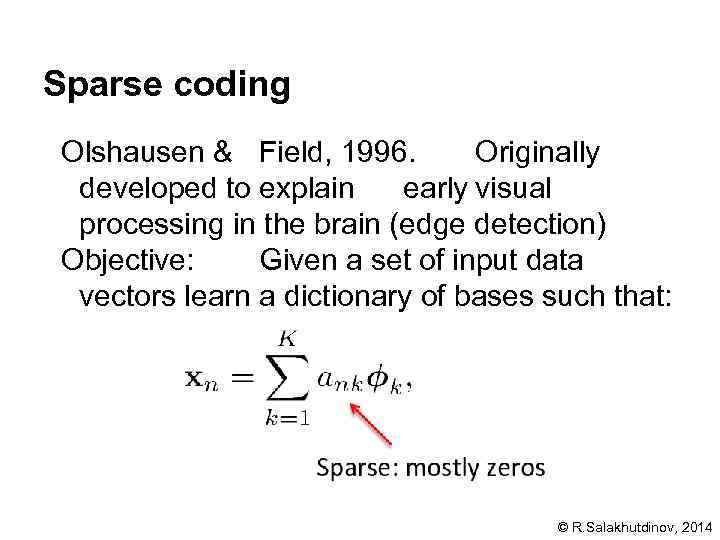

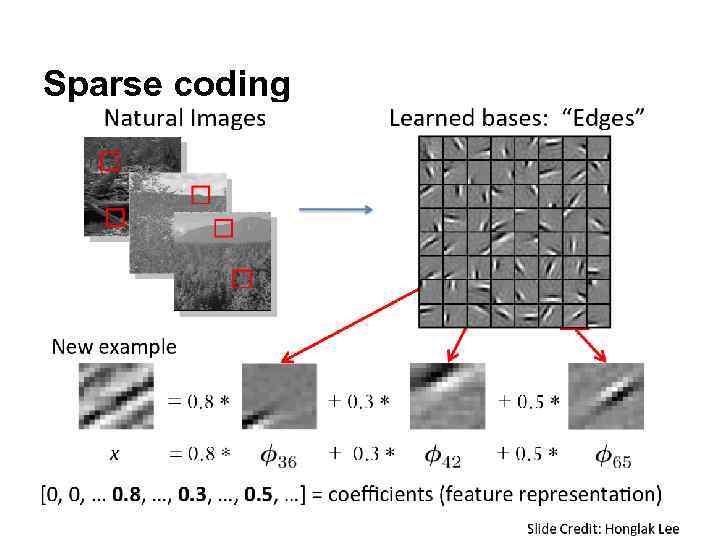

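Sparse coding (Olshausen & Field, 1996): originally developed to explain early visual processing in the brain (edge detection). Objective: given a set of input data vectors x_1, …, x_N, learn a dictionary of bases φ_1, …, φ_K such that each data vector is approximated as x_n ≈ Σ_k a_nk φ_k, with sparse coefficients a_nk (mostly zero). © R. Salakhutdinov, 2014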
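A sketch of the coding step only, assuming the common L1-penalized objective: minimize ½‖x − Σ_k a_k φ_k‖² + λ Σ_k |a_k| over the coefficients a, with the dictionary held fixed. The solver is ISTA (iterative shrinkage-thresholding), a standard technique swapped in here for illustration; full sparse coding alternates this step with a dictionary update, which is omitted. The dictionary, λ and step size are made-up values.

```python
def soft_threshold(v, t):
    """Proximal operator of t*|v|: shrink v toward zero by t (exact zeros give sparsity)."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0


def sparse_code(x, bases, lam=0.1, step=0.4, iters=2000):
    """ISTA for min_a 0.5*||x - sum_k a_k*phi_k||^2 + lam*sum_k |a_k| with a fixed dictionary.

    bases: list of K basis vectors phi_k, each the same length as x.
    step must not exceed 1/L, where L is the largest eigenvalue of Phi^T Phi
    (L = 2 for the example dictionary below).
    """
    a = [0.0] * len(bases)
    for _ in range(iters):
        recon = [sum(a[k] * bases[k][i] for k in range(len(bases))) for i in range(len(x))]
        residual = [xi - ri for xi, ri in zip(x, recon)]
        # Gradient of the reconstruction term with respect to a_k is -<phi_k, residual>.
        grad = [-sum(p * r for p, r in zip(phi, residual)) for phi in bases]
        a = [soft_threshold(ak - step * gk, step * lam) for ak, gk in zip(a, grad)]
    return a


# Overcomplete 2-D dictionary: two axis-aligned bases plus one diagonal basis.
bases = [[1.0, 0.0], [0.0, 1.0], [0.6, 0.8]]
x = [1.2, 1.6]  # exactly 2 * phi_3
a = sparse_code(x, bases)
print(a)  # expected: a_1 = a_2 = 0 and a_3 close to 1.9
```

Even though x could also be written with the two axis-aligned bases, the L1 penalty selects the single diagonal basis, shrunk by λ (1.9 instead of 2).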

Sparse coding

![Autoencoders](https://present5.com/presentation/8160236_437300440/image-17.jpg)

Autoencoders: Input Data → Encoder → [Sparse] Feature Representation → Decoder
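A minimal autoencoder sketch, assuming a linear encoder and decoder with a single code unit, trained by per-sample gradient descent on the squared reconstruction error. The sparsity penalty on the feature representation is omitted, and the initial weights, data and learning rate are illustrative choices.

```python
def encode(e, x):
    """Encoder: a single linear code unit h = e . x."""
    return sum(ei * xi for ei, xi in zip(e, x))


def decode(d, h):
    """Decoder: reconstruction x_hat = h * d."""
    return [h * di for di in d]


def mean_loss(e, d, data):
    """Average of 0.5 * ||x_hat - x||^2 over the data set."""
    total = 0.0
    for x in data:
        x_hat = decode(d, encode(e, x))
        total += 0.5 * sum((xh - xi) ** 2 for xh, xi in zip(x_hat, x))
    return total / len(data)


def train_autoencoder(data, lr=0.05, epochs=500):
    e = [0.5] * len(data[0])  # encoder weights
    d = [0.5] * len(data[0])  # decoder weights
    for _ in range(epochs):
        for x in data:
            h = encode(e, x)
            r = [xh - xi for xh, xi in zip(decode(d, h), x)]      # reconstruction error
            d_dot_r = sum(di * ri for di, ri in zip(d, r))        # error sent back to the code
            d = [di - lr * h * ri for di, ri in zip(d, r)]        # dL/dd = h * r
            e = [ei - lr * d_dot_r * xi for ei, xi in zip(e, x)]  # dL/de = (d . r) * x
    return e, d


# Unlabeled 2-D points lying on one line: a 1-unit code is enough to reconstruct them.
data = [[0.6 * t, 0.8 * t] for t in (-1.0, -0.5, 0.5, 1.0)]
e, d = train_autoencoder(data)
print(mean_loss([0.5, 0.5], [0.5, 0.5], data), "->", mean_loss(e, d, data))
```

No labels are used: the training signal is the input itself, which is why autoencoders can pre-train layers on unlabeled data.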

![Stacked Autoencoders](https://present5.com/presentation/8160236_437300440/image-18.jpg)

Stacked Autoencoders: Input Data → Encoder/Decoder → [Sparse] Feature Representation → Encoder/Decoder → Class labels. Layer-wise learning!

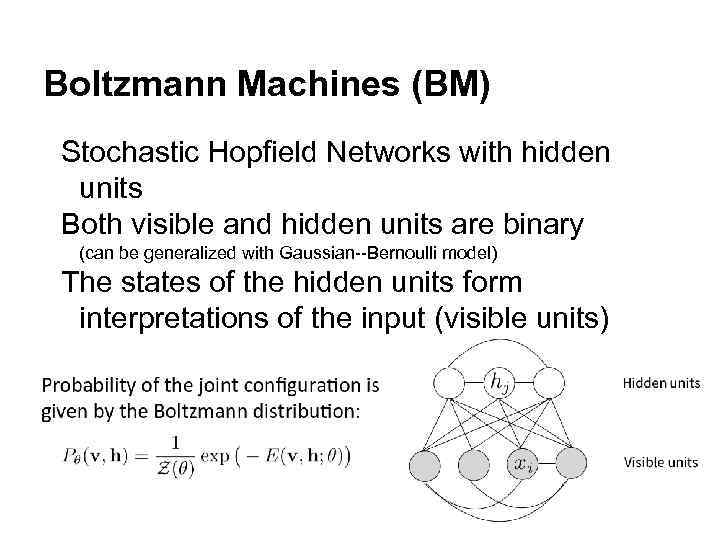

Boltzmann Machines (BM): stochastic Hopfield networks with hidden units. Both visible and hidden units are binary (this can be generalized with the Gaussian-Bernoulli model). The states of the hidden units form interpretations of the input (visible units).

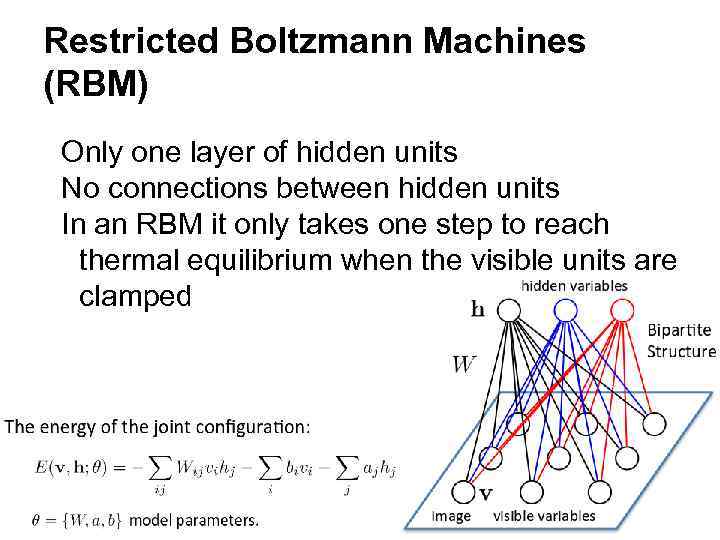

Restricted Boltzmann Machines (RBM): only one layer of hidden units; no connections between hidden units. In an RBM it takes only one step to reach thermal equilibrium when the visible units are clamped, because the hidden units are then conditionally independent of each other.
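A sketch of a binary RBM trained with one-step contrastive divergence (CD-1). The slide does not name a training algorithm; CD-1, introduced by Hinton, is assumed here as the common choice. Note how `p_h_given_v` updates all hidden units at once, which is the one-step equilibrium described above. Sizes, learning rate and the two training patterns are illustrative.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class RBM:
    """Binary RBM with energy E(v, h) = -b.v - c.h - v^T W h (no v-v or h-h connections)."""

    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = random.Random(seed)
        self.W = [[self.rng.gauss(0.0, 0.01) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.b = [0.0] * n_visible  # visible biases
        self.c = [0.0] * n_hidden   # hidden biases

    def energy(self, v, h):
        vwh = sum(v[i] * self.W[i][j] * h[j]
                  for i in range(len(v)) for j in range(len(h)))
        return (-sum(bi * vi for bi, vi in zip(self.b, v))
                - sum(cj * hj for cj, hj in zip(self.c, h)) - vwh)

    def p_h_given_v(self, v):
        # Hidden units are conditionally independent given v: one parallel update suffices.
        return [sigmoid(self.c[j] + sum(v[i] * self.W[i][j] for i in range(len(v))))
                for j in range(len(self.c))]

    def p_v_given_h(self, h):
        return [sigmoid(self.b[i] + sum(self.W[i][j] * h[j] for j in range(len(h))))
                for i in range(len(self.b))]

    def sample(self, probs):
        return [1 if self.rng.random() < p else 0 for p in probs]

    def cd1_update(self, v0, lr=0.1):
        """One contrastive-divergence (CD-1) step on a single binary training vector v0."""
        ph0 = self.p_h_given_v(v0)                              # positive phase (data)
        v1 = self.sample(self.p_v_given_h(self.sample(ph0)))    # one Gibbs step
        ph1 = self.p_h_given_v(v1)                              # negative phase
        for i in range(len(v0)):
            for j in range(len(ph0)):
                self.W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
        self.b = [bi + lr * (p - q) for bi, p, q in zip(self.b, v0, v1)]
        self.c = [cj + lr * (p - q) for cj, p, q in zip(self.c, ph0, ph1)]


rbm = RBM(n_visible=4, n_hidden=2)
for _ in range(200):
    for v in ([1, 1, 0, 0], [0, 0, 1, 1]):
        rbm.cd1_update(v)
print(rbm.p_h_given_v([1, 1, 0, 0]), rbm.p_h_given_v([0, 0, 1, 1]))
```

Stacking such RBMs, each trained on the hidden activities of the one below, is the layer-wise DBN recipe on the next slide.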

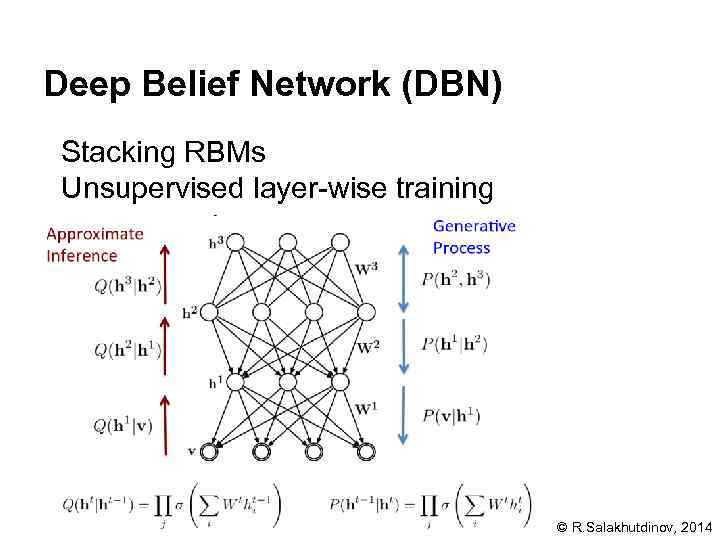

Deep Belief Network (DBN): stacking RBMs; unsupervised layer-wise training © R. Salakhutdinov, 2014

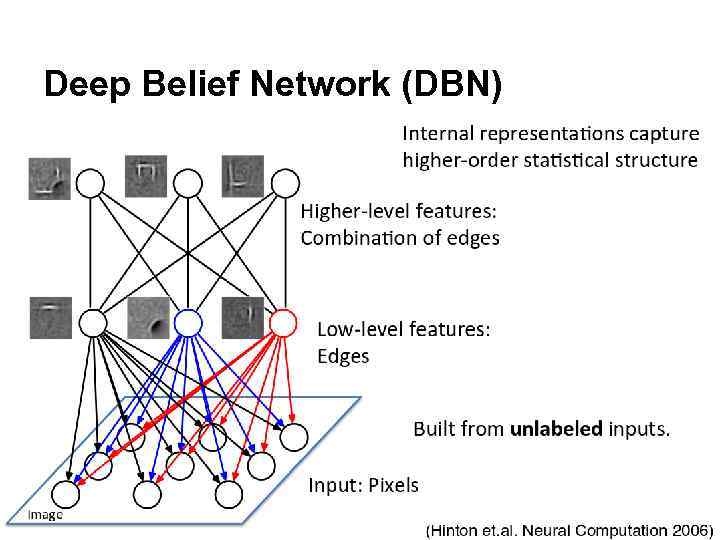

Deep Belief Network (DBN)

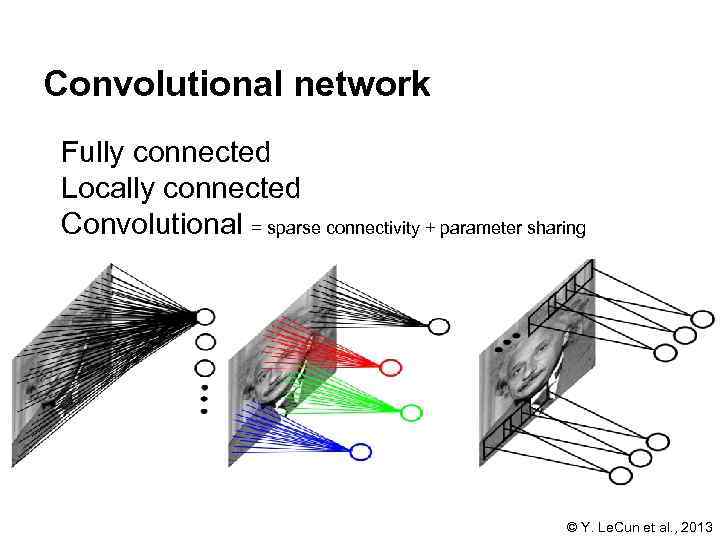

Convolutional network: fully connected vs. locally connected vs. convolutional. Convolutional = sparse connectivity + parameter sharing © Y. LeCun et al., 2013
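The effect of sparse connectivity and parameter sharing on model size can be checked with simple arithmetic. The layer below is hypothetical: one 200×200 grey-level input, one 200×200 output map (same-size padding), 10×10 receptive fields, biases ignored.

```python
def fully_connected_params(in_h, in_w, out_h, out_w):
    """Every output unit has its own weight to every input pixel."""
    return (in_h * in_w) * (out_h * out_w)


def locally_connected_params(out_h, out_w, k):
    """Sparse connectivity: each output unit sees a k x k patch, with its own weights."""
    return (out_h * out_w) * (k * k)


def convolutional_params(k):
    """Sparse connectivity + parameter sharing: all output units reuse one k x k kernel."""
    return k * k


print(fully_connected_params(200, 200, 200, 200))  # 1600000000
print(locally_connected_params(200, 200, 10))      # 4000000
print(convolutional_params(10))                    # 100
```

A real convolutional layer learns many kernels (one per output map), so its count is k·k times the number of input and output maps, still independent of the image size.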

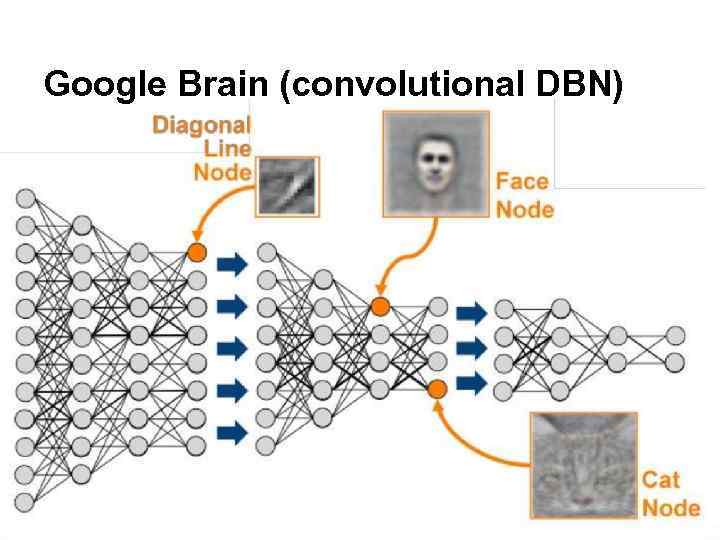

Google Brain (convolutional DBN)

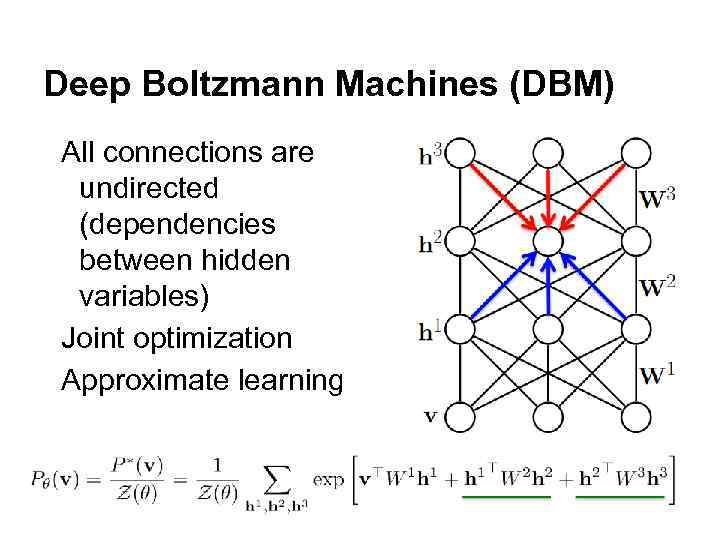

Deep Boltzmann Machines (DBM): all connections are undirected (dependencies between hidden variables); joint optimization; approximate learning.

![Feed-forward NN](https://present5.com/presentation/8160236_437300440/image-26.jpg)

Feed-forward NN: Input Data → Encoder → [Sparse] Feature Representation → Encoder → Class labels. Layer-wise learning!

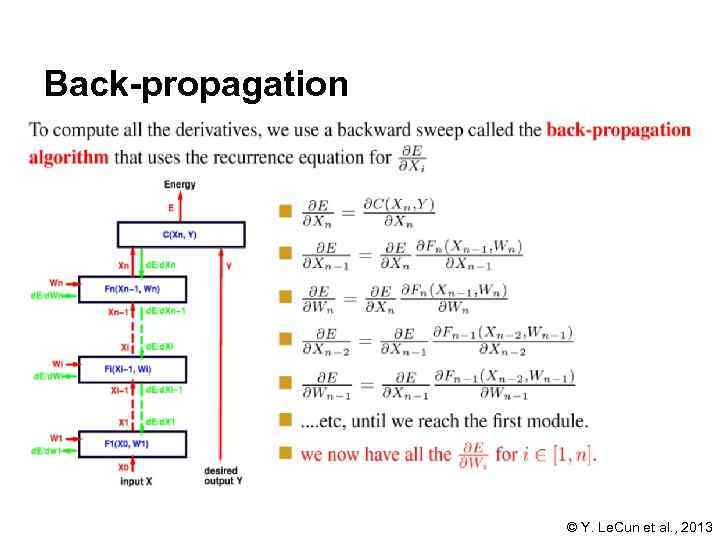

Back-propagation © Y. LeCun et al., 2013

Back-propagation:
- Any connection is permissible; networks with loops must be “unfolded in time”.
- Any module is permissible, as long as it is continuous and differentiable almost everywhere with respect to its parameters and with respect to its non-terminal inputs.
- Supervised learning is non-convex: local minima, saddle points.
- Back-propagation + SGD properties
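A small sketch of back-propagation through a hypothetical 2-2-1 tanh network with a squared loss, followed by a finite-difference check of one gradient and a single SGD step. Network size, weights and learning rate are arbitrary illustrative values.

```python
import math


def forward_backward(params, x, y):
    """2-2-1 network: h = tanh(W1 x + b1), out = w2 . h + b2, loss = 0.5 * (out - y)^2.

    Returns (loss, grads); grads has the same layout as params.
    """
    W1, b1, w2, b2 = params
    # Forward pass.
    z = [sum(wji * xi for wji, xi in zip(row, x)) + bj for row, bj in zip(W1, b1)]
    h = [math.tanh(zj) for zj in z]
    out = sum(wj * hj for wj, hj in zip(w2, h)) + b2
    loss = 0.5 * (out - y) ** 2
    # Backward pass: chain rule, module by module, from the loss back to W1.
    d_out = out - y
    g_w2 = [d_out * hj for hj in h]
    g_b2 = d_out
    d_z = [d_out * wj * (1.0 - hj ** 2) for wj, hj in zip(w2, h)]  # tanh' = 1 - tanh^2
    g_W1 = [[dzj * xi for xi in x] for dzj in d_z]
    g_b1 = d_z
    return loss, (g_W1, g_b1, g_w2, g_b2)


def sgd_step(params, grads, lr):
    """Plain gradient-descent update of every parameter."""
    W1, b1, w2, b2 = params
    gW1, gb1, gw2, gb2 = grads
    return ([[w - lr * g for w, g in zip(wr, gr)] for wr, gr in zip(W1, gW1)],
            [b - lr * g for b, g in zip(b1, gb1)],
            [w - lr * g for w, g in zip(w2, gw2)],
            b2 - lr * gb2)


params = ([[0.1, -0.2], [0.4, 0.3]], [0.0, 0.1], [0.5, -0.6], 0.05)
x, y = [1.0, 2.0], 1.0
loss, grads = forward_backward(params, x, y)

# Finite-difference check of dLoss/dW1[0][1] against the back-propagated value.
eps = 1e-6
plus = ([[0.1, -0.2 + eps], [0.4, 0.3]], [0.0, 0.1], [0.5, -0.6], 0.05)
minus = ([[0.1, -0.2 - eps], [0.4, 0.3]], [0.0, 0.1], [0.5, -0.6], 0.05)
numeric = (forward_backward(plus, x, y)[0] - forward_backward(minus, x, y)[0]) / (2 * eps)
print(abs(numeric - grads[0][0][1]) < 1e-6)  # True

new_loss, _ = forward_backward(sgd_step(params, grads, lr=0.01), x, y)
print(new_loss < loss)  # True
```

A gradient check like this is a cheap way to validate any new module's backward pass before training with SGD.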

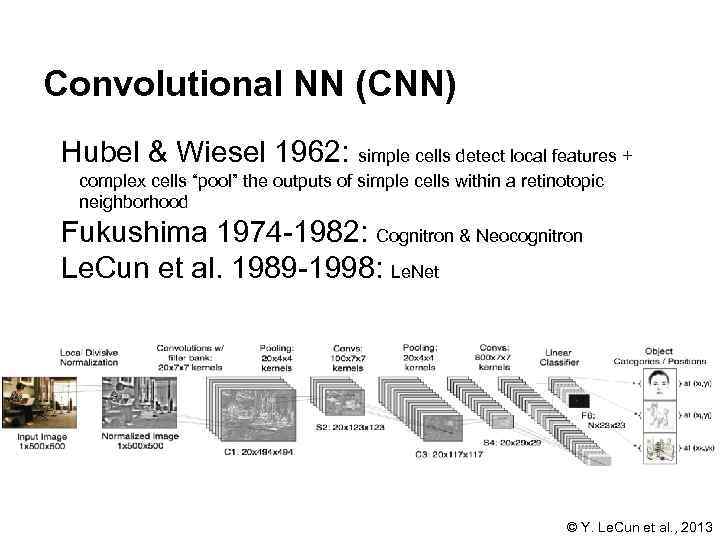

Convolutional NN (CNN): Hubel & Wiesel 1962: simple cells detect local features, complex cells “pool” the outputs of simple cells within a retinotopic neighborhood. Fukushima 1974-1982: Cognitron & Neocognitron. LeCun et al. 1989-1998: LeNet © Y. LeCun et al., 2013

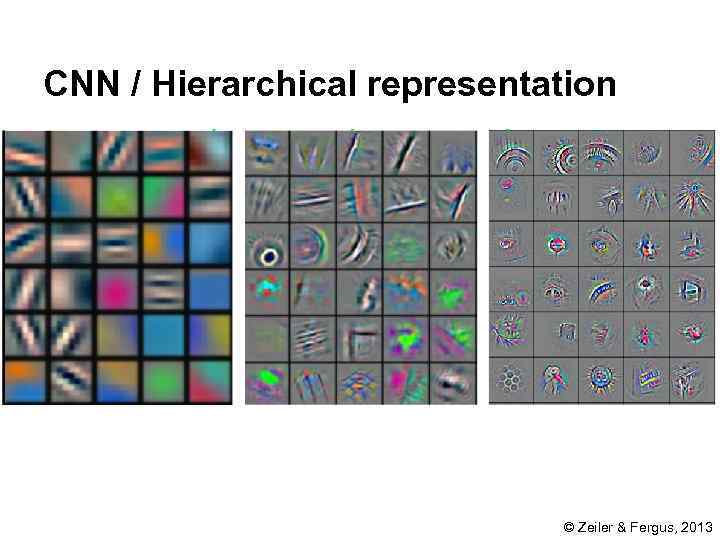

CNN / Hierarchical representation © Zeiler & Fergus, 2013

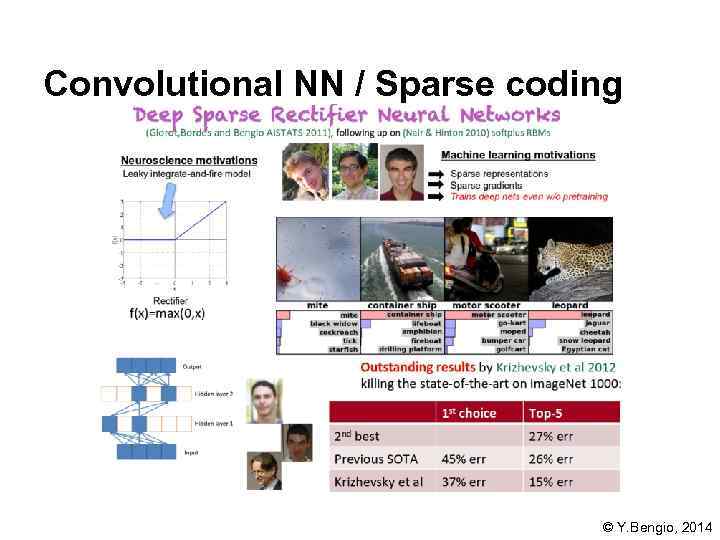

Convolutional NN / Sparse coding © Y. Bengio, 2014

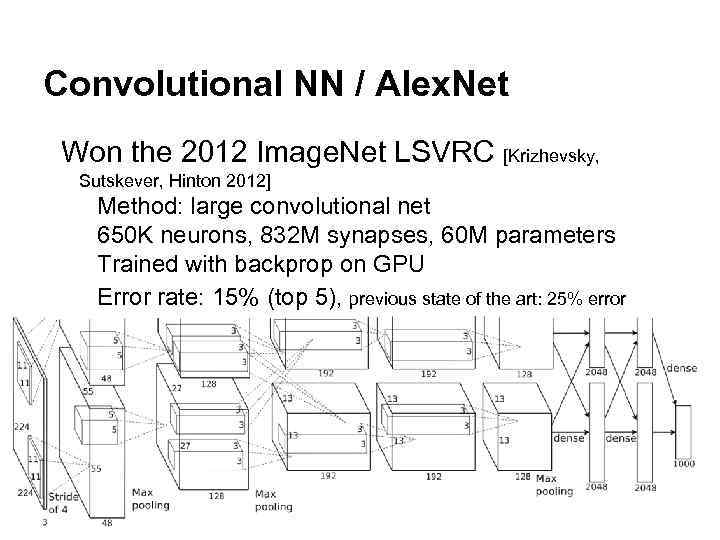

Convolutional NN / AlexNet: won the 2012 ImageNet LSVRC [Krizhevsky, Sutskever, Hinton 2012]. Method: large convolutional net with 650K neurons, 832M synapses and 60M parameters, trained with backprop on GPU. Error rate: 15% (top-5), vs. 25% for the previous state of the art.

Convolutional NN / ConvNet scene parsing (Farabet et al., ICML 2012; PAMI 2013)

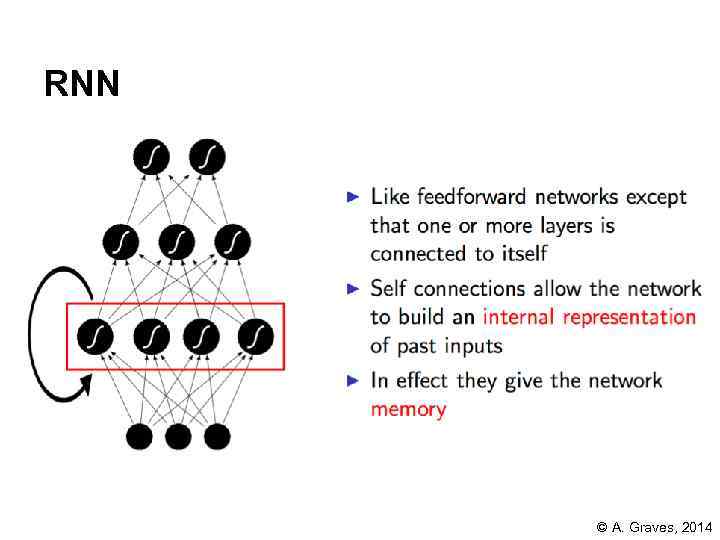

RNN © A. Graves, 2014

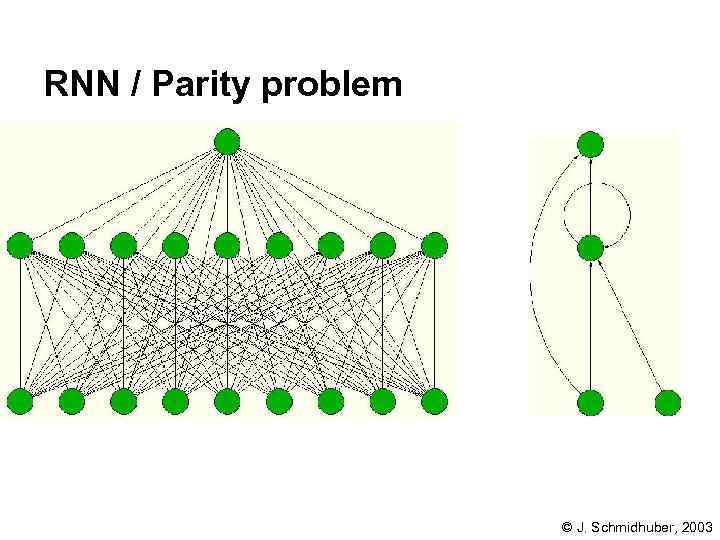

RNN / Parity problem © J. Schmidhuber, 2003

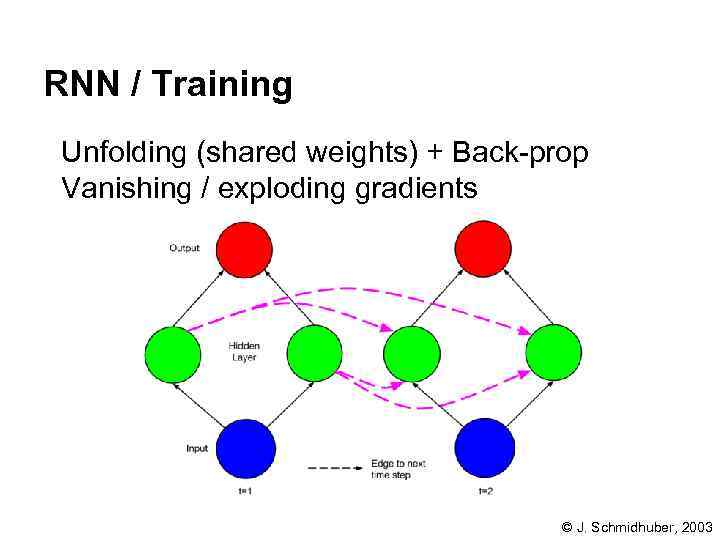

RNN / Training: unfolding in time (shared weights) + backprop; vanishing / exploding gradients © J. Schmidhuber, 2003
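The vanishing/exploding behaviour follows from the chain rule over the unfolded network: d h_T / d h_0 is a product of T local Jacobians that all involve the same shared weight. A sketch for a hypothetical scalar RNN (tanh or identity activation, our own choice):

```python
import math


def bptt_gradient(w, inputs, h0=0.0, linear=False):
    """d h_T / d h_0 for the unfolded scalar RNN h_t = f(w * h_{t-1} + x_t).

    f is tanh (or the identity if linear=True); the same weight w is shared by all steps.
    """
    h = h0
    jacobians = []
    for x in inputs:  # forward pass through the unfolded network
        a = w * h + x
        h = a if linear else math.tanh(a)
        # Local Jacobian d h_t / d h_{t-1} = f'(a_t) * w, with tanh'(a) = 1 - tanh(a)^2.
        jacobians.append(w if linear else w * (1.0 - h * h))
    grad = 1.0
    for j in reversed(jacobians):  # backward pass: chain rule from step T down to step 1
        grad *= j
    return grad


T = 50
print(bptt_gradient(0.9, [0.1] * T))               # tanh, |w| < 1: vanishes (below 0.9**50)
print(bptt_gradient(1.5, [0.0] * T, linear=True))  # linear, |w| > 1: explodes (1.5**50)
```

With tanh, each factor |w(1 − h²)| is at most |w|, so for |w| < 1 the product shrinks geometrically; in the linear case the gradient is exactly w^T.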

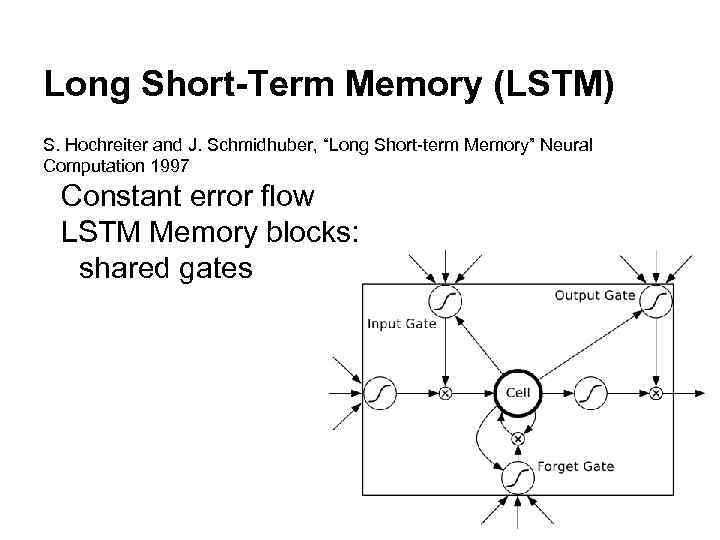

Long Short-Term Memory (LSTM): S. Hochreiter and J. Schmidhuber, “Long Short-Term Memory”, Neural Computation, 1997. Constant error flow. LSTM memory blocks: shared gates.
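A sketch of one step of a single-unit LSTM cell, assuming the now-standard formulation with a forget gate (added by Gers et al. in 2000, after the 1997 paper; fixing f = 1 gives the original constant error carousel). Parameter names are hypothetical. Since c_t = f·c_{t−1} + i·g, the derivative of c_t with respect to c_{t−1} along the direct cell-to-cell path is just f, so with the forget gate open the error flows back unchanged.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM cell (scalar input x, cell state c, output h).

    p maps hypothetical parameter names to floats: w_* (input weights), u_* (recurrent
    weights), b_* (biases) for the input gate i, forget gate f, output gate o and the
    candidate g.
    """
    pre = {k: p["w_" + k] * x + p["u_" + k] * h_prev + p["b_" + k] for k in "ifog"}
    i, f, o = sigmoid(pre["i"]), sigmoid(pre["f"]), sigmoid(pre["o"])
    g = math.tanh(pre["g"])
    c = f * c_prev + i * g  # additive cell update: error can flow through c unchanged
    h = o * math.tanh(c)
    return h, c


# Forget gate fully open, input gate shut: the cell keeps its value over many steps,
# even though the candidate g = tanh(x) changes with every input.
params = {name + k: 0.0 for name in ("w_", "u_", "b_") for k in "ifog"}
params["w_g"] = 1.0
params["b_f"], params["b_i"] = 100.0, -100.0
h, c = 0.0, 1.0
for t in range(200):
    h, c = lstm_step(x=float(t % 3), h_prev=h, c_prev=c, p=params)
print(c)  # 1.0
```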

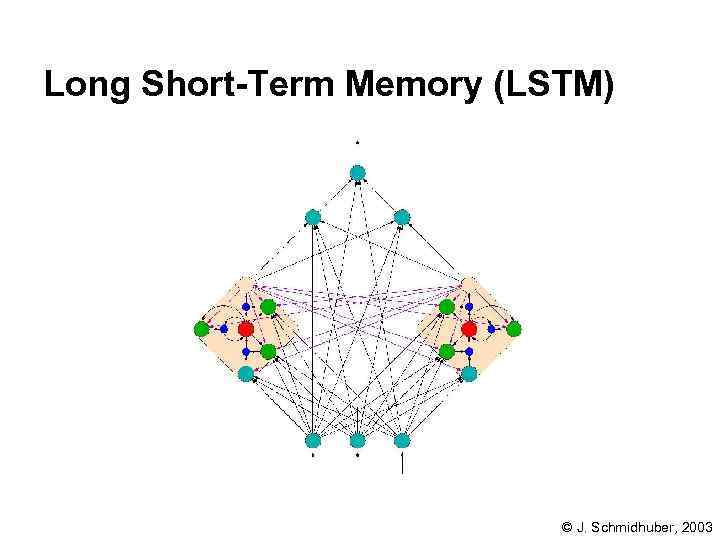

Long Short-Term Memory (LSTM) © J. Schmidhuber, 2003

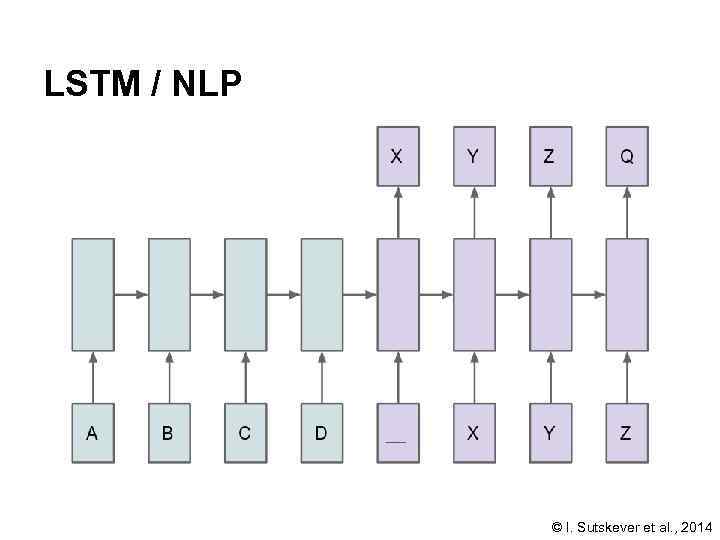

LSTM / NLP © I. Sutskever et al., 2014

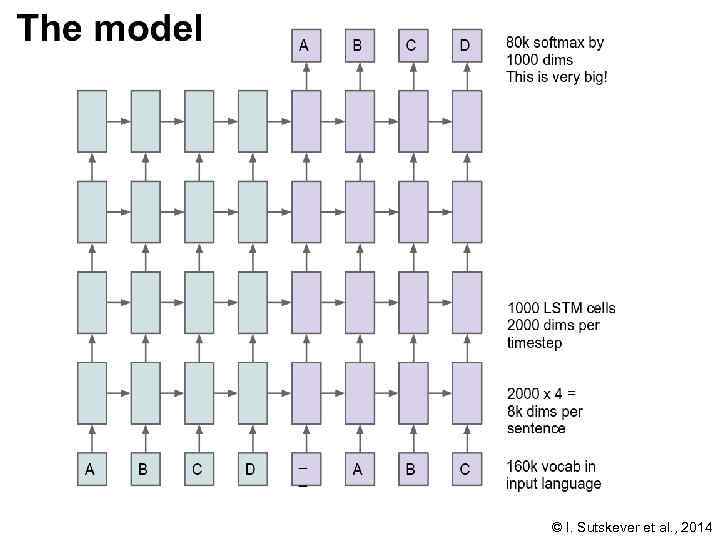

© I. Sutskever et al., 2014

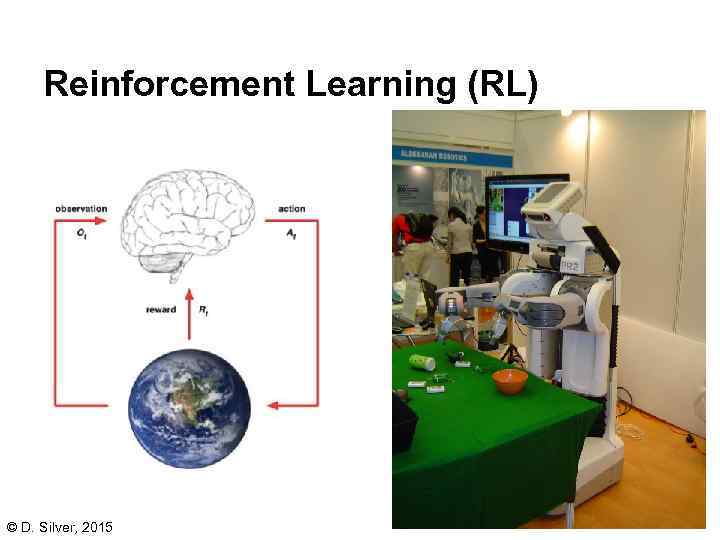

Reinforcement Learning (RL) © D. Silver, 2015

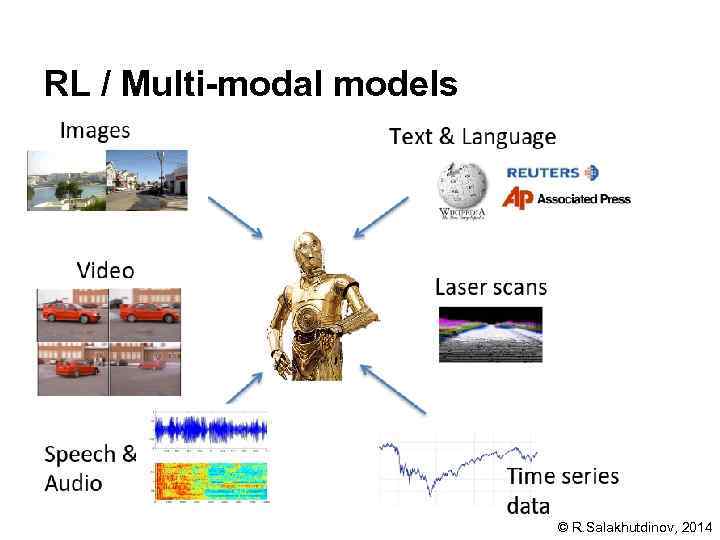

RL / Multi-modal models © R. Salakhutdinov, 2014

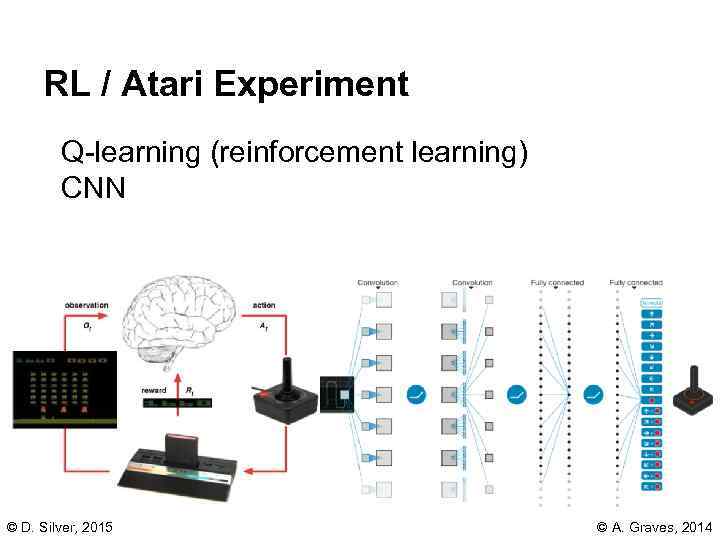

RL / Atari experiment: Q-learning (reinforcement learning) + CNN © D. Silver, 2015 © A. Graves, 2014
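The Atari agent combines Q-learning with a CNN that approximates Q. The sketch below keeps only the Q-learning part, in its tabular form, on a hypothetical 5-state chain where reaching the last state pays reward 1; the environment, hyperparameters and names are illustrative assumptions.

```python
import random

N_STATES = 5  # hypothetical chain: states 0..4, state 4 is the terminal goal
LEFT, RIGHT = 0, 1


def env_step(state, action):
    """Toy deterministic environment: reward 1.0 only when the goal state is reached."""
    nxt = max(0, state - 1) if action == LEFT else state + 1
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def q_learning(episodes=1000, alpha=0.5, gamma=0.9, epsilon=0.3, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # Q[state][action] table
    for _ in range(episodes):
        s, done, t = 0, False, 0
        while not done and t < 100:
            # Epsilon-greedy behaviour policy (ties broken at random).
            if rng.random() < epsilon or q[s][LEFT] == q[s][RIGHT]:
                a = rng.choice((LEFT, RIGHT))
            else:
                a = LEFT if q[s][LEFT] > q[s][RIGHT] else RIGHT
            s2, r, done = env_step(s, a)
            # Q-learning target: bootstrap from the best action in the next state.
            target = r if done else r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])
            s, t = s2, t + 1
    return q


q = q_learning()
greedy = ["R" if q[s][RIGHT] > q[s][LEFT] else "L" for s in range(N_STATES - 1)]
print(greedy)  # ['R', 'R', 'R', 'R']
```

In the Atari setting the table is replaced by a CNN mapping raw screen pixels to one Q-value per action, trained toward the same target.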

There is no silver bullet © A. Nguyen et al., 2015

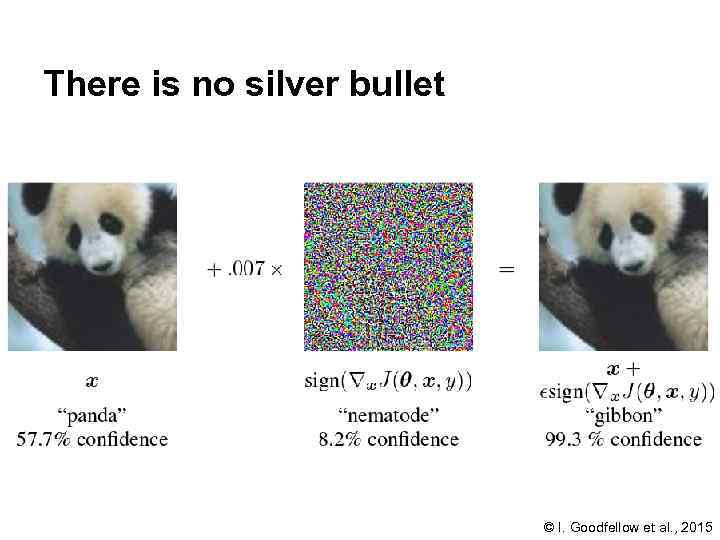

There is no silver bullet © I. Goodfellow et al., 2015

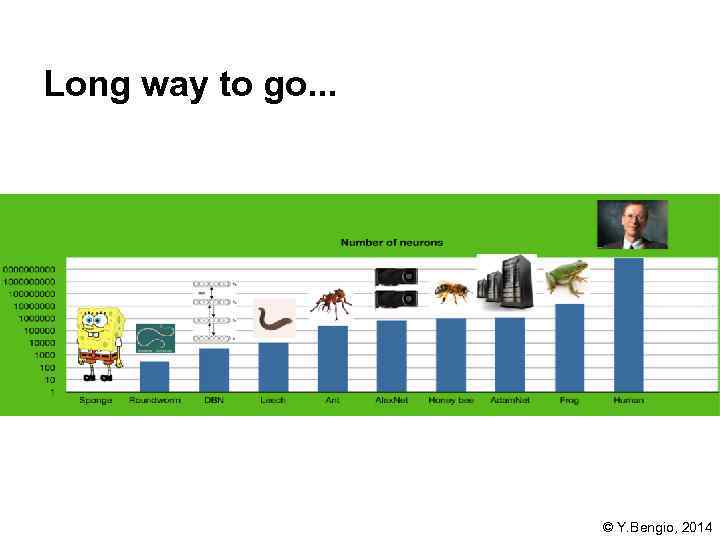

Long way to go... © Y. Bengio, 2014

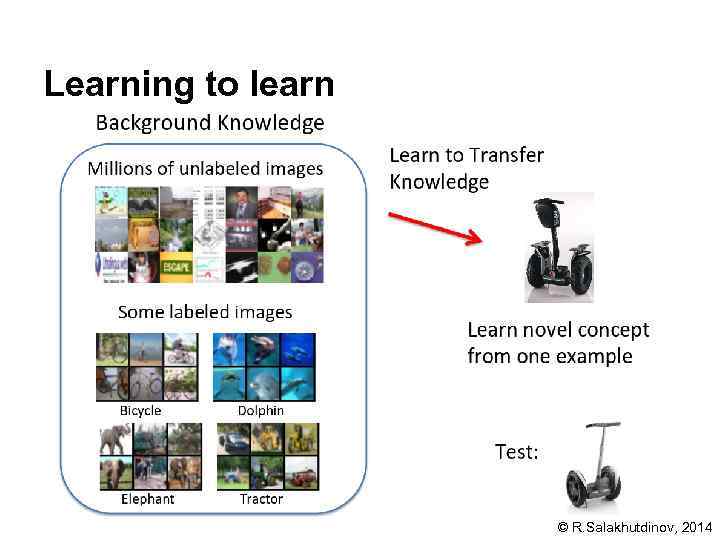

Learning to learn © R. Salakhutdinov, 2014

Need more info! / Names:
- Alex Graves
- Geoffrey E. Hinton
- Yann LeCun
- Yoshua Bengio
- Andrew Ng
- Jürgen Schmidhuber

Need more info! / URLs:
- Neural Networks and Deep Learning: http://neuralnetworksanddeeplearning.com/index.html
- Stanford CS class CS231n: Convolutional Neural Networks for Visual Recognition: http://cs231n.github.io/
- Deep Learning for Computer Vision: https://sites.google.com/site/deeplearningcvpr2014/
- Unsupervised Feature Learning and Deep Learning Tutorial: http://ufldl.stanford.edu/wiki/index.php/UFLDL_Tutorial
- Recurrent Neural Networks: http://people.idsia.ch/~juergen/rnn.html

Need more info! / Tools:
- Caffe deep learning framework (BVLC): http://caffe.berkeleyvision.org/
- Theano/Pylearn2 (LISA, U. Montreal; Python & NumPy): http://deeplearning.net/software/theano, http://deeplearning.net/software/pylearn2
- Torch7 (Lua/C++; NYU, Google DeepMind, Twitter, etc.): http://torch.ch/
- MatConvNet (Matlab/C++; VGG, U. Oxford): http://www.vlfeat.org/matconvnet
- TensorFlow (Python/C++, Google): http://www.tensorflow.org/
- cuDNN (NVIDIA)

Questions? Thank you!
