35689028622015d7f3f1272709ecf05a.ppt

- Number of slides: 48

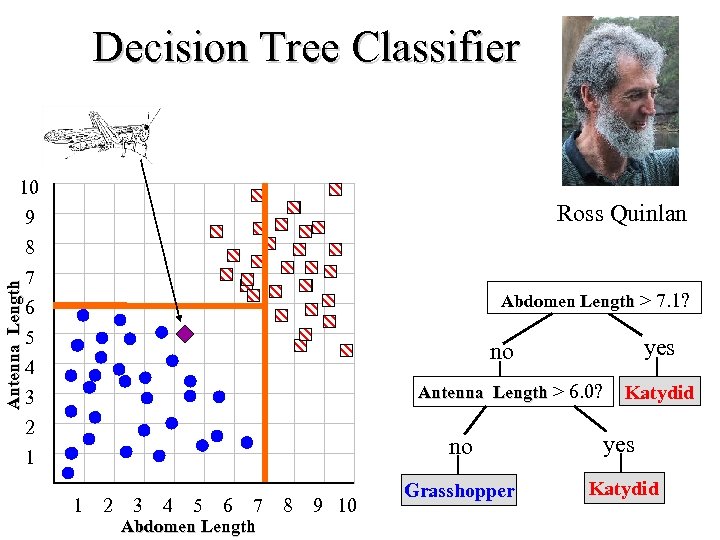

Decision Tree Classifier (Ross Quinlan)

[Figure: scatter plot of Antenna Length (1 to 10) against Abdomen Length (1 to 10), shown with a decision tree that tests "Abdomen Length > 7.1?" and then "Antenna Length > 6.0?"; the leaves are Katydid, Grasshopper, and Katydid.]

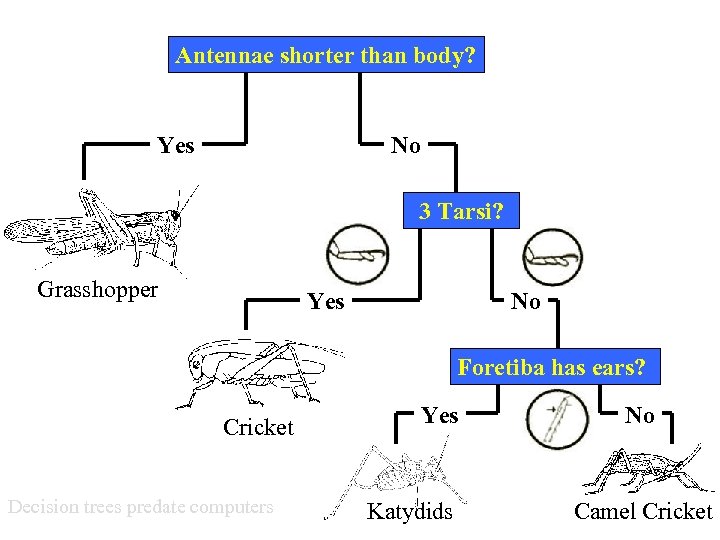

Decision trees predate computers

- Antennae shorter than body?
  - Yes: Grasshopper
  - No: 3 tarsi?
    - Yes: Cricket
    - No: Foretibia has ears?
      - Yes: Katydids
      - No: Camel Cricket

Decision Tree Classification

- Decision tree
  - A flow-chart-like tree structure
  - Internal node denotes a test on an attribute
  - Branch represents an outcome of the test
  - Leaf nodes represent class labels or class distribution
- Decision tree generation consists of two phases
  - Tree construction
    - At start, all the training examples are at the root
    - Partition examples recursively based on selected attributes
  - Tree pruning
    - Identify and remove branches that reflect noise or outliers
- Use of decision tree: classifying an unknown sample
  - Test the attribute values of the sample against the decision tree

How do we construct the decision tree?

- Basic algorithm (a greedy algorithm)
  - Tree is constructed in a top-down, recursive, divide-and-conquer manner
  - At start, all the training examples are at the root
  - Attributes are categorical (if continuous-valued, they can be discretized in advance)
  - Examples are partitioned recursively based on selected attributes
  - Test attributes are selected on the basis of a heuristic or statistical measure (e.g., information gain)
- Conditions for stopping partitioning
  - All samples for a given node belong to the same class
  - There are no remaining attributes for further partitioning (majority voting is employed for classifying the leaf)
  - There are no samples left
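
The greedy procedure and its stopping conditions can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the exact algorithm from any particular system: attributes are categorical, information gain is the selection measure, and the toy data (`outlook`, `windy`, `play`/`stay`) is hypothetical and not taken from the slides.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((c / total) * log2(c / total) for c in Counter(labels).values())


def information_gain(rows, labels, attr):
    """Expected reduction in entropy from partitioning on a categorical attribute."""
    groups = {}
    for row, label in zip(rows, labels):
        groups.setdefault(row[attr], []).append(label)
    remainder = sum(len(g) / len(labels) * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


def build_tree(rows, labels, attrs, default=None):
    """Return a class label (leaf) or a tuple (attribute, {value: subtree})."""
    if not labels:                        # stop: no samples left
        return default
    majority = Counter(labels).most_common(1)[0][0]
    if len(set(labels)) == 1:             # stop: all samples in the same class
        return labels[0]
    if not attrs:                         # stop: no attributes left, majority vote
        return majority
    best = max(attrs, key=lambda a: information_gain(rows, labels, a))
    rest = [a for a in attrs if a != best]
    branches = {}
    for value in {row[best] for row in rows}:
        subset = [(r, l) for r, l in zip(rows, labels) if r[best] == value]
        sub_rows = [r for r, _ in subset]
        sub_labels = [l for _, l in subset]
        branches[value] = build_tree(sub_rows, sub_labels, rest, majority)
    return (best, branches)


def classify(tree, row):
    """Follow the tests from the root until a leaf (a plain label) is reached."""
    while isinstance(tree, tuple):
        attr, branches = tree
        tree = branches[row[attr]]
    return tree


# Hypothetical toy data, not taken from the slides.
rows = [
    {"outlook": "sunny", "windy": "no"},
    {"outlook": "sunny", "windy": "yes"},
    {"outlook": "rainy", "windy": "no"},
    {"outlook": "rainy", "windy": "yes"},
]
labels = ["play", "play", "play", "stay"]
tree = build_tree(rows, labels, ["outlook", "windy"])
```

Because branches are only created for attribute values that occur in the current partition, the "no samples left" case never fires in this sketch; it is kept to mirror the stopping conditions listed above.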

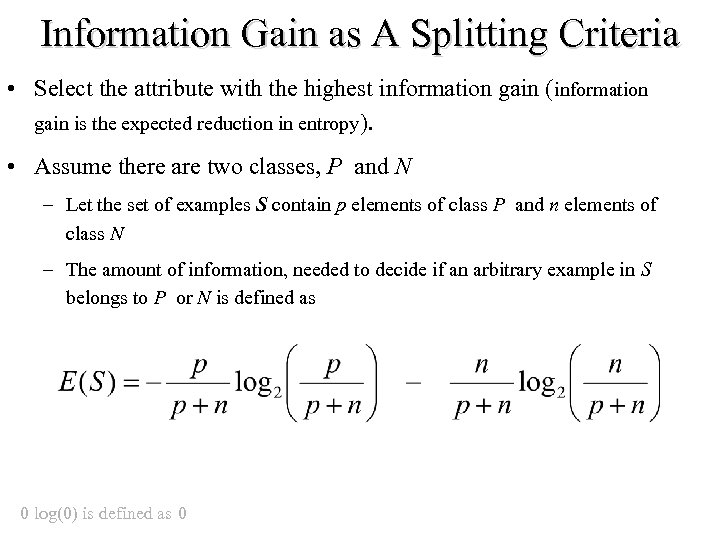

Information Gain as a Splitting Criterion

- Select the attribute with the highest information gain (information gain is the expected reduction in entropy).
- Assume there are two classes, P and N
  - Let the set of examples S contain p elements of class P and n elements of class N
  - The amount of information needed to decide if an arbitrary example in S belongs to P or N is defined as

$$\mathrm{Entropy}(S) = -\frac{p}{p+n}\log_2\frac{p}{p+n} - \frac{n}{p+n}\log_2\frac{n}{p+n}$$

  where 0 log(0) is defined as 0

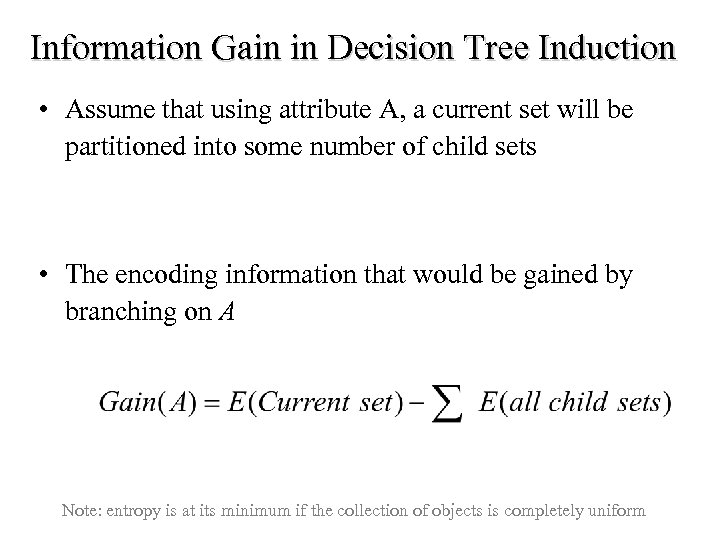

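Information Gain in Decision Tree Induction

- Assume that using attribute A, a current set will be partitioned into some number of child sets
- The encoding information that would be gained by branching on A is

$$\mathrm{Gain}(A) = \mathrm{Entropy}(\text{current set}) - \sum_{\text{child sets}} \frac{|\text{child set}|}{|\text{current set}|}\,\mathrm{Entropy}(\text{child set})$$

Note: entropy is at its minimum if the collection of objects is completely uniform (all objects belong to the same class)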
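
As a rough sketch, entropy and information gain can be computed directly from class counts. The function names `entropy` and `information_gain` are illustrative choices, and the counts used are the [females, males] counts from the worked example in this deck.

```python
from math import log2


def entropy(counts):
    """Entropy in bits of a class-count list such as [4, 5]; 0*log(0) is treated as 0."""
    total = sum(counts)
    return -sum((c / total) * log2(c / total) for c in counts if c > 0)


def information_gain(parent_counts, child_counts):
    """Parent entropy minus the size-weighted entropy of the child sets."""
    total = sum(parent_counts)
    remainder = sum(sum(child) / total * entropy(child) for child in child_counts)
    return entropy(parent_counts) - remainder


# Counts are [females, males].
print(round(entropy([4, 5]), 4))                             # 0.9911
print(round(information_gain([4, 5], [[4, 1], [0, 4]]), 4))  # 0.59
```

The second call corresponds to the split Weight <= 160, whose child sets contain (4 F, 1 M) and (0 F, 4 M).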

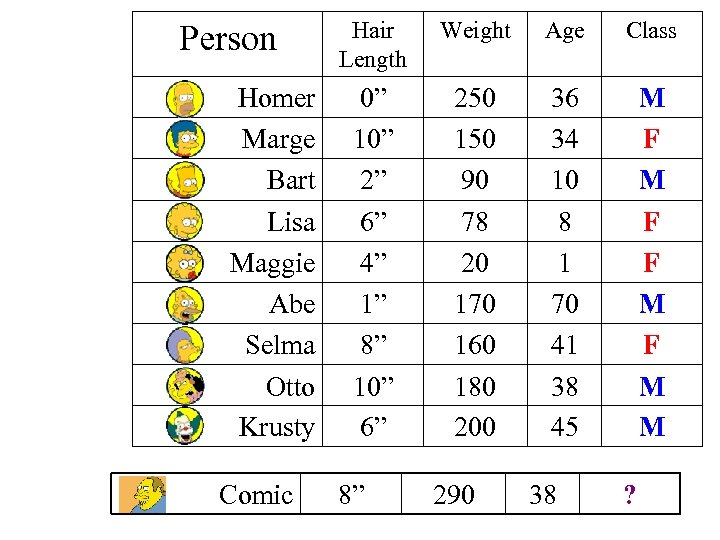

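| Person | Hair Length | Weight | Age | Class |
|--------|-------------|--------|-----|-------|
| Homer  | 0”  | 250 | 36 | M |
| Marge  | 10” | 150 | 34 | F |
| Bart   | 2”  | 90  | 10 | M |
| Lisa   | 6”  | 78  | 8  | F |
| Maggie | 4”  | 20  | 1  | F |
| Abe    | 1”  | 170 | 70 | M |
| Selma  | 8”  | 160 | 41 | F |
| Otto   | 10” | 180 | 38 | M |
| Krusty | 6”  | 200 | 45 | M |
| Comic  | 8”  | 290 | 38 | ? |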

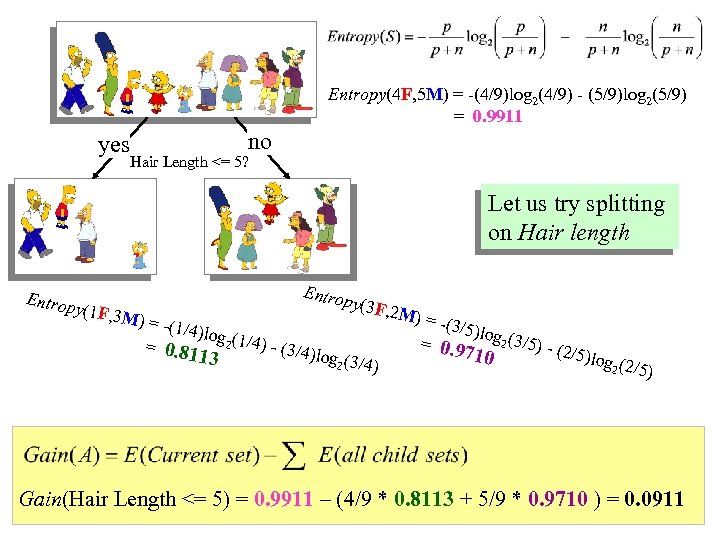

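Let us try splitting on Hair Length (Hair Length <= 5?)

$$\mathrm{Entropy}(4F, 5M) = -\tfrac{4}{9}\log_2\tfrac{4}{9} - \tfrac{5}{9}\log_2\tfrac{5}{9} = 0.9911$$

- yes branch: $\mathrm{Entropy}(1F, 3M) = -\tfrac{1}{4}\log_2\tfrac{1}{4} - \tfrac{3}{4}\log_2\tfrac{3}{4} = 0.8113$
- no branch: $\mathrm{Entropy}(3F, 2M) = -\tfrac{3}{5}\log_2\tfrac{3}{5} - \tfrac{2}{5}\log_2\tfrac{2}{5} = 0.9710$

$$\mathrm{Gain}(\text{Hair Length} \le 5) = 0.9911 - \left(\tfrac{4}{9} \cdot 0.8113 + \tfrac{5}{9} \cdot 0.9710\right) = 0.0911$$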

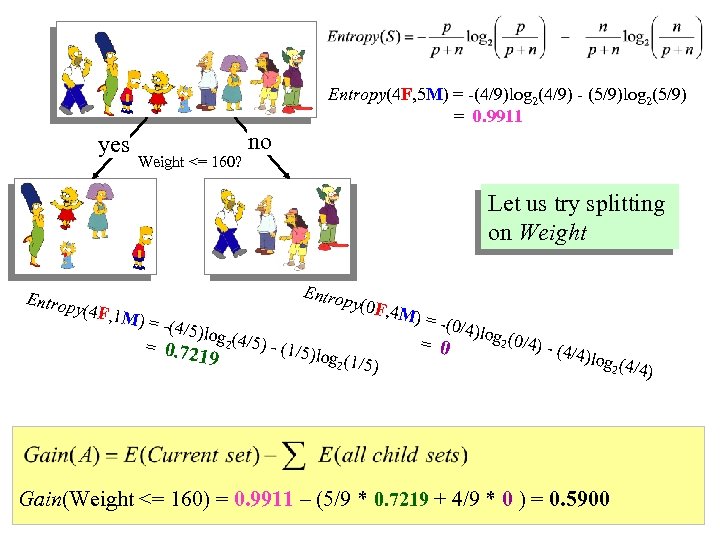

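Let us try splitting on Weight (Weight <= 160?)

$$\mathrm{Entropy}(4F, 5M) = -\tfrac{4}{9}\log_2\tfrac{4}{9} - \tfrac{5}{9}\log_2\tfrac{5}{9} = 0.9911$$

- yes branch: $\mathrm{Entropy}(4F, 1M) = -\tfrac{4}{5}\log_2\tfrac{4}{5} - \tfrac{1}{5}\log_2\tfrac{1}{5} = 0.7219$
- no branch: $\mathrm{Entropy}(0F, 4M) = -\tfrac{0}{4}\log_2\tfrac{0}{4} - \tfrac{4}{4}\log_2\tfrac{4}{4} = 0$

$$\mathrm{Gain}(\text{Weight} \le 160) = 0.9911 - \left(\tfrac{5}{9} \cdot 0.7219 + \tfrac{4}{9} \cdot 0\right) = 0.5900$$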

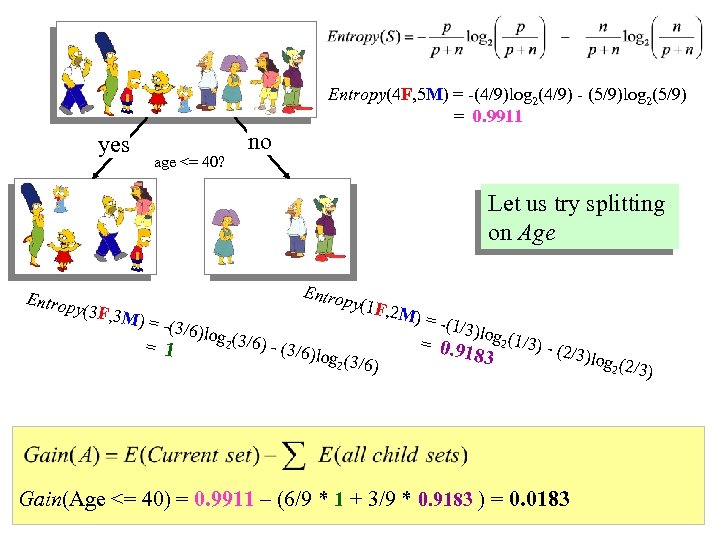

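Let us try splitting on Age (Age <= 40?)

$$\mathrm{Entropy}(4F, 5M) = -\tfrac{4}{9}\log_2\tfrac{4}{9} - \tfrac{5}{9}\log_2\tfrac{5}{9} = 0.9911$$

- yes branch: $\mathrm{Entropy}(3F, 3M) = -\tfrac{3}{6}\log_2\tfrac{3}{6} - \tfrac{3}{6}\log_2\tfrac{3}{6} = 1$
- no branch: $\mathrm{Entropy}(1F, 2M) = -\tfrac{1}{3}\log_2\tfrac{1}{3} - \tfrac{2}{3}\log_2\tfrac{2}{3} = 0.9183$

$$\mathrm{Gain}(\text{Age} \le 40) = 0.9911 - \left(\tfrac{6}{9} \cdot 1 + \tfrac{3}{9} \cdot 0.9183\right) = 0.0183$$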
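
The three candidate splits can be checked with a short script. This is a sketch under one labeled assumption: the M/F label attached to each person below is inferred from the class counts in the worked examples, since the extracted table does not preserve the full Class column. The names `PEOPLE` and `gain` are illustrative.

```python
from collections import Counter
from math import log2

# (name, hair_length, weight, age, sex). The sex labels are an assumption
# inferred from the (F, M) counts reported for each split in the slides.
PEOPLE = [
    ("Homer", 0, 250, 36, "M"),
    ("Marge", 10, 150, 34, "F"),
    ("Bart", 2, 90, 10, "M"),
    ("Lisa", 6, 78, 8, "F"),
    ("Maggie", 4, 20, 1, "F"),
    ("Abe", 1, 170, 70, "M"),
    ("Selma", 8, 160, 41, "F"),
    ("Otto", 10, 180, 38, "M"),
    ("Krusty", 6, 200, 45, "M"),
]
HAIR, WEIGHT, AGE, SEX = 1, 2, 3, 4


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((c / total) * log2(c / total) for c in Counter(labels).values())


def gain(rows, column, threshold):
    """Information gain of the binary test rows[column] <= threshold."""
    yes = [r[SEX] for r in rows if r[column] <= threshold]
    no = [r[SEX] for r in rows if r[column] > threshold]
    remainder = sum(len(part) / len(rows) * entropy(part) for part in (yes, no) if part)
    return entropy([r[SEX] for r in rows]) - remainder


for name, column, threshold in [("Hair Length", HAIR, 5), ("Weight", WEIGHT, 160), ("Age", AGE, 40)]:
    print(f"Gain({name} <= {threshold}) = {gain(PEOPLE, column, threshold):.4f}")
# Gain(Hair Length <= 5) = 0.0911
# Gain(Weight <= 160) = 0.5900
# Gain(Age <= 40) = 0.0183
```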

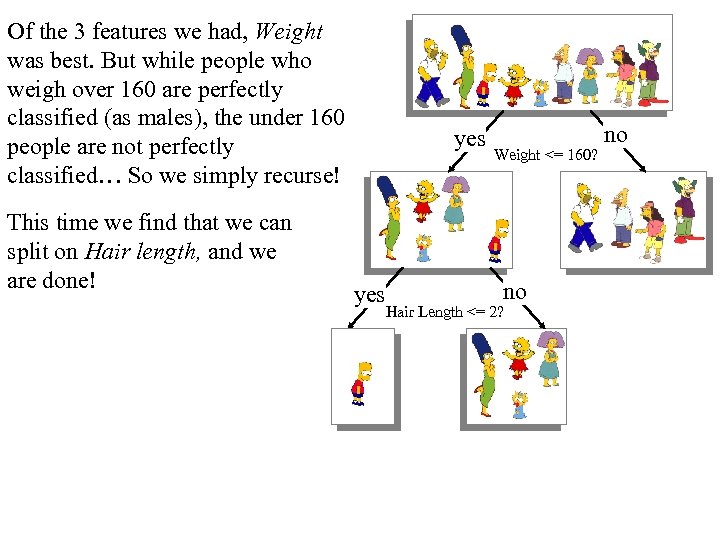

Of the 3 features we had, Weight was best. But while people who weigh over 160 are perfectly classified (as males), the people who weigh 160 or less are not perfectly classified… So we simply recurse! This time we find that we can split on Hair Length, and we are done!

[Figure: tree with root test "Weight <= 160?"; its "yes" branch leads to a second test, "Hair Length <= 2?".]

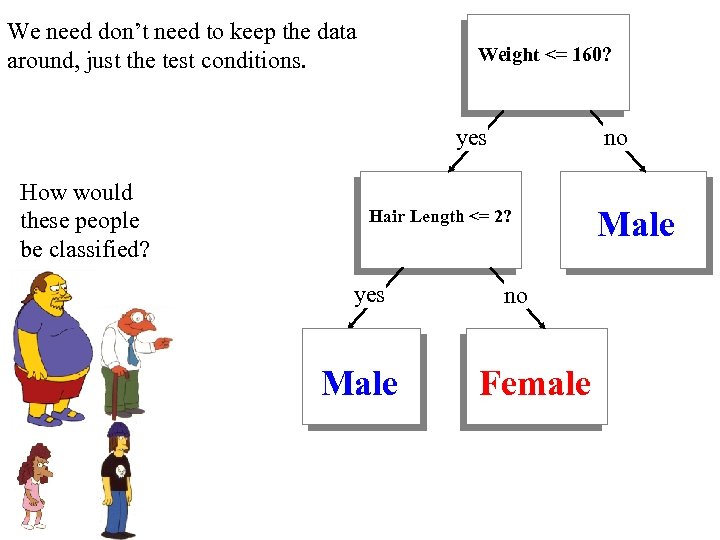

We don’t need to keep the data around, just the test conditions. How would these people be classified?

- Weight <= 160?
  - yes: Hair Length <= 2?
    - yes: Male
    - no: Female
  - no: Male

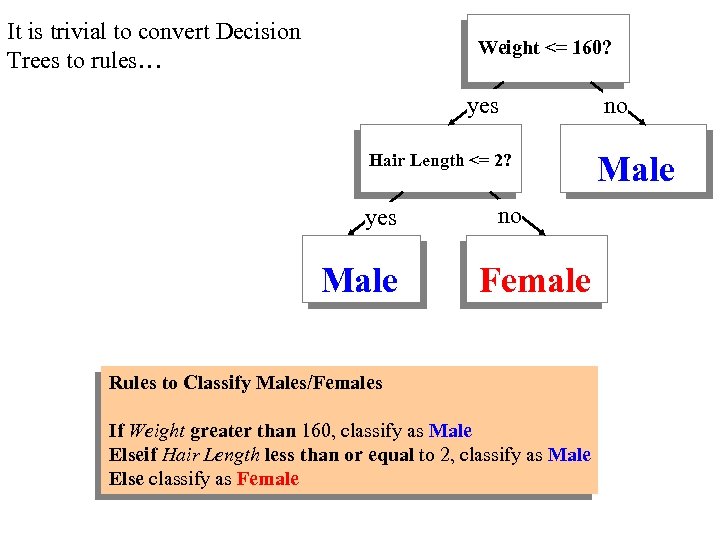

It is trivial to convert Decision Trees to rules…

- Weight <= 160?
  - yes: Hair Length <= 2?
    - yes: Male
    - no: Female
  - no: Male

Rules to Classify Males/Females

- If Weight greater than 160, classify as Male
- Elseif Hair Length less than or equal to 2, classify as Male
- Else classify as Female
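
The rule list translates almost word for word into code. A minimal sketch, where the function name `classify_sex` and its parameter names are illustrative:

```python
def classify_sex(weight, hair_length):
    """Apply the rules read off the tree: test weight first, then hair length."""
    if weight > 160:
        return "Male"
    elif hair_length <= 2:
        return "Male"
    else:
        return "Female"


# The unlabeled person from the table (hair length 8, weight 290).
print(classify_sex(weight=290, hair_length=8))  # Male
```

Note that Age does not appear at all: the learned tree never tests it, so the rules do not need it.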

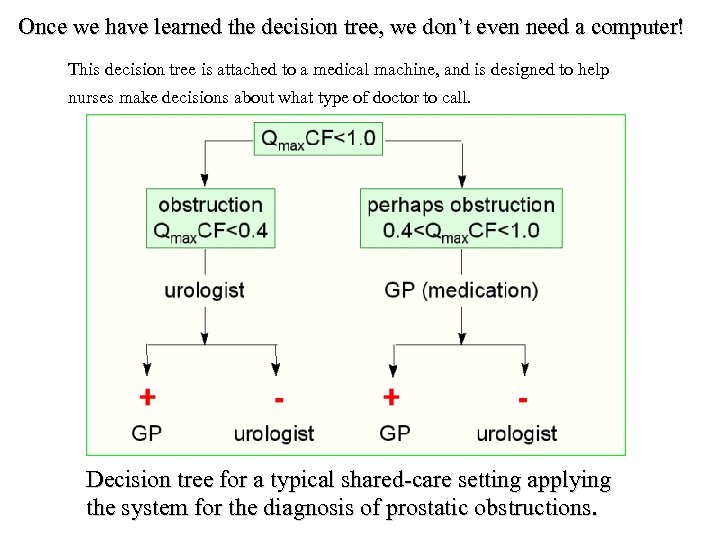

Once we have learned the decision tree, we don’t even need a computer! This decision tree is attached to a medical machine, and is designed to help nurses make decisions about what type of doctor to call. Decision tree for a typical shared-care setting applying the system for the diagnosis of prostatic obstructions.

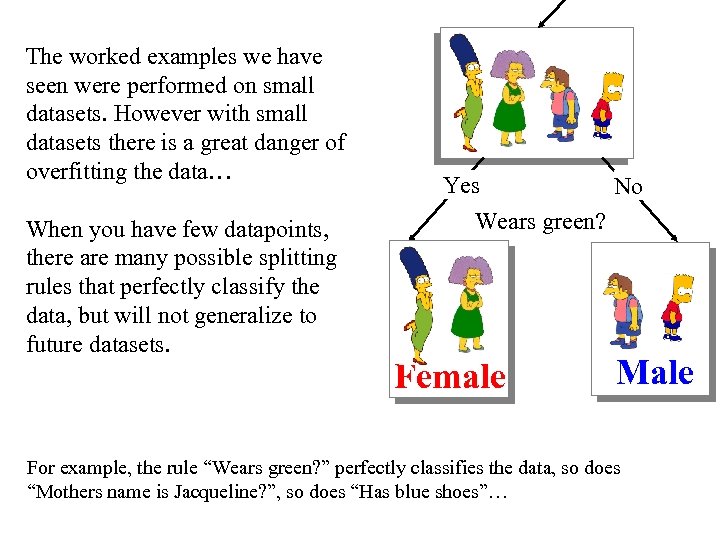

The worked examples we have seen were performed on small datasets. However, with small datasets there is a great danger of overfitting the data… When you have few datapoints, there are many possible splitting rules that perfectly classify the data but will not generalize to future datasets.

For example, the rule “Wears green?” perfectly classifies the data; so does “Mother’s name is Jacqueline?”, and so does “Has blue shoes”…

[Figure: a one-test tree, "Wears green?", with Yes/No branches leading to the leaves Female and Male.]

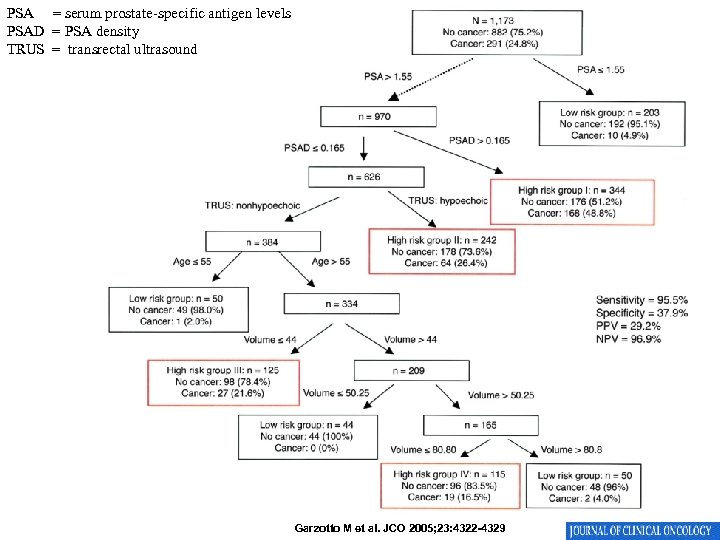

- PSA = serum prostate-specific antigen levels
- PSAD = PSA density
- TRUS = transrectal ultrasound

Garzotto M et al. JCO 2005; 23: 4322-4329

Avoid Overfitting in Classification

- The generated tree may overfit the training data
  - Too many branches, some of which may reflect anomalies due to noise or outliers
  - The result is poor accuracy for unseen samples
- Two approaches to avoid overfitting
  - Prepruning: halt tree construction early; do not split a node if this would result in the goodness measure falling below a threshold
    - Difficult to choose an appropriate threshold
  - Postpruning: remove branches from a “fully grown” tree to get a sequence of progressively pruned trees
    - Use a set of data different from the training data to decide which is the “best pruned tree”

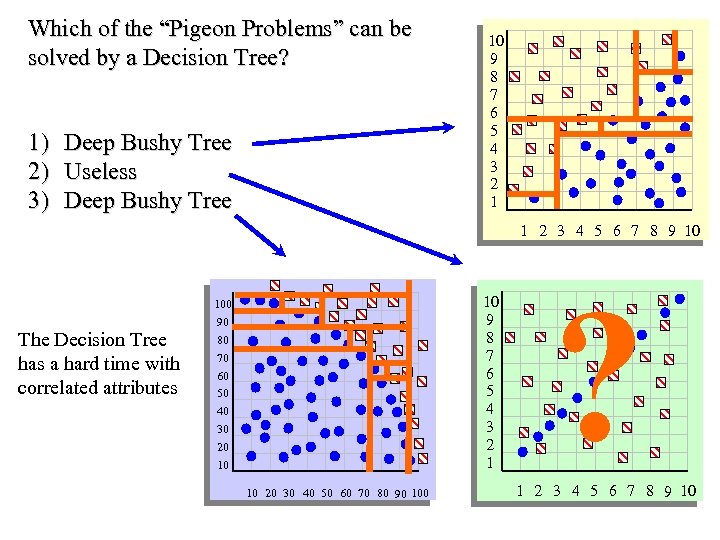

Which of the “Pigeon Problems” can be solved by a Decision Tree?

1) Deep Bushy Tree
2) Useless
3) Deep Bushy Tree

The Decision Tree has a hard time with correlated attributes.

[Figure: three scatter plots; two have both axes running from 1 to 10, and one has both axes running from 10 to 100.]

Advantages/Disadvantages of Decision Trees

- Advantages:
  - Easy to understand (Doctors love them!)
  - Easy to generate rules
- Disadvantages:
  - May suffer from overfitting
  - Classifies by rectangular partitioning (so does not handle correlated features very well)
  - Can be quite large; pruning is necessary
  - Does not handle streaming data easily

Naïve Bayes Classifier

Thomas Bayes, 1702-1761

We will start off with a visual intuition, before looking at the math…

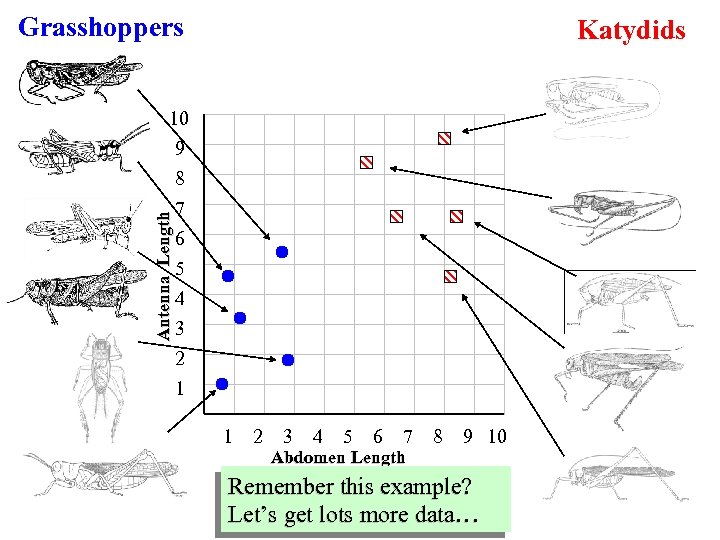

Remember this example? Let’s get lots more data…

[Figure: scatter plot of Grasshoppers and Katydids, Antenna Length (1 to 10) against Abdomen Length (1 to 10).]

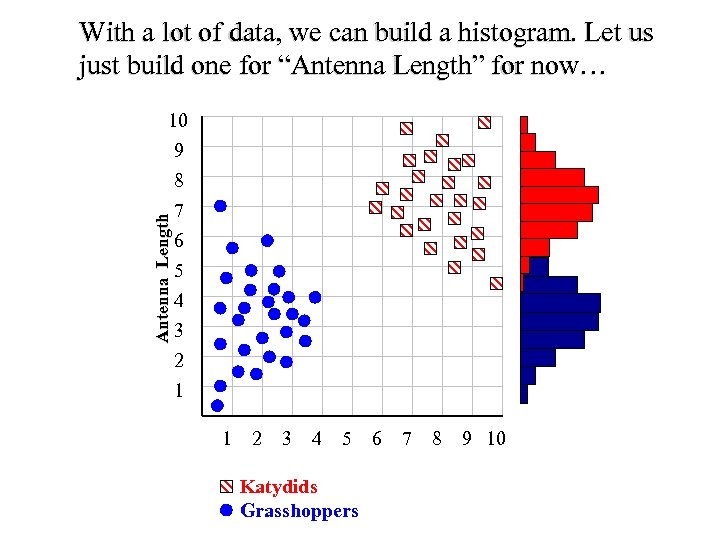

With a lot of data, we can build a histogram. Let us just build one for “Antenna Length” for now…

[Figure: histograms of Antenna Length (1 to 10) for Katydids and Grasshoppers.]

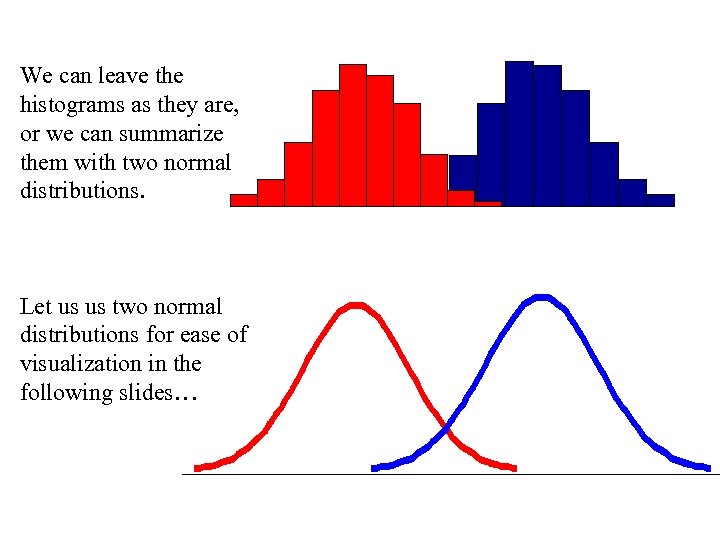

We can leave the histograms as they are, or we can summarize them with two normal distributions. Let us use two normal distributions for ease of visualization in the following slides…

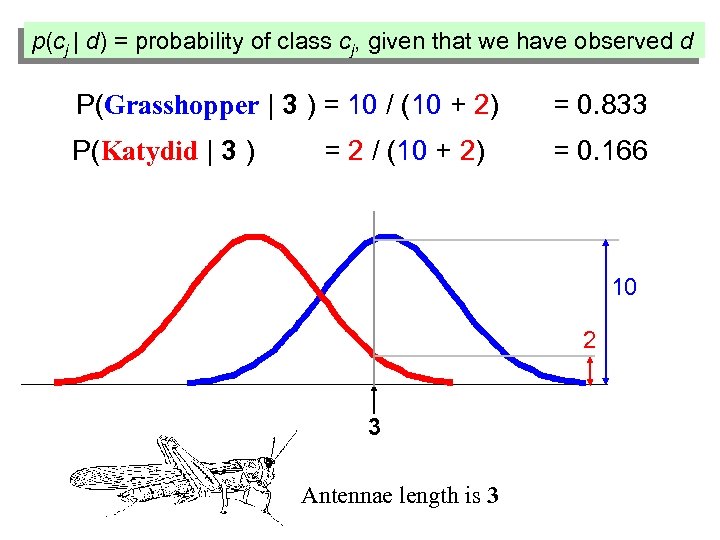

- We want to classify an insect we have found. Its antennae are 3 units long. How can we classify it?
- We can just ask ourselves: given the distributions of antennae lengths we have seen, is it more probable that our insect is a Grasshopper or a Katydid?
- There is a formal way to discuss the most probable classification…

p(cj | d) = probability of class cj, given that we have observed d

[Figure: the two distributions, with the observed value marked: Antennae length is 3.]

p(cj | d) = probability of class cj, given that we have observed d

Antennae length is 3 (counts at this value: 10 Grasshoppers, 2 Katydids)

- P(Grasshopper | 3) = 10 / (10 + 2) = 0.833
- P(Katydid | 3) = 2 / (10 + 2) = 0.167

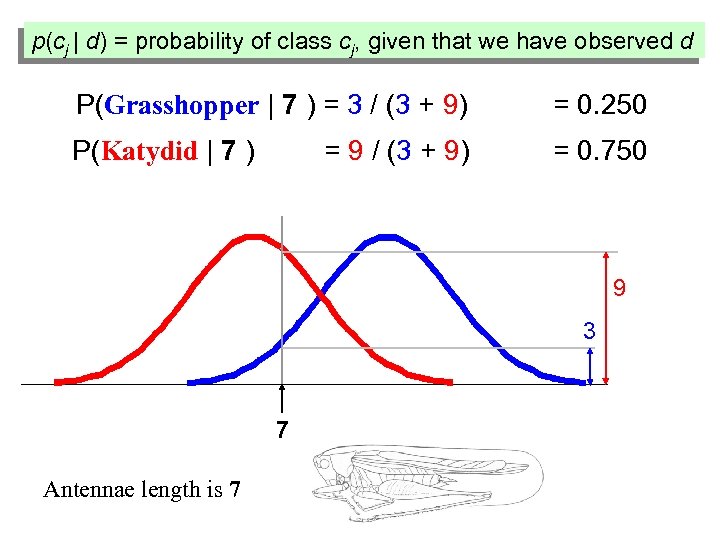

p(cj | d) = probability of class cj, given that we have observed d

Antennae length is 7 (counts at this value: 3 Grasshoppers, 9 Katydids)

- P(Grasshopper | 7) = 3 / (3 + 9) = 0.250
- P(Katydid | 7) = 9 / (3 + 9) = 0.750

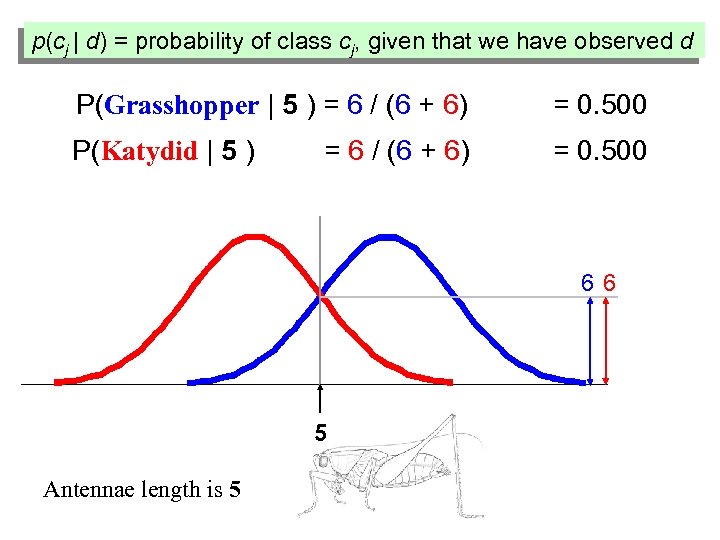

p(cj | d) = probability of class cj, given that we have observed d

Antennae length is 5 (counts at this value: 6 Grasshoppers, 6 Katydids)

- P(Grasshopper | 5) = 6 / (6 + 6) = 0.500
- P(Katydid | 5) = 6 / (6 + 6) = 0.500
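
The three calculations follow one pattern: divide each class's count at the observed value by the total count at that value. A minimal sketch, where the function name `posterior_from_counts` and the dictionary layout are illustrative and the counts are the ones read off the histograms in these slides:

```python
def posterior_from_counts(counts):
    """Turn per-class histogram counts at one observed value into probabilities."""
    total = sum(counts.values())
    return {cls: n / total for cls, n in counts.items()}


# Counts of each class at antennae lengths 3, 7 and 5.
observations = {
    3: {"Grasshopper": 10, "Katydid": 2},
    7: {"Grasshopper": 3, "Katydid": 9},
    5: {"Grasshopper": 6, "Katydid": 6},
}

posteriors = {length: posterior_from_counts(c) for length, c in observations.items()}
for length, post in posteriors.items():
    print(length, {cls: round(p, 3) for cls, p in post.items()})
```

At length 5 the two classes tie, so the histogram alone gives no basis for preferring one over the other.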

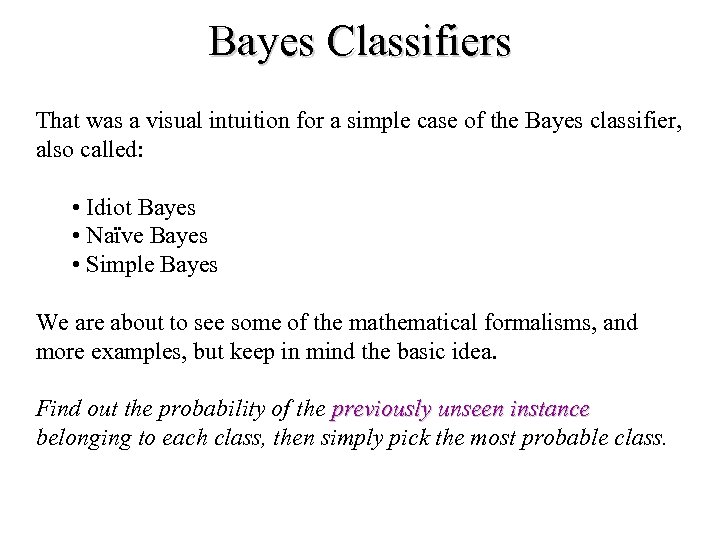

Bayes Classifiers

That was a visual intuition for a simple case of the Bayes classifier, also called:

- Idiot Bayes
- Naïve Bayes
- Simple Bayes

We are about to see some of the mathematical formalisms, and more examples, but keep in mind the basic idea: find out the probability of the previously unseen instance belonging to each class, then simply pick the most probable class.

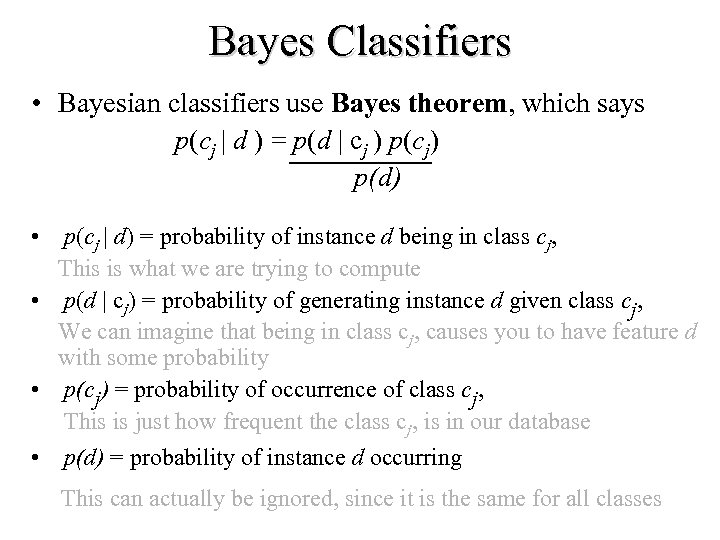

Bayes Classifiers

- Bayesian classifiers use Bayes theorem, which says

$$p(c_j \mid d) = \frac{p(d \mid c_j)\,p(c_j)}{p(d)}$$

- p(cj | d) = probability of instance d being in class cj. This is what we are trying to compute.
- p(d | cj) = probability of generating instance d given class cj. We can imagine that being in class cj causes you to have feature d with some probability.
- p(cj) = probability of occurrence of class cj. This is just how frequent the class cj is in our database.
- p(d) = probability of instance d occurring. This can actually be ignored, since it is the same for all classes.

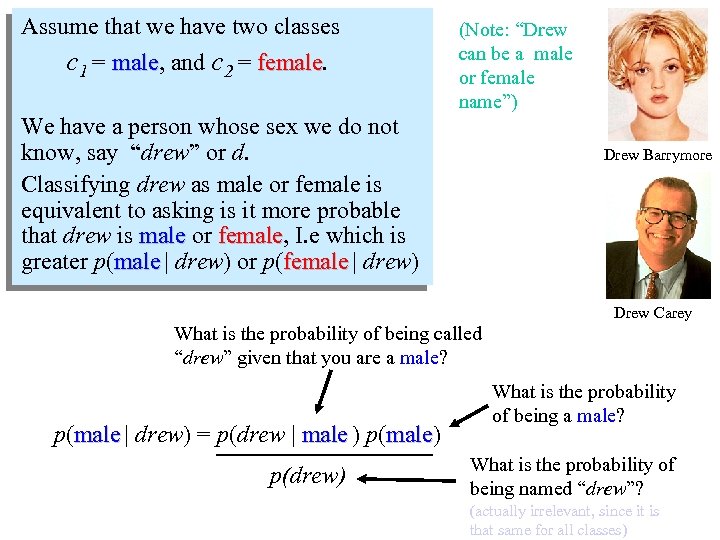

Assume that we have two classes, c1 = male and c2 = female.

We have a person whose sex we do not know, say “drew” or d. Classifying drew as male or female is equivalent to asking whether it is more probable that drew is male or female, i.e., which is greater: p(male | drew) or p(female | drew)?

(Note: “Drew” can be a male or female name, e.g. Drew Barrymore and Drew Carey.)

$$p(\text{male} \mid \text{drew}) = \frac{p(\text{drew} \mid \text{male})\,p(\text{male})}{p(\text{drew})}$$

- p(drew | male): What is the probability of being called “drew” given that you are a male?
- p(male): What is the probability of being a male?
- p(drew): What is the probability of being named “drew”? (Actually irrelevant, since it is the same for all classes.)

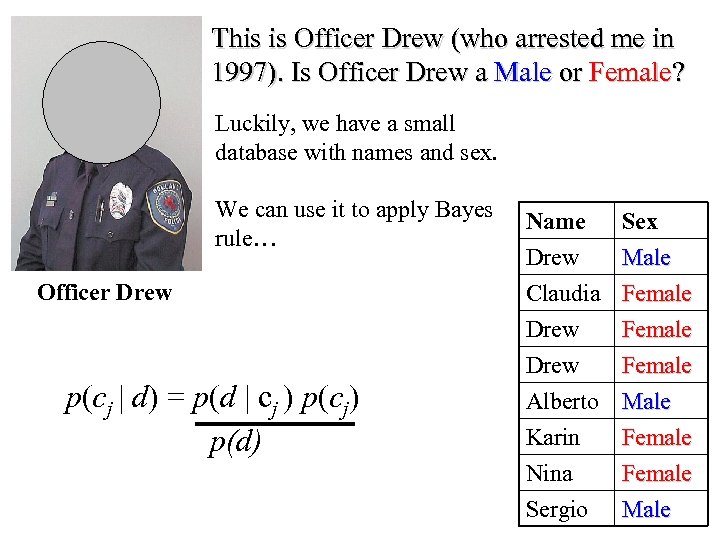

This is Officer Drew (who arrested me in 1997). Is Officer Drew a Male or Female?

Luckily, we have a small database with names and sex. We can use it to apply Bayes rule…

$$p(c_j \mid d) = \frac{p(d \mid c_j)\,p(c_j)}{p(d)}$$

| Name    | Sex    |
|---------|--------|
| Drew    | Male   |
| Claudia | Female |
| Drew    | Female |
| Drew    | Female |
| Alberto | Male   |
| Karin   | Female |
| Nina    | Female |
| Sergio  | Male   |

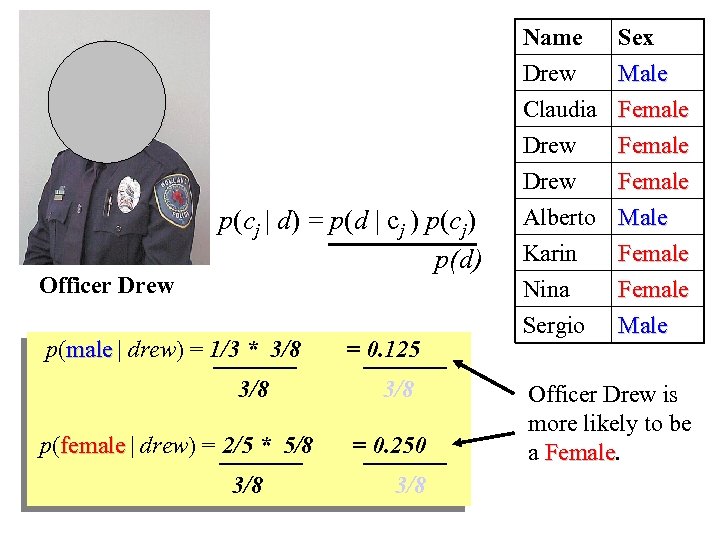

Applying Bayes rule to Officer Drew, using the name/sex database:

$$p(c_j \mid d) = \frac{p(d \mid c_j)\,p(c_j)}{p(d)}$$

$$p(\text{male} \mid \text{drew}) = \frac{1/3 \cdot 3/8}{3/8} = \frac{0.125}{3/8}$$

$$p(\text{female} \mid \text{drew}) = \frac{2/5 \cdot 5/8}{3/8} = \frac{0.250}{3/8}$$

Officer Drew is more likely to be a Female.
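
The same computation can be sketched in code. The eight (name, sex) rows below are an assumption: the table is reconstructed so that it agrees with the fractions on this slide (1/3, 3/8, 2/5, 5/8), and the names `DATABASE` and `posterior` are illustrative.

```python
from fractions import Fraction

# Reconstructed name/sex database (an assumption consistent with the slide's fractions).
DATABASE = [
    ("Drew", "Male"), ("Claudia", "Female"), ("Drew", "Female"), ("Drew", "Female"),
    ("Alberto", "Male"), ("Karin", "Female"), ("Nina", "Female"), ("Sergio", "Male"),
]


def posterior(name, sex):
    """p(sex | name) = p(name | sex) * p(sex) / p(name), estimated by counting rows."""
    in_class = [row for row in DATABASE if row[1] == sex]
    likelihood = Fraction(sum(1 for row in in_class if row[0] == name), len(in_class))
    prior = Fraction(len(in_class), len(DATABASE))
    evidence = Fraction(sum(1 for row in DATABASE if row[0] == name), len(DATABASE))
    return likelihood * prior / evidence


print(posterior("Drew", "Male"))    # 1/3
print(posterior("Drew", "Female"))  # 2/3
```

Dividing by p(drew) = 3/8 only rescales both numerators (0.125 and 0.250) so that they sum to 1; it does not change which class wins.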

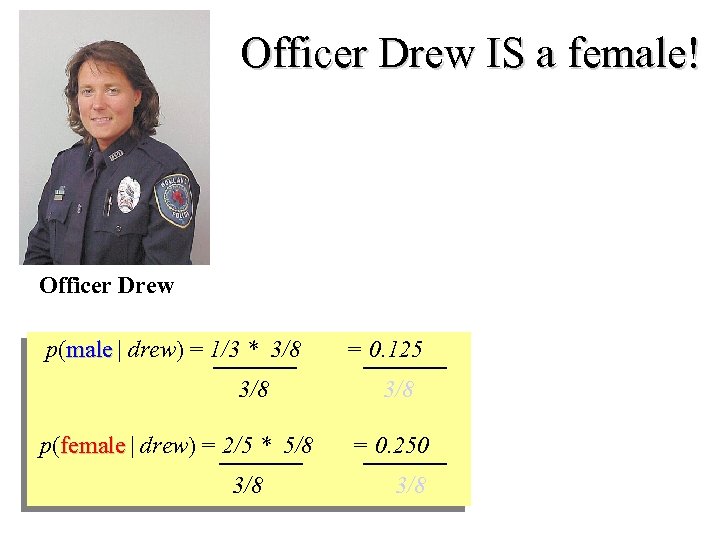

Officer Drew IS a female!

$$p(\text{male} \mid \text{drew}) = \frac{1/3 \cdot 3/8}{3/8} = \frac{0.125}{3/8}$$

$$p(\text{female} \mid \text{drew}) = \frac{2/5 \cdot 5/8}{3/8} = \frac{0.250}{3/8}$$

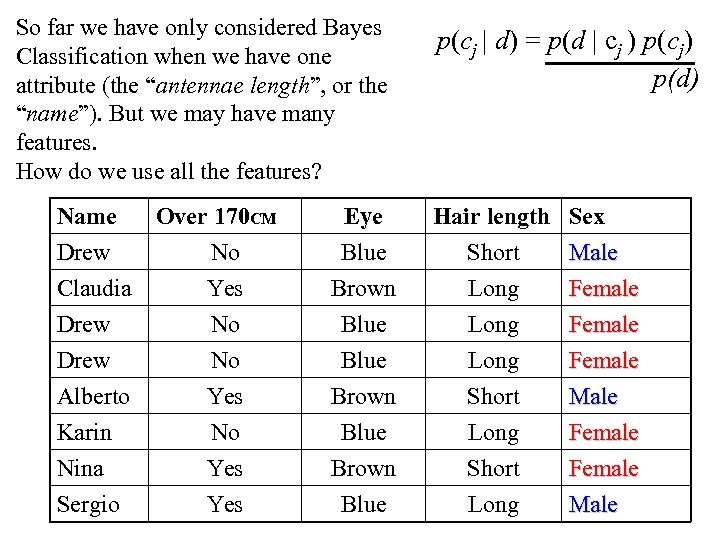

So far we have only considered Bayes Classification when we have one attribute (the “antennae length”, or the “name”). But we may have many features. How do we use all the features?

$$p(c_j \mid d) = \frac{p(d \mid c_j)\,p(c_j)}{p(d)}$$

| Name    | Over 170 cm | Eye   | Hair length | Sex    |
|---------|-------------|-------|-------------|--------|
| Drew    | No          | Blue  | Short       | Male   |
| Claudia | Yes         | Brown | Long        | Female |
| Drew    | No          | Blue  | …           | …      |
| Drew    | …           | …     | …           | …      |
| Alberto | …           | …     | …           | …      |
| Karin   | …           | …     | …           | …      |
| Nina    | …           | …     | …           | …      |
| Sergio  | Yes         | Blue  | Long        | Male   |

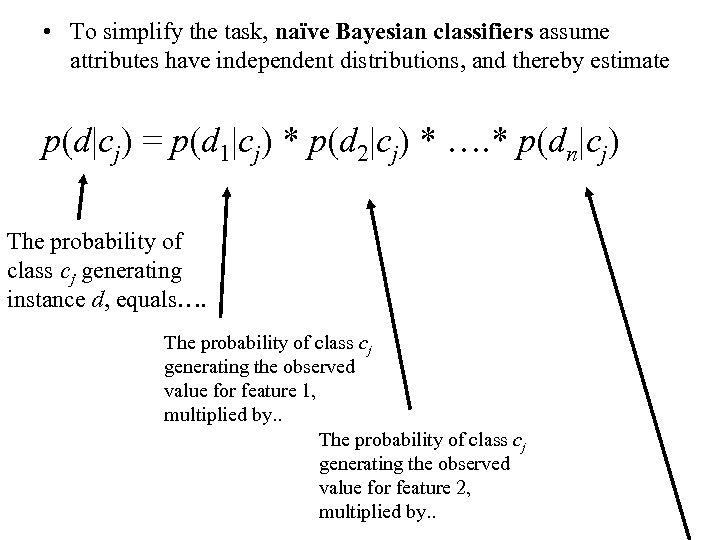

- To simplify the task, naïve Bayesian classifiers assume attributes have independent distributions, and thereby estimate

$$p(d \mid c_j) = p(d_1 \mid c_j) \cdot p(d_2 \mid c_j) \cdots p(d_n \mid c_j)$$

- p(d | cj): the probability of class cj generating instance d, equals…
- p(d1 | cj): the probability of class cj generating the observed value for feature 1, multiplied by…
- p(d2 | cj): the probability of class cj generating the observed value for feature 2, multiplied by…

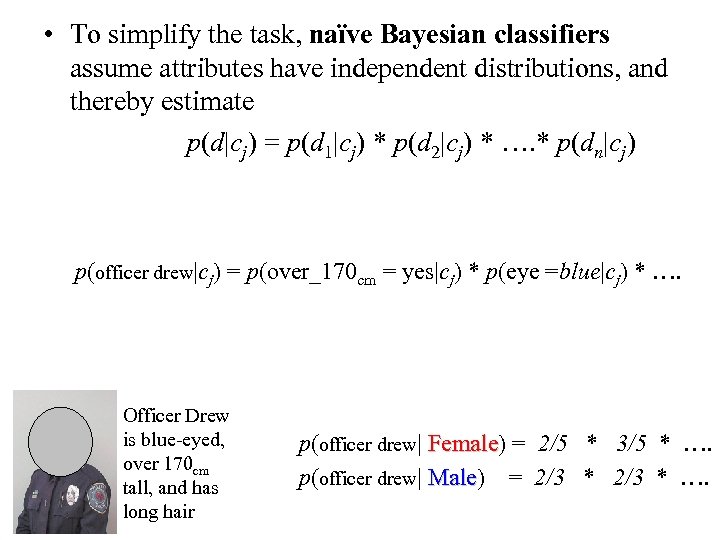

- To simplify the task, naïve Bayesian classifiers assume attributes have independent distributions, and thereby estimate

$$p(d \mid c_j) = p(d_1 \mid c_j) \cdot p(d_2 \mid c_j) \cdots p(d_n \mid c_j)$$

Officer Drew is blue-eyed, over 170 cm tall, and has long hair:

- p(officer drew | cj) = p(over_170cm = yes | cj) * p(eye = blue | cj) * …
- p(officer drew | Female) = 2/5 * 3/5 * …
- p(officer drew | Male) = 2/3 * …

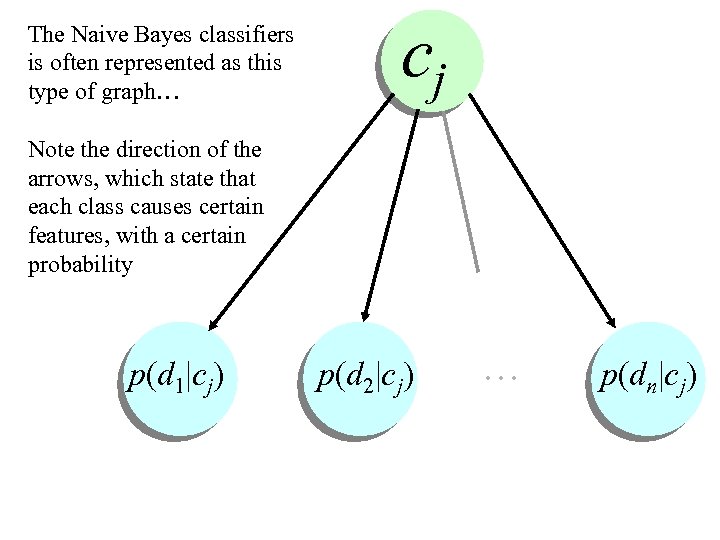

The Naïve Bayes classifier is often represented as this type of graph…

[Figure: a node cj with arrows pointing to the nodes p(d1|cj), p(d2|cj), …, p(dn|cj).]

Note the direction of the arrows, which state that each class causes certain features, with a certain probability.

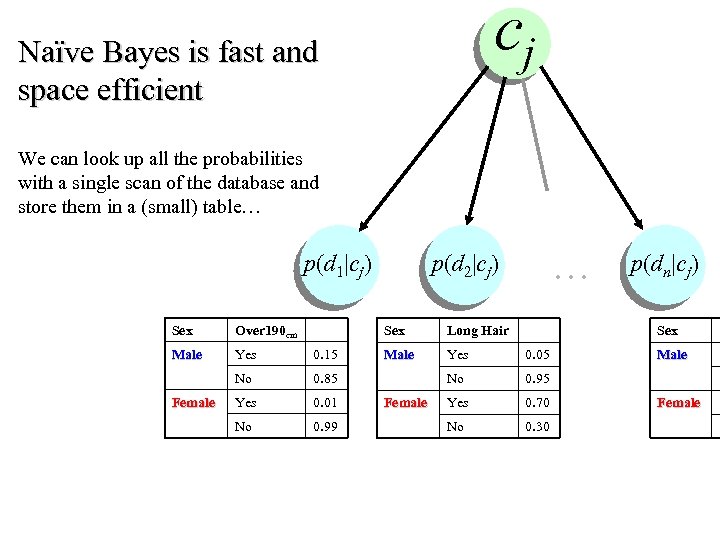

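Naïve Bayes is fast and space efficient

We can look up all the probabilities with a single scan of the database and store them in a (small) table…

[Figure: a node cj with arrows pointing to p(d1|cj), p(d2|cj), …, p(dn|cj), each with its own table.]

| Sex    | Over 190 cm | Probability |
|--------|-------------|-------------|
| Male   | Yes         | 0.15        |
| Male   | No          | 0.85        |
| Female | Yes         | 0.01        |
| Female | No          | 0.99        |

| Sex    | Long Hair | Probability |
|--------|-----------|-------------|
| Male   | Yes       | 0.05        |
| Male   | No        | 0.95        |
| Female | Yes       | 0.70        |
| Female | No        | 0.30        |

[A third table, with rows for Male and Female, is shown without values.]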
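
With the tables in hand, classification is a handful of lookups and multiplications. The sketch below uses the two probability tables from this slide; the equal class priors in `PRIOR` are an assumption made for illustration (the slide does not give them), and the names `LIKELIHOOD`, `PRIOR`, and `classify` are hypothetical.

```python
# p(feature = value | class), copied from the two tables on this slide.
LIKELIHOOD = {
    "over_190cm": {
        "Male": {"yes": 0.15, "no": 0.85},
        "Female": {"yes": 0.01, "no": 0.99},
    },
    "long_hair": {
        "Male": {"yes": 0.05, "no": 0.95},
        "Female": {"yes": 0.70, "no": 0.30},
    },
}

# Assumed class priors; the slide does not state them.
PRIOR = {"Male": 0.5, "Female": 0.5}


def classify(instance):
    """Return the most probable class and the unnormalized score of every class."""
    scores = {}
    for cls, prior in PRIOR.items():
        score = prior
        for feature, value in instance.items():
            score *= LIKELIHOOD[feature][cls][value]  # naive independence assumption
        scores[cls] = score
    return max(scores, key=scores.get), scores


label, scores = classify({"over_190cm": "yes", "long_hair": "no"})
print(label)  # Male
```

The scores are left unnormalized because p(d) is the same for every class and does not affect which class is largest.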

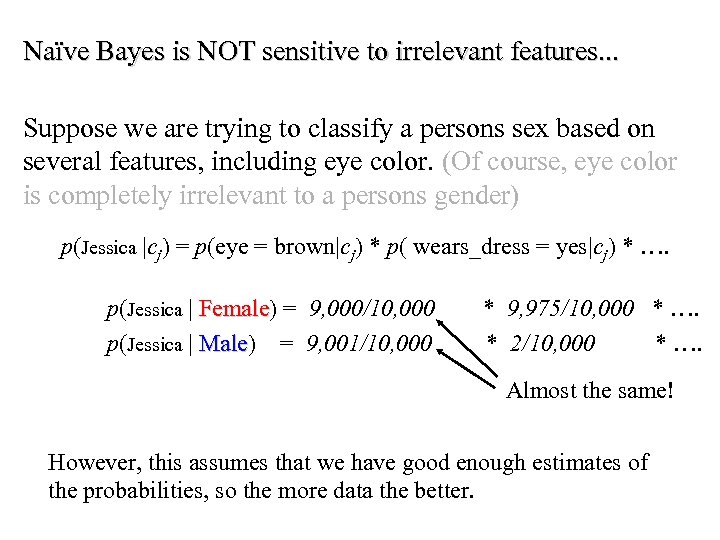

Naïve Bayes is NOT sensitive to irrelevant features...

Suppose we are trying to classify a person’s sex based on several features, including eye color. (Of course, eye color is completely irrelevant to a person’s gender.)

- p(Jessica | cj) = p(eye = brown | cj) * p(wears_dress = yes | cj) * …
- p(Jessica | Female) = 9,000/10,000 * 9,975/10,000 * …
- p(Jessica | Male) = 9,001/10,000 * 2/10,000 * …

The eye-color terms (9,000/10,000 and 9,001/10,000) are almost the same! However, this assumes that we have good enough estimates of the probabilities, so the more data the better.

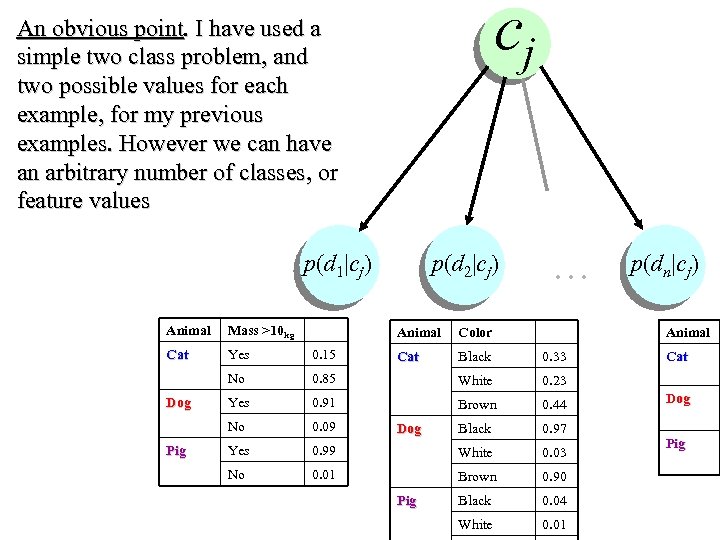

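An obvious point. I have used a simple two-class problem, and two possible values for each feature, for my previous examples. However, we can have an arbitrary number of classes, or feature values.

[Figure: a node cj with arrows pointing to p(d1|cj), p(d2|cj), …, p(dn|cj), each with its own table.]

| Animal | Mass > 10 kg | Probability |
|--------|--------------|-------------|
| Cat    | Yes          | 0.15        |
| Cat    | No           | 0.85        |
| Dog    | Yes          | 0.91        |
| Dog    | No           | 0.09        |
| Pig    | Yes          | 0.99        |
| Pig    | No           | 0.01        |

| Animal | Color | Probability |
|--------|-------|-------------|
| Cat    | Black | 0.33        |
| Cat    | White | 0.23        |
| Cat    | Brown | 0.44        |
| Dog    | Black | 0.97        |
| Dog    | White | 0.03        |
| Dog    | Brown | 0.90        |
| Pig    | Black | 0.04        |
| Pig    | White | 0.01        |
| Pig    | Brown | …           |

[A third table, with rows for Cat, Dog, and Pig, is shown without values.]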

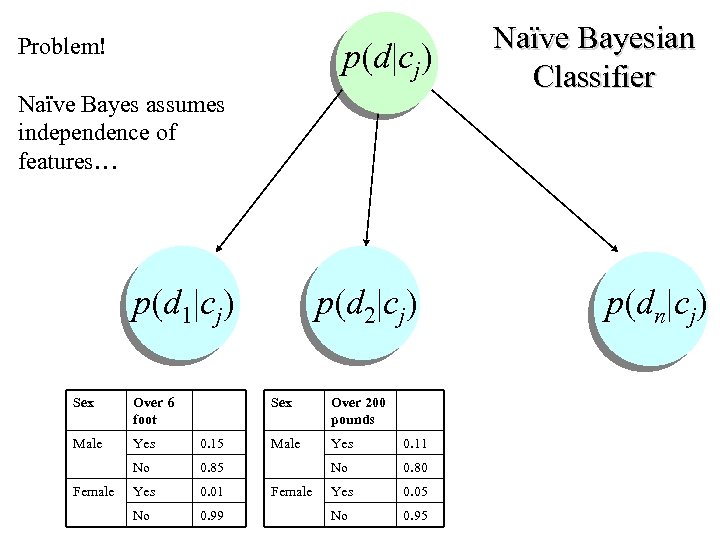

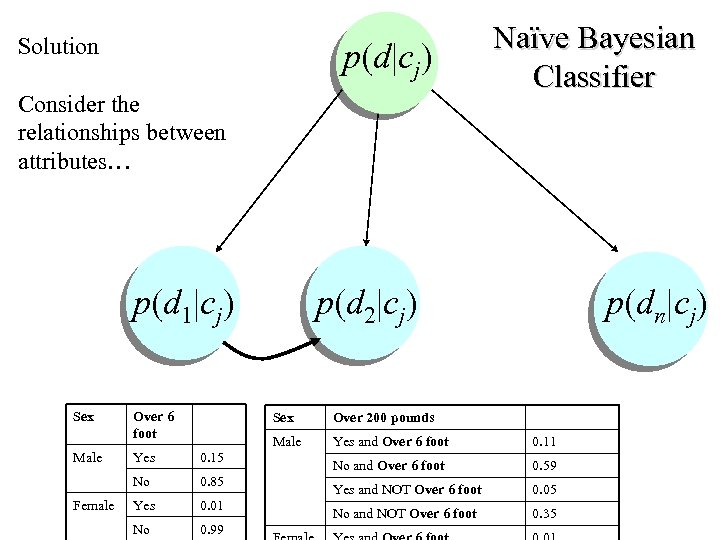

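Problem! Naïve Bayes assumes independence of features…

[Figure: Naïve Bayesian Classifier graph, with p(d|cj) factored into p(d1|cj), p(d2|cj), …, p(dn|cj).]

| Sex    | Over 6 foot | Probability |
|--------|-------------|-------------|
| Male   | Yes         | 0.15        |
| Male   | No          | 0.85        |
| Female | Yes         | 0.01        |
| Female | No          | 0.99        |

| Sex    | Over 200 pounds | Probability |
|--------|-----------------|-------------|
| Male   | Yes             | 0.11        |
| Male   | No              | 0.80        |
| Female | Yes             | 0.05        |
| Female | No              | 0.95        |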

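Solution: consider the relationships between attributes…

[Figure: Naïve Bayesian Classifier graph, with p(d|cj) factored into p(d1|cj), p(d2|cj), …, p(dn|cj), plus an arc connecting two attributes.]

| Sex    | Over 6 foot | Probability |
|--------|-------------|-------------|
| Male   | Yes         | 0.15        |
| Male   | No          | 0.85        |
| Female | Yes         | 0.01        |
| Female | No          | 0.99        |

| Sex  | Over 200 pounds          | Probability |
|------|--------------------------|-------------|
| Male | Yes and Over 6 foot      | 0.11        |
| Male | No and Over 6 foot       | 0.59        |
| Male | Yes and NOT Over 6 foot  | 0.05        |
| Male | No and NOT Over 6 foot   | 0.35        |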

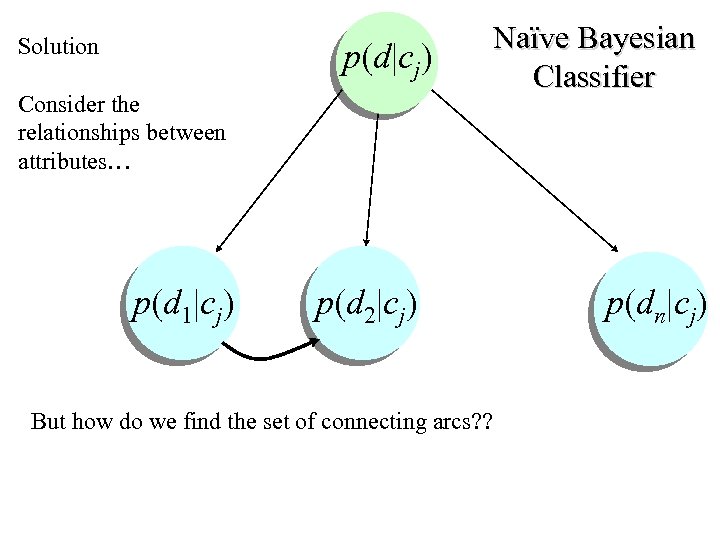

Solution: consider the relationships between attributes…

[Figure: Naïve Bayesian Classifier graph, with p(d|cj) factored into p(d1|cj), p(d2|cj), …, p(dn|cj), plus arcs connecting attributes.]

But how do we find the set of connecting arcs?

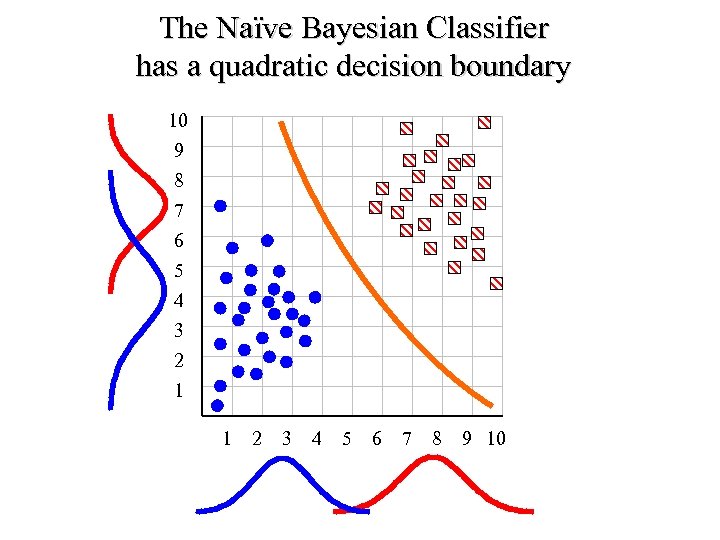

The Naïve Bayesian Classifier has a quadratic decision boundary

[Figure: scatter plot with both axes running from 1 to 10, showing the decision boundary.]
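
A brief sketch of why the boundary is quadratic, under the assumption that each feature is modeled per class by a normal distribution (as in the earlier summary of the histograms by two normal distributions). The symbols $\mu_{ij}$ and $\sigma_{ij}$, the mean and standard deviation of feature $i$ in class $c_j$, are introduced here for illustration. For two classes, the log-ratio of the posteriors is

$$\log\frac{p(c_1 \mid d)}{p(c_2 \mid d)} = \log\frac{p(c_1)}{p(c_2)} + \sum_{i=1}^{n}\left[\log\frac{\sigma_{i2}}{\sigma_{i1}} - \frac{(d_i-\mu_{i1})^2}{2\sigma_{i1}^2} + \frac{(d_i-\mu_{i2})^2}{2\sigma_{i2}^2}\right]$$

The decision boundary is the set of points where this expression equals zero. Each bracketed term is a quadratic in $d_i$, so the boundary is a quadratic curve or surface; if $\sigma_{i1} = \sigma_{i2}$ for every feature, the squared terms cancel and the boundary becomes linear.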

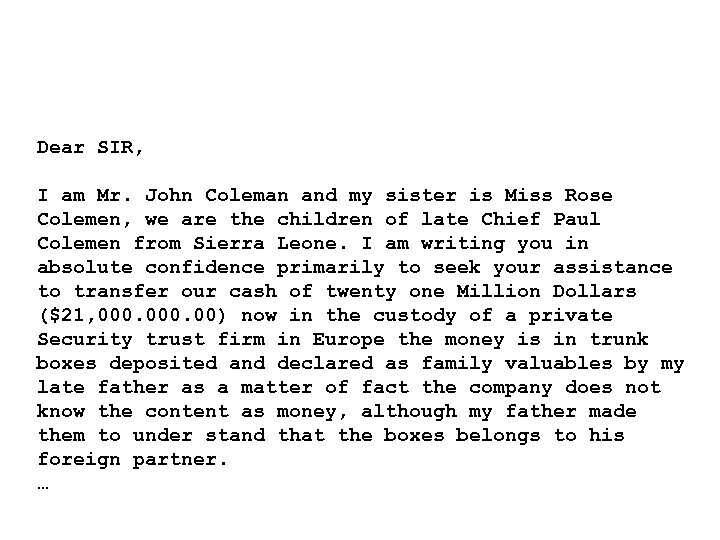

Dear SIR, I am Mr. John Coleman and my sister is Miss Rose Colemen, we are the children of late Chief Paul Colemen from Sierra Leone. I am writing you in absolute confidence primarily to seek your assistance to transfer our cash of twenty one Million Dollars ($21,000.00) now in the custody of a private Security trust firm in Europe the money is in trunk boxes deposited and declared as family valuables by my late father as a matter of fact the company does not know the content as money, although my father made them to under stand that the boxes belongs to his foreign partner. …

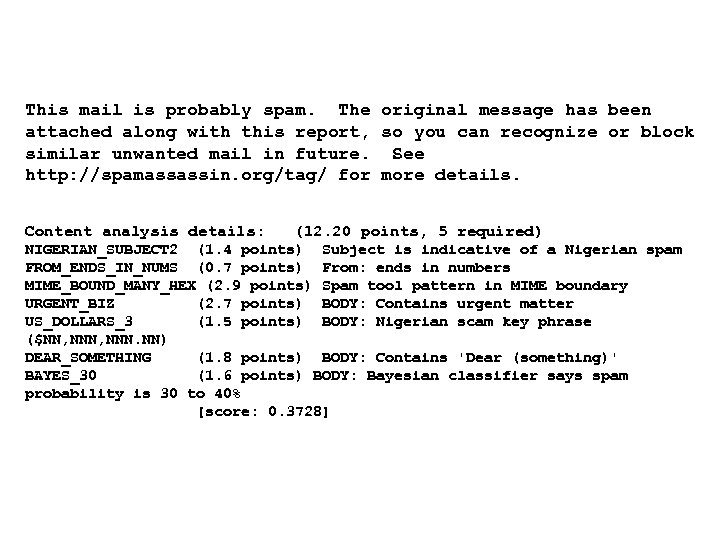

This mail is probably spam. The original message has been attached along with this report, so you can recognize or block similar unwanted mail in future. See http://spamassassin.org/tag/ for more details.

Content analysis details: (12.20 points, 5 required)

- NIGERIAN_SUBJECT2 (1.4 points) Subject is indicative of a Nigerian spam
- FROM_ENDS_IN_NUMS (0.7 points) From: ends in numbers
- MIME_BOUND_MANY_HEX (2.9 points) Spam tool pattern in MIME boundary
- URGENT_BIZ (2.7 points) BODY: Contains urgent matter
- US_DOLLARS_3 (1.5 points) BODY: Nigerian scam key phrase ($NN,NNN.NN)
- DEAR_SOMETHING (1.8 points) BODY: Contains 'Dear (something)'
- BAYES_30 (1.6 points) BODY: Bayesian classifier says spam probability is 30 to 40% [score: 0.3728]

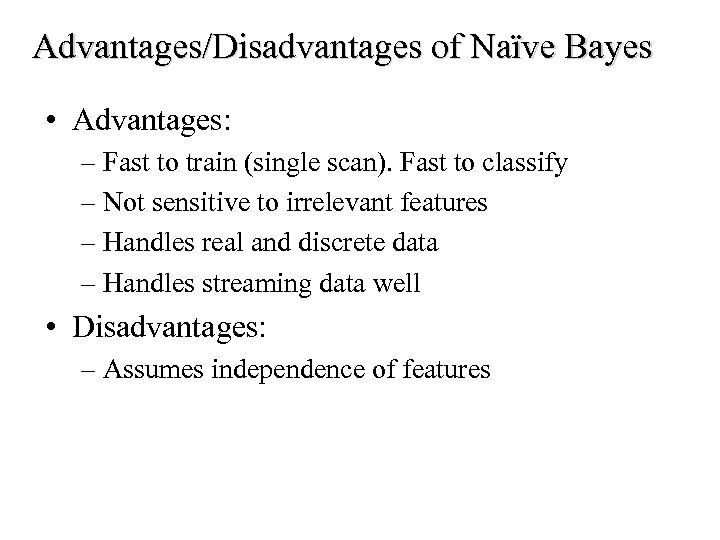

Advantages/Disadvantages of Naïve Bayes

- Advantages:
  - Fast to train (single scan). Fast to classify
  - Not sensitive to irrelevant features
  - Handles real and discrete data
  - Handles streaming data well
- Disadvantages:
  - Assumes independence of features

Summary of Classification

We have seen 4 major classification techniques:

- Simple linear classifier, Nearest neighbor, Decision tree, Naïve Bayes.

There are other techniques:

- Neural Networks, Support Vector Machines, Genetic algorithms…

In general, there is no one best classifier for all problems. You have to consider what you hope to achieve, and the data itself…

Let us now move on to the other classic problem of data mining and machine learning, Clustering…
