- Number of slides: 39

Data/Thread Level Speculation (TLS) in the Stanford Hydra Chip Multiprocessor (CMP)

Hydra is a 4-core Chip Multiprocessor (CMP) based microarchitecture/compiler effort at Stanford that provides hardware/software support for Data/Thread Level Speculation (TLS) to extract parallel speculated threads from sequential code (single thread) augmented with software thread speculation handlers.

Stanford Hydra, discussed here, is one TLS architecture example. Other TLS architectures include:
- Wisconsin Multiscalar
- Carnegie-Mellon Stampede
- MIT M-machine

Goal of TLS architectures: increase the range of parallelizable applications/computations.

Primary Hydra papers: 4, 6

EECC 722 - Shaaban, lec # 10, Fall 2012, 10-22-2012

Motivation for Chip Multiprocessors (CMPs)
• Chip Multiprocessors (CMPs) offer implementation benefits:
  – High-speed signals are localized in individual CPUs.
  – A proven CPU design is replicated across the die (including SMT processors, e.g. IBM Power 5).
• CMPs overcome the diminishing performance/transistor return problem (limited ILP) in single-threaded superscalar processors (similar motivation for SMT):
  – Transistors are used today mostly for ILP extraction.
  – CMPs use transistors to run multiple threads (exploit thread level parallelism, TLP):
    • On parallelized (multi-threaded) programs.
    • With multi-programmed workloads (multi-tasking): a number of single-threaded applications executing on different CPUs.
• Fast inter-processor communication (using the shared L2 cache) eases parallelization of code, but it is slower than logical processor communication in SMT.
• Potential drawbacks of CMPs:
  – High power/heat issues using current VLSI processes due to core duplication.
  – Limited ILP/poor latency hiding within individual cores (SMT addresses this).

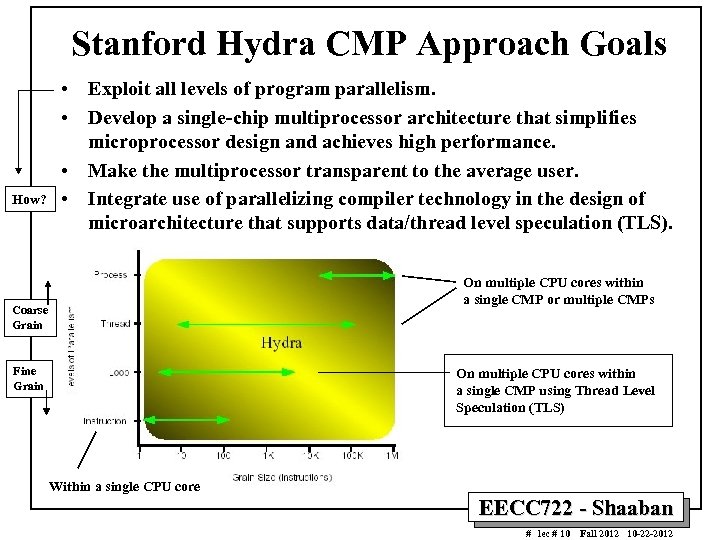

Stanford Hydra CMP Approach

Goals:
• Exploit all levels of program parallelism.
• Develop a single-chip multiprocessor architecture that simplifies microprocessor design and achieves high performance.
• Make the multiprocessor transparent to the average user.
• Integrate use of parallelizing compiler technology in the design of a microarchitecture that supports data/thread level speculation (TLS).

How? Levels of parallelism, from coarse grain to fine grain:
– Coarse grain: on multiple CPU cores within a single CMP or multiple CMPs.
– On multiple CPU cores within a single CMP using Thread Level Speculation (TLS).
– Fine grain: within a single CPU core.

Hydra Prototype Overview
• 4 CPU cores with modified private L1 caches.
• Speculative coprocessor (for each processor core), to support Thread-Level Speculation (TLS):
  – Speculative memory reference controller.
  – Speculative interrupt screening mechanism.
  – Statistics mechanisms for performance evaluation and to allow feedback for code tuning.
• Memory system:
  – Read and write buses.
  – Controllers for all resources.
  – On-chip shared L2 cache.
  – L2 speculation write buffers.
  – Simple off-chip main memory controller.
  – I/O and debugging interface.

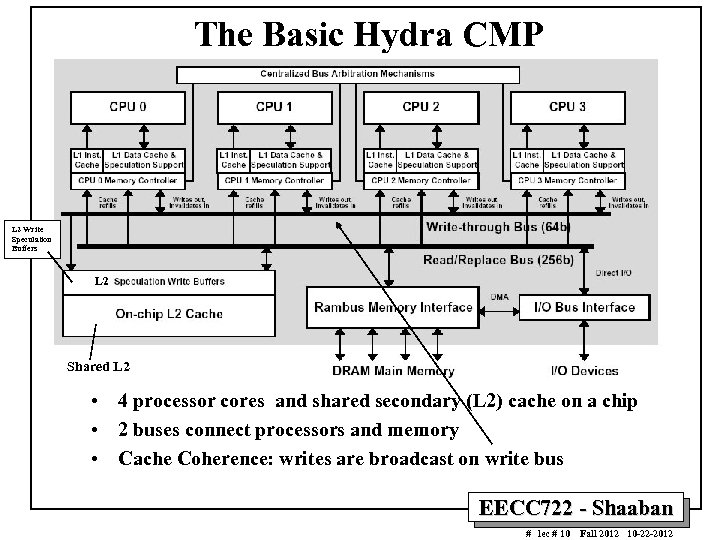

The Basic Hydra CMP
• 4 processor cores and a shared secondary (L2) cache on a chip.
• 2 buses connect processors and memory.
• Cache coherence: writes are broadcast on the write bus.
Figure labels: shared L2 cache; L2 speculation write buffers.

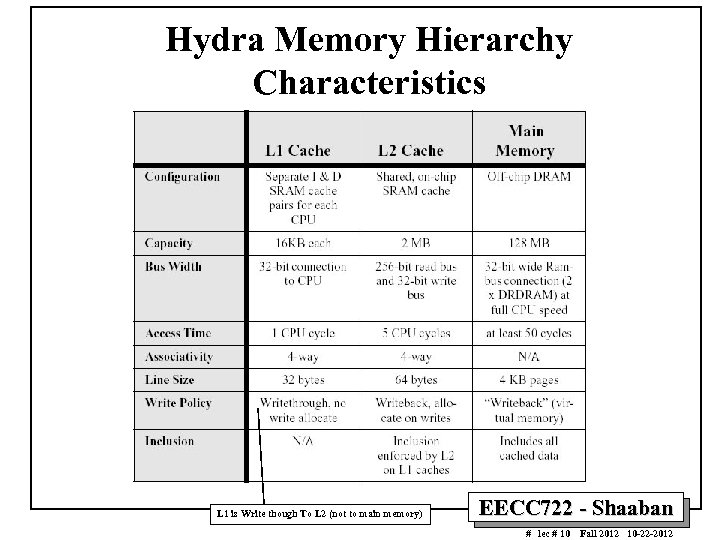

Hydra Memory Hierarchy Characteristics
L1 is write-through to L2 (not to main memory).

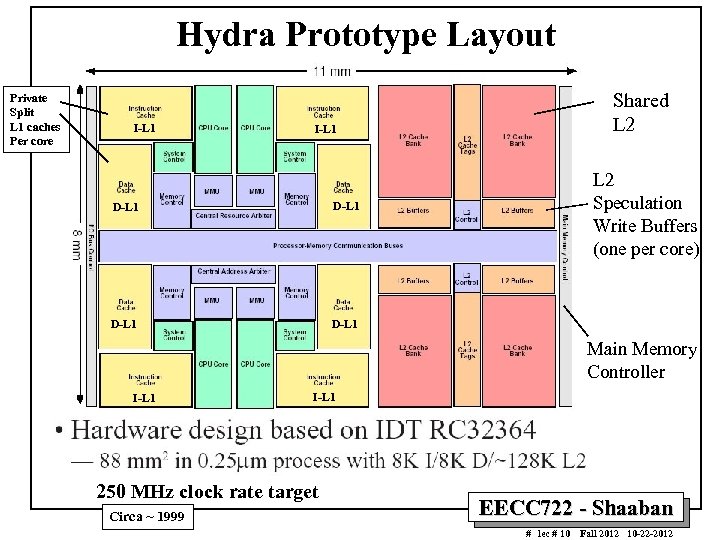

Hydra Prototype Layout
Figure labels: private split L1 caches per core (I-L1, D-L1); shared L2; L2 speculation write buffers (one per core); main memory controller.
250 MHz clock rate target, circa 1999.

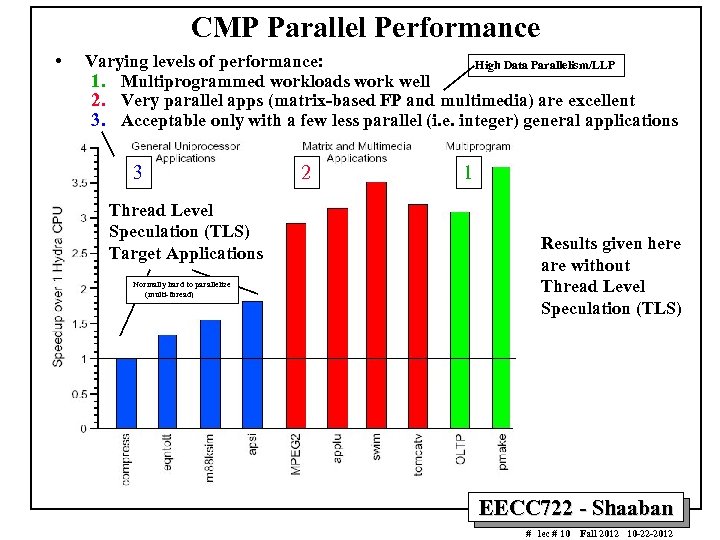

CMP Parallel Performance
• Varying levels of performance:
  1. Multiprogrammed workloads work well.
  2. Very parallel apps (matrix-based FP and multimedia, with high data parallelism/LLP) are excellent.
  3. Acceptable only with a few less parallel (i.e. integer) general applications. These are normally hard to parallelize (multi-thread) and are the target applications of Thread Level Speculation (TLS).
Results given here are without Thread Level Speculation (TLS).

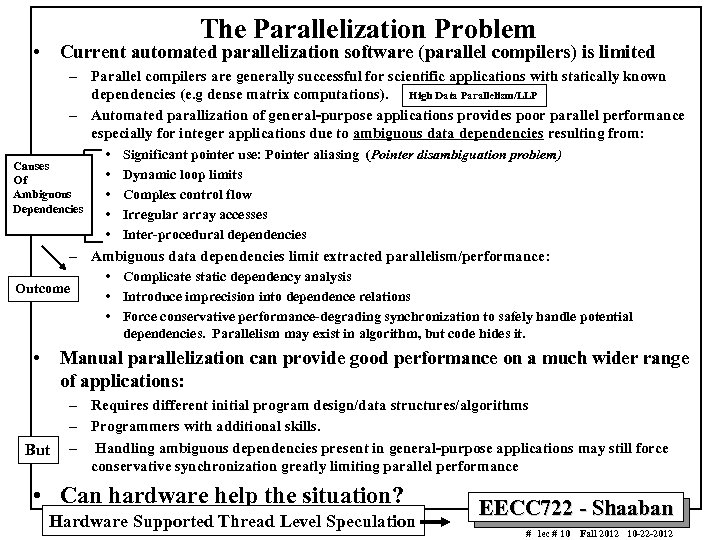

The Parallelization Problem
• Current automated parallelization software (parallel compilers) is limited:
  – Parallel compilers are generally successful for scientific applications with statically known dependencies (e.g. dense matrix computations), which have high data parallelism/LLP.
  – Automated parallelization of general-purpose applications provides poor parallel performance, especially for integer applications, due to ambiguous data dependencies.
• Causes of ambiguous dependencies:
  – Significant pointer use: pointer aliasing (pointer disambiguation problem).
  – Dynamic loop limits.
  – Complex control flow.
  – Irregular array accesses.
  – Inter-procedural dependencies.
• Outcome: ambiguous data dependencies limit extracted parallelism/performance. They:
  – Complicate static dependency analysis.
  – Introduce imprecision into dependence relations.
  – Force conservative, performance-degrading synchronization to safely handle potential dependencies.
  – Parallelism may exist in the algorithm, but the code hides it.
• Manual parallelization can provide good performance on a much wider range of applications, but:
  – It requires different initial program design/data structures/algorithms.
  – It requires programmers with additional skills.
  – Handling ambiguous dependencies present in general-purpose applications may still force conservative synchronization, greatly limiting parallel performance.
• Can hardware help the situation? Hardware-supported Thread Level Speculation.

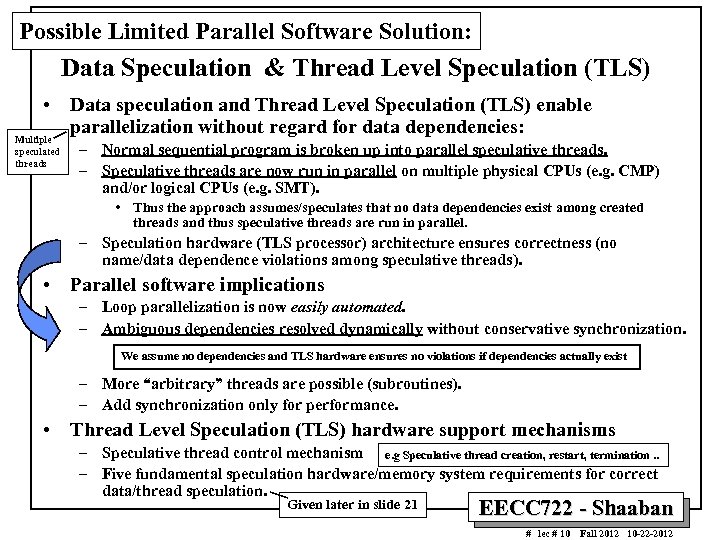
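
To make the idea of an ambiguous dependency concrete, here is a minimal Python sketch. It assumes a hypothetical loop of the form `a[idx[i]] = a[i] + 1`, which does not come from the slides. Whether its iterations are independent depends entirely on the run-time contents of `idx`, so a compiler that cannot see those contents has to assume the worst.

```python
def has_cross_iteration_raw(idx):
    """Report whether the hypothetical loop

        for i in range(len(idx)): a[idx[i]] = a[i] + 1

    has a true (RAW) dependence between different iterations,
    i.e. some iteration reads an element that an earlier
    iteration wrote.
    """
    written = set()              # elements written by earlier iterations
    for i, target in enumerate(idx):
        if i in written:         # iteration i reads a[i], written earlier
            return True
        written.add(target)      # iteration i writes a[idx[i]]
    return False


# Same loop body, different run-time index arrays:
print(has_cross_iteration_raw([0, 1, 2, 3]))  # each iteration touches only its own element
print(has_cross_iteration_raw([1, 2, 3, 0]))  # iteration 1 reads what iteration 0 wrote
```

The check above needs the actual index values, which is exactly the information a static compiler lacks for irregular array accesses and pointer-based code.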

Possible Limited Parallel Software Solution: Data Speculation & Thread Level Speculation (TLS)
• Data speculation and Thread Level Speculation (TLS) enable parallelization without regard for data dependencies:
  – A normal sequential program is broken up into multiple parallel speculative (speculated) threads.
  – Speculative threads are then run in parallel on multiple physical CPUs (e.g. CMP) and/or logical CPUs (e.g. SMT).
    • Thus the approach assumes/speculates that no data dependencies exist among the created threads, and the speculative threads are run in parallel.
  – Speculation hardware (TLS processor) architecture ensures correctness (no name/data dependence violations among speculative threads).
• Parallel software implications:
  – Loop parallelization is now easily automated.
  – Ambiguous dependencies are resolved dynamically without conservative synchronization: we assume no dependencies, and the TLS hardware ensures no violations if dependencies actually exist.
  – More “arbitrary” threads are possible (subroutines).
  – Add synchronization only for performance.
• Thread Level Speculation (TLS) hardware support mechanisms:
  – Speculative thread control mechanism, e.g. speculative thread creation, restart, termination.
  – Five fundamental speculation hardware/memory system requirements for correct data/thread speculation (given later in slide 21).

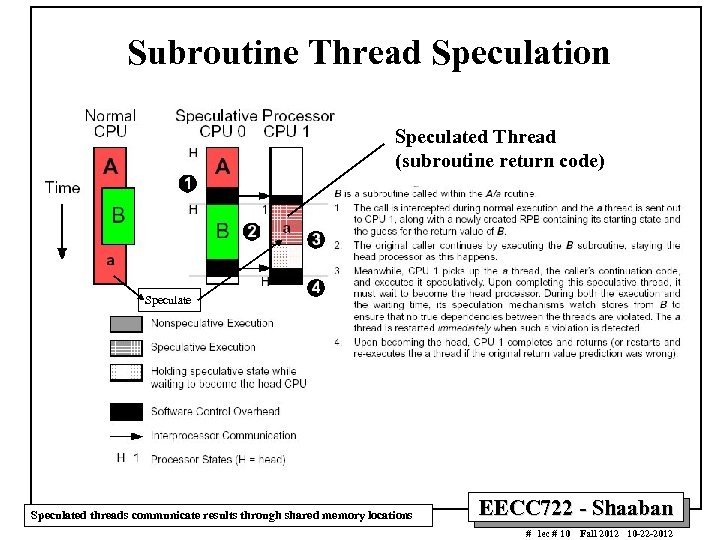

Subroutine Thread Speculation
Figure label: speculated thread (subroutine return code).
Speculated threads communicate results through shared memory locations.

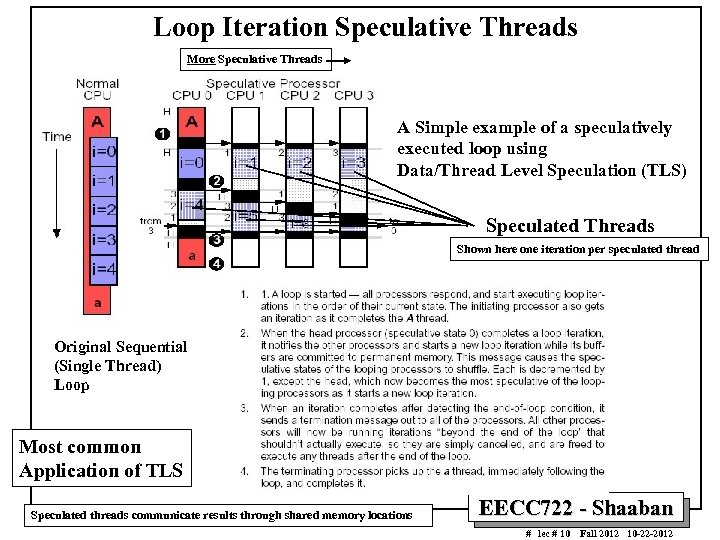

Loop Iteration Speculative Threads
A simple example of a speculatively executed loop using Data/Thread Level Speculation (TLS). This is the most common application of TLS.
Figure labels: original sequential (single thread) loop; speculated threads, shown here with one iteration per speculated thread; more speculative threads.
Speculated threads communicate results through shared memory locations.

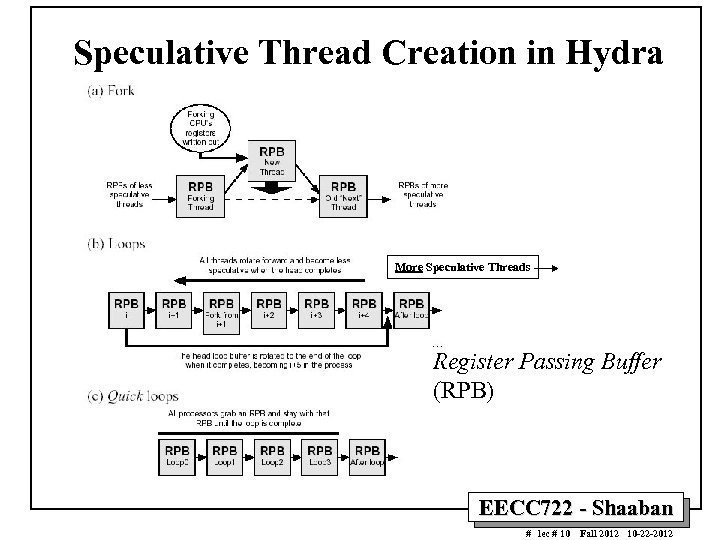

Speculative Thread Creation in Hydra
Figure labels: more speculative threads; Register Passing Buffer (RPB).

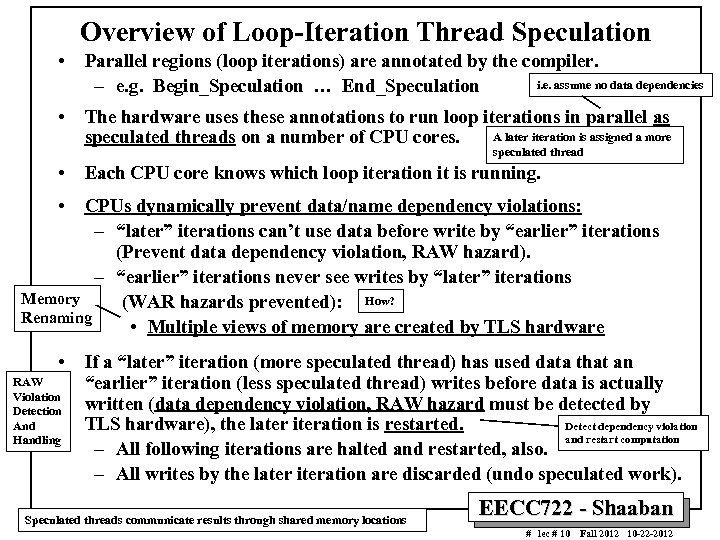

Overview of Loop-Iteration Thread Speculation
• Parallel regions (loop iterations) are annotated by the compiler (i.e. assume no data dependencies):
  – e.g. Begin_Speculation … End_Speculation
• The hardware uses these annotations to run loop iterations in parallel as speculated threads on a number of CPU cores. A later iteration is assigned a more speculated thread.
• Each CPU core knows which loop iteration it is running.
• CPUs dynamically prevent data/name dependency violations:
  – “Later” iterations can’t use data before it is written by “earlier” iterations (prevent data dependency violation, RAW hazard).
  – “Earlier” iterations never see writes by “later” iterations (WAR hazards prevented). How? Memory renaming:
    • Multiple views of memory are created by TLS hardware.
• RAW violation detection and handling (detect dependency violation and restart computation): if a “later” iteration (more speculated thread) has used data that an “earlier” iteration (less speculated thread) writes, before the data is actually written (a data dependency violation, or RAW hazard, which must be detected by TLS hardware), the later iteration is restarted.
  – All following iterations are halted and restarted, also.
  – All writes by the later iteration are discarded (undo speculated work).
Speculated threads communicate results through shared memory locations.

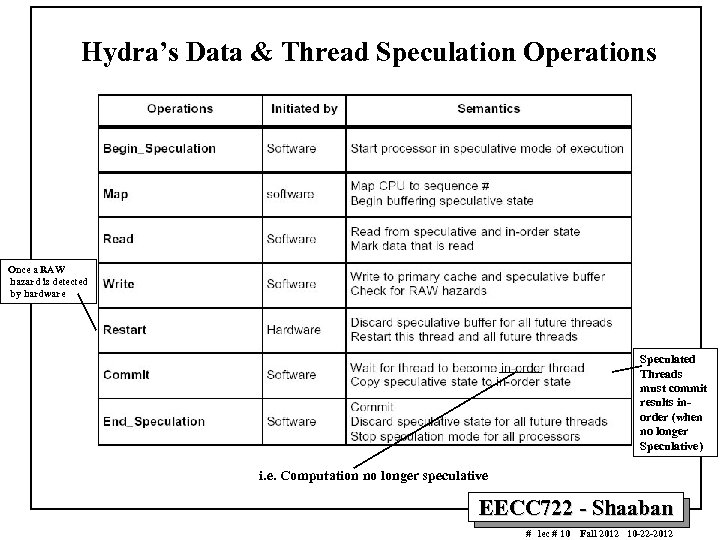
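
The loop-iteration scheme on this slide can be illustrated with a small software model. This is only a sketch under simplifying assumptions, not Hydra's hardware or its software handlers: a window of `n_cores` iterations runs against the same committed memory (worst-case timing, with no data forwarding between threads), writes go to per-thread buffers, and a thread is restarted together with all following threads if it read an address that an earlier thread in the window wrote. The names `run_iteration`, `speculative_loop`, `load`, and `store` are hypothetical.

```python
def run_iteration(body, i, memory):
    """Run iteration i speculatively: buffer its writes, record its reads."""
    write_buffer, read_set = {}, set()

    def load(addr):
        if addr in write_buffer:        # the thread's own speculative write
            return write_buffer[addr]
        read_set.add(addr)              # value comes from outside the thread
        return memory[addr]

    def store(addr, value):
        write_buffer[addr] = value      # not visible to memory until commit

    body(i, load, store)
    return write_buffer, read_set


def speculative_loop(body, n_iters, memory, n_cores=4):
    """Execute the loop speculatively; return how many threads were restarted."""
    restarts = 0
    next_iter = 0
    while next_iter < n_iters:
        window = range(next_iter, min(next_iter + n_cores, n_iters))
        # Every thread in the window starts from the same committed memory.
        results = [run_iteration(body, i, memory) for i in window]
        committed_writes = set()
        for i, (write_buffer, read_set) in zip(window, results):
            if read_set & committed_writes:
                # RAW violation: this thread read too early. Discard it
                # and all following threads; they rerun in the next window.
                restarts += window.stop - i
                break
            memory.update(write_buffer)             # commit in program order
            committed_writes.update(write_buffer)   # remember written addresses
            next_iter = i + 1
    return restarts


def dependent_body(i, load, store):
    store(i + 1, load(i) + 1)           # a[i+1] = a[i] + 1 (loop-carried RAW)


mem = {k: 0 for k in range(9)}
n = speculative_loop(dependent_body, 8, mem)
print(mem, "restarted threads:", n)
```

The head thread of each window is never violated, so the loop always makes progress, and the final memory contents match sequential execution. A loop without cross-iteration dependencies commits every window without restarts.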

Hydra’s Data & Thread Speculation Operations
Figure annotations:
– Once a RAW hazard is detected by hardware.
– Speculated threads must commit results in order, when no longer speculative (i.e. when the computation is no longer speculative).

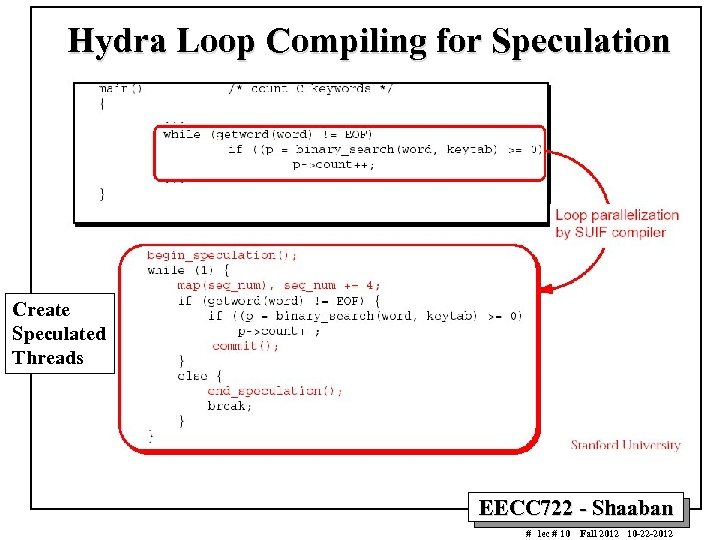

Hydra Loop Compiling for Speculation
Figure label: create speculated threads.

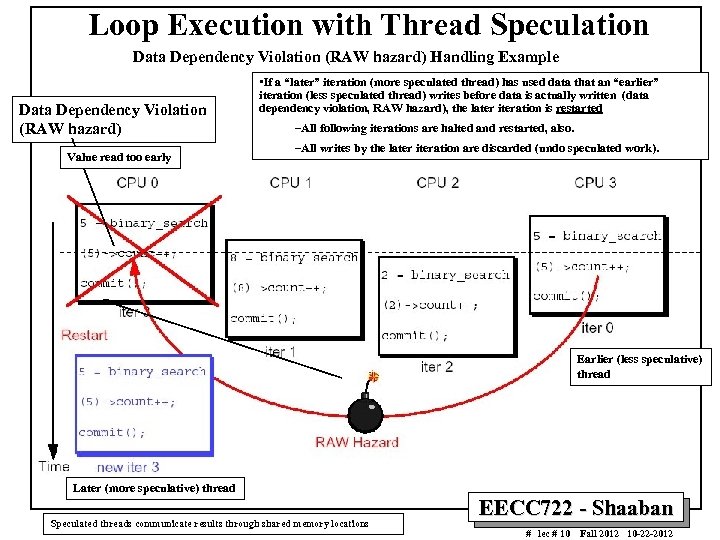

Loop Execution with Thread Speculation: Data Dependency Violation (RAW Hazard) Handling Example
• If a “later” iteration (more speculated thread) has used data that an “earlier” iteration (less speculated thread) writes, before the data is actually written (data dependency violation, RAW hazard), the later iteration is restarted.
  – All following iterations are halted and restarted, also.
  – All writes by the later iteration are discarded (undo speculated work).
Figure labels: earlier (less speculative) thread; later (more speculative) thread; data dependency violation (RAW hazard), value read too early.
Speculated threads communicate results through shared memory locations.

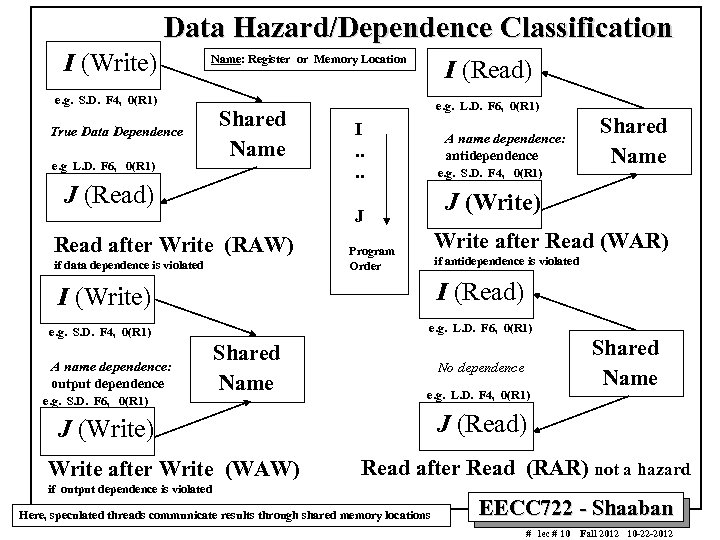

Data Hazard/Dependence Classification
Instruction I comes before instruction J in program order, and both access a shared name (a register or memory location):
– I (Write), J (Read): true data dependence; a Read after Write (RAW) hazard if the data dependence is violated. e.g. I: S.D F4, 0(R1); J: L.D F6, 0(R1)
– I (Read), J (Write): a name dependence (antidependence); a Write after Read (WAR) hazard if the antidependence is violated. e.g. I: L.D F6, 0(R1); J: S.D F4, 0(R1)
– I (Write), J (Write): a name dependence (output dependence); a Write after Write (WAW) hazard if the output dependence is violated. e.g. I: S.D F4, 0(R1); J: S.D F6, 0(R1)
– I (Read), J (Read): no dependence; Read after Read (RAR) is not a hazard. e.g. I: L.D F6, 0(R1); J: L.D F4, 0(R1)
Here, speculated threads communicate results through shared memory locations.

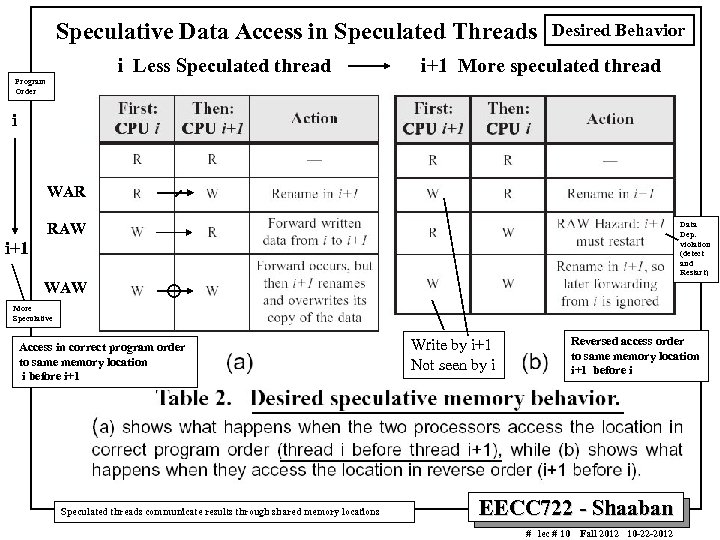
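
The four cases in this classification can be written as a small lookup table. The sketch below is illustrative only; the function name `classify` and the string labels are assumptions chosen for this example.

```python
def classify(first_op, second_op):
    """Classify two accesses to the same name, given in program order.

    Each argument is "read" or "write". Returns a pair:
    (kind of dependence, hazard that occurs if the order is violated).
    """
    table = {
        ("write", "read"):  ("true data dependence", "RAW"),
        ("read", "write"):  ("antidependence (name dependence)", "WAR"),
        ("write", "write"): ("output dependence (name dependence)", "WAW"),
        ("read", "read"):   ("no dependence", None),   # RAR is not a hazard
    }
    return table[(first_op, second_op)]


# S.D F4, 0(R1) followed by L.D F6, 0(R1): a store, then a load of the same location.
print(classify("write", "read"))
```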

Speculative Data Access in Speculated Threads
Figure: desired behavior for the RAW, WAR, and WAW cases when thread i (less speculated, earlier in program order) and thread i+1 (more speculated) access the same memory location, either in correct program order (i before i+1) or in reversed order (i+1 before i). Recoverable annotations: a RAW data dependence violation must be detected and followed by a restart; a write by i+1 is not seen by i.
Speculated threads communicate results through shared memory locations.

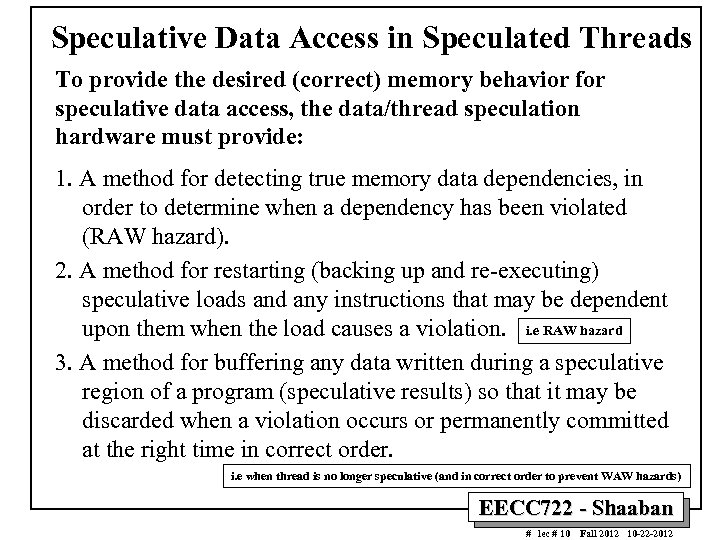

Speculative Data Access in Speculated Threads
To provide the desired (correct) memory behavior for speculative data access, the data/thread speculation hardware must provide:
1. A method for detecting true memory data dependencies, in order to determine when a dependency has been violated (RAW hazard).
2. A method for restarting (backing up and re-executing) speculative loads and any instructions that may be dependent upon them when the load causes a violation (i.e. a RAW hazard).
3. A method for buffering any data written during a speculative region of a program (speculative results) so that it may be discarded when a violation occurs or permanently committed at the right time, i.e. when the thread is no longer speculative, and in the correct order to prevent WAW hazards.

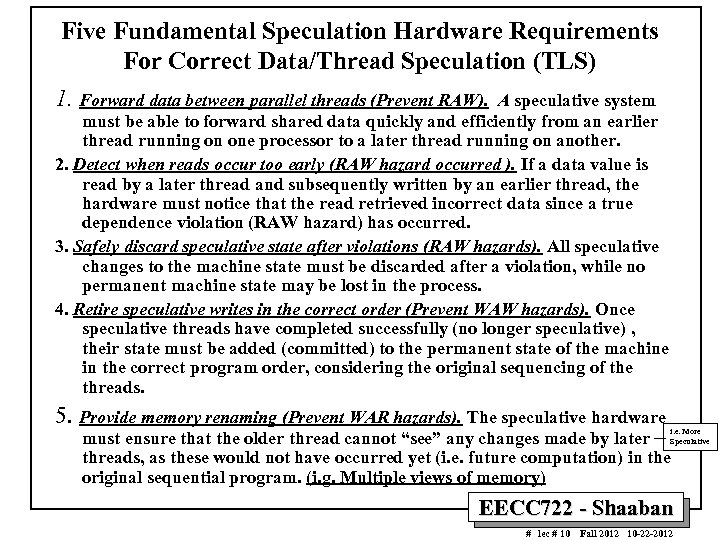

Five Fundamental Speculation Hardware Requirements For Correct Data/Thread Speculation (TLS)
1. Forward data between parallel threads (prevent RAW). A speculative system must be able to forward shared data quickly and efficiently from an earlier thread running on one processor to a later thread running on another.
2. Detect when reads occur too early (RAW hazard occurred). If a data value is read by a later thread and subsequently written by an earlier thread, the hardware must notice that the read retrieved incorrect data, since a true dependence violation (RAW hazard) has occurred.
3. Safely discard speculative state after violations (RAW hazards). All speculative changes to the machine state must be discarded after a violation, while no permanent machine state may be lost in the process.
4. Retire speculative writes in the correct order (prevent WAW hazards). Once speculative threads have completed successfully (no longer speculative), their state must be added (committed) to the permanent state of the machine in the correct program order, considering the original sequencing of the threads.
5. Provide memory renaming (prevent WAR hazards). The speculative hardware must ensure that the older thread cannot “see” any changes made by later (i.e. more speculative) threads, as these would not have occurred yet (i.e. future computation) in the original sequential program (i.e. multiple views of memory).

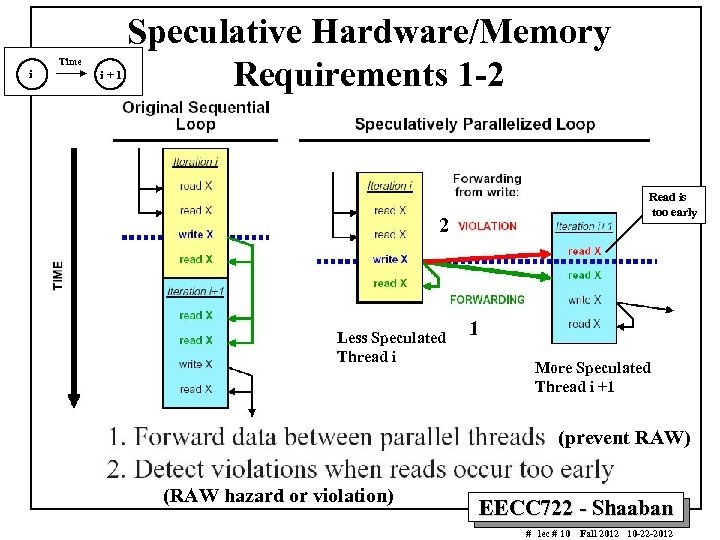
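
Requirements 2 and 3 can be sketched in software as follows. This is a minimal model and not Hydra's actual mechanism: each speculative thread keeps a set of addresses it has read, and when an earlier thread's write is broadcast, the first later thread that already read that address is restarted together with every thread after it. The class `SpecThread` and the function `broadcast_write` are hypothetical names.

```python
class SpecThread:
    """A speculative thread; a smaller order means earlier (less speculative)."""

    def __init__(self, order):
        self.order = order
        self.read_set = set()    # addresses this thread has read speculatively
        self.restarts = 0

    def read(self, addr):
        self.read_set.add(addr)

    def restart(self):
        self.read_set.clear()    # speculative state is discarded
        self.restarts += 1


def broadcast_write(writer, addr, threads):
    """Check a write by `writer` against later threads' earlier reads.

    Returns the orders of the threads that were restarted.
    """
    too_early = [t.order for t in threads
                 if t.order > writer.order and addr in t.read_set]
    if not too_early:
        return []                # no RAW violation
    first_bad = min(too_early)
    restarted = [t for t in threads if t.order >= first_bad]
    for t in restarted:          # the violated thread and all following ones
        t.restart()
    return [t.order for t in restarted]


threads = [SpecThread(k) for k in range(4)]
threads[2].read(100)                               # thread 2 reads address 100 early
print(broadcast_write(threads[0], 100, threads))   # thread 0 then writes address 100
```

A write by a later thread to an address that an earlier thread has read restarts nothing in this model, because that case is a WAR situation handled by memory renaming (requirement 5) rather than by restart.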

Speculative Hardware/Memory Requirements 1-2
Figure: timelines of the less speculated thread i and the more speculated thread i+1.
1. Forward data from thread i to thread i+1 (prevent RAW).
2. A read by thread i+1 is too early (RAW hazard or violation).

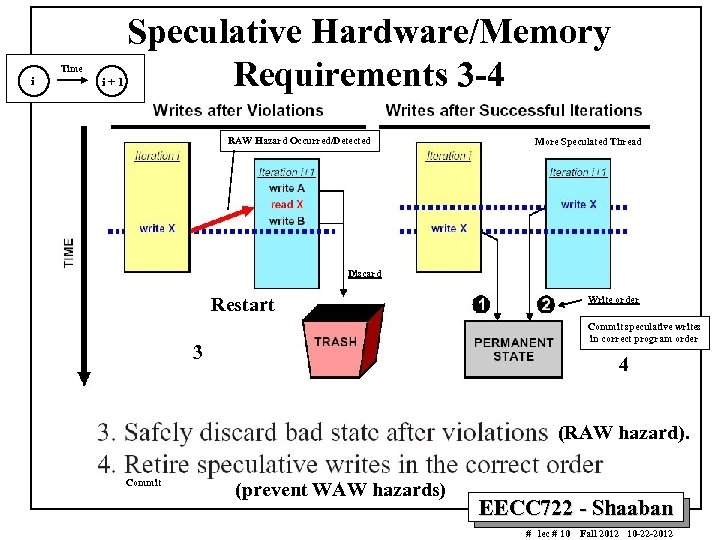

Speculative Hardware/Memory Requirements 3-4
Figure: timelines of thread i and the more speculated thread i+1.
3. RAW hazard occurred/detected: discard the speculative state of the more speculated thread and restart it.
4. Write order: commit speculative writes in correct program order (prevent WAW hazards).

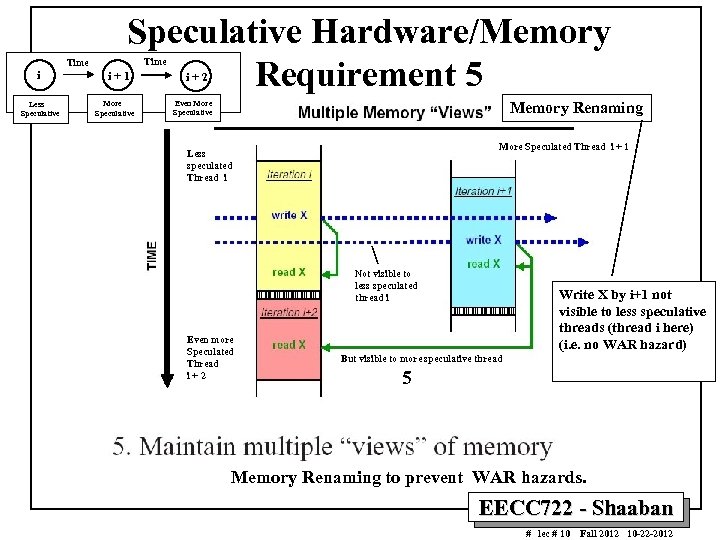

Speculative Hardware/Memory Requirement 5
5. Memory renaming to prevent WAR hazards.
Figure: timelines of thread i (less speculative), thread i+1 (more speculative), and thread i+2 (even more speculative). Write X by i+1 is not visible to less speculative threads (thread i here), i.e. no WAR hazard, but it is visible to the more speculative thread.

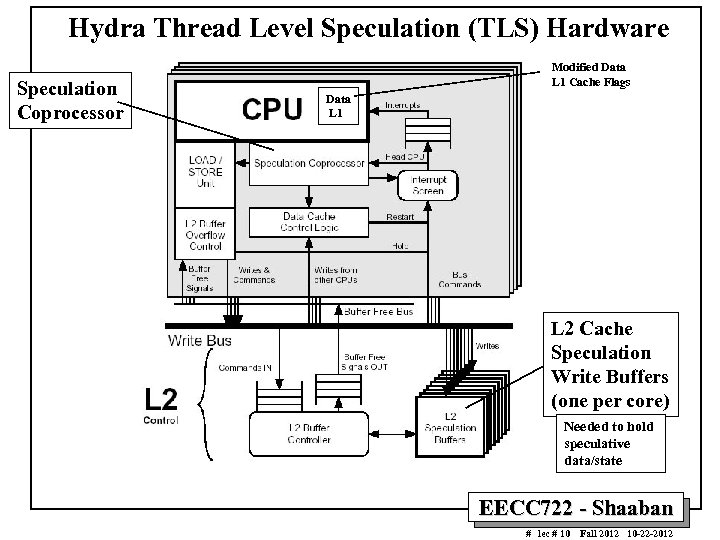

Hydra Thread Level Speculation (TLS) Hardware
Figure labels: speculation coprocessor; modified data L1 cache (flags added to the data L1); L2 cache speculation write buffers (one per core), needed to hold speculative data/state.

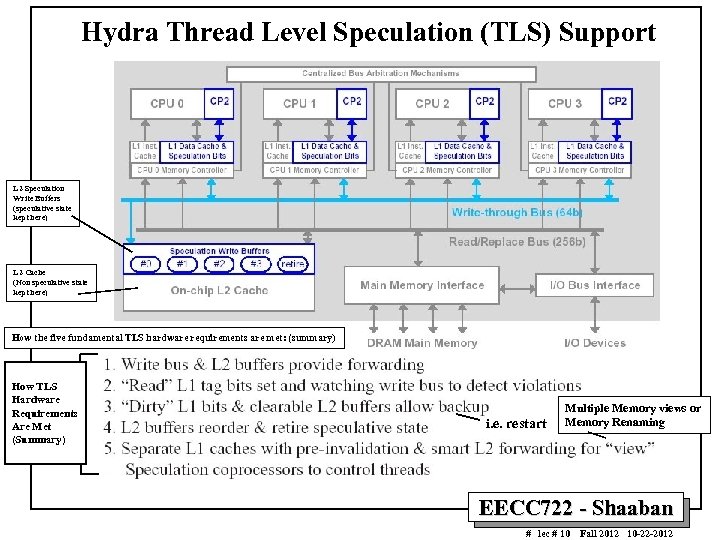

Hydra Thread Level Speculation (TLS) Support
How the five fundamental TLS hardware requirements are met (summary).
Figure labels: L2 speculation write buffers (speculative state kept here); L2 cache (non-speculative state kept here); i.e. restart; multiple memory views or memory renaming.

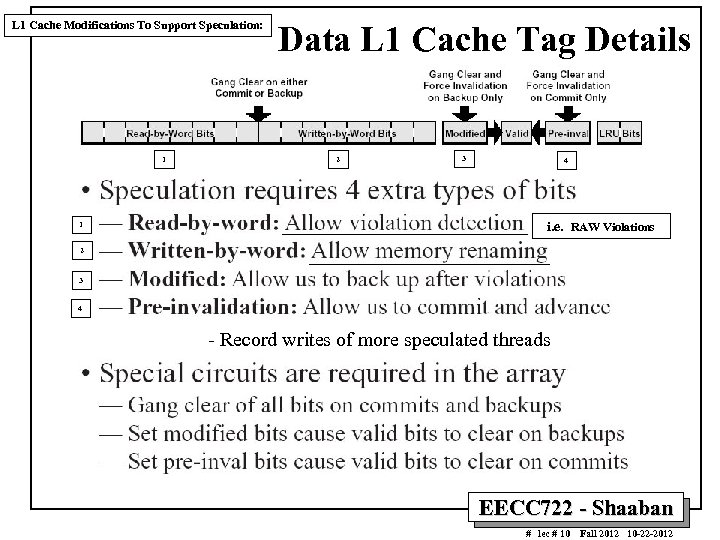

L1 Cache Modifications To Support Speculation: Data L1 Cache Tag Details
Figure: data L1 cache tag details with four numbered items (1-4). Recoverable annotations: i.e. RAW violations; record writes of more speculated threads.

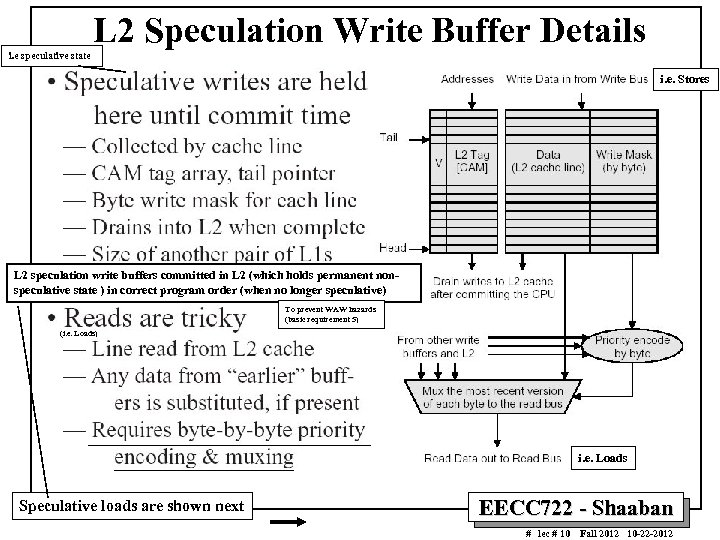

L2 Speculation Write Buffer Details
• The buffers hold speculative state, i.e. stores.
• L2 speculation write buffers are committed to L2 (which holds permanent nonspeculative state) in correct program order, when no longer speculative. This prevents WAW hazards (basic requirement 4).
[Figure annotations: "i.e. loads".] Speculative loads are shown next.

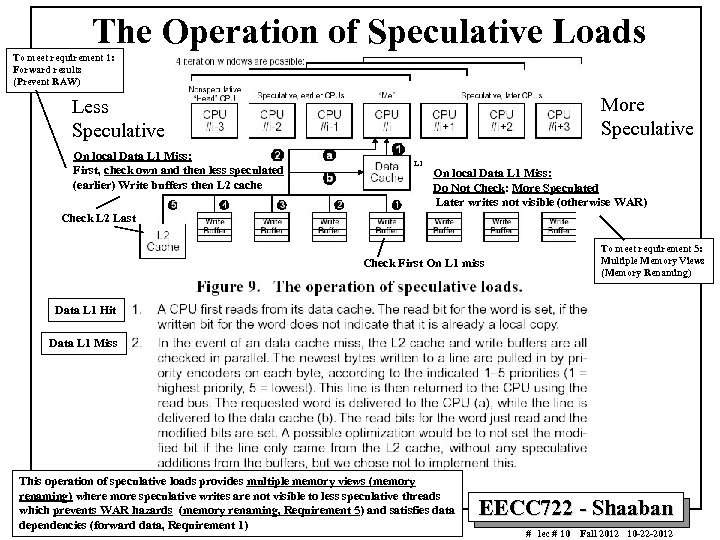

The Operation of Speculative Loads
[Figure labels: More Speculative; Less Speculative; Data L1 Hit; Data L1 Miss.]
• On a local Data L1 miss, check first: own write buffer, then the less speculated (earlier) write buffers. Check the L2 cache last. This meets requirement 1: forward results (prevent RAW).
• On a local Data L1 miss, do not check the more speculated write buffers: later writes must not be visible (otherwise WAR). This meets requirement 5: multiple memory views (memory renaming).
This operation of speculative loads provides multiple memory views (memory renaming), in which more speculative writes are not visible to less speculative threads. That prevents WAR hazards (Requirement 5) and satisfies data dependencies (forward data, Requirement 1).

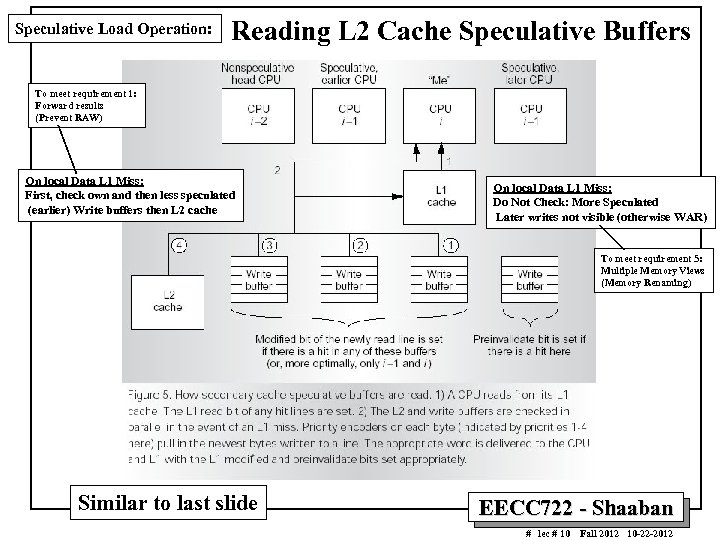
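The lookup order described for speculative loads can be sketched in a few lines of Python. This is only an illustrative model, not Hydra's hardware: the `Core` class, the `seq` ordering field (lower means less speculative), and the choice to search less speculative buffers from newest to oldest are assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Core:
    """Hypothetical model of one core; lower `seq` = less speculative thread."""
    seq: int
    l1: dict = field(default_factory=dict)            # address -> value
    write_buffer: dict = field(default_factory=dict)  # this thread's speculative stores


def speculative_load(core, cores, l2, addr):
    """Return (value, source) for a load issued by `core`."""
    if addr in core.l1:                      # Data L1 hit
        return core.l1[addr], "L1"
    # Data L1 miss: own buffer first, then less speculative buffers.
    # Searching newest-first is an assumption of this sketch.
    visible = sorted((c for c in cores if c.seq <= core.seq),
                     key=lambda c: c.seq, reverse=True)
    for c in visible:
        if addr in c.write_buffer:
            value = c.write_buffer[addr]
            core.l1[addr] = value
            return value, f"buffer[{c.seq}]"
    # More speculative buffers are never consulted (memory renaming).
    value = l2[addr]                         # check L2 last
    core.l1[addr] = value
    return value, "L2"


l2 = {0x40: 1}
cores = [Core(seq=0), Core(seq=1), Core(seq=2)]
cores[1].write_buffer[0x40] = 7              # thread i+1 writes X
older_view = speculative_load(cores[0], cores, l2, 0x40)
newer_view = speculative_load(cores[2], cores, l2, 0x40)
```

Here thread 0 still reads the old value from L2, while thread 2 receives the forwarded value from thread 1's buffer, which is the "multiple memory views" behaviour.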

Speculative Load Operation: Reading L2 Cache Speculative Buffers
• On a local Data L1 miss: first check own write buffer, then the less speculated (earlier) write buffers, then the L2 cache. This meets requirement 1: forward results (prevent RAW).
• On a local Data L1 miss: do not check the more speculated write buffers, since later writes must not be visible (otherwise WAR). This meets requirement 5: multiple memory views (memory renaming).
(Similar to last slide.)

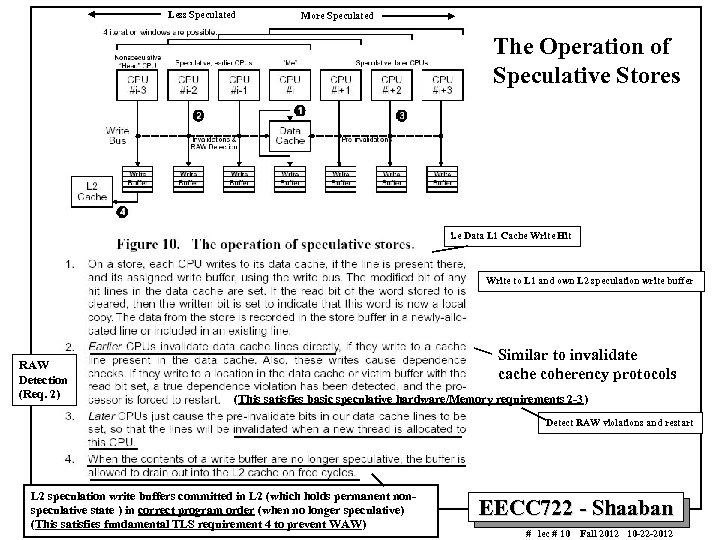

The Operation of Speculative Stores
[Figure labels: Less Speculated; More Speculated.]
• On a Data L1 cache write hit: write to L1 and to own L2 speculation write buffer.
• RAW detection (Req. 2): similar to invalidate cache coherency protocols. Detect RAW violations and restart. (This satisfies basic speculative hardware/memory requirements 2-3.)
• L2 speculation write buffers are committed to L2 (which holds permanent nonspeculative state) in correct program order, when no longer speculative. (This satisfies fundamental TLS requirement 4, to prevent WAW.)

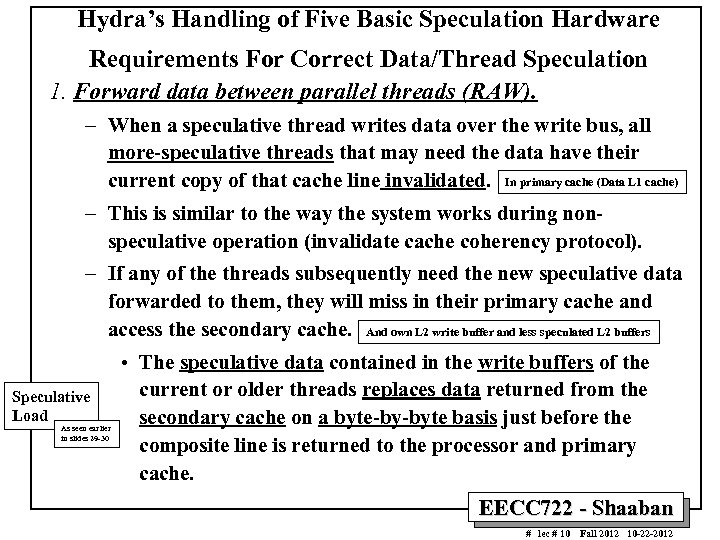
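As a rough illustration of the store path above, the following Python sketch models read bits and invalidation-based RAW detection. The `SpecCore` class and the function names are hypothetical, and `restart` is deliberately simplified (it only clears read bits and the write buffer and counts the restart).

```python
from dataclasses import dataclass, field


@dataclass
class SpecCore:
    """Hypothetical core model; lower `seq` = less speculative thread."""
    seq: int
    l1: dict = field(default_factory=dict)
    read_bits: set = field(default_factory=set)   # addresses read speculatively
    write_buffer: dict = field(default_factory=dict)
    restarts: int = 0


def speculative_read(core, addr, value):
    """Fill L1 and set the read bit that marks a possibly-too-early read."""
    core.l1[addr] = value
    core.read_bits.add(addr)


def restart(core):
    """Simplified restart: drop the thread's speculative bookkeeping."""
    core.read_bits.clear()
    core.write_buffer.clear()
    core.restarts += 1


def speculative_store(writer, cores, addr, value):
    """Write to own L1 and buffer; invalidate more speculative copies."""
    writer.l1[addr] = value
    writer.write_buffer[addr] = value
    violated = []
    for c in cores:
        if c.seq <= writer.seq:
            continue                      # earlier threads must not see this write
        c.l1.pop(addr, None)              # invalidate the stale L1 copy
        if addr in c.read_bits:           # it read too early: RAW violation
            violated.append(c.seq)
            restart(c)
    return violated


cores = [SpecCore(0), SpecCore(1), SpecCore(2)]
speculative_read(cores[2], 0x10, 5)       # thread 2 reads X early
violated = speculative_store(cores[1], cores, 0x10, 9)
```

In this run thread 2 is restarted because a less speculative thread wrote an address it had already read, while thread 0 is untouched.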

Hydra’s Handling of Five Basic Speculation Hardware Requirements For Correct Data/Thread Speculation
1. Forward data between parallel threads (RAW).
– When a speculative thread writes data over the write bus, all more-speculative threads that may need the data have their current copy of that cache line invalidated in their primary cache (Data L1 cache).
– This is similar to the way the system works during nonspeculative operation (invalidate cache coherency protocol).
– If any of the threads subsequently need the new speculative data forwarded to them, they will miss in their primary cache and access the secondary cache, along with their own L2 write buffer and the less speculated L2 buffers (the speculative load operation seen earlier in slides 29-30).
• The speculative data contained in the write buffers of the current or older threads replaces data returned from the secondary cache on a byte-by-byte basis just before the composite line is returned to the processor and primary cache.

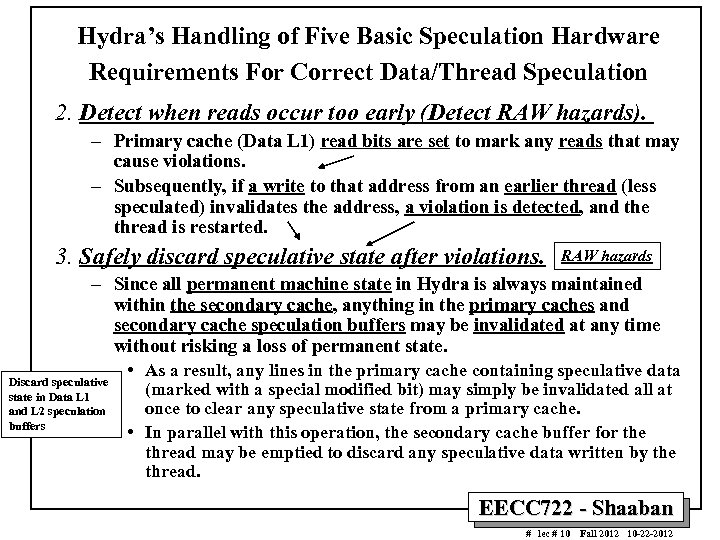
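The byte-by-byte replacement that builds the composite line can be pictured with a small Python sketch. The function name `merge_line`, the dictionary representation of a write buffer, and the rule that a newer thread's bytes override an older thread's bytes are assumptions of this example.

```python
def merge_line(l2_line, buffers):
    """Build the composite cache line returned to the processor.

    l2_line: bytes read from the secondary cache.
    buffers: list of {byte_offset: byte_value} dicts, ordered from the
             least speculative thread up to the requesting thread itself.
    """
    line = bytearray(l2_line)
    for buf in buffers:                  # later entries overwrite earlier ones
        for offset, byte in buf.items():
            line[offset] = byte
    return bytes(line)


older_thread = {0: 0xAA, 1: 0xBB}        # bytes written by an older thread
own_thread = {1: 0xCC}                   # byte written by the requesting thread
composite = merge_line(bytes(8), [older_thread, own_thread])
```

Bytes that no visible buffer has written keep the value returned from the secondary cache.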

Hydra’s Handling of Five Basic Speculation Hardware Requirements For Correct Data/Thread Speculation
2. Detect when reads occur too early (detect RAW hazards).
– Primary cache (Data L1) read bits are set to mark any reads that may cause violations.
– Subsequently, if a write to that address from an earlier (less speculated) thread invalidates the address, a violation is detected, and the thread is restarted.
3. Safely discard speculative state after violations (RAW hazards).
– Since all permanent machine state in Hydra is always maintained within the secondary cache, anything in the primary caches and secondary cache speculation buffers may be invalidated at any time without risking a loss of permanent state. That is, speculative state is discarded in the Data L1 and the L2 speculation buffers.
• As a result, any lines in the primary cache containing speculative data (marked with a special modified bit) may simply be invalidated all at once to clear any speculative state from a primary cache.
• In parallel with this operation, the secondary cache buffer for the thread may be emptied to discard any speculative data written by the thread.

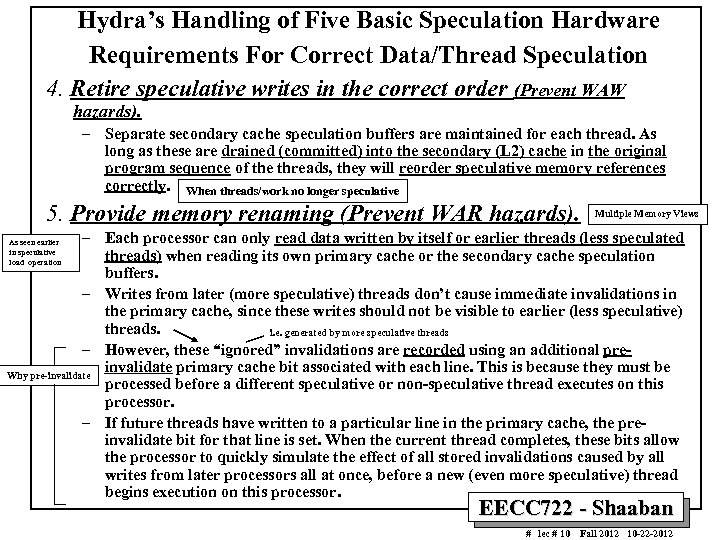
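A minimal sketch of the discard step for requirement 3, assuming a hypothetical `L1Line` record with `modified` and `read` flags. Clearing the read bits during the discard is an assumption of this example; the slides only describe invalidating modified lines and emptying the thread's buffer.

```python
from dataclasses import dataclass


@dataclass
class L1Line:
    """Hypothetical L1 line with the speculation flags used in this sketch."""
    value: int
    modified: bool = False   # holds speculative data written by this thread
    read: bool = False       # was read speculatively


def discard_speculative_state(l1, write_buffer):
    """Drop all speculative state of one thread; L2 is never touched."""
    for addr in [a for a, line in l1.items() if line.modified]:
        del l1[addr]                     # invalidate speculative lines at once
    for line in l1.values():
        line.read = False                # assumption: read bits are reset too
    write_buffer.clear()                 # empty the thread's L2 speculation buffer


l1 = {0x0: L1Line(1), 0x4: L1Line(2, modified=True), 0x8: L1Line(3, read=True)}
write_buffer = {0x4: 2}
discard_speculative_state(l1, write_buffer)
```

Because permanent state lives only in the secondary cache, nothing here needs to be written back before it is thrown away.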

Hydra’s Handling of Five Basic Speculation Hardware Requirements For Correct Data/Thread Speculation
4. Retire speculative writes in the correct order (prevent WAW hazards).
– Separate secondary cache speculation buffers are maintained for each thread. As long as these are drained (committed) into the secondary (L2) cache in the original program sequence of the threads, once the threads/work are no longer speculative, they will reorder speculative memory references correctly.
5. Provide memory renaming (prevent WAR hazards): multiple memory views.
– Each processor can only read data written by itself or earlier (less speculated) threads when reading its own primary cache or the secondary cache speculation buffers (as seen earlier in the speculative load operation).
– Writes from later (more speculative) threads don’t cause immediate invalidations in the primary cache, since these writes should not be visible to earlier (less speculative) threads.
– However, these “ignored” invalidations (i.e. those generated by more speculative threads) are recorded using an additional preinvalidate primary cache bit associated with each line. Why pre-invalidate: they must be processed before a different speculative or non-speculative thread executes on this processor.
– If future threads have written to a particular line in the primary cache, the preinvalidate bit for that line is set. When the current thread completes, these bits allow the processor to quickly simulate the effect of all stored invalidations caused by all writes from later processors all at once, before a new (even more speculative) thread begins execution on this processor.

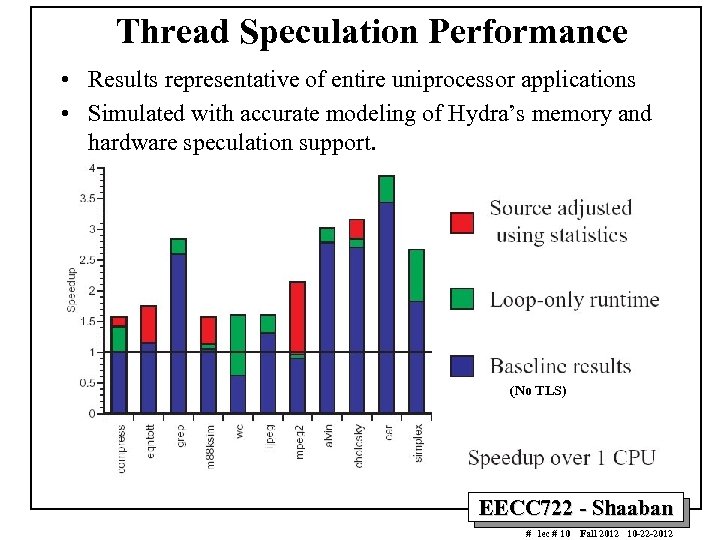
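Requirements 4 and 5 can be sketched together in Python. Everything named here (`commit_in_order`, `PreInvalidateL1`, the `seq` keys) is hypothetical, and draining all buffers in one call is a simplification: in the scheme described above each buffer is committed when its thread stops being speculative.

```python
def commit_in_order(l2, buffers_by_seq):
    """Drain per-thread buffers into L2 in original program order."""
    for seq in sorted(buffers_by_seq):       # lowest seq = earliest thread
        l2.update(buffers_by_seq[seq])       # later threads overwrite earlier ones
        buffers_by_seq[seq].clear()


class PreInvalidateL1:
    """Hypothetical L1 model that defers invalidations from later threads."""

    def __init__(self, lines):
        self.lines = dict(lines)             # address -> value
        self.preinvalidate = set()           # lines written by later threads

    def note_later_write(self, addr):
        """A more speculative thread wrote addr: keep the line, record the bit."""
        if addr in self.lines:
            self.preinvalidate.add(addr)

    def start_next_thread(self):
        """Apply all recorded invalidations at once before a new thread runs."""
        for addr in self.preinvalidate:
            self.lines.pop(addr, None)
        self.preinvalidate.clear()


l2 = {0x20: 0}
buffers = {2: {0x20: 30}, 1: {0x20: 20}}     # two threads wrote the same address
commit_in_order(l2, buffers)

l1 = PreInvalidateL1({0x20: 0, 0x24: 5})
l1.note_later_write(0x20)
value_seen_by_current_thread = l1.lines[0x20]
l1.start_next_thread()
```

After the commit, L2 holds the value from the later thread, matching sequential program order. The current thread keeps seeing its own view of line 0x20 until it completes, at which point the deferred invalidation takes effect.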

Thread Speculation Performance
• Results representative of entire uniprocessor applications.
• Simulated with accurate modeling of Hydra’s memory and hardware speculation support.
[Figure annotation: "(No TLS)".]

Hydra Conclusions
• Hydra offers a number of advantages:
– Good performance on parallel applications.
– Promising performance on difficult-to-parallelize sequential (single-threaded) applications using Data/Thread Level Speculation (TLS) mechanisms.
– Scalable, modular design.
– Low hardware overhead support for speculative thread parallelism (compared to other TLS architectures), yet greatly increases the number of parallelizable applications (the main goal of TLS).

Other Thread Level Speculation (TLS) Efforts: Wisconsin Multiscalar (1995)
• This CMP-based design proposed the first reasonable hardware to implement TLS.
• Unlike Hydra, Multiscalar implements a ring-like network between all of the processors to allow direct register-to-register communication.
– Along with hardware-based thread sequencing, this type of communication allows much smaller threads to be exploited at the expense of more complex processor cores.
• The designers proposed two different speculative memory systems to support the Multiscalar core.
– The first was a unified primary cache, or address resolution buffer (ARB). Unfortunately, the ARB has most of the complexity of Hydra’s secondary cache buffers at the primary cache level, making it difficult to implement.
– Later, they proposed the speculative versioning cache (SVC).
• The SVC uses write-back primary caches to buffer speculative writes in the primary caches, using a sophisticated coherence scheme.

Other Thread Level Speculation (TLS) Efforts: Carnegie-Mellon Stampede
• This CMP-with-TLS proposal is very similar to Hydra, including the use of software speculation handlers.
• However, the hardware is simpler than Hydra’s.
• The design uses write-back primary caches to buffer writes (similar to those in the SVC) and sophisticated compiler technology to explicitly mark all memory references that require forwarding to another speculative thread.
• Their simplified SVC must drain its speculative contents as each thread completes, unfortunately resulting in heavy bursts of bus activity.

Other Thread Level Speculation (TLS) Efforts: MIT M-machine
• This CMP design has three processors that share a primary cache and can communicate register-to-register through a crossbar.
• Each processor can also switch dynamically among several threads (fine-grain multithreaded, not SMT). (TLS & SMT??)
• As a result, the hardware connecting processors together is quite complex and slow.
• However, programs executed on the M-machine can be parallelized using very fine-grain mechanisms that are impossible on an architecture that shares outside of the processor cores, like Hydra.
• Performance results show that on typical applications extremely fine-grained parallelization is often not as effective as parallelism at the levels that Hydra can exploit. The overhead incurred by frequent synchronizations reduces the effectiveness.