- Number of slides: 47

Data Quality Class 6

This Week
- Homework Questions
- Data Standardization

Data Standardization
- What is a standard?
- Benefits of Standardization
- Defining Data standards
- Testing for standard form
- Transforming into standard form

What is a Standard?
- A standard is something set up and established by authority, custom, or general consent as a model or example
- A model to which all objects of the same class must conform

What is a Standard? 2
- Conforms to a predefined expected format, which may be defined by:
  - an organization with some official authority (e.g., government)
  - some recognized authoritative board (such as a standards committee)
  - negotiated agreement (such as electronic data interchange (EDI) agreements)
  - de facto convention (e.g., telephone number formats)

Benefits of Standardization
- Conformity for comparison (as well as aggregation and analysis purposes)
- An audit trail for data error accountability
- A streamlined means for the transfer and sharing of information

Defining Standards
- Find a representative body
- Identify a simple set of rules that completely specifies the valid structure and meaning of a correct data value
- Present the standard to the committee (or even the community as a whole) for comments
- Document and publish the standard

Testing for Standard Form
- If there is a standard, there should be a way to test whether data is in standard form
- Example: US telephone numbers
  - Defined by Industry Numbering Committee (INC)
  - NPA: Numbering Plan Area code
  - NXX: Central Office Code
- Test format conformance (e.g., 1-XXX-YYY-ZZZZ for telephone numbers)
- Test for validity (e.g., is XXX a valid NPA, is YYY a valid NXX for the NPA XXX)
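
The two tests on this slide can be sketched in Python as follows. This is only an illustration: the `VALID_NXX_BY_NPA` table and its contents are made up for the example, and a real validity test would load the current NPA/NXX assignments from an authoritative source.

```python
import re

# Format conformance: 1-XXX-YYY-ZZZZ with digits in every group.
PHONE_FORMAT = re.compile(r"1-(\d{3})-(\d{3})-(\d{4})")

# Hypothetical lookup table (illustrative values only): the NXX codes
# considered valid for each NPA.
VALID_NXX_BY_NPA = {
    "301": {"555", "754"},
    "212": {"555"},
}

def conforms_to_format(value: str) -> bool:
    """True if the value has the shape 1-XXX-YYY-ZZZZ."""
    return PHONE_FORMAT.fullmatch(value) is not None

def is_valid_number(value: str) -> bool:
    """True if the value conforms AND its NPA/NXX pair is in the table."""
    match = PHONE_FORMAT.fullmatch(value)
    if match is None:
        return False
    npa, nxx, _line = match.groups()
    return nxx in VALID_NXX_BY_NPA.get(npa, set())
```

Keeping the two checks separate mirrors the slide: a value can be well formed and still fail the validity lookup.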

Transforming into Standard Form
- Given a good standard, it should be straightforward to transform data into that form
- Must be able to recognize data components to be able to place them in proper locations
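
As a rough sketch of such a transformation, the function below recognizes the digit components of a US telephone number and places them into the 1-XXX-YYY-ZZZZ form used in the earlier example. The function name and the choice to return `None` for unrecognizable input are assumptions made for illustration.

```python
import re
from typing import Optional

def to_standard_phone(raw: str) -> Optional[str]:
    """Rewrite a US phone number as 1-XXX-YYY-ZZZZ, or return None."""
    digits = re.sub(r"\D", "", raw)          # keep only the digits
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]                  # drop the leading country code
    if len(digits) != 10:
        return None                          # components cannot be recognized
    return f"1-{digits[0:3]}-{digits[3:6]}-{digits[6:]}"
```

For instance, `to_standard_phone("(301) 754-6350")` yields `"1-301-754-6350"`, while a seven-digit number with no area code is rejected because the NPA component is missing.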

Error Paradigms
- How are errors introduced into data?
  - Attribute Granularity
  - Finger Flubs
  - Format Conformance
  - Semi-structured form
  - Transcription Errors
  - Transformation Flubs
  - Misfielded Data
  - Floating Data
  - Overloaded Attributes

Attribute Granularity
- Data granularity is not at the proper level
  - Example: “name” vs. last name, first name
- Creates confusion when more than one entity can be represented in the same attribute

Finger Flubs
- This happens whem the incorrect letter is typed on the keybpard
- Also, sometimes mnore than one letter is hit by mistake
- Also, a leter might be missing

Format Conformance
- When the format is too restrictive, the user may not be able to properly enter the data
  - Example: First name, middle initial, last name
    - Some people go by their middle name

Semi-structured form
- There may be multiple “valid” formats that appear in free-form
  - Example: corporate structure laid out at web sites
  - Example:
    - (first name) (middle initial) (last name), or
    - (last name), (first name)
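
A minimal sketch of handling the two name formats above: try each acceptable form in turn and report which components were found. The regular expressions and the `parse_name` helper are illustrative assumptions and cover only these two forms.

```python
import re
from typing import Dict, Optional

# (last name), (first name)
LAST_FIRST = re.compile(r"(?P<last>[^,]+),\s*(?P<first>\S+)")
# (first name) (middle initial) (last name); the period after the initial is optional
FIRST_MI_LAST = re.compile(
    r"(?P<first>\S+)\s+(?P<middle>[A-Za-z])\.?\s+(?P<last>\S+)"
)

def parse_name(value: str) -> Optional[Dict[str, str]]:
    """Return the name components, or None if no known form matches."""
    value = value.strip()
    for pattern in (LAST_FIRST, FIRST_MI_LAST):
        match = pattern.fullmatch(value)
        if match:
            return {k: v.strip() for k, v in match.groupdict().items()}
    return None
```

Values that match neither form come back as `None`, so they can be set aside for review rather than silently misparsed.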

Transcription Errors
- Data is collected through “fuzzy” media and is not properly transcribed
  - Mispronounced data
  - Incorrect spellings

Transformation Flubs
- Automated processing may introduce errors
  - We’ve already seen this example:
    - A database of names was found to have an inordinately large number of high-frequency word fragments, such as “INCORP,” “ATIONAL,” “COMPA.”
    - Text spanned multiple fields, which were not concatenated properly on extraction

Misfielded Data
- Data that is placed in the wrong field
- Example: street addresses
  - Fields may not be big enough
  - Text spills over to next field

Floating Data
- Information that belongs in one field is contained in different fields in different records in the database
- See examples in housing authority database

Overloaded Attributes
- More than one entity shows up in data
- Example:
  - John and Mary Smith, TTES, Smith Foundation

Record Parsing
- Tokenizing data elements within an attribute
- Assign meaning to tokens
  - Domain membership
  - Patterns
  - Context

Record Parsing 2
- In order to do this, we need:
  - The names and types of the data components expected to be found in the field
  - The set of valid values for each data component type
  - The acceptable forms that the data may take
  - A means for tagging records that have unidentified data components
- We can do this with domains, mappings, and rules!
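
The sketch below shows one way the pieces listed above might fit together for a name field: tokens are first tested for domain membership, then against patterns, and anything left over is tagged so the record can be flagged. The domain contents, pattern names, and the `UNKNOWN` tag are all hypothetical, and context-based tagging is left out to keep the example short.

```python
import re
from typing import List, Tuple

# Hypothetical domains: enumerated valid values per component type.
DOMAINS = {
    "prefix": {"MR", "MRS", "MS", "DR"},
    "suffix": {"JR", "SR", "PHD"},
}

# Hypothetical patterns for component types that cannot be enumerated.
# Checked in order, so a single letter is tagged "initial" before "word".
PATTERNS = {
    "initial": re.compile(r"[A-Z]"),
    "word": re.compile(r"[A-Z]+"),
}

def tag_tokens(field: str) -> List[Tuple[str, str]]:
    """Tokenize a field and assign each token a component type."""
    cleaned = field.upper().replace(".", " ").replace(",", " ")
    tagged = []
    for token in cleaned.split():
        tag = next((n for n, values in DOMAINS.items() if token in values), None)
        if tag is None:
            tag = next(
                (n for n, p in PATTERNS.items() if p.fullmatch(token)), "UNKNOWN"
            )
        tagged.append((token, tag))
    return tagged

def has_unidentified(field: str) -> bool:
    """True if any token could not be assigned a component type."""
    return any(tag == "UNKNOWN" for _, tag in tag_tokens(field))
```

With these tables, `has_unidentified("John Smith 3rd")` is true because `3RD` belongs to no domain and matches no pattern, which is the signal to tag the record for review.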

Data Correction
- If we can automatically recognize data as not conforming to a standard, can we automate its correction?
- Yes, if we have translation rules or mappings from incorrect values to correct values
- This is how many data cleansing applications work
  - Example: Internatinal → International

Data Correction 2
- Correction by consolidation
- Makes use of record linkage
  - Find a pivot attribute across which to link
  - The pivot should be unique (such as social security number)
  - Link records together and consolidate “correct” name based on other factors, such as data source, timestamp, etc.
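
A small sketch of correction by consolidation, assuming each record is a dictionary with a pivot field and a timestamp. The field names (`ssn`, `name`, `updated`), the sample records, and the "most recent record wins" policy are assumptions chosen for illustration; a real policy might also weigh the data source.

```python
from collections import defaultdict
from typing import Dict, List

def consolidate(records: List[dict], pivot: str = "ssn") -> Dict[str, str]:
    """Link records on the pivot and keep the name from the newest record."""
    groups = defaultdict(list)
    for record in records:
        groups[record[pivot]].append(record)
    # ISO-formatted dates compare correctly as plain strings.
    return {
        key: max(linked, key=lambda r: r["updated"])["name"]
        for key, linked in groups.items()
    }

# Made-up sample data for the sketch.
SAMPLE_RECORDS = [
    {"ssn": "000-00-0001", "name": "Beth R. Johnson", "updated": "2001-03-01"},
    {"ssn": "000-00-0001", "name": "Elizabeth R. Johnson", "updated": "2002-07-15"},
    {"ssn": "000-00-0002", "name": "Robert Smith", "updated": "2000-01-10"},
]
```

Calling `consolidate(SAMPLE_RECORDS)` links the first two records through their shared pivot value and keeps the name from the later one.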

Data Standardization
- Use standard form as a pivot for linkage and consolidation
- Example
  - Elizabeth R. Johnson, 123 Main St
  - Beth R. Johnson, 123 Main St
- It’s a good hunch that these records represent the same person
- We can standardize components based on nicknames, abbreviations, etc.

Data Standardization 2
- Examples:
  - Robert, Rob, Bob, Robby, Bobby
  - Elizabeth, Elisabeth, Lizzie, Beth
  - International, Int’l, Intrntnl
- Make use of a standard form, even if it is not necessarily correct
  - In other words, “change” all Roberts, Robs, Bobs, Robbys, and Bobbys to Robert
  - Use standard form for linkage

Data Standardization 3
- Again, this concept sounds familiar
  - Many to one mapping
  - Maintain the standardization mapping as metadata
  - Apply mapping to get standard form
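
The many-to-one mapping can be sketched with the name variants from the earlier slides. The `VARIANTS` table plays the role of the metadata; the helper names and the shape of the linkage key are assumptions for illustration.

```python
from typing import Tuple

# Metadata: each standard form with the variants that map to it.
VARIANTS = {
    "ROBERT": ["ROB", "BOB", "ROBBY", "BOBBY"],
    "ELIZABETH": ["ELISABETH", "LIZZIE", "BETH"],
}

# Invert into the many-to-one lookup: variant -> standard form.
STANDARD_FORM = {
    variant: standard
    for standard, variants in VARIANTS.items()
    for variant in variants
}

def standardize_first_name(name: str) -> str:
    """Map a first name to its standard form; unknown names pass through."""
    upper = name.upper()
    return STANDARD_FORM.get(upper, upper)

def linkage_key(first: str, last: str, address: str) -> Tuple[str, str, str]:
    """Build a key for linkage from the standardized components."""
    return (standardize_first_name(first), last.upper(), address.upper())
```

With this mapping, the records for Elizabeth R. Johnson and Beth R. Johnson at 123 Main St produce the same key. The standard form is used only for linkage; nothing here claims that "Elizabeth" is the correct name for either record.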

Abbreviation Expansion
- Rule/mapping oriented
- Translates common abbreviations to a standard form
- Types:
  - Shortenings (INC for INCORPORATED)
  - Compression (INTL for INTERNATIONAL)
  - Acronyms (IBM for you know what)
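
A token-level sketch of abbreviation expansion using the three examples above. The table contents beyond those examples, the function name, and the decision to strip periods before lookup are assumptions.

```python
# Illustrative mapping covering one example of each type.
ABBREVIATIONS = {
    "INC": "INCORPORATED",                      # shortening
    "INTL": "INTERNATIONAL",                    # compression
    "IBM": "INTERNATIONAL BUSINESS MACHINES",   # acronym
}

def expand_abbreviations(value: str) -> str:
    """Replace each known abbreviation token with its standard form."""
    tokens = value.upper().replace(".", "").split()
    return " ".join(ABBREVIATIONS.get(token, token) for token in tokens)
```

Because the lookup is per token, `expand_abbreviations("Acme Intl Inc.")` becomes `ACME INTERNATIONAL INCORPORATED`, while tokens that are not in the table are left unchanged.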

Transformation Rules
- Standardization is a process of transforming nonconforming forms to conforming forms
- Use mappings/transformation rules
- Create a rule engine instance and integrate the rules
- Engine becomes a filter

Transformation Engine
- Application of context-sensitive consistency and derivation rules transforms a data instance into appropriate form
- In this case, derivation rules act on nonstandard values
- Also referred to as “edits”
- Rule base grows as violations are noted

Transformation Engine 2
1. Determine validity expectations (= what should the data instance look like if it were in standard form)
2. Create a validity filter with invalid records forwarded to a domain expert
3. For each violation (or set of violations), the domain expert determines if a general rule can be applied to transform the bad record into its standard form
4. Merge transformation rules into validity filter
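
The four steps can be sketched as a small filter class. Everything here is a hypothetical illustration rather than a specific rule engine: the class name, the `for_expert` queue, and the ZIP code validity test and hyphenation rule used as the example.

```python
import re
from typing import Callable, List, Optional

Rule = Callable[[str], Optional[str]]

class ValidityFilter:
    """Passes valid values, tries known rules, queues the rest for an expert."""

    def __init__(self, is_valid: Callable[[str], bool]) -> None:
        self.is_valid = is_valid          # step 1: the validity expectation
        self.rules: List[Rule] = []       # step 4: rules merged over time
        self.for_expert: List[str] = []   # step 2: invalid values to review

    def add_rule(self, rule: Rule) -> None:
        self.rules.append(rule)

    def process(self, value: str) -> Optional[str]:
        if self.is_valid(value):
            return value
        for rule in self.rules:
            candidate = rule(value)
            if candidate is not None and self.is_valid(candidate):
                return candidate
        self.for_expert.append(value)     # no rule applies yet
        return None

# Example expectation: a ZIP code is 5 digits, optionally followed by -NNNN.
ZIP_FORM = re.compile(r"\d{5}(-\d{4})?")

def is_valid_zip(value: str) -> bool:
    return ZIP_FORM.fullmatch(value) is not None

# Step 3: a general rule an expert might write after seeing "209101234".
def hyphenate_nine_digits(value: str) -> Optional[str]:
    if re.fullmatch(r"\d{9}", value):
        return f"{value[:5]}-{value[5:]}"
    return None
```

Before the rule is added, a nine-digit value with no hyphen lands in `for_expert`; after `add_rule(hyphenate_nine_digits)`, the same value is transformed and passes the filter. This is how the rule base grows as violations are noted.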

Example: Address Standardization
- United States Postal Service (USPS) has done a very good job of presenting their addressing standard
- Their goal: increase readability of mail to increase deliverability
- Benefits are given to postal customers when data is in correct form

USPS Address Standard
- Multiple address lines
  - Recipient line
  - Delivery Address line
  - Last line
- Standard Address Block

Recipient Line
- Person or entity to whom mail is to be delivered
- First line of standard address block

Delivery Address Line
- Contains location information
- Includes street address
- Broken down into:
  - Primary address number
  - Predirectional and/or Postdirectional
  - Street name
  - Suffix (RD, ST, etc.)
  - Secondary address designator
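
A simplified sketch of breaking a delivery address line into the components listed above. The small lookup sets stand in for the full USPS tables, and the function assumes the line is already abbreviated and in the usual order, so it is an illustration rather than a complete parser.

```python
from typing import Dict, Optional

# Small illustrative subsets; the complete lists are much longer.
DIRECTIONALS = {"N", "S", "E", "W", "NE", "NW", "SE", "SW"}
SUFFIXES = {"RD", "ST", "AVE", "BLVD", "DR", "LN", "HWY"}
SECONDARY_DESIGNATORS = {"APT", "STE", "UNIT"}

def parse_delivery_line(line: str) -> Dict[str, Optional[str]]:
    """Split a delivery address line into its named components."""
    tokens = line.upper().split()
    parts: Dict[str, Optional[str]] = {
        "number": None, "predirectional": None, "street_name": None,
        "suffix": None, "postdirectional": None, "secondary": None,
    }
    # The secondary designator and everything after it form one component.
    for i, token in enumerate(tokens):
        if token in SECONDARY_DESIGNATORS:
            parts["secondary"] = " ".join(tokens[i:])
            tokens = tokens[:i]
            break
    if tokens and tokens[0].isdigit():
        parts["number"] = tokens.pop(0)
    # Keep at least one token for the street name in each step below.
    if len(tokens) > 1 and tokens[0] in DIRECTIONALS:
        parts["predirectional"] = tokens.pop(0)
    if len(tokens) > 1 and tokens[-1] in DIRECTIONALS:
        parts["postdirectional"] = tokens.pop()
    if len(tokens) > 1 and tokens[-1] in SUFFIXES:
        parts["suffix"] = tokens.pop()
    parts["street_name"] = " ".join(tokens) or None
    return parts
```

Because only abbreviated directionals are in the lookup set, a line such as `4500 East West Hwy` keeps `EAST WEST` as the street name. Street names that really are directional words remain a special case that a fuller parser would need to handle explicitly.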

Last Line
- City
- State
- ZIP+4 code

Standard Abbreviations
- USPS expects addresses to be represented in a reduced form, using standard abbreviations
- This can be represented using a mapping
- See example (pub. 28)

ZIP+4
- Encoding of geographical data
- Actually, the ZIP code is an overloaded data value
- It contains state information as well as delivery location focus

Address Standardization
- First: Is the address already in standard form?
  - This can be checked by making sure that the address conforms to the address block layout
  - Some special cases need addressing (East West Hwy)
  - Are real city names used, or vanity names?
  - Is correct ZIP+4 used?

Address Standardization 2
- More…
  - Identify all addressing elements
  - Make sure placement is correct; if not, correct it
  - Is the street specified a valid street name? (USPS provides database)
  - Is the address number valid within the street address ranges?

Address Standardization 3
- Next: Correct if necessary
  - Identify all address elements
  - Look up proper city name
  - Look up correct ZIP+4
    - If the right one cannot be used, use the ZIP+4 centroid
  - Move elements to proper location in address block
  - Transform elements into standard abbreviated form
  - Generate bar code (if needed)
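
The last few correction steps, placing elements in the address block and applying standard abbreviations, might look like the sketch below. It assumes the elements have already been identified and looked up; the function name, the short suffix table, the uppercase output, and the sample address are assumptions for illustration.

```python
# A few street suffix abbreviations; the full table is far longer.
SUFFIX_ABBREVIATIONS = {
    "STREET": "ST",
    "ROAD": "RD",
    "AVENUE": "AVE",
    "BOULEVARD": "BLVD",
    "HIGHWAY": "HWY",
}

def format_address_block(recipient: str, number: str, street_name: str,
                         suffix: str, city: str, state: str,
                         zip_code: str) -> str:
    """Assemble recipient, delivery address, and last line, in that order."""
    suffix = suffix.upper()
    suffix = SUFFIX_ABBREVIATIONS.get(suffix, suffix)  # abbreviated form
    delivery_line = f"{number} {street_name.upper()} {suffix}"
    last_line = f"{city.upper()} {state.upper()} {zip_code}"
    return "\n".join([recipient.upper(), delivery_line, last_line])
```

Given a recipient of Elizabeth R. Johnson at 123 Main Street, the delivery address line comes out as `123 MAIN ST`, with the recipient line above it and the city, state, and ZIP+4 on the last line.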

Business Data Elements
- USPS standard is a nice source for business rules
- Elements are broken down into element classes:

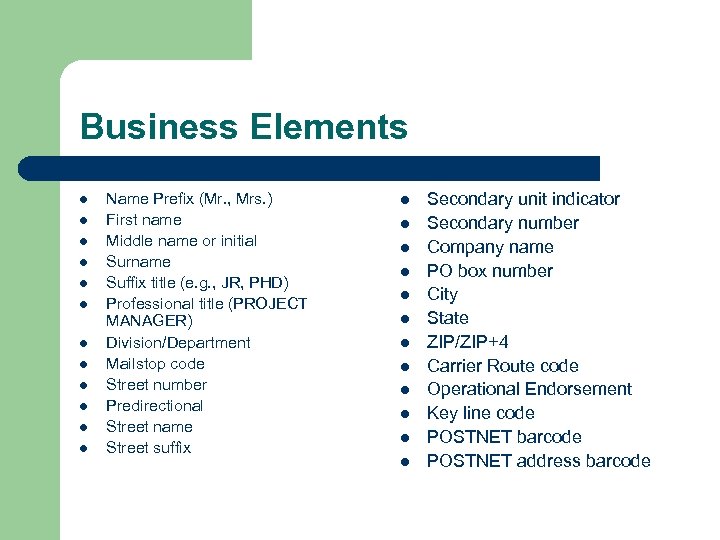

Business Elements
- Name Prefix (Mr., Mrs.)
- First name
- Middle name or initial
- Surname
- Suffix title (e.g., JR, PHD)
- Professional title (PROJECT MANAGER)
- Division/Department
- Mailstop code
- Street number
- Predirectional
- Street name
- Street suffix
- Secondary unit indicator
- Secondary number
- Company name
- PO box number
- City
- State
- ZIP/ZIP+4
- Carrier Route code
- Operational Endorsement
- Key line code
- POSTNET barcode
- POSTNET address barcode

CASS
- Acronym for Coding Accuracy Support System
  - Provides a platform to measure the quality of address matching and standardization software
  - Addresses are CASS certified if they pass USPS-provided tests (i.e., they are standardized)
  - Only mail that is CASS certified can qualify for postage savings

NCOA
- ~20% of population changes addresses each year
- NCOA: National Change of Address

Other Standards
- Telephone industry
- Financial industry (SWIFT, FIX)
- HTML
- SIC codes
- GIS standards

XML
- www.xml.org
- Incredible growth of defined format DTDs and schemas for data interchange
- Review some of these and look for data quality rules!

Next Week
- Data cleansing
- Record linkage
- Similarity and distance
