210ba85362f2c57273ef918a67895a12.ppt

- Количество слайдов: 42

Data Mining Comp. Sc. and Inf. Mgmt. Asian Institute of Technology Instructor: Prof. Sumanta Guha Slide Sources: Han & Kamber “Data Mining: Concepts and Techniques” book, slides by Han, Han & Kamber, adapted and supplemented by Guha

Data Mining Comp. Sc. and Inf. Mgmt. Asian Institute of Technology Instructor: Prof. Sumanta Guha Slide Sources: Han & Kamber “Data Mining: Concepts and Techniques” book, slides by Han, Han & Kamber, adapted and supplemented by Guha

Chapter 6: Classification and Prediction

Chapter 6: Classification and Prediction

Classification vs. Prediction vs. Estimation n Classification is the grouping of existing data. n n n Prediction is the use of existing data values to guess a future value. n n n E. g. , grouping patients based on their medical history. E. g. , grouping students based on their test scores. E. g. , using a patient’s medical history to guess the effect of a treatment. E. g. , using a student’s past test scores to guess chance of success in a future exam. Estimation is the prediction of a continuous value. n n E. g. , predicting a patient’s cholesterol level given certain drugs. E. g. , predicting a student’s score on the GRE knowing her scores on school tests. Prediction of discrete values is most often based on classification, e. g. , middle -aged, overweight, male smoker has high chance of heart attack; young, normal weight, female non-smoker has low chance of heart attack. Question: Why is classification not useful in estimation?

Classification vs. Prediction vs. Estimation n Classification is the grouping of existing data. n n n Prediction is the use of existing data values to guess a future value. n n n E. g. , grouping patients based on their medical history. E. g. , grouping students based on their test scores. E. g. , using a patient’s medical history to guess the effect of a treatment. E. g. , using a student’s past test scores to guess chance of success in a future exam. Estimation is the prediction of a continuous value. n n E. g. , predicting a patient’s cholesterol level given certain drugs. E. g. , predicting a student’s score on the GRE knowing her scores on school tests. Prediction of discrete values is most often based on classification, e. g. , middle -aged, overweight, male smoker has high chance of heart attack; young, normal weight, female non-smoker has low chance of heart attack. Question: Why is classification not useful in estimation?

Classification Procedure Split known data into two sets: a training set and a testing set. Next, two-step classification procedure: 1. Learning step: Use training data to develop a classification model. 2. Testing step: Use the testing data to test the accuracy model. If the accuracy is acceptable the model is put to use on real data.

Classification Procedure Split known data into two sets: a training set and a testing set. Next, two-step classification procedure: 1. Learning step: Use training data to develop a classification model. 2. Testing step: Use the testing data to test the accuracy model. If the accuracy is acceptable the model is put to use on real data.

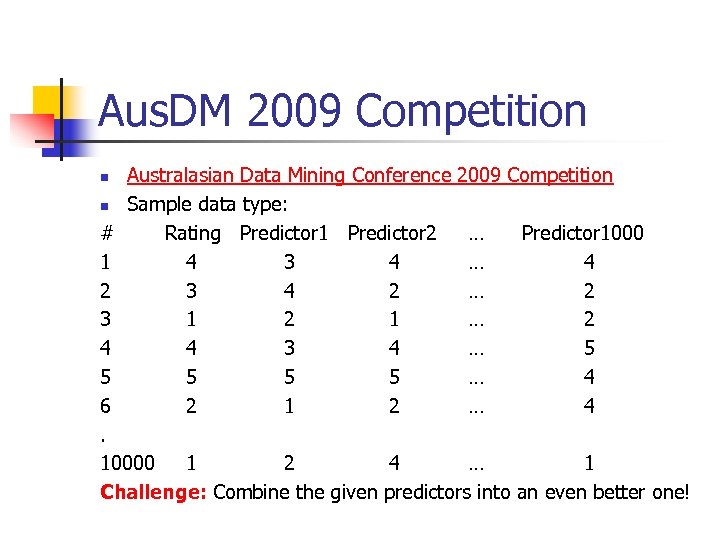

Aus. DM 2009 Competition n n Australasian Data Mining Conference Sample data type: Rating Predictor 1 Predictor 2 4 3 4 2 1 4 3 4 5 5 5 2 1 2 2009 Competition # … Predictor 1000 1 … 4 2 … 2 3 … 2 4 … 5 5 … 4 6 … 4. 10000 1 2 4 … 1 Challenge: Combine the given predictors into an even better one!

Aus. DM 2009 Competition n n Australasian Data Mining Conference Sample data type: Rating Predictor 1 Predictor 2 4 3 4 2 1 4 3 4 5 5 5 2 1 2 2009 Competition # … Predictor 1000 1 … 4 2 … 2 3 … 2 4 … 5 5 … 4 6 … 4. 10000 1 2 4 … 1 Challenge: Combine the given predictors into an even better one!

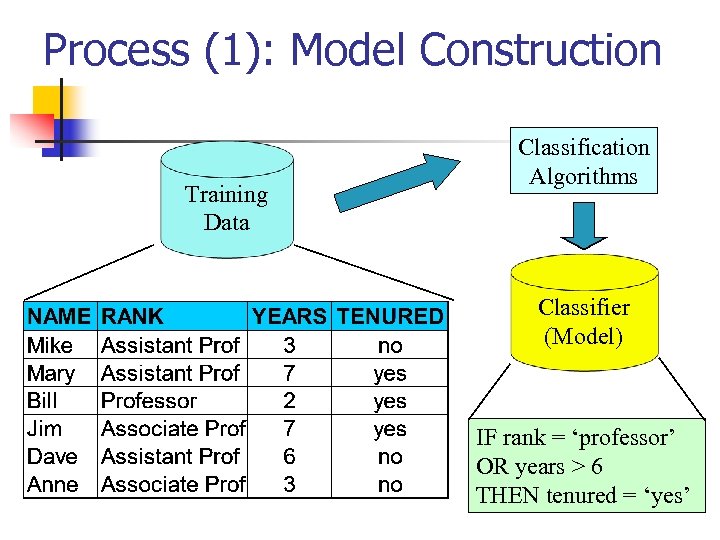

Process (1): Model Construction Training Data Classification Algorithms Classifier (Model) IF rank = ‘professor’ OR years > 6 THEN tenured = ‘yes’

Process (1): Model Construction Training Data Classification Algorithms Classifier (Model) IF rank = ‘professor’ OR years > 6 THEN tenured = ‘yes’

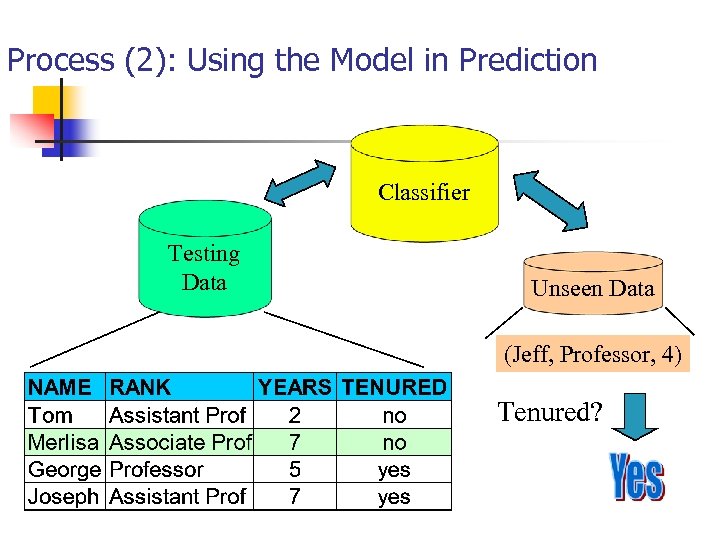

Process (2): Using the Model in Prediction Classifier Testing Data Unseen Data (Jeff, Professor, 4) Tenured?

Process (2): Using the Model in Prediction Classifier Testing Data Unseen Data (Jeff, Professor, 4) Tenured?

Supervised vs. Unsupervised Learning n Supervised learning (classification) n n n Supervision: The training data (observations, measurements, etc. ) are accompanied by labels indicating the class of the observations New data is classified based on the training set Unsupervised learning (clustering) n n The class labels of training data is unknown Given a set of measurements, observations, etc. with the aim of establishing the existence of classes or clusters in the data

Supervised vs. Unsupervised Learning n Supervised learning (classification) n n n Supervision: The training data (observations, measurements, etc. ) are accompanied by labels indicating the class of the observations New data is classified based on the training set Unsupervised learning (clustering) n n The class labels of training data is unknown Given a set of measurements, observations, etc. with the aim of establishing the existence of classes or clusters in the data

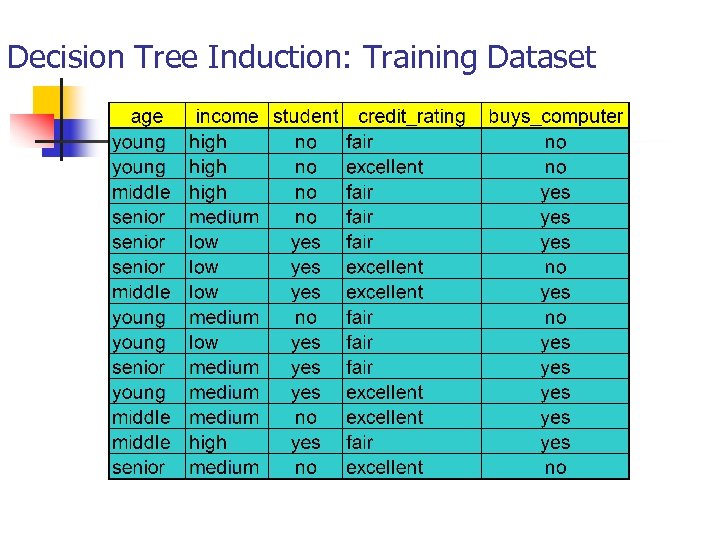

Decision Tree Induction: Training Dataset

Decision Tree Induction: Training Dataset

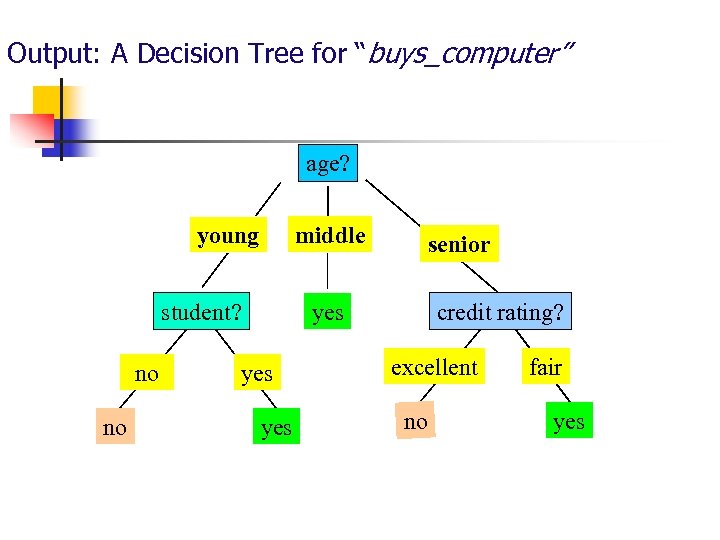

Output: A Decision Tree for “buys_computer” age? middle young student? no no senior credit rating? yes yes excellent no fair yes

Output: A Decision Tree for “buys_computer” age? middle young student? no no senior credit rating? yes yes excellent no fair yes

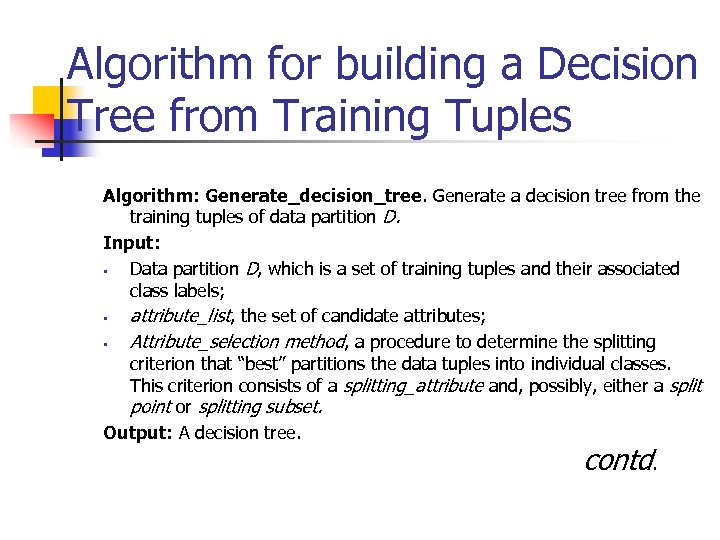

Algorithm for building a Decision Tree from Training Tuples Algorithm: Generate_decision_tree. Generate a decision tree from the training tuples of data partition D. Input: § Data partition D, which is a set of training tuples and their associated class labels; § attribute_list, the set of candidate attributes; § Attribute_selection method, a procedure to determine the splitting criterion that “best” partitions the data tuples into individual classes. This criterion consists of a splitting_attribute and, possibly, either a split point or splitting subset. Output: A decision tree. contd.

Algorithm for building a Decision Tree from Training Tuples Algorithm: Generate_decision_tree. Generate a decision tree from the training tuples of data partition D. Input: § Data partition D, which is a set of training tuples and their associated class labels; § attribute_list, the set of candidate attributes; § Attribute_selection method, a procedure to determine the splitting criterion that “best” partitions the data tuples into individual classes. This criterion consists of a splitting_attribute and, possibly, either a split point or splitting subset. Output: A decision tree. contd.

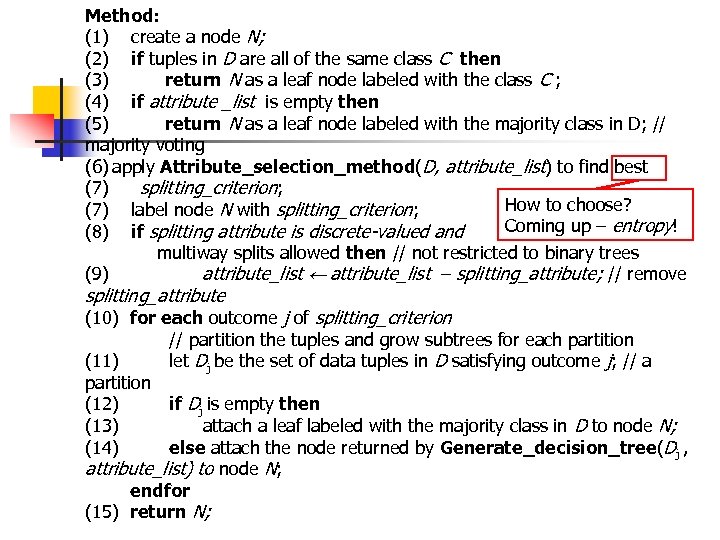

Method: (1) create a node N; (2) if tuples in D are all of the same class C then (3) return N as a leaf node labeled with the class C ; (4) if attribute _list is empty then (5) return N as a leaf node labeled with the majority class in D; // majority voting (6) apply Attribute_selection_method(D, attribute_list) to find best (7) splitting_criterion; How to choose? (7) label node N with splitting_criterion; Coming up – entropy! (8) if splitting attribute is discrete-valued and multiway splits allowed then // not restricted to binary trees (9) attribute_list ← attribute_list – splitting_attribute; // remove splitting_attribute (10) for each outcome j of splitting_criterion // partition the tuples and grow subtrees for each partition (11) let Dj be the set of data tuples in D satisfying outcome j; // a partition (12) if Dj is empty then (13) attach a leaf labeled with the majority class in D to node N; (14) else attach the node returned by Generate_decision_tree(Dj , attribute_list) to node N; endfor (15) return N;

Method: (1) create a node N; (2) if tuples in D are all of the same class C then (3) return N as a leaf node labeled with the class C ; (4) if attribute _list is empty then (5) return N as a leaf node labeled with the majority class in D; // majority voting (6) apply Attribute_selection_method(D, attribute_list) to find best (7) splitting_criterion; How to choose? (7) label node N with splitting_criterion; Coming up – entropy! (8) if splitting attribute is discrete-valued and multiway splits allowed then // not restricted to binary trees (9) attribute_list ← attribute_list – splitting_attribute; // remove splitting_attribute (10) for each outcome j of splitting_criterion // partition the tuples and grow subtrees for each partition (11) let Dj be the set of data tuples in D satisfying outcome j; // a partition (12) if Dj is empty then (13) attach a leaf labeled with the majority class in D to node N; (14) else attach the node returned by Generate_decision_tree(Dj , attribute_list) to node N; endfor (15) return N;

Entropy n n n Entropy measures the uncertainty associated with a random variable. Intuitively, if we toss a coin, then the maximum entropy is if the coin is fair, i. e. , if prob(H) = prob(T) = 0. 5. If the coin is not fair, e. g. , if prob(H) = 0. 75, prob(T) = 0. 25, then entropy is less (there is less uncertainty = less information gain, associated tossing the coin). Calculating entropy: If a random variable X can take values x 1, x 2, …, xn with probabilities p 1, p 2, …, pn, then the entropy of X is given by the formula: H(X) = –∑i=1. . n pi log(pi) (If pi = 0 for some i, then 0 log(0) is taken to be 0. ) Exercise: Calculate the entropy of a fair coin and one with prob(H) = 0. 75, prob(T) = 0. 25. Exercise: Calculate the entropy of a fair dice.

Entropy n n n Entropy measures the uncertainty associated with a random variable. Intuitively, if we toss a coin, then the maximum entropy is if the coin is fair, i. e. , if prob(H) = prob(T) = 0. 5. If the coin is not fair, e. g. , if prob(H) = 0. 75, prob(T) = 0. 25, then entropy is less (there is less uncertainty = less information gain, associated tossing the coin). Calculating entropy: If a random variable X can take values x 1, x 2, …, xn with probabilities p 1, p 2, …, pn, then the entropy of X is given by the formula: H(X) = –∑i=1. . n pi log(pi) (If pi = 0 for some i, then 0 log(0) is taken to be 0. ) Exercise: Calculate the entropy of a fair coin and one with prob(H) = 0. 75, prob(T) = 0. 25. Exercise: Calculate the entropy of a fair dice.

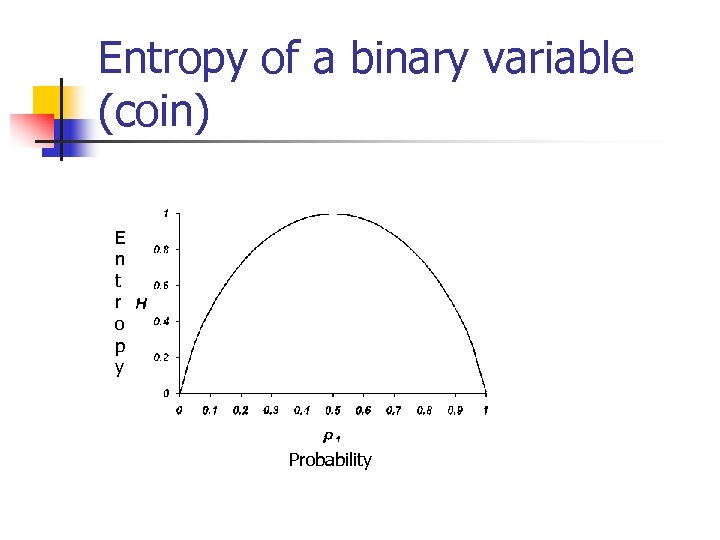

Entropy of a binary variable (coin) E n t r o p y Probability

Entropy of a binary variable (coin) E n t r o p y Probability

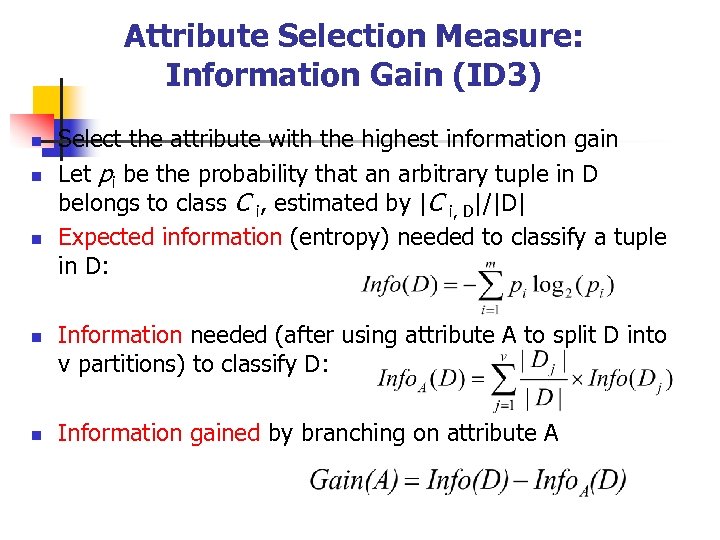

Attribute Selection Measure: Information Gain (ID 3) n n n Select the attribute with the highest information gain Let pi be the probability that an arbitrary tuple in D belongs to class C i, estimated by |C i, D|/|D| Expected information (entropy) needed to classify a tuple in D: Information needed (after using attribute A to split D into v partitions) to classify D: Information gained by branching on attribute A

Attribute Selection Measure: Information Gain (ID 3) n n n Select the attribute with the highest information gain Let pi be the probability that an arbitrary tuple in D belongs to class C i, estimated by |C i, D|/|D| Expected information (entropy) needed to classify a tuple in D: Information needed (after using attribute A to split D into v partitions) to classify D: Information gained by branching on attribute A

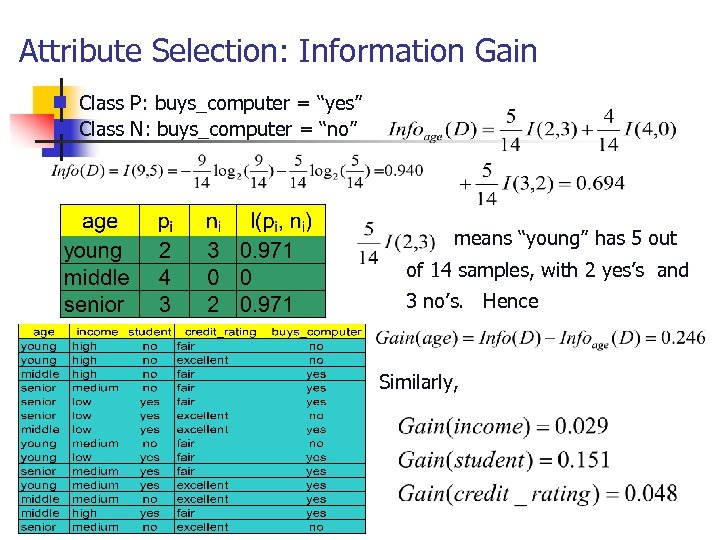

Attribute Selection: Information Gain Class P: buys_computer = “yes” g Class N: buys_computer = “no” g means “young” has 5 out of 14 samples, with 2 yes’s and 3 no’s. Hence Similarly,

Attribute Selection: Information Gain Class P: buys_computer = “yes” g Class N: buys_computer = “no” g means “young” has 5 out of 14 samples, with 2 yes’s and 3 no’s. Hence Similarly,

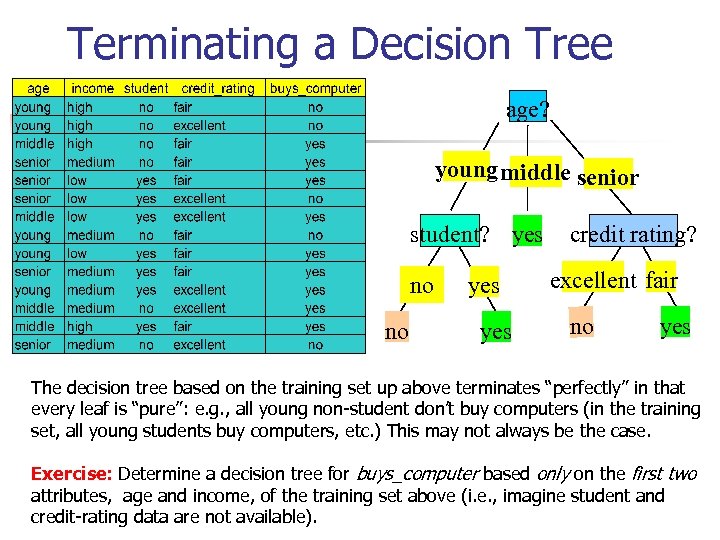

Terminating a Decision Tree age? young middle senior student? yes no no yes credit rating? excellent fair no yes The decision tree based on the training set up above terminates “perfectly” in that every leaf is “pure”: e. g. , all young non-student don’t buy computers (in the training set, all young students buy computers, etc. ) This may not always be the case. Exercise: Determine a decision tree for buys_computer based only on the first two attributes, age and income, of the training set above (i. e. , imagine student and credit-rating data are not available).

Terminating a Decision Tree age? young middle senior student? yes no no yes credit rating? excellent fair no yes The decision tree based on the training set up above terminates “perfectly” in that every leaf is “pure”: e. g. , all young non-student don’t buy computers (in the training set, all young students buy computers, etc. ) This may not always be the case. Exercise: Determine a decision tree for buys_computer based only on the first two attributes, age and income, of the training set above (i. e. , imagine student and credit-rating data are not available).

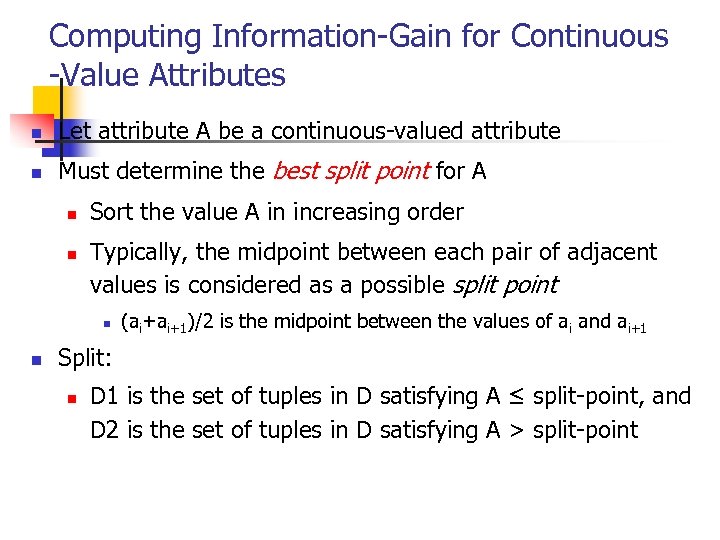

Computing Information-Gain for Continuous -Value Attributes n Let attribute A be a continuous-valued attribute n Must determine the best split point for A n n Sort the value A in increasing order Typically, the midpoint between each pair of adjacent values is considered as a possible split point n n (ai+ai+1)/2 is the midpoint between the values of ai and ai+1 Split: n D 1 is the set of tuples in D satisfying A ≤ split-point, and D 2 is the set of tuples in D satisfying A > split-point

Computing Information-Gain for Continuous -Value Attributes n Let attribute A be a continuous-valued attribute n Must determine the best split point for A n n Sort the value A in increasing order Typically, the midpoint between each pair of adjacent values is considered as a possible split point n n (ai+ai+1)/2 is the midpoint between the values of ai and ai+1 Split: n D 1 is the set of tuples in D satisfying A ≤ split-point, and D 2 is the set of tuples in D satisfying A > split-point

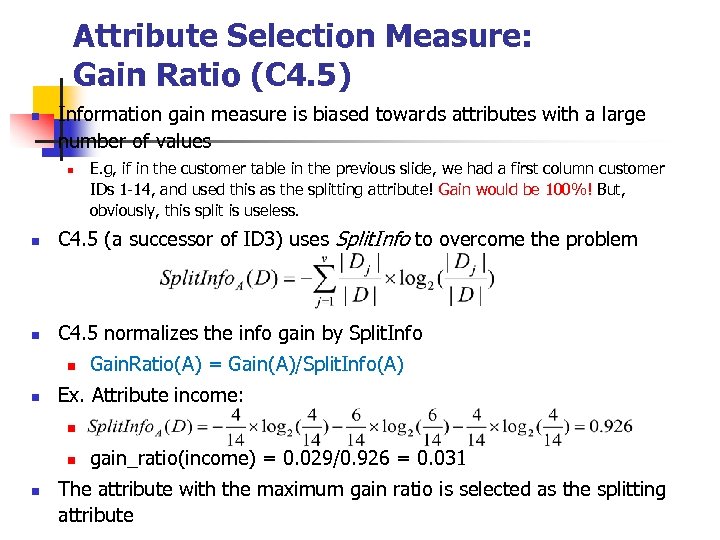

Attribute Selection Measure: Gain Ratio (C 4. 5) n Information gain measure is biased towards attributes with a large number of values n E. g, if in the customer table in the previous slide, we had a first column customer IDs 1 -14, and used this as the splitting attribute! Gain would be 100%! But, obviously, this split is useless. n C 4. 5 (a successor of ID 3) uses Split. Info to overcome the problem n C 4. 5 normalizes the info gain by Split. Info n n Gain. Ratio(A) = Gain(A)/Split. Info(A) Ex. Attribute income: n n n gain_ratio(income) = 0. 029/0. 926 = 0. 031 The attribute with the maximum gain ratio is selected as the splitting attribute

Attribute Selection Measure: Gain Ratio (C 4. 5) n Information gain measure is biased towards attributes with a large number of values n E. g, if in the customer table in the previous slide, we had a first column customer IDs 1 -14, and used this as the splitting attribute! Gain would be 100%! But, obviously, this split is useless. n C 4. 5 (a successor of ID 3) uses Split. Info to overcome the problem n C 4. 5 normalizes the info gain by Split. Info n n Gain. Ratio(A) = Gain(A)/Split. Info(A) Ex. Attribute income: n n n gain_ratio(income) = 0. 029/0. 926 = 0. 031 The attribute with the maximum gain ratio is selected as the splitting attribute

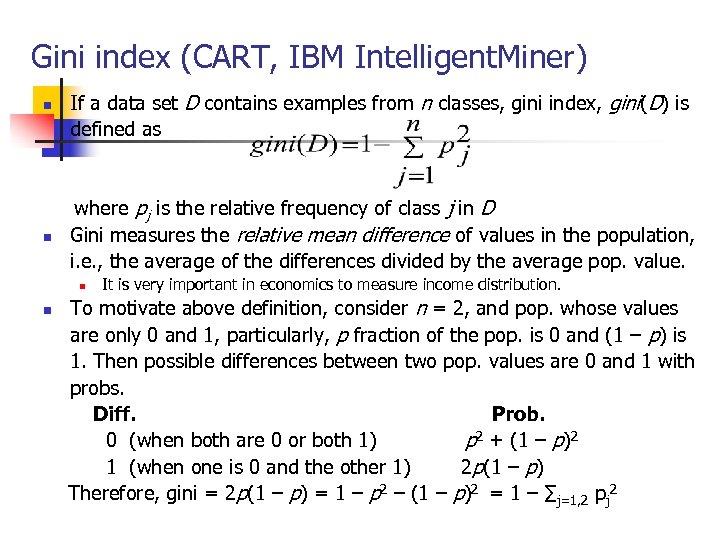

Gini index (CART, IBM Intelligent. Miner) n n If a data set D contains examples from n classes, gini index, gini(D) is defined as where pj is the relative frequency of class j in D Gini measures the relative mean difference of values in the population, i. e. , the average of the differences divided by the average pop. value. n n It is very important in economics to measure income distribution. To motivate above definition, consider n = 2, and pop. whose values are only 0 and 1, particularly, p fraction of the pop. is 0 and (1 – p) is 1. Then possible differences between two pop. values are 0 and 1 with probs. Diff. Prob. 0 (when both are 0 or both 1) p 2 + (1 – p)2 1 (when one is 0 and the other 1) 2 p(1 – p) Therefore, gini = 2 p(1 – p) = 1 – p 2 – (1 – p)2 = 1 – ∑j=1, 2 pj 2

Gini index (CART, IBM Intelligent. Miner) n n If a data set D contains examples from n classes, gini index, gini(D) is defined as where pj is the relative frequency of class j in D Gini measures the relative mean difference of values in the population, i. e. , the average of the differences divided by the average pop. value. n n It is very important in economics to measure income distribution. To motivate above definition, consider n = 2, and pop. whose values are only 0 and 1, particularly, p fraction of the pop. is 0 and (1 – p) is 1. Then possible differences between two pop. values are 0 and 1 with probs. Diff. Prob. 0 (when both are 0 or both 1) p 2 + (1 – p)2 1 (when one is 0 and the other 1) 2 p(1 – p) Therefore, gini = 2 p(1 – p) = 1 – p 2 – (1 – p)2 = 1 – ∑j=1, 2 pj 2

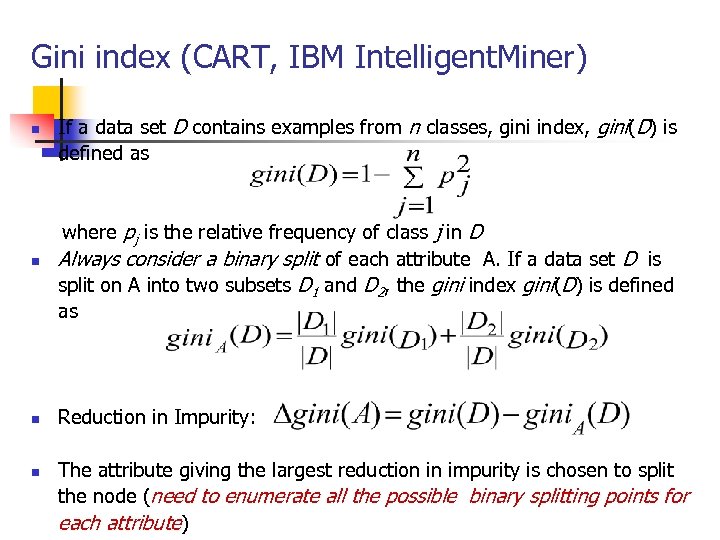

Gini index (CART, IBM Intelligent. Miner) n n If a data set D contains examples from n classes, gini index, gini(D) is defined as where pj is the relative frequency of class j in D Always consider a binary split of each attribute A. If a data set D is split on A into two subsets D 1 and D 2, the gini index gini(D) is defined as Reduction in Impurity: The attribute giving the largest reduction in impurity is chosen to split the node (need to enumerate all the possible binary splitting points for each attribute)

Gini index (CART, IBM Intelligent. Miner) n n If a data set D contains examples from n classes, gini index, gini(D) is defined as where pj is the relative frequency of class j in D Always consider a binary split of each attribute A. If a data set D is split on A into two subsets D 1 and D 2, the gini index gini(D) is defined as Reduction in Impurity: The attribute giving the largest reduction in impurity is chosen to split the node (need to enumerate all the possible binary splitting points for each attribute)

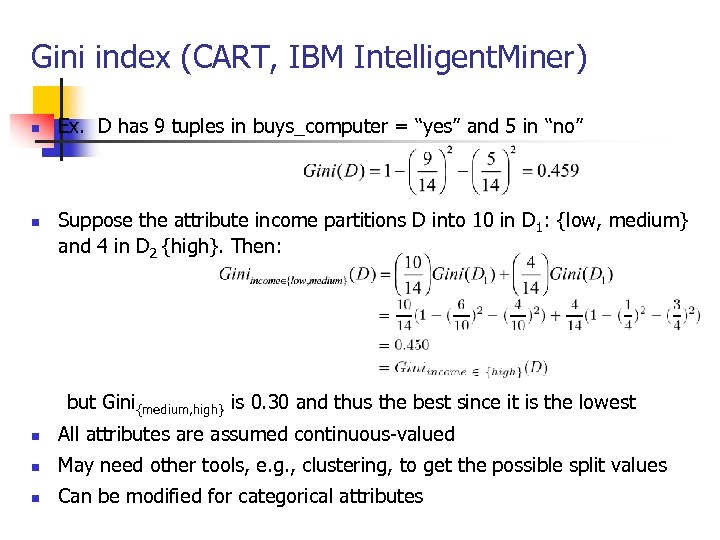

Gini index (CART, IBM Intelligent. Miner) n n Ex. D has 9 tuples in buys_computer = “yes” and 5 in “no” Suppose the attribute income partitions D into 10 in D 1: {low, medium} and 4 in D 2 {high}. Then: but Gini{medium, high} is 0. 30 and thus the best since it is the lowest n All attributes are assumed continuous-valued n May need other tools, e. g. , clustering, to get the possible split values n Can be modified for categorical attributes

Gini index (CART, IBM Intelligent. Miner) n n Ex. D has 9 tuples in buys_computer = “yes” and 5 in “no” Suppose the attribute income partitions D into 10 in D 1: {low, medium} and 4 in D 2 {high}. Then: but Gini{medium, high} is 0. 30 and thus the best since it is the lowest n All attributes are assumed continuous-valued n May need other tools, e. g. , clustering, to get the possible split values n Can be modified for categorical attributes

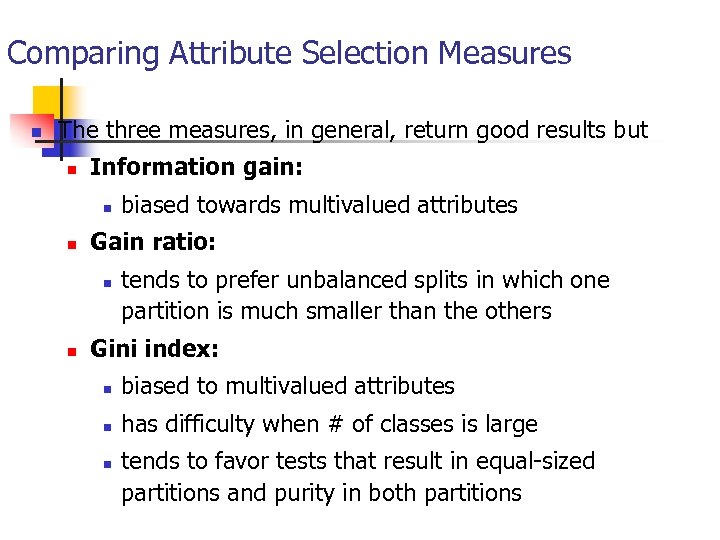

Comparing Attribute Selection Measures n The three measures, in general, return good results but n Information gain: n n Gain ratio: n n biased towards multivalued attributes tends to prefer unbalanced splits in which one partition is much smaller than the others Gini index: n biased to multivalued attributes n has difficulty when # of classes is large n tends to favor tests that result in equal-sized partitions and purity in both partitions

Comparing Attribute Selection Measures n The three measures, in general, return good results but n Information gain: n n Gain ratio: n n biased towards multivalued attributes tends to prefer unbalanced splits in which one partition is much smaller than the others Gini index: n biased to multivalued attributes n has difficulty when # of classes is large n tends to favor tests that result in equal-sized partitions and purity in both partitions

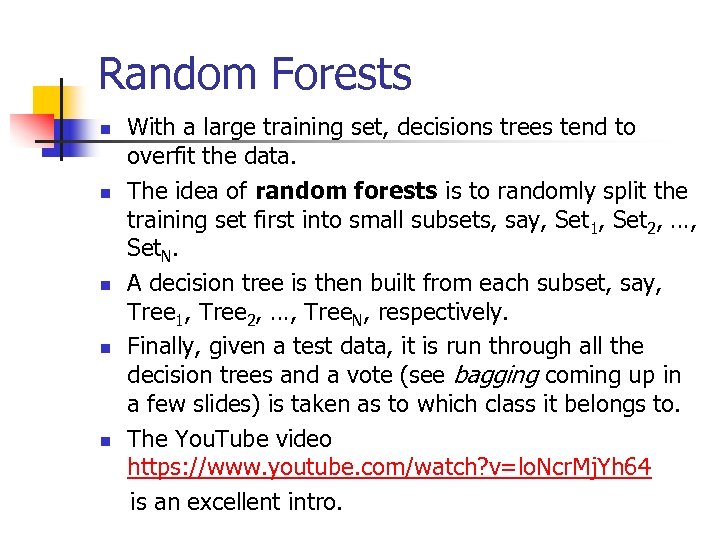

Random Forests n n n With a large training set, decisions trees tend to overfit the data. The idea of random forests is to randomly split the training set first into small subsets, say, Set 1, Set 2, …, Set. N. A decision tree is then built from each subset, say, Tree 1, Tree 2, …, Tree. N, respectively. Finally, given a test data, it is run through all the decision trees and a vote (see bagging coming up in a few slides) is taken as to which class it belongs to. The You. Tube video https: //www. youtube. com/watch? v=lo. Ncr. Mj. Yh 64 is an excellent intro.

Random Forests n n n With a large training set, decisions trees tend to overfit the data. The idea of random forests is to randomly split the training set first into small subsets, say, Set 1, Set 2, …, Set. N. A decision tree is then built from each subset, say, Tree 1, Tree 2, …, Tree. N, respectively. Finally, given a test data, it is run through all the decision trees and a vote (see bagging coming up in a few slides) is taken as to which class it belongs to. The You. Tube video https: //www. youtube. com/watch? v=lo. Ncr. Mj. Yh 64 is an excellent intro.

Bayesian Classification: Why? n n n A statistical classifier: performs probabilistic prediction, i. e. , predicts class membership probabilities Foundation: Based on Bayes’ Theorem. Performance: A simple Bayesian classifier, naïve Bayesian classifier, has comparable performance with decision tree and selected neural network classifiers Incremental: Each training example can incrementally increase/decrease the probability that a hypothesis is correct — prior knowledge can be combined with observed data Standard: Even when Bayesian methods are computationally intractable, they can provide a standard of optimal decision making against which other methods can be measured

Bayesian Classification: Why? n n n A statistical classifier: performs probabilistic prediction, i. e. , predicts class membership probabilities Foundation: Based on Bayes’ Theorem. Performance: A simple Bayesian classifier, naïve Bayesian classifier, has comparable performance with decision tree and selected neural network classifiers Incremental: Each training example can incrementally increase/decrease the probability that a hypothesis is correct — prior knowledge can be combined with observed data Standard: Even when Bayesian methods are computationally intractable, they can provide a standard of optimal decision making against which other methods can be measured

Bayesian Theorem: Basics n Let X be a data sample (“evidence”): class label is unknown n Let H be a hypothesis that X belongs to class C n n Classification is to determine P(H|X) (posteriori probability of H conditioned on X), the probability that the hypothesis holds given the observed data sample X P(H) (prior probability), the initial probability n n P(X): prior probability that an evidence is observed n n E. g. , H is “will buy computer” regardless of age, income, … E. g. , X is “age young, income medium, student no, c_r fair” P(X|H) (posteriori probability of X conditioned on H), the probability of observing the evidence X, given that the hypothesis holds n E. g. , Given H that a customer will buy computer, P(X|H) is the prob. of evidence X that customer “age young, income medium, student no, c_r fair”

Bayesian Theorem: Basics n Let X be a data sample (“evidence”): class label is unknown n Let H be a hypothesis that X belongs to class C n n Classification is to determine P(H|X) (posteriori probability of H conditioned on X), the probability that the hypothesis holds given the observed data sample X P(H) (prior probability), the initial probability n n P(X): prior probability that an evidence is observed n n E. g. , H is “will buy computer” regardless of age, income, … E. g. , X is “age young, income medium, student no, c_r fair” P(X|H) (posteriori probability of X conditioned on H), the probability of observing the evidence X, given that the hypothesis holds n E. g. , Given H that a customer will buy computer, P(X|H) is the prob. of evidence X that customer “age young, income medium, student no, c_r fair”

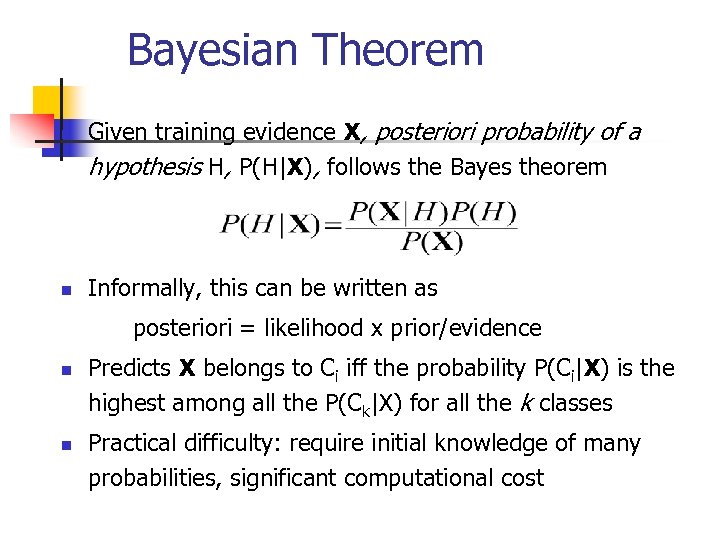

Bayesian Theorem n n Given training evidence X, posteriori probability of a hypothesis H, P(H|X), follows the Bayes theorem Informally, this can be written as posteriori = likelihood x prior/evidence n n Predicts X belongs to Ci iff the probability P(Ci|X) is the highest among all the P(Ck|X) for all the k classes Practical difficulty: require initial knowledge of many probabilities, significant computational cost

Bayesian Theorem n n Given training evidence X, posteriori probability of a hypothesis H, P(H|X), follows the Bayes theorem Informally, this can be written as posteriori = likelihood x prior/evidence n n Predicts X belongs to Ci iff the probability P(Ci|X) is the highest among all the P(Ck|X) for all the k classes Practical difficulty: require initial knowledge of many probabilities, significant computational cost

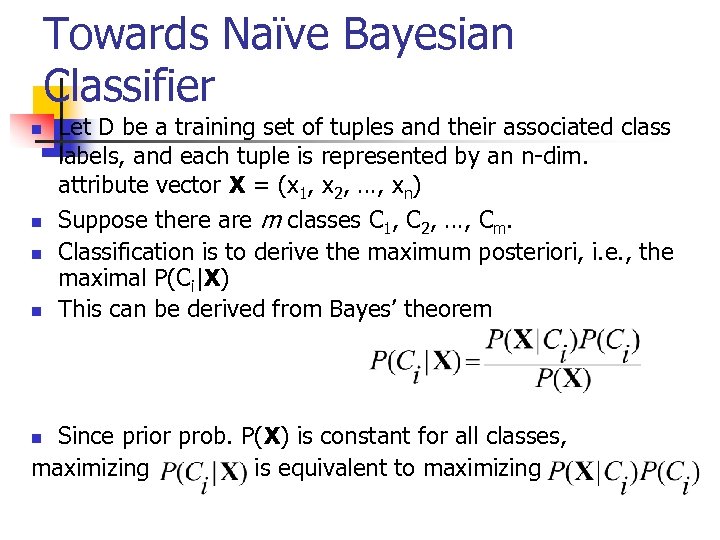

Towards Naïve Bayesian Classifier n n Let D be a training set of tuples and their associated class labels, and each tuple is represented by an n-dim. attribute vector X = (x 1, x 2, …, xn) Suppose there are m classes C 1, C 2, …, Cm. Classification is to derive the maximum posteriori, i. e. , the maximal P(Ci|X) This can be derived from Bayes’ theorem Since prior prob. P(X) is constant for all classes, maximizing is equivalent to maximizing n

Towards Naïve Bayesian Classifier n n Let D be a training set of tuples and their associated class labels, and each tuple is represented by an n-dim. attribute vector X = (x 1, x 2, …, xn) Suppose there are m classes C 1, C 2, …, Cm. Classification is to derive the maximum posteriori, i. e. , the maximal P(Ci|X) This can be derived from Bayes’ theorem Since prior prob. P(X) is constant for all classes, maximizing is equivalent to maximizing n

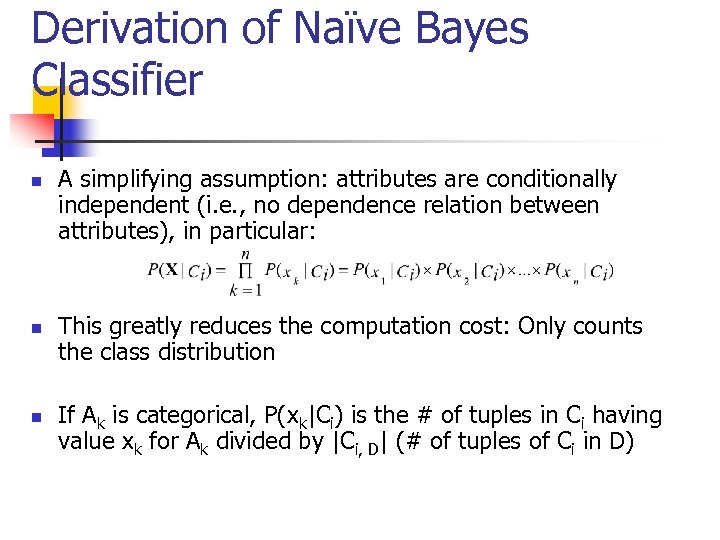

Derivation of Naïve Bayes Classifier n n n A simplifying assumption: attributes are conditionally independent (i. e. , no dependence relation between attributes), in particular: This greatly reduces the computation cost: Only counts the class distribution If Ak is categorical, P(xk|Ci) is the # of tuples in Ci having value xk for Ak divided by |Ci, D| (# of tuples of Ci in D)

Derivation of Naïve Bayes Classifier n n n A simplifying assumption: attributes are conditionally independent (i. e. , no dependence relation between attributes), in particular: This greatly reduces the computation cost: Only counts the class distribution If Ak is categorical, P(xk|Ci) is the # of tuples in Ci having value xk for Ak divided by |Ci, D| (# of tuples of Ci in D)

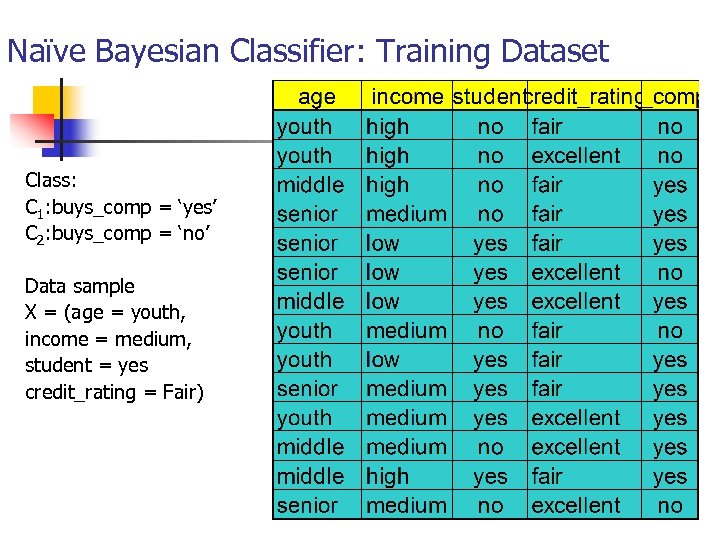

Naïve Bayesian Classifier: Training Dataset Class: C 1: buys_comp = ‘yes’ C 2: buys_comp = ‘no’ Data sample X = (age = youth, income = medium, student = yes credit_rating = Fair)

Naïve Bayesian Classifier: Training Dataset Class: C 1: buys_comp = ‘yes’ C 2: buys_comp = ‘no’ Data sample X = (age = youth, income = medium, student = yes credit_rating = Fair)

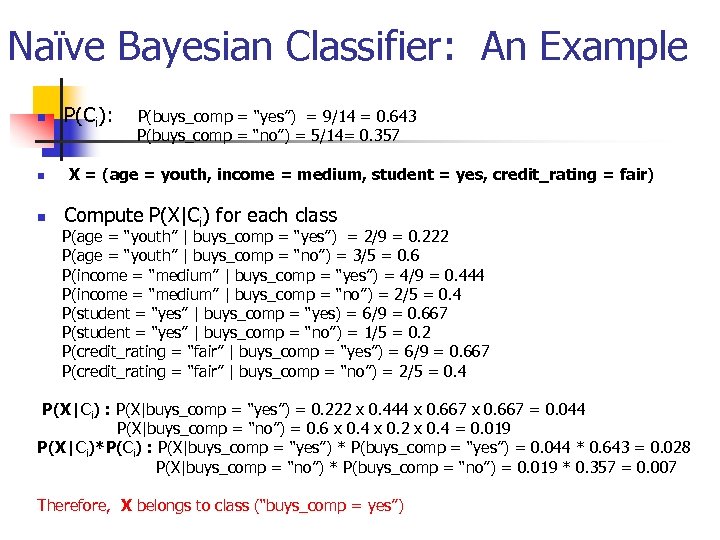

Naïve Bayesian Classifier: An Example n n n P(Ci): P(buys_comp = “yes”) = 9/14 = 0. 643 P(buys_comp = “no”) = 5/14= 0. 357 X = (age = youth, income = medium, student = yes, credit_rating = fair) Compute P(X|Ci) for each class P(age = “youth” | buys_comp = “yes”) = 2/9 = 0. 222 P(age = “youth” | buys_comp = “no”) = 3/5 = 0. 6 P(income = “medium” | buys_comp = “yes”) = 4/9 = 0. 444 P(income = “medium” | buys_comp = “no”) = 2/5 = 0. 4 P(student = “yes” | buys_comp = “yes) = 6/9 = 0. 667 P(student = “yes” | buys_comp = “no”) = 1/5 = 0. 2 P(credit_rating = “fair” | buys_comp = “yes”) = 6/9 = 0. 667 P(credit_rating = “fair” | buys_comp = “no”) = 2/5 = 0. 4 P(X|Ci) : P(X|buys_comp = “yes”) = 0. 222 x 0. 444 x 0. 667 = 0. 044 P(X|buys_comp = “no”) = 0. 6 x 0. 4 x 0. 2 x 0. 4 = 0. 019 P(X|Ci)*P(Ci) : P(X|buys_comp = “yes”) * P(buys_comp = “yes”) = 0. 044 * 0. 643 = 0. 028 P(X|buys_comp = “no”) * P(buys_comp = “no”) = 0. 019 * 0. 357 = 0. 007 Therefore, X belongs to class (“buys_comp = yes”)

Naïve Bayesian Classifier: An Example n n n P(Ci): P(buys_comp = “yes”) = 9/14 = 0. 643 P(buys_comp = “no”) = 5/14= 0. 357 X = (age = youth, income = medium, student = yes, credit_rating = fair) Compute P(X|Ci) for each class P(age = “youth” | buys_comp = “yes”) = 2/9 = 0. 222 P(age = “youth” | buys_comp = “no”) = 3/5 = 0. 6 P(income = “medium” | buys_comp = “yes”) = 4/9 = 0. 444 P(income = “medium” | buys_comp = “no”) = 2/5 = 0. 4 P(student = “yes” | buys_comp = “yes) = 6/9 = 0. 667 P(student = “yes” | buys_comp = “no”) = 1/5 = 0. 2 P(credit_rating = “fair” | buys_comp = “yes”) = 6/9 = 0. 667 P(credit_rating = “fair” | buys_comp = “no”) = 2/5 = 0. 4 P(X|Ci) : P(X|buys_comp = “yes”) = 0. 222 x 0. 444 x 0. 667 = 0. 044 P(X|buys_comp = “no”) = 0. 6 x 0. 4 x 0. 2 x 0. 4 = 0. 019 P(X|Ci)*P(Ci) : P(X|buys_comp = “yes”) * P(buys_comp = “yes”) = 0. 044 * 0. 643 = 0. 028 P(X|buys_comp = “no”) * P(buys_comp = “no”) = 0. 019 * 0. 357 = 0. 007 Therefore, X belongs to class (“buys_comp = yes”)

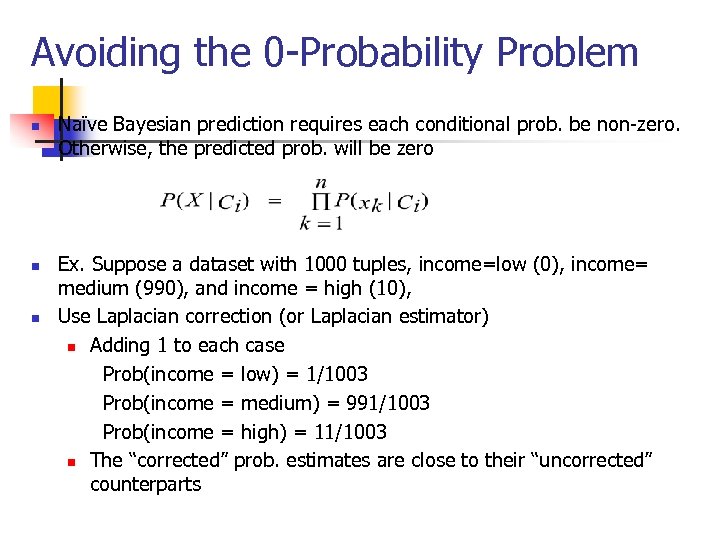

Avoiding the 0 -Probability Problem n n n Naïve Bayesian prediction requires each conditional prob. be non-zero. Otherwise, the predicted prob. will be zero Ex. Suppose a dataset with 1000 tuples, income=low (0), income= medium (990), and income = high (10), Use Laplacian correction (or Laplacian estimator) n Adding 1 to each case Prob(income = low) = 1/1003 Prob(income = medium) = 991/1003 Prob(income = high) = 11/1003 n The “corrected” prob. estimates are close to their “uncorrected” counterparts

Avoiding the 0 -Probability Problem n n n Naïve Bayesian prediction requires each conditional prob. be non-zero. Otherwise, the predicted prob. will be zero Ex. Suppose a dataset with 1000 tuples, income=low (0), income= medium (990), and income = high (10), Use Laplacian correction (or Laplacian estimator) n Adding 1 to each case Prob(income = low) = 1/1003 Prob(income = medium) = 991/1003 Prob(income = high) = 11/1003 n The “corrected” prob. estimates are close to their “uncorrected” counterparts

Naïve Bayesian Classifier: Comments n n Advantages n Easy to implement n Good results obtained in most of the cases Disadvantages n Assumption: class conditional independence, therefore loss of accuracy n Practically, dependencies exist among variables E. g. , hospitals: patients: Profile: age, family history, etc. Symptoms: fever, cough etc. , Disease: lung cancer, diabetes, etc. n Dependencies among these cannot be modeled by Naïve Bayesian Classifier n n How to deal with these dependencies? n Bayesian Belief Networks

Naïve Bayesian Classifier: Comments n n Advantages n Easy to implement n Good results obtained in most of the cases Disadvantages n Assumption: class conditional independence, therefore loss of accuracy n Practically, dependencies exist among variables E. g. , hospitals: patients: Profile: age, family history, etc. Symptoms: fever, cough etc. , Disease: lung cancer, diabetes, etc. n Dependencies among these cannot be modeled by Naïve Bayesian Classifier n n How to deal with these dependencies? n Bayesian Belief Networks

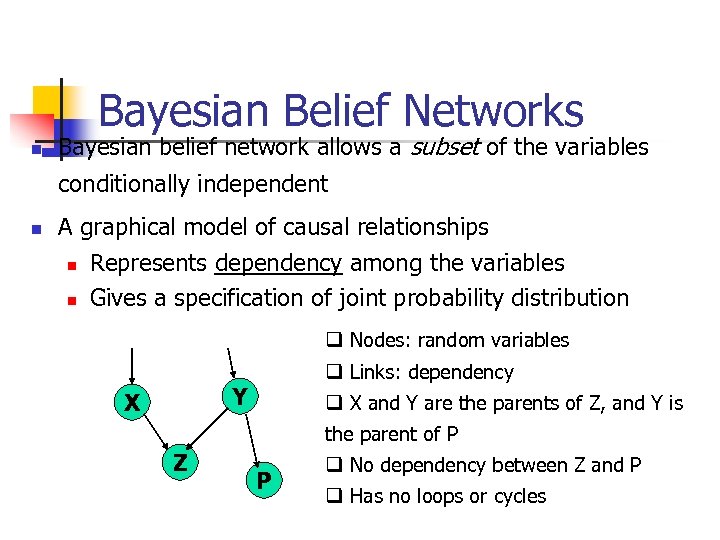

Bayesian Belief Networks n Bayesian belief network allows a subset of the variables conditionally independent n A graphical model of causal relationships n n Represents dependency among the variables Gives a specification of joint probability distribution q Nodes: random variables q Links: dependency Y X q X and Y are the parents of Z, and Y is the parent of P Z P q No dependency between Z and P q Has no loops or cycles

Bayesian Belief Networks n Bayesian belief network allows a subset of the variables conditionally independent n A graphical model of causal relationships n n Represents dependency among the variables Gives a specification of joint probability distribution q Nodes: random variables q Links: dependency Y X q X and Y are the parents of Z, and Y is the parent of P Z P q No dependency between Z and P q Has no loops or cycles

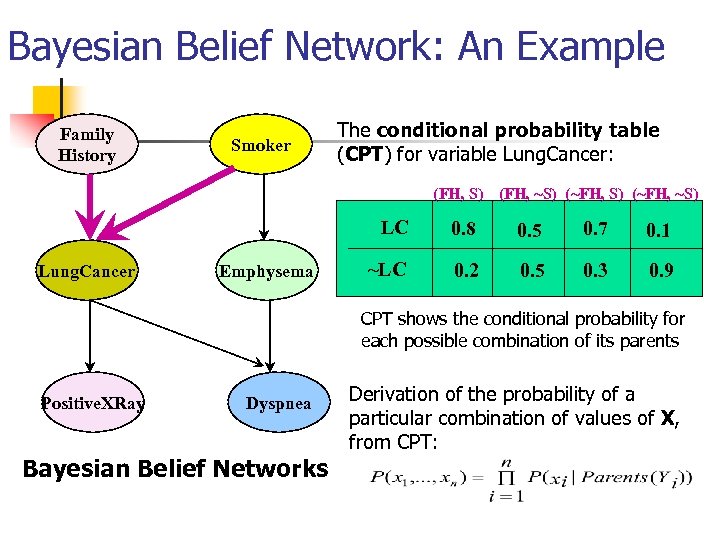

Bayesian Belief Network: An Example Family History Smoker The conditional probability table (CPT) for variable Lung. Cancer: (FH, S) (FH, ~S) (~FH, ~S) LC Lung. Cancer Emphysema 0. 8 0. 5 0. 7 0. 1 ~LC 0. 2 0. 5 0. 3 0. 9 CPT shows the conditional probability for each possible combination of its parents Positive. XRay Dyspnea Bayesian Belief Networks Derivation of the probability of a particular combination of values of X, from CPT:

Bayesian Belief Network: An Example Family History Smoker The conditional probability table (CPT) for variable Lung. Cancer: (FH, S) (FH, ~S) (~FH, ~S) LC Lung. Cancer Emphysema 0. 8 0. 5 0. 7 0. 1 ~LC 0. 2 0. 5 0. 3 0. 9 CPT shows the conditional probability for each possible combination of its parents Positive. XRay Dyspnea Bayesian Belief Networks Derivation of the probability of a particular combination of values of X, from CPT:

Training Bayesian Networks n Several scenarios: n Given both the network structure and all variables observable: learn only the CPTs n Network structure known, some hidden variables: gradient descent (greedy hill-climbing) method, analogous to neural network learning n Network structure unknown, all variables observable: search through the model space to reconstruct network topology Unknown structure, all hidden variables: No good algorithms known for this purpose Ref. D. Heckerman: Bayesian networks for data mining n n

Training Bayesian Networks n Several scenarios: n Given both the network structure and all variables observable: learn only the CPTs n Network structure known, some hidden variables: gradient descent (greedy hill-climbing) method, analogous to neural network learning n Network structure unknown, all variables observable: search through the model space to reconstruct network topology Unknown structure, all hidden variables: No good algorithms known for this purpose Ref. D. Heckerman: Bayesian networks for data mining n n

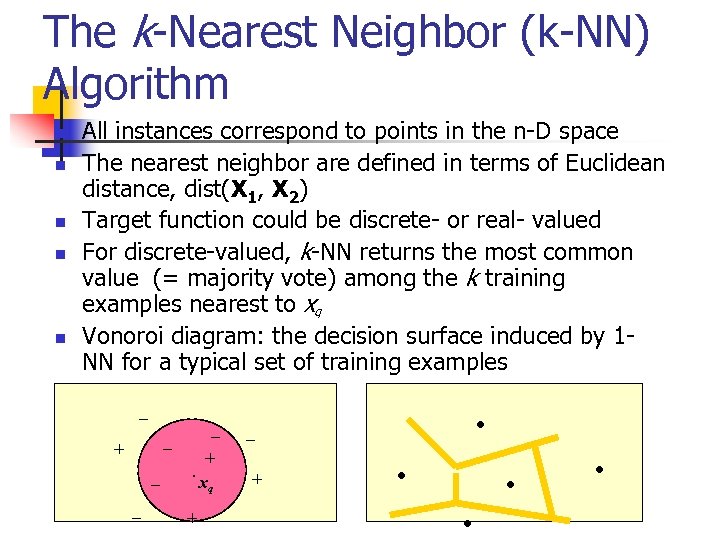

The k-Nearest Neighbor (k-NN) Algorithm n n n All instances correspond to points in the n-D space The nearest neighbor are defined in terms of Euclidean distance, dist(X 1, X 2) Target function could be discrete- or real- valued For discrete-valued, k-NN returns the most common value (= majority vote) among the k training examples nearest to xq Vonoroi diagram: the decision surface induced by 1 NN for a typical set of training examples _ _ + _ _ _ +. xq + . _ + . .

The k-Nearest Neighbor (k-NN) Algorithm n n n All instances correspond to points in the n-D space The nearest neighbor are defined in terms of Euclidean distance, dist(X 1, X 2) Target function could be discrete- or real- valued For discrete-valued, k-NN returns the most common value (= majority vote) among the k training examples nearest to xq Vonoroi diagram: the decision surface induced by 1 NN for a typical set of training examples _ _ + _ _ _ +. xq + . _ + . .

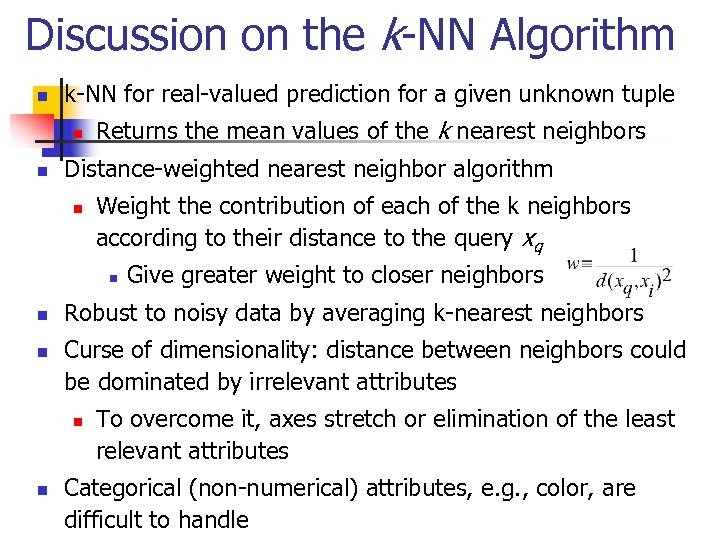

Discussion on the k-NN Algorithm n k-NN for real-valued prediction for a given unknown tuple n n Returns the mean values of the k nearest neighbors Distance-weighted nearest neighbor algorithm n Weight the contribution of each of the k neighbors according to their distance to the query xq n n n Robust to noisy data by averaging k-nearest neighbors Curse of dimensionality: distance between neighbors could be dominated by irrelevant attributes n n Give greater weight to closer neighbors To overcome it, axes stretch or elimination of the least relevant attributes Categorical (non-numerical) attributes, e. g. , color, are difficult to handle

Discussion on the k-NN Algorithm n k-NN for real-valued prediction for a given unknown tuple n n Returns the mean values of the k nearest neighbors Distance-weighted nearest neighbor algorithm n Weight the contribution of each of the k neighbors according to their distance to the query xq n n n Robust to noisy data by averaging k-nearest neighbors Curse of dimensionality: distance between neighbors could be dominated by irrelevant attributes n n Give greater weight to closer neighbors To overcome it, axes stretch or elimination of the least relevant attributes Categorical (non-numerical) attributes, e. g. , color, are difficult to handle

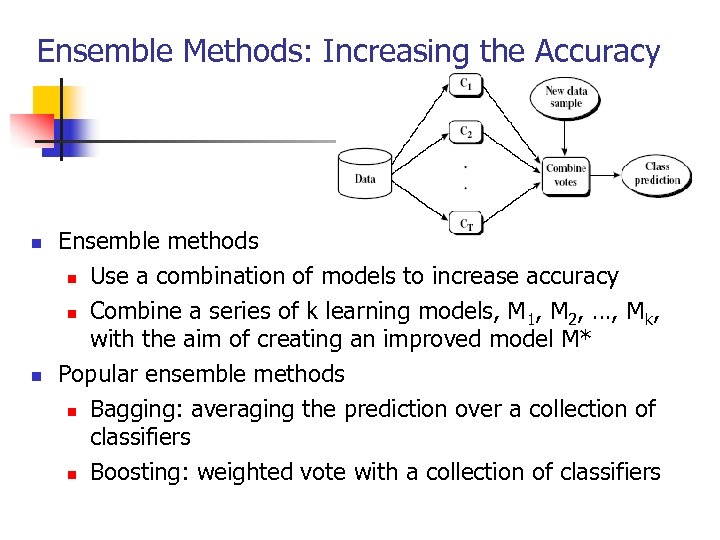

Ensemble Methods: Increasing the Accuracy n n Ensemble methods n Use a combination of models to increase accuracy n Combine a series of k learning models, M 1, M 2, …, Mk, with the aim of creating an improved model M* Popular ensemble methods n Bagging: averaging the prediction over a collection of classifiers n Boosting: weighted vote with a collection of classifiers

Ensemble Methods: Increasing the Accuracy n n Ensemble methods n Use a combination of models to increase accuracy n Combine a series of k learning models, M 1, M 2, …, Mk, with the aim of creating an improved model M* Popular ensemble methods n Bagging: averaging the prediction over a collection of classifiers n Boosting: weighted vote with a collection of classifiers

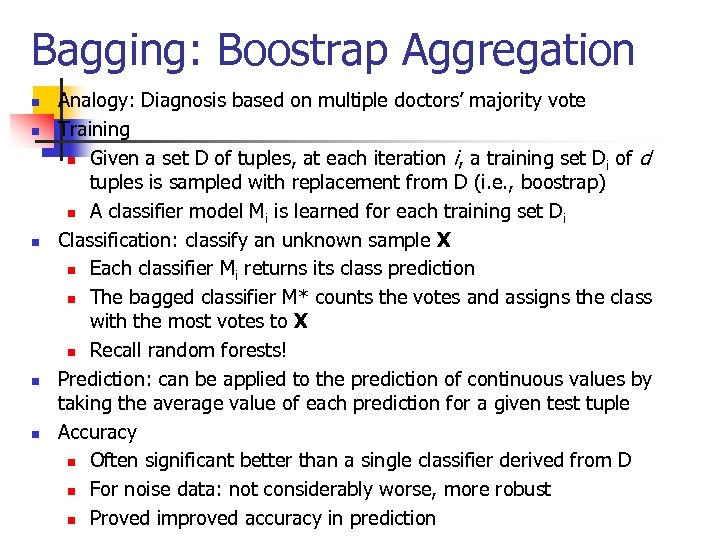

Bagging: Boostrap Aggregation n n Analogy: Diagnosis based on multiple doctors’ majority vote Training n Given a set D of tuples, at each iteration i, a training set Di of d tuples is sampled with replacement from D (i. e. , boostrap) n A classifier model Mi is learned for each training set D i Classification: classify an unknown sample X n Each classifier Mi returns its class prediction n The bagged classifier M* counts the votes and assigns the class with the most votes to X n Recall random forests! Prediction: can be applied to the prediction of continuous values by taking the average value of each prediction for a given test tuple Accuracy n Often significant better than a single classifier derived from D n For noise data: not considerably worse, more robust n Proved improved accuracy in prediction

Bagging: Boostrap Aggregation n n Analogy: Diagnosis based on multiple doctors’ majority vote Training n Given a set D of tuples, at each iteration i, a training set Di of d tuples is sampled with replacement from D (i. e. , boostrap) n A classifier model Mi is learned for each training set D i Classification: classify an unknown sample X n Each classifier Mi returns its class prediction n The bagged classifier M* counts the votes and assigns the class with the most votes to X n Recall random forests! Prediction: can be applied to the prediction of continuous values by taking the average value of each prediction for a given test tuple Accuracy n Often significant better than a single classifier derived from D n For noise data: not considerably worse, more robust n Proved improved accuracy in prediction

Boosting n n Analogy: Consult several doctors, based on a combination of weighted diagnoses—weight assigned based on the previous diagnosis accuracy How boosting works? n Weights are assigned to each training tuple n A series of k classifiers is iteratively learned n n After a classifier Mi is learned, the weights are updated to allow the subsequent classifier, Mi+1, to pay more attention to the training tuples that were misclassified by Mi The final M* combines the votes of each individual classifier, where the weight of each classifier's vote is a function of its accuracy The boosting algorithm can be extended for the prediction of continuous values Comparing with bagging: boosting tends to achieve greater accuracy, but it also risks overfitting the model to misclassified data

Boosting n n Analogy: Consult several doctors, based on a combination of weighted diagnoses—weight assigned based on the previous diagnosis accuracy How boosting works? n Weights are assigned to each training tuple n A series of k classifiers is iteratively learned n n After a classifier Mi is learned, the weights are updated to allow the subsequent classifier, Mi+1, to pay more attention to the training tuples that were misclassified by Mi The final M* combines the votes of each individual classifier, where the weight of each classifier's vote is a function of its accuracy The boosting algorithm can be extended for the prediction of continuous values Comparing with bagging: boosting tends to achieve greater accuracy, but it also risks overfitting the model to misclassified data

Adaboost (Freund and Schapire, 1997) Theory: Ada. Boost Lecture slides by Šochman and Matas Example: Ada. Boost running example slides from NTU, Taiwan Exercise: The training data: index 0 1 2 x-value 0 1 2 y-value 1 1 1 3 4 -1 1 The four given weak classifiers are I(x > 1. 5), I(x < 2. 5), I(x > 3. 5) and I(x < 4. 5). Find the final strong classifier. Try yourself first! Then check the solution.

Adaboost (Freund and Schapire, 1997) Theory: Ada. Boost Lecture slides by Šochman and Matas Example: Ada. Boost running example slides from NTU, Taiwan Exercise: The training data: index 0 1 2 x-value 0 1 2 y-value 1 1 1 3 4 -1 1 The four given weak classifiers are I(x > 1. 5), I(x < 2. 5), I(x > 3. 5) and I(x < 4. 5). Find the final strong classifier. Try yourself first! Then check the solution.