26fd692c4d06c4c5f73df6ee825bba1d.ppt

- Количество слайдов: 37

Data Mining (and machine learning) DM Lecture 2: Data Cleaning

Data Mining (and machine learning) DM Lecture 2: Data Cleaning

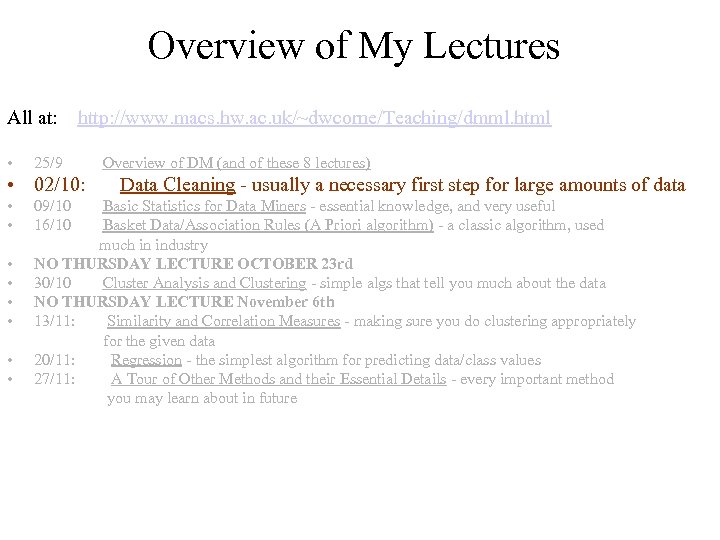

Overview of My Lectures All at: http: //www. macs. hw. ac. uk/~dwcorne/Teaching/dmml. html • 25/9 Overview of DM (and of these 8 lectures) • 02/10: Data Cleaning - usually a necessary first step for large amounts of data • 09/10 Basic Statistics for Data Miners - essential knowledge, and very useful • 16/10 Basket Data/Association Rules (A Priori algorithm) - a classic algorithm, used much in industry • NO THURSDAY LECTURE OCTOBER 23 rd • 30/10 Cluster Analysis and Clustering - simple algs that tell you much about the data • NO THURSDAY LECTURE November 6 th • 13/11: Similarity and Correlation Measures - making sure you do clustering appropriately for the given data • 20/11: Regression - the simplest algorithm for predicting data/class values • 27/11: A Tour of Other Methods and their Essential Details - every important method you may learn about in future

Overview of My Lectures All at: http: //www. macs. hw. ac. uk/~dwcorne/Teaching/dmml. html • 25/9 Overview of DM (and of these 8 lectures) • 02/10: Data Cleaning - usually a necessary first step for large amounts of data • 09/10 Basic Statistics for Data Miners - essential knowledge, and very useful • 16/10 Basket Data/Association Rules (A Priori algorithm) - a classic algorithm, used much in industry • NO THURSDAY LECTURE OCTOBER 23 rd • 30/10 Cluster Analysis and Clustering - simple algs that tell you much about the data • NO THURSDAY LECTURE November 6 th • 13/11: Similarity and Correlation Measures - making sure you do clustering appropriately for the given data • 20/11: Regression - the simplest algorithm for predicting data/class values • 27/11: A Tour of Other Methods and their Essential Details - every important method you may learn about in future

Acknowledgements I adapted this material from various sources, most notably: • A ppt presentation called `Data Quality and Data Cleaning: An Overview’ by Tamrapani Dasu and Theodore Johnson, at AT & T Labs • A paper called `Data Cleaning: Problems and Current Approaches’, by Erhard Rahm and Hong Hai Do, University of Leipzig, Germany. My thanks to these researchers for making their materials freely available online.

Acknowledgements I adapted this material from various sources, most notably: • A ppt presentation called `Data Quality and Data Cleaning: An Overview’ by Tamrapani Dasu and Theodore Johnson, at AT & T Labs • A paper called `Data Cleaning: Problems and Current Approaches’, by Erhard Rahm and Hong Hai Do, University of Leipzig, Germany. My thanks to these researchers for making their materials freely available online.

On Data Quality Suppose you have a database sitting in front of you, and I ask ``Is it a good quality database? ’’ What is your answer? What does quality depend on? Note: this is about the data themselves, not the system in use to access it.

On Data Quality Suppose you have a database sitting in front of you, and I ask ``Is it a good quality database? ’’ What is your answer? What does quality depend on? Note: this is about the data themselves, not the system in use to access it.

A Conventional Definition of Data Quality Good quality data are: Accurate, Complete, Unique, Up-to-date, and Consistent ; meaning …

A Conventional Definition of Data Quality Good quality data are: Accurate, Complete, Unique, Up-to-date, and Consistent ; meaning …

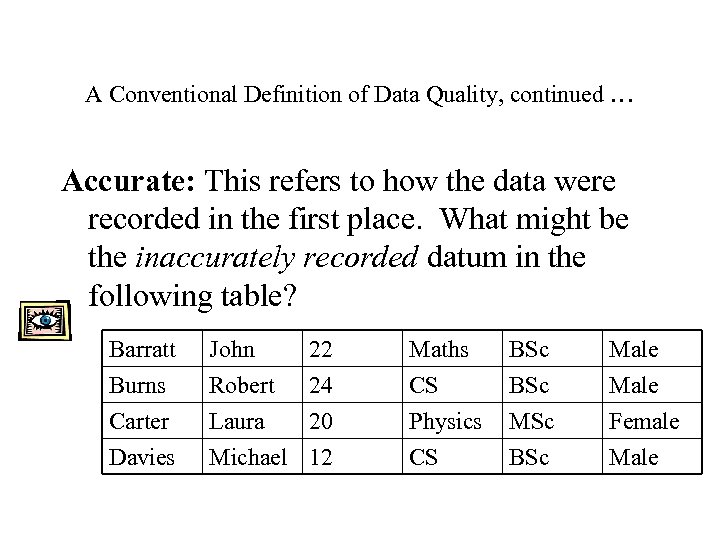

A Conventional Definition of Data Quality, continued … Accurate: This refers to how the data were recorded in the first place. What might be the inaccurately recorded datum in the following table? Barratt Burns Carter Davies John Robert Laura Michael 22 24 20 12 Maths CS Physics CS BSc MSc BSc Male Female Male

A Conventional Definition of Data Quality, continued … Accurate: This refers to how the data were recorded in the first place. What might be the inaccurately recorded datum in the following table? Barratt Burns Carter Davies John Robert Laura Michael 22 24 20 12 Maths CS Physics CS BSc MSc BSc Male Female Male

A Conventional Definition of Data Quality, continued … Complete: This refers to whether or not the database really contains everything it is supposed to contain. E. g. a patient’s medical records should contain references to all medication prescribed to date for that patient. The BBC TV Licensing DB should contain an entry for every address in the country. Does it?

A Conventional Definition of Data Quality, continued … Complete: This refers to whether or not the database really contains everything it is supposed to contain. E. g. a patient’s medical records should contain references to all medication prescribed to date for that patient. The BBC TV Licensing DB should contain an entry for every address in the country. Does it?

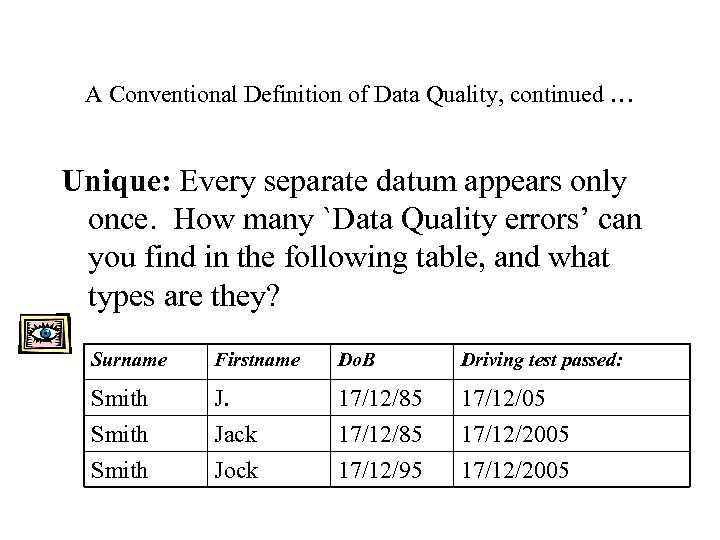

A Conventional Definition of Data Quality, continued … Unique: Every separate datum appears only once. How many `Data Quality errors’ can you find in the following table, and what types are they? Surname Firstname Do. B Driving test passed: Smith J. Jack Jock 17/12/85 17/12/95 17/12/05 17/12/2005

A Conventional Definition of Data Quality, continued … Unique: Every separate datum appears only once. How many `Data Quality errors’ can you find in the following table, and what types are they? Surname Firstname Do. B Driving test passed: Smith J. Jack Jock 17/12/85 17/12/95 17/12/05 17/12/2005

A Conventional Definition of Data Quality, continued … Up-to-date: The data are kept up to date. The post office recently changed my postcode from EX 6 8 RA to EX 6 8 NU. Why does this make it difficult for me to get a sensible quote for home insurance or car insurance? Can you think of a DB where it doesn’t matter whether or not the data are kept up to date? ?

A Conventional Definition of Data Quality, continued … Up-to-date: The data are kept up to date. The post office recently changed my postcode from EX 6 8 RA to EX 6 8 NU. Why does this make it difficult for me to get a sensible quote for home insurance or car insurance? Can you think of a DB where it doesn’t matter whether or not the data are kept up to date? ?

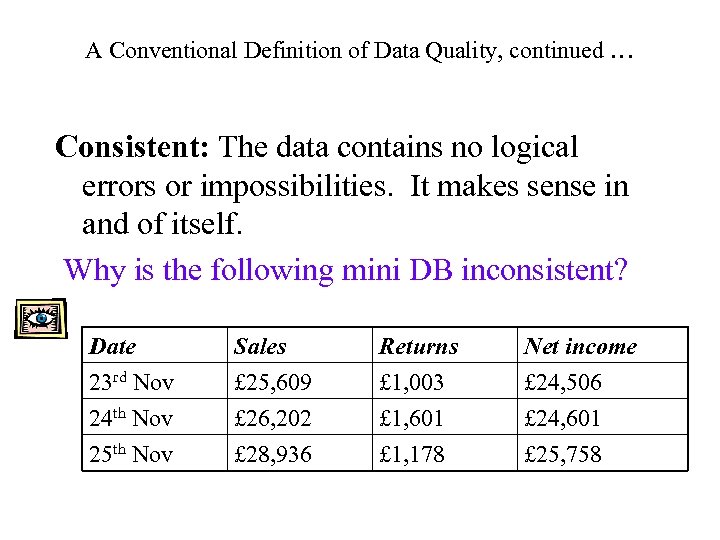

A Conventional Definition of Data Quality, continued … Consistent: The data contains no logical errors or impossibilities. It makes sense in and of itself. Why is the following mini DB inconsistent? Date 23 rd Nov 24 th Nov 25 th Nov Sales £ 25, 609 £ 26, 202 £ 28, 936 Returns £ 1, 003 £ 1, 601 £ 1, 178 Net income £ 24, 506 £ 24, 601 £ 25, 758

A Conventional Definition of Data Quality, continued … Consistent: The data contains no logical errors or impossibilities. It makes sense in and of itself. Why is the following mini DB inconsistent? Date 23 rd Nov 24 th Nov 25 th Nov Sales £ 25, 609 £ 26, 202 £ 28, 936 Returns £ 1, 003 £ 1, 601 £ 1, 178 Net income £ 24, 506 £ 24, 601 £ 25, 758

Note: This definition of data quality is not much use, since there is no way to measure DQ sensibly Completeness: How will we know? ? Uniqueness: It is hard to tell whether two entries are similar, or duplicates! Up-to-date-ness: How do we know? Consistent: consistency errors can be very hard to find, especially in a very large DB The database research `world’ is actively engaged in finding ways to measure data quality sensibly. In the meantime, we just use common sense to avoid dirty data at all points of the DQ continuum

Note: This definition of data quality is not much use, since there is no way to measure DQ sensibly Completeness: How will we know? ? Uniqueness: It is hard to tell whether two entries are similar, or duplicates! Up-to-date-ness: How do we know? Consistent: consistency errors can be very hard to find, especially in a very large DB The database research `world’ is actively engaged in finding ways to measure data quality sensibly. In the meantime, we just use common sense to avoid dirty data at all points of the DQ continuum

The Data Quality Continuum It’s rare that a datum is entered once into a DB and then left alone. Usually, a datum has a long and varied life, into which errors can arise at each and every stage. The continuum is: – – – Data gathering Data delivery Data storage Data integration Data retrieval Data analysis So, if we want to monitor DQ, we need to monitor it at each of these stages

The Data Quality Continuum It’s rare that a datum is entered once into a DB and then left alone. Usually, a datum has a long and varied life, into which errors can arise at each and every stage. The continuum is: – – – Data gathering Data delivery Data storage Data integration Data retrieval Data analysis So, if we want to monitor DQ, we need to monitor it at each of these stages

DQ Continuum: Example This is an example I am familiar with, helping to illustrate the DQ continuum. The International Seismological Centre (ISC) is in Thatcham, in Berkshire. It’s a charity funded by various governments. Their role is to be the repository for recording all earthquake events on the planet.

DQ Continuum: Example This is an example I am familiar with, helping to illustrate the DQ continuum. The International Seismological Centre (ISC) is in Thatcham, in Berkshire. It’s a charity funded by various governments. Their role is to be the repository for recording all earthquake events on the planet.

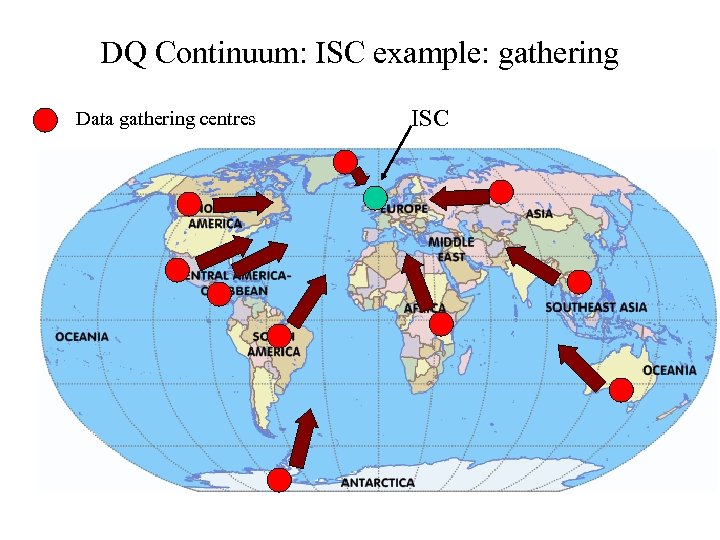

DQ Continuum: ISC example: gathering Data gathering centres ISC

DQ Continuum: ISC example: gathering Data gathering centres ISC

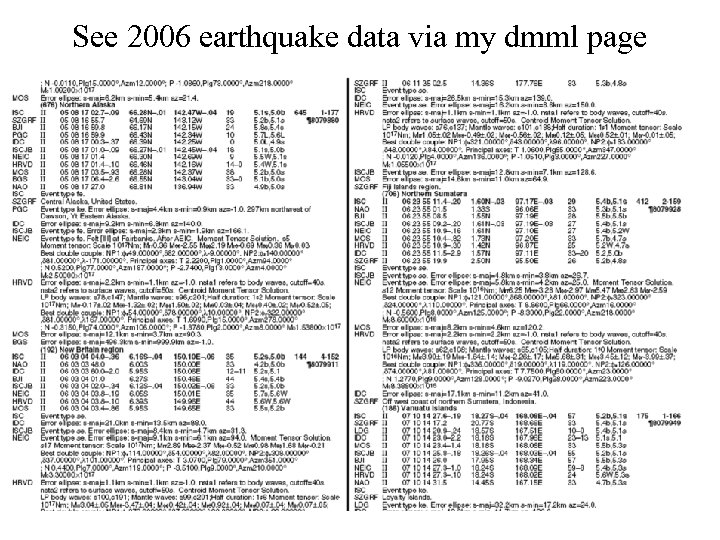

See 2006 earthquake data via my dmml page

See 2006 earthquake data via my dmml page

DQ Continuum: ISC example: delivery Raw seismograph data from local collection points to DG centres. Email or ftp to ISC; some centres provide raw data, some provide interpreted data (e. g. maybe won’t send some data if they believe it in error in the first place)

DQ Continuum: ISC example: delivery Raw seismograph data from local collection points to DG centres. Email or ftp to ISC; some centres provide raw data, some provide interpreted data (e. g. maybe won’t send some data if they believe it in error in the first place)

DQ Continuum: ISC example: integration The ISC’s role is actually to figure out where and when the Earth tremors were (there are hundreds per month) based on reverse engineering from seismograph readings. They integrate the raw data and attempt to do this, largely by hand brain, and record their findings in archival CDs

DQ Continuum: ISC example: integration The ISC’s role is actually to figure out where and when the Earth tremors were (there are hundreds per month) based on reverse engineering from seismograph readings. They integrate the raw data and attempt to do this, largely by hand brain, and record their findings in archival CDs

DQ Continuum: ISC example: retrieval/analysis You can get a CD from ISC anytime, for the earth tremor activity on any particular day. I’m not sure whether you can get the raw data from them. Naturally, you can analyse the data and see if you can find inconsistencies or errors.

DQ Continuum: ISC example: retrieval/analysis You can get a CD from ISC anytime, for the earth tremor activity on any particular day. I’m not sure whether you can get the raw data from them. Naturally, you can analyse the data and see if you can find inconsistencies or errors.

The ISC DQ Continuum Where might there occur errors, of: Accuracy? Completeness? Uniqueness? Timeliness? Consisency? What else is important in this case?

The ISC DQ Continuum Where might there occur errors, of: Accuracy? Completeness? Uniqueness? Timeliness? Consisency? What else is important in this case?

Where DQ problems occur (gathering) • Manual data entry (how can we improve this? ) • Lack of uniform standards format and content. • Duplicates arising from parallel entry • Approximations, alternatives, entries altered in order to cope with s/w and/or h/w constraints. • Measurement errors.

Where DQ problems occur (gathering) • Manual data entry (how can we improve this? ) • Lack of uniform standards format and content. • Duplicates arising from parallel entry • Approximations, alternatives, entries altered in order to cope with s/w and/or h/w constraints. • Measurement errors.

Where DQ problems occur (delivery) • Multiple hops from source to DB – problems can happen anywhere • Inappropriate pre-processing (e. g. removing some `small’ seismograph readings before sending on to ISC; rounding up or down, when the destination needs more accurate data). • Transmission problems: buffer overflows, checks (did all files arrive, and all correctly? )

Where DQ problems occur (delivery) • Multiple hops from source to DB – problems can happen anywhere • Inappropriate pre-processing (e. g. removing some `small’ seismograph readings before sending on to ISC; rounding up or down, when the destination needs more accurate data). • Transmission problems: buffer overflows, checks (did all files arrive, and all correctly? )

Where DQ problems occur (storage) • Poor, out of date or inappropriate metadata • Missing timestamps • conversion to storage format (e. g. to excel files, to higher/lower precision

Where DQ problems occur (storage) • Poor, out of date or inappropriate metadata • Missing timestamps • conversion to storage format (e. g. to excel files, to higher/lower precision

Where DQ problems occur (integration) This is the business of combining datasets – e. g. from different parts of a company, from (previously) different companies following an acquisition; from different government agencies, etc. • Different keys, different fields, different formats • Different definitions (`customer’, `income’, …) • Sociological factors: reluctance to share!

Where DQ problems occur (integration) This is the business of combining datasets – e. g. from different parts of a company, from (previously) different companies following an acquisition; from different government agencies, etc. • Different keys, different fields, different formats • Different definitions (`customer’, `income’, …) • Sociological factors: reluctance to share!

Where DQ problems occur (retrieval/analysis) The problem here is usually the quality of DBs that store the retrieved data, or the use of the retrieved data in general. Problems arise because: • The source DB is not properly understood! • Straightforward mistakes in the queries that retrieve the relevant data. E. g. A database of genes contains entries that indicate whether or not each gene has a known or suspected link with cancer. A retrieval/analysis task leads to publishing a list of genes that are not relevant to cancer. What is the problem here?

Where DQ problems occur (retrieval/analysis) The problem here is usually the quality of DBs that store the retrieved data, or the use of the retrieved data in general. Problems arise because: • The source DB is not properly understood! • Straightforward mistakes in the queries that retrieve the relevant data. E. g. A database of genes contains entries that indicate whether or not each gene has a known or suspected link with cancer. A retrieval/analysis task leads to publishing a list of genes that are not relevant to cancer. What is the problem here?

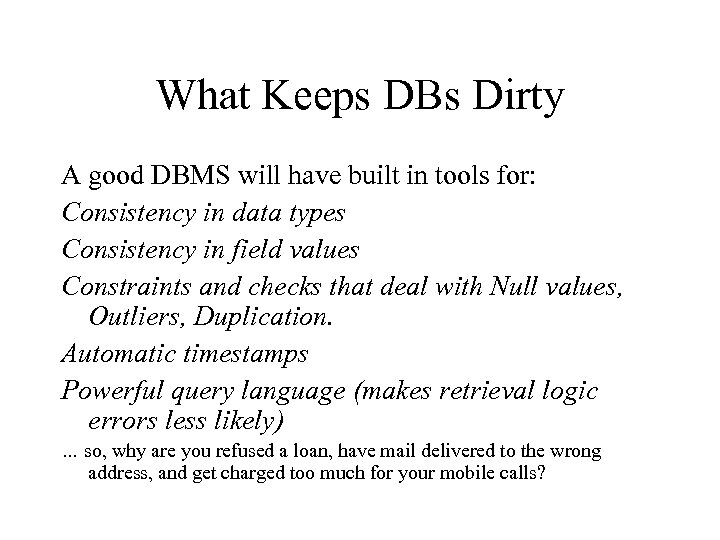

What Keeps DBs Dirty A good DBMS will have built in tools for: Consistency in data types Consistency in field values Constraints and checks that deal with Null values, Outliers, Duplication. Automatic timestamps Powerful query language (makes retrieval logic errors less likely) … so, why are you refused a loan, have mail delivered to the wrong address, and get charged too much for your mobile calls?

What Keeps DBs Dirty A good DBMS will have built in tools for: Consistency in data types Consistency in field values Constraints and checks that deal with Null values, Outliers, Duplication. Automatic timestamps Powerful query language (makes retrieval logic errors less likely) … so, why are you refused a loan, have mail delivered to the wrong address, and get charged too much for your mobile calls?

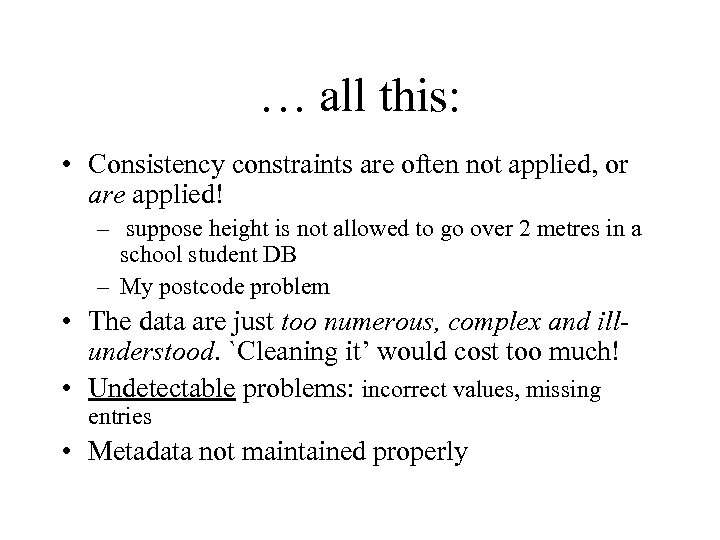

… all this: • Consistency constraints are often not applied, or are applied! – suppose height is not allowed to go over 2 metres in a school student DB – My postcode problem • The data are just too numerous, complex and illunderstood. `Cleaning it’ would cost too much! • Undetectable problems: incorrect values, missing entries • Metadata not maintained properly

… all this: • Consistency constraints are often not applied, or are applied! – suppose height is not allowed to go over 2 metres in a school student DB – My postcode problem • The data are just too numerous, complex and illunderstood. `Cleaning it’ would cost too much! • Undetectable problems: incorrect values, missing entries • Metadata not maintained properly

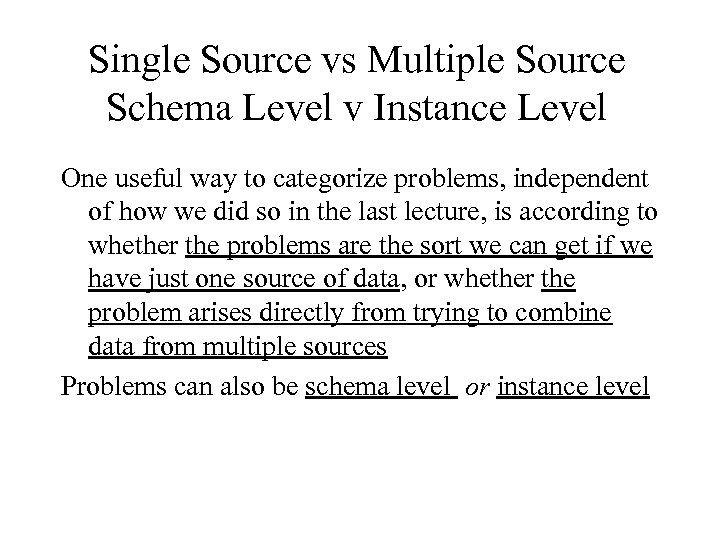

Single Source vs Multiple Source Schema Level v Instance Level One useful way to categorize problems, independent of how we did so in the last lecture, is according to whether the problems are the sort we can get if we have just one source of data, or whether the problem arises directly from trying to combine data from multiple sources Problems can also be schema level or instance level

Single Source vs Multiple Source Schema Level v Instance Level One useful way to categorize problems, independent of how we did so in the last lecture, is according to whether the problems are the sort we can get if we have just one source of data, or whether the problem arises directly from trying to combine data from multiple sources Problems can also be schema level or instance level

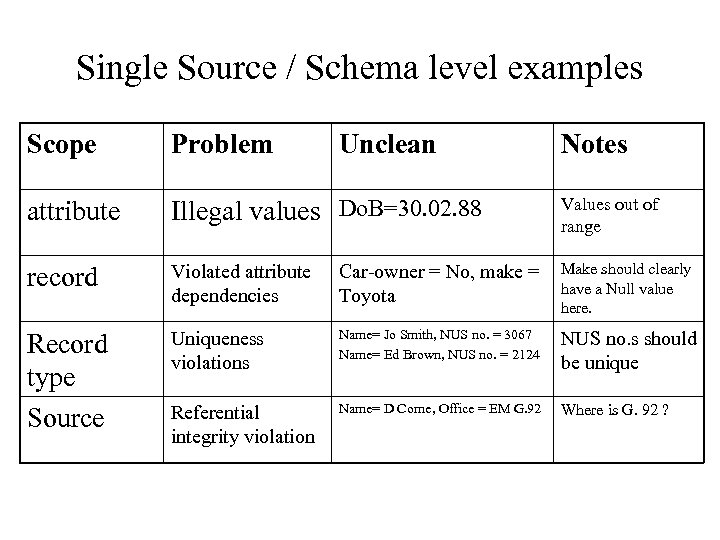

Single Source / Schema level examples Scope Problem Unclean Notes attribute Illegal values Do. B=30. 02. 88 Values out of range record Violated attribute dependencies Car-owner = No, make = Toyota Make should clearly have a Null value here. Record type Source Uniqueness violations Name= Jo Smith, NUS no. = 3067 Name= Ed Brown, NUS no. = 2124 NUS no. s should be unique Referential integrity violation Name= D Corne, Office = EM G. 92 Where is G. 92 ?

Single Source / Schema level examples Scope Problem Unclean Notes attribute Illegal values Do. B=30. 02. 88 Values out of range record Violated attribute dependencies Car-owner = No, make = Toyota Make should clearly have a Null value here. Record type Source Uniqueness violations Name= Jo Smith, NUS no. = 3067 Name= Ed Brown, NUS no. = 2124 NUS no. s should be unique Referential integrity violation Name= D Corne, Office = EM G. 92 Where is G. 92 ?

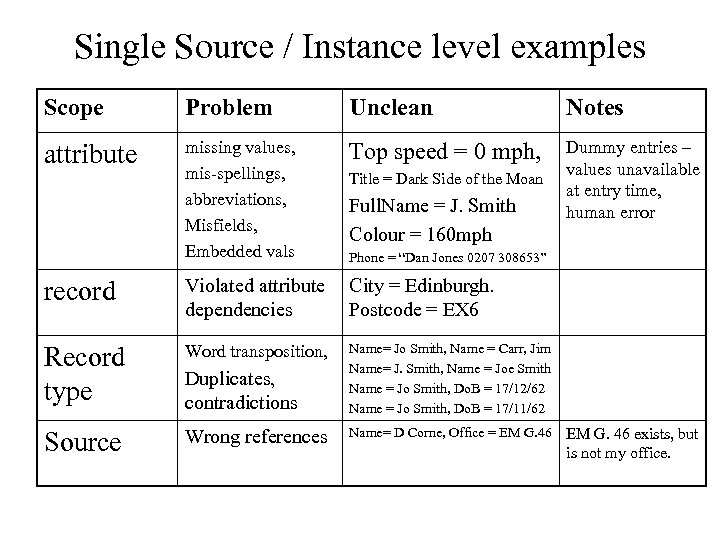

Single Source / Instance level examples Scope Problem Unclean Notes attribute missing values, mis-spellings, abbreviations, Misfields, Embedded vals Top speed = 0 mph, Dummy entries – values unavailable at entry time, human error Title = Dark Side of the Moan Full. Name = J. Smith Colour = 160 mph Phone = “Dan Jones 0207 308653” record Violated attribute City = Edinburgh. dependencies Postcode = EX 6 Record type Word transposition, Source Wrong references Duplicates, contradictions Name= Jo Smith, Name = Carr, Jim Name= J. Smith, Name = Joe Smith Name = Jo Smith, Do. B = 17/12/62 Name = Jo Smith, Do. B = 17/11/62 Name= D Corne, Office = EM G. 46 exists, but is not my office.

Single Source / Instance level examples Scope Problem Unclean Notes attribute missing values, mis-spellings, abbreviations, Misfields, Embedded vals Top speed = 0 mph, Dummy entries – values unavailable at entry time, human error Title = Dark Side of the Moan Full. Name = J. Smith Colour = 160 mph Phone = “Dan Jones 0207 308653” record Violated attribute City = Edinburgh. dependencies Postcode = EX 6 Record type Word transposition, Source Wrong references Duplicates, contradictions Name= Jo Smith, Name = Carr, Jim Name= J. Smith, Name = Joe Smith Name = Jo Smith, Do. B = 17/12/62 Name = Jo Smith, Do. B = 17/11/62 Name= D Corne, Office = EM G. 46 exists, but is not my office.

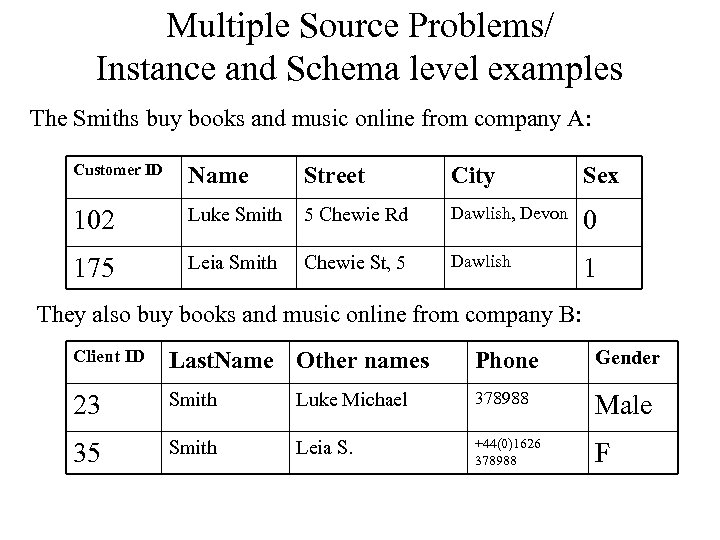

Multiple Source Problems/ Instance and Schema level examples The Smiths buy books and music online from company A: Name Street City Sex 102 Luke Smith 5 Chewie Rd Dawlish, Devon 0 175 Customer ID Leia Smith Chewie St, 5 Dawlish 1 They also buy books and music online from company B: Client ID Last. Name Other names Phone Gender 23 Smith Luke Michael 378988 Male 35 Smith Leia S. +44(0)1626 378988 F

Multiple Source Problems/ Instance and Schema level examples The Smiths buy books and music online from company A: Name Street City Sex 102 Luke Smith 5 Chewie Rd Dawlish, Devon 0 175 Customer ID Leia Smith Chewie St, 5 Dawlish 1 They also buy books and music online from company B: Client ID Last. Name Other names Phone Gender 23 Smith Luke Michael 378988 Male 35 Smith Leia S. +44(0)1626 378988 F

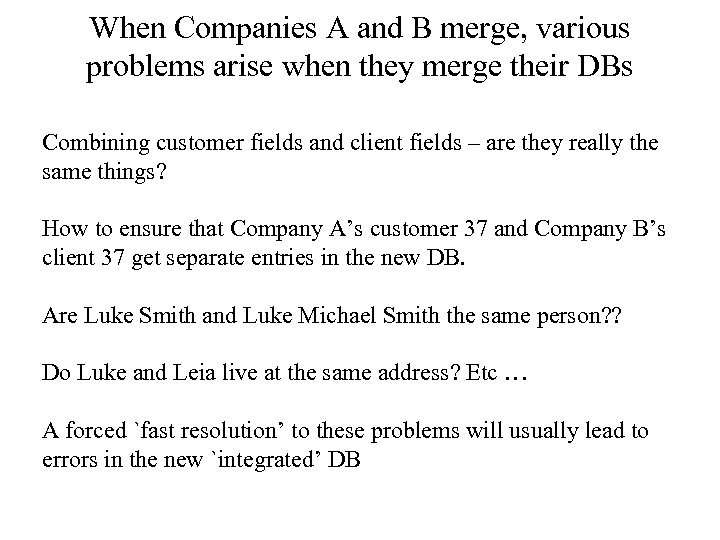

When Companies A and B merge, various problems arise when they merge their DBs Combining customer fields and client fields – are they really the same things? How to ensure that Company A’s customer 37 and Company B’s client 37 get separate entries in the new DB. Are Luke Smith and Luke Michael Smith the same person? ? Do Luke and Leia live at the same address? Etc … A forced `fast resolution’ to these problems will usually lead to errors in the new `integrated’ DB

When Companies A and B merge, various problems arise when they merge their DBs Combining customer fields and client fields – are they really the same things? How to ensure that Company A’s customer 37 and Company B’s client 37 get separate entries in the new DB. Are Luke Smith and Luke Michael Smith the same person? ? Do Luke and Leia live at the same address? Etc … A forced `fast resolution’ to these problems will usually lead to errors in the new `integrated’ DB

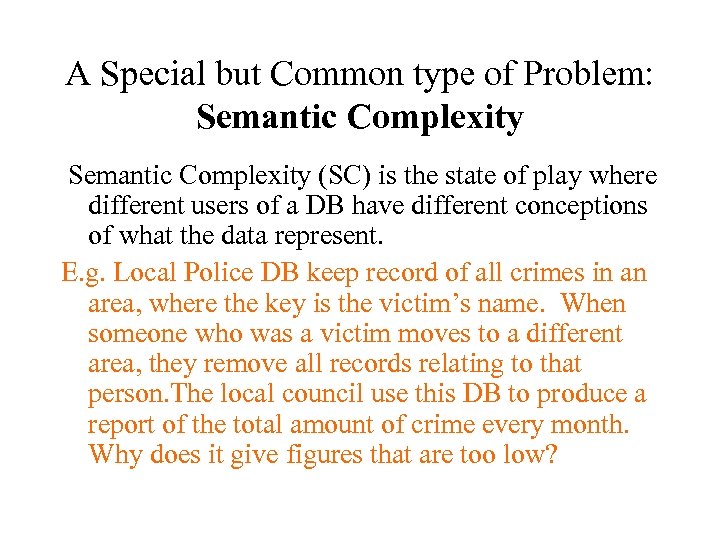

A Special but Common type of Problem: Semantic Complexity (SC) is the state of play where different users of a DB have different conceptions of what the data represent. E. g. Local Police DB keep record of all crimes in an area, where the key is the victim’s name. When someone who was a victim moves to a different area, they remove all records relating to that person. The local council use this DB to produce a report of the total amount of crime every month. Why does it give figures that are too low?

A Special but Common type of Problem: Semantic Complexity (SC) is the state of play where different users of a DB have different conceptions of what the data represent. E. g. Local Police DB keep record of all crimes in an area, where the key is the victim’s name. When someone who was a victim moves to a different area, they remove all records relating to that person. The local council use this DB to produce a report of the total amount of crime every month. Why does it give figures that are too low?

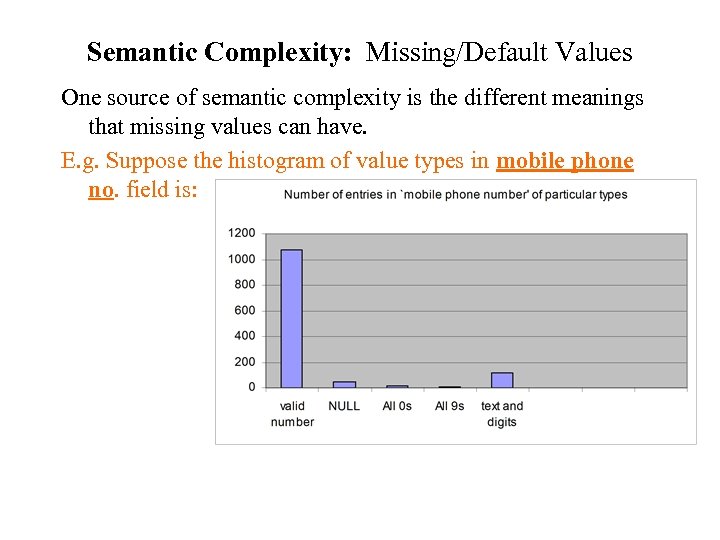

Semantic Complexity: Missing/Default Values One source of semantic complexity is the different meanings that missing values can have. E. g. Suppose the histogram of value types in mobile phone no. field is:

Semantic Complexity: Missing/Default Values One source of semantic complexity is the different meanings that missing values can have. E. g. Suppose the histogram of value types in mobile phone no. field is:

What does NULL mean? A. This record is of someone who does not have a mobile phone? B. This record is of someone who has a mobile phone, but chose not to supply the number? C. This record is of someone who has a mobile phone, but who forgot to supply the number, or it was hard to decipher and recorded as NULL? Maybe some are of type A and some are of type B and some are of type C. For some applications/analyses, we may wish to know the breakdown into types. What about the All zero and All nine entries? Precisely the same can be said of them. Or, perhaps the protocols for recording the entries indicated NULL for type A, 0000000 for type B and 9999999 for type C. The above relate to a quite simple form of semantic complexity – but what if someone uses this DB to estimate the proportion of people who have never had a mobile phone?

What does NULL mean? A. This record is of someone who does not have a mobile phone? B. This record is of someone who has a mobile phone, but chose not to supply the number? C. This record is of someone who has a mobile phone, but who forgot to supply the number, or it was hard to decipher and recorded as NULL? Maybe some are of type A and some are of type B and some are of type C. For some applications/analyses, we may wish to know the breakdown into types. What about the All zero and All nine entries? Precisely the same can be said of them. Or, perhaps the protocols for recording the entries indicated NULL for type A, 0000000 for type B and 9999999 for type C. The above relate to a quite simple form of semantic complexity – but what if someone uses this DB to estimate the proportion of people who have never had a mobile phone?

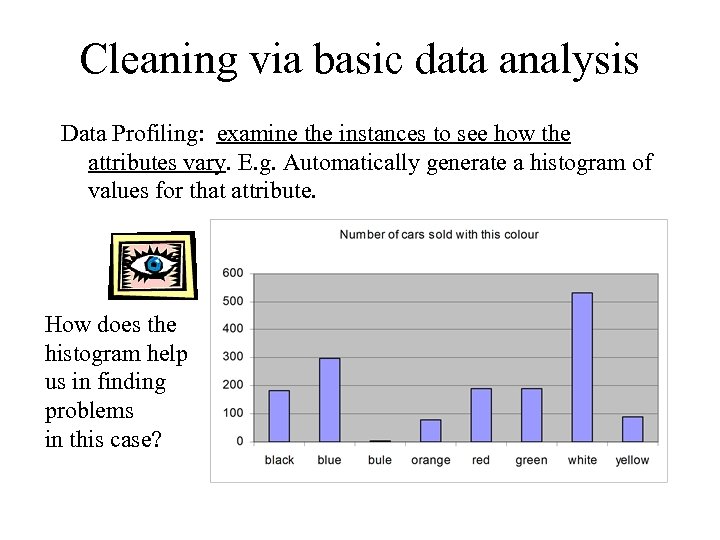

Cleaning via basic data analysis Data Profiling: examine the instances to see how the attributes vary. E. g. Automatically generate a histogram of values for that attribute. How does the histogram help us in finding problems in this case?

Cleaning via basic data analysis Data Profiling: examine the instances to see how the attributes vary. E. g. Automatically generate a histogram of values for that attribute. How does the histogram help us in finding problems in this case?

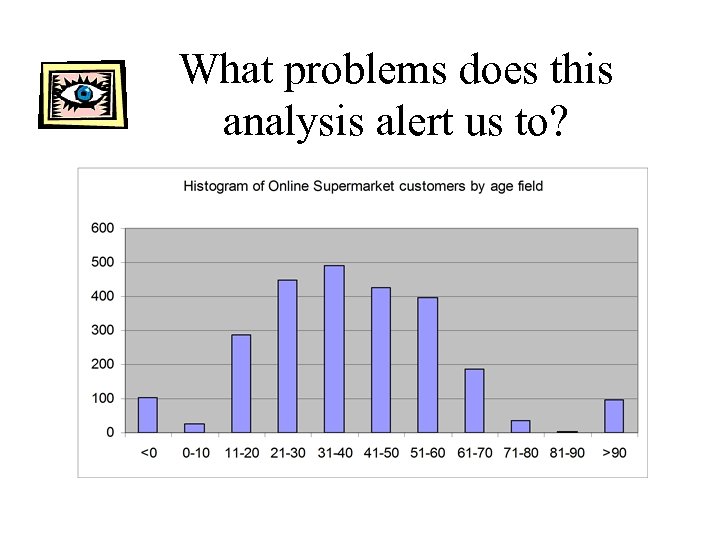

What problems does this analysis alert us to?

What problems does this analysis alert us to?

Which brings us to “basic statistics for data miners”, next week …

Which brings us to “basic statistics for data miners”, next week …