- Number of slides: 16

Data Management for the Dark Energy Survey

OSG Consortium All Hands Meeting, SDSC, San Diego, CA, March 2007

Greg Daues, National Center for Supercomputing Applications, University of Illinois at Urbana-Champaign, IL, USA (daues@ncsa.uiuc.edu)

The Dark Energy Survey International Collaboration

- Fermilab: camera building, survey planning and simulations
- U Illinois: data management, data acquisition, SPT
- U Chicago: SPT, simulations, optics
- U Michigan: optics
- LBNL: red-sensitive CCD detectors
- CTIO: telescope and camera operations
- Spanish Consortium: front-end electronics
  - Institut de Física d'Altes Energies, Barcelona
  - Institut de Ciències de l'Espai, Barcelona
  - CIEMAT, Madrid
- UK Consortium: optics
  - UCL, Cambridge, Edinburgh, Portsmouth and Sussex
- U Penn: science software, electronics
- Brazilian Consortium: simulations, CCDs, data management
  - Observatório Nacional
  - Centro Brasileiro de Pesquisas Físicas
  - Universidade Federal do Rio de Janeiro
  - Universidade Federal do Rio Grande do Sul
- Ohio State University: electronics
- Argonne National Laboratory: electronics, CCDs

DESDM Team

- Tanweer Alam (3)
- Emmanuel Bertin (4)
- Joe Mohr (1, 3)
- Jim Annis (2)
- Dora Cai (3)
- Choong Ngeow (1)
- Wayne Barkhouse (1)
- Greg Daues (3)
- Ray Plante (3)
- Cristina Beldica (3)
- Patrick Duda (3)
- Huan Lin (2)
- Douglas Tucker (2)

Affiliations: (1) U Illinois Astronomy; (2) Fermilab; (3) U Illinois NCSA; (4) Terapix, IAP

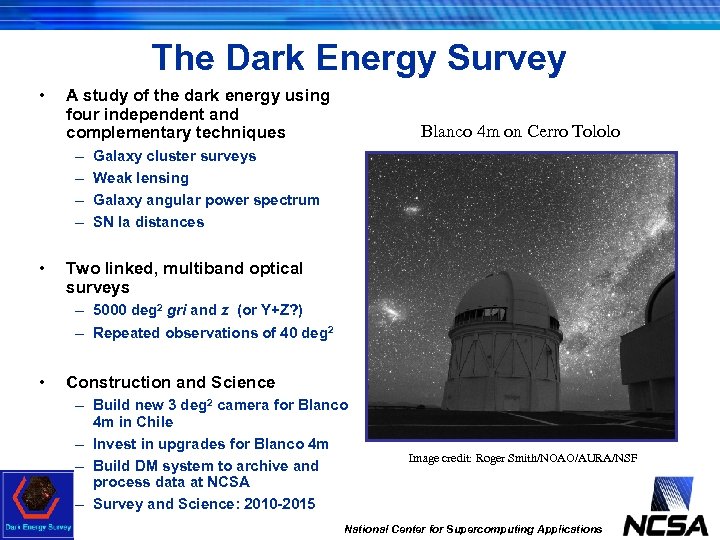

The Dark Energy Survey

- A study of dark energy using four independent and complementary techniques:
  - Galaxy cluster surveys
  - Weak lensing
  - Galaxy angular power spectrum
  - SN Ia distances
- Two linked, multiband optical surveys:
  - 5000 deg² in g, r, i and z (or Y+Z?)
  - Repeated observations of 40 deg²
- Construction and science:
  - Build a new 3 deg² camera for the Blanco 4 m telescope in Chile
  - Invest in upgrades for the Blanco 4 m
  - Build a DM system to archive and process data at NCSA
  - Survey and science: 2010-2015

Image: Blanco 4 m on Cerro Tololo (credit: Roger Smith/NOAO/AURA/NSF)

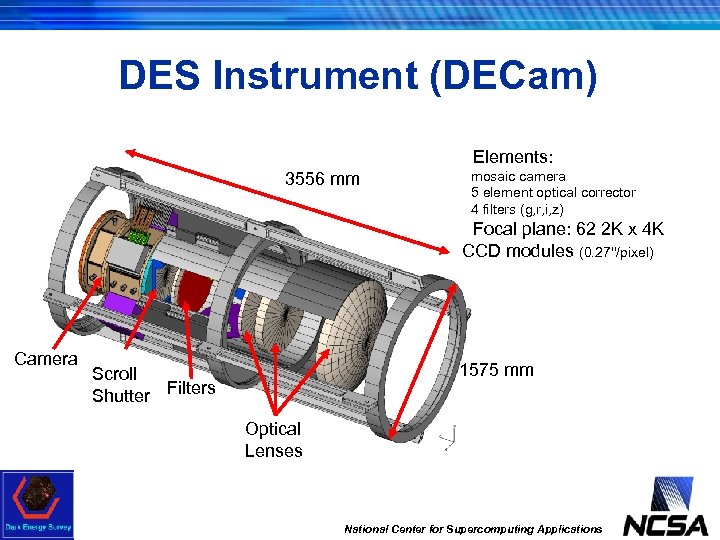

DES Instrument (DECam)

- Elements:
  - Mosaic camera
  - 5-element optical corrector
  - 4 filters (g, r, i, z)
- Focal plane: 62 2K x 4K CCD modules (0.27″/pixel)

Diagram: camera, scroll shutter, filters and optical lenses (labelled dimensions 3556 mm and 1575 mm).
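
As a quick consistency check, the sketch below multiplies out the focal-plane specification. It assumes that "2K x 4K" means 2048 x 4096 pixels and ignores the gaps between CCDs. Under those assumptions it gives roughly 520 Mpix and about 2.9 deg². These figures are in line with the "500 Mpix" exposure and "3 deg² camera" quoted elsewhere in this deck.

```python
# Back-of-envelope DECam focal-plane arithmetic.
# Assumption (not stated on the slide): "2K x 4K" = 2048 x 4096 pixels per CCD.
N_CCDS = 62
CCD_WIDTH_PX = 2048
CCD_HEIGHT_PX = 4096
PIXEL_SCALE_ARCSEC = 0.27  # arcsec per pixel, from the slide

ARCSEC_PER_DEG = 3600.0


def focal_plane_pixels() -> int:
    """Total number of pixels across all science CCDs."""
    return N_CCDS * CCD_WIDTH_PX * CCD_HEIGHT_PX


def field_of_view_deg2() -> float:
    """Sky area covered by the pixels, ignoring inter-CCD gaps."""
    pixel_area_deg2 = (PIXEL_SCALE_ARCSEC / ARCSEC_PER_DEG) ** 2
    return focal_plane_pixels() * pixel_area_deg2


if __name__ == "__main__":
    print(f"{focal_plane_pixels() / 1e6:.0f} Mpix")
    print(f"{field_of_view_deg2():.2f} deg^2")
```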

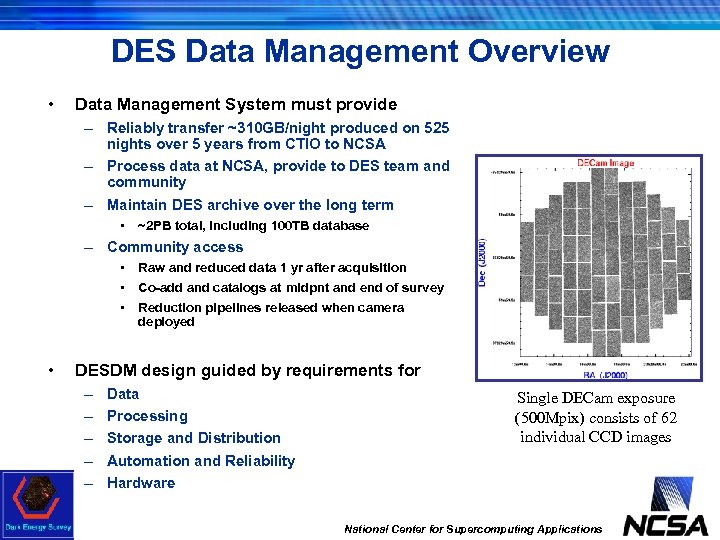

DES Data Management Overview

- The Data Management System must:
  - Reliably transfer the ~310 GB/night produced on 525 nights over 5 years from CTIO to NCSA
  - Process data at NCSA and provide it to the DES team and the community
  - Maintain the DES archive over the long term: ~2 PB total, including a 100 TB database
  - Provide community access:
    - Raw and reduced data 1 year after acquisition
    - Co-adds and catalogs at the midpoint and end of the survey
    - Reduction pipelines released when the camera is deployed
- DESDM design is guided by requirements for:
  - Data processing
  - Storage and distribution
  - Automation and reliability
  - Hardware
- A single DECam exposure (500 Mpix) consists of 62 individual CCD images
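
Here is a rough sizing of the transfer requirement, as a sketch. It uses only the ~310 GB/night and 525-night figures above, plus two assumptions that are not on the slide:

- decimal units;
- a hypothetical 24-hour window in which each night's data must be moved.

Under those assumptions, the raw data total about 163 TB over the survey. The link must sustain roughly 29 Mbit/s on average, before any retransmission or protocol overhead. The raw total is well below the ~2 PB archive figure, which also includes the 100 TB database.

```python
# Rough data-volume arithmetic for the CTIO -> NCSA transfer requirement.
# From the slide: ~310 GB/night, 525 nights.
# Assumptions (not from the slide): decimal units (1 GB = 1e9 B, 1 TB = 1e12 B)
# and a hypothetical 24-hour window to ship each night's data.
GB = 1e9
TB = 1e12

NIGHTLY_VOLUME_BYTES = 310 * GB
SURVEY_NIGHTS = 525
TRANSFER_WINDOW_S = 24 * 3600  # hypothetical window, in seconds


def total_raw_volume_tb() -> float:
    """Raw data produced over the whole survey, in TB."""
    return NIGHTLY_VOLUME_BYTES * SURVEY_NIGHTS / TB


def required_rate_mbit_s(window_s: float = TRANSFER_WINDOW_S) -> float:
    """Minimum sustained rate (Mbit/s) to move one night's data in window_s seconds."""
    return NIGHTLY_VOLUME_BYTES * 8 / window_s / 1e6


if __name__ == "__main__":
    print(f"raw data over survey: {total_raw_volume_tb():.0f} TB")
    print(f"sustained rate: {required_rate_mbit_s():.1f} Mbit/s")
```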

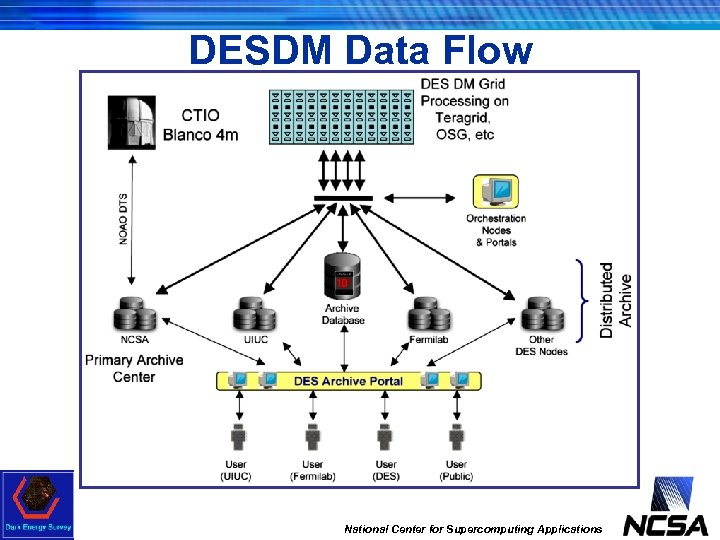

DESDM Data Flow

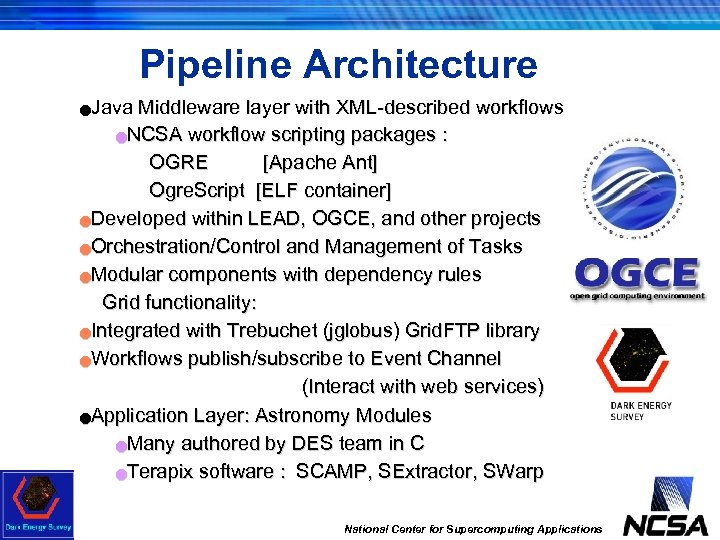

Pipeline Architecture

- Java middleware layer with XML-described workflows
  - NCSA workflow scripting packages: OGRE [Apache Ant], OgreScript [ELF container]
  - Developed within LEAD, OGCE, and other projects
  - Orchestration, control and management of tasks
  - Modular components with dependency rules
- Grid functionality:
  - Integrated with the Trebuchet (jglobus) GridFTP library
  - Workflows publish/subscribe to an Event Channel (interact with web services)
- Application layer: astronomy modules
  - Many authored by the DES team in C
  - Terapix software: SCAMP, SExtractor, SWarp
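
The "modular components with dependency rules" idea can be illustrated with a small dependency-ordered runner. This is a Python analogue, not OGRE or OgreScript, which express workflows as Ant-style XML. The stage names and their dependencies are hypothetical. The sketch uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+). It yields each stage only after all of its prerequisites and raises `CycleError` if the rules contain a cycle.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set

# Hypothetical stages and dependency rules, not the actual DESDM workflow.
DEPENDENCIES: Dict[str, Set[str]] = {
    "detrend": set(),
    "astrometry": {"detrend"},             # e.g. a SCAMP step
    "catalog": {"detrend", "astrometry"},  # e.g. a SExtractor step
    "ingest": {"catalog"},                 # load objects into the database
}


def run_workflow(deps: Dict[str, Set[str]],
                 action: Callable[[str], None]) -> List[str]:
    """Run every stage once, each only after all of its prerequisites."""
    # static_order() raises graphlib.CycleError if the rules are circular
    order = list(TopologicalSorter(deps).static_order())
    for stage in order:
        action(stage)
    return order


if __name__ == "__main__":
    run_workflow(DEPENDENCIES, lambda stage: print("running", stage))
```

Keeping the rules as data rather than code means a new module only adds one entry to the mapping. That mirrors, loosely, how targets and dependencies are declared in an Ant build file.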

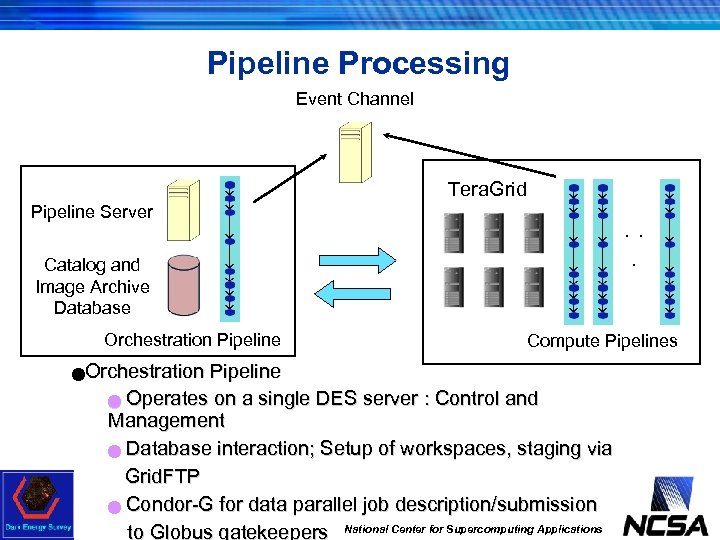

Pipeline Processing

Diagram: Event Channel, pipeline server, catalog and image archive database, orchestration pipeline, and compute pipelines on TeraGrid.

- Orchestration pipeline
  - Operates on a single DES server: control and management
  - Database interaction; setup of workspaces; staging via GridFTP
  - Condor-G for data-parallel job description and submission to Globus gatekeepers
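
The data-parallel submission step might look like the following sketch. It generates one Condor-G submit description that queues one grid job per CCD of an exposure. It uses HTCondor's grid-universe keywords: `universe = grid`, plus `grid_resource = gt2 ...` for a Globus gatekeeper. The following are hypothetical placeholders, not DESDM's actual job description:

- the gatekeeper contact string;
- the `process_ccd.sh` executable;
- its `--exposure`/`--ccd` flags.

```python
# Sketch: one Condor-G submit description that fans an exposure out into one
# grid job per CCD. Executable name, flags and gatekeeper are hypothetical.
N_CCDS = 62


def condor_g_submit(exposure: str, gatekeeper: str,
                    executable: str = "process_ccd.sh",
                    n_ccds: int = N_CCDS) -> str:
    lines = [
        "universe      = grid",
        f"grid_resource = gt2 {gatekeeper}",
        f"executable    = {executable}",
        # $(Process) takes the values 0 .. n_ccds-1, one per queued job
        f"arguments     = --exposure {exposure} --ccd $(Process)",
        f"output        = {exposure}_ccd$(Process).out",
        f"error         = {exposure}_ccd$(Process).err",
        f"log           = {exposure}.log",
        f"queue {n_ccds}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(condor_g_submit("expo0001", "gatekeeper.example.edu/jobmanager-pbs"))
```

The resulting text would be written to a file and passed to `condor_submit`. Because `$(Process)` runs from 0 to 61, the hypothetical script would need to map it to whatever CCD numbering it expects.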

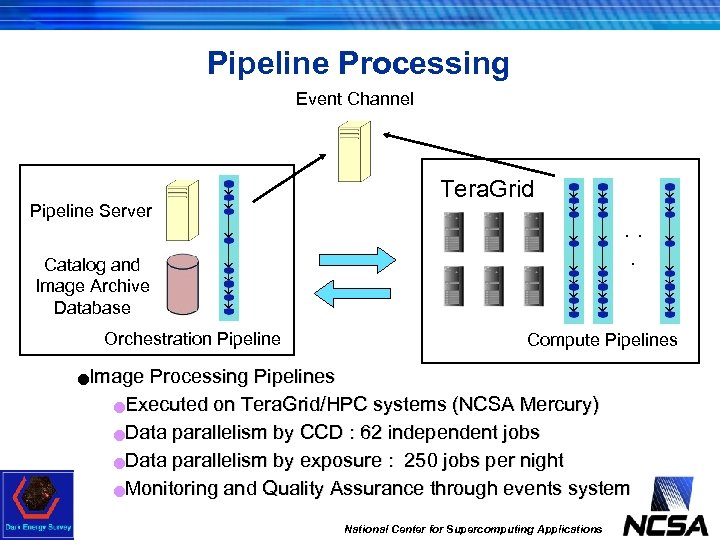

Pipeline Processing

Diagram: Event Channel, pipeline server, catalog and image archive database, orchestration pipeline, and compute pipelines on TeraGrid.

- Image processing pipelines
  - Executed on TeraGrid/HPC systems (NCSA Mercury)
  - Data parallelism by CCD: 62 independent jobs per exposure, one per CCD
  - Data parallelism by exposure: 250 jobs per night
  - Monitoring and quality assurance through the events system
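
Per-CCD data parallelism with event-based monitoring can be sketched in-process:

- a thread pool stands in for the grid jobs;
- a minimal publish/subscribe `EventChannel` class carries status events to a QA subscriber.

The `EventChannel` is hypothetical; it is not the DESDM Event Channel or its web-service interface. `process_ccd` is a placeholder for the real reduction step.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from threading import Lock
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class EventChannel:
    """Minimal in-process publish/subscribe channel (hypothetical stand-in)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)
        self._lock = Lock()

    def subscribe(self, topic: str, handler: Handler) -> None:
        with self._lock:
            self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # Copy the handler list under the lock, then call handlers outside it.
        with self._lock:
            handlers = list(self._subscribers[topic])
        for handler in handlers:
            handler(event)


class QAMonitor:
    """Subscriber that records completed CCDs; called from worker threads."""

    def __init__(self) -> None:
        self.completed: List[int] = []
        self._lock = Lock()

    def on_event(self, event: dict) -> None:
        if event["status"] == "done":
            with self._lock:
                self.completed.append(event["ccd"])


def process_ccd(exposure: str, ccd: int, channel: EventChannel) -> int:
    # Placeholder for the real per-CCD reduction; only publishes a status event.
    channel.publish("qa", {"exposure": exposure, "ccd": ccd, "status": "done"})
    return ccd


def process_exposure(exposure: str, channel: EventChannel,
                     n_ccds: int = 62, workers: int = 8) -> List[int]:
    """Run one independent task per CCD; results are returned in CCD order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(process_ccd, exposure, ccd, channel)
                   for ccd in range(1, n_ccds + 1)]
        return [f.result() for f in futures]


if __name__ == "__main__":
    channel = EventChannel()
    qa = QAMonitor()
    channel.subscribe("qa", qa.on_event)
    process_exposure("expo0001", channel)
    print(f"{len(qa.completed)} of 62 CCDs reported done")
```

Decoupling producers from monitors this way lets QA tools attach to or detach from the channel without changes to the processing jobs.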

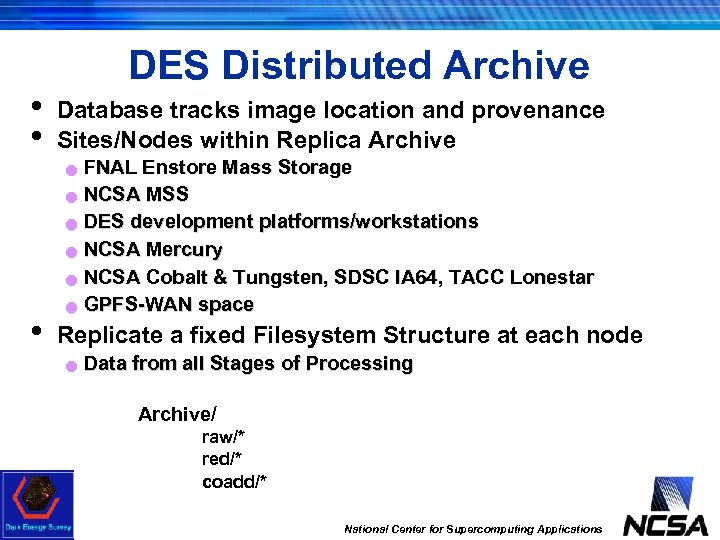

DES Distributed Archive

- Database tracks image location and provenance
- Sites/nodes within the replica archive:
  - FNAL Enstore mass storage
  - NCSA MSS
  - DES development platforms/workstations
  - NCSA Mercury
  - NCSA Cobalt & Tungsten, SDSC IA-64, TACC Lonestar
  - GPFS-WAN space
- Replicate a fixed filesystem structure at each node
  - Data from all stages of processing: Archive/ containing raw/*, red/*, coadd/*
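
A replica catalog of this kind can be sketched with SQLite using three tables:

- images, with a parent link for provenance;
- sites;
- replicas, recording which sites hold which image.

Because every node replicates the same `Archive/raw`, `Archive/red`, `Archive/coadd` layout, a full path is just the site's root plus the image's stage and relative path. The schema, site names and root paths are hypothetical; the slide does not describe the actual DESDM database tables.

```python
import sqlite3
from typing import List

# Hypothetical schema; the real DESDM tables are not described on the slide.
SCHEMA = """
CREATE TABLE image (
    id        INTEGER PRIMARY KEY,
    stage     TEXT NOT NULL,               -- 'raw', 'red' or 'coadd'
    relpath   TEXT NOT NULL,               -- path below Archive/<stage>/
    parent_id INTEGER REFERENCES image(id) -- provenance: image this one derives from
);
CREATE TABLE site (name TEXT PRIMARY KEY, root TEXT NOT NULL);
CREATE TABLE replica (
    image_id INTEGER REFERENCES image(id),
    site     TEXT REFERENCES site(name),
    PRIMARY KEY (image_id, site)
);
"""


def build_demo_archive() -> sqlite3.Connection:
    """In-memory archive with placeholder sites and two images."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO site VALUES (?, ?)",
                     [("fnal", "/data/fnal"), ("ncsa", "/data/ncsa")])
    conn.executemany("INSERT INTO image VALUES (?, ?, ?, ?)",
                     [(1, "raw", "night1/expo0001.fits", None),
                      (2, "red", "night1/expo0001_01.fits", 1)])
    conn.executemany("INSERT INTO replica VALUES (?, ?)",
                     [(1, "fnal"), (1, "ncsa"), (2, "ncsa")])
    return conn


def locate(conn: sqlite3.Connection, image_id: int) -> List[str]:
    """Full path of every replica, using the fixed <root>/Archive/<stage>/ layout."""
    rows = conn.execute(
        "SELECT s.root, i.stage, i.relpath FROM replica r "
        "JOIN image i ON i.id = r.image_id "
        "JOIN site s ON s.name = r.site "
        "WHERE r.image_id = ? ORDER BY s.name",
        (image_id,),
    )
    return [f"{root}/Archive/{stage}/{relpath}" for root, stage, relpath in rows]


def lineage(conn: sqlite3.Connection, image_id: int) -> List[int]:
    """Follow provenance links from an image back to its raw ancestor."""
    chain: List[int] = []
    current = image_id
    while current is not None:
        chain.append(current)
        (current,) = conn.execute(
            "SELECT parent_id FROM image WHERE id = ?", (current,)).fetchone()
    return chain
```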

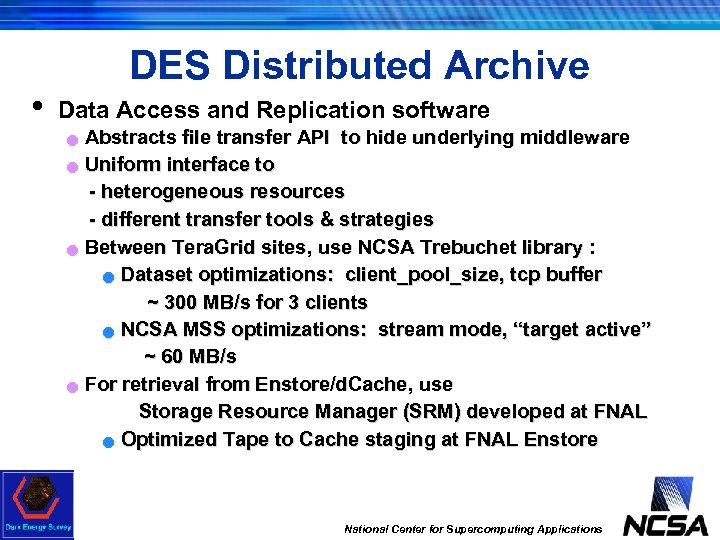

DES Distributed Archive

- Data access and replication software
  - Abstracts the file transfer API to hide the underlying middleware
  - Uniform interface to:
    - heterogeneous resources
    - different transfer tools and strategies
- Between TeraGrid sites, use the NCSA Trebuchet library:
  - Dataset optimizations (client_pool_size, TCP buffer): ~300 MB/s for 3 clients
  - NCSA MSS optimizations (stream mode, "target active"): ~60 MB/s
- For retrieval from Enstore/dCache, use the Storage Resource Manager (SRM) developed at FNAL
  - Optimized tape-to-cache staging at FNAL Enstore
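
The "uniform interface over different transfer tools" design can be sketched as an abstract base class. Each subclass builds the command line for a particular tool, and the tool is chosen by URL scheme. The class names are hypothetical and this is not the Trebuchet (Java) API.

The tools themselves are real command-line clients:

- `globus-url-copy` for GridFTP, where `-p` sets parallel streams and `-tcp-bs` the TCP buffer size in bytes;
- `srmcp` for SRM/dCache.

The default stream count and buffer size are arbitrary placeholders, not the tuned DESDM settings.

```python
from abc import ABC, abstractmethod
from typing import List
from urllib.parse import urlparse


class Transfer(ABC):
    """Uniform transfer interface; subclasses hide which tool does the work."""

    @abstractmethod
    def command(self, src: str, dst: str) -> List[str]:
        """Return the command line that copies src to dst."""


class GridFTPTransfer(Transfer):
    def __init__(self, streams: int = 4,
                 tcp_buffer_bytes: int = 2 * 1024 * 1024) -> None:
        self.streams = streams
        self.tcp_buffer_bytes = tcp_buffer_bytes

    def command(self, src: str, dst: str) -> List[str]:
        # -p: number of parallel data streams; -tcp-bs: TCP buffer size in bytes
        return ["globus-url-copy", "-p", str(self.streams),
                "-tcp-bs", str(self.tcp_buffer_bytes), src, dst]


class SRMTransfer(Transfer):
    def command(self, src: str, dst: str) -> List[str]:
        # srmcp goes through the SRM server (e.g. dCache, which can stage from tape)
        return ["srmcp", src, dst]


_BY_SCHEME = {"gsiftp": GridFTPTransfer, "srm": SRMTransfer}


def transfer_for(url: str) -> Transfer:
    """Pick a transfer strategy from the URL scheme of the remote end."""
    scheme = urlparse(url).scheme
    try:
        return _BY_SCHEME[scheme]()
    except KeyError:
        raise ValueError(f"no transfer tool registered for scheme {scheme!r}") from None
```

A caller would run the returned list with `subprocess.run(cmd, check=True)`. Supporting another tool means adding one subclass and one scheme mapping, without touching callers.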

DES Data Challenges

- DESDM employs a spiral development model, with testing through yearly Data Challenges
- Large-scale DECam image simulation effort at Fermilab
  - Produces sky images over hundreds of deg² with a realistic distribution of galaxies and stars (weak lensing shear from large-scale structure still to come)
  - Realistic effects: cosmic rays, dead columns, gain variations, seeing variations, saturation, optical distortion, nightly extinction variations, etc.
- Data challenges: the DESDM team uses the current system to process simulated images to the catalog level and compares the catalogs to truth tables
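
Comparing a pipeline catalog to a truth table typically starts with a positional cross-match. The sketch below is one simple way to do it, not necessarily the DESDM method:

- a flat-sky (small-angle) separation;
- a brute-force nearest-neighbour search;
- an assumed 1 arcsec match radius.

It then reports completeness as the fraction of truth objects with a matched detection.

```python
import math
from typing import List, Optional, Sequence, Tuple

Source = Tuple[float, float]  # (ra_deg, dec_deg)


def separation_arcsec(a: Source, b: Source) -> float:
    """Flat-sky (small-angle) separation in arcsec; fine for arcsecond-scale matching."""
    mean_dec = math.radians(0.5 * (a[1] + b[1]))
    dra = (a[0] - b[0]) * math.cos(mean_dec)  # RA offsets shrink with declination
    ddec = a[1] - b[1]
    return math.hypot(dra, ddec) * 3600.0


def match_to_truth(detected: Sequence[Source], truth: Sequence[Source],
                   radius_arcsec: float = 1.0) -> List[Optional[int]]:
    """For each truth object, index of the nearest detection within radius, else None."""
    matches: List[Optional[int]] = []
    for t in truth:
        best: Optional[int] = None
        best_sep = radius_arcsec
        for i, d in enumerate(detected):
            sep = separation_arcsec(t, d)
            if sep <= best_sep:
                best, best_sep = i, sep
        matches.append(best)
    return matches


def completeness(matches: Sequence[Optional[int]]) -> float:
    """Fraction of truth objects that found a counterpart."""
    return sum(m is not None for m in matches) / len(matches) if matches else 0.0
```

This sketch has several limitations:

- It does not handle RA wrap-around at 0/360 degrees.
- It does not enforce one-to-one matches.
- Its O(N·M) search would need a spatial index, such as a k-d tree or a sky pixelization, at the scale of the 50 to 250 million objects catalogued in the data challenges.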

DES Data Challenges (2)

- DES DC1 (Oct 05 - Jan 06)
  - 5 nights of simulated data (ImSim1), 700 GB raw
  - Basic orchestration, Condor-G job submission
  - First astronomy modules: image reduction and cataloguing; ingestion of objects into the database
  - TeraGrid usage: data-parallel computing on NCSA Mercury
  - Catalogued, calibrated and ingested 50 million objects
- DES DC2 (Oct 06 - Jan 07)
  - 10 nights of simulated data (5 TB, equivalent to 20% of the SDSS imaging dataset)
  - Astronomy algorithms: astrometry, co-addition, cataloguing
  - Distributed archive; high-performance GridFTP transfers
  - Archive portal
  - TeraGrid usage: production runs on NCSA Mercury; pipelines successfully tested on NCSA Cobalt and SDSC IA-64
  - Catalogued, calibrated and ingested 250 million objects

DESDM Partnership with OSG

- DESDM reflects the underlying DOE-NSF partnership in DES
- DESDM is designed to support production, delivery and analysis of science-ready data products for all collaboration sites
- Fermilab and NCSA both host massive HPC resources
  - Camera construction supported by DOE (Fermilab)
  - Telescope built and operated by NSF (NOAO)
  - Data management development supported by NSF (NCSA)
  - Strong incentive to develop a DM system that seamlessly integrates TeraGrid (TG) and OSG resources

OSG Partnership Components

- Archiving
  - The distributed archive already includes a node on the Fermilab dCache system
    - Simplifies transfer of simulated and reduced images among DES collaborators at NCSA and Fermilab
  - In the operations phase, Fermilab and NCSA will both host comprehensive DES data repositories
- Processing
  - Large-scale reprocessing of DES data would benefit from access to more HPC resources
  - The orchestration layer already supports processing on multiple (similar) TG nodes
  - Work is under way to add support for processing on FermiGrid platforms