- Number of slides: 41

Data Grids and Data-Intensive Science
Ian Foster
Mathematics and Computer Science Division, Argonne National Laboratory
and Department of Computer Science, The University of Chicago
http://www.mcs.anl.gov/~foster
Keynote Talk, Research Library Group Annual Conference, Amsterdam, April 22, 2002

Overview
- The technology landscape
  - Living in an exponential world
- Grid concepts
  - Resource sharing in virtual organizations
- Petascale Virtual Data Grids
  - Data, programs, computers, computations as community resources

foster@mcs.anl.gov ARGONNE CHICAGO

Living in an Exponential World: (1) Computing & Sensors
- Moore’s Law: transistor count doubles every 18 months
- (Image caption: Magnetohydrodynamics star formation)

Living in an Exponential World: (2) Storage
- Storage density doubles every 12 months
- Dramatic growth in online data (1 petabyte = 1000 terabytes = 1,000,000 gigabytes)
  - 2000: ~0.5 petabyte
  - 2005: ~10 petabytes
  - 2010: ~100 petabytes
  - 2015: ~1000 petabytes?
- Transforming entire disciplines in physical and, increasingly, biological sciences; humanities next?

Data Intensive Physical Sciences
- High energy & nuclear physics
  - Including new experiments at CERN
- Gravity wave searches
  - LIGO, GEO, VIRGO
- Time-dependent 3-D systems (simulation, data)
  - Earth Observation, climate modeling
  - Geophysics, earthquake modeling
  - Fluids, aerodynamic design
  - Pollutant dispersal scenarios
- Astronomy: Digital sky surveys

Ongoing Astronomical Mega-Surveys
- Large number of new surveys
  - Multi-TB in size, 100 M objects or larger
  - In databases
  - Individual archives planned and under way
- Multi-wavelength view of the sky
  - Coverage of more than 13 wavelengths within 5 years
- Impressive early discoveries
  - Finding exotic objects by unusual colors
    - L, T dwarfs, high redshift quasars
  - Finding objects by time variability
    - Gravitational micro-lensing
- Surveys: MACHO, 2MASS, SDSS, DPOSS, GSC-II, COBE, MAP, NVSS, FIRST, GALEX, ROSAT, OGLE, ...

Crab Nebula in 4 Spectral Regions
(Image panels: X-ray, Optical, Infrared, Radio)

Coming Floods of Astronomy Data
- The planned Large Synoptic Survey Telescope will produce over 10 petabytes per year by 2008!
  - All-sky survey every few days, so will have fine-grain time series for the first time

Data Intensive Biology and Medicine
- Medical data
  - X-Ray, mammography data, etc. (many petabytes)
  - Digitizing patient records (ditto)
- X-ray crystallography
- Molecular genomics and related disciplines
  - Human Genome, other genome databases
  - Proteomics (protein structure, activities, …)
  - Protein interactions, drug delivery
- Virtual Population Laboratory (proposed)
  - Simulate likely spread of disease outbreaks
- Brain scans (3-D, time dependent)

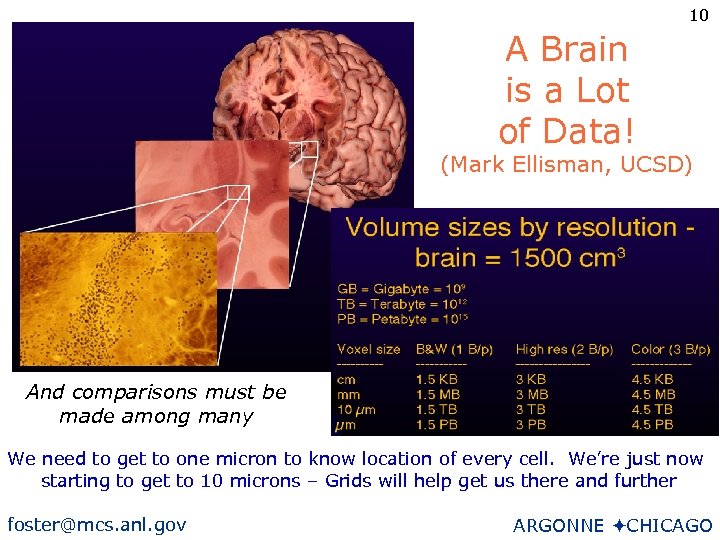

A Brain is a Lot of Data! (Mark Ellisman, UCSD)
- And comparisons must be made among many
- We need to get to one micron to know the location of every cell. We’re just now starting to get to 10 microns; Grids will help get us there and further

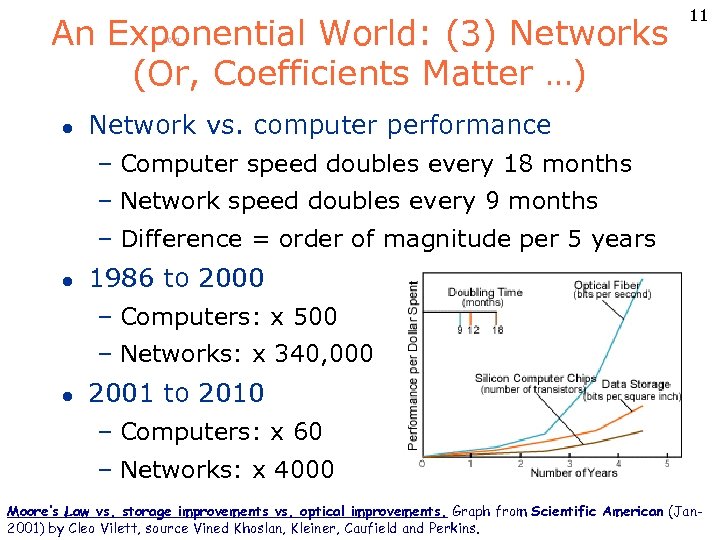

An Exponential World: (3) Networks (Or, Coefficients Matter …)
- Network vs. computer performance
  - Computer speed doubles every 18 months
  - Network speed doubles every 9 months
  - Difference = order of magnitude per 5 years
- 1986 to 2000
  - Computers: x 500
  - Networks: x 340,000
- 2001 to 2010
  - Computers: x 60
  - Networks: x 4000
(Graph: Moore’s Law vs. storage improvements vs. optical improvements. From Scientific American, Jan-2001, by Cleo Vilett; source: Vinod Khosla, Kleiner, Caufield and Perkins.)
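
The "order of magnitude per 5 years" claim follows directly from the two doubling periods on this slide. The short sketch below redoes that arithmetic; the function names are illustrative and not from the talk, and the only inputs are the 18-month and 9-month doubling periods quoted above.

```python
# Growth under a fixed doubling period: factor = 2 ** (elapsed / doubling_period).

COMPUTER_DOUBLING_MONTHS = 18  # from the slide
NETWORK_DOUBLING_MONTHS = 9    # from the slide


def growth_factor(months: float, doubling_period_months: float) -> float:
    """Multiplicative improvement after `months` with a fixed doubling period."""
    return 2.0 ** (months / doubling_period_months)


def network_advantage(years: float) -> float:
    """How much faster networks improve than computers over `years`."""
    months = years * 12
    return (growth_factor(months, NETWORK_DOUBLING_MONTHS)
            / growth_factor(months, COMPUTER_DOUBLING_MONTHS))


for years in (5, 9):
    months = years * 12
    print(f"{years} years: computers x{growth_factor(months, COMPUTER_DOUBLING_MONTHS):.0f}, "
          f"networks x{growth_factor(months, NETWORK_DOUBLING_MONTHS):.0f}, "
          f"ratio x{network_advantage(years):.1f}")
```

Over nine years (2001 to 2010) the same formula gives 2^6 = 64 for computers and 2^12 = 4096 for networks, in line with the rounded "x 60" and "x 4000" on the slide.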

Evolution of the Scientific Process
- Pre-electronic
  - Theorize &/or experiment, alone or in small teams; publish paper
- Post-electronic
  - Construct and mine very large databases of observational or simulation data
  - Develop computer simulations & analyses
  - Exchange information quasi-instantaneously within large, distributed, multidisciplinary teams

The Grid
“Resource sharing & coordinated problem solving in dynamic, multi-institutional virtual organizations”

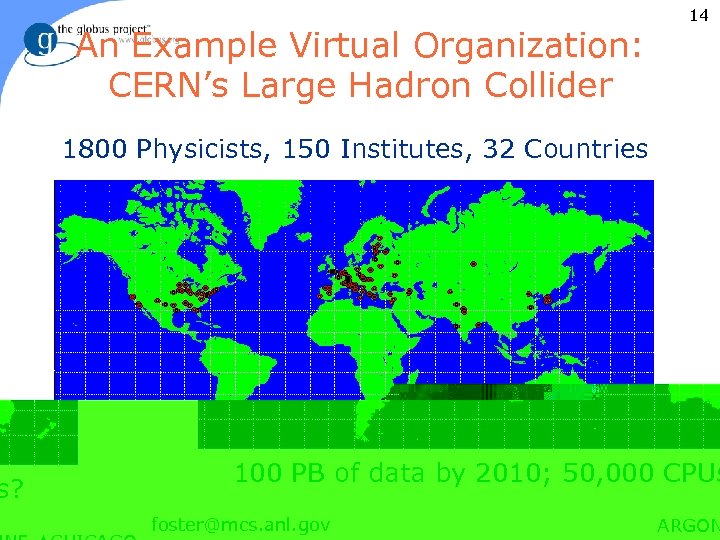

An Example Virtual Organization: CERN’s Large Hadron Collider
- 1800 physicists, 150 institutes, 32 countries
- 100 PB of data by 2010; 50,000 CPUs?

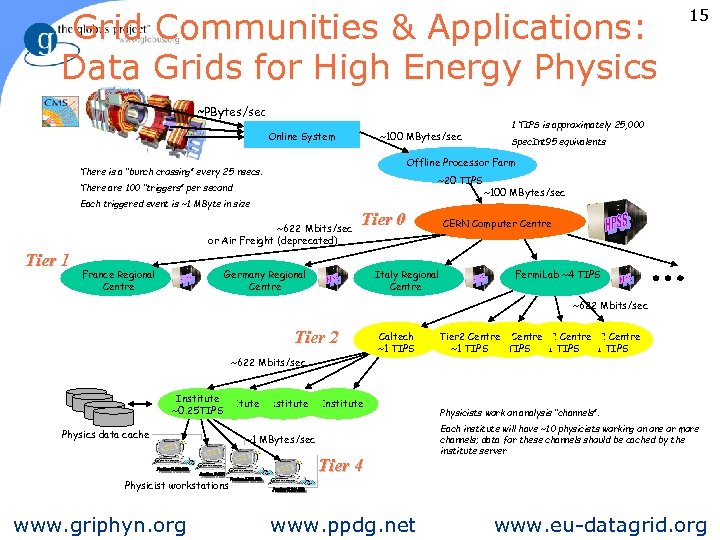

Grid Communities & Applications: Data Grids for High Energy Physics
(Diagram of the tiered LHC data grid; recovered labels grouped by element)
- Detector and Online System: ~PBytes/sec in, ~100 MBytes/sec out
  - There is a “bunch crossing” every 25 nsecs
  - There are 100 “triggers” per second
  - Each triggered event is ~1 MByte in size
- Tier 0: CERN Computer Centre, Offline Processor Farm (~20 TIPS), ~100 MBytes/sec
- Tier 1: France Regional Centre, Germany Regional Centre, Italy Regional Centre, FermiLab (~4 TIPS); ~622 Mbits/sec or Air Freight (deprecated)
- Tier 2: Caltech (~1 TIPS), Tier 2 Centres (~1 TIPS each); ~622 Mbits/sec
- Institutes (~0.25 TIPS) with physics data cache; ~622 Mbits/sec
- Tier 4: Physicist workstations; ~1 MBytes/sec
- 1 TIPS is approximately 25,000 SpecInt95 equivalents
- Physicists work on analysis “channels”. Each institute will have ~10 physicists working on one or more channels; data for these channels should be cached by the institute server
- www.griphyn.org, www.ppdg.net, www.eu-datagrid.org
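
The rate labels on this diagram can be cross-checked with simple arithmetic. The sketch below is illustrative only: it uses the slide's figures (25 ns bunch crossings, 100 triggers per second, ~1 MByte per event) and adds one assumption that the slide does not make, namely that the experiment records continuously for a full calendar year.

```python
# Back-of-envelope check of the data rates quoted on the slide.

BUNCH_CROSSING_INTERVAL_NS = 25.0   # from the slide
TRIGGERS_PER_SECOND = 100.0         # from the slide
EVENT_SIZE_MBYTES = 1.0             # "~1 MByte" from the slide
SECONDS_PER_YEAR = 365 * 24 * 3600  # ASSUMPTION: continuous running all year


def crossings_per_second(interval_ns: float) -> float:
    """Number of bunch crossings per second for a given spacing in nanoseconds."""
    return 1e9 / interval_ns


def trigger_output_mbytes_per_sec(triggers_per_sec: float, event_mbytes: float) -> float:
    """Data rate leaving the online system after triggering."""
    return triggers_per_sec * event_mbytes


def petabytes_per_year(mbytes_per_sec: float, seconds: float = SECONDS_PER_YEAR) -> float:
    """Accumulated volume in petabytes (1 PB = 1e9 MB, decimal units)."""
    return mbytes_per_sec * seconds / 1e9


rate = trigger_output_mbytes_per_sec(TRIGGERS_PER_SECOND, EVENT_SIZE_MBYTES)
print(f"crossings per second: {crossings_per_second(BUNCH_CROSSING_INTERVAL_NS):.0f}")
print(f"post-trigger rate: {rate:.0f} MBytes/sec")
print(f"raw volume per year of continuous running: {petabytes_per_year(rate):.2f} PB")
```

The product of 100 triggers per second and ~1 MByte per event reproduces the ~100 MBytes/sec label on the link out of the online system; the yearly figure depends entirely on the continuous-running assumption.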

The Grid Opportunity: eScience and eBusiness
- Physicists worldwide pool resources for petaop analyses of petabytes of data
- Civil engineers collaborate to design, execute, & analyze shake table experiments
- An insurance company mines data from partner hospitals for fraud detection
- An application service provider offloads excess load to a compute cycle provider
- An enterprise configures internal & external resources to support eBusiness workload

Grid Computing

The Grid: A Brief History
- Early 90s
  - Gigabit testbeds, metacomputing
- Mid to late 90s
  - Early experiments (e.g., I-WAY), academic software projects (e.g., Globus, Legion), application experiments
- 2002
  - Dozens of application communities & projects
  - Major infrastructure deployments
  - Significant technology base (esp. Globus Toolkit™)
  - Growing industrial interest
  - Global Grid Forum: ~500 people, 20+ countries

Challenging Technical Requirements
- Dynamic formation and management of virtual organizations
- Online negotiation of access to services: who, what, why, when, how
- Establishment of applications and systems able to deliver multiple qualities of service
- Autonomic management of infrastructure elements
Open Grid Services Architecture: http://www.globus.org/ogsa

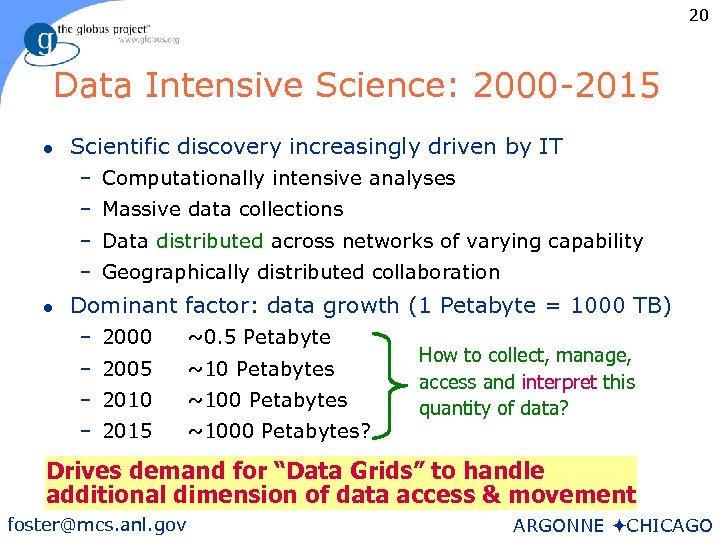

Data Intensive Science: 2000-2015
- Scientific discovery increasingly driven by IT
  - Computationally intensive analyses
  - Massive data collections
  - Data distributed across networks of varying capability
  - Geographically distributed collaboration
- Dominant factor: data growth (1 Petabyte = 1000 TB)
  - 2000: ~0.5 Petabyte
  - 2005: ~10 Petabytes
  - 2010: ~100 Petabytes
  - 2015: ~1000 Petabytes?
- How to collect, manage, access and interpret this quantity of data?
- Drives demand for “Data Grids” to handle additional dimension of data access & movement

Data Grid Projects
- Particle Physics Data Grid (US, DOE)
  - Data Grid applications for HENP expts.
- GriPhyN (US, NSF)
  - Petascale Virtual-Data Grids
- iVDGL (US, NSF)
  - Global Grid lab
- TeraGrid (US, NSF)
  - Dist. supercomp. resources (13 TFlops)
- European Data Grid (EU, EC)
  - Data Grid technologies, EU deployment
- CrossGrid (EU, EC)
  - Data Grid technologies, EU emphasis
- DataTAG (EU, EC)
  - Transatlantic network, Grid applications
- Japanese Grid Projects (APGrid) (Japan)
  - Grid deployment throughout Japan
Common features:
- Collaborations of application scientists & computer scientists
- Infrastructure deployment
- Globus devel. & based

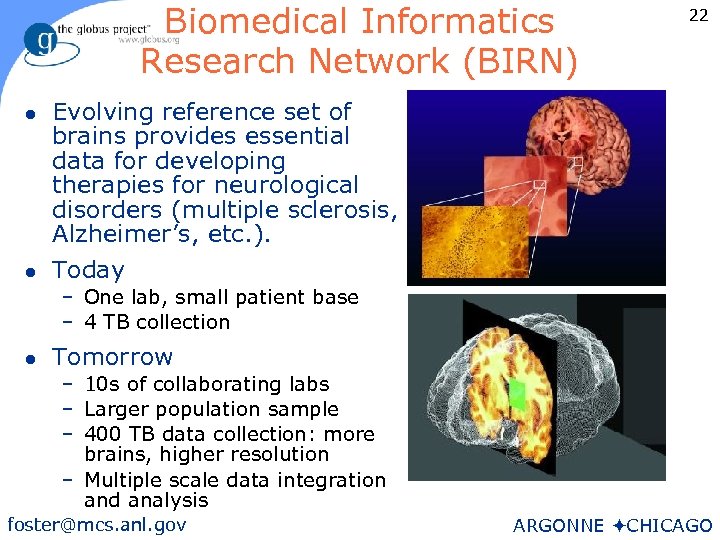

Biomedical Informatics Research Network (BIRN)
- Evolving reference set of brains provides essential data for developing therapies for neurological disorders (multiple sclerosis, Alzheimer’s, etc.).
- Today
  - One lab, small patient base
  - 4 TB collection
- Tomorrow
  - 10s of collaborating labs
  - Larger population sample
  - 400 TB data collection: more brains, higher resolution
  - Multiple scale data integration and analysis

GriPhyN = App. Science + CS + Grids
- GriPhyN = Grid Physics Network
  - US-CMS: High Energy Physics
  - US-ATLAS: High Energy Physics
  - LIGO/LSC: Gravity wave research
  - SDSS: Sloan Digital Sky Survey
  - Strong partnership with computer scientists
- Design and implement production-scale grids
  - Develop common infrastructure, tools and services
  - Integration into the 4 experiments
  - Application to other sciences via “Virtual Data Toolkit”
- Multi-year project
  - R&D for grid architecture (funded at $11.9M + $1.6M)
  - Integrate Grid infrastructure into experiments through VDT

GriPhyN Institutions
- U Florida
- U Penn
- U Chicago
- U Texas, Brownsville
- Boston U
- U Wisconsin, Milwaukee
- Caltech
- UC Berkeley
- U Wisconsin, Madison
- UC San Diego
- USC/ISI
- Harvard
- San Diego Supercomputer Center
- Indiana
- Lawrence Berkeley Lab
- Johns Hopkins
- Argonne
- Northwestern
- Fermilab
- Stanford
- Brookhaven
- U Illinois at Chicago

GriPhyN: PetaScale Virtual Data Grids
(Architecture diagram; recovered labels)
- Users: Production Team, Individual Investigator, Workgroups
- Interactive User Tools
- Virtual Data Tools; Request Planning & Scheduling Tools; Request Execution & Management Tools
- Resource Management Services; Security and Policy Services; Other Grid Services
- Transforms; Raw data source; Distributed resources (code, storage, CPUs, networks)
- Scale: ~1 Petaop/s, ~100 Petabytes

GriPhyN Research Agenda
- Virtual Data technologies
  - Derived data, calculable via algorithm
  - Instantiated 0, 1, or many times (e.g., caches)
  - “Fetch value” vs. “execute algorithm”
  - Potentially complex (versions, cost calculation, etc.)
- E.g., LIGO: “Get gravitational strain for 2 minutes around 200 gamma-ray bursts over last year”
- For each requested data value, need to
  - Locate item materialization, location, and algorithm
  - Determine costs of fetching vs. calculating
  - Plan data movements, computations to obtain results
  - Execute the plan
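
The “fetch value” vs. “execute algorithm” choice can be illustrated with a toy planner. This is a minimal sketch under stated assumptions, not GriPhyN code: the `Replica` and `VirtualDataItem` classes, the `plan` function, and the single scalar cost are all hypothetical, and a real planner would also weigh policies, versions, and data movement.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Replica:
    """One existing materialization of a data item (hypothetical model)."""
    site: str
    fetch_cost: float  # estimated cost of moving this copy to the requester


@dataclass(frozen=True)
class VirtualDataItem:
    """A derived data item that may be instantiated 0, 1, or many times."""
    name: str
    replicas: Tuple[Replica, ...] = ()
    compute_cost: Optional[float] = None  # None means no known algorithm


def plan(item: VirtualDataItem) -> Tuple[str, Optional[str], float]:
    """Return (action, site, cost), picking the cheaper of fetching or computing."""
    # Locate the cheapest existing materialization, if any.
    best = min(item.replicas, key=lambda r: r.fetch_cost, default=None)
    if best is None and item.compute_cost is None:
        raise LookupError(f"{item.name}: no replica and no algorithm")
    # Fetch when a copy exists and is no more expensive than recomputing.
    if best is not None and (item.compute_cost is None
                             or best.fetch_cost <= item.compute_cost):
        return ("fetch", best.site, best.fetch_cost)
    return ("compute", None, item.compute_cost)


strain = VirtualDataItem(
    name="strain-segment",
    replicas=(Replica("archive", 5.0), Replica("regional-cache", 3.0)),
    compute_cost=4.0,
)
print(plan(strain))
```

With these made-up costs the planner fetches from the regional cache; removing both replicas would make it fall back to computing the item.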

Virtual Data in Action
- Data request may
  - Compute locally
  - Compute remotely
  - Access local data
  - Access remote data
- Scheduling based on
  - Local policies
  - Global policies
  - Cost
(Diagram labels: Major facilities, archives; Regional facilities, caches; Local facilities, caches; Fetch item)

GriPhyN Research Agenda (cont.)
- Execution management
  - Co-allocation (CPU, storage, network transfers)
  - Fault tolerance, error reporting
  - Interaction, feedback to planning
- Performance analysis (with PPDG)
  - Instrumentation, measurement of all components
  - Understand and optimize grid performance
- Virtual Data Toolkit (VDT)
  - VDT = virtual data services + virtual data tools
  - One of the primary deliverables of R&D effort
  - Technology transfer to other scientific domains

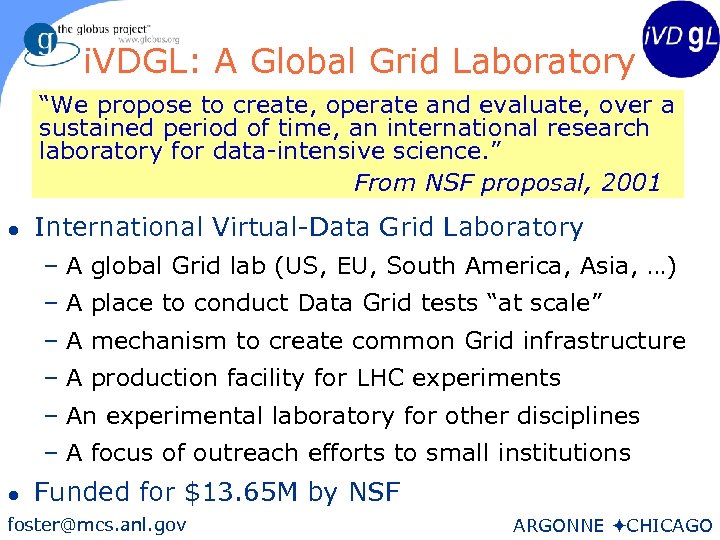

iVDGL: A Global Grid Laboratory
“We propose to create, operate and evaluate, over a sustained period of time, an international research laboratory for data-intensive science.” (From NSF proposal, 2001)
- International Virtual-Data Grid Laboratory
  - A global Grid lab (US, EU, South America, Asia, …)
  - A place to conduct Data Grid tests “at scale”
  - A mechanism to create common Grid infrastructure
  - A production facility for LHC experiments
  - An experimental laboratory for other disciplines
  - A focus of outreach efforts to small institutions
- Funded for $13.65M by NSF

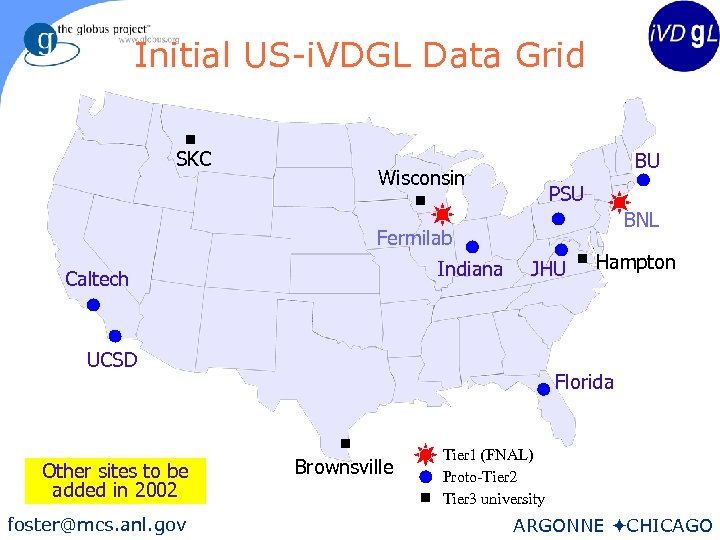

Initial US-iVDGL Data Grid
(Map; sites shown: SKC, BU, Wisconsin, PSU, BNL, Fermilab, Indiana, Caltech, JHU, UCSD, Hampton, Florida, Brownsville)
- Legend: Tier 1 (FNAL), Proto-Tier 2, Tier 3 university
- Other sites to be added in 2002

iVDGL Map (2002-2003)
(Map labels: Surfnet, DataTAG)
- Later: Brazil, Pakistan, Russia, China
- Legend: Tier 0/1 facility, Tier 2 facility, Tier 3 facility; 10 Gbps link, 2.5 Gbps link, 622 Mbps link, Other link

Programs as Community Resources: Data Derivation and Provenance
- Most scientific data are not simple “measurements”; essentially all are:
  - Computationally corrected/reconstructed
  - And/or produced by numerical simulation
- And thus, as data and computers become ever larger and more expensive:
  - Programs are significant community resources
  - So are the executions of those programs

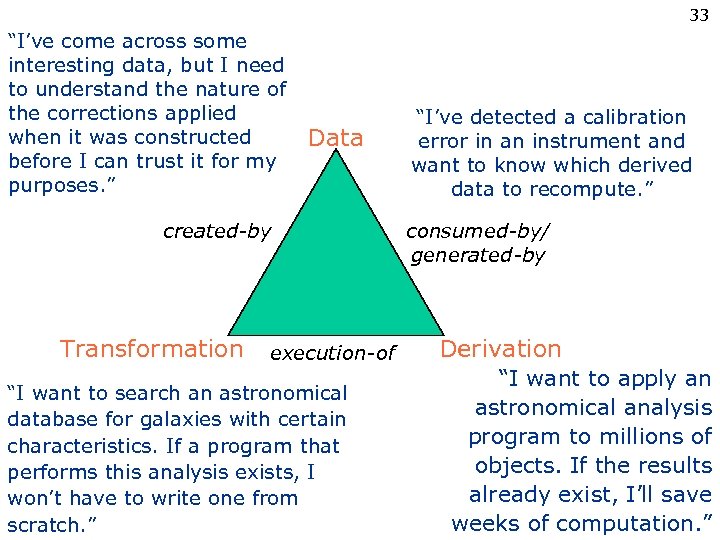

(Diagram: Data, Transformation, and Derivation, linked by the relationships created-by, execution-of, and consumed-by/generated-by)
- “I’ve come across some interesting data, but I need to understand the nature of the corrections applied when it was constructed before I can trust it for my purposes.”
- “I want to search an astronomical database for galaxies with certain characteristics. If a program that performs this analysis exists, I won’t have to write one from scratch.”
- “I’ve detected a calibration error in an instrument and want to know which derived data to recompute.”
- “I want to apply an astronomical analysis program to millions of objects. If the results already exist, I’ll save weeks of computation.”

The Chimera Virtual Data System (GriPhyN Project)
- Virtual data catalog
  - Transformations, derivations, data
- Virtual data language
  - Data definition + query
- Applications include browsers and data analysis applications
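
To make the catalog idea concrete, here is a toy in-memory model of derivations and a query for the calibration-error scenario quoted on the previous slide ("which derived data to recompute"). It is a sketch only: the `Derivation` record, the `stale_outputs` function, and the dataset names are hypothetical and do not reflect Chimera's actual schema or virtual data language.

```python
from collections import deque
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set, Tuple


@dataclass(frozen=True)
class Derivation:
    """One recorded execution of a transformation (hypothetical record)."""
    transformation: str
    inputs: Tuple[str, ...]   # data consumed by this execution
    outputs: Tuple[str, ...]  # data generated by this execution


def stale_outputs(derivations: Iterable[Derivation], changed: str) -> Set[str]:
    """Return every data item derived, directly or indirectly, from `changed`."""
    # Index derivations by the data items they consume.
    consumers: Dict[str, List[Derivation]] = {}
    for d in derivations:
        for name in d.inputs:
            consumers.setdefault(name, []).append(d)

    # Breadth-first walk along consumed-by / generated-by links.
    stale: Set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for d in consumers.get(current, []):
            for out in d.outputs:
                if out not in stale:
                    stale.add(out)
                    queue.append(out)
    return stale


catalog = [
    Derivation("calibrate", ("raw_images",), ("calibrated_images",)),
    Derivation("find_galaxies", ("calibrated_images",), ("galaxy_catalog",)),
    Derivation("calibrate", ("other_raw",), ("other_calibrated",)),
]
print(sorted(stale_outputs(catalog, "raw_images")))
```

The same index can be read in the other direction to ask how a given data item was produced, which is the "nature of the corrections applied" question.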

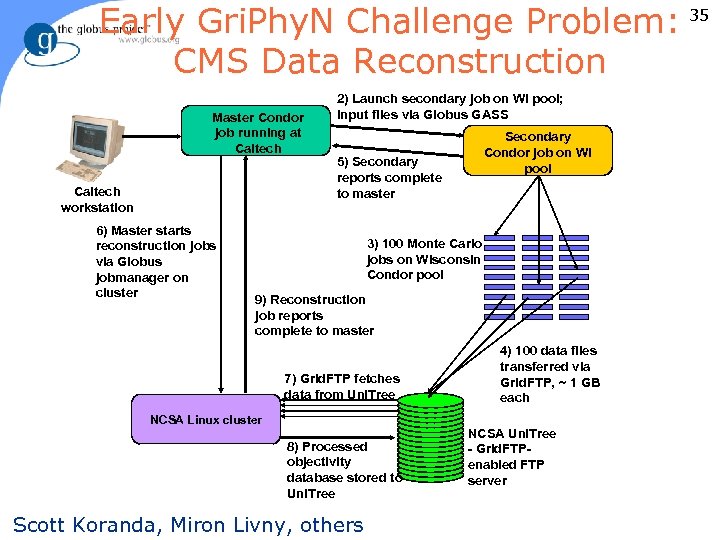

Early GriPhyN Challenge Problem: CMS Data Reconstruction
(Scott Koranda, Miron Livny, others)
Master Condor job running at Caltech workstation
2) Launch secondary job on WI pool; input files via Globus GASS
3) 100 Monte Carlo jobs on Wisconsin Condor pool
4) 100 data files transferred via GridFTP, ~1 GB each
5) Secondary reports complete to master
6) Master starts reconstruction jobs via Globus jobmanager on cluster
7) GridFTP fetches data from UniTree
8) Processed Objectivity database stored to UniTree
9) Reconstruction job reports complete to master
(Diagram components: Caltech workstation; Secondary Condor job on WI pool; NCSA Linux cluster; NCSA UniTree, a GridFTP-enabled FTP server)

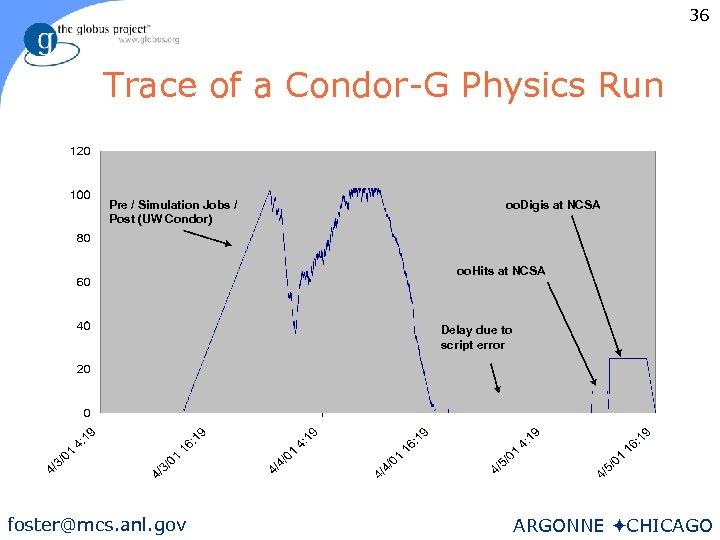

Trace of a Condor-G Physics Run
(Figure labels: Pre / Simulation Jobs / Post (UW Condor); ooHits at NCSA; ooDigis at NCSA; Delay due to script error)

Knowledge-based Data Grid Roadmap (Reagan Moore, SDSC)
(Diagram: Ingest Services, Management, and Access Services at three levels; recovered labels)
- Knowledge: Relationships Between Concepts; Knowledge Repository for Rules; Knowledge or Topic-Based Query / Browse. Other labels: Rules - KQL, XTM DTD
- Information: Attributes, Semantics; Information Repository; Attribute-based Query. Other labels: SDLIP, XML DTD, (Model-based Access)
- Data: Fields, Containers, Folders; Storage (Replicas, Persistent IDs); Feature-based Query. Other labels: Grids, MCAT/HDF, (Data Handling System)

New Programs
- U.K. eScience program
- EU 6th Framework
- U.S. Committee on Cyberinfrastructure
- Japanese Grid initiative

U.S. Cyberinfrastructure: Draft Recommendations
- New INITIATIVE to revolutionize science and engineering research at NSF and worldwide to capitalize on new computing and communications opportunities
- 21st Century Cyberinfrastructure includes supercomputing, but also massive storage, networking, software, collaboration, visualization, and human resources
  - Current centers (NCSA, SDSC, PSC) are a key resource for the INITIATIVE
  - Budget estimate: incremental $650 M/year (continuing)
- An INITIATIVE OFFICE with a highly placed, credible leader empowered to
  - Initiate competitive, discipline-driven path-breaking applications within NSF of cyberinfrastructure which contribute to the shared goals of the INITIATIVE
  - Coordinate policy and allocations across fields and projects. Participants across NSF directorates, Federal agencies, and international e-science
  - Develop high quality middleware and other software that is essential and special to scientific research
  - Manage individual computational, storage, and networking resources at least 100x larger than individual projects or universities can provide.

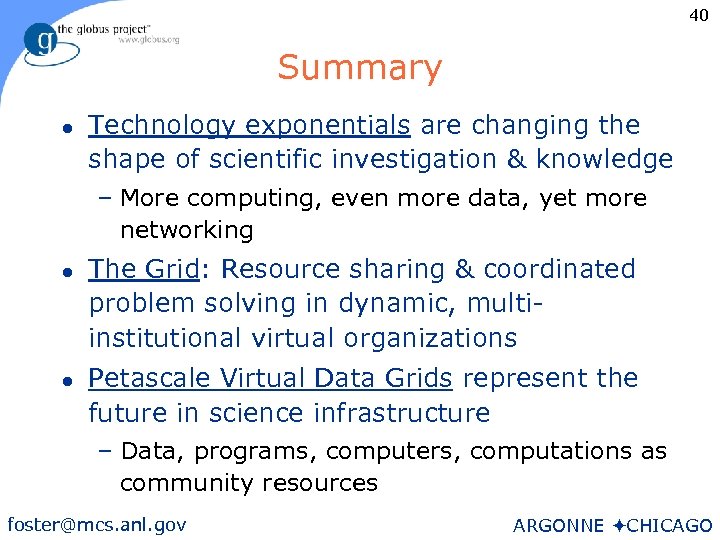

Summary
- Technology exponentials are changing the shape of scientific investigation & knowledge
  - More computing, even more data, yet more networking
- The Grid: Resource sharing & coordinated problem solving in dynamic, multi-institutional virtual organizations
- Petascale Virtual Data Grids represent the future in science infrastructure
  - Data, programs, computers, computations as community resources

For More Information
- Grid Book: www.mkp.com/grids
- The Globus Project™: www.globus.org
- Global Grid Forum: www.gridforum.org
- TeraGrid: www.teragrid.org
- EU DataGrid: www.eu-datagrid.org
- GriPhyN: www.griphyn.org
- iVDGL: www.ivdgl.org
- Background papers: www.mcs.anl.gov/~foster
