413d42d8b0d6a4512d50f86157677086.ppt

- Количество слайдов: 43

Data Flow and SDLC HUSH via Firm Solutions, Inc. Virginia Mushkatblat copyright

Data Flow and SDLC HUSH via Firm Solutions, Inc. Virginia Mushkatblat copyright

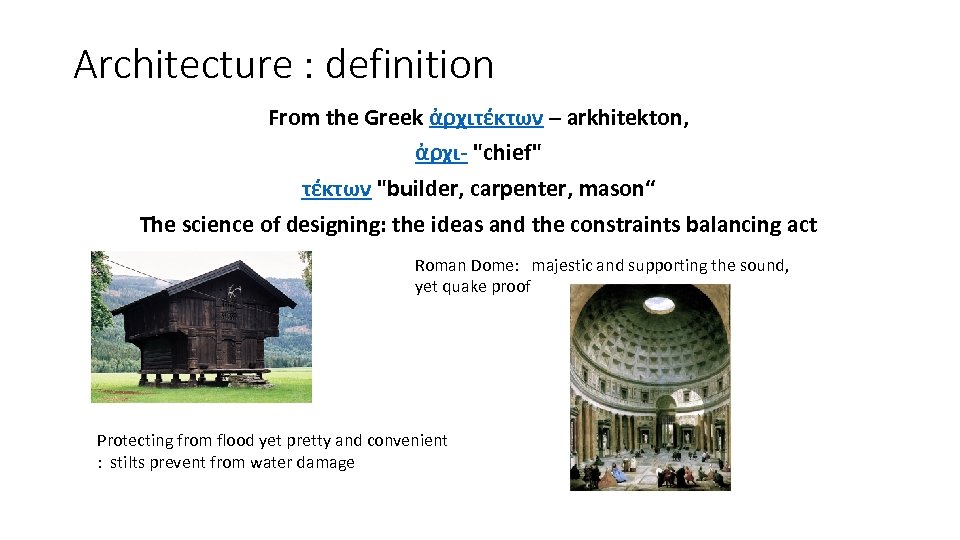

Architecture : definition From the Greek ἀρχιτέκτων – arkhitekton, ἀρχι- "chief" τέκτων "builder, carpenter, mason“ The science of designing: the ideas and the constraints balancing act Roman Dome: majestic and supporting the sound, yet quake proof Protecting from flood yet pretty and convenient : stilts prevent from water damage

Architecture : definition From the Greek ἀρχιτέκτων – arkhitekton, ἀρχι- "chief" τέκτων "builder, carpenter, mason“ The science of designing: the ideas and the constraints balancing act Roman Dome: majestic and supporting the sound, yet quake proof Protecting from flood yet pretty and convenient : stilts prevent from water damage

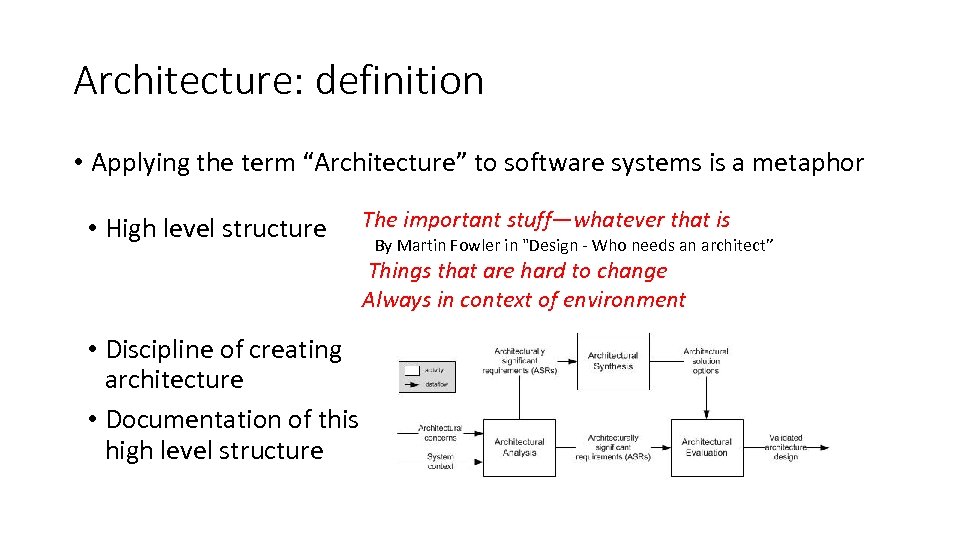

Architecture: definition • Applying the term “Architecture” to software systems is a metaphor • High level structure The important stuff—whatever that is By Martin Fowler in "Design - Who needs an architect” Things that are hard to change Always in context of environment • Discipline of creating architecture • Documentation of this high level structure

Architecture: definition • Applying the term “Architecture” to software systems is a metaphor • High level structure The important stuff—whatever that is By Martin Fowler in "Design - Who needs an architect” Things that are hard to change Always in context of environment • Discipline of creating architecture • Documentation of this high level structure

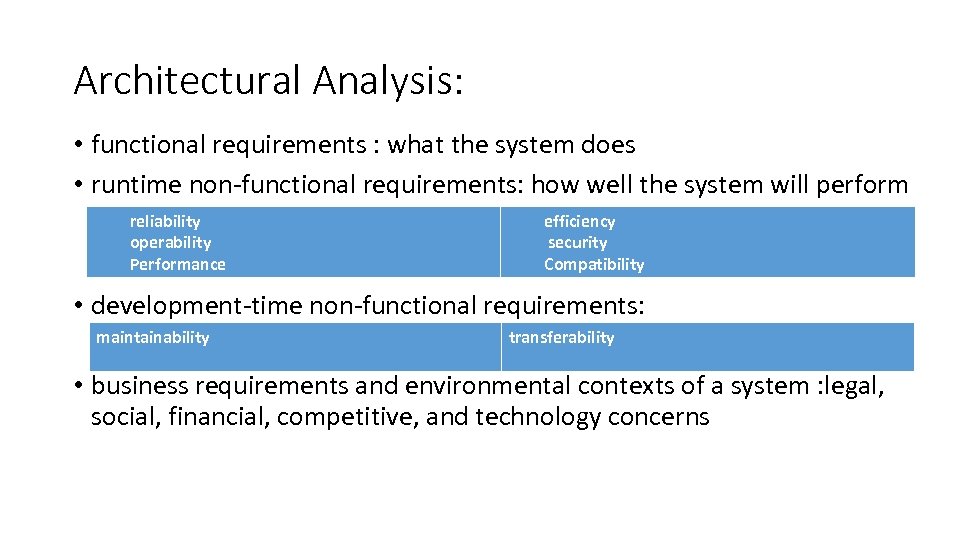

Architectural Analysis: • functional requirements : what the system does • runtime non-functional requirements: how well the system will perform reliability operability Performance efficiency security Compatibility • development-time non-functional requirements: maintainability transferability • business requirements and environmental contexts of a system : legal, social, financial, competitive, and technology concerns

Architectural Analysis: • functional requirements : what the system does • runtime non-functional requirements: how well the system will perform reliability operability Performance efficiency security Compatibility • development-time non-functional requirements: maintainability transferability • business requirements and environmental contexts of a system : legal, social, financial, competitive, and technology concerns

Different Companies – Different Needs • Start Ups • New Development. No data to start with. • Developed Organizations • New Development. Data Already Exists. New data and existing Data Feeds. • Continuous Development. A lot of Data in the System. • Maintenance Stage. Migrations.

Different Companies – Different Needs • Start Ups • New Development. No data to start with. • Developed Organizations • New Development. Data Already Exists. New data and existing Data Feeds. • Continuous Development. A lot of Data in the System. • Maintenance Stage. Migrations.

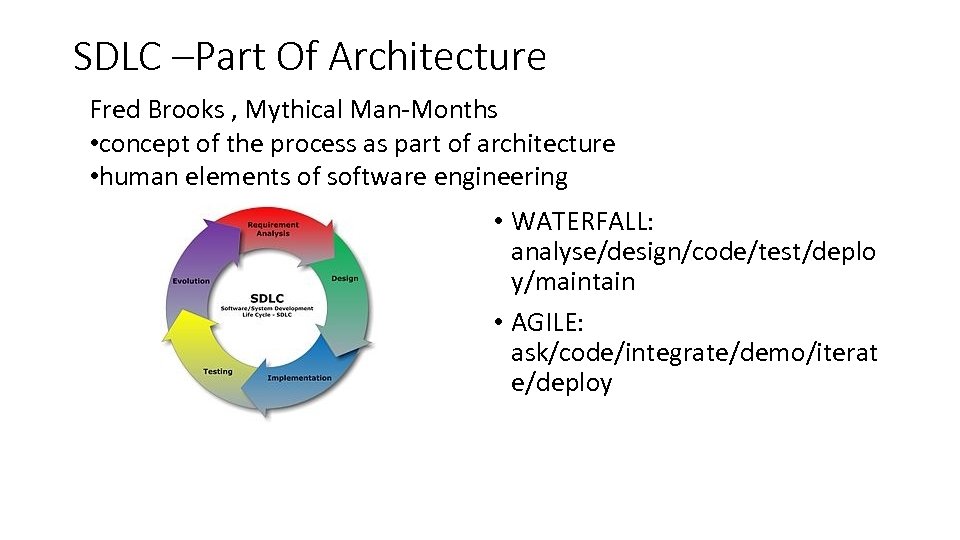

SDLC –Part Of Architecture Fred Brooks , Mythical Man-Months • concept of the process as part of architecture • human elements of software engineering • WATERFALL: analyse/design/code/test/deplo y/maintain • AGILE: ask/code/integrate/demo/iterat e/deploy

SDLC –Part Of Architecture Fred Brooks , Mythical Man-Months • concept of the process as part of architecture • human elements of software engineering • WATERFALL: analyse/design/code/test/deplo y/maintain • AGILE: ask/code/integrate/demo/iterat e/deploy

New Development • When we start from Scratch, there is nothing and we can initially treat everything as if databases were code.

New Development • When we start from Scratch, there is nothing and we can initially treat everything as if databases were code.

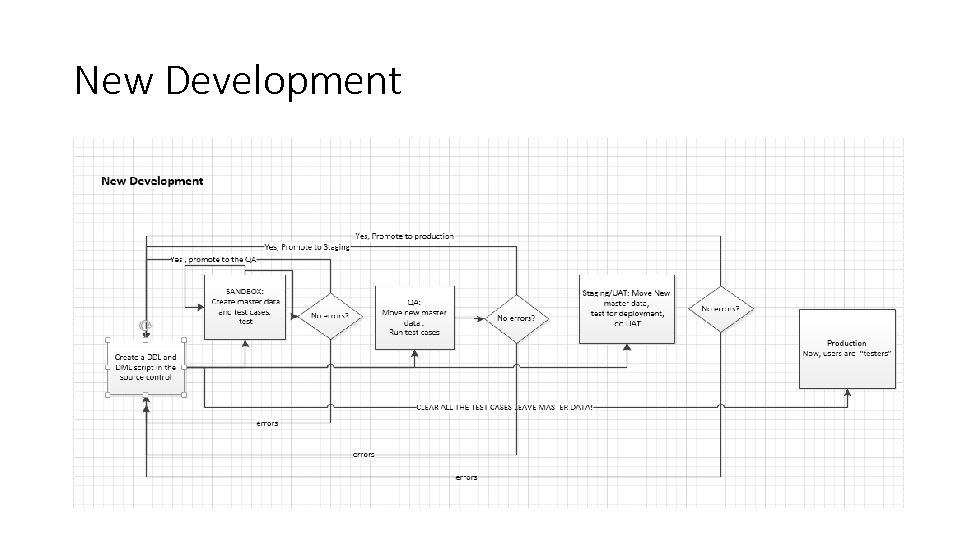

New Development

New Development

To Infinity And Beyond : in Production • Big day being behind, we are in production q Lots of transactions q Database size reaches GB, TB, PT – think Amazon, we all want our business be there q We scale in various ways, yet the CRUD logic is the same q. Master data matures and gets into DB via GUI

To Infinity And Beyond : in Production • Big day being behind, we are in production q Lots of transactions q Database size reaches GB, TB, PT – think Amazon, we all want our business be there q We scale in various ways, yet the CRUD logic is the same q. Master data matures and gets into DB via GUI

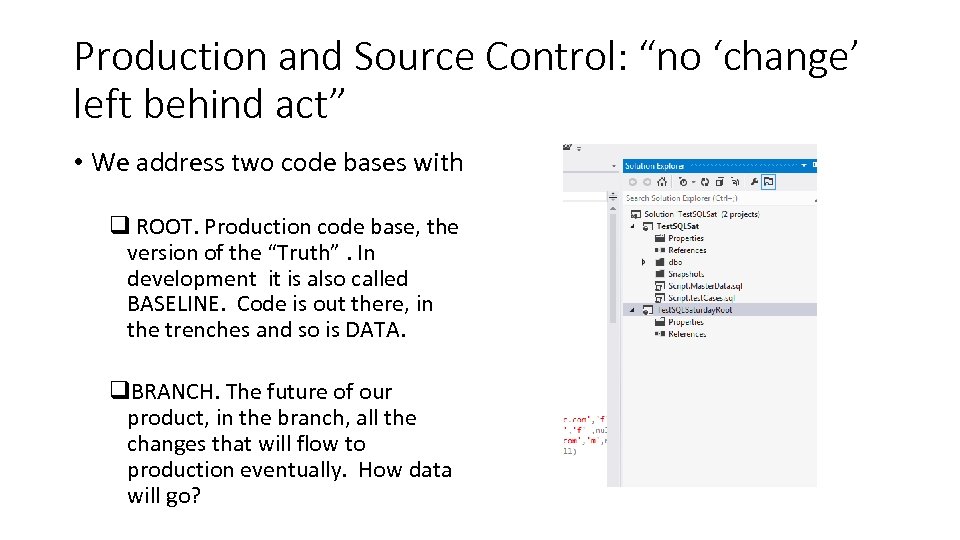

Production and Source Control: “no ‘change’ left behind act” • We address two code bases with q ROOT. Production code base, the version of the “Truth”. In development it is also called BASELINE. Code is out there, in the trenches and so is DATA. q. BRANCH. The future of our product, in the branch, all the changes that will flow to production eventually. How data will go?

Production and Source Control: “no ‘change’ left behind act” • We address two code bases with q ROOT. Production code base, the version of the “Truth”. In development it is also called BASELINE. Code is out there, in the trenches and so is DATA. q. BRANCH. The future of our product, in the branch, all the changes that will flow to production eventually. How data will go?

Problem child of the builds: DATA A couple of definitions , first: • Extreme Programming (XP) ü improve software quality and responsiveness to changing customer requirements. • Continuous integration (CI) üthe practice of merging all developer workspaces with a shared mainline several times a day. It was first named and proposed as part of extreme programming (XP). • How does data fit the process?

Problem child of the builds: DATA A couple of definitions , first: • Extreme Programming (XP) ü improve software quality and responsiveness to changing customer requirements. • Continuous integration (CI) üthe practice of merging all developer workspaces with a shared mainline several times a day. It was first named and proposed as part of extreme programming (XP). • How does data fit the process?

DATA Concepts • Master Data • It is standardized and managed ( formally or not) across enterprise • Often has external source from other governing body • It is relatively un-changeable. Change is in the context of user based transaction • Examples: Roles, Countries, Rates can be volatile, yet have to be uniform across enterprise in close to real-time • Transactional Data • User introduces transactions

DATA Concepts • Master Data • It is standardized and managed ( formally or not) across enterprise • Often has external source from other governing body • It is relatively un-changeable. Change is in the context of user based transaction • Examples: Roles, Countries, Rates can be volatile, yet have to be uniform across enterprise in close to real-time • Transactional Data • User introduces transactions

Problem child of the builds: DATA • Master data and number of test cases grows • Builds take time, either continuous or daily • Inserts, updates, deletes are physical phenomena: they take time to write to disk. • Not everyone is rich to get SSDs or Fusion IO for development environments Image circa Microsoft

Problem child of the builds: DATA • Master data and number of test cases grows • Builds take time, either continuous or daily • Inserts, updates, deletes are physical phenomena: they take time to write to disk. • Not everyone is rich to get SSDs or Fusion IO for development environments Image circa Microsoft

Solution Strategies • Constraints: • Operational requirements for qdata availability qdata consistency qperformance qdata integrity • Development Requirements for: qdevelopment time qskill sets • Environmental q. Monetary (space and processors) q. Political (we just do not want to use third party) • Architectural patterns: q. Backup/Load q. ETL Solutions (different kinds)

Solution Strategies • Constraints: • Operational requirements for qdata availability qdata consistency qperformance qdata integrity • Development Requirements for: qdevelopment time qskill sets • Environmental q. Monetary (space and processors) q. Political (we just do not want to use third party) • Architectural patterns: q. Backup/Load q. ETL Solutions (different kinds)

![Backup/Load: truncate data; refactoring USE [master] GO ALTER DATABASE [Test. SQLSat. Refactor] SET SINGLE_USER Backup/Load: truncate data; refactoring USE [master] GO ALTER DATABASE [Test. SQLSat. Refactor] SET SINGLE_USER](https://present5.com/presentation/413d42d8b0d6a4512d50f86157677086/image-15.jpg) Backup/Load: truncate data; refactoring USE [master] GO ALTER DATABASE [Test. SQLSat. Refactor] SET SINGLE_USER WITH ROLLBACK IMMEDIATE GO RESTORE DATABASE [Test. SQLSat. Refactor] FROM DISK = N'C: Program FilesMicrosoft SQL ServerMSSQL 10. MSSQLSERVERMSSQL BackupTest. SQLSat. bak' WITH MOVE 'Test. SQLSat' TO 'C: Program FilesMicrosoft SQL Server MSSQL 10. MSSQLSERVERMSSQLDATATest. SQLSat. Refactor. mdf', MOVE 'Test. SQLSat_log' TO 'C: Program FilesMicrosoft SQL ServerMSSQL 10. MSSQLSERVER MSSQLDATATest. SQLSat. Refactor_log. ldf' , FILE = 1, NOUNLOAD, REPLACE, STATS = 10; GO

Backup/Load: truncate data; refactoring USE [master] GO ALTER DATABASE [Test. SQLSat. Refactor] SET SINGLE_USER WITH ROLLBACK IMMEDIATE GO RESTORE DATABASE [Test. SQLSat. Refactor] FROM DISK = N'C: Program FilesMicrosoft SQL ServerMSSQL 10. MSSQLSERVERMSSQL BackupTest. SQLSat. bak' WITH MOVE 'Test. SQLSat' TO 'C: Program FilesMicrosoft SQL Server MSSQL 10. MSSQLSERVERMSSQLDATATest. SQLSat. Refactor. mdf', MOVE 'Test. SQLSat_log' TO 'C: Program FilesMicrosoft SQL ServerMSSQL 10. MSSQLSERVER MSSQLDATATest. SQLSat. Refactor_log. ldf' , FILE = 1, NOUNLOAD, REPLACE, STATS = 10; GO

Perils of environments: • Data homogeneity across environments. How close should environments be in terms of data? • The sandbox and integration environments hold the least amount of data. A rule of thumb: data set sufficient enough for developing functional requirements. Pros: speeds up development, cons: can’t accommodate all the test cases and needs constant data set assessments. • The QA/Staging should hold complete data set to allow for UAT and for performance and regression testing. • Break Fix environment holds data set and schema as close to production as possible to allow for speedy production issues resolutions. • Physical Constraints: goes without saying. NO disk space means no disk space. • Environment SLAs: is development around the clock with international development team, 24/7 or only happens in one place with 8 hours development day/time? • Rate of refreshes: depends on whether we do continuous deployments or scheduled releases • Data retention: how much and often transactional data gets purged? • Schema management: schema/data in source control? Are deployments automated including data? Are there specific structures that support metadata?

Perils of environments: • Data homogeneity across environments. How close should environments be in terms of data? • The sandbox and integration environments hold the least amount of data. A rule of thumb: data set sufficient enough for developing functional requirements. Pros: speeds up development, cons: can’t accommodate all the test cases and needs constant data set assessments. • The QA/Staging should hold complete data set to allow for UAT and for performance and regression testing. • Break Fix environment holds data set and schema as close to production as possible to allow for speedy production issues resolutions. • Physical Constraints: goes without saying. NO disk space means no disk space. • Environment SLAs: is development around the clock with international development team, 24/7 or only happens in one place with 8 hours development day/time? • Rate of refreshes: depends on whether we do continuous deployments or scheduled releases • Data retention: how much and often transactional data gets purged? • Schema management: schema/data in source control? Are deployments automated including data? Are there specific structures that support metadata?

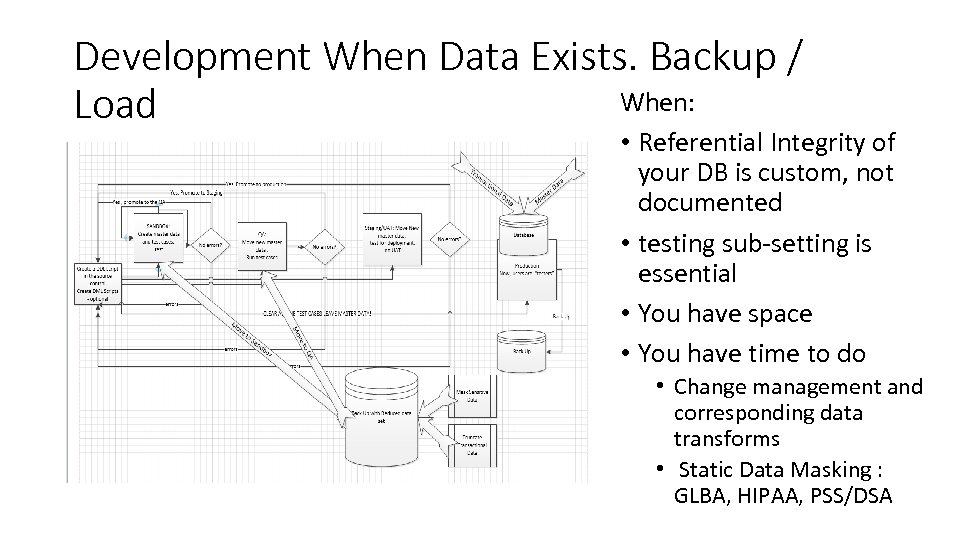

Development When Data Exists. Backup / When: Load • Referential Integrity of your DB is custom, not documented • testing sub-setting is essential • You have space • You have time to do • Change management and corresponding data transforms • Static Data Masking : GLBA, HIPAA, PSS/DSA

Development When Data Exists. Backup / When: Load • Referential Integrity of your DB is custom, not documented • testing sub-setting is essential • You have space • You have time to do • Change management and corresponding data transforms • Static Data Masking : GLBA, HIPAA, PSS/DSA

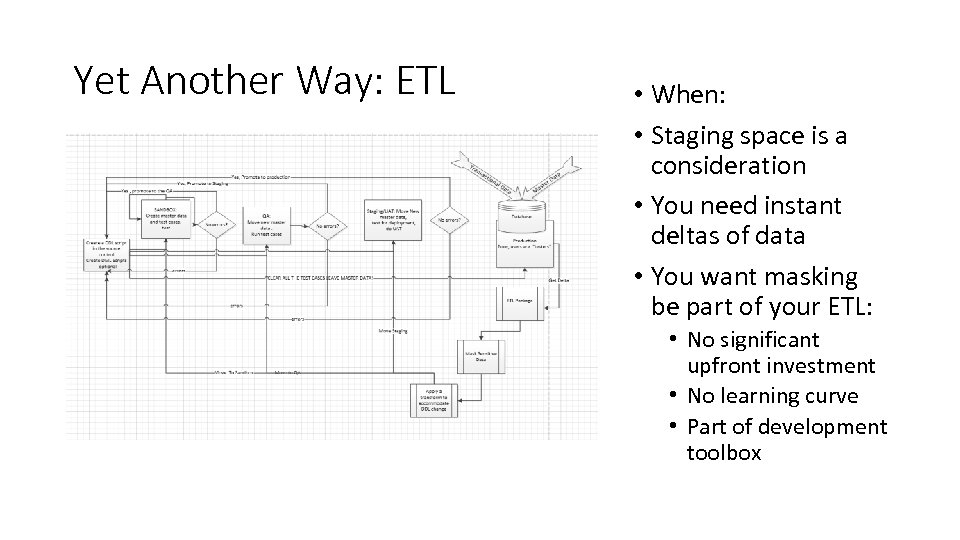

Yet Another Way: ETL • When: • Staging space is a consideration • You need instant deltas of data • You want masking be part of your ETL: • No significant upfront investment • No learning curve • Part of development toolbox

Yet Another Way: ETL • When: • Staging space is a consideration • You need instant deltas of data • You want masking be part of your ETL: • No significant upfront investment • No learning curve • Part of development toolbox

Dreaded Maintenance: Break/Fix

Dreaded Maintenance: Break/Fix

QA/Staging/Break Fix Environments !!!! BE AWARE!!!! Backup/Load takes time. Count on it. Refactoring takes time. Count on it. ETL takes time. Count on it. Masking has its own architectures. Chose the one appropriate.

QA/Staging/Break Fix Environments !!!! BE AWARE!!!! Backup/Load takes time. Count on it. Refactoring takes time. Count on it. ETL takes time. Count on it. Masking has its own architectures. Chose the one appropriate.

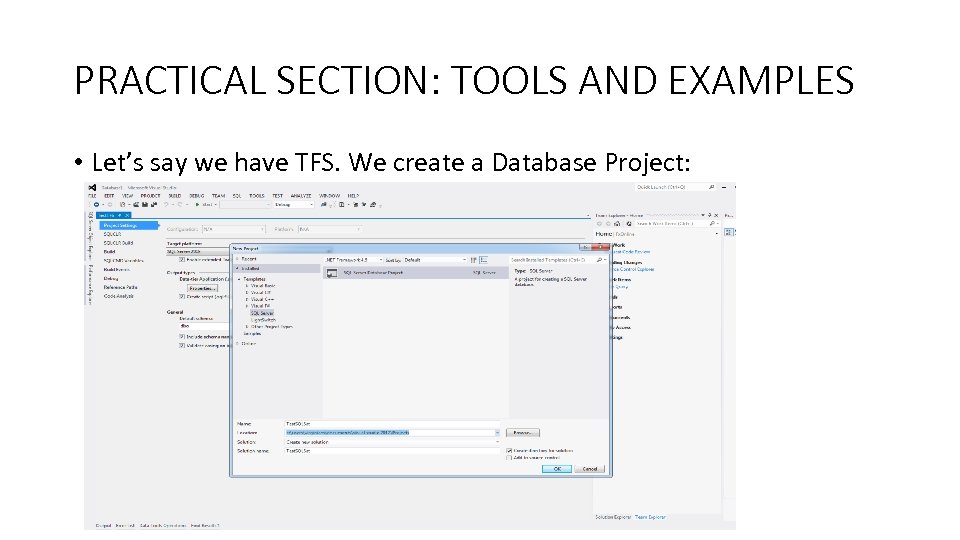

PRACTICAL SECTION: TOOLS AND EXAMPLES • Let’s say we have TFS. We create a Database Project:

PRACTICAL SECTION: TOOLS AND EXAMPLES • Let’s say we have TFS. We create a Database Project:

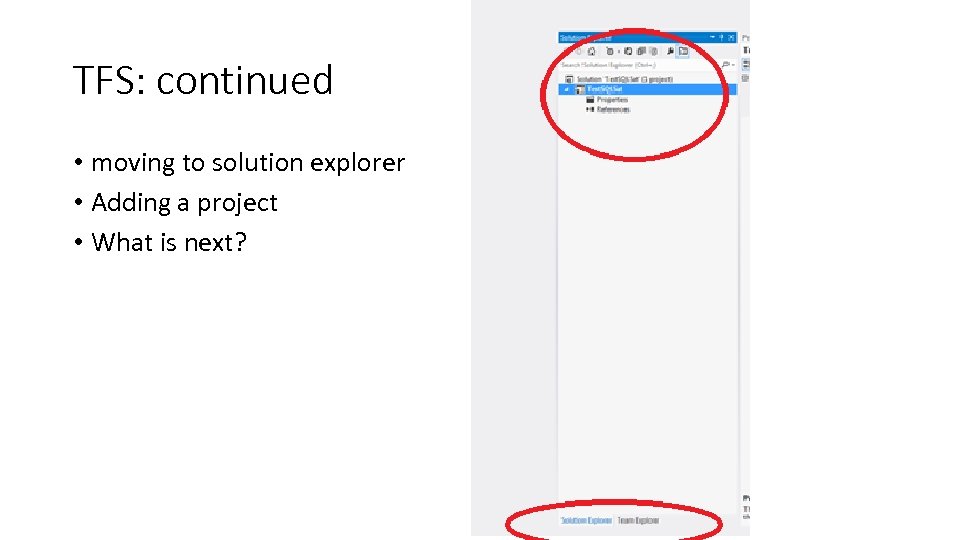

TFS: continued • moving to solution explorer • Adding a project • What is next?

TFS: continued • moving to solution explorer • Adding a project • What is next?

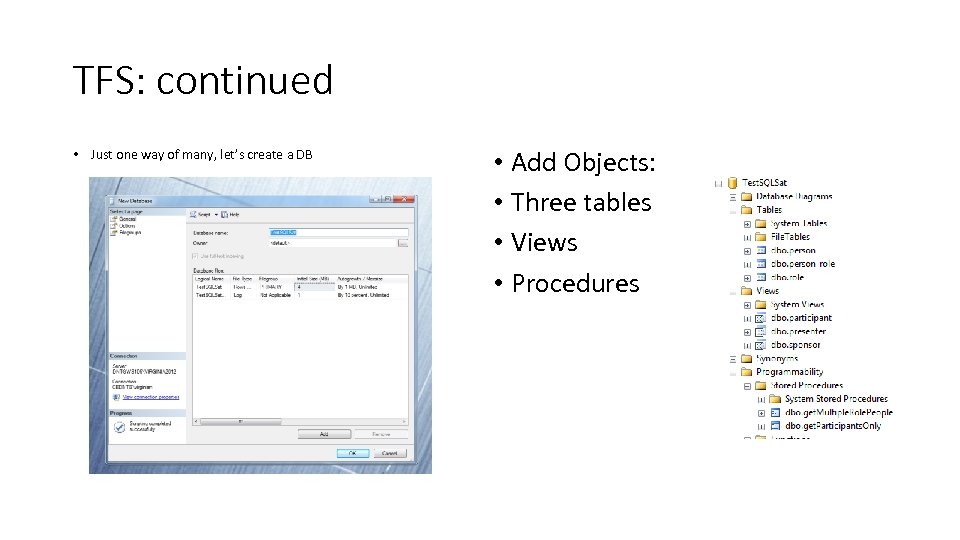

TFS: continued • Just one way of many, let’s create a DB • Add Objects: • Three tables • Views • Procedures

TFS: continued • Just one way of many, let’s create a DB • Add Objects: • Three tables • Views • Procedures

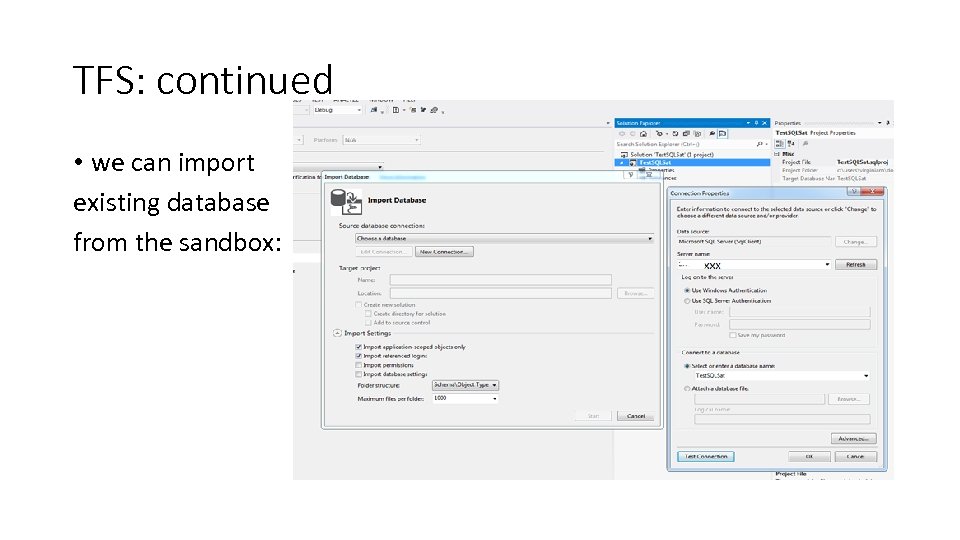

TFS: continued • we can import existing database from the sandbox:

TFS: continued • we can import existing database from the sandbox:

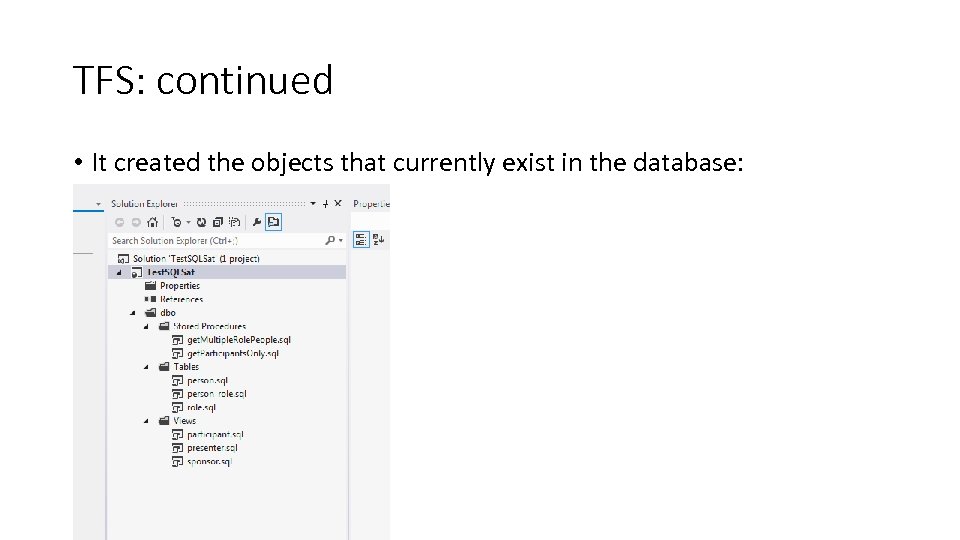

TFS: continued • It created the objects that currently exist in the database:

TFS: continued • It created the objects that currently exist in the database:

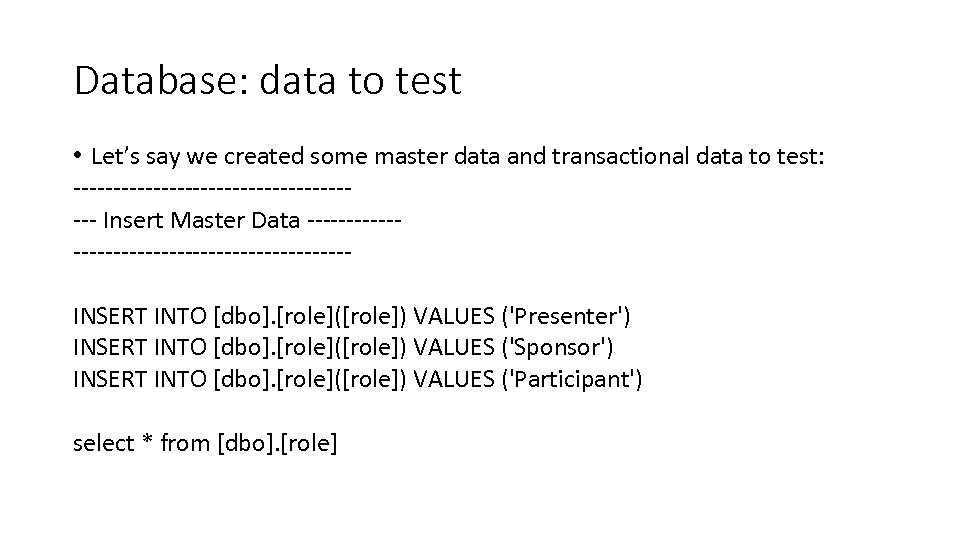

Database: data to test • Let’s say we created some master data and transactional data to test: ------------------- Insert Master Data ----------------------- INSERT INTO [dbo]. [role]([role]) VALUES ('Presenter') INSERT INTO [dbo]. [role]([role]) VALUES ('Sponsor') INSERT INTO [dbo]. [role]([role]) VALUES ('Participant') select * from [dbo]. [role]

Database: data to test • Let’s say we created some master data and transactional data to test: ------------------- Insert Master Data ----------------------- INSERT INTO [dbo]. [role]([role]) VALUES ('Presenter') INSERT INTO [dbo]. [role]([role]) VALUES ('Sponsor') INSERT INTO [dbo]. [role]([role]) VALUES ('Participant') select * from [dbo]. [role]

![Database: data to test ------------------ --- Insert Test Cases ----------------------- INSERT INTO [dbo]. [person] Database: data to test ------------------ --- Insert Test Cases ----------------------- INSERT INTO [dbo]. [person]](https://present5.com/presentation/413d42d8b0d6a4512d50f86157677086/image-27.jpg) Database: data to test ------------------ --- Insert Test Cases ----------------------- INSERT INTO [dbo]. [person] ([name], [email], [gender], [date_of_birth], [place_id]) VALUES ('Virginia M', 'genya@firmsolutionsinc. com', 'f', null) INSERT INTO [dbo]. [person] ([name], [email], [gender], [date_of_birth], [place_id]) VALUES ('Lynn Langit' , 'lynn@lynnlangit. com', 'f' , null) INSERT INTO [dbo]. [person] ([name], [email], [gender], [date_of_birth], [place_id]) VALUES ('Andrew Karcher ', 'andrew@akarcher. com', 'm', null) INSERT INTO [dbo]. [person] ([name], [email], [gender], [date_of_birth], [place_id]) VALUES ('Josh S ', 'Josh@js. com', 'm', null) --Virginia INSERT INTO [dbo]. [person_role]([person_id] , [role_id]) VALUES (1, 1) INSERT INTO [dbo]. [person_role]([person_id] , [role_id]) VALUES (1, 2) INSERT INTO [dbo]. [person_role]([person_id] , [role_id]) VALUES (1, 3) -- Lynn INSERT INTO [dbo]. [person_role]([person_id] , [role_id]) VALUES (2, 1) INSERT INTO [dbo]. [person_role]([person_id] , [role_id]) VALUES (2, 3) --Andrew INSERT INTO [dbo]. [person_role]([person_id] , [role_id]) VALUES (3, 2) INSERT INTO [dbo]. [person_role]([person_id] , [role_id]) VALUES (3, 3) --Josh INSERT INTO [dbo]. [person_role]([person_id] , [role_id]) VALUES (4, 3)

Database: data to test ------------------ --- Insert Test Cases ----------------------- INSERT INTO [dbo]. [person] ([name], [email], [gender], [date_of_birth], [place_id]) VALUES ('Virginia M', 'genya@firmsolutionsinc. com', 'f', null) INSERT INTO [dbo]. [person] ([name], [email], [gender], [date_of_birth], [place_id]) VALUES ('Lynn Langit' , 'lynn@lynnlangit. com', 'f' , null) INSERT INTO [dbo]. [person] ([name], [email], [gender], [date_of_birth], [place_id]) VALUES ('Andrew Karcher ', 'andrew@akarcher. com', 'm', null) INSERT INTO [dbo]. [person] ([name], [email], [gender], [date_of_birth], [place_id]) VALUES ('Josh S ', 'Josh@js. com', 'm', null) --Virginia INSERT INTO [dbo]. [person_role]([person_id] , [role_id]) VALUES (1, 1) INSERT INTO [dbo]. [person_role]([person_id] , [role_id]) VALUES (1, 2) INSERT INTO [dbo]. [person_role]([person_id] , [role_id]) VALUES (1, 3) -- Lynn INSERT INTO [dbo]. [person_role]([person_id] , [role_id]) VALUES (2, 1) INSERT INTO [dbo]. [person_role]([person_id] , [role_id]) VALUES (2, 3) --Andrew INSERT INTO [dbo]. [person_role]([person_id] , [role_id]) VALUES (3, 2) INSERT INTO [dbo]. [person_role]([person_id] , [role_id]) VALUES (3, 3) --Josh INSERT INTO [dbo]. [person_role]([person_id] , [role_id]) VALUES (4, 3)

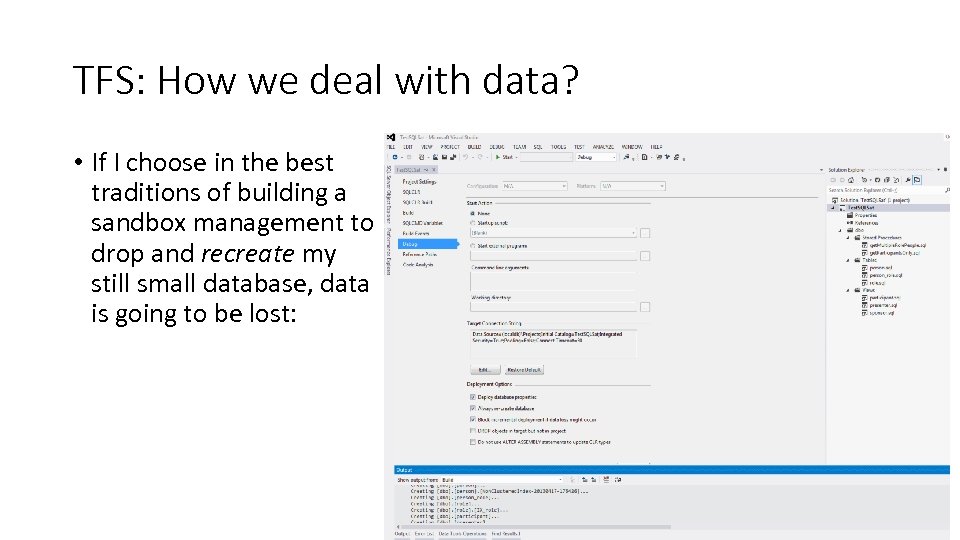

TFS: How we deal with data? • If I choose in the best traditions of building a sandbox management to drop and recreate my still small database, data is going to be lost:

TFS: How we deal with data? • If I choose in the best traditions of building a sandbox management to drop and recreate my still small database, data is going to be lost:

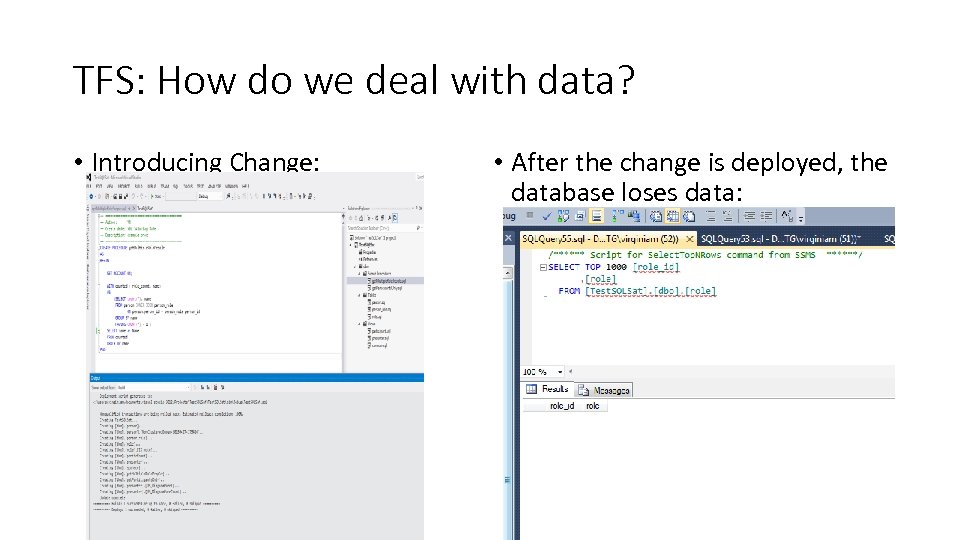

TFS: How do we deal with data? • Introducing Change: • After the change is deployed, the database loses data:

TFS: How do we deal with data? • Introducing Change: • After the change is deployed, the database loses data:

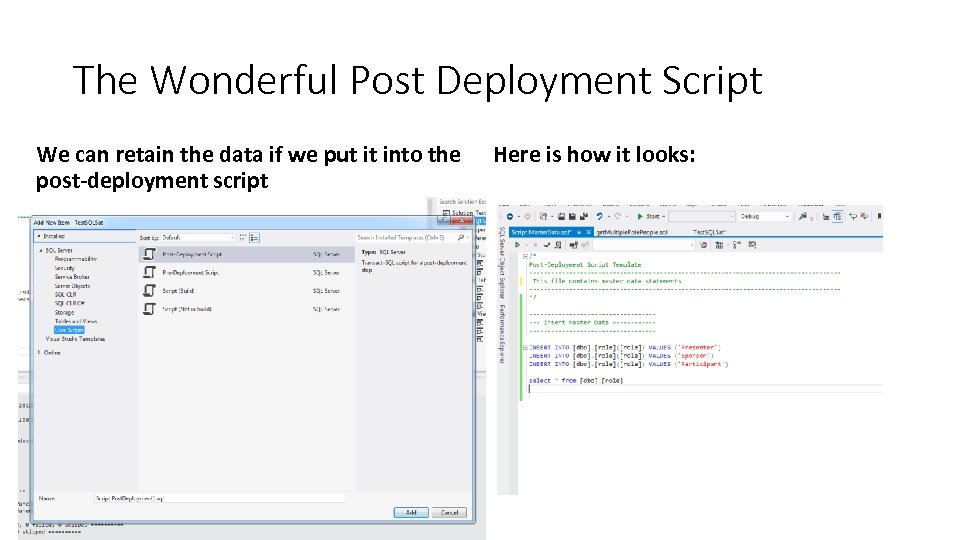

The Wonderful Post Deployment Script We can retain the data if we put it into the post-deployment script Here is how it looks:

The Wonderful Post Deployment Script We can retain the data if we put it into the post-deployment script Here is how it looks:

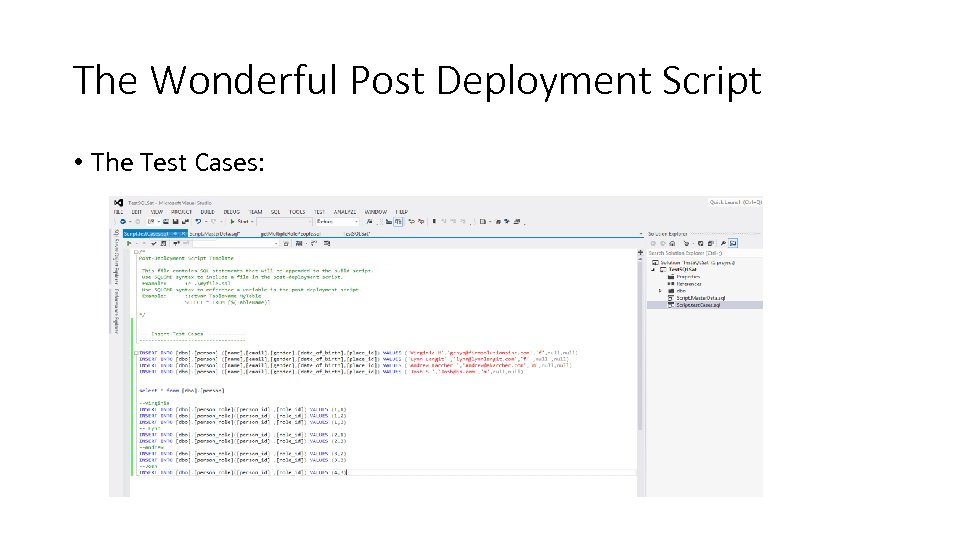

The Wonderful Post Deployment Script • The Test Cases:

The Wonderful Post Deployment Script • The Test Cases:

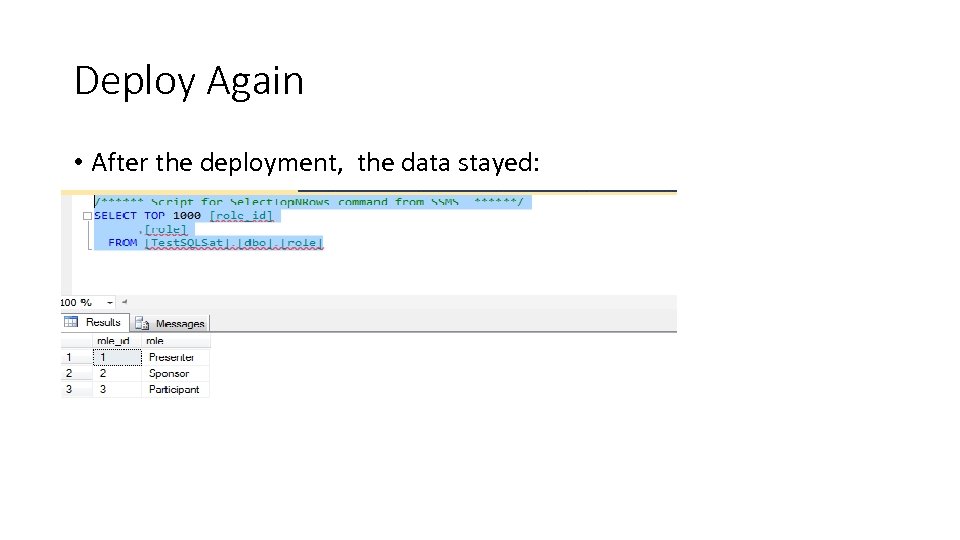

Deploy Again • After the deployment, the data stayed:

Deploy Again • After the deployment, the data stayed:

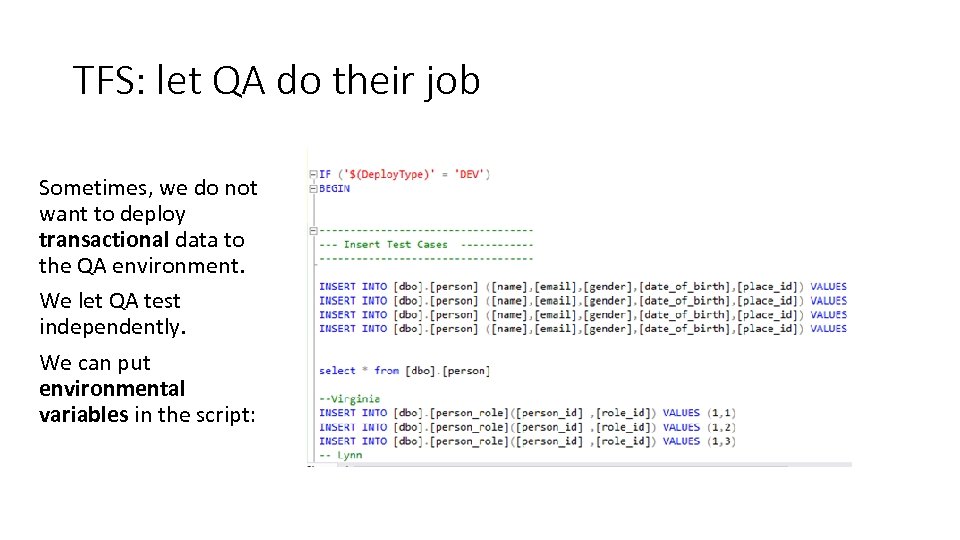

TFS: let QA do their job Sometimes, we do not want to deploy transactional data to the QA environment. We let QA test independently. We can put environmental variables in the script:

TFS: let QA do their job Sometimes, we do not want to deploy transactional data to the QA environment. We let QA test independently. We can put environmental variables in the script:

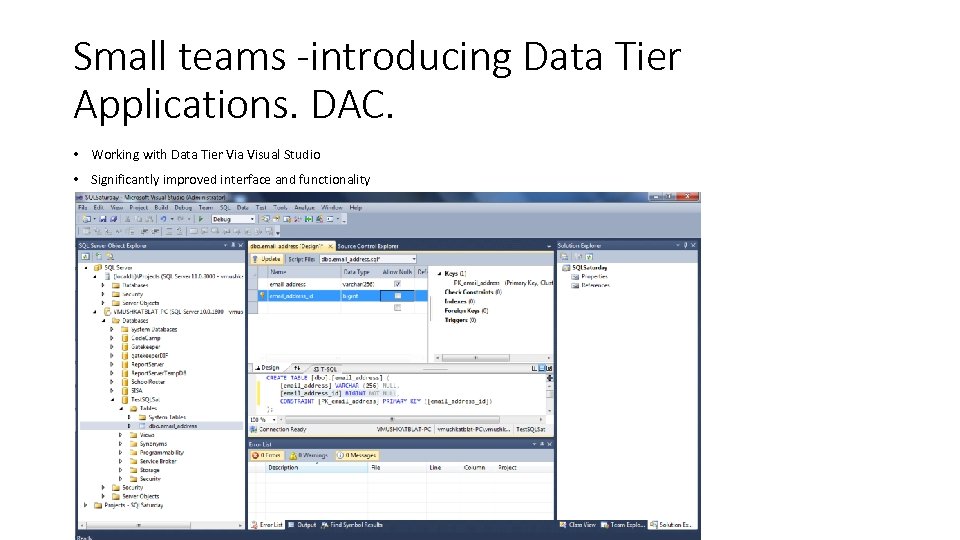

Small teams -introducing Data Tier Applications. DAC. • Working with Data Tier Via Visual Studio • Significantly improved interface and functionality

Small teams -introducing Data Tier Applications. DAC. • Working with Data Tier Via Visual Studio • Significantly improved interface and functionality

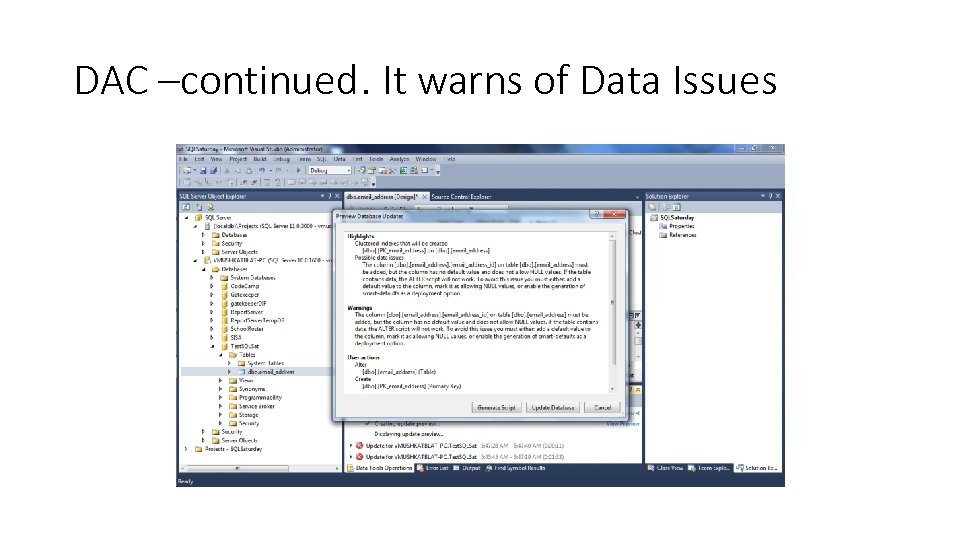

DAC –continued. It warns of Data Issues

DAC –continued. It warns of Data Issues

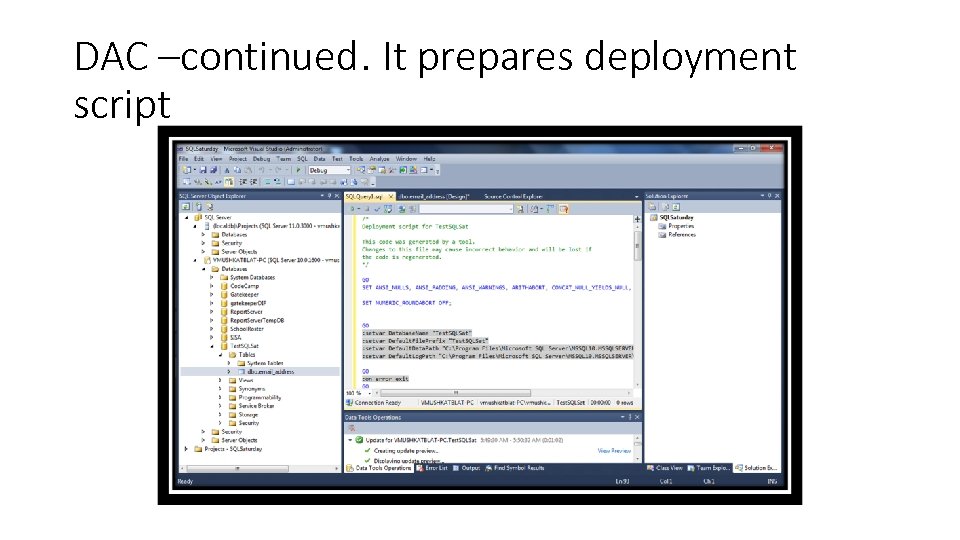

DAC –continued. It prepares deployment script

DAC –continued. It prepares deployment script

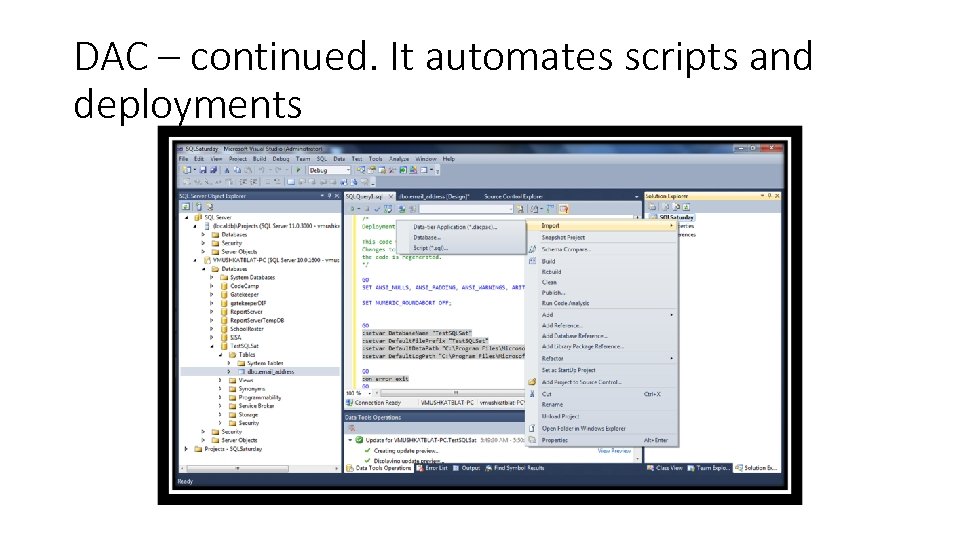

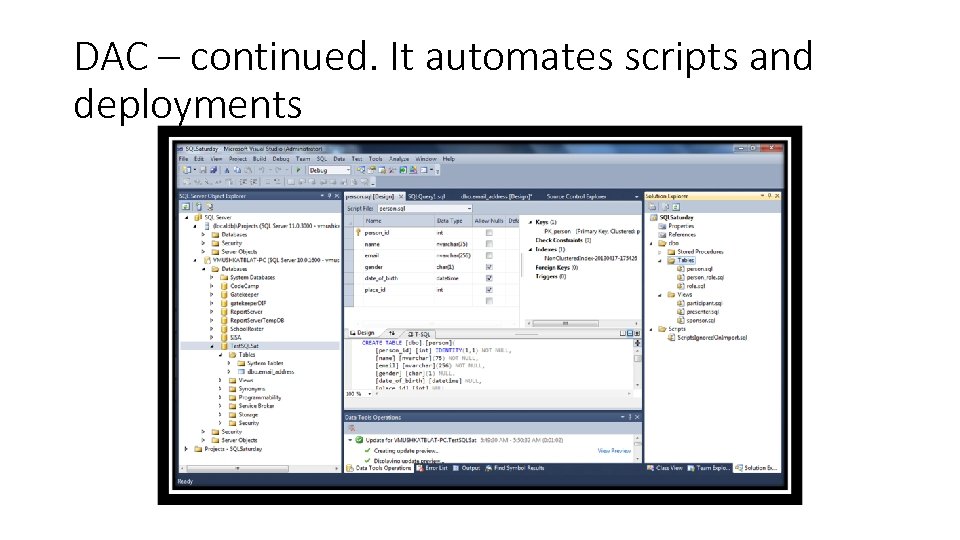

DAC – continued. It automates scripts and deployments

DAC – continued. It automates scripts and deployments

DAC – continued. It automates scripts and deployments

DAC – continued. It automates scripts and deployments

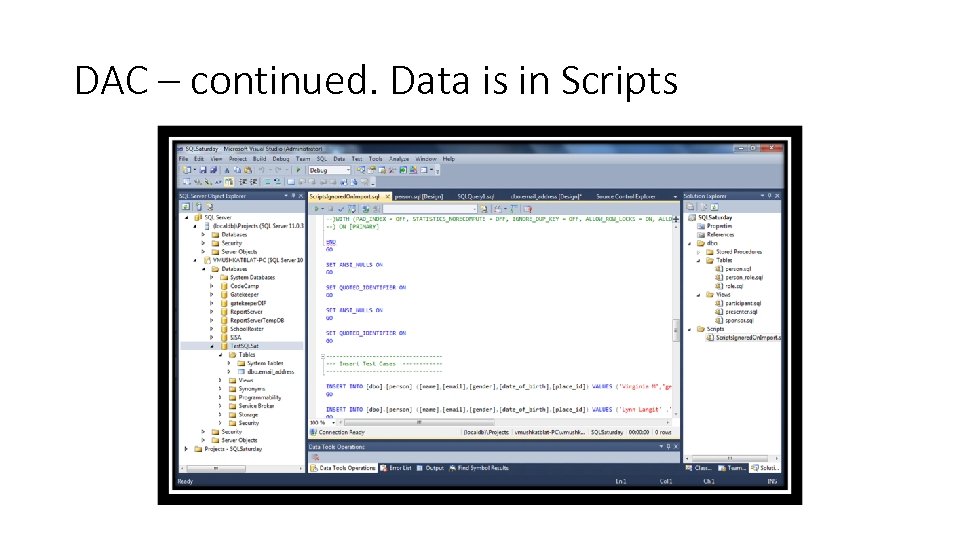

DAC – continued. Data is in Scripts

DAC – continued. Data is in Scripts

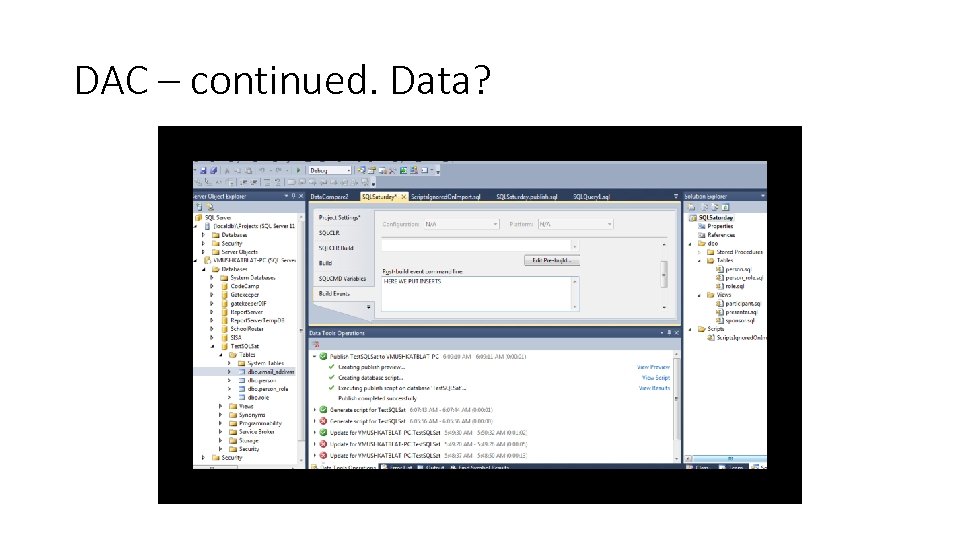

DAC – continued. Data?

DAC – continued. Data?

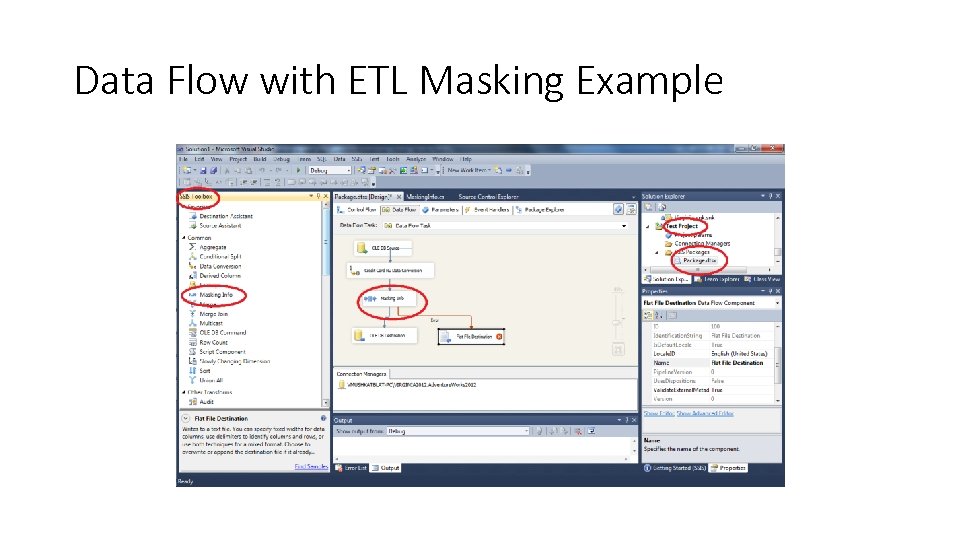

Data Flow with ETL Masking Example

Data Flow with ETL Masking Example

Used Tools and Other Tools That Help What I used: SQL Server, SSMS, SSIS, Data Quality Services, TFS, Visio If you do not have VS and TFS: • Red Gate: SQL Compare, SQL Data Generator, SQL Source Control • Embarcadero: E/R Studio, DB Change Manager • HUSH-HUSH Masking components for ETL architectures from FSI

Used Tools and Other Tools That Help What I used: SQL Server, SSMS, SSIS, Data Quality Services, TFS, Visio If you do not have VS and TFS: • Red Gate: SQL Compare, SQL Data Generator, SQL Source Control • Embarcadero: E/R Studio, DB Change Manager • HUSH-HUSH Masking components for ETL architectures from FSI