
- Number of slides: 56

Data-Driven Knowledge Discovery and Philosophy of Science

Vladimir Cherkassky, Electrical and Computer Engineering, University of Minnesota (cherk001@umn.edu)

Presented at Ockham’s Razor Workshop, CMU, June 2012

OUTLINE
- Motivation + Background
  - changing nature of knowledge discovery
  - scientific vs empirical knowledge
  - induction and empirical knowledge
- Philosophical interpretation
- Predictive learning framework
- Practical aspects and examples
- Summary

Disclaimer
- Philosophy of science (as I see it)
  - philosophical ideas form in response to major scientific/technological advances
  - meaningful discussion is possible only in the context of these scientific developments
- Ockham’s Razor
  - a general, vaguely stated principle
  - originally interpreted for classical science
  - in statistical inference ~ justification for model complexity control (model selection)

Historical View: data-analytic modeling
- Two theoretical developments
  - classical statistics ~ mid-20th century
  - Vapnik-Chervonenkis theory ~ 1970s
- Two related technological advances
  - applied statistics
  - machine learning, neural nets, data mining, etc.
- Statistical (probabilistic) vs predictive modeling
  - philosophical difference (not widely understood)
  - interpretation of Ockham’s Razor

Scientific Discovery
- Combines ideas/models and facts/data
- First-principle knowledge: hypothesis → experiment → theory
  - ~ deterministic, simple causal models
- Modern data-driven discovery: computer program + DATA → knowledge
  - ~ statistical, complex systems
- Two different philosophies

Scientific Knowledge
- Classical knowledge (last 3-4 centuries):
  - objective
  - recurrent events (repeatable by others)
  - quantifiable (described by math models)
- Knowledge ~ causal, deterministic, logical
- Humans cannot reason well about
  - noisy/random data
  - multivariate high-dimensional data

Cultural and Psychological Aspects
- All men by nature desire knowledge
- Man has an intense desire for assured knowledge
- Assured knowledge ~ belief in
  - religion
  - reason (causal determinism)
  - science / pseudoscience
  - empirical data-analytic models
- Ockham’s Razor ~ methodological belief (?)

Gods, Prophets and Shamans

Knowledge Discovery in Digital Age
- Most information is in the form of data from sensors (not human sense perceptions)
- Can we get assured knowledge from data?
- Naïve realism: data → knowledge

Wired Magazine, 16/07: “We can stop looking for (scientific) models. We can analyze the data without hypotheses about what it might show. We can throw the numbers into the biggest computing clusters the world has ever seen and let statistical algorithms find patterns where science cannot.”

(Over) Promise of Science
- Archimedes: “Give me a place to stand, and a lever long enough, and I will move the world.”
- Laplace: “Present events are connected with preceding ones by a tie based upon the evident principle that a thing cannot occur without a cause that produces it.”
- Digital Age: more data → new knowledge; more connectivity → more knowledge

REALITY
- Many studies have questionable value
  - statistical correlation vs causation
- Some border on nonsense
  - US scientists at SUNY discovered an Adultery Gene!!! (based on a sample of 181 volunteers interviewed about their sex lives)
- Usual conclusion: more research is needed…

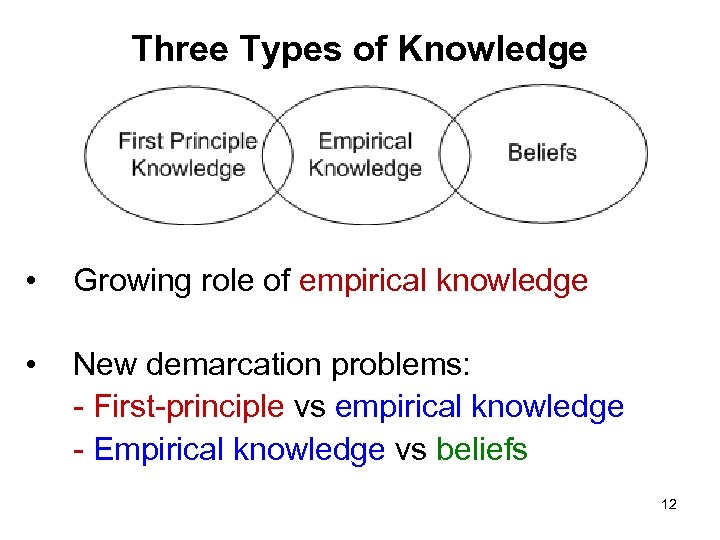

Three Types of Knowledge
- Growing role of empirical knowledge
- New demarcation problems:
  - first-principle vs empirical knowledge
  - empirical knowledge vs beliefs

Philosophical Challenges
- Empirical data-driven knowledge
  - different from classical knowledge
- Philosophical interpretation
  - first-principle: hypothetico-deductive
  - empirical knowledge: ???
  - fragmentation in technical fields, e.g., statistics, machine learning, neural nets, data mining, etc.
- Predictive Learning (VC-theory)
  - provides a consistent framework for many applications
  - different from the classical statistical approach

What is a ‘good’ data-analytic model?
- All models are mental constructs that (hopefully) relate to the real world
- Two goals of modeling
  - explain available data ~ subjective
  - predict future data ~ objective
- True science makes non-trivial predictions
- Good data-driven models can predict well, so the goal is to estimate predictive models

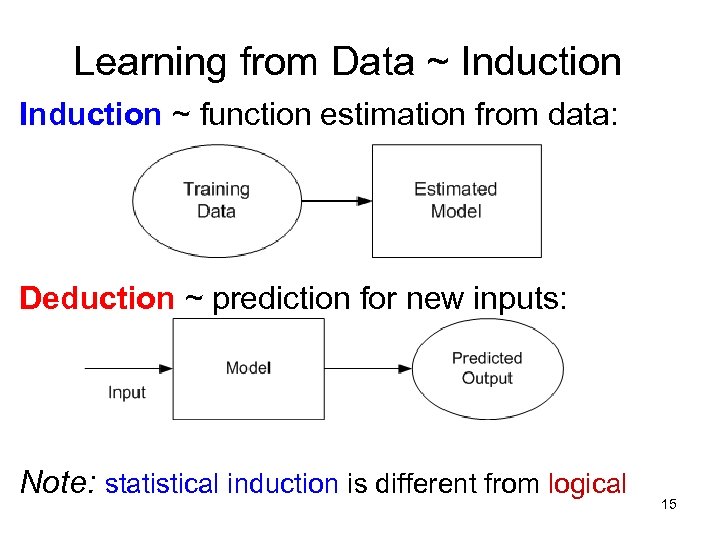

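Learning from Data
- Induction ~ function estimation from data: training samples (x_i, y_i), i = 1, …, n → estimated model f(x)
- Deduction ~ prediction for new inputs: new x → predicted output ŷ = f(x)
- Note: statistical induction is different from logical induction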

OUTLINE
- Motivation + Background
- Philosophical interpretation
- Predictive learning framework
- Practical aspects and examples
- Summary

Observations, Reality and Mind

Philosophy is concerned with the relationship between
- Reality (Nature)
- Sensory perceptions
- Mental constructs (interpretations of reality)

Three Philosophical Schools
- REALISM:
  - objective physical reality is perceived via the senses
  - mental constructs reflect objective reality
- IDEALISM:
  - the primary role belongs to ideas (mental constructs)
  - physical reality is a by-product of Mind
- INSTRUMENTALISM:
  - the goal of science is to produce useful theories

Which one should be adopted (by scientists + engineers)??

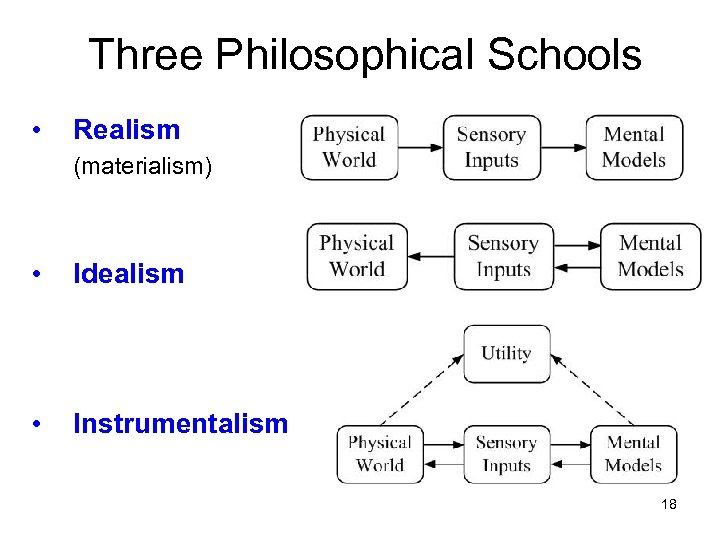

Three Philosophical Schools
- Realism (materialism)
- Idealism
- Instrumentalism

Realistic View of Science
- Every observation/effect has its cause
  - ~ prevailing view and cultural attitude
- Isaac Newton: Hypotheses non fingo
  - scientific knowledge can be derived from observations + experience
- More data → better model (closer approximation to the truth)

Alternative Views
- Karl Popper: “Science starts from problems, and not from observations.”
- Werner Heisenberg: “What we observe is not nature itself, but nature exposed to our method of questioning.”
- Albert Einstein: “Reality is merely an illusion, albeit a very persistent one.”
- Science ~ creation of the human mind???

Empirical Knowledge
- Can it be obtained from data alone?
- How is it different from ‘beliefs’?
- Role of a priori knowledge vs data?
- What is ‘the method of questioning’?

These methodological/philosophical issues have not been properly addressed.

OUTLINE
- Motivation + Background
- Philosophical perspective
- Predictive learning framework
  - classical statistics vs predictive learning
  - standard inductive learning setting
  - Ockham’s Razor vs VC-dimension
- Practical aspects and examples
- Summary

Method of Questioning
- Learning problem setting ~
  - assumptions about training + test data
  - goals of learning (model estimation)
- Classical statistics:
  - data generated from a parametric distribution
  - estimate/approximate the true probabilistic model
- Predictive modeling (VC-theory):
  - data generated from an unknown distribution
  - estimate a useful (~ predictive) model

Critique of Statistical Approach (L. Breiman)
- The belief that a statistician can invent a reasonably good parametric class of models for a complex mechanism devised by nature
- Then parameters are estimated and conclusions are drawn
- But conclusions are about
  - the model’s mechanism
  - not about nature’s mechanism

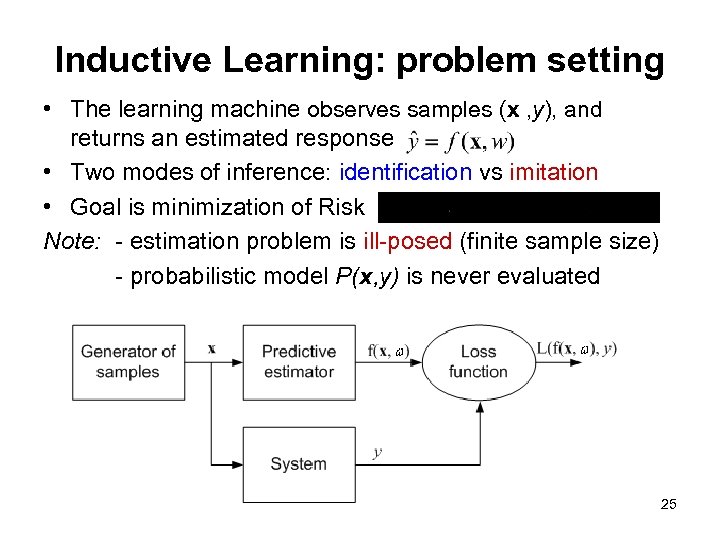

Inductive Learning: problem setting
- The learning machine observes samples (x, y) and returns an estimated response
- Two modes of inference: identification vs imitation
- Goal is minimization of Risk
- Note:
  - the estimation problem is ill-posed (finite sample size)
  - the probabilistic model P(x, y) is never evaluated
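In the standard VC formulation the risk is the expected loss, R(w) = ∫ L(y, f(x, w)) dP(x, y). Because P(x, y) is unknown, the learning machine can only minimize the empirical risk, i.e. the average loss over the n training samples. The Python sketch below illustrates that difference under purely illustrative assumptions: the toy distribution (uniform x, threshold labels with 10% label noise), the one-parameter threshold rule and the grid of candidate thresholds are hypothetical, and a large independent sample merely stands in for the true risk.

```python
import random

# Hypothetical toy distribution P(x, y), used only for illustration:
# x ~ Uniform(0, 1), y = 1 if x > 0.5 else 0, each label flipped with probability 0.1.
def sample(n, rng):
    data = []
    for _ in range(n):
        x = rng.random()
        y = 1 if x > 0.5 else 0
        if rng.random() < 0.1:
            y = 1 - y  # label noise
        data.append((x, y))
    return data

def zero_one_loss(y, y_hat):
    return 0 if y == y_hat else 1

def empirical_risk(f, data):
    """R_emp = (1/n) * sum_i L(y_i, f(x_i)) over a finite sample."""
    return sum(zero_one_loss(y, f(x)) for x, y in data) / len(data)

def threshold_rule(t):
    """Decision function f(x, w) with a single parameter w = t."""
    return lambda x: 1 if x > t else 0

rng = random.Random(0)
train = sample(20, rng)       # small training sample
test = sample(100_000, rng)   # large independent sample, a stand-in for the true risk

# Empirical risk minimization over a grid of candidate thresholds.
candidates = [i / 100 for i in range(101)]
t_star = min(candidates, key=lambda t: empirical_risk(threshold_rule(t), train))
f_star = threshold_rule(t_star)

print("selected threshold:", t_star)
print("empirical risk (training):", empirical_risk(f_star, train))
print("estimated risk (test):", empirical_risk(f_star, test))
```

With only 20 training samples the selected threshold and its training error vary from sample to sample, while the risk of any threshold rule under this toy distribution can never fall below the 10% noise level.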

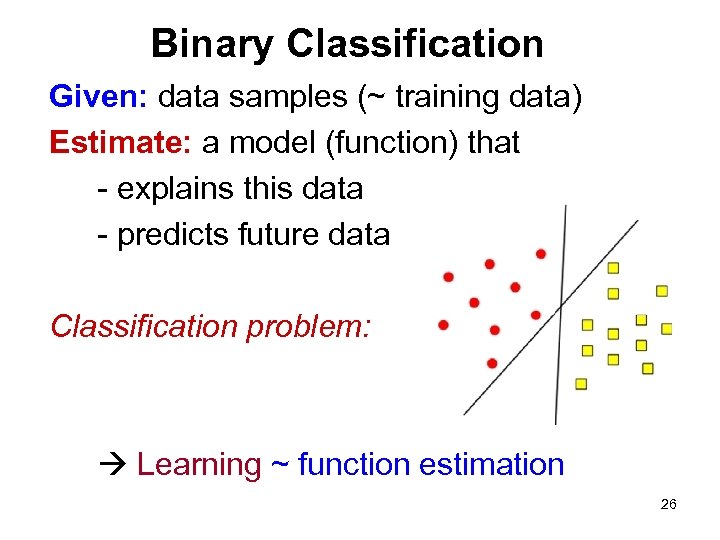

Binary Classification
- Given: data samples (~ training data)
- Estimate: a model (function) that
  - explains this data
  - predicts future data
- Classification problem: learning ~ function estimation

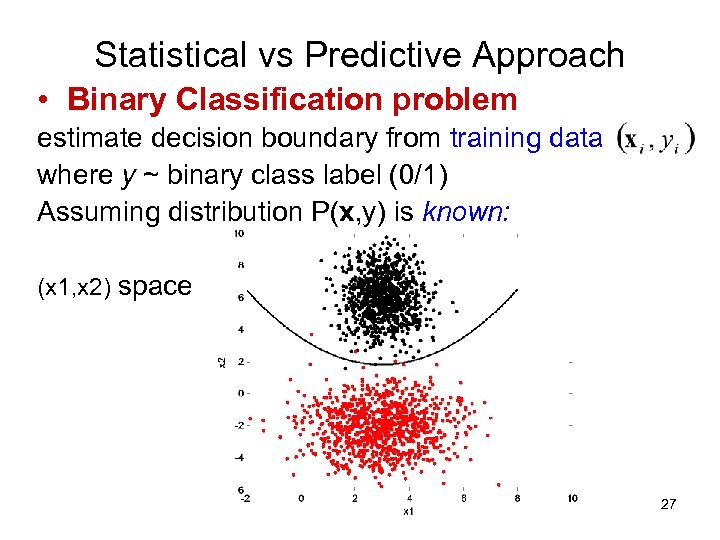

Statistical vs Predictive Approach
- Binary classification problem: estimate a decision boundary from training data (x_i, y_i), i = 1, …, n, where y ~ binary class label (0/1)
- Assuming the distribution P(x, y) is known: [figure in the (x₁, x₂) space]

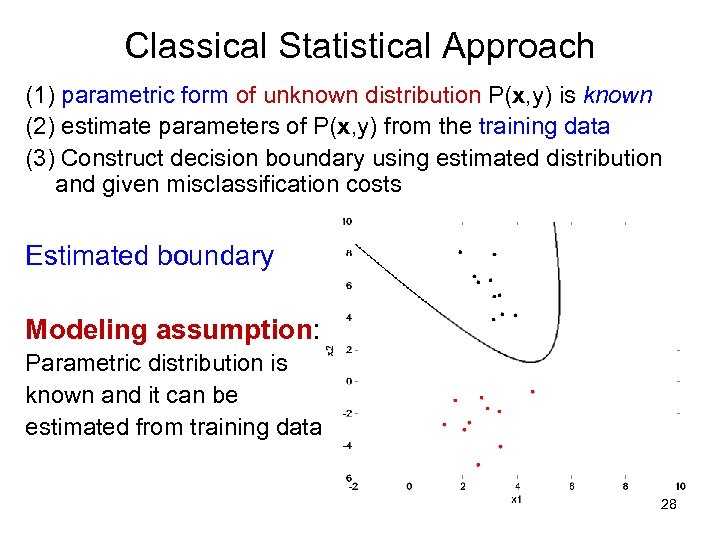

Classical Statistical Approach
1. The parametric form of the unknown distribution P(x, y) is known.
2. Estimate the parameters of P(x, y) from the training data.
3. Construct the decision boundary using the estimated distribution and given misclassification costs.

[figure: estimated boundary]

Modeling assumption: the parametric form of the distribution is known, and its parameters can be estimated from the training data.
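The three steps can be sketched in Python for a two-dimensional problem. The parametric form used here, Gaussian classes with their own means and one shared spherical variance, is an illustrative assumption (the slide does not fix a family), and the arguments cost_fp / cost_fn are hypothetical names for the two misclassification costs. With equal estimated priors and equal costs, this rule simply assigns x to the nearest estimated class mean.

```python
import math

def fit_gaussian_plugin(X, y):
    """Steps (1)-(2): assume each class k in {0, 1} is Gaussian with mean mu_k and
    a shared spherical covariance sigma^2 * I, then estimate mu_k, sigma^2 and the
    class priors from the training data (maximum-likelihood estimates)."""
    d = len(X[0])
    means, priors = {}, {}
    for k in (0, 1):
        pts = [x for x, label in zip(X, y) if label == k]
        priors[k] = len(pts) / len(X)
        means[k] = [sum(p[j] for p in pts) / len(pts) for j in range(d)]
    sq = sum((x[j] - means[label][j]) ** 2 for x, label in zip(X, y) for j in range(d))
    sigma2 = sq / (len(X) * d)  # pooled variance estimate
    return means, priors, sigma2

def plugin_decision_rule(params, cost_fp=1.0, cost_fn=1.0):
    """Step (3): plug the estimates into the Bayes rule with misclassification costs.
    cost_fp: cost of predicting 1 when y = 0; cost_fn: cost of predicting 0 when y = 1."""
    means, priors, sigma2 = params

    def log_joint(x, k):
        # log(prior_k * N(x; mu_k, sigma^2 I)) up to a constant shared by both classes
        sq = sum((xi - mi) ** 2 for xi, mi in zip(x, means[k]))
        return math.log(priors[k]) - sq / (2 * sigma2)

    def f(x):
        # predict 1 when cost_fn * P(1 | x) > cost_fp * P(0 | x)
        return 1 if log_joint(x, 1) + math.log(cost_fn) > log_joint(x, 0) + math.log(cost_fp) else 0

    return f

# Hypothetical training data in the (x1, x2) plane
X = [(0.0, 0.0), (0.5, 0.2), (0.2, 0.6), (2.0, 2.0), (2.5, 1.8), (1.8, 2.4)]
y = [0, 0, 0, 1, 1, 1]
params = fit_gaussian_plugin(X, y)
f = plugin_decision_rule(params)
print(f((0.1, 0.1)), f((2.2, 2.0)))  # → 0 1
```

Raising the cost of false positives moves the boundary toward class 1: the point (1.2, 1.2) is assigned to class 1 by the equal-cost rule, but to class 0 by plugin_decision_rule(params, cost_fp=1e6).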

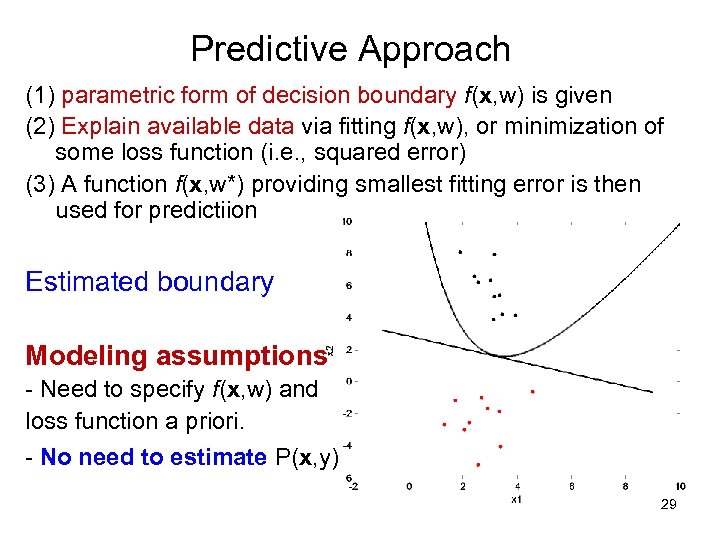

Predictive Approach
1. The parametric form of the decision boundary f(x, w) is given.
2. Explain the available data by fitting f(x, w), i.e., by minimizing some loss function (e.g., squared error).
3. The function f(x, w*) providing the smallest fitting error is then used for prediction.

[figure: estimated boundary]

Modeling assumptions:
- f(x, w) and the loss function must be specified a priori
- no need to estimate P(x, y)
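A minimal Python sketch of steps (1)-(3), assuming a linear parameterization f(x, w) = w0 + w1·x1 + … + wd·xd, squared-error loss, and class labels recoded from 0/1 to -1/+1 so that the decision boundary is f(x, w*) = 0. The recoding and the sample data are illustrative assumptions. No probability distribution is estimated at any point; the weights come directly from the normal equations of the least-squares fit.

```python
def fit_linear_least_squares(X, y):
    """Steps (1)-(2): fix f(x, w) = w0 + w1*x1 + ... + wd*xd and find w* minimizing
    sum_i (y_i - f(x_i, w))^2 by solving the normal equations (A^T A) w = A^T y."""
    A = [[1.0] + list(x) for x in X]  # constant column for the bias w0
    m = len(A[0])
    M = [[sum(row[i] * row[j] for row in A) for j in range(m)] for i in range(m)]
    b = [sum(row[i] * t for row, t in zip(A, y)) for i in range(m)]
    # Gaussian elimination with partial pivoting
    for col in range(m):
        piv = max(range(col, m), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        b[col], b[piv] = b[piv], b[col]
        for r in range(col + 1, m):
            factor = M[r][col] / M[col][col]
            for c in range(col, m):
                M[r][c] -= factor * M[col][c]
            b[r] -= factor * b[col]
    # Back substitution
    w = [0.0] * m
    for i in reversed(range(m)):
        w[i] = (b[i] - sum(M[i][j] * w[j] for j in range(i + 1, m))) / M[i][i]
    return w

def predict(w, x):
    """Step (3): use f(x, w*) for prediction; the decision boundary is f(x, w*) = 0."""
    score = w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))
    return 1 if score > 0 else -1

# Hypothetical training data, labels coded as -1 / +1
X = [(0.0, 0.0), (0.5, 0.2), (0.2, 0.6), (2.0, 2.0), (2.5, 1.8), (1.8, 2.4)]
y = [-1, -1, -1, 1, 1, 1]
w_star = fit_linear_least_squares(X, y)
print([predict(w_star, x) for x in X])               # → [-1, -1, -1, 1, 1, 1]
print(predict(w_star, (0.1, 0.1)), predict(w_star, (2.2, 2.0)))  # → -1 1
```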

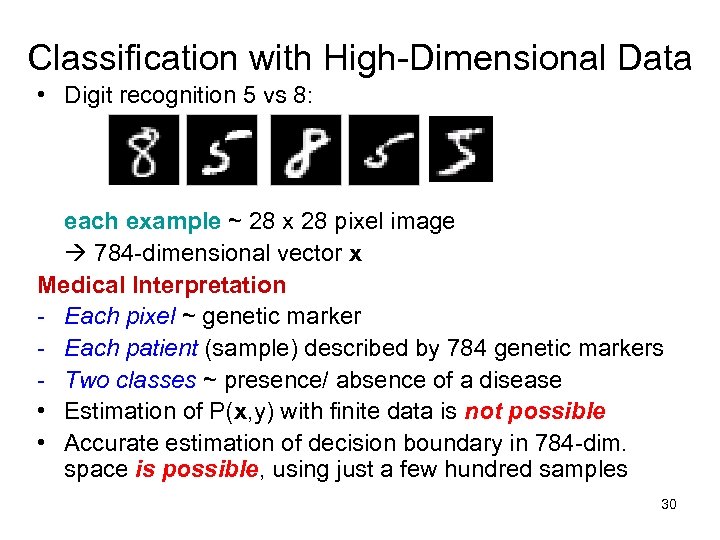

Classification with High-Dimensional Data
- Digit recognition 5 vs 8: each example ~ 28 × 28 pixel image → 784-dimensional vector x
- Medical interpretation
  - each pixel ~ genetic marker
  - each patient (sample) described by 784 genetic markers
  - two classes ~ presence/absence of a disease
- Estimation of P(x, y) with finite data is not possible
- Accurate estimation of a decision boundary in 784-dimensional space is possible, using just a few hundred samples

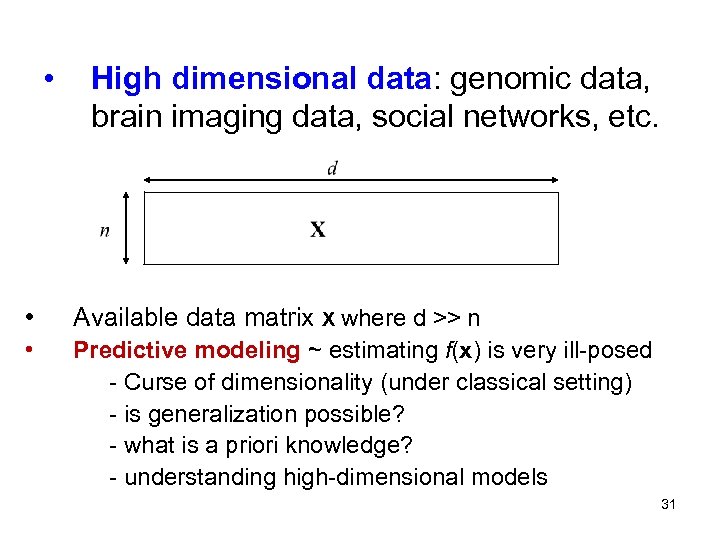

- High-dimensional data: genomic data, brain imaging data, social networks, etc.
- Available data matrix X with n samples of d variables, where d ≫ n
- Predictive modeling ~ estimating f(x) is very ill-posed
  - curse of dimensionality (under the classical setting)
  - is generalization possible?
  - what is a priori knowledge?
  - understanding high-dimensional models

Predictive Modeling

Predictive approach
- estimates certain properties of the unknown P(x, y) that are useful for predicting the output y
- is based on a mathematical theory (VC-theory)
- has been used successfully in many applications

BUT its methodology + concepts are very different from classical statistics:
- formalization of the learning problem (~ requires understanding of the application domain)
- a priori specification of a loss function
- interpretation of predictive models is hard
- many good models can be estimated from the same data

VC-dimension
- Measures of model ‘complexity’
  - number of ‘free’ parameters/entities
  - VC-dimension
- Classical statistics: Ockham’s Razor
  - estimate simple (~ interpretable) models
  - typical strategy: feature selection
  - trade-off between simplicity and accuracy
- Predictive modeling (VC-theory):
  - complex black-box models
  - multiplicity of good models
  - prediction is controlled by VC-dimension

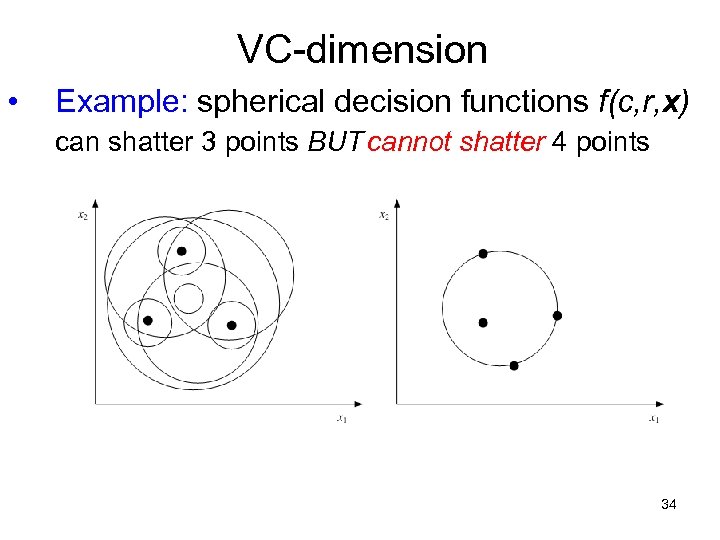

VC-dimension
- Example: spherical decision functions f(c, r, x) can shatter 3 points BUT cannot shatter 4 points
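Shattering can be checked by brute force: a point set is shattered if every one of its 2^n labelings is produced by some function in the class. The Python sketch below assumes the plane (x in R²) and searches a finite, hypothetical grid of centers and radii, so it can only confirm the positive half of the claim for one particular 3-point set. For the 4 corners of a unit square the search fails, and there the failure is not a grid artifact: for any center, the sum of squared distances to one pair of opposite corners equals the sum for the other pair, so a disc containing one diagonal pair must contain a point of the other. Showing that no 4-point set at all can be shattered needs a general argument, not a search.

```python
from itertools import product

def inside(center, r, p):
    """Spherical (disc) decision function f(c, r, x) = 1 if ||x - c|| <= r, else 0."""
    return 1 if (p[0] - center[0]) ** 2 + (p[1] - center[1]) ** 2 <= r * r else 0

def shattered_by_grid(points, centers, radii):
    """True if every one of the 2^n labelings of `points` is produced by
    at least one disc (center, radius) from the candidate grid."""
    for labels in product((0, 1), repeat=len(points)):
        realizable = any(
            all(inside(c, r, p) == lab for p, lab in zip(points, labels))
            for c in centers
            for r in radii
        )
        if not realizable:
            return False
    return True

# Hypothetical candidate grid: centers spaced 0.1 apart in [-1, 2]^2, radii 0.05 ... 3.0
grid = [i / 10 for i in range(-10, 21)]
centers = [(a, b) for a in grid for b in grid]
radii = [k / 20 for k in range(1, 61)]

three = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
print(shattered_by_grid(three, centers, radii))   # → True
print(shattered_by_grid(square, centers, radii))  # → False
```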

![VC-dimension example: sign(sin(wx))](https://present5.com/presentation/04d31d06ac3d84bf90f551c90bfdee86/image-35.jpg)

VC-dimension
- Example: the set of functions Sign[Sin(wx)] can shatter any number of points
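The standard construction behind this example can be checked numerically. Place the points at x_i = 10^(-i), i = 1, …, n, and for any desired labels y_i in {-1, +1} choose w = π(1 + Σ_i (1 - y_i)·10^i / 2). Then sign(sin(w·x_i)) = y_i for every i, so a single parameter w shatters n points for any n: the VC-dimension is infinite although there is only one free parameter. The Python sketch below checks all 2^n labelings for n = 5; n is kept small because w grows like 10^n, and double-precision arithmetic eventually breaks the construction.

```python
import math
from itertools import product

def shattering_frequency(labels):
    """For points x_i = 10**(-i), i = 1..n, return w such that
    sign(sin(w * x_i)) == labels[i-1] for every i (labels in {-1, +1})."""
    return math.pi * (1 + sum((1 - y) // 2 * 10 ** i for i, y in enumerate(labels, start=1)))

def sign(v):
    return 1 if v > 0 else -1

n = 5
points = [10.0 ** (-i) for i in range(1, n + 1)]

all_realized = all(
    [sign(math.sin(shattering_frequency(labels) * x)) for x in points] == list(labels)
    for labels in product((-1, 1), repeat=n)
)
print(all_realized)  # → True: one parameter w realizes all 2**5 labelings
```

The construction works because terms with index i > j add even multiples of π (no effect on the sign), the term i = j adds π exactly when y_j = -1 (flipping the sign), and the remaining terms add less than π.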

VC-dimension vs number of parameters
- VC-dimension can be equal to DoF (number of parameters)
  - example: linear estimators
- VC-dimension can be smaller than DoF
  - example: penalized estimators
- VC-dimension can be larger than DoF
  - example: feature selection, sin(wx)

Philosophical interpretation: VC-falsifiability
- Ockham’s Razor: select the model that explains available data and has the smallest number of entities (free parameters)
- VC theory: select the model that explains available data and has low VC-dimension (i.e., can be easily falsified)
- New principle of VC-falsifiability

OUTLINE
- Motivation + Background
- Philosophical perspective
- Predictive learning framework
- Practical aspects and examples
  - philosophical interpretation of data-driven knowledge discovery
  - trading international mutual funds
  - handwritten digit recognition
- Summary

Philosophical Interpretation
- What is primary in data-driven knowledge:
  - observed data or method of questioning?
  - what is the ‘method of questioning’?
- Is it possible to achieve good generalization with finite samples?
- Philosophical interpretation of the goal of learning & mathematical conditions for generalization

VC-Theory provides answers
- Method of questioning is
  - the learning problem setting
  - should be driven by application requirements
- Standard inductive learning is commonly used (not always the best choice)
- Good generalization depends on two factors
  - (small) training error
  - small VC-dimension ~ large ‘falsifiability’
- Ockham’s Razor does not explain successful methods: SVM, boosting, random forests, ...
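The two factors can be made concrete with the classical VC bound for binary classification under 0/1 loss: with probability at least 1 - η, R ≤ R_emp + sqrt((h(ln(2n/h) + 1) - ln(η/4)) / n), where h is the VC-dimension and n the number of training samples. The Python sketch below evaluates this bound. The three (h, training error) pairs are hypothetical, and practical VC-based model selection often uses other, tighter forms of the bound, so the numbers only illustrate the trade-off.

```python
import math

def vc_bound(train_error, h, n, eta=0.05):
    """Classical VC bound for binary classification with 0/1 loss:
    with probability >= 1 - eta, the risk R satisfies
        R <= train_error + sqrt((h * (ln(2n/h) + 1) - ln(eta/4)) / n),
    where h = VC-dimension and n = number of training samples (assumes h < n)."""
    confidence = math.sqrt((h * (math.log(2 * n / h) + 1) - math.log(eta / 4)) / n)
    return train_error + confidence

# Hypothetical models trained on n = 1000 samples: (VC-dimension, training error)
for h, r_emp in [(5, 0.10), (50, 0.05), (500, 0.0)]:
    print(f"h={h:3d}  training error={r_emp:.2f}  bound={vc_bound(r_emp, h, 1000):.3f}")
```

With these numbers the simplest model (h = 5) gets the smallest bound despite the largest training error, while the model with zero training error and h = 500 gets a bound above 1, i.e. no guarantee at all.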

Application Examples
- Both use binary classification
- ISSUES
  - good prediction/generalization
  - interpretation of estimated models, especially for high-dimensional data
  - multiple good models

Timing of International Funds
- International mutual funds
  - priced at 4 pm EST (New York time)
  - reflect prices of foreign securities traded on European/Asian markets
  - foreign markets close earlier than the US market
- Possibility of inefficient pricing; market timing exploits this inefficiency
- Scandals in the mutual fund industry ~2002
- Solution adopted: restrictions on trading

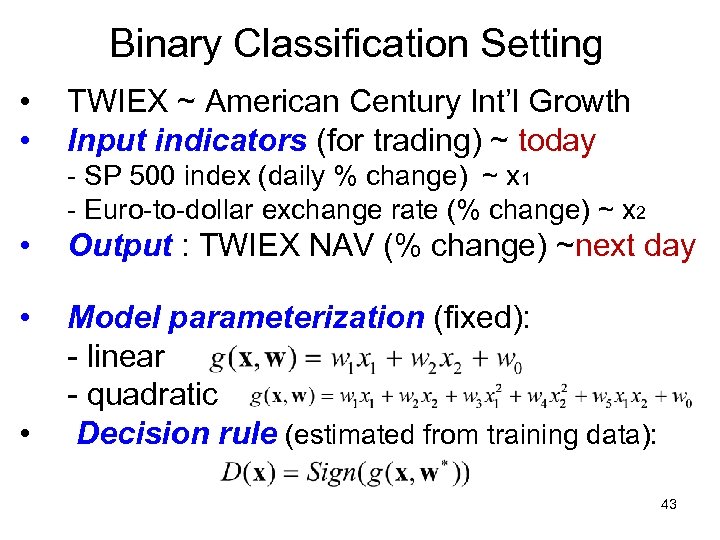

Binary Classification Setting
- TWIEX ~ American Century Int’l Growth
- Input indicators (for trading) ~ today
  - S&P 500 index (daily % change) ~ x₁
  - Euro-to-dollar exchange rate (% change) ~ x₂
- Output: TWIEX NAV (% change) ~ next day
- Model parameterization (fixed):
  - linear
  - quadratic
- Decision rule estimated from training data
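The two fixed parameterizations can be written as feature maps of the two inputs, with the decision rule taking the sign of a weighted sum. This is a sketch under assumptions: the slide does not give the exact form of the rule, so the convention used here (1 = invest in the fund for the next day, 0 = stay out), the feature ordering and the example weights are all hypothetical. In practice the weights would be estimated from the 2004 training data.

```python
def linear_features(x1, x2):
    """Linear parameterization: f(x, w) = w0 + w1*x1 + w2*x2."""
    return [1.0, x1, x2]

def quadratic_features(x1, x2):
    """Quadratic parameterization: adds x1^2, x1*x2 and x2^2 terms."""
    return [1.0, x1, x2, x1 * x1, x1 * x2, x2 * x2]

def decision_rule(w, features, x1, x2):
    """Hypothetical trading rule: 1 = hold the fund tomorrow, 0 = stay out.
    x1 = today's S&P 500 % change, x2 = today's euro-to-dollar % change."""
    score = sum(wi * phi for wi, phi in zip(w, features(x1, x2)))
    return 1 if score > 0 else 0

# Hypothetical weights, for illustration only (not estimated from real data)
w_linear = [-0.1, 1.0, 0.5]
w_quadratic = [-0.1, 1.0, 0.5, 0.2, 0.0, -0.3]

print(decision_rule(w_linear, linear_features, 0.8, 0.3))         # → 1
print(decision_rule(w_quadratic, quadratic_features, -0.5, 0.2))  # → 0
```

Both rules are linear in w, so the same fitting procedure applies to either; the quadratic one has more free parameters (6 vs 3) and a curved boundary in the (x₁, x₂) plane.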

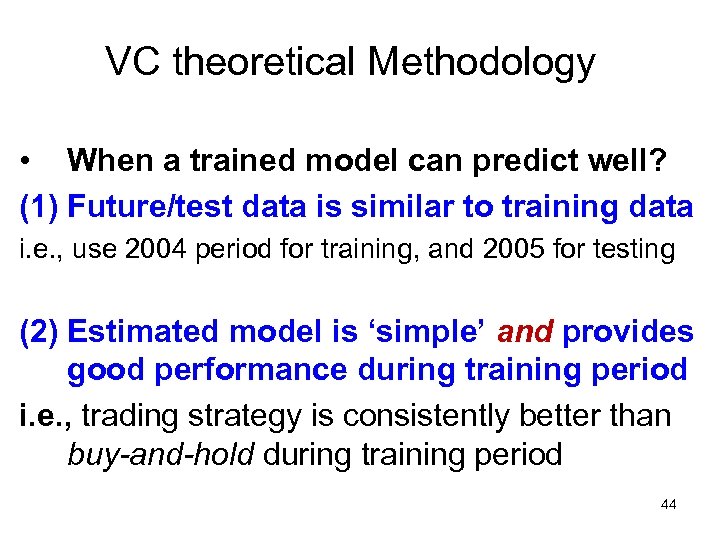

VC-theoretical Methodology

When can a trained model predict well?
1. Future/test data is similar to training data, i.e., use the 2004 period for training and 2005 for testing.
2. The estimated model is ‘simple’ and provides good performance during the training period, i.e., the trading strategy is consistently better than buy-and-hold during the training period.
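Condition (2) can be checked with a simple equity-curve comparison. In this Python sketch, "consistently better" is given one hypothetical operationalization: the strategy's cumulative value must never fall below buy-and-hold on any day of the period. The daily returns and positions are made-up numbers, each day's position is assumed to be already aligned with that day's NAV change (i.e., decided on the previous day), and days out of the fund are assumed to earn 0%.

```python
def equity_curve(daily_returns_pct, positions):
    """Cumulative value of $1 that earns the fund's daily % change on days with
    position 1 and 0% (hypothetical cash) on days with position 0."""
    value, curve = 1.0, []
    for r, pos in zip(daily_returns_pct, positions):
        if pos == 1:
            value *= 1 + r / 100
        curve.append(value)
    return curve

def consistently_better(daily_returns_pct, positions):
    """True if the strategy never trails buy-and-hold (always invested)."""
    strategy = equity_curve(daily_returns_pct, positions)
    buy_and_hold = equity_curve(daily_returns_pct, [1] * len(daily_returns_pct))
    return all(s >= b for s, b in zip(strategy, buy_and_hold))

# Made-up example: five days of NAV changes (%) and the model's positions
returns = [0.8, -1.2, 0.5, -0.7, 1.1]
positions = [1, 0, 1, 0, 1]
print(equity_curve(returns, positions)[-1], equity_curve(returns, [1] * 5)[-1])
print(consistently_better(returns, positions))  # → True
```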

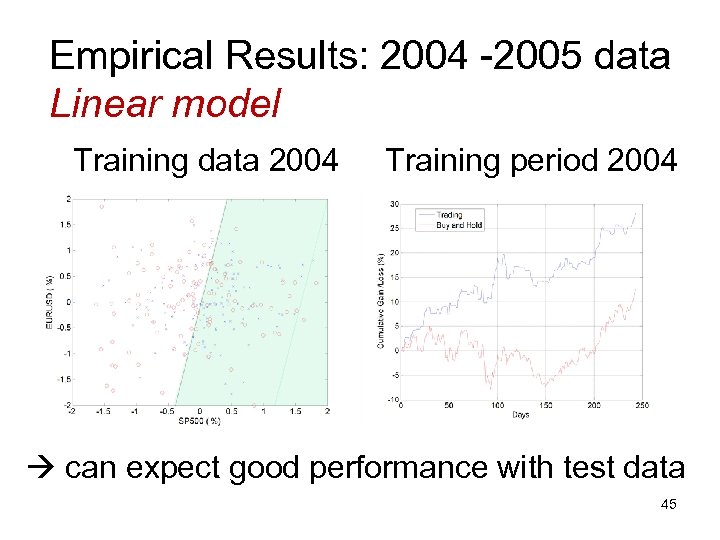

Empirical Results: 2004-2005 data
- Linear model, training data (2004)
- Training period 2004: can expect good performance with test data

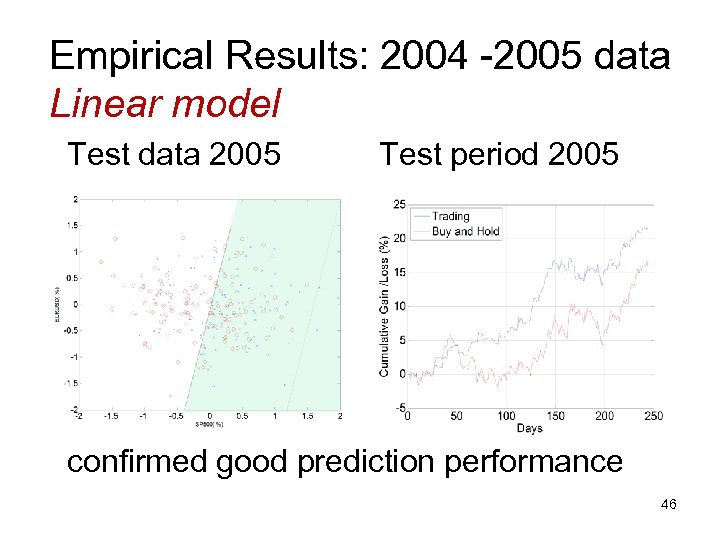

Empirical Results: 2004-2005 data
- Linear model, test data (2005)
- Test period 2005: confirmed good prediction performance

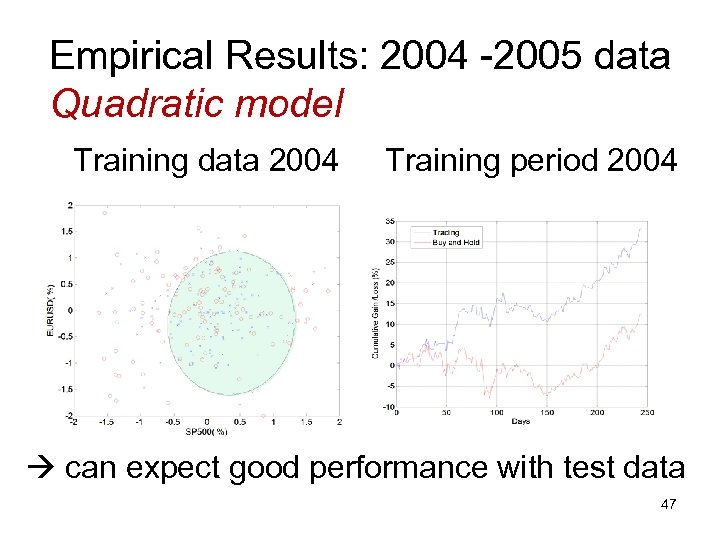

Empirical Results: 2004-2005 data
- Quadratic model, training data (2004)
- Training period 2004: can expect good performance with test data

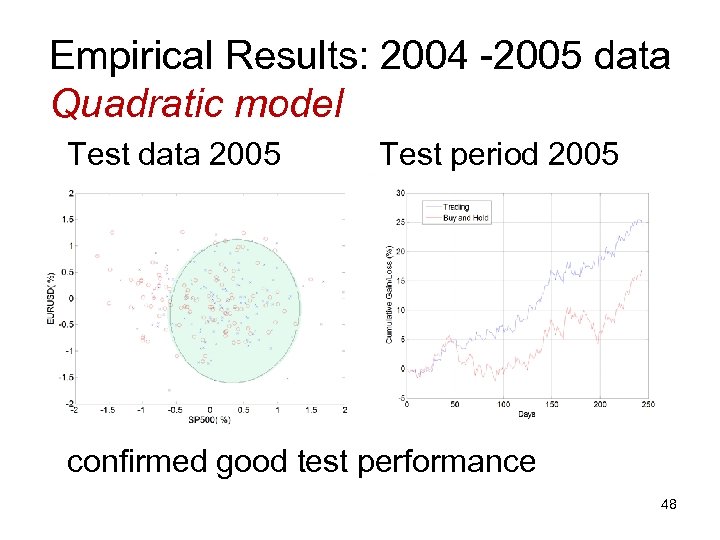

Empirical Results: 2004-2005 data
- Quadratic model, test data (2005)
- Test period 2005: confirmed good test performance

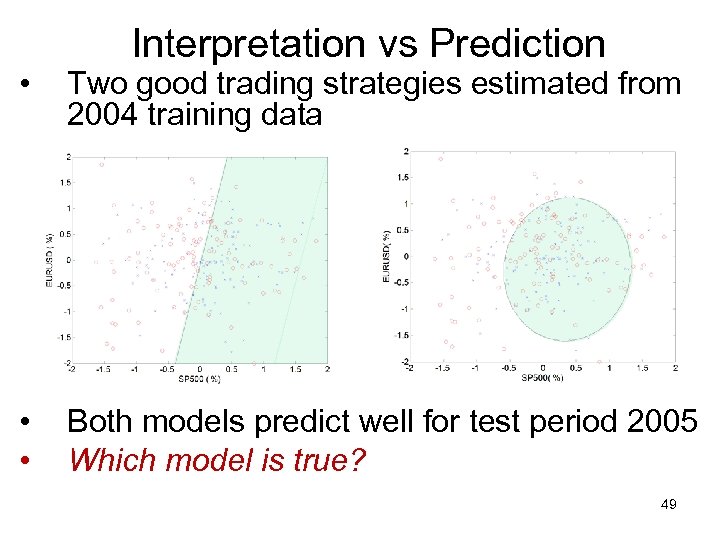

Interpretation vs Prediction
- Two good trading strategies estimated from 2004 training data
- Both models predict well for test period 2005
- Which model is true?

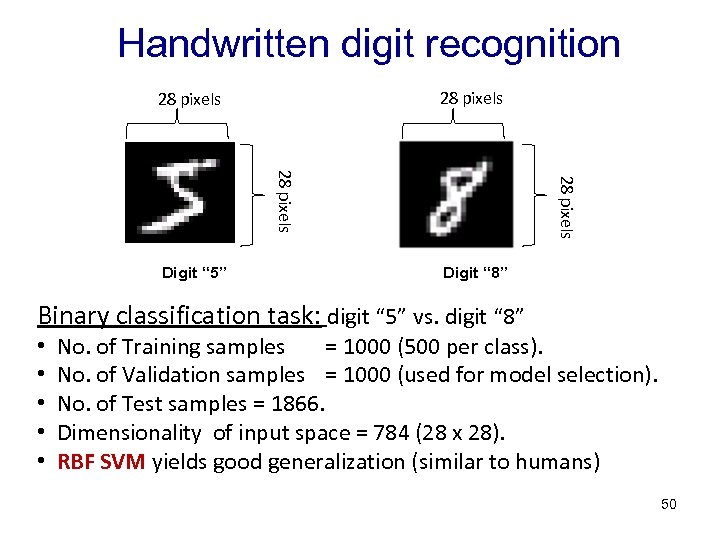

Handwritten digit recognition

[figure: 28 × 28 pixel images of digit “5” and digit “8”]

Binary classification task: digit “5” vs. digit “8”
- No. of training samples = 1000 (500 per class)
- No. of validation samples = 1000 (used for model selection)
- No. of test samples = 1866
- Dimensionality of input space = 784 (28 × 28)
- RBF SVM yields good generalization (similar to humans)

Interpretation vs Prediction
- Humans cannot provide an interpretation even when they make good predictions
- Interpretation of black-box models
  - not unique/subjective
  - depends on parameterization, e.g., kernel type

Interpretation of SVM models

How to interpret high-dimensional models?
- Strategy 1: dimensionality reduction/feature selection → prediction accuracy usually suffers
- Strategy 2: interpretation of a high-dimensional model utilizing properties of SVM (~ separation margin)

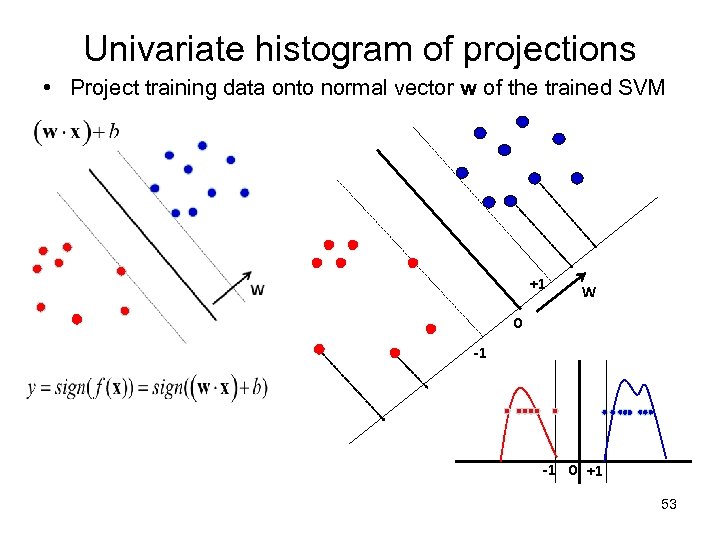

Univariate histogram of projections
- Project training data onto the normal vector w of the trained SVM

[figure: data projected onto w, with the decision boundary at 0 and the margin borders at -1 and +1]
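For a trained SVM, the projection of an input x is the value of its decision function, f(x) = Σ_i α_i y_i K(x_i, x) + b, summed over the support vectors. For a linear kernel this is w·x + b, i.e. (up to scaling and offset) the projection of x onto w, and the margin borders correspond to f(x) = -1 and f(x) = +1. The Python sketch below computes these values with an RBF kernel and bins them into a univariate histogram. The support vectors, coefficients α_i y_i, bias and kernel width γ are hypothetical placeholders, not the trained digit-recognition model; in practice they come from the SVM solver.

```python
import math

def rbf_kernel(a, b, gamma):
    """RBF kernel K(a, b) = exp(-gamma * ||a - b||^2)."""
    return math.exp(-gamma * sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def decision_value(x, support_vectors, coeffs, bias, gamma):
    """SVM decision function f(x) = sum_i coeffs[i] * K(sv_i, x) + bias,
    where coeffs[i] = alpha_i * y_i."""
    return sum(c * rbf_kernel(sv, x, gamma) for sv, c in zip(support_vectors, coeffs)) + bias

def histogram(values, lo=-3.0, hi=3.0, bins=12):
    """Counts of values per equal-width bin on [lo, hi]; values outside are clipped."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for v in values:
        k = int((min(max(v, lo), hi) - lo) / width)
        counts[min(k, bins - 1)] += 1
    return counts

def error_rate(values, labels):
    """Fraction of samples on the wrong side of f(x) = 0 (labels in {-1, +1})."""
    return sum(1 for v, y in zip(values, labels) if y * v <= 0) / len(values)

# Hypothetical model and data (2-D for readability; the digit data would be 784-D)
svs, coeffs, bias, gamma = [(0.0, 0.0), (1.0, 1.0)], [-1.0, 1.0], 0.0, 1.0
data = [(0.0, 0.0), (0.2, 0.1), (1.0, 1.0), (0.9, 1.2)]
labels = [-1, -1, 1, 1]

proj = [decision_value(x, svs, coeffs, bias, gamma) for x in data]
print(histogram(proj))
print(error_rate(proj, labels))  # → 0.0
```

Samples with |f(x)| < 1 lie inside the margin; counting them per data set (training, validation, test) is one way to read such histograms.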

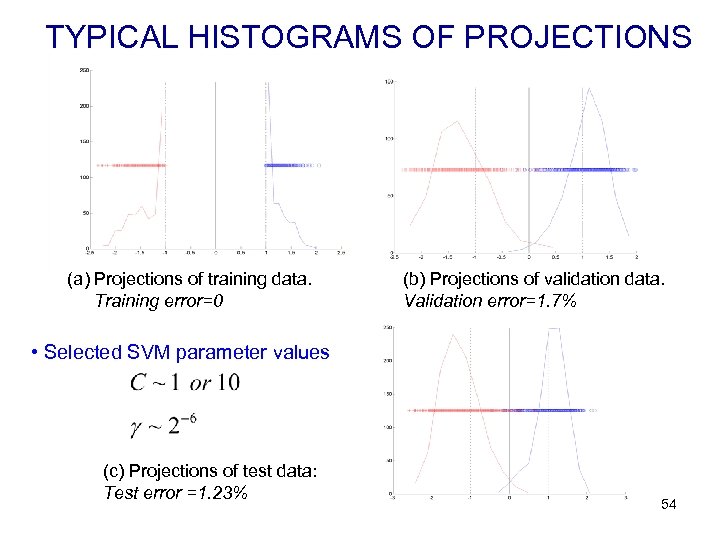

TYPICAL HISTOGRAMS OF PROJECTIONS
- (a) Projections of training data: training error = 0
- (b) Projections of validation data: validation error = 1.7% (used to select the SVM parameter values)
- (c) Projections of test data: test error = 1.23%

SUMMARY
- Philosophical issues + methodology: important for data-analytic modeling
- Important distinction between first-principle knowledge, empirical knowledge and beliefs
- Black-box predictive models
  - no simple interpretation (many variables)
  - multiplicity of good models
- Simple/interpretable parameterizations do not predict well for high-dimensional data
- Non-standard and non-inductive settings

References
- V. Vapnik, Estimation of Dependences Based on Empirical Data. Empirical Inference Science: Afterword of 2006, Springer, 2006.
- L. Breiman, “Statistical Modeling: The Two Cultures”, Statistical Science, vol. 16(3), pp. 199-231, 2001.
- V. Cherkassky and F. Mulier, Learning from Data, second edition, Wiley, 2007.
- V. Cherkassky, Predictive Learning, 2012 (to appear)
  - check Amazon.com in early Aug 2012
  - developed for an upper-level undergraduate course for engineering and computer science students at U. of Minnesota, with significant Liberal Arts content (on philosophy)
  - see http://www.ece.umn.edu/users/cherkass/ee4389/
