1357bf1c94457a3e93e1ebd33761ac59.ppt

- Number of slides: 80

Data Documentation Initiative: A global phenomenon – coming soon to ABS! Wendy Thomas Chair, DDI Technical Implementation Committee 1 September 2009

Acknowledgments • Slides provided for use by: – Wendy Thomas – Pascal Heus – Mary Vardigan – Peter Granda – Nancy McGovern – Jeremy Iverson – Dan Smith

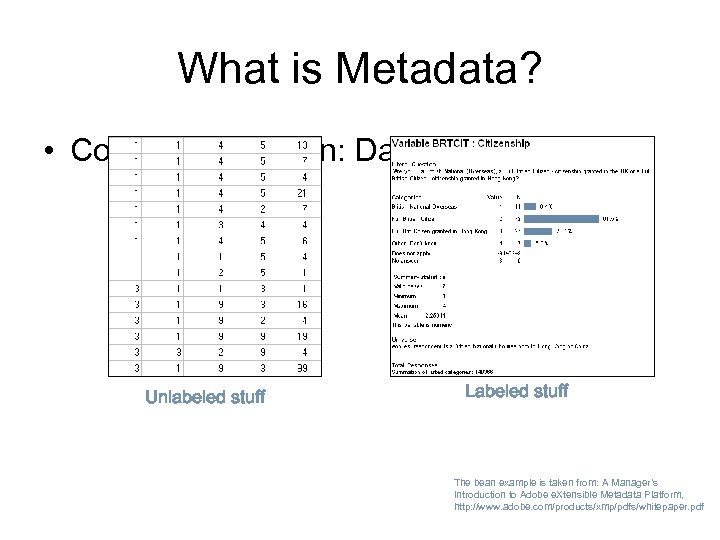

What is Metadata? • Common definition: Data about Data • Unlabeled stuff • Labeled stuff • The bean example is taken from: A Manager’s Introduction to Adobe eXtensible Metadata Platform, http://www.adobe.com/products/xmp/pdfs/whitepaper.pdf

Managing data and metadata is challenging! • “We are in charge of the data. We support our users but also need to protect our respondents!” • “We want easy access to high quality and well documented data!” • “We need to collect the information from the producers, preserve it, and provide access to our users!” • “We have an information management problem” • Stakeholders: Academic, Producers, Users, Government, Sponsors, Librarians, Business, Policy Makers, General Public, Media/Press

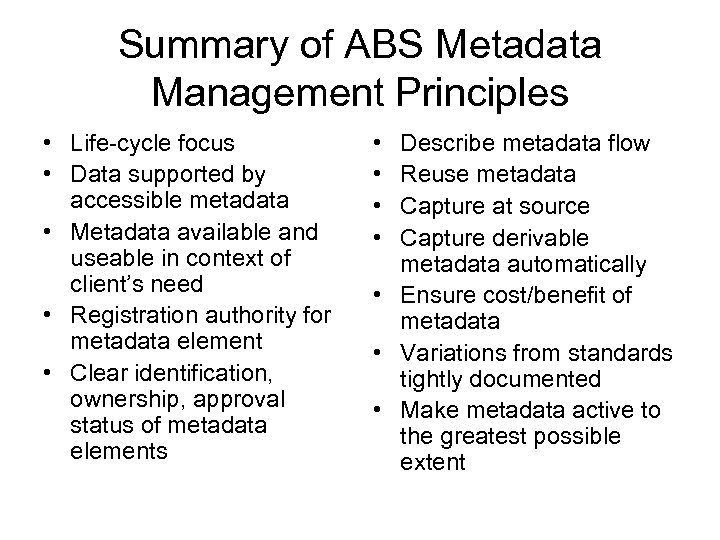

Summary of ABS Metadata Management Principles • Life-cycle focus • Data supported by accessible metadata • Metadata available and useable in context of client’s need • Registration authority for metadata element • Clear identification, ownership, approval status of metadata elements • Describe metadata flow • Reuse metadata • Capture at source • Capture derivable metadata automatically • Ensure cost/benefit of metadata • Variations from standards tightly documented • Make metadata active to the greatest possible extent

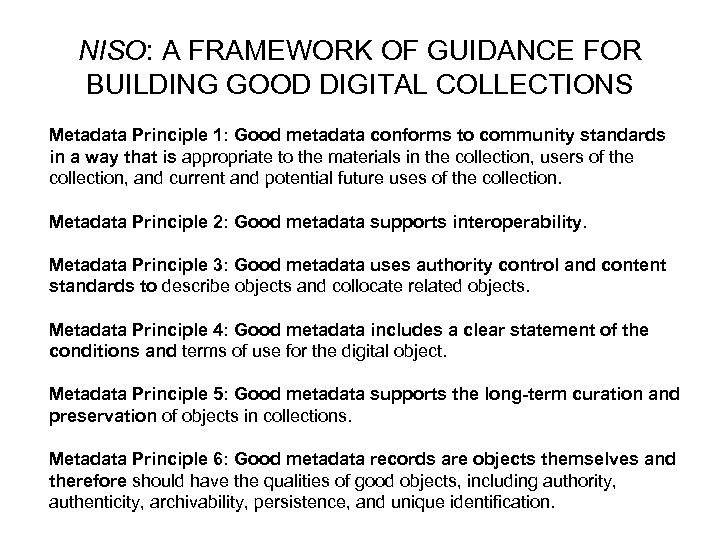

NISO: A FRAMEWORK OF GUIDANCE FOR BUILDING GOOD DIGITAL COLLECTIONS Metadata Principle 1: Good metadata conforms to community standards in a way that is appropriate to the materials in the collection, users of the collection, and current and potential future uses of the collection. Metadata Principle 2: Good metadata supports interoperability. Metadata Principle 3: Good metadata uses authority control and content standards to describe objects and collocate related objects. Metadata Principle 4: Good metadata includes a clear statement of the conditions and terms of use for the digital object. Metadata Principle 5: Good metadata supports the long-term curation and preservation of objects in collections. Metadata Principle 6: Good metadata records are objects themselves and therefore should have the qualities of good objects, including authority, authenticity, archivability, persistence, and unique identification.

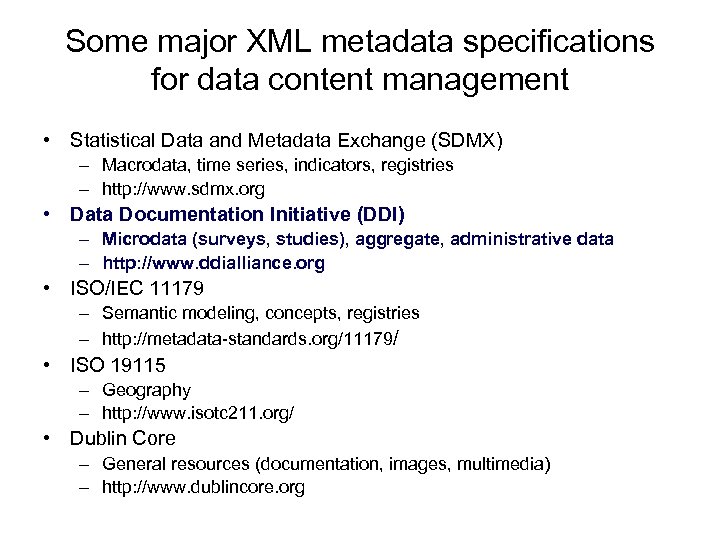

Some major XML metadata specifications for data content management • Statistical Data and Metadata Exchange (SDMX) – Macrodata, time series, indicators, registries – http://www.sdmx.org • Data Documentation Initiative (DDI) – Microdata (surveys, studies), aggregate, administrative data – http://www.ddialliance.org • ISO/IEC 11179 – Semantic modeling, concepts, registries – http://metadata-standards.org/11179/ • ISO 19115 – Geography – http://www.isotc211.org/ • Dublin Core – General resources (documentation, images, multimedia) – http://www.dublincore.org

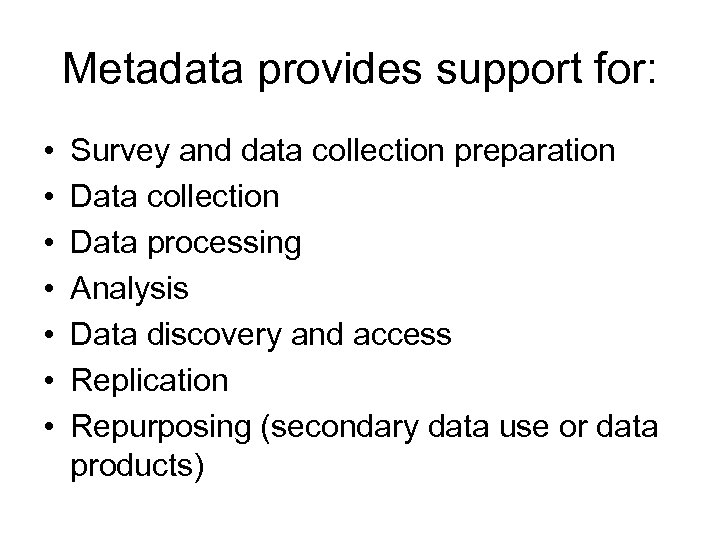

Metadata provides support for: • Survey and data collection preparation • Data collection • Data processing • Analysis • Data discovery and access • Replication • Repurposing (secondary data use or data products)

Metadata • Metadata is essential information for research and reuse of data • The further data gets from its source, the greater the importance of the metadata • Content is critical • Structure is becoming increasingly important in a networked world

Why Standards? • Standards provide structure for: – Accurate transfer of content between systems – Increased automation of ingest, reducing costs – Interoperability between systems and software – Structural base for discovery and comparison

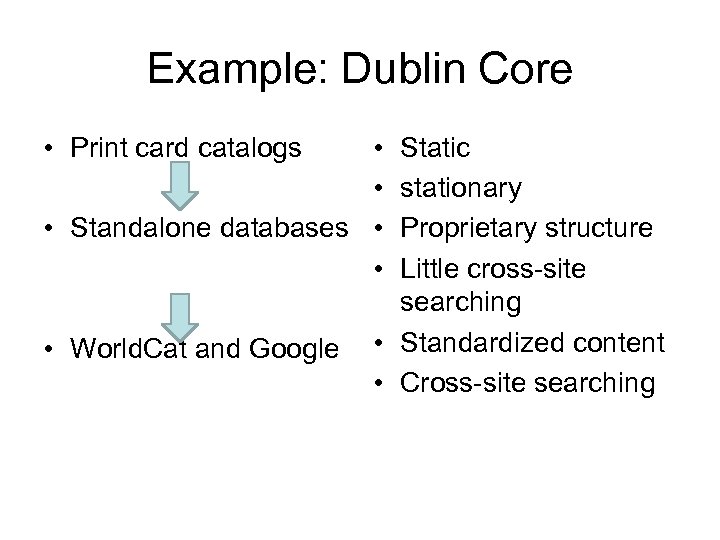

Example: Dublin Core • Print card catalogs – Static – Stationary • Standalone databases – Proprietary structure – Little cross-site searching • WorldCat and Google – Standardized content – Cross-site searching

Interacting Standards for Data • Dublin Core • ISO/IEC 11179 • ISO 19115 – Geography • Statistical Packages • METS • PREMIS • SDMX • DDI • Citation structure • Coverage – Temporal – Topical – Spatial • Location specific information

Interacting Standards for Data • Dublin Core • ISO/IEC 11179 • ISO 19115 – Geography • Statistical Packages • METS • PREMIS • SDMX • DDI • Structure and content of a data element as the building block of information • Supports registry functions • Provides – Object – Property – Representation

Interacting Standards for Data • Dublin Core • ISO/IEC 11179 • ISO 19115 – Geography • Statistical Packages • METS • PREMIS • SDMX • DDI • i.e., ANZLIC and US FGDC • Focus is on describing spatial objects and their attributes

Interacting Standards for Data • Dublin Core • ISO/IEC 11179 • ISO 19115 – Geography • Statistical Packages • METS • PREMIS • SDMX • DDI • Proprietary standards • Content is generally limited to: – Variable name – Variable label – Data type and structure – Category labels • Translation tools used to transport content

Interacting Standards for Data • Dublin Core • ISO/IEC 11179 • ISO 19115 – Geography • Statistical Packages • METS • PREMIS • SDMX • DDI • Digital Library Federation • Consistent outer wrapper for digital objects of all type • Contains a profile providing the structural information for the contained object

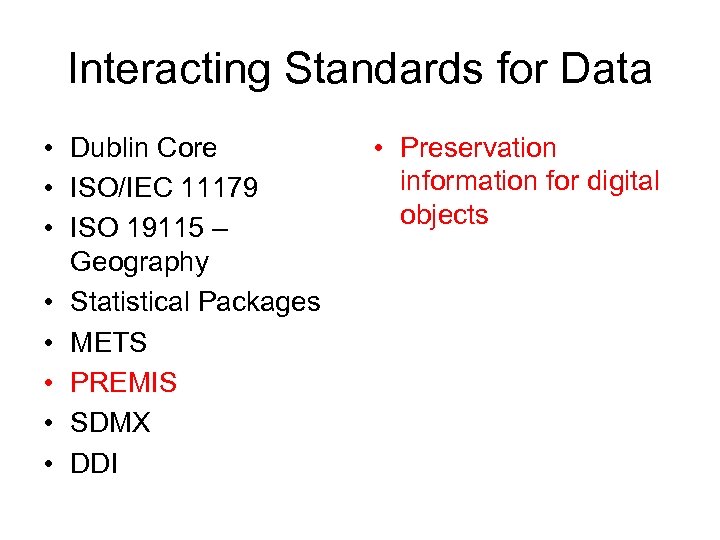

Interacting Standards for Data • Dublin Core • ISO/IEC 11179 • ISO 19115 – Geography • Statistical Packages • METS • PREMIS • SDMX • DDI • Preservation information for digital objects

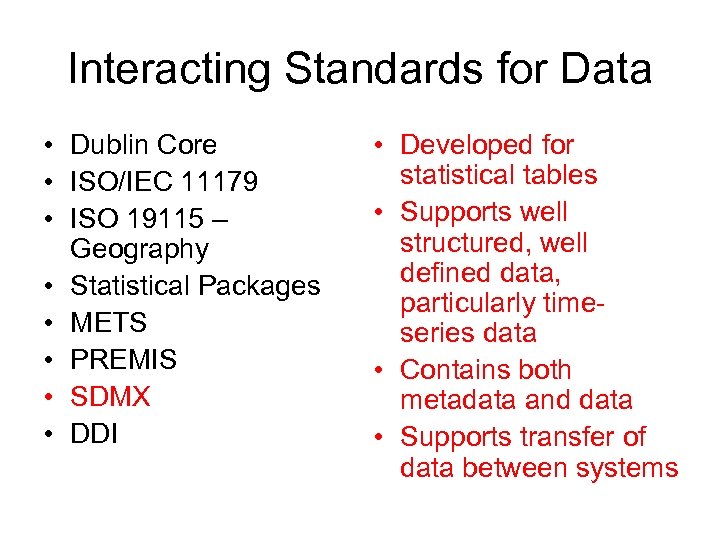

Interacting Standards for Data • Dublin Core • ISO/IEC 11179 • ISO 19115 – Geography • Statistical Packages • METS • PREMIS • SDMX • DDI • Developed for statistical tables • Supports well structured, well defined data, particularly timeseries data • Contains both metadata and data • Supports transfer of data between systems

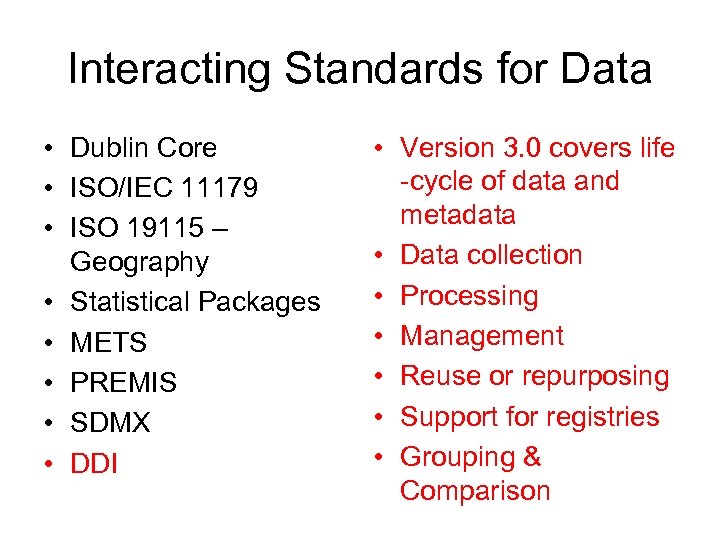

Interacting Standards for Data • Dublin Core • ISO/IEC 11179 • ISO 19115 – Geography • Statistical Packages • METS • PREMIS • SDMX • DDI • Version 3.0 covers life-cycle of data and metadata • Data collection • Processing • Management • Reuse or repurposing • Support for registries • Grouping & Comparison

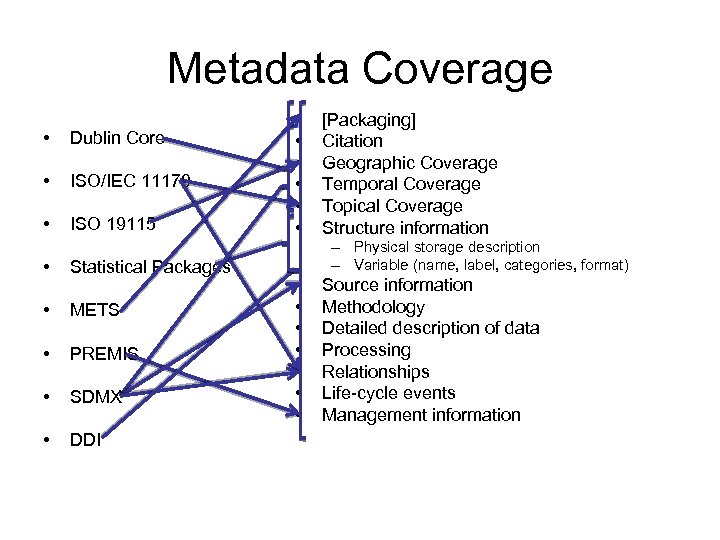

Metadata Coverage • Dublin Core • ISO/IEC 11179 • ISO 19115 • Statistical Packages • METS • PREMIS • SDMX • DDI • [Packaging] • Citation • Geographic Coverage • Temporal Coverage • Topical Coverage • Structure information – Physical storage description – Variable (name, label, categories, format) • Source information • Methodology • Detailed description of data • Processing • Relationships • Life-cycle events • Management information

The Data Documentation Initiative (DDI) • International XML based specification – Started in 1995, now driven by DDI Alliance (30+ members) – Became XML specification in 2000 (v1.0) – Current version is 2.1 with focus on archiving (codebook) • New Version 3.0 (2008) – Focus on entire survey “Life Cycle” – Provides comprehensive metadata on the entire survey process and usage – Aligned with other metadata standards (DC, MARC, ISO/IEC 11179, SDMX, …) – Includes machine actionable elements to facilitate processing, discovery and analysis

Intent of DDI Design • Facilitate point-of-origin capture of metadata • Reuse of metadata to support: – Consistency and accuracy of metadata content – Provide internal and external implicit comparisons – Support external registries of concepts, questions, variables, etc. – Metadata driven processing • Provide clear paths of interaction with other major standards

Basic Structures • DDI 3 uses a model similar to SDMX in terms of the following: – Identifiable, Versionable, and Maintainable objects – The use of multiple schemas to describe different process sub-sections in the life-cycle – Use of schemes to facilitate reuse of common materials

DDI: Full content coverage for survey and administrative data • Conceptual coverage • Methodology • Data Collection • Processing – cleaning, paradata • Recoding and derivations • Variable and tabular content • Internal relationships • Physical storage • Data management

Plus: Relationships between studies • Comparison by design – Study series can inherit from earlier metadata – Capture changes only • Data integration – Mapping of codes between source and target – Capture comparison information • Comparison of abstract content models – Publication of reusable materials (code schemes, concept schemes, geographic structure, etc.)
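The "mapping of codes between source and target" point can be illustrated with a small sketch. This is not DDI syntax or any DDI tool's API: the code values, labels, and the `harmonize` helper are hypothetical, chosen only to show how a recorded mapping lets source codes be translated while flagging values the mapping does not cover.

```python
# Hypothetical marital-status codes from a source study mapped to a
# coarser target scheme. None of these values come from the slides.
SOURCE_TO_TARGET = {1: 1, 2: 1, 3: 2, 4: 3, 5: 3}
TARGET_LABELS = {
    1: "Married or partnered",
    2: "Widowed",
    3: "Separated or divorced",
}


def harmonize(source_codes, mapping):
    """Translate source codes to target codes.

    Returns the translated list (None where no mapping exists) and the
    sorted set of source codes that could not be mapped, so that gaps in
    the comparison information are visible instead of silently dropped.
    """
    harmonized = []
    unmapped = []
    for code in source_codes:
        if code in mapping:
            harmonized.append(mapping[code])
        else:
            harmonized.append(None)
            unmapped.append(code)
    return harmonized, sorted(set(unmapped))


codes, unmapped = harmonize([1, 3, 5, 9, 2], SOURCE_TO_TARGET)
labels = [TARGET_LABELS.get(c, "UNMAPPED") for c in codes]
```

Keeping the mapping as data rather than burying it in recode scripts is what allows it to be stored and published alongside the study metadata.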

Current Areas of DDI Development • Controlled vocabularies to improve machine actionability • Data collection methodology and process expansion for more depth and detail • Qualitative data • Increased comparison coverage • Tools

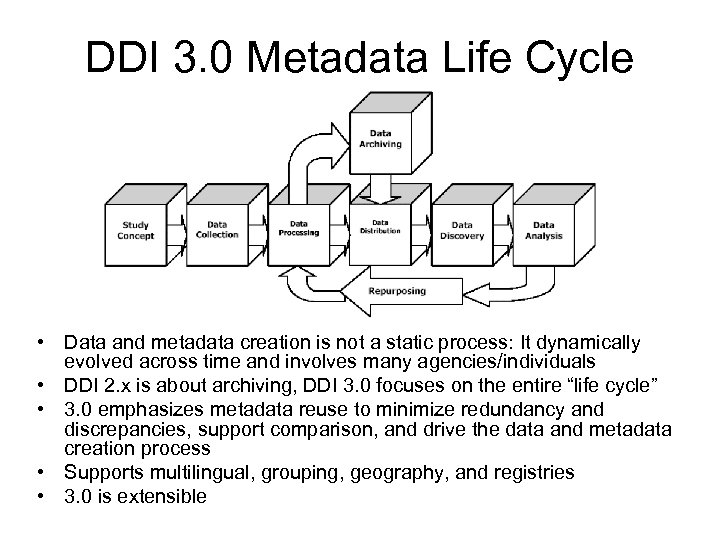

DDI 3.0 Metadata Life Cycle • Data and metadata creation is not a static process: it evolves dynamically over time and involves many agencies/individuals • DDI 2.x is about archiving, DDI 3.0 focuses on the entire “life cycle” • 3.0 emphasizes metadata reuse to minimize redundancy and discrepancies, support comparison, and drive the data and metadata creation process • Supports multilingual content, grouping, geography, and registries • 3.0 is extensible

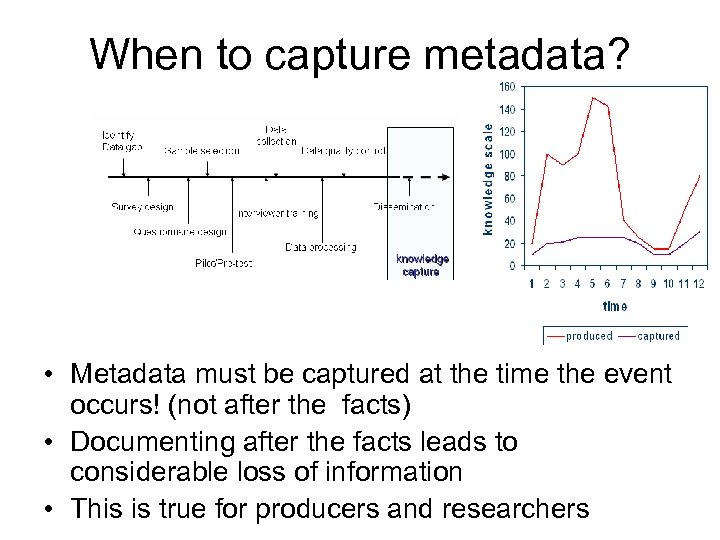

When to capture metadata? • Metadata must be captured at the time the event occurs! (not after the fact) • Documenting after the fact leads to considerable loss of information • This is true for producers and researchers

Reuse • DDI is designed around schemes (lists of items) for commonly reused information within a study such as categories, code schemes, concepts, universe, etc. – Items are “used” in multiple locations in a DDI document by referencing the item in the list – Enter once, use in multiple locations – Items can be versioned for management over time without having to change content in multiple locations
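The "enter once, use in multiple locations" idea can be sketched in a few lines. This is an illustration of reuse by reference, not DDI's actual data model: the `Category` class, the scheme layout, and the `EMPLOYED` variable are hypothetical (`GOSCHOL` is borrowed from the XML example later in the deck).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Category:
    """One item in a scheme: identified and versioned, entered once."""
    id: str
    version: str
    label: str


# A scheme is a maintained list of items keyed by identifier.
category_scheme = {
    "CAT_YES": Category("CAT_YES", "1.0", "Yes"),
    "CAT_NO": Category("CAT_NO", "1.0", "No"),
}

# Variables store references (ids) to scheme items, not copies of labels.
variables = {
    "GOSCHOL": ["CAT_YES", "CAT_NO"],
    "EMPLOYED": ["CAT_YES", "CAT_NO"],
}


def resolve(variable_name):
    """Look up the current labels for a variable through its references."""
    return [category_scheme[ref].label for ref in variables[variable_name]]


# Revising the item once in the scheme is enough: every variable that
# references it resolves to the new version without being edited itself.
category_scheme["CAT_YES"] = Category("CAT_YES", "1.1", "Yes, currently")
```

Because both variables point at the same scheme item, the single revision above is reflected wherever the item is used.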

Comparison and Registries • Information in DDI schemes can be published in external registries and used by multiple studies – Provides implicit comparison both within a study and between studies – Supports organizational consistency through the use of agreed content managed in registries – Referencing structured lists provides further context to individual items used in a study

Metadata driven processing • Capturing metadata upstream can provide over 90% of the building blocks needed to generate the remainder of the metadata • DDI supports embedding command code to run data processing events: driving data capture, data processing during and after collection, and post-collection recoding, derivations, and harmonization maps

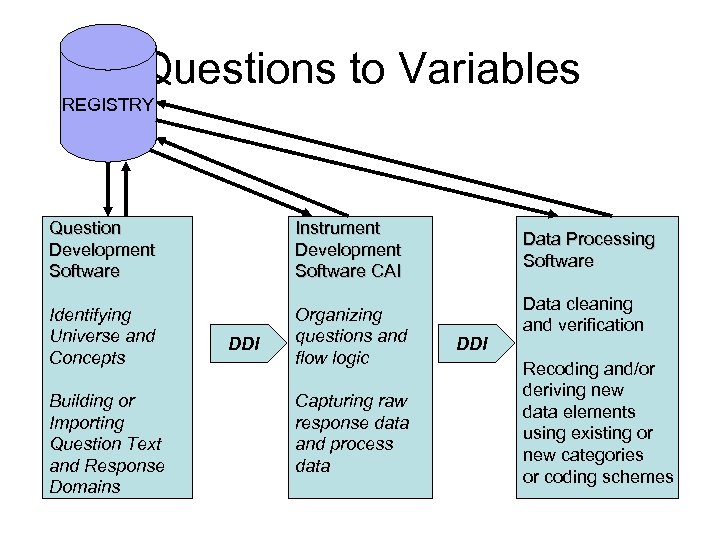

Questions to Variables • REGISTRY • Question Development Software • Instrument Development Software • CAI • Identifying Universe and Concepts • Organizing questions and flow logic • Building or Importing Question Text and Response Domains • DDI • Capturing raw response data and process data • Data Processing Software • DDI • Data cleaning and verification • Recoding and/or deriving new data elements using existing or new categories or coding schemes

Working with other standards • There is no single standard that does it all • DDI was specifically designed to support easy interaction with: – Dublin Core – mapping of citation elements and embedding native Dublin Core – ISO/IEC 11179 – working with an editor of the standard to reflect data element model and ISO/IEC 11179-5 naming conventions for registry-intended items

Standards continued – SDMX – DDI NCubes were revised to incorporate the ability to attach attributes to any area of a cube and map cleanly into and out of SDMX cubes. SDMX has added means of attaching fragments of DDI which provide source and processing information that can be indexed and delivered through SDMX tools. – ISO 19115 (ANZLIC) – Geographic elements in DDI are structured to reflect basic discovery elements used by geographic search engines and provide the detailed geographic structure information needed by GIS systems to incorporate the data accurately

DDI does not replace good content • DDI structures metadata to leverage content – Collection and processing – Discovery and access – Analysis and repurposing – Registries – Comparison • DDI is not a software application – Supports and informs software applications • DDI is a neutral archival structure – Preserving content and relationships

Value • Supports consistent use of concepts, questions, variables, etc. throughout the organization • Supports implicit comparison through reuse of content • Supports explicit comparison by mapping content between studies and to standard content • Retention of explicit relationships between data collection and the resulting data files • Early capture of a broad range of metadata at point of creation

Value - continued • Interoperability • Flexibility in data storage • Reuse of element structures • Strong data typing • Improved data mining between and across systems • Improved access to detailed metadata

DDI User Base • Archives and data libraries worldwide – Catalogs – Data delivery – Documentation delivery from data systems • Research Institutes/Services Data Centers – Documentation for data – Data search and analysis systems – Data management systems • International Organizations and National Statistical Agencies – Data collection and management

Archives and Data Libraries (examples) • Catalogs – ICPSR Data Catalog and Social Science Variable Database – CESSDA Data Portal – The Dataverse Network (formerly Virtual Data Collection) • Data delivery – California Digital Library “Counting California” – National Historical Geographic Information System (NHGIS) • Documentation delivery – Survey Documentation and Analysis (SDA) – Data Liberation Initiative Metadata Collection

Research Institutes/Service Data Centers (examples) • Documentation for data – German Microcensus (GESIS) – Institute for the Study of Labor (IZA) – US General Social Survey (NORC) • Data search and analysis systems – Nesstar – Canadian Research Data Centres (RDC’s) • Data management systems – Questionnaire Development Documentation System (University of Konstanz/GESIS)

Current DDI Products at ICPSR • Most existing products currently in DDI 2.1 with new additions moving to DDI 3 • DDI-XML variable-level codebooks output as PDF files for downloading by users • DDI-XML metadata records created initially by data depositors and edited by ICPSR staff to augment content and include additional fields • Increasing use of DDI for special projects: Social Science Variables Database, various harmonization and data processing tasks

Potential Use of DDI 3 at ICPSR • Information collected from data producers in pre-collection phase – Concept • Metadata output from CAI applications – Data Collection • Processor’s dashboard – Metadata Processing • Metadata mining: New faceted search tool to facilitate discovery through more precise searching – Data Discovery • Relational database for comparison and harmonization across studies – Repurposing

Potential Use of DDI 3 at ICPSR - 2 • Use of DDI in combination with other metadata standards, e.g., Dublin Core, MARC, PREMIS • Beginning to bring FEDORA “object-centered” implementation concepts into data processing and data preservation strategies • Processor’s dashboard – Data Processing • Relationships of study object to file object • DDI 3 as “wrapper” for all ICPSR metadata?

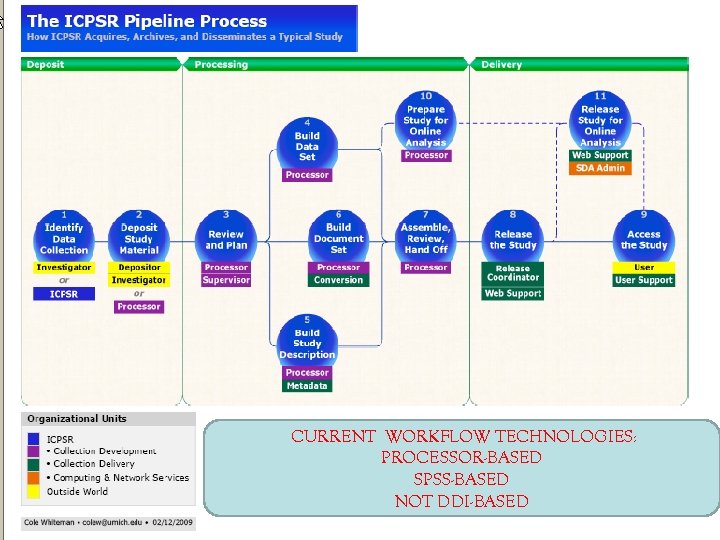

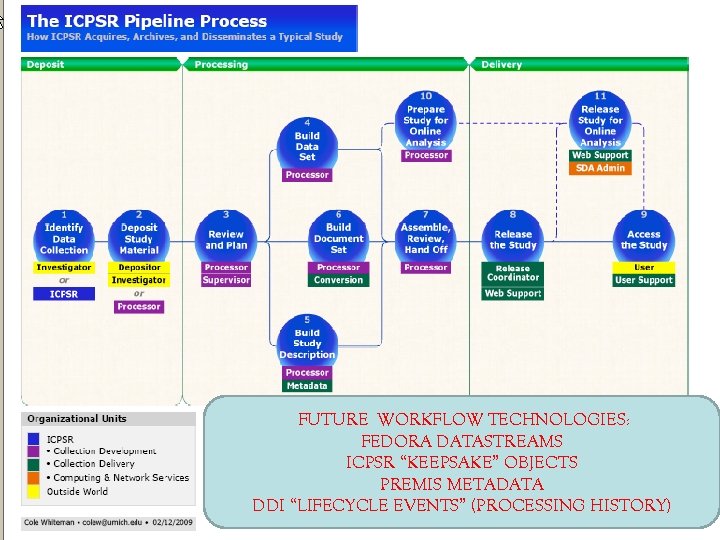

CURRENT WORKFLOW TECHNOLOGIES: PROCESSOR-BASED SPSS-BASED NOT DDI-BASED

FUTURE WORKFLOW TECHNOLOGIES: FEDORA DATASTREAMS ICPSR “KEEPSAKE” OBJECTS PREMIS METADATA DDI “LIFECYCLE EVENTS” (PROCESSING HISTORY)

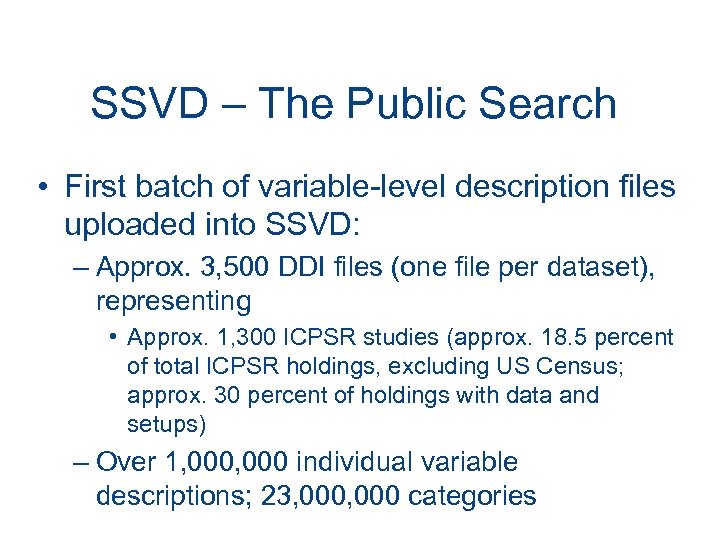

SSVD – The Public Search • First batch of variable-level description files uploaded into SSVD: – Approx. 3,500 DDI files (one file per dataset), representing • Approx. 1,300 ICPSR studies (approx. 18.5 percent of total ICPSR holdings, excluding US Census; approx. 30 percent of holdings with data and setups) – Over 1,000 individual variable descriptions; 23,000 categories

SSVD – The Public Search • New database finalized Fall 2008 • Built to match DDI 3.0 data model • Both DDI 2.x and DDI 3.0 compliant – Designed to accept both DDI 2.x and 3.0 input and produce output in both versions • ICPSR version currently uploads DDI 2.1 and generates DDI 3.0 individual variable descriptions.

SSVD – The Public Search Moving forward… • Transition to automated DDI upload – DDI uploaded at the time of study publication – First quality check performed by study processing staff – Acceptable DDI immediately released for public view – Problematic DDI suppressed from public view for further review, and upgrade as appropriate

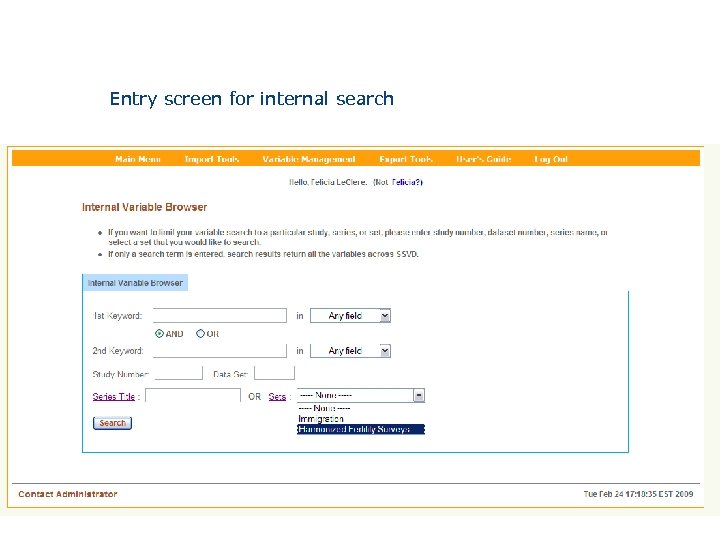

Entry screen for internal search

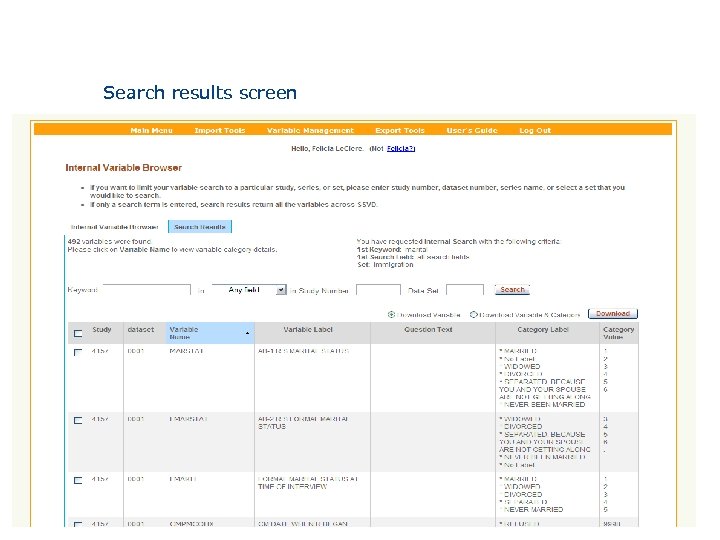

Search results screen

IPUMS at MPC • Did not use DDI because DDI 2 cannot handle translation tables • Currently in the process of mapping DDI 3 codebook output from IPUMS database • Importing DDI 2 files from Microdata Toolkit into processing, validation, and harmonization system

NHGIS • Contains historical aggregate data from population, housing, agricultural, and economic censuses as well as BEA data from 1790 to 2000 • Runs from DDI 2 nCube descriptions • Searches variables, identifies related nCube tables, determines geographic availability • Generates data subsets with geographic links to objects in NHGIS shape files, and shape files

Future Plans • Funding has been obtained to improve search and extraction system • Current limitations of the system reflect limitations of DDI 2 • Moving to DDI 3 will support: – broader cross-survey searching – identification of common dimensions between NCubes over time – harmonization instructions as well as common transformations such as calculation of medians

International Organizations and National Statistical Agencies • International Household Survey Network (IHSN) – Major international organizations involved – Coordination of activities – Adopted DDI 1/2.x as standard – Developed the Microdata Management Toolkit and related tools / guidelines – http://www.surveynetwork.org • Accelerated Data Program (ADP) – World Bank / Paris21 – Implement IHSN activities in developing countries • Task 1. Documentation and dissemination of existing survey microdata. – Has introduced DDI in national statistical agencies in over 50 countries – http://www.surveynetwork.org/adp

INDEPTH/DSS Example • 38 Demographic Surveillance Sites in 19 countries spanning Africa, South Asia, Central America and Oceania • Diverse yet similar health research portfolios • Data management goals: – Standardize and harmonize data collection tools – Cross-site comparability of information – Sharing data effectively and efficiently

Reasons for choosing DDI • “It will be ideal to describe our data for the purposes of the Data Repository” • “It has really powerful features that will enable us to standardise several facets of our work.” • “I originally underestimated the usefulness DDI will have as a means to harmonised data collection between sites.” • Ability to expand comparison and harmonization with additional groups such as AIDS research team

Statistical Agencies • BLS considering publication of category and coding standards supported by BLS such as NAICS, SOC etc • Statistics Canada considering publishing concept schemes in DDI 3 for use by the research community • DDI is becoming more widely used for survey and census collection in developing countries (primarily Africa)

MQDS Version 1 • Extracted metadata from Blaise data model as XML tagged data • Provided user interface for selection of – Blaise files – Instrument questions and sections – Types of metadata to extract – Languages to display – Style sheet for generation of instrument documentation or codebook

Using MQDS V1 XML: Codebook in Five Languages • National Latino and Asian American Study • www.icpsr.umich.edu/CPES

MQDS Version 1 • Limitations – XML not DDI-compliant • DDI Version 2 did not have XML tags for all metadata provided by Blaise • Did not provide easy means of adding XML tags without becoming noncompliant – XML files for complex surveys can be very large (text files) • Entire files had to be processed in computer memory • Limited ability to fully automate documentation

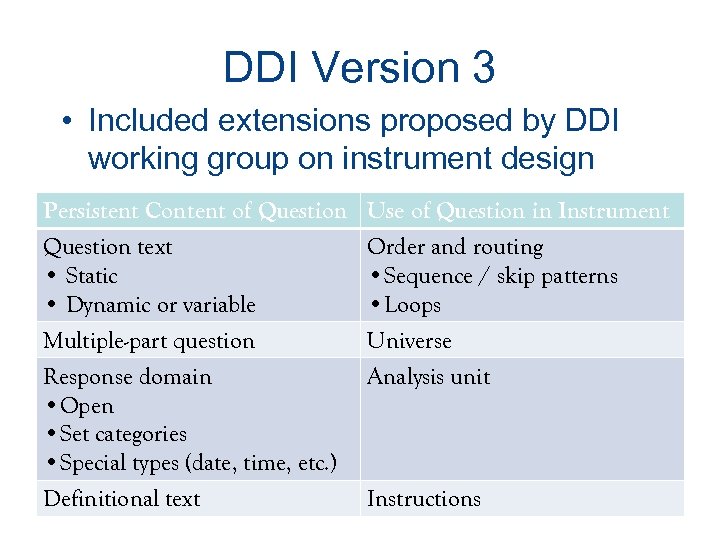

DDI Version 3 • Included extensions proposed by DDI working group on instrument design Persistent Content of Question text • Static • Dynamic or variable Use of Question in Instrument Order and routing • Sequence / skip patterns • Loops Multiple-part question Response domain • Open • Set categories • Special types (date, time, etc.) Universe Analysis unit Definitional text Instructions

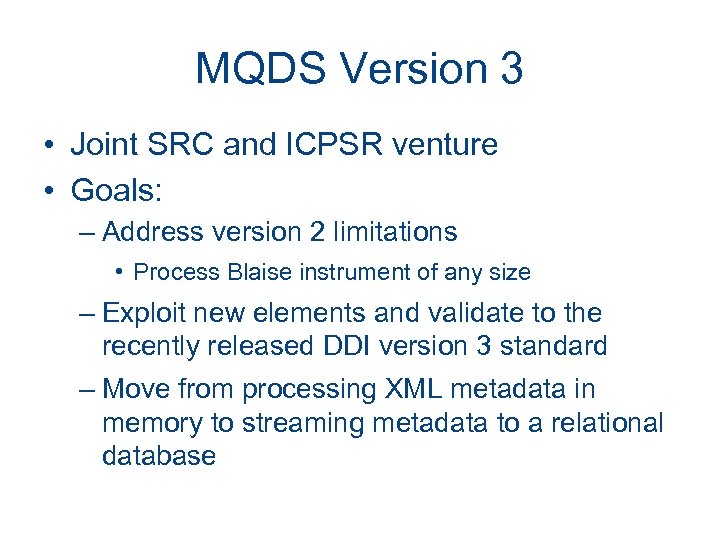

MQDS Version 3 • Joint SRC and ICPSR venture • Goals: – Address version 2 limitations • Process Blaise instrument of any size – Exploit new elements and validate to the recently released DDI version 3 standard – Move from processing XML metadata in memory to streaming metadata to a relational database

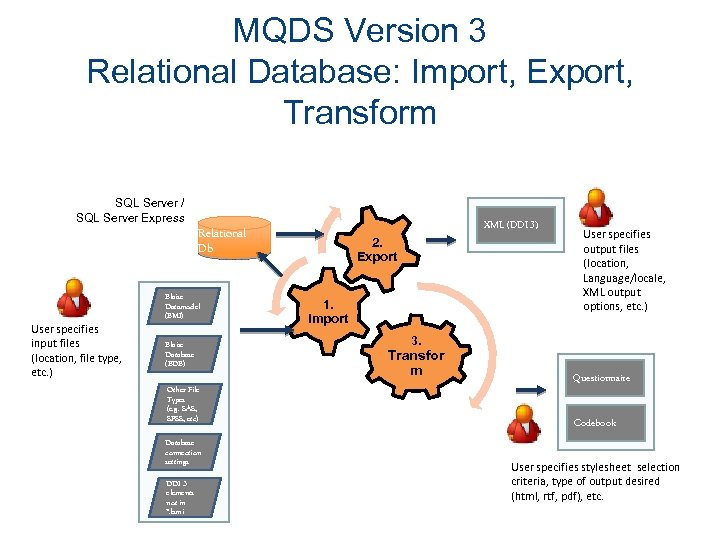

MQDS Version 3 Relational Database: Import, Export, Transform • SQL Server / SQL Server Express relational database • 1. Import: User specifies input files (location, file type, etc.) – Blaise Datamodel (BMI) – Blaise Database (BDB) – Other File Types (e.g. SAS, SPSS, etc.) – Database connection settings – DDI 3 elements not in *.bmi • 2. Export: User specifies output files (location, language/locale, XML output options, etc.) – XML (DDI 3) • 3. Transform: User specifies stylesheet selection criteria, type of output desired (html, rtf, pdf), etc. – Questionnaire – Codebook

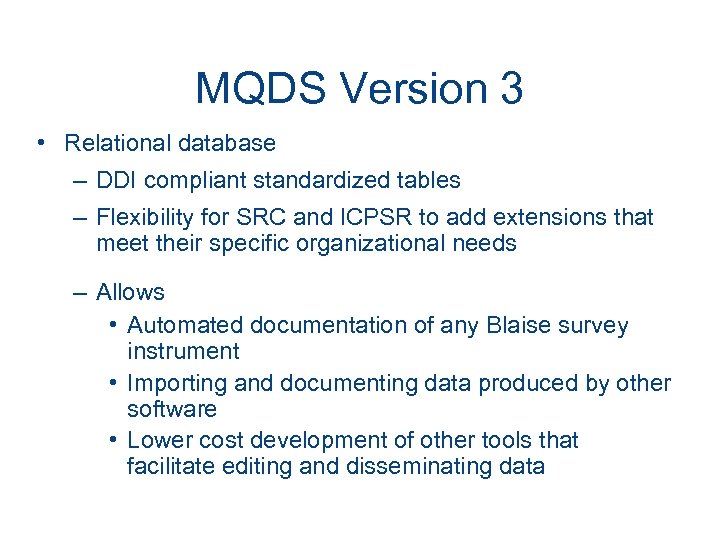

MQDS Version 3 • Relational database – DDI compliant standardized tables – Flexibility for SRC and ICPSR to add extensions that meet their specific organizational needs – Allows • Automated documentation of any Blaise survey instrument • Importing and documenting data produced by other software • Lower cost development of other tools that facilitate editing and disseminating data

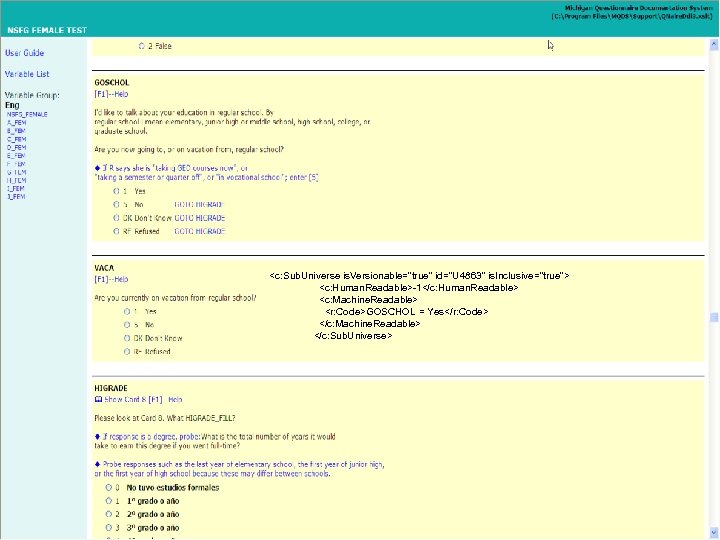

<c:SubUniverse isVersionable="true" id="U4863" isInclusive="true"> <c:HumanReadable>-1</c:HumanReadable> <c:MachineReadable> <r:Code>GOSCHOL = Yes</r:Code> </c:MachineReadable> </c:SubUniverse>
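A fragment like the sub-universe above can be read with standard XML tooling. The sketch below uses Python's `xml.etree.ElementTree`. The slide does not show the namespace declarations for the `c:` and `r:` prefixes, so the URIs here are placeholders assumed only so that the fragment parses. The element and attribute names follow the slide, with extraction spacing removed, and `read_sub_universe` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

# Placeholder namespace URIs (assumption): the slide omits the xmlns
# declarations, so these are NOT the official DDI namespaces.
NS = {
    "c": "urn:example:ddi:conceptualcomponent",
    "r": "urn:example:ddi:reusable",
}

FRAGMENT = """
<c:SubUniverse xmlns:c="urn:example:ddi:conceptualcomponent"
               xmlns:r="urn:example:ddi:reusable"
               isVersionable="true" id="U4863" isInclusive="true">
  <c:HumanReadable>-1</c:HumanReadable>
  <c:MachineReadable>
    <r:Code>GOSCHOL = Yes</r:Code>
  </c:MachineReadable>
</c:SubUniverse>
"""


def read_sub_universe(xml_text):
    """Return the id, inclusion flag, and machine-readable condition."""
    root = ET.fromstring(xml_text.strip())
    code = root.find("c:MachineReadable/r:Code", NS)
    return {
        "id": root.get("id"),
        "inclusive": root.get("isInclusive") == "true",
        "condition": code.text if code is not None else None,
    }


sub_universe = read_sub_universe(FRAGMENT)
```

The machine-readable condition is returned as plain text; evaluating it against data would need a parser for the instrument's own expression language, which is outside this sketch.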

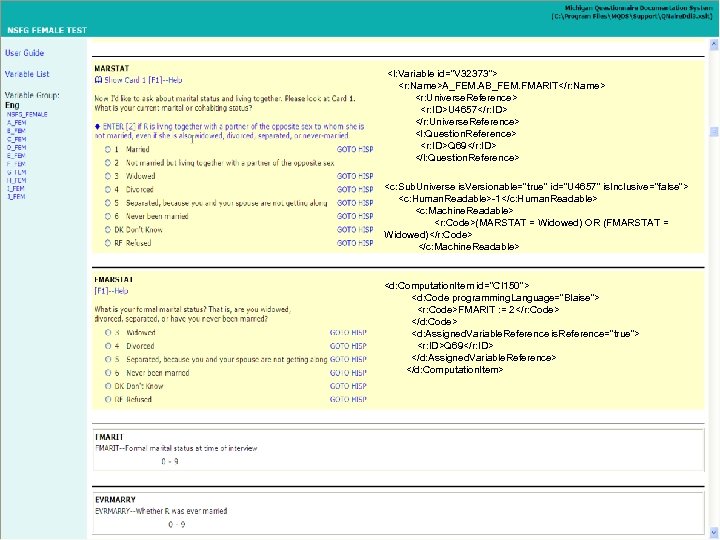

<l:Variable id="V32373"> <r:Name>A_FEM.AB_FEM.FMARIT</r:Name> <r:UniverseReference> <r:ID>U4657</r:ID> </r:UniverseReference> <l:QuestionReference> <r:ID>Q69</r:ID> </l:QuestionReference> <c:SubUniverse isVersionable="true" id="U4657" isInclusive="false"> <c:HumanReadable>-1</c:HumanReadable> <c:MachineReadable> <r:Code>(MARSTAT = Widowed) OR (FMARSTAT = Widowed)</r:Code> </c:MachineReadable> <d:ComputationItem id="CI150"> <d:Code programmingLanguage="Blaise"> <r:Code>FMARIT := 2</r:Code> </d:Code> <d:AssignedVariableReference isReference="true"> <r:ID>Q69</r:ID> </d:AssignedVariableReference> </d:ComputationItem>

Colectica Feature Overview

Current Focus: Data Collection

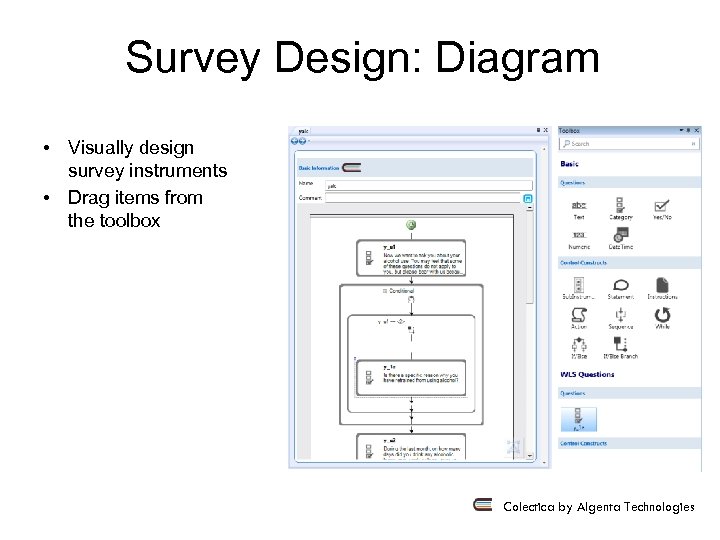

Survey Design: Diagram • Visually design survey instruments • Drag items from the toolbox Colectica by Algenta Technologies

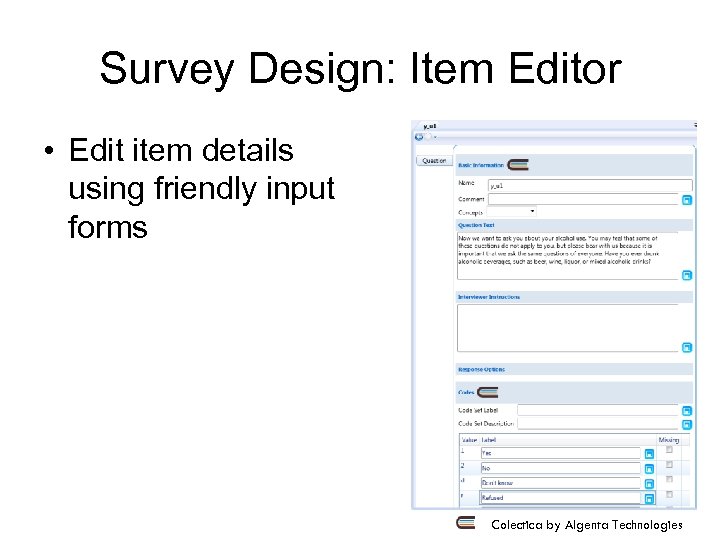

Survey Design: Item Editor • Edit item details using friendly input forms

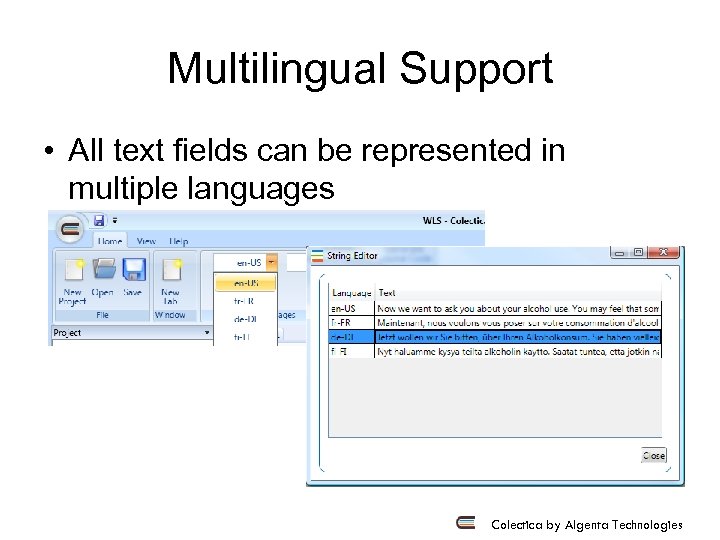

Multilingual Support • All text fields can be represented in multiple languages

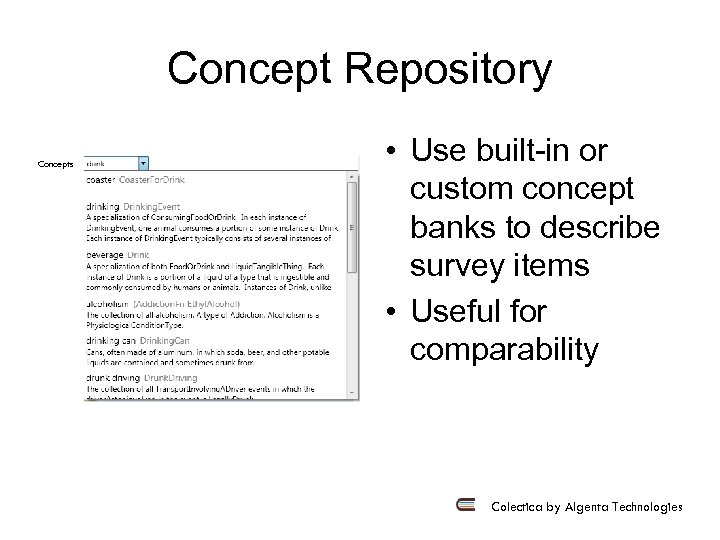

Concept Repository Concepts • Use built-in or custom concept banks to describe survey items • Useful for comparability

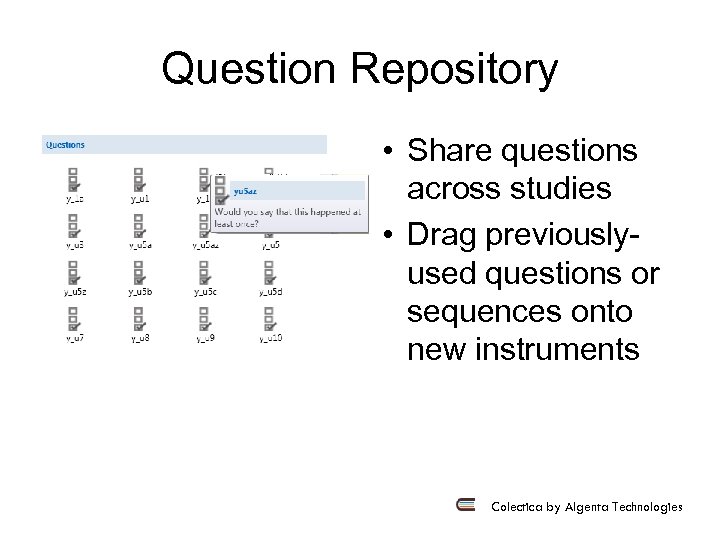

Question Repository • Share questions across studies • Drag previously used questions or sequences onto new instruments

Import Existing CAI Code • Import from: – Blaise® – CASES – CSPro • Support for additional languages can be added

Generate CAI Source Code • Currently support CASES • Blaise® and CSPro coming soon • Support for additional CAI systems can be added

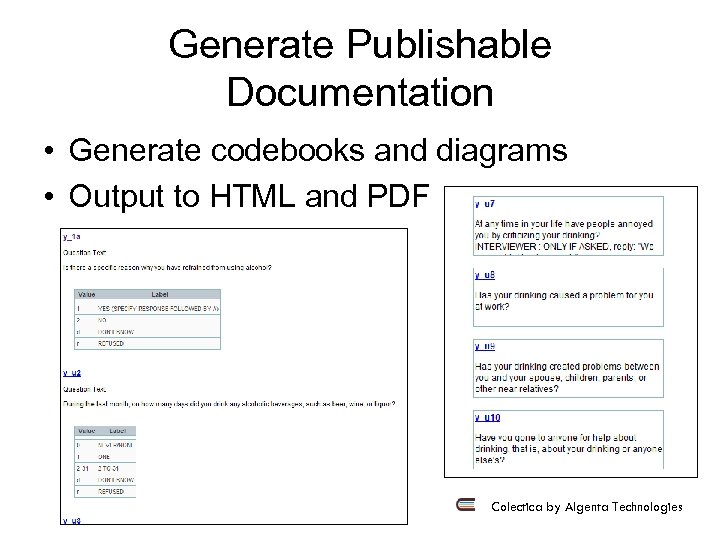

Generate Publishable Documentation • Generate codebooks and diagrams • Output to HTML and PDF

Also: Study Concept & Design • Basic support for Study Concept & Design documentation

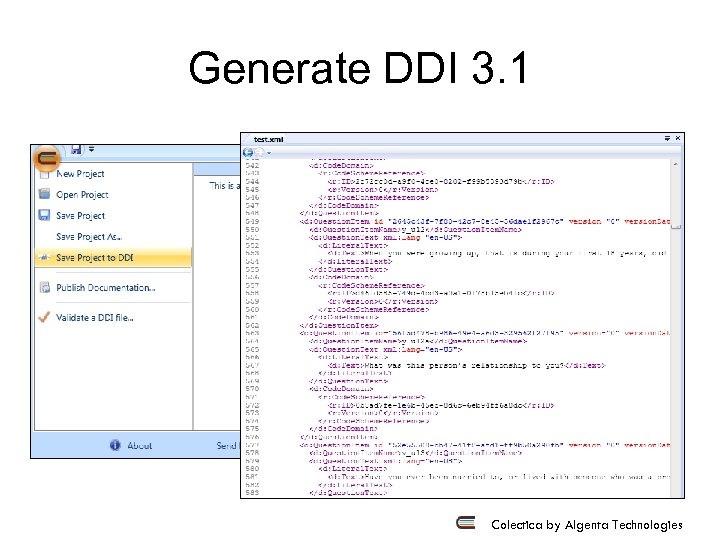

Generate DDI 3.1

Additional Information • Beta available now • Web: http://www.colectica.com/ • Email: contact@algenta.com

Thank you • DDI Alliance – http://www.ddialliance.org • Wendy Thomas – wlt@pop.umn.edu
