8553904c5574aaabc18aea562b8392bc.ppt

- Number of slides: 37

Data and Debora: New Avenues in the Computational Analysis of the Old Testament

Wido van Peursen, VU University Amsterdam, Faculty of Theology

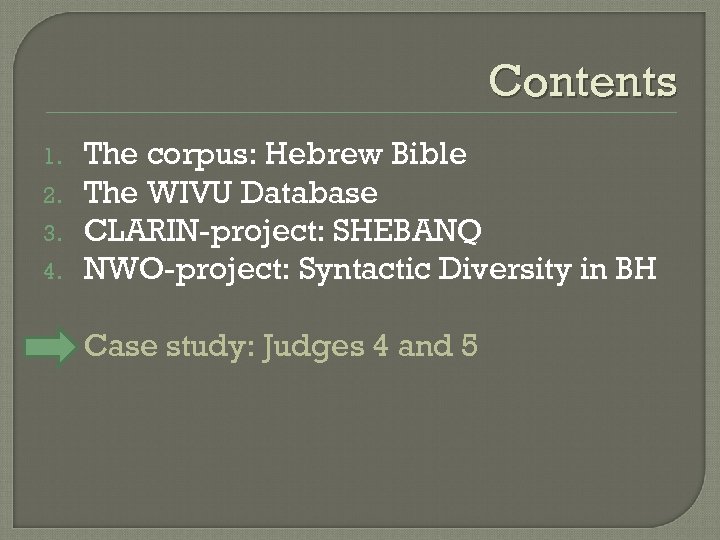

Contents
1. The corpus: Hebrew Bible
2. The WIVU Database
3. CLARIN-project: SHEBANQ
4. NWO-project: Syntactic Diversity in BH
5. Case study: Judges 4 and 5

The Corpus: Hebrew Bible
- Ca. 400,000 words
- Probably composed over a period of ca. 1000 years (1200-200 BC)
- Complex transmission history
- Oldest complete MS: Codex Leningradensis, 1008/9 AD
- Various linguistic layers (e.g. vowel signs)
- No native speakers

The WIVU Database
WIVU database of the Hebrew Bible [WIVU = Werkgroep Informatica Vrije Universiteit]
- Created since the 1970s
- Linguistic levels:
  - Morphology (encoding rather than tagging!)
  - Words
  - Phrases
  - Clauses
  - Sentences
  - Text hierarchy



SHEBANQ System for HEBrew text: ANnotations for Queries and markup

SHEBANQ Challenges:
1. No dedicated space on the web where an authorized version of this resource is guaranteed to exist.
2. No possibility to annotate it, link to it or build (open source) tools around it.
3. Results of existing queries cannot be shown on the web.
4. EMDROS is maintained by a one-person private company.
5. Mainly used by specialists in Bible & Computer.

SHEBANQ Mission:
- To build a bridge between the linguistically annotated Hebrew text corpus and biblical scholars.

Three steps:
1. make text & annotations available to scholars;
2. demonstrate how queries can function to address research questions: repository of saved queries;
3. give textual scholarship a more empirical basis, by creating the opportunity of unique identifiers referring to saved queries.


SHEBANQ Example: “in-his-feet”:
- a. “on foot” or
- b. “in his footsteps”.

Disambiguation:
1. intuitive/contextual or
2. on the basis of pattern recognition (participants/agreement)

SHEBANQ
[she-sang <Pr>] [Deborah and Barak <Su>]


Syntactic Diversity in Biblical Hebrew

Does Syntactic Variation reflect Language Change? Tracing Syntactic Diversity in Biblical Hebrew Texts

Syntactic Diversity in Biblical Hebrew
Explanations for linguistic diversity:
- Genre
- Chronology
- Language contact (Aramaic)
- Dialects
- Textual transmission
- Oral versus written layers

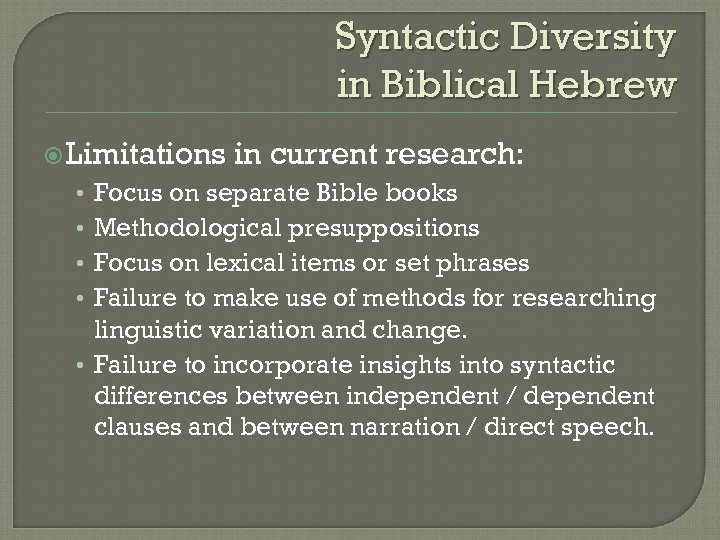

Syntactic Diversity in Biblical Hebrew
Limitations in current research:
- Focus on separate Bible books
- Methodological presuppositions
- Focus on lexical items or set phrases
- Failure to make use of methods for researching linguistic variation and change
- Failure to incorporate insights into syntactic differences between independent / dependent clauses and between narration / direct speech

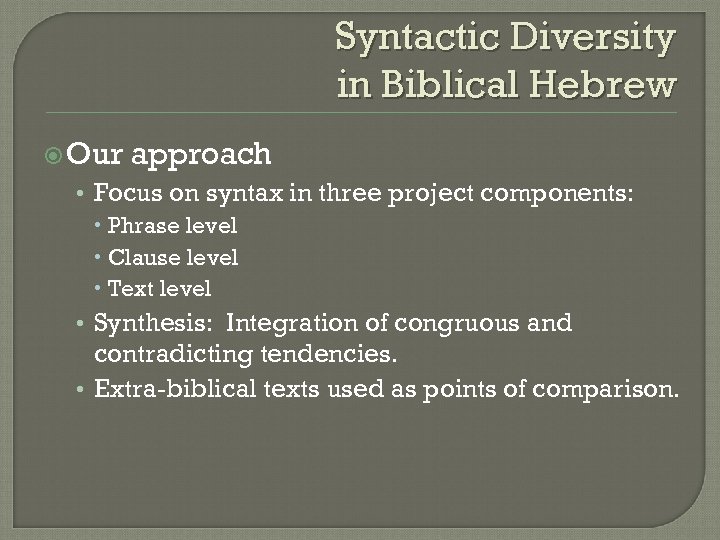

Syntactic Diversity in Biblical Hebrew
Our approach
- Focus on syntax in three project components:
  - Phrase level
  - Clause level
  - Text level
- Synthesis: integration of congruous and contradicting tendencies.
- Extra-biblical texts used as points of comparison.


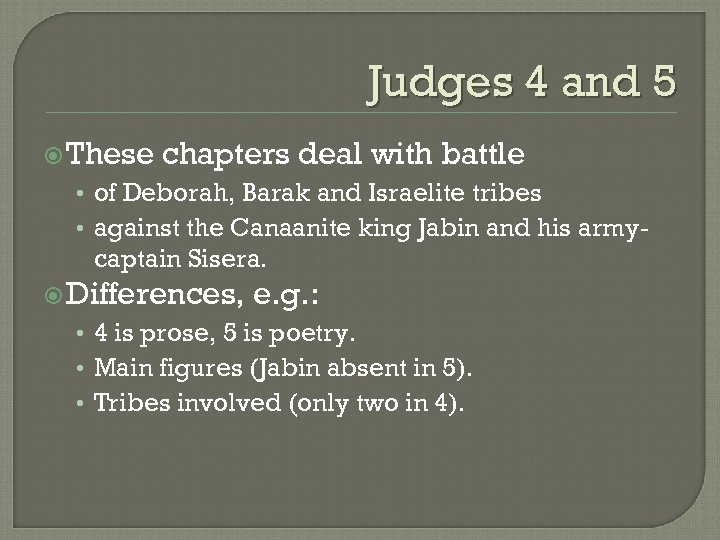

Judges 4 and 5
These chapters deal with the battle
- of Deborah, Barak and Israelite tribes
- against the Canaanite king Jabin and his army captain Sisera.

Differences, e.g.:
- 4 is prose, 5 is poetry.
- Main figures (Jabin absent in 5).
- Tribes involved (only two in 4).

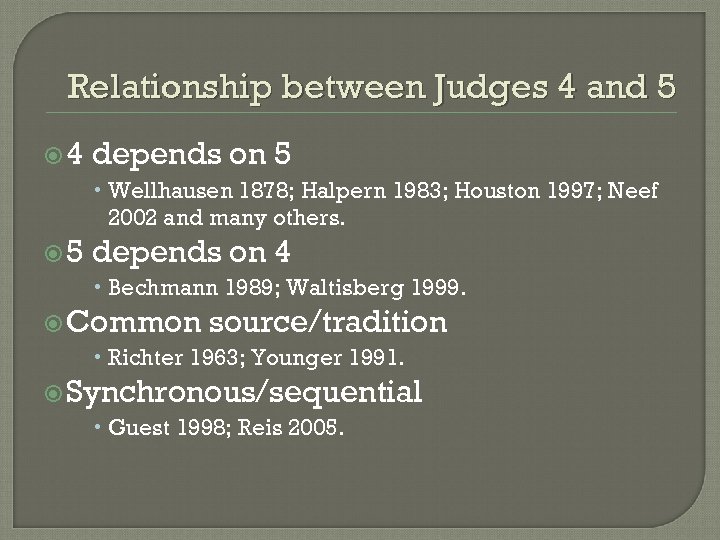

Relationship between Judges 4 and 5
- 4 depends on 5: Wellhausen 1878; Halpern 1983; Houston 1997; Neef 2002 and many others.
- 5 depends on 4: Bechmann 1989; Waltisberg 1999.
- Common source/tradition: Richter 1963; Younger 1991.
- Synchronous/sequential: Guest 1998; Reis 2005.

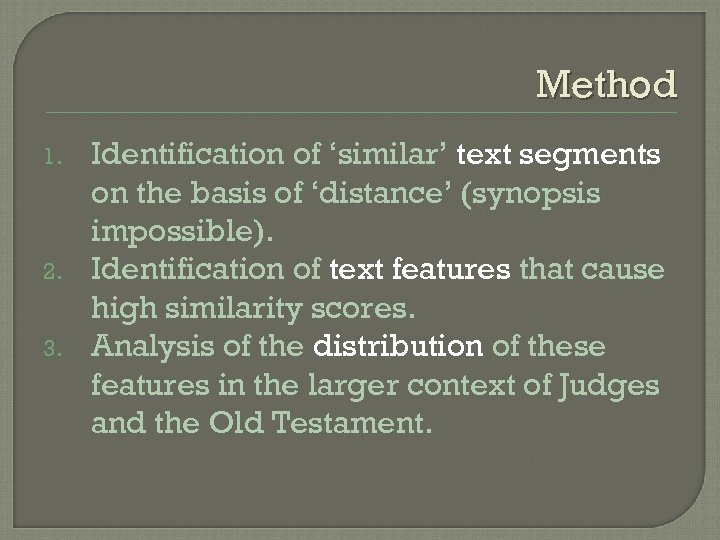

Method
1. Identification of ‘similar’ text segments on the basis of ‘distance’ (synopsis impossible).
2. Identification of text features that cause high similarity scores.
3. Analysis of the distribution of these features in the larger context of Judges and the Old Testament.

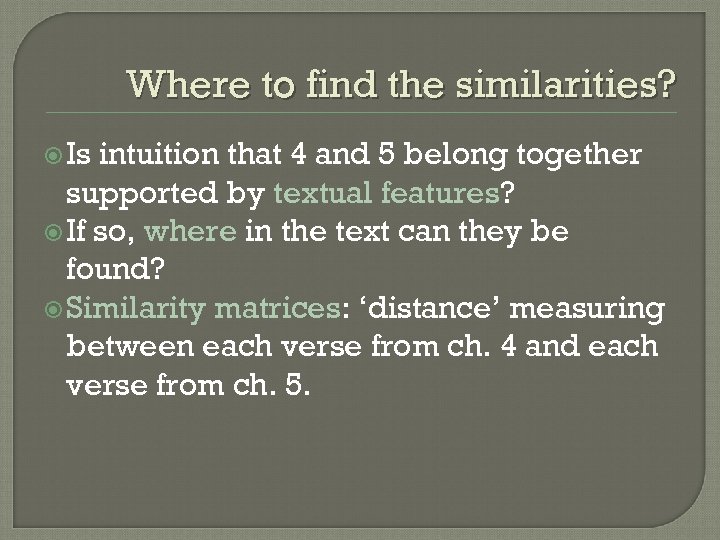

Where to find the similarities?
- Is the intuition that 4 and 5 belong together supported by textual features?
- If so, where in the text can they be found?
- Similarity matrices: measuring the ‘distance’ between each verse from ch. 4 and each verse from ch. 5.
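A similarity matrix of this kind can be sketched in a few lines. The example below is only an illustration under stated assumptions: the verse labels and lexeme sets are hypothetical toy data (not taken from the WIVU database), and the cell value is simply the number of shared lexemes between a row verse and a column verse.

```python
from typing import Dict, List, Set

# Hypothetical toy data: verse label -> set of lexemes (NOT real corpus data).
chapter_a: Dict[str, Set[str]] = {
    "A:1": {"king", "go", "mountain"},
    "A:2": {"tent", "wife", "say"},
}
chapter_b: Dict[str, Set[str]] = {
    "B:1": {"mountain", "king", "sing"},
    "B:2": {"wife", "tent", "bless"},
    "B:3": {"river", "star"},
}


def shared_lexeme_matrix(
    rows: Dict[str, Set[str]], cols: Dict[str, Set[str]]
) -> List[List[int]]:
    """Count shared lexemes for every (row verse, column verse) pair."""
    return [[len(r & c) for c in cols.values()] for r in rows.values()]


matrix = shared_lexeme_matrix(chapter_a, chapter_b)

# Print one matrix row per verse of the first chapter.
for label, row in zip(chapter_a, matrix):
    print(label, row)
```

High values in a cell point to verse pairs that deserve a closer look; any other distance measure could be substituted for the shared-lexeme count.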

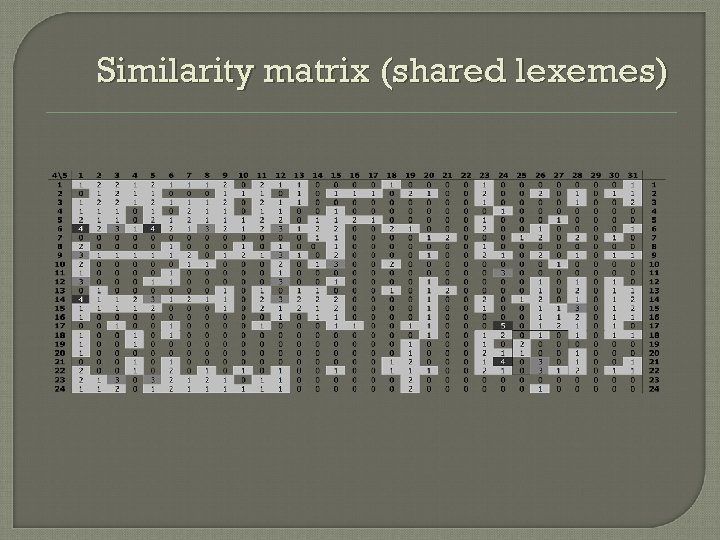

Similarity matrix (shared lexemes)

Measuring distances (1)
- Shared lexemes: the more shared lexemes, the smaller the distance.
- ‘Noise’, e.g. ‘and’ > Stoplist: exclude frequent particles etc.
- Selection of content words on the basis of part of speech: only words with inflection (nouns, verbs, adjectives).
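The two filters (stoplist and part-of-speech selection) might look as follows. This is a minimal sketch: the stoplist entries, the part-of-speech labels and the tagged tokens are assumptions made for illustration and do not reproduce the database's actual encoding.

```python
from typing import Iterable, Set, Tuple

# Assumed stoplist of frequent particles (illustrative only).
STOPLIST = {"and", "the", "to"}
# Parts of speech with inflection, as named on the slide.
CONTENT_POS = {"noun", "verb", "adjective"}


def content_lexemes(tokens: Iterable[Tuple[str, str]]) -> Set[str]:
    """Keep lexemes with an inflected part of speech that are not on the stoplist."""
    return {
        lexeme
        for lexeme, pos in tokens
        if pos in CONTENT_POS and lexeme not in STOPLIST
    }


# Hypothetical tagged tokens as (lexeme, part of speech) pairs.
verse = [
    ("and", "conjunction"),
    ("say", "verb"),
    ("Deborah", "noun"),
    ("to", "preposition"),
    ("Barak", "noun"),
]

print(sorted(content_lexemes(verse)))
```

In this sketch proper nouns are tagged as nouns, so names survive the filter.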

Measuring distances (2)
- Basic unit for text comparison: the verse, but the ‘verse’ is based on traditional unit delimitation.
- Differences in verse size may affect results.
- Jaccard Index: the number of shared lexemes (the intersection) divided by the total number of distinct lexemes in both verses (the union).

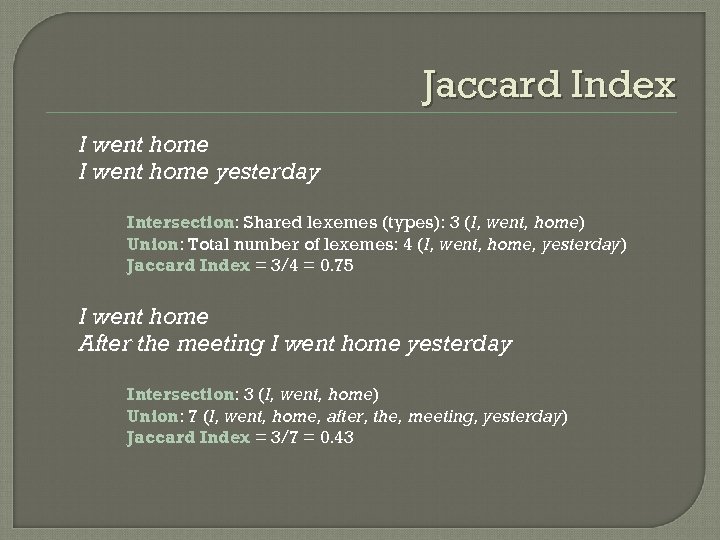

Jaccard Index

Example 1: “I went home” / “I went home yesterday”
- Intersection: shared lexemes (types): 3 (I, went, home)
- Union: total number of lexemes: 4 (I, went, home, yesterday)
- Jaccard Index = 3/4 = 0.75

Example 2: “I went home” / “After the meeting I went home yesterday”
- Intersection: 3 (I, went, home)
- Union: 7 (I, went, home, after, the, meeting, yesterday)
- Jaccard Index = 3/7 = 0.43
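The two worked examples can be checked with a short script. It is a sketch only: it treats whitespace-separated, lower-cased words as lexemes, which is an assumption that suits the English toy sentences but not a real lemmatised Hebrew text.

```python
def jaccard_index(a: str, b: str) -> float:
    """Size of the intersection of the two word sets divided by the size of their union."""
    set_a = set(a.lower().split())
    set_b = set(b.lower().split())
    union = set_a | set_b
    if not union:  # two empty inputs: define the index as 0.0
        return 0.0
    return len(set_a & set_b) / len(union)


short = "I went home"

print(jaccard_index(short, "I went home yesterday"))  # 0.75
print(round(jaccard_index(short, "After the meeting I went home yesterday"), 2))  # 0.43
```

The same three shared words yield a lower score against the longer sentence, which illustrates how differences in verse size affect the result.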

Measuring distances (3)
- Shared lexemes: ‘feature-based’.
- Also ‘blind’ methods, based on mathematical characteristics of the digital representation of the text, e.g. Normalized Compression Distance (NCD).
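As an illustration of a ‘blind’ measure, NCD can be approximated with any general-purpose compressor. The sketch below uses the usual definition NCD(x, y) = (C(xy) - min(C(x), C(y))) / max(C(x), C(y)), where C is the compressed size. The choice of `zlib` and the sample strings are assumptions made for this example; the slides do not say which compressor was used.

```python
import zlib


def compressed_size(data: bytes) -> int:
    """Length in bytes of the zlib-compressed input (maximum compression level)."""
    return len(zlib.compress(data, 9))


def ncd(x: str, y: str) -> float:
    """Normalized Compression Distance between two strings, approximated with zlib."""
    bx, by = x.encode("utf-8"), y.encode("utf-8")
    cx, cy = compressed_size(bx), compressed_size(by)
    cxy = compressed_size(bx + by)
    return (cxy - min(cx, cy)) / max(cx, cy)


# Illustrative strings only, not corpus data.
a = "deborah and barak the son of abinoam sang on that day"
b = "the quick brown fox jumps over the lazy dog near a river"

print("identical texts:", ncd(a, a))
print("different texts:", ncd(a, b))
```

Identical texts give a value close to 0 and unrelated texts a value close to 1. On very short inputs the compressor's fixed overhead distorts the scores, so the numbers are only indicative.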

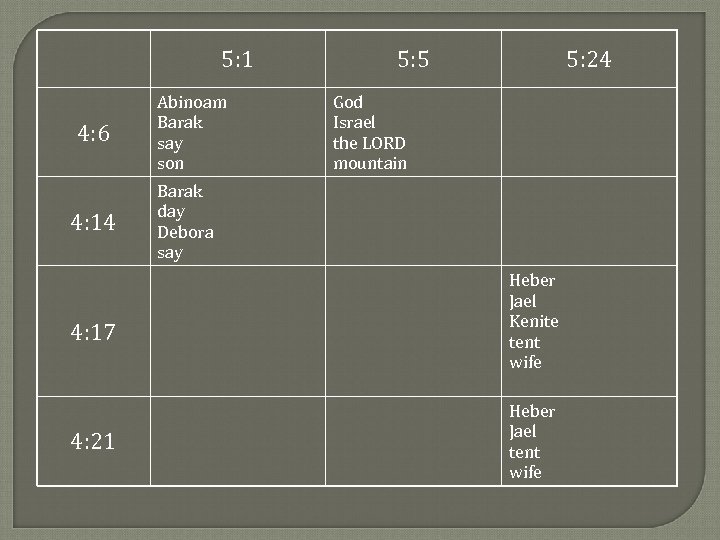

What textual features cause high similarity scores? (1)
Example: verse pairs with the highest number of shared lexemes (4 or more)

- 4:6 and 5:1: Abinoam, Barak, say, son
- 4:6 and 5:5: God, Israel, the LORD, mountain
- 4:14 and 5:1: Barak, day, Debora, say
- 4:17 and 5:24: Heber, Jael, Kenite, tent, wife
- 4:21 and 5:24: Heber, Jael, tent, wife

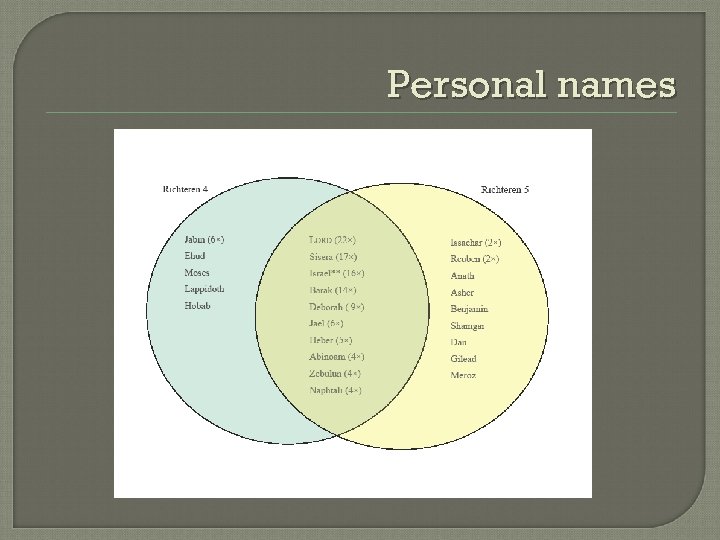

What textual features cause high similarity scores? (2)
- Proper nouns: ‘Barak’, ‘Israel’.
- Common nouns that are part of proper noun phrases: ‘wife’ in ‘Jael the wife of Heber’; ‘son’ in ‘Barak the son of Abinoam’.
- Other verbs and common nouns: ‘say’, ‘tent’, ‘day’.
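Sorting shared lexemes by category, as done above, is easy to automate. The following sketch groups one set of shared lexemes from the example (Abinoam, Barak, say, son) by part of speech. The lookup table is assigned by hand for illustration and is an assumption, not output from the database.

```python
from collections import defaultdict
from typing import Dict, List

# One group of shared lexemes from the example slide.
shared = ["Abinoam", "Barak", "say", "son"]

# Hand-assigned part-of-speech labels (illustrative assumption).
POS_LOOKUP: Dict[str, str] = {
    "Abinoam": "proper noun",
    "Barak": "proper noun",
    "say": "verb",
    "son": "common noun",
}


def group_by_pos(lexemes: List[str], pos_lookup: Dict[str, str]) -> Dict[str, List[str]]:
    """Group lexemes by part of speech; unlisted lexemes go under 'unknown'."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for lexeme in lexemes:
        groups[pos_lookup.get(lexeme, "unknown")].append(lexeme)
    return dict(groups)


groups = group_by_pos(shared, POS_LOOKUP)
proper_share = len(groups.get("proper noun", [])) / len(shared)

print(groups)
print("share of proper nouns:", proper_share)
```

Half of the shared lexemes in this group are proper nouns, and ‘son’ only occurs as part of a proper noun phrase.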

Personal names

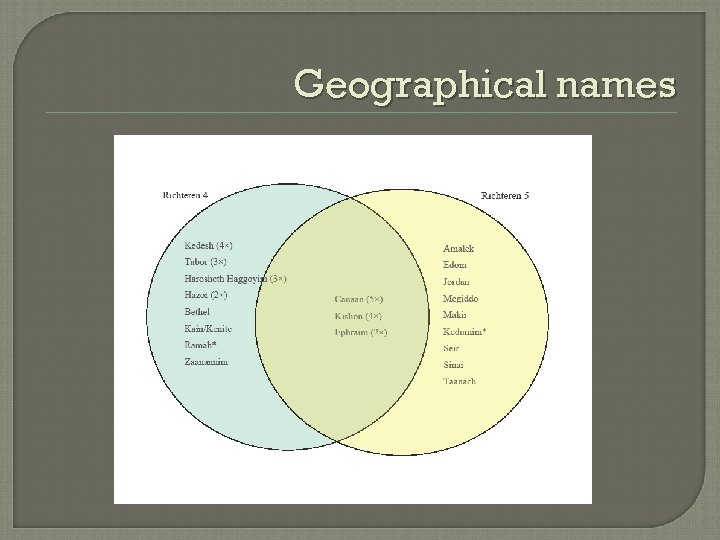

Geographical names

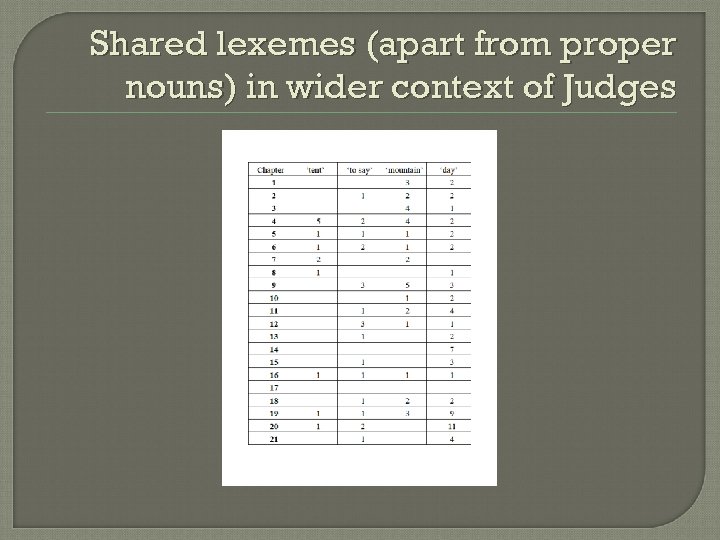

Shared lexemes (apart from proper nouns) in wider context of Judges

Observations
- High similarity scores in places that show a high concentration of proper nouns.
- Even within the category of proper nouns, considerable differences.
- Shared common nouns and verbs: frequent words such as ‘day’, ‘say’. No significant concentration.

Conclusions
- In case of literary dependency we would expect at least some concentration of shared lexemes.
- Significant number of shared lexemes only in the case of proper nouns.
- But proper nouns suggest shared traditions, rather than literary dependency.
