
- Number of slides: 22

Data Analysis – Present & Future

Nick Brook, University of Bristol

- Generic Requirements & Introduction
- Experiment-specific approaches

3rd May ’03, Nick Brook – 4th LHC Symposium

Complexity of the Problem

- Detectors: ~2 orders of magnitude more channels than today
- Triggers must choose correctly only 1 event in every 400,000
- High-level triggers are software-based
- Computer resources will not be available in a single location

Complexity of the Problem

Major challenges associated with:

- Communication and collaboration at a distance
- Distributed computing resources
- Remote software development and physics analysis

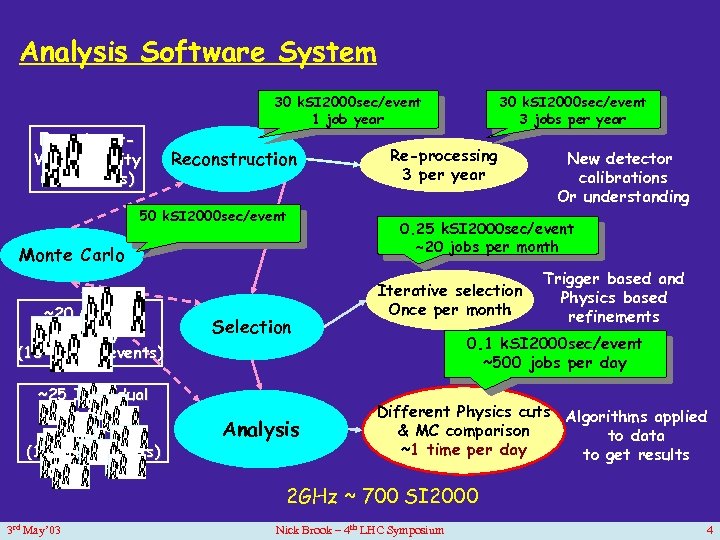

Analysis Software System

- Experiment-wide activity (10^9 events)
  - Reconstruction: 30 kSI2000 sec/event, 1 job per year
  - Re-processing: 30 kSI2000 sec/event, 3 jobs per year (3 per year; new detector calibrations or understanding)
  - Monte Carlo: 50 kSI2000 sec/event
- ~20 groups' activity (10^9 to 10^7 events)
  - Selection: 0.25 kSI2000 sec/event, ~20 jobs per month
  - Iterative selection, once per month: trigger-based and physics-based refinements
- ~25 individuals per group activity (10^6 – 10^8 events)
  - Analysis: 0.1 kSI2000 sec/event, ~500 jobs per day
  - Different physics cuts & MC comparison, ~1 time per day
  - Algorithms applied to data to get results

2 GHz ~ 700 SI2000
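To make the per-event costs on this slide more tangible, here is a minimal Python sketch that converts them into CPU time using the slide's rule of thumb (2 GHz ~ 700 SI2000). The function names are illustrative, and the pairing of each cost with an activity is read from the flattened slide text, so treat it as an assumption. With these numbers, a 30 kSI2000 sec/event reconstruction comes out at roughly 43 CPU-seconds per event, or roughly 1,360 CPU-years on a single such CPU for one pass over 10^9 events.

```python
# Rule of thumb quoted on the slide: one 2 GHz CPU is roughly 700 SI2000.
SI2000_PER_2GHZ_CPU = 700.0
SECONDS_PER_YEAR = 365 * 24 * 3600


def cpu_seconds_per_event(cost_ksi2000_sec: float) -> float:
    """CPU seconds one 2 GHz CPU needs for a single event."""
    return cost_ksi2000_sec * 1000.0 / SI2000_PER_2GHZ_CPU


def cpu_years(cost_ksi2000_sec: float, n_events: float) -> float:
    """CPU-years on a single 2 GHz CPU for one pass over n_events."""
    return cpu_seconds_per_event(cost_ksi2000_sec) * n_events / SECONDS_PER_YEAR


# Per-event costs in kSI2000 sec/event, as read from the slide.
# Assumption: the pairing of numbers with activities follows the slide layout.
COSTS = {
    "reconstruction": 30.0,
    "monte_carlo": 50.0,
    "selection": 0.25,
    "analysis": 0.1,
}

for name, cost in COSTS.items():
    print(f"{name}: {cpu_seconds_per_event(cost):.2f} CPU-s/event")

print(f"one reconstruction pass over 1e9 events: "
      f"{cpu_years(COSTS['reconstruction'], 1e9):.0f} CPU-years")
```

The point of the arithmetic is the one made on the earlier slides: no single site supplies this much CPU, so the work has to be spread over distributed resources.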

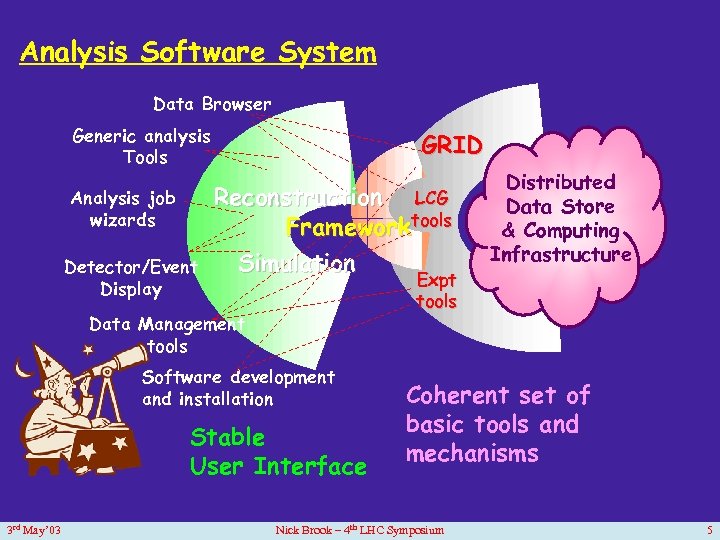

Analysis Software System

Diagram labels:

- Stable User Interface
- Data Browser; Generic analysis tools; Analysis job wizards; Detector/Event Display
- GRID; LCG tools; Expt tools
- Reconstruction; Simulation; Framework; Data Management tools; Software development and installation
- Distributed Data Store & Computing Infrastructure
- Coherent set of basic tools and mechanisms

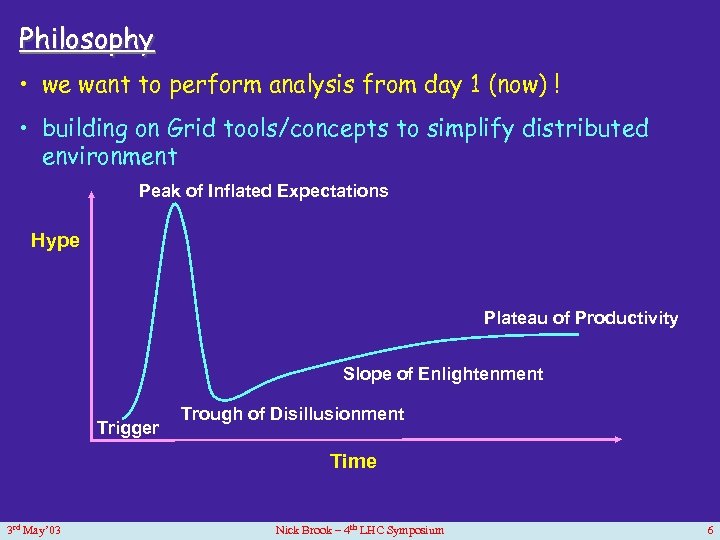

Philosophy

- We want to perform analysis from day 1 (now)!
- Building on Grid tools/concepts to simplify the distributed environment

Figure: hype cycle curve (hype vs. time) with phases Trigger, Peak of Inflated Expectations, Trough of Disillusionment, Slope of Enlightenment, Plateau of Productivity

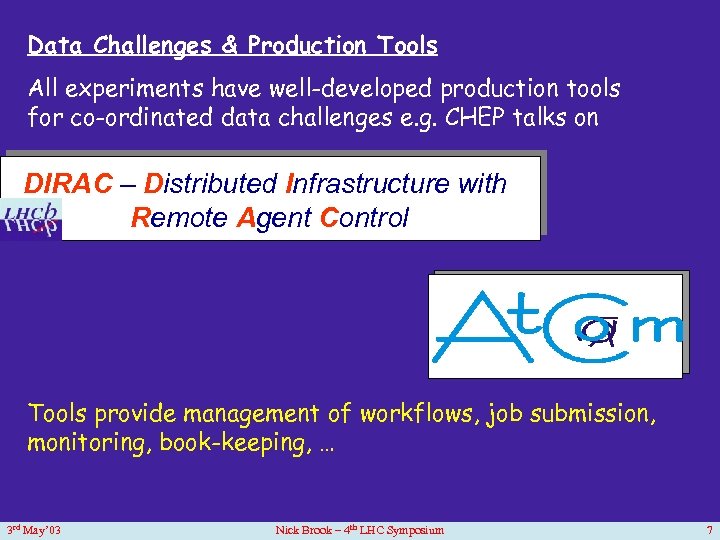

Data Challenges & Production Tools

- All experiments have well-developed production tools for co-ordinated data challenges, e.g. CHEP talks on DIRAC (Distributed Infrastructure with Remote Agent Control)
- Tools provide management of workflows, job submission, monitoring, book-keeping, …

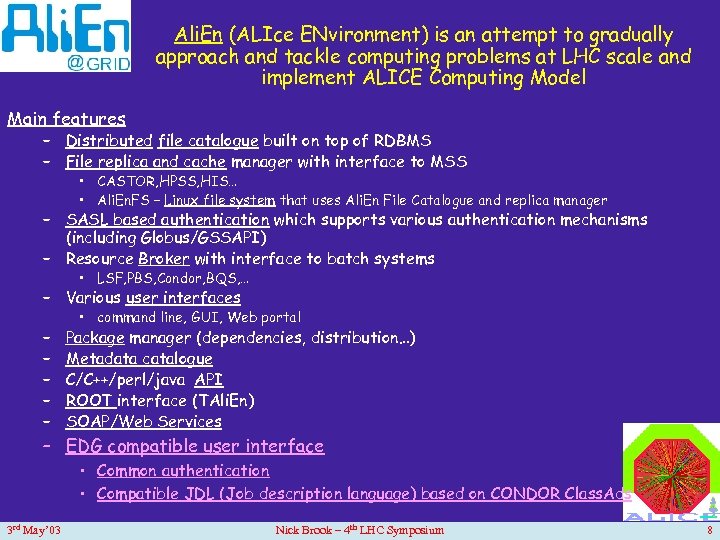

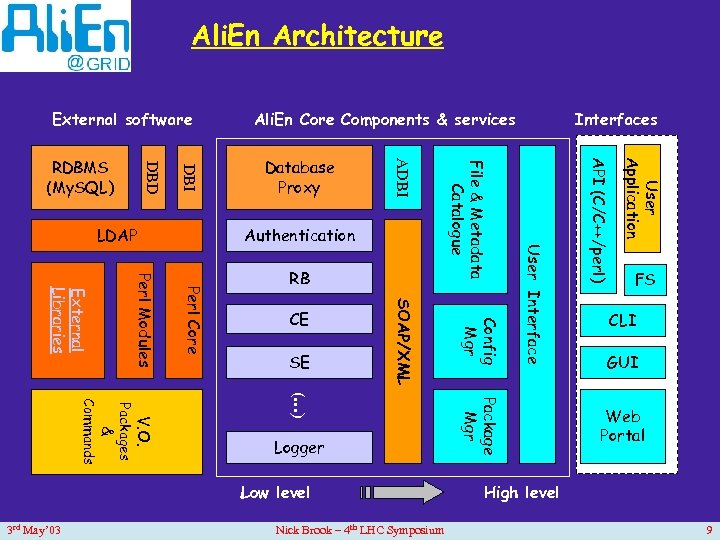

AliEn (ALIce ENvironment) is an attempt to gradually approach and tackle computing problems at LHC scale and implement the ALICE Computing Model

Main features

- Distributed file catalogue built on top of RDBMS
- File replica and cache manager with interface to MSS
  - CASTOR, HPSS, HIS…
- AliEnFS: Linux file system that uses the AliEn File Catalogue and replica manager
- SASL based authentication which supports various authentication mechanisms (including Globus/GSSAPI)
- Resource Broker with interface to batch systems
  - LSF, PBS, Condor, BQS, …
- Various user interfaces
  - command line, GUI, Web portal
- Package manager (dependencies, distribution…)
- Metadata catalogue
- C/C++/perl/java API
- ROOT interface (TAliEn)
- SOAP/Web Services
- EDG compatible user interface
  - Common authentication
  - Compatible JDL (Job description language) based on CONDOR ClassAds
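The idea behind a file catalogue with a replica manager can be illustrated with a small Python sketch: a logical file name maps to one physical copy per storage element, and a lookup picks a copy according to a preference order. This is only a toy under stated assumptions. The class, method names, paths and storage-element labels are all hypothetical, and nothing here reflects the actual AliEn schema or API.

```python
class FileCatalogue:
    """Toy logical-file-name -> replicas map (hypothetical, not the AliEn API)."""

    def __init__(self):
        # {logical file name: {storage element: physical file name}}
        self._replicas = {}

    def register(self, lfn, storage_element, pfn):
        """Record that a physical copy of lfn exists at storage_element."""
        self._replicas.setdefault(lfn, {})[storage_element] = pfn

    def locate(self, lfn, preferred):
        """Return the physical name at the first preferred site holding a copy."""
        available = self._replicas.get(lfn, {})
        for storage_element in preferred:
            if storage_element in available:
                return available[storage_element]
        raise KeyError(f"no replica of {lfn} at any of {preferred}")


# Hypothetical names, for illustration only.
catalogue = FileCatalogue()
catalogue.register("/alice/sim/run1/hits.root", "SiteA", "castor:/a/run1/hits.root")
catalogue.register("/alice/sim/run1/hits.root", "SiteB", "file:/data/run1/hits.root")

# A job running at SiteB prefers the local copy and falls back to SiteA.
print(catalogue.locate("/alice/sim/run1/hits.root", ["SiteB", "SiteA"]))
```

Keeping the logical name separate from the physical locations is what lets jobs be described independently of where the data happens to sit.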

AliEn Architecture

Diagram labels, from low level to high level:

- External software: RDBMS (MySQL), LDAP, Perl Core, Perl Modules, External Libraries
- AliEn Core Components & services: Database Proxy, ADBI, DBD, File & Metadata Catalogue, Authentication, Config Mgr, Package Mgr, Logger, RB, CE, SE, (…), SOAP/XML
- Interfaces: API (C/C++/perl), FS, CLI, GUI, Web Portal, User Interface
- V.O. Packages & Commands; User Application

- ALICE have deployed a distributed computing environment which meets their experimental needs
  - Simulation & Reconstruction
  - Event mixing
  - Analysis
- Using Open Source components (representing 99% of the code), internet standards (SOAP, XML, PKI…) and a scripting language (perl) has been a key element: quick prototyping and very fast development cycles
- Close to finalizing AliEn architecture and API
- OpenAliEn?

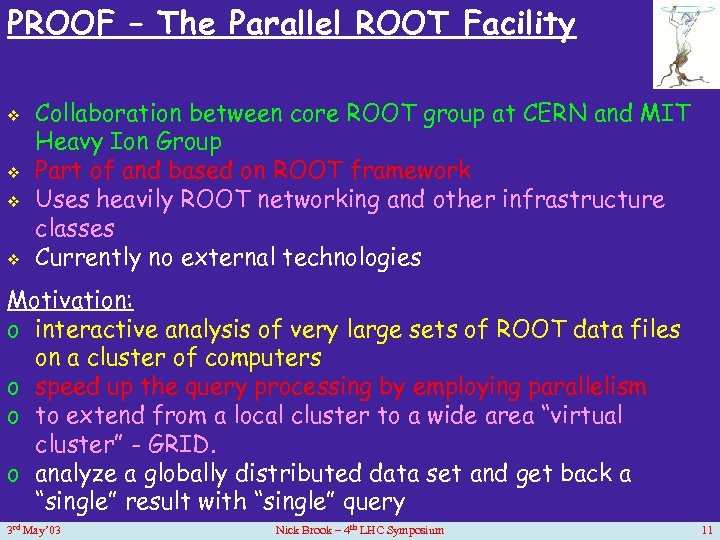

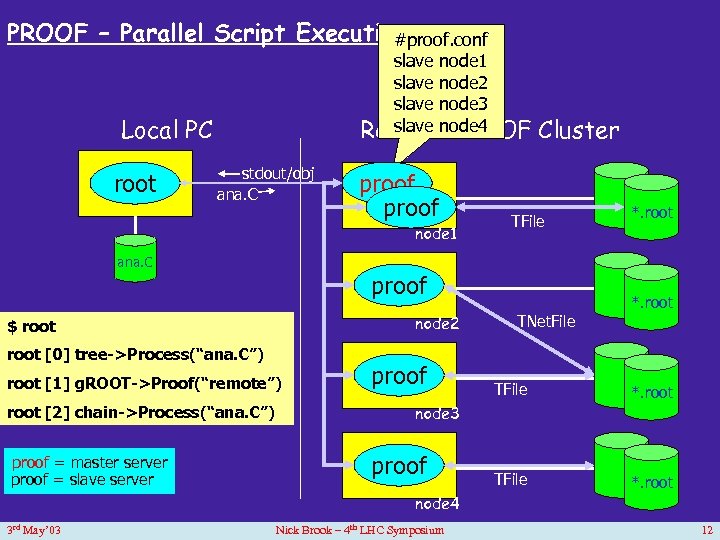

PROOF – The Parallel ROOT Facility

- Collaboration between core ROOT group at CERN and MIT Heavy Ion Group
- Part of and based on the ROOT framework
- Makes heavy use of ROOT networking and other infrastructure classes
- Currently no external technologies

Motivation:

- interactive analysis of very large sets of ROOT data files on a cluster of computers
- speed up the query processing by employing parallelism
- extend from a local cluster to a wide area “virtual cluster” (GRID)
- analyze a globally distributed data set and get back a “single” result with a “single” query

PROOF – Parallel Script Execution

Diagram: a local PC runs root and sends ana.C to a remote PROOF cluster (node1 to node4); stdout/obj come back to the local session. In the legend, one proof process is the master server and the others are slave servers. The *.root files are read through TFile and TNetFile.

Cluster configuration:

    #proof.conf
    slave node1
    slave node2
    slave node3
    slave node4

Local session:

    $ root
    root [0] .x ana.C
    root [0] tree->Process("ana.C")
    root [1] gROOT->Proof("remote")
    root [2] chain->Process("ana.C")
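The master/slave pattern on this slide (one query fanned out over many files, partial results merged into a single answer) can be sketched in a few lines of Python. This is not PROOF or ROOT code: threads stand in for the slave servers, lists of integers stand in for ROOT files, and all names are made up for illustration.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def process_file(events):
    """Stand-in for the analysis script: histogram one 'file' of values."""
    hist = Counter()
    for value in events:
        hist[value // 10] += 1  # arbitrary bin width of 10
    return hist


def parallel_query(files, n_workers=4):
    """Master role: hand files to workers, then merge the partial histograms."""
    merged = Counter()
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        for partial in pool.map(process_file, files):
            merged.update(partial)  # Counter.update adds the counts
    return merged


# Four fake "files", each a list of 25 integer measurements.
FILES = [list(range(start, start + 25)) for start in (0, 10, 20, 30)]

result = parallel_query(FILES)
print(sorted(result.items()))
```

Because the per-file histograms simply add, the merged result is the same as processing all files in one place, which is what allows the user to see a "single" result from a "single" query.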

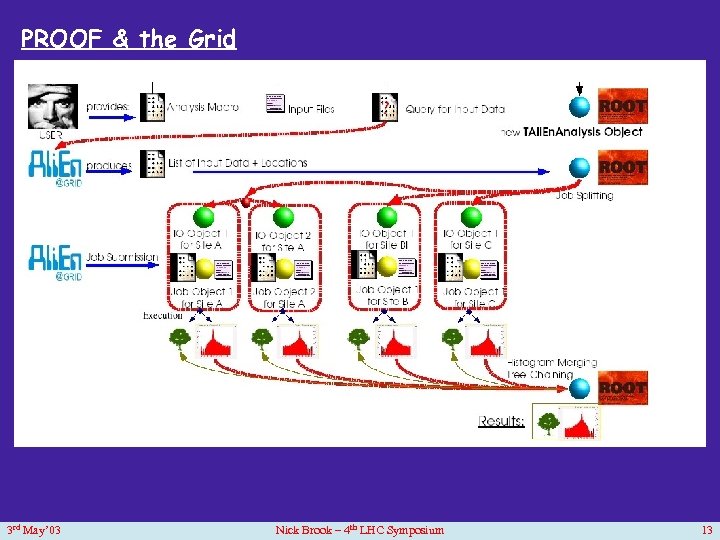

PROOF & the Grid

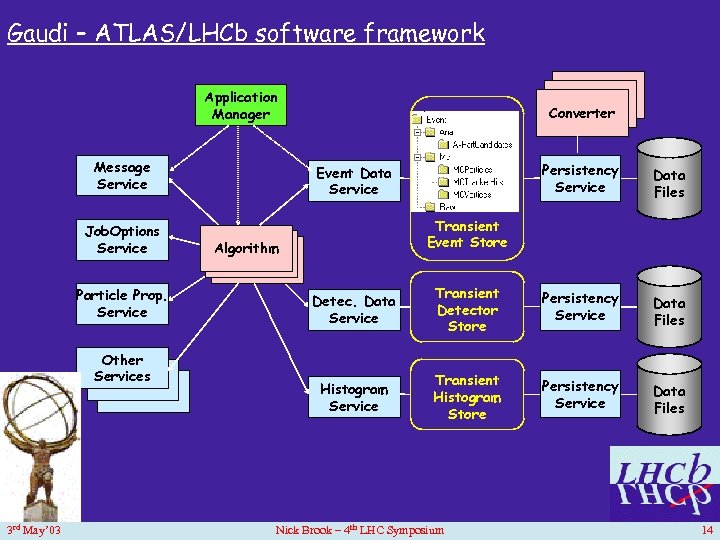

Gaudi – ATLAS/LHCb software framework

Diagram labels:

- Application Manager, Algorithm, Converter
- Message Service, JobOptions Service, Particle Prop. Service, Other Services
- Event Data Service, Transient Event Store, Persistency Service, Data Files
- Detec. Data Service, Transient Detector Store, Persistency Service, Data Files
- Histogram Service, Transient Histogram Store, Persistency Service, Data Files

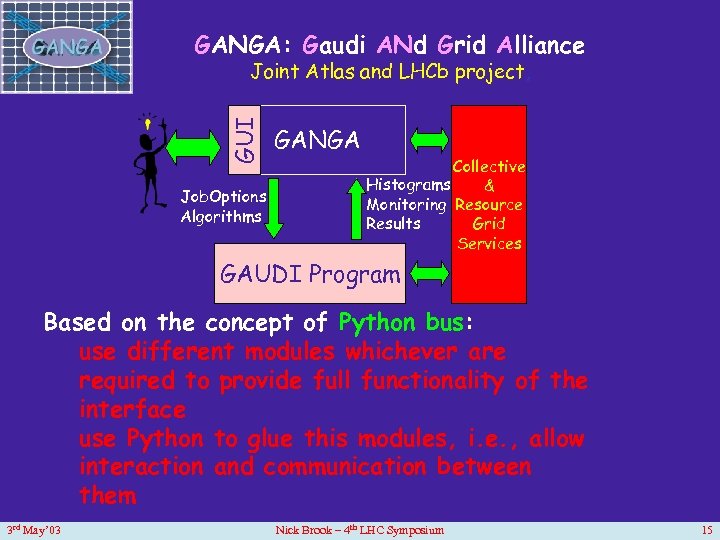

GANGA: Gaudi ANd Grid Alliance

- Joint ATLAS and LHCb project
- Based on the concept of a Python bus:
  - use whichever modules are required to provide the full functionality of the interface
  - use Python to glue these modules, i.e. allow interaction and communication between them

Diagram labels: GUI, GANGA, JobOptions, Algorithms, GAUDI Program, Collective & Resource Grid Services, Histograms, Monitoring, Results
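One way to picture the "Python bus" idea is a small registry through which otherwise independent modules find and call each other. The sketch below is an assumption-laden illustration, not GANGA code: `SoftwareBus`, `JobOptionsEditor`, `Submitter` and their methods are hypothetical names.

```python
class SoftwareBus:
    """Toy software bus: modules plug in by name and talk through the bus."""

    def __init__(self):
        self._modules = {}

    def plug(self, name, module):
        """Make a module available to everything else on the bus."""
        self._modules[name] = module

    def call(self, name, method, *args, **kwargs):
        """Invoke a method on a plugged-in module, looked up by name."""
        return getattr(self._modules[name], method)(*args, **kwargs)


class JobOptionsEditor:  # hypothetical module
    def build(self, application, n_events):
        return {"application": application, "n_events": n_events}


class Submitter:  # hypothetical module
    def __init__(self, bus):
        self.bus = bus
        self.submitted = []

    def submit(self, application, n_events):
        # The submitter never imports the editor; it reaches it via the bus.
        options = self.bus.call("options", "build", application, n_events)
        self.submitted.append(options)
        return len(self.submitted)  # simple sequential job id


bus = SoftwareBus()
bus.plug("options", JobOptionsEditor())
bus.plug("submit", Submitter(bus))

job_id = bus.call("submit", "submit", "DaVinci", 1000)
print(job_id)
```

The design choice illustrated here is loose coupling: a module can be replaced (say, a different submitter for a different batch system) without touching the others, as long as it is plugged in under the same name.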

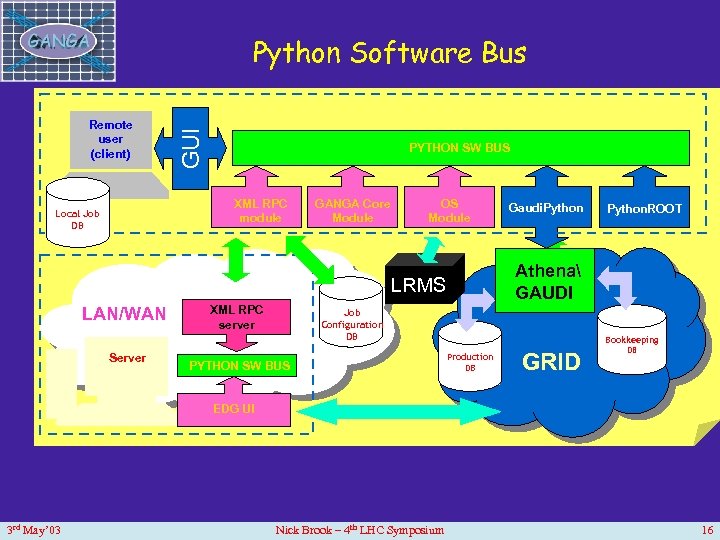

Diagram labels:

- Remote user (client): GUI, Python software bus, XML-RPC module, Local Job DB
- LAN/WAN
- Server: XML-RPC server, Python software bus, GANGA Core Module, OS Module, PythonROOT, GaudiPython, Athena, GAUDI
- LRMS, EDG UI, GRID
- Job Configuration DB, Bookkeeping DB, Production DB

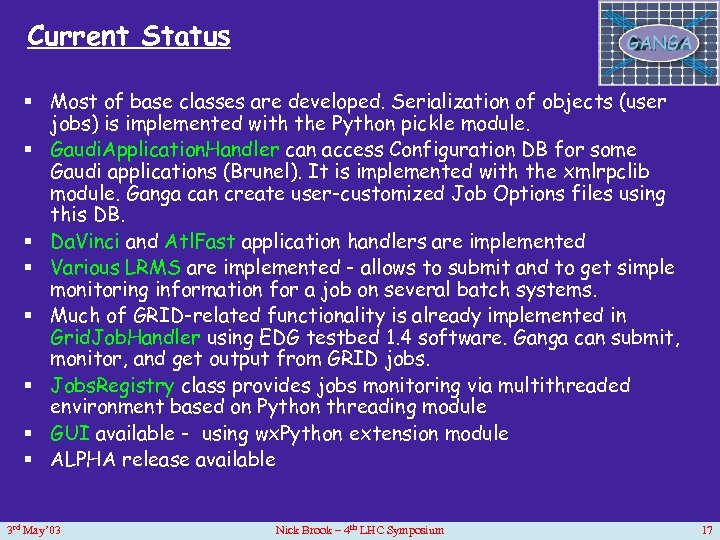

Current Status

- Most of the base classes are developed. Serialization of objects (user jobs) is implemented with the Python pickle module.
- GaudiApplicationHandler can access the Configuration DB for some Gaudi applications (Brunel). It is implemented with the xmlrpclib module. Ganga can create user-customized Job Options files using this DB.
- DaVinci and AtlFast application handlers are implemented.
- Various LRMS are implemented, allowing jobs to be submitted to several batch systems and simple monitoring information to be retrieved.
- Much of the GRID-related functionality is already implemented in GridJobHandler using EDG testbed 1.4 software. Ganga can submit, monitor, and get output from GRID jobs.
- The JobsRegistry class provides job monitoring via a multithreaded environment based on the Python threading module.
- GUI available, using the wxPython extension module.
- ALPHA release available.
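Two of the building blocks named above, `pickle` for storing user jobs and `threading` for background monitoring, are standard Python modules, so the pattern can be sketched without any Ganga code. Everything else below is an assumption for illustration: jobs are plain dictionaries, and `save_job`, `load_job` and `JobMonitor` are hypothetical names rather than Ganga classes.

```python
import pickle
import threading


def save_job(job):
    """Serialize a job description to bytes with pickle."""
    return pickle.dumps(job)


def load_job(blob):
    """Restore a job description from pickled bytes."""
    return pickle.loads(blob)


class JobMonitor(threading.Thread):
    """Background thread that polls a status function for every job."""

    def __init__(self, jobs, poll, interval=0.01):
        super().__init__(daemon=True)
        self.jobs = jobs
        self.poll = poll              # callable: job -> status string
        self.interval = interval
        self.first_pass = threading.Event()   # set after the first full poll
        self._stop_requested = threading.Event()

    def run(self):
        while not self._stop_requested.is_set():
            for job in self.jobs:
                job["status"] = self.poll(job)
            self.first_pass.set()
            self._stop_requested.wait(self.interval)

    def stop(self):
        self._stop_requested.set()
        self.join()


# A job survives a save/load round trip unchanged.
job = {"name": "brunel-001", "application": "Brunel", "status": "new"}
restored = load_job(save_job(job))
print(restored == job)
```

A monitor would be started with `JobMonitor(jobs, poll=some_status_function).start()` and ended with `stop()`; in a real system the poll function would query the batch system or Grid, which is left out here.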

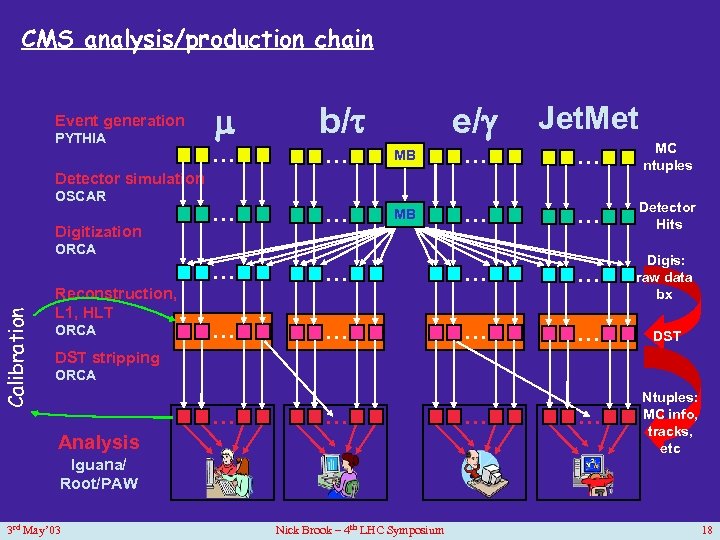

CMS analysis/production chain

Diagram labels:

- Processing steps: Event generation (PYTHIA); Detector simulation (OSCAR); Digitization (ORCA); Calibration; Reconstruction, L1, HLT (ORCA); DST stripping (ORCA); Analysis (Iguana/Root/PAW)
- Data products: MC ntuples; Detector Hits; Digis: raw data; Ntuples: MC info, tracks, etc.
- Streams and samples: μ, e/γ, b/τ, JetMet, …; MB; bx

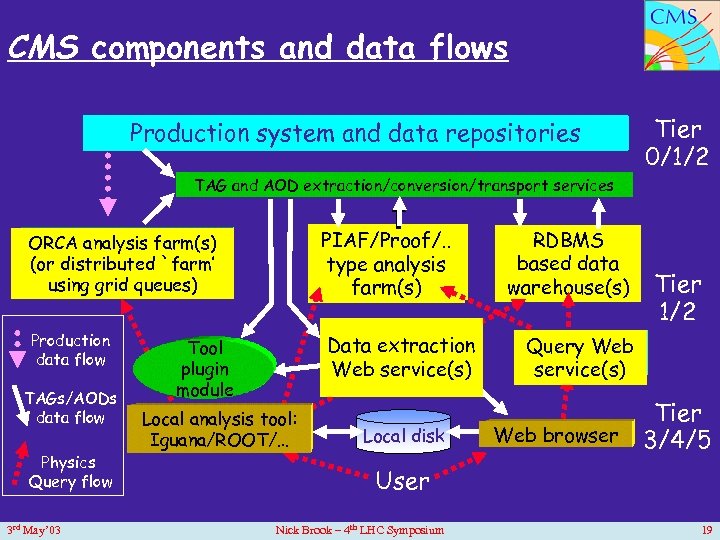

CMS components and data flows

Diagram labels:

- Tier 0/1/2: Production system and data repositories; TAG and AOD extraction/conversion/transport services; ORCA analysis farm(s) (or distributed `farm’ using grid queues)
- Tier 1/2: RDBMS based data warehouse(s); PIAF/Proof/.. type analysis farm(s); Data extraction Web service(s); Query Web service(s)
- Tier 3/4/5 (User): Local analysis tool: Iguana/ROOT/…; Tool plugin module; Local disk; Web browser
- Flows: Production data flow; TAGs/AODs data flow; Physics Query flow

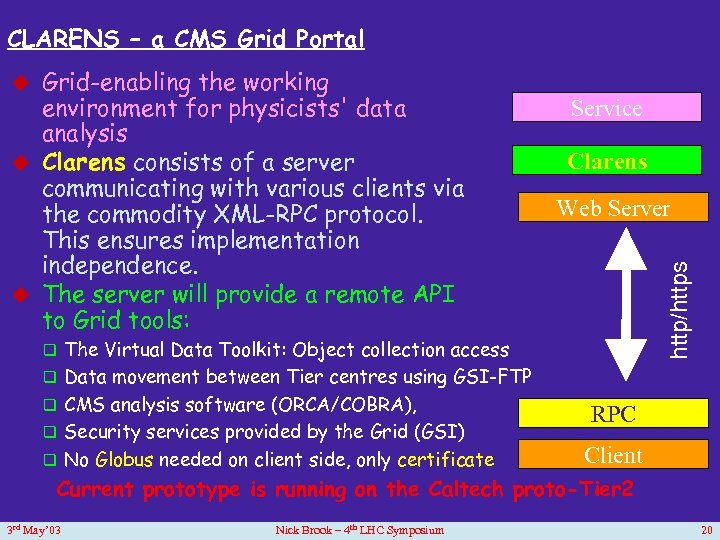

CLARENS – a CMS Grid Portal

- Grid-enabling the working environment for physicists' data analysis
- Clarens consists of a server communicating with various clients via the commodity XML-RPC protocol. This ensures implementation independence.
- The server will provide a remote API to Grid tools:
  - The Virtual Data Toolkit: object collection access
  - Data movement between Tier centres using GSI-FTP
  - CMS analysis software (ORCA/COBRA)
  - Security services provided by the Grid (GSI)
  - No Globus needed on client side, only a certificate
- Current prototype is running on the Caltech proto-Tier 2

Diagram labels: Client, RPC, http/https, Web Server, Clarens, Service
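The client/server split over XML-RPC can be tried out with Python's standard `xmlrpc` modules. The sketch below is not Clarens: the method name `catalogue.list`, the directory and the file names are invented for illustration, and the server runs on the loopback interface with no authentication, whereas the slide describes certificate-based security.

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer


def list_files(directory):
    """Hypothetical remote method; a real service would query a catalogue."""
    fake_catalogue = {"/store/jetmet": ["run1.root", "run2.root"]}
    return fake_catalogue.get(directory, [])


def start_server():
    """Start an XML-RPC server on a free loopback port in a background thread."""
    server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
    server.register_function(list_files, "catalogue.list")
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


server = start_server()
host, port = server.server_address

# The client needs only the URL and the method name, not the server's code.
client = ServerProxy(f"http://{host}:{port}")
files = client.catalogue.list("/store/jetmet")
print(files)

server.shutdown()
server.server_close()
```

Since only XML over HTTP crosses the wire, the client could equally be written in C++, Java or JavaScript, which is the implementation independence the slide refers to.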

CLARENS

Several web services applications have been built on the Clarens web service architecture:

- Proxy escrow
- Client access available from a wide variety of languages
  - Python
  - C/C++
  - Java application
  - Java/Javascript browser-based client
- Access to JetMET data via SQL2ROOT
- ROOT access to remote data files
- Access to files managed by the San Diego SC storage resource broker (SRB)

Summary

- All 4 expts have successfully “managed” distributed production
  - many lessons learnt, not only by the expts but also as useful feedback to m/w providers
  - a large degree of automation achieved
- Expts moving on to the next challenge: analysis
  - Chaotic, unmanaged access to data & resources
  - Tools already (being) developed to aid Joe Bloggs
- Success will be measured in terms of:
  - Simplicity, stability & effectiveness
  - Access to resources
  - Management of & access to data
  - Ease of development of user applications
