6c19a0d74e6ecb204b113d45638f81dd.ppt

- Number of slides: 33

DARPA: Executable Extensions of the Bookshelf. Igor Markov, University of Michigan, EECS

Outline • A three-slide version of the talk – motivations + proposal + how it will help • Basic use models – users and interfaces – restrictions • Existing VLSI CAD Bookshelf • Efforts related to our proposal • Details of the proposal • Sample flows, screenshots and conclusions

Motivation • Experiences from education – e.g., undergraduate courses on algorithms and architecture – infrastructure for evaluation: auto-graders • Infrastructure for collaborative research – can also benefit from automation – must support sharing, modularity and reuse – must scale, must be industry-compatible • Modularity in implementation platforms


Bookshelf.exe • Dynamic version of the [existing] Bookshelf – Implementations + benchmarks = algorithm evaluations? – Flow composition and high-level scripting • Related efforts – SatEx, PUNCH, NEOS, OmniFlow • Proposed solution: “Bookshelf.exe” (bX) – Application Service Provider – Interfaces • Online reporting of simulation results • Support for optimization-specific concepts • Power versus ease-of-use and modularity

Why Do We Need Bookshelf.exe? • Design flow prototyping • SW maintenance: automation (cf. SourceForge) • HW/SW incompatibilities, lack of CPU cycles – Standardization, consolidation and sharing • Many published results cannot be reproduced – Simplified sharing and CAD IP reuse • Lack of high-level, large-scale experiments – Web-based scripting, distributed execution – Open-source flows (with or without open-source components)

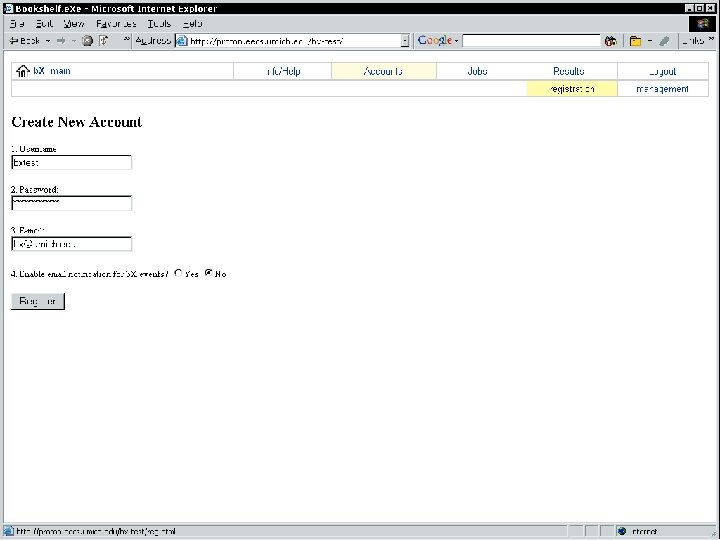

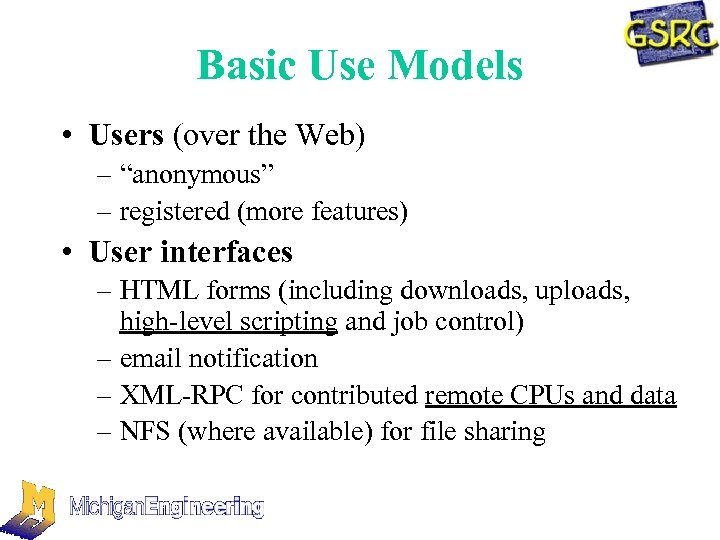

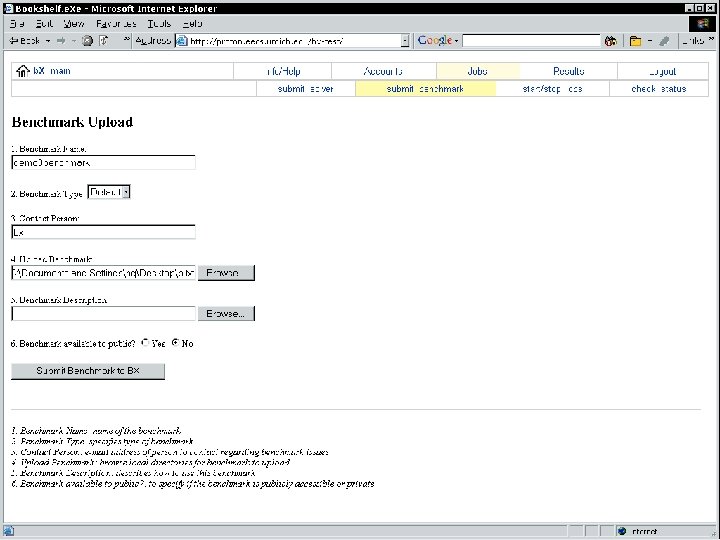

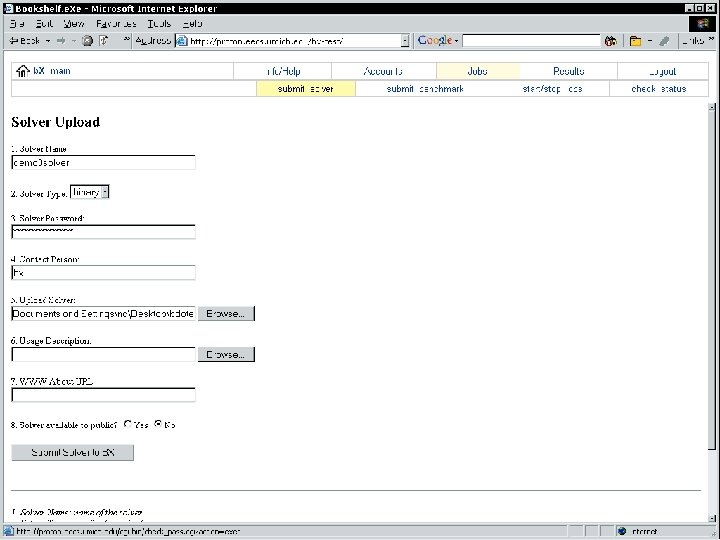

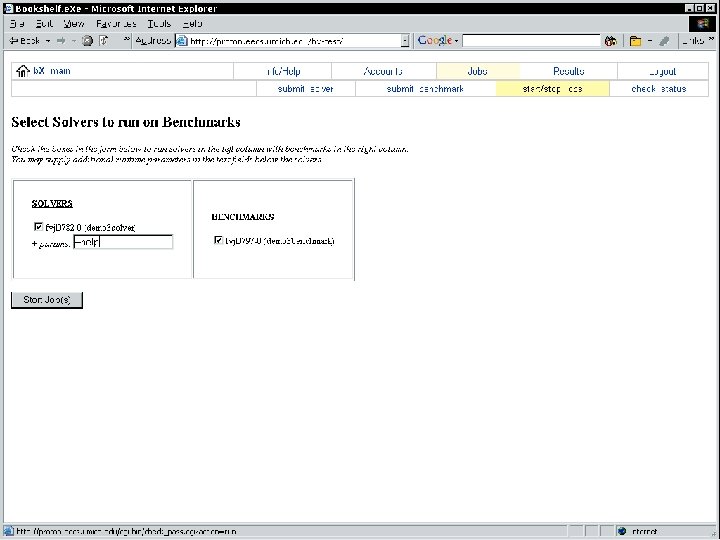

Basic Use Models • Users (over the Web) – “anonymous” – registered (more features) • User interfaces – HTML forms (including downloads, uploads, high-level scripting and job control) – email notification – XML-RPC for contributed remote CPUs and data – NFS (where available) for file sharing

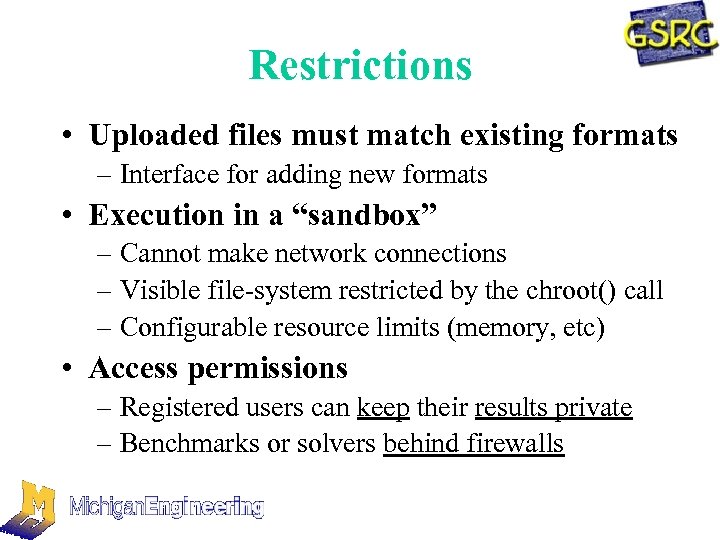

Restrictions • Uploaded files must match existing formats – Interface for adding new formats • Execution in a “sandbox” – Cannot make network connections – Visible file system restricted by the chroot() call – Configurable resource limits (memory, etc.) • Access permissions – Registered users can keep their results private – Benchmarks or solvers behind firewalls
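
The sandbox restrictions above can be approximated on a POSIX host with Python's standard `resource` and `subprocess` modules. This is a minimal sketch, not the Bookshelf.exe implementation: the function `run_sandboxed` and its default limits are hypothetical, it covers only `chroot()` plus memory and CPU limits, and it does not block network connections.

```python
import os
import resource
import subprocess
import sys
from typing import Optional, Sequence


def run_sandboxed(cmd: Sequence[str], jail_dir: Optional[str] = None,
                  mem_bytes: int = 512 * 1024 ** 2, cpu_seconds: int = 60,
                  timeout: float = 120.0) -> subprocess.CompletedProcess:
    """Run cmd in a child process under resource limits and an optional chroot jail.

    Not covered here: blocking network connections, which needs OS support
    (for example a separate network namespace or firewall rules).
    """

    def limit_child() -> None:
        # Runs in the child after fork() and before exec().
        # Cap virtual memory (address space) and CPU time for the job.
        resource.setrlimit(resource.RLIMIT_AS, (mem_bytes, mem_bytes))
        resource.setrlimit(resource.RLIMIT_CPU, (cpu_seconds, cpu_seconds))
        if jail_dir is not None:
            # chroot() needs root privileges; the jail must contain the
            # executable and every shared library it loads.
            os.chroot(jail_dir)
            os.chdir("/")

    return subprocess.run(list(cmd), preexec_fn=limit_child,
                          capture_output=True, text=True, timeout=timeout)


if __name__ == "__main__":
    # Run a trivial job without a jail (no root needed).
    done = run_sandboxed([sys.executable, "-c", "print('ok')"])
    print(done.returncode, done.stdout.strip())
```

A job that tries to allocate more than `mem_bytes` fails inside the child (in Python, with a `MemoryError`) instead of exhausting the host, and the non-zero exit status can be reported back to the user.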

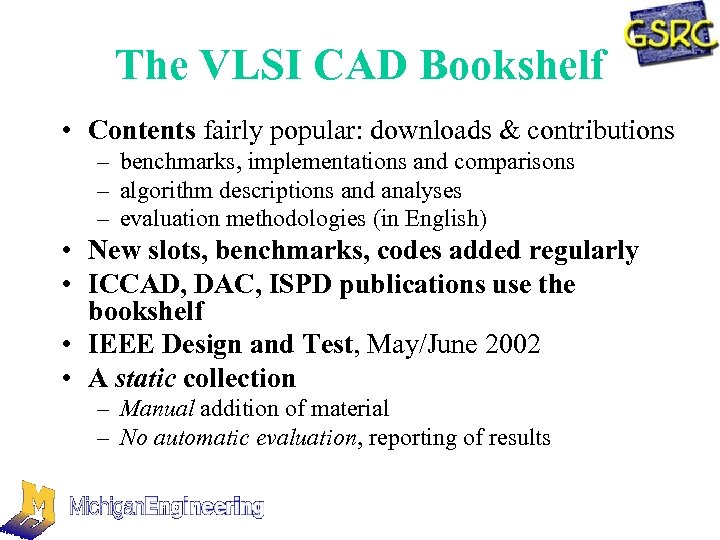

The VLSI CAD Bookshelf • Contents are fairly popular: downloads & contributions – benchmarks, implementations and comparisons – algorithm descriptions and analyses – evaluation methodologies (in English) • New slots, benchmarks, codes added regularly • ICCAD, DAC, ISPD publications use the Bookshelf • IEEE Design and Test, May/June 2002 • A static collection – Manual addition of material – No automatic evaluation or reporting of results

Other Efforts Related to “.exe” • SatEx http://www.lri.fr/~simon/satex.php3 – Specialized in satisfiability problems • PUNCH http://punch.ece.purdue.edu – Very broad selection of software (from StarOffice to Capo) – Local to Purdue • NEOS http://www-neos.mcs.anl.gov/neos – Open-source, distributed architecture – Used primarily for linear and non-linear optimization • OmniFlow: DAC 2001, Brglez and Lavana – http://www.cbl.ncsu.edu/OpenProjects/OmniFlow – Distributed Collaborative Design Framework for VLSI – GUI-based flow control, chaining of design tools

SatEx • Continual evaluation and ranking of codes – Results produced and posted automatically – Intuitive interface • Popular – 93,707 hits, March 2000 – September 2001 – 23 SAT provers, 32,610 runs (September 2001) • Limited scalability – One workstation (2 years of CPU time) – Specialized to one application
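
SatEx-style continual ranking can be illustrated with a short sketch. It assumes, without confirmation from the slides, that codes are ranked first by the number of instances solved and then by total CPU time on the solved instances; `rank_solvers` is a hypothetical name and the solver and instance names are placeholders, not real results.

```python
from typing import Dict, List, Optional, Tuple

# One run: (solver, benchmark, CPU seconds), with None meaning the
# instance was not solved (timeout or crash).
Run = Tuple[str, str, Optional[float]]


def rank_solvers(runs: List[Run]) -> List[Tuple[str, int, float]]:
    """Return (solver, instances solved, total CPU seconds on solved instances),
    best first: more instances solved wins, ties broken by less CPU time."""
    solved: Dict[str, int] = {}
    cpu_total: Dict[str, float] = {}
    for solver, _benchmark, cpu in runs:
        # Register every solver, even one that solved nothing.
        solved.setdefault(solver, 0)
        cpu_total.setdefault(solver, 0.0)
        if cpu is not None:
            solved[solver] += 1
            cpu_total[solver] += cpu
    table = [(name, solved[name], cpu_total[name]) for name in solved]
    table.sort(key=lambda row: (-row[1], row[2], row[0]))
    return table


if __name__ == "__main__":
    runs = [
        ("solver_a", "inst1", 1.0), ("solver_a", "inst2", None),
        ("solver_b", "inst1", 3.0), ("solver_b", "inst2", 4.0),
        ("solver_c", "inst1", None), ("solver_c", "inst2", None),
    ]
    for rank, (name, n_solved, cpu) in enumerate(rank_solvers(runs), start=1):
        print(rank, name, n_solved, cpu)
```

Because the table is recomputed from the stored runs, it can be regenerated and reposted automatically whenever a new run finishes.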

PUNCH • Very general execution framework – From VLSI CAD to GUI-based office applications – Custom-designed file system (Purdue hosts only) – 241,458 runs in 5 years (8,152 in VLSI CAD) – 20+ publications • Only the maintainer can add executables • No support for evaluation and chaining (flows) – No statistics for results of runs (cf. SatEx top 20) – No MIME-like data types – Difficult to use when multiple tools are involved

NEOS • Open-source, distributed framework • Wide use, solid code base • Adding new implementations requires maintainer intervention (less than on PUNCH) – Each new code must come with a host – Distributed maintenance • Loose data typing – No type system for data and implementations • Compare to MIME

NEOS: What Can Be Improved • Independent evaluation and verification of results – e.g., PUNCH offers a wirelength (WL) evaluator from the Bookshelf • Real-time online reporting of results + statistics • High-level scripting and flow design – Scripts to control the execution and evaluation flows • Pairing solvers with benchmarks – SatEx-like evaluation, but for multiple data types
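
Independent evaluation means the score is computed by a separate evaluator rather than reported by the solver itself. As a minimal sketch, assuming the wirelength metric is the usual half-perimeter wirelength (HPWL) computed from cell locations with pin offsets ignored, such an evaluator could look as follows; parsing of the actual Bookshelf placement files is omitted and `hpwl` is a hypothetical name.

```python
from typing import Dict, List, Tuple


def hpwl(positions: Dict[str, Tuple[float, float]], nets: List[List[str]]) -> float:
    """Total half-perimeter wirelength (HPWL) of a placement.

    positions maps each cell name to its (x, y) location; pin offsets are
    ignored, so every pin of a cell is taken to sit at that point.
    nets lists, for each net, the names of the cells it connects.
    """
    total = 0.0
    for net in nets:
        if len(net) < 2:
            continue  # a net with fewer than two pins needs no wire
        xs = [positions[cell][0] for cell in net]
        ys = [positions[cell][1] for cell in net]
        # Half the perimeter of the net's bounding box: width + height.
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


if __name__ == "__main__":
    positions = {"a": (0.0, 0.0), "b": (3.0, 4.0), "c": (1.0, 1.0)}
    nets = [["a", "b"], ["a", "b", "c"], ["c"]]
    print(hpwl(positions, nets))  # 14.0
```

Running the same evaluator on every placer's output makes the reported numbers comparable, regardless of how each placer computes wirelength internally.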

OmniFlow • Context: collaborative VLSI design – sharing computational resources, but not results • Distributed over multiple hosts • Provides GUI-based flow control – supports chaining of design tools – several hard-coded conditions for flow control – no support for execution conditional on results – no scripting language; limited by GUI • Cannot dynamically add hosts

Bookshelf.exe (1) • Best of SatEx, PUNCH, NEOS and OmniFlow – Reporting style similar to SatEx (+ alternatives) – The versatility of PUNCH – Scalability and distributed nature of NEOS (or better) – Flow control as in OmniFlow or better • New features – MIME-like data types and optimization-specific concepts – Automatic submission of binaries and source code – Chaining of implementations; scripting for flow control – Support for use models with proprietary data or code

Bookshelf.exe (2) • Scalability – Computation is distributed (unlike in SatEx) – Maintenance is automated (unlike in NEOS) • Support for multiple use models: “adapts to users” – Multiple levels of expertise – Multiple levels of commitment – Sharing of public data – Hiding/protection of proprietary data – “Screen-saver” mode, cf. SETI@Home, Entropia, etc.

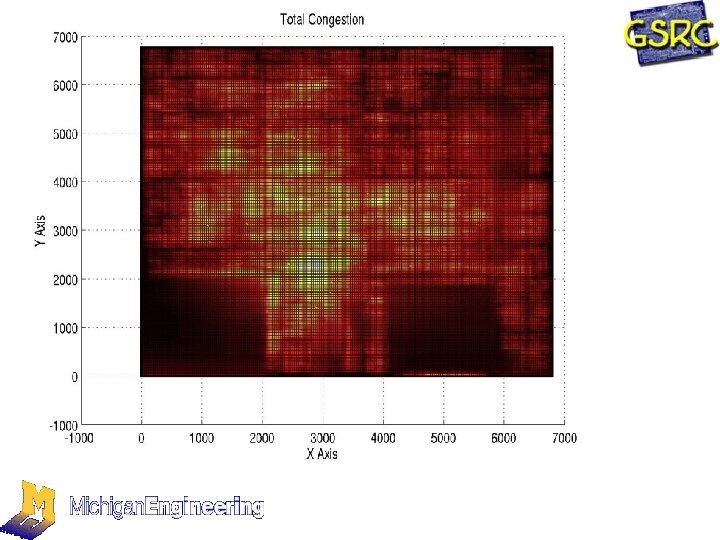

Sample Scenario (IWLS 2002 Focus Group 3) • Question: is it possible to massage the logic of the netlist to improve routing congestion? • Proposed research infrastructure: – IWLS benchmark API (Andreas Kuehlmann) – Interface to Bookshelf formats – Layout generation (available in the Bookshelf) – Placement (several placers available in the Bookshelf) – Congestion maps (next slide)
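
The congestion maps themselves appear only as an image in the slides, and the method behind them is not stated. Purely as an illustration, a very crude map can be built by counting how many net bounding boxes overlap each bin of a grid; real estimators (for example, ones based on global routing) are considerably more accurate. The function `congestion_map` is hypothetical.

```python
from typing import Dict, List, Tuple


def congestion_map(positions: Dict[str, Tuple[float, float]],
                   nets: List[List[str]], width: float, height: float,
                   nx: int, ny: int) -> List[List[int]]:
    """Count, for every bin of an nx-by-ny grid over a width-by-height
    layout, how many net bounding boxes overlap that bin (a crude proxy
    for routing demand). Returns grid[row][col], row 0 at y = 0."""
    grid = [[0] * nx for _ in range(ny)]
    bin_w, bin_h = width / nx, height / ny

    def to_bin(value: float, size: float, count: int) -> int:
        # Map a coordinate to a bin index, clamped to the grid boundaries.
        return max(0, min(int(value // size), count - 1))

    for net in nets:
        if len(net) < 2:
            continue  # single-pin nets need no routing
        xs = [positions[cell][0] for cell in net]
        ys = [positions[cell][1] for cell in net]
        col0, col1 = to_bin(min(xs), bin_w, nx), to_bin(max(xs), bin_w, nx)
        row0, row1 = to_bin(min(ys), bin_h, ny), to_bin(max(ys), bin_h, ny)
        for row in range(row0, row1 + 1):
            for col in range(col0, col1 + 1):
                grid[row][col] += 1
    return grid
```

In the scenario above, comparing such maps before and after a logic transformation would indicate whether the netlist change moved routing demand out of hot spots.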

Interface Issues • Transparent error diagnostics – Greatly improve the learning curve • Ownership, privacy, resource limits: sample policy questions – Chaining jobs owned by different users – What jobs can be launched anonymously? • Script composer versus programming – Flexibility versus learning curve – GUI implemented using HTML forms (converts clicks and fill-in-the-blanks to scripts)

Script Composer • Built on top of a scripting language – Based on Perl (Perl functions available) • Basic flows designed with HTML forms – Start with one ‘step’ and add more steps – API functions: e.g., “run ‘optimizer 2’ + store results” – Support for conditionals and iteration • Scripts sent to the back end for execution as jobs • Scripts can be saved, posted, reused
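
The slides describe a Perl-based composer; the sketch below illustrates the same ideas (adding steps one at a time, conditional steps, and iteration) in Python, the language used for all examples here. `Step`, `Flow`, `place` and `optimize` are hypothetical names, and the two step functions are numeric stand-ins for real tools.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

State = Dict[str, float]  # named results passed from step to step


@dataclass
class Step:
    name: str
    action: Callable[[State], State]                        # e.g. run an optimizer, store results
    run_if: Optional[Callable[[State], bool]] = None        # conditional: skip the step when False
    repeat_while: Optional[Callable[[State], bool]] = None  # iteration: rerun while True


@dataclass
class Flow:
    steps: List[Step] = field(default_factory=list)

    def add(self, step: Step) -> "Flow":
        """Start with one step and append more, as in the HTML composer."""
        self.steps.append(step)
        return self

    def run(self, state: State, max_repeats: int = 100) -> State:
        for step in self.steps:
            if step.run_if is not None and not step.run_if(state):
                continue
            state = step.action(state)
            repeats = 1
            while (step.repeat_while is not None and step.repeat_while(state)
                   and repeats < max_repeats):
                state = step.action(state)
                repeats += 1
        return state


def place(state: State) -> State:
    return {**state, "wl": 100.0}  # stand-in for a placer's wirelength


def optimize(state: State) -> State:
    new_wl = state["wl"] * 0.5     # stand-in for one optimizer pass
    return {**state, "wl": new_wl, "gain": state["wl"] - new_wl}


flow = (Flow()
        .add(Step("place", place))
        .add(Step("optimize", optimize, repeat_while=lambda s: s["gain"] > 10.0))
        .add(Step("report", lambda s: {**s, "reported": 1.0},
                  run_if=lambda s: s["wl"] < 10.0)))

if __name__ == "__main__":
    print(flow.run({}))
```

The `max_repeats` cap keeps a badly written loop condition from turning a submitted script into a job that never terminates.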

Language-Level Support • Type system for submissions, results and intermediate data – Algorithm implementations • Deterministic and randomized optimizers, etc. – Input data, results, status info (runtime, memory, …) – Common benchmarks • Rules for matching submission types – e.g., match a placer with a LEF/DEF benchmark – Violations are reported to the user as fatal errors
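
A type check of this kind might look like the sketch below. The MIME-like type strings (`layout/lef-def`, `sat/dimacs-cnf`), the `family/*` wildcard rule, and all class and function names are hypothetical; the point is only that a mismatched pairing is rejected with a fatal error before any job runs.

```python
from dataclasses import dataclass
from typing import FrozenSet


class TypeMismatchError(Exception):
    """Fatal error reported to the user when a pairing violates the type rules."""


@dataclass(frozen=True)
class Implementation:
    name: str
    kind: str                  # e.g. "placer"
    accepts: FrozenSet[str]    # MIME-like input types; "family/*" accepts a whole family
    randomized: bool = False   # deterministic vs. randomized optimizer


@dataclass(frozen=True)
class Benchmark:
    name: str
    data_type: str             # MIME-like type of the benchmark files


def type_matches(pattern: str, data_type: str) -> bool:
    if pattern.endswith("/*"):
        return data_type.startswith(pattern[:-1])  # keep the trailing "/"
    return pattern == data_type


def check_pairing(impl: Implementation, bench: Benchmark) -> None:
    """Raise TypeMismatchError unless impl can read bench's data type."""
    if not any(type_matches(p, bench.data_type) for p in impl.accepts):
        raise TypeMismatchError(
            f"{impl.kind} {impl.name!r} cannot read benchmark {bench.name!r} "
            f"of type {bench.data_type!r}; accepted: {sorted(impl.accepts)}")
```

For example, a placer declared with `accepts=frozenset({"layout/lef-def"})` passes the check for a LEF/DEF benchmark and raises `TypeMismatchError` for a CNF formula.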

Data Models • Consistent data models needed for serious data flows and high-level experiments – e.g., integrated RTL-to-layout implementation • Plan to use OpenAccess 2.0 – Specs published in April 2002 – Implementation and source expected next year • Bookshelf adjustments expected to support open-source design flows – e.g., for industry SP&R integration

bX: Structure • bX front end: mostly Web-based (+ email) • bX back end – Main server (job scheduling, reporting of results, etc.) – Client software on computational hosts • Network communications: XML-RPC – RPC standard – XML data encoding – HTTP network transport – Compatible with C/C++, Perl, Python, etc.
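
Python's standard library ships both sides of XML-RPC (`xmlrpc.server` and `xmlrpc.client`), which makes the main-server/client split easy to sketch. The method names `get_job` and `report_result`, the job fields, and the pull-based protocol are assumptions for illustration, not the actual bX interface.

```python
import threading
from typing import Dict, List
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer


class MainServer:
    """Hypothetical main-server state: a job queue plus collected results."""

    def __init__(self, jobs: List[Dict[str, str]]) -> None:
        self._pending = list(jobs)
        self._results: Dict[str, str] = {}
        self._lock = threading.Lock()

    def get_job(self, host: str) -> Dict[str, str]:
        with self._lock:
            if not self._pending:
                return {}  # empty struct: no work left (XML-RPC has no None by default)
            job = dict(self._pending.pop(0))
            job["host"] = host  # record which computational host took the job
            return job

    def report_result(self, job_id: str, result: str) -> bool:
        with self._lock:
            self._results[job_id] = result
        return True

    def results(self) -> Dict[str, str]:
        with self._lock:
            return dict(self._results)


def start_server(main: MainServer, port: int = 0) -> SimpleXMLRPCServer:
    """Expose get_job/report_result over XML-RPC (HTTP transport) in a background thread."""
    server = SimpleXMLRPCServer(("127.0.0.1", port), logRequests=False)
    server.register_function(main.get_job, "get_job")
    server.register_function(main.report_result, "report_result")
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def client_loop(url: str, host: str) -> int:
    """Client on a computational host: fetch jobs until none remain."""
    proxy = ServerProxy(url)
    done = 0
    while True:
        job = proxy.get_job(host)
        if not job:
            return done
        # A real client would run job["solver"] on job["benchmark"] in a sandbox.
        proxy.report_result(job["id"], f"ran {job['solver']} on {job['benchmark']}")
        done += 1
```

Because hosts pull work instead of being pushed to, a contributed CPU can join or leave at any time, which matches the goal of adding hosts dynamically.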

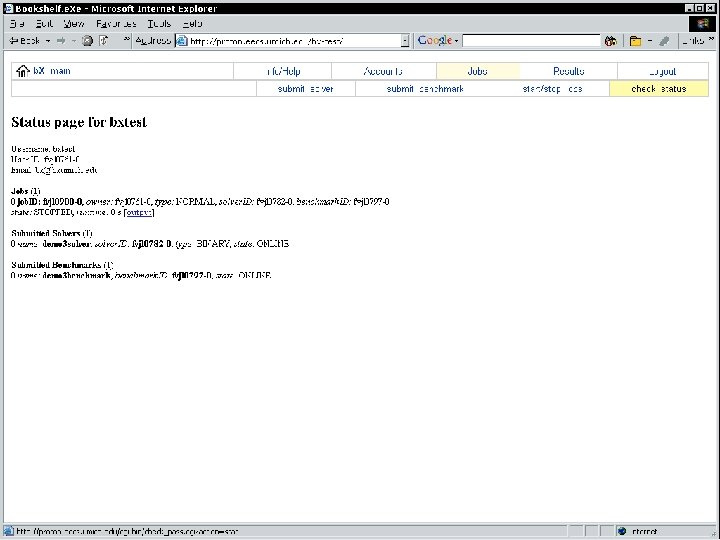

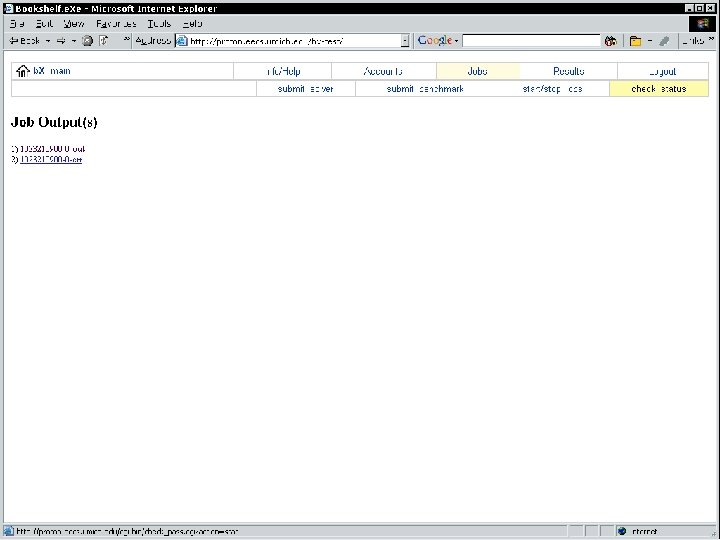

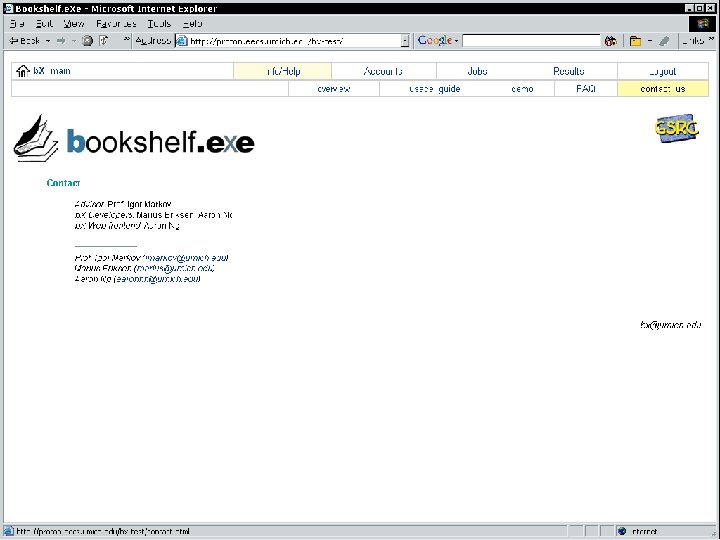

Implementation Status • So far, the main focus has been on the back end • Back end ver. 0.1 functional on Linux – bX state maintained in a database – Persistence, etc. – Simple one-job demo (1 binary & 1 benchmark) • Security features and basic policies – Sandbox execution and data type checks • Front end supports the one-job demo • Next milestone: “10 X 10” demo (cf. SAT 2002) – Jobs automatically distributed and results posted
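
The slides say only that bX state lives in a database; they name neither the database nor its schema. Assuming SQLite and a single `jobs` table, purely for illustration, setting up a “10 X 10” experiment (every one of 10 solvers on every one of 10 benchmarks) with an assumed round-robin host assignment could look like this. All function and column names are hypothetical.

```python
import itertools
import sqlite3
from typing import List, Sequence, Tuple


def create_jobs(db: sqlite3.Connection, solvers: Sequence[str],
                benchmarks: Sequence[str], hosts: Sequence[str]) -> int:
    """Create one pending job per (solver, benchmark) pair; assign hosts round-robin."""
    db.execute("""CREATE TABLE IF NOT EXISTS jobs (
                      id        INTEGER PRIMARY KEY,
                      solver    TEXT NOT NULL,
                      benchmark TEXT NOT NULL,
                      host      TEXT NOT NULL,
                      status    TEXT NOT NULL DEFAULT 'pending',
                      result    TEXT)""")
    pairs = itertools.product(solvers, benchmarks)
    rows = [(s, b, hosts[k % len(hosts)]) for k, (s, b) in enumerate(pairs)]
    with db:  # commit all inserts in one transaction
        db.executemany(
            "INSERT INTO jobs (solver, benchmark, host) VALUES (?, ?, ?)", rows)
    return len(rows)


def pending_jobs(db: sqlite3.Connection, host: str) -> List[Tuple[int, str, str]]:
    """Jobs assigned to host that have not been run yet, oldest first."""
    return db.execute(
        "SELECT id, solver, benchmark FROM jobs "
        "WHERE host = ? AND status = 'pending' ORDER BY id", (host,)).fetchall()


def post_result(db: sqlite3.Connection, job_id: int, result: str) -> None:
    """Mark a job done and store its result for later reporting."""
    with db:
        db.execute("UPDATE jobs SET status = 'done', result = ? WHERE id = ?",
                   (result, job_id))
```

Opening the connection on a file path instead of `":memory:"` gives the persistence mentioned on the slide: a restarted server sees exactly which jobs are still pending.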

Conclusions • Bookshelf: popular, but can be improved • Bookshelf.exe: executable extensions • Goals – Automate routine operations – Create open-source flows – Facilitate high-level, large-scale experimentation • We plan to assimilate the best features of related work + add new ones • Started bottom-up implementation – Basic version of bX is working

If we missed anything important, let us know. Thank you!
