
- Number of slides: 131

Current Status and Challenges of SoC Verification for Embedded Systems Market

November 2003. Chong-Min Kyung, KAIST, Daejeon, Korea

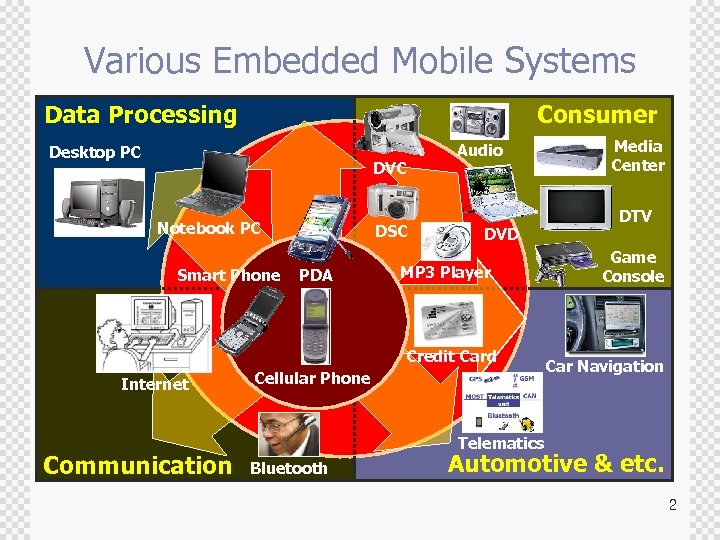

Various Embedded Mobile Systems

Figure labels: Data Processing, Consumer, Desktop PC, DVC, Notebook PC, Smart Phone, DSC, PDA, Audio, DVD, MP3 Player, Credit Card, Internet, Communication, Cellular Phone, Media Center, DTV, Game Console, Car Navigation, Telematics, Bluetooth, Automotive, etc.

Difficulties in Embedded Systems Design (What's special in ES design?)

- The real operating environment of an ES is difficult to reproduce. -> In-system verification is necessary.
- The total design flow of an ES up to implementation is long and complex. -> Verification must be started early.
- Design turn-around time must be short, as the lifetime of the ES itself is quite short. -> Short verification cycle.

Most Important Issues in Embedded System Verification

- 1. Verify Right:
  - Always make sure you have correct specifications to start with. (Frequent interaction with specifier, customer, marketing, etc.)
  - In-System Verification
  - Check Properties in Formal Techniques.
- 2. Verify Early
  - System-level, Heterogeneous Models, SW-HW
- 3. Verify Appropriately
  - HW-SW Co-simulation
- 4. Verify Fast
  - Hardware Acceleration, Emulation

Strongly Required Features of Embedded System Verification

- Accommodate Multiple Levels of Design Representation
- Exploit Hardware, Software and Interfacing mechanisms as Verification Tools

Agenda

- Why Verification?
- Verification Alternatives
- Languages for System Modeling and Verification with Progressive Refinement
- SoC Verification
- Concluding Remarks

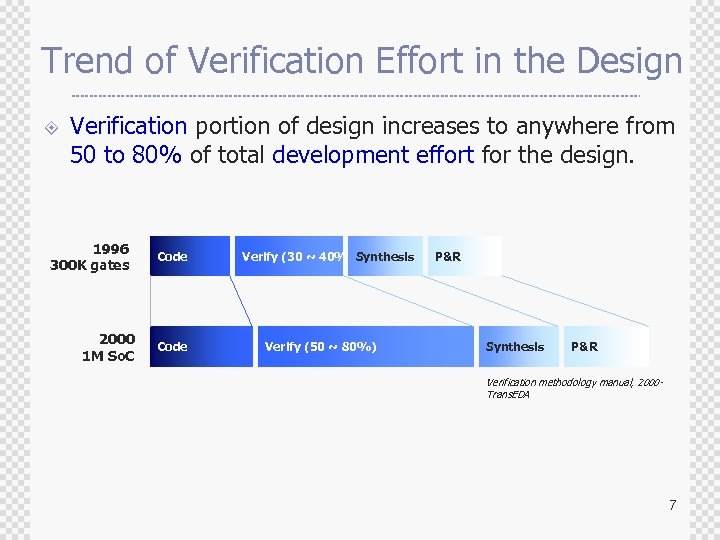

Trend of Verification Effort in the Design

- The verification portion of a design has increased to anywhere from 50 to 80% of the total development effort.

Figure: design effort split into Code, Verify, Synthesis and P&R. 1996, 300 K gates: Verify 30~40%. 2000, 1 M SoC: Verify 50~80%. Source: Verification methodology manual, 2000, TransEDA.

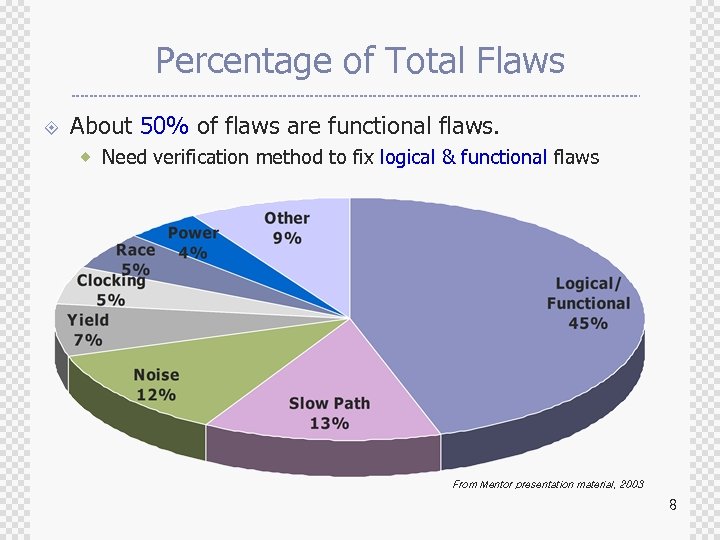

Percentage of Total Flaws

- About 50% of flaws are functional flaws.
  - Need a verification method to fix logical & functional flaws

Source: Mentor presentation material, 2003.

- Another recent independent study showed that more than half of all chips require one or more re-spins, and that functional errors were found in 74% of these re-spins.
- With increasing chip complexity, this situation could worsen.
- Who can afford that with an NRE cost of >= 1 M dollars?

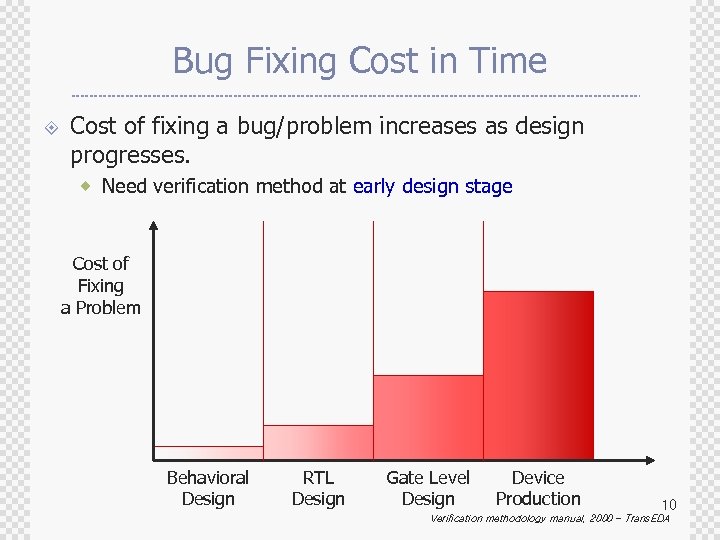

Bug Fixing Cost in Time

- The cost of fixing a bug/problem increases as the design progresses.
  - Need a verification method at an early design stage

Figure: cost of fixing a problem across design stages: Behavioral Design, RTL Design, Gate Level Design, Device Production. Source: Verification methodology manual, 2000, TransEDA.

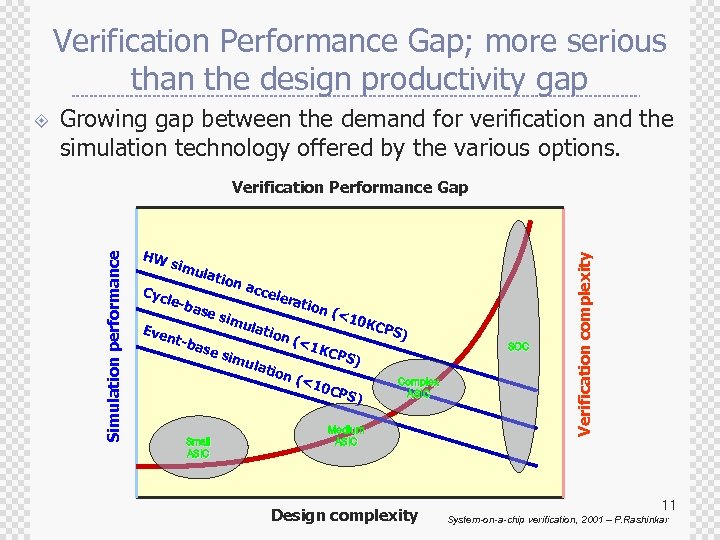

Verification Performance Gap; more serious than the design productivity gap

- Growing gap between the demand for verification and the simulation technology offered by the various options.

Figure: simulation performance versus design complexity (Small ASIC, Medium ASIC, Complex ASIC, SOC), with curves for HW simulation acceleration (<10 K CPS), cycle-based simulation (<1 K CPS) and event-based simulation (<10 CPS); the shortfall is labeled "Verification Performance Gap". Source: System-on-a-chip verification, 2001, P. Rashinkar.

Completion Metrics; How do we know when the verification is done?

- Emotionally, or intuitively:
  - Out of money? Exhausted?
  - Competition's product is there.
  - Software people are happy with your hardware.
  - There have been no bugs reported for two weeks.
- More rigorous criteria:
  - All tests passed
  - Test Plan Coverage
  - Functional Coverage
  - Code Coverage
  - Bug rates have flattened toward the bottom.

Verification Challenges

- Specification or operating environment is incomplete/open-ended. (The verification metric is never complete, as with a last-minute ECO.)
- The day before yesterday's tool for today's design.
- Design productivity grows faster than verification productivity.

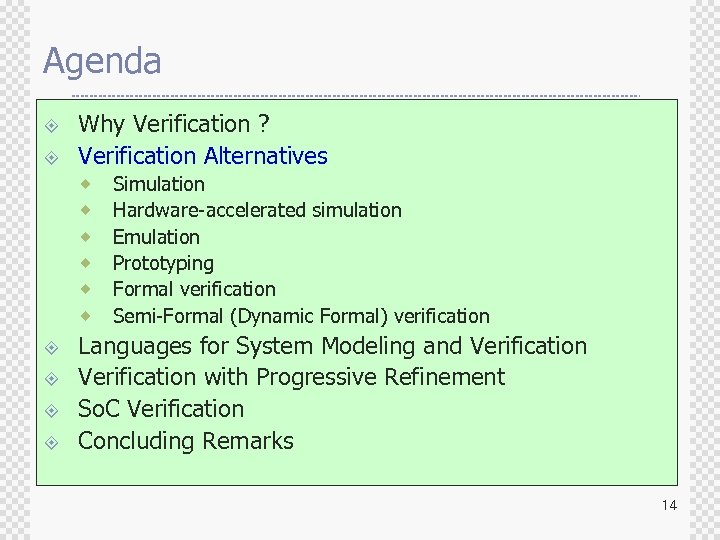

Agenda

- Why Verification?
- Verification Alternatives
  - Simulation
  - Hardware-accelerated simulation
  - Emulation
  - Prototyping
  - Formal verification
  - Semi-Formal (Dynamic Formal) verification
- Languages for System Modeling and Verification with Progressive Refinement
- SoC Verification
- Concluding Remarks

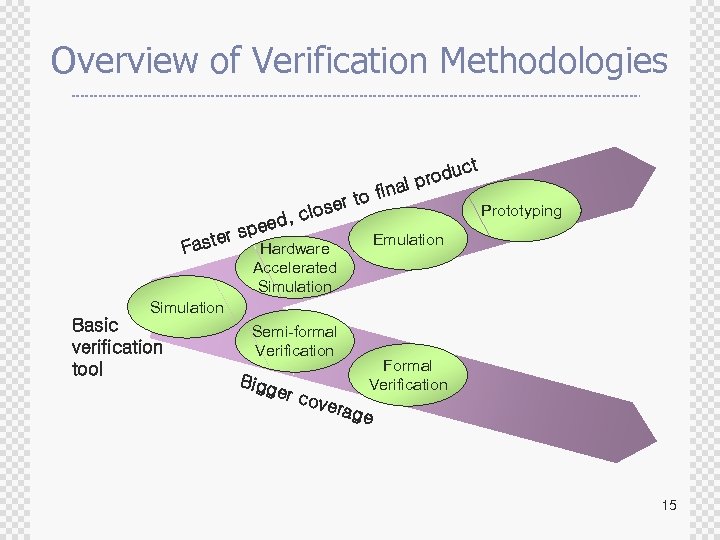

Overview of Verification Methodologies

Figure: simulation is the basic verification tool. One axis, "Faster speed, closer to final product", leads through Hardware-Accelerated Simulation and Emulation to Prototyping. The other axis, "Bigger coverage", leads through Semi-formal Verification to Formal Verification.

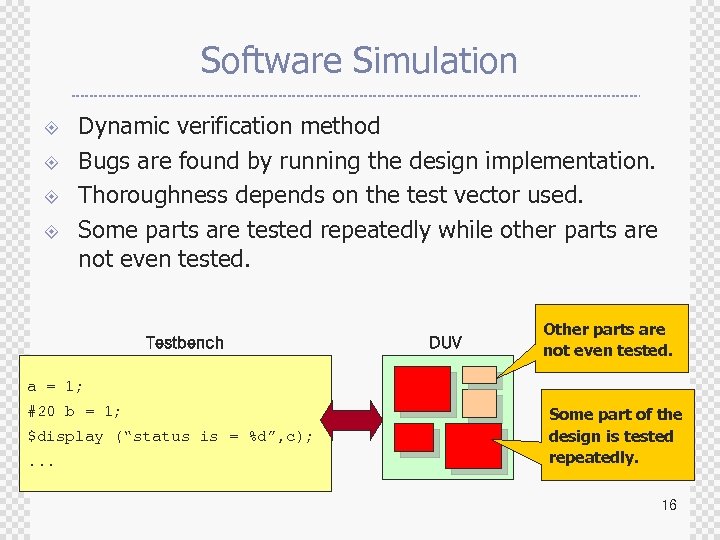

Software Simulation

- Dynamic verification method
- Bugs are found by running the design implementation.
- Thoroughness depends on the test vector used.
- Some parts are tested repeatedly while other parts are not even tested.

Figure: a testbench driving the DUV, annotated "Some part of the design is tested repeatedly" and "Other parts are not even tested". Testbench fragment: `a = 1; #20 b = 1; $display("status is = %d", c); ...`
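The point that thoroughness depends on the test vectors can be illustrated with a minimal Python sketch. The toy `duv` function, its branch names and the vector list are all hypothetical stand-ins for a real design and testbench; the sketch only counts how often each branch of the toy design is exercised.

```python
def duv(a, b, hits):
    """Toy design under verification: returns a code and records which branch ran."""
    if a and b:
        hits["both"] += 1
        return 3
    if a:
        hits["a_only"] += 1
        return 1
    if b:
        hits["b_only"] += 1
        return 2
    hits["none"] += 1
    return 0


def run_testbench(vectors):
    """Apply every (a, b) vector to the toy DUV and return per-branch hit counts."""
    hits = {"both": 0, "a_only": 0, "b_only": 0, "none": 0}
    for a, b in vectors:
        duv(a, b, hits)
    return hits


# A directed test set that keeps exercising the same branch.
vectors = [(1, 0), (1, 0), (1, 1), (1, 0)]
hits = run_testbench(vectors)
untested = [name for name, count in hits.items() if count == 0]
print("hits per branch:", hits)
print("never exercised:", untested)
```

Here one branch is hit three times while two branches are never reached, even though every test "passes".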

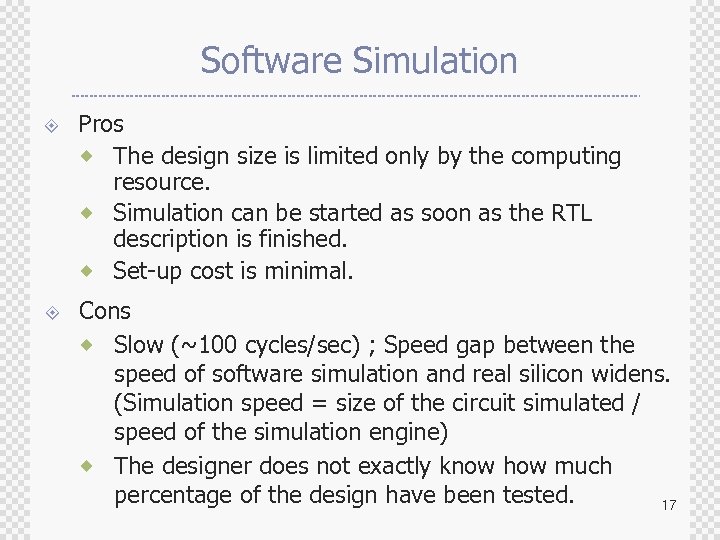

Software Simulation

- Pros
  - The design size is limited only by the computing resource.
  - Simulation can be started as soon as the RTL description is finished.
  - Set-up cost is minimal.
- Cons
  - Slow (~100 cycles/sec); the speed gap between software simulation and real silicon widens. (Simulation time grows with the size of the circuit simulated divided by the speed of the simulation engine.)
  - The designer does not know exactly what percentage of the design has been tested.

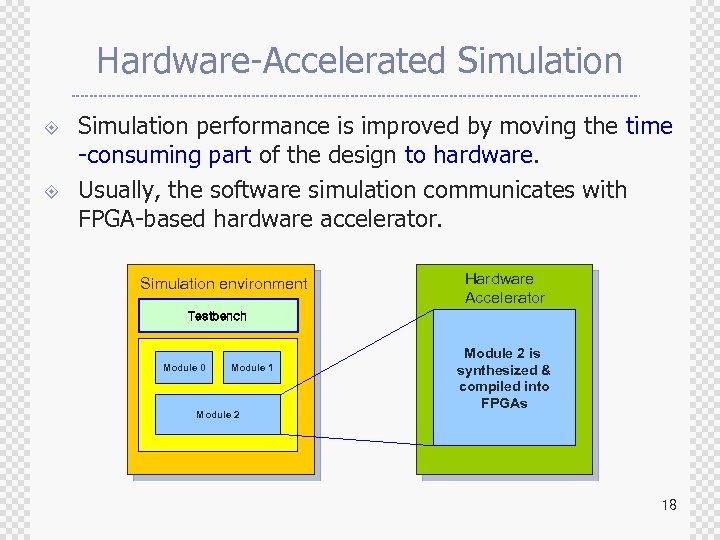

Hardware-Accelerated Simulation

- Simulation performance is improved by moving the time-consuming part of the design to hardware.
- Usually, the software simulation communicates with an FPGA-based hardware accelerator.

Figure: a simulation environment (Testbench, Module 0, Module 1, Module 2) next to a hardware accelerator; Module 2 is synthesized and compiled into FPGAs.

Hardware-Accelerated Simulation

- Pros
  - Fast (100 K cycles/sec)
  - Cheaper than hardware emulation
  - Debugging is easier as the circuit structure is unchanged.
  - Not an overhead: deployed as a stepping stone in the gradual refinement
- Cons (obstacles to overcome)
  - Set-up time overhead to map the RTL design into the hardware can be substantial.
  - SW-HW communication speed can degrade the performance.
  - Debugging of signals within the hardware can be difficult.

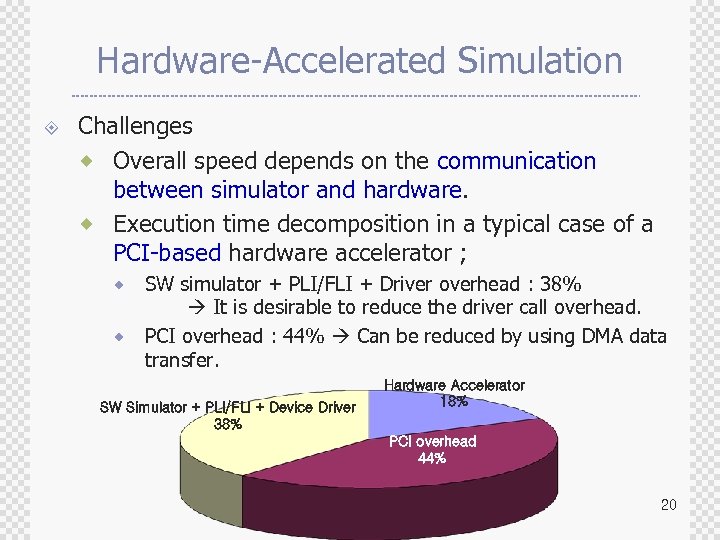

Hardware-Accelerated Simulation

- Challenges
  - Overall speed depends on the communication between simulator and hardware.
  - Execution time decomposition in a typical case of a PCI-based hardware accelerator:
    - SW simulator + PLI/FLI + device driver overhead: 38%. It is desirable to reduce the driver call overhead.
    - PCI overhead: 44%. Can be reduced by using DMA data transfer.
    - Hardware accelerator: 18%
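To see how much the PCI share limits the overall gain, the breakdown above can be plugged into an Amdahl's-law style estimate. This is a rough sketch: the 38/18/44 split is from the slide, while the 4x DMA improvement and the function name `overall_speedup` are hypothetical, chosen only for illustration.

```python
def overall_speedup(fractions, factors):
    """Amdahl-style estimate.

    fractions: share of total run time per component (should sum to 1.0).
    factors:   speedup applied to individual components (default 1.0).
    """
    new_time = sum(share / factors.get(name, 1.0) for name, share in fractions.items())
    return 1.0 / new_time


# Execution-time breakdown quoted on the slide.
breakdown = {"sw_pli_driver": 0.38, "accelerator": 0.18, "pci": 0.44}

# Hypothetical: DMA transfers make the PCI part 4x faster.
with_dma = overall_speedup(breakdown, {"pci": 4.0})

# Upper bound: PCI overhead removed entirely.
pci_free = overall_speedup(breakdown, {"pci": float("inf")})

print(f"4x faster PCI  -> {with_dma:.2f}x overall")
print(f"PCI cost zero  -> {pci_free:.2f}x overall")
```

Even with the PCI cost removed completely the run would be less than 2x faster, because the simulator and driver share remains; this is why the slide also asks for lower driver call overhead.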

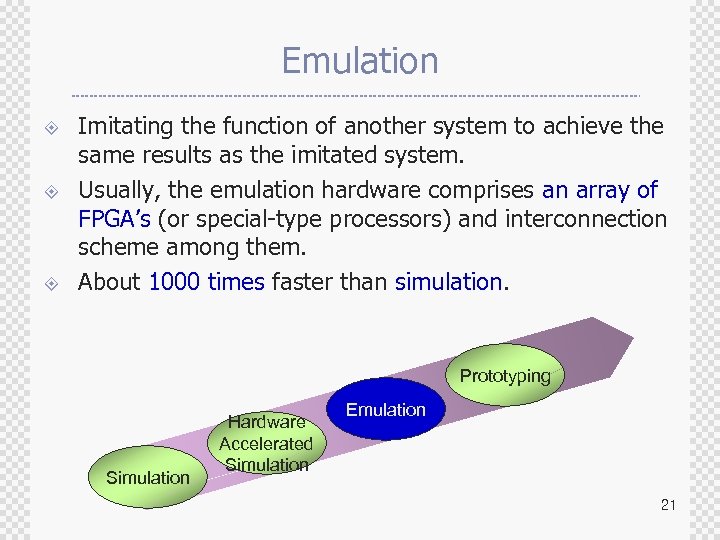

Emulation

- Imitating the function of another system to achieve the same results as the imitated system.
- Usually, the emulation hardware comprises an array of FPGAs (or special-type processors) and an interconnection scheme among them.
- About 1000 times faster than simulation.

Figure labels: Simulation, Hardware Accelerated Simulation, Emulation, Prototyping.

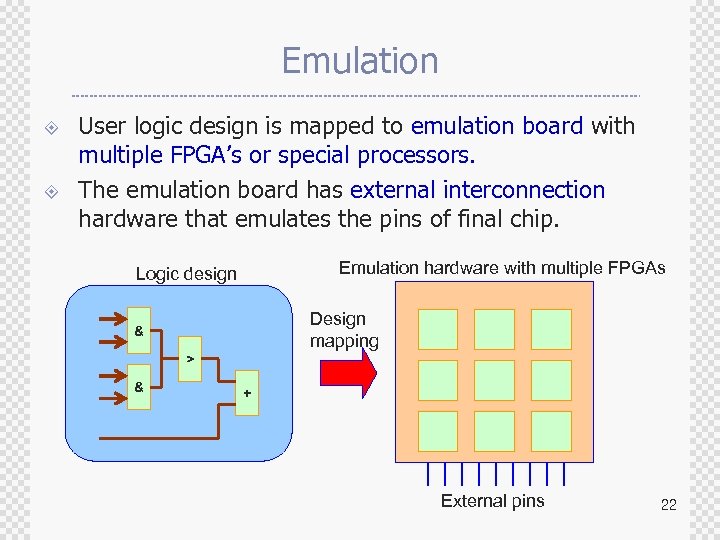

Emulation

- The user logic design is mapped to an emulation board with multiple FPGAs or special processors.
- The emulation board has external interconnection hardware that emulates the pins of the final chip.

Figure: a logic design going through design mapping onto emulation hardware with multiple FPGAs and external pins.

Emulation

- Pros
  - Fast (500 K cycles/sec)
  - Verification on the real target system.
- Cons
  - Setup time overhead to map the RTL design into hardware is very high.
  - Many FPGAs + resources for debugging -> high cost
  - The circuit partitioning algorithm and interconnection architecture limit the usable gate count.

Emulation

- Challenges
  - Efficient interconnection architecture and hardware mapping efficiency for speed and cost
  - RTL debugging facility with a reasonable amount of resources
  - Efficient partitioning algorithm for any given interconnection architecture
  - Reducing development time (to take advantage of more recent FPGAs)

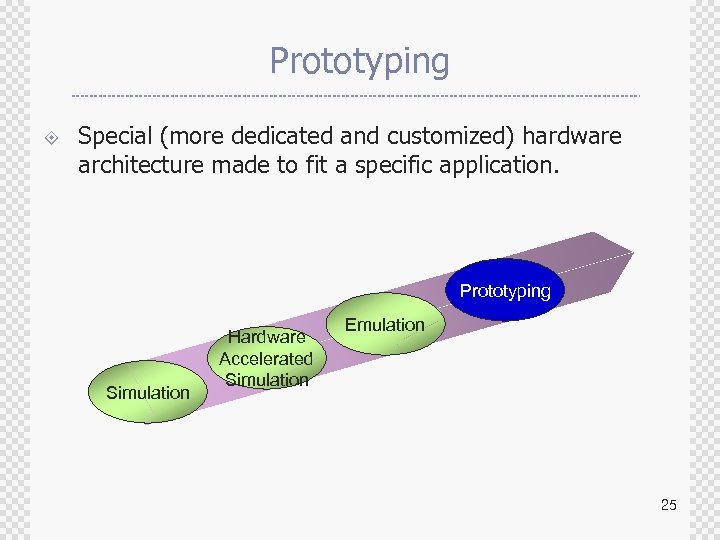

Prototyping

- Special (more dedicated and customized) hardware architecture made to fit a specific application.

Figure labels: Simulation, Hardware Accelerated Simulation, Emulation, Prototyping.

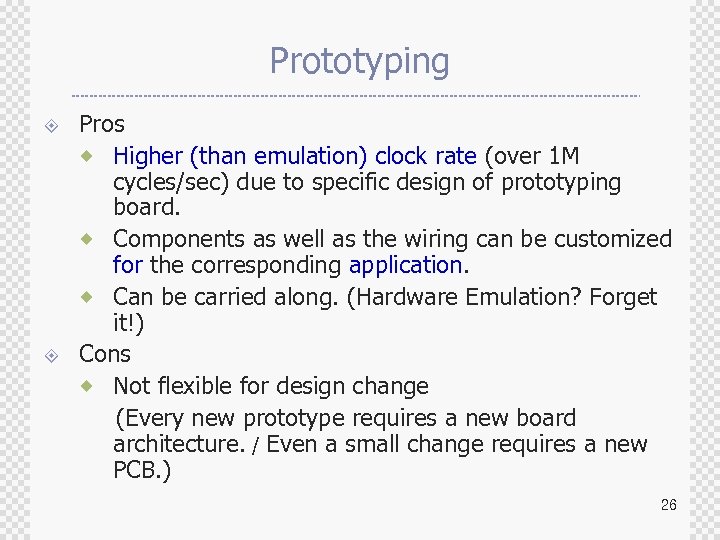

Prototyping

- Pros
  - Higher clock rate than emulation (over 1 M cycles/sec) due to the specific design of the prototyping board.
  - Components as well as the wiring can be customized for the corresponding application.
  - Can be carried along. (Hardware emulation? Forget it!)
- Cons
  - Not flexible for design change. (Every new prototype requires a new board architecture. Even a small change requires a new PCB.)

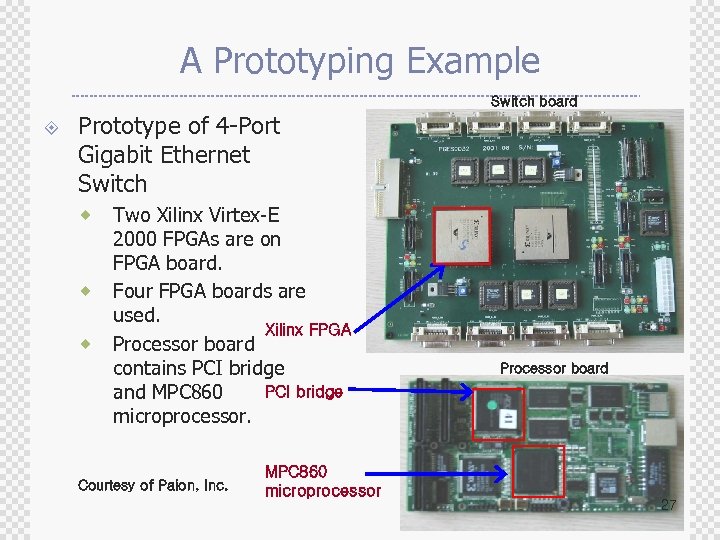

A Prototyping Example

- Prototype of a 4-Port Gigabit Ethernet Switch
  - Two Xilinx Virtex-E 2000 FPGAs are on each FPGA board.
  - Four FPGA boards are used.
  - The processor board contains a PCI bridge and an MPC860 microprocessor.

Figure labels: Switch board, Xilinx FPGA, MPC860 microprocessor, Processor board. Courtesy of Paion, Inc.

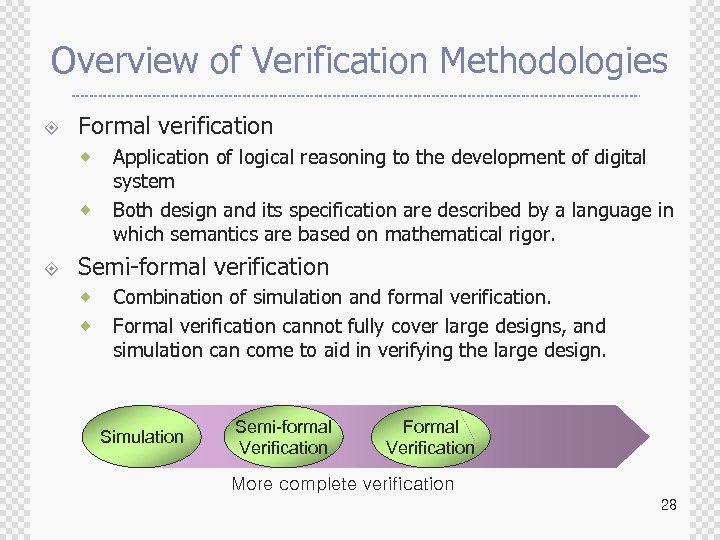

Overview of Verification Methodologies

- Formal verification
  - Application of logical reasoning to the development of digital systems
  - Both the design and its specification are described by a language in which semantics are based on mathematical rigor.
- Semi-formal verification
  - Combination of simulation and formal verification.
  - Formal verification cannot fully cover large designs, and simulation can come to aid in verifying the large design.

Figure: Simulation, Semi-formal Verification, Formal Verification, ordered toward more complete verification.

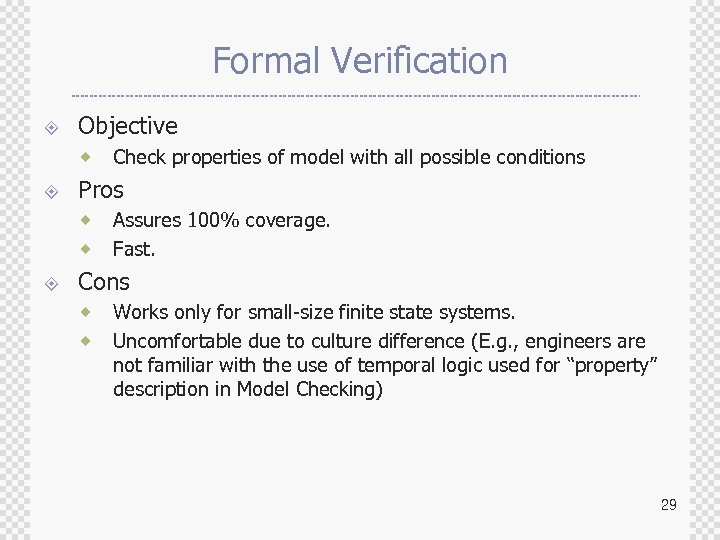

Formal Verification

- Objective
  - Check properties of the model under all possible conditions
- Pros
  - Assures 100% coverage.
  - Fast.
- Cons
  - Works only for small-size finite state systems.
  - Uncomfortable due to culture difference (e.g., engineers are not familiar with the temporal logic used for "property" description in Model Checking)

Formal Verification Techniques

- Symbolic Simulation
- Equivalence Checking
- Theorem Proving
- Model Checking

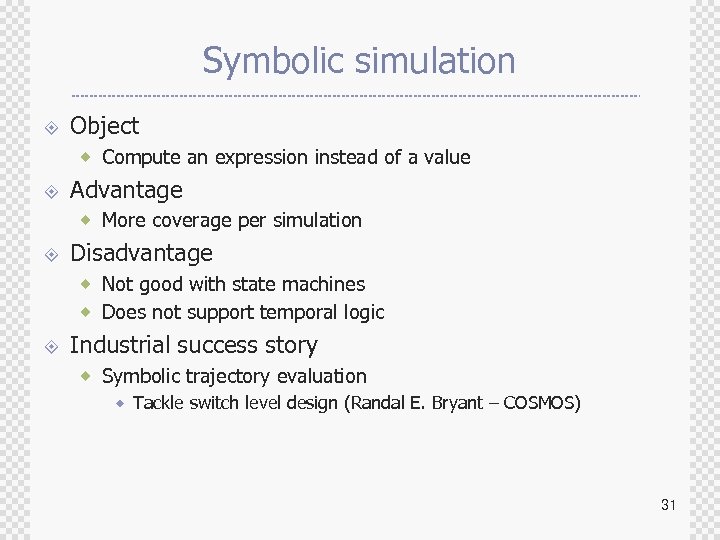

Symbolic simulation

- Objective
  - Compute an expression instead of a value
- Advantage
  - More coverage per simulation
- Disadvantage
  - Not good with state machines
  - Does not support temporal logic
- Industrial success story
  - Symbolic trajectory evaluation
  - Tackles switch-level design (Randal E. Bryant, COSMOS)

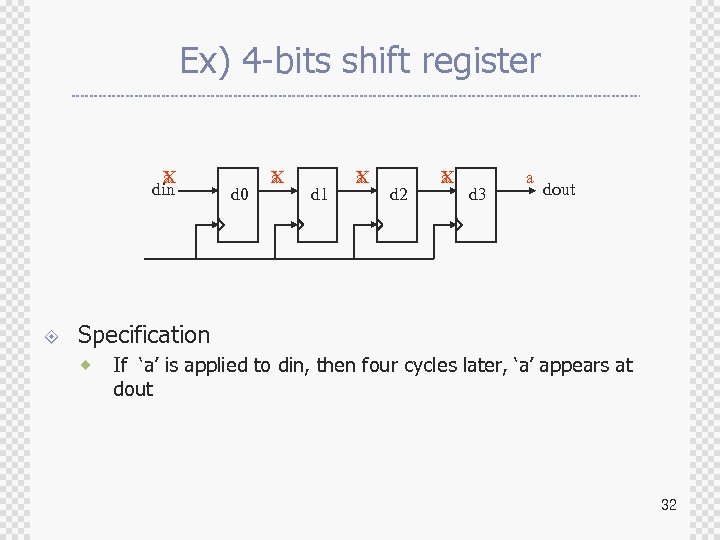

Ex) 4-bit shift register

Figure: din -> d0 -> d1 -> d2 -> d3 -> dout, where each stage holds X (unknown) until the symbol 'a' reaches it.

- Specification
  - If 'a' is applied to din, then four cycles later, 'a' appears at dout
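The shift-register example can be mimicked with a very small symbolic simulation in Python. This is only a sketch: symbols are plain strings, `"X"` stands for an unknown value, and the helper name `shift_register_step` is hypothetical. A single run with the symbol `"a"` covers every concrete value that `a` could take.

```python
X = "X"  # unknown / don't-care value


def shift_register_step(state, din):
    """One clock edge of a 4-bit shift register: din -> d0 -> d1 -> d2 -> d3."""
    return (din,) + state[:-1]


state = (X, X, X, X)      # (d0, d1, d2, d3), all unknown at start
inputs = ["a", X, X, X]   # apply the symbol 'a' once, then unknowns

trace = []
for din in inputs:
    state = shift_register_step(state, din)
    trace.append(state)

dout = state[-1]          # dout is taken from the last stage, d3
for cycle, regs in enumerate(trace, start=1):
    print(f"cycle {cycle}: d0..d3 = {regs}")
print("dout after four cycles:", dout)
```

Because the result is the expression `a` rather than a 0 or a 1, the specification is checked for both input values in one simulation run.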

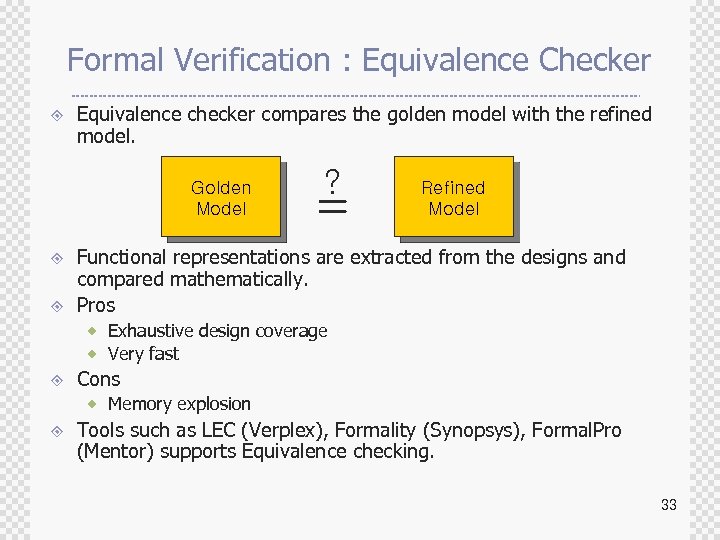

Formal Verification: Equivalence Checker

- An equivalence checker compares the golden model with the refined model. (Figure: Golden Model =? Refined Model)
- Functional representations are extracted from the designs and compared mathematically.
- Pros
  - Exhaustive design coverage
  - Very fast
- Cons
  - Memory explosion
- Tools such as LEC (Verplex), Formality (Synopsys) and FormalPro (Mentor) support equivalence checking.

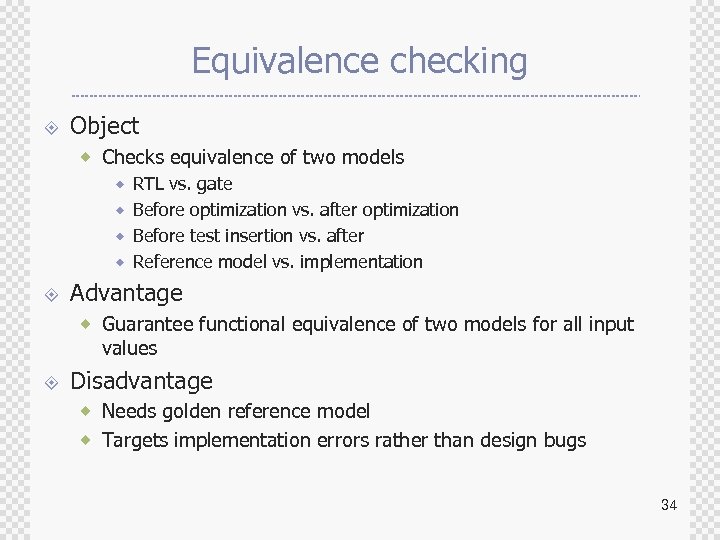

Equivalence checking

- Objective
  - Checks equivalence of two models
    - RTL vs. gate
    - Before optimization vs. after optimization
    - Before test insertion vs. after
    - Reference model vs. implementation
- Advantage
  - Guarantees functional equivalence of two models for all input values
- Disadvantage
  - Needs a golden reference model
  - Targets implementation errors rather than design bugs
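The idea of comparing two models for all input values can be sketched in Python with a brute-force check over a tiny combinational function. The three functions below are hypothetical examples, and exhaustive enumeration is used only because the input count is small; commercial equivalence checkers work on canonical or SAT-based representations instead of enumerating inputs.

```python
from itertools import product


def golden(a, b, c):
    """Reference model: sum-of-products form."""
    return (a and b) or (a and c)


def refined(a, b, c):
    """Optimized model: factored form of the same function."""
    return a and (b or c)


def buggy(a, b, c):
    """Faulty refinement that drops the 'a and c' term."""
    return a and b


def equivalent(f, g, n_inputs):
    """Compare f and g on every input combination.

    Returns (True, None) if they agree everywhere,
    otherwise (False, first_mismatching_input).
    """
    for bits in product([False, True], repeat=n_inputs):
        if bool(f(*bits)) != bool(g(*bits)):
            return False, bits
    return True, None


print(equivalent(golden, refined, 3))
print(equivalent(golden, buggy, 3))
```

The second call returns a concrete input on which the two models differ, which is the kind of counter-example a designer would debug from.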

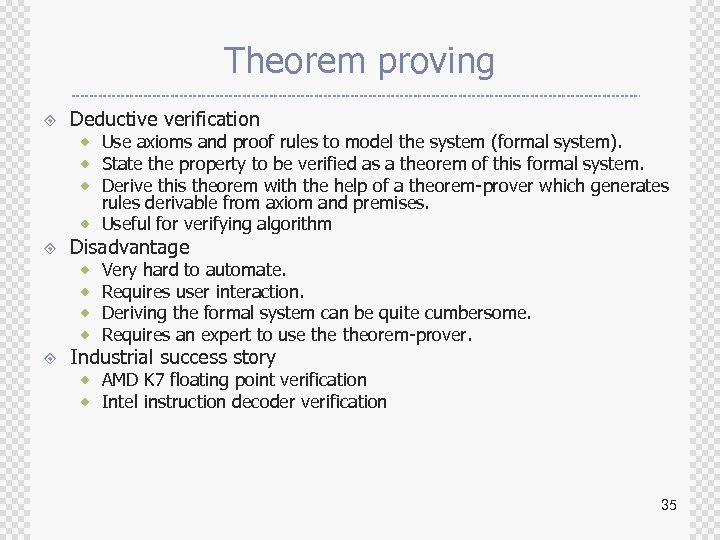

Theorem proving

- Deductive verification
  - Use axioms and proof rules to model the system (formal system).
  - State the property to be verified as a theorem of this formal system.
  - Derive this theorem with the help of a theorem prover, which generates rules derivable from axioms and premises.
  - Useful for verifying algorithms
- Disadvantage
  - Very hard to automate. Requires user interaction.
  - Deriving the formal system can be quite cumbersome.
  - Requires an expert to use the theorem prover.
- Industrial success story
  - AMD K7 floating point verification
  - Intel instruction decoder verification

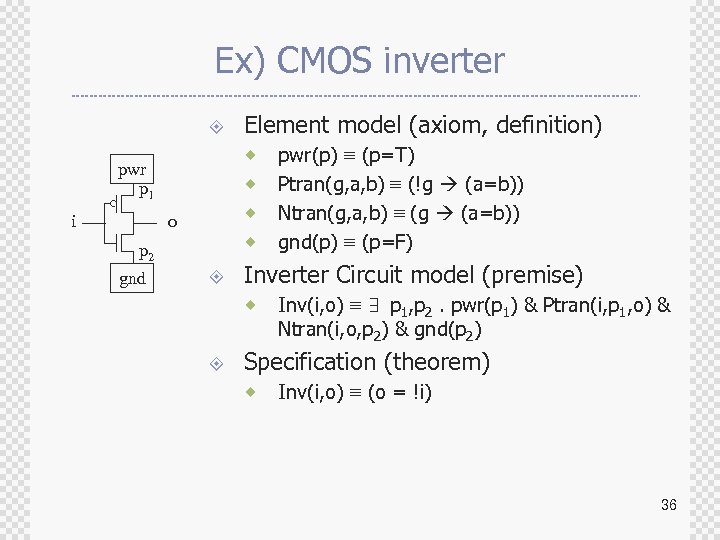

Ex) CMOS inverter

Figure: inverter circuit with nodes pwr, p1, i, o, p2, gnd.

- Element model (axiom, definition)
  - pwr(p) ≡ (p = T)
  - Ptran(g, a, b) ≡ (!g ⇒ (a = b))
  - Ntran(g, a, b) ≡ (g ⇒ (a = b))
  - gnd(p) ≡ (p = F)
- Inverter circuit model (premise)
  - Inv(i, o) ≡ ∃ p1, p2. pwr(p1) & Ptran(i, p1, o) & Ntran(i, o, p2) & gnd(p2)
- Specification (theorem)
  - Inv(i, o) ≡ (o = !i)
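The inverter theorem is small enough to check by enumeration. The Python sketch below is not a theorem prover; it simply evaluates the definitions over all Boolean values. It assumes the transistor models are implications, `!g ⇒ (a = b)` for the P-type and `g ⇒ (a = b)` for the N-type, because the connective between the two parts did not survive in the extracted slide text.

```python
from itertools import product

BOOL = (False, True)


def implies(p, q):
    return (not p) or q


def pwr(p):
    return p is True


def gnd(p):
    return p is False


def ptran(g, a, b):
    # P-type transistor conducts (a = b) when the gate is low. Assumed implication.
    return implies(not g, a == b)


def ntran(g, a, b):
    # N-type transistor conducts (a = b) when the gate is high. Assumed implication.
    return implies(g, a == b)


def inv(i, o):
    """Circuit model: there exist internal nodes p1, p2 satisfying all element models."""
    return any(
        pwr(p1) and ptran(i, p1, o) and ntran(i, o, p2) and gnd(p2)
        for p1, p2 in product(BOOL, BOOL)
    )


# Specification: Inv(i, o) holds exactly when o = !i.
theorem_holds = all(inv(i, o) == (o == (not i)) for i, o in product(BOOL, BOOL))
print("Inv(i, o) == (o = !i) for all i, o:", theorem_holds)
```

A theorem prover would derive the same result by rewriting rather than by enumeration, which is what allows deductive methods to scale to parameterized or infinite-state descriptions.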

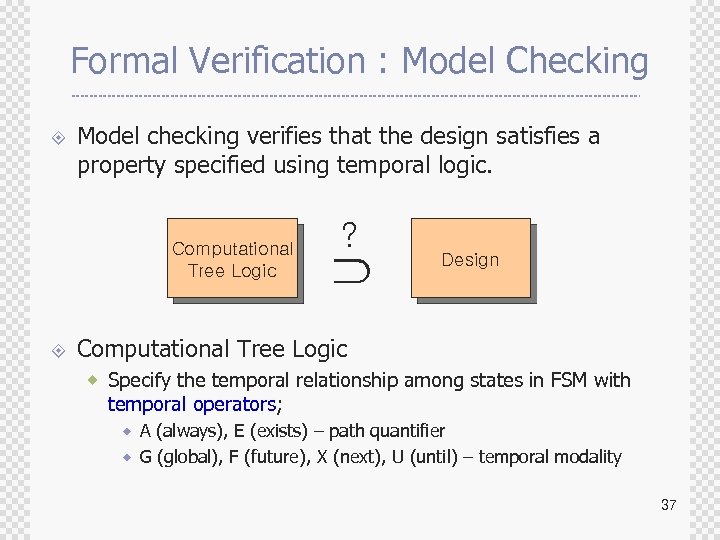

Formal Verification: Model Checking

- Model checking verifies that the design satisfies a property specified using temporal logic. (Figure: a Computation Tree Logic property checked against the Design)
- Computation Tree Logic (CTL)
  - Specifies the temporal relationship among states in an FSM with temporal operators:
  - A (always), E (exists): path quantifiers
  - G (global), F (future), X (next), U (until): temporal modalities

Model checking

- Objective
  - Check properties of the model under all possible conditions
- Advantage
  - Can be fully automated
  - If the property does not hold, a counter-example is generated
  - Relatively easy to use
- Problem
  - Works (well) only for finite state systems.
  - Needs abstraction or extraction, which tend to cause errors

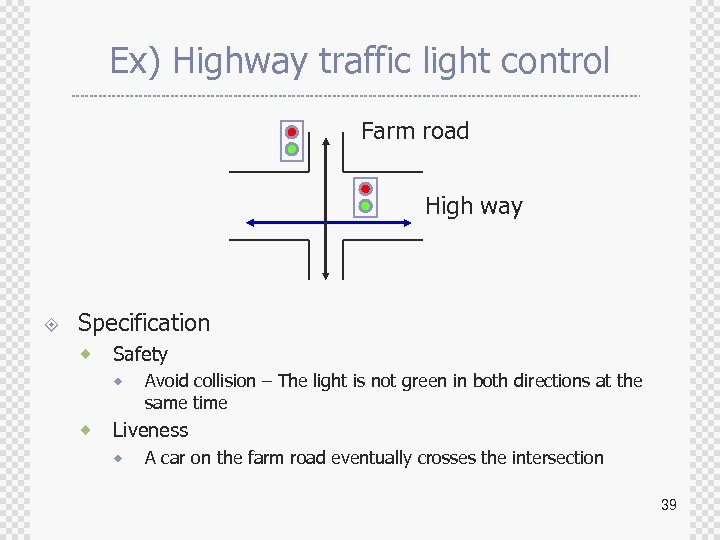

Ex) Highway traffic light control

Figure: an intersection of a farm road and a highway.

- Specification
  - Safety
    - Avoid collision: the light is not green in both directions at the same time
  - Liveness
    - A car on the farm road eventually crosses the intersection
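The safety property of the traffic-light example can be checked with a minimal explicit-state search in Python. Everything about the controller here is a hypothetical model invented for illustration (the slide does not give the FSM): a state is a pair `(highway_light, farm_light)`, and `next_buggy` injects a fault on purpose. The liveness property is not covered by this sketch.

```python
from collections import deque


def next_ok(state):
    """Hypothetical correct controller; returns the set of successor states."""
    if state == ("green", "red"):
        # No car on the farm road: stay. Car waiting: highway goes yellow.
        return {("green", "red"), ("yellow", "red")}
    if state == ("yellow", "red"):
        return {("red", "green")}
    if state == ("red", "green"):
        return {("red", "green"), ("red", "yellow")}
    return {("green", "red")}  # from ("red", "yellow") or any other state


def next_buggy(state):
    """Same controller with an injected bug."""
    if state == ("yellow", "red"):
        return {("green", "green")}
    return next_ok(state)


def safe(state):
    """Safety: the light is not green in both directions at the same time."""
    return not (state[0] == "green" and state[1] == "green")


def check_invariant(init, next_fn, holds):
    """Breadth-first reachability. Returns None if 'holds' is true in every
    reachable state, otherwise a counter-example path from init."""
    parent = {init: None}
    queue = deque([init])
    while queue:
        s = queue.popleft()
        if not holds(s):
            path = []
            while s is not None:
                path.append(s)
                s = parent[s]
            return path[::-1]
        for t in sorted(next_fn(s)):
            if t not in parent:
                parent[t] = s
                queue.append(t)
    return None


init = ("green", "red")
print("correct controller:", check_invariant(init, next_ok, safe))
print("buggy controller:  ", check_invariant(init, next_buggy, safe))
```

For the buggy controller the search returns the path that leads to the unsafe state, mirroring the counter-example generation listed as an advantage of model checking.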

Formal Verification

- Challenges
  - The most critical issue of formal verification is the "state explosion" problem.
  - The application of current formal methods is limited to designs of up to 500 flip-flops.
  - Research directions for complexity reduction include:
    - Reachability analysis
    - Design state abstraction
    - Design decomposition
    - State projection
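A short calculation shows why flip-flop count is the limiting factor. The sketch below only counts potential states for the 500 flip-flops mentioned above; the enumeration rate of one billion states per second is a hypothetical figure used to give a sense of scale.

```python
flip_flops = 500
states = 2 ** flip_flops          # each flip-flop doubles the potential state space
digits = len(str(states))

# Hypothetical explicit enumeration rate: 10**9 states per second.
rate = 10 ** 9
seconds_per_year = 60 * 60 * 24 * 365
years = states // (rate * seconds_per_year)

print(f"2**{flip_flops} has {digits} decimal digits")
print(f"explicit enumeration would take about 10**{len(str(years)) - 1} years")
```

This is why practical tools rely on symbolic representations and on the reduction techniques listed above rather than visiting states one by one.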

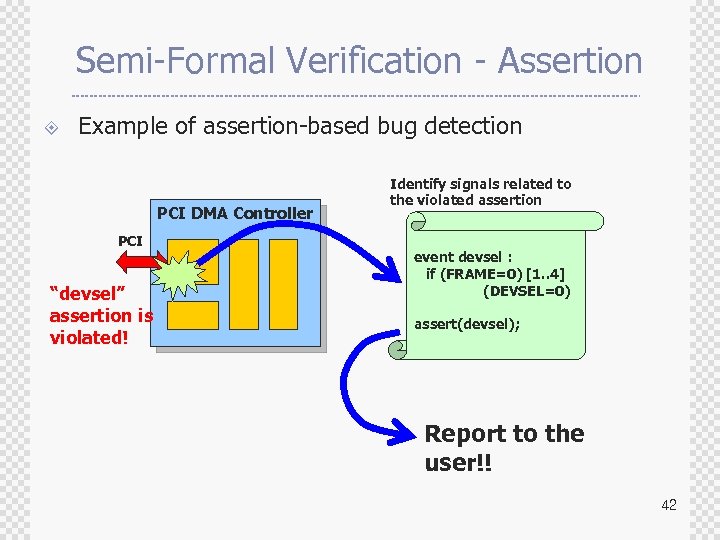

Semi-Formal Verification - Assertion

- Assertion-based verification (ABV)
  - An "assertion" is a statement on the intended behavior of a design.
  - The purpose of an assertion is to ensure consistency between the designer's intention and the implementation.
- Key features of assertions
  - 1. Error detection: if the assertion is violated, it is detected by the simulator.
  - 2. Error isolation: the signals related to the violated assertion are identified.
  - 3. Error notification: the source of the error is reported to the user.

Semi-Formal Verification - Assertion

- Example of assertion-based bug detection

Figure: a PCI DMA controller on a PCI bus. The "devsel" assertion is violated, the signals related to the violated assertion are identified, and the violation is reported to the user.

Assertion code: `event devsel : if (FRAME=0) [1..4] (DEVSEL=0) assert(devsel);`
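The `devsel` assertion can be approximated by a trace checker in Python. This is a sketch under stated assumptions: the property is read as "whenever FRAME is 0, DEVSEL must be 0 at some point 1 to 4 cycles later", it is evaluated on every cycle where FRAME is 0 rather than only on the falling edge, and the function name `check_devsel` and the traces are hypothetical.

```python
def check_devsel(trace, lo=1, hi=4):
    """trace: list of (frame, devsel) samples, one per clock cycle.

    Returns the cycles at which FRAME was 0 but DEVSEL did not go to 0
    within lo..hi cycles. Windows that run past the end of the trace
    are treated as undecided and are not reported.
    """
    violations = []
    for t, (frame, _devsel) in enumerate(trace):
        if frame != 0:
            continue
        window = [d for _f, d in trace[t + lo : t + hi + 1]]
        if 0 in window:
            continue                      # DEVSEL responded in time
        if len(window) == hi - lo + 1:    # full window seen, no response
            violations.append(t)
    return violations


# FRAME asserted at cycle 1, DEVSEL answers at cycle 3: no violation.
good = [(1, 1), (0, 1), (0, 1), (0, 0), (0, 0), (1, 1)]

# FRAME asserted at cycle 1, DEVSEL never answers: violation at cycle 1.
bad = [(1, 1), (0, 1), (1, 1), (1, 1), (1, 1), (1, 1), (1, 1)]

print("good trace violations:", check_devsel(good))
print("bad trace violations: ", check_devsel(bad))
```

The reported cycle number is the starting point for the error isolation step, since it identifies which FRAME event went unanswered.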

![Assertion Example - Arbiter](https://present5.com/presentation/48939eaf1f2d59e2ab9316252ca9b04c/image-43.jpg)

Assertion Example - Arbiter

Figure: an arbiter with request/acknowledge pairs req[0], ack[0], req[1], ack[1]. Assertion code: `for(i=0; i<arbit_channel; i++) { event ...`

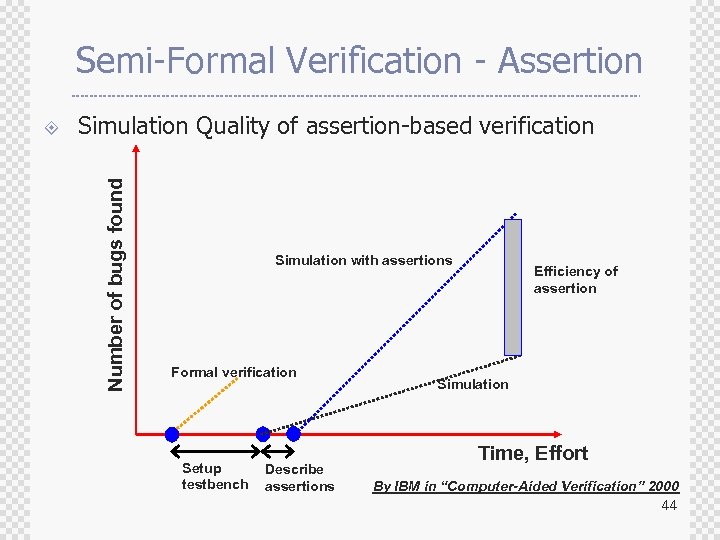

Semi-Formal Verification - Assertion

- Quality of assertion-based verification

Figure: number of bugs found versus time and effort, with curves for simulation, simulation with assertions, and formal verification. Marked phases: setup testbench, describe assertions, simulation. The difference between the curves is labeled "Efficiency of assertion". Source: IBM, "Computer-Aided Verification", 2000.

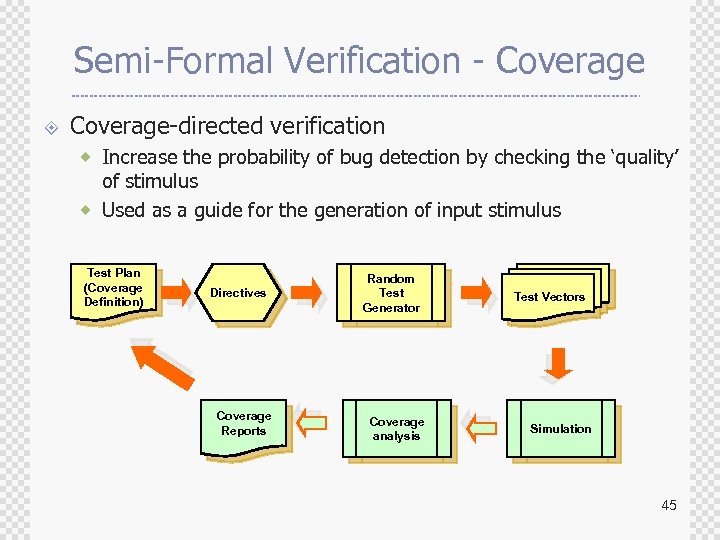

Semi-Formal Verification - Coverage

- Coverage-directed verification
  - Increases the probability of bug detection by checking the 'quality' of stimulus
  - Used as a guide for the generation of input stimulus

Figure: a loop connecting Test Plan (Coverage Definition), Directives, Random Test Generator, Test Vectors, Simulation, Coverage Analysis and Coverage Reports.
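The feedback loop in the figure can be sketched in Python. All names here are hypothetical: the coverage bins stand in for a test plan, `generate` for the random test generator, and `simulate` for the simulator, which in this toy simply reports the bin a test exercised. The "directive" is the set of bins that are still uncovered.

```python
import random

# Hypothetical coverage definition: operation x transfer size.
BINS = [(op, size) for op in ("read", "write") for size in (1, 2, 4)]


def generate(rng, directive):
    """Random test generator. With a directive it only draws from uncovered bins."""
    candidates = sorted(directive) if directive else BINS
    return rng.choice(candidates)


def simulate(test):
    """Stand-in for simulation: returns the coverage bin the test exercised."""
    return test


def run(max_tests, directed, seed=0):
    """Returns (number of tests run, coverage fraction reached)."""
    rng = random.Random(seed)
    covered = set()
    for n in range(1, max_tests + 1):
        uncovered = set(BINS) - covered          # coverage analysis
        test = generate(rng, uncovered if directed else None)
        covered.add(simulate(test))
        if len(covered) == len(BINS):
            return n, 1.0
    return max_tests, len(covered) / len(BINS)


print("directed:  ", run(100, directed=True))
print("undirected:", run(100, directed=False))
```

With the directive, each test hits a new bin, so full coverage takes exactly as many tests as there are bins; purely random generation can never do better and usually needs more. In a real flow the mapping from a coverage hole back to generator constraints is the hard part.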

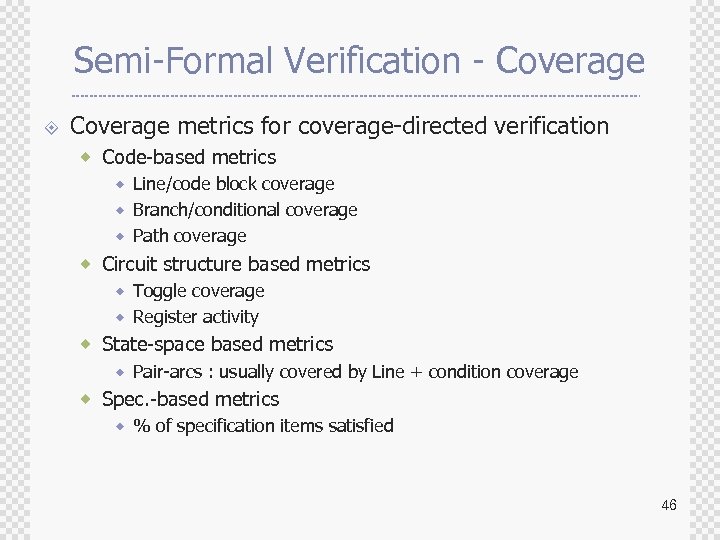

Semi-Formal Verification - Coverage

- Coverage metrics for coverage-directed verification
  - Code-based metrics
    - Line/code block coverage
    - Branch/conditional coverage
    - Path coverage
  - Circuit structure based metrics
    - Toggle coverage
    - Register activity
  - State-space based metrics
    - Pair-arcs: usually covered by line + condition coverage
  - Spec.-based metrics
    - % of specification items satisfied

Semi-Formal Verification - Coverage

- Coverage checking tools
  - VeriCover (Veritools)
  - SureCov (Verisity)
  - Coverscan (Cadence)
  - HDLScore, VeriCov (Summit Design)
  - HDLCover, VeriSure (TransEDA)
  - Polaris (Synopsys)
  - Covermeter (Synopsys)

Semi-Formal Verification

- Pros
  - The designer can measure the coverage of the test environment as the formal properties are checked during simulation.
- Cons
  - The simulation speed is degraded as the properties are checked during simulation.
- Challenges
  - There is no unified testbench description method.
  - It is difficult to guide the direction of test vectors to increase the coverage of the design.
  - Development of a more efficient coverage metric to represent the behavior of the design.

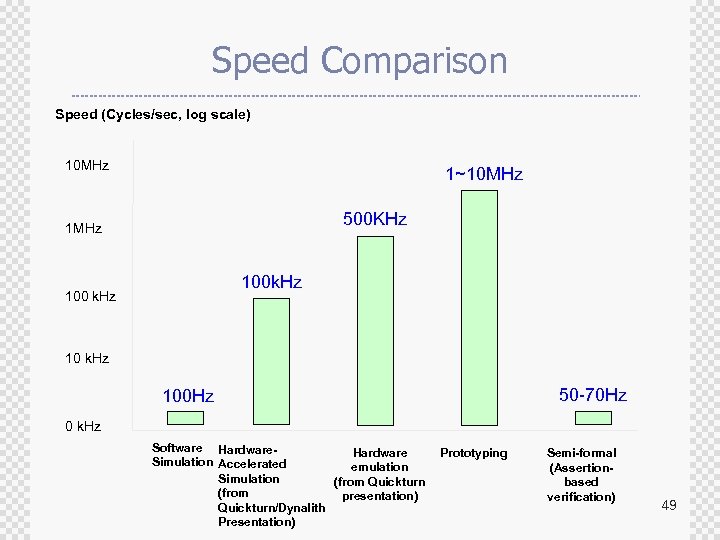

Speed Comparison

[Figure: bar chart of verification speed (cycles/sec, log scale, axis up to 10 MHz) for software simulation, hardware-accelerated simulation (from Quickturn/Dynalith presentation), emulation (from Quickturn presentation), prototyping, and semi-formal (assertion-based) verification. Value labels on the chart: 50-70 Hz, 100 kHz, 500 kHz, 1~10 MHz.]

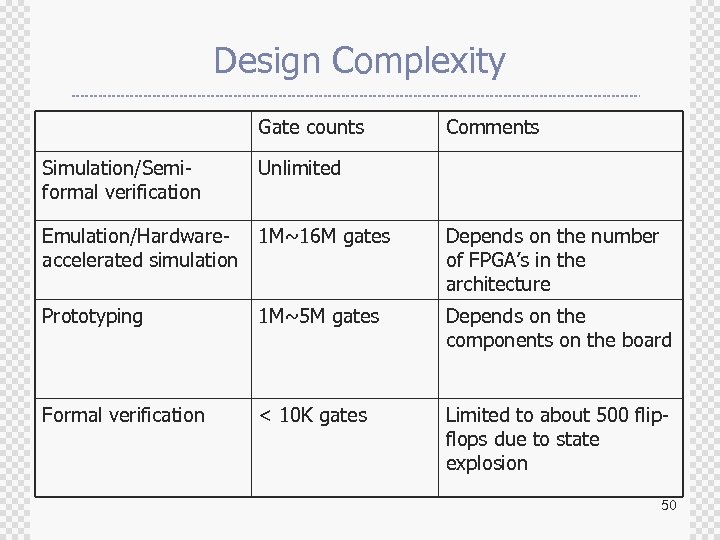

Design Complexity

| Method | Gate counts | Comments |
| --- | --- | --- |
| Simulation / Semi-formal verification | Unlimited | |
| Emulation / Hardware-accelerated simulation | 1 M~16 M gates | Depends on the number of FPGAs in the architecture |
| Prototyping | 1 M~5 M gates | Depends on the components on the board |
| Formal verification | < 10 K gates | Limited to about 500 flip-flops due to state explosion |

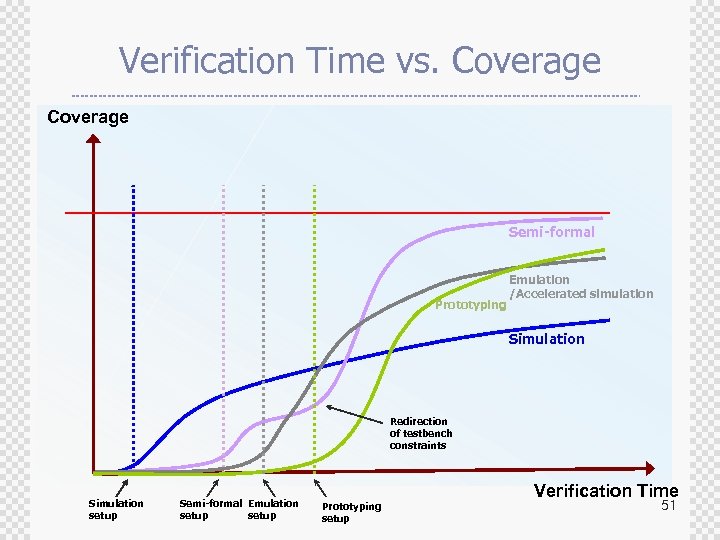

Verification Time vs. Coverage

[Figure: coverage plotted against verification time for simulation, semi-formal verification, emulation/accelerated simulation, and prototyping. Annotations: simulation setup, semi-formal setup, emulation setup, prototyping setup, redirection of testbench constraints.]

Agenda

- Why Verification?
- Verification Alternatives
- Languages for System Modeling and Verification
  - System modeling languages
  - Testbench automation & assertion languages
- Verification with Progressive Refinement
- SoC Verification
- Concluding Remarks

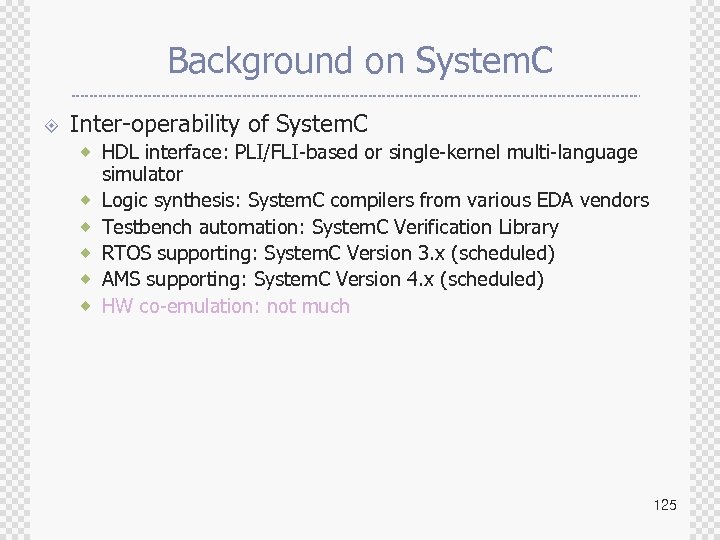

Accellera

- Formed in 2000 through the unification of Open Verilog International and VHDL International to identify new standards, develop standards and formats, and foster the adoption of new methodologies.

ACCELLERA APPROVES FOUR NEW DESIGN VERIFICATION STANDARDS

- June 2, 2003 - Accellera, the electronics industry organization focused on language-based electronic design standards, approved four new standards for language-based design verification: Property Specification Language (PSL) 1.01, Standard Co-Emulation Application Programming Interface (SCE-API) 1.0, SystemVerilog 3.1, and Verilog-AMS 2.1.

Three different ways of specifying assertions in Verilog designs

- OVL (Open Verification Library)
- PSL (Property Specification Language)
- Native assertion construct in SystemVerilog

Accellera's PSL (Property Specification Language)

- Gives the design architect a standard means of specifying design properties using a concise syntax with clearly defined formal semantics.
- Enables the RTL implementer to capture design intent in a verifiable form, while enabling the verification engineer to validate that the implementation satisfies its specification with dynamic (that is, simulation) and static (that is, formal) verification.
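To make the idea of a dynamically checked property concrete, here is a minimal plain C++ sketch of a monitor for the property "every `req` is followed by `ack` within a bounded number of cycles". This is not PSL, OVL, or SystemVerilog syntax; the class name `ReqAckMonitor` and its sampling interface are hypothetical stand-ins for what a simulator would evaluate once per clock.

```cpp
#include <cassert>

// Simulation-time check of a simple temporal property: a request sampled in
// cycle t must see an acknowledge in one of cycles t+1 .. t+max_latency.
// Simplification (assumed): a new request arriving while one is already
// pending is not tracked separately.
class ReqAckMonitor {
public:
    explicit ReqAckMonitor(unsigned max_latency) : max_latency_(max_latency) {}

    // Call once per clock with the sampled signal values.
    // Returns false from the cycle in which the property is first violated.
    bool sample(bool req, bool ack) {
        if (pending_) {
            ++waited_;
            if (ack) {
                pending_ = false;          // request satisfied in time
            } else if (waited_ >= max_latency_) {
                failed_ = true;            // deadline passed without ack
                pending_ = false;
            }
        }
        if (req && !pending_) {
            pending_ = true;               // start timing a new request
            waited_ = 0;
        }
        return !failed_;
    }

private:
    unsigned max_latency_;
    unsigned waited_ = 0;
    bool pending_ = false;
    bool failed_ = false;
};
```

The same property stated once in a property language can be handed to both a simulator and a formal tool, which is the reuse that the slide attributes to PSL; a hand-written monitor like this one only covers the simulation side.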

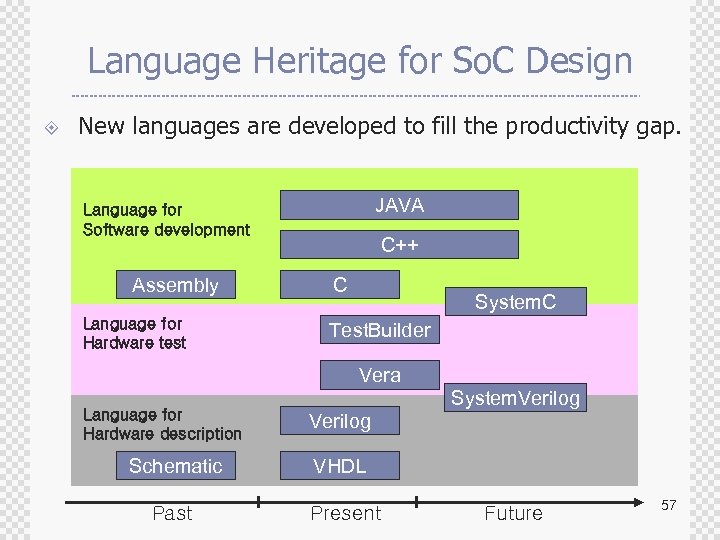

Language Heritage for SoC Design

- New languages are developed to fill the productivity gap.

[Figure: timeline (past, present, future) of three language families: languages for software development, languages for hardware test, and languages for hardware description. Languages shown: Assembly, C, C++, Java, SystemC, TestBuilder, Vera, Schematic, VHDL, SystemVerilog.]

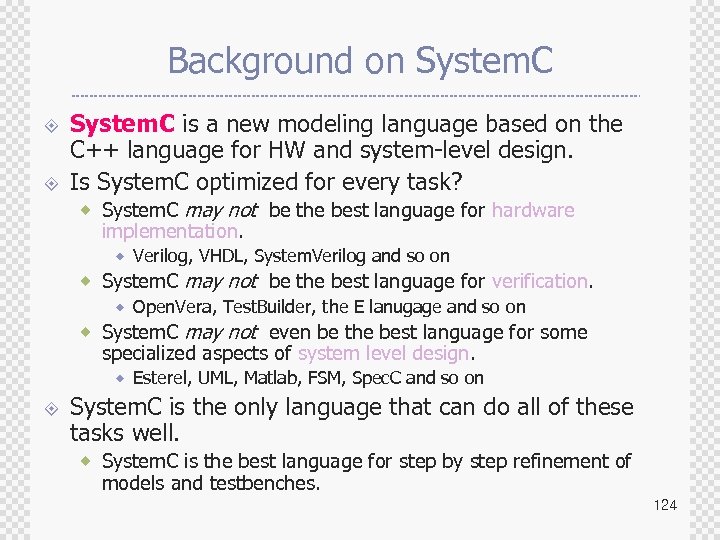

SystemC

- SystemC is a modeling platform consisting of
  - A set of C++ class libraries,
  - Including a simulation kernel that supports hardware modeling concepts at the system level, behavioral level, and register transfer level.
- SystemC enables us to effectively create
  - A cycle-accurate model of the software algorithm,
  - Hardware architecture, and
  - Interfaces of a System-on-a-Chip.
- A program in SystemC can be
  - An executable specification of the system.

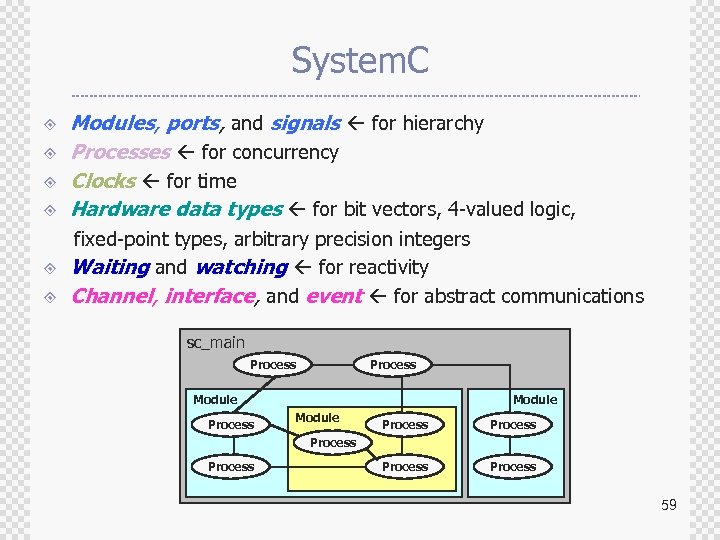

SystemC

- Modules, ports, and signals for hierarchy
- Processes for concurrency
- Clocks for time
- Hardware data types for bit vectors, 4-valued logic, fixed-point types, arbitrary precision integers
- Waiting and watching for reactivity
- Channel, interface, and event for abstract communications

[Figure: sc_main, Module, and Process blocks.]

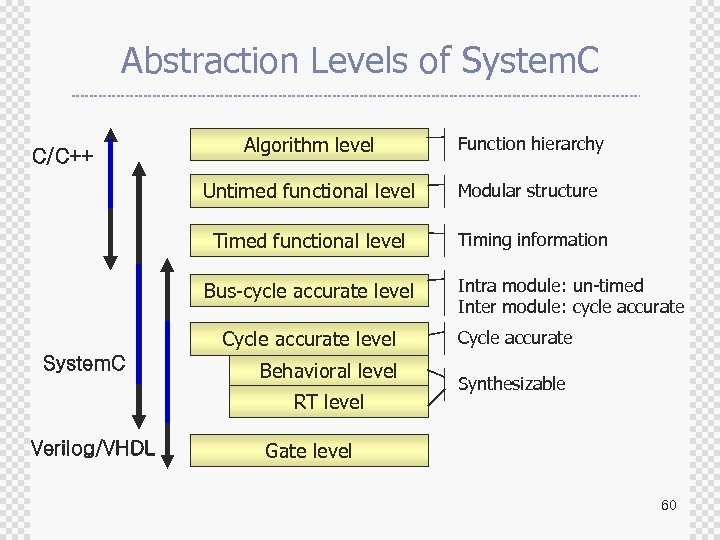

Abstraction Levels of SystemC

[Figure: abstraction levels from high to low: algorithm level, untimed functional level, timed functional level, bus-cycle accurate level, cycle accurate level, behavioral level, RT level, gate level. Language ranges shown: C/C++, SystemC, Verilog/VHDL. Annotations: function hierarchy; modular structure; timing information; intra module: un-timed, inter module: cycle accurate; cycle accurate; synthesizable.]

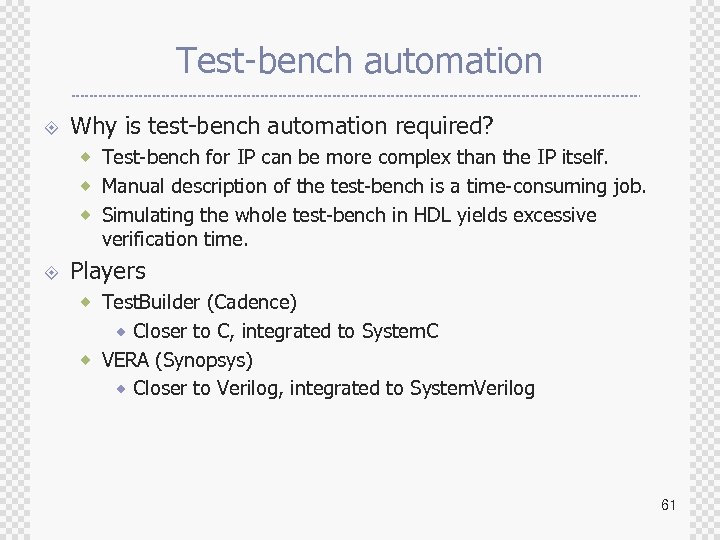

Test-bench automation

- Why is test-bench automation required?
  - The test-bench for an IP can be more complex than the IP itself.
  - Manual description of the test-bench is a time-consuming job.
  - Simulating the whole test-bench in HDL yields excessive verification time.
- Players
  - TestBuilder (Cadence)
    - Closer to C, integrated to SystemC
  - VERA (Synopsys)
    - Closer to Verilog, integrated to SystemVerilog

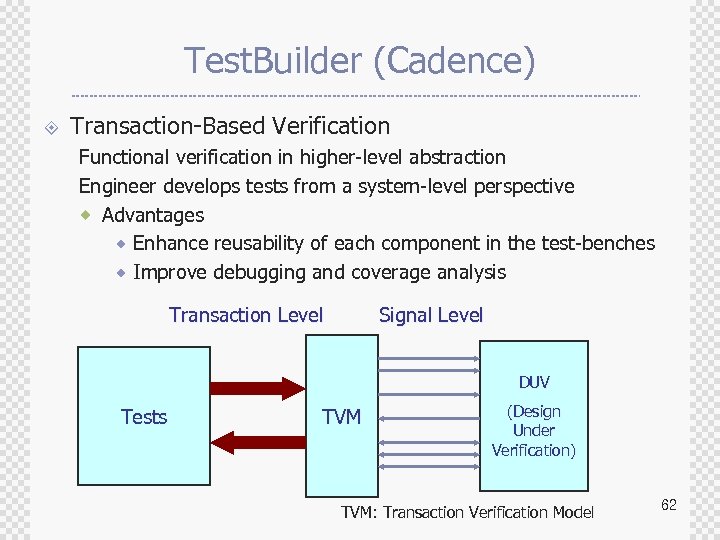

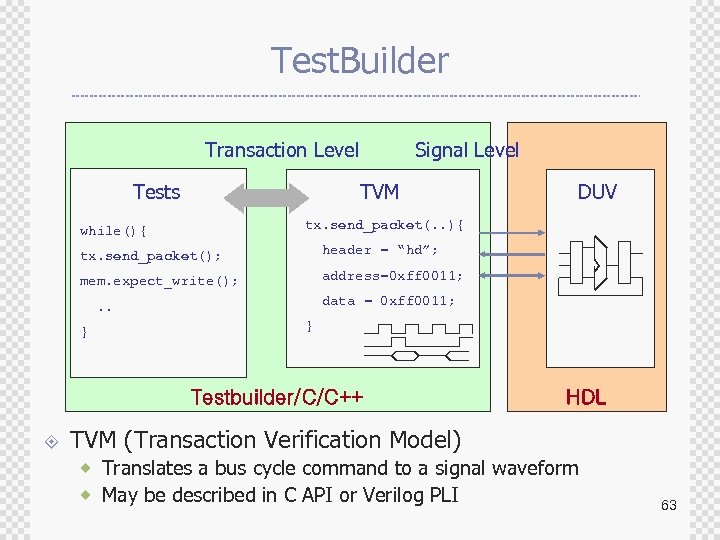

TestBuilder (Cadence)

- Transaction-Based Verification
  - Functional verification in higher-level abstraction
  - Engineer develops tests from a system-level perspective
- Advantages
  - Enhance reusability of each component in the test-benches
  - Improve debugging and coverage analysis

[Figure: Tests (transaction level) -> TVM -> DUV (signal level). TVM: Transaction Verification Model; DUV: Design Under Verification.]

TestBuilder

- TVM (Transaction Verification Model)
  - Translates a bus cycle command to a signal waveform
  - May be described in C API or Verilog PLI

[Figure: transaction-level tests (Testbuilder/C/C++) drive the TVM, which drives the DUV (HDL) at signal level. Test snippet: `while(){ tx.send_packet(); mem.expect_write(); .. }`. TVM snippet: `tx.send_packet(..){ header = "hd"; address = 0xff0011; data = 0xff0011; .. }`.]

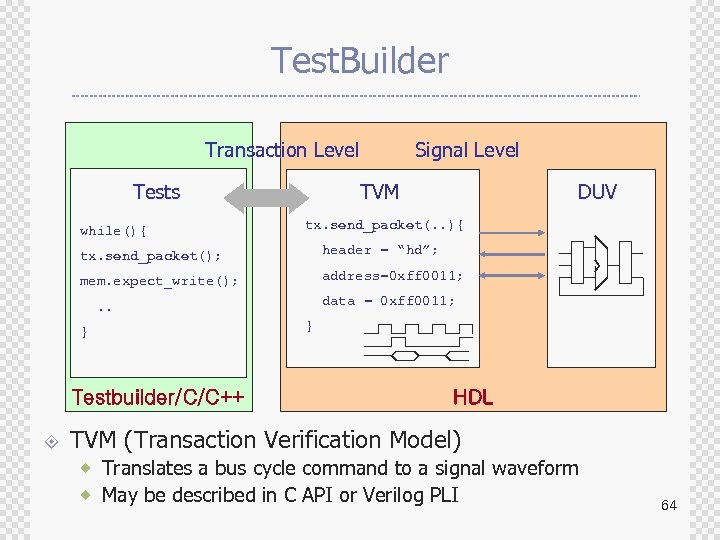


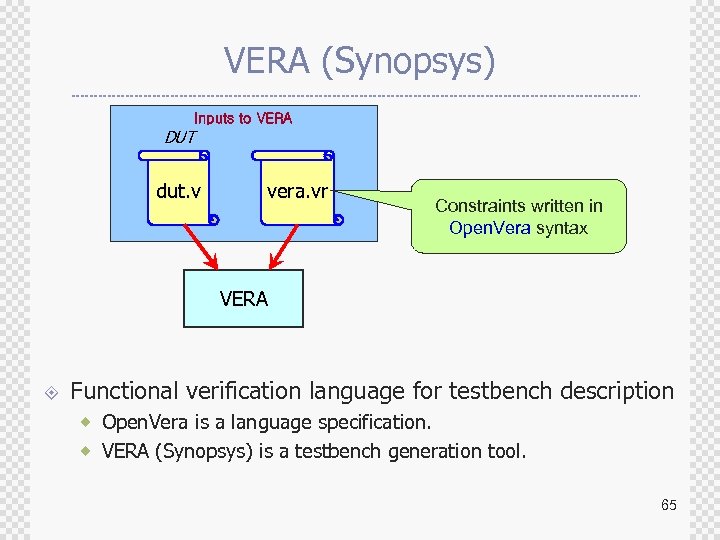

VERA (Synopsys)

- Functional verification language for testbench description
  - OpenVera is a language specification.
  - VERA (Synopsys) is a testbench generation tool.

[Figure: inputs to VERA: the DUT (dut.v) and constraints written in OpenVera syntax (vera.vr).]

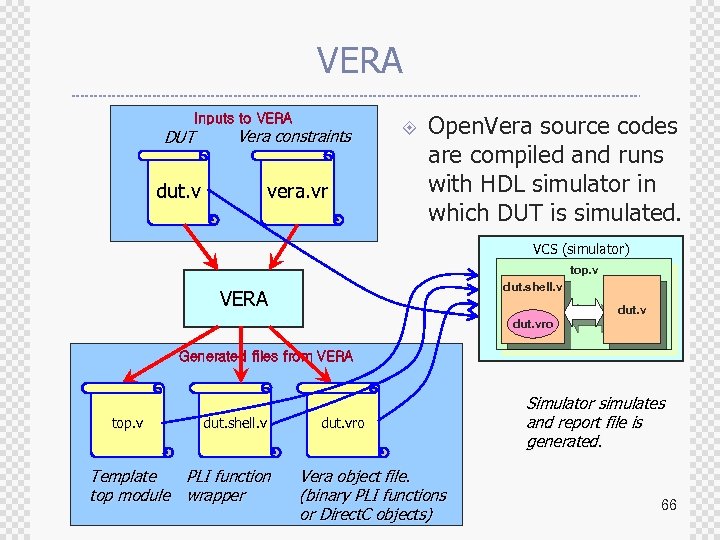

VERA

- OpenVera source code is compiled and runs with the HDL simulator in which the DUT is simulated.

[Figure: inputs to VERA: DUT (dut.v) and Vera constraints (vera.vr). Generated files from VERA: top.v (template top module), dut.shell.v (PLI function wrapper), dut.vro (Vera object file: binary PLI functions or DirectC objects). VCS (simulator) takes top.v, dut.shell.v, and dut.vro; the simulator runs and a report file is generated.]

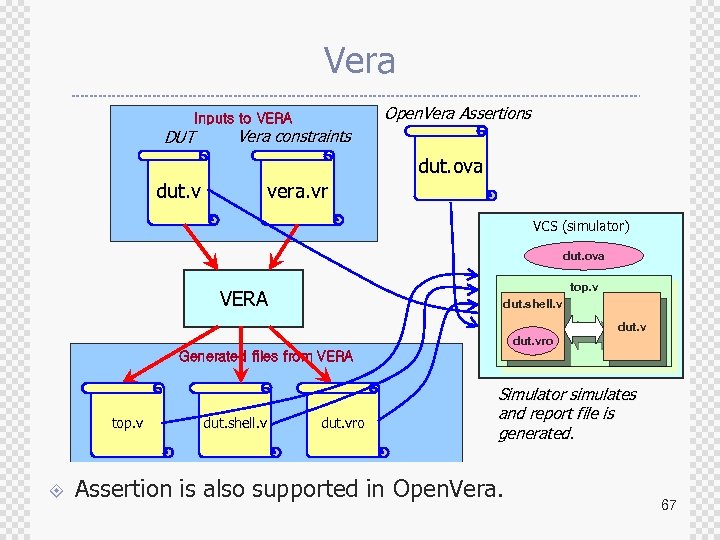

Vera OpenVera Assertions

- Assertions are also supported in OpenVera.

[Figure: inputs to VERA: DUT (dut.v), Vera constraints (vera.vr), and assertions (dut.ova). Generated files from VERA: top.v (template top module), dut.shell.v (PLI function wrapper), dut.vro (Vera object file: binary PLI functions or DirectC objects). VCS (simulator) takes dut.ova, top.v, dut.shell.v, and dut.vro; the simulator runs and a report file is generated.]

SystemVerilog

- SystemVerilog 3.1 provides design constructs for architectural, algorithmic, and transaction-based modeling.
- Adds an environment for automated testbench generation, while providing assertions to describe design functionality, including complex protocols, to drive verification using simulation or formal verification techniques.
- Its C-API provides the ability to mix Verilog and C/C++ constructs without the need for PLI for direct data exchange.

SystemVerilog

- New data types for a higher data abstraction level than Verilog
  - Structures, classes, lists, etc. are supported.
- Assertion
  - Assertions can be embedded directly in Verilog RTL.
  - Sequential assertions are also supported.
- Encapsulated interfaces
  - Most system bugs occur in interfaces between blocks.
  - With encapsulated interfaces, the designer can concentrate on the communications rather than on the signals and wires.
- DirectC as a fast C-API
  - C code can be called directly from SystemVerilog code.

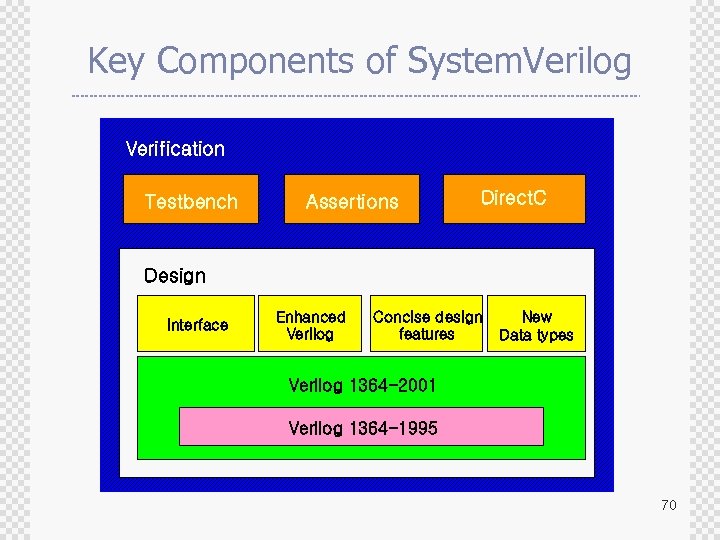

Key Components of SystemVerilog

[Figure: layered diagram. Labels: Verification (Testbench, Assertions, DirectC); Design (Interface, Enhanced Verilog, Concise design features, New data types, Entity); Verilog 1364-2001; Verilog 1364-1995.]

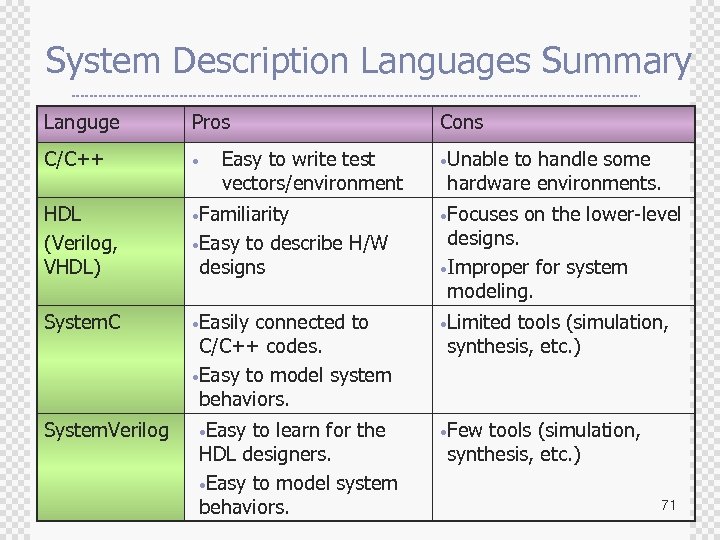

System Description Languages Summary

| Language | Pros | Cons |
| --- | --- | --- |
| C/C++ | Easy to write test vectors/environment. | Unable to handle some hardware environments. |
| HDL (Verilog, VHDL) | Familiarity. Easy to describe H/W designs. | Improper for system modeling. Focuses on the lower-level designs. |
| SystemC | Easily connected to C/C++ codes. Easy to model system behaviors. | Limited tools (simulation, synthesis, etc.) |
| SystemVerilog | Easy to learn for the HDL designers. Easy to model system behaviors. | Few tools (simulation, synthesis, etc.) |

Agenda

- Why Verification?
- Verification Alternatives
- Languages for System Modeling and Verification
- Verification with Progressive Refinement
  - Flexible SoC verification environment
  - Debugging features
  - Cycle vs. transaction mode verification
  - Emulation products
- SoC Verification
- Concluding Remarks

Criteria for Good SoC Verification Environment

- Support various abstraction levels
- Support heterogeneous design languages
- Trade-off between verification speed and debugging features
- Co-work with existing tools
- Progressive refinement
- Platform-based design

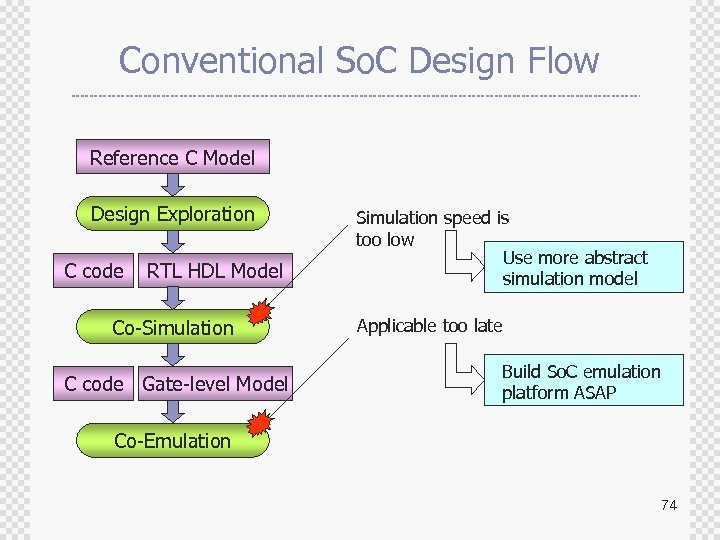

Conventional SoC Design Flow

[Figure: Reference C Model (design exploration) -> RTL HDL Model with C code (co-simulation) -> Gate-level Model with C code (co-emulation). Problems and remedies noted on the flow: simulation speed is too low -> use a more abstract simulation model; co-emulation is applicable too late -> build the SoC emulation platform ASAP.]

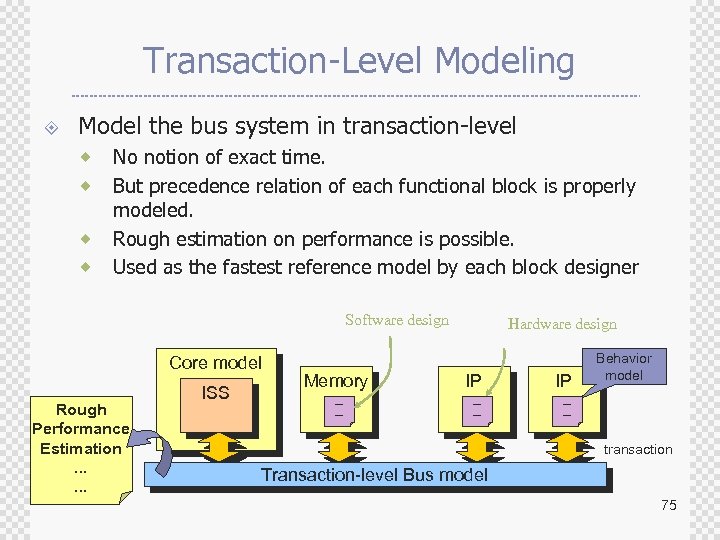

Transaction-Level Modeling

- Model the bus system at the transaction level
  - No notion of exact time, but the precedence relation of each functional block is properly modeled.
  - Rough estimation of performance is possible.
  - Used as the fastest reference model by each block designer

[Figure: software design (core model: ISS, memory) and hardware design (IP behavior models) exchange transactions through a transaction-level bus model for rough performance estimation.]

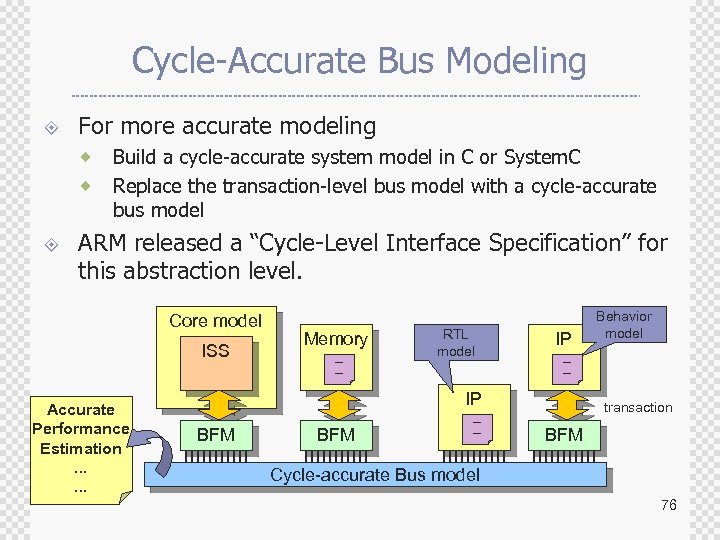

Cycle-Accurate Bus Modeling

- For more accurate modeling
  - Build a cycle-accurate system model in C or SystemC
  - Replace the transaction-level bus model with a cycle-accurate bus model
- ARM released a “Cycle-Level Interface Specification” for this abstraction level.

[Figure: core model (ISS, memory), IP RTL models, and IP behavior models attached through BFMs to a cycle-accurate bus model for accurate performance estimation.]

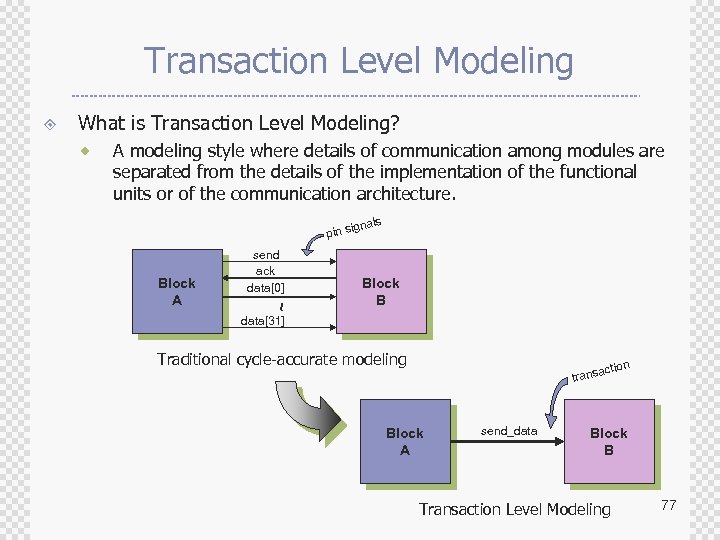

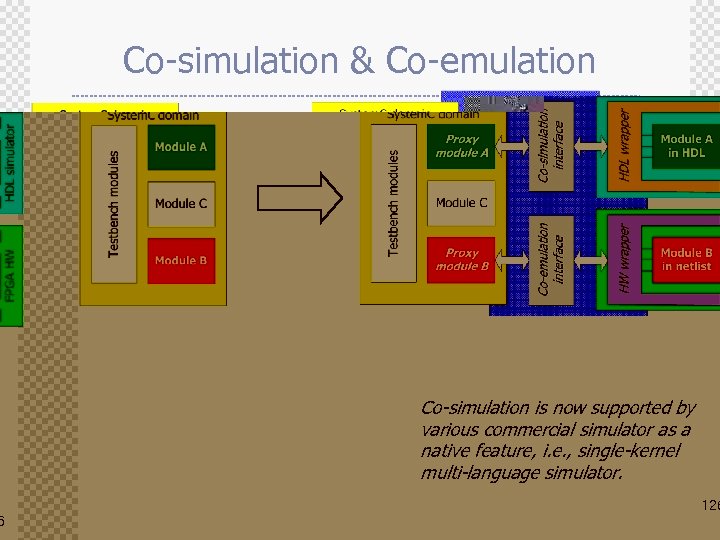

Transaction Level Modeling

- What is Transaction Level Modeling?
  - A modeling style where details of communication among modules are separated from the details of the implementation of the functional units or of the communication architecture.

[Figure: traditional cycle-accurate modeling (Block A and Block B connected by signal pins: send, ack, data[0] ~ data[31]) versus transaction level modeling (Block A issues a single send_data transaction to Block B).]

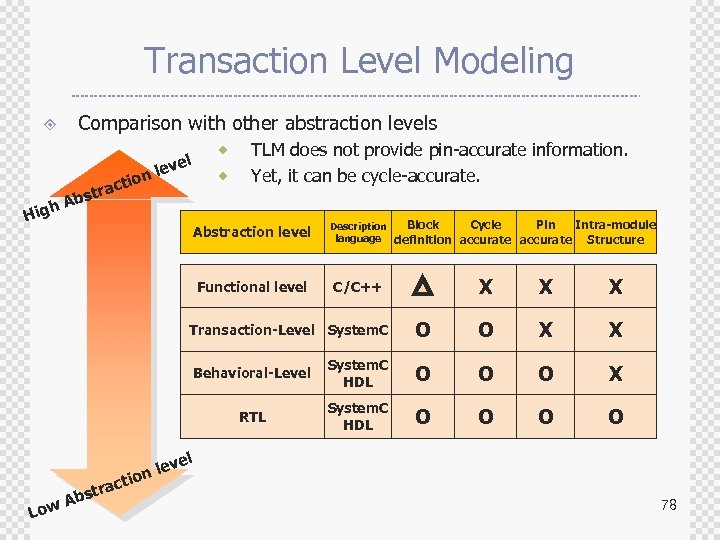

Transaction Level Modeling

- Comparison with other abstraction levels
  - TLM does not provide pin-accurate information.
  - Yet, it can be cycle-accurate.

[Table: abstraction levels from high to low (functional level in C/C++; transaction level in SystemC; behavioral level in SystemC/HDL; RTL in SystemC/HDL) compared by block definition, cycle accuracy, pin accuracy, and intra-module structure, each marked O or X.]

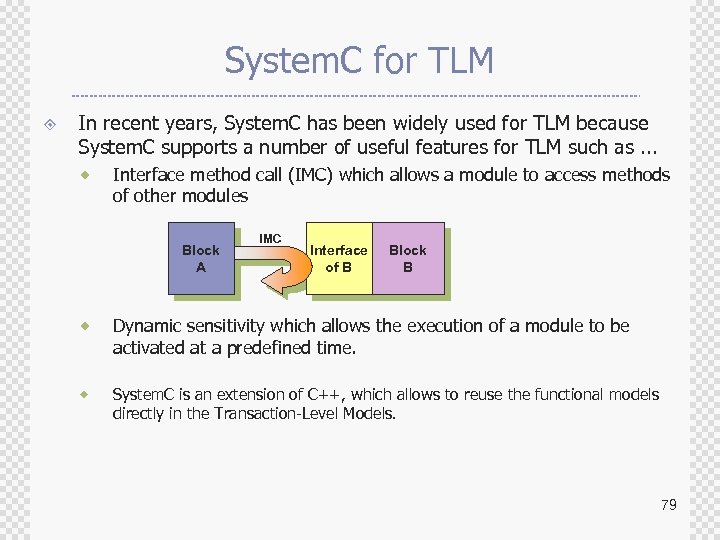

SystemC for TLM

- In recent years, SystemC has been widely used for TLM because it supports a number of useful features for TLM, such as
  - Interface method call (IMC), which allows a module to access methods of other modules (Block A calls Block B through the interface of B).
  - Dynamic sensitivity, which allows the execution of a module to be activated at a predefined time.
  - SystemC is built on C++, which allows functional models to be reused directly in the transaction-level models.
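The interface-method-call idea can be sketched without the SystemC library. The plain C++ example below is an assumption-laden stand-in, not SystemC code: `BusIf`, `Memory`, and `Master` are hypothetical names, and the abstract base class plays the role that an interface class plays in SystemC. The master reaches the slave only through the interface, so a whole transfer is one function call rather than a sequence of pin changes.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Abstract transaction-level interface (plain C++ stand-in for a SystemC
// interface class). Addresses are byte addresses of 32-bit words.
struct BusIf {
    virtual ~BusIf() = default;
    virtual void write(std::uint32_t addr, std::uint32_t data) = 0;
    virtual std::uint32_t read(std::uint32_t addr) = 0;
};

// Block B: a memory slave that implements the interface.
class Memory : public BusIf {
public:
    explicit Memory(std::size_t words) : mem_(words, 0u) {}

    void write(std::uint32_t addr, std::uint32_t data) override {
        mem_.at(addr / 4) = data;   // .at() throws on out-of-range addresses
        ++transactions_;
    }
    std::uint32_t read(std::uint32_t addr) override {
        ++transactions_;
        return mem_.at(addr / 4);
    }
    unsigned transactions() const { return transactions_; }

private:
    std::vector<std::uint32_t> mem_;
    unsigned transactions_ = 0;
};

// Block A: a master that only knows the interface, not the slave's internals.
class Master {
public:
    explicit Master(BusIf& bus) : bus_(bus) {}

    // Copy `words` 32-bit words; each read/write is one interface method call.
    void copy(std::uint32_t src, std::uint32_t dst, unsigned words) {
        for (unsigned i = 0; i < words; ++i)
            bus_.write(dst + 4 * i, bus_.read(src + 4 * i));
    }

private:
    BusIf& bus_;
};
```

Because `Master` depends only on `BusIf`, the memory could later be swapped for a more detailed bus model without touching the master, which is the kind of refinement the surrounding slides describe.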

Transaction Level Modeling for SoC

- TLM is a breakthrough for SoC design challenges because
  - We can estimate the design issues (performance, bottleneck, and power consumption) in the early design stage.
  - Simulation performance is faster than RTL.
    - Up to x1000 compared to RTL.
  - TLM can provide 100% cycle-accurate information.
  - TLM can be a reference model for later design stages.

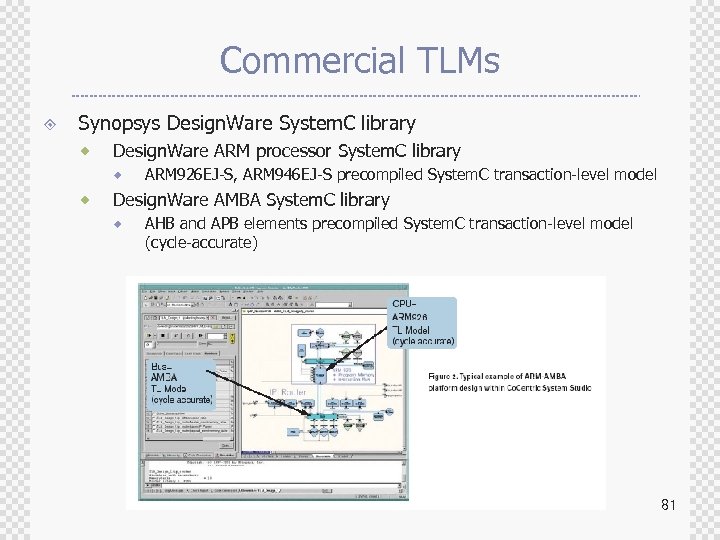

Commercial TLMs

- Synopsys DesignWare SystemC library
  - DesignWare ARM processor SystemC library
    - ARM926EJ-S, ARM946EJ-S
    - Precompiled SystemC transaction-level model
  - DesignWare AMBA SystemC library
    - AHB and APB elements
    - Precompiled SystemC transaction-level model (cycle-accurate)

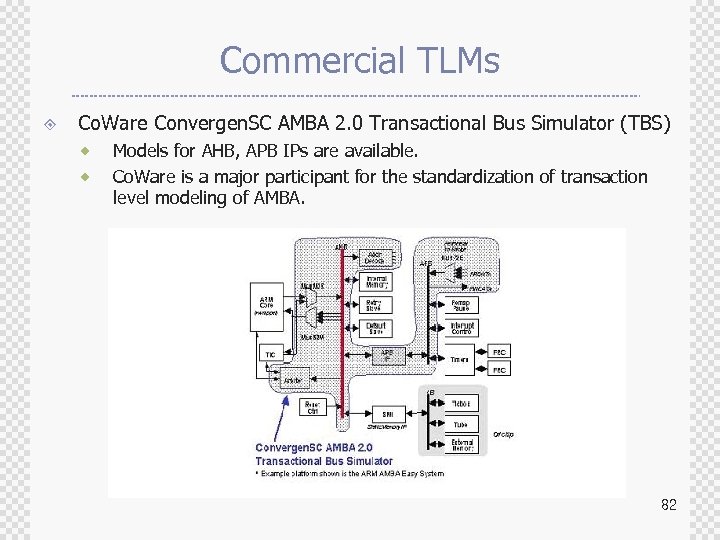

Commercial TLMs

- CoWare ConvergenSC AMBA 2.0 Transactional Bus Simulator (TBS)
  - Models for AHB and APB IPs are available.
  - CoWare is a major participant in the standardization of transaction level modeling of AMBA.

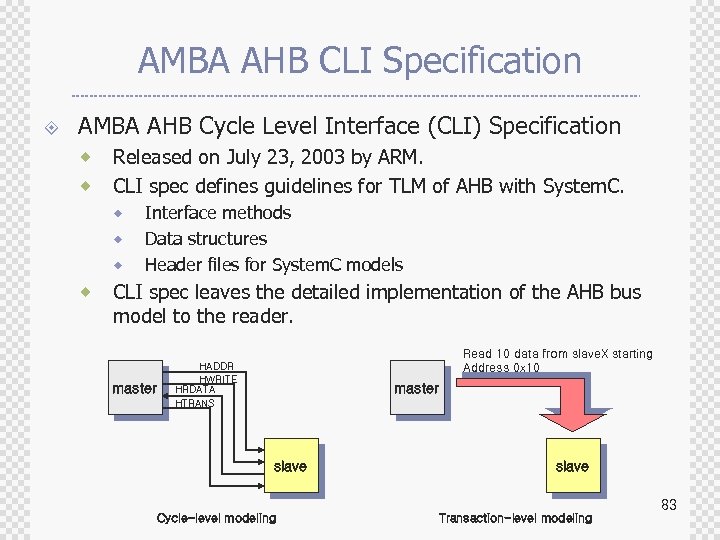

AMBA AHB CLI Specification

- AMBA AHB Cycle Level Interface (CLI) Specification
  - Released on July 23, 2003 by ARM.
  - The CLI spec defines guidelines for TLM of AHB with SystemC.
    - Interface methods
    - Data structures
    - Header files for SystemC models
  - The CLI spec leaves the detailed implementation of the AHB bus model to the reader.

[Figure: cycle-level modeling (master and slave exchanging HADDR, HWRITE, HRDATA, HTRANS) versus transaction-level modeling (master: "Read 10 data from slave X starting at address 0x10").]

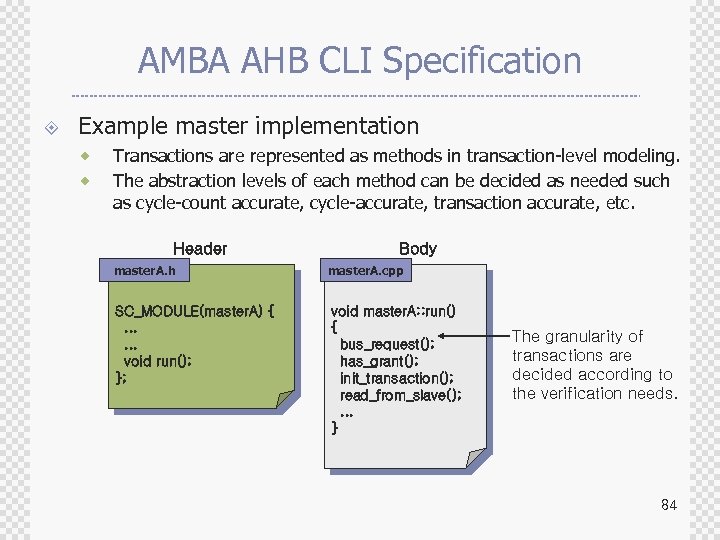

AMBA AHB CLI Specification

- Example master implementation
  - Transactions are represented as methods in transaction-level modeling.
  - The abstraction level of each method can be decided as needed, such as cycle-count accurate, cycle-accurate, transaction accurate, etc.
- Header (masterA.h): `SC_MODULE(masterA) { ... void run(); };`
- Body (masterA.cpp): `void masterA::run() { bus_request(); has_grant(); init_transaction(); read_from_slave(); ... }`
- The granularity of transactions is decided according to the verification needs.

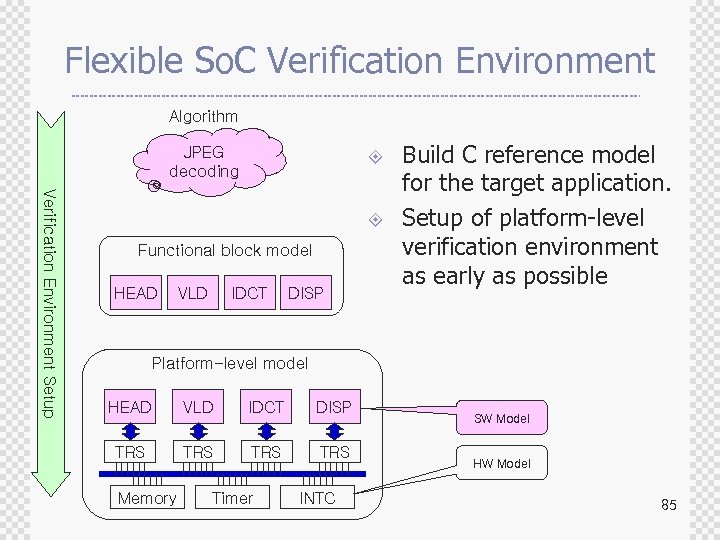

Flexible SoC Verification Environment

- Verification Environment Setup
  - Build a C reference model for the target application.
  - Set up the platform-level verification environment as early as possible.

[Figure: algorithm (JPEG decoding) -> functional block model (HEAD, VLD, IDCT, DISP) -> platform-level model (HEAD, VLD, IDCT, DISP, Memory, Timer, INTC attached through transactors (TRS); SW model and HW model).]

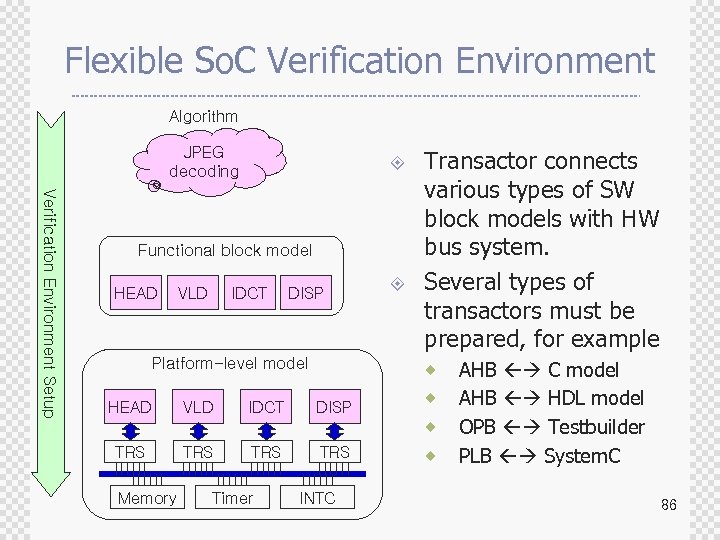

Flexible SoC Verification Environment

- Verification Environment Setup
  - A transactor connects various types of SW block models with the HW bus system.
  - Several types of transactors must be prepared, for example
    - AHB - C model
    - AHB - HDL model
    - OPB - Testbuilder
    - PLB - SystemC

[Figure: algorithm (JPEG decoding) -> functional block model (HEAD, VLD, IDCT, DISP) -> platform-level model (HEAD, VLD, IDCT, DISP, Memory, Timer, INTC attached through transactors (TRS)).]

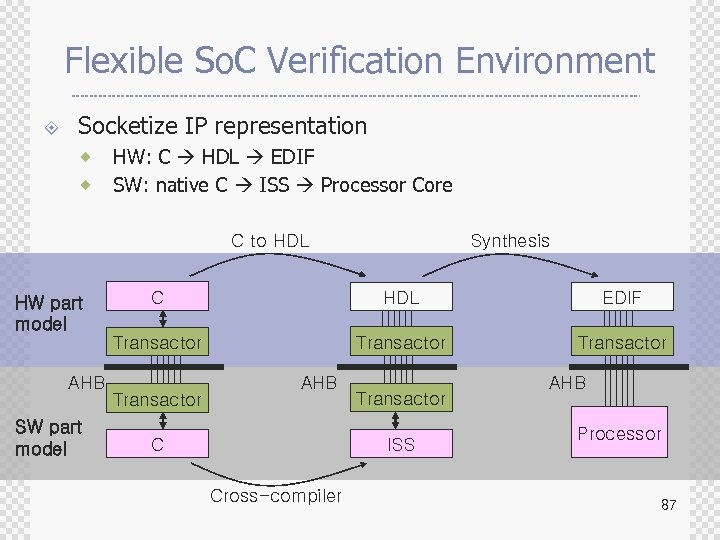

Flexible SoC Verification Environment

- Socketize IP representation
  - HW: C, HDL, EDIF
  - SW: native C, ISS, processor core

[Figure: the HW part model (C, HDL, or EDIF, related by C-to-HDL conversion and synthesis) and the SW part model (native C, ISS via cross-compiler, or processor) each attach to the AHB through a transactor.]

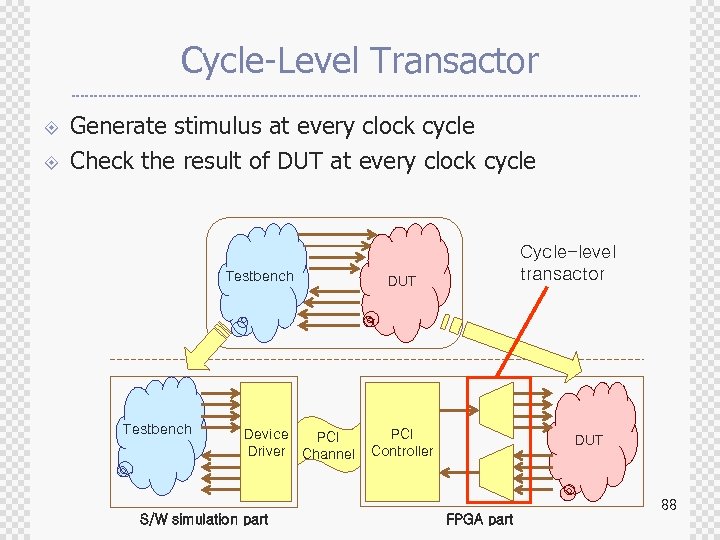

Cycle-Level Transactor

- Generate stimulus at every clock cycle
- Check the result of the DUT at every clock cycle

[Figure: S/W simulation part (testbench, device driver, PCI) connected over a channel to the FPGA part (cycle-level transactor, PCI controller, DUT).]

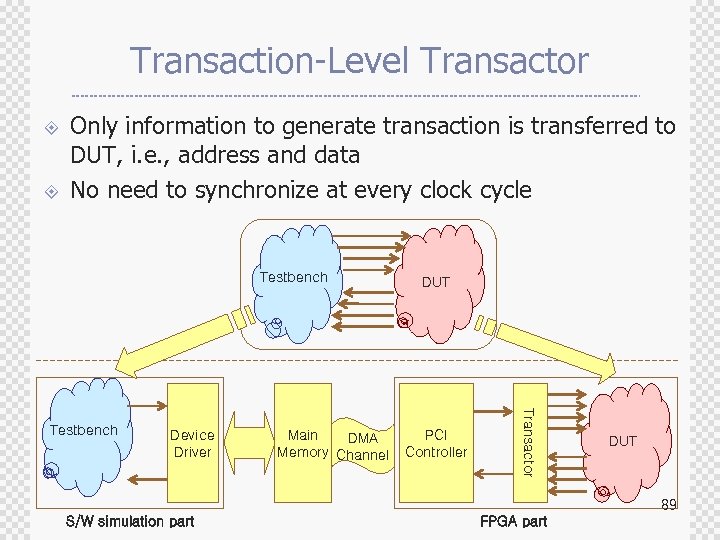

Transaction-Level Transactor

- Only the information needed to generate a transaction, i.e., address and data, is transferred to the DUT
- No need to synchronize at every clock cycle

[Figure: S/W simulation part (testbench, device driver, main memory, DMA) connected over a channel to the FPGA part (transactor, PCI controller, DUT).]

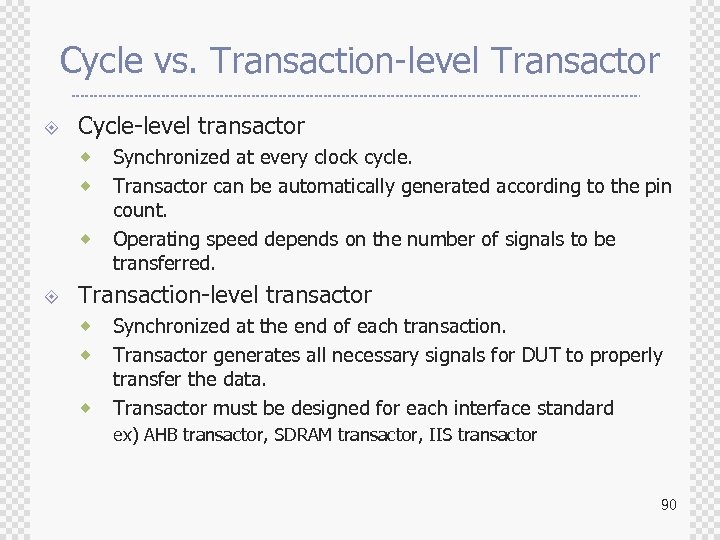

Cycle vs. Transaction-level Transactor

- Cycle-level transactor
  - Synchronized at every clock cycle.
  - The transactor can be automatically generated according to the pin count.
  - Operating speed depends on the number of signals to be transferred.
- Transaction-level transactor
  - Synchronized at the end of each transaction.
  - The transactor generates all necessary signals for the DUT to properly transfer the data.
  - A transactor must be designed for each interface standard, e.g., AHB transactor, SDRAM transactor, IIS transactor.
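The difference between the two transactor styles can be sketched in a few lines of plain C++. The bus protocol here is hypothetical (one data beat per clock, address advancing by 4 bytes) and is not AHB, SDRAM, or IIS; `Transaction`, `PinState`, `expand`, and the two `syncs_*` helpers are illustrative names only.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// One burst transfer as seen by the testbench (transaction level).
struct Transaction {
    bool write;
    std::uint32_t addr;               // byte address of the first beat
    std::vector<std::uint32_t> data;  // one 32-bit word per beat
};

// Pin values driven to the DUT in one clock cycle (signal level).
struct PinState {
    bool valid;
    bool write;
    std::uint32_t addr;
    std::uint32_t data;
};

// Transaction-level transactor: expands one transaction into per-cycle pin
// values on the hardware side. Hypothetical protocol: one beat per clock,
// address advances by 4 bytes per beat.
std::vector<PinState> expand(const Transaction& t) {
    std::vector<PinState> wave;
    wave.reserve(t.data.size());
    for (std::size_t beat = 0; beat < t.data.size(); ++beat) {
        wave.push_back({true, t.write,
                        t.addr + 4u * static_cast<std::uint32_t>(beat),
                        t.data[beat]});
    }
    return wave;
}

// Simulator-to-hardware channel crossings needed for one transaction.
// Cycle level: one crossing per clock. Transaction level: one per transaction.
std::size_t syncs_cycle_level(const Transaction& t) { return t.data.size(); }
std::size_t syncs_transaction_level(const Transaction&) { return 1; }
```

For a three-beat burst this gives three channel crossings at cycle level against one at transaction level, which is where the speed gap between the two modes comes from; the cost is that `expand` has to be written per interface standard.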

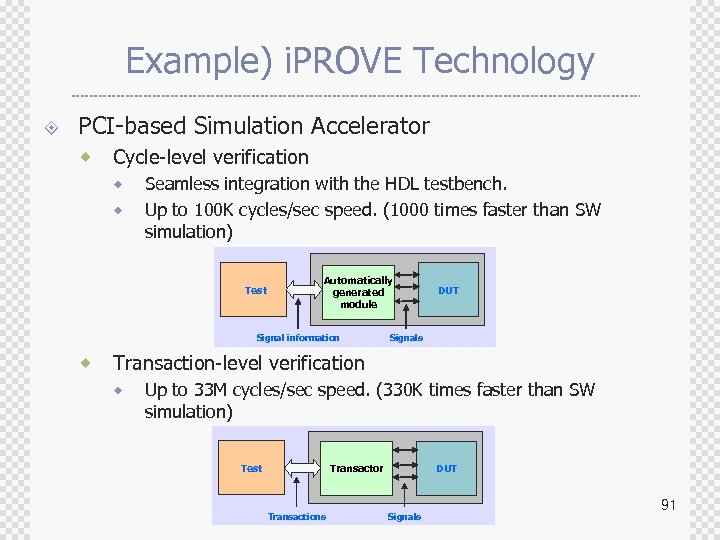

Example) iPROVE Technology

- PCI-based simulation accelerator
  - Cycle-level verification
    - Seamless integration with the HDL testbench.
    - Up to 100 K cycles/sec (1,000 times faster than SW simulation).
    - Diagram: Test -> automatically generated module (signal information) -> DUT signals
  - Transaction-level verification
    - Up to 33 M cycles/sec (330 K times faster than SW simulation).
    - Diagram: Test -> transactor (transactions) -> DUT signals
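
The two speed-up figures quoted above are mutually consistent: both imply the same software-simulation baseline. The short Python sketch below checks that arithmetic; the 10-million-cycle workload is a hypothetical test length added only to make the difference tangible.

```python
CYCLE_LEVEL_CPS = 100_000          # "up to 100 K cycles/sec"
TRANSACTION_LEVEL_CPS = 33_000_000  # "up to 33 M cycles/sec"
CYCLE_LEVEL_SPEEDUP = 1_000         # "1000 times faster than SW simulation"

# Baseline implied by the cycle-level figures: 100 cycles/sec for SW simulation.
sw_sim_cps = CYCLE_LEVEL_CPS // CYCLE_LEVEL_SPEEDUP

# Speed-up of transaction-level verification over that same baseline.
transaction_speedup = TRANSACTION_LEVEL_CPS // sw_sim_cps


def seconds_for(cycles, cycles_per_sec):
    """Wall-clock seconds needed to run the given number of DUT clock cycles."""
    return cycles / cycles_per_sec


workload = 10_000_000  # hypothetical test length in DUT clock cycles
for name, cps in [("SW simulation", sw_sim_cps),
                  ("cycle-level", CYCLE_LEVEL_CPS),
                  ("transaction-level", TRANSACTION_LEVEL_CPS)]:
    print(f"{name}: {seconds_for(workload, cps):.2f} s")
```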

OpenVera (OV) verification IP

- Reusable verification modules, i.e., 1) bus functional models, 2) traffic generators, 3) protocol monitors, and 4) functional coverage blocks.

Companies providing OpenVera Verification IP

- ControlNet India: IEEE 1394, TCP/IP Stack
- GDA Technology: HyperTransport
- HCL Technologies: I2C
- Integnology: Smart Card Interface
- nSys: IEEE 1284, UART
- Qualis Design: Ethernet 10/100, Ethernet 10/100/1G, Ethernet 10G, PCI-X, PCI Express Base, PCI Express AS, 802.11b, ARM AMBA AHB, USB 1.1, USB 2.0
- Synopsys, Inc.: AMBA AHB, AMBA APB, USB, Ethernet 10/1000, IEEE 1394, PCI/PCIx, SONET, SDH, ATM, IP, PDH

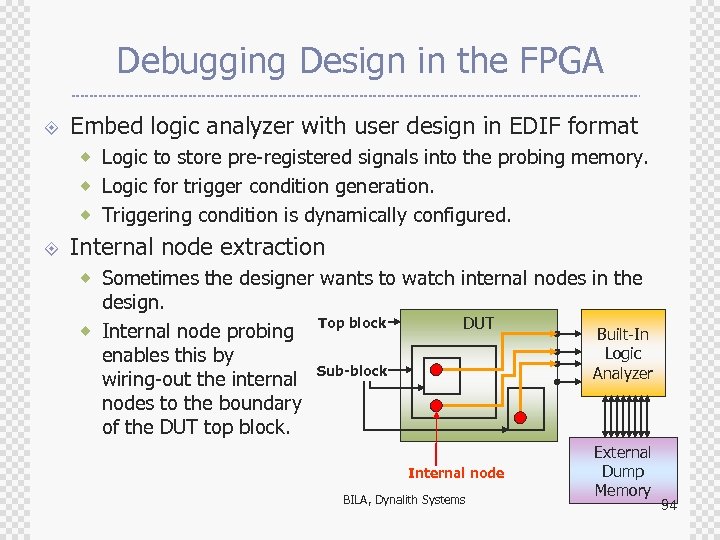

Debugging Design in the FPGA

- Embed a logic analyzer with the user design in EDIF format
  - Logic to store pre-registered signals into the probing memory.
  - Logic for trigger condition generation.
  - The triggering condition is dynamically configured.
- Internal node extraction
  - Sometimes the designer wants to watch internal nodes in the design.
  - Internal node probing enables this by wiring out the internal nodes to the boundary of the DUT top block.

Diagram labels: Top block, DUT, Sub-block, Internal node, Built-In Logic Analyzer, External Dump Memory (BILA, Dynalith Systems).
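
The behavior of an embedded logic analyzer (probing memory plus a run-time configurable trigger) can be modeled in software. The Python sketch below is an illustrative model only, not the BILA implementation: the class name, the `depth` and `post_trigger` parameters, and the signal names are all assumptions.

```python
from collections import deque


class LogicAnalyzerModel:
    """Software model of a probing memory with a configurable trigger."""

    def __init__(self, depth, post_trigger):
        self.buffer = deque(maxlen=depth)  # probing memory; oldest samples are overwritten
        self.post_trigger = post_trigger   # samples to keep capturing after the trigger
        self.trigger = lambda signals: False
        self._remaining = None             # None until the trigger condition fires

    def configure_trigger(self, predicate):
        """Change the trigger condition at run time and re-arm the analyzer."""
        self.trigger = predicate
        self._remaining = None

    def sample(self, signals):
        """Capture one clock cycle; return True once the capture is complete."""
        if self._remaining == 0:
            return True                    # capture finished: memory is frozen
        self.buffer.append(dict(signals))
        if self._remaining is None:
            if self.trigger(signals):
                self._remaining = self.post_trigger
        else:
            self._remaining -= 1
        return self._remaining == 0


analyzer = LogicAnalyzerModel(depth=4, post_trigger=2)
analyzer.configure_trigger(lambda s: s["addr"] == 12)
for cycle in range(10):
    analyzer.sample({"cycle": cycle, "addr": cycle * 4})
captured_cycles = [s["cycle"] for s in analyzer.buffer]
print(captured_cycles)
```

The bounded buffer keeps a window around the trigger point, which is why a small on-chip memory can still show what happened just before and after the event of interest.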

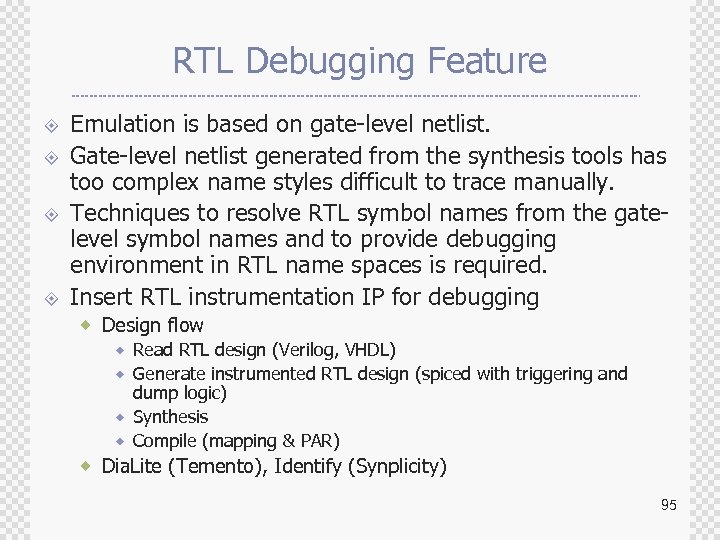

RTL Debugging Feature

- Emulation is based on a gate-level netlist.
- The gate-level netlist generated by synthesis tools uses complex naming styles that are difficult to trace manually.
- Techniques are required to resolve RTL symbol names from the gate-level symbol names and to provide a debugging environment in the RTL name space.
- Insert RTL instrumentation IP for debugging
  - Design flow
    - Read RTL design (Verilog, VHDL)
    - Generate instrumented RTL design (spiced with triggering and dump logic)
    - Synthesis
    - Compile (mapping & PAR)
  - DiaLite (Temento), Identify (Synplicity)
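
Resolving RTL names from gate-level names amounts to undoing the renaming applied during synthesis. The Python sketch below assumes one hypothetical naming convention (hierarchy separated by `/`, registers suffixed with `_reg`, bit indices written as `[3]` or `_3_`); real synthesis tools differ, and commercial debuggers rely on tool-specific name maps rather than a heuristic like this.

```python
import re

# Assumed convention: <signal>_reg, <signal>_reg[<bit>] or <signal>_reg_<bit>_
_REGISTER = re.compile(r"^(?P<base>.+?)_reg(?:\[(?P<bit>\d+)\]|_(?P<bit2>\d+)_)?$")


def gate_to_rtl(gate_name):
    """Map a gate-level instance name back to an RTL-style hierarchical name."""
    parts = gate_name.lstrip("\\").strip().split("/")
    leaf = parts[-1]
    match = _REGISTER.match(leaf)
    if match:
        bit = match.group("bit") or match.group("bit2")
        leaf = match.group("base") + (f"[{bit}]" if bit is not None else "")
    return ".".join(parts[:-1] + [leaf])


for name in ["u_core/u_alu/result_reg[3]", "u_core/state_reg_1_", "u_core/U123"]:
    print(name, "->", gate_to_rtl(name))
```

Names that do not follow the assumed register pattern, such as anonymous gates, pass through with only the hierarchy separator changed.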

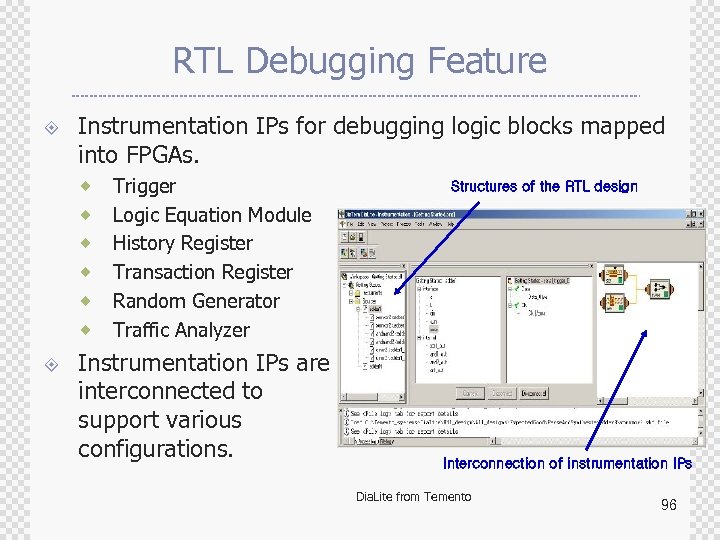

RTL Debugging Feature

- Instrumentation IPs for debugging logic blocks mapped into FPGAs
  - Trigger
  - Logic Equation Module
  - History Register
  - Transaction Register
  - Random Generator
  - Traffic Analyzer
- Instrumentation IPs are interconnected to support various configurations.

Diagram labels: Structures of the RTL design; Interconnection of instrumentation IPs (DiaLite from Temento).

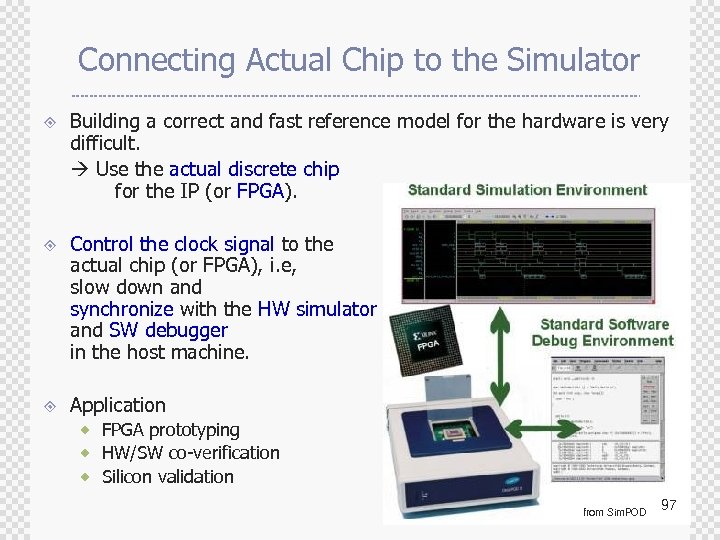

Connecting Actual Chip to the Simulator

- Building a correct and fast reference model for the hardware is very difficult.
- Use the actual discrete chip for the IP (or FPGA).
- Control the clock signal to the actual chip (or FPGA), i.e., slow it down and synchronize it with the HW simulator and SW debugger in the host machine.
- Application
  - FPGA prototyping
  - HW/SW co-verification
  - Silicon validation

Diagram source: SimPOD.

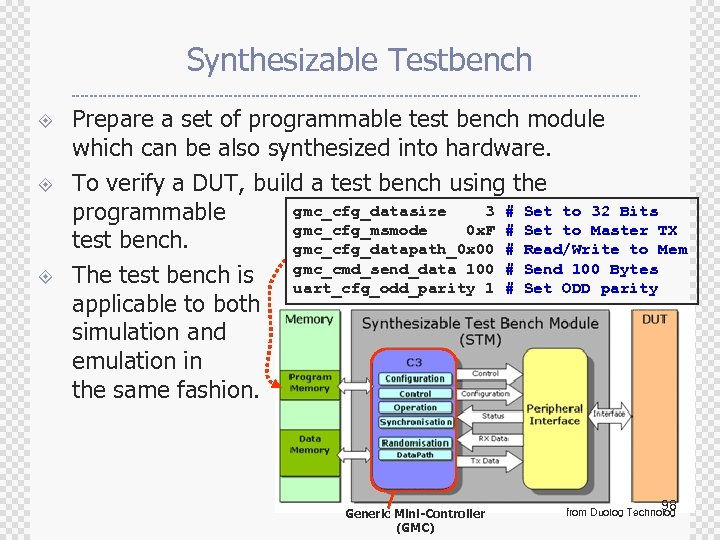

Synthesizable Testbench

- Prepare a set of programmable test bench modules that can also be synthesized into hardware.
- To verify a DUT, build a test bench using the programmable test bench.
- The test bench is applicable to both simulation and emulation in the same fashion.

Example test bench commands:

    gmc_cfg_datasize 3       # Set to 32 Bits
    gmc_cfg_msmode 0xF       # Set to Master TX
    gmc_cfg_datapath 0x00    # Read/Write to Mem
    gmc_cmd_send_data 100    # Send 100 Bytes
    uart_cfg_odd_parity 1    # Set ODD parity

Generic Mini-Controller (GMC) from Duolog Technologies.

Agenda

- Why Verification?
- Verification Alternatives
- Languages for System Modeling and Verification with Progressive Refinement
- SoC Verification
  - Co-simulation
  - Co-emulation
- Concluding Remarks

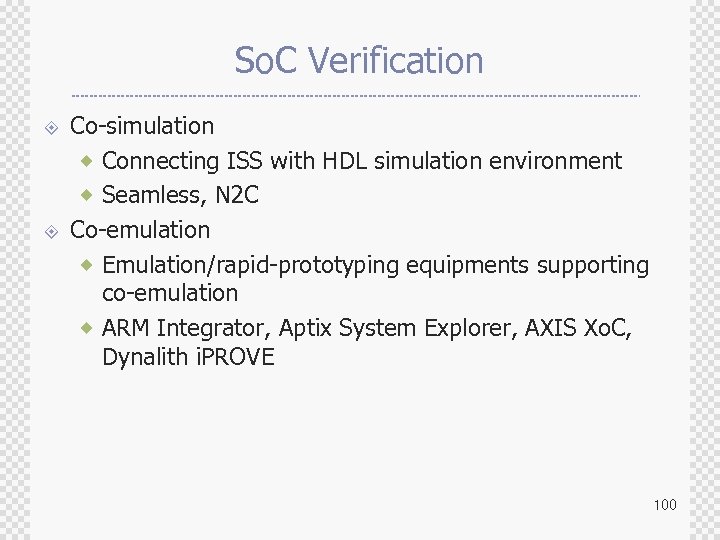

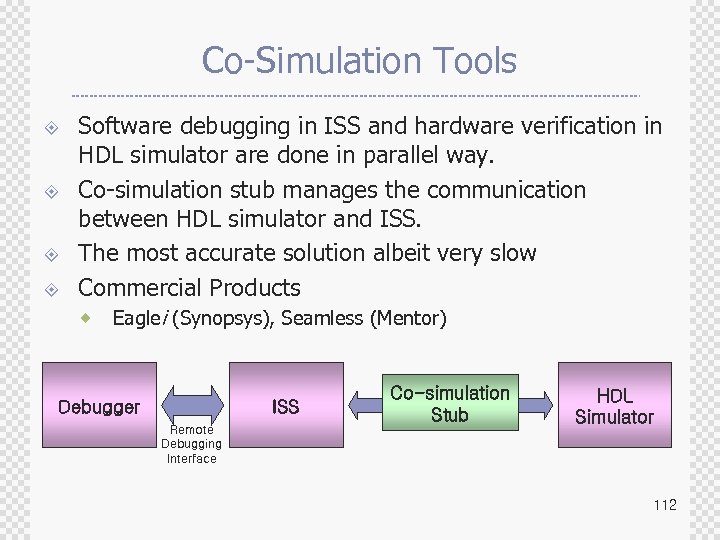

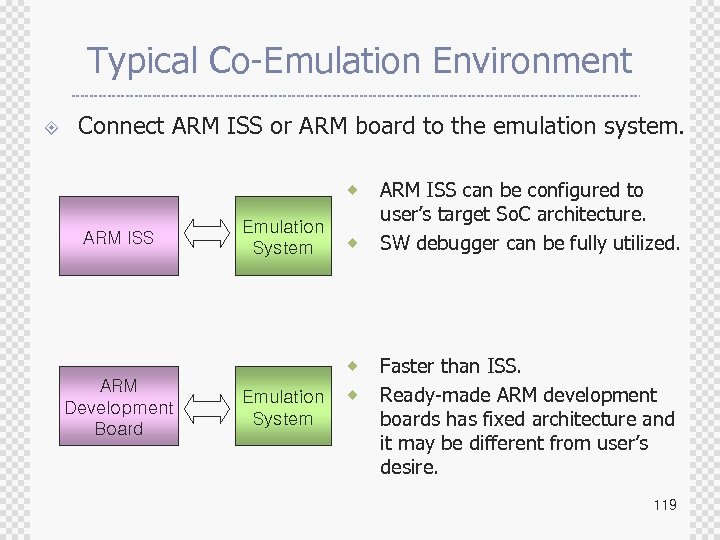

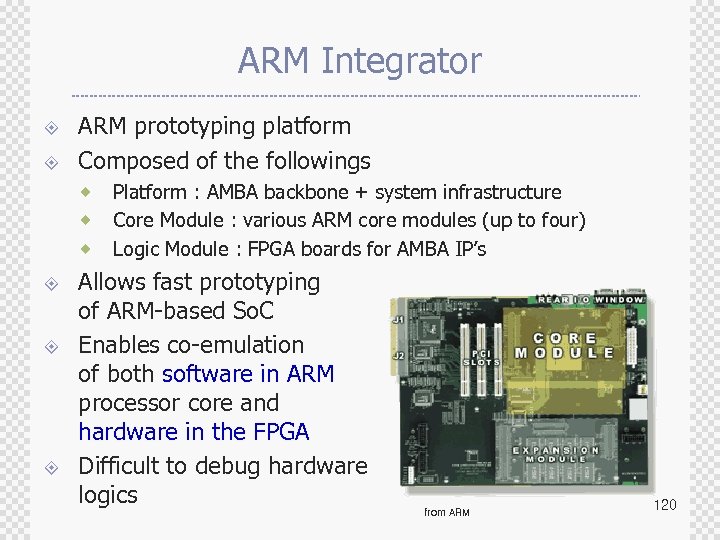

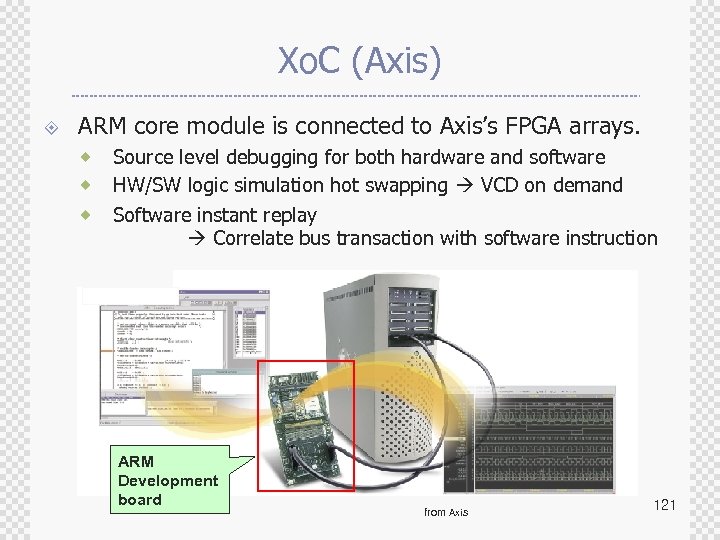

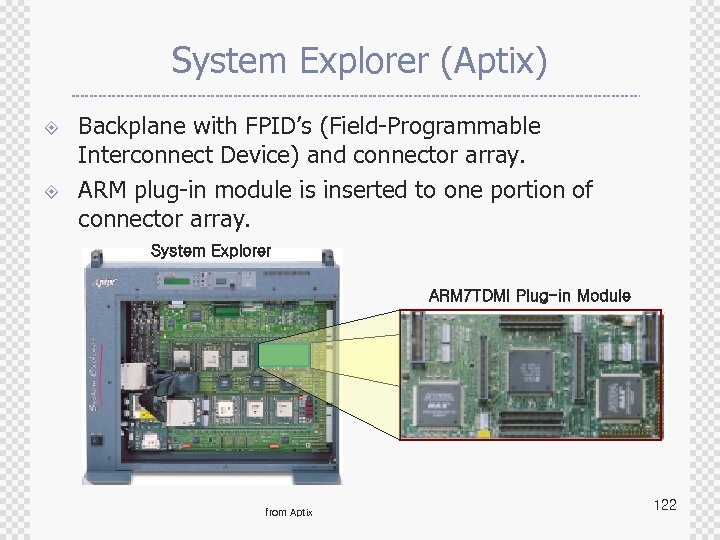

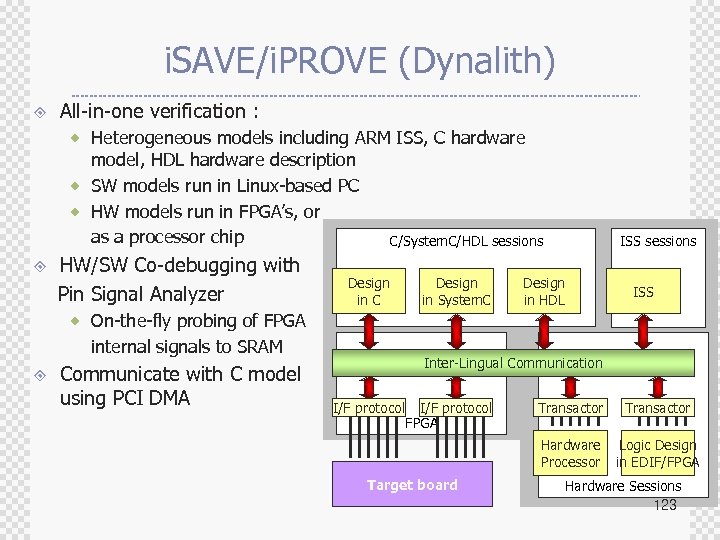

SoC Verification

- Co-simulation
  - Connecting an ISS with the HDL simulation environment
  - Seamless, N2C
- Co-emulation
  - Emulation/rapid-prototyping equipment supporting co-emulation
  - ARM Integrator, Aptix System Explorer, AXIS XoC, Dynalith iPROVE

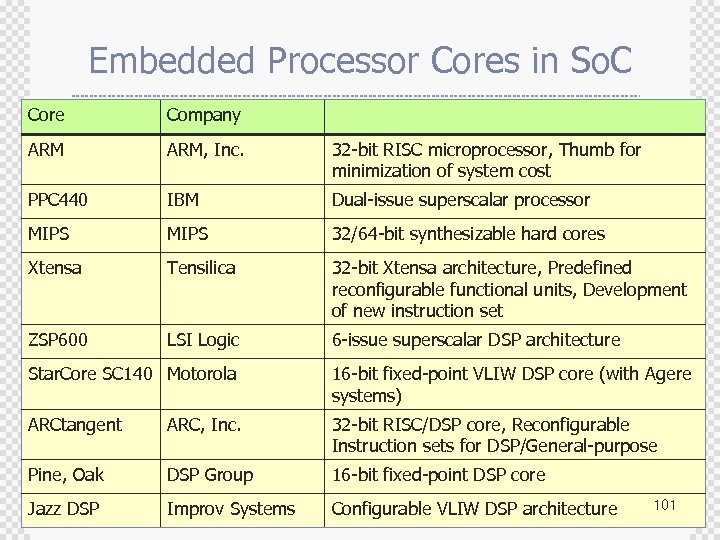

Embedded Processor Cores in SoC

Core (Company): description

- ARM, Inc.: 32-bit RISC microprocessor; Thumb for minimization of system cost
- PPC440 (IBM): dual-issue superscalar processor
- MIPS: 32/64-bit synthesizable hard cores
- Xtensa (Tensilica): 32-bit Xtensa architecture; predefined reconfigurable functional units; development of new instruction set
- ZSP600 (LSI Logic): 6-issue superscalar DSP architecture
- StarCore SC140 (Motorola): 16-bit fixed-point VLIW DSP core (with Agere Systems)
- ARCtangent (ARC, Inc.): 32-bit RISC/DSP core; reconfigurable instruction sets for DSP/general-purpose use
- Pine, Oak (DSP Group): 16-bit fixed-point DSP core
- Jazz DSP (Improv Systems): configurable VLIW DSP architecture

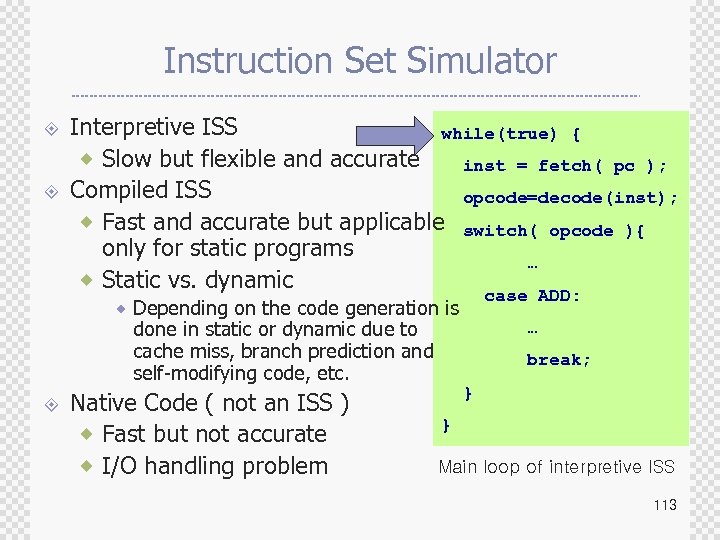

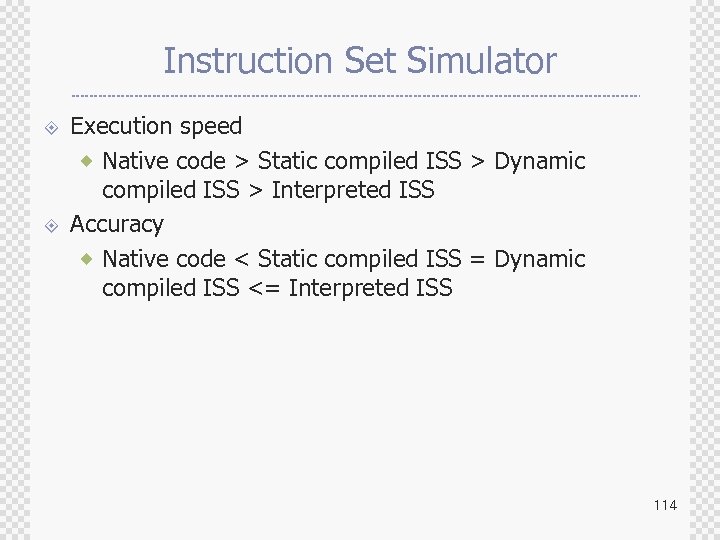

Models of Embedded Processor

- Instruction Set Simulator
- Architecture Behavior Model
- Processor Simulation Model
- Hardware module with Real Chip

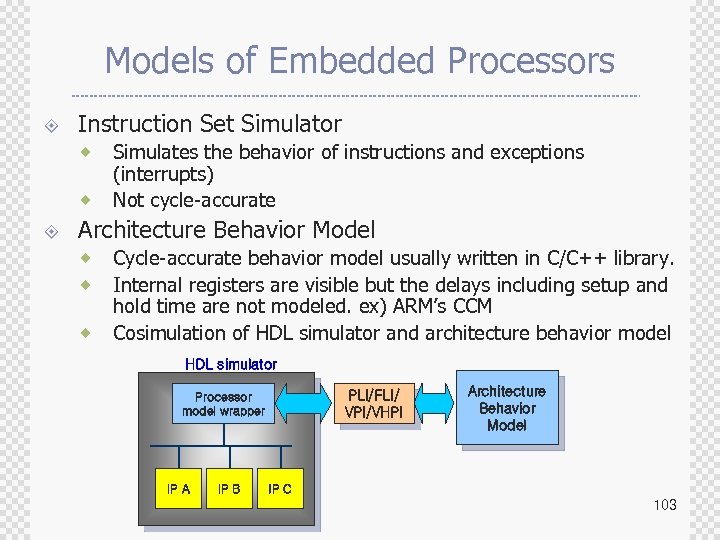

Models of Embedded Processors

- Instruction Set Simulator
  - Simulates the behavior of instructions and exceptions (interrupts).
  - Not cycle-accurate.
- Architecture Behavior Model
  - Cycle-accurate behavior model, usually provided as a C/C++ library.
  - Internal registers are visible, but delays, including setup and hold times, are not modeled.
  - ex) ARM's CCM
- Co-simulation of HDL simulator and architecture behavior model

Diagram labels: HDL simulator (IP A, IP B, IP C), PLI/FLI/VPI/VHPI, Processor model wrapper, Architecture Behavior Model.
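
To make "simulates the behavior of instructions, not cycle-accurate" concrete, the following Python sketch runs a toy instruction set. The three-instruction ISA (`li`, `add`, `bnez`) and the register names are hypothetical and chosen only for illustration; the simulator tracks architectural state and an instruction count, with no notion of pipeline stages, stalls, or clock cycles.

```python
def run_iss(program, max_steps=1000):
    """Execute a toy program; return final registers and instructions executed."""
    regs = {}
    pc = 0
    steps = 0
    while pc < len(program) and steps < max_steps:
        op, *args = program[pc]
        steps += 1
        if op == "li":        # li rd, imm       : rd = imm
            regs[args[0]] = args[1]
        elif op == "add":     # add rd, rs1, rs2 : rd = rs1 + rs2
            regs[args[0]] = regs[args[1]] + regs[args[2]]
        elif op == "bnez":    # bnez rs, target  : branch if rs != 0
            if regs[args[0]] != 0:
                pc = args[1]
                continue
        else:
            raise ValueError(f"unknown instruction: {op}")
        pc += 1
    return regs, steps


# Sum 5 + 4 + 3 + 2 + 1 into r2.
program = [
    ("li", "r1", 5),
    ("li", "r2", 0),
    ("li", "r3", -1),
    ("add", "r2", "r2", "r1"),
    ("add", "r1", "r1", "r3"),
    ("bnez", "r1", 3),
]
final_regs, executed = run_iss(program)
print(final_regs["r2"], executed)
```

The instruction count is the only timing information available here; a cycle-accurate model would additionally have to account for how many clock cycles each instruction and each branch takes.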

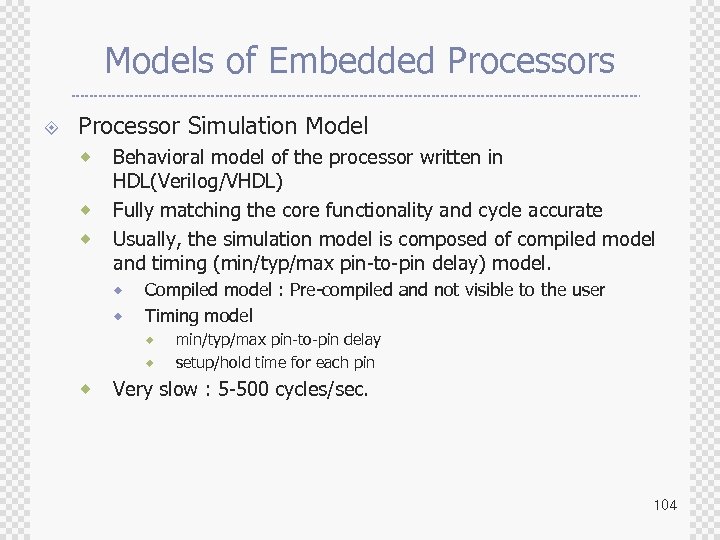

Models of Embedded Processors

- Processor Simulation Model
  - Behavioral model of the processor written in HDL (Verilog/VHDL).
  - Fully matches the core functionality and is cycle-accurate.
  - Usually, the simulation model is composed of a compiled model and a timing model.
    - Compiled model: pre-compiled and not visible to the user
    - Timing model
      - min/typ/max pin-to-pin delay
      - setup/hold time for each pin
  - Very slow: 5-500 cycles/sec.
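
The setup/hold part of such a timing model can be illustrated with a simple check of input transitions against clock edges. The Python sketch below is an assumption-laden illustration, not any vendor's timing model: the function name, the picosecond time unit, and the exact window boundaries are choices made here.

```python
def timing_violations(clock_edges, data_changes, setup, hold):
    """Return (kind, clock_edge, change_time) for each setup/hold violation.

    All times are integers in picoseconds. A data change strictly inside
    (edge - setup, edge) violates setup; a change in [edge, edge + hold)
    violates hold.
    """
    violations = []
    for edge in clock_edges:
        for t in data_changes:
            if edge - setup < t < edge:
                violations.append(("setup", edge, t))
            elif edge <= t < edge + hold:
                violations.append(("hold", edge, t))
    return violations


# 10 ns clock period, 1 ns setup and 0.5 ns hold requirement on one input pin.
edges = [10_000, 20_000, 30_000]
changes = [4_000, 19_500, 30_200]
found = timing_violations(edges, changes, setup=1_000, hold=500)
print(found)
```

Checks of this kind run for every pin at every clock edge, which is one reason a full timing simulation is much slower than a purely functional model.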

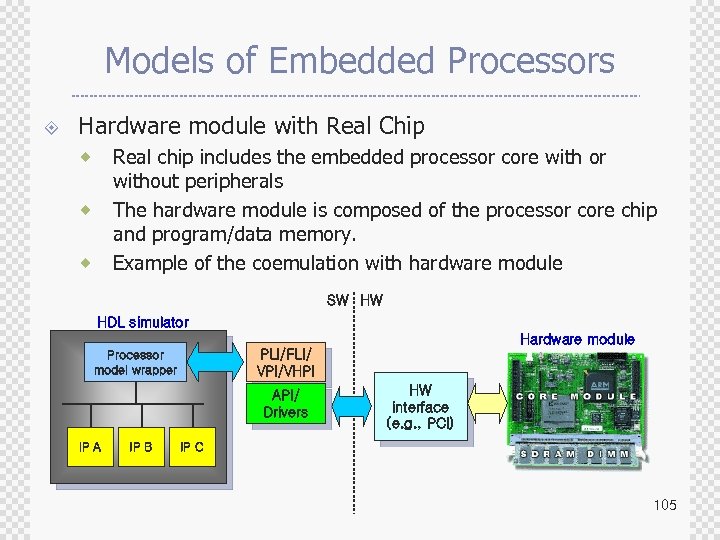

Models of Embedded Processors

- Hardware module with Real Chip
  - The real chip includes the embedded processor core with or without peripherals.
  - The hardware module is composed of the processor core chip and program/data memory.
  - Example of co-emulation with a hardware module

Diagram labels: SW side: HDL simulator (IP A, IP B, IP C), PLI/FLI/VPI/VHPI, Processor model wrapper, API/Drivers; HW side: HW interface (e.g., PCI), Hardware module.

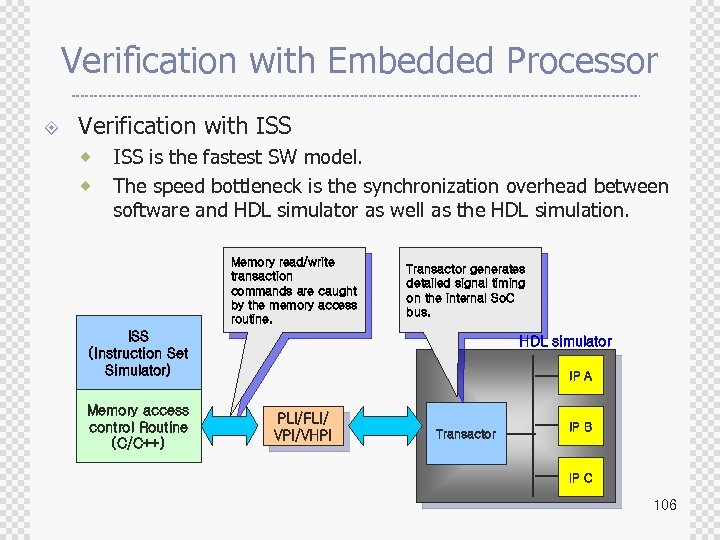

Verification with Embedded Processor

- Verification with ISS
  - The ISS is the fastest SW model. The speed bottleneck is the synchronization overhead between the software and the HDL simulator, as well as the HDL simulation itself.
  - Memory read/write transaction commands are caught by the memory access routine. The transactor generates detailed signal timing on the internal SoC bus.

Diagram labels: ISS (Instruction Set Simulator), Memory access control routine (C/C++), PLI/FLI/VPI/VHPI, Transactor, HDL simulator (IP A, IP B, IP C).
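
The division of work between the memory access routine and the transactor can be sketched as follows. This Python model is illustrative only: the `IP_BASE` address map, the class names, and the two-phase (address, then data) bus timing are assumptions, and a real setup would drive an HDL simulator through PLI/VPI rather than a Python dictionary.

```python
IP_BASE = 0x8000_0000  # hypothetical: addresses at or above this map to IP blocks


class BusTransactor:
    """Expands one read/write command into per-cycle bus signal values."""

    def __init__(self):
        self.ip_registers = {}  # stands in for IP A/B/C inside the HDL simulator
        self.waveform = []      # one dict of signal values per bus clock cycle

    def write(self, addr, data):
        self.waveform.append({"phase": "address", "addr": addr, "write": 1})
        self.waveform.append({"phase": "data", "wdata": data})
        self.ip_registers[addr] = data

    def read(self, addr):
        data = self.ip_registers.get(addr, 0)
        self.waveform.append({"phase": "address", "addr": addr, "write": 0})
        self.waveform.append({"phase": "data", "rdata": data})
        return data


class MemoryAccessRoutine:
    """Catches ISS loads/stores and forwards only IP accesses to the transactor."""

    def __init__(self, transactor):
        self.local_memory = {}  # program/data memory served inside the ISS
        self.transactor = transactor
        self.hdl_syncs = 0      # times the HDL simulator had to be entered

    def store(self, addr, data):
        if addr >= IP_BASE:
            self.hdl_syncs += 1
            self.transactor.write(addr, data)
        else:
            self.local_memory[addr] = data

    def load(self, addr):
        if addr >= IP_BASE:
            self.hdl_syncs += 1
            return self.transactor.read(addr)
        return self.local_memory.get(addr, 0)


bus = BusTransactor()
mem = MemoryAccessRoutine(bus)
mem.store(0x100, 7)               # local access: no HDL synchronization
mem.store(IP_BASE + 0x4, 0xAB)    # IP register write: goes through the transactor
value = mem.load(IP_BASE + 0x4)   # IP register read
print(hex(value), mem.hdl_syncs, len(bus.waveform))
```

Keeping ordinary memory accesses inside the ISS limits how often the slower HDL simulator must be synchronized, which is the bottleneck the slide identifies.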

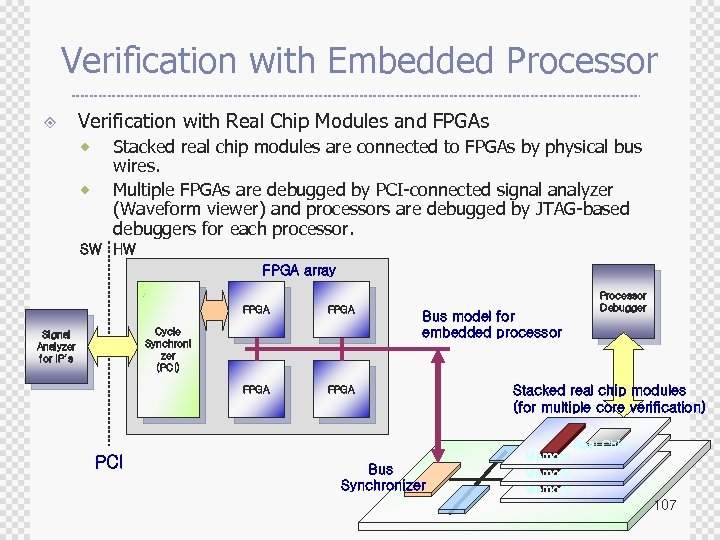

Verification with Embedded Processor

- Verification with Real Chip Modules and FPGAs
  - Stacked real chip modules are connected to FPGAs by physical bus wires.
  - Multiple FPGAs are debugged by a PCI-connected signal analyzer (waveform viewer), and processors are debugged by a JTAG-based debugger for each processor.

Diagram labels: SW side: Signal Analyzer for IPs, Processor Debugger; HW side: FPGA array, Cycle Synchronizer (PCI), PCI, Bus model for embedded processor, Bus Synchronizer, Stacked real chip modules (for multiple core verification), Real Chip, Memory.

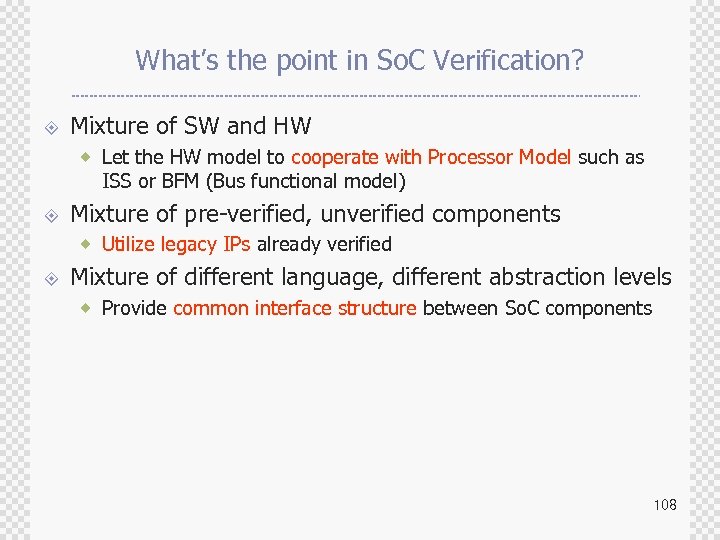

What’s the point in SoC Verification?

- Mixture of SW and HW
  - Let the HW model cooperate with a processor model such as an ISS or BFM (bus functional model).
- Mixture of pre-verified and unverified components
  - Utilize legacy IPs that are already verified.
- Mixture of different languages and different abstraction levels
  - Provide a common interface structure between SoC components.

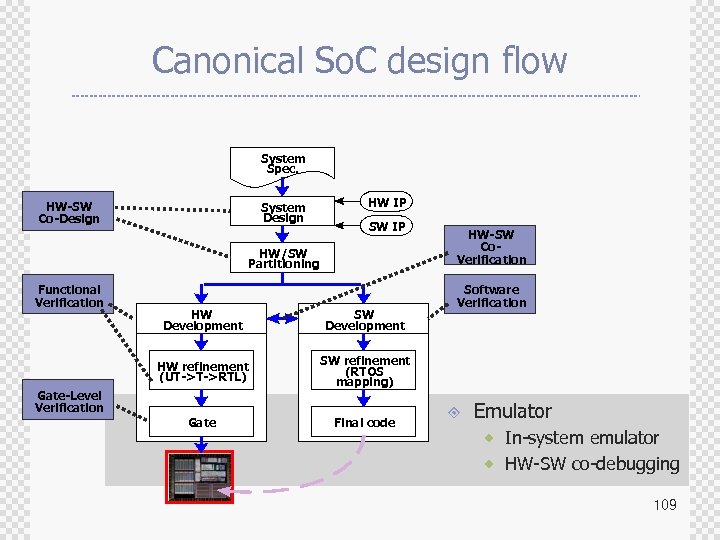

Canonical SoC design flow

Flowchart labels: System Spec.; HW-SW Co-Design; System Design; HW IP; SW IP; HW/SW Partitioning; Functional Verification; HW Development; SW Development; HW refinement (UT->T->RTL); SW refinement (RTOS mapping); Gate; Final code; Gate-Level Verification; Software Verification; HW-SW Co-Verification.

- Emulator
  - In-system emulator
  - HW-SW co-debugging

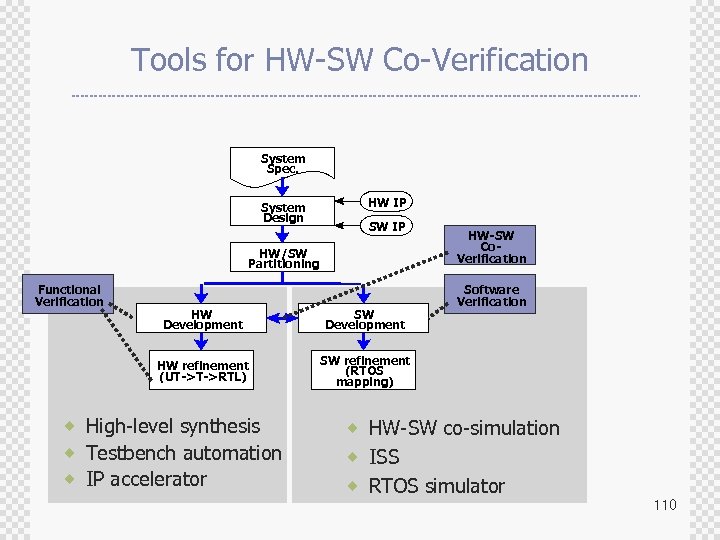

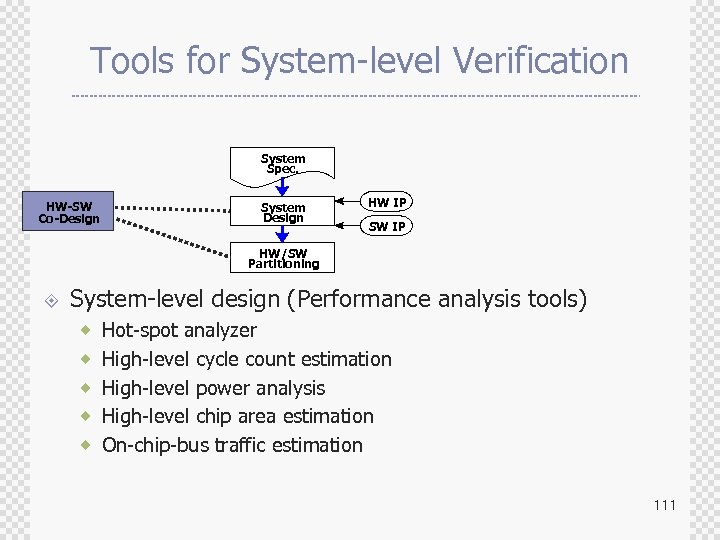

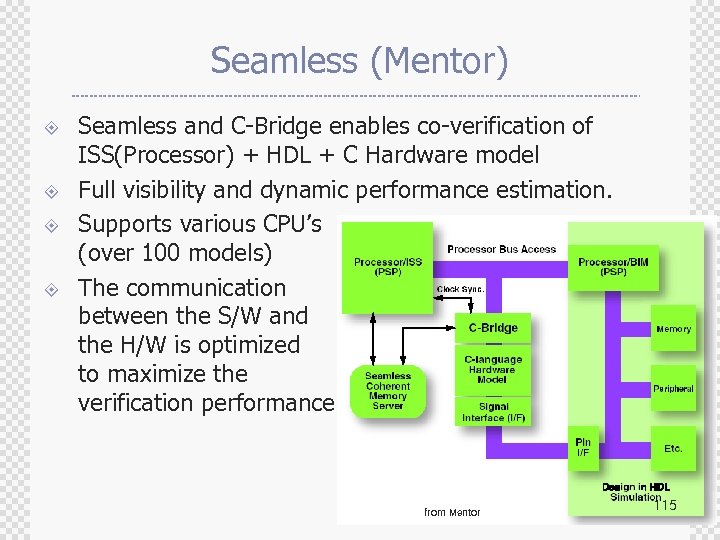

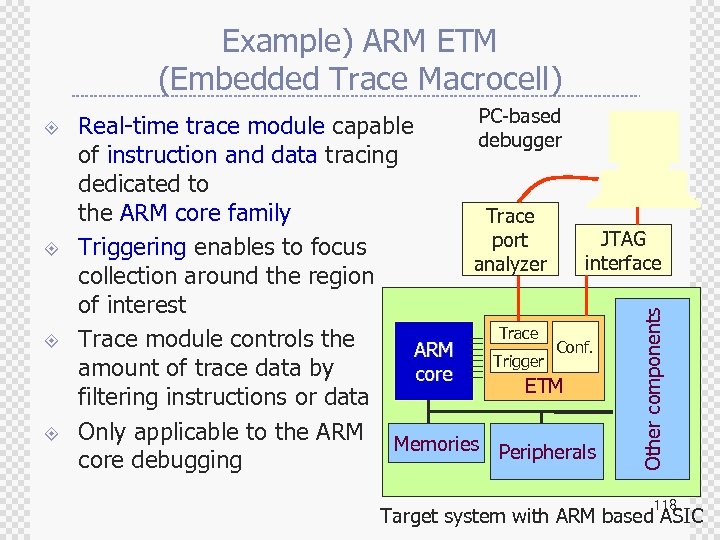

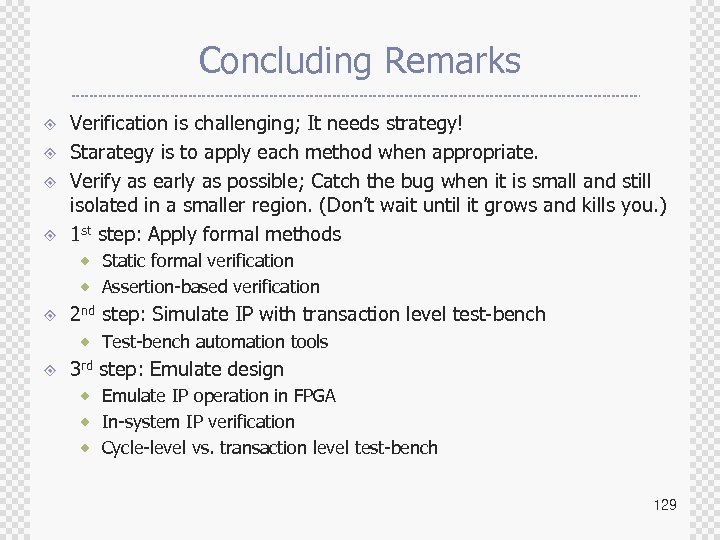

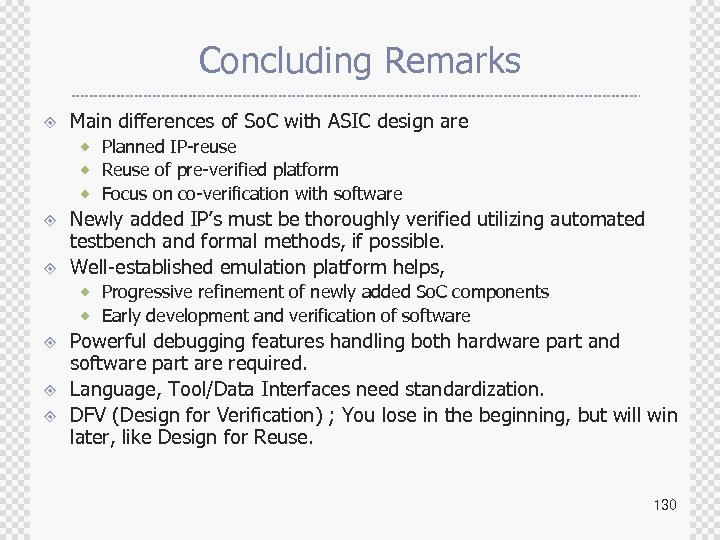

Tools for HW-SW Co-Verification

Flowchart labels: System Spec.; System Design; HW IP; SW IP; HW/SW Partitioning; Functional Verification; HW Development; SW Development; HW refinement (UT->T->RTL); SW refinement (RTOS mapping); HW-SW Co-Verification; Software Verification.

- High-level synthesis
- Testbench automation
- IP accelerator
- HW-SW co-simulation
- ISS
- RTOS simulator