- Number of slides: 28

CSA 4040: Advanced Topics in NLP

Information Extraction III: Coreference

December 2003, CSA 4050: Information Extraction III

References

- D. Appelt & D. Israel, Introduction to IE Technology, IJCAI-99 Tutorial (1999)
- Van Deemter and Kibble, What is coreference and what should coreference annotation be? (1999)
- J. F. McCarthy & W. Lehnert, Using Decision Trees for Coreference Resolution, Proc. IJCAI 1995

What is Coreference?

- The relation of coreference has been defined as holding between two noun phrases if they "refer to the same entity".
- NPs α and β corefer if ref(α) = ref(β).
- Coreference is an equivalence relation: a symmetric, transitive and reflexive relation which partitions the NPs into a set of equivalence classes.
- Issue: must the referent actually be identified in order to establish coreference?
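
Because coreference is an equivalence relation, a set of pairwise links is enough to recover the equivalence classes. The following is a minimal sketch of that step using a union-find structure; the function name `coreference_classes` and the sample links are illustrative assumptions, not part of the lecture.

```python
def coreference_classes(mentions, links):
    """Partition mentions into equivalence classes from pairwise coreference links."""
    parent = {m: m for m in mentions}

    def find(m):
        # Follow parent pointers to the class representative (with path halving).
        while parent[m] != m:
            parent[m] = parent[parent[m]]
            m = parent[m]
        return m

    for a, b in links:
        parent[find(a)] = find(b)  # merge the two classes

    classes = {}
    for m in mentions:
        classes.setdefault(find(m), set()).add(m)
    return list(classes.values())


# Hypothetical mentions and links, chosen for illustration only.
mentions = ["Motor Vehicles International Ltd.", "MVI",
            "The big automaker", "A company spokesman"]
links = [("MVI", "Motor Vehicles International Ltd."),
         ("The big automaker", "MVI")]
classes = coreference_classes(mentions, links)
print(classes)
```

Only two links are given, but transitivity places all three company mentions in one class, while the spokesman remains in a class of its own.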

Different Kinds of Noun Phrase

- Proper Nouns
  - Single Word
  - Multiple Word
- Pronouns
- Descriptions
  - Definite
  - Indefinite

Coreference Tags in MUC 6

- The ID and REF attributes are used to indicate that there is a coreference link between two strings. The ID is arbitrarily but uniquely assigned to the string during markup. The REF uses that ID to indicate the coreference link.
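
To make the ID/REF mechanism concrete, here is a small sketch that reads such links back out of marked-up text. It assumes SGML-style `<COREF ID="..." REF="...">` elements; the tag name, the sample sentence and the function `coref_links` are assumptions made for illustration, and nested tags are not handled.

```python
import re

# Assumed tag syntax: <COREF ID="n" REF="m">string</COREF> (non-nested only).
COREF = re.compile(r'<COREF\s+([^>]*)>(.*?)</COREF>', re.S)
ATTR = re.compile(r'(\w+)="([^"]*)"')


def coref_links(text):
    """Return ({id: string}, [(id, ref), ...]) for every tagged string."""
    strings, links = {}, []
    for attrs, body in COREF.findall(text):
        a = dict(ATTR.findall(attrs))
        strings[a["ID"]] = body
        if "REF" in a:  # REF points back at the ID of a coreferent string
            links.append((a["ID"], a["REF"]))
    return strings, links


sample = ('<COREF ID="1">Motor Vehicles International Ltd.</COREF> announced '
          'a shakeup. <COREF ID="2" REF="1">MVI</COREF> said that '
          '<COREF ID="3" REF="2">its</COREF> CEO had resigned.')
strings, links = coref_links(sample)
print(links)
```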

Proper Nouns

- Obvious case: two separate occurrences of the same proper noun.
  - Paris is the capital of France; Paris is beautiful.
- Or two occurrences of identical phrases.
  - Mr. Dom Mintoff is as Mr. Dom Mintoff does.
- But note that similar tokens do not always corefer, even when they are proper nouns.
  - Example: Chris Attard met Chris Attard for the first time.
- Conversely, different-looking tokens can corefer.
  - Genève; Geneva; Ginevra; Genf

Amo et al. 1999

- Definition of the “replicantes” relation between variants of Spanish proper names. Names are in the replicancia relation if:
  - One of them coincides with the initials of the other.
  - The shorter is contained in the longer.
  - Every word of the shorter is “a version of” some word in the longer.
- Example: {Jose Luis Martinez Lopez, JL Martinez, J. L. Martinez, J Martinez, Luis Martinez, Jose Martinez, JL, M, L}
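
The three criteria can be sketched as a simple name-matching function. This is only one possible reading: it assumes the criteria are alternatives (any one suffices), that "a version of" means "identical to, or the initial of", and that an all-capitals token such as "JL" stands for a run of initials. The function names are hypothetical.

```python
def words(name):
    """Lower-cased words of a name; an all-caps token like 'JL' becomes initials."""
    out = []
    for word in name.replace(".", " ").split():
        if word.isupper():
            out.extend(ch.lower() for ch in word)  # assumption: caps = initials
        else:
            out.append(word.lower())
    return out


def is_version(short_word, long_word):
    # Assumed meaning of "a version of": same word, or its initial.
    return short_word == long_word or (
        len(short_word) == 1 and long_word.startswith(short_word))


def replicantes(a, b):
    # The "shorter" name is the one with fewer letters.
    short, long_ = sorted((words(a), words(b)), key=lambda ws: sum(map(len, ws)))
    if not short:
        return False
    if short == [w[0] for w in long_]:  # criterion 1: initials
        return True
    n = len(short)
    if any(long_[i:i + n] == short for i in range(len(long_) - n + 1)):
        return True                     # criterion 2: containment
    # criterion 3: every short word is a version of some long word
    return all(any(is_version(s, l) for l in long_) for s in short)


print(replicantes("Jose Luis Martinez Lopez", "JL Martinez"))
print(replicantes("Jose Luis Martinez Lopez", "Pedro Martinez"))
```

Under these assumptions the first call accepts the pair and the second rejects it, because "Pedro" is not a version of any word in the longer name.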

Pronouns

- Most pronouns refer to an antecedent which occurs earlier in the text (not necessarily in the same sentence).
  - Example: John came into the room. He shivered.
- The pronoun is said to be in an anaphoric relation to the antecedent.
- Determination of reference can require large amounts of knowledge processing.
  - Example: The police refused the demonstrators a permit because they feared/advocated violence.

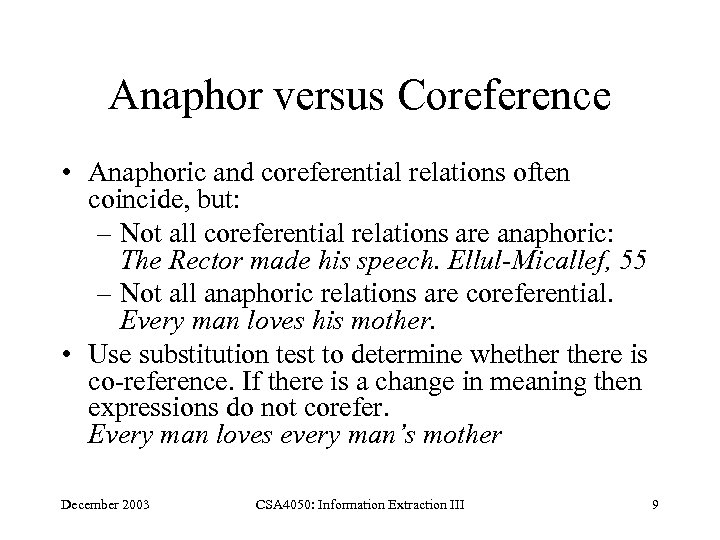

Anaphor versus Coreference

- Anaphoric and coreferential relations often coincide, but:
  - Not all coreferential relations are anaphoric: The Rector made his speech. Ellul-Micallef, 55
  - Not all anaphoric relations are coreferential: Every man loves his mother.
- Use the substitution test to determine whether there is coreference: replace the anaphor with its antecedent. If the meaning changes, the expressions do not corefer.
  - Example: "Every man loves every man’s mother" does not mean the same as "Every man loves his mother", so "his" and "every man" do not corefer.

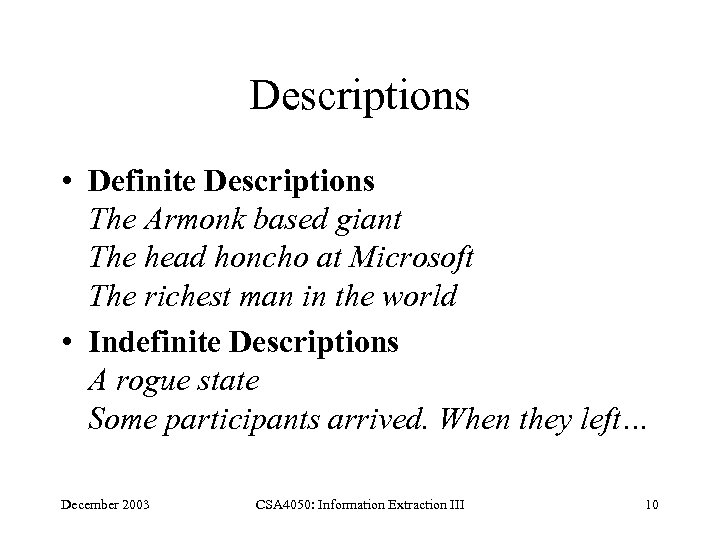

Descriptions

- Definite Descriptions
  - The Armonk-based giant
  - The head honcho at Microsoft
  - The richest man in the world
- Indefinite Descriptions
  - A rogue state
  - Some participants arrived. When they left…

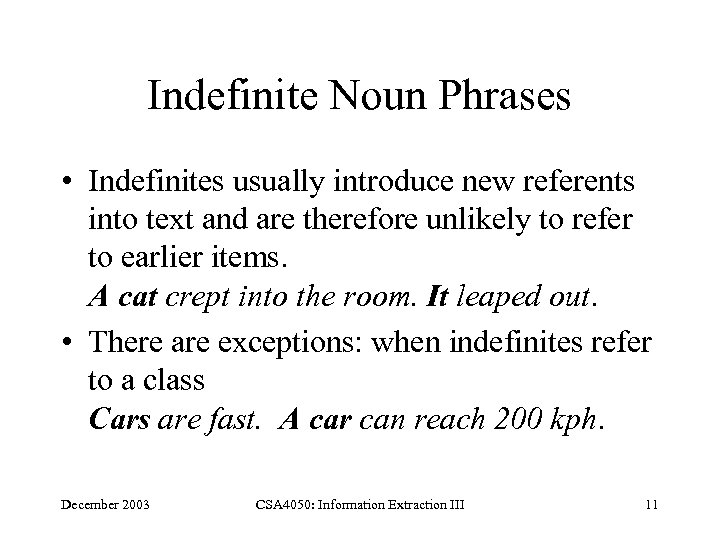

Indefinite Noun Phrases

- Indefinites usually introduce new referents into the text and are therefore unlikely to refer to earlier items.
  - Example: A cat crept into the room. It leaped out.
- There are exceptions, for instance when indefinites refer to a class.
  - Example: Cars are fast. A car can reach 200 kph.

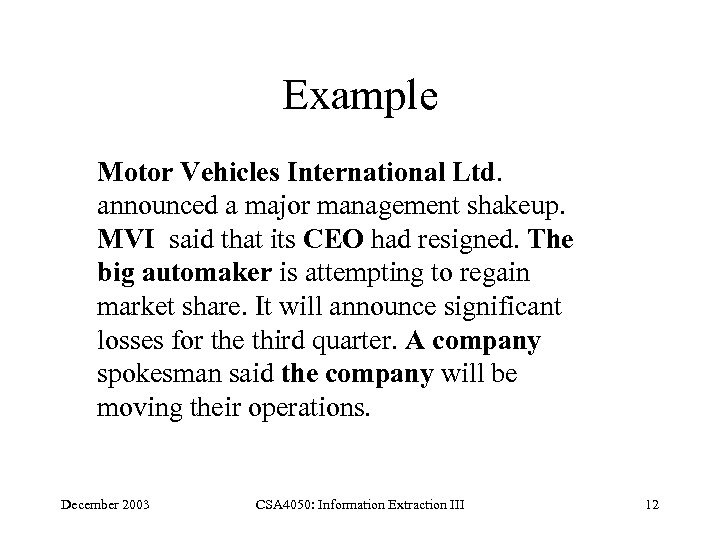

Example

Motor Vehicles International Ltd. announced a major management shakeup. MVI said that its CEO had resigned. The big automaker is attempting to regain market share. It will announce significant losses for the third quarter. A company spokesman said the company will be moving their operations.

Two Approaches to Coreference

- Knowledge Engineering
  - Based on adapting theoretical work on coreference to the sparse and incomplete analyses obtained in IE.
- Automatically Trained Systems
  - Small range of approaches
  - Probabilistic and non-probabilistic

Algorithm - KE Approach

1. Identify each noun phrase.
2. Mark each noun phrase with:
   - type information, animacy etc.
   - agreement info (number/gender)
   - syntactic features (definiteness)
   - possibly other semantic information from a dictionary (e.g. vehicle/furniture/transport)

Algorithm – KE Approach

- Try to distinguish between referring expressions and referents, using:
  - Length
  - Syntactic criteria (proper noun/description)
  - Internal structure
  - Gazetteer
- For each referring expression:
  - Determine accessible antecedents
  - Filter with type check
  - Rank candidates

Accessible Antecedents

- Names
  - entire preceding text
  - possibly other documents in the collection
  - match using aliases/acronyms
- Definite noun phrases
  - part of the preceding text
  - same sentence; previous paragraph etc.
- Pronouns
  - same, but smaller stretches of preceding text (pronouns rarely refer across paragraph boundaries)
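
The idea of a search window that depends on the type of referring expression can be sketched as follows. The `Mention` record and the paragraph limits in `MAX_PARAGRAPHS_BACK` are hypothetical values chosen for illustration; the slides do not give exact window sizes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mention:
    text: str
    kind: str       # "name", "definite" or "pronoun"
    position: int   # order of appearance in the text
    paragraph: int  # index of the containing paragraph


# Assumed limits: names search the whole preceding text (None), definites
# look back one paragraph, pronouns stay inside their own paragraph.
MAX_PARAGRAPHS_BACK = {"name": None, "definite": 1, "pronoun": 0}


def accessible_antecedents(anaphor, mentions):
    """Preceding mentions that fall inside the window for this kind of anaphor."""
    limit = MAX_PARAGRAPHS_BACK[anaphor.kind]
    return [m for m in mentions
            if m.position < anaphor.position
            and (limit is None or anaphor.paragraph - m.paragraph <= limit)]


a = Mention("Motor Vehicles International Ltd.", "name", 0, 0)
b = Mention("the big automaker", "definite", 1, 1)
c = Mention("it", "pronoun", 2, 2)
d = Mention("MVI", "name", 3, 2)
doc = [a, b, c, d]
print([m.text for m in accessible_antecedents(d, doc)])
```

A real system would also look at sentence boundaries and, for names, at other documents in the collection, which this sketch leaves out.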

Filter for Semantic Consistency

- Number/Gender
  - John lost Mary's trousers. Then he/she found them/her.
- Semantic Type
  - John dropped the hammer on the glass table. It shattered/bounced.
  - Mike tried to save CSAI. The Department was burning. The chief acted heroically.
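
The number/gender filter can be sketched on the trousers example. The feature tables below are hand-written for illustration, and ranking the surviving candidates by recency is an assumption rather than something the slides prescribe; semantic-type filtering (shattered/bounced) would need a further lexical resource and is not covered.

```python
# Hypothetical hand-assigned (number, gender) features.
CANDIDATE_FEATURES = {
    "John": ("sg", "masc"),
    "Mary": ("sg", "fem"),
    "trousers": ("pl", "neut"),
}
PRONOUN_FEATURES = {
    "he": ("sg", "masc"),
    "she": ("sg", "fem"),
    "her": ("sg", "fem"),
    "them": ("pl", None),  # None = pronoun places no constraint on gender
}


def compatible(pronoun_feats, candidate_feats):
    """A candidate passes if it matches every feature the pronoun constrains."""
    return all(p is None or p == c
               for p, c in zip(pronoun_feats, candidate_feats))


def resolve(pronoun, candidates):
    """Filter candidates (given in textual order), then pick the most recent."""
    feats = PRONOUN_FEATURES[pronoun]
    survivors = [c for c in candidates
                 if compatible(feats, CANDIDATE_FEATURES[c])]
    return survivors[-1] if survivors else None


candidates = ["John", "Mary", "trousers"]
for pronoun in ("he", "she", "them"):
    print(pronoun, "->", resolve(pronoun, candidates))
```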

State of the Art

- MUC 6
  - precision .72, recall .63
- MUC 7
  - UPENN's high-precision system: precision = .80, recall = .30
- The fact that IE parsers are incomplete, shallow and not fully reliable motivates the statistical approaches.
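
The slide reports precision and recall separately. One common way to compare such figures on a single scale is the balanced F-measure (the harmonic mean of the two); the sketch below applies it to the numbers above. Using the F-measure here is an addition for illustration, not something stated on the slide.

```python
def f_measure(precision, recall, beta=1.0):
    """Weighted harmonic mean of precision and recall (beta=1 is balanced)."""
    if precision == 0 and recall == 0:
        return 0.0
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


muc6 = f_measure(0.72, 0.63)  # MUC 6 figures from the slide
muc7 = f_measure(0.80, 0.30)  # MUC 7 high-precision system
print(f"MUC 6: {muc6:.3f}  MUC 7: {muc7:.3f}")
```

The high-precision MUC 7 system scores lower on this combined measure (about 0.44 against about 0.67) because its recall is so low.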

Statistical Approaches

- Motivated by errors introduced by earlier processing
- Supervised learning
- Data: determine, by inspection, correct coreference or classes.

Methodology of Statistical Methods

- Produce tagged data in which all coreference pairs are tagged as such.
- Determine which system-recognisable features of the individual expressions are relevant to the coreference judgement.
- Apply some learning technique to the resulting feature vectors.

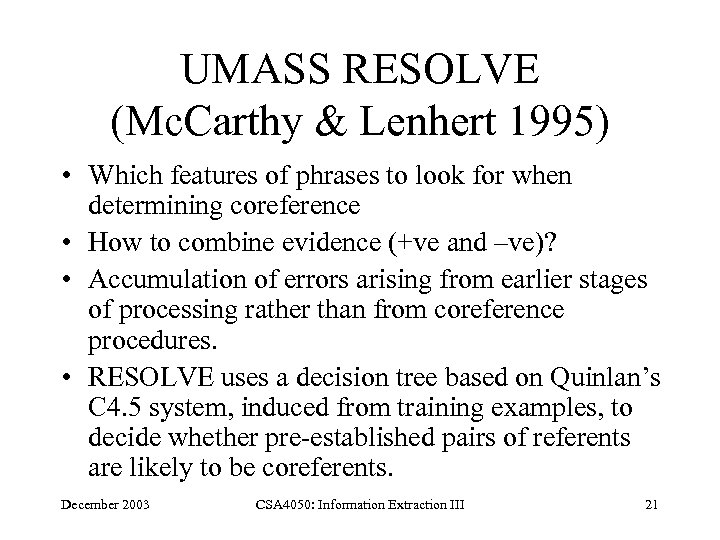

UMASS RESOLVE (McCarthy & Lehnert 1995)

- Which features of phrases to look for when determining coreference?
- How to combine evidence (+ve and –ve)?
- Accumulation of errors arising from earlier stages of processing rather than from coreference procedures.
- RESOLVE uses a decision tree based on Quinlan’s C4.5 system, induced from training examples, to decide whether pre-established pairs of referents are likely to be coreferent.

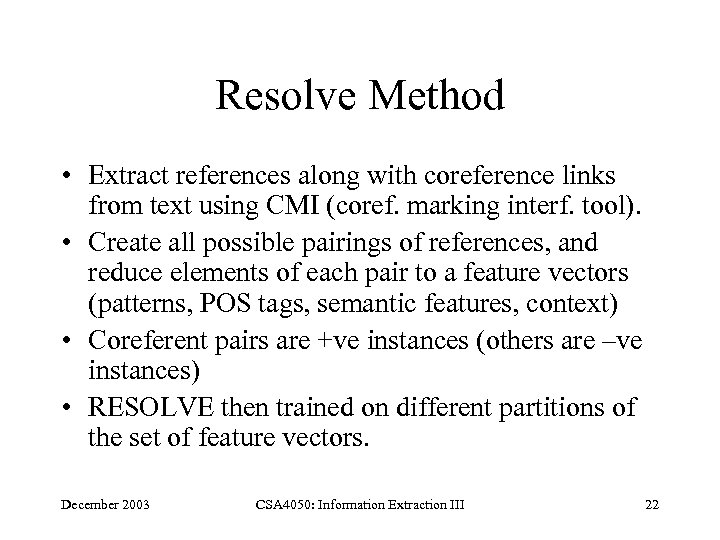

RESOLVE Method

- Extract references along with coreference links from text using CMI (a coreference marking interface tool).
- Create all possible pairings of references, and reduce the elements of each pair to a feature vector (patterns, POS tags, semantic features, context).
- Coreferent pairs are +ve instances (others are –ve instances).
- RESOLVE is then trained on different partitions of the set of feature vectors.
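
The pairing step can be sketched as below: every pair of references becomes one training instance, labelled positive when the two references belong to the same annotated chain. The feature names, the `featurize` function and the chain assignments are hypothetical stand-ins for illustration; they are not the features or annotations used by RESOLVE.

```python
from itertools import combinations


def training_instances(references, chain_of, featurize):
    """One (feature_vector, label) pair for every unordered pair of references."""
    instances = []
    for a, b in combinations(references, 2):
        label = chain_of[a] == chain_of[b]  # True = +ve, False = -ve
        instances.append((featurize(a, b), label))
    return instances


# Illustrative data: chain ids stand in for a human annotator's judgement.
references = ["FAMILYMART CO.", "TAIWAN'S LARGEST CAR DEALER", "THE COMPANY"]
chain_of = {"FAMILYMART CO.": 0,
            "TAIWAN'S LARGEST CAR DEALER": 1,
            "THE COMPANY": 0}
NAMED = {"FAMILYMART CO."}


def featurize(a, b):
    # Toy features; a real system would draw on patterns, POS tags and context.
    return {"first_is_name": a in NAMED,
            "second_is_name": b in NAMED,
            "same_sentence": True}


instances = training_instances(references, chain_of, featurize)
for features, label in instances:
    print(label, features)
```

With n references this produces n(n-1)/2 instances, most of them negative, which is one reason the choice of training partitions matters.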

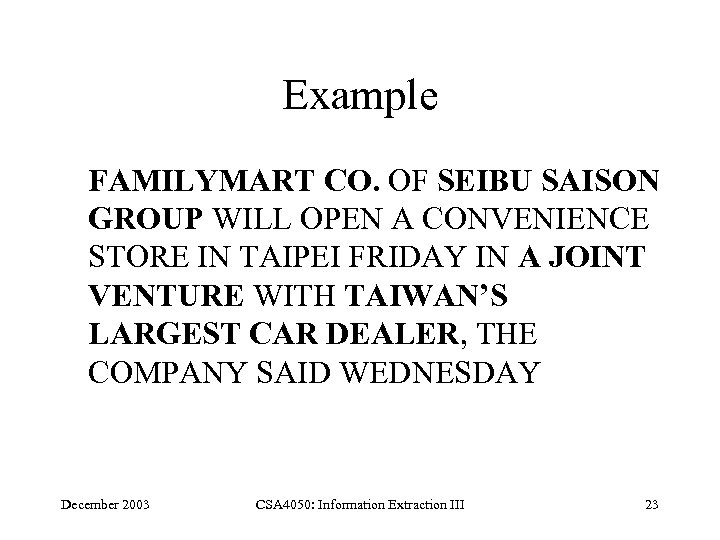

Example

FAMILYMART CO. OF SEIBU SAISON GROUP WILL OPEN A CONVENIENCE STORE IN TAIPEI FRIDAY IN A JOINT VENTURE WITH TAIWAN’S LARGEST CAR DEALER, THE COMPANY SAID WEDNESDAY

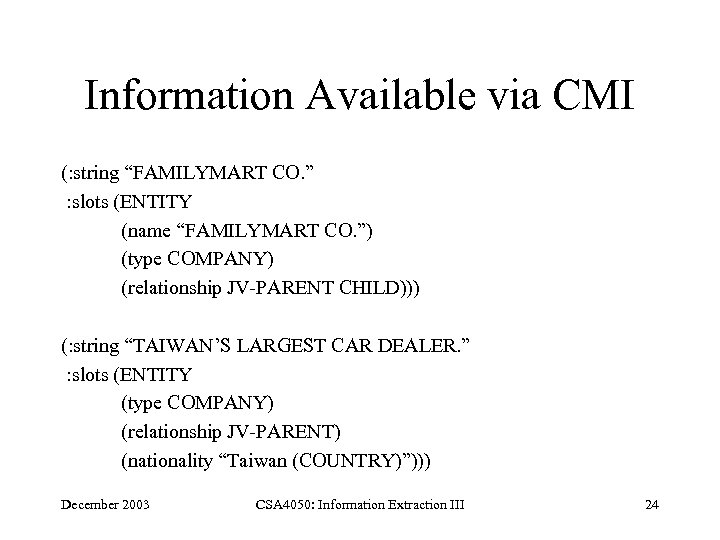

Information Available via CMI

    (:string “FAMILYMART CO.”
     :slots (ENTITY (name “FAMILYMART CO.”)
                    (type COMPANY)
                    (relationship JV-PARENT CHILD)))

    (:string “TAIWAN’S LARGEST CAR DEALER.”
     :slots (ENTITY (type COMPANY)
                    (relationship JV-PARENT)
                    (nationality “Taiwan (COUNTRY)”)))

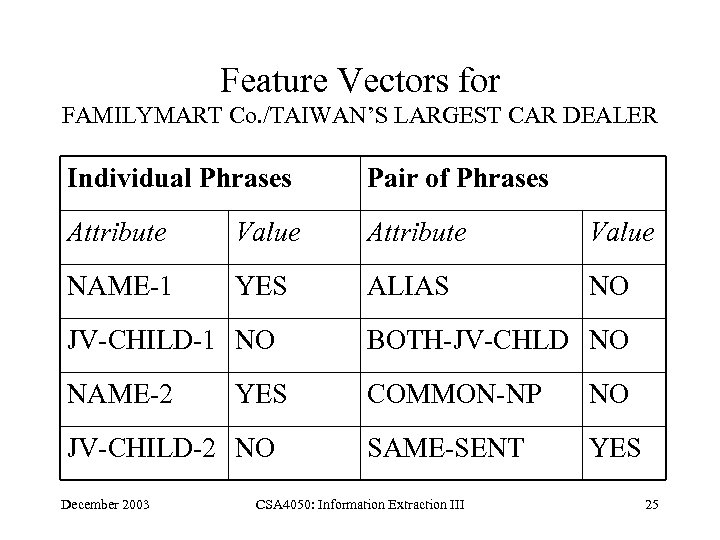

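Feature Vectors for FAMILYMART CO. / TAIWAN’S LARGEST CAR DEALER

| Scope | Attribute | Value |
| --- | --- | --- |
| Individual phrases | NAME-1 | YES |
| Individual phrases | JV-CHILD-1 | NO |
| Individual phrases | NAME-2 | |
| Individual phrases | JV-CHILD-2 | NO |
| Pair of phrases | ALIAS | NO |
| Pair of phrases | BOTH-JV-CHLD | NO |
| Pair of phrases | COMMON-NP | NO |
| Pair of phrases | SAME-SENT | YES |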

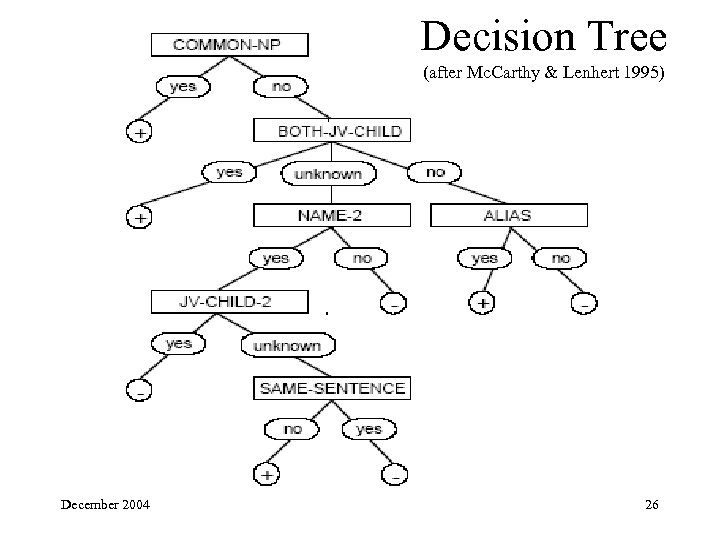

Decision Tree (after McCarthy & Lehnert 1995)

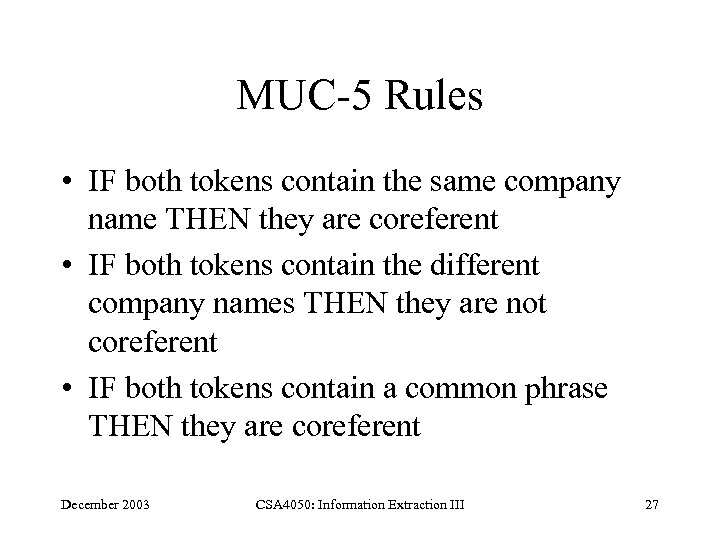

MUC-5 Rules

- IF both tokens contain the same company name THEN they are coreferent.
- IF both tokens contain different company names THEN they are not coreferent.
- IF both tokens contain a common phrase THEN they are coreferent.
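
These hand-written rules translate directly into a small decision function. The sketch below assumes that the rules are tried in the order listed, that each token's company name (if any) has already been extracted, and that the "common phrase" test is supplied by the caller as a boolean; the function name `muc5_rules` is hypothetical.

```python
def muc5_rules(name_a, name_b, common_phrase):
    """Return True (coreferent), False (not coreferent) or None (no rule fires).

    name_a, name_b: company name found in each token, or None if there is none.
    common_phrase:  whether both tokens contain a common phrase.
    """
    if name_a is not None and name_b is not None:
        # Rules 1 and 2: same name -> coreferent, different names -> not.
        return name_a == name_b
    if common_phrase:
        # Rule 3: both tokens contain a common phrase.
        return True
    return None  # the rules are silent on this pair


print(muc5_rules("FAMILYMART CO.", "FAMILYMART CO.", False))
print(muc5_rules("FAMILYMART CO.", "SEIBU SAISON GROUP", True))
print(muc5_rules("FAMILYMART CO.", None, True))
print(muc5_rules(None, None, False))
```

Returning `None` when no rule applies keeps the rule set's silence distinct from a negative decision, which matters when the rules are used as a baseline against a trained classifier.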

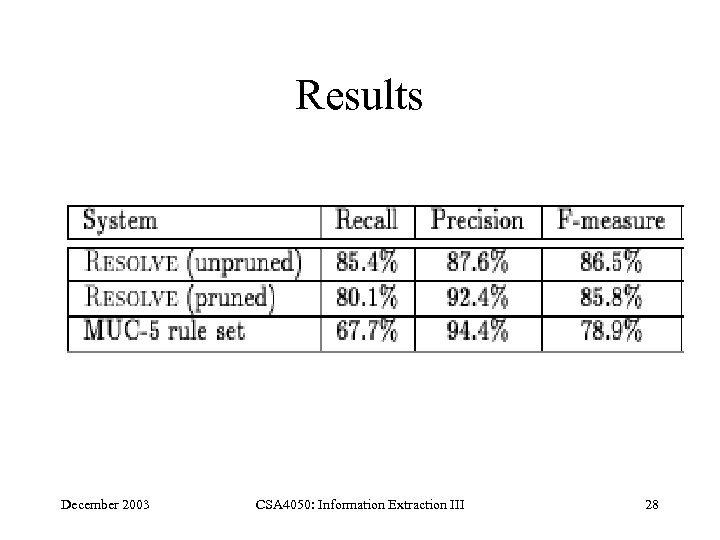

Results
