- Number of slides: 34

CS 7960-4 Lecture 2
Limits of Instruction-Level Parallelism
David W. Wall
WRL Research Report 93/6
Also appears in ASPLOS ’91

Goals of the Study
• Under optimistic assumptions, you can find a very high degree of parallelism (1000!)
  – What about parallelism under realistic assumptions?
  – What are the bottlenecks? What contributes to parallelism?

Dependencies
• For registers and memory:
  – True data dependency (RAW)
  – Anti dependency (WAR)
  – Output dependency (WAW)
• Control dependency
• Structural dependency
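The following minimal C sketch (not from the paper) makes the three register dependence types concrete. It classifies the dependence from an earlier instruction to a later one. The `Instr` and `Deps` types and `classify()` are hypothetical, and each instruction is assumed to write at most one register and read at most two. Memory dependences follow the same rules, with addresses in place of register numbers.

```c
#include <stdbool.h>

typedef struct {
    int dest;     /* register written, -1 if none */
    int src[2];   /* registers read, -1 if unused */
} Instr;

typedef struct {
    bool raw;     /* later reads what earlier writes (true dependence) */
    bool war;     /* later writes what earlier reads (anti dependence) */
    bool waw;     /* both write the same register (output dependence) */
} Deps;

/* Does instruction `in` read register r? */
static bool reads(const Instr *in, int r)
{
    return r >= 0 && (in->src[0] == r || in->src[1] == r);
}

/* Classifies register dependences from `earlier` to `later` (program order). */
Deps classify(const Instr *earlier, const Instr *later)
{
    Deps d;
    d.raw = reads(later, earlier->dest);
    d.war = reads(earlier, later->dest);
    d.waw = earlier->dest >= 0 && earlier->dest == later->dest;
    return d;
}
```

For example, `lr1 <- lr1 + lr2` followed by a store of `lr1` is a RAW dependence. The same add followed by a reload of `lr1` is both WAR and WAW.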

Perfect Scheduling
For a long loop:
• Read a[i] and b[i] from memory and store them in registers
• Add the register values
• Store the result in memory location c[i]
No iteration depends on another, so with unlimited resources all loads run in one cycle, all adds in the next, and all stores in the third: the whole program should finish in 3 cycles!!
• Anti and output dependences: the assembly code keeps reusing lr1
• Control dependences: decision-making after each iteration
• Structural dependences: how many registers and cache ports do I have?
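A small dataflow scheduler can check the 3-cycle claim and show the cost of reusing lr1. This is a hedged C sketch, not Wall's simulator. It assumes:
• unit latency
• unlimited cycle width and window
• perfect branch prediction and alias analysis
• register reads at the start of a cycle and writes at the end, so a WAR-dependent write may share a cycle with the read

`Op`, `build_vector_add_trace()` and `schedule_cycles()` are hypothetical names.

```c
#include <stdbool.h>

#define NREGS 8   /* hypothetical number of logical registers */

typedef struct {
    int dest;     /* register written, -1 if none (e.g., a store) */
    int src[2];   /* registers read, -1 if unused */
} Op;

/* Per iteration: load lr1 <- a[i]; load lr2 <- b[i]; lr1 <- lr1 + lr2;
   store c[i] <- lr1. Address registers and loop control are omitted. */
int build_vector_add_trace(Op *trace, int iters)
{
    int n = 0;
    for (int i = 0; i < iters; i++) {
        trace[n++] = (Op){ 1, { -1, -1 } };   /* load  lr1 <- a[i] */
        trace[n++] = (Op){ 2, { -1, -1 } };   /* load  lr2 <- b[i] */
        trace[n++] = (Op){ 1, { 1, 2 } };     /* add   lr1 <- lr1 + lr2 */
        trace[n++] = (Op){ -1, { 1, -1 } };   /* store c[i] <- lr1 */
    }
    return n;
}

/* Places each op in the earliest cycle its dependences allow and
   returns the total number of cycles. */
int schedule_cycles(const Op *trace, int n, bool perfect_renaming)
{
    int last_write[NREGS], last_read[NREGS];   /* cycle of latest write / read */
    for (int r = 0; r < NREGS; r++)
        last_write[r] = last_read[r] = -1;

    int total = 0;
    for (int i = 0; i < n; i++) {
        const Op *op = &trace[i];
        int cycle = 0;
        for (int k = 0; k < 2; k++) {          /* RAW: after the producer */
            int s = op->src[k];
            if (s >= 0 && last_write[s] + 1 > cycle)
                cycle = last_write[s] + 1;
        }
        if (!perfect_renaming && op->dest >= 0) {
            int d = op->dest;
            if (last_write[d] + 1 > cycle)     /* WAW: after the previous write */
                cycle = last_write[d] + 1;
            if (last_read[d] > cycle)          /* WAR: not before earlier reads */
                cycle = last_read[d];
        }
        for (int k = 0; k < 2; k++) {
            int s = op->src[k];
            if (s >= 0 && cycle > last_read[s])
                last_read[s] = cycle;
        }
        if (op->dest >= 0)
            last_write[op->dest] = cycle;      /* latest writer in program order */
        if (cycle + 1 > total)
            total = cycle + 1;                 /* cycles are numbered from 0 */
    }
    return total;
}
```

Results for 100 iterations:
• With perfect renaming, the loop takes 3 cycles (loads in cycle 0, adds in cycle 1, stores in cycle 2).
• Honoring WAR and WAW on lr1 and lr2 serializes the iterations to 2N + 1 = 201 cycles.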

Impediments to Perfect Scheduling
• Register renaming
• Alias analysis
• Branch prediction
• Branch fanout
• Indirect jump prediction
• Window size and cycle width
• Latency

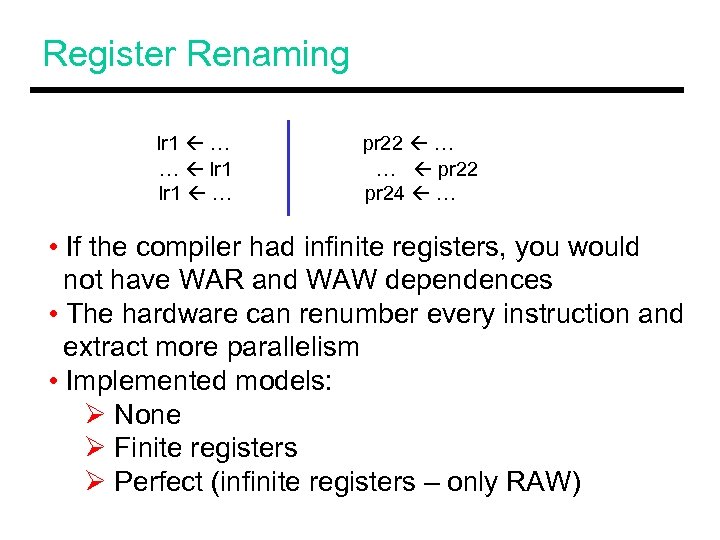

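Register Renaming
Example: a write of lr1 and the read that consumes it are both renamed to pr22. The next write of lr1 gets a fresh physical register, pr24, so it no longer conflicts with them.
• If the compiler had infinite registers, you would not have WAR and WAW dependences
• The hardware can rename the registers of every instruction and extract more parallelism
• Implemented models:
  – None
  – Finite registers
  – Perfect (infinite registers: only RAW dependences remain)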
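Below is a minimal C sketch of renaming with a map table and a free list. The sizes (8 logical and 64 physical registers) and all names are assumptions. A real design also returns a physical register to the free list when the next writer of the same logical register commits; that step is omitted here. Stalling when the free list runs dry corresponds to the finite-registers model.

```c
#define NUM_LOGICAL 8      /* hypothetical register-file sizes */
#define NUM_PHYSICAL 64

typedef struct {
    int map[NUM_LOGICAL];          /* logical -> current physical register */
    int free_list[NUM_PHYSICAL];   /* stack of unallocated physical registers */
    int free_count;
} RenameTable;

void rename_init(RenameTable *rt)
{
    for (int r = 0; r < NUM_LOGICAL; r++)
        rt->map[r] = r;            /* logical r initially lives in physical r */
    rt->free_count = 0;
    for (int p = NUM_PHYSICAL - 1; p >= NUM_LOGICAL; p--)
        rt->free_list[rt->free_count++] = p;   /* NUM_LOGICAL is popped first */
}

/* Renames one instruction in place. Returns 0 (and changes nothing)
   if a destination is needed but no physical register is free. */
int rename_instr(RenameTable *rt, int *dest, int src[2])
{
    if (*dest >= 0 && rt->free_count == 0)
        return 0;                              /* out of registers: stall */
    for (int k = 0; k < 2; k++)                /* sources read the current mapping */
        if (src[k] >= 0)
            src[k] = rt->map[src[k]];
    if (*dest >= 0) {                          /* destination gets a fresh register */
        int p = rt->free_list[--rt->free_count];
        rt->map[*dest] = p;
        *dest = p;
    }
    return 1;
}
```

Renaming `lr1 <- …; … <- lr1; lr1 <- …` yields p8, p8, p9. The read still sees the first write, so the RAW dependence is kept. The second write lands in a different physical register, so the WAW and WAR conflicts on lr1 disappear.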

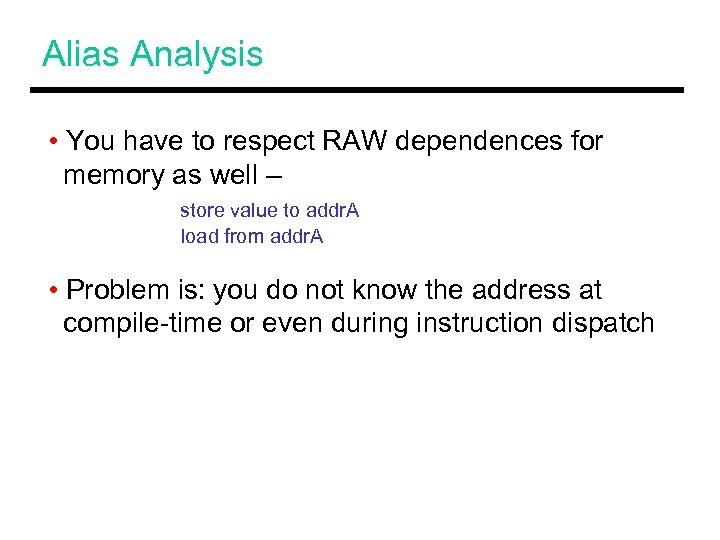

Alias Analysis
• You have to respect RAW dependences through memory as well: a store of a value to address A, followed by a load from address A
• The problem is that you do not know the address at compile time, or even at instruction dispatch

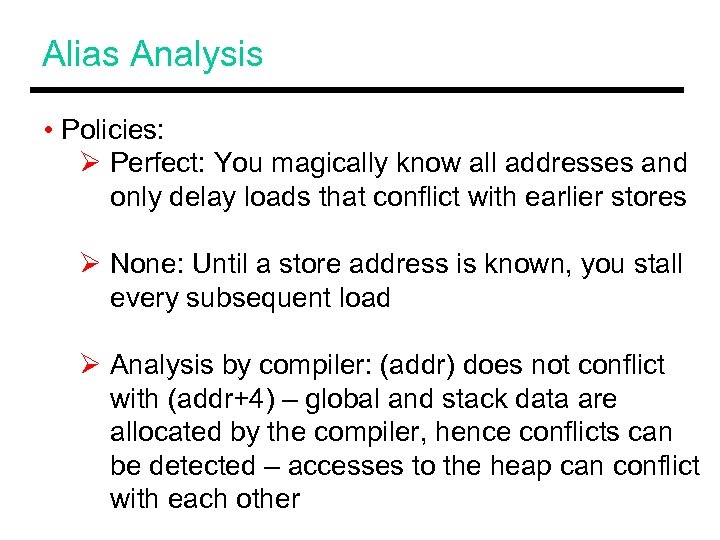

Alias Analysis
• Policies:
  – Perfect: you magically know all addresses and delay only the loads that conflict with earlier stores
  – None: until a store's address is known, you stall every subsequent load
  – Analysis by compiler: (addr) does not conflict with (addr+4)
    – global and stack data are allocated by the compiler, hence conflicts can be detected
    – accesses to the heap can conflict with each other
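The three policies can be contrasted as a single decision: must a load wait for an earlier store that has not yet completed? The C sketch below is hedged. The `MemRef` fields, the region tags, and `load_must_wait()` are hypothetical. The "none" case follows the slide's wording and compares addresses once the store's address is known.

The compiler case is a simplified stand-in for real analysis. It assumes:
• any heap access is treated conservatively
• global and stack data never overlap
• accesses are word-sized, so different constant offsets from the same unmodified base register, e.g. (addr) vs (addr+4), cannot conflict

```c
#include <stdbool.h>

typedef enum { ALIAS_NONE, ALIAS_COMPILER, ALIAS_PERFECT } AliasPolicy;
typedef enum { REGION_GLOBAL, REGION_STACK, REGION_HEAP } Region;

typedef struct {
    unsigned long addr;  /* actual address (the perfect model sees it early) */
    Region region;       /* what the compiler knows about the access */
    int base_reg;        /* base register of the address expression */
    long offset;         /* constant offset from the base register */
    bool addr_known;     /* has the hardware computed the address yet? */
} MemRef;

/* Must `load` wait for the earlier, still-pending `store`? */
bool load_must_wait(AliasPolicy policy, const MemRef *store, const MemRef *load)
{
    switch (policy) {
    case ALIAS_PERFECT:
        return store->addr == load->addr;         /* delay only true conflicts */
    case ALIAS_NONE:
        if (!store->addr_known)
            return true;                          /* unknown store address: stall */
        return store->addr == load->addr;
    case ALIAS_COMPILER:
        if (store->region == REGION_HEAP || load->region == REGION_HEAP)
            return true;                          /* heap pointers may alias */
        if (store->region != load->region)
            return false;                         /* global vs. stack: disjoint */
        if (store->base_reg == load->base_reg)
            return store->offset == load->offset; /* (addr) vs (addr+4) */
        return true;                              /* cannot tell: be conservative */
    }
    return true;
}
```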

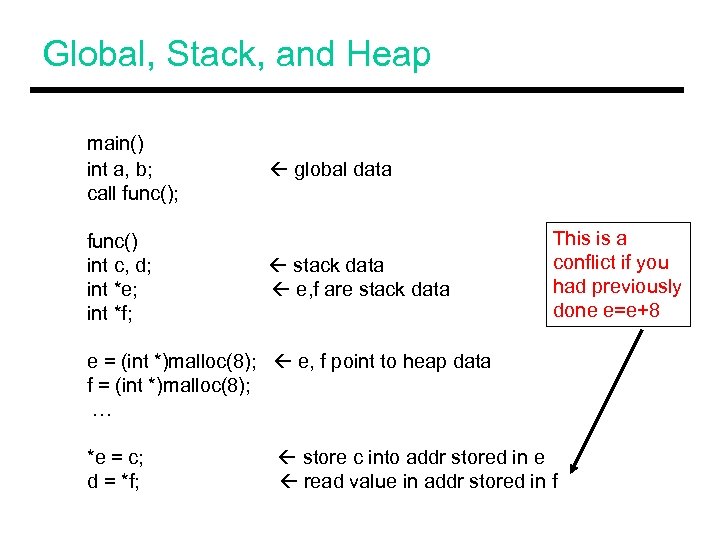

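Global, Stack, and Heap

```c
#include <stdlib.h>

int a, b;                          /* global data */

void func(void)
{
    int c = 1, d;                  /* stack data (c initialized so the code runs) */
    int *e, *f;                    /* e, f are stack data... */

    e = (int *)malloc(8);          /* ...but they point to heap data */
    f = (int *)malloc(8);
    if (!e || !f)
        exit(EXIT_FAILURE);
    *f = 0;                        /* initialized so the load below is well defined */
    /* … */
    *e = c;    /* store c into the address stored in e */
    d = *f;    /* read the value at the address stored in f; this conflicts
                  with the store if e had previously been advanced into f's
                  block (e.g., e = e + 8) */
    (void)d;
    free(e);
    free(f);
}

int main(void)
{
    func();
    return 0;
}
```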

Branch Prediction
• If you go the wrong way, you are not extracting useful parallelism
• You can predict the branch direction statically or dynamically
• You can execute along both directions and throw away some of the work (this needs more resources)

Effect on Performance
• 1000 instructions, of which 150 are branches
• With a 1% mispredict rate, 1.5 branches are mispredicted
• Assume a 1-cycle mispredict penalty per branch
• If you assume that IPC is 2, 1.5 cycles are added to the 500-cycle execution time (a 0.3% loss)
• Performance loss (%) = 0.3 × mispredict rate (%) × penalty (cycles)
• Today, performance loss = 0.3 × 5 × 40 = 60%
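The rule of thumb follows from dividing the added cycles by the base execution time. The short derivation below assumes, as the slide does, that:
• 15% of instructions are branches
• IPC stays at 2
• every mispredict adds its full penalty P to the execution time

Here N = 1000 instructions, N_br = 150 branches, and r is the mispredict rate.

$$
\begin{aligned}
\text{loss} &= \frac{\text{added cycles}}{\text{base cycles}}
             = \frac{N_{\text{br}} \, r \, P}{N / \text{IPC}}
             = \frac{N_{\text{br}}}{N} \cdot \text{IPC} \cdot r \cdot P \\
            &= 0.15 \times 2 \times r \times P = 0.3 \, r \, P
\end{aligned}
$$

Plugging in:
• r = 0.01 and P = 1 gives 0.003, a 0.3% loss (1.5 extra cycles on 500).
• r = 0.05 and P = 40 gives 0.6, a 60% loss.

If r is entered in percent, as on the slide, the result comes out directly in percent.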

Dynamic Branch Prediction
• Tables of 2-bit counters that get biased towards taken or not-taken
• Can use history (per-branch or global)
• Can have multiple predictors and dynamically pick the more promising one
• Much more in a few weeks…
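The first bullet is sketched below in C: a table of 2-bit saturating counters indexed by low-order PC bits, often called a bimodal predictor. This is a minimal sketch. The table size, the 4-byte instruction alignment, and all names are assumptions. History-based and hybrid predictors are not shown.

```c
#include <stdbool.h>
#include <stdint.h>

#define TABLE_BITS 10                    /* hypothetical: 1024 counters */
#define TABLE_SIZE (1u << TABLE_BITS)

typedef struct {
    uint8_t ctr[TABLE_SIZE];             /* 0,1 = predict not-taken; 2,3 = taken */
} Bimodal;

void bimodal_init(Bimodal *bp)
{
    for (unsigned i = 0; i < TABLE_SIZE; i++)
        bp->ctr[i] = 1;                  /* start weakly not-taken */
}

static unsigned bimodal_index(uint32_t pc)
{
    return (pc >> 2) & (TABLE_SIZE - 1); /* drop the byte offset of 4-byte instrs */
}

bool bimodal_predict(const Bimodal *bp, uint32_t pc)
{
    return bp->ctr[bimodal_index(pc)] >= 2;
}

/* Saturating update toward the actual outcome. */
void bimodal_update(Bimodal *bp, uint32_t pc, bool taken)
{
    uint8_t *c = &bp->ctr[bimodal_index(pc)];
    if (taken && *c < 3)
        (*c)++;
    else if (!taken && *c > 0)
        (*c)--;
}
```

A counter must be wrong twice in a row to flip from strongly taken to predicting not-taken. As a result, a loop branch that falls through once per loop costs one mispredict per loop rather than two.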

Static Branch Prediction
• Profile the application and provide hints to the hardware
• Hardly used in today’s high-performance processors
• Dynamic predictors are much better (Figure 7, Pg. 10)

Branch Fanout
• Execute both directions of a branch: resource requirements grow exponentially with the number of branches followed this way
• Hence, do this until you encounter four branches, after which you employ dynamic branch prediction
• Better still, execute both directions only if the prediction confidence is low
• Not commonly used in today’s processors
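The exponential cost is easy to quantify: each branch followed both ways doubles the number of paths in flight. The hypothetical C sketch below covers the two policies on the slide:
• forking until a fixed number of branches has been forked on
• forking only on low-confidence branches (the confidence flags are assumed to come from some estimator not shown here)

```c
#include <stdbool.h>

/* Paths tracked after n_branches when forking on the first
   fanout_limit of them and predicting the rest. */
unsigned long paths_tracked(int n_branches, int fanout_limit)
{
    int forks = n_branches < fanout_limit ? n_branches : fanout_limit;
    return 1UL << forks;                 /* each fork doubles the paths */
}

/* Confidence-gated variant: fork only on low-confidence branches,
   still stopping after fanout_limit forks. */
unsigned long paths_tracked_gated(const bool *low_confidence,
                                  int n_branches, int fanout_limit)
{
    int forks = 0;
    for (int i = 0; i < n_branches && forks < fanout_limit; i++)
        if (low_confidence[i])
            forks++;                     /* confident branches follow the prediction */
    return 1UL << forks;
}
```

With a limit of four forks, the machine tracks up to 2^4 = 16 paths. Gating on confidence limits the forks to the genuinely uncertain branches.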

Indirect Jumps
• Indirect jumps do not encode the target in the instruction; the target has to be computed
• The address can be predicted by
  – using a table to store the last target
  – using a stack to keep track of subroutine calls and returns (returns are the most common indirect jump)
• The combination achieves 95% prediction rates (Figure 8, Pg. 12)
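The C sketch below shows the two mechanisms: a last-target table for general indirect jumps and a return-address stack for returns. This is a hedged sketch. Table sizes, the 4-byte call instruction, and all function names are assumptions. When the stack overflows it overwrites the oldest entry, so very deep recursion mispredicts some returns.

```c
#include <stdint.h>
#include <string.h>

#define JUMP_TABLE_SIZE 256   /* hypothetical sizes */
#define RAS_DEPTH 16

typedef struct {
    uint32_t last_target[JUMP_TABLE_SIZE];  /* last target seen per jump PC */
    uint32_t ras[RAS_DEPTH];                /* circular return-address stack */
    int top;                                /* index of the next free slot */
    int depth;                              /* live entries, at most RAS_DEPTH */
} IndirectPredictor;

void ip_init(IndirectPredictor *p)
{
    memset(p, 0, sizeof *p);
}

static unsigned jump_index(uint32_t pc)
{
    return (pc >> 2) % JUMP_TABLE_SIZE;     /* assumes 4-byte aligned instructions */
}

/* Indirect jump: predict the last target seen; 0 means "no prediction". */
uint32_t ip_predict_jump(const IndirectPredictor *p, uint32_t pc)
{
    return p->last_target[jump_index(pc)];
}

void ip_update_jump(IndirectPredictor *p, uint32_t pc, uint32_t target)
{
    p->last_target[jump_index(pc)] = target;
}

/* Call: push the return address (assumes a 4-byte call instruction). */
void ip_on_call(IndirectPredictor *p, uint32_t call_pc)
{
    p->ras[p->top] = call_pc + 4;
    p->top = (p->top + 1) % RAS_DEPTH;      /* overwrites the oldest when full */
    if (p->depth < RAS_DEPTH)
        p->depth++;
}

/* Return: pop the predicted target; 0 if the stack is empty. */
uint32_t ip_predict_return(IndirectPredictor *p)
{
    if (p->depth == 0)
        return 0;
    p->top = (p->top + RAS_DEPTH - 1) % RAS_DEPTH;
    p->depth--;
    return p->ras[p->top];
}
```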

Latency
• In the study, every instruction has unit latency, a highly questionable assumption today!
• The study also models other “realistic” latencies
• Parallelism is defined as (cycles for sequential execution) / (cycles for superscalar execution), not as instructions / cycle
• Hence, increasing instruction latency can increase parallelism, which is not true for IPC
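A small worked example (an illustration, not from the paper) shows how the two metrics diverge. Assume:
• 4 independent instructions (N = 4)
• a cycle width of 2
• fully pipelined units
• every instruction has latency L, so T_seq = N·L
• T_par is the superscalar schedule length

With L = 2, two instructions issue in each of the first two cycles, and the last pair completes at the end of cycle 3.

$$
\text{parallelism} = \frac{T_{\text{seq}}}{T_{\text{par}}} = \frac{N L}{T_{\text{par}}},
\qquad
\text{IPC} = \frac{N}{T_{\text{par}}}
$$

$$
\begin{aligned}
L = 1:&\quad T_{\text{seq}} = 4,\; T_{\text{par}} = 2 &&\Rightarrow \text{parallelism} = 2,\; \text{IPC} = 2 \\
L = 2:&\quad T_{\text{seq}} = 8,\; T_{\text{par}} = 3 &&\Rightarrow \text{parallelism} = 8/3 \approx 2.67,\; \text{IPC} = 4/3 \approx 1.33
\end{aligned}
$$

Doubling the latency lengthens the sequential baseline more than the parallel schedule. Parallelism therefore rises while IPC falls.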

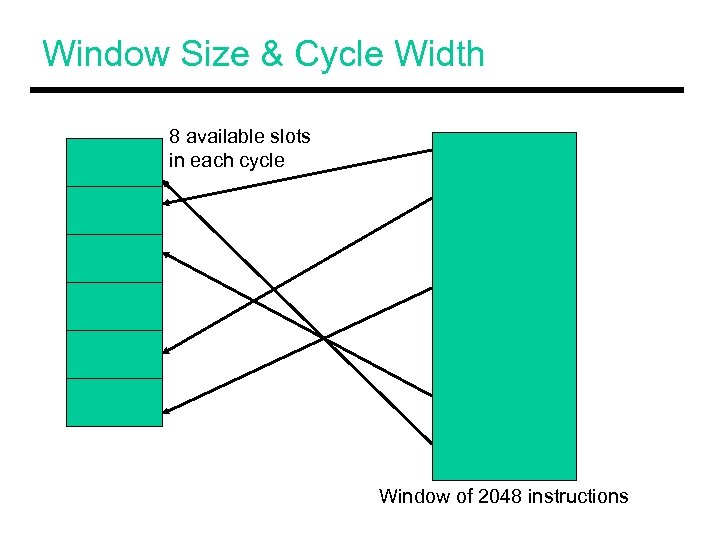

Window Size & Cycle Width (figure): 8 available slots in each cycle; window of 2048 instructions

Window Size & Cycle Width
• Discrete windows: grab 2048 instructions, schedule them, retire all of their cycles, then grab the next window
• Continuous windows: grab 2048 instructions, schedule them, retire the oldest cycle, then grab a few more instructions
• Window size and register renaming are not related
• The study allows infinite windows and cycle widths (an infinite window with a limited cycle width is memory-intensive)
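The hedged C sketch below is a greedy list scheduler with a discrete window. It shows why discrete windows and cycle width matter. It assumes unit latency and RAW-only dependences (perfect renaming). Instructions are taken in program order, and each is placed in the earliest cycle that has a free slot. It is an illustration, not the study's scheduler.

`Instr`, `build_loop_trace()` and `schedule_discrete()` are hypothetical. The trace is the a[i] + b[i] loop from the Perfect Scheduling slide.

```c
#include <stdlib.h>

typedef struct {
    int src[2];   /* indices of producer instructions, -1 if unused */
} Instr;

/* The a[i] + b[i] loop as a trace: two loads, an add, a store per iteration. */
int build_loop_trace(Instr *code, int iters)
{
    int n = 0;
    for (int i = 0; i < iters; i++) {
        int la = n, lb = n + 1;
        code[n++] = (Instr){ { -1, -1 } };     /* load a[i] */
        code[n++] = (Instr){ { -1, -1 } };     /* load b[i] */
        code[n++] = (Instr){ { la, lb } };     /* add */
        code[n] = (Instr){ { n - 1, -1 } };    /* store c[i] (reads the add) */
        n++;
    }
    return n;
}

/* Schedules window_size instructions at a time, at most cycle_width per
   cycle (both must be >= 1). Returns the number of cycles, or -1 if
   allocation fails. */
int schedule_discrete(const Instr *code, int n, int window_size,
                      int cycle_width, int *cycle_of)
{
    /* with unit latency, instruction i never lands later than cycle i */
    int *used = calloc((size_t)n + 1, sizeof *used);  /* slots taken per cycle */
    if (used == NULL)
        return -1;

    int window_start = 0;   /* first cycle the current window may use */
    int last = -1;          /* latest cycle used so far */
    for (int base = 0; base < n; base += window_size) {
        int end = base + window_size < n ? base + window_size : n;
        for (int i = base; i < end; i++) {
            int c = window_start;
            for (int k = 0; k < 2; k++) {
                int p = code[i].src[k];
                if (p >= 0 && cycle_of[p] + 1 > c)
                    c = cycle_of[p] + 1;       /* unit latency */
            }
            while (used[c] == cycle_width)
                c++;                            /* cycle full: try the next one */
            used[c]++;
            cycle_of[i] = c;
            if (c > last)
                last = c;
        }
        window_start = last + 1;   /* discrete: the next window starts after this one */
    }
    free(used);
    return last + 1;
}
```

Results for four loop iterations (16 instructions):
• A 16-instruction window with a cycle width of 64 finishes in 3 cycles.
• Reducing the cycle width to 2 stretches this to 9 cycles.
• A 4-instruction discrete window forces the iterations to run back to back, taking 12 cycles.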

Simulated Models
• Seven models, differing in how they treat control, register, and memory dependences (Figure 11, Pg. 15)
• Today’s processors: ?
• However, note the optimistic scheduling, 2048-instruction window, cycle width of 64, and 1-cycle latencies
• SPEC ’92 benchmarks, utility programs (grep, sed, yacc), CAD tools

Aggressive Models
• Parallelism steadily increases as we move to more aggressive models (Fig. 12, Pg. 16)
• Branch fanout does not buy much
• IPC of the Great model: 10; reality: 1.5
• Numeric programs can do much better

Cycle Width and Window Size
• Unlimited cycle width buys very little (much less than 10%) (Figure 15)
• Decreasing the window size seems to have little effect as well (you need only 256?! Are registers the bottleneck?) (Figure 16)
• Unlimited window size and cycle width don’t help (Figure 18)
• Would these results hold true today?

Memory Latencies
• The ability to prefetch has a huge impact on IPC: to hide a 200-cycle latency, you have to spot the instruction very early
• Hence, registers and window size are extremely important today!
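Here is a back-of-the-envelope estimate, an illustration resting on assumptions rather than a result from the paper. Suppose the machine keeps issuing at a sustained IPC while a load waits out a 200-cycle latency. Then roughly latency × IPC independent instructions must already be in the window. Using the IPC of 2 from the earlier performance example:

$$
N_{\text{ahead}} \approx \text{latency} \times \text{IPC} = 200 \times 2 = 400 \text{ instructions}
$$

Each of those instructions that writes a register also needs a physical register while it is in flight.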

Loop Unrolling
• Should be a non-issue for today’s processors
  – Reduces dynamic instruction count
  – Can help if rename checkpoints are a bottleneck
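Below is a minimal C sketch of 4-way unrolling for the a[i] + b[i] loop from the Perfect Scheduling slide. The function names are hypothetical. The unrolled version executes the increment, compare, and branch once per four elements instead of once per element. This reduces the dynamic instruction count and the number of branches that may each need a rename checkpoint. Modern compilers often unroll such loops automatically.

```c
#include <stddef.h>

/* Original loop: loop overhead (increment, compare, branch) per element. */
void vec_add(const int *a, const int *b, int *c, size_t n)
{
    for (size_t i = 0; i < n; i++)
        c[i] = a[i] + b[i];
}

/* Unrolled by 4: the overhead is paid once per four elements. */
void vec_add_unrolled4(const int *a, const int *b, int *c, size_t n)
{
    size_t i = 0;
    for (; i + 4 <= n; i += 4) {
        c[i]     = a[i]     + b[i];
        c[i + 1] = a[i + 1] + b[i + 1];
        c[i + 2] = a[i + 2] + b[i + 2];
        c[i + 3] = a[i + 3] + b[i + 3];
    }
    for (; i < n; i++)          /* cleanup loop for the remaining 0-3 elements */
        c[i] = a[i] + b[i];
}
```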

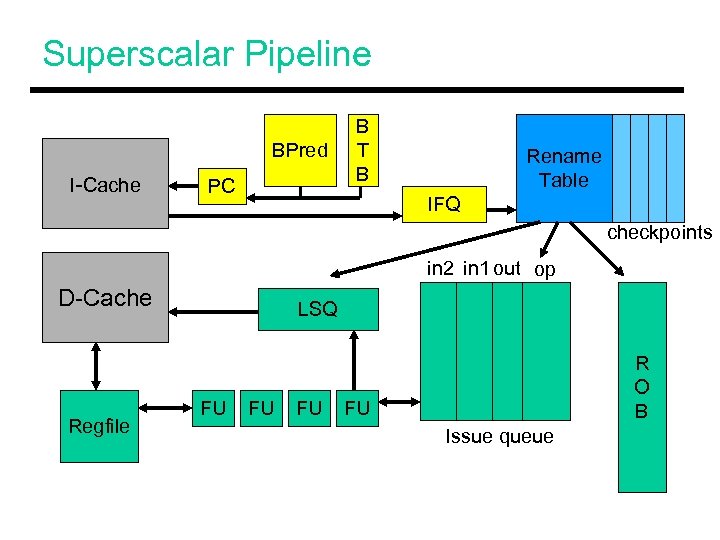

Superscalar Pipeline (figure): PC, branch predictor (BPred), BTB, I-Cache, instruction fetch queue (IFQ), Rename Table with checkpoints, Issue queue (entry fields: in1, in2, out, op), Regfile, functional units (FU), LSQ, D-Cache, ROB

Branch Prediction
• Obviously, better prediction helps (Fig. 22)
• Fanout does not help much (Fig. 24-b): perhaps it is not selecting the right branches?
• Fig. 27 does not show a graph for profiled fanout plus hardware prediction
• Luckily, small tables are good enough for good indirect-jump prediction
• Note: mispredict penalty = 0

Mispredict Penalty
• Has a big impact on performance (see the earlier equation) (Fig. 30)
• Pentium 4 mispredict penalty = 20 cycles + issue-queue wait time

Alias Analysis
• Has a big impact on performance: compiler analysis results in a two-fold speedup (Fig. 32)
• Reality: modern languages make such an analysis very hard
• Later, we’ll read a paper that attempts this in hardware (Chrysos ’98)

Registers
• Results suggest that 64 registers are good enough (Fig. 33)
• Precise exceptions make it difficult to look at a large speculative window with few registers
• Room for improvement in register utilization? (Monreal ’99)

Instruction Latency
• Parallelism is almost unaffected by increased latency (it even increases marginally in some cases!)
• Note the “unconventional” definition of parallelism
• Today, latency strongly influences IPC

Conclusions
• Branch prediction, alias analysis, and mispredict penalty are huge bottlenecks
• Instruction latency, registers, window size, and cycle width are not huge bottlenecks
• Today, they are all huge bottlenecks because they all influence effective memory latency… which is the biggest bottleneck

Questions
• Is it worth doing multi-issue?
• Is there more ILP left to extract?
• What would some of these graphs look like today?
• Weaknesses: the cache and register models
• Lessons for today’s processors: is it worth doing multi-issue? Parallelism has gone down

Next Week’s Paper
• “Complexity-Effective Superscalar Processors”, Palacharla, Jouppi, Smith, ISCA ’97
• The impact of increased issue width and window size on clock speed
