05819284d5263355840840bb786804bf.ppt

- Количество слайдов: 68

CS 760 – Machine Learning • Course Instructor: David Page • • • email: dpage@cs. wisc. edu office: MSC 6743 (University & Charter) hours: TBA • Teaching Assistant: Daniel Wong • • • email: wong@cs. wisc. edu office: TBA hours: TBA © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 1

CS 760 – Machine Learning • Course Instructor: David Page • • • email: dpage@cs. wisc. edu office: MSC 6743 (University & Charter) hours: TBA • Teaching Assistant: Daniel Wong • • • email: wong@cs. wisc. edu office: TBA hours: TBA © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 1

Textbooks & Reading Assignment • Machine Learning (Tom Mitchell) • Selected on-line readings • Read in Mitchell (posted on class web page) • • Preface Chapter 1 Sections 2. 1 and 2. 2 Chapter 8 © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 2

Textbooks & Reading Assignment • Machine Learning (Tom Mitchell) • Selected on-line readings • Read in Mitchell (posted on class web page) • • Preface Chapter 1 Sections 2. 1 and 2. 2 Chapter 8 © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 2

Monday, Wednesday, and Friday? • We’ll meet 30 times this term (may or may not include exam in this count) • We’ll meet on FRIDAY this and next week, in order to cover material for HW 1 (plus I have some business travel this term) • Default: we WILL meet on Friday unless I announce otherwise © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 3

Monday, Wednesday, and Friday? • We’ll meet 30 times this term (may or may not include exam in this count) • We’ll meet on FRIDAY this and next week, in order to cover material for HW 1 (plus I have some business travel this term) • Default: we WILL meet on Friday unless I announce otherwise © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 3

Course "Style" • Primarily algorithmic & experimental • Some theory, both mathematical & conceptual (much on statistics) • "Hands on" experience, interactive lectures/discussions • Broad survey of many ML subfields, including • • • © Jude Shavlik 2006, David Page 2007 "symbolic" (rules, decision trees, ILP) "connectionist" (neural nets) support vector machines, nearest-neighbors theoretical ("COLT") statistical ("Bayes rule") reinforcement learning, genetic algorithms CS 760 – Machine Learning (UW-Madison) 4

Course "Style" • Primarily algorithmic & experimental • Some theory, both mathematical & conceptual (much on statistics) • "Hands on" experience, interactive lectures/discussions • Broad survey of many ML subfields, including • • • © Jude Shavlik 2006, David Page 2007 "symbolic" (rules, decision trees, ILP) "connectionist" (neural nets) support vector machines, nearest-neighbors theoretical ("COLT") statistical ("Bayes rule") reinforcement learning, genetic algorithms CS 760 – Machine Learning (UW-Madison) 4

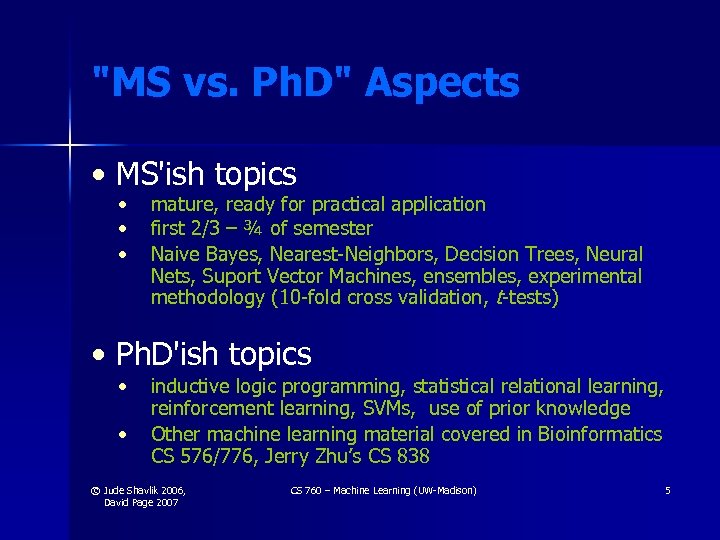

"MS vs. Ph. D" Aspects • MS'ish topics • • • mature, ready for practical application first 2/3 – ¾ of semester Naive Bayes, Nearest-Neighbors, Decision Trees, Neural Nets, Suport Vector Machines, ensembles, experimental methodology (10 -fold cross validation, t-tests) • Ph. D'ish topics • • inductive logic programming, statistical relational learning, reinforcement learning, SVMs, use of prior knowledge Other machine learning material covered in Bioinformatics CS 576/776, Jerry Zhu’s CS 838 © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 5

"MS vs. Ph. D" Aspects • MS'ish topics • • • mature, ready for practical application first 2/3 – ¾ of semester Naive Bayes, Nearest-Neighbors, Decision Trees, Neural Nets, Suport Vector Machines, ensembles, experimental methodology (10 -fold cross validation, t-tests) • Ph. D'ish topics • • inductive logic programming, statistical relational learning, reinforcement learning, SVMs, use of prior knowledge Other machine learning material covered in Bioinformatics CS 576/776, Jerry Zhu’s CS 838 © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 5

Two Major Goals • to understand what a learning system should do • to understand how (and how well) existing systems work • Issues in algorithm design • Choosing algorithms for applications © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 6

Two Major Goals • to understand what a learning system should do • to understand how (and how well) existing systems work • Issues in algorithm design • Choosing algorithms for applications © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 6

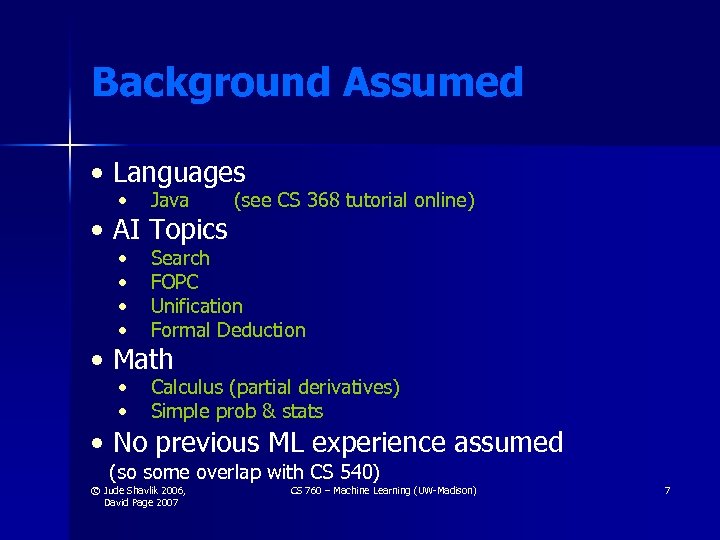

Background Assumed • Languages • Java • • Search FOPC Unification Formal Deduction • • Calculus (partial derivatives) Simple prob & stats • AI Topics (see CS 368 tutorial online) • Math • No previous ML experience assumed (so some overlap with CS 540) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 7

Background Assumed • Languages • Java • • Search FOPC Unification Formal Deduction • • Calculus (partial derivatives) Simple prob & stats • AI Topics (see CS 368 tutorial online) • Math • No previous ML experience assumed (so some overlap with CS 540) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 7

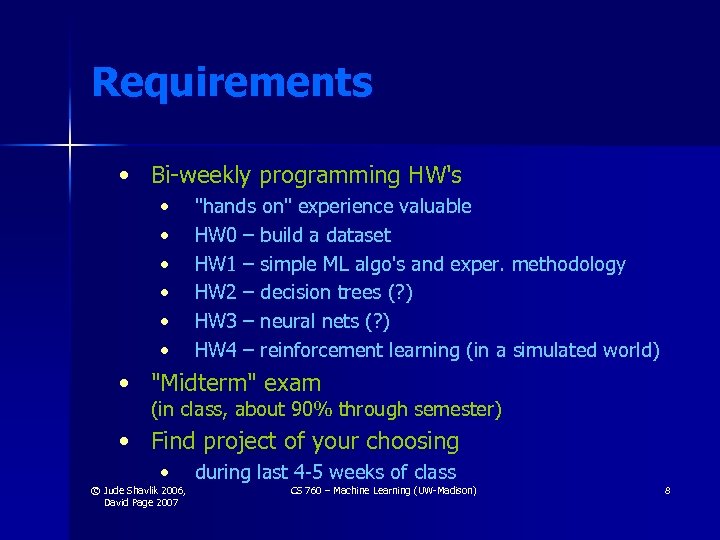

Requirements • Bi-weekly programming HW's • • • "hands on" experience valuable HW 0 – build a dataset HW 1 – simple ML algo's and exper. methodology HW 2 – decision trees (? ) HW 3 – neural nets (? ) HW 4 – reinforcement learning (in a simulated world) • "Midterm" exam (in class, about 90% through semester) • Find project of your choosing • © Jude Shavlik 2006, David Page 2007 during last 4 -5 weeks of class CS 760 – Machine Learning (UW-Madison) 8

Requirements • Bi-weekly programming HW's • • • "hands on" experience valuable HW 0 – build a dataset HW 1 – simple ML algo's and exper. methodology HW 2 – decision trees (? ) HW 3 – neural nets (? ) HW 4 – reinforcement learning (in a simulated world) • "Midterm" exam (in class, about 90% through semester) • Find project of your choosing • © Jude Shavlik 2006, David Page 2007 during last 4 -5 weeks of class CS 760 – Machine Learning (UW-Madison) 8

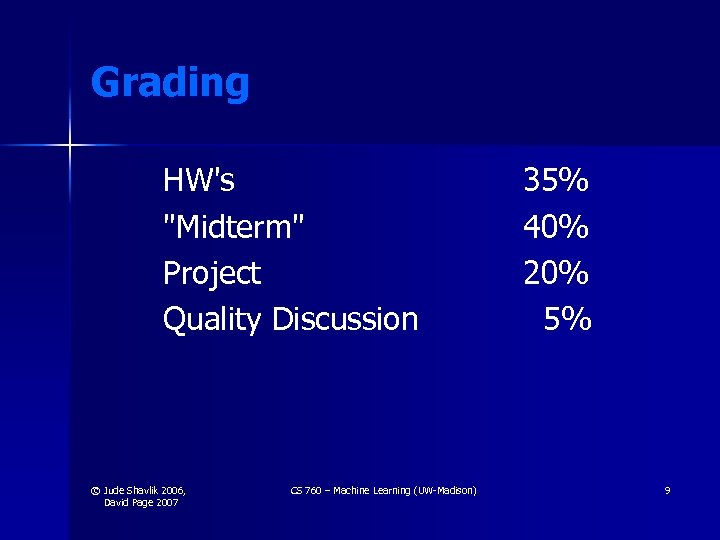

Grading HW's "Midterm" Project Quality Discussion © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 35% 40% 20% 5% 9

Grading HW's "Midterm" Project Quality Discussion © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 35% 40% 20% 5% 9

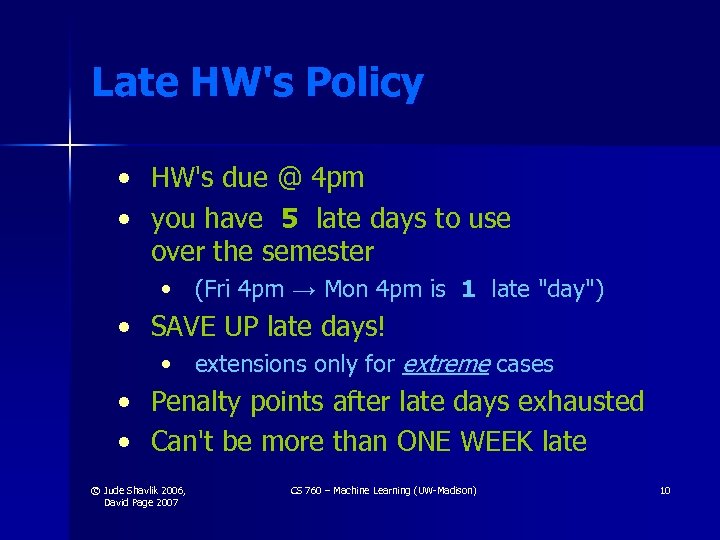

Late HW's Policy • • HW's due @ 4 pm you have 5 late days to use over the semester • (Fri 4 pm → Mon 4 pm is 1 late "day") • SAVE UP late days! • extensions only for extreme cases • • Penalty points after late days exhausted Can't be more than ONE WEEK late © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 10

Late HW's Policy • • HW's due @ 4 pm you have 5 late days to use over the semester • (Fri 4 pm → Mon 4 pm is 1 late "day") • SAVE UP late days! • extensions only for extreme cases • • Penalty points after late days exhausted Can't be more than ONE WEEK late © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 10

Academic Misconduct (also on course homepage) All examinations, programming assignments, and written homeworks must be done individually. Cheating and plagiarism will be dealt with in accordance with University procedures (see the Academic Misconduct Guide for Students). Hence, for example, code for programming assignments must not be developed in groups, nor should code be shared. You are encouraged to discuss with your peers, the TAs or the instructor ideas, approaches and techniques broadly, but not at a level of detail where specific implementation issues are described by anyone. If you have any questions on this, please ask the instructor before you act. © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 11

Academic Misconduct (also on course homepage) All examinations, programming assignments, and written homeworks must be done individually. Cheating and plagiarism will be dealt with in accordance with University procedures (see the Academic Misconduct Guide for Students). Hence, for example, code for programming assignments must not be developed in groups, nor should code be shared. You are encouraged to discuss with your peers, the TAs or the instructor ideas, approaches and techniques broadly, but not at a level of detail where specific implementation issues are described by anyone. If you have any questions on this, please ask the instructor before you act. © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 11

What Do You Think Learning Means? © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 12

What Do You Think Learning Means? © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 12

What is Learning? “Learning denotes changes in the system that … enable the system to do the same task … more effectively the next time. ” - Herbert Simon “Learning is making useful changes in our minds. ” - Marvin Minsky © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 13

What is Learning? “Learning denotes changes in the system that … enable the system to do the same task … more effectively the next time. ” - Herbert Simon “Learning is making useful changes in our minds. ” - Marvin Minsky © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 13

Today’s Topics • • Memorization as Learning Feature Space Supervised ML K-NN (K-Nearest Neighbor) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 14

Today’s Topics • • Memorization as Learning Feature Space Supervised ML K-NN (K-Nearest Neighbor) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 14

Memorization (Rote Learning) • Employed by first machine learning systems, in 1950 s • Samuel’s Checkers program • Michie’s MENACE: Matchbox Educable Naughts and Crosses Engine • Prior to these, some people believed computers could not improve at a task with experience © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 15

Memorization (Rote Learning) • Employed by first machine learning systems, in 1950 s • Samuel’s Checkers program • Michie’s MENACE: Matchbox Educable Naughts and Crosses Engine • Prior to these, some people believed computers could not improve at a task with experience © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 15

Rote Learning is Limited • Memorize I/O pairs and perform exact matching with new inputs • If computer has not seen precise case before, it cannot apply its experience • Want computer to “generalize” from prior experience © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 16

Rote Learning is Limited • Memorize I/O pairs and perform exact matching with new inputs • If computer has not seen precise case before, it cannot apply its experience • Want computer to “generalize” from prior experience © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 16

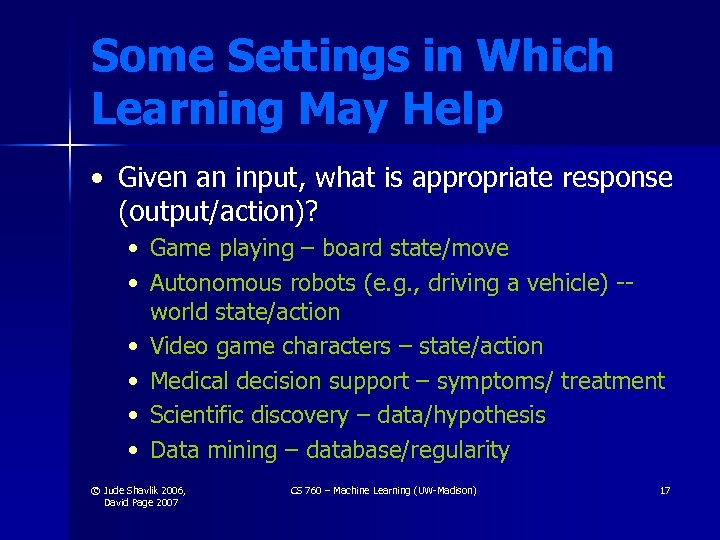

Some Settings in Which Learning May Help • Given an input, what is appropriate response (output/action)? • Game playing – board state/move • Autonomous robots (e. g. , driving a vehicle) -world state/action • Video game characters – state/action • Medical decision support – symptoms/ treatment • Scientific discovery – data/hypothesis • Data mining – database/regularity © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 17

Some Settings in Which Learning May Help • Given an input, what is appropriate response (output/action)? • Game playing – board state/move • Autonomous robots (e. g. , driving a vehicle) -world state/action • Video game characters – state/action • Medical decision support – symptoms/ treatment • Scientific discovery – data/hypothesis • Data mining – database/regularity © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 17

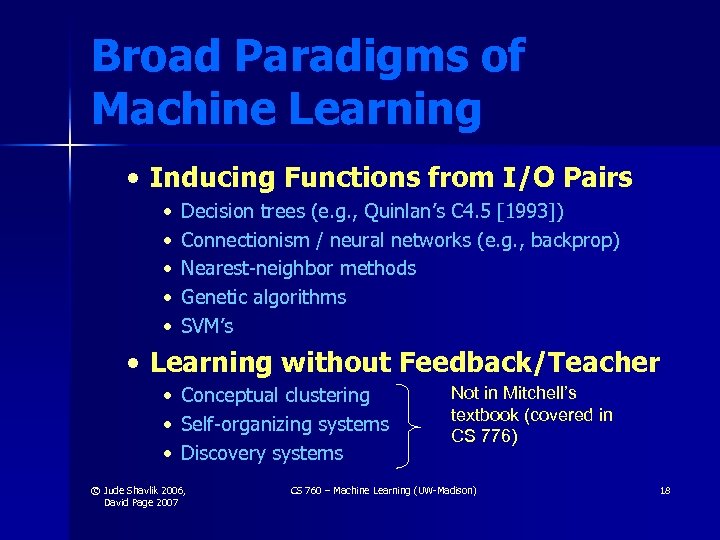

Broad Paradigms of Machine Learning • Inducing Functions from I/O Pairs • • • Decision trees (e. g. , Quinlan’s C 4. 5 [1993]) Connectionism / neural networks (e. g. , backprop) Nearest-neighbor methods Genetic algorithms SVM’s • Learning without Feedback/Teacher • • • Conceptual clustering Self-organizing systems Discovery systems © Jude Shavlik 2006, David Page 2007 Not in Mitchell’s textbook (covered in CS 776) CS 760 – Machine Learning (UW-Madison) 18

Broad Paradigms of Machine Learning • Inducing Functions from I/O Pairs • • • Decision trees (e. g. , Quinlan’s C 4. 5 [1993]) Connectionism / neural networks (e. g. , backprop) Nearest-neighbor methods Genetic algorithms SVM’s • Learning without Feedback/Teacher • • • Conceptual clustering Self-organizing systems Discovery systems © Jude Shavlik 2006, David Page 2007 Not in Mitchell’s textbook (covered in CS 776) CS 760 – Machine Learning (UW-Madison) 18

IID (Completion of Lec #2) • We are assuming examples are IID: independently identically distributed • Eg, we are ignoring temporal dependencies (covered in time-series learning) • Eg, we assume the learner has no say in which examples it gets (covered in active learning) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 19

IID (Completion of Lec #2) • We are assuming examples are IID: independently identically distributed • Eg, we are ignoring temporal dependencies (covered in time-series learning) • Eg, we assume the learner has no say in which examples it gets (covered in active learning) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 19

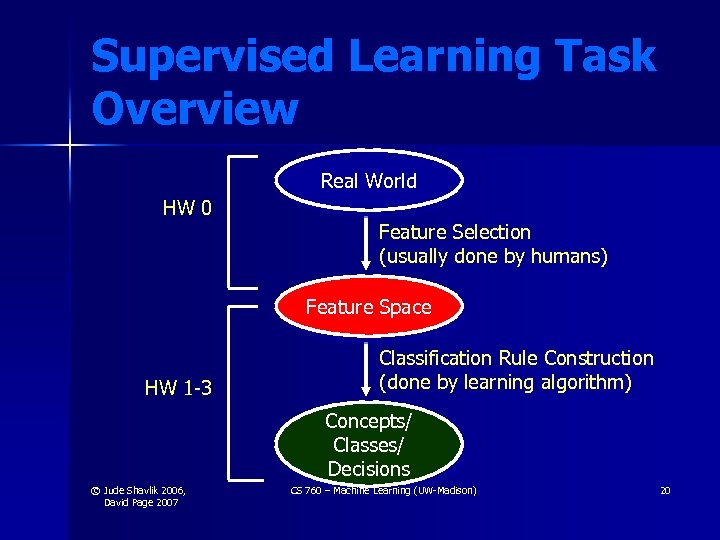

Supervised Learning Task Overview Real World HW 0 Feature Selection (usually done by humans) Feature Space HW 1 -3 Classification Rule Construction (done by learning algorithm) Concepts/ Classes/ Decisions © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 20

Supervised Learning Task Overview Real World HW 0 Feature Selection (usually done by humans) Feature Space HW 1 -3 Classification Rule Construction (done by learning algorithm) Concepts/ Classes/ Decisions © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 20

Supervised Learning Task Overview (cont. ) • Note: mappings on previous slide are not necessarily 1 -to-1 • Bad for first mapping? • Good for the second (in fact, it’s the goal!) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 21

Supervised Learning Task Overview (cont. ) • Note: mappings on previous slide are not necessarily 1 -to-1 • Bad for first mapping? • Good for the second (in fact, it’s the goal!) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 21

Empirical Learning: Task Definition • Given • A collection of positive examples of some concept/class/category (i. e. , members of the class) and, possibly, a collection of the negative examples (i. e. , nonmembers) • Produce • A description that covers (includes) all/most of the positive examples and non/few of the negative examples The Key (and, hopefully, properly categorizes most future examples!) Point! Note: one can easily extend this definition to handle more than two classes © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 22

Empirical Learning: Task Definition • Given • A collection of positive examples of some concept/class/category (i. e. , members of the class) and, possibly, a collection of the negative examples (i. e. , nonmembers) • Produce • A description that covers (includes) all/most of the positive examples and non/few of the negative examples The Key (and, hopefully, properly categorizes most future examples!) Point! Note: one can easily extend this definition to handle more than two classes © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 22

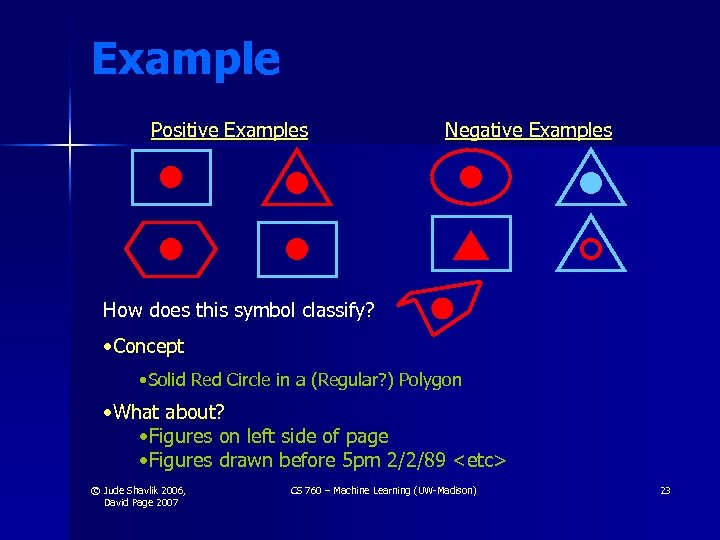

Example Positive Examples Negative Examples How does this symbol classify? • Concept • Solid Red Circle in a (Regular? ) Polygon • What about? • Figures on left side of page • Figures drawn before 5 pm 2/2/89

Example Positive Examples Negative Examples How does this symbol classify? • Concept • Solid Red Circle in a (Regular? ) Polygon • What about? • Figures on left side of page • Figures drawn before 5 pm 2/2/89

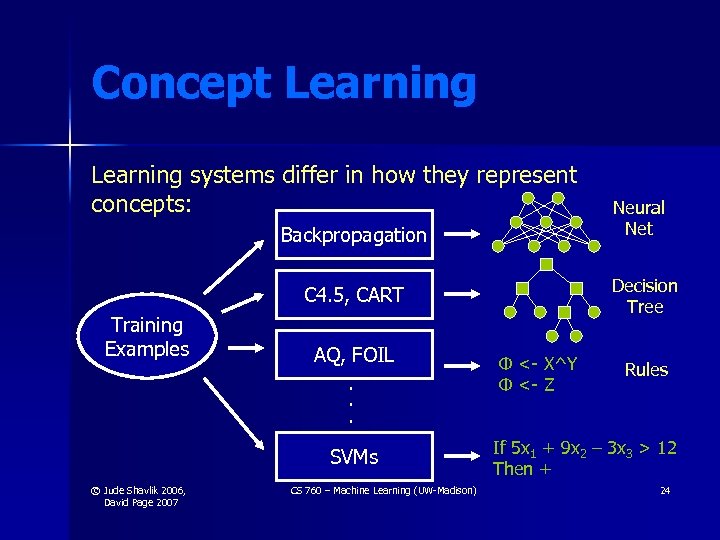

Concept Learning systems differ in how they represent concepts: Backpropagation Decision Tree C 4. 5, CART Training Examples AQ, FOIL. . . SVMs © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) Neural Net Φ <- X^Y Φ <- Z Rules If 5 x 1 + 9 x 2 – 3 x 3 > 12 Then + 24

Concept Learning systems differ in how they represent concepts: Backpropagation Decision Tree C 4. 5, CART Training Examples AQ, FOIL. . . SVMs © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) Neural Net Φ <- X^Y Φ <- Z Rules If 5 x 1 + 9 x 2 – 3 x 3 > 12 Then + 24

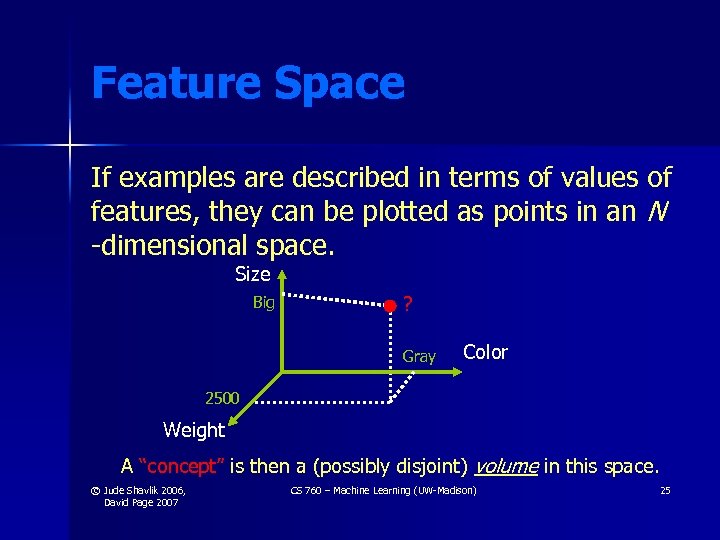

Feature Space If examples are described in terms of values of features, they can be plotted as points in an N -dimensional space. Size Big ? Gray Color 2500 Weight A “concept” is then a (possibly disjoint) volume in this space. © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 25

Feature Space If examples are described in terms of values of features, they can be plotted as points in an N -dimensional space. Size Big ? Gray Color 2500 Weight A “concept” is then a (possibly disjoint) volume in this space. © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 25

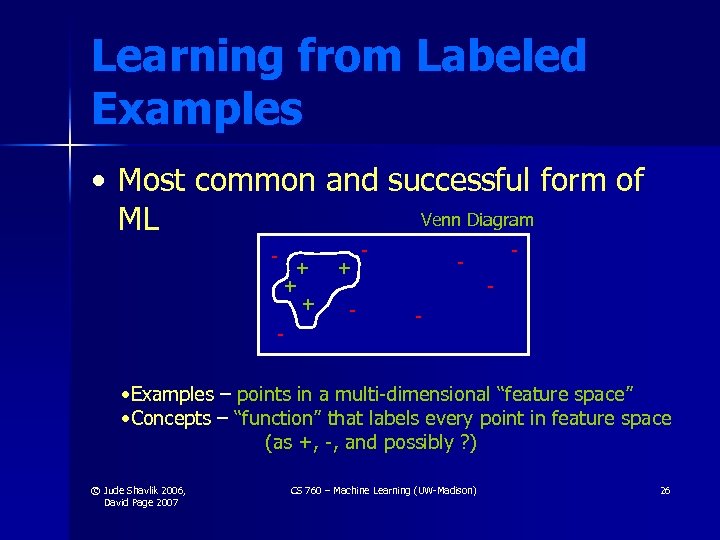

Learning from Labeled Examples • Most common and successful form of Venn Diagram ML - + + + - - - - • Examples – points in a multi-dimensional “feature space” • Concepts – “function” that labels every point in feature space (as +, -, and possibly ? ) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 26

Learning from Labeled Examples • Most common and successful form of Venn Diagram ML - + + + - - - - • Examples – points in a multi-dimensional “feature space” • Concepts – “function” that labels every point in feature space (as +, -, and possibly ? ) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 26

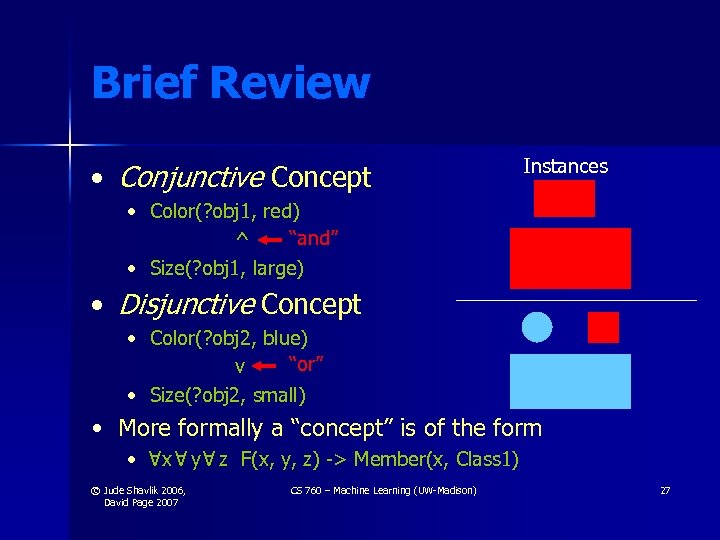

Brief Review • Conjunctive Concept Instances • Color(? obj 1, red) “and” ^ • Size(? obj 1, large) • Disjunctive Concept • Color(? obj 2, blue) “or” v • Size(? obj 2, small) • More formally a “concept” is of the form x y z F(x, y, z) -> Member(x, Class 1) A A A • © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 27

Brief Review • Conjunctive Concept Instances • Color(? obj 1, red) “and” ^ • Size(? obj 1, large) • Disjunctive Concept • Color(? obj 2, blue) “or” v • Size(? obj 2, small) • More formally a “concept” is of the form x y z F(x, y, z) -> Member(x, Class 1) A A A • © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 27

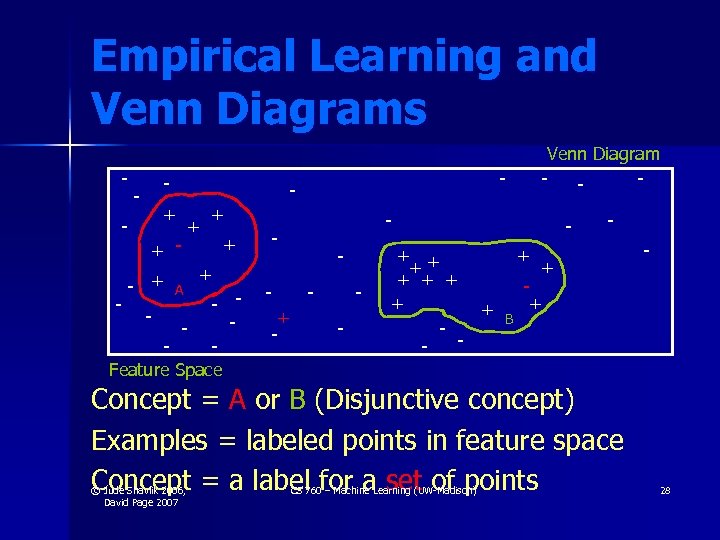

Empirical Learning and Venn Diagrams Venn Diagram - - + + - - + A - + + + - - Feature Space - - - + + + + - - + + B - - + + - Concept = A or B (Disjunctive concept) Examples = labeled points in feature space Concept = a label for a set of points © Jude Shavlik 2006, David Page 2007 - CS 760 – Machine Learning (UW-Madison) 28

Empirical Learning and Venn Diagrams Venn Diagram - - + + - - + A - + + + - - Feature Space - - - + + + + - - + + B - - + + - Concept = A or B (Disjunctive concept) Examples = labeled points in feature space Concept = a label for a set of points © Jude Shavlik 2006, David Page 2007 - CS 760 – Machine Learning (UW-Madison) 28

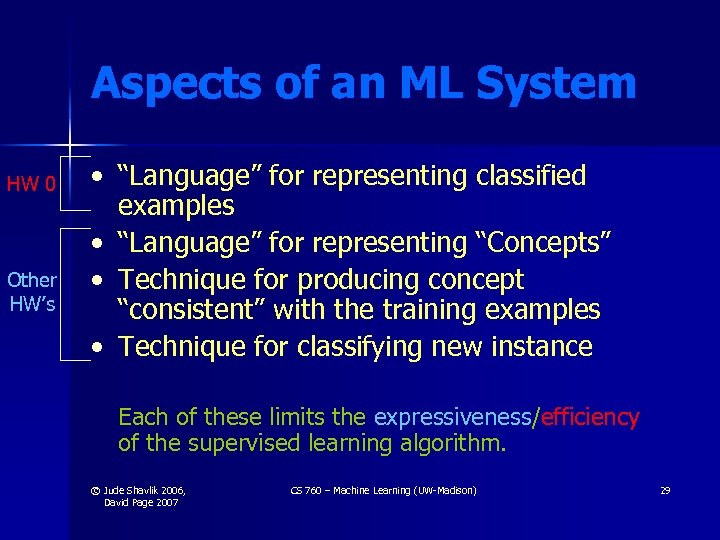

Aspects of an ML System HW 0 Other HW’s • “Language” for representing classified examples • “Language” for representing “Concepts” • Technique for producing concept “consistent” with the training examples • Technique for classifying new instance Each of these limits the expressiveness/efficiency of the supervised learning algorithm. © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 29

Aspects of an ML System HW 0 Other HW’s • “Language” for representing classified examples • “Language” for representing “Concepts” • Technique for producing concept “consistent” with the training examples • Technique for classifying new instance Each of these limits the expressiveness/efficiency of the supervised learning algorithm. © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 29

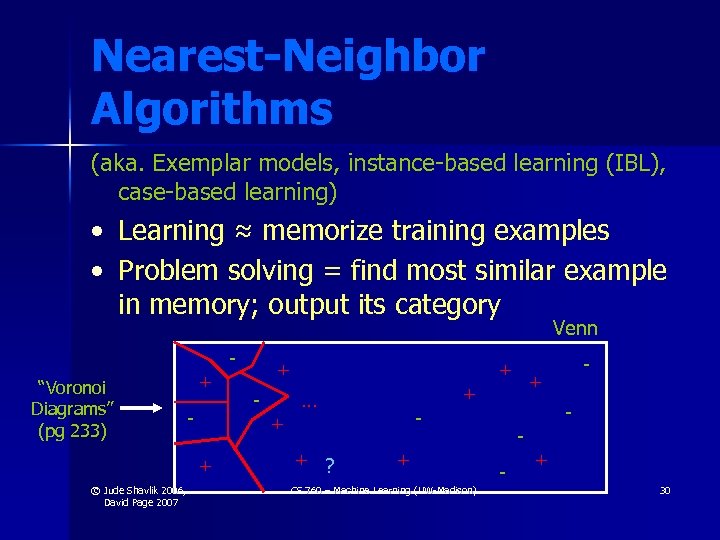

Nearest-Neighbor Algorithms (aka. Exemplar models, instance-based learning (IBL), case-based learning) • Learning ≈ memorize training examples • Problem solving = find most similar example in memory; output its category Venn “Voronoi Diagrams” (pg 233) + + © Jude Shavlik 2006, David Page 2007 - + + + ? + + … + CS 760 – Machine Learning (UW-Madison) - - + 30

Nearest-Neighbor Algorithms (aka. Exemplar models, instance-based learning (IBL), case-based learning) • Learning ≈ memorize training examples • Problem solving = find most similar example in memory; output its category Venn “Voronoi Diagrams” (pg 233) + + © Jude Shavlik 2006, David Page 2007 - + + + ? + + … + CS 760 – Machine Learning (UW-Madison) - - + 30

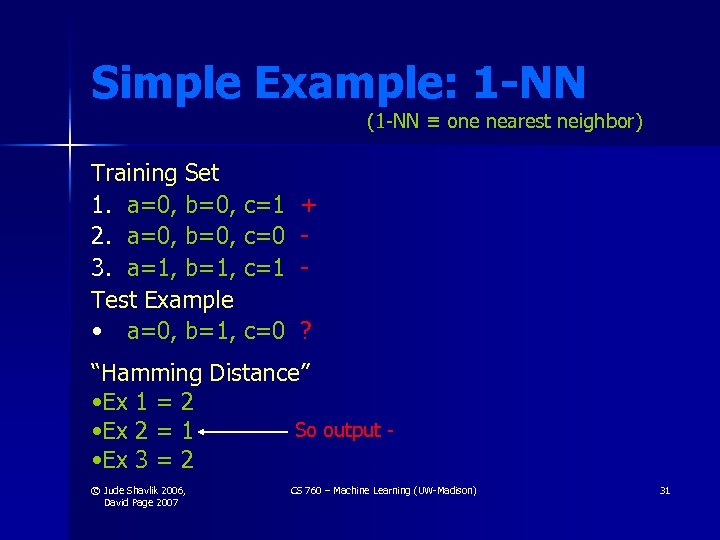

Simple Example: 1 -NN (1 -NN ≡ one nearest neighbor) Training Set 1. a=0, b=0, c=1 2. a=0, b=0, c=0 3. a=1, b=1, c=1 Test Example • a=0, b=1, c=0 + ? “Hamming Distance” • Ex 1 = 2 So output • Ex 2 = 1 • Ex 3 = 2 © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 31

Simple Example: 1 -NN (1 -NN ≡ one nearest neighbor) Training Set 1. a=0, b=0, c=1 2. a=0, b=0, c=0 3. a=1, b=1, c=1 Test Example • a=0, b=1, c=0 + ? “Hamming Distance” • Ex 1 = 2 So output • Ex 2 = 1 • Ex 3 = 2 © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 31

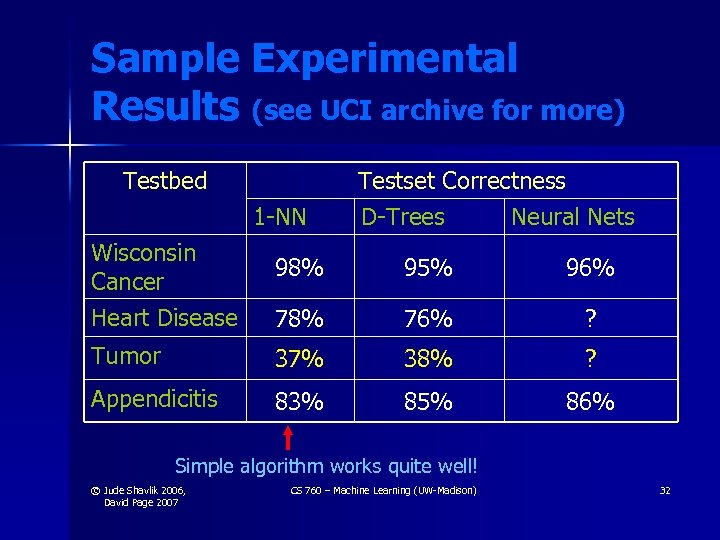

Sample Experimental Results (see UCI archive for more) Testbed 1 -NN Wisconsin Cancer Heart Disease Testset Correctness D-Trees Neural Nets 98% 95% 96% 78% 76% ? Tumor 37% 38% ? Appendicitis 83% 85% 86% Simple algorithm works quite well! © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 32

Sample Experimental Results (see UCI archive for more) Testbed 1 -NN Wisconsin Cancer Heart Disease Testset Correctness D-Trees Neural Nets 98% 95% 96% 78% 76% ? Tumor 37% 38% ? Appendicitis 83% 85% 86% Simple algorithm works quite well! © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 32

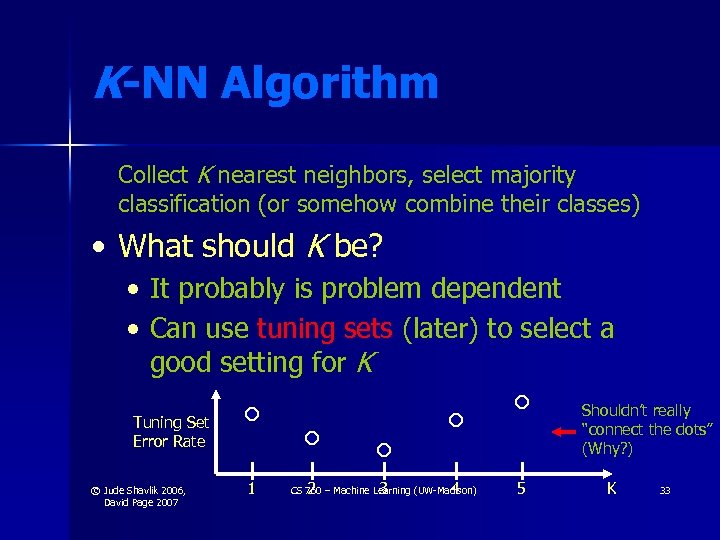

K-NN Algorithm Collect K nearest neighbors, select majority classification (or somehow combine their classes) • What should K be? • It probably is problem dependent • Can use tuning sets (later) to select a good setting for K Shouldn’t really “connect the dots” (Why? ) Tuning Set Error Rate © Jude Shavlik 2006, David Page 2007 1 2 3 4 CS 760 – Machine Learning (UW-Madison) 5 K 33

K-NN Algorithm Collect K nearest neighbors, select majority classification (or somehow combine their classes) • What should K be? • It probably is problem dependent • Can use tuning sets (later) to select a good setting for K Shouldn’t really “connect the dots” (Why? ) Tuning Set Error Rate © Jude Shavlik 2006, David Page 2007 1 2 3 4 CS 760 – Machine Learning (UW-Madison) 5 K 33

Data Representation • Creating a dataset of fixed length feature vectors • Be sure to include – on separate 8 x 11 sheet – a photo and a brief bio • HW 0 out on-line • Due next Friday © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 34

Data Representation • Creating a dataset of fixed length feature vectors • Be sure to include – on separate 8 x 11 sheet – a photo and a brief bio • HW 0 out on-line • Due next Friday © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 34

HW 0 – Create Your Own Dataset (repeated from lecture #1) • Think about before next class • Read HW 0 (on-line) • Google to find: • UCI archive (or UCI KDD archive) • UCI ML archive (UCI ML repository) • More links in HW 0’s web page © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 35

HW 0 – Create Your Own Dataset (repeated from lecture #1) • Think about before next class • Read HW 0 (on-line) • Google to find: • UCI archive (or UCI KDD archive) • UCI ML archive (UCI ML repository) • More links in HW 0’s web page © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 35

HW 0 – Your “Personal Concept” • Step 1: Choose a Boolean (true/false) concept • Books I like/dislike Movies I like/dislike www pages I like/dislike • Subjective judgment (can’t articulate) • “time will tell” concepts • Stocks to buy • Medical treatment • at time t, predict outcome at time (t +∆t) • Sensory interpretation • • • Face recognition (see textbook) Handwritten digit recognition Sound recognition • Hard-to-Program Functions © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 36

HW 0 – Your “Personal Concept” • Step 1: Choose a Boolean (true/false) concept • Books I like/dislike Movies I like/dislike www pages I like/dislike • Subjective judgment (can’t articulate) • “time will tell” concepts • Stocks to buy • Medical treatment • at time t, predict outcome at time (t +∆t) • Sensory interpretation • • • Face recognition (see textbook) Handwritten digit recognition Sound recognition • Hard-to-Program Functions © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 36

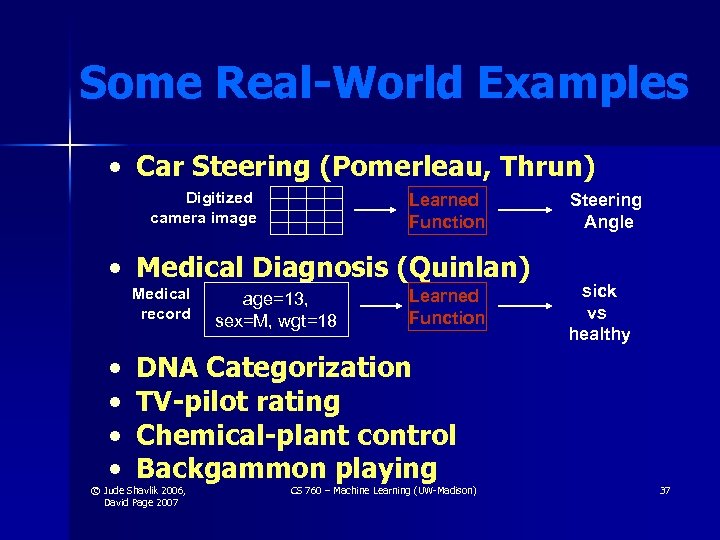

Some Real-World Examples • Car Steering (Pomerleau, Thrun) Digitized camera image Learned Function • Medical Diagnosis (Quinlan) Medical record • • age=13, sex=M, wgt=18 Learned Function DNA Categorization TV-pilot rating Chemical-plant control Backgammon playing © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) Steering Angle sick vs healthy 37

Some Real-World Examples • Car Steering (Pomerleau, Thrun) Digitized camera image Learned Function • Medical Diagnosis (Quinlan) Medical record • • age=13, sex=M, wgt=18 Learned Function DNA Categorization TV-pilot rating Chemical-plant control Backgammon playing © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) Steering Angle sick vs healthy 37

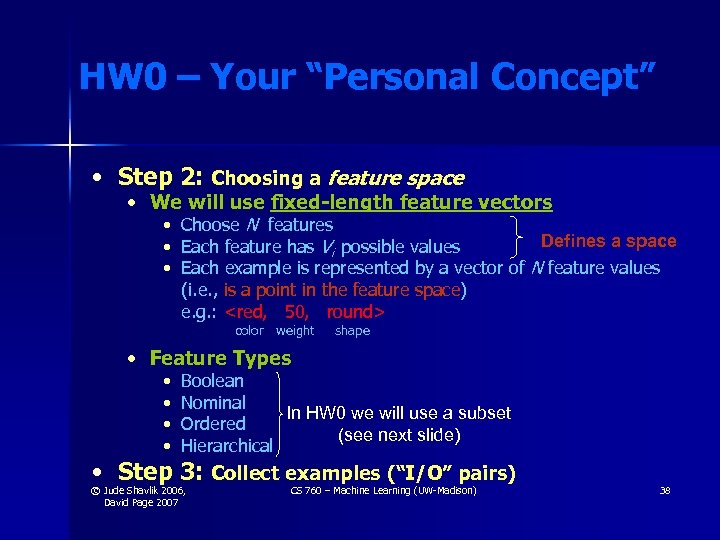

HW 0 – Your “Personal Concept” • Step 2: Choosing a feature space • We will use fixed-length feature vectors • • • Choose N features Defines a space Each feature has Vi possible values Each example is represented by a vector of N feature values (i. e. , is a point in the feature space) e. g. :

HW 0 – Your “Personal Concept” • Step 2: Choosing a feature space • We will use fixed-length feature vectors • • • Choose N features Defines a space Each feature has Vi possible values Each example is represented by a vector of N feature values (i. e. , is a point in the feature space) e. g. :

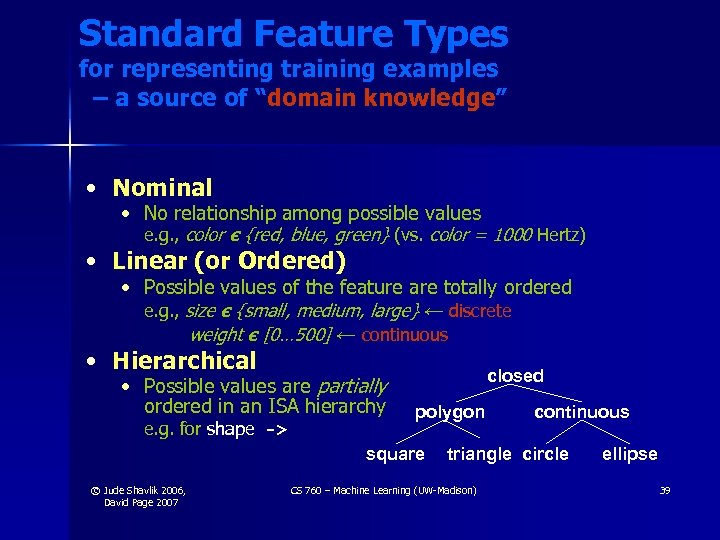

Standard Feature Types for representing training examples – a source of “domain knowledge” • Nominal • No relationship among possible values e. g. , color є {red, blue, green} (vs. color = 1000 Hertz) • Linear (or Ordered) • Possible values of the feature are totally ordered e. g. , size є {small, medium, large} ← discrete weight є [0… 500] ← continuous • Hierarchical • Possible values are partially ordered in an ISA hierarchy e. g. for shape -> closed polygon square © Jude Shavlik 2006, David Page 2007 continuous triangle circle CS 760 – Machine Learning (UW-Madison) ellipse 39

Standard Feature Types for representing training examples – a source of “domain knowledge” • Nominal • No relationship among possible values e. g. , color є {red, blue, green} (vs. color = 1000 Hertz) • Linear (or Ordered) • Possible values of the feature are totally ordered e. g. , size є {small, medium, large} ← discrete weight є [0… 500] ← continuous • Hierarchical • Possible values are partially ordered in an ISA hierarchy e. g. for shape -> closed polygon square © Jude Shavlik 2006, David Page 2007 continuous triangle circle CS 760 – Machine Learning (UW-Madison) ellipse 39

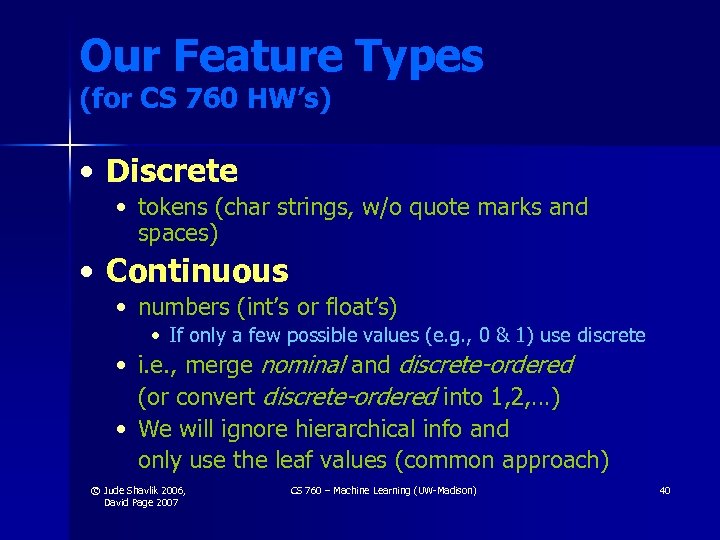

Our Feature Types (for CS 760 HW’s) • Discrete • tokens (char strings, w/o quote marks and spaces) • Continuous • numbers (int’s or float’s) • If only a few possible values (e. g. , 0 & 1) use discrete • i. e. , merge nominal and discrete-ordered (or convert discrete-ordered into 1, 2, …) • We will ignore hierarchical info and only use the leaf values (common approach) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 40

Our Feature Types (for CS 760 HW’s) • Discrete • tokens (char strings, w/o quote marks and spaces) • Continuous • numbers (int’s or float’s) • If only a few possible values (e. g. , 0 & 1) use discrete • i. e. , merge nominal and discrete-ordered (or convert discrete-ordered into 1, 2, …) • We will ignore hierarchical info and only use the leaf values (common approach) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 40

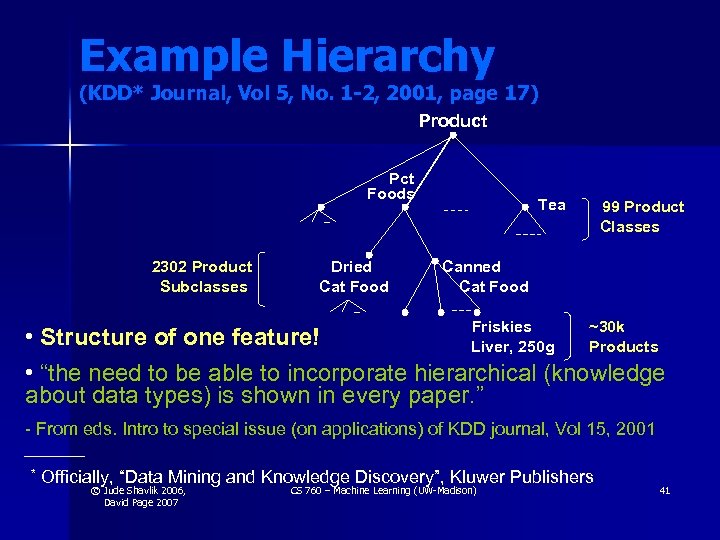

Example Hierarchy (KDD* Journal, Vol 5, No. 1 -2, 2001, page 17) Product Pct Foods 2302 Product Subclasses Dried Cat Food Tea 99 Product Classes Canned Cat Food Friskies ~30 k • Structure of one feature! Liver, 250 g Products • “the need to be able to incorporate hierarchical (knowledge about data types) is shown in every paper. ” - From eds. Intro to special issue (on applications) of KDD journal, Vol 15, 2001 * Officially, “Data Mining and Knowledge Discovery”, Kluwer Publishers © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 41

Example Hierarchy (KDD* Journal, Vol 5, No. 1 -2, 2001, page 17) Product Pct Foods 2302 Product Subclasses Dried Cat Food Tea 99 Product Classes Canned Cat Food Friskies ~30 k • Structure of one feature! Liver, 250 g Products • “the need to be able to incorporate hierarchical (knowledge about data types) is shown in every paper. ” - From eds. Intro to special issue (on applications) of KDD journal, Vol 15, 2001 * Officially, “Data Mining and Knowledge Discovery”, Kluwer Publishers © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 41

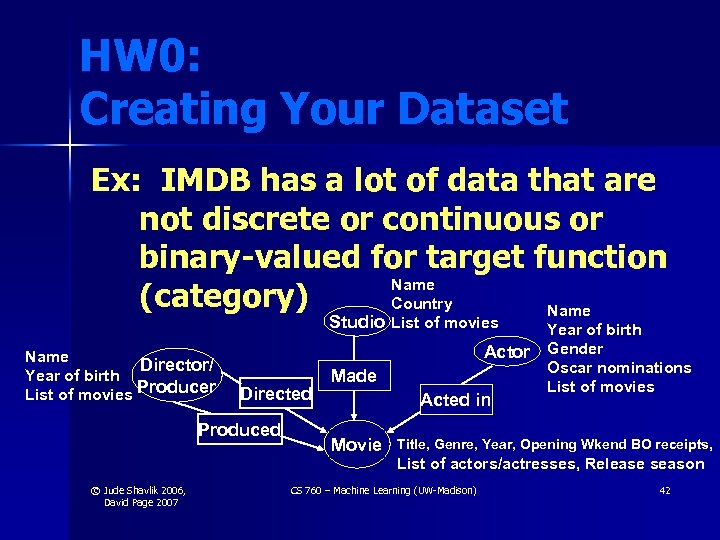

HW 0: Creating Your Dataset Ex: IMDB has a lot of data that are not discrete or continuous or binary-valued for target function Name (category) Country Name Studio List of movies Name Director/ Year of birth List of movies Producer Actor Directed Produced © Jude Shavlik 2006, David Page 2007 Made Acted in Movie Year of birth Gender Oscar nominations List of movies Title, Genre, Year, Opening Wkend BO receipts, List of actors/actresses, Release season CS 760 – Machine Learning (UW-Madison) 42

HW 0: Creating Your Dataset Ex: IMDB has a lot of data that are not discrete or continuous or binary-valued for target function Name (category) Country Name Studio List of movies Name Director/ Year of birth List of movies Producer Actor Directed Produced © Jude Shavlik 2006, David Page 2007 Made Acted in Movie Year of birth Gender Oscar nominations List of movies Title, Genre, Year, Opening Wkend BO receipts, List of actors/actresses, Release season CS 760 – Machine Learning (UW-Madison) 42

HW 0: Sample DB Choose a Boolean or binary-valued target function (category) • Opening weekend box-office receipts > $2 million • Movie is drama? (action, sci-fi, …) • Movies I like/dislike (e. g. Tivo) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 43

HW 0: Sample DB Choose a Boolean or binary-valued target function (category) • Opening weekend box-office receipts > $2 million • Movie is drama? (action, sci-fi, …) • Movies I like/dislike (e. g. Tivo) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 43

HW 0: Representing as a Fixed-Length Feature Vector

HW 0: Representing as a Fixed-Length Feature Vector

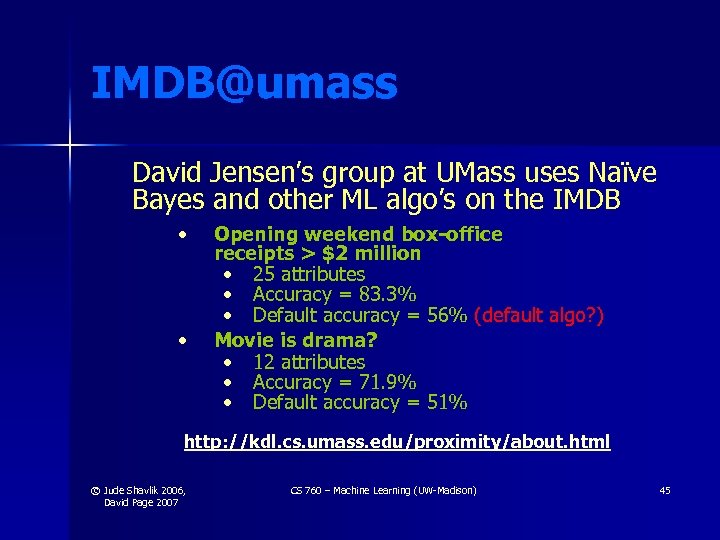

IMDB@umass David Jensen’s group at UMass uses Naïve Bayes and other ML algo’s on the IMDB • • Opening weekend box-office receipts > $2 million • 25 attributes • Accuracy = 83. 3% • Default accuracy = 56% (default algo? ) Movie is drama? • 12 attributes • Accuracy = 71. 9% • Default accuracy = 51% http: //kdl. cs. umass. edu/proximity/about. html © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 45

IMDB@umass David Jensen’s group at UMass uses Naïve Bayes and other ML algo’s on the IMDB • • Opening weekend box-office receipts > $2 million • 25 attributes • Accuracy = 83. 3% • Default accuracy = 56% (default algo? ) Movie is drama? • 12 attributes • Accuracy = 71. 9% • Default accuracy = 51% http: //kdl. cs. umass. edu/proximity/about. html © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 45

First Algorithm in Detail • K-Nearest Neighbors / Instance-Based Learning (k-NN/IBL) • • Distance functions Kernel functions Feature selection (applies to all ML algo’s) IBL Summary Chapter 8 of Mitchell © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 46

First Algorithm in Detail • K-Nearest Neighbors / Instance-Based Learning (k-NN/IBL) • • Distance functions Kernel functions Feature selection (applies to all ML algo’s) IBL Summary Chapter 8 of Mitchell © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 46

Some Common Jargon • Classification • Learning a discrete valued function Discrete/Real • Regression Outputs • Learning a real valued function IBL easily extended to regression tasks (and to multi-category classification) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 47

Some Common Jargon • Classification • Learning a discrete valued function Discrete/Real • Regression Outputs • Learning a real valued function IBL easily extended to regression tasks (and to multi-category classification) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 47

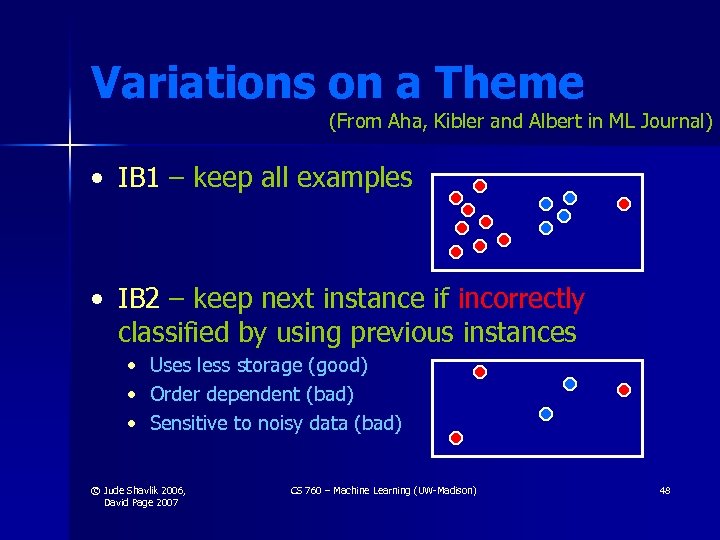

Variations on a Theme (From Aha, Kibler and Albert in ML Journal) • IB 1 – keep all examples • IB 2 – keep next instance if incorrectly classified by using previous instances • Uses less storage (good) • Order dependent (bad) • Sensitive to noisy data (bad) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 48

Variations on a Theme (From Aha, Kibler and Albert in ML Journal) • IB 1 – keep all examples • IB 2 – keep next instance if incorrectly classified by using previous instances • Uses less storage (good) • Order dependent (bad) • Sensitive to noisy data (bad) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 48

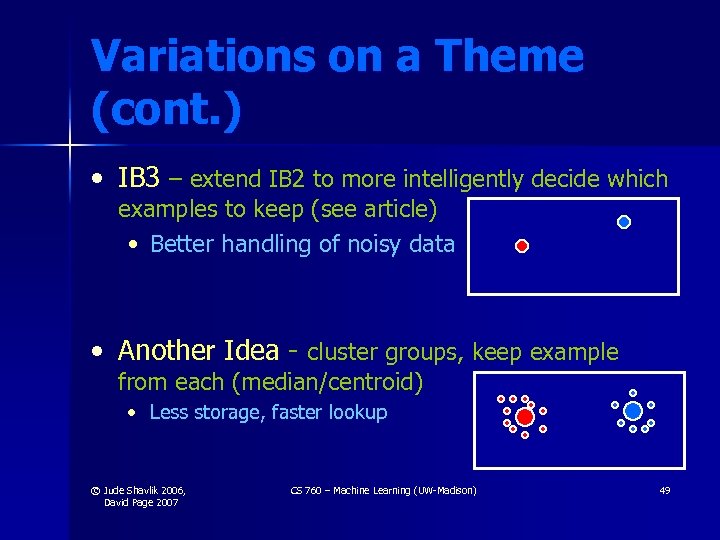

Variations on a Theme (cont. ) • IB 3 – extend IB 2 to more intelligently decide which examples to keep (see article) • Better handling of noisy data • Another Idea - cluster groups, keep example from each (median/centroid) • Less storage, faster lookup © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 49

Variations on a Theme (cont. ) • IB 3 – extend IB 2 to more intelligently decide which examples to keep (see article) • Better handling of noisy data • Another Idea - cluster groups, keep example from each (median/centroid) • Less storage, faster lookup © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 49

Distance Functions • Key issue in IBL (instance-based learning) • One approach: assign weights to each feature © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 50

Distance Functions • Key issue in IBL (instance-based learning) • One approach: assign weights to each feature © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 50

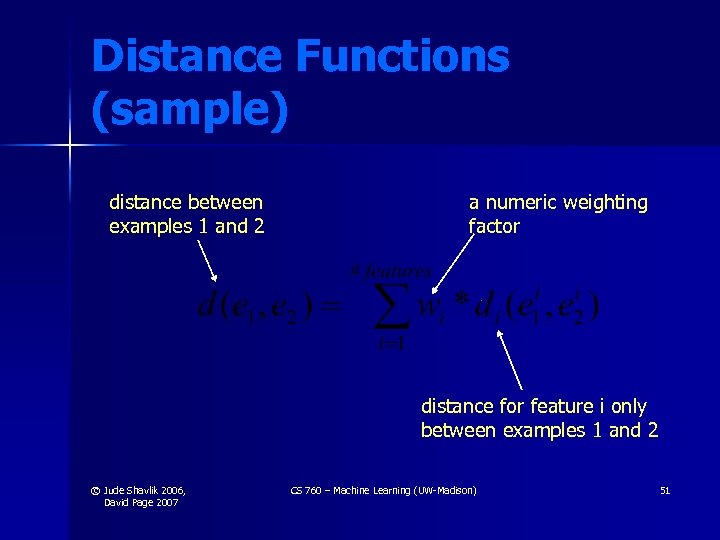

Distance Functions (sample) distance between examples 1 and 2 a numeric weighting factor distance for feature i only between examples 1 and 2 © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 51

Distance Functions (sample) distance between examples 1 and 2 a numeric weighting factor distance for feature i only between examples 1 and 2 © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 51

Kernel Functions and k-NN • Term “kernel” comes from statistics • Major topic in support vector machines (SVMs) • Weights the interaction between pairs of examples © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 52

Kernel Functions and k-NN • Term “kernel” comes from statistics • Major topic in support vector machines (SVMs) • Weights the interaction between pairs of examples © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 52

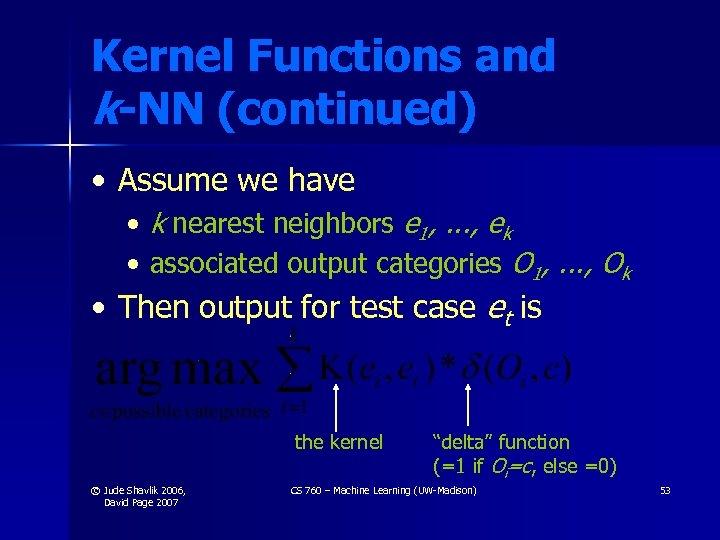

Kernel Functions and k-NN (continued) • Assume we have • k nearest neighbors e 1, . . . , ek • associated output categories O 1, . . . , Ok • Then output for test case et is the kernel © Jude Shavlik 2006, David Page 2007 “delta” function (=1 if Oi=c, else =0) CS 760 – Machine Learning (UW-Madison) 53

Kernel Functions and k-NN (continued) • Assume we have • k nearest neighbors e 1, . . . , ek • associated output categories O 1, . . . , Ok • Then output for test case et is the kernel © Jude Shavlik 2006, David Page 2007 “delta” function (=1 if Oi=c, else =0) CS 760 – Machine Learning (UW-Madison) 53

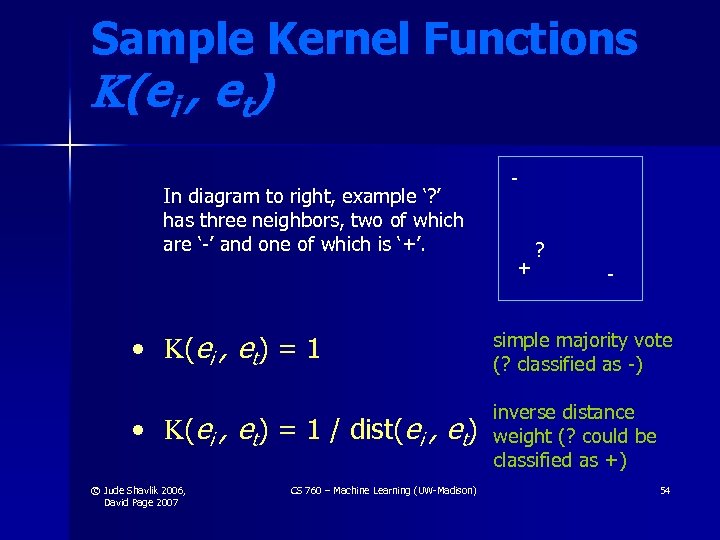

Sample Kernel Functions K(ei , et) In diagram to right, example ‘? ’ has three neighbors, two of which are ‘-’ and one of which is ‘+’. - + ? - • K( e i , e t ) = 1 simple majority vote (? classified as -) • K(ei , et) = 1 / dist(ei , et) inverse distance weight (? could be classified as +) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 54

Sample Kernel Functions K(ei , et) In diagram to right, example ‘? ’ has three neighbors, two of which are ‘-’ and one of which is ‘+’. - + ? - • K( e i , e t ) = 1 simple majority vote (? classified as -) • K(ei , et) = 1 / dist(ei , et) inverse distance weight (? could be classified as +) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 54

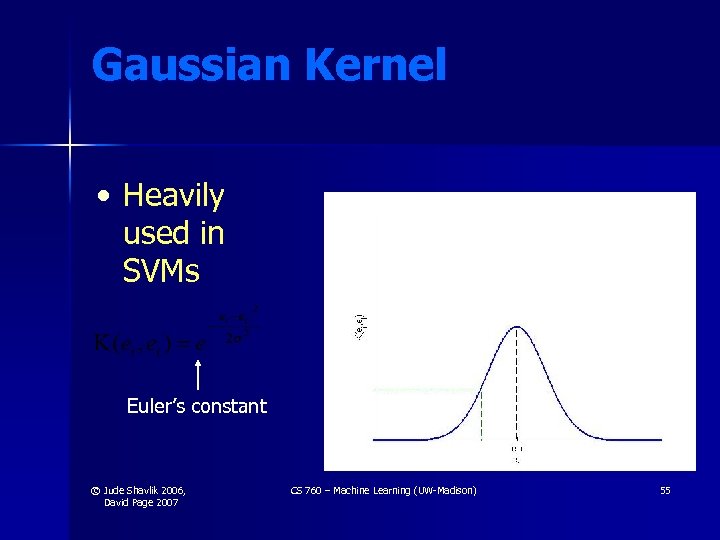

Gaussian Kernel • Heavily used in SVMs Euler’s constant © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 55

Gaussian Kernel • Heavily used in SVMs Euler’s constant © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 55

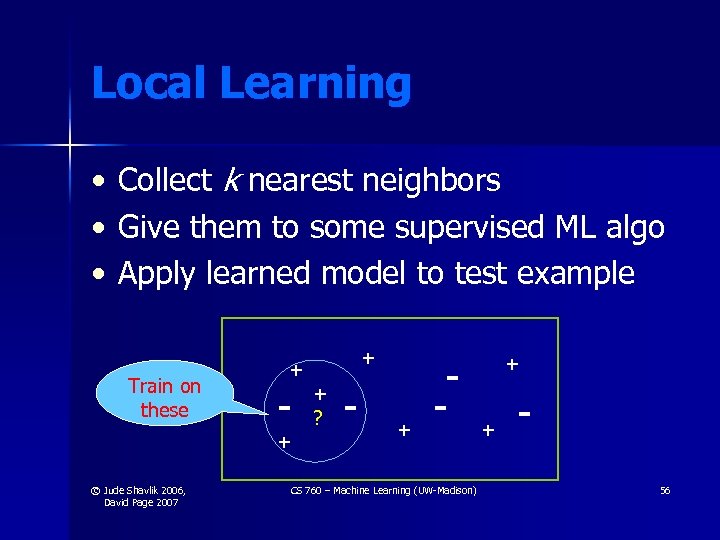

Local Learning • • • Collect k nearest neighbors Give them to some supervised ML algo Apply learned model to test example Train on these + - + © Jude Shavlik 2006, David Page 2007 + + ? - + - CS 760 – Machine Learning (UW-Madison) + + 56

Local Learning • • • Collect k nearest neighbors Give them to some supervised ML algo Apply learned model to test example Train on these + - + © Jude Shavlik 2006, David Page 2007 + + ? - + - CS 760 – Machine Learning (UW-Madison) + + 56

Instance-Based Learning (IBL) and Efficiency • IBL algorithms postpone work from training to testing • Pure k-NN/IBL just memorizes the training data • Sometimes called lazy learning • Computationally intensive • Match all features of all training examples © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 57

Instance-Based Learning (IBL) and Efficiency • IBL algorithms postpone work from training to testing • Pure k-NN/IBL just memorizes the training data • Sometimes called lazy learning • Computationally intensive • Match all features of all training examples © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 57

Instance-Based Learning (IBL) and Efficiency • Possible Speed-ups • Use a subset of the training examples (Aha) • Use clever data structures (A. Moore) • KD trees, hash tables, Voronoi diagrams • Use subset of the features © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 58

Instance-Based Learning (IBL) and Efficiency • Possible Speed-ups • Use a subset of the training examples (Aha) • Use clever data structures (A. Moore) • KD trees, hash tables, Voronoi diagrams • Use subset of the features © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 58

Number of Features and Performance • Too many features can hurt test set performance • Too many irrelevant features mean many spurious correlation possibilities for a ML algorithm to detect • “Curse of dimensionality” © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 59

Number of Features and Performance • Too many features can hurt test set performance • Too many irrelevant features mean many spurious correlation possibilities for a ML algorithm to detect • “Curse of dimensionality” © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 59

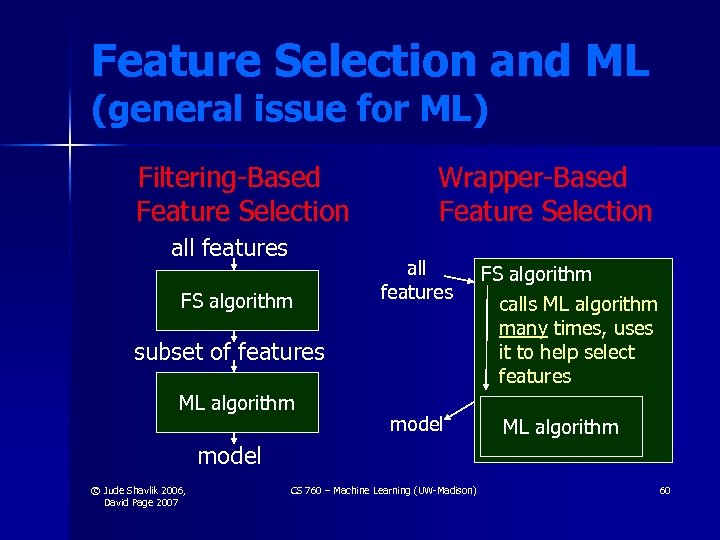

Feature Selection and ML (general issue for ML) Filtering-Based Feature Selection all features FS algorithm Wrapper-Based Feature Selection all features subset of features ML algorithm model FS algorithm calls ML algorithm many times, uses it to help select features ML algorithm model © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 60

Feature Selection and ML (general issue for ML) Filtering-Based Feature Selection all features FS algorithm Wrapper-Based Feature Selection all features subset of features ML algorithm model FS algorithm calls ML algorithm many times, uses it to help select features ML algorithm model © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 60

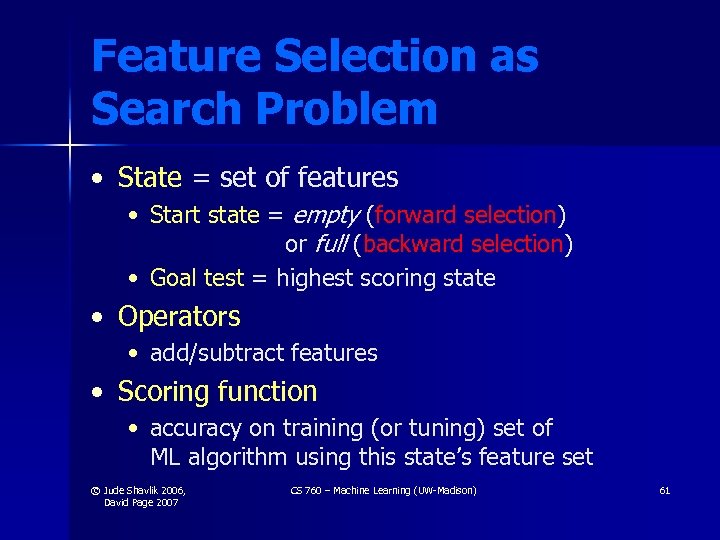

Feature Selection as Search Problem • State = set of features • Start state = empty (forward selection) or full (backward selection) • Goal test = highest scoring state • Operators • add/subtract features • Scoring function • accuracy on training (or tuning) set of ML algorithm using this state’s feature set © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 61

Feature Selection as Search Problem • State = set of features • Start state = empty (forward selection) or full (backward selection) • Goal test = highest scoring state • Operators • add/subtract features • Scoring function • accuracy on training (or tuning) set of ML algorithm using this state’s feature set © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 61

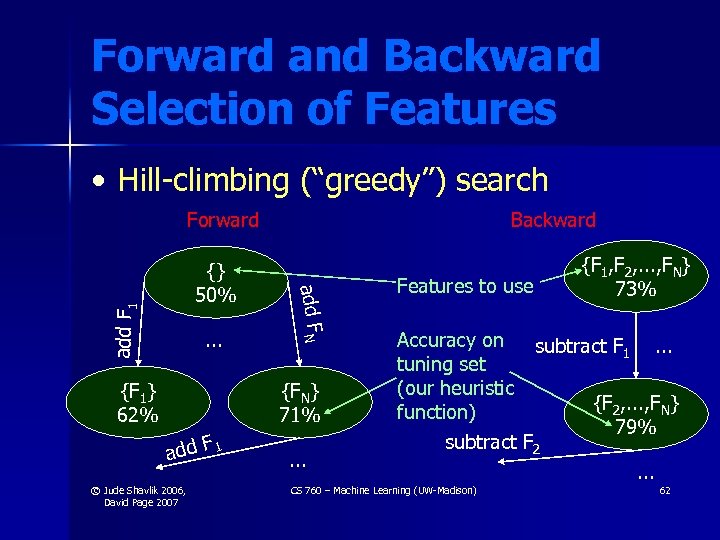

Forward and Backward Selection of Features • Hill-climbing (“greedy”) search Forward add F 1 . . . {F 1} 62% add F N {} 50% Backward {FN} 71% add © Jude Shavlik 2006, David Page 2007 F 1 . . . Features to use {F 1, F 2, . . . , FN} 73% Accuracy on subtract F 1. . . tuning set (our heuristic {F 2, . . . , FN} function) 79% subtract F 2. . . CS 760 – Machine Learning (UW-Madison) 62

Forward and Backward Selection of Features • Hill-climbing (“greedy”) search Forward add F 1 . . . {F 1} 62% add F N {} 50% Backward {FN} 71% add © Jude Shavlik 2006, David Page 2007 F 1 . . . Features to use {F 1, F 2, . . . , FN} 73% Accuracy on subtract F 1. . . tuning set (our heuristic {F 2, . . . , FN} function) 79% subtract F 2. . . CS 760 – Machine Learning (UW-Madison) 62

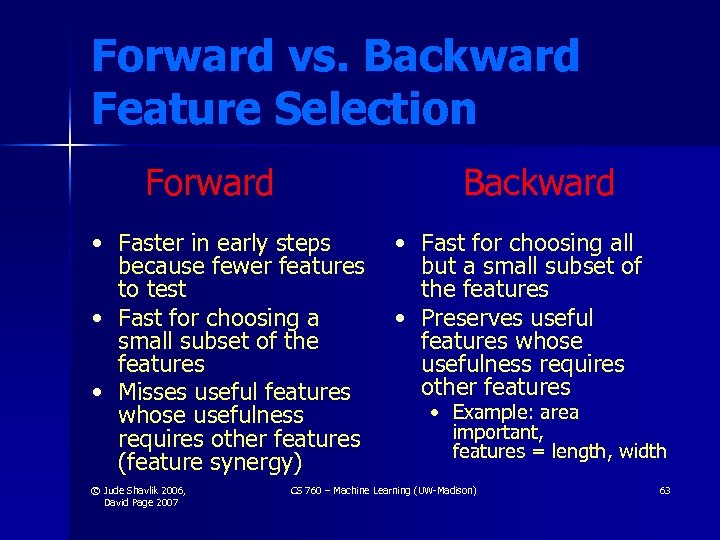

Forward vs. Backward Feature Selection Forward Backward • Faster in early steps because fewer features to test • Fast for choosing a small subset of the features • Misses useful features whose usefulness requires other features (feature synergy) © Jude Shavlik 2006, David Page 2007 • Fast for choosing all but a small subset of the features • Preserves useful features whose usefulness requires other features • Example: area important, features = length, width CS 760 – Machine Learning (UW-Madison) 63

Forward vs. Backward Feature Selection Forward Backward • Faster in early steps because fewer features to test • Fast for choosing a small subset of the features • Misses useful features whose usefulness requires other features (feature synergy) © Jude Shavlik 2006, David Page 2007 • Fast for choosing all but a small subset of the features • Preserves useful features whose usefulness requires other features • Example: area important, features = length, width CS 760 – Machine Learning (UW-Madison) 63

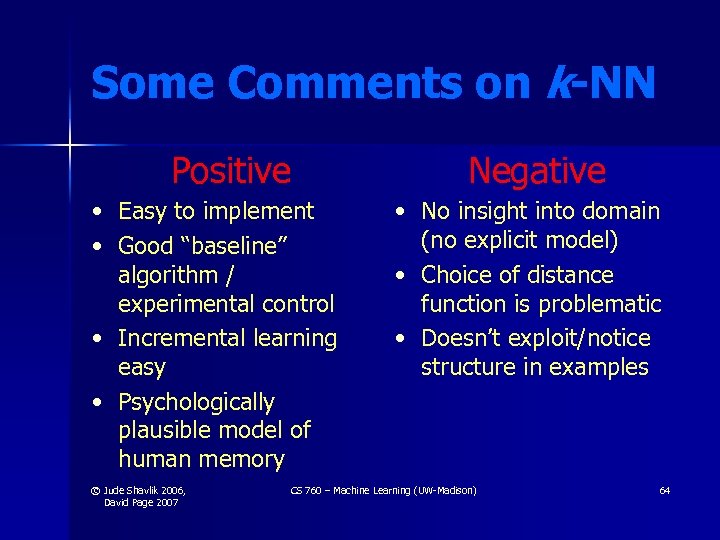

Some Comments on k-NN Positive • Easy to implement • Good “baseline” algorithm / experimental control • Incremental learning easy • Psychologically plausible model of human memory © Jude Shavlik 2006, David Page 2007 Negative • No insight into domain (no explicit model) • Choice of distance function is problematic • Doesn’t exploit/notice structure in examples CS 760 – Machine Learning (UW-Madison) 64

Some Comments on k-NN Positive • Easy to implement • Good “baseline” algorithm / experimental control • Incremental learning easy • Psychologically plausible model of human memory © Jude Shavlik 2006, David Page 2007 Negative • No insight into domain (no explicit model) • Choice of distance function is problematic • Doesn’t exploit/notice structure in examples CS 760 – Machine Learning (UW-Madison) 64

Questions about IBL (Breiman et al. - CART book) • Computationally expensive to save all examples; slow classification of new examples • Addressed by IB 2/IB 3 of Aha et al. and work of A. Moore (CMU; now Google) • Is this really a problem? © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 65

Questions about IBL (Breiman et al. - CART book) • Computationally expensive to save all examples; slow classification of new examples • Addressed by IB 2/IB 3 of Aha et al. and work of A. Moore (CMU; now Google) • Is this really a problem? © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 65

Questions about IBL (Breiman et al. - CART book) • Intolerant of Noise • Addressed by IB 3 of Aha et al. • Addressed by k-NN version • Addressed by feature selection - can discard the noisy feature • Intolerant of Irrelevant Features • Since algorithm very fast, can experimentally choose good feature sets (Kohavi, Ph. D. – now at Amazon) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 66

Questions about IBL (Breiman et al. - CART book) • Intolerant of Noise • Addressed by IB 3 of Aha et al. • Addressed by k-NN version • Addressed by feature selection - can discard the noisy feature • Intolerant of Irrelevant Features • Since algorithm very fast, can experimentally choose good feature sets (Kohavi, Ph. D. – now at Amazon) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 66

More IBL Criticisms • High sensitivity to choice of similiarity (distance) function • Euclidean distance might not be best choice • Handling non-numeric features and missing feature values is not natural, but doable • How might we do this? (Part of HW 1) • No insight into task (learned concept not interpretable) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 67

More IBL Criticisms • High sensitivity to choice of similiarity (distance) function • Euclidean distance might not be best choice • Handling non-numeric features and missing feature values is not natural, but doable • How might we do this? (Part of HW 1) • No insight into task (learned concept not interpretable) © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 67

Summary • IBL can be a very effective machine learning algorithm • Good “baseline” for experiments © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 68

Summary • IBL can be a very effective machine learning algorithm • Good “baseline” for experiments © Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison) 68