- Number of slides: 23

CS 601R, section 2: Statistical Natural Language Processing

Lectures #16 & 17: Part-of-Speech Tagging, Hidden Markov Models

Thanks to Dan Klein of UC Berkeley for many of the materials used in this lecture.

Last Time

- Maximum entropy models
  - A technique for estimating multinomial distributions conditionally on many features
  - A building block of many NLP systems

Goals

- To be able to model sequences
- Application: Part-of-Speech Tagging
- Technique: Hidden Markov Models (HMMs)
- Think of this as sequential classification

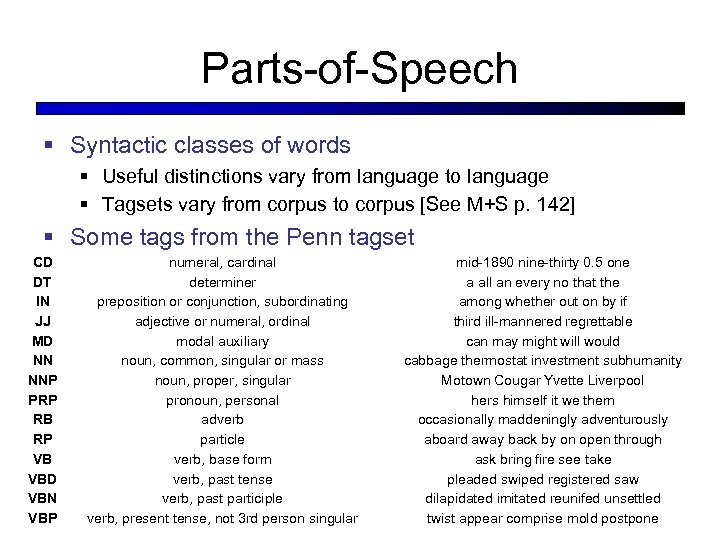

Parts-of-Speech

- Syntactic classes of words
  - Useful distinctions vary from language to language
  - Tagsets vary from corpus to corpus [See M+S p. 142]
- Some tags from the Penn tagset

| Tag | Description | Examples |
| --- | --- | --- |
| CD | numeral, cardinal | mid-1890 nine-thirty 0.5 one |
| DT | determiner | a all an every no that the |
| IN | preposition or conjunction, subordinating | among whether out on by if |
| JJ | adjective or numeral, ordinal | third ill-mannered regrettable |
| MD | modal auxiliary | can may might will would |
| NN | noun, common, singular or mass | cabbage thermostat investment subhumanity |
| NNP | noun, proper, singular | Motown Cougar Yvette Liverpool |
| PRP | pronoun, personal | hers himself it we them |
| RB | adverb | occasionally maddeningly adventurously |
| RP | particle | aboard away back by on open through |
| VB | verb, base form | ask bring fire see take |
| VBD | verb, past tense | pleaded swiped registered saw |
| VBN | verb, past participle | dilapidated imitated reunified unsettled |
| VBP | verb, present tense, not 3rd person singular | twist appear comprise mold postpone |

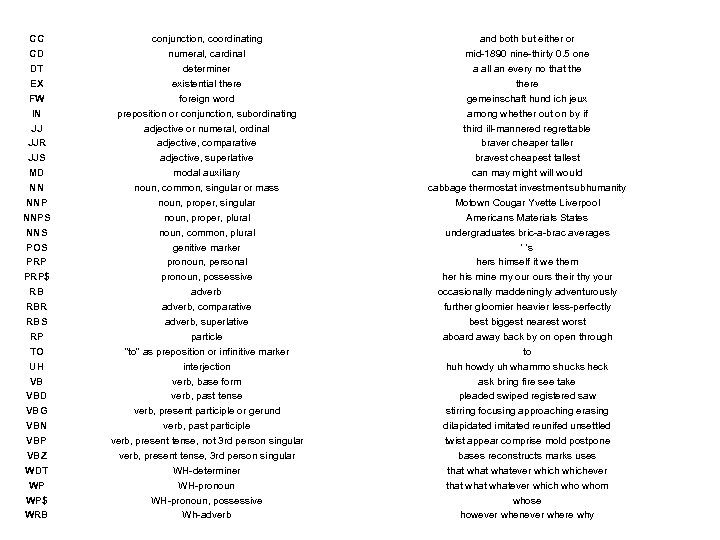

| Tag | Description | Examples |
| --- | --- | --- |
| CC | conjunction, coordinating | and both but either or |
| CD | numeral, cardinal | mid-1890 nine-thirty 0.5 one |
| DT | determiner | a all an every no that |
| EX | existential there | there |
| FW | foreign word | gemeinschaft hund ich jeux |
| IN | preposition or conjunction, subordinating | among whether out on by if |
| JJ | adjective or numeral, ordinal | third ill-mannered regrettable |
| JJR | adjective, comparative | braver cheaper taller |
| JJS | adjective, superlative | bravest cheapest tallest |
| MD | modal auxiliary | can may might will would |
| NN | noun, common, singular or mass | cabbage thermostat investment subhumanity |
| NNP | noun, proper, singular | Motown Cougar Yvette Liverpool |
| NNPS | noun, proper, plural | Americans Materials States |
| NNS | noun, common, plural | undergraduates bric-a-brac averages |
| POS | genitive marker | ' 's |
| PRP | pronoun, personal | hers himself it we them |
| PRP$ | pronoun, possessive | her his mine my ours their thy your |
| RB | adverb | occasionally maddeningly adventurously |
| RBR | adverb, comparative | further gloomier heavier less-perfectly |
| RBS | adverb, superlative | best biggest nearest worst |
| RP | particle | aboard away back by on open through |
| TO | "to" as preposition or infinitive marker | to |
| UH | interjection | huh howdy uh whammo shucks heck |
| VB | verb, base form | ask bring fire see take |
| VBD | verb, past tense | pleaded swiped registered saw |
| VBG | verb, present participle or gerund | stirring focusing approaching erasing |
| VBN | verb, past participle | dilapidated imitated reunified unsettled |
| VBP | verb, present tense, not 3rd person singular | twist appear comprise mold postpone |
| VBZ | verb, present tense, 3rd person singular | bases reconstructs marks uses |
| WDT | WH-determiner | that whatever whichever |
| WP | WH-pronoun | that whatever which whom |
| WP$ | WH-pronoun, possessive | whose |
| WRB | WH-adverb | however whenever where why |

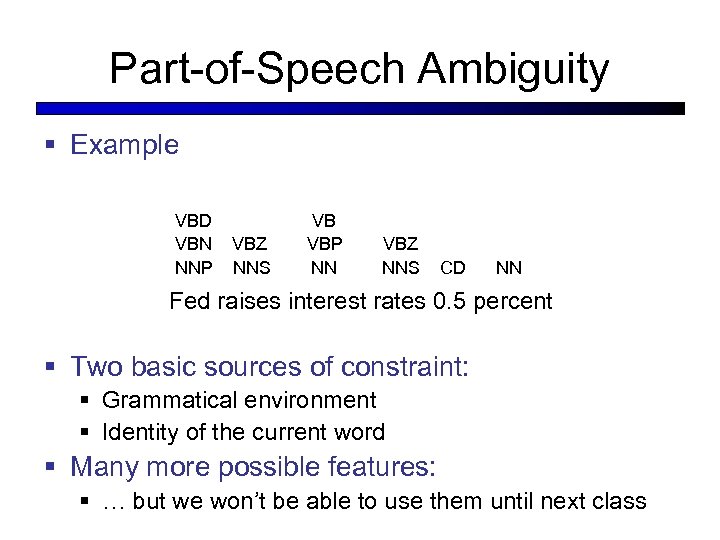

Part-of-Speech Ambiguity

- Example

| Word | Candidate tags |
| --- | --- |
| Fed | VBD, VBN, NNP |
| raises | VBZ, NNS |
| interest | VB, VBP, NN |
| rates | VBZ, NNS |
| 0.5 | CD |
| percent | NN |

- Two basic sources of constraint:
  - Grammatical environment
  - Identity of the current word
- Many more possible features:
  - … but we won’t be able to use them until next class
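To get a feel for how quickly ambiguity multiplies, the candidate tags in the example can be enumerated directly. This is a small sketch; the per-word candidate sets are read off the example figure, and the variable names are made up for illustration.

```python
from itertools import product

# Candidate tags per word, as read from the example sentence.
candidates = {
    "Fed": ["VBD", "VBN", "NNP"],
    "raises": ["VBZ", "NNS"],
    "interest": ["VB", "VBP", "NN"],
    "rates": ["VBZ", "NNS"],
    "0.5": ["CD"],
    "percent": ["NN"],
}
sentence = ["Fed", "raises", "interest", "rates", "0.5", "percent"]

# Every combination of one candidate tag per word is a possible tagging.
sequences = list(product(*(candidates[w] for w in sentence)))
print(len(sequences))  # 3 * 2 * 3 * 2 * 1 * 1 taggings
```

Even this six-word sentence has dozens of possible taggings, and the count grows multiplicatively with sentence length.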

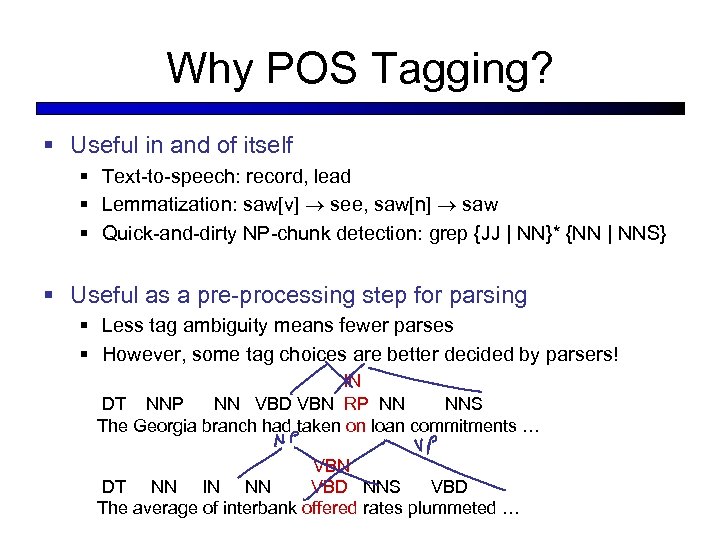

Why POS Tagging?

- Useful in and of itself
  - Text-to-speech: record, lead
  - Lemmatization: saw[v] → see, saw[n] → saw
  - Quick-and-dirty NP-chunk detection: grep {JJ | NN}* {NN | NNS}
- Useful as a pre-processing step for parsing
  - Less tag ambiguity means fewer parses
  - However, some tag choices are better decided by parsers!
    - The/DT Georgia/NNP branch/NN had/VBD taken/VBN on/{RP, IN} loan/NN commitments/NNS …
    - The/DT average/NN of/IN interbank/NN offered/{VBD, VBN} rates/NNS plummeted/VBD …
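The quick-and-dirty NP-chunk pattern {JJ | NN}* {NN | NNS} can be sketched as a regular expression over the tag sequence. This is a minimal illustration; the function name `np_chunks` and the (word, tag) input format are assumptions, not part of the lecture.

```python
import re

# Any run of JJ/NN tags followed by a final NN or NNS.
# Each tag is followed by a space so that "NN" cannot match inside "NNP".
NP_PATTERN = re.compile(r"(?<!\S)(?:(?:JJ|NN) )*(?:NN|NNS) ")

def np_chunks(tagged):
    """Return candidate NP chunks from a list of (word, tag) pairs."""
    tag_string = "".join(tag + " " for _, tag in tagged)
    chunks = []
    for match in NP_PATTERN.finditer(tag_string):
        # Map character offsets back to token indices by counting spaces.
        start = tag_string[:match.start()].count(" ")
        end = tag_string[:match.end()].count(" ")
        chunks.append([word for word, _ in tagged[start:end]])
    return chunks

sentence = [("The", "DT"), ("Georgia", "NNP"), ("branch", "NN"), ("had", "VBD"),
            ("taken", "VBN"), ("on", "RP"), ("loan", "NN"), ("commitments", "NNS")]
print(np_chunks(sentence))
```

Because the pattern only mentions JJ, NN, and NNS, the proper noun "Georgia" is left out of the first chunk, which is one reason the slide calls this approach quick and dirty.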

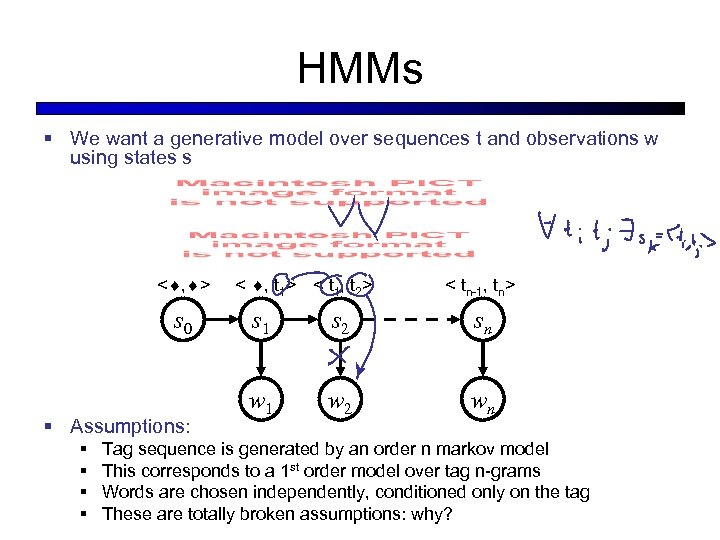

HMMs

- We want a generative model over sequences t and observations w using states s
- State chain (figure): s0 = < , >, s1 = < , t1>, s2 = <t1, t2>, …, sn = <tn-1, tn>; each state si (i ≥ 1) emits word wi
- Assumptions:
  - Tag sequence is generated by an order n Markov model
  - This corresponds to a 1st order model over tag n-grams
  - Words are chosen independently, conditioned only on the tag
  - These are totally broken assumptions: why?
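Under these assumptions, and taking the tag model to be second order (states are tag pairs, as in the state labels above), the joint probability can be written as the product below. This is a sketch: treating $t_0$ and $t_{-1}$ as start symbols and omitting an explicit stop transition are assumptions made here for brevity.

```latex
P(t_1 \dots t_n,\; w_1 \dots w_n)
  = \prod_{i=1}^{n} P(t_i \mid t_{i-1}, t_{i-2}) \; P(w_i \mid t_i)
```

The first factor is the transition model over tags and the second is the emission model, which is where the assumption that each word depends only on its own tag enters.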

Parameter Estimation

- Need two multinomials
  - Transitions: $P(t_i \mid t_{i-1}, t_{i-2})$
  - Emissions: $P(w_i \mid t_i)$
- Can get these off a collection of tagged sentences:
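One way to read both multinomials off tagged sentences is plain relative-frequency counting. The sketch below assumes trigram transitions and a hypothetical start padding symbol `<s>`; it applies no smoothing and ignores stop transitions.

```python
from collections import Counter

START = "<s>"  # hypothetical start-of-sentence padding symbol

def estimate(tagged_sentences):
    """Relative-frequency estimates of trigram transitions and emissions."""
    trigram, context = Counter(), Counter()
    emit, tag_count = Counter(), Counter()
    for sentence in tagged_sentences:
        prev2, prev1 = START, START
        for word, tag in sentence:
            trigram[(prev2, prev1, tag)] += 1
            context[(prev2, prev1)] += 1
            emit[(tag, word)] += 1
            tag_count[tag] += 1
            prev2, prev1 = prev1, tag
    # P(tag | prev2, prev1) = count(prev2, prev1, tag) / count(prev2, prev1)
    transitions = {k: c / context[k[:2]] for k, c in trigram.items()}
    # P(word | tag) = count(tag, word) / count(tag)
    emissions = {k: c / tag_count[k[0]] for k, c in emit.items()}
    return transitions, emissions

# Toy corpus, invented for illustration only.
corpus = [
    [("Fed", "NNP"), ("raises", "VBZ"), ("rates", "NNS")],
    [("Fed", "NNP"), ("rates", "NNS"), ("rise", "VBP")],
]
transitions, emissions = estimate(corpus)
```

Any trigram or word/tag pair absent from the corpus gets no entry at all, which is the sparsity problem that smoothing has to address.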

Practical Issues with Estimation

- Use standard smoothing methods to estimate transition scores, e.g.:
- Emissions are trickier
  - Words we’ve never seen before
  - Words which occur with tags we’ve never seen
  - One option: break out the Good-Turing smoothing
  - Issue: words aren’t black boxes: 343,127.23 11-year Minteria reintroducible
- Another option: decompose words into features and use a maxent model along with Bayes’ rule.
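The slide's own smoothing formula did not survive extraction. As one common choice, transition estimates can be linearly interpolated across trigram, bigram, and unigram tag estimates. The sketch below assumes that technique; the function name and the weights are placeholders, not values from the lecture.

```python
def interpolate(p_tri, p_bi, p_uni, lambdas=(0.6, 0.3, 0.1)):
    """Linearly interpolate trigram, bigram, and unigram tag estimates.

    The default weights are arbitrary placeholders; in practice they
    would be tuned on held-out data.
    """
    l3, l2, l1 = lambdas
    assert abs(l3 + l2 + l1 - 1.0) < 1e-9, "weights must sum to 1"
    return l3 * p_tri + l2 * p_bi + l1 * p_uni

# An unseen trigram (estimate 0.0) still receives some probability mass
# from the lower-order estimates.
smoothed = interpolate(p_tri=0.0, p_bi=0.2, p_uni=0.05)
```

The point of the design is that a zero trigram count no longer forces a zero transition score.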

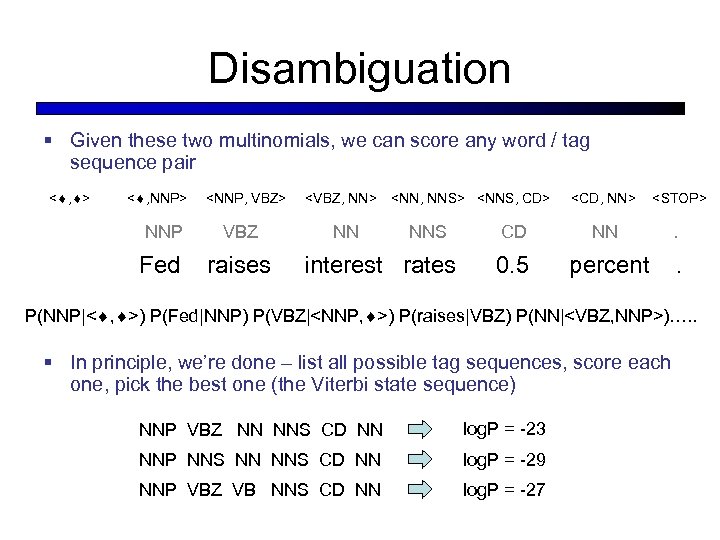

Disambiguation

- Given these two multinomials, we can score any word / tag sequence pair
- Example states (figure): < , > < , NNP>
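Scoring a word / tag sequence pair amounts to multiplying one transition and one emission probability per position. The sketch below works in log space and assumes trigram transitions with a hypothetical `<s>` start symbol; the parameter values are invented.

```python
import math

START = "<s>"  # hypothetical start padding symbol

def log_score(words, tags, transitions, emissions):
    """Log joint probability of a word/tag sequence pair under a trigram HMM."""
    total = 0.0
    prev2, prev1 = START, START
    for word, tag in zip(words, tags):
        p_trans = transitions.get((prev2, prev1, tag), 0.0)
        p_emit = emissions.get((tag, word), 0.0)
        if p_trans == 0.0 or p_emit == 0.0:
            return float("-inf")  # unseen event and no smoothing
        total += math.log(p_trans) + math.log(p_emit)
        prev2, prev1 = prev1, tag
    return total

# Toy parameters, invented for illustration only.
transitions = {(START, START, "NNP"): 0.5, (START, "NNP", "VBZ"): 0.4}
emissions = {("NNP", "Fed"): 0.1, ("VBZ", "raises"): 0.2}
score = log_score(["Fed", "raises"], ["NNP", "VBZ"], transitions, emissions)
```

Summing logs rather than multiplying raw probabilities avoids numerical underflow on long sentences.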

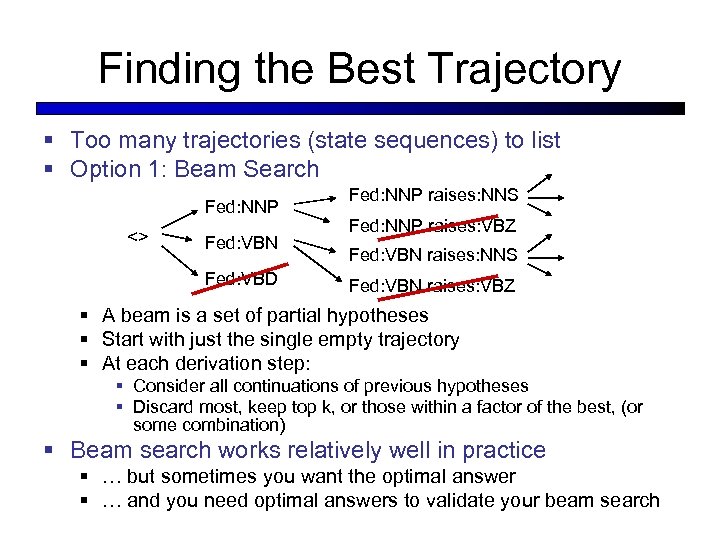

Finding the Best Trajectory

- Too many trajectories (state sequences) to list
- Option 1: Beam Search
  - Step 0: <>
  - Step 1: Fed:NNP | Fed:VBN | Fed:VBD
  - Step 2: Fed:NNP raises:NNS | Fed:NNP raises:VBZ | Fed:VBN raises:NNS | Fed:VBN raises:VBZ
- A beam is a set of partial hypotheses
  - Start with just the single empty trajectory
  - At each derivation step:
    - Consider all continuations of previous hypotheses
    - Discard most, keep top k, or those within a factor of the best (or some combination)
- Beam search works relatively well in practice
  - … but sometimes you want the optimal answer
  - … and you need optimal answers to validate your beam search
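The beam procedure described above can be sketched as follows. To keep the example short it assumes a first-order (bigram) tag model rather than the tag-pair states used in the lecture, keeps only the top k hypotheses, and uses invented toy probabilities.

```python
import math

def beam_search(words, tags, trans, emit, k=2, start="<s>"):
    """Keep the top-k partial tag sequences at each position (bigram HMM)."""
    beam = [(0.0, [])]  # the single empty trajectory, with log score 0
    for word in words:
        candidates = []
        for score, seq in beam:
            prev = seq[-1] if seq else start
            # Consider all continuations of this hypothesis.
            for tag in tags:
                p = trans.get((prev, tag), 0.0) * emit.get((tag, word), 0.0)
                if p > 0.0:
                    candidates.append((score + math.log(p), seq + [tag]))
        # Discard most hypotheses: keep only the k best by log score.
        beam = sorted(candidates, key=lambda c: c[0], reverse=True)[:k]
    return beam[0][1] if beam else None

# Toy parameters, invented for illustration only.
tags = ["NNP", "VBN", "NNS", "VBZ"]
trans = {("<s>", "NNP"): 0.6, ("<s>", "VBN"): 0.4,
         ("NNP", "VBZ"): 0.5, ("NNP", "NNS"): 0.3,
         ("VBN", "NNS"): 0.6, ("VBN", "VBZ"): 0.1}
emit = {("NNP", "Fed"): 0.5, ("VBN", "Fed"): 0.5,
        ("VBZ", "raises"): 0.5, ("NNS", "raises"): 0.5}
best = beam_search(["Fed", "raises"], tags, trans, emit, k=2)
```

With a small k the search can prune the prefix of the true best sequence, which is why an exact method is still needed to validate it.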

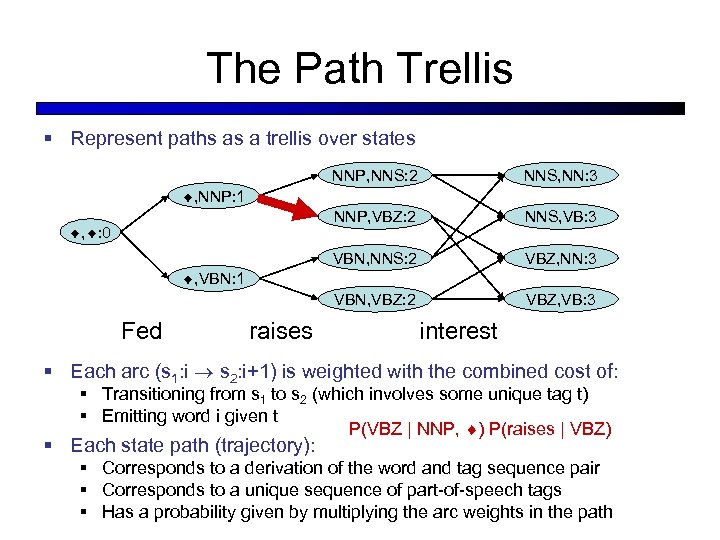

The Path Trellis

- Represent paths as a trellis over states
  - Position 0: < , >
  - Position 1 (Fed): < , NNP>, < , VBN>
  - Position 2 (raises): <NNP, NNS>, <NNP, VBZ>, <VBN, NNS>, <VBN, VBZ>
  - Position 3 (interest): <NNS, NN>, <NNS, VB>, <VBZ, NN>, <VBZ, VB>
- Each arc (s1:i → s2:i+1) is weighted with the combined cost of:
  - Transitioning from s1 to s2 (which involves some unique tag t)
  - Emitting word i given t
  - Example: P(VBZ | NNP, ) P(raises | VBZ)
- Each state path (trajectory):
  - Corresponds to a derivation of the word and tag sequence pair
  - Corresponds to a unique sequence of part-of-speech tags
  - Has a probability given by multiplying the arc weights in the path

The Viterbi Algorithm

- Dynamic program for computing
  - The score of a best path up to position i ending in state s
- Also store a backtrace
- Memoized solution
- Iterative solution
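A minimal sketch of the iterative solution follows. It assumes a first-order (bigram) tag model so that states are single tags, uses raw probabilities rather than logs, and runs on invented toy parameters; `delta` and `back` are names chosen here for the score table and the backtrace.

```python
def viterbi(words, tags, trans, emit, start="<s>"):
    """Return (best probability, best tag sequence) under a bigram HMM."""
    # delta[i][s]: score of the best path up to position i ending in state s.
    delta = [{t: trans.get((start, t), 0.0) * emit.get((t, words[0]), 0.0)
              for t in tags}]
    back = [{}]  # back[i][s]: predecessor of s on that best path
    for i in range(1, len(words)):
        delta.append({})
        back.append({})
        for t in tags:
            best_prev = max(
                tags, key=lambda p: delta[i - 1][p] * trans.get((p, t), 0.0))
            delta[i][t] = (delta[i - 1][best_prev]
                           * trans.get((best_prev, t), 0.0)
                           * emit.get((t, words[i]), 0.0))
            back[i][t] = best_prev
    # Follow the backtrace from the best final state.
    last = max(tags, key=lambda t: delta[-1][t])
    path = [last]
    for i in range(len(words) - 1, 0, -1):
        path.append(back[i][path[-1]])
    path.reverse()
    return delta[-1][last], path

# Toy parameters, invented for illustration only.
tags = ["NNP", "VBN", "NNS", "VBZ"]
trans = {("<s>", "NNP"): 0.6, ("<s>", "VBN"): 0.4,
         ("NNP", "VBZ"): 0.5, ("NNP", "NNS"): 0.3,
         ("VBN", "NNS"): 0.6, ("VBN", "VBZ"): 0.1}
emit = {("NNP", "Fed"): 0.5, ("VBN", "Fed"): 0.5,
        ("VBZ", "raises"): 0.5, ("NNS", "raises"): 0.5}
prob, path = viterbi(["Fed", "raises"], tags, trans, emit)
```

Each position costs work proportional to the square of the number of states, so the total is linear in sentence length instead of exponential.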

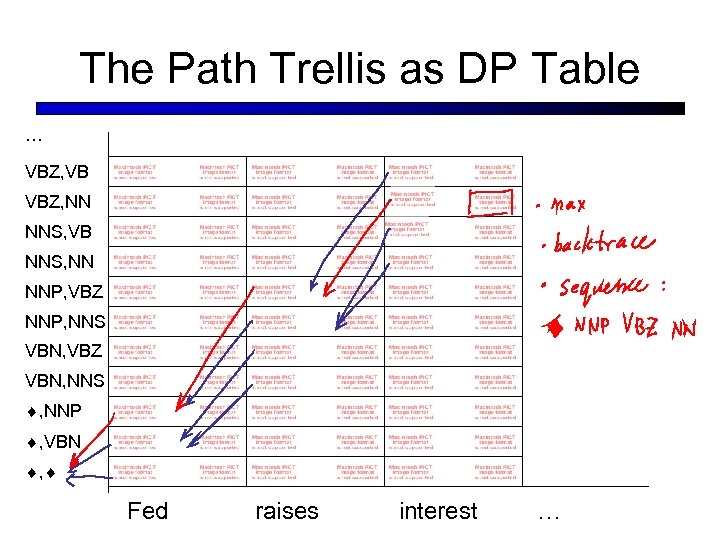

The Path Trellis as DP Table

- Rows (states): …, <VBZ, VB>, <VBZ, NN>, <NNS, VB>, <NNS, NN>, <NNP, VBZ>, <NNP, NNS>, <VBN, VBZ>, <VBN, NNS>, < , NNP>, < , VBN>, < , >
- Columns (words): Fed, raises, interest, …

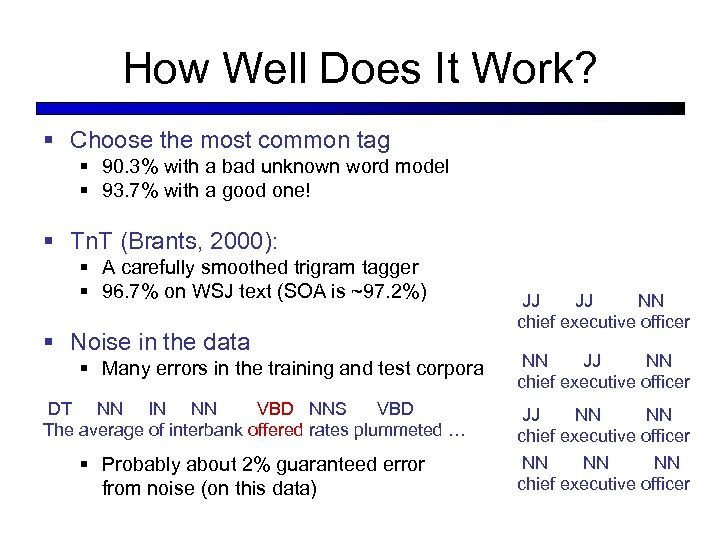

How Well Does It Work?

- Choose the most common tag
  - 90.3% with a bad unknown word model
  - 93.7% with a good one!
- TnT (Brants, 2000):
  - A carefully smoothed trigram tagger
  - 96.7% on WSJ text (SOA is ~97.2%)
- Noise in the data
  - Many errors in the training and test corpora
    - The/DT average/NN of/IN interbank/NN offered/VBD rates/NNS plummeted/VBD …
  - Probably about 2% guaranteed error from noise (on this data)
    - chief executive officer: JJ JJ NN
    - chief executive officer: NN JJ NN
    - chief executive officer: JJ NN NN
    - chief executive officer: NN NN NN
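The most-common-tag baseline is simple enough to sketch in a few lines. The version below assumes a deliberately crude unknown-word model (fall back to the overall most frequent tag), and the function names and toy corpus are invented for illustration.

```python
from collections import Counter, defaultdict

def train_baseline(tagged_sentences):
    """Record each word's most frequent tag, plus a default for unknown words."""
    counts = defaultdict(Counter)
    all_tags = Counter()
    for sentence in tagged_sentences:
        for word, tag in sentence:
            counts[word][tag] += 1
            all_tags[tag] += 1
    default = all_tags.most_common(1)[0][0]  # crude unknown-word model
    lexicon = {w: c.most_common(1)[0][0] for w, c in counts.items()}
    return lexicon, default

def tag_baseline(words, lexicon, default):
    """Tag each word independently, ignoring context entirely."""
    return [lexicon.get(w, default) for w in words]

# Toy corpus, invented for illustration only.
corpus = [
    [("the", "DT"), ("rates", "NNS")],
    [("rates", "NNS"), ("rise", "VBP")],
    [("he", "PRP"), ("rates", "VBZ"), ("films", "NNS")],
]
lexicon, default = train_baseline(corpus)
print(tag_baseline(["the", "rates", "plummeted"], lexicon, default))
```

The unseen word "plummeted" falls back to the default tag, which shows how much of the baseline's accuracy hinges on the unknown-word model.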

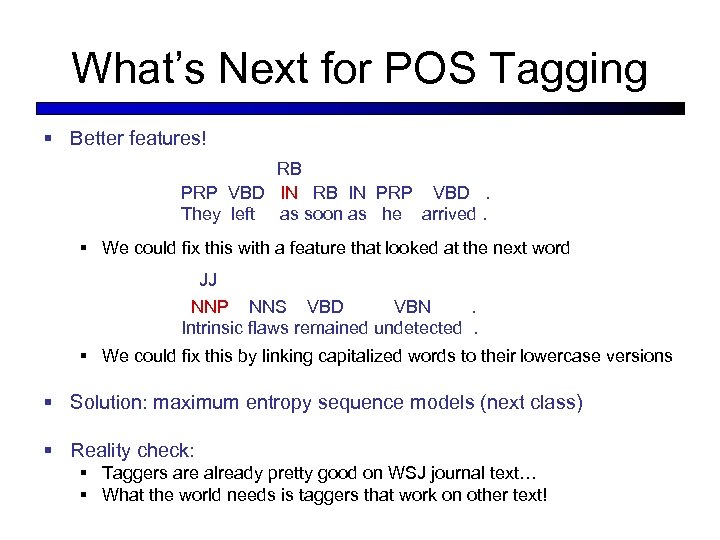

What’s Next for POS Tagging

- Better features!
  - They/PRP left/VBD as/{IN, RB} soon/RB as/IN he/PRP arrived/VBD ./.
    - We could fix this with a feature that looked at the next word
  - Intrinsic/{NNP, JJ} flaws/NNS remained/VBD undetected/VBN ./.
    - We could fix this by linking capitalized words to their lowercase versions
- Solution: maximum entropy sequence models (next class)
- Reality check:
  - Taggers are already pretty good on WSJ journal text…
  - What the world needs is taggers that work on other text!

HMMs as Language Models

- We have a generative model of tagged sentences: $P(t, w)$
- We can turn this into a distribution over sentences by summing over the tag sequences: $P(w) = \sum_{t} P(t, w)$
- Problem: too many sequences!
  - (And beam search isn’t going to help this time)

Summing over Paths

- Just like Viterbi, but with sum instead of max
- Recursive decomposition
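Replacing max with sum in the Viterbi recursion gives the forward pass, which computes the total probability of the sentence. The sketch below assumes a first-order (bigram) tag model and invented toy parameters; `alpha` is the name chosen here for the running table.

```python
def forward(words, tags, trans, emit, start="<s>"):
    """Probability of the words, summed over all tag sequences (bigram HMM)."""
    # alpha[t]: total probability of all paths so far that end in tag t.
    alpha = {t: trans.get((start, t), 0.0) * emit.get((t, words[0]), 0.0)
             for t in tags}
    for word in words[1:]:
        alpha = {t: sum(alpha[p] * trans.get((p, t), 0.0) for p in tags)
                    * emit.get((t, word), 0.0)
                 for t in tags}
    return sum(alpha.values())

# Toy parameters, invented for illustration only.
tags = ["NNP", "VBN", "NNS", "VBZ"]
trans = {("<s>", "NNP"): 0.6, ("<s>", "VBN"): 0.4,
         ("NNP", "VBZ"): 0.5, ("NNP", "NNS"): 0.3,
         ("VBN", "NNS"): 0.6, ("VBN", "VBZ"): 0.1}
emit = {("NNP", "Fed"): 0.5, ("VBN", "Fed"): 0.5,
        ("VBZ", "raises"): 0.5, ("NNS", "raises"): 0.5}
total = forward(["Fed", "raises"], tags, trans, emit)
```

For this toy model the result equals the sum of the four nonzero path probabilities (0.075 + 0.045 + 0.06 + 0.01), obtained without enumerating the paths.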

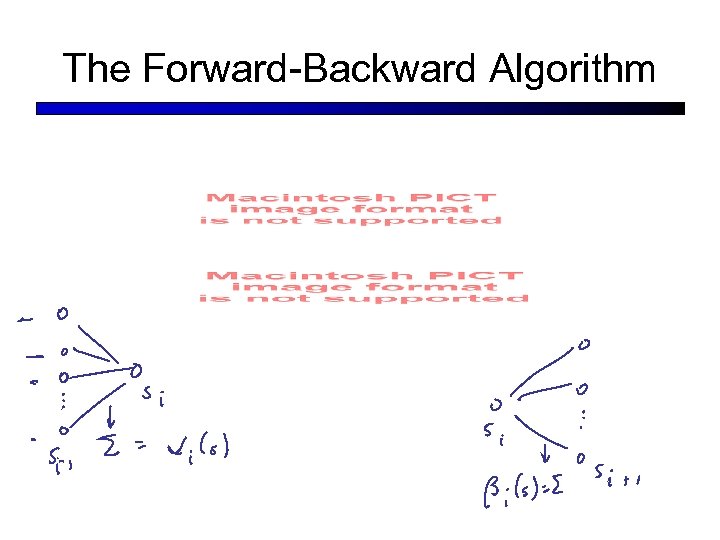

The Forward-Backward Algorithm

What Does This Buy Us?

- Why do we want forward and backward probabilities?
  - Lets us ask more questions
  - Like: what fraction of sequences contain tag t at position i
- Max-tag decoding:
  - Pick the tag at each point which has highest expectation
  - Raises accuracy a tiny bit
  - Bad idea in practice (why?)
- Also: Unsupervised learning of HMMs
  - At least in theory, more later…
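Combining forward and backward probabilities gives the posterior of each tag at each position, which is what max-tag decoding needs. The sketch below assumes a first-order (bigram) tag model and invented toy parameters; `posteriors` and `max_tag_decode` are names chosen for illustration.

```python
def posteriors(words, tags, trans, emit, start="<s>"):
    """P(tag at position i | words) for every i, via forward-backward."""
    n = len(words)
    alpha = [{t: trans.get((start, t), 0.0) * emit.get((t, words[0]), 0.0)
              for t in tags}]
    for i in range(1, n):
        alpha.append({t: sum(alpha[i - 1][p] * trans.get((p, t), 0.0)
                             for p in tags) * emit.get((t, words[i]), 0.0)
                      for t in tags})
    beta = [None] * n
    beta[n - 1] = {t: 1.0 for t in tags}
    for i in range(n - 2, -1, -1):
        beta[i] = {t: sum(trans.get((t, q), 0.0)
                          * emit.get((q, words[i + 1]), 0.0)
                          * beta[i + 1][q] for q in tags)
                   for t in tags}
    total = sum(alpha[n - 1].values())  # probability of the whole sentence
    return [{t: alpha[i][t] * beta[i][t] / total for t in tags}
            for i in range(n)]

def max_tag_decode(words, tags, trans, emit):
    """Pick, independently at each position, the tag with highest posterior."""
    return [max(post, key=post.get)
            for post in posteriors(words, tags, trans, emit)]

# Toy parameters, invented for illustration only.
tags = ["NNP", "VBN", "NNS", "VBZ"]
trans = {("<s>", "NNP"): 0.6, ("<s>", "VBN"): 0.4,
         ("NNP", "VBZ"): 0.5, ("NNP", "NNS"): 0.3,
         ("VBN", "NNS"): 0.6, ("VBN", "VBZ"): 0.1}
emit = {("NNP", "Fed"): 0.5, ("VBN", "Fed"): 0.5,
        ("VBZ", "raises"): 0.5, ("NNS", "raises"): 0.5}
decoded = max_tag_decode(["Fed", "raises"], tags, trans, emit)
```

On this toy model max-tag decoding picks NNP then NNS, a sequence with joint probability 0.045, while the single best path is NNP then VBZ at 0.075. That mismatch hints at one answer to the slide's "why?": the per-position winners need not form a good sequence, or even a possible one.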

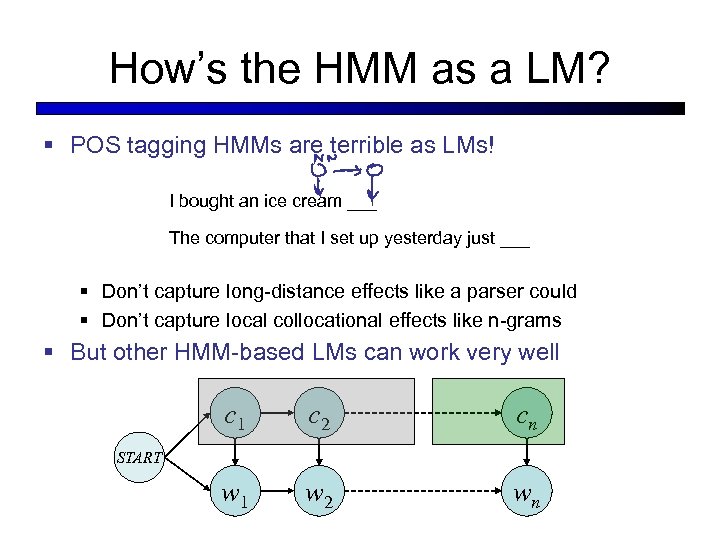

How’s the HMM as a LM?

- POS tagging HMMs are terrible as LMs!
  - I bought an ice cream ___
  - The computer that I set up yesterday just ___
- Don’t capture long-distance effects like a parser could
- Don’t capture local collocational effects like n-grams
- But other HMM-based LMs can work very well
  - Figure: START → c1 → c2 → … → cn, with each ci emitting wi

Next Time

- Better Tagging Features using Maxent
  - Dealing with unknown words
  - Adjacent words
  - Longer-distance features
- Soon: Named-Entity Recognition
