88dd7930d3cfc779f03253d1edffe13e.ppt

- Number of slides: 74

CS 60057 Speech & Natural Language Processing, Autumn 2007, Lecture 14 b, 24 August 2007. Lecture 1, 7/21/2005 Natural Language Processing 1


LING 180 SYMBSYS 138: Intro to Computer Speech and Language Processing. Lecture 13: Machine Translation (II). November 9, 2006, Dan Jurafsky. Thanks to Kevin Knight for much of this material; many slides also came from Bonnie Dorr and Christof Monz!


Outline for MT Week

- Intro and a little history
- Language Similarities and Divergences
- Four main MT Approaches
  - Transfer
  - Interlingua
  - Direct
  - Statistical
- Evaluation


![Centauri/Arcturan [Knight, 1997]](https://present5.com/presentation/88dd7930d3cfc779f03253d1edffe13e/image-4.jpg)

Centauri/Arcturan [Knight, 1997]

Your assignment, translate this to Arcturan: farok crrrok hihok yorok clok kantok ok-yurp


![Centauri/Arcturan [Knight, 1997]](https://present5.com/presentation/88dd7930d3cfc779f03253d1edffe13e/image-5.jpg)

Centauri/Arcturan [Knight, 1997]

Your assignment, translate this to Arcturan: farok crrrok hihok yorok clok kantok ok-yurp

| # | Centauri (a) | Arcturan (b) |
|---|---|---|
| 1 | ok-voon ororok sprok. | at-voon bichat dat. |
| 2 | ok-drubel ok-voon anok plok sprok. | at-drubel at-voon pippat rrat dat. |
| 3 | erok sprok izok hihok ghirok. | totat dat arrat vat hilat. |
| 4 | ok-voon anok drok brok jok. | at-voon krat pippat sat lat. |
| 5 | wiwok farok izok stok. | totat jjat quat cat. |
| 6 | lalok sprok izok jok stok. | wat dat krat quat cat. |
| 7 | lalok farok ororok lalok sprok izok enemok. | wat jjat bichat wat dat vat eneat. |
| 8 | lalok brok anok plok nok. | iat lat pippat rrat nnat. |
| 9 | wiwok nok izok kantok ok-yurp. | totat nnat quat oloat at-yurp. |
| 10 | lalok mok nok yorok ghirok clok. | wat nnat gat mat bat hilat. |
| 11 | lalok nok crrrok hihok yorok zanzanok. | wat nnat arrat mat zanzanat. |
| 12 | lalok rarok nok izok hihok mok. | wat nnat forat arrat vat gat. |

Slide from Kevin Knight

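The hand reasoning on these slides (find the Arcturan word that shows up every time a given Centauri word does) can be sketched as a set intersection over sentence pairs. This is a minimal Python illustration under that assumption, not Knight's own procedure; `CORPUS` and `candidate_translations` are hypothetical names, and the data are the twelve sentence pairs of the Centauri/Arcturan corpus.

```python
# Parallel corpus from the Centauri/Arcturan slides (Knight, 1997):
# (Centauri sentence, Arcturan sentence), final periods dropped.
CORPUS = [
    ("ok-voon ororok sprok", "at-voon bichat dat"),
    ("ok-drubel ok-voon anok plok sprok", "at-drubel at-voon pippat rrat dat"),
    ("erok sprok izok hihok ghirok", "totat dat arrat vat hilat"),
    ("ok-voon anok drok brok jok", "at-voon krat pippat sat lat"),
    ("wiwok farok izok stok", "totat jjat quat cat"),
    ("lalok sprok izok jok stok", "wat dat krat quat cat"),
    ("lalok farok ororok lalok sprok izok enemok", "wat jjat bichat wat dat vat eneat"),
    ("lalok brok anok plok nok", "iat lat pippat rrat nnat"),
    ("wiwok nok izok kantok ok-yurp", "totat nnat quat oloat at-yurp"),
    ("lalok mok nok yorok ghirok clok", "wat nnat gat mat bat hilat"),
    ("lalok nok crrrok hihok yorok zanzanok", "wat nnat arrat mat zanzanat"),
    ("lalok rarok nok izok hihok mok", "wat nnat forat arrat vat gat"),
]


def candidate_translations(corpus):
    """Map each Centauri word to the Arcturan words that co-occur with it
    in *every* sentence pair where it appears (set intersection)."""
    candidates = {}
    for src, tgt in corpus:
        tgt_words = set(tgt.split())
        for word in set(src.split()):
            if word in candidates:
                candidates[word] &= tgt_words  # keep only words seen every time
            else:
                candidates[word] = set(tgt_words)
    return candidates


if __name__ == "__main__":
    cands = candidate_translations(CORPUS)
    for word in "farok crrrok hihok yorok clok kantok ok-yurp".split():
        print(word, sorted(cands[word]))
```

On this corpus the intersection already pins down farok -> jjat, hihok -> arrat, sprok -> dat and nok -> nnat, while yorok is left with {wat, nnat, mat}. It also shows the heuristic's limit: lalok ends up with an empty set because sentence 8b contains no wat, so the remaining ambiguity still needs reasoning such as elimination and cognates.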

![Centauri/Arcturan [Knight, 1997]](https://present5.com/presentation/88dd7930d3cfc779f03253d1edffe13e/image-6.jpg)

Centauri/Arcturan [Knight, 1997]

Your assignment, translate this to Arcturan: farok crrrok hihok yorok clok kantok ok-yurp

Slide from Kevin Knight


![Centauri/Arcturan [Knight, 1997]](https://present5.com/presentation/88dd7930d3cfc779f03253d1edffe13e/image-7.jpg)

Centauri/Arcturan [Knight, 1997]

Your assignment, translate this to Arcturan: farok crrrok hihok yorok clok kantok ok-yurp

Slide from Kevin Knight


![Centauri/Arcturan [Knight, 1997]](https://present5.com/presentation/88dd7930d3cfc779f03253d1edffe13e/image-8.jpg)

Centauri/Arcturan [Knight, 1997]

Your assignment, translate this to Arcturan: farok crrrok hihok yorok clok kantok ok-yurp

? ? ?

Slide from Kevin Knight


![Centauri/Arcturan [Knight, 1997]](https://present5.com/presentation/88dd7930d3cfc779f03253d1edffe13e/image-9.jpg)

Centauri/Arcturan [Knight, 1997]

Your assignment, translate this to Arcturan: farok crrrok hihok yorok clok kantok ok-yurp

Slide from Kevin Knight


![Centauri/Arcturan [Knight, 1997]](https://present5.com/presentation/88dd7930d3cfc779f03253d1edffe13e/image-10.jpg)

Centauri/Arcturan [Knight, 1997]

Your assignment, translate this to Arcturan: farok crrrok hihok yorok clok kantok ok-yurp


![Centauri/Arcturan [Knight, 1997]](https://present5.com/presentation/88dd7930d3cfc779f03253d1edffe13e/image-11.jpg)

Centauri/Arcturan [Knight, 1997]

Your assignment, translate this to Arcturan: farok crrrok hihok yorok clok kantok ok-yurp

Slide from Kevin Knight


![Centauri/Arcturan [Knight, 1997]](https://present5.com/presentation/88dd7930d3cfc779f03253d1edffe13e/image-12.jpg)

Centauri/Arcturan [Knight, 1997]

Your assignment, translate this to Arcturan: farok crrrok hihok yorok clok kantok ok-yurp

? ? ?


![Centauri/Arcturan [Knight, 1997]](https://present5.com/presentation/88dd7930d3cfc779f03253d1edffe13e/image-13.jpg)

Centauri/Arcturan [Knight, 1997]

Your assignment, translate this to Arcturan: farok crrrok hihok yorok clok kantok ok-yurp

Slide from Kevin Knight


![Centauri/Arcturan [Knight, 1997]](https://present5.com/presentation/88dd7930d3cfc779f03253d1edffe13e/image-14.jpg)

Centauri/Arcturan [Knight, 1997]

Your assignment, translate this to Arcturan: farok crrrok hihok yorok clok kantok ok-yurp

process of elimination


![Centauri/Arcturan [Knight, 1997]](https://present5.com/presentation/88dd7930d3cfc779f03253d1edffe13e/image-15.jpg)

Centauri/Arcturan [Knight, 1997]

Your assignment, translate this to Arcturan: farok crrrok hihok yorok clok kantok ok-yurp

cognate?

Slide from Kevin Knight

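The "cognate?" hint (zanzanok in 11a, zanzanat in 11b) can be made mechanical with a string-similarity score. One common choice for spotting cognates is the longest common subsequence ratio (LCSR): the length of the longest common subsequence divided by the length of the longer word. The sketch below is a minimal illustration under that assumption; `lcs_length` and `lcsr` are hypothetical helper names.

```python
def lcs_length(a: str, b: str) -> int:
    """Length of the longest common subsequence of a and b (dynamic programming)."""
    prev = [0] * (len(b) + 1)
    for ch_a in a:
        cur = [0]
        for j, ch_b in enumerate(b, start=1):
            if ch_a == ch_b:
                cur.append(prev[j - 1] + 1)  # extend the diagonal match
            else:
                cur.append(max(prev[j], cur[j - 1]))  # best of skipping either char
        prev = cur
    return prev[-1]


def lcsr(a: str, b: str) -> float:
    """Longest common subsequence ratio: LCS length / length of the longer word."""
    longest = max(len(a), len(b))
    return lcs_length(a, b) / longest if longest else 0.0


if __name__ == "__main__":
    # Arcturan words of sentence 11b, scored against the Centauri word zanzanok.
    for w in ["wat", "nnat", "arrat", "mat", "zanzanat"]:
        print(w, round(lcsr("zanzanok", w), 3))
```

Among the Arcturan words of 11b, zanzanat scores highest (LCS "zanzan", 6 of 8 characters, so 0.75); the other words share at most two characters with zanzanok.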

![Centauri/Arcturan [Knight, 1997]](https://present5.com/presentation/88dd7930d3cfc779f03253d1edffe13e/image-16.jpg)

Centauri/Arcturan [Knight, 1997]

Your assignment, put these words in order: { jjat, arrat, mat, bat, oloat, at-yurp }

zero fertility

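Ordering the six words can be approximated by preferring orders whose adjacent word pairs actually occur in the Arcturan sentences. The sketch below is an assumption-laden illustration, not the method from the slides: it scores each order by raw bigram counts with hypothetical sentence-boundary markers `<s>` and `</s>`, and brute-forces all 6! = 720 permutations. `bigram_counts` and `best_orders` are hypothetical names.

```python
from collections import Counter
from itertools import permutations

# Arcturan (b) side of the twelve sentence pairs, final periods dropped.
ARCTURAN = [
    "at-voon bichat dat",
    "at-drubel at-voon pippat rrat dat",
    "totat dat arrat vat hilat",
    "at-voon krat pippat sat lat",
    "totat jjat quat cat",
    "wat dat krat quat cat",
    "wat jjat bichat wat dat vat eneat",
    "iat lat pippat rrat nnat",
    "totat nnat quat oloat at-yurp",
    "wat nnat gat mat bat hilat",
    "wat nnat arrat mat zanzanat",
    "wat nnat forat arrat vat gat",
]


def bigram_counts(sentences):
    """Count adjacent word pairs, padding each sentence with <s> and </s>."""
    counts = Counter()
    for s in sentences:
        toks = ["<s>"] + s.split() + ["</s>"]
        counts.update(zip(toks, toks[1:]))
    return counts


def best_orders(words, counts):
    """Return the top score and every permutation of `words` that reaches it."""
    def score(order):
        toks = ("<s>",) + order + ("</s>",)
        return sum(counts[pair] for pair in zip(toks, toks[1:]))

    scored = [(score(p), p) for p in permutations(words)]
    top = max(s for s, _ in scored)
    return top, [p for s, p in scored if s == top]


if __name__ == "__main__":
    top, orders = best_orders(
        ["jjat", "arrat", "mat", "bat", "oloat", "at-yurp"], bigram_counts(ARCTURAN)
    )
    print(top)
    for order in orders:
        print(" ".join(order))
```

On this corpus the best score is 4 (arrat mat, mat bat, oloat at-yurp, and at-yurp at sentence end), and it is shared by two orders: "jjat arrat mat bat oloat at-yurp" and "arrat mat bat jjat oloat at-yurp". The first mirrors the word order of the Spanish sentence "Clientes no venden medicinas en Europa"; the counts alone cannot break the tie.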

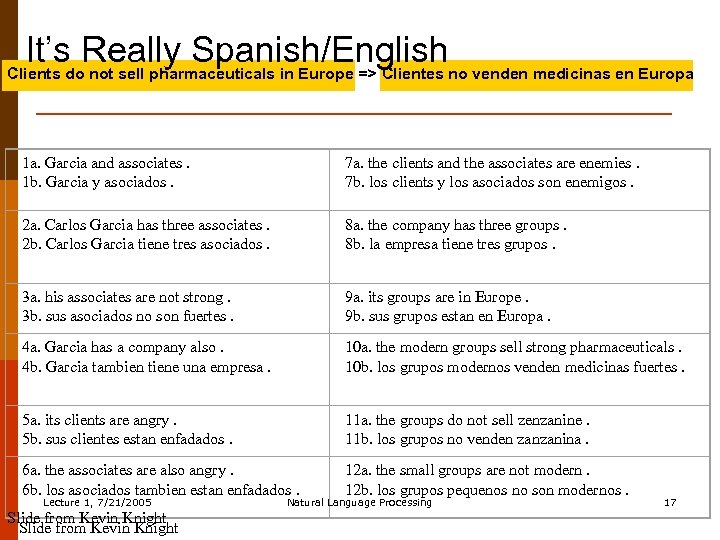

It's Really Spanish/English

Clients do not sell pharmaceuticals in Europe => Clientes no venden medicinas en Europa

| # | English (a) | Spanish (b) |
|---|---|---|
| 1 | Garcia and associates. | Garcia y asociados. |
| 2 | Carlos Garcia has three associates. | Carlos Garcia tiene tres asociados. |
| 3 | his associates are not strong. | sus asociados no son fuertes. |
| 4 | Garcia has a company also. | Garcia tambien tiene una empresa. |
| 5 | its clients are angry. | sus clientes estan enfadados. |
| 6 | the associates are also angry. | los asociados tambien estan enfadados. |
| 7 | the clients and the associates are enemies. | los clientes y los asociados son enemigos. |
| 8 | the company has three groups. | la empresa tiene tres grupos. |
| 9 | its groups are in Europe. | sus grupos estan en Europa. |
| 10 | the modern groups sell strong pharmaceuticals. | los grupos modernos venden medicinas fuertes. |
| 11 | the groups do not sell zenzanine. | los grupos no venden zanzanina. |
| 12 | the small groups are not modern. | los grupos pequenos no son modernos. |

Slide from Kevin Knight


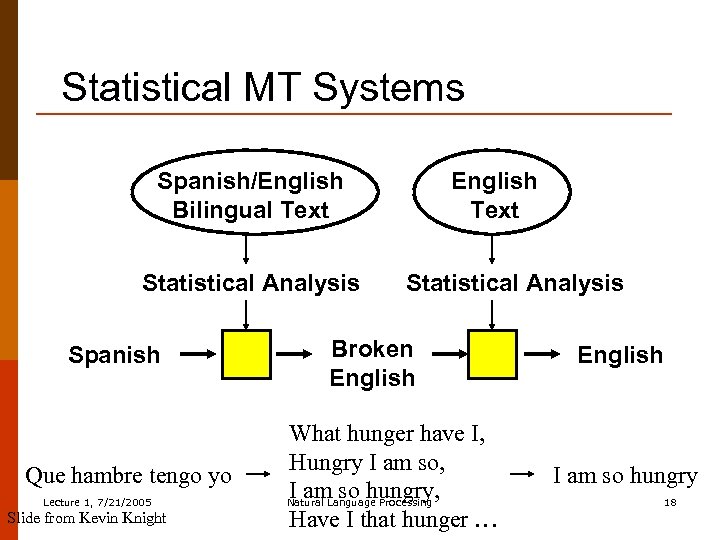

Statistical MT Systems (Slide from Kevin Knight)

- Spanish/English bilingual text -> statistical analysis
- English text -> statistical analysis
- Spanish: Que hambre tengo yo
- Broken English: What hunger have I, Hungry I am so, I am so hungry, Have I that hunger …
- English: I am so hungry

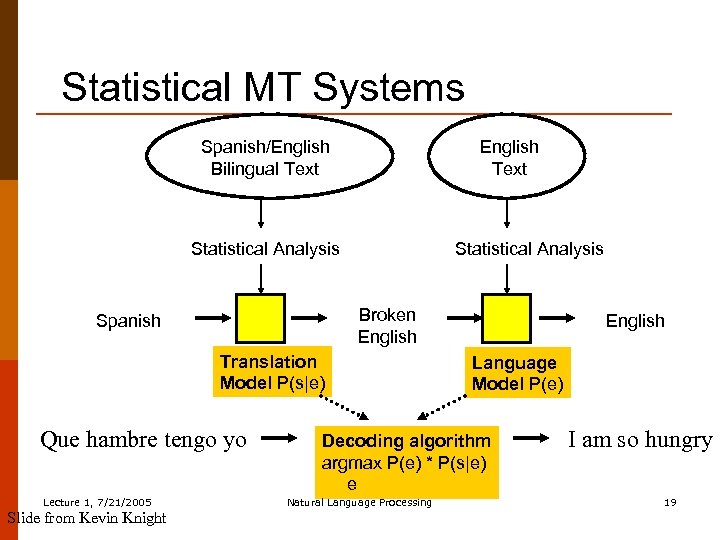

Statistical MT Systems (Slide from Kevin Knight)

- Spanish/English bilingual text -> statistical analysis -> translation model $P(s \mid e)$
- English text -> statistical analysis -> language model $P(e)$
- Decoding algorithm: $\hat{e} = \arg\max_e P(e) \cdot P(s \mid e)$
- Example: Spanish "Que hambre tengo yo" -> broken English -> English "I am so hungry"
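
The decoding rule can be illustrated with the candidate list from the previous slide. A minimal Python sketch; the probabilities below are hypothetical numbers chosen only to show how the two models interact, not outputs of any trained model.

```python
import math

# Hypothetical scores for the slide's candidates: (P(e), P(s|e)).
# Illustrative numbers only, not the output of any trained model.
CANDIDATES = {
    "What hunger have I": (1e-9, 3e-4),
    "Hungry I am so": (1e-8, 2e-4),
    "I am so hungry": (5e-6, 1e-4),
    "Have I that hunger": (2e-9, 2e-4),
}


def decode(candidates):
    """Noisy-channel rule: argmax_e P(e) * P(s|e), computed as a sum of logs."""
    return max(candidates,
               key=lambda e: math.log(candidates[e][0]) + math.log(candidates[e][1]))


print(decode(CANDIDATES))  # I am so hungry
```

With these numbers, ranking by $P(s \mid e)$ alone would pick "What hunger have I"; multiplying in the language model score moves the fluent "I am so hungry" to the top. Summing logs instead of multiplying avoids underflow when real probabilities are tiny.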

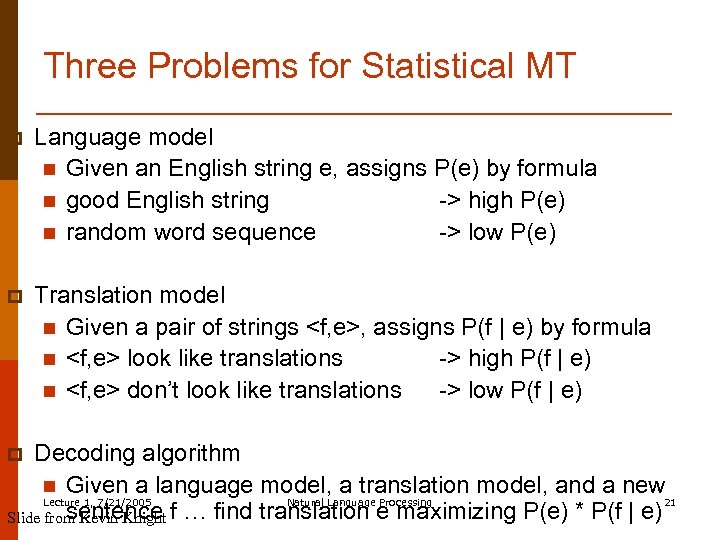

Three Problems for Statistical MT

- Language model
  - Given an English string e, assigns P(e) by formula
  - good English string -> high P(e)
  - random word sequence -> low P(e)
- Translation model
  - Given a pair of strings

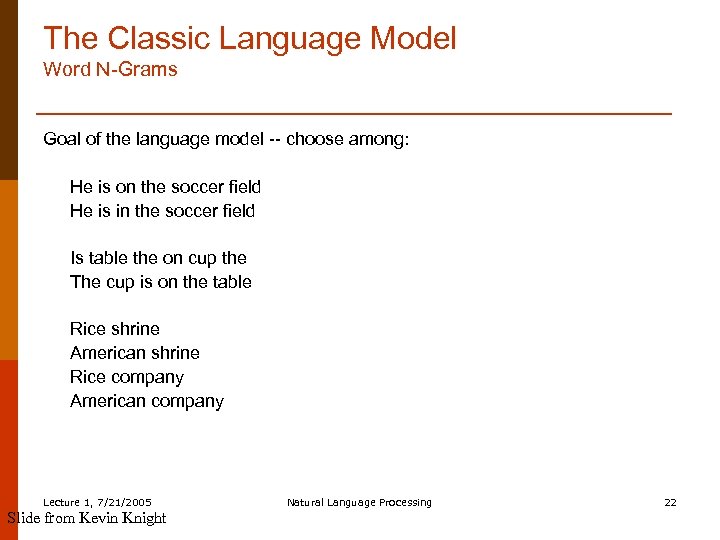

The Classic Language Model: Word N-Grams (Slide from Kevin Knight)

Goal of the language model: choose among
- He is on the soccer field / He is in the soccer field
- Is table the on cup the / The cup is on the table
- Rice shrine / American shrine / Rice company / American company
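
A word bigram model makes these choices concrete. A minimal Python sketch, assuming add-one (Laplace) smoothing and a three-sentence training corpus invented for illustration; real language models are trained on far more text and use better smoothing.

```python
import math
from collections import Counter


def train_bigram_lm(sentences):
    """Train an add-one (Laplace) smoothed word bigram model.
    Returns a function mapping a sentence to its log probability."""
    history_counts, bigram_counts = Counter(), Counter()
    vocab = set()
    for s in sentences:
        tokens = ["<s>"] + s.lower().split() + ["</s>"]
        vocab.update(tokens[1:])            # possible next words, incl. </s>
        history_counts.update(tokens[:-1])  # how often each word starts a bigram
        bigram_counts.update(zip(tokens, tokens[1:]))
    V = len(vocab)

    def logprob(sentence):
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        return sum(math.log((bigram_counts[(h, w)] + 1) / (history_counts[h] + V))
                   for h, w in zip(tokens, tokens[1:]))

    return logprob


# A tiny, hypothetical training corpus.
lm = train_bigram_lm([
    "the cup is on the table",
    "he is on the soccer field",
    "the book is on the table",
])
print(lm("The cup is on the table") > lm("Is table the on cup the"))  # True
```

The preferred sentences win because they reuse bigrams seen in training ("is on", "on the"), while the scrambled or unusual ones fall back on the smoothed probability of unseen bigrams.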

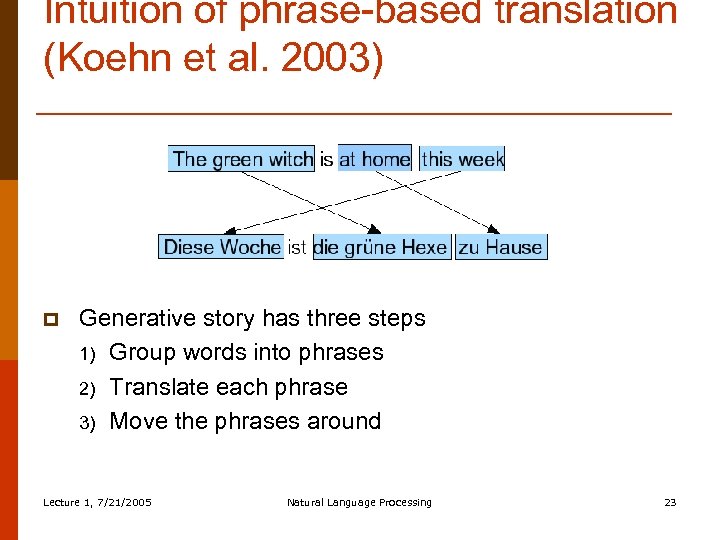

Intuition of phrase-based translation (Koehn et al. 2003)

The generative story has three steps:
1) Group words into phrases
2) Translate each phrase
3) Move the phrases around

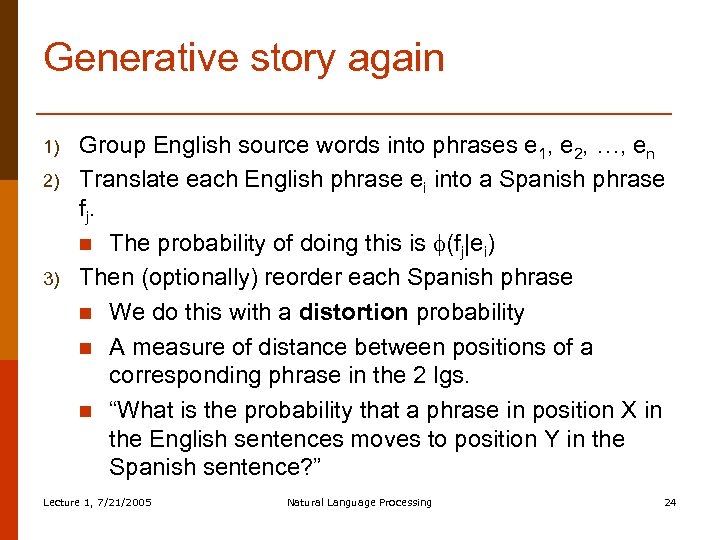

Generative story again

1) Group the English source words into phrases $e_1, e_2, \ldots, e_n$.
2) Translate each English phrase $e_i$ into a Spanish phrase $f_j$. The probability of doing this is $\phi(f_j \mid e_i)$.
3) Then (optionally) reorder each Spanish phrase.
   - We do this with a distortion probability: a measure of the distance between the positions of a corresponding phrase in the two languages.
   - "What is the probability that a phrase in position X in the English sentence moves to position Y in the Spanish sentence?"

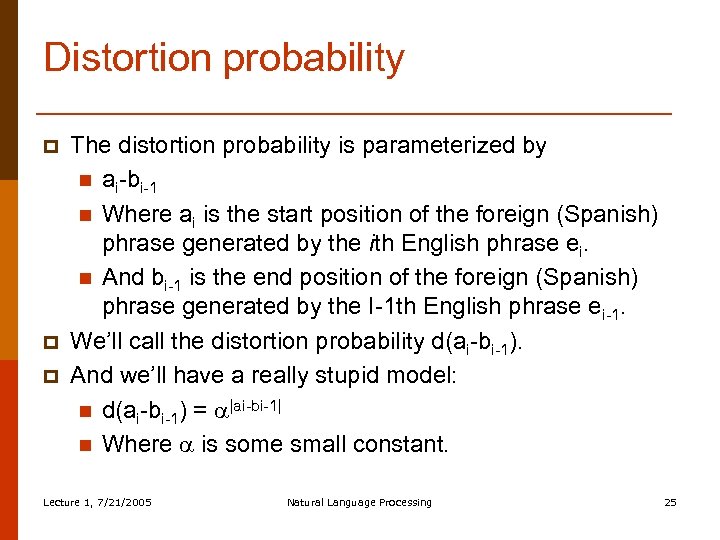

Distortion probability

- The distortion probability is parameterized by $a_i - b_{i-1}$, where
  - $a_i$ is the start position of the foreign (Spanish) phrase generated by the $i$th English phrase $e_i$, and
  - $b_{i-1}$ is the end position of the foreign (Spanish) phrase generated by the $(i-1)$th English phrase $e_{i-1}$.
- We'll call the distortion probability $d(a_i - b_{i-1})$.
- And we'll have a really stupid model: $d(a_i - b_{i-1}) = \alpha^{|a_i - b_{i-1}|}$, where $\alpha$ is some small constant.
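
The distortion model above fits in one line. A minimal Python sketch; `alpha=0.5` is an arbitrary illustrative value, not one given in the lecture.

```python
def distortion(a_i, b_prev, alpha=0.5):
    """Distortion model from the slide: d(a_i - b_{i-1}) = alpha ** |a_i - b_{i-1}|.

    a_i    -- start position of the Spanish phrase generated by English phrase i
    b_prev -- end position of the Spanish phrase generated by English phrase i-1
    alpha  -- small constant; 0.5 is an arbitrary illustrative value
    """
    return alpha ** abs(a_i - b_prev)


print(distortion(5, 4))  # 0.5   (phrase starts right after the previous one)
print(distortion(2, 5))  # 0.125 (jump back three positions)
```

With this formula even a perfectly monotone step ($a_i = b_{i-1} + 1$) pays one factor of $\alpha$; Koehn et al. (2003) write the exponent as $|a_i - b_{i-1} - 1|$ so that monotone steps cost nothing.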

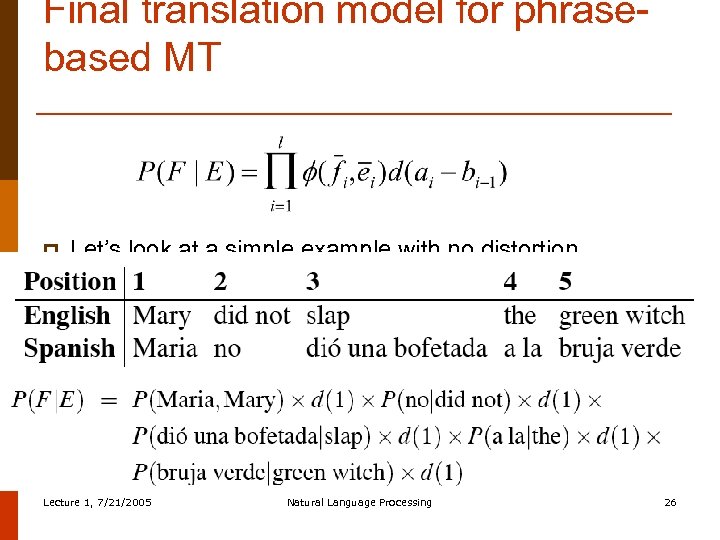

Final translation model for phrase-based MT

- Let's look at a simple example with no distortion.
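
Assuming that with no distortion every distortion factor is taken to be 1, $P(F \mid E)$ reduces to a product of phrase translation probabilities, $P(F \mid E) = \prod_i \phi(f_i \mid e_i)$. A minimal Python sketch; the segmentation of "Mary did not slap the green witch" and every probability in `PHI` are hypothetical.

```python
import math

# Hypothetical phrase segmentation of "Mary did not slap the green witch"
# and made-up phrase translation probabilities phi(f | e).
PHI = {
    ("Mary", "Maria"): 0.9,
    ("did not", "no"): 0.7,
    ("slap", "dió una bofetada a"): 0.3,
    ("the", "la"): 0.8,
    ("green witch", "bruja verde"): 0.6,
}


def phrase_model_prob(phrase_pairs, phi):
    """P(F|E) when there is no distortion: each distortion factor is taken
    to be 1, leaving the product of phi(f_i | e_i) over the phrase pairs."""
    return math.prod(phi[pair] for pair in phrase_pairs)


print(phrase_model_prob(list(PHI), PHI))  # approximately 0.0907
```

`math.prod` needs Python 3.8 or later.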

Phrase-based MT

- Language model P(E)
- Translation model P(F|E)
  - Model
  - How to train the model
- Decoder: finding the sentence E that is most probable

Training P(F|E)

- What we mainly need to train is $\phi(f_j \mid e_i)$.
- Suppose we had a large bilingual training corpus
  - A bitext
  - In which each English sentence is paired with a Spanish sentence
- And suppose we knew exactly which phrase in Spanish was the translation of which phrase in the English.
  - We call this a phrase alignment.
- If we had this, we could just count-and-divide: $\phi(f \mid e) = \frac{\text{count}(f, e)}{\sum_{f'} \text{count}(f', e)}$
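
Given a phrase-aligned bitext, count-and-divide is a relative-frequency estimate. A minimal Python sketch; the phrase pairs are a hypothetical list standing in for phrases read off a real phrase alignment.

```python
from collections import Counter


def estimate_phi(phrase_pairs):
    """Count-and-divide estimate from (english_phrase, spanish_phrase) pairs:
    phi[(e, f)] = count(e, f) / count(e), i.e. an estimate of phi(f | e)."""
    pair_counts = Counter(phrase_pairs)
    e_counts = Counter(e for e, _ in phrase_pairs)
    return {(e, f): c / e_counts[e] for (e, f), c in pair_counts.items()}


# Hypothetical phrase pairs, as if read off a phrase-aligned bitext.
PHRASE_PAIRS = [("green witch", "bruja verde")] * 3 + [
    ("green witch", "verde bruja"),
    ("did not", "no"),
]
phi = estimate_phi(PHRASE_PAIRS)
print(phi[("green witch", "bruja verde")])  # 0.75
```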

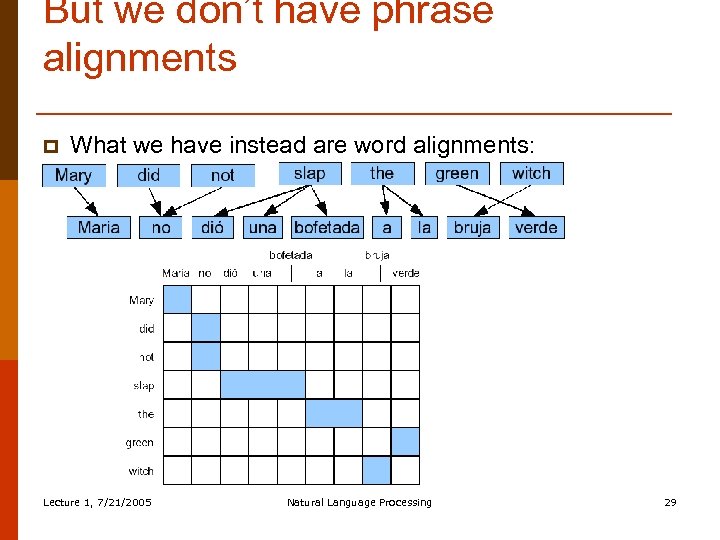

But we don't have phrase alignments

- What we have instead are word alignments:

Getting phrase alignments

To get phrase alignments:
1) We first get word alignments.
2) Then we "symmetrize" the word alignments into phrase alignments.
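
One common way to symmetrize is to align in both directions and combine the two link sets. A minimal Python sketch showing only the two simplest combinations, intersection and union, on hypothetical links for "Mary did not slap" / "Maria no dió una bofetada"; practical systems grow the intersection toward the union with heuristics and then extract phrases, neither of which is shown here.

```python
def symmetrize(spanish_to_english, english_to_spanish):
    """Combine two directional word alignments, each a set of
    (english_index, spanish_index) links."""
    return {
        "intersection": spanish_to_english & english_to_spanish,  # fewer, surer links
        "union": spanish_to_english | english_to_spanish,         # more links
    }


# Hypothetical links; English: Mary(0) did(1) not(2) slap(3)
#                    Spanish: Maria(0) no(1) dió(2) una(3) bofetada(4)
# Each Spanish word picks one English word:
S2E = {(0, 0), (2, 1), (3, 2), (3, 3), (3, 4)}
# Each English word picks one Spanish word:
E2S = {(0, 0), (1, 1), (2, 1), (3, 4)}

result = symmetrize(S2E, E2S)
print(sorted(result["intersection"]))  # [(0, 0), (2, 1), (3, 4)]
```

Neither direction alone captures "did not -> no" and "slap -> dió una bofetada" together; the union does, which is why the combined alignment is a better basis for extracting phrases.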

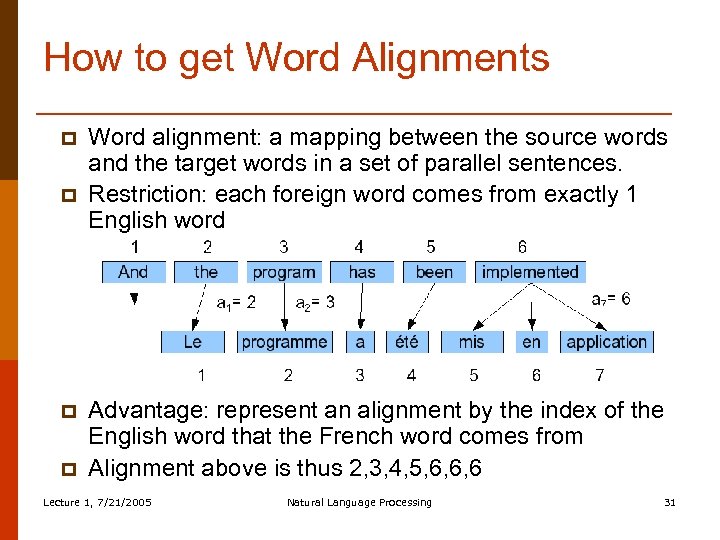

How to get word alignments

- Word alignment: a mapping between the source words and the target words in a set of parallel sentences.
- Restriction: each foreign word comes from exactly one English word.
- Advantage: we can represent an alignment by the index of the English word that each French word comes from.
- The alignment above is thus 2, 3, 4, 5, 6, 6, 6.
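
The alignment-vector representation is easy to decode back into word links. A minimal Python sketch; since the slide's figure is not reproduced here, the sentence pair is assumed to be the well-known example from the IBM papers (Brown et al.), which has exactly the alignment 2, 3, 4, 5, 6, 6, 6. Index 0 is reserved for the NULL word $e_0$ used to model spurious words.

```python
def alignment_links(alignment, english, foreign):
    """Turn an alignment vector into (foreign word, English word) pairs.
    alignment[j] is the 1-based index of the English word that foreign
    word j comes from; index 0 is the NULL word e_0."""
    english = ["NULL"] + english
    return [(f, english[a]) for f, a in zip(foreign, alignment)]


E = "And the program has been implemented".split()
F = "Le programme a été mis en application".split()
for pair in alignment_links([2, 3, 4, 5, 6, 6, 6], E, F):
    print(pair)
```

The three trailing 6s say that "mis", "en" and "application" all come from "implemented": one English word can generate several foreign words, but never the reverse.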

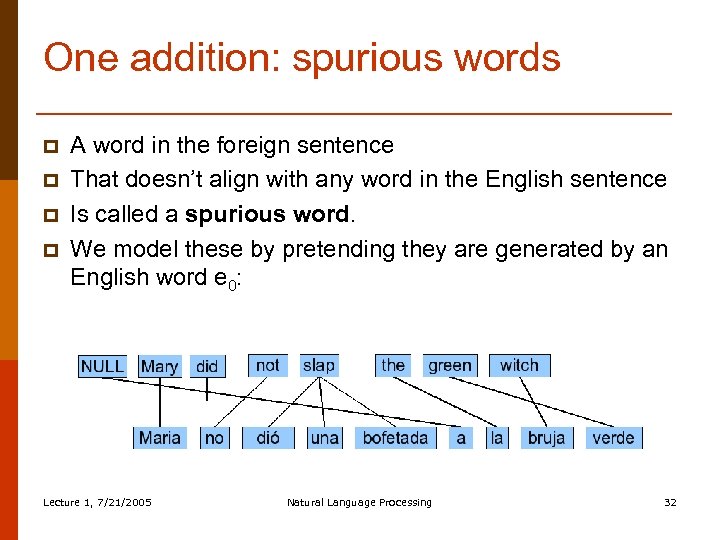

One addition: spurious words

- A word in the foreign sentence that doesn't align with any word in the English sentence is called a spurious word.
- We model these by pretending they are generated by an English word $e_0$ (the NULL word):

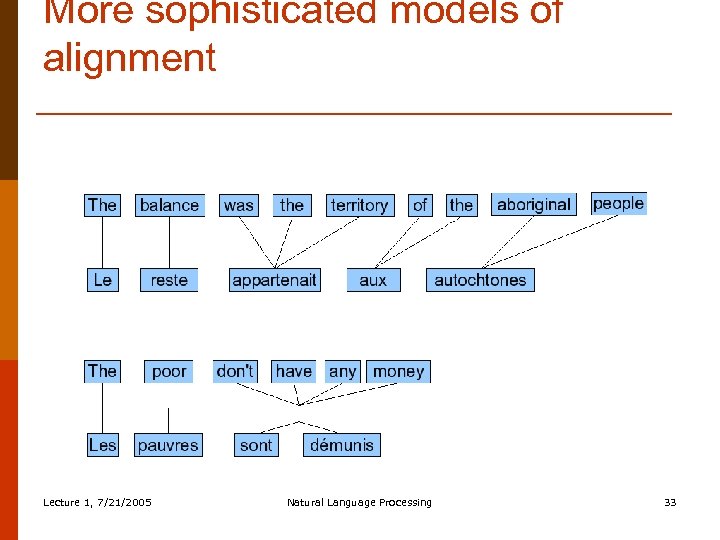

More sophisticated models of alignment

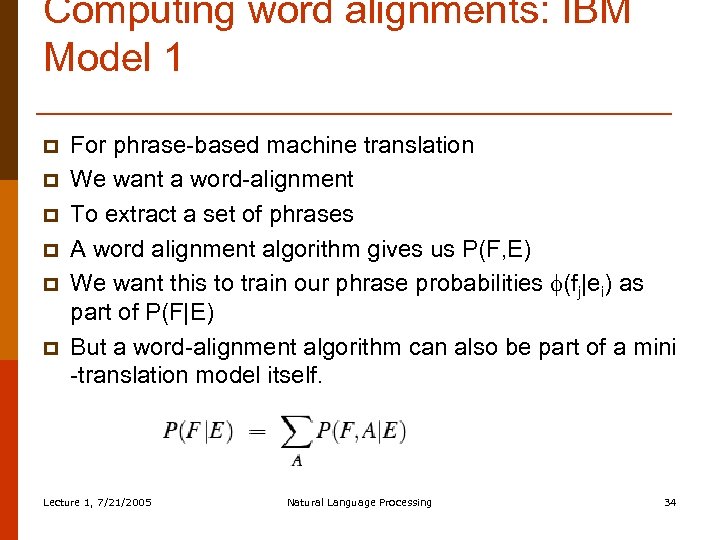

Computing word alignments: IBM Model 1

- For phrase-based machine translation, we want a word alignment from which to extract a set of phrases.
- A word alignment algorithm gives us P(F,E).
- We want this to train our phrase probabilities $\phi(f_j \mid e_i)$ as part of P(F|E).
- But a word-alignment algorithm can also be part of a mini-translation model itself.

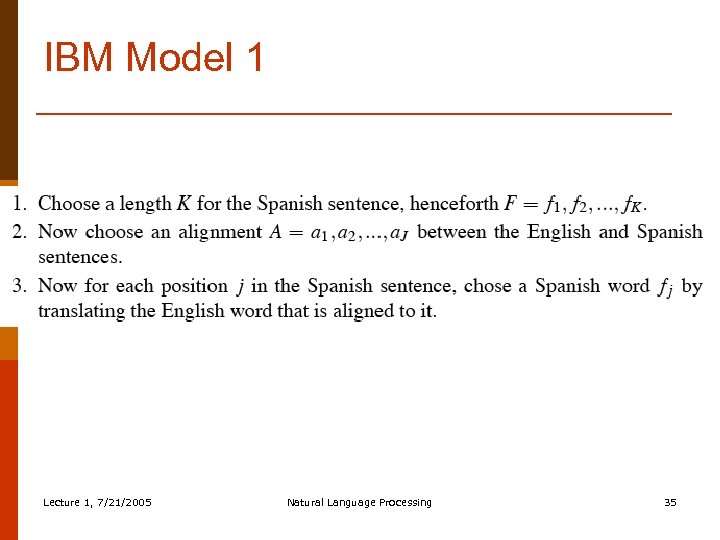

IBM Model 1

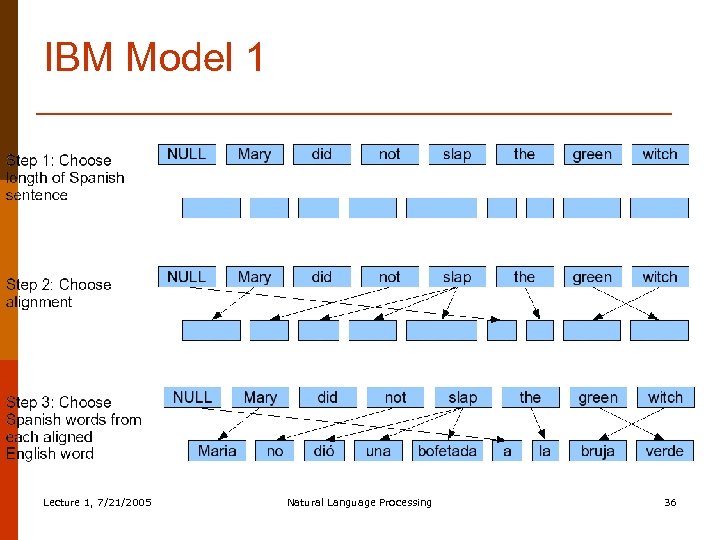

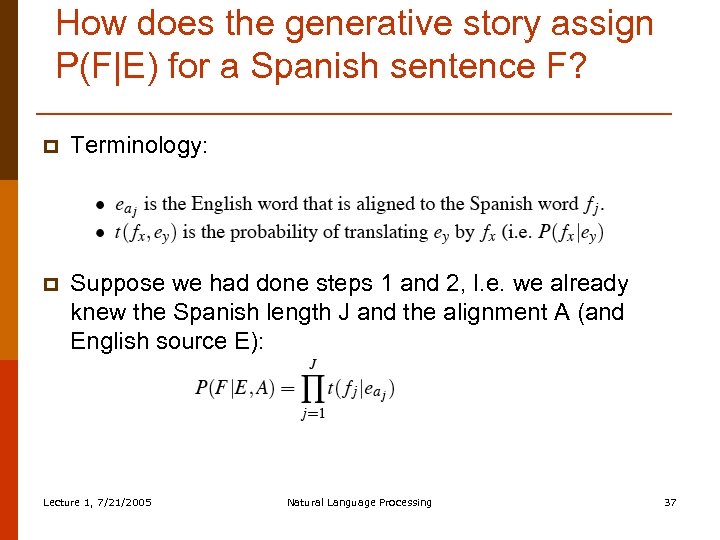

How does the generative story assign P(F|E) for a Spanish sentence F?

- Terminology:
- Suppose we had done steps 1 and 2, i.e. we already knew the Spanish length J and the alignment A (and the English source E):

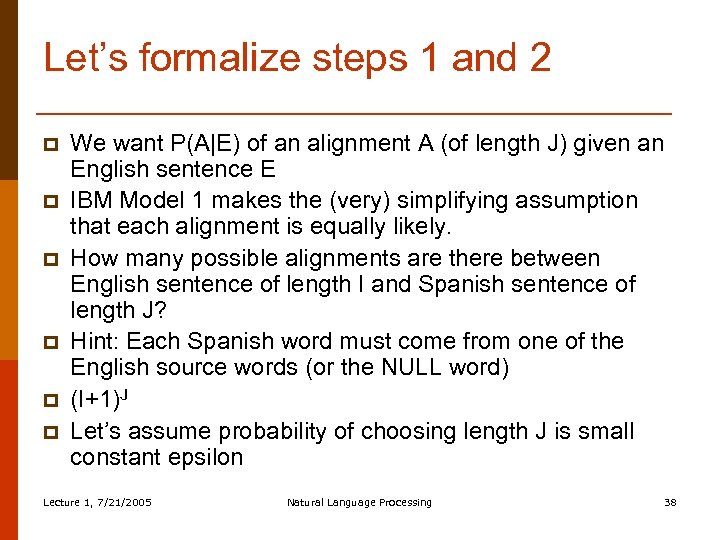

Let's formalize steps 1 and 2

- We want P(A|E) of an alignment A (of length J) given an English sentence E.
- IBM Model 1 makes the (very) simplifying assumption that each alignment is equally likely.
- How many possible alignments are there between an English sentence of length I and a Spanish sentence of length J?
  - Hint: each Spanish word must come from one of the English source words (or the NULL word).
  - Answer: $(I+1)^J$
- Let's assume the probability of choosing length J is a small constant $\epsilon$.
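
The count $(I+1)^J$ and the uniform alignment probability $\epsilon/(I+1)^J$ can be checked by brute-force enumeration. A minimal Python sketch; `epsilon=1.0` is chosen only so that the probabilities visibly sum to one.

```python
from itertools import product


def all_alignments(I, J):
    """Every alignment vector (a_1, ..., a_J) with each a_j in {0, ..., I};
    0 stands for the NULL word."""
    return list(product(range(I + 1), repeat=J))


def p_alignment(I, J, epsilon=1.0):
    """Model 1: P(A|E) = epsilon / (I + 1) ** J, the same for every alignment."""
    return epsilon / (I + 1) ** J


alignments = all_alignments(I=3, J=2)
print(len(alignments))                             # 16 == (3 + 1) ** 2
print(sum(p_alignment(3, 2) for _ in alignments))  # 1.0
```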

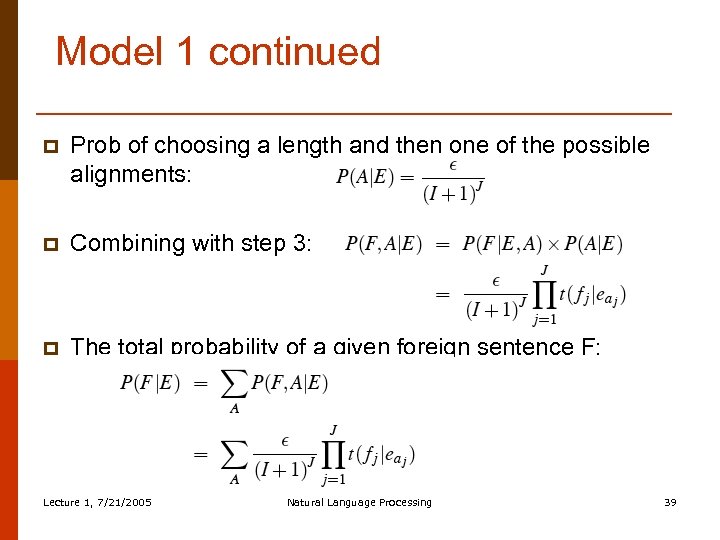

Model 1 continued

- Probability of choosing a length and then one of the possible alignments:
- Combining with step 3:
- The total probability of a given foreign sentence F:
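
Written out from the definitions on the previous slides, and writing $t(f_j \mid e_{a_j})$ for the probability that step 3 generates Spanish word $f_j$ from its aligned English word (notation assumed here, following Brown et al. 1993), Model 1 gives

$$P(A \mid E) = \frac{\epsilon}{(I+1)^J}, \qquad P(F, A \mid E) = \frac{\epsilon}{(I+1)^J} \prod_{j=1}^{J} t(f_j \mid e_{a_j}), \qquad P(F \mid E) = \sum_{A} P(F, A \mid E).$$

Because each factor depends on a single $a_j$, the sum over all $(I+1)^J$ alignments factors into $\prod_{j=1}^{J} \sum_{i=0}^{I} t(f_j \mid e_i)$. A minimal Python sketch that computes $P(F \mid E)$ both ways; the translation table `T` is hypothetical.

```python
import math
from itertools import product

# Hypothetical translation table t(f | e); position 0 of E is NULL.
T = {
    ("la", "NULL"): 0.5, ("maison", "NULL"): 0.5,
    ("la", "the"): 0.9, ("maison", "the"): 0.1,
    ("la", "house"): 0.2, ("maison", "house"): 0.8,
}
E = ["NULL", "the", "house"]
F = ["la", "maison"]


def model1_prob(f, e, t, epsilon=1.0):
    """P(F|E) = sum over all alignments A of P(F, A | E) (brute force)."""
    I, J = len(e) - 1, len(f)
    total = sum(math.prod(t.get((f[j], e[a[j]]), 0.0) for j in range(J))
                for a in product(range(I + 1), repeat=J))
    return epsilon / (I + 1) ** J * total


def model1_prob_fast(f, e, t, epsilon=1.0):
    """Same value via the factorization: prod over j of sum over i of t(f_j | e_i)."""
    I = len(e) - 1
    return epsilon / (I + 1) ** len(f) * math.prod(
        sum(t.get((fj, ei), 0.0) for ei in e) for fj in f)


print(model1_prob(F, E, T), model1_prob_fast(F, E, T))  # both about 0.249
```

The brute-force version grows exponentially with sentence length; the factorized one needs only $I+1$ terms per Spanish word, which is what makes Model 1 cheap to train.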

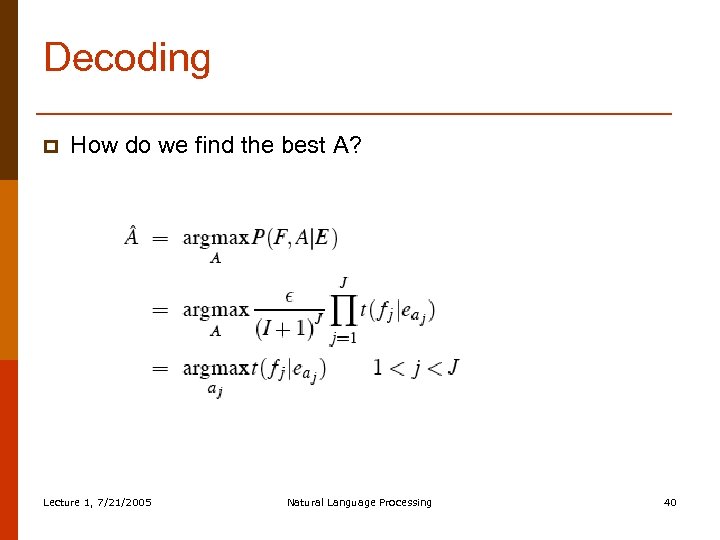

Decoding

- How do we find the best A?
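
Under Model 1, $P(F, A \mid E)$ is a constant times a product of per-word factors $t(f_j \mid e_{a_j})$, so the best alignment can pick each $a_j$ separately as $\arg\max_i t(f_j \mid e_i)$. A minimal Python sketch with a hypothetical translation table `T`.

```python
# Hypothetical translation table t(f | e); position 0 of E is NULL.
T = {
    ("la", "NULL"): 0.5, ("maison", "NULL"): 0.5,
    ("la", "the"): 0.9, ("maison", "the"): 0.1,
    ("la", "house"): 0.2, ("maison", "house"): 0.8,
}


def best_alignment(f, e, t):
    """Most probable Model 1 alignment: each a_j is chosen independently
    as argmax_i t(f_j | e_i). Returned values index into e (0 = NULL)."""
    return [max(range(len(e)), key=lambda i: t.get((fj, e[i]), 0.0)) for fj in f]


print(best_alignment(["la", "maison"], ["NULL", "the", "house"], T))  # [1, 2]
```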

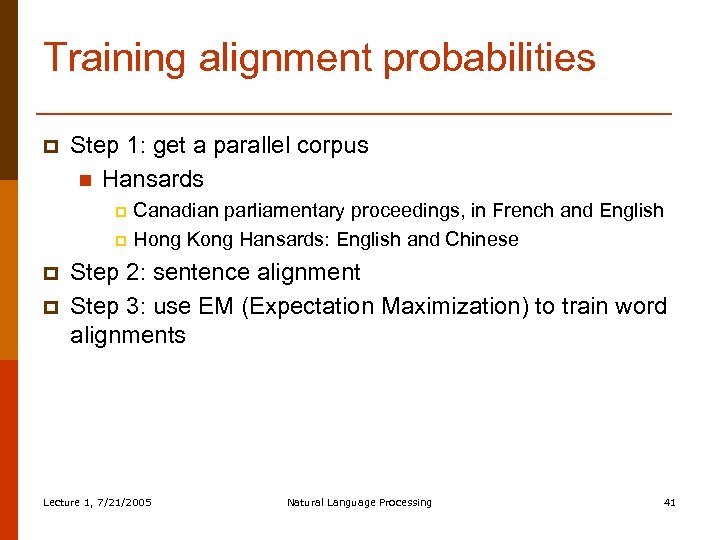

Training alignment probabilities

- Step 1: get a parallel corpus
  - Hansards: Canadian parliamentary proceedings, in French and English
  - Hong Kong Hansards: English and Chinese
- Step 2: sentence alignment
- Step 3: use EM (Expectation Maximization) to train word alignments

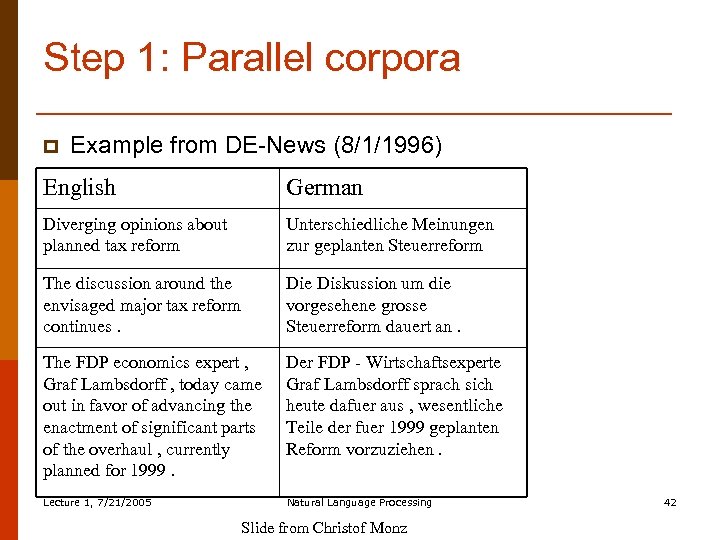

Step 1: Parallel corpora (Slide from Christof Monz)

Example from DE-News (8/1/1996):

English: Diverging opinions about planned tax reform
German: Unterschiedliche Meinungen zur geplanten Steuerreform

English: The discussion around the envisaged major tax reform continues.
German: Die Diskussion um die vorgesehene grosse Steuerreform dauert an.

English: The FDP economics expert , Graf Lambsdorff , today came out in favor of advancing the enactment of significant parts of the overhaul , currently planned for 1999.
German: Der FDP - Wirtschaftsexperte Graf Lambsdorff sprach sich heute dafuer aus , wesentliche Teile der fuer 1999 geplanten Reform vorzuziehen.

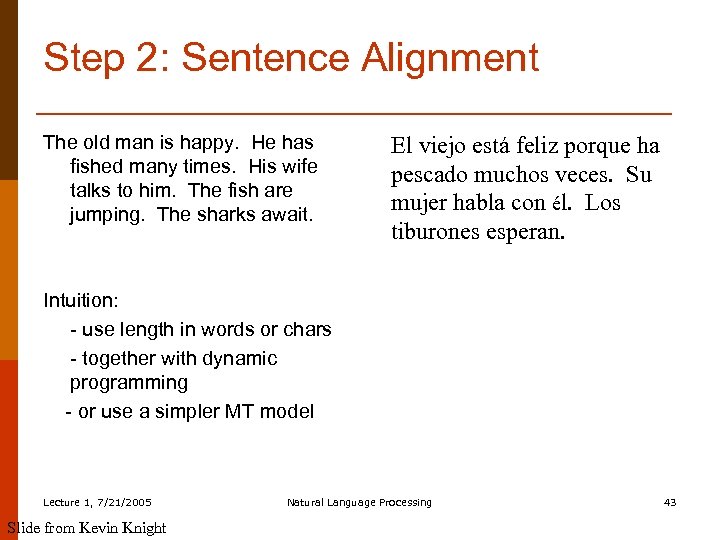

Step 2: Sentence alignment (Slide from Kevin Knight)

English: The old man is happy. He has fished many times. His wife talks to him. The fish are jumping. The sharks await.
Spanish: El viejo está feliz porque ha pescado muchos veces. Su mujer habla con él. Los tiburones esperan.

Intuition:
- use length in words or chars
- together with dynamic programming
- or use a simpler MT model
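
The length-plus-dynamic-programming intuition can be sketched as a small aligner. A minimal Python sketch, assuming word-count lengths and hand-picked costs (`skip_cost=3`, `merge_cost=2`); real length-based aligners such as Gale and Church's use character lengths and a probabilistic cost. On the slide's example these toy costs happen to merge the first two English sentences, align the third, drop "The fish are jumping.", and align the last, but length alone is a weak signal and other costs can give other answers.

```python
def align_sentences(src, tgt, skip_cost=3, merge_cost=2):
    """Toy length-based sentence aligner using dynamic programming.

    Sentence length = number of words. A 1-1, 2-1 or 1-2 bead costs the
    difference in total length (plus merge_cost for 2-1 and 1-2 beads);
    a 1-0 or 0-1 bead (an unaligned sentence) costs skip_cost.
    Returns the cheapest sequence of beads as (src_indices, tgt_indices)."""
    ls = [len(s.split()) for s in src]
    lt = [len(t.split()) for t in tgt]
    n, m = len(src), len(tgt)
    INF = float("inf")
    cost = [[INF] * (m + 1) for _ in range(n + 1)]
    back = [[None] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0
    for i in range(n + 1):
        for j in range(m + 1):
            if cost[i][j] == INF:
                continue
            for di, dj in [(1, 1), (2, 1), (1, 2), (1, 0), (0, 1)]:
                ni, nj = i + di, j + dj
                if ni > n or nj > m:
                    continue
                if di == 0 or dj == 0:
                    c = skip_cost
                else:
                    c = abs(sum(ls[i:ni]) - sum(lt[j:nj]))
                    c += 0 if (di, dj) == (1, 1) else merge_cost
                if cost[i][j] + c < cost[ni][nj]:
                    cost[ni][nj] = cost[i][j] + c
                    back[ni][nj] = (i, j)
    # Follow back-pointers from (n, m) to recover the beads.
    beads, i, j = [], n, m
    while (i, j) != (0, 0):
        pi, pj = back[i][j]
        beads.append((list(range(pi, i)), list(range(pj, j))))
        i, j = pi, pj
    return beads[::-1]


EN = ["The old man is happy.", "He has fished many times.",
      "His wife talks to him.", "The fish are jumping.", "The sharks await."]
ES = ["El viejo está feliz porque ha pescado muchos veces.",
      "Su mujer habla con él.", "Los tiburones esperan."]
print(align_sentences(EN, ES))
# [([0, 1], [0]), ([2], [1]), ([3], []), ([4], [2])]
```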

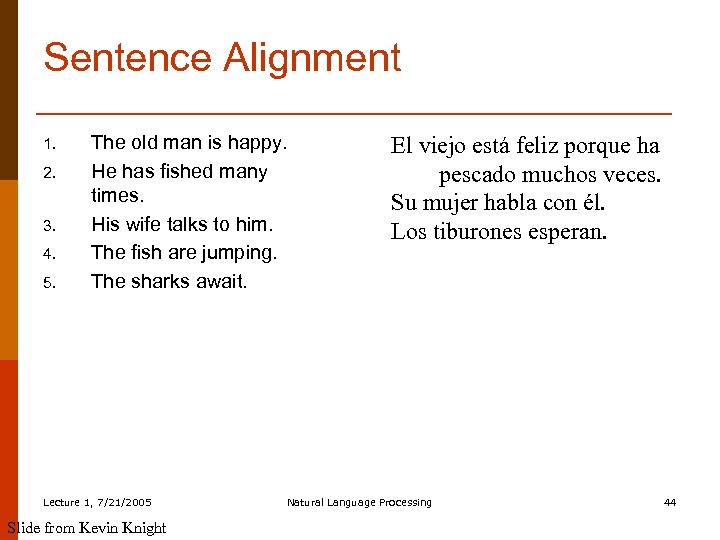

Sentence Alignment (Slide from Kevin Knight)

English:
1. The old man is happy.
2. He has fished many times.
3. His wife talks to him.
4. The fish are jumping.
5. The sharks await.

Spanish: El viejo está feliz porque ha pescado muchos veces. Su mujer habla con él. Los tiburones esperan.

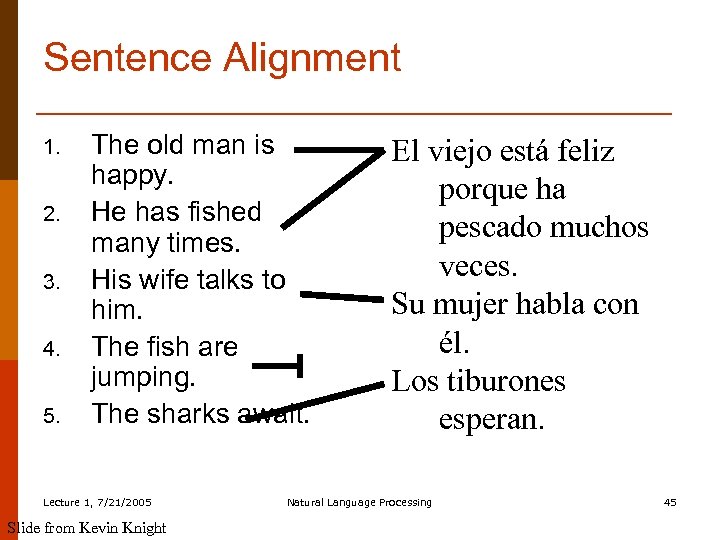

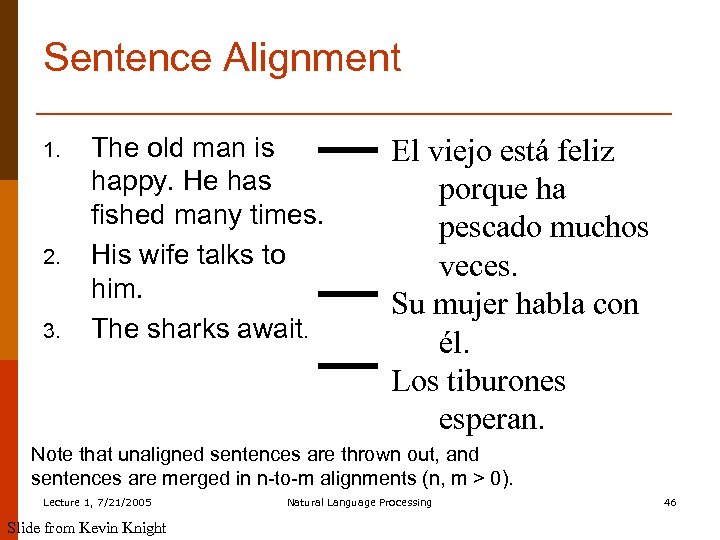

Sentence Alignment (Slide from Kevin Knight)

1. The old man is happy. He has fished many times. <-> El viejo está feliz porque ha pescado muchos veces.
2. His wife talks to him. <-> Su mujer habla con él.
3. The sharks await. <-> Los tiburones esperan.

Note that unaligned sentences are thrown out, and sentences are merged in n-to-m alignments (n, m > 0).

Step 3: Word alignments

- It turns out we can bootstrap alignments from a sentence-aligned bilingual corpus.
- We use the Expectation-Maximization (EM) algorithm.

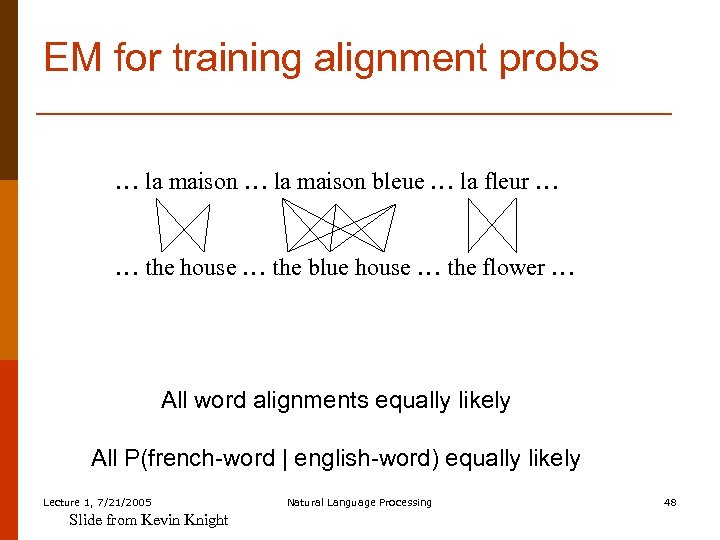

EM for training alignment probs (Slide from Kevin Knight)

… la maison … la maison bleue … la fleur …
… the house … the blue house … the flower …

- All word alignments equally likely
- All P(french-word | english-word) equally likely

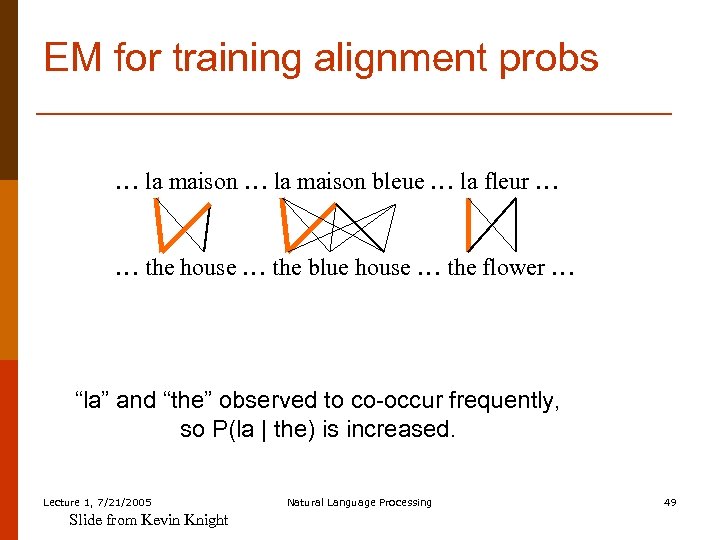

EM for training alignment probs (Slide from Kevin Knight)

… la maison … la maison bleue … la fleur …
… the house … the blue house … the flower …

“la” and “the” are observed to co-occur frequently, so P(la | the) is increased.

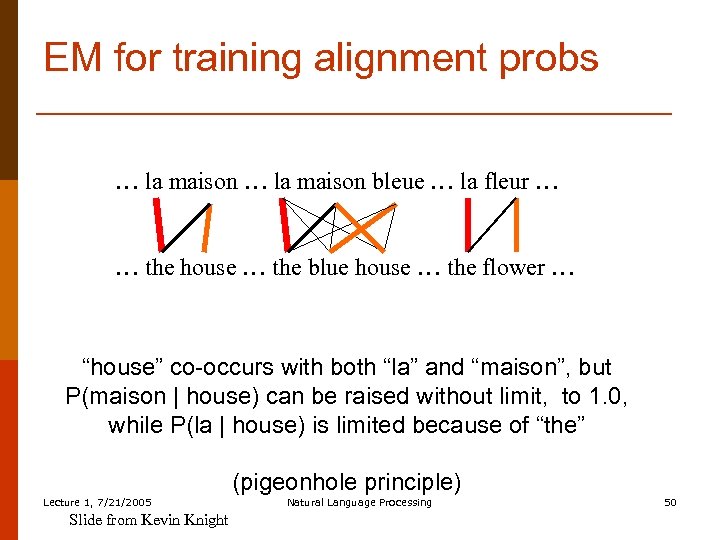

EM for training alignment probs (Slide from Kevin Knight)

… la maison … la maison bleue … la fleur …
… the house … the blue house … the flower …

“house” co-occurs with both “la” and “maison”, but P(maison | house) can be raised without limit, to 1.0, while P(la | house) is limited because of “the” (pigeonhole principle).

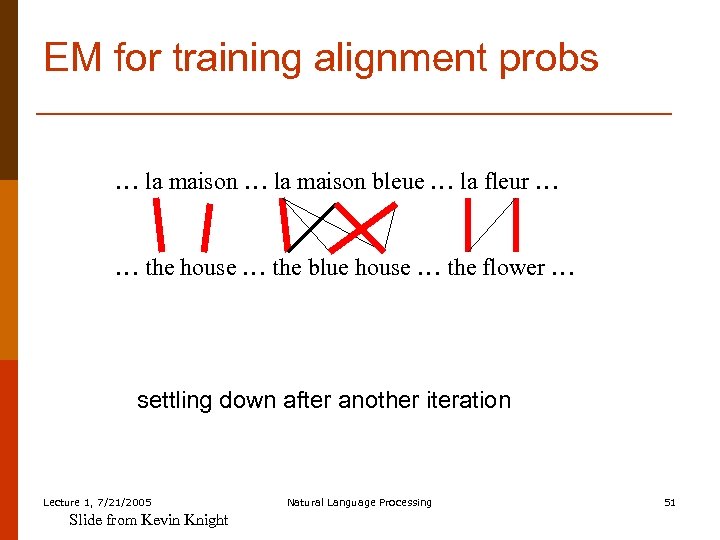

EM for training alignment probs (Slide from Kevin Knight)

… la maison … la maison bleue … la fleur …
… the house … the blue house … the flower …

Settling down after another iteration.

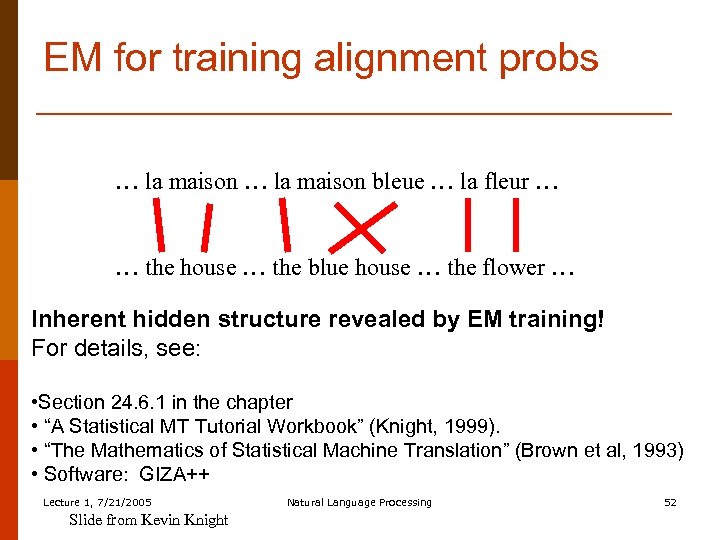

EM for training alignment probs (Slide from Kevin Knight)

… la maison … la maison bleue … la fleur …
… the house … the blue house … the flower …

Inherent hidden structure revealed by EM training! For details, see:
- Section 24.6.1 in the chapter
- “A Statistical MT Tutorial Workbook” (Knight, 1999)
- “The Mathematics of Statistical Machine Translation” (Brown et al., 1993)
- Software: GIZA++
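
The walk-through above can be reproduced with a few lines of EM for IBM Model 1. A minimal Python sketch, assuming the three sentence pairs la maison / the house, la maison bleue / the blue house and la fleur / the flower, omitting the NULL word, and running a fixed 20 iterations; `train_model1` and `best_french` are hypothetical helper names.

```python
from collections import defaultdict

# The three sentence pairs from the slides (NULL word omitted).
TOY_CORPUS = [
    ("the house".split(), "la maison".split()),
    ("the blue house".split(), "la maison bleue".split()),
    ("the flower".split(), "la fleur".split()),
]


def train_model1(corpus, iterations=20):
    """EM for IBM Model 1 translation probabilities t[(f, e)] = t(f | e)."""
    f_vocab = {f for _, fs in corpus for f in fs}
    # Start with every P(french-word | english-word) equally likely.
    t = defaultdict(lambda: 1.0 / len(f_vocab))
    for _ in range(iterations):
        count = defaultdict(float)  # expected count of (f, e) links
        total = defaultdict(float)  # expected count of links from e
        for es, fs in corpus:
            for f in fs:
                # E-step: share f's single link among the English words
                # in proportion to the current t(f | e).
                z = sum(t[(f, e)] for e in es)
                for e in es:
                    p = t[(f, e)] / z
                    count[(f, e)] += p
                    total[e] += p
        # M-step: renormalize the expected counts per English word.
        t = defaultdict(float, {(f, e): c / total[e] for (f, e), c in count.items()})
    return t


def best_french(t, english_word):
    """French word with the highest t(f | english_word)."""
    return max(("la", "maison", "bleue", "fleur"), key=lambda f: t[(f, english_word)])


t = train_model1(TOY_CORPUS)
print({e: best_french(t, e) for e in ("the", "house", "blue", "flower")})
# {'the': 'la', 'house': 'maison', 'blue': 'bleue', 'flower': 'fleur'}
```

After one iteration "house" is still split evenly between "la" and "maison"; because "la" is increasingly explained by "the" in every sentence, later iterations push "house" toward "maison", the pigeonhole effect described above.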

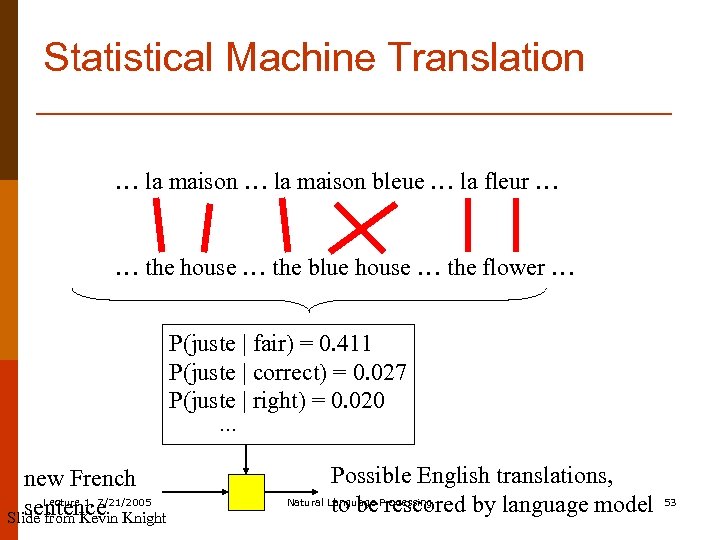

Statistical Machine Translation (Slide from Kevin Knight)

… la maison … la maison bleue … la fleur …
… the house … the blue house … the flower …

P(juste | fair) = 0.411
P(juste | correct) = 0.027
P(juste | right) = 0.020
…

New French sentence -> possible English translations, to be rescored by the language model.

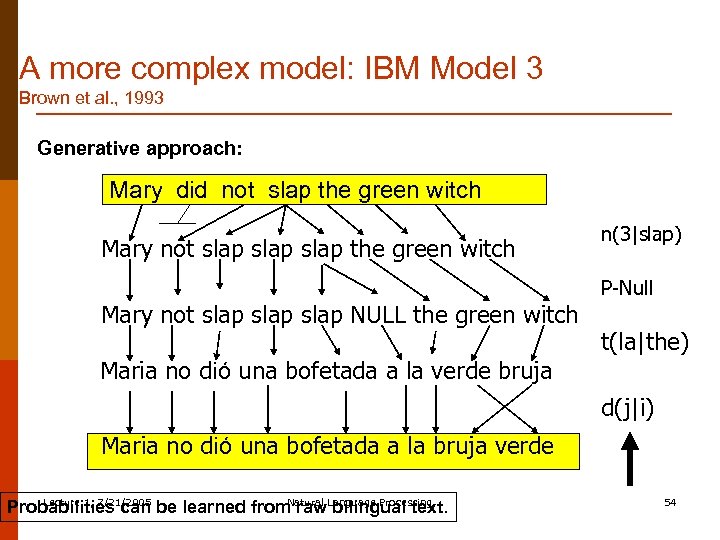

A more complex model: IBM Model 3 (Brown et al., 1993)

Generative approach:
- Mary did not slap the green witch
- n(3|slap): Mary not slap slap slap the green witch
- P-Null: Mary not slap slap slap NULL the green witch
- t(la|the): Maria no dió una bofetada a la verde bruja
- d(j|i): Maria no dió una bofetada a la bruja verde

Probabilities can be learned from raw bilingual text.

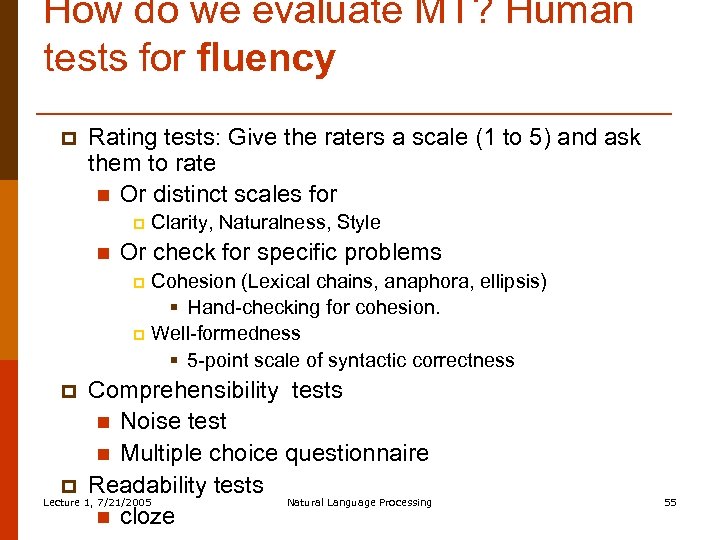

How do we evaluate MT? Human tests for fluency

- Rating tests: give the raters a scale (1 to 5) and ask them to rate
  - Or distinct scales for
    - Clarity, Naturalness, Style
  - Or check for specific problems
    - Cohesion (lexical chains, anaphora, ellipsis): hand-checking for cohesion
    - Well-formedness: 5-point scale of syntactic correctness
- Comprehensibility tests
  - Noise test
  - Multiple choice questionnaire
- Readability tests
  - cloze

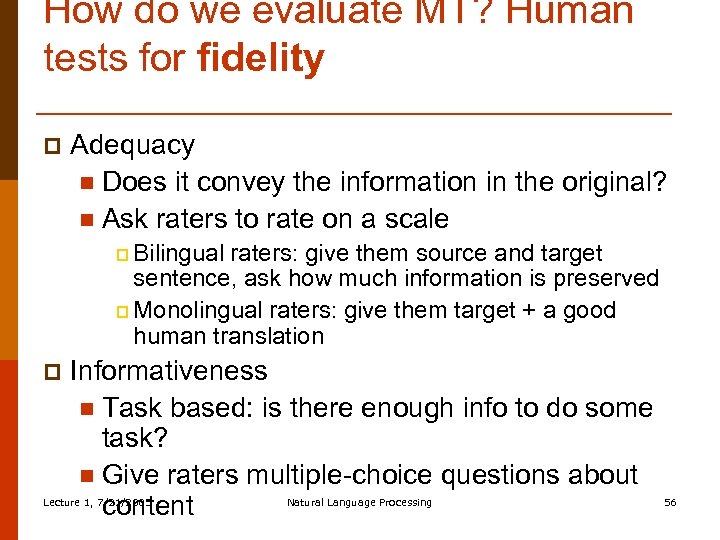

How do we evaluate MT? Human tests for fidelity

- Adequacy
  - Does it convey the information in the original?
  - Ask raters to rate on a scale
    - Bilingual raters: give them the source and target sentence, ask how much information is preserved
    - Monolingual raters: give them the target + a good human translation
- Informativeness
  - Task based: is there enough info to do some task?
  - Give raters multiple-choice questions about content

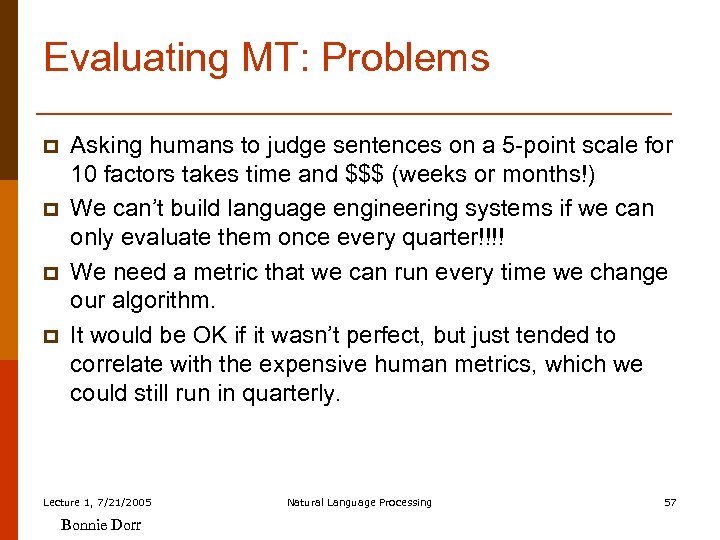

Evaluating MT: Problems (Slide from Bonnie Dorr)

- Asking humans to judge sentences on a 5-point scale for 10 factors takes time and money (weeks or months!)
- We can't build language engineering systems if we can only evaluate them once every quarter!!!!
- We need a metric that we can run every time we change our algorithm.
- It would be OK if it wasn't perfect, but just tended to correlate with the expensive human metrics, which we could still run quarterly.

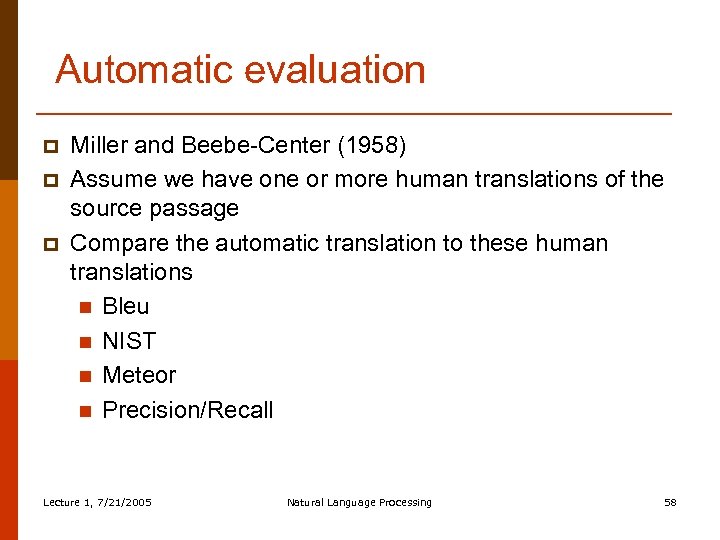

Automatic evaluation

- Miller and Beebe-Center (1958)
- Assume we have one or more human translations of the source passage
- Compare the automatic translation to these human translations
  - Bleu
  - NIST
  - Meteor
  - Precision/Recall

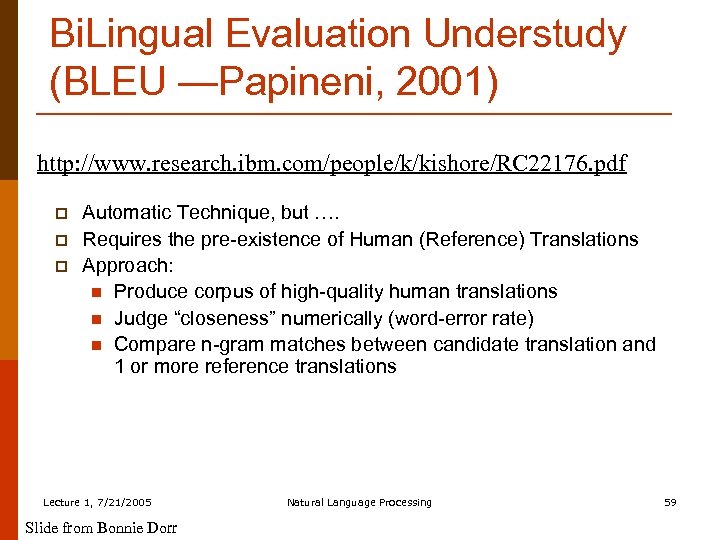

BiLingual Evaluation Understudy (BLEU; Papineni, 2001) (Slide from Bonnie Dorr)

http://www.research.ibm.com/people/k/kishore/RC22176.pdf

- Automatic technique, but … requires the pre-existence of human (reference) translations
- Approach:
  - Produce a corpus of high-quality human translations
  - Judge "closeness" numerically (word-error rate)
  - Compare n-gram matches between the candidate translation and 1 or more reference translations

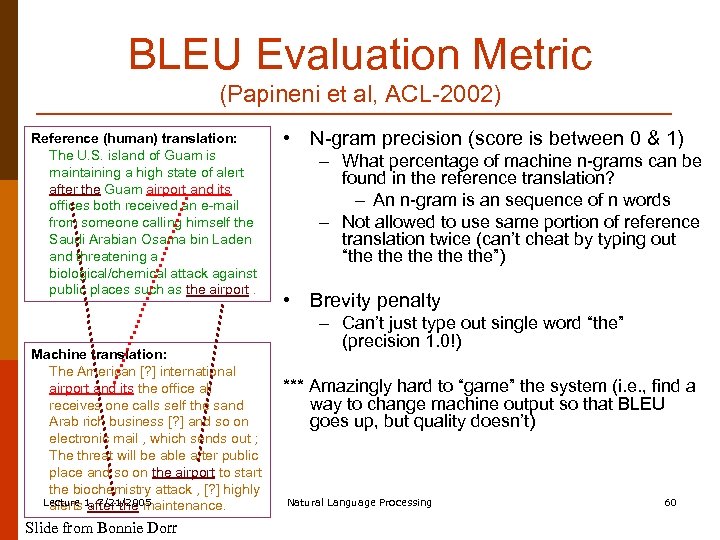

BLEU Evaluation Metric (Papineni et al., ACL-2002) (Slide from Bonnie Dorr)

Reference (human) translation: The U.S. island of Guam is maintaining a high state of alert after the Guam airport and its offices both received an e-mail from someone calling himself the Saudi Arabian Osama bin Laden and threatening a biological/chemical attack against public places such as the airport.

Machine translation: The American [?] international airport and its the office all receives one calls self the sand Arab rich business [?] and so on electronic mail , which sends out ; The threat will be able after public place and so on the airport to start the biochemistry attack , [?] highly alerts after the maintenance.

- N-gram precision (score is between 0 and 1)
  - What percentage of machine n-grams can be found in the reference translation?
  - An n-gram is a sequence of n words
  - Not allowed to use the same portion of the reference translation twice (can't cheat by typing out "the the the")
- Brevity penalty
  - Can't just type out the single word "the" (precision 1.0!)
- Amazingly hard to "game" the system (i.e., find a way to change machine output so that BLEU goes up, but quality doesn't)
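
The clipping rule ("can't cheat by typing out 'the the the'") is what turns plain n-gram precision into BLEU's modified precision. A minimal Python sketch for a single reference, assuming whitespace tokenization; `clipped_precision` is a hypothetical helper name.

```python
from collections import Counter


def ngrams(words, n):
    """Multiset of the n-grams in a list of words."""
    return Counter(tuple(words[i:i + n]) for i in range(len(words) - n + 1))


def clipped_precision(candidate, reference, n=1):
    """Modified n-gram precision against one reference: each candidate n-gram
    is credited at most as many times as it occurs in the reference."""
    cand = ngrams(candidate.split(), n)
    ref = ngrams(reference.split(), n)
    matched = sum(min(count, ref[gram]) for gram, count in cand.items())
    return matched / max(sum(cand.values()), 1)


print(clipped_precision("the the the the", "the cat is on the mat"))  # 0.5
```

The reference contains "the" only twice, so only two of the four candidate tokens are credited; without clipping the score would be a perfect 1.0.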

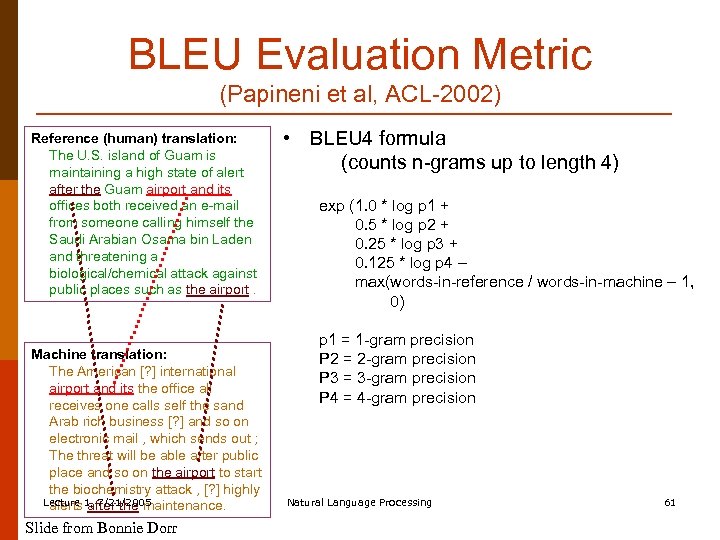

BLEU Evaluation Metric (Papineni et al., ACL-2002) (Slide from Bonnie Dorr)

Reference (human) translation: The U.S. island of Guam is maintaining a high state of alert after the Guam airport and its offices both received an e-mail from someone calling himself the Saudi Arabian Osama bin Laden and threatening a biological/chemical attack against public places such as the airport.

Machine translation: The American [?] international airport and its the office all receives one calls self the sand Arab rich business [?] and so on electronic mail , which sends out ; The threat will be able after public place and so on the airport to start the biochemistry attack , [?] highly alerts after the maintenance.

- BLEU-4 formula (counts n-grams up to length 4):

$$\exp\left(1.0 \log p_1 + 0.5 \log p_2 + 0.25 \log p_3 + 0.125 \log p_4 - \max\left(\frac{\text{words-in-reference}}{\text{words-in-machine}} - 1,\ 0\right)\right)$$

where $p_1$, $p_2$, $p_3$ and $p_4$ are the 1-gram, 2-gram, 3-gram and 4-gram precisions.
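
The slide's BLEU-4 formula translates directly into code. A minimal Python sketch taking the four precisions and the two lengths as inputs. Note that $\exp(-\max(r/c - 1, 0))$ equals the usual brevity penalty $\exp(1 - r/c)$ when the output is shorter than the reference; the weights 1.0, 0.5, 0.25, 0.125 are as written on the slide, whereas Papineni et al. use uniform weights $1/N$.

```python
import math


def bleu4_slide(precisions, ref_len, mt_len):
    """BLEU-4 as written on the slide:
    exp(1.0*log p1 + 0.5*log p2 + 0.25*log p3 + 0.125*log p4
        - max(ref_len / mt_len - 1, 0))
    precisions -- (p1, p2, p3, p4), each strictly positive
    ref_len    -- words in the reference; mt_len -- words in the machine output
    """
    weights = (1.0, 0.5, 0.25, 0.125)
    log_precision = sum(w * math.log(p) for w, p in zip(weights, precisions))
    brevity = max(ref_len / mt_len - 1, 0)
    return math.exp(log_precision - brevity)


print(bleu4_slide((1, 1, 1, 1), ref_len=10, mt_len=10))  # 1.0
print(bleu4_slide((1, 1, 1, 1), ref_len=20, mt_len=10))  # exp(-1), about 0.368
```

Output longer than the reference is not penalized by this term; its extra words already lower the precisions.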

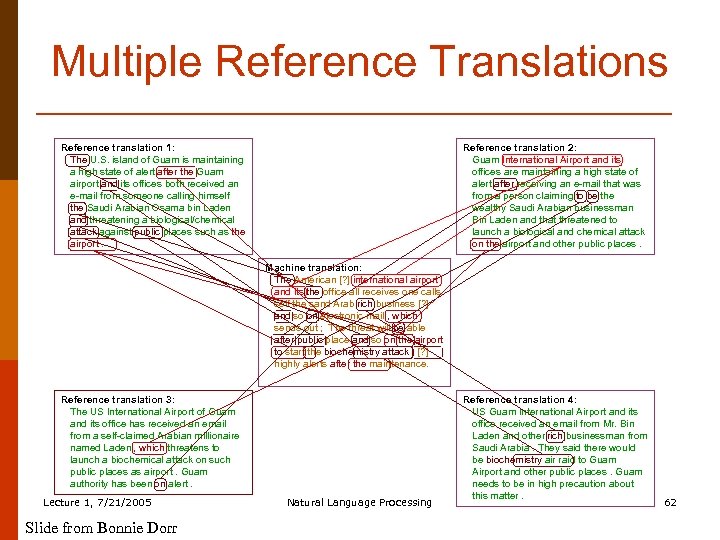

Multiple Reference Translations (Slide from Bonnie Dorr)

Reference translation 1: The U.S. island of Guam is maintaining a high state of alert after the Guam airport and its offices both received an e-mail from someone calling himself the Saudi Arabian Osama bin Laden and threatening a biological/chemical attack against public places such as the airport.

Reference translation 2: Guam International Airport and its offices are maintaining a high state of alert after receiving an e-mail that was from a person claiming to be the wealthy Saudi Arabian businessman Bin Laden and that threatened to launch a biological and chemical attack on the airport and other public places.

Reference translation 3: The US International Airport of Guam and its office has received an email from a self-claimed Arabian millionaire named Laden , which threatens to launch a biochemical attack on such public places as airport. Guam authority has been on alert.

Reference translation 4: US Guam International Airport and its office received an email from Mr. Bin Laden and other rich businessman from Saudi Arabia. They said there would be biochemistry air raid to Guam Airport and other public places. Guam needs to be in high precaution about this matter.

Machine translation: The American [?] international airport and its the office all receives one calls self the sand Arab rich business [?] and so on electronic mail , which sends out ; The threat will be able after public place and so on the airport to start the biochemistry attack , [?] highly alerts after the maintenance.

Multiple Reference Translations Reference translation 1: The U. S. island of Guam is maintaining a high state of alert after the Guam airport and its offices both received an e-mail from someone calling himself the Saudi Arabian Osama bin Laden and threatening a biological/chemical attack against public places such as the airport. Reference translation 2: Guam International Airport and its offices are maintaining a high state of alert after receiving an e-mail that was from a person claiming to be the wealthy Saudi Arabian businessman Bin Laden and that threatened to launch a biological and chemical attack on the airport and other public places. Machine translation: The American [? ] international airport and its the office all receives one calls self the sand Arab rich business [? ] and so on electronic mail , which sends out ; The threat will be able after public place and so on the airport to start the biochemistry attack , [? ] highly alerts after the maintenance. Reference translation 3: The US International Airport of Guam and its office has received an email from a self-claimed Arabian millionaire named Laden , which threatens to launch a biochemical attack on such public places as airport. Guam authority has been on alert. Lecture 1, 7/21/2005 Slide from Bonnie Dorr Natural Language Processing Reference translation 4: US Guam International Airport and its office received an email from Mr. Bin Laden and other rich businessman from Saudi Arabia. They said there would be biochemistry air raid to Guam Airport and other public places. Guam needs to be in high precaution about this matter. 62

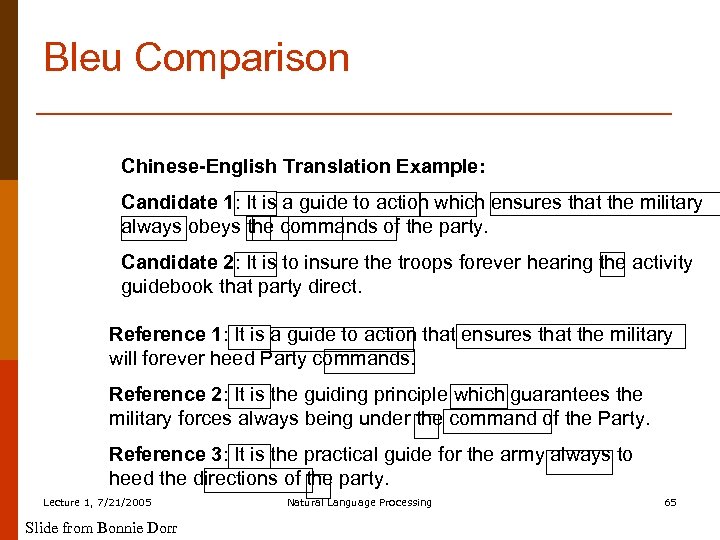

Bleu Comparison

Chinese-English Translation Example:

- Candidate 1: It is a guide to action which ensures that the military always obeys the commands of the party.
- Candidate 2: It is to insure the troops forever hearing the activity guidebook that party direct.
- Reference 1: It is a guide to action that ensures that the military will forever heed Party commands.
- Reference 2: It is the guiding principle which guarantees the military forces always being under the command of the Party.
- Reference 3: It is the practical guide for the army always to heed the directions of the party.

(Slide from Bonnie Dorr)

Bleu Comparison Chinese-English Translation Example: Candidate 1: It is a guide to action which ensures that the military always obeys the commands of the party. Candidate 2: It is to insure the troops forever hearing the activity guidebook that party direct. Reference 1: It is a guide to action that ensures that the military will forever heed Party commands. Reference 2: It is the guiding principle which guarantees the military forces always being under the command of the Party. Reference 3: It is the practical guide for the army always to heed the directions of the party. Lecture 1, 7/21/2005 Slide from Bonnie Dorr Natural Language Processing 65

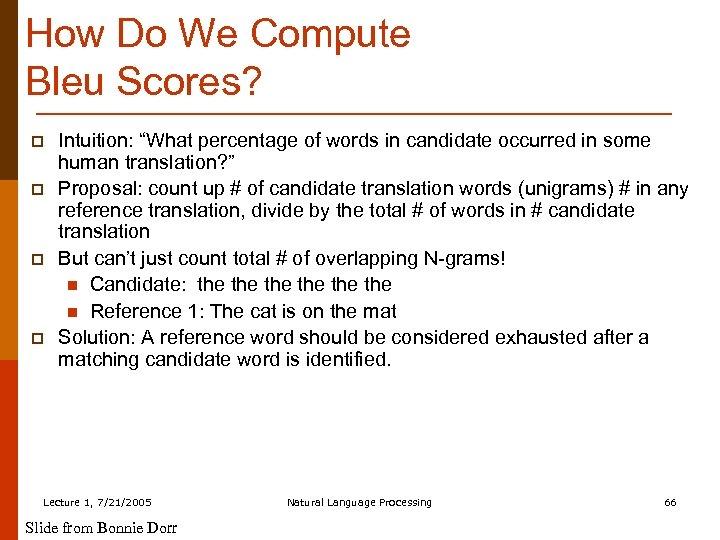

How Do We Compute Bleu Scores?

- Intuition: “What percentage of words in the candidate occurred in some human translation?”
- Proposal: count the candidate translation words (unigrams) that occur in any reference translation, and divide by the total number of words in the candidate translation.
- But we can't just count the total number of overlapping n-grams! Every *the* below matches, so the naive score is a perfect 3/3:
  - Candidate: the the the
  - Reference 1: The cat is on the mat
- Solution: a reference word should be considered exhausted after a matching candidate word is identified.

(Slide from Bonnie Dorr)
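The failure of the naive proposal, and the "exhausted reference word" fix, fit in a few lines of Python. This is a sketch that assumes lowercased, whitespace-split tokens; a real tokenizer would also handle punctuation.

```python
from collections import Counter

candidate = "the the the".split()
reference = "The cat is on the mat".lower().split()

# Naive proposal: each candidate word scores if it appears anywhere in the reference.
naive = sum(1 for word in candidate if word in reference)

# Fix: a reference word is exhausted once matched, which amounts to capping
# each candidate word's count at its count in the reference.
ref_counts = Counter(reference)
clipped = sum(min(n, ref_counts[word]) for word, n in Counter(candidate).items())

print(f"naive: {naive}/{len(candidate)}, clipped: {clipped}/{len(candidate)}")
# naive: 3/3, clipped: 2/3
```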

How Do We Compute Bleu Scores? p p Intuition: “What percentage of words in candidate occurred in some human translation? ” Proposal: count up # of candidate translation words (unigrams) # in any reference translation, divide by the total # of words in # candidate translation But can’t just count total # of overlapping N-grams! n Candidate: the the the n Reference 1: The cat is on the mat Solution: A reference word should be considered exhausted after a matching candidate word is identified. Lecture 1, 7/21/2005 Slide from Bonnie Dorr Natural Language Processing 66

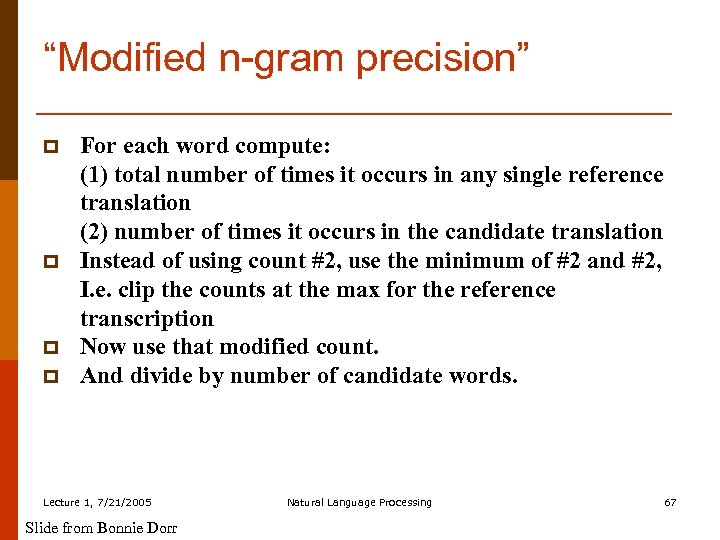

“Modified n-gram precision”

- For each word, compute: (1) the maximum number of times it occurs in any single reference translation, and (2) the number of times it occurs in the candidate translation.
- Instead of using count #2, use the minimum of #1 and #2, i.e. clip the counts at the maximum for the reference translation.
- Now use that modified count: sum the clipped counts and divide by the number of candidate words.

(Slide from Bonnie Dorr)
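A Python sketch of the slide's recipe, generalized to any n and several references. The helper names (`tokenize`, `ngrams`, `modified_precision`) are hypothetical, and the tokenizer (lowercase, letters only) is a simplifying assumption. The function returns numerator and denominator separately so results can be compared with fractions such as 17/18.

```python
import re
from collections import Counter


def tokenize(text):
    # Assumption: lowercase and keep runs of letters, so "Party." matches "party".
    return re.findall(r"[a-z]+", text.lower())


def ngrams(tokens, n):
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def modified_precision(candidate, references, n):
    """Return (clipped matches, number of candidate n-grams)."""
    cand_counts = Counter(ngrams(tokenize(candidate), n))  # count #2
    max_ref_counts = Counter()
    for ref in references:
        # Counter "|" keeps the larger count: the max over any single reference (count #1).
        max_ref_counts |= Counter(ngrams(tokenize(ref), n))
    clipped = sum(min(c, max_ref_counts[g]) for g, c in cand_counts.items())
    return clipped, sum(cand_counts.values())


refs = ["the cat", "the mat and the cat"]
print(modified_precision("the the the cat", refs, 1))  # (3, 4)
print(modified_precision("the the the cat", refs, 2))  # (1, 3)
```

Taking the maximum over a *single* reference matters: summing the two references' counts would let all three *the*s match (4/4), whereas the per-reference maximum caps them at 2.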

“Modified n-gram precision” p p For each word compute: (1) total number of times it occurs in any single reference translation (2) number of times it occurs in the candidate translation Instead of using count #2, use the minimum of #2 and #2, I. e. clip the counts at the max for the reference transcription Now use that modified count. And divide by number of candidate words. Lecture 1, 7/21/2005 Slide from Bonnie Dorr Natural Language Processing 67

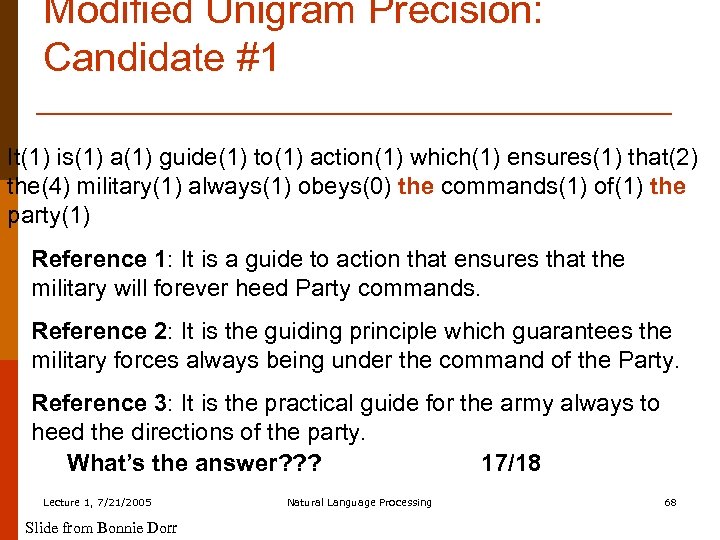

Modified Unigram Precision: Candidate #1

It(1) is(1) a(1) guide(1) to(1) action(1) which(1) ensures(1) that(2) the(4) military(1) always(1) obeys(0) the commands(1) of(1) the party(1)

(Each number is the word's maximum count in any single reference; repeated words are annotated once.)

- Reference 1: It is a guide to action that ensures that the military will forever heed Party commands.
- Reference 2: It is the guiding principle which guarantees the military forces always being under the command of the Party.
- Reference 3: It is the practical guide for the army always to heed the directions of the party.

What's the answer? 17/18

(Slide from Bonnie Dorr)

Modified Unigram Precision: Candidate #1 It(1) is(1) a(1) guide(1) to(1) action(1) which(1) ensures(1) that(2) the(4) military(1) always(1) obeys(0) the commands(1) of(1) the party(1) Reference 1: It is a guide to action that ensures that the military will forever heed Party commands. Reference 2: It is the guiding principle which guarantees the military forces always being under the command of the Party. Reference 3: It is the practical guide for the army always to heed the directions of the party. What’s the answer? ? ? 17/18 Lecture 1, 7/21/2005 Slide from Bonnie Dorr Natural Language Processing 68

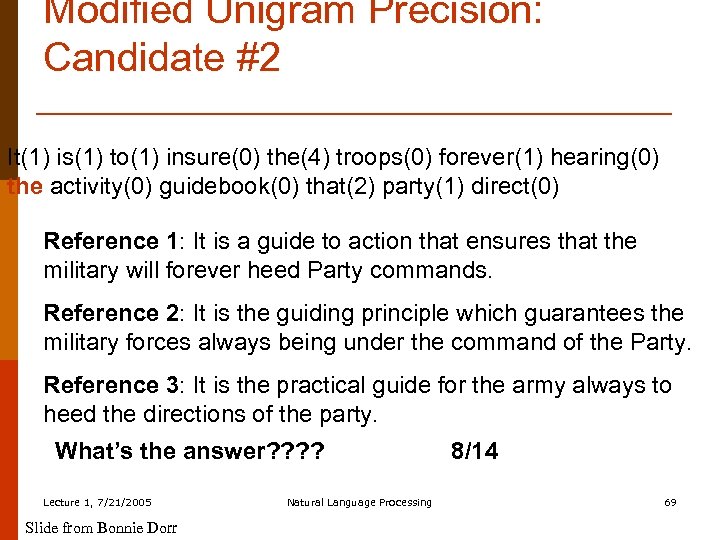

Modified Unigram Precision: Candidate #2

It(1) is(1) to(1) insure(0) the(4) troops(0) forever(1) hearing(0) the activity(0) guidebook(0) that(2) party(1) direct(0)

- Reference 1: It is a guide to action that ensures that the military will forever heed Party commands.
- Reference 2: It is the guiding principle which guarantees the military forces always being under the command of the Party.
- Reference 3: It is the practical guide for the army always to heed the directions of the party.

What's the answer? 8/14

(Slide from Bonnie Dorr)

Modified Unigram Precision: Candidate #2 It(1) is(1) to(1) insure(0) the(4) troops(0) forever(1) hearing(0) the activity(0) guidebook(0) that(2) party(1) direct(0) Reference 1: It is a guide to action that ensures that the military will forever heed Party commands. Reference 2: It is the guiding principle which guarantees the military forces always being under the command of the Party. Reference 3: It is the practical guide for the army always to heed the directions of the party. What’s the answer? ? Lecture 1, 7/21/2005 Slide from Bonnie Dorr Natural Language Processing 8/14 69

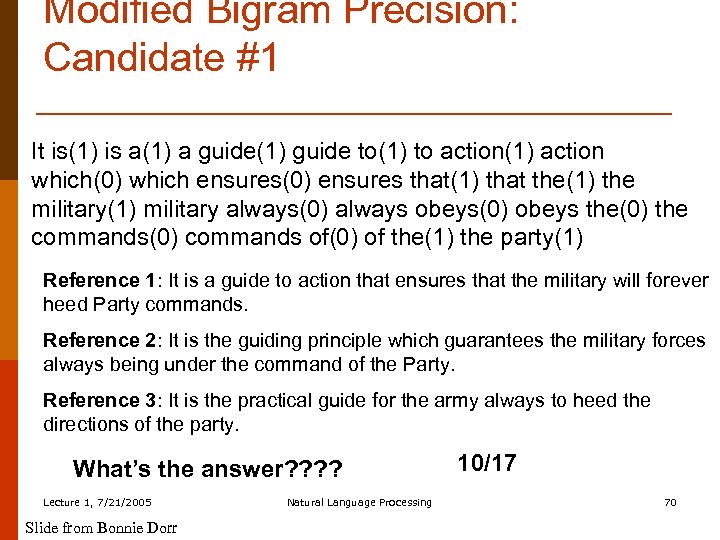

Modified Bigram Precision: Candidate #1

It is(1) is a(1) a guide(1) guide to(1) to action(1) action which(0) which ensures(0) ensures that(1) that the(1) the military(1) military always(0) always obeys(0) obeys the(0) the commands(0) commands of(0) of the(1) the party(1)

- Reference 1: It is a guide to action that ensures that the military will forever heed Party commands.
- Reference 2: It is the guiding principle which guarantees the military forces always being under the command of the Party.
- Reference 3: It is the practical guide for the army always to heed the directions of the party.

What's the answer? 10/17

(Slide from Bonnie Dorr)

Modified Bigram Precision: Candidate #1 It is(1) is a(1) a guide(1) guide to(1) to action(1) action which(0) which ensures(0) ensures that(1) that the(1) the military(1) military always(0) always obeys(0) obeys the(0) the commands(0) commands of(0) of the(1) the party(1) Reference 1: It is a guide to action that ensures that the military will forever heed Party commands. Reference 2: It is the guiding principle which guarantees the military forces always being under the command of the Party. Reference 3: It is the practical guide for the army always to heed the directions of the party. What’s the answer? ? Lecture 1, 7/21/2005 Slide from Bonnie Dorr Natural Language Processing 10/17 70

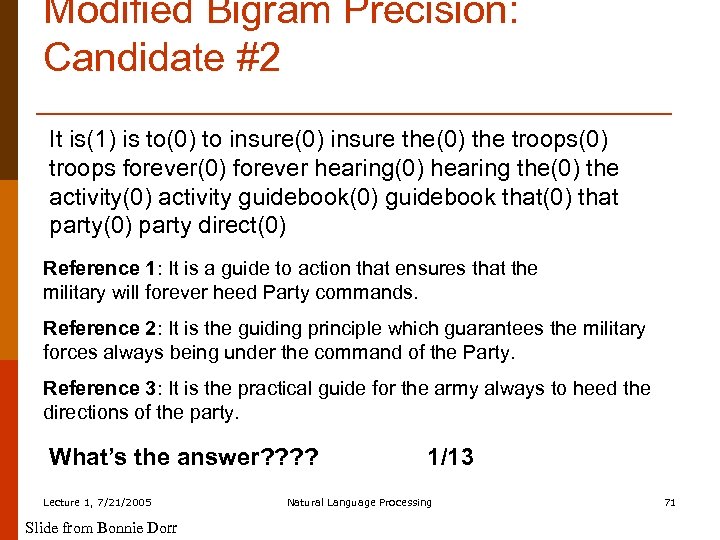

Modified Bigram Precision: Candidate #2

It is(1) is to(0) to insure(0) insure the(0) the troops(0) troops forever(0) forever hearing(0) hearing the(0) the activity(0) activity guidebook(0) guidebook that(0) that party(0) party direct(0)

- Reference 1: It is a guide to action that ensures that the military will forever heed Party commands.
- Reference 2: It is the guiding principle which guarantees the military forces always being under the command of the Party.
- Reference 3: It is the practical guide for the army always to heed the directions of the party.

What's the answer? 1/13

(Slide from Bonnie Dorr)
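The four worked answers for the Chinese-English example (unigram 17/18 and 8/14, bigram 10/17 and 1/13) can be checked mechanically. This self-contained sketch uses a hypothetical `modified_precision` helper and assumes a lowercase, letters-only tokenizer, which folds "Party" into "party" and drops the final periods.

```python
import re
from collections import Counter


def tokenize(text):
    # Assumption: lowercase, letters only (drops the final period, folds "Party").
    return re.findall(r"[a-z]+", text.lower())


def modified_precision(candidate, references, n):
    """(clipped n-gram matches, number of candidate n-grams)."""
    def grams(text):
        toks = tokenize(text)
        return Counter(tuple(toks[i:i + n]) for i in range(len(toks) - n + 1))

    cand = grams(candidate)
    best = Counter()
    for ref in references:
        best |= grams(ref)  # per-n-gram max over any single reference
    return sum(min(c, best[g]) for g, c in cand.items()), sum(cand.values())


references = [
    "It is a guide to action that ensures that the military will forever heed Party commands.",
    "It is the guiding principle which guarantees the military forces always being under the command of the Party.",
    "It is the practical guide for the army always to heed the directions of the party.",
]
candidate1 = "It is a guide to action which ensures that the military always obeys the commands of the party."
candidate2 = "It is to insure the troops forever hearing the activity guidebook that party direct."

for name, cand in [("Candidate 1", candidate1), ("Candidate 2", candidate2)]:
    for n in (1, 2):
        hits, total = modified_precision(cand, references, n)
        print(f"{name}, {n}-gram: {hits}/{total}")
# Candidate 1, 1-gram: 17/18
# Candidate 1, 2-gram: 10/17
# Candidate 2, 1-gram: 8/14
# Candidate 2, 2-gram: 1/13
```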

Modified Bigram Precision: Candidate #2 It is(1) is to(0) to insure(0) insure the(0) the troops(0) troops forever(0) forever hearing(0) hearing the(0) the activity(0) activity guidebook(0) guidebook that(0) that party(0) party direct(0) Reference 1: It is a guide to action that ensures that the military will forever heed Party commands. Reference 2: It is the guiding principle which guarantees the military forces always being under the command of the Party. Reference 3: It is the practical guide for the army always to heed the directions of the party. What’s the answer? ? Lecture 1, 7/21/2005 Slide from Bonnie Dorr 1/13 Natural Language Processing 71

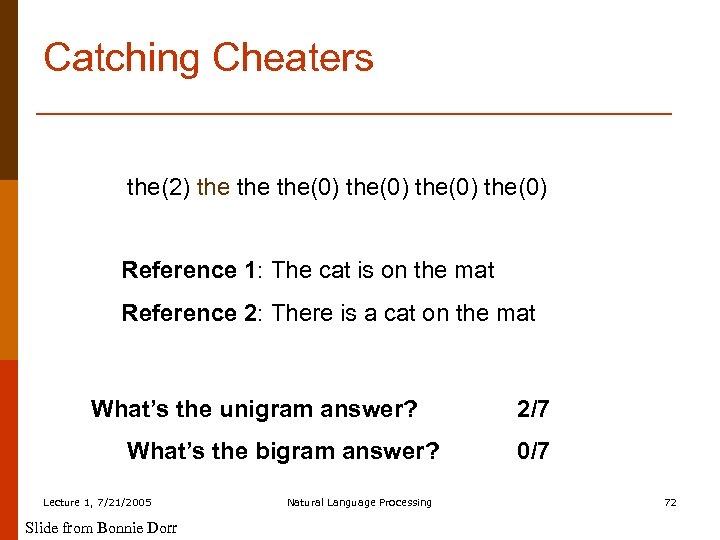

Catching Cheaters

- Candidate: the the the the the the the
- Clipped counts: the(2), the the(0)
- Reference 1: The cat is on the mat
- Reference 2: There is a cat on the mat

What's the unigram answer? 2/7

What's the bigram answer? 0/6 (seven words yield six bigrams, and *the the* occurs in neither reference)

(Slide from Bonnie Dorr)
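A short check of the cheater example, assuming the references are lowercased by hand and split on whitespace. The helper name `clipped_precision` is hypothetical; it forms n-grams by zipping shifted copies of the token list.

```python
from collections import Counter

candidate = "the the the the the the the".split()
references = ["the cat is on the mat".split(), "there is a cat on the mat".split()]


def clipped_precision(cand, refs, n):
    """Modified n-gram precision as (clipped matches, candidate n-grams)."""
    def grams(toks):
        # zip(toks, toks[1:], ...) yields the consecutive n-grams as tuples.
        return Counter(zip(*(toks[i:] for i in range(n))))

    best = Counter()
    for ref in refs:
        best |= grams(ref)  # max count in any single reference
    cand_counts = grams(cand)
    return sum(min(c, best[g]) for g, c in cand_counts.items()), sum(cand_counts.values())


print(clipped_precision(candidate, references, 1))  # (2, 7)
print(clipped_precision(candidate, references, 2))  # (0, 6)
```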

Catching Cheaters the(2) the the(0) Reference 1: The cat is on the mat Reference 2: There is a cat on the mat What’s the unigram answer? What’s the bigram answer? Lecture 1, 7/21/2005 Slide from Bonnie Dorr Natural Language Processing 2/7 0/7 72

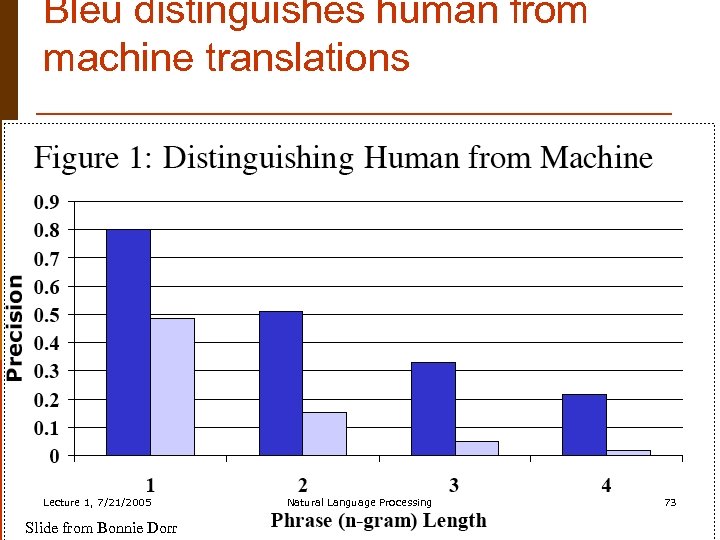

Bleu distinguishes human from machine translations

(Slide from Bonnie Dorr)

Bleu distinguishes human from machine translations Lecture 1, 7/21/2005 Slide from Bonnie Dorr Natural Language Processing 73

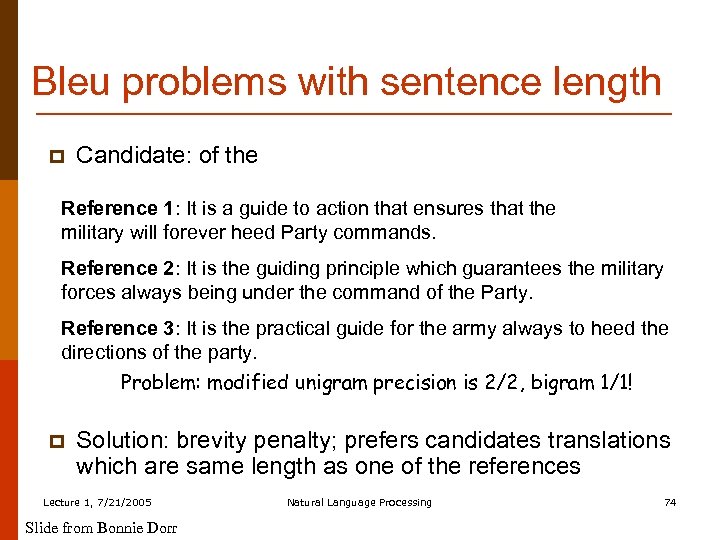

Bleu problems with sentence length

- Candidate: of the
- Reference 1: It is a guide to action that ensures that the military will forever heed Party commands.
- Reference 2: It is the guiding principle which guarantees the military forces always being under the command of the Party.
- Reference 3: It is the practical guide for the army always to heed the directions of the party.

Problem: modified unigram precision is 2/2, bigram 1/1! (*of*, *the* and *of the* all occur in References 2 and 3.)

Solution: a brevity penalty, which prefers candidate translations that are the same length as one of the references.

(Slide from Bonnie Dorr)
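A sketch of the brevity penalty as defined by Papineni et al.: $BP = 1$ if the candidate length $c$ exceeds the effective reference length $r$, otherwise $e^{1 - r/c}$. Its logarithm, $\min(1 - r/c, 0)$, equals the negated max term in the BLEU-4 formula. Papineni et al. compute the penalty over a whole test corpus; the sentence-level version here, its choice of the reference length closest to the candidate (ties going to the shorter reference), and the name `brevity_penalty` are assumptions for illustration.

```python
import math


def brevity_penalty(cand_len, ref_lens):
    """Hypothetical sentence-level sketch of BLEU's brevity penalty."""
    if cand_len == 0:
        return 0.0
    # Effective reference length: the reference closest in length to the candidate.
    r = min(ref_lens, key=lambda ref_len: (abs(ref_len - cand_len), ref_len))
    return 1.0 if cand_len > r else math.exp(1 - r / cand_len)


# Word counts of References 1-3 (16, 18 and 16 words).
ref_lens = [16, 18, 16]
print(brevity_penalty(2, ref_lens))   # "of the": exp(1 - 16/2) = exp(-7), about 0.0009
print(brevity_penalty(18, ref_lens))  # Candidate 1 (18 words): 1.0
```

Multiplied by this penalty, the perfect 2/2 and 1/1 precisions of "of the" no longer yield a high score.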

Bleu problems with sentence length p Candidate: of the Reference 1: It is a guide to action that ensures that the military will forever heed Party commands. Reference 2: It is the guiding principle which guarantees the military forces always being under the command of the Party. Reference 3: It is the practical guide for the army always to heed the directions of the party. Problem: modified unigram precision is 2/2, bigram 1/1! p Solution: brevity penalty; prefers candidates translations which are same length as one of the references Lecture 1, 7/21/2005 Slide from Bonnie Dorr Natural Language Processing 74

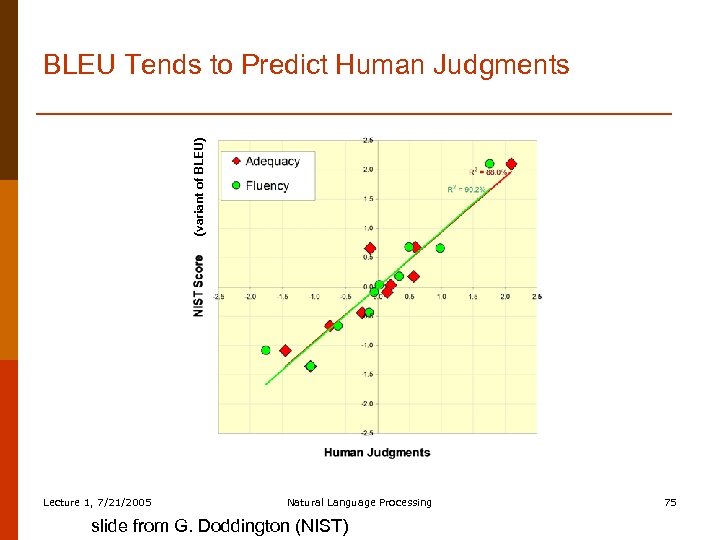

BLEU Tends to Predict Human Judgments

(variant of BLEU; slide from G. Doddington, NIST)

Summary

- Intro and a little history
- Language Similarities and Divergences
- Four main MT Approaches
  - Transfer
  - Interlingua
  - Direct
  - Statistical
- Evaluation

Classes

- LINGUIST 139M/239M. Human and Machine Translation (Martin Kay)
- CS 224N. Natural Language Processing (Chris Manning)
