- Number of slides: 37

CS 336: Intelligent Information Retrieval Lecture 8: Indexing Models

Basic Automatic Indexing
• Parse documents to recognize structure
  – e.g. title, date, other fields
• Scan for word tokens
• Stopword removal
• Stem words
• Weight words
  – using frequency in documents and database
  – frequency data independent of retrieval model
• Optional
  – phrase indexing
  – thesaurus classes
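
The indexing steps above (tokenize, remove stopwords, stem, count) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the stopword list is a tiny made-up sample, and `crude_stem` is a toy suffix stripper standing in for a real stemmer such as Porter's.

```python
import re
from collections import Counter

# Hypothetical, tiny stopword list; real systems use a few hundred words.
STOPWORDS = {"a", "an", "and", "the", "of", "in", "to", "is", "are", "for"}

def tokenize(text):
    """Scan for word tokens: lowercase alphabetic runs."""
    return re.findall(r"[a-z]+", text.lower())

def crude_stem(word):
    """Toy suffix stripper (assumption), not a real stemming algorithm."""
    for suffix in ("ing", "es", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

def index_terms(text):
    """Return term frequencies after stopword removal and stemming."""
    tokens = [t for t in tokenize(text) if t not in STOPWORDS]
    return Counter(crude_stem(t) for t in tokens)

doc = "Indexing the documents: parsing, stopping and stemming of words."
print(index_terms(doc))
```

The resulting counts are the raw within-document frequencies that a weighting scheme would later combine with collection-level statistics.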

Words vs. Terms vs. “Concepts”
• Concept-based retrieval
  – often used to imply something beyond word indexing
• In virtually all systems, a concept is a name given to a set of recognition criteria or rules
  – similar to a thesaurus class
• Words, phrases, synonyms, linguistic relations can all be evidence used to infer presence of the concept
  – e.g. “information retrieval” can be inferred based on:
    • “information”
    • “retrieval”
    • “information retrieval”
    • “text retrieval”

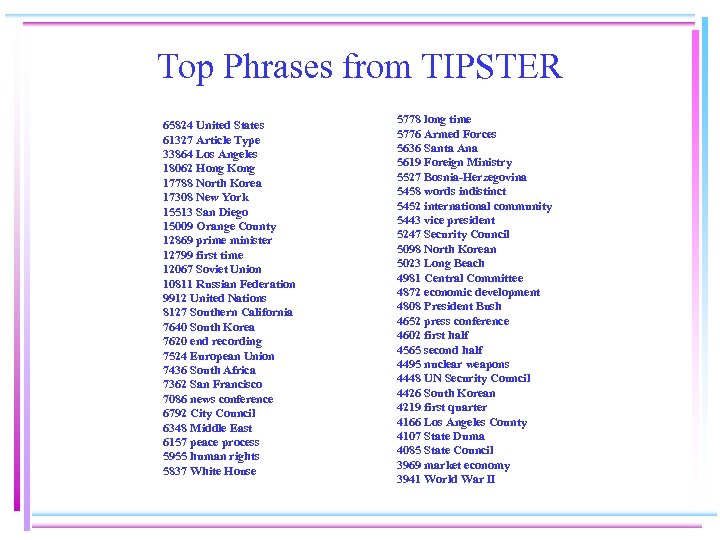

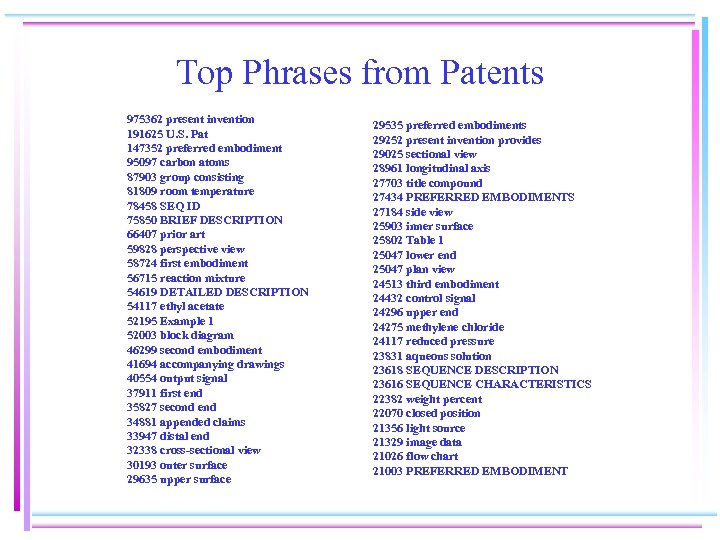

Phrases
• Both statistical and syntactic methods have been used to identify “good” phrases
• Proven techniques:
  – find all word pairs that occur more than n times
  – use a POS tagger to identify simple noun phrases
• 1,100,000 phrases extracted from all TREC data (>1,000 documents)
• 3,700,000 phrases extracted from PTO 1996 data
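
The statistical technique (keep word pairs that occur more than n times) might look like the sketch below. It assumes that "pair" means adjacent words and that counts are pooled over the whole collection; the sample sentences are made up, and no stopword filtering is applied.

```python
import re
from collections import Counter

def frequent_pairs(docs, n):
    """Count adjacent word pairs across docs; keep those seen more than n times."""
    counts = Counter()
    for text in docs:
        words = re.findall(r"[a-z]+", text.lower())
        counts.update(zip(words, words[1:]))
    return {pair: c for pair, c in counts.items() if c > n}

docs = [
    "The Black Sea borders Turkey.",
    "Shipping on the Black Sea grew.",
    "A black cat sat by the sea.",
]
print(frequent_pairs(docs, 1))
```

On this toy input the surviving pairs are ("the", "black") and ("black", "sea"), which shows why a stopword filter or a POS-based noun-phrase check is usually layered on top of raw pair counts.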

Phrases
• Phrases can have an impact on both effectiveness and efficiency
  – phrase indexing will speed up phrase queries
  – finding documents containing “Black Sea” is better than finding documents containing both words alone
  – effectiveness is not straightforward and depends on retrieval model
    • e.g. for “information retrieval” or “green house”, how much do individual words count?
  – effectiveness can also depend upon collection

Top Phrases from TIPSTER
Count   Phrase
65824   United States
61327   Article Type
33864   Los Angeles
18062   Hong Kong
17788   North Korea
17308   New York
15513   San Diego
15009   Orange County
12869   prime minister
12799   first time
12067   Soviet Union
10811   Russian Federation
9912    United Nations
8127    Southern California
7640    South Korea
7620    end recording
7524    European Union
7436    South Africa
7362    San Francisco
7086    news conference
6792    City Council
6348    Middle East
6157    peace process
5955    human rights
5837    White House
5778    long time
5776    Armed Forces
5636    Santa Ana
5619    Foreign Ministry
5527    Bosnia-Herzegovina
5458    words indistinct
5452    international community
5443    vice president
5247    Security Council
5098    North Korean
5023    Long Beach
4981    Central Committee
4872    economic development
4808    President Bush
4652    press conference
4602    first half
4565    second half
4495    nuclear weapons
4448    UN Security Council
4426    South Korean
4219    first quarter
4166    Los Angeles County
4107    State Duma
4085    State Council
3969    market economy
3941    World War II

Top Phrases from Patents
Count   Phrase
975362  present invention
191625  U.S. Pat
147352  preferred embodiment
95097   carbon atoms
87903   group consisting
81809   room temperature
78458   SEQ ID
75850   BRIEF DESCRIPTION
66407   prior art
59828   perspective view
58724   first embodiment
56715   reaction mixture
54619   DETAILED DESCRIPTION
54117   ethyl acetate
52195   Example 1
52003   block diagram
46299   second embodiment
41694   accompanying drawings
40554   output signal
37911   first end
35827   second end
34881   appended claims
33947   distal end
32338   cross-sectional view
30193   outer surface
29635   upper surface
29535   preferred embodiments
29252   present invention provides
29025   sectional view
28961   longitudinal axis
27703   title compound
27434   PREFERRED EMBODIMENTS
27184   side view
25903   inner surface
25802   Table 1
25047   lower end
25047   plan view
24513   third embodiment
24432   control signal
24296   upper end
24275   methylene chloride
24117   reduced pressure
23831   aqueous solution
23618   SEQUENCE DESCRIPTION
23616   SEQUENCE CHARACTERISTICS
22382   weight percent
22070   closed position
21356   light source
21329   image data
21026   flow chart
21003   PREFERRED EMBODIMENT

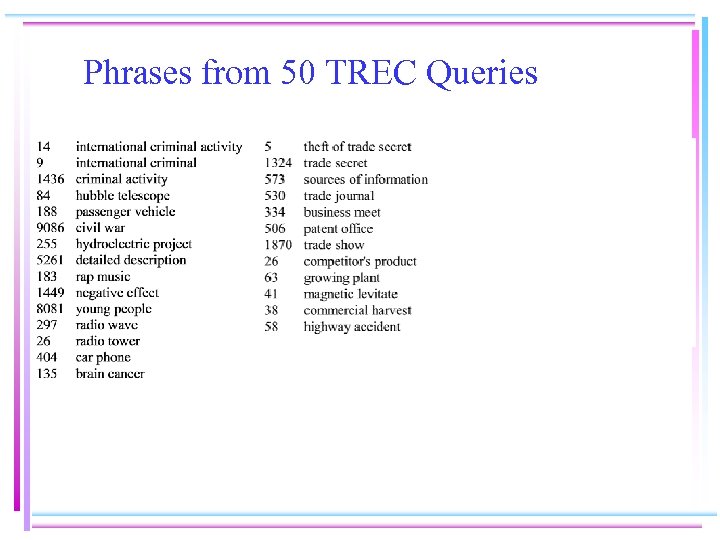

Phrases from 50 TREC Queries

Collocation (Co-occurrence)
• Co-occurrence patterns of words & word classes
  – reveal significant information about how a language is used. Apply to:
    • building dictionaries (lexicography)
    • IR tasks such as phrase detection, indexing, query expansion, building thesauri
• Co-occurrence based on text windows
  – typical window may be 100 words
  – smaller windows used for lexicography, e.g. adjacent pairs or 5 words

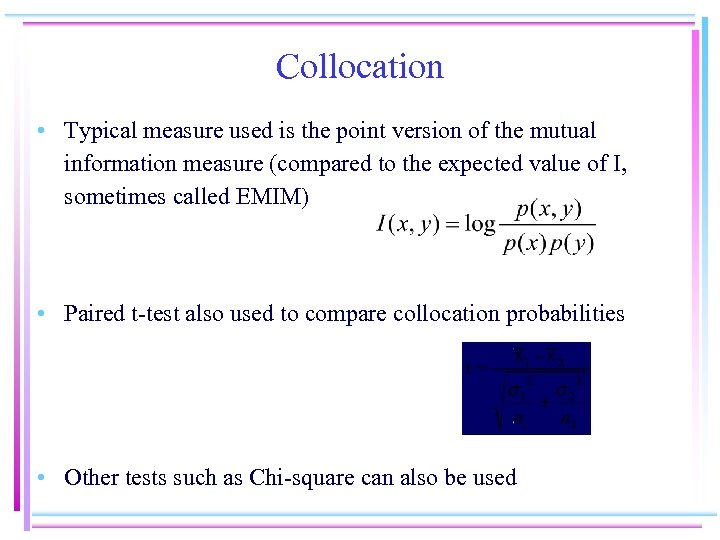

Collocation
• Typical measure used is the point version of the mutual information measure (compared to the expected value of I, sometimes called EMIM)
• Paired t-test also used to compare collocation probabilities
• Other tests such as Chi-square can also be used
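
Pointwise mutual information is commonly written as I(x, y) = log2( P(x, y) / (P(x) P(y)) ). The sketch below estimates it from co-occurrence counts inside a sliding text window. The estimation details are assumptions made for illustration: pair probabilities are relative frequencies over all counted pairs, word probabilities are relative token frequencies, and `window` counts tokens including the anchor word.

```python
import math
from collections import Counter

def pmi_table(tokens, window=5):
    """Pointwise mutual information for word pairs co-occurring within `window` tokens."""
    n = len(tokens)
    word_counts = Counter(tokens)
    pair_counts = Counter()
    for i, w in enumerate(tokens):
        # Look ahead at the next window-1 tokens; pairs are stored order-free.
        for v in tokens[i + 1 : i + window]:
            if v != w:
                pair_counts[tuple(sorted((w, v)))] += 1
    total_pairs = sum(pair_counts.values())
    scores = {}
    for (x, y), c in pair_counts.items():
        p_xy = c / total_pairs
        p_x = word_counts[x] / n
        p_y = word_counts[y] / n
        scores[(x, y)] = math.log2(p_xy / (p_x * p_y))
    return scores

tokens = "new york is big and new york is busy".split()
scores = pmi_table(tokens, window=2)  # window=2 means adjacent pairs only
for pair, value in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(pair, round(value, 2))
```

Note that on small samples the measure favours rare words, which is one reason significance tests such as the t-test or Chi-square are used alongside it.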

Indexing Models
• Which terms should index a document?
  – what terms describe the documents in the collection?
  – what terms are good for discriminating between documents?
• Different focus than retrieval model, but related
• Sometimes seen as term weighting
  – TF.IDF
  – Term Discrimination model
  – 2-Poisson model
  – Language models
  – Clumping model

Indexing Models
• Term Weighting
  – 2 components
    • term weight indicating its relative importance
    • similarity measure: use term weights to calculate similarity between query and document
• How do we determine the importance of indexing terms?
  – We want to do this in a way that distinguishes Relevant documents from Non-relevant documents

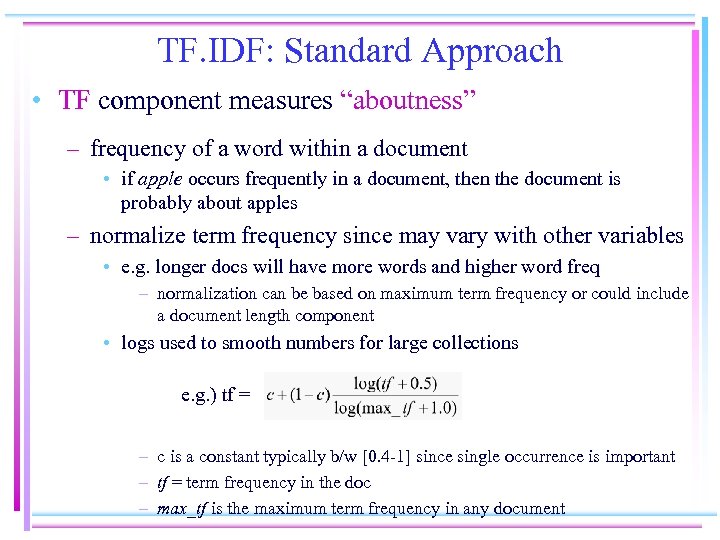

TF.IDF: Standard Approach
• TF component measures “aboutness”
  – frequency of a word within a document
    • if “apple” occurs frequently in a document, then the document is probably about apples
  – normalize term frequency, since it may vary with other variables
    • e.g. longer docs will have more words and higher word frequencies
  – normalization can be based on maximum term frequency or could include a document length component
    • logs used to smooth numbers for large collections
• Example TF formula (given as an image on the original slide), where
  – c is a constant, typically in the range [0.4, 1], since a single occurrence is important
  – tf = term frequency in the doc
  – max_tf is the maximum term frequency in any document
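
The slide's own TF formula is not recoverable from the text, so the sketch below uses one widely used normalization that fits the listed symbols: TF = c + (1 - c) * tf / max_tf. Treat this form, the default `c=0.5`, and the optional log damping as assumptions, not as the lecture's formula.

```python
import math

def normalized_tf(tf, max_tf, c=0.5, use_log=False):
    """Assumed form: c + (1 - c) * tf / max_tf, optionally on log-damped counts."""
    if tf <= 0:
        return 0.0  # a term that does not occur gets no weight
    if use_log:
        # Log damping (assumption): compresses large raw counts.
        ratio = math.log(1 + tf) / math.log(1 + max_tf)
    else:
        ratio = tf / max_tf
    return c + (1 - c) * ratio

print(normalized_tf(1, 10, c=0.4))
print(normalized_tf(10, 10, c=0.4))
print(normalized_tf(1, 10, c=0.4, use_log=True))
```

With this form a single occurrence already earns at least c, which matches the remark that a single occurrence is important.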

TF.IDF
• Inverse document frequency (IDF) measures “discrimination value”
  – if “apple” occurs in many documents, will it tell us anything about how those documents differ?
  – proposed by Sparck Jones in 1972: IDF = log(N/df) + 1
    • N is the number of documents in the collection
    • df is the number of documents the term occurs in
• wt (term weight) = TF*IDF
  – reward a term for occurring frequently in a document (high tf)
  – penalize it for occurring frequently in the collection (low idf)

Computing idf
• Assume 10,000 documents (logs are base 2).
• If one term appears in 10 documents: idf = log(10,000/10) + 1 = log(1000) + 1 ≈ 11
• If another term appears in 100 documents: idf = log(10,000/100) + 1 = log(100) + 1 ≈ 8
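
The two worked values can be checked directly. The rounded results on the slide (about 11 and about 8) match a base-2 logarithm, so the sketch uses `math.log2`; the function name `idf` is just an illustrative choice.

```python
import math

def idf(n_docs, df):
    """IDF = log2(N / df) + 1, using base 2 to match the slide's rounded values."""
    return math.log2(n_docs / df) + 1

print(round(idf(10_000, 10), 2))   # 10.97
print(round(idf(10_000, 100), 2))  # 7.64
```

A tenfold increase in document frequency lowers the weight by log2(10), roughly 3.3, regardless of collection size.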

Term Discrimination Model
• Based on vector space model
  – documents and queries are vectors in an n-dimensional space for n terms
• Basic idea:
  – Compute discrimination value of a term
    • degree to which use of the term will help to distinguish documents
    • based on comparing average similarity of documents both with and without an index term

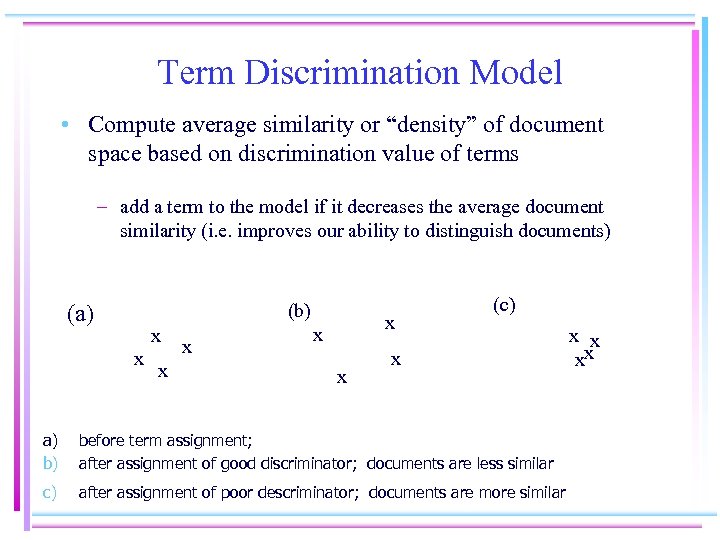

Term Discrimination Model
• Compute average similarity or “density” of document space based on discrimination value of terms
  – add a term to the model if it decreases the average document similarity (i.e. improves our ability to distinguish documents)
[Figure: document space in three states]
  a) before term assignment
  b) after assignment of good discriminator; documents are less similar
  c) after assignment of poor discriminator; documents are more similar

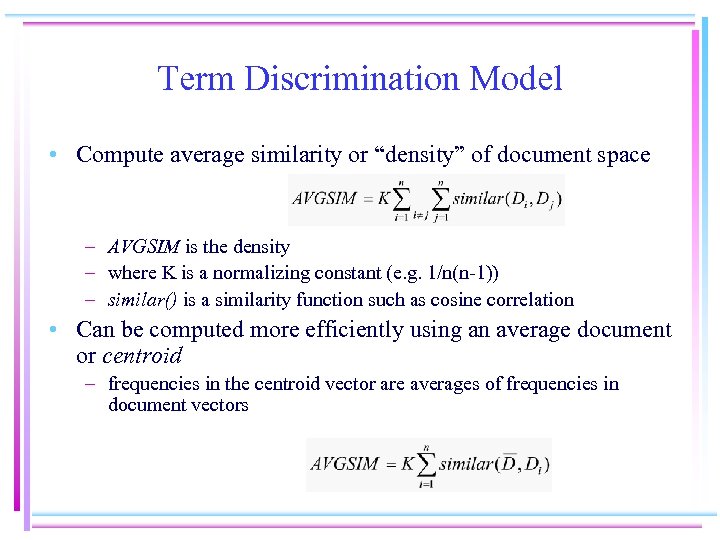

Term Discrimination Model
• Compute average similarity or “density” of document space
  – AVGSIM is the density
  – where K is a normalizing constant (e.g. 1/n(n-1))
  – similar() is a similarity function such as cosine correlation
• Can be computed more efficiently using an average document or centroid
  – frequencies in the centroid vector are averages of frequencies in document vectors
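
The density formula itself did not survive in the text. A form consistent with the definitions above (K, similar(), n documents) is the usual pairwise sum from the term discrimination literature; the symbols D_i (document vectors), C (centroid) and f_{ik} (frequency of term k in document i) are notation assumed here for illustration.

```latex
\[
\mathrm{AVGSIM} \;=\; K \sum_{i=1}^{n} \sum_{\substack{j=1 \\ j \neq i}}^{n}
  \mathrm{similar}(D_i, D_j),
\qquad K = \frac{1}{n(n-1)}
\]
\[
C_k \;=\; \frac{1}{n} \sum_{i=1}^{n} f_{ik},
\qquad
\mathrm{AVGSIM} \;\approx\; \frac{1}{n} \sum_{i=1}^{n} \mathrm{similar}(C, D_i)
\]
```

The first expression needs on the order of n² similarity computations; the centroid version needs only n, which is the efficiency gain the slide refers to.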

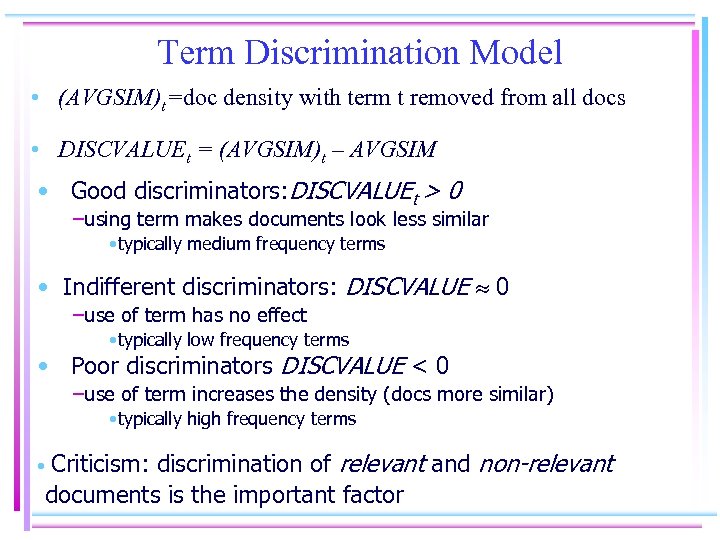

Term Discrimination Model
• (AVGSIM)t = doc density with term t removed from all docs
• DISCVALUEt = (AVGSIM)t - AVGSIM
• Good discriminators: DISCVALUEt > 0
  – using term makes documents look less similar
    • typically medium frequency terms
• Indifferent discriminators: DISCVALUEt ≈ 0
  – use of term has no effect
    • typically low frequency terms
• Poor discriminators: DISCVALUEt < 0
  – use of term increases the density (docs more similar)
    • typically high frequency terms
• Criticism: discrimination of relevant and non-relevant documents is the important factor
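
A minimal sketch of the discrimination value, assuming cosine similarity and the centroid-based density (mean similarity of each document to the average document). The term-frequency vectors are made-up toy data, and the function names are illustrative.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def avgsim(docs):
    """Density: mean cosine similarity between each document and the centroid."""
    n = len(docs)
    centroid = [sum(col) / n for col in zip(*docs)]
    return sum(cosine(centroid, d) for d in docs) / n

def disc_value(docs, t):
    """DISCVALUE_t = (AVGSIM with term t removed) - AVGSIM."""
    without_t = [[w for i, w in enumerate(d) if i != t] for d in docs]
    return avgsim(without_t) - avgsim(docs)

# Toy term-frequency vectors; columns are terms 0..2 (made-up data).
docs = [
    [3, 2, 0],
    [3, 0, 2],
    [3, 2, 0],
    [3, 0, 2],
]
for t in range(3):
    print(t, round(disc_value(docs, t), 4))
```

Term 0 occurs equally in every document, so removing it makes the documents look less alike and its value is negative (a poor discriminator); terms 1 and 2 split the collection and come out positive.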

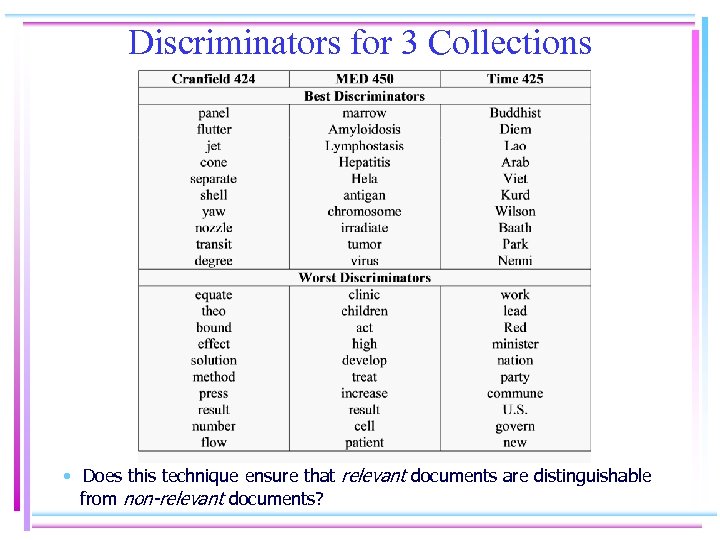

Discriminators for 3 Collections
• Does this technique ensure that relevant documents are distinguishable from non-relevant documents?

Summary
• Index model identifies how to represent documents
• Content-based indexing
  – Typically use features occurring within document
• Identify features used to represent documents
  – Words, phrases, concepts, etc.
• Normalize if needed
  – Stopping, stemming, etc.
• Assign index term weights (measure of significance)
  – TF*IDF, discrimination value, etc.
• Other decisions determined by retrieval model
  – e.g. how to incorporate term weights

Queries
• What is a query?
• Query languages
• Query formulation
• Query processing

Queries and Information Needs
• Information need is specific to searcher
• Many different kinds of information needs
  – known item
  – known attribute
  – general content search
  – exhaustive literature review
• Information need often poorly understood
  – evolves during search process
  – influenced by collection and system
• Serendipity
• Query is some interpretable form of information need

Queries
• Inherent ambiguity!
• Form of query depends on intended interpreter
  – NL statement for a colleague
  – NL statement for a reference librarian
  – free text statement for a retrieval system
  – Boolean expression for a retrieval system
• Often multiple query ‘translations’
  – Judge describes need to law clerk
  – Clerk describes need to law librarian
  – Librarian formulates free text query to Westlaw
  – Westlaw translates query to internal form for search engine
  – Westlaw translates for external systems (e.g. Dialog, Dow Jones)

• Different IR systems generate different answers to different kinds of queries (e.g., full-text IR system vs. Boolean ranking system)
• Different IR models dictate which queries can be formulated
• For conventional IR models, natural language (NL) queries are the main type of queries
• To find relevant answers to a query, techniques have been developed to enhance & preprocess the query (e.g., synonyms of keywords, thesaurus, stemming, stopwords, etc.)

Query Formulation
• 2 basic query language types
  – Boolean, structured
  – free text
• Many systems support some combination of both
• User interface is crucial part of query formulation
  – covered later
• Tools provided to support formulation
  – query processing and weighting
  – query expansion
  – dictionaries and thesauri
  – relevance feedback

Boolean Queries
• Queries that combine words & Boolean operators
• Syntax of a Boolean query:
  – Boolean operators:
    • OR, AND, and BUT (i.e., NOT) are common operators
• Documents retrieved satisfy Boolean algebra; the result is a document set as opposed to a ranked list
  – e.g. information AND retrieval
  – the result set contains all documents containing at least one occurrence of both query words
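
Boolean retrieval maps naturally onto set operations over an inverted index. The sketch below is illustrative only: the three documents are made up, tokenization is a plain whitespace split, and `build_index` is a hypothetical helper name.

```python
from collections import defaultdict

def build_index(docs):
    """Map each lowercase word to the set of ids of documents containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for word in text.lower().split():
            index[word].add(doc_id)
    return index

docs = {
    1: "information retrieval systems",
    2: "information theory",
    3: "text retrieval",
}
index = build_index(docs)
info, retr = index["information"], index["retrieval"]

print(info & retr)  # information AND retrieval
print(info | retr)  # information OR retrieval
print(info - retr)  # information BUT retrieval (AND NOT)
```

Each result is an unordered set of document ids, which is exactly the "document set as opposed to ranked list" behaviour described above.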

Boolean Queries
• May sort the retrieved documents by some criterion
• Drawbacks of Boolean queries
  – Users must be familiar with Boolean expressions
  – Classic IR systems based on Boolean queries provide no ranking (i.e., either ‘yes’ or ‘no’) and hence no partial matching
• Extended Boolean system: fuzzy Boolean operators
  – Relax the meaning of AND and OR using SOME
  – Rank docs according to # of matched operands in a query
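
One simple reading of "rank docs according to # of matched operands" is to count how many query operands each document contains and sort by that count. This is a sketch of that idea only, not of any particular extended Boolean model; the data and the tie-breaking by document id are assumptions.

```python
def rank_by_matched_operands(docs, operands):
    """Rank doc ids by how many query operands they contain (ties broken by id)."""
    scored = []
    for doc_id, text in docs.items():
        words = set(text.lower().split())
        matches = sum(1 for term in operands if term in words)
        if matches:  # SOME: at least one operand must match
            scored.append((doc_id, matches))
    return sorted(scored, key=lambda pair: (-pair[1], pair[0]))

docs = {
    1: "information retrieval systems",
    2: "information theory",
    3: "text retrieval",
}
print(rank_by_matched_operands(docs, ["information", "retrieval", "text"]))
```

Unlike a strict AND, no document is rejected for missing one operand; it is simply ranked lower, which gives the partial matching that classic Boolean systems lack.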

Natural Language Querying
• NL queries popular because they are intuitive, easy to express, fast in ranking
• Simplest form: a word or set of words
• Complex form: combination of operations w/ words
• Basic queries:
  – Queries composed of a set of individual words
  – Multiple-word queries (including phrases or proximity)
  – Pattern-matching queries

Single-Word Querying
• Retrieved documents
  – contain at least one of the words in the query
  – typically ranked according to their similarity to the query based on tf and idf
• Supplement single-word querying by considering the proximity of words, i.e., the context of words
• Related words often appear together (co-occurrence)
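
Ranking by tf and idf can be sketched as follows: every document that contains at least one query word is scored by the sum of tf * idf over the query words it contains. The sketch assumes raw (unnormalized) tf, idf = log2(N/df) + 1, and made-up documents; a real system would normalize tf and usually the document length as well.

```python
import math
from collections import Counter

def rank(docs, query):
    """Score each doc by the sum of tf * idf over the query words it contains."""
    n = len(docs)
    tfs = {d: Counter(text.lower().split()) for d, text in docs.items()}
    # Document frequency: number of docs in which each word appears.
    df = Counter(w for tf in tfs.values() for w in tf)
    scores = {}
    for d, tf in tfs.items():
        score = sum(tf[w] * (math.log2(n / df[w]) + 1) for w in query if tf[w])
        if score > 0:
            scores[d] = score
    return sorted(scores.items(), key=lambda kv: -kv[1])

docs = {
    1: "apple pie apple",
    2: "apple orchard",
    3: "banana bread",
}
for doc_id, score in rank(docs, ["apple", "pie"]):
    print(doc_id, round(score, 3))
```

Document 3 contains neither query word and is not retrieved; document 1 outranks document 2 because it repeats "apple" and also contains the rarer word "pie".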

Queries Exploiting Context
• Phrase:
  – query contains groups of adjacent words
    • stopwords are typically eliminated
• Proximity:
  – relaxed-phrase queries
  – sequence of single words and/or phrases with a maximum distance between them
  – distance can be measured by number of characters or words
  – words/phrases, as specified in the query, can appear in any order in the retrieved docs
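
A proximity test for two single words can be sketched like this. It assumes distance is measured in words as the difference between token positions, and that the terms may appear in either order; the function name and sample text are illustrative.

```python
def within_distance(text, term_a, term_b, max_words):
    """True if term_a and term_b occur, in either order, at most max_words positions apart."""
    words = text.lower().split()
    pos_a = [i for i, w in enumerate(words) if w == term_a]
    pos_b = [i for i, w in enumerate(words) if w == term_b]
    return any(abs(i - j) <= max_words for i in pos_a for j in pos_b)

text = "retrieval of textual information"
print(within_distance(text, "information", "retrieval", 3))
print(within_distance(text, "information", "retrieval", 2))
```

A strict phrase query is the special case where the order is fixed and the allowed distance is exactly one position.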

Natural Language Queries
• Rank retrieved documents according to their degrees of matching
• Negation
  – documents that contain the negated words are penalized in the ranking computation
• Establish a threshold to eliminate low-weighted docs
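
Negation and thresholding can be combined in a toy scoring function. Everything about the scoring scheme here is an assumption chosen for brevity: each wanted word adds 1, each negated word subtracts `penalty`, and documents scoring at or below `threshold` are dropped.

```python
def score_with_negation(docs, wanted, negated, penalty=1.0, threshold=0.0):
    """Wanted words add 1 each; negated words subtract `penalty` each."""
    results = []
    for doc_id, text in docs.items():
        words = set(text.lower().split())
        score = sum(w in words for w in wanted) - penalty * sum(w in words for w in negated)
        if score > threshold:  # eliminate low-weighted docs
            results.append((doc_id, score))
    return sorted(results, key=lambda pair: (-pair[1], pair[0]))

docs = {
    1: "jaguar car speed",
    2: "jaguar cat habitat",
    3: "car engine",
}
print(score_with_negation(docs, wanted=["jaguar", "car"], negated=["cat"]))
```

Document 2 matches "jaguar" but is pushed down to the threshold by the negated word "cat", so it is removed rather than returned with a low rank.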

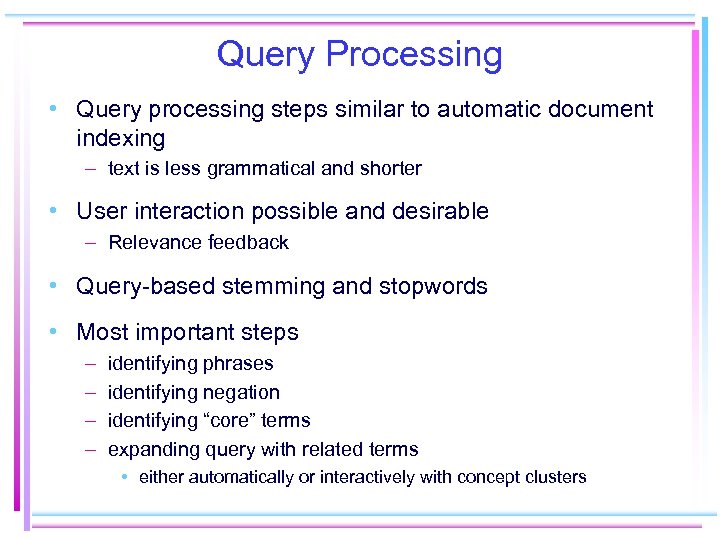

Query Processing
• Query processing steps similar to automatic document indexing
  – text is less grammatical and shorter
• User interaction possible and desirable
  – Relevance feedback
• Query-based stemming and stopwords
• Most important steps
  – identifying phrases
  – identifying negation
  – identifying “core” terms
  – expanding query with related terms
    • either automatically or interactively with concept clusters

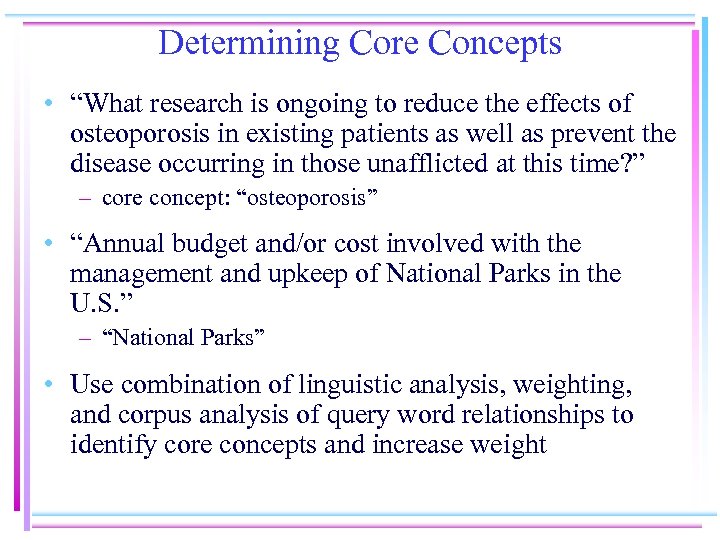

Determining Core Concepts
• “What research is ongoing to reduce the effects of osteoporosis in existing patients as well as prevent the disease occurring in those unafflicted at this time?”
  – core concept: “osteoporosis”
• “Annual budget and/or cost involved with the management and upkeep of National Parks in the U.S.”
  – core concept: “National Parks”
• Use combination of linguistic analysis, weighting, and corpus analysis of query word relationships to identify core concepts and increase weight

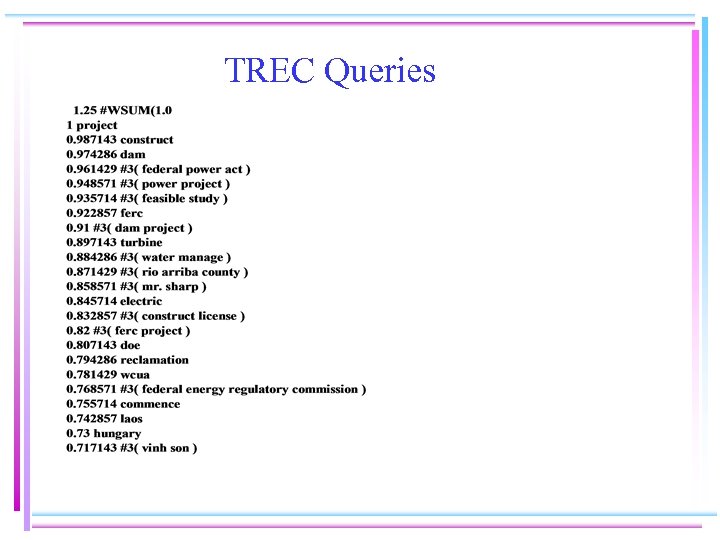

TREC Queries

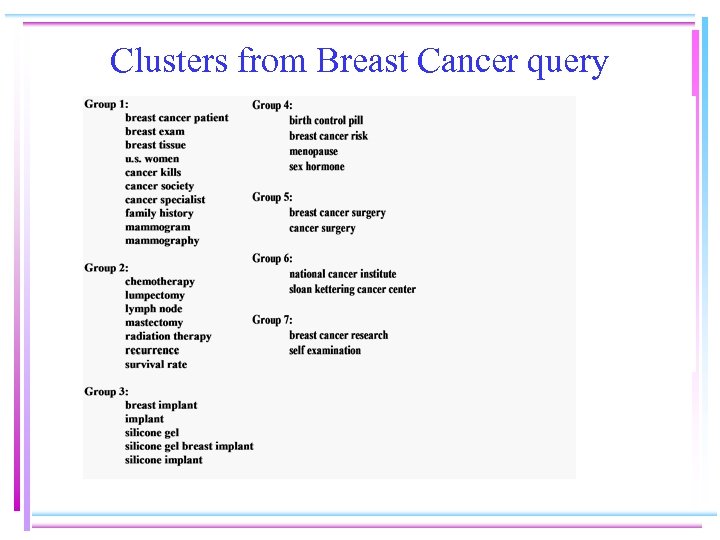

Clusters from Breast Cancer query

Other Representations
• N-grams
  – for spelling, Soundex, OCR errors
• Hypertext
  – citations
  – web links
• Reduced dimensionality
  – LSI
  – Neural networks
• Natural language processing
  – semantic primitives, frames
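
As an illustration of the N-gram representation listed above, character trigrams let a misspelled or OCR-damaged word still match its correct form. The sketch pads words with `#` and compares trigram sets with the Dice coefficient; both choices, and the function names, are assumptions made for the example.

```python
def char_ngrams(word, n=3):
    """Set of character n-grams of a word, padded with '#' at both ends."""
    padded = f"{'#' * (n - 1)}{word.lower()}{'#' * (n - 1)}"
    return {padded[i : i + n] for i in range(len(padded) - n + 1)}

def dice(a, b, n=3):
    """Dice coefficient between the character n-gram sets of two words."""
    ga, gb = char_ngrams(a, n), char_ngrams(b, n)
    return 2 * len(ga & gb) / (len(ga) + len(gb))

print(round(dice("retrieval", "retreival"), 3))  # transposed letters
print(round(dice("retrieval", "indexing"), 3))   # unrelated word
```

The transposition still shares most trigrams with the correct spelling, while the unrelated word shares none.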
