- Number of slides: 37

CS 257 Modelling Multimedia Information LECTURE 4

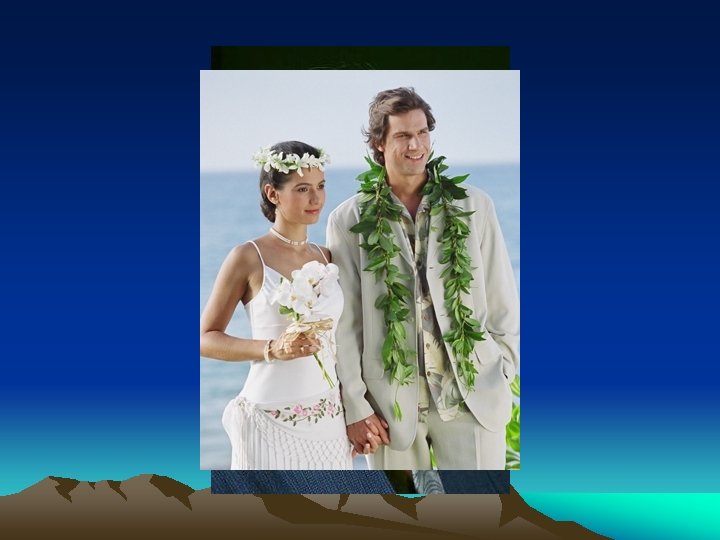

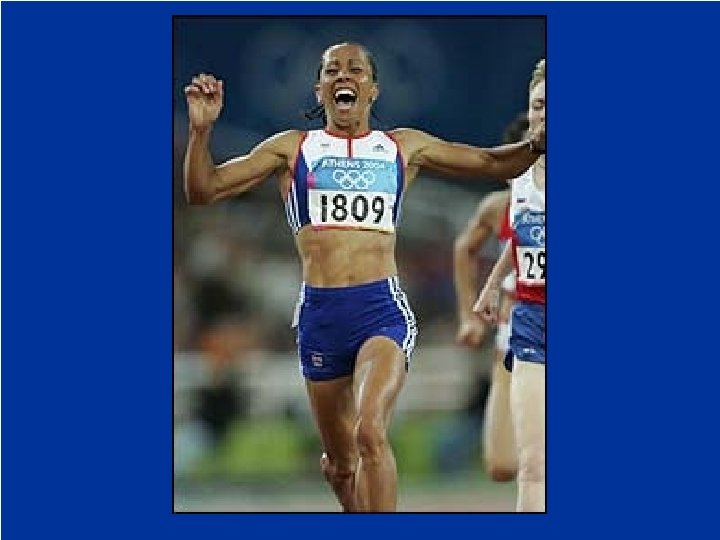

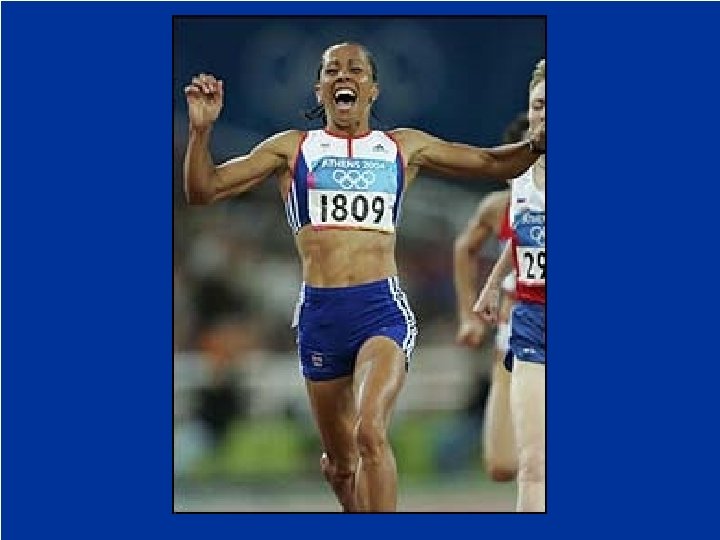

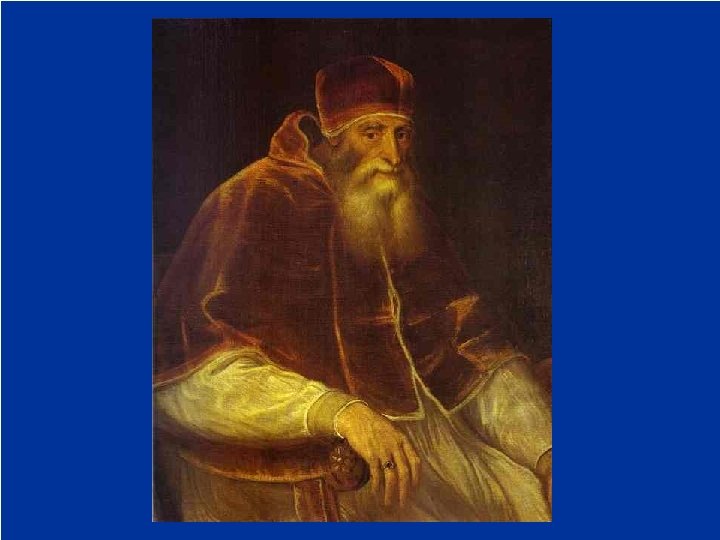

Describing Images

Imagine you are the indexer of an image collection.

1) List all the words you can think of that describe the following image, so that it could be retrieved by as many interested users as possible. Your words do NOT need to be factually correct, but they should show the range of things that could be said about the image.
2) Try to put your words into groups, so that each group of words says the same sort of thing about the image.
3) Which words (metadata) do you think a machine could extract from the image automatically?

Words to describe the image…

Overview of LECTURE 4
• PART 1: Different Kinds of Metadata for Visual Information; Thesauri and Controlled Vocabularies for Images
• PART 2: Standards for Indexing Visual Information
• PART 3: Current Image Retrieval Systems: how much indexing and retrieval can be automated?
• LAB: Evaluating state-of-the-art image retrieval systems

PART 1: Different Kinds of Metadata for Visual Information
• “A picture is worth a thousand words…”: it’s a cliché, but clichés are often true
• These words relate to different aspects of the image
  – we need labels to talk about the different kinds of metadata for images (useful for specifying, designing and comparing image retrieval applications)
  – we may want to structure how metadata is stored, rather than keeping one long list of keywords; this may help to reduce polysemy problems
• NB. Some kinds of metadata for visual information (image and video data) are more likely to require human input than others

Different Kinds of Metadata for Visual Information
• The kinds of image metadata used for a particular image retrieval application will depend in part on the types of images being stored and the types of user (Enser and Sandom 2003)
  – Four types of image:
    • Documentary, general purpose (e.g. news, wildlife)
    • Documentary, special purpose (e.g. medical X-rays, fingerprints)
    • Creative (artworks)
    • Models (maps, plans, charts)
  – Two types of user:
    • Specialist users
    • General public

Different Kinds of Metadata for Visual Information
• Frameworks that structure metadata for visual information into different kinds have been proposed by:
  – Del Bimbo (1999)
  – Shatford (1986)
  – Jaimes and Chang (2000)

3 Kinds of Metadata for Visual Information (Del Bimbo 1999)
• Content-independent metadata: data that is not directly concerned with image content and cannot necessarily be extracted from it, e.g. artist name, date, ownership
• Content-dependent metadata: perceptual facts to do with colour, texture and shape; these can be extracted automatically (and therefore objectively) from the image data
• Content-descriptive metadata (semantics): concerned with the relationships of image entities to real-world entities or temporal events, and with the emotions and meaning associated with visual signs and scenes; more subjective and much harder to extract automatically
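
To make the three kinds concrete, and to show why storing metadata in separate fields (rather than one long keyword list) can reduce polysemy, here is a minimal Python sketch. The `ImageRecord` class, the `search` function and the example records are hypothetical illustrations, not part of Del Bimbo's scheme or of any real system.

```python
from dataclasses import dataclass, field


@dataclass
class ImageRecord:
    """Hypothetical record that keeps Del Bimbo's three kinds of metadata in separate fields."""
    image_id: str
    # Content-independent: facts not derivable from the pixels (artist, date, owner).
    content_independent: dict[str, str] = field(default_factory=dict)
    # Content-dependent: perceptual facts computed from pixel data (colour, texture, shape).
    content_dependent: dict[str, str] = field(default_factory=dict)
    # Content-descriptive: semantic keywords, normally supplied by a human indexer.
    content_descriptive: set[str] = field(default_factory=set)


def search(records: list[ImageRecord], kind: str, value: str) -> list[str]:
    """Return the ids of records whose metadata of the given kind contains value."""
    hits = []
    for record in records:
        if kind == "independent":
            values = set(record.content_independent.values())
        elif kind == "dependent":
            values = set(record.content_dependent.values())
        elif kind == "descriptive":
            values = record.content_descriptive
        else:
            raise ValueError(f"unknown metadata kind: {kind!r}")
        if value in values:
            hits.append(record.image_id)
    return hits


# Illustrative records: "blue" is a perceptual fact for img1 but a mood keyword for img2.
records = [
    ImageRecord("img1", {"date": "2003"}, {"dominant_colour": "blue"}, {"sea", "holiday"}),
    ImageRecord("img2", {"owner": "gallery"}, {"dominant_colour": "grey"}, {"blue", "melancholy"}),
]
print(search(records, "dependent", "blue"))    # ['img1']
print(search(records, "descriptive", "blue"))  # ['img2']
```

Because the field is part of the query, a user looking for blue-coloured images and a user looking for melancholy "blue" images get different answers from the same word.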

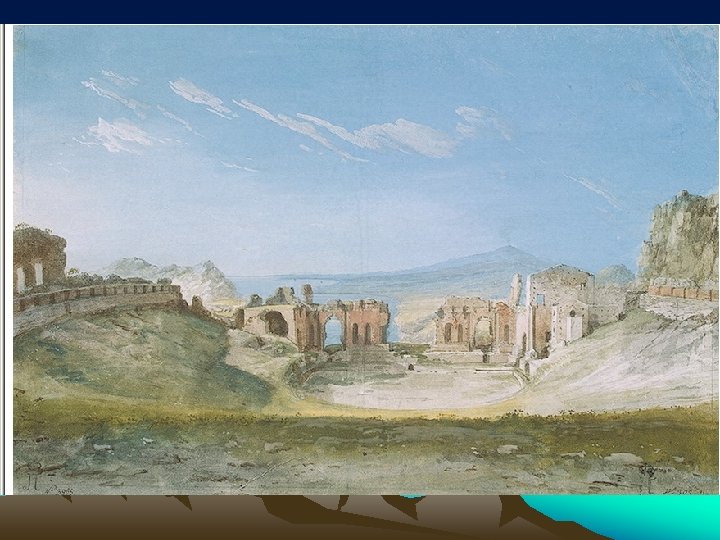

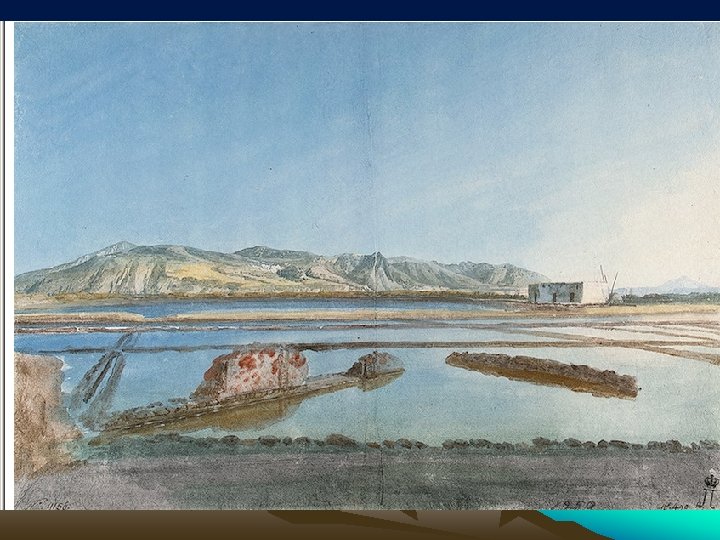

EXERCISE 4-1
Fill in the following for each of the images: some possible metadata of each kind (it doesn’t need to be factually correct).
• Content-independent metadata:
• Content-descriptive metadata:

Metadata for Visual Information: SUMMARY
• Del Bimbo (1999): content-independent; content-dependent; content-descriptive
• Shatford (1986): in effect refines ‘content-descriptive’ into pre-iconographic; iconographic; iconological, based on Panofsky’s ideas
• Jaimes and Chang (2000): 10 levels in their conceptual framework for visual information (more about this in the Optional Reading)

PART 2: Standards for Indexing Visual Information
• Exif: a common standard for images taken by digital cameras
• A&AT: Art and Architecture Thesaurus
• NASA Thesaurus
• ICONCLASS

Exif
• “Exif image file specification stipulates the method of recording image data in files, and specifies:
  – Structure of image data files
  – Tags used by this standard
  – Definition and management of format versions”
• Includes details such as the camera that took the image, shutter speed, aperture, time, etc.
www.exif.org
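
Exif tags are numeric IDs paired with values. The sketch below assumes a reader has already parsed a file into a `{tag_id: value}` mapping, with rational values delivered as `(numerator, denominator)` tuples; that input format is an assumption, not something tied to a particular library. The tag IDs are a small subset of the standard Exif tag table, while `describe_exif` and the sample values are hypothetical. Arguably, this camera information is content-independent metadata in Del Bimbo's terms, yet it is captured automatically.

```python
from fractions import Fraction

# A small subset of the standard Exif tag IDs (the full table is in the specification).
EXIF_TAG_NAMES = {
    0x010F: "Make",
    0x0110: "Model",
    0x829A: "ExposureTime",      # shutter speed, in seconds
    0x829D: "FNumber",           # aperture
    0x9003: "DateTimeOriginal",  # "YYYY:MM:DD HH:MM:SS"
}


def describe_exif(raw_tags: dict) -> dict:
    """Convert a {tag_id: value} mapping into readable name/value pairs.

    Assumes rational values arrive as (numerator, denominator) tuples.
    Tags missing from EXIF_TAG_NAMES are skipped.
    """
    info = {}
    for tag_id, value in raw_tags.items():
        name = EXIF_TAG_NAMES.get(tag_id)
        if name is None:
            continue
        if isinstance(value, tuple):
            value = Fraction(*value)
        if name == "ExposureTime":
            value = f"{value} s"            # e.g. Fraction(1, 250) -> "1/250 s"
        elif name == "FNumber":
            value = f"f/{float(value):g}"   # e.g. Fraction(28, 10) -> "f/2.8"
        info[name] = value
    return info


# Hypothetical tags for one photograph.
sample = {
    0x010F: "ExampleCam",
    0x0110: "Model X",
    0x829A: (1, 250),
    0x829D: (28, 10),
    0x9003: "2005:06:01 14:30:00",
    0x9999: "ignored",  # not in the small table above, so it is skipped
}
print(describe_exif(sample))
```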

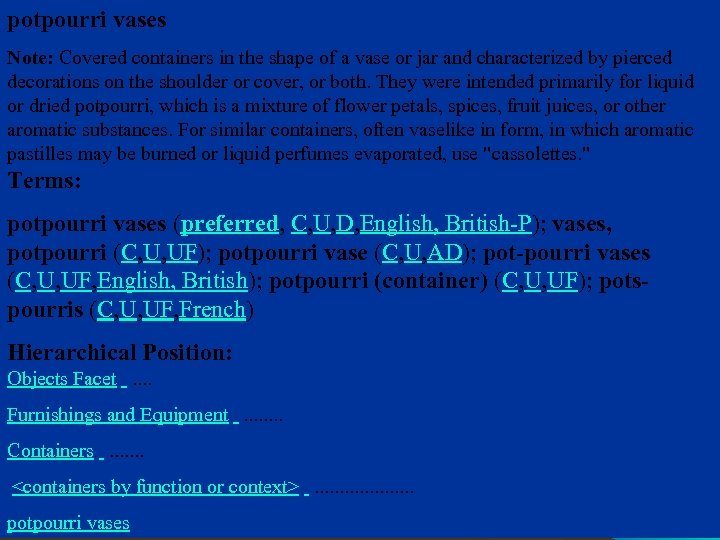

A&AT: Art and Architecture Thesaurus
www.getty.edu/research/tools/vocabulary/aat
• Under development since the 1980s
• Now 120,000 terms of controlled vocabulary, organised by concepts
• Each concept is:
  – linked to several terms (including a preferred term)
  – positioned in a hierarchy
  – linked to related concepts
• Terms cover objects, vocabulary to describe objects, and scholarly concepts of theory and criticism

potpourri vases
Note: Covered containers in the shape of a vase or jar and characterized by pierced decorations on the shoulder or cover, or both. They were intended primarily for liquid or dried potpourri, which is a mixture of flower petals, spices, fruit juices, or other aromatic substances. For similar containers, often vaselike in form, in which aromatic pastilles may be burned or liquid perfumes evaporated, use "cassolettes."
Terms: potpourri vases (preferred, C, U, D, English, British-P); vases, potpourri (C, U, UF); potpourri vase (C, U, AD); pot-pourri vases (C, U, UF, English, British); potpourri (container) (C, U, UF); potspourris (C, U, UF, French)
Hierarchical Position:
Objects Facet
.. Furnishings and Equipment
.... Containers
....
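
A concept-centred thesaurus can be sketched as a record holding one preferred term plus its variants, with every variant mapped back to the same concept for indexing and search. The minimal Python sketch below encodes the potpourri vases record this way. The `Concept` class and `build_term_index` are hypothetical and do not reflect the Getty's actual data model or API; the term flags (C, U, D, UF, AD) are left out.

```python
from dataclasses import dataclass, field


@dataclass
class Concept:
    """Hypothetical model of a thesaurus concept, loosely following the A&AT structure."""
    preferred_term: str
    variant_terms: list[str]                            # non-preferred terms for the same concept
    note: str
    broader: list[str] = field(default_factory=list)    # hierarchy path, top level first
    related: list[str] = field(default_factory=list)    # links to related concepts


def build_term_index(concepts: list[Concept]) -> dict[str, Concept]:
    """Map every term (preferred or variant), case-folded, to its concept."""
    index = {}
    for concept in concepts:
        for term in [concept.preferred_term, *concept.variant_terms]:
            index[term.casefold()] = concept
    return index


potpourri_vases = Concept(
    preferred_term="potpourri vases",
    variant_terms=["vases, potpourri", "potpourri vase", "pot-pourri vases",
                   "potpourri (container)", "potspourris"],
    note=("Covered containers in the shape of a vase or jar and characterized by "
          "pierced decorations on the shoulder or cover, or both."),
    # Partial path: the record is truncated below "Containers".
    broader=["Objects Facet", "Furnishings and Equipment", "Containers"],
    # Assumption: treat the note's pointer to "cassolettes" as a related concept.
    related=["cassolettes"],
)

index = build_term_index([potpourri_vases])
# Someone typing a variant spelling is led to the preferred term.
print(index["Pot-pourri vases".casefold()].preferred_term)  # potpourri vases
```

Indexers and searchers can then use any listed variant, while the collection is consistently labelled with the single preferred term.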

NASA Thesaurus
• “contains the authorized subject terms by which the documents in the NASA STI Databases are indexed and retrieved”
• 17,700 terms and 3,832 definitions
http://www.sti.nasa.gov/thesfrm1.htm

ICONCLASS
“Iconclass is a subject specific international classification system for iconographic research and the documentation of images.”
http://www.iconclass.nl/

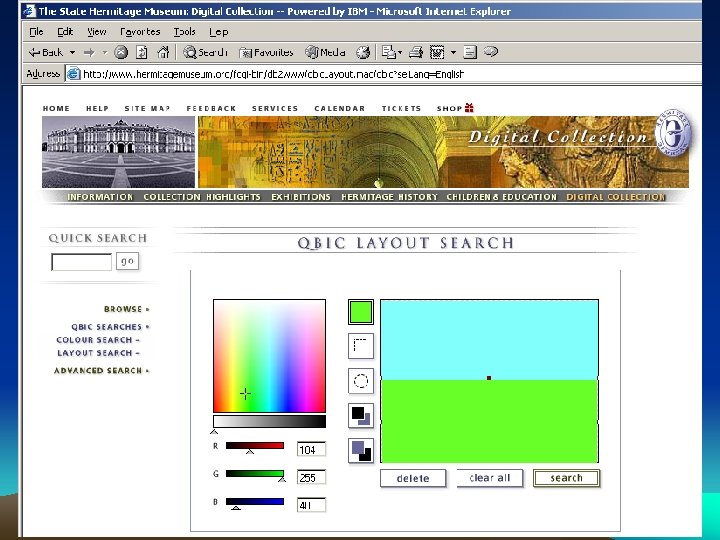

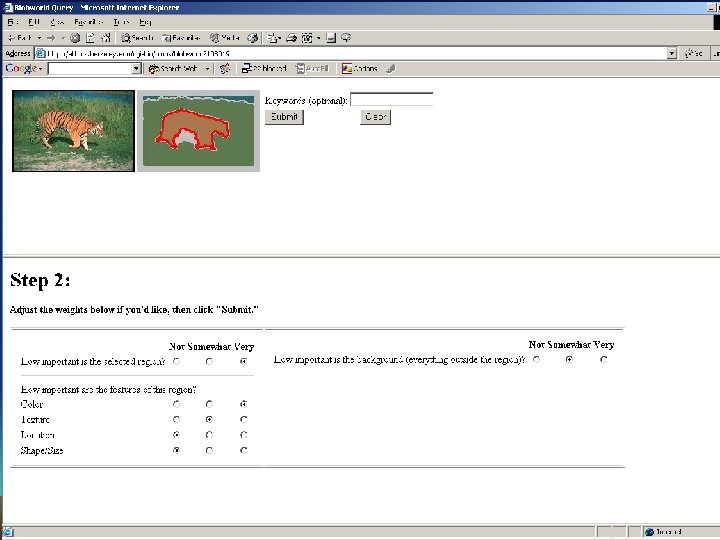

Part 3: Approaches taken by Image Retrieval Systems
• Systems that retrieve images based on visual similarity: QBIC (Query By Image Content) and Blobworld
• Systems that use the text associated with images as a source of keywords, e.g. search engines like Google
• Manually indexed image collections, like online art galleries (e.g. Tate) and commercial picture collections (e.g. Corbis). [You can start your own image collection with freeware image management software, like Picasa – www.picasa.com]

Visual Similarity
• Images can be indexed / queried at different levels of abstraction (cf. Del Bimbo’s metadata scheme)
• When dealing with content-dependent metadata (e.g. perceptual features like colour, texture and shape) it is possible to automate indexing
• To query:
  – draw coloured regions (sketch-based query); or
  – choose an example image (query by example)
• Images with similar perceptual features are retrieved (not necessarily images with similar ‘semantic content’)
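
Query by example can be sketched as nearest-neighbour search over precomputed feature vectors. In this minimal Python sketch each image is assumed to be summarised by a short numeric vector (here, hypothetical proportions of red, green and blue) and ranked by Euclidean distance. Real systems use richer features and distance measures; `query_by_example` and the data are illustrative only.

```python
import math


def query_by_example(query: list[float], feature_index: dict[str, list[float]], k: int = 3) -> list[str]:
    """Rank indexed images by Euclidean distance to the query's features; return the k nearest ids."""
    ranked = sorted(feature_index, key=lambda image_id: math.dist(query, feature_index[image_id]))
    return ranked[:k]


# Hypothetical feature vectors: proportion of red, green and blue in each image.
feature_index = {
    "beach": [0.10, 0.30, 0.60],
    "forest": [0.10, 0.70, 0.20],
    "sunset": [0.70, 0.20, 0.10],
}
# A mostly blue query image (say, a photo of a blue car) retrieves "beach" first,
# because ranking is driven by perceptual, not semantic, similarity.
print(query_by_example([0.15, 0.25, 0.60], feature_index, k=2))  # ['beach', 'forest']
```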

Perceptual Features: Colour
• Colour can be computed as a global metric, i.e. a feature of an entire image, or as a feature of a region
• Colour is considered a good metric because it is invariant to image translation and rotation, and changes only slowly under the effects of different viewpoints, scale and occlusion
• The colour values of the pixels in an image are discretized, and a colour histogram is built to represent the image / region
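
The histogram step can be sketched in Python as follows, assuming an image is given as a grid of (r, g, b) tuples with 0-255 channels. Each channel is quantized into a few levels, pixels are counted per colour bin, and two histograms are compared with histogram intersection (one common similarity measure, chosen here for illustration). Rotating the image only moves pixels around, so its histogram, and hence the similarity score, is unchanged. All function names are hypothetical.

```python
from collections import Counter


def colour_histogram(pixels, bins_per_channel=4):
    """Normalised colour histogram of (r, g, b) pixels with 0-255 channel values.

    Each channel is discretized into bins_per_channel levels, giving
    bins_per_channel ** 3 possible colour bins; only non-empty bins are stored.
    """
    counts = Counter(
        (r * bins_per_channel // 256, g * bins_per_channel // 256, b * bins_per_channel // 256)
        for r, g, b in pixels
    )
    total = len(pixels)
    return {colour_bin: n / total for colour_bin, n in counts.items()}


def histogram_intersection(h1, h2):
    """Similarity in [0, 1]; 1.0 means the two colour distributions are identical."""
    return sum(min(p, h2.get(colour_bin, 0.0)) for colour_bin, p in h1.items())


def flatten(image):
    """Turn a 2-D grid (list of rows) of pixels into a flat list."""
    return [pixel for row in image for pixel in row]


RED, GREEN, BLUE = (255, 0, 0), (0, 255, 0), (0, 0, 255)
image = [[RED, RED],
         [BLUE, GREEN]]
rotated = [list(row) for row in zip(*image[::-1])]  # rotate 90 degrees clockwise
all_green = [[GREEN, GREEN], [GREEN, GREEN]]

h = colour_histogram(flatten(image))
print(histogram_intersection(h, colour_histogram(flatten(rotated))))    # 1.0
print(histogram_intersection(h, colour_histogram(flatten(all_green))))  # 0.25
```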

Similarity-based Retrieval
• Perceptual features (for ‘visual similarity’):
  – Colour
  – Texture
  – Shape
  – Spatial relations
• These features can be computed directly from image data; they characterise the pixel distribution in different ways
• Different features may help retrieve different kinds of images: consider images of leaves, fabrics, adverts
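
One simple way to let different features matter for different collections is a weighted sum of per-feature distances. This is an assumption for illustration, not a method given in the lecture or used by a specific system. The colour and texture vectors below are hypothetical two-number descriptors, and the weights would be tuned per application (for a fabric collection, texture might weigh more than colour).

```python
import math


def combined_distance(query, candidate, weights):
    """Weighted sum of per-feature Euclidean distances between two images.

    query and candidate map a feature name (e.g. "colour", "texture") to a
    feature vector; weights maps the same names to non-negative importances.
    """
    return sum(w * math.dist(query[name], candidate[name]) for name, w in weights.items())


# Hypothetical descriptors for a fabric query and two candidate images.
query = {"colour": [1.0, 0.0], "texture": [0.2, 0.8]}
same_colour = {"colour": [1.0, 0.0], "texture": [0.8, 0.2]}
same_texture = {"colour": [0.0, 1.0], "texture": [0.2, 0.8]}

texture_heavy = {"colour": 0.2, "texture": 1.0}  # e.g. a fabric-selection system
colour_heavy = {"colour": 1.0, "texture": 0.2}

for weights in (texture_heavy, colour_heavy):
    ranked = sorted([("same_colour", same_colour), ("same_texture", same_texture)],
                    key=lambda item: combined_distance(query, item[1], weights))
    print([name for name, _ in ranked])
```

With texture-heavy weights the same-texture image ranks first; with colour-heavy weights the same-colour image does.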

Problems for Automation
• How much metadata for images can be created automatically?
• Two sets of problems have been labelled:
  – Sensory Gap
  – Semantic Gap

The Sensory Gap
“The sensory gap is the gap between the object in the world and the information in a (computational) description derived from a recording of that scene” (Smeulders et al. 2000).

The Semantic Gap
“The semantic gap is the lack of coincidence between the information that one can extract from the visual data and the interpretation that the same data have for a user in a given situation” (Smeulders et al. 2000).

EXERCISE 4-2
Amy, Brian and Claire are developing the following image database systems:
  – Amy is developing an image database of people’s holiday photographs, to be used by families to store and retrieve their personal photographs.
  – Brian is developing an image database of fabric samples, i.e. images of different materials, to be used by fashion designers to select fabrics for their clothes.
  – Claire is developing an image database of paintings from an art gallery, to be used by the general public to choose prints to decorate their homes.
• Which kinds of metadata do you think Amy, Brian and Claire should each include in their systems, following Del Bimbo’s (1999) classification of content-independent, content-dependent and content-descriptive metadata for visual information? Note that each person may use more than one kind of metadata. In your answer, give examples of each kind of metadata that you think Amy, Brian and Claire should use.

EXERCISE 4-3
• What kinds of metadata can be indexed automatically more reliably?
• Which users’ information needs are likely to be met by this kind of metadata?

Lab Exercise
To use and evaluate current image retrieval systems:
  – Systems that use visual similarity (QBIC and Blobworld)
  – Systems that use keywords from HTML (Google, AltaVista)
  – Systems in which images are manually indexed (Tate Gallery, Corbis)

LECTURE 4: LEARNING OUTCOMES
• After the lecture and lab exercise, you should be able to:
  – Distinguish different kinds of metadata for images, and give examples for a given image
    • Del Bimbo: content-dependent, content-independent, content-descriptive
  – Decide what kinds of metadata are appropriate given user information needs
  – Assess the cost of producing the metadata, e.g. how much can be automated?
  – Compare and contrast the approaches taken to image retrieval by current systems, considering in particular the kinds of users / image data they are best suited for
  – Discuss problems for image retrieval like the ‘sensory gap’ and the ‘semantic gap’

Optional Reading
• A framework with 10 levels of metadata for images: Jaimes and Chang (2000). Alejandro Jaimes and Shih-Fu Chang, “A Conceptual Framework for Indexing Visual Information at Multiple Levels”, IS&T/SPIE Internet Imaging, Vol. 3964, San Jose, CA, Jan. 2000. www.ctr.columbia.edu/~ajaimes/Pubs/spie00_internet.pdf (concentrate on the distinctions made in Section 2 and on the different levels described in Section 3.1)
• TASI (Technical Advisory Service for Images): paper on ‘Metadata and Digital Images’, www.tasi.ac.uk/advice/delivering/metadata.html
• A paper on creating an online art museum: http://www.research.ibm.com/visualtechnologies/pdf/cacm_herm.html
• For more on the kinds of metadata for visual information: Del Bimbo (1999), Visual Information Retrieval. In Library Article Collection.
