- Number of slides: 56

CS 252 Graduate Computer Architecture Lecture 8 Instruction Level Parallelism: Getting the CPI < 1 September 27, 2000 Prof. John Kubiatowicz 9/27/00 CS 252/Kubiatowicz Lec 8. 1

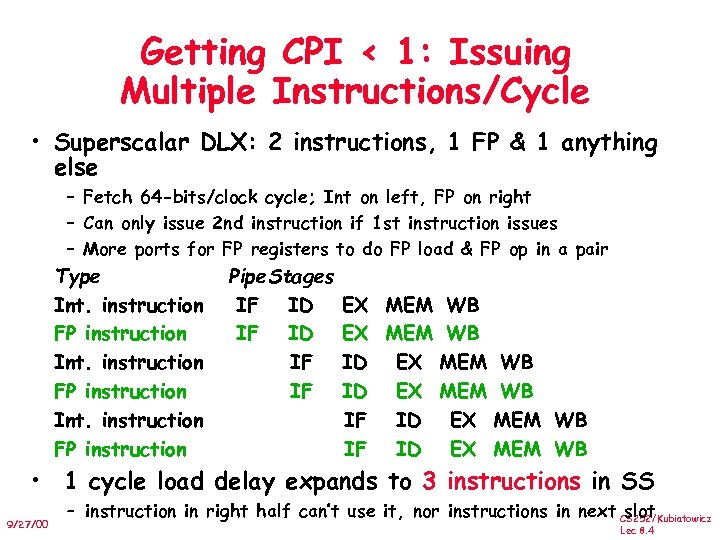

Getting CPI < 1: Issuing Multiple Instructions/Cycle
• Superscalar DLX: 2 instructions, 1 FP & 1 anything else
– Fetch 64 bits/clock cycle; Int on left, FP on right
– Can only issue 2nd instruction if 1st instruction issues
– More ports for FP registers to do FP load & FP op in a pair

    Type               Pipe stages
    Int. instruction   IF  ID  EX  MEM WB
    FP instruction     IF  ID  EX  MEM WB
    Int. instruction       IF  ID  EX  MEM WB
    FP instruction         IF  ID  EX  MEM WB
    Int. instruction           IF  ID  EX  MEM WB
    FP instruction             IF  ID  EX  MEM WB

• 1 cycle load delay expands to 3 instructions in SS
– instruction in right half can’t use it, nor instructions in next slot

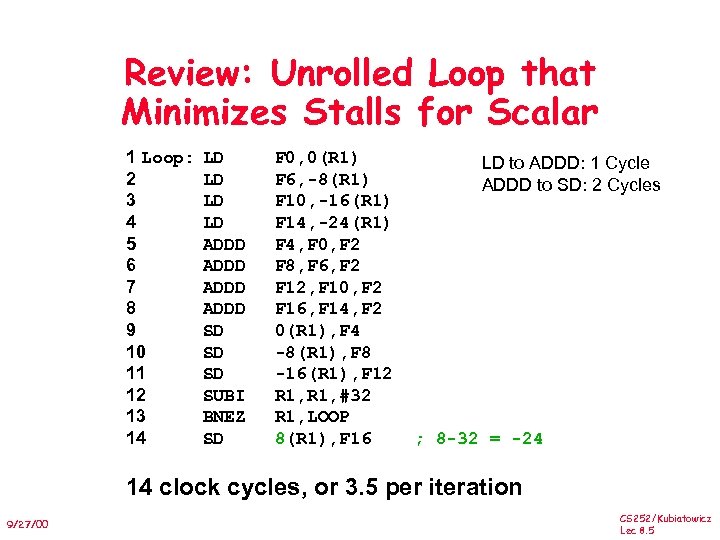

Review: Unrolled Loop that Minimizes Stalls for Scalar

     1 Loop: LD   F0, 0(R1)
     2       LD   F6, -8(R1)
     3       LD   F10, -16(R1)
     4       LD   F14, -24(R1)
     5       ADDD F4, F0, F2
     6       ADDD F8, F6, F2
     7       ADDD F12, F10, F2
     8       ADDD F16, F14, F2
     9       SD   0(R1), F4
    10       SD   -8(R1), F8
    11       SD   -16(R1), F12
    12       SUBI R1, #32
    13       BNEZ R1, LOOP
    14       SD   8(R1), F16      ; 8 - 32 = -24

LD to ADDD: 1 cycle
ADDD to SD: 2 cycles
14 clock cycles, or 3.5 per iteration

Loop Unrolling in 2-issue Superscalar

          Integer instruction   FP instruction        Clock cycle
    Loop: LD   F0, 0(R1)                              1
          LD   F6, -8(R1)                             2
          LD   F10, -16(R1)     ADDD F4, F0, F2       3
          LD   F14, -24(R1)     ADDD F8, F6, F2       4
          LD   F18, -32(R1)     ADDD F12, F10, F2     5
          SD   0(R1), F4        ADDD F16, F14, F2     6
          SD   -8(R1), F8       ADDD F20, F18, F2     7
          SD   -16(R1), F12                           8
          SD   -24(R1), F16                           9
          SUBI R1, #40                                10
          BNEZ R1, LOOP                               11
          SD   8(R1), F20                             12    ; 8 - 40 = -32

• Unrolled 5 times to avoid delays (+1 due to SS)
• 12 clocks, or 2.4 clocks per iteration (1.5X)

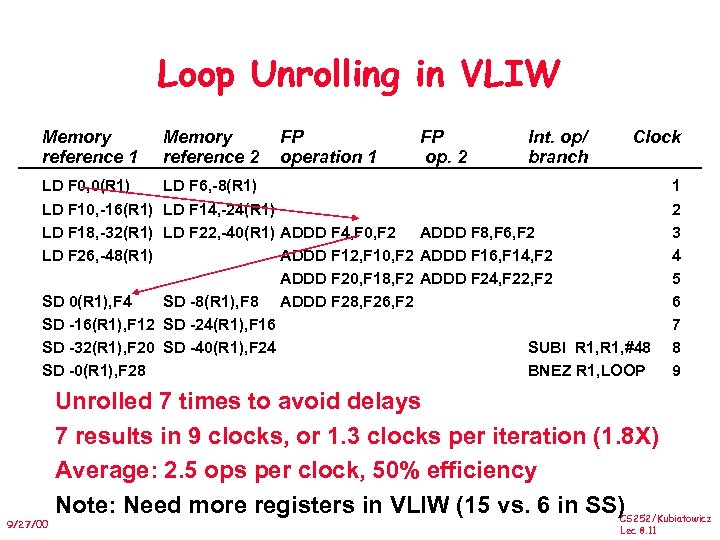

Loop Unrolling in VLIW

    Memory reference 1   Memory reference 2   FP operation 1      FP op. 2            Int. op/branch   Clock
    LD F0, 0(R1)         LD F6, -8(R1)                                                                 1
    LD F10, -16(R1)      LD F14, -24(R1)                                                               2
    LD F18, -32(R1)      LD F22, -40(R1)      ADDD F4, F0, F2     ADDD F8, F6, F2                      3
    LD F26, -48(R1)                           ADDD F12, F10, F2   ADDD F16, F14, F2                    4
                                              ADDD F20, F18, F2   ADDD F24, F22, F2                    5
    SD 0(R1), F4         SD -8(R1), F8        ADDD F28, F26, F2                                        6
    SD -16(R1), F12      SD -24(R1), F16                                                               7
    SD -32(R1), F20      SD -40(R1), F24                                              SUBI R1, #56     8
    SD 8(R1), F28                                                                     BNEZ R1, LOOP    9

• Unrolled 7 times to avoid delays (7 elements of 8 bytes, so R1 steps by 56 and the last store, issued after the SUBI, uses offset 8 - 56 = -48)
• 7 results in 9 clocks, or 1.3 clocks per iteration (1.8X)
• Average: 2.5 ops per clock, 50% efficiency
• Note: Need more registers in VLIW (15 vs. 6 in SS)
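
The per-iteration figures quoted on the three unrolling slides can be rechecked with a few lines of arithmetic. The sketch below is only an illustration: the operation counts are read off the schedules above (14, 17 and 23 operations), and the names `schedules`, `clocks_per_iteration`, `ops_per_clock` and `slot_efficiency` are made up for this example.

```python
# Counts read off the scalar, 2-issue superscalar and VLIW schedules.
# name: (iterations unrolled, clock cycles, operations issued, issue slots per cycle)
schedules = {
    "scalar":      (4, 14, 14, 1),
    "superscalar": (5, 12, 17, 2),
    "vliw":        (7, 9, 23, 5),
}

def clocks_per_iteration(name):
    """Clock cycles spent per original loop iteration."""
    iterations, cycles, _, _ = schedules[name]
    return cycles / iterations

def ops_per_clock(name):
    """Average number of operations issued per clock cycle."""
    _, cycles, ops, _ = schedules[name]
    return ops / cycles

def slot_efficiency(name):
    """Fraction of available issue slots that hold a useful operation."""
    _, cycles, ops, width = schedules[name]
    return ops / (cycles * width)

for name in schedules:
    print(f"{name:12s} {clocks_per_iteration(name):.2f} clocks/iteration, "
          f"{ops_per_clock(name):.2f} ops/clock, "
          f"{slot_efficiency(name):.0%} of issue slots filled")
```

For the VLIW schedule this gives 23 operations in 9 cycles, roughly 2.6 operations per clock and 51% of the 45 slots filled, which the slide rounds to 2.5 and 50%.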

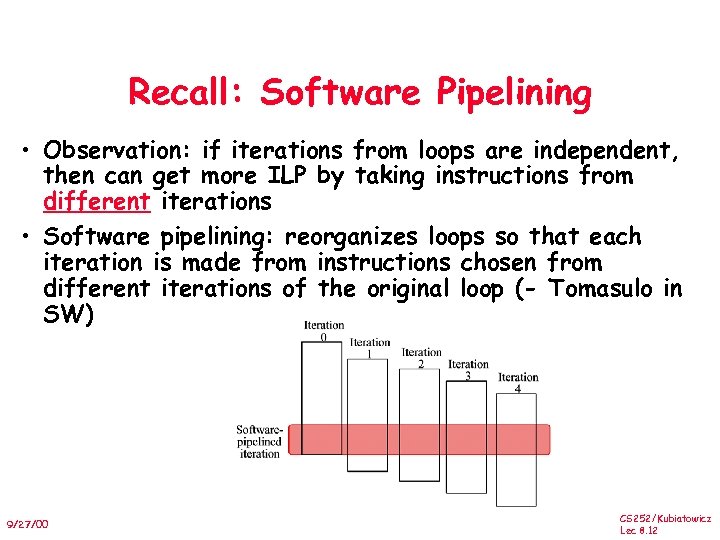

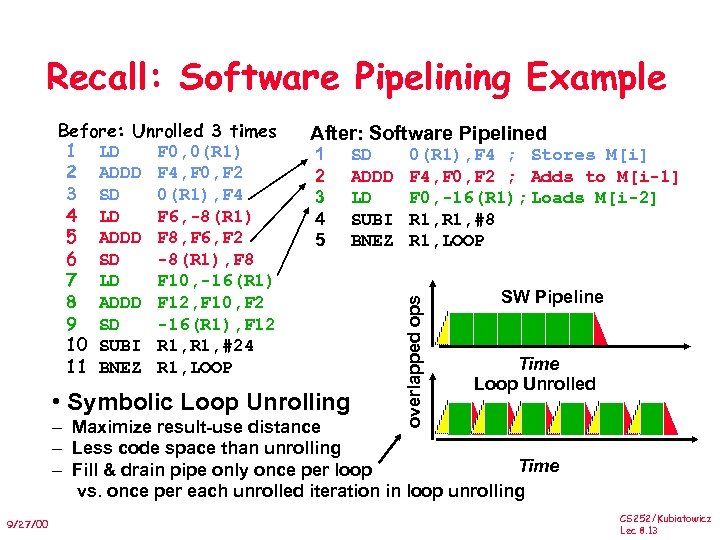

Recall: Software Pipelining
• Observation: if iterations from loops are independent, then can get more ILP by taking instructions from different iterations
• Software pipelining: reorganizes loops so that each iteration is made from instructions chosen from different iterations of the original loop (like Tomasulo in SW)

Recall: Software Pipelining Example

Before: Unrolled 3 times
     1  LD   F0, 0(R1)
     2  ADDD F4, F0, F2
     3  SD   0(R1), F4
     4  LD   F6, -8(R1)
     5  ADDD F8, F6, F2
     6  SD   -8(R1), F8
     7  LD   F10, -16(R1)
     8  ADDD F12, F10, F2
     9  SD   -16(R1), F12
    10  SUBI R1, #24
    11  BNEZ R1, LOOP

After: Software Pipelined
     1  SD   0(R1), F4       ; Stores M[i]
     2  ADDD F4, F0, F2      ; Adds to M[i-1]
     3  LD   F0, -16(R1)     ; Loads M[i-2]
     4  SUBI R1, #8
     5  BNEZ R1, LOOP

• Symbolic Loop Unrolling
– Maximize result-use distance
– Less code space than unrolling
– Fill & drain pipe only once per loop vs. once per each unrolled iteration in loop unrolling
[Figure: overlapped ops vs. time, for the SW pipeline and for the unrolled loop]
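
To make the store/add/load overlap concrete, here is a minimal Python sketch of the same transformation on an array. It is an illustration under stated assumptions, not the slide's code: the function names are invented, the array is walked upward rather than downward through memory, and the prologue and epilogue that the slide leaves out are written explicitly.

```python
def add_scalar_plain(m, c):
    """Original loop: load, add and store one element per iteration."""
    out = list(m)
    for i in range(len(out)):
        f0 = out[i]          # LD
        f4 = f0 + c          # ADDD
        out[i] = f4          # SD
    return out

def add_scalar_pipelined(m, c):
    """Software-pipelined loop: each kernel pass works on three different elements."""
    out = list(m)
    n = len(out)
    if n < 3:
        return add_scalar_plain(out, c)   # too short to fill the software pipeline
    # Prologue: fill the pipeline.
    f0 = out[0]              # load element 0
    f4 = f0 + c              # add for element 0
    f0 = out[1]              # load element 1
    # Kernel: same operation order as the slide (SD, ADDD, LD).
    for i in range(n - 2):
        out[i] = f4          # SD:   store result for element i
        f4 = f0 + c          # ADDD: add for element i + 1
        f0 = out[i + 2]      # LD:   load element i + 2
    # Epilogue: drain the pipeline.
    out[n - 2] = f4
    out[n - 1] = f0 + c
    return out

print(add_scalar_pipelined([1.0, 2.0, 3.0, 4.0, 5.0], 10.0))
```

Both functions compute the same result; the pipelined version simply separates each load from its dependent add, and each add from its dependent store, by one kernel pass.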

Software Pipelining with Loop Unrolling in VLIW

    Memory reference 1   Memory reference 2   FP operation 1      FP op. 2   Int. op/branch   Clock
    LD F0, -48(R1)       ST 0(R1), F4         ADDD F4, F0, F2                                 1
    LD F6, -56(R1)       ST -8(R1), F8        ADDD F8, F6, F2                SUBI R1, #24     2
    LD F10, -40(R1)      ST 8(R1), F12        ADDD F12, F10, F2              BNEZ R1, LOOP    3

• Software pipelined across 9 iterations of original loop
– In each iteration of above loop, we:
» Store to m, m-8, m-16 (iterations I-3, I-2, I-1)
» Compute for m-24, m-32, m-40 (iterations I, I+1, I+2)
» Load from m-48, m-56, m-64 (iterations I+3, I+4, I+5)
• 9 results in 9 cycles, or 1 clock per iteration
• Average: 3.3 ops per clock, 66% efficiency
• Note: Need fewer registers for software pipelining (only using 7 registers here, was using 15)

Advantages of HW (Tomasulo) vs. SW (VLIW) Speculation
• HW advantages:
– HW better at memory disambiguation since knows actual addresses
– HW better at branch prediction since lower overhead
– HW maintains precise exception model
– HW does not execute bookkeeping instructions
– Same software works across multiple implementations
– Smaller code size (not as many no-ops filling blank instructions)
• SW advantages:
– Window of instructions that is examined for parallelism much larger
– Much less hardware involved in VLIW (unless you are Intel…!)
– More involved types of speculation can be done more easily
– Speculation can be based on large-scale program behavior, not just local information

Superscalar v. VLIW
Superscalar:
• Smaller code size
• Binary compatibility across generations of hardware
VLIW:
• Simplified hardware for decoding, issuing instructions
• No interlock hardware (compiler checks?)
• More registers, but simplified hardware for register ports (multiple independent register files?)

Techniques to Increase ILP
• Forwarding
• Branch Prediction
• Superpipelining
• Multiple Issue: Superscalar, VLIW/EPIC
• Software manipulation
• Dynamic Pipeline Scheduling
• Speculative Execution
• Simultaneous Multithreading (SMT)

Multithreaded Processors
• Aim: Latency tolerance
• What is the problem? Load access latencies measured on an AlphaServer 4100 SMP with four Alpha 21164 processors are:
– 7 cycles for a primary cache miss which hits in the on-chip L2 cache of the 21164 processor,
– 21 cycles for an L2 cache miss which hits in the L3 (board-level) cache,
– 80 cycles for a miss that is served by the memory, and
– 125 cycles for a dirty miss, i.e., a miss that has to be served from another processor's cache memory.

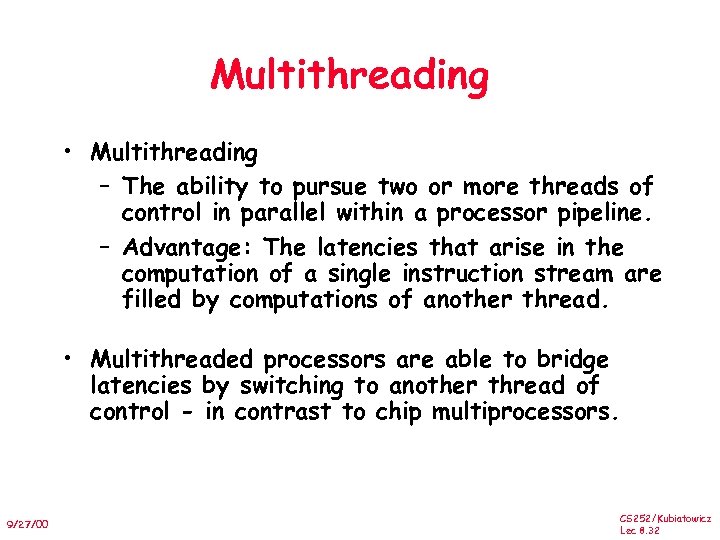

Multithreading
• Multithreading
– The ability to pursue two or more threads of control in parallel within a processor pipeline.
– Advantage: The latencies that arise in the computation of a single instruction stream are filled by computations of another thread.
• Multithreaded processors are able to bridge latencies by switching to another thread of control, in contrast to chip multiprocessors.

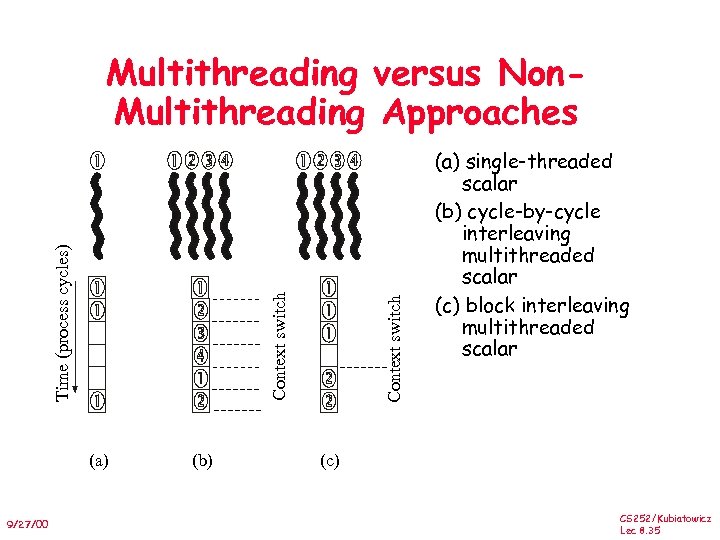

Approaches of Multithreaded Processors
• Cycle-by-cycle interleaving
– An instruction of another thread is fetched and fed into the execution pipeline at each processor cycle.
• Block interleaving
– The instructions of a thread are executed successively until an event occurs that may cause latency. This event induces a context switch.
• Simultaneous multithreading (SMT)
– Instructions are simultaneously issued from multiple threads to the FUs of a superscalar processor.
– Combines wide-issue superscalar instruction issue with multithreading.
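
The first two approaches can be compared with a toy model of a scalar pipeline. This is a hedged sketch, not a description of any real processor: the function `simulate`, the latency-list encoding of a thread and the zero-cost context switch are all assumptions made for illustration.

```python
def simulate(threads, policy):
    """Issue at most one instruction per cycle from a set of threads.

    threads: list of lists; entry k is the number of cycles the thread must
             wait after issuing its k-th instruction (0 means no latency event).
    policy:  "cycle" for cycle-by-cycle interleaving,
             "block" for block interleaving (switch only when the thread stalls).
    Returns (total cycles until the last issue, fraction of cycles that issued).
    Assumption: a context switch costs no cycles.
    """
    n = len(threads)
    pc = [0] * n              # next instruction of each thread
    ready_at = [0] * n        # first cycle in which each thread may issue again
    current = 0               # thread tried first in this cycle
    total = sum(len(t) for t in threads)
    remaining = total
    cycle = 0
    while remaining:
        for k in range(n):
            t = (current + k) % n
            if pc[t] < len(threads[t]) and ready_at[t] <= cycle:
                latency = threads[t][pc[t]]
                pc[t] += 1
                ready_at[t] = cycle + 1 + latency
                remaining -= 1
                # Cycle-by-cycle: move on to the next thread every cycle.
                # Block: stay with this thread until it cannot issue.
                current = (t + 1) % n if policy == "cycle" else t
                break
        cycle += 1            # an idle cycle if no thread was ready
    return cycle, total / cycle

# A hypothetical thread: every third instruction is followed by a 4-cycle latency.
thread = [0, 0, 4, 0, 0, 4]
print("single thread       :", simulate([thread], "block"))
print("2 threads, block    :", simulate([thread, thread], "block"))
print("2 threads, per cycle:", simulate([thread, thread], "cycle"))
```

In this model a second thread fills part of the idle cycles left by the first one; which interleaving policy fills more of them depends on the latency pattern, so the numbers say nothing general about either policy.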

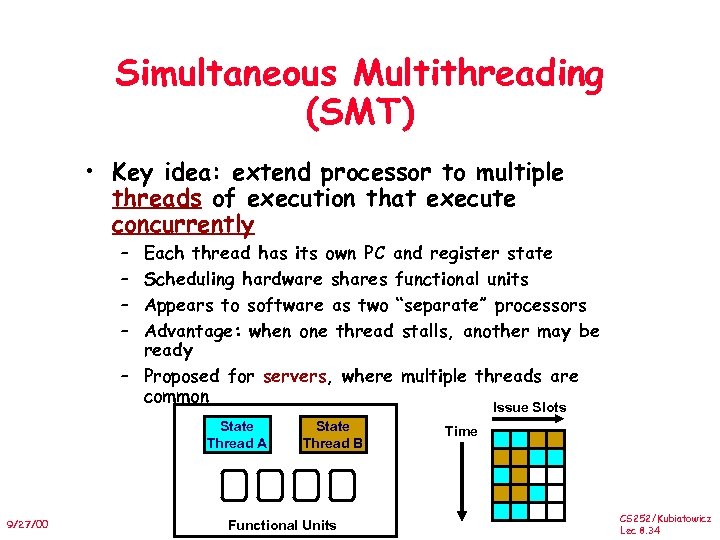

Simultaneous Multithreading (SMT)
• Key idea: extend processor to multiple threads of execution that execute concurrently
– Each thread has its own PC and register state
– Scheduling hardware shares functional units
– Appears to software as two “separate” processors
– Advantage: when one thread stalls, another may be ready
– Proposed for servers, where multiple threads are common
[Figure: issue slots over time; state of thread A and state of thread B feeding shared functional units]

Multithreading versus Non-Multithreading Approaches
[Figure: three panels against time (processor cycles), with context switches marked: (a) single-threaded scalar, (b) cycle-by-cycle interleaving multithreaded scalar, (c) block interleaving multithreaded scalar]

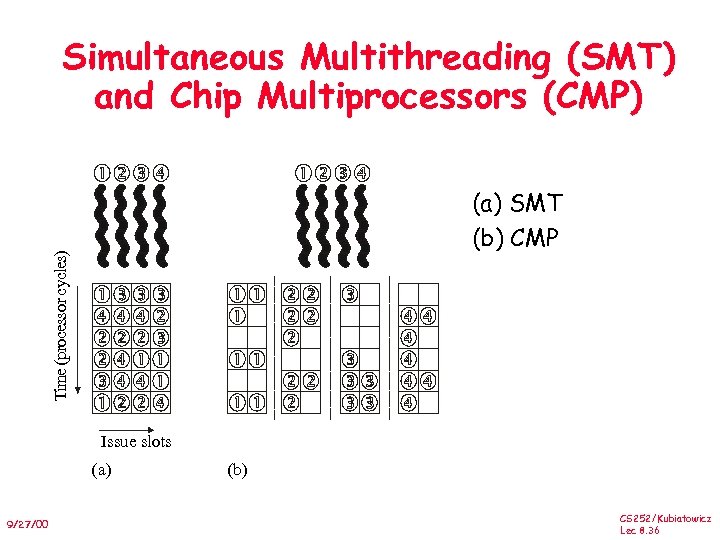

Simultaneous Multithreading (SMT) and Chip Multiprocessors (CMP)
[Figure: issue slots against time (processor cycles) for (a) SMT and (b) CMP]

Combining SMT and Multimedia
• Start with a wide-issue superscalar general-purpose processor (SS)
• Enhance by simultaneous multithreading (SMT)
• Enhance by multimedia unit(s), accelerators (SIMD)
• Enhance by on-chip RAM for constants and local variables

[Figure: The SMT Multimedia Processor]

[Figure: IPC of Maximum Processor Models]

Multis: Additional utilization of more coarse-grained parallelism
• CMPs: chip multiprocessors or multiprocessor chips
– integrate two or more complete processors on a single chip,
– every functional unit of a processor is duplicated.
• SMTs: simultaneous multithreaded processors
– store multiple contexts in different register sets on the chip,
– the functional units are multiplexed between the threads,
– instructions of different contexts are simultaneously executed.

CMPs-Homo: Com-arch by shared global memory
[Figure: processor, primary cache, secondary cache, global memory. Caption: Shared global memory, no caches]

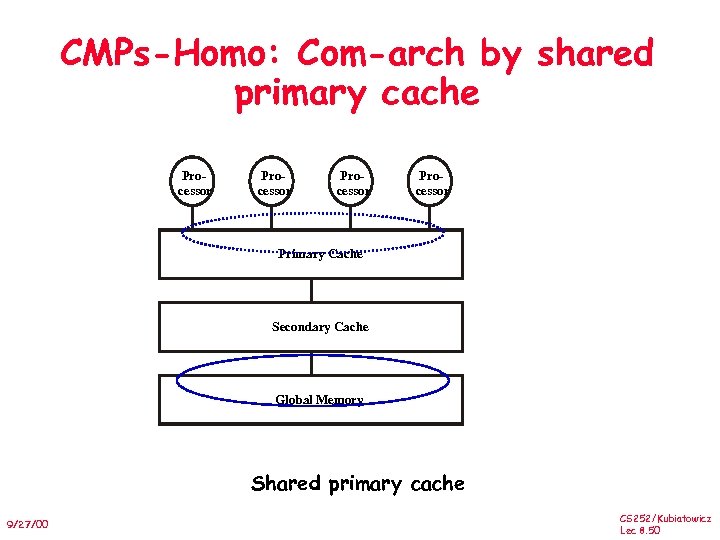

CMPs-Homo: Com-arch by shared primary cache
[Figure: processor, primary cache, secondary cache, global memory. Caption: Shared primary cache]

CMPs-Homo: Com-arch by global memory, caches
[Figure, two panels, each built from processors, primary caches, secondary cache and global memory. Captions: Shared memory; Shared secondary cache]

Com-arch in Hydra: A Single-Chip Multiprocessor
[Figure: a single chip with centralized bus arbitration mechanisms; CPU 0 to CPU 3, each with a primary I-cache, a primary D-cache and a memory controller; on-chip secondary cache; off-chip L3 interface to a cache SRAM array; Rambus memory interface to DRAM main memory; DMA and I/O bus interface to an I/O device]

CMPs-Hetero: Communications Architecture
• Architectures found in today’s heterogeneous processors for platform-based design
• E.g. CPU cores, AMBA buses, internal/external shared memories
[Figure: RISC core, internal/external memory, AMBA bus, engines, shared bus, external I/O]

[Figure: CMPs-Hetero: Communications Architecture, Arbiters]

CS 252 Graduate Computer Architecture Lecture 9* Branch Prediction October 4, 2000 Prof. John Kubiatowicz

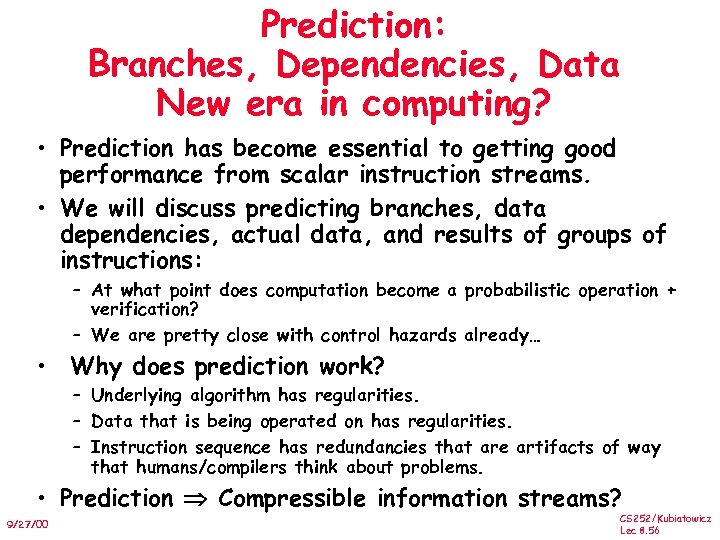

Prediction: Branches, Dependencies, Data. New era in computing?
• Prediction has become essential to getting good performance from scalar instruction streams.
• We will discuss predicting branches, data dependencies, actual data, and results of groups of instructions:
– At what point does computation become a probabilistic operation + verification?
– We are pretty close with control hazards already…
• Why does prediction work?
– Underlying algorithm has regularities.
– Data that is being operated on has regularities.
– Instruction sequence has redundancies that are artifacts of the way that humans/compilers think about problems.
• Prediction ⇒ Compressible information streams?

Need Address at Same Time as Prediction
• Branch Target Buffer (BTB): address of branch indexes the table to get prediction AND branch target address (if taken)
– Note: must check for branch match now, since can’t use wrong branch address (Figure 4.22, p. 273)
[Figure: the PC of the instruction in FETCH is compared (=?) with the stored branch PC; a match supplies the predicted PC and a taken/untaken prediction]
• Return instruction addresses predicted with stack
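
The lookup-with-match-check described on this slide could be sketched as follows. The class name, table size, 4-byte instruction width and the policy of keeping only taken branches in the table are assumptions chosen for the example, not details given in the lecture.

```python
class BranchTargetBuffer:
    """Toy direct-mapped BTB: each slot holds (branch_pc, predicted_pc) or None."""

    def __init__(self, entries=16):
        self.entries = entries
        self.table = [None] * entries

    def _index(self, pc):
        # Assumption: 4-byte instructions, so drop the two low bits before indexing.
        return (pc >> 2) % self.entries

    def predict(self, pc):
        """Return the predicted next PC for the instruction being fetched at pc."""
        slot = self.table[self._index(pc)]
        if slot is not None and slot[0] == pc:   # the "=?" check: same branch?
            return slot[1]                       # predicted taken: use stored target
        return pc + 4                            # no match: predict fall-through

    def update(self, pc, taken, target):
        """Record the real outcome once the branch has been resolved."""
        i = self._index(pc)
        if taken:
            self.table[i] = (pc, target)
        elif self.table[i] is not None and self.table[i][0] == pc:
            self.table[i] = None                 # not taken: drop the entry

btb = BranchTargetBuffer(entries=4)
btb.update(0x40, taken=True, target=0x10)
print(hex(btb.predict(0x40)))   # branch seen before: stored target
print(hex(btb.predict(0x50)))   # maps to the same slot, but the PC check fails
```

The second lookup shows why the match check matters: `0x50` lands in the same slot as `0x40`, and without the comparison it would be redirected to another branch's target.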

Dynamic Branch Prediction
• Performance = ƒ(accuracy, cost of misprediction)
• Branch History Table: lower bits of PC address index table of 1-bit values
– Says whether or not branch taken last time
– No address check
• Problem: in a loop, 1-bit BHT will cause two mispredictions (avg is 9 iterations before exit):
– End of loop case, when it exits instead of looping as before
– First time through loop on next time through code, when it predicts exit instead of looping
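
The two-mispredictions-per-loop behaviour is easy to reproduce. The sketch below assumes a loop-closing branch that is taken 9 times and then falls through, executed 100 times in a row; the function names and the initial history bit are illustrative choices.

```python
def loop_branch_outcomes(iterations_taken=9, executions=100):
    """Outcomes of a loop-closing branch: taken 9 times, then not taken, repeated."""
    return ([True] * iterations_taken + [False]) * executions

def one_bit_mispredictions(outcomes, initial=True):
    """Count mispredictions of a 1-bit predictor that repeats the last outcome."""
    last = initial
    wrong = 0
    for taken in outcomes:
        if taken != last:      # prediction is simply the previous outcome
            wrong += 1
        last = taken
    return wrong

outcomes = loop_branch_outcomes()
wrong = one_bit_mispredictions(outcomes)
print(f"{wrong} mispredictions in {len(outcomes)} branches")
```

Every execution of the loop after the first costs two mispredictions (loop exit, then loop re-entry), even though the branch is not taken only once in ten executions.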

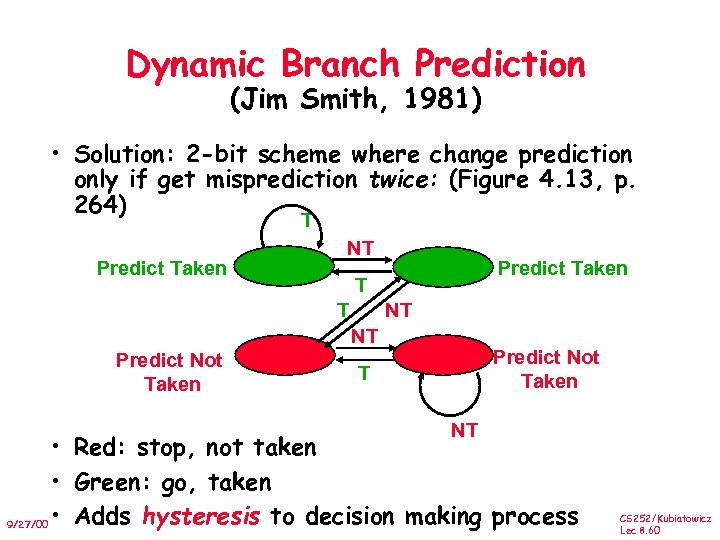

Dynamic Branch Prediction (Jim Smith, 1981)
• Solution: 2-bit scheme where change prediction only if get misprediction twice (Figure 4.13, p. 264)
[Figure: state diagram of Predict Taken and Predict Not Taken states, with transitions labeled T (taken) and NT (not taken)]
• Red: stop, not taken
• Green: go, taken
• Adds hysteresis to decision making process
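
A 2-bit saturating counter is one common way to get this hysteresis; the exact state diagram in the figure may differ in its transitions, so treat the following as a sketch under that assumption. The loop pattern (taken 9 times, then not taken, 100 executions) and the starting state are illustrative.

```python
def two_bit_mispredictions(outcomes, counter=3):
    """Count mispredictions of a 2-bit saturating counter.

    counter ranges over 0..3; values 2 and 3 predict taken, 0 and 1 predict not taken.
    """
    wrong = 0
    for taken in outcomes:
        if (counter >= 2) != taken:
            wrong += 1
        # Move one step toward the observed outcome, saturating at 0 and 3.
        counter = min(counter + 1, 3) if taken else max(counter - 1, 0)
    return wrong

pattern = ([True] * 9 + [False]) * 100     # loop branch, 100 executions of the loop
print(f"{two_bit_mispredictions(pattern)} mispredictions in {len(pattern)} branches")
```

The single not-taken outcome at loop exit only moves the counter from 3 to 2, so the next entry into the loop is still predicted taken and only the exit itself is mispredicted.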

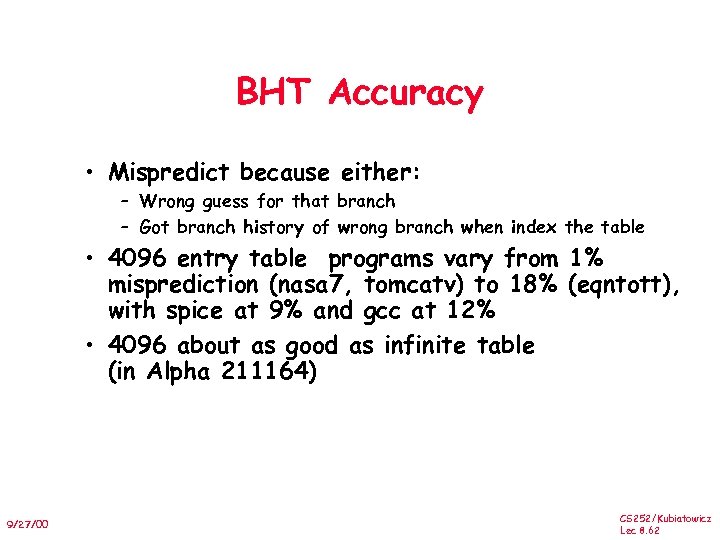

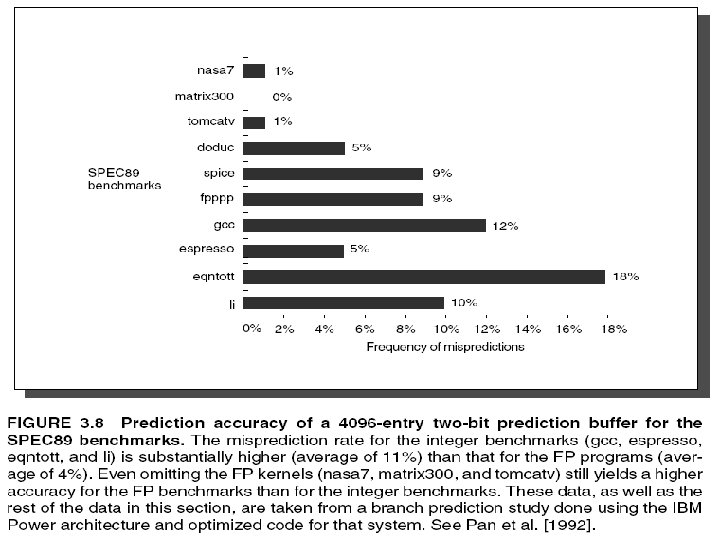

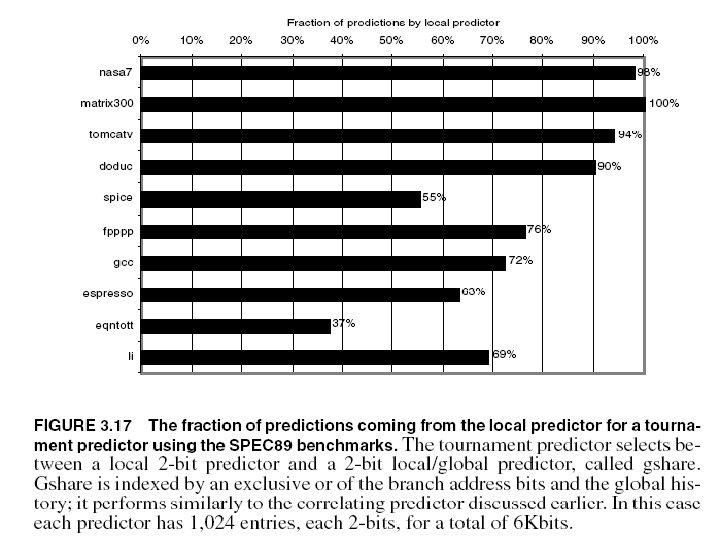

BHT Accuracy
• Mispredict because either:
– Wrong guess for that branch
– Got branch history of wrong branch when indexing the table
• With a 4096-entry table, programs vary from 1% misprediction (nasa7, tomcatv) to 18% (eqntott), with spice at 9% and gcc at 12%
• 4096 entries about as good as infinite table (in Alpha 21164)

Correlating Branches
• Hypothesis: recent branches are correlated; that is, behavior of recently executed branches affects prediction of current branch
• Two possibilities; current branch depends on:
– Last m most recently executed branches anywhere in program. Produces a “GA” (global history, adaptive) in the Yeh and Patt classification (e.g. GAg)
– Last m most recent outcomes of same branch. Produces a “PA” (per-address history, adaptive) in same classification (e.g. PAg)
• Idea: record m most recently executed branches as taken or not taken, and use that pattern to select the proper branch history table entry
– A single history table shared by all branches (appends a “g” at end), indexed by history value.
– Address is used along with history to select table entry (appends a “p” at end of classification)
– If only portion of address used, often appends an “s” to indicate “set-indexed” tables (e.g. GAs)

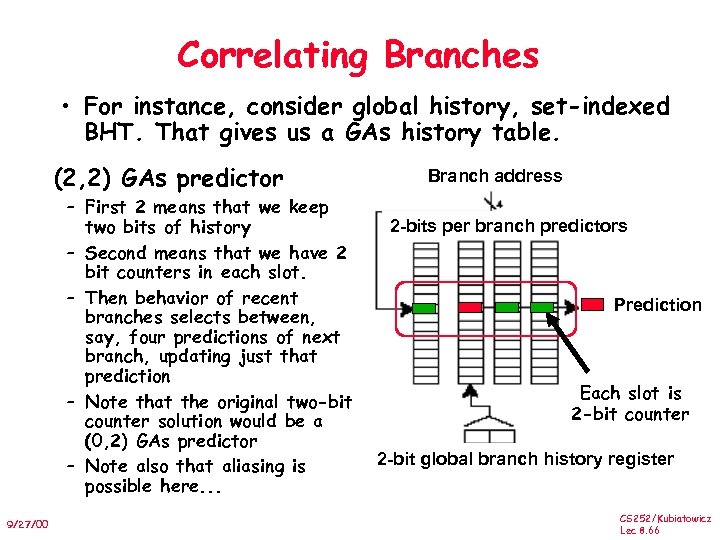

Correlating Branches
• For instance, consider global history, set-indexed BHT. That gives us a GAs history table.
• (2, 2) GAs predictor
– First 2 means that we keep two bits of history
– Second 2 means that we have 2-bit counters in each slot.
– Then behavior of recent branches selects between, say, four predictions of next branch, updating just that prediction
– Note that the original two-bit counter solution would be a (0, 2) GAs predictor
– Note also that aliasing is possible here...
[Figure: the branch address selects a set of 2-bit per-branch predictors; the 2-bit global branch history register selects one slot within it; each slot is a 2-bit counter that supplies the prediction]
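
An (m, n) predictor of this kind can be sketched in a few lines. The class below is an illustration only: the table size (`index_bits`), the initial counter value and the helper `count_mispredictions` are assumptions, and a single alternating branch is used as the test pattern because it is the simplest case where history helps.

```python
class CorrelatingPredictor:
    """(m, n) predictor: m bits of global history select among n-bit counters."""

    def __init__(self, m=2, n=2, index_bits=4):
        self.m = m
        self.index_bits = index_bits
        self.history = 0                          # m-bit global history register
        self.max_count = (1 << n) - 1
        rows, cols = 1 << index_bits, 1 << m
        # Assumption: every counter starts just below the "taken" threshold.
        self.counters = [[self.max_count // 2] * cols for _ in range(rows)]

    def _row(self, pc):
        # Set-indexed: only some low address bits are used, so aliasing is possible.
        return (pc >> 2) & ((1 << self.index_bits) - 1)

    def predict(self, pc):
        return self.counters[self._row(pc)][self.history] > self.max_count // 2

    def update(self, pc, taken):
        row = self.counters[self._row(pc)]
        c = row[self.history]
        row[self.history] = min(c + 1, self.max_count) if taken else max(c - 1, 0)
        # Shift the outcome into the global history, keeping only m bits.
        self.history = ((self.history << 1) | int(taken)) & ((1 << self.m) - 1)

def count_mispredictions(predictor, pc, outcomes):
    wrong = 0
    for taken in outcomes:
        if predictor.predict(pc) != taken:
            wrong += 1
        predictor.update(pc, taken)
    return wrong

alternating = [True, False] * 100
print("(2,2):", count_mispredictions(CorrelatingPredictor(m=2, n=2), 0x40, alternating))
print("(0,2):", count_mispredictions(CorrelatingPredictor(m=0, n=2), 0x40, alternating))
```

With `m=0` the history register is always zero and the structure degenerates to the plain 2-bit counter table, which matches the slide's remark about the (0, 2) predictor.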

Accuracy of Different Schemes (Figure 4.21, p. 272)
[Figure: frequency of mispredictions, on a scale from 0% to 18%, for three schemes: 4096 entries 2-bit BHT, unlimited entries 2-bit BHT, 1024 entries (2, 2) BHT]

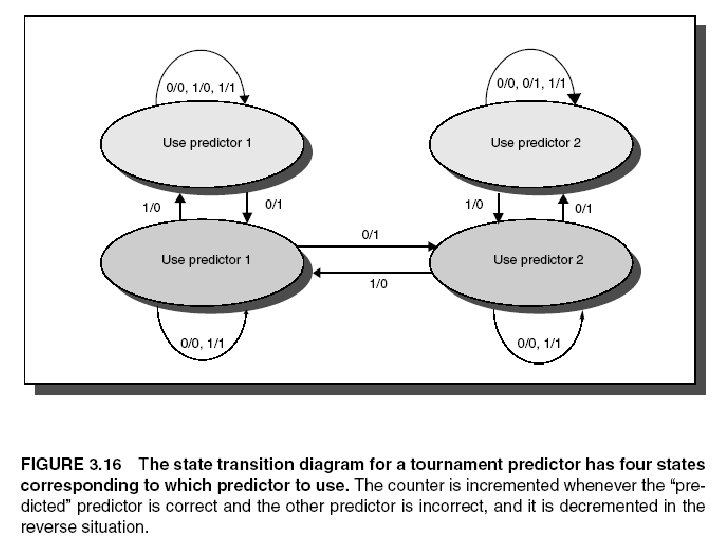

Summary: Dynamic Branch Prediction
• Prediction becoming important part of scalar execution.
– Prediction is exploiting “information compressibility” in execution
• Branch History Table: 2 bits for loop accuracy
• Correlation: recently executed branches correlated with next branch.
– Either different branches (GA)
– Or different executions of same branches (PA).
• Branch Target Buffer: include branch address & prediction
• (Predicated Execution can reduce number of branches, number of mispredicted branches)

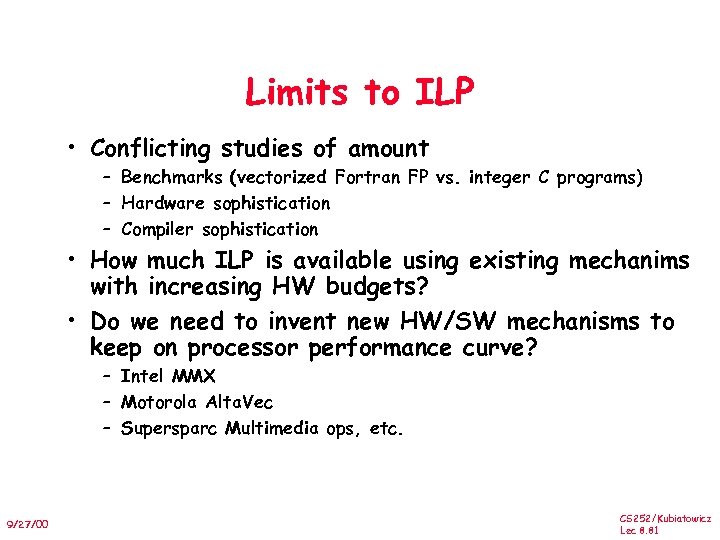

Limits to ILP
• Conflicting studies of amount
– Benchmarks (vectorized Fortran FP vs. integer C programs)
– Hardware sophistication
– Compiler sophistication
• How much ILP is available using existing mechanisms with increasing HW budgets?
• Do we need to invent new HW/SW mechanisms to keep on processor performance curve?
– Intel MMX
– Motorola AltiVec
– Supersparc Multimedia ops, etc.

Quantitative Limits to ILP
Initial HW model here; MIPS compilers. Assumptions for ideal/perfect machine to start:
1. Register renaming: infinite virtual registers, and all WAW & WAR hazards are avoided
2. Branch prediction: perfect; no mispredictions
3. Jump prediction: all jumps perfectly predicted => machine with perfect speculation & an unbounded buffer of instructions available
4. Memory-address alias analysis: addresses are known & a store can be moved before a load provided addresses not equal
1 cycle latency for all instructions; unlimited number of instructions issued per clock cycle
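Under these assumptions the only thing that delays an instruction is a true (RAW) data dependence, so the achievable IPC is the instruction count divided by the length of the longest dependence chain. The sketch below illustrates that calculation; the function name `ideal_ipc`, the `(dest, sources)` instruction encoding, and the six-instruction program are hypothetical, and memory dependences are left out entirely (the example assumes registers only).

```python
def ideal_ipc(instructions):
    """Return (cycles, ipc) for a list of (dest, sources) in program order.

    Models the ideal machine: 1-cycle latency, unlimited issue width,
    perfect renaming (only RAW dependences delay an instruction).
    """
    if not instructions:
        return 0, 0.0
    ready = {}       # register -> cycle at which its newest value is available
    last_cycle = 0
    for dest, sources in instructions:
        # An instruction issues as soon as all of its inputs are available.
        issue = max((ready.get(s, 0) for s in sources), default=0)
        done = issue + 1
        if dest is not None:
            # With renaming, overwriting a register never waits (no WAW/WAR).
            ready[dest] = done
        last_cycle = max(last_cycle, done)
    return last_cycle, len(instructions) / last_cycle


# Hypothetical program: two independent 2-long chains that join, plus one
# unrelated instruction. r0 is assumed to be available at cycle 0.
prog = [
    ("r1", ["r0"]),
    ("r2", ["r1"]),
    ("r3", ["r0"]),
    ("r4", ["r3"]),
    ("r5", ["r2", "r4"]),
    ("r6", ["r0"]),
]
print(ideal_ipc(prog))  # (3, 2.0): six instructions, critical path of three
```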

Upper Limit to ILP: Ideal Machine (Figure 4.38, page 319)
[Figure: IPC per benchmark on the ideal machine. FP: 75 - 150; Integer: 18 - 60]

More Realistic HW: Branch Impact (Figure 4.40, page 323)
Change from infinite window to a window of 2000 instructions and maximum issue of 64 instructions per clock cycle
[Figure: IPC per benchmark by branch predictor. FP: 15 - 45; Integer: 6 - 12. Legend: Perfect, Pick Cor. or BHT (512), Profile, No prediction]

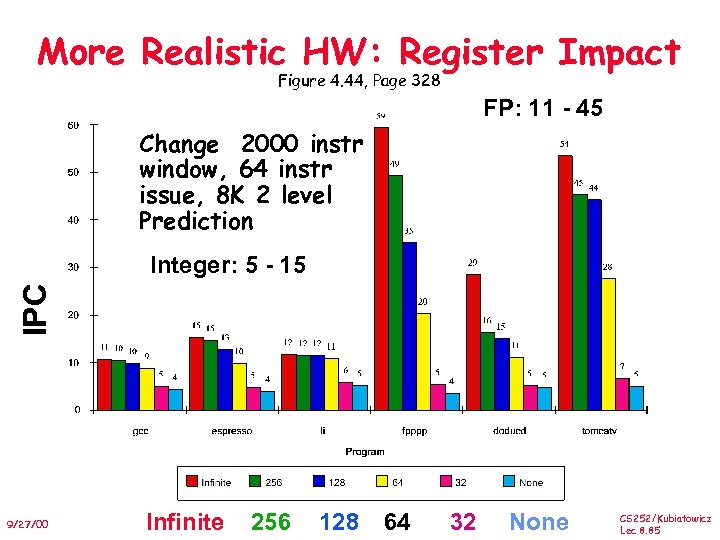

More Realistic HW: Register Impact (Figure 4.44, page 328)
Change: 2000 instr window, 64 instr issue, 8K 2-level prediction
[Figure: IPC per benchmark by number of renaming registers. FP: 11 - 45; Integer: 5 - 15. Legend: Infinite, 256, 128, 64, 32, None]

Realistic HW for '9X: Window Impact (Figure 4.48, page 332)
Perfect disambiguation (HW), 1K selective prediction, 16-entry return, 64 registers, issue as many as window
[Figure: IPC per benchmark by window size. FP: 8 - 45; Integer: 6 - 12. Legend: Infinite, 256, 128, 64, 32, 16, 8, 4]
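Why the window size caps IPC can be seen with a toy scheduler. The sketch below is a simplification rather than the model behind the figure: it assumes the window is the oldest `window` not-yet-issued instructions, that every ready instruction in the window issues each cycle ("issue as many as window"), 1-cycle latency, and perfect renaming. The function name, the `(dest, sources)` encoding, and the sample program are hypothetical.

```python
def windowed_ipc(instructions, window):
    """IPC when only the oldest `window` unissued instructions may issue."""
    n = len(instructions)
    # Resolve true (RAW) dependences once, in program order.
    producers = []
    last_writer = {}
    for i, (dest, sources) in enumerate(instructions):
        producers.append([last_writer[s] for s in sources if s in last_writer])
        if dest is not None:
            last_writer[dest] = i

    done = [None] * n          # cycle at which each result becomes available
    pending = list(range(n))   # unissued instructions, in program order
    cycle = 0
    while pending:
        visible = pending[:window]
        # Pick everything in the window whose inputs are already available.
        issued = [
            i for i in visible
            if all(done[p] is not None and done[p] <= cycle for p in producers[i])
        ]
        for i in issued:
            done[i] = cycle + 1
        pending = [i for i in pending if done[i] is None]
        cycle += 1
    return n / cycle


# Hypothetical program: two 2-long chains that join, plus one unrelated
# instruction. r0 has no producer, so it is treated as always available.
prog = [
    ("r1", ["r0"]),
    ("r2", ["r1"]),
    ("r3", ["r0"]),
    ("r4", ["r3"]),
    ("r5", ["r2", "r4"]),
    ("r6", ["r0"]),
]
for w in (1, 2, 6):
    print(w, windowed_ipc(prog, w))  # 1 -> 1.0, 2 -> 1.5, 6 -> 2.0
```

With a window of 1 the machine degenerates to in-order single issue; once the window covers the whole program the result matches the dataflow limit of the ideal machine.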
