6b4b8a2f25220989125424ab177de215.ppt

- Number of slides: 60

CS252 Graduate Computer Architecture
Lecture 14: 3+1 Cs of Caching and Many Ways of Cache Optimization
John Kubiatowicz
Electrical Engineering and Computer Sciences
University of California, Berkeley
http://www.eecs.berkeley.edu/~kubitron/cs252

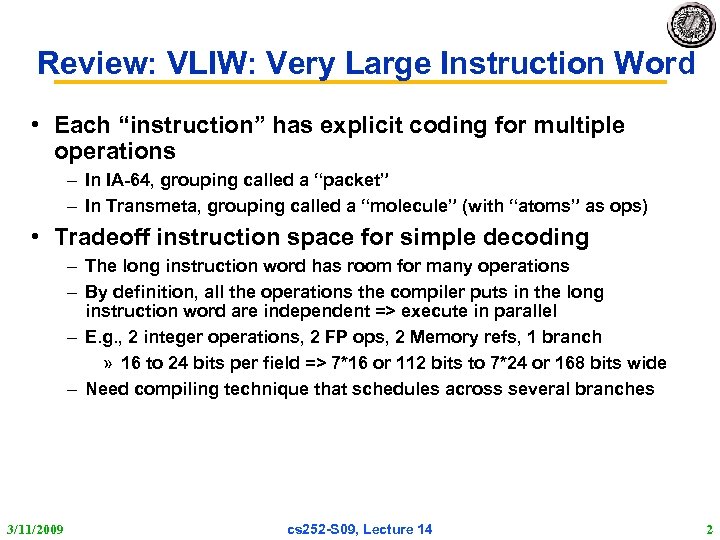

Review: VLIW: Very Large Instruction Word
• Each “instruction” has explicit coding for multiple operations
  – In IA-64, the grouping is called a “packet”
  – In Transmeta, the grouping is called a “molecule” (with “atoms” as ops)
• Trades instruction space for simple decoding
  – The long instruction word has room for many operations
  – By definition, all the operations the compiler puts in the long instruction word are independent => they execute in parallel
  – E.g., 2 integer operations, 2 FP ops, 2 memory refs, 1 branch
    » 16 to 24 bits per field => 7 x 16 = 112 bits to 7 x 24 = 168 bits wide
  – Needs a compiling technique that schedules across several branches
3/11/2009 cs252-S09, Lecture 14
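
A minimal sketch of what the compiler does when it fills these words, assuming a hypothetical greedy list scheduler (`Op`, `pack`, and the one-word-per-dependence latency are illustrative assumptions, not from the lecture). It reproduces the slide's width arithmetic (7 fields of 16 or 24 bits) and shows how partially filled words still pay for every field:

```python
from dataclasses import dataclass, field

# Slot mix from the slide's example word: 2 integer, 2 FP, 2 memory, 1 branch.
SLOTS = {"int": 2, "fp": 2, "mem": 2, "br": 1}


@dataclass
class Op:
    name: str
    unit: str                                  # one of the SLOTS keys
    deps: list = field(default_factory=list)   # names of producer ops


def pack(ops):
    """Greedy list scheduling into long instruction words.

    Each op goes into the earliest word after all of its producers that still
    has a free slot of its unit type. Assumes ops arrive in dependence order
    and every dependence costs exactly one word of latency.
    """
    words = []    # each word maps unit -> list of op names placed in it
    placed = {}   # op name -> index of the word it was placed in
    for op in ops:
        w = max((placed[d] + 1 for d in op.deps), default=0)
        while True:
            if w == len(words):
                words.append({unit: [] for unit in SLOTS})
            if len(words[w][op.unit]) < SLOTS[op.unit]:
                words[w][op.unit].append(op.name)
                placed[op.name] = w
                break
            w += 1
    return words


def encoding_bits(num_words, bits_per_field):
    """Every word carries all 7 fields, so empty (NOP) fields still cost bits."""
    return num_words * sum(SLOTS.values()) * bits_per_field


ops = [
    Op("ld1", "mem"),
    Op("ld2", "mem"),
    Op("add", "int", ["ld1", "ld2"]),
    Op("st", "mem", ["add"]),
    Op("br", "br"),
]
words = pack(ops)
print(len(words), "words,", encoding_bits(len(words), 16), "bits for 5 ops")
```

Here 5 operations occupy 3 words of 7 fields each, so 16 of the 21 fields are NOPs; that waste is the code-size problem raised on the next slide.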

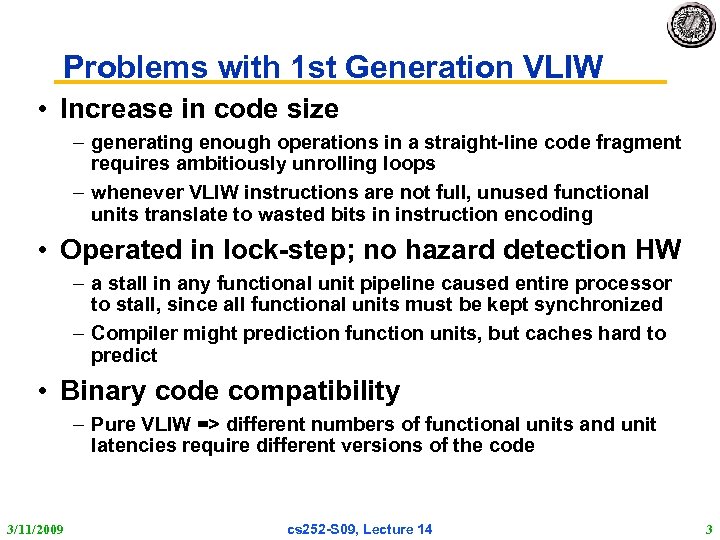

Problems with 1st Generation VLIW
• Increase in code size
  – Generating enough operations in a straight-line code fragment requires ambitiously unrolling loops
  – Whenever VLIW instructions are not full, unused functional units translate to wasted bits in the instruction encoding
• Operated in lock-step; no hazard detection HW
  – A stall in any functional unit pipeline caused the entire processor to stall, since all functional units must be kept synchronized
  – The compiler can predict functional-unit latencies, but cache behavior is hard to predict
• Binary code compatibility
  – Pure VLIW => different numbers of functional units and unit latencies require different versions of the code

Discussion of Two Papers for Today
• “DAISY: Dynamic Compilation for 100% Architectural Compatibility,” Erik R. Altman. Appeared in the International Symposium on Computer Architecture (ISCA), 1997
• “The Transmeta Code Morphing Software: Using Speculation, Recovery, and Adaptive Retranslation to Address Real-Life Challenges,” James C. Dehnert, Brian K. Grant, John P. Banning, Richard Johnson, Thomas Kistler, Alexander Klaiber, Jim Mattson. Appeared in the Proceedings of the First Annual IEEE/ACM International Symposium on Code Generation and Optimization (CGO), March 2003

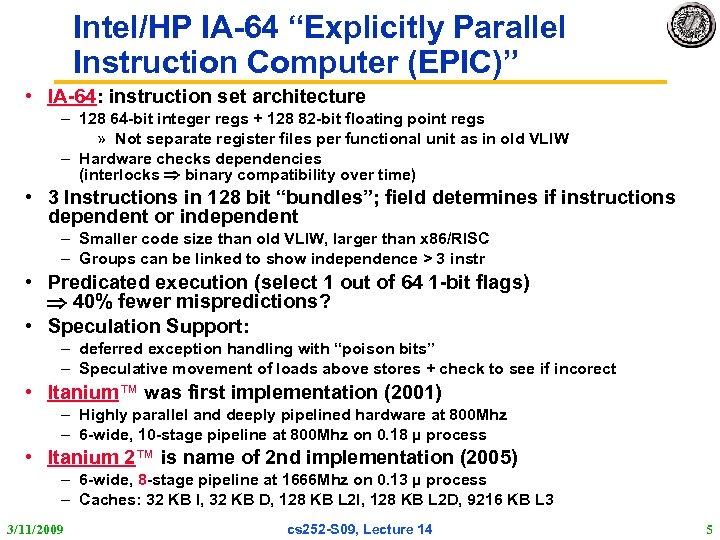

Intel/HP IA-64 “Explicitly Parallel Instruction Computing (EPIC)”
• IA-64: instruction set architecture
  – 128 64-bit integer regs + 128 82-bit floating-point regs
    » Not separate register files per functional unit as in old VLIW
  – Hardware checks dependencies (interlocks => binary compatibility over time)
• 3 instructions in 128-bit “bundles”; a template field determines whether instructions are dependent or independent
  – Smaller code size than old VLIW, larger than x86/RISC
  – Groups can be linked to show independence of > 3 instructions
• Predicated execution (select 1 out of 64 1-bit flags) => 40% fewer mispredictions?
• Speculation support:
  – Deferred exception handling with “poison bits”
  – Speculative movement of loads above stores + check to see if incorrect
• Itanium™ was the first implementation (2001)
  – Highly parallel and deeply pipelined hardware at 800 MHz
  – 6-wide, 10-stage pipeline at 800 MHz on 0.18 µ process
• Itanium 2™ is the name of the 2nd implementation (2005)
  – 6-wide, 8-stage pipeline at 1666 MHz on 0.13 µ process
  – Caches: 32 KB I, 32 KB D, 128 KB L2 I, 128 KB L2 D, 9216 KB L3
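
The slide does not give the bundle layout; the sketch below uses the architected IA-64 format, a 5-bit template plus three 41-bit instruction slots, for 128 bits total. The template encodings themselves (which unit types and stops each value means) are not modeled, and `pack_bundle` and `unpack_bundle` are illustrative names. The last line shows why IA-64 code is larger than RISC code:

```python
TEMPLATE_BITS = 5
SLOT_BITS = 41
SLOT_MASK = (1 << SLOT_BITS) - 1
TEMPLATE_MASK = (1 << TEMPLATE_BITS) - 1


def pack_bundle(template, slots):
    """Pack a 5-bit template and three 41-bit instruction slots into one
    128-bit bundle: template in bits 0-4, slot 0 in bits 5-45,
    slot 1 in bits 46-86, slot 2 in bits 87-127."""
    if not 0 <= template <= TEMPLATE_MASK:
        raise ValueError("template must fit in 5 bits")
    if len(slots) != 3 or not all(0 <= s <= SLOT_MASK for s in slots):
        raise ValueError("need exactly three 41-bit slots")
    bundle = template
    for i, slot in enumerate(slots):
        bundle |= slot << (TEMPLATE_BITS + i * SLOT_BITS)
    return bundle


def unpack_bundle(bundle):
    """Inverse of pack_bundle: returns (template, [slot0, slot1, slot2])."""
    template = bundle & TEMPLATE_MASK
    slots = [(bundle >> (TEMPLATE_BITS + i * SLOT_BITS)) & SLOT_MASK for i in range(3)]
    return template, slots


assert TEMPLATE_BITS + 3 * SLOT_BITS == 128
print(f"{128 / 3:.1f} bits per instruction vs. 32 for a classic RISC")
```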

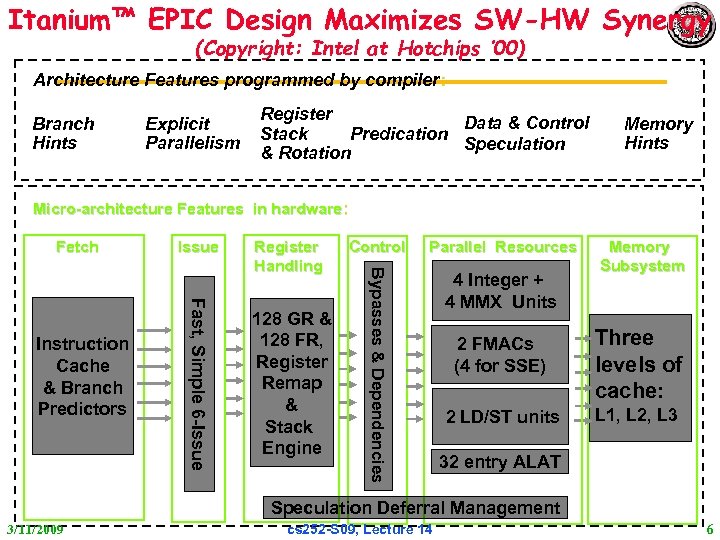

Itanium™ EPIC Design Maximizes SW-HW Synergy (Copyright: Intel at Hotchips ’00)
• Architecture features programmed by the compiler: Branch Hints, Explicit Parallelism, Register Stack & Rotation, Data & Control Speculation, Predication, Memory Hints
• Micro-architecture features in hardware:
  – Fetch: Instruction Cache & Branch Predictors
  – Issue: Fast, Simple 6-Issue
  – Register Handling: 128 GR & 128 FR, Register Remap & Stack Engine
  – Control: Bypasses & Dependencies, Speculation Deferral Management
  – Parallel Resources: 4 Integer + 4 MMX Units, 2 FMACs (4 for SSE), 2 LD/ST units, 32-entry ALAT
  – Memory Subsystem: Three levels of cache: L1, L2, L3

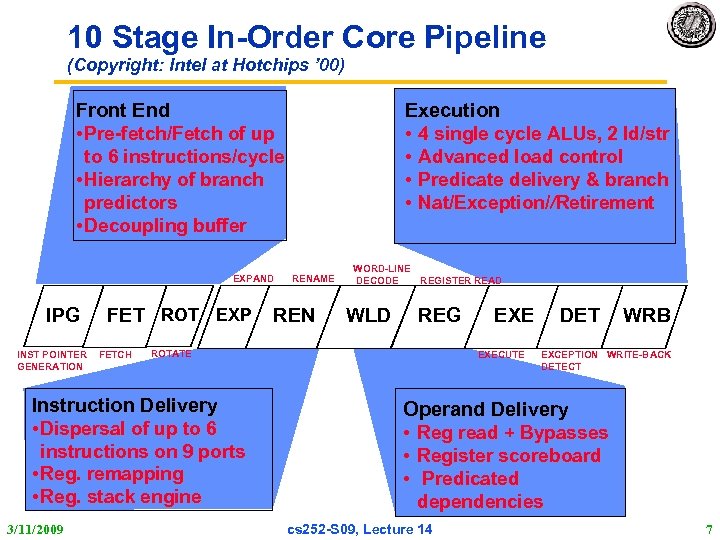

10-Stage In-Order Core Pipeline (Copyright: Intel at Hotchips ’00)
Stages: IPG (Inst. Pointer Generation), FET (Fetch), ROT (Rotate), EXP (Expand), REN (Rename), WLD (Word-Line Decode), REG (Register Read), EXE (Execute), DET (Exception Detect), WRB (Write-Back)
• Front End
  – Pre-fetch/fetch of up to 6 instructions/cycle
  – Hierarchy of branch predictors
  – Decoupling buffer
• Instruction Delivery
  – Dispersal of up to 6 instructions on 9 ports
  – Reg. remapping
  – Reg. stack engine
• Operand Delivery
  – Reg read + bypasses
  – Register scoreboard
  – Predicated dependencies
• Execution
  – 4 single-cycle ALUs, 2 ld/st
  – Advanced load control
  – Predicate delivery & branch
  – NaT/Exception/Retirement

Why More on Memory Hierarchy?
The processor-memory performance gap is growing.

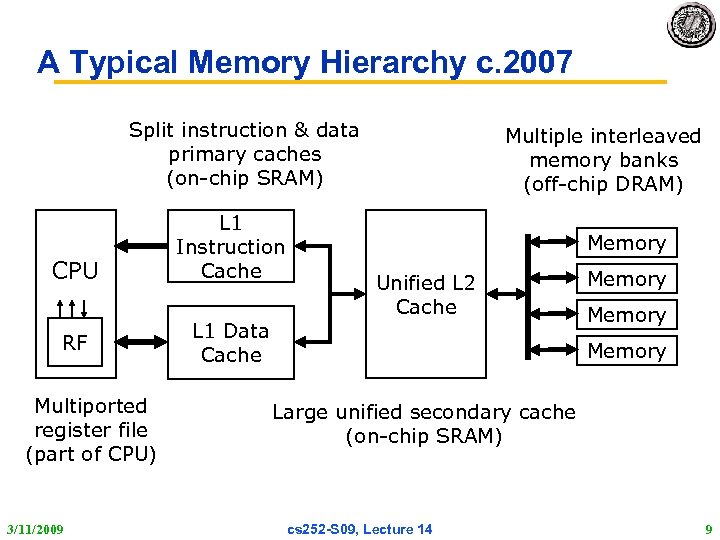

A Typical Memory Hierarchy c. 2007
• CPU register file (RF): multiported, part of the CPU
• L1 instruction cache and L1 data cache: split primary caches (on-chip SRAM)
• Unified L2 cache: large secondary cache (on-chip SRAM)
• Memory: multiple interleaved memory banks (off-chip DRAM)

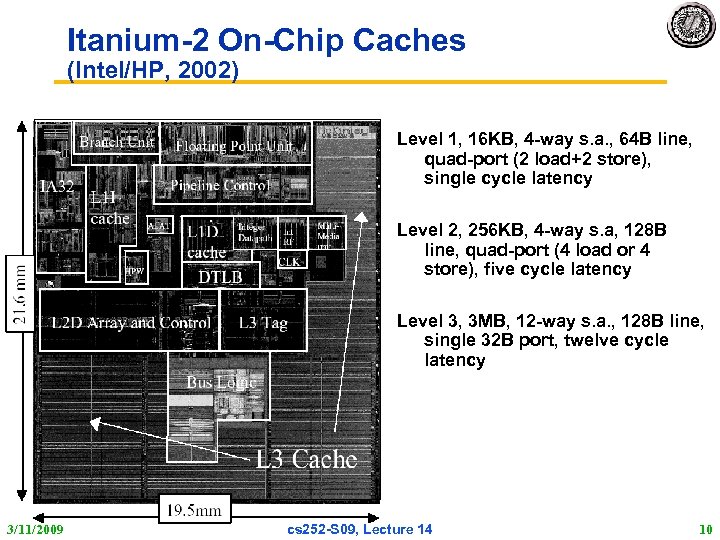

Itanium-2 On-Chip Caches (Intel/HP, 2002)
• Level 1: 16 KB, 4-way s.a., 64 B line, quad-port (2 load + 2 store), single-cycle latency
• Level 2: 256 KB, 4-way s.a., 128 B line, quad-port (4 load or 4 store), five-cycle latency
• Level 3: 3 MB, 12-way s.a., 128 B line, single 32 B port, twelve-cycle latency

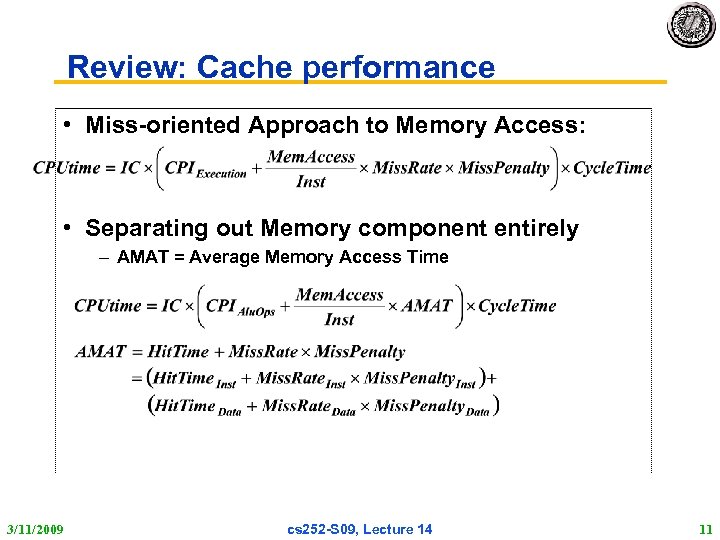

Review: Cache Performance
• Miss-oriented approach to memory access
• Separating out the memory component entirely
  – AMAT = Average Memory Access Time
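
The equations for these two approaches survive only in the slide image. The standard forms from H&P, which we assume the slide shows, are (IC = instruction count):

$$
\text{CPU time} = IC \times \left( CPI_{\text{Execution}} + \frac{\text{Memory accesses}}{\text{Instruction}} \times \text{Miss rate} \times \text{Miss penalty} \right) \times \text{Clock cycle time}
$$

$$
\text{CPU time} = IC \times \left( \frac{\text{ALU ops}}{\text{Instruction}} \times CPI_{\text{ALU ops}} + \frac{\text{Memory accesses}}{\text{Instruction}} \times \text{AMAT} \right) \times \text{Clock cycle time}
$$

$$
\text{AMAT} = \text{Hit time} + \text{Miss rate} \times \text{Miss penalty}
$$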

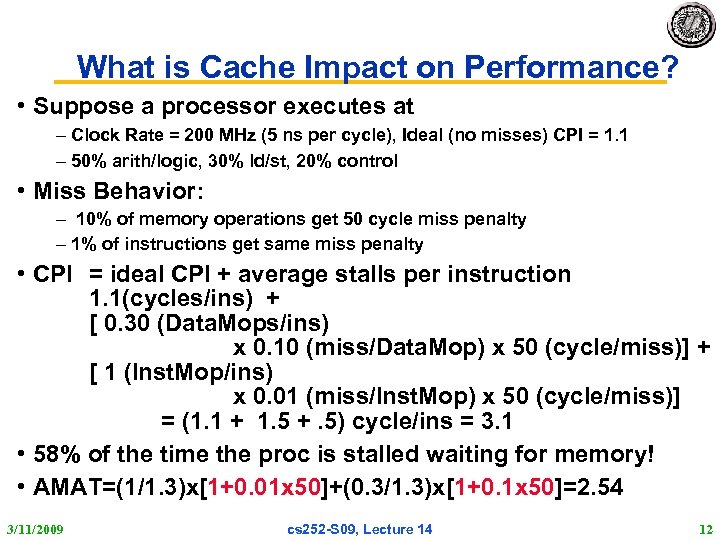

What Is the Cache's Impact on Performance?
• Suppose a processor executes at
  – Clock rate = 200 MHz (5 ns per cycle), ideal (no misses) CPI = 1.1
  – 50% arith/logic, 30% ld/st, 20% control
• Miss behavior:
  – 10% of data memory operations get a 50-cycle miss penalty
  – 1% of instructions get the same miss penalty
• CPI = ideal CPI + average stalls per instruction
  = 1.1 (cycles/ins) + [0.30 (DataMops/ins) x 0.10 (miss/DataMop) x 50 (cycles/miss)] + [1 (InstMop/ins) x 0.01 (miss/InstMop) x 50 (cycles/miss)]
  = (1.1 + 1.5 + 0.5) cycles/ins = 3.1
• About 65% of the time (2.0 of every 3.1 cycles) the processor is stalled waiting for memory!
• AMAT = (1/1.3) x [1 + 0.01 x 50] + (0.3/1.3) x [1 + 0.1 x 50] = 2.54
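
The slide's arithmetic can be checked with a few lines of Python. The function names are illustrative, and AMAT assumes a 1-cycle hit time, as in the slide's formula:

```python
def cpi_with_stalls(ideal_cpi, streams, miss_penalty):
    """streams: (accesses per instruction, miss rate) for each access type."""
    stalls = sum(per_inst * miss_rate * miss_penalty for per_inst, miss_rate in streams)
    return ideal_cpi + stalls, stalls


def amat(streams, hit_time, miss_penalty):
    """Access-weighted average of hit_time + miss_rate * miss_penalty."""
    total = sum(per_inst for per_inst, _ in streams)
    return sum(per_inst / total * (hit_time + miss_rate * miss_penalty)
               for per_inst, miss_rate in streams)


streams = [(1.0, 0.01),   # instruction fetch: 1 per instruction, 1% miss rate
           (0.30, 0.10)]  # data: 0.30 loads/stores per instruction, 10% miss rate
cpi, stalls = cpi_with_stalls(1.1, streams, 50)
print(f"CPI = {cpi:.2f}, fraction of cycles stalled = {stalls / cpi:.1%}")
print(f"AMAT = {amat(streams, 1, 50):.2f} cycles")
# CPI = 3.10, fraction of cycles stalled = 64.5%
# AMAT = 2.54 cycles
```

Memory stalls contribute 2.0 of the 3.1 cycles per instruction.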

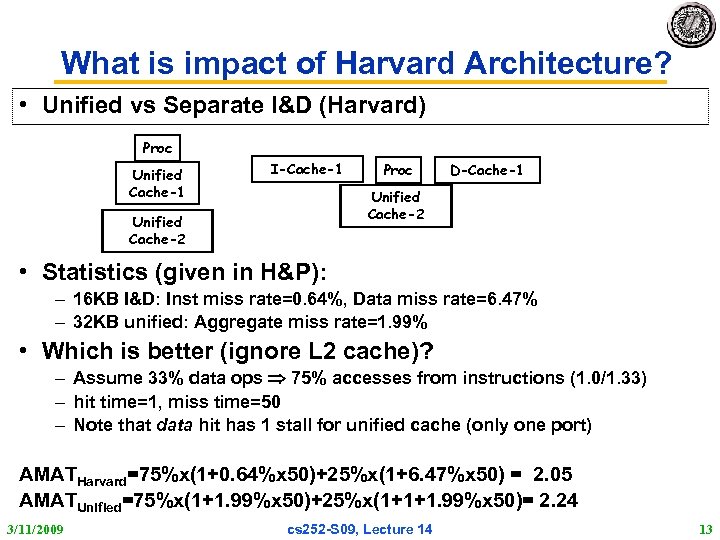

What Is the Impact of a Harvard Architecture?
• Unified vs. separate I&D (Harvard) caches
  – Unified: Proc → Unified Cache-1 → Unified Cache-2
  – Harvard: Proc → I-Cache-1 and D-Cache-1 → Unified Cache-2
• Statistics (given in H&P):
  – 16 KB I & 16 KB D: Inst miss rate = 0.64%, Data miss rate = 6.47%
  – 32 KB unified: Aggregate miss rate = 1.99%
• Which is better (ignore the L2 cache)?
  – Assume 33% data ops => 75% of accesses are from instructions (1.0/1.33)
  – Hit time = 1, miss time = 50
  – Note that a data hit has 1 extra stall for the unified cache (only one port)
AMAT_Harvard = 75% x (1 + 0.64% x 50) + 25% x (1 + 6.47% x 50) = 2.05
AMAT_Unified = 75% x (1 + 1.99% x 50) + 25% x (1 + 1 + 1.99% x 50) = 2.24
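
The two AMAT formulas above, written as functions so the port-conflict assumption is explicit (the function and parameter names are illustrative):

```python
def amat_split(frac_inst, hit, penalty, inst_miss, data_miss):
    """Harvard: separate I- and D-caches, each with its own port."""
    frac_data = 1 - frac_inst
    return (frac_inst * (hit + inst_miss * penalty)
            + frac_data * (hit + data_miss * penalty))


def amat_unified(frac_inst, hit, penalty, miss, port_stall=1):
    """Unified single-ported cache: each data access waits port_stall extra
    cycles behind an instruction fetch, as the slide assumes."""
    frac_data = 1 - frac_inst
    return (frac_inst * (hit + miss * penalty)
            + frac_data * (hit + port_stall + miss * penalty))


harvard = amat_split(0.75, 1, 50, 0.0064, 0.0647)
unified = amat_unified(0.75, 1, 50, 0.0199)
print(f"Harvard {harvard:.3f} vs. unified {unified:.3f} cycles")
```

The split caches win even though their combined miss rate, 75% x 0.64% + 25% x 6.47% ≈ 2.10%, is slightly higher than the unified cache's 1.99%: the structural stall on every unified data access costs more than the extra misses.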

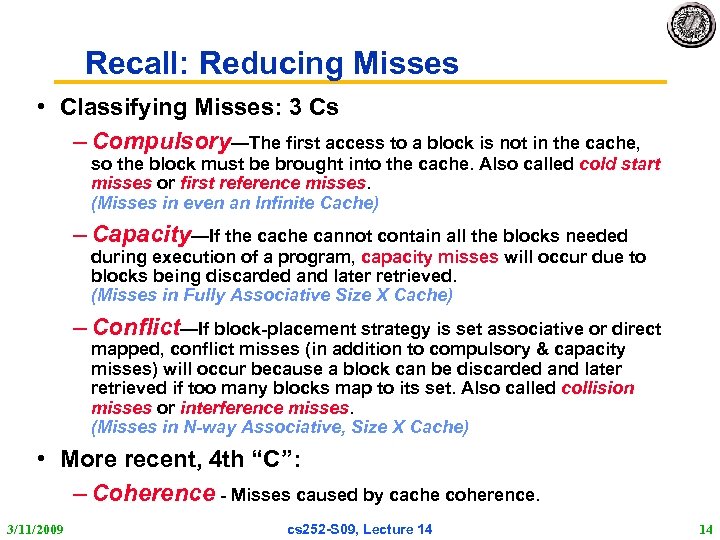

Recall: Reducing Misses
• Classifying misses: 3 Cs
  – Compulsory: The first access to a block is not in the cache, so the block must be brought into the cache. Also called cold-start misses or first-reference misses. (Misses in even an infinite cache)
  – Capacity: If the cache cannot contain all the blocks needed during execution of a program, capacity misses will occur due to blocks being discarded and later retrieved. (Misses in a fully associative cache of size X)
  – Conflict: If the block-placement strategy is set associative or direct mapped, conflict misses (in addition to compulsory & capacity misses) will occur because a block can be discarded and later retrieved if too many blocks map to its set. Also called collision misses or interference misses. (Misses in an N-way associative cache of size X)
• More recent, 4th “C”:
  – Coherence: Misses caused by cache coherence.
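
The definitions above translate directly into the usual measurement recipe: simulate the real cache alongside a fully associative cache of the same size and classify each miss. A minimal sketch, assuming LRU replacement in both caches, traces of block addresses, and a hypothetical `classify_misses` helper; coherence misses are not modeled:

```python
from collections import OrderedDict


def classify_misses(block_trace, num_blocks, assoc):
    """Classify each miss of an assoc-way, num_blocks-block LRU cache.

    compulsory: first reference to the block (misses even in an infinite cache)
    capacity:   also misses in a fully associative LRU cache of the same size
    conflict:   hits in that fully associative cache, misses in the real one
    """
    num_sets = num_blocks // assoc
    sets = [OrderedDict() for _ in range(num_sets)]   # per-set LRU order
    fully_assoc = OrderedDict()                       # same-size FA shadow cache
    seen = set()
    counts = {"hit": 0, "compulsory": 0, "capacity": 0, "conflict": 0}
    for block in block_trace:
        # Reference the fully associative shadow cache.
        fa_hit = block in fully_assoc
        if fa_hit:
            fully_assoc.move_to_end(block)
        else:
            if len(fully_assoc) == num_blocks:
                fully_assoc.popitem(last=False)       # evict LRU block
            fully_assoc[block] = True
        # Reference the real set-associative cache.
        s = sets[block % num_sets]
        if block in s:
            s.move_to_end(block)
            counts["hit"] += 1
        else:
            if len(s) == assoc:
                s.popitem(last=False)                 # evict LRU way of the set
            s[block] = True
            if block not in seen:
                counts["compulsory"] += 1
            elif not fa_hit:
                counts["capacity"] += 1
            else:
                counts["conflict"] += 1
        seen.add(block)
    return counts


# Blocks 0 and 4 collide in set 0 of a 4-block direct-mapped cache.
print(classify_misses([0, 4, 0, 4], num_blocks=4, assoc=1))
```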

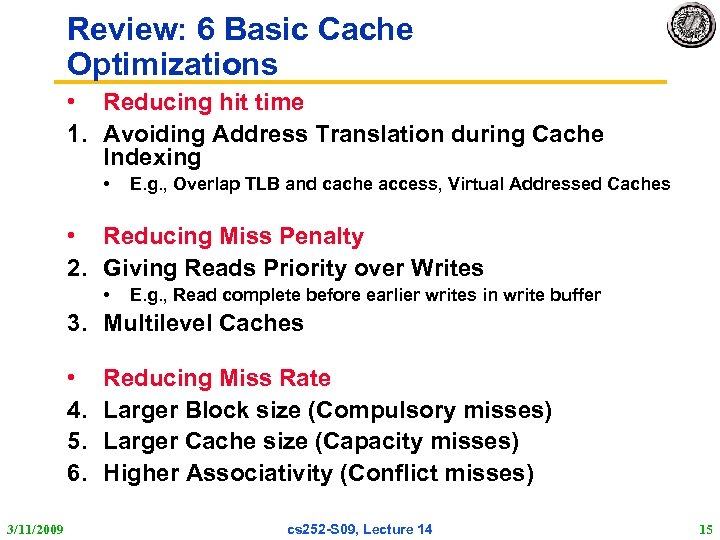

Review: 6 Basic Cache Optimizations
• Reducing hit time
  1. Avoiding address translation during cache indexing
    » E.g., overlap TLB and cache access, virtually addressed caches
• Reducing miss penalty
  2. Giving reads priority over writes
    » E.g., read completes before earlier writes in write buffer
  3. Multilevel caches
• Reducing miss rate
  4. Larger block size (compulsory misses)
  5. Larger cache size (capacity misses)
  6. Higher associativity (conflict misses)

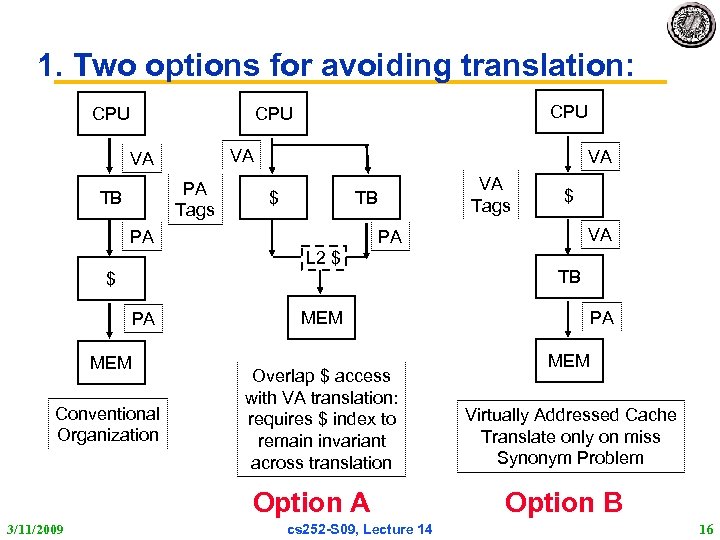

1. Two Options for Avoiding Translation
• Conventional organization: CPU → VA → TB → PA → physically addressed cache ($) → MEM
• Option A: Overlap $ access with VA translation; requires the $ index to remain invariant across translation
  – The VA indexes the $ while the TB translates it in parallel; PA tags are compared; the L2 $ is physically addressed
• Option B: Virtually addressed cache (VA tags); translate only on a miss
  – Suffers from the synonym problem
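
Option A's requirement can be stated as a bit count: the index and block-offset bits must lie inside the page offset, which translation leaves unchanged. A sketch assuming 4 KB pages (the slide gives no page size; the helper names are hypothetical):

```python
def log2_exact(n):
    if n <= 0 or n & (n - 1):
        raise ValueError("sizes must be powers of two")
    return n.bit_length() - 1


def can_overlap_translation(cache_bytes, assoc, page_bytes):
    """Index + offset bits = log2(cache_bytes / assoc), i.e. the size of one way;
    they must all come from the untranslated page offset."""
    return log2_exact(cache_bytes // assoc) <= log2_exact(page_bytes)


PAGE = 4096  # assumed 4 KB pages
for size, ways in [(8192, 2), (16384, 4), (32768, 2)]:
    print(f"{size // 1024} KB {ways}-way: {can_overlap_translation(size, ways, PAGE)}")
```

With a fixed page size, one way can be at most one page, so growing an Option A cache tends to mean adding ways rather than sets.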

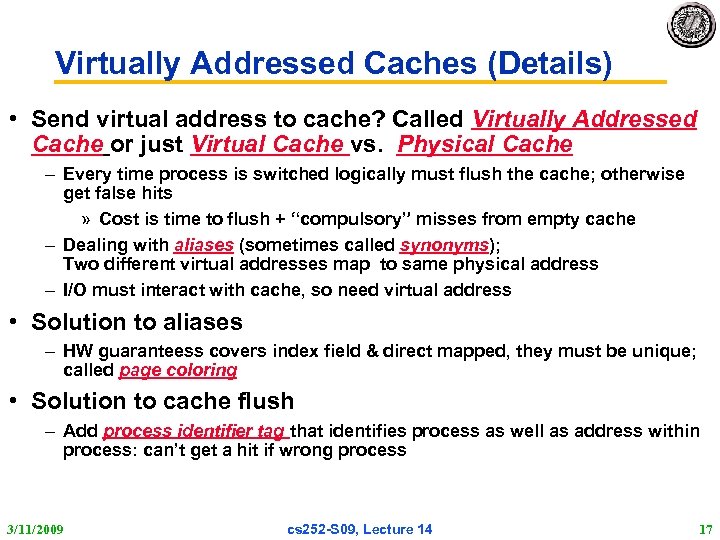

Virtually Addressed Caches (Details)
• Send the virtual address to the cache? Called a virtually addressed cache, or just virtual cache, vs. a physical cache
  – Every time the process is switched, the cache must logically be flushed; otherwise we get false hits
    » Cost is time to flush + “compulsory” misses from an empty cache
  – Dealing with aliases (sometimes called synonyms): two different virtual addresses map to the same physical address
  – I/O must interact with the cache, so it needs virtual addresses
• Solution to aliases
  – Guarantee that aliases agree in all address bits that cover the index field; with a direct-mapped cache, aliases then map to the same block frame, so they must be unique; called page coloring
• Solution to cache flush
  – Add a process-identifier tag that identifies the process as well as the address within the process: can't get a hit if wrong process
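
A sketch of the page-coloring rule, assuming a 16 KB direct-mapped cache, 64 B blocks, and 4 KB pages (none of these sizes are from the slide; the helper names and addresses are hypothetical). If a virtual page is only ever mapped to physical frames of the same color, every alias of a physical block agrees in all index bits and lands in the same block frame, where at most one copy can live:

```python
def num_colors(cache_bytes, assoc, page_bytes):
    """Page-sized slices in one cache way; log2 of this is the number of
    page-number bits that fall inside the cache index."""
    return max(1, (cache_bytes // assoc) // page_bytes)


def page_color(addr, page_bytes, colors):
    return (addr // page_bytes) % colors


def dm_index(addr, cache_bytes, block_bytes):
    """Block-frame index of addr in a direct-mapped cache."""
    return (addr % cache_bytes) // block_bytes


CACHE, BLOCK, PAGE = 16 * 1024, 64, 4096  # assumed sizes
colors = num_colors(CACHE, 1, PAGE)       # 4 colors
va1, va2 = 0x03040, 0x17040               # two virtual aliases...
pa = 0x8B040                              # ...of this physical block, all color 3
for addr in (va1, va2, pa):
    print(hex(addr), page_color(addr, PAGE, colors), dm_index(addr, CACHE, BLOCK))
```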

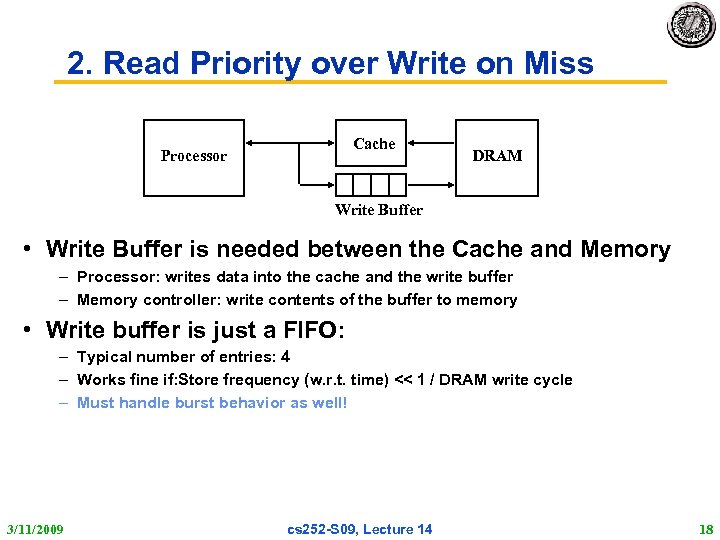

2. Read Priority over Write on Miss
[Figure: Processor → Cache and Write Buffer → DRAM]
• A write buffer is needed between the cache and memory
  – Processor: writes data into the cache and the write buffer
  – Memory controller: writes contents of the buffer to memory
• The write buffer is just a FIFO:
  – Typical number of entries: 4
  – Works fine if: store frequency (w.r.t. time) << 1 / DRAM write cycle
  – Must handle burst behavior as well!
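
A cycle-level sketch of the FIFO, assuming a 4-entry buffer and one DRAM write every 8 cycles (illustrative numbers; `write_buffer_stalls` is a hypothetical helper). Entries retire in FIFO order, so only occupancy matters for counting stalls. Sparse stores never stall, while a burst longer than the buffer does:

```python
def write_buffer_stalls(store_pattern, depth=4, drain_every=8):
    """store_pattern: one boolean per processor cycle (True = a store issues).
    The memory controller writes the oldest entry to DRAM, taking drain_every
    cycles per entry. A store that finds the buffer full stalls the processor
    until an entry retires. Returns the number of stall cycles."""
    occupancy = 0  # entries in the FIFO, including the one being written
    busy = 0       # cycles left on the DRAM write in progress
    stalls = 0
    i = 0
    while i < len(store_pattern):
        # Memory-controller side: start writing the oldest entry if DRAM is idle.
        if busy == 0 and occupancy:
            busy = drain_every
        if busy:
            busy -= 1
            if busy == 0:
                occupancy -= 1   # oldest entry is now in DRAM
        # Processor side: a store needs a free FIFO entry.
        if store_pattern[i]:
            if occupancy == depth:
                stalls += 1      # buffer full: retry the same store next cycle
                continue
            occupancy += 1
        i += 1
    return stalls


sparse = ([True] + [False] * 9) * 10   # one store every 10 cycles
print(write_buffer_stalls(sparse), write_buffer_stalls([True] * 5))
```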

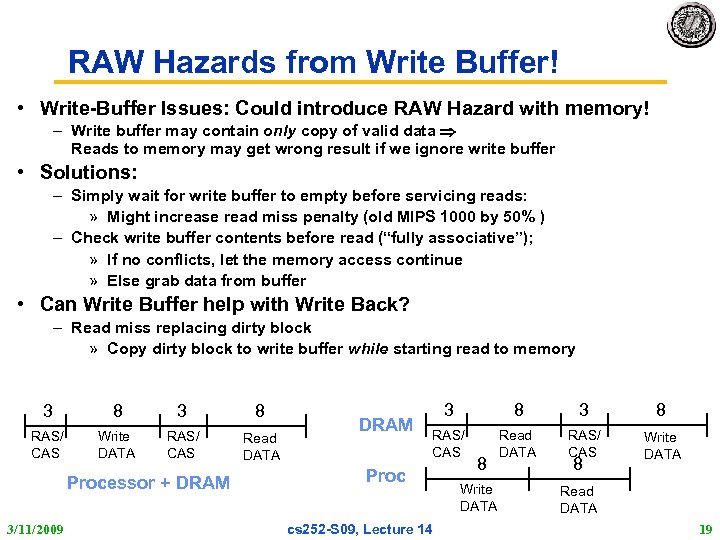

RAW Hazards from Write Buffer!
• Write-buffer issues: could introduce a RAW hazard with memory!
  – The write buffer may contain the only copy of valid data => reads to memory may get the wrong result if we ignore the write buffer
• Solutions:
  – Simply wait for the write buffer to empty before servicing reads:
    » Might increase read miss penalty (old MIPS 1000 by 50%)
  – Check write buffer contents before read (“fully associative”);
    » If no conflicts, let the memory access continue
    » Else grab data from buffer
• Can the write buffer help with write back?
  – Read miss replacing dirty block
    » Copy dirty block to write buffer while starting read to memory
[Figure: DRAM timing (RAS/CAS and DATA phases, labeled 3 and 8) for a read miss that replaces a dirty block: without a write buffer the write precedes the read; with one, the read goes to DRAM first and the write follows]
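
A minimal model of the second solution, assuming a dict stands in for DRAM and each entry holds one word (`WriteBuffer` and its methods are hypothetical names). Reads search the buffer youngest-first, because the youngest matching entry holds the most recent value; `read_ignoring_buffer` shows the RAW hazard the slide warns about:

```python
from collections import deque


class WriteBuffer:
    def __init__(self, memory, depth=4):
        self.memory = memory      # dict: address -> value (stands in for DRAM)
        self.entries = deque()    # (address, value), oldest on the left
        self.depth = depth

    def write(self, addr, value):
        if len(self.entries) == self.depth:
            self.drain_one()      # full: the processor would stall for this
        self.entries.append((addr, value))

    def drain_one(self):
        """Memory controller retires the oldest entry to DRAM."""
        addr, value = self.entries.popleft()
        self.memory[addr] = value

    def read(self, addr):
        # Associative check of the buffer before going to memory.
        for a, v in reversed(self.entries):
            if a == addr:
                return v
        return self.memory.get(addr, 0)

    def read_ignoring_buffer(self, addr):
        # A read that bypasses the buffer can return stale data (RAW hazard).
        return self.memory.get(addr, 0)


dram = {0x100: 1}
wb = WriteBuffer(dram)
wb.write(0x100, 2)   # the only copy of the new value is in the buffer
print(wb.read_ignoring_buffer(0x100), wb.read(0x100))
```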

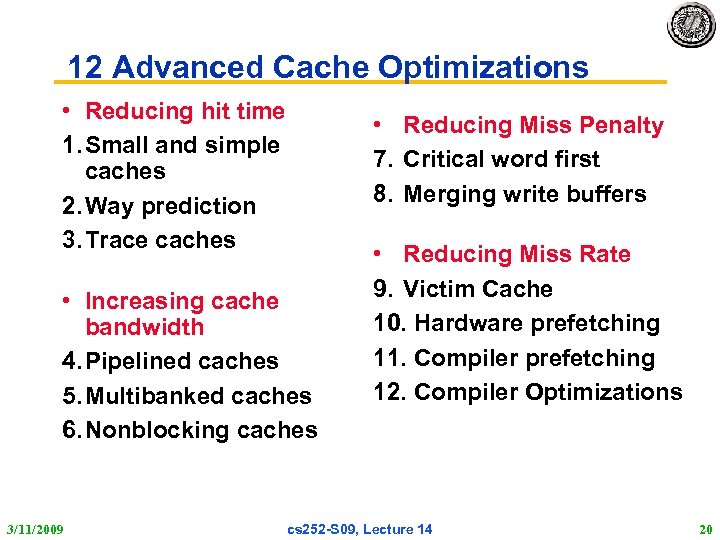

12 Advanced Cache Optimizations
• Reducing hit time
  1. Small and simple caches
  2. Way prediction
  3. Trace caches
• Increasing cache bandwidth
  4. Pipelined caches
  5. Nonblocking caches
  6. Multibanked caches
• Reducing miss penalty
  7. Critical word first
  8. Merging write buffers
• Reducing miss rate
  9. Victim cache
  10. Hardware prefetching
  11. Compiler prefetching
  12. Compiler optimizations

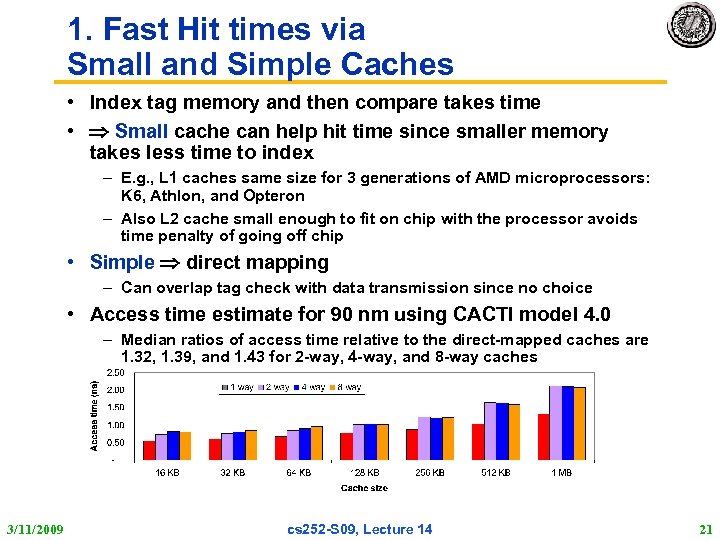

1. Fast Hit Times via Small and Simple Caches
• Indexing the tag memory and then comparing takes time
• A small cache can help hit time, since a smaller memory takes less time to index
  – E.g., L1 caches were the same size for 3 generations of AMD microprocessors: K6, Athlon, and Opteron
  – Also, an L2 cache small enough to fit on chip with the processor avoids the time penalty of going off chip
• Simple direct mapping
  – Can overlap tag check with data transmission, since there is no choice of block
• Access-time estimate for 90 nm using CACTI model 4.0
  – Median ratios of access time relative to direct-mapped caches are 1.32, 1.39, and 1.43 for 2-way, 4-way, and 8-way caches

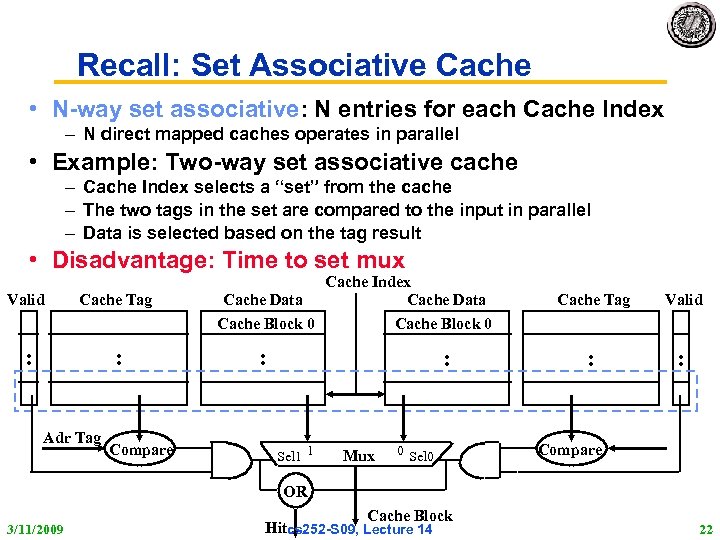

Recall: Set Associative Cache
• N-way set associative: N entries for each cache index
  – N direct-mapped caches operate in parallel
• Example: two-way set associative cache
  – Cache index selects a “set” from the cache
  – The two tags in the set are compared to the input in parallel
  – Data is selected based on the tag result
• Disadvantage: time to set the mux
[Figure: two-way lookup. The cache index selects one set; each way's valid bit and cache tag are compared with the address tag; the compare results drive Sel0/Sel1 of a mux choosing Cache Block 0 or 1, and are ORed to produce Hit]
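
A tag-only sketch of the lookup just described, assuming LRU replacement and power-of-two sizes (the class and method names are hypothetical). `split` divides the address into tag, index, and block offset; the membership test on a set stands in for the parallel tag comparators:

```python
class SetAssocCache:
    """Tag-only model of an N-way set-associative cache with LRU replacement.
    Address layout: | tag | index | block offset |."""

    def __init__(self, cache_bytes, block_bytes, ways):
        self.block_bytes = block_bytes
        self.ways = ways
        self.num_sets = cache_bytes // (block_bytes * ways)
        self.sets = [[] for _ in range(self.num_sets)]  # tags, MRU last

    def split(self, addr):
        block, offset = divmod(addr, self.block_bytes)
        tag, index = divmod(block, self.num_sets)
        return tag, index, offset

    def access(self, addr):
        """Returns True on a hit, False on a miss (the block is then filled)."""
        tag, index, _ = self.split(addr)
        s = self.sets[index]
        if tag in s:              # hardware compares all N tags in parallel
            s.remove(tag)
            s.append(tag)         # mark as most recently used
            return True
        if len(s) == self.ways:
            s.pop(0)              # evict the least recently used way
        s.append(tag)
        return False


c = SetAssocCache(8192, 32, 2)    # 8 KB, 32 B blocks, 2-way: 128 sets
print(c.split(0x1234))            # (tag, index, offset)
```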

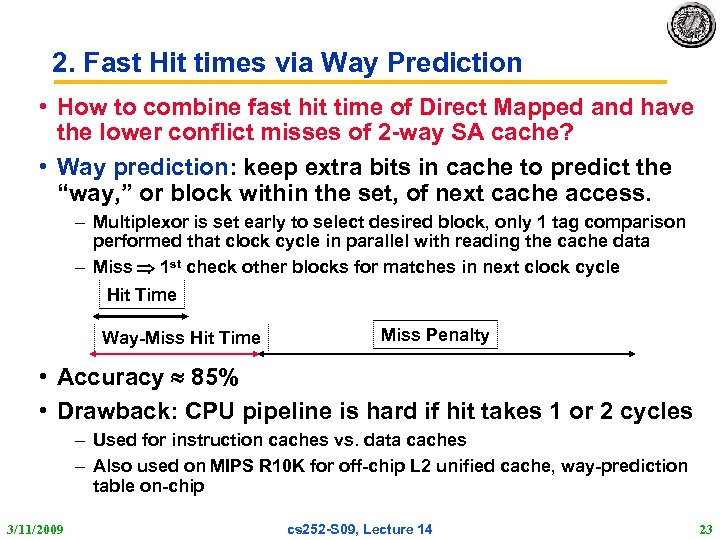

2. Fast Hit Times via Way Prediction
• How to combine the fast hit time of direct mapped with the lower conflict misses of a 2-way SA cache?
• Way prediction: keep extra bits in the cache to predict the “way,” or block within the set, of the next cache access
  – The multiplexor is set early to select the desired block; only 1 tag comparison is performed that clock cycle, in parallel with reading the cache data
  – On a miss, 1st check the other blocks for matches in the next clock cycle
[Figure: Hit Time < Way-Miss Hit Time < Miss Penalty]
• Accuracy ≈ 85%
• Drawback: the CPU pipeline is hard to design if a hit takes 1 or 2 cycles
  – Used for instruction caches vs. data caches
  – Also used on MIPS R10K for the off-chip L2 unified cache, with the way-prediction table on-chip
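
The 85% accuracy translates directly into an average hit time. A sketch assuming a correctly predicted hit takes 1 cycle and a way-mispredicted hit takes 2 (the 1-or-2-cycle hit the slide mentions); the function name is illustrative:

```python
def way_predicted_hit_time(accuracy, fast_hit=1, slow_hit=2):
    """Average hit time: correct predictions hit in fast_hit cycles;
    way mispredictions find the block one probe later, in slow_hit cycles."""
    return accuracy * fast_hit + (1 - accuracy) * slow_hit


print(round(way_predicted_hit_time(0.85), 2))  # 1.15
```

The average is close to direct-mapped speed, but the variable 1-or-2-cycle latency is what complicates the pipeline.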

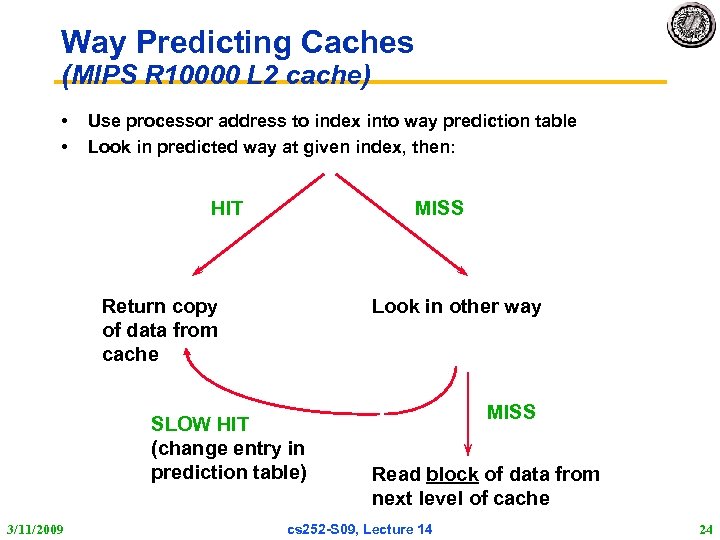

Way Predicting Caches (MIPS R10000 L2 cache)
• Use the processor address to index into the way prediction table
• Look in the predicted way at the given index, then:
  – HIT: return copy of data from cache
  – MISS: look in the other way
    » HIT: SLOW HIT (change entry in prediction table)
    » MISS: read block of data from next level of cache
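
The flowchart can be followed step by step in a small simulator. A sketch assuming a 2-way cache, one prediction bit per set with the table indexed by set index, and a miss that fills the non-predicted way and then predicts it; these choices and the `WayPredictedCache` name are assumptions, not the R10000's documented design:

```python
class WayPredictedCache:
    """Two-way set-associative cache with a way-prediction table."""

    def __init__(self, num_sets):
        self.num_sets = num_sets
        self.tags = [[None, None] for _ in range(num_sets)]
        self.predict = [0] * num_sets   # predicted way per set (1 bit)
        self.stats = {"fast_hit": 0, "slow_hit": 0, "miss": 0}

    def access(self, block):
        index, tag = block % self.num_sets, block // self.num_sets
        way = self.predict[index]
        if self.tags[index][way] == tag:           # look in predicted way
            self.stats["fast_hit"] += 1
            return "fast_hit"
        other = 1 - way
        if self.tags[index][other] == tag:         # look in the other way
            self.predict[index] = other            # change prediction entry
            self.stats["slow_hit"] += 1
            return "slow_hit"
        # Miss: read the block from the next level (assumed replacement:
        # fill the non-predicted way and predict it next time).
        self.tags[index][other] = tag
        self.predict[index] = other
        self.stats["miss"] += 1
        return "miss"


c = WayPredictedCache(num_sets=1)
print([c.access(b) for b in [0, 1, 0, 0, 1]])
```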

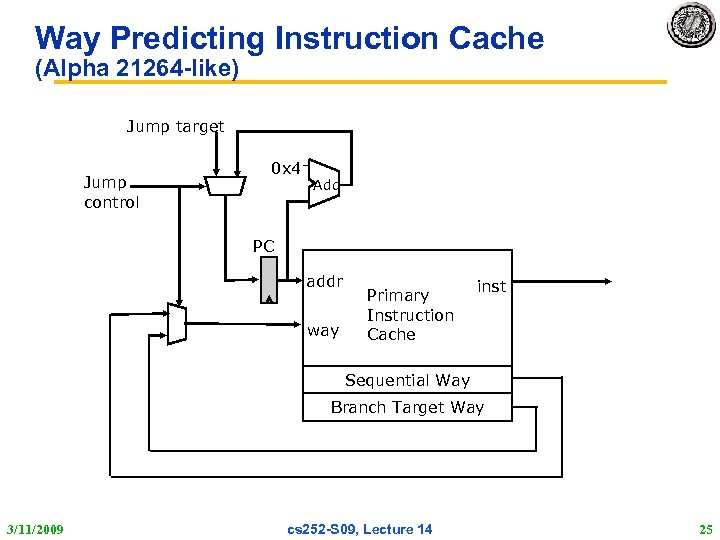

Way Predicting Instruction Cache (Alpha 21264-like)
[Figure: the PC (addr + way) indexes the primary instruction cache; each fetched line supplies a sequential-way and a branch-target-way prediction; jump control selects PC + 0x4 or the jump target as the next PC]

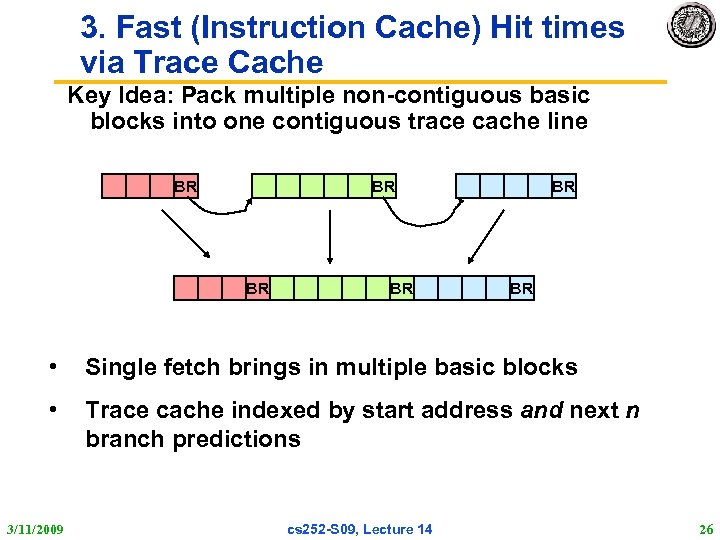

3. Fast (Instruction Cache) Hit Times via Trace Cache
Key idea: pack multiple non-contiguous basic blocks into one contiguous trace cache line
[Figure: three basic blocks, each ending in a branch (BR), packed into one trace line]
• A single fetch brings in multiple basic blocks
• The trace cache is indexed by start address and the next n branch predictions
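
A sketch of the indexing rule in the last bullet, assuming a dictionary keyed by (start address, next n predicted branch directions) with n = 3; the class, methods, and basic-block labels are hypothetical. The same basic block can appear in more than one line, one per predicted path:

```python
class TraceCache:
    """Trace lines keyed by start address plus the next n branch predictions."""

    def __init__(self, n_branches=3):
        self.n = n_branches
        self.lines = {}   # (start_pc, predictions) -> list of basic blocks

    def _key(self, start_pc, predictions):
        return (start_pc, tuple(predictions[: self.n]))

    def fill(self, start_pc, predictions, basic_blocks):
        self.lines[self._key(start_pc, predictions)] = list(basic_blocks)

    def fetch(self, start_pc, predictions):
        """One lookup returns several basic blocks, or None on a trace miss."""
        return self.lines.get(self._key(start_pc, predictions))


tc = TraceCache()
tc.fill(0x400, [True, False, True], ["A", "B", "D"])
tc.fill(0x400, [False, True, True], ["A", "C", "E"])   # block A stored twice
print(tc.fetch(0x400, [True, False, True]))
```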

3. Fast Hit Times via Trace Cache (Pentium 4 only; and last time?)
• Find more instruction-level parallelism?
• How to avoid translation from x86 to micro-ops?
• Trace cache in Pentium 4
  1. Dynamic traces of the executed instructions vs. static sequences of instructions as determined by layout in memory
    – Built-in branch predictor
  2. Cache the micro-ops vs. x86 instructions
    – Decode/translate from x86 to micro-ops on trace cache miss
+ 1. Better utilizes long blocks (don't exit in the middle of a block, don't enter at a label in the middle of a block)
- 1. Complicated address mapping, since addresses are no longer aligned to power-of-2 multiples of word size
- 2. Instructions may appear multiple times in multiple dynamic traces due to different branch outcomes

4: Increasing Cache Bandwidth by Pipelining
• Pipeline cache access to maintain bandwidth, at the cost of higher latency
• Instruction cache access pipeline stages:
  – 1: Pentium
  – 2: Pentium Pro through Pentium III
  – 4: Pentium 4
- Greater penalty on mispredicted branches
- More clock cycles between the issue of a load and the use of its data

5. Increasing Cache Bandwidth: Non-Blocking Caches
• A non-blocking cache (or lockup-free cache) allows the data cache to continue to supply cache hits during a miss
  – Requires F/E bits on registers or out-of-order execution
  – Requires multi-bank memories
• “Hit under miss” reduces the effective miss penalty by working during a miss vs. ignoring CPU requests
• “Hit under multiple miss” or “miss under miss” may further lower the effective miss penalty by overlapping multiple misses
  – Significantly increases the complexity of the cache controller, as there can be multiple outstanding memory accesses
  – Requires multiple memory banks (otherwise it cannot be supported)
  – Pentium Pro allows 4 outstanding memory misses
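
The slide does not say how the controller tracks outstanding misses; the usual structure is a small file of miss status holding registers (MSHRs), swapped in here as an illustration. A minimal sketch, assuming 4 registers (matching the Pentium Pro's 4 outstanding misses) and hypothetical method names; hits served while misses are pending are not modeled:

```python
class MSHRFile:
    """Miss status holding registers: one entry per outstanding block miss."""

    def __init__(self, num_mshrs=4):
        self.num_mshrs = num_mshrs
        self.pending = {}   # block address -> ids of requests waiting on it

    def on_miss(self, block, req_id):
        if block in self.pending:
            self.pending[block].append(req_id)  # secondary miss: no new memory request
            return "merged"
        if len(self.pending) == self.num_mshrs:
            return "stall"                      # every MSHR busy: the cache blocks
        self.pending[block] = [req_id]          # primary miss: request goes to memory
        return "issued"

    def on_fill(self, block):
        """Block returned from memory: free its MSHR and wake its requests."""
        return self.pending.pop(block)


m = MSHRFile()
print([m.on_miss(b, r) for b, r in [(1, "a"), (2, "b"), (1, "c")]])
```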

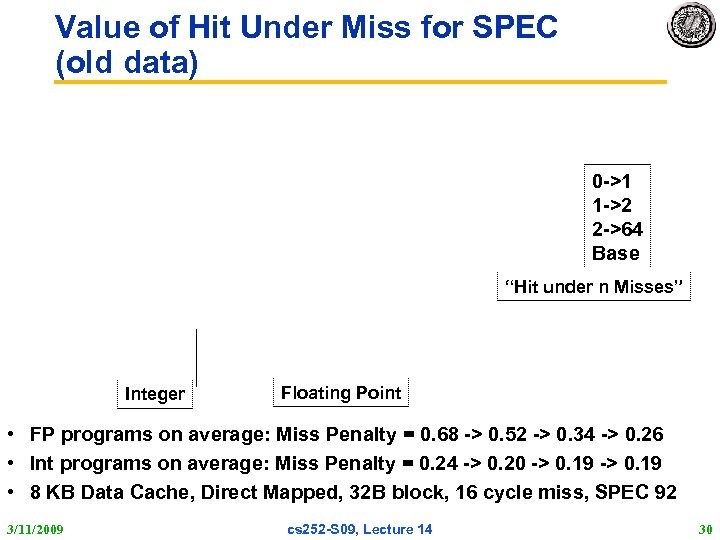

Value of Hit Under Miss for SPEC (old data)
[Figure: “Hit under n Misses” for Integer and Floating Point benchmarks; legend: Base, 0->1, 1->2, 2->64]
• FP programs on average: Miss Penalty = 0.68 -> 0.52 -> 0.34 -> 0.26
• Int programs on average: Miss Penalty = 0.24 -> 0.20 -> 0.19
• 8 KB data cache, direct mapped, 32 B blocks, 16-cycle miss, SPEC92

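Here is a quick reading of the averages above. It assumes each sequence runs from the blocking baseline toward more outstanding misses, as the legend order suggests. FP is compared first-to-last, and Int is compared between its first and last reported values:

```latex
\text{FP: } 1 - \frac{0.26}{0.68} \approx 1 - 0.38 = 0.62 \;(\approx 62\%\ \text{lower}), \qquad
\text{Int: } 1 - \frac{0.19}{0.24} \approx 1 - 0.79 = 0.21 \;(\approx 21\%\ \text{lower})
```

For FP, the first two steps alone (0.68 -> 0.34) already halve the value. Integer programs gain much less.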

6: Increasing Cache Bandwidth via Multiple Banks
• Rather than treating the cache as a single monolithic block, divide it into independent banks that can support simultaneous accesses
 – E.g., the T1 (“Niagara”) L2 has 4 banks
• Banking works best when accesses naturally spread themselves across banks, so the mapping of addresses to banks affects the behavior of the memory system
• A simple mapping that works well is “sequential interleaving”
 – Spread block addresses sequentially across banks
 – E.g., if there are 4 banks, bank 0 has all blocks whose address modulo 4 is 0; bank 1 has all blocks whose address modulo 4 is 1; …

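Sequential interleaving can be sketched as a block-address modulo. The 64-byte block size and the function names are illustrative assumptions. The slide fixes only the modulo-4 example.

```c
#include <stdint.h>

/* Assumed block size for illustration; the slide does not specify one. */
#define BLOCK_BYTES 64u

/* Block address = byte address with the block-offset bits dropped. */
static uint64_t block_address(uint64_t byte_addr) {
    return byte_addr / BLOCK_BYTES;
}

/* Sequential interleaving: bank = block address modulo number of banks. */
static unsigned bank_of(uint64_t byte_addr, unsigned num_banks) {
    return (unsigned)(block_address(byte_addr) % num_banks);
}
```

When the bank count is a power of two, the modulo just selects the low-order bits of the block address. Consecutive blocks therefore land in consecutive banks, and a sequential stream keeps every bank busy.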

7. Reduce Miss Penalty: Early Restart and Critical Word First
• Don’t wait for the full block to arrive before restarting the CPU
• Early restart: as soon as the requested word of the block arrives, send it to the CPU and let the CPU continue execution
 – Because of spatial locality, the CPU tends to want the next sequential word soon, so the size of the benefit of early restart alone is not clear
• Critical word first: request the missed word first from memory and send it to the CPU as soon as it arrives; let the CPU continue execution while filling the rest of the words in the block
 – Long blocks are more popular today, so critical word first is widely used

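Critical word first amounts to a wrapped fill order: start at the requested word and wrap around to the beginning of the block. This is a minimal sketch in which the block is an array of word indices. The function name is hypothetical.

```c
#include <stddef.h>

/* Fills order[0..words_per_block-1] with the word indices of one block in
 * critical-word-first ("wrapped") order: the requested word first, then the
 * following words, wrapping around to word 0. The CPU can restart as soon
 * as order[0] has arrived. */
static void critical_word_first_order(size_t words_per_block, size_t critical_word,
                                      size_t *order) {
    for (size_t k = 0; k < words_per_block; k++)
        order[k] = (critical_word + k) % words_per_block;
}
```

With early restart alone, the fill order stays 0, 1, 2, …. The CPU then waits for `critical_word + 1` transfers instead of one.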

8. Merging Write Buffer to Reduce Miss Penalty
• A write buffer allows the processor to continue while waiting for writes to memory
• If the buffer contains modified blocks, the addresses can be checked to see if the address of new data matches the address of a valid write buffer entry
• If so, the new data are combined with that entry
• For a write-through cache, this increases the effective size of writes to sequential words or bytes, since multiword writes are more efficient to memory
• The Sun T1 (Niagara) processor, among many others, uses write merging

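This is a minimal sketch of write merging. It assumes a hypothetical 4-entry buffer of 32-byte blocks, each with a per-byte valid mask. Draining entries to memory is left out. A write whose block address matches a valid entry is merged into that entry instead of taking a new one.

```c
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical sizes for illustration. */
#define WB_ENTRIES 4
#define WB_BLOCK_BYTES 32u

typedef struct {
    bool     valid;
    uint64_t block_addr;              /* byte address / WB_BLOCK_BYTES */
    uint8_t  data[WB_BLOCK_BYTES];
    uint32_t byte_mask;               /* bit b set => data[b] holds pending write data */
} wb_entry;

typedef struct { wb_entry e[WB_ENTRIES]; } write_buffer;

/* Accepts a write of `len` bytes at `addr` (assumed not to cross a block).
 * Returns false only if no entry matches and none is free (processor stalls). */
static bool wb_write(write_buffer *wb, uint64_t addr, const void *src, unsigned len) {
    uint64_t blk = addr / WB_BLOCK_BYTES;
    unsigned off = (unsigned)(addr % WB_BLOCK_BYTES);
    wb_entry *target = NULL, *free_slot = NULL;
    for (int i = 0; i < WB_ENTRIES; i++) {
        if (wb->e[i].valid && wb->e[i].block_addr == blk) { target = &wb->e[i]; break; }
        if (!wb->e[i].valid && free_slot == NULL) free_slot = &wb->e[i];
    }
    if (target == NULL) {             /* no address match: allocate a new entry */
        if (free_slot == NULL) return false;
        target = free_slot;
        target->valid = true;
        target->block_addr = blk;
        target->byte_mask = 0;
    }
    memcpy(target->data + off, src, len);           /* merge the new bytes */
    for (unsigned b = off; b < off + len; b++) target->byte_mask |= 1u << b;
    return true;
}

static int wb_valid_entries(const write_buffer *wb) {
    int n = 0;
    for (int i = 0; i < WB_ENTRIES; i++) n += wb->e[i].valid;
    return n;
}
```

Two 4-byte writes to 0x100 and 0x104 fall in the same 32-byte block. They therefore share one entry, which can later go to memory as a single multiword write.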

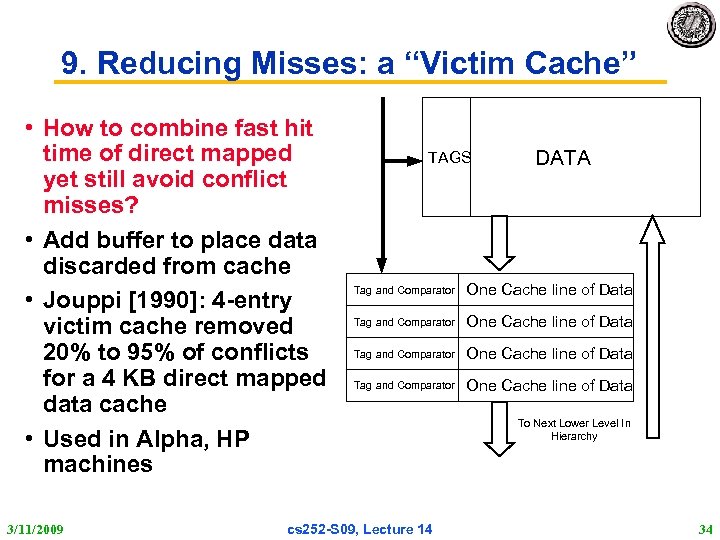

9. Reducing Misses: a “Victim Cache”
• How can we combine the fast hit time of a direct-mapped cache with avoiding conflict misses?
• Add a buffer to hold data discarded from the cache
• Jouppi [1990]: a 4-entry victim cache removed 20% to 95% of conflict misses for a 4 KB direct-mapped data cache
• Used in Alpha and HP machines
[Figure: direct-mapped cache (TAGS, DATA) plus a small buffer whose entries each hold a tag and comparator and one cache line of data; connects to the next lower level in the hierarchy]

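This toy model shows why a victim cache removes conflict misses. It is a direct-mapped cache backed by a small fully associative victim buffer that holds lines recently evicted from the cache. Only tags are tracked, not data. The sizes, FIFO replacement and names are hypothetical choices made to keep conflicts easy to see.

```c
#include <stdbool.h>
#include <stdint.h>

#define NSETS   4   /* direct-mapped cache: one block per set */
#define NVICTIM 4   /* fully associative victim buffer */

typedef struct { bool valid; uint64_t block; } line_t;

typedef struct {
    line_t dm[NSETS];
    line_t victim[NVICTIM];
    int    next_victim;   /* FIFO replacement pointer for the victim buffer */
} cache_t;

typedef enum { HIT_DM, HIT_VICTIM, MISS } outcome_t;

static outcome_t cache_access(cache_t *c, uint64_t block) {
    line_t *slot = &c->dm[block % NSETS];
    if (slot->valid && slot->block == block) return HIT_DM;

    line_t evicted = *slot;                       /* line about to be displaced */
    for (int i = 0; i < NVICTIM; i++) {
        if (c->victim[i].valid && c->victim[i].block == block) {
            c->victim[i] = evicted;               /* swap the two lines */
            slot->valid = true;
            slot->block = block;
            return HIT_VICTIM;
        }
    }
    /* True miss: fetch from the next level; the displaced line becomes a victim. */
    if (evicted.valid) {
        c->victim[c->next_victim] = evicted;
        c->next_victim = (c->next_victim + 1) % NVICTIM;
    }
    slot->valid = true;
    slot->block = block;
    return MISS;
}
```

Blocks 0 and 4 map to the same set. Without the victim buffer, alternating between them would miss every time. With it, only the first access to each block misses, and the ping-pong is served by swaps.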

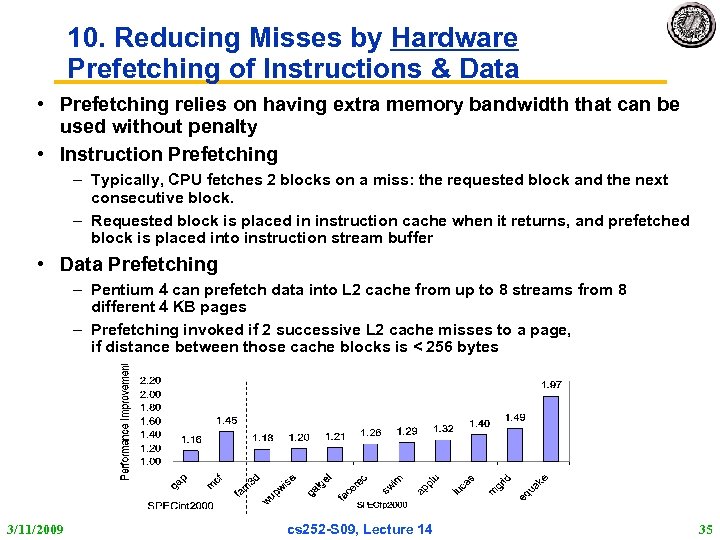

10. Reducing Misses by Hardware Prefetching of Instructions & Data
• Prefetching relies on having extra memory bandwidth that can be used without penalty
• Instruction prefetching
 – Typically, the CPU fetches 2 blocks on a miss: the requested block and the next consecutive block
 – The requested block is placed in the instruction cache when it returns, and the prefetched block is placed in an instruction stream buffer
• Data prefetching
 – The Pentium 4 can prefetch data into the L2 cache from up to 8 streams from 8 different 4 KB pages
 – Prefetching is invoked if there are 2 successive L2 cache misses to a page and the distance between those cache blocks is < 256 bytes

10. Reducing Misses by Hardware Prefetching of Instructions & Data • Prefetching relies on having extra memory bandwidth that can be used without penalty • Instruction Prefetching – Typically, CPU fetches 2 blocks on a miss: the requested block and the next consecutive block. – Requested block is placed in instruction cache when it returns, and prefetched block is placed into instruction stream buffer • Data Prefetching – Pentium 4 can prefetch data into L 2 cache from up to 8 streams from 8 different 4 KB pages – Prefetching invoked if 2 successive L 2 cache misses to a page, if distance between those cache blocks is < 256 bytes 3/11/2009 cs 252 -S 09, Lecture 14 35

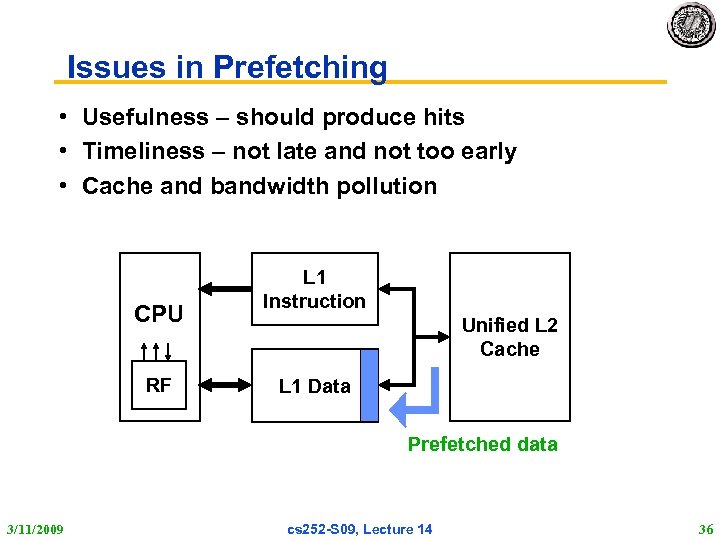

Issues in Prefetching
• Usefulness: prefetches should produce hits
• Timeliness: not late and not too early
• Cache and bandwidth pollution
[Figure: CPU, RF, L1 Instruction, L1 Data, Unified L2 Cache, with a “Prefetched data” path]

Issues in Prefetching • Usefulness – should produce hits • Timeliness – not late and not too early • Cache and bandwidth pollution CPU RF L 1 Instruction Unified L 2 Cache L 1 Data Prefetched data 3/11/2009 cs 252 -S 09, Lecture 14 36

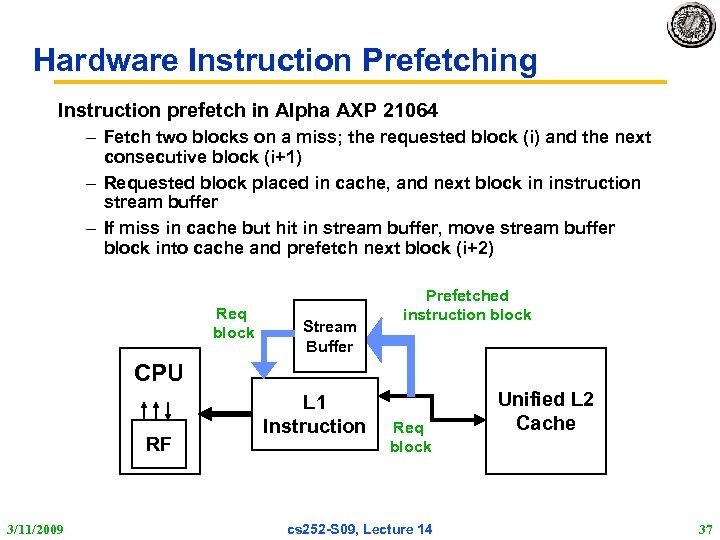

Hardware Instruction Prefetching
• Instruction prefetch in the Alpha AXP 21064
 – Fetch two blocks on a miss: the requested block (i) and the next consecutive block (i+1)
 – The requested block is placed in the cache, and the next block in the instruction stream buffer
 – On a miss in the cache that hits in the stream buffer, move the stream buffer block into the cache and prefetch the next block (i+2)
[Figure: CPU, RF, L1 Instruction cache, Stream Buffer, Unified L2 Cache; labels: Req block, Prefetched instruction block]

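The 21064-style policy can be sketched with a one-entry stream buffer in front of a tiny direct-mapped instruction cache. This is a toy model under assumed sizes and hypothetical names, not the Alpha's actual design. It counts requests sent to L2 so the prefetches are visible.

```c
#include <stdbool.h>
#include <stdint.h>

#define ICACHE_SETS 8   /* hypothetical direct-mapped I-cache, one block per set */

typedef struct {
    bool     valid[ICACHE_SETS];
    uint64_t tag_block[ICACHE_SETS];
    bool     sb_valid;      /* one-entry stream buffer */
    uint64_t sb_block;
    int      l2_requests;   /* demand fetches plus prefetches sent to L2 */
} ifetch_t;

static void icache_fill(ifetch_t *f, uint64_t b) {
    f->valid[b % ICACHE_SETS] = true;
    f->tag_block[b % ICACHE_SETS] = b;
}

static void prefetch_into_sb(ifetch_t *f, uint64_t b) {
    f->sb_valid = true;
    f->sb_block = b;
    f->l2_requests++;
}

/* Returns 0 = I-cache hit, 1 = stream-buffer hit, 2 = miss served by L2. */
static int ifetch(ifetch_t *f, uint64_t b) {
    unsigned s = (unsigned)(b % ICACHE_SETS);
    if (f->valid[s] && f->tag_block[s] == b) return 0;
    if (f->sb_valid && f->sb_block == b) {   /* miss in cache, hit in stream buffer */
        icache_fill(f, b);                   /* move block into the cache */
        prefetch_into_sb(f, b + 1);          /* e.g. block i+2 when b was i+1 */
        return 1;
    }
    f->l2_requests++;                        /* demand fetch of requested block i */
    icache_fill(f, b);
    prefetch_into_sb(f, b + 1);              /* prefetch block i+1 */
    return 2;
}
```

For straight-line code (blocks 10, 11, 12, …), only the first fetch goes to L2 as a demand miss. Each later block is already waiting in the stream buffer.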

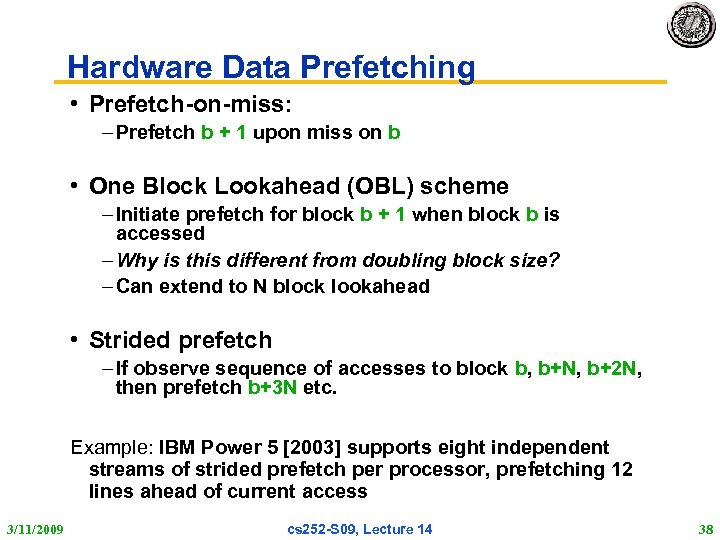

Hardware Data Prefetching
• Prefetch-on-miss:
 – Prefetch b + 1 upon a miss on b
• One Block Lookahead (OBL) scheme
 – Initiate a prefetch for block b + 1 when block b is accessed
 – Why is this different from doubling the block size?
 – Can extend to N-block lookahead
• Strided prefetch
 – If we observe a sequence of accesses to blocks b, b+N, b+2N, then prefetch b+3N, etc.
• Example: the IBM Power5 [2003] supports eight independent streams of strided prefetch per processor, prefetching 12 lines ahead of the current access

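The strided rule above (see b, b+N, b+2N, then prefetch b+3N) can be sketched as a single-stream stride detector. It is hypothetical: real prefetchers such as the Power5's track several streams at once, and the name `stride_observe` is illustrative. A prefetch is issued only once the same nonzero stride has been seen twice in a row.

```c
#include <stdbool.h>
#include <stdint.h>

typedef struct {
    bool    have_last, have_stride;
    int64_t last_block;
    int64_t stride;
} stride_pf;

/* Records an access to block `blk`. Returns true and sets *prefetch_blk
 * when the stride is confirmed (two equal consecutive strides). */
static bool stride_observe(stride_pf *p, int64_t blk, int64_t *prefetch_blk) {
    bool issue = false;
    if (p->have_last) {
        int64_t s = blk - p->last_block;
        if (p->have_stride && s == p->stride && s != 0) {
            *prefetch_blk = blk + s;     /* b, b+N, b+2N seen: prefetch b+3N */
            issue = true;
        }
        p->stride = s;                   /* remember the latest stride */
        p->have_stride = true;
    }
    p->last_block = blk;
    p->have_last = true;
    return issue;
}
```

Lookahead deeper than one stride, such as the Power5's 12 lines, would prefetch `blk + k*s` for several `k` instead of just `blk + s`.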

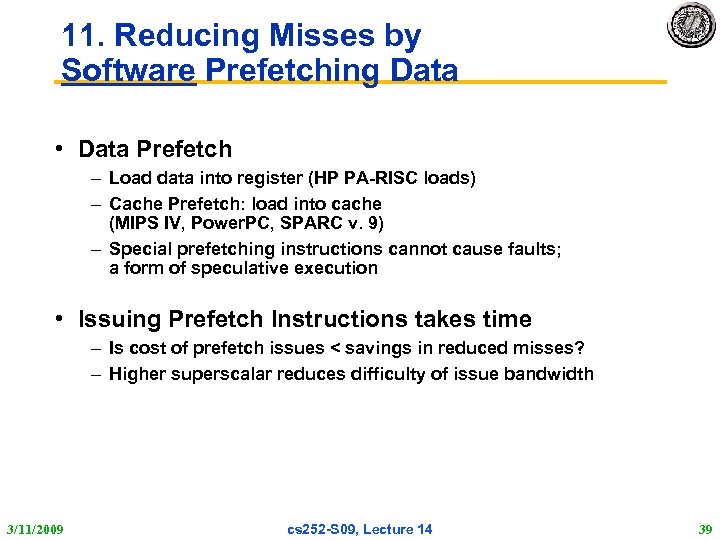

11. Reducing Misses by Software Prefetching Data
• Data prefetch
 – Register prefetch: load data into a register (HP PA-RISC loads)
 – Cache prefetch: load into the cache (MIPS IV, PowerPC, SPARC v9)
 – Special prefetching instructions cannot cause faults; a form of speculative execution
• Issuing prefetch instructions takes time
 – Is the cost of issuing prefetches < the savings in reduced misses?
 – Wider superscalar issue makes it easier to find issue bandwidth for the extra prefetch instructions

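A cache prefetch can be expressed in C with the GCC/Clang builtin `__builtin_prefetch(addr, rw, locality)`, where `rw` 0 means read and `locality` ranges from 0 to 3. `PREFETCH_DIST` is a hypothetical tuning knob. It should be large enough to cover memory latency but small enough that the line is not evicted before use, which is the timeliness issue from the previous slide. The loop is kept simple for illustration. A sequential scan like this is usually caught by hardware prefetchers anyway, so software prefetch tends to pay off more on irregular access patterns.

```c
#include <stddef.h>

/* Hypothetical prefetch distance, in array elements. */
#define PREFETCH_DIST 16

/* Sums a[0..n-1], prefetching PREFETCH_DIST elements ahead of the current one. */
static long sum_with_prefetch(const int *a, size_t n) {
    long sum = 0;
    for (size_t i = 0; i < n; i++) {
        if (i + PREFETCH_DIST < n)                    /* never form an out-of-bounds address */
            __builtin_prefetch(&a[i + PREFETCH_DIST], 0, 3);  /* read, keep in cache */
        sum += a[i];
    }
    return sum;
}
```

The prefetch is only a hint and cannot fault, so the result is the same with or without it. Each call also costs an issue slot, which is the cost-versus-savings question above.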

12. Reducing Misses by Compiler Optimizations
• McFarling [1989] reduced cache misses by 75% on an 8 KB direct-mapped cache with 4-byte blocks, in software
• Instructions
 – Reorder procedures in memory so as to reduce conflict misses
 – Profiling to look at conflicts (using tools they developed)
• Data
 – Merging Arrays: improve spatial locality with a single array of compound elements vs. 2 arrays
 – Loop Interchange: change the nesting of loops to access data in the order stored in memory
 – Loop Fusion: combine 2 independent loops that have the same looping and some variables overlap
 – Blocking: improve temporal locality by accessing “blocks” of data repeatedly vs. going down whole columns or rows

Merging Arrays Example
```c
#define SIZE 1024   /* example value; the slide leaves SIZE unspecified */

/* Before: 2 sequential arrays */
int val[SIZE];
int key[SIZE];

/* After: 1 array of structures */
struct merge {
    int val;
    int key;
};
struct merge merged_array[SIZE];
```
Reduces conflicts between val & key; improves spatial locality

Merging Arrays Example /* Before: 2 sequential arrays */ int val[SIZE]; int key[SIZE]; /* After: 1 array of stuctures */ struct merge { int val; int key; }; struct merged_array[SIZE]; Reducing conflicts between val & key; improve spatial locality 3/11/2009 cs 252 -S 09, Lecture 14 41

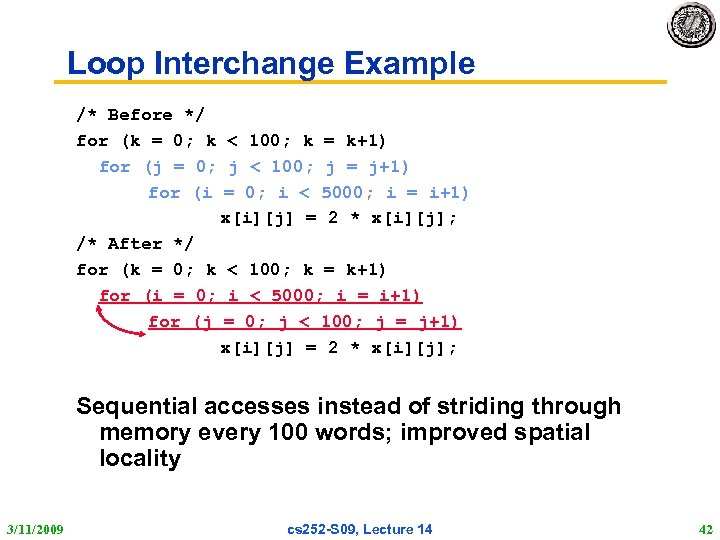

Loop Interchange Example
```c
/* Assumes: int x[5000][100]; int i, j, k;  (C stores x row by row) */
/* Before */
for (k = 0; k < 100; k = k+1)
    for (j = 0; j < 100; j = j+1)
        for (i = 0; i < 5000; i = i+1)
            x[i][j] = 2 * x[i][j];
/* After */
for (k = 0; k < 100; k = k+1)
    for (i = 0; i < 5000; i = i+1)
        for (j = 0; j < 100; j = j+1)
            x[i][j] = 2 * x[i][j];
```
Sequential accesses instead of striding through memory every 100 words; improved spatial locality

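To put a number on “striding through memory every 100 words”, this model counts how often consecutive accesses move to a different cache line in each loop order. It assumes 4-byte words, 64-byte lines, row-major layout and a line-aligned array, all of which are hypothetical parameters. It is a locality proxy rather than a cache simulator: capacity is ignored.

```c
#include <stddef.h>

/* Hypothetical model parameters matching x[5000][100] on the slide. */
#define ROWS 5000
#define COLS 100
#define ELEM_BYTES 4
#define LINE_BYTES 64

/* Cache line holding x[i][j]: row-major byte offset divided by line size. */
static size_t line_of(size_t i, size_t j) {
    return ((i * COLS + j) * ELEM_BYTES) / LINE_BYTES;
}

/* Number of consecutive-access pairs that land on different lines during
 * one sweep of the inner two loops.
 * j_inner = 1: "After" order (j innermost); 0: "Before" order (i innermost). */
static size_t line_changes(int j_inner) {
    size_t changes = 0, prev = 0;
    int first = 1;
    size_t n_outer = j_inner ? ROWS : COLS;
    size_t n_inner = j_inner ? COLS : ROWS;
    for (size_t o = 0; o < n_outer; o++)
        for (size_t in = 0; in < n_inner; in++) {
            size_t line = j_inner ? line_of(o, in) : line_of(in, o);
            if (!first && line != prev) changes++;
            prev = line;
            first = 0;
        }
    return changes;
}
```

Under these assumptions, the interchanged loop changes lines 31,249 times per sweep, about once every 16 accesses. The original order changes lines on every one of its 500,000 accesses, because each step jumps 400 bytes.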

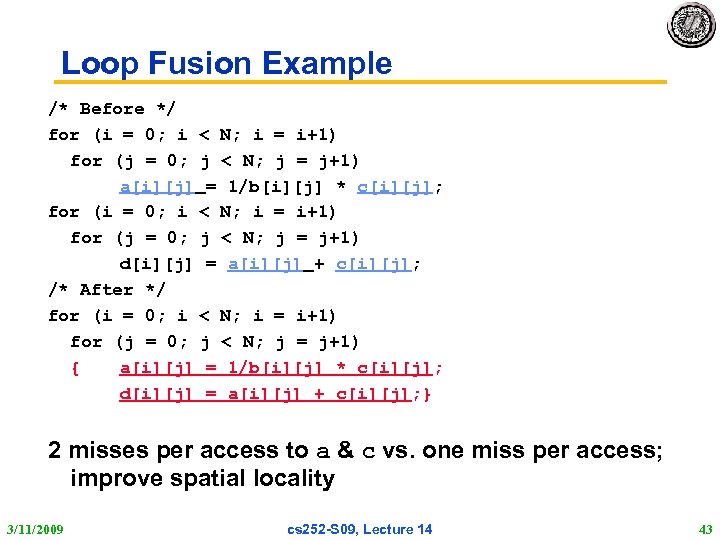

Loop Fusion Example
```c
/* Assumes: double a[N][N], b[N][N], c[N][N], d[N][N]; int i, j; */
/* Before */
for (i = 0; i < N; i = i+1)
    for (j = 0; j < N; j = j+1)
        a[i][j] = 1/b[i][j] * c[i][j];
for (i = 0; i < N; i = i+1)
    for (j = 0; j < N; j = j+1)
        d[i][j] = a[i][j] + c[i][j];
/* After */
for (i = 0; i < N; i = i+1)
    for (j = 0; j < N; j = j+1) {
        a[i][j] = 1/b[i][j] * c[i][j];
        d[i][j] = a[i][j] + c[i][j];
    }
```
2 misses per access to a & c vs. one miss per access; improves temporal locality (the second use of a[i][j] and c[i][j] hits while the data is still in the cache)

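Fusion is only legal when it preserves results. This runnable version checks that the fused loop computes exactly what the two separate loops compute. It assumes `double` arrays and a hypothetical size `N = 64`.

```c
#include <stddef.h>

#define N 64   /* hypothetical size for the demo */

/* Before: two passes; a and c are read again in the second pass,
 * after they may have been evicted. */
static void unfused(double a[N][N], double b[N][N], double c[N][N], double d[N][N]) {
    for (size_t i = 0; i < N; i++)
        for (size_t j = 0; j < N; j++)
            a[i][j] = 1.0 / b[i][j] * c[i][j];
    for (size_t i = 0; i < N; i++)
        for (size_t j = 0; j < N; j++)
            d[i][j] = a[i][j] + c[i][j];
}

/* After: one pass; a[i][j] and c[i][j] are reused while still cached. */
static void fused(double a[N][N], double b[N][N], double c[N][N], double d[N][N]) {
    for (size_t i = 0; i < N; i++)
        for (size_t j = 0; j < N; j++) {
            a[i][j] = 1.0 / b[i][j] * c[i][j];
            d[i][j] = a[i][j] + c[i][j];
        }
}
```

Each element goes through the same operations in the same order in both versions. The outputs are therefore identical, and only the memory traffic differs.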

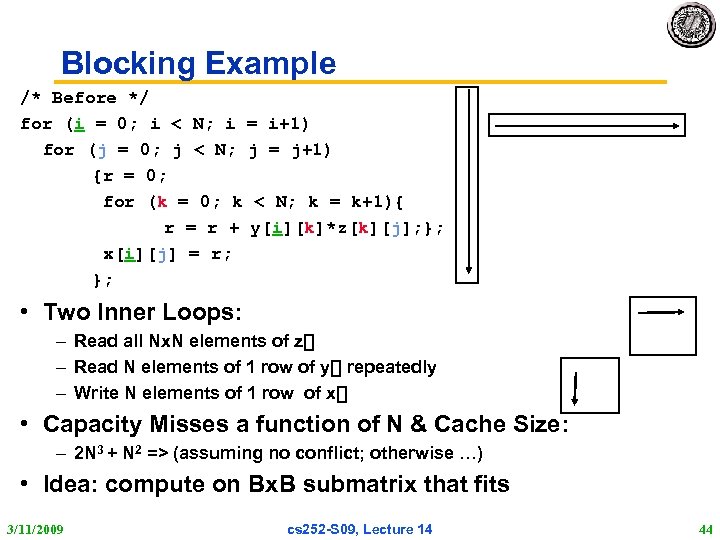

Blocking Example
```c
/* Assumes: double x[N][N], y[N][N], z[N][N], r; int i, j, k; */
/* Before */
for (i = 0; i < N; i = i+1)
    for (j = 0; j < N; j = j+1) {
        r = 0;
        for (k = 0; k < N; k = k+1) {
            r = r + y[i][k]*z[k][j];
        }
        x[i][j] = r;
    }
```
• Two inner loops:
 – Read all N×N elements of z[]
 – Read N elements of 1 row of y[] repeatedly
 – Write N elements of 1 row of x[]
• Capacity misses are a function of N and cache size:
 – $2N^3 + N^2$ => (assuming no conflict; otherwise …)
• Idea: compute on a B×B submatrix that fits in the cache

Blocking Example /* Before */ for (i = 0; i < N; i = i+1) for (j = 0; j < N; j = j+1) {r = 0; for (k = 0; k < N; k = k+1){ r = r + y[i][k]*z[k][j]; }; x[i][j] = r; }; • Two Inner Loops: – Read all Nx. N elements of z[] – Read N elements of 1 row of y[] repeatedly – Write N elements of 1 row of x[] • Capacity Misses a function of N & Cache Size: – 2 N 3 + N 2 => (assuming no conflict; otherwise …) • Idea: compute on Bx. B submatrix that fits 3/11/2009 cs 252 -S 09, Lecture 14 44

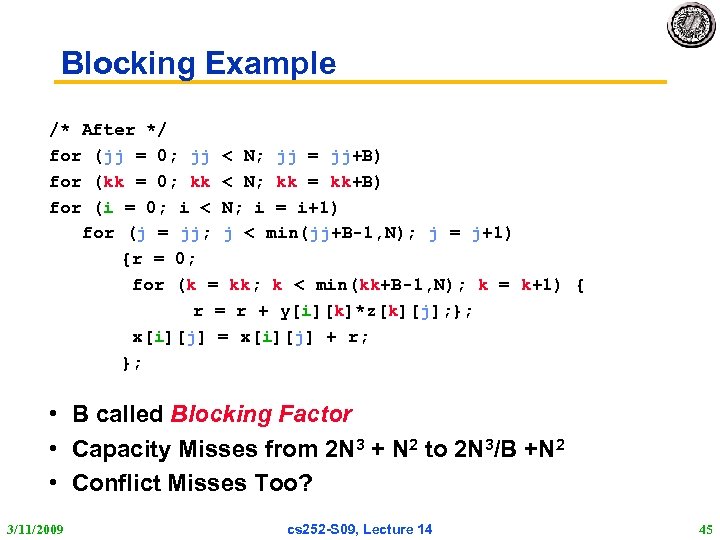

Blocking Example
```c
/* Assumes: double x[N][N] (zeroed beforehand), y[N][N], z[N][N], r;
 * int i, j, k, jj, kk; min(a, b) is a helper returning the smaller value. */
/* After */
for (jj = 0; jj < N; jj = jj+B)
    for (kk = 0; kk < N; kk = kk+B)
        for (i = 0; i < N; i = i+1)
            for (j = jj; j < min(jj+B, N); j = j+1) {
                r = 0;
                for (k = kk; k < min(kk+B, N); k = k+1) {
                    r = r + y[i][k]*z[k][j];
                }
                x[i][j] = x[i][j] + r;   /* accumulate partial sums across kk blocks */
            }
```
• B is called the blocking factor
• Capacity misses drop from $2N^3 + N^2$ to $2N^3/B + N^2$
• Conflict misses too?

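This is a runnable version of the blocked loop nest, checked against the unblocked one. It assumes row-major `n × n` arrays of doubles and uses a local `MIN` macro in place of the slide's `min`. The test uses small integer values so that both summation orders give bit-identical results.

```c
#include <stddef.h>
#include <string.h>

#define MIN(a, b) ((a) < (b) ? (a) : (b))

/* Unblocked x = y * z. */
static void matmul_naive(size_t n, const double *y, const double *z, double *x) {
    for (size_t i = 0; i < n; i++)
        for (size_t j = 0; j < n; j++) {
            double r = 0;
            for (size_t k = 0; k < n; k++) r += y[i*n + k] * z[k*n + j];
            x[i*n + j] = r;
        }
}

/* Blocked x = y * z with blocking factor B; works when B does not divide n.
 * x is zeroed first because each (jj, kk) tile adds a partial sum. */
static void matmul_blocked(size_t n, size_t B, const double *y, const double *z, double *x) {
    memset(x, 0, n * n * sizeof *x);
    for (size_t jj = 0; jj < n; jj += B)
        for (size_t kk = 0; kk < n; kk += B)
            for (size_t i = 0; i < n; i++)
                for (size_t j = jj; j < MIN(jj + B, n); j++) {
                    double r = 0;
                    for (size_t k = kk; k < MIN(kk + B, n); k++) r += y[i*n + k] * z[k*n + j];
                    x[i*n + j] += r;
                }
}
```

With a B×B tile of `z` reused across all `n` rows of `y`, the working set per tile is roughly `B*B` elements of `z` plus a `B`-wide strip of `y` and `x`. B is chosen so that this fits in the cache.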

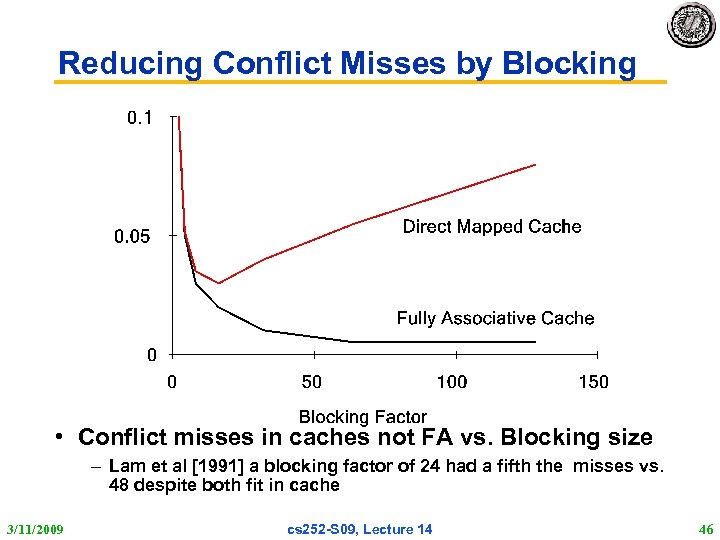

Reducing Conflict Misses by Blocking
• Conflict misses in caches that are not fully associative depend on the blocking size
 – Lam et al. [1991]: a blocking factor of 24 had one-fifth the misses of a blocking factor of 48, even though both fit in the cache

Summary of Compiler Optimizations to Reduce Cache Misses (by hand)

Impact of Hierarchy on Algorithms
• Today, CPU time is a function of (ops, cache misses)
• What does this mean for compilers, data structures, and algorithms?
 – Quicksort: the fastest comparison-based sorting algorithm when keys fit in memory
 – Radix sort: also called a “linear time” sort. For keys of fixed length and fixed radix, a constant number of passes over the data is sufficient, independent of the number of keys
• “The Influence of Caches on the Performance of Sorting” by A. LaMarca and R. E. Ladner. Proceedings of the Eighth Annual ACM-SIAM Symposium on Discrete Algorithms, January 1997, 370-379.
 – For an AlphaStation 250, 32-byte blocks, direct-mapped 2 MB L2 cache, 8-byte keys, from 4000 to 4000000 keys

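The “constant number of passes” claim can be made concrete with a least-significant-digit radix sort. This sketch assumes 8-byte unsigned keys and 8-bit digits, which gives exactly 8 passes whatever the number of keys. Each pass is a stable counting sort on one byte. It is an illustration, not the implementation measured by LaMarca and Ladner.

```c
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* LSD radix sort of n 64-bit keys, one byte per pass (8 passes).
 * Returns 0 on success, -1 if the scratch buffer cannot be allocated. */
static int radix_sort_u64(uint64_t *keys, size_t n) {
    if (n < 2) return 0;
    uint64_t *tmp = malloc(n * sizeof *tmp);
    if (tmp == NULL) return -1;
    for (unsigned shift = 0; shift < 64; shift += 8) {
        size_t count[257] = {0};
        for (size_t i = 0; i < n; i++)                 /* histogram of this digit */
            count[((keys[i] >> shift) & 0xFF) + 1]++;
        for (int d = 0; d < 256; d++)                  /* prefix sums = start offsets */
            count[d + 1] += count[d];
        for (size_t i = 0; i < n; i++)                 /* stable scatter into 256 buckets */
            tmp[count[(keys[i] >> shift) & 0xFF]++] = keys[i];
        memcpy(keys, tmp, n * sizeof *keys);
    }
    free(tmp);
    return 0;
}
```

The instruction count per key is fixed. However, each pass scatters writes across 256 destinations, so for large inputs the cache-miss behavior, and not the pass count, can dominate. The comparison on the following slides looks at exactly this trade-off.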

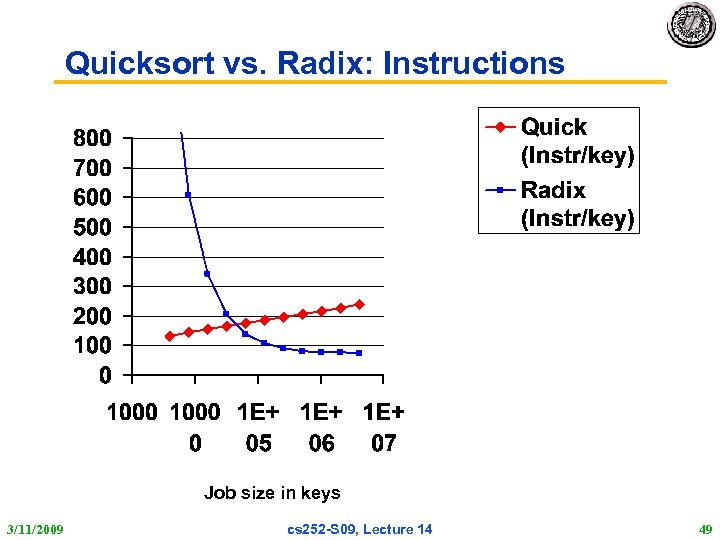

Quicksort vs. Radix: Instructions
[Figure: x-axis: job size in keys]

Quicksort vs. Radix: Instructions Job size in keys 3/11/2009 cs 252 -S 09, Lecture 14 49

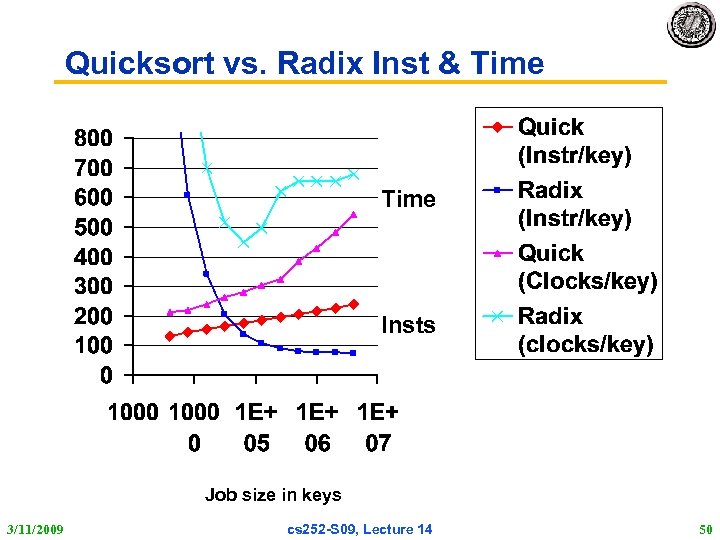

Quicksort vs. Radix: Instructions & Time
[Figure: instructions and time; x-axis: job size in keys]

Quicksort vs. Radix: Cache misses
[Figure: x-axis: job size in keys]

Quicksort vs. Radix: Cache misses Job size in keys 3/11/2009 cs 252 -S 09, Lecture 14 51

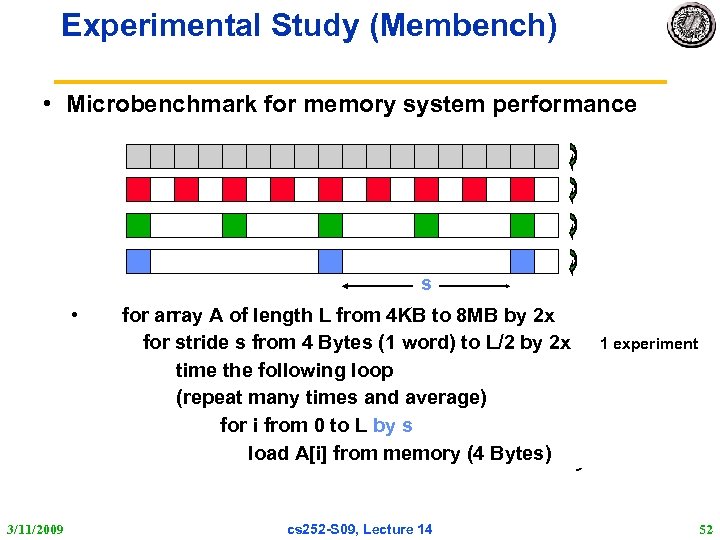

Experimental Study (Membench)
• Microbenchmark for memory system performance
```
for array A of length L from 4 KB to 8 MB by 2x
    for stride s from 4 Bytes (1 word) to L/2 by 2x
        time the following loop (repeat many times and average)    <- 1 experiment
            for i from 0 to L by s
                load A[i] from memory (4 Bytes)
```

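This is a C sketch of the membench loop. It makes several assumptions: 4-byte `int` as the word, wall-clock timing via C11 `timespec_get` (a careful benchmark would prefer a monotonic, high-resolution clock), and roughly 1M loads per experiment, which is a hypothetical choice. The `volatile` sink keeps the compiler from deleting the loads. Loop overhead is included in the measured time.

```c
#include <stddef.h>
#include <stdio.h>
#include <stdlib.h>
#include <time.h>

static double elapsed_ns(const struct timespec *t0, const struct timespec *t1) {
    return (double)(t1->tv_sec - t0->tv_sec) * 1e9 + (double)(t1->tv_nsec - t0->tv_nsec);
}

/* One experiment: average ns per 4-byte load when walking the first
 * len_bytes of `a` with stride stride_bytes, repeated `repeats` times. */
static double membench_ns_per_load(const int *a, size_t len_bytes, size_t stride_bytes,
                                   unsigned repeats) {
    size_t n = len_bytes / sizeof(int);
    size_t step = stride_bytes / sizeof(int);
    volatile int sink = 0;
    size_t loads = 0;
    struct timespec t0, t1;
    if (step == 0) step = 1;
    timespec_get(&t0, TIME_UTC);
    for (unsigned r = 0; r < repeats; r++)
        for (size_t i = 0; i < n; i += step) { sink = a[i]; loads++; }
    timespec_get(&t1, TIME_UTC);
    (void)sink;
    return loads ? elapsed_ns(&t0, &t1) / (double)loads : 0.0;
}

/* The sweep from the slide: L = 4 KB .. 8 MB by 2x, s = 4 B .. L/2 by 2x.
 * Prints "L s ns_per_load", one line per experiment. */
void membench_sweep(void) {
    const size_t max_len = (size_t)8 << 20;
    int *a = calloc(max_len / sizeof(int), sizeof(int));
    if (a == NULL) return;
    for (size_t len = (size_t)4 << 10; len <= max_len; len *= 2)
        for (size_t s = sizeof(int); s <= len / 2; s *= 2) {
            unsigned repeats = (unsigned)(1 + ((size_t)1 << 20) / (len / s));
            printf("%zu %zu %.2f\n", len, s, membench_ns_per_load(a, len, s, repeats));
        }
    free(a);
}
```

Plotting one curve per `L` against `s` from the printed triples gives graphs like the ones that follow.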

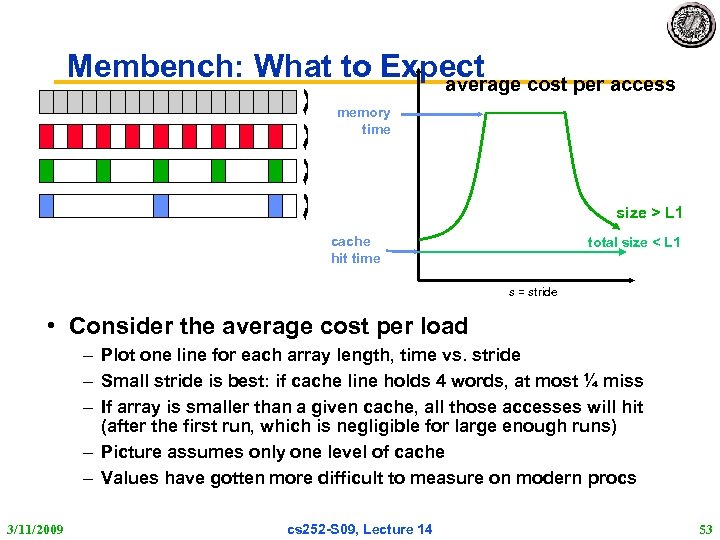

Membench: What to Expect
[Figure: cost per access vs. s = stride; the curve for size > L1 reaches the average memory time, the curve for total size < L1 stays at the cache hit time]
• Consider the average cost per load
 – Plot one line for each array length, time vs. stride
 – Small stride is best: if a cache line holds 4 words, at most ¼ of the accesses miss
 – If the array is smaller than a given cache, all those accesses will hit (after the first run, which is negligible for large enough runs)
 – The picture assumes only one level of cache
 – Values have gotten more difficult to measure on modern processors

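The “at most ¼ miss” rule can be written as a simple one-level model. This is our simplification, matching the picture's single-cache assumption. Let $b$ be the line size in bytes and $s$ the stride, and assume the array is larger than the cache. Each line brought in then serves $b/s$ loads when $s \le b$, and only one load otherwise:

```latex
\text{miss fraction} = \min\!\left(1, \frac{s}{b}\right), \qquad
\bar{t}_{\text{load}} \approx t_{\text{hit}} + \min\!\left(1, \frac{s}{b}\right) t_{\text{miss}}
```

For example, $s = 4$ B with $b = 16$ B (4 words) gives a miss fraction of $1/4$. Once $s \ge b$, every load misses and the curve flattens at the memory time.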

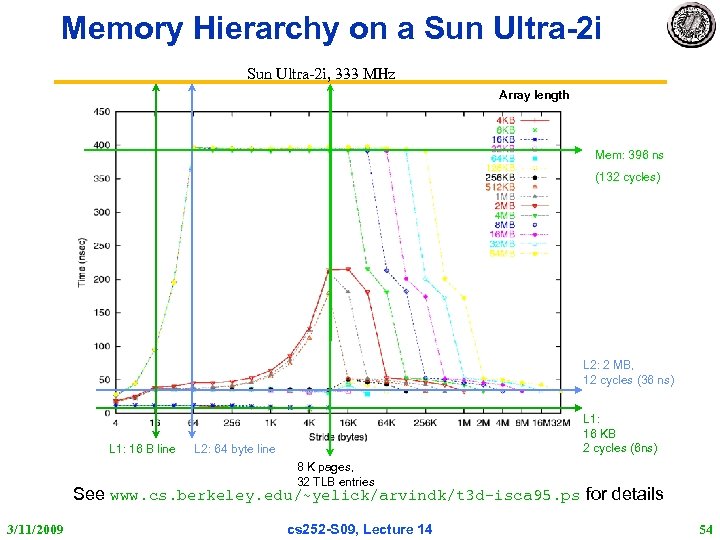

Memory Hierarchy on a Sun Ultra-2i, 333 MHz
[Figure: membench results, one curve per array length]
• L1: 16 KB, 16 B line, 2 cycles (6 ns)
• L2: 2 MB, 64 byte line, 12 cycles (36 ns)
• Mem: 396 ns (132 cycles)
• 8 K pages, 32 TLB entries
See www.cs.berkeley.edu/~yelick/arvindk/t3d-isca95.ps for details

Memory Hierarchy on a Sun Ultra-2 i, 333 MHz Array length Mem: 396 ns (132 cycles) L 2: 2 MB, 12 cycles (36 ns) L 1: 16 B line L 1: 16 KB 2 cycles (6 ns) L 2: 64 byte line 8 K pages, 32 TLB entries See www. cs. berkeley. edu/~yelick/arvindk/t 3 d-isca 95. ps for details 3/11/2009 cs 252 -S 09, Lecture 14 54

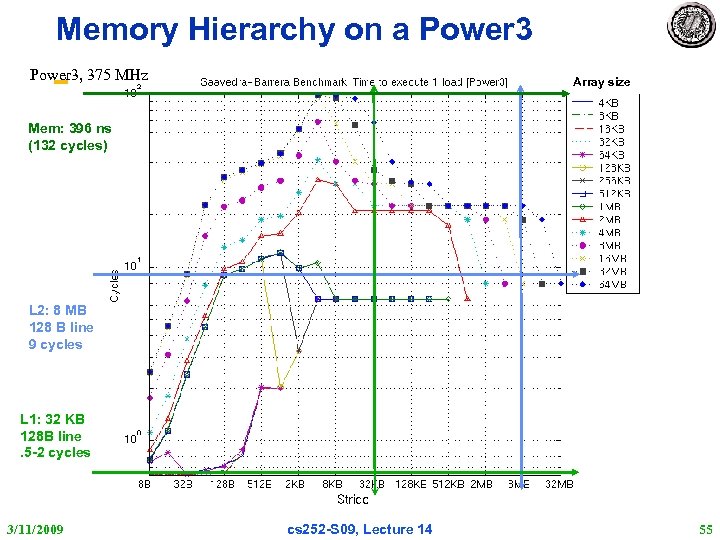

Memory Hierarchy on a Power3, 375 MHz
[Figure: membench results, one curve per array size]
• L1: 32 KB, 128 B line, 0.5-2 cycles
• L2: 8 MB, 128 B line, 9 cycles
• Mem: 396 ns (132 cycles)

Compiler Optimization vs. Memory Hierarchy Search
• The compiler tries to figure out memory hierarchy optimizations
• New approach: “auto-tuners” first run variations of a program on the target computer to find the best combinations of optimizations (blocking, padding, …) and algorithms, then produce C code to be compiled for that computer
• “Auto-tuners” are targeted to a numerical method
 – E.g., PHiPAC (BLAS), ATLAS (BLAS), Sparsity (sparse linear algebra), Spiral (DSP), FFTW

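The auto-tuning idea can be sketched as “time each variant on this machine and keep the fastest”. This toy searches only over the blocking factor of a blocked matrix multiply. It is hypothetical and is not how PHiPAC or ATLAS are implemented: real tuners generate many code variants, repeat each measurement to reduce noise, and emit specialized C. Timing uses C11 `timespec_get`, and the input values are arbitrary.

```c
#include <stddef.h>
#include <stdlib.h>
#include <time.h>

#define MIN(a, b) ((a) < (b) ? (a) : (b))

/* Blocked x = y * z (row-major n x n) with blocking factor B. */
static void blocked_mm(size_t n, size_t B, const double *y, const double *z, double *x) {
    for (size_t t = 0; t < n * n; t++) x[t] = 0.0;
    for (size_t jj = 0; jj < n; jj += B)
        for (size_t kk = 0; kk < n; kk += B)
            for (size_t i = 0; i < n; i++)
                for (size_t j = jj; j < MIN(jj + B, n); j++) {
                    double r = 0.0;
                    for (size_t k = kk; k < MIN(kk + B, n); k++) r += y[i*n + k] * z[k*n + j];
                    x[i*n + j] += r;
                }
}

static double elapsed_s(const struct timespec *t0, const struct timespec *t1) {
    return (double)(t1->tv_sec - t0->tv_sec) + (double)(t1->tv_nsec - t0->tv_nsec) * 1e-9;
}

/* Empirical search: returns the candidate blocking factor with the lowest
 * measured time on this machine (cand[0] if allocation fails). */
static size_t autotune_block(size_t n, const size_t *cand, size_t ncand) {
    size_t best = cand[0];
    double best_time = -1.0;
    double *y = malloc(n * n * sizeof *y);
    double *z = malloc(n * n * sizeof *z);
    double *x = malloc(n * n * sizeof *x);
    if (y && z && x) {
        for (size_t t = 0; t < n * n; t++) { y[t] = (double)(t % 13); z[t] = (double)(t % 7); }
        for (size_t c = 0; c < ncand; c++) {
            struct timespec t0, t1;
            timespec_get(&t0, TIME_UTC);
            blocked_mm(n, cand[c], y, z, x);
            timespec_get(&t1, TIME_UTC);
            volatile double keep = x[0];   /* keep the work from being optimized away */
            (void)keep;
            double dt = elapsed_s(&t0, &t1);
            if (best_time < 0.0 || dt < best_time) { best_time = dt; best = cand[c]; }
        }
    }
    free(y); free(z); free(x);
    return best;
}
```

The answer depends on the machine it runs on, which is the point of the next two slides: the best block size varies across computers in ways a static compiler model struggles to predict.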

Sparse Matrix – Search for Blocking for finite element problem [Im, Yelick, Vuduc, 2005]
[Figure: Mflop/s for each register block size; best: 4 x 2, compared with the reference]

Sparse Matrix – Search for Blocking for finite element problem [Im, Yelick, Vuduc, 2005] Mflop/s Best: 4 x 2 Reference 3/11/2009 Mflop/s cs 252 -S 09, Lecture 14 57

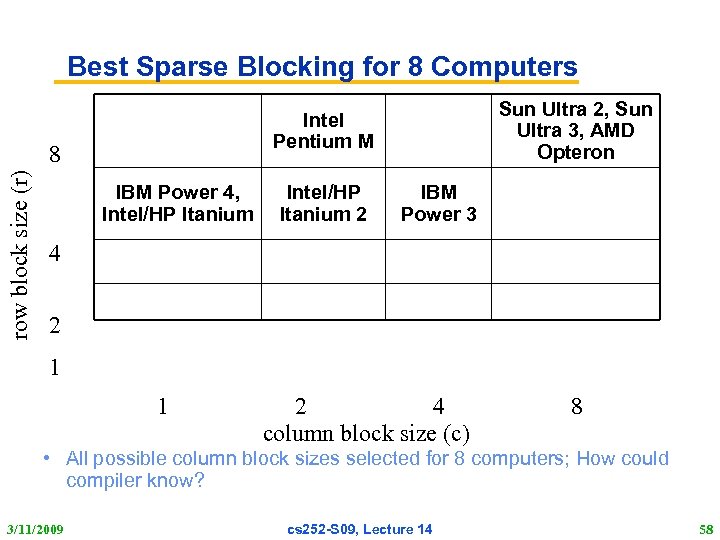

Best Sparse Blocking for 8 Computers
[Figure: row block size (r) ∈ {1, 2, 4, 8} vs. column block size (c) ∈ {1, 2, 4, 8}, marking the best block size for Sun Ultra 2, Sun Ultra 3, AMD Opteron, Intel Pentium M, IBM Power 4, Intel/HP Itanium 2, IBM Power 3]
• All possible column block sizes were selected across the 8 computers; how could a compiler know?

Best Sparse Blocking for 8 Computers 8 row block size (r) Sun Ultra 2, Sun Ultra 3, AMD Opteron Intel Pentium M IBM Power 4, Intel/HP Itanium 2 IBM Power 3 4 2 1 1 2 4 column block size (c) 8 • All possible column block sizes selected for 8 computers; How could compiler know? 3/11/2009 cs 252 -S 09, Lecture 14 58

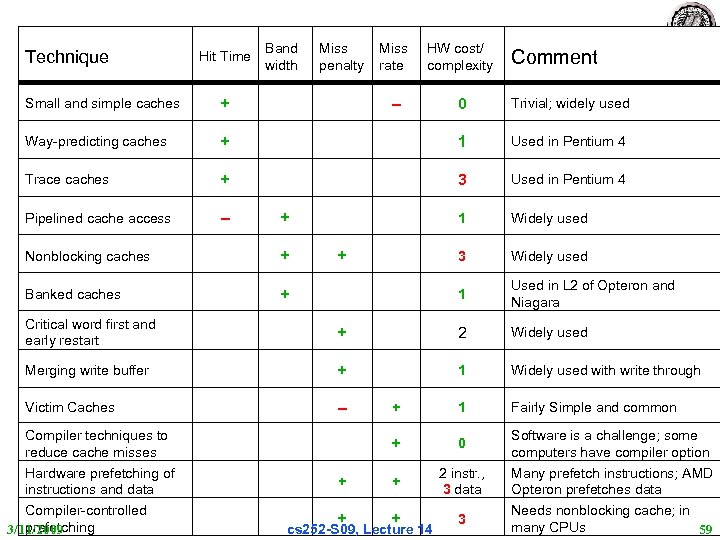

Summary of cache optimizations (effect on hit time, bandwidth, miss penalty, miss rate: + means the technique improves that factor, – means it hurts it; HW cost/complexity from 0 to 3; comment)
• Small and simple caches: hit time +, miss rate –; cost 0; trivial; widely used
• Way-predicting caches: hit time +; cost 1; used in Pentium 4
• Trace caches: hit time +; cost 3; used in Pentium 4
• Pipelined cache access: hit time –, bandwidth +; cost 1; widely used
• Nonblocking caches: bandwidth +, miss penalty +; cost 3; widely used
• Banked caches: bandwidth +; cost 1; used in L2 of Opteron and Niagara
• Critical word first and early restart: miss penalty +; cost 2; widely used
• Merging write buffer: miss penalty +; cost 1; widely used with write through
• Victim caches: – +; cost 1; fairly simple and common
• Compiler techniques to reduce cache misses: miss rate +; cost 0; software is a challenge; some computers have compiler option
• Hardware prefetching of instructions and data: miss penalty +, miss rate +; cost 2 instr., 3 data; many prefetch instructions; AMD Opteron prefetches data
• Compiler-controlled prefetching: miss penalty +, miss rate +; cost 3; needs nonblocking cache; in many CPUs

Technique Hit Time Band width Miss penalty Miss rate HW cost/ complexity – 0 Trivial; widely used Comment Small and simple caches + Way-predicting caches + 1 Used in Pentium 4 Trace caches + 3 Used in Pentium 4 Pipelined cache access – 1 Widely used 3 Widely used 1 Used in L 2 of Opteron and Niagara + Nonblocking caches + Banked caches + + Critical word first and early restart + 2 Widely used Merging write buffer + 1 Widely used with write through Victim Caches – + 1 Fairly Simple and common + 0 + + 2 instr. , 3 data + + 3 Compiler techniques to reduce cache misses Hardware prefetching of instructions and data Compiler-controlled prefetching 3/11/2009 cs 252 -S 09, Lecture 14 Software is a challenge; some computers have compiler option Many prefetch instructions; AMD Opteron prefetches data Needs nonblocking cache; in many CPUs 59

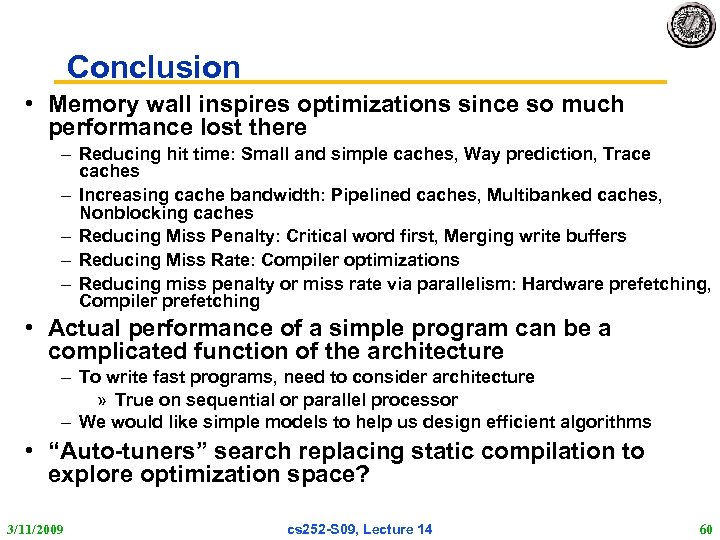

Conclusion
• The memory wall inspires optimizations, since so much performance is lost there
 – Reducing hit time: small and simple caches, way prediction, trace caches
 – Increasing cache bandwidth: pipelined caches, multibanked caches, nonblocking caches
 – Reducing miss penalty: critical word first, merging write buffers
 – Reducing miss rate: compiler optimizations
 – Reducing miss penalty or miss rate via parallelism: hardware prefetching, compiler prefetching
• Actual performance of a simple program can be a complicated function of the architecture
 – To write fast programs, we need to consider the architecture
  » True on sequential or parallel processors
 – We would like simple models to help us design efficient algorithms
• Will “auto-tuners” that search the optimization space replace static compilation?
