5c3f7f801ba241230275b408f1b9cf81.ppt

- Number of slides: 23

Critical Components Underlying Scientifically Credible Intervention Research Joel R. Levin University of Arizona

Focus of This Presentation Considerations that researchers need to make in the way of their methodologies, procedures, measures, and statistical analyses when investigating whether or not causal relationships exist between educational interventions and outcome measures.

Counter-Example 1: Surveys and Questionnaire Studies

Newspaper Headline: Survey Links All-Nighters to Students’ Lower GPAs

Comments:
• One wonders what type of students are inclined to pull all-nighters, compared to those who don’t!!
• Is it possible that by pulling all-nighters instead of getting a good night’s sleep, the former type of students actually elevated their grades from what would have been Fs to Cs and Ds?

Brief Review of Common Research Methodologies in Education

• Descriptive/observational/case study (includes ethnographic research)
• Self-report/survey
• Correlational
• Nonexperimental (e.g., nonequivalent control group)
• Quasi-experimental
• Experimental

Note: Different degrees of plausibility/credibility about empirical and causal relationships are associated with these different methodologies.

Counter-Example 2: Surveys and Questionnaire Studies

“For Scientists, a Beer Test Shows Results as a Litmus Test” (New York Times, 2008)

“According to the study, published in Oikos, a highly respected scientific journal, the more beer a scientist drinks, the less likely the scientist is to publish a paper or to have a paper cited by another researcher, a measure of a paper’s quality and importance.”

“[This is not just] a matter of a few scientists having had too many brews to be able to stumble back to the lab. Publication did not simply drop off among the heaviest drinkers. Instead, scientific performance steadily declined with increasing beer consumption across the board, from scientists who primarily sip at two or three beers over a year to the sort who average knocking back more than two a day.”

Quoth a quaffing evolutionary biologist at the University of Melbourne: “It’s rather devastating to be told we should drink less beer in order to increase our scientific performance.”

CAREful Experiments Yield Scientifically Credible Evidence

CAREful Experiments → Scientifically Convincing Evidence

Question: When is evidence about the effectiveness of a new "treatment" (such as a new drug, medical procedure, method of teaching or learning, etc.) scientifically convincing?

Answer: Evidence is scientifically convincing if it is collected systematically, with great CARE, specifically when four CAREful ingredients are present:

1. The evidence must be based on a Comparison that is appropriate, and
2. The evidence must be produced Again and Again, and
3. There must be a direct Relationship between the treatment or intervention (independent variable) and the desired outcome (dependent variable), and
4. All other competing variables (extraneous variables) must be Eliminated, through randomization and control.

CAREful Experiments Yield Scientifically Convincing Evidence

Thus: If an appropriate Comparison reveals Again and Again evidence of a direct Relationship between a treatment and a desired outcome, while Eliminating all other extraneous variables, then such CAREful experimentation yields scientifically credible evidence about the effect of the treatment on the outcome.

Derry, S., Levin, J. R., Osana, H. P., Jones, M. S., & Peterson, M. (2000). Fostering students' statistical and scientific thinking: Lessons learned from an innovative college course. American Educational Research Journal, 37, 747-773.

One View of Educational Research Types, Incorporating the CAREful Components

| Research type | One Unit | More Than One Unit |
|---|---|---|
| Nonintervention: Descriptive/Observational | "C" | C A R? |
| Nonintervention: Self-Report/Survey | "C" | C A R? |
| Intervention: Nonexperimental | C R | C A R |
| Intervention: Quasi-Experimental | C R E? | C A R E? |
| Intervention: Experimental | C R E | C A R E |

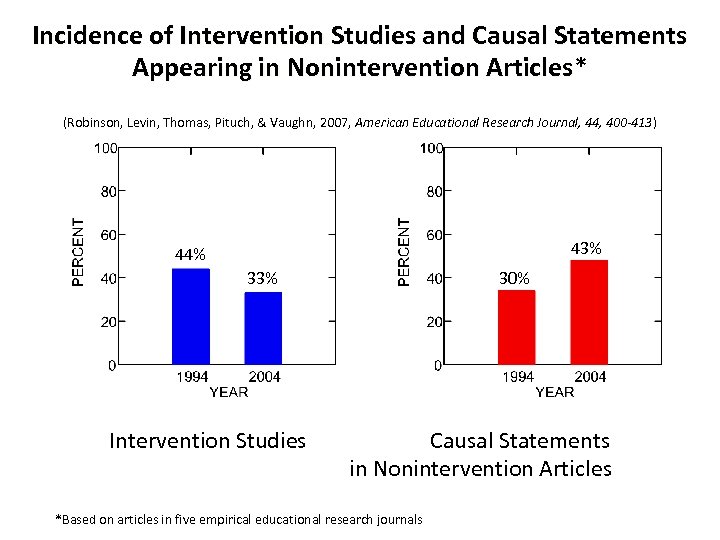

Incidence of Intervention Studies and Causal Statements Appearing in Nonintervention Articles* (Robinson, Levin, Thomas, Pituch, & Vaughn, 2007, American Educational Research Journal, 44, 400-413)

[Chart: percentage of articles. Series: Intervention Studies; Causal Statements in Nonintervention Articles. Values as extracted: 43%, 44%, 33%, 30%.]

*Based on articles in five empirical educational research journals

Selected Research Validities

• Internal validity – ("randomization" = random assignment to treatments or interventions; needed to argue for cause-effect relationships)
• External validity – (random selection = random sampling of participants; needed to generalize from sample to population)
• Construct validity – (the relationship between experimental operations and underlying psychological traits/processes)
• Implementation validity – (treatment integrity and acceptability)
• Statistical-conclusion validity – (assumptions associated with the statistical tests conducted)

Shadish, W. R., Cook, T. D., & Campbell, D. T. (2002). Experimental and quasi-experimental designs for generalized causal inference. Boston: Houghton Mifflin.

An Important Distinction to Remember Throughout This Institute

Random Sampling/Selection of Participants vs. Random Assignment/Randomization of Participants to Intervention Conditions – The former is not needed to constitute an internally valid (scientifically credible) intervention study, whereas the latter is.
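
The distinction can be made concrete with a minimal Python sketch. Everything in it is an illustrative assumption rather than material from the presentation: the roster, the group sizes, and the helper names `random_selection` and `random_assignment` are hypothetical.

```python
import random

def random_selection(population, n, rng):
    """Random sampling: draw n participants from the population.
    Supports generalizing from sample to population (external validity)."""
    return rng.sample(population, n)

def random_assignment(participants, conditions, rng):
    """Random assignment: shuffle the participants, then deal them to
    conditions in turn. Supports cause-effect claims (internal validity)."""
    shuffled = list(participants)
    rng.shuffle(shuffled)
    groups = {condition: [] for condition in conditions}
    for i, person in enumerate(shuffled):
        groups[conditions[i % len(conditions)]].append(person)
    return groups

rng = random.Random(0)  # fixed seed so the sketch is repeatable
population = [f"student_{i}" for i in range(1000)]  # hypothetical roster

# A convenience sample (no random selection) can still be randomly assigned.
volunteers = population[:40]
groups = random_assignment(volunteers, ["intervention", "control"], rng)

# Random selection alone does not create comparison groups.
sample = random_selection(population, 40, rng)
```

In this sketch the volunteers were never randomly sampled, yet the two conditions are formed by chance alone, which is the property an internally valid intervention study needs.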

Educational Intervention Research “Credibility” and “Creditability”

Credible = Believable
• methodologically sound; scientifically convincing
• Do the methods used and evidence presented justify the conclusions reached?

Creditable = Commendable
• substantively interesting; educationally exciting
• Are the questions asked and the evidence presented of [potential] importance to society?

Ideal Educational Intervention Research = Credible + Creditable

Levin, J. R. (1994). Crafting educational intervention research that's both credible and creditable. Educational Psychology Review, 6, 231-243.

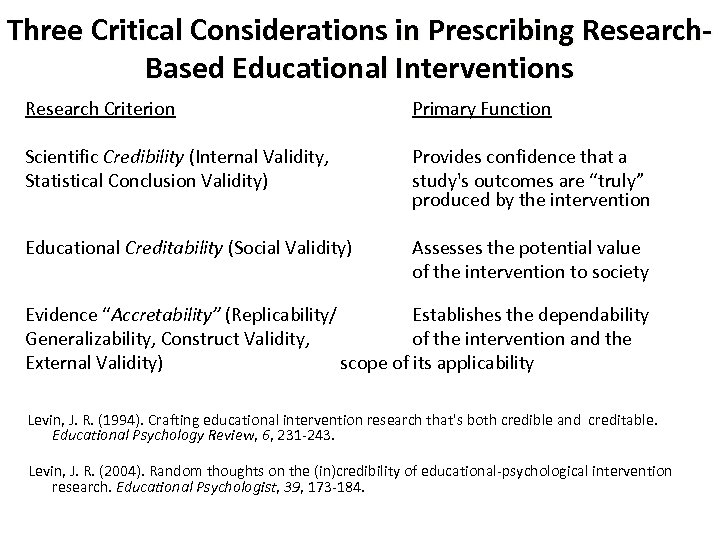

Three Critical Considerations in Prescribing Research-Based Educational Interventions

| Research Criterion | Primary Function |
|---|---|
| Scientific Credibility (Internal Validity, Statistical Conclusion Validity) | Provides confidence that a study's outcomes are “truly” produced by the intervention |
| Educational Creditability (Social Validity) | Assesses the potential value of the intervention to society |
| Evidence “Accretability” (Replicability/Generalizability, Construct Validity, External Validity) | Establishes the dependability of the intervention and the scope of its applicability |

Levin, J. R. (1994). Crafting educational intervention research that's both credible and creditable. Educational Psychology Review, 6, 231-243.

Levin, J. R. (2004). Random thoughts on the (in)credibility of educational-psychological intervention research. Educational Psychologist, 39, 173-184.

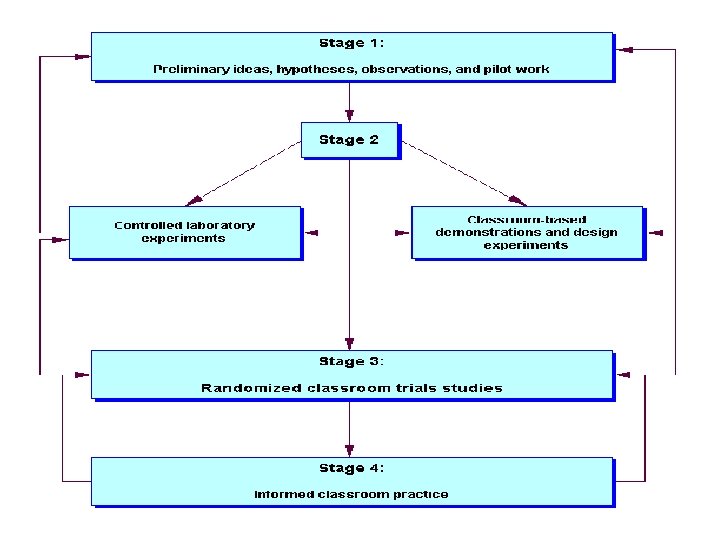

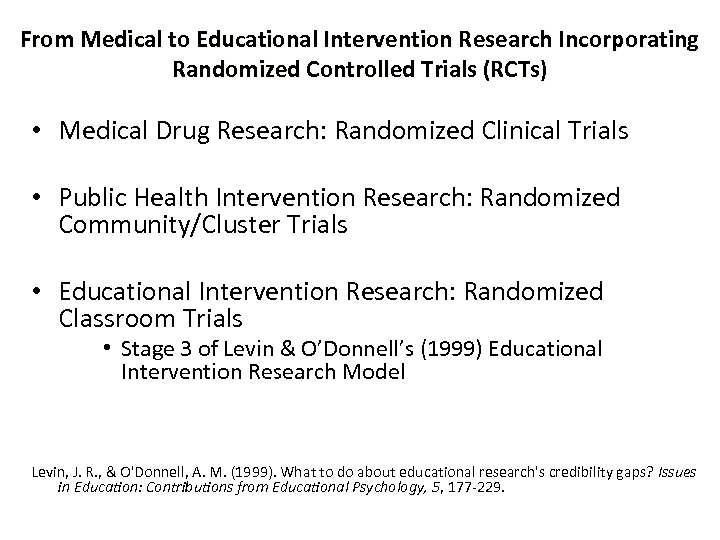

From Medical to Educational Intervention Research Incorporating Randomized Controlled Trials (RCTs)

• Medical Drug Research: Randomized Clinical Trials
• Public Health Intervention Research: Randomized Community/Cluster Trials
• Educational Intervention Research: Randomized Classroom Trials
• Stage 3 of Levin & O’Donnell’s (1999) Educational Intervention Research Model

Levin, J. R., & O'Donnell, A. M. (1999). What to do about educational research's credibility gaps? Issues in Education: Contributions from Educational Psychology, 5, 177-229.

Stage 1 Notions and Interplay Between Stage 2 Laboratory and Field Research How do you make a baby buggy? Traditional answer: Tickle its feet.

Stage 1 Notions and Interplay Between Stage 2 Laboratory and Field Research How do you make a baby buggy? Nontraditional answer: That’s an empirical question (Zeedyk, New York Times, March 2, 2009)

Stage 1 Notions and Interplay Between Stage 2 Laboratory and Field Research Educational Issue: How to promote children’s language development? Assumption: Parents talking to their young children is an important contributor to the children’s language development. Hypothesis: Children in forward-facing strollers receive less parent talk than those in toward-facing strollers.

Stage 1 Notions and Interplay Between Stage 2 Laboratory and Field Research Naturalistic Data: Observations of 2700 families with both types of strollers* Results: Percentage of Talk Cases: Forward, 11%; Toward, 25% *“You can observe a lot by just watching” -Yogi Berra

Stage 1 Notions and Interplay Between Stage 2 Laboratory and Field Research Confounding Variable Concern: Parents who buy toward-facing strollers talk more. “Preliminary” Laboratory Study: 20 mothers using both types of strollers for 15 minutes. Findings: Twice as much mother talk with the toward-facing strollers. Bonus: Babies in toward-facing strollers also laughed more.
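
The confounding concern can be illustrated with a small simulation. This is a sketch under loudly stated assumptions, not a reanalysis of the stroller study: the data are synthetic, the `talkativeness` trait and the `talk_gap` helper are hypothetical, and the stroller is given no true effect by construction.

```python
import random
from statistics import mean

def talk_gap(n, randomized, rng):
    """Return mean talk (toward-facing) minus mean talk (forward-facing).

    Each simulated parent has a hypothetical 'talkativeness' trait in [0, 1).
    The stroller has NO effect on talk here; talk equals the trait.
    """
    toward, forward = [], []
    for _ in range(n):
        talkativeness = rng.random()
        if randomized:
            # Random assignment: a coin flip decides the stroller type.
            gets_toward = rng.random() < 0.5
        else:
            # Self-selection: talkative parents more often choose toward-facing.
            gets_toward = rng.random() < talkativeness
        talk = talkativeness
        (toward if gets_toward else forward).append(talk)
    return mean(toward) - mean(forward)

# Synthetic data only; fixed seeds keep the sketch repeatable.
observational_gap = talk_gap(10_000, randomized=False, rng=random.Random(1))
randomized_gap = talk_gap(10_000, randomized=True, rng=random.Random(1))

print(f"self-selected gap: {observational_gap:.3f}")
print(f"randomized gap:    {randomized_gap:.3f}")
```

Under these assumptions the self-selected comparison shows a sizable gap even though the stroller does nothing, while the randomly assigned comparison shows a gap near zero. That is why the naturalistic percentages alone cannot settle the causal question.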

Stage 1 Notions and Interplay Between Stage 2 Laboratory and Field Research “Ours was a preliminary study, intending to raise questions rather than to provide answers. It is now clear that future research on the effects of stroller design would be worthwhile.” Important Next Baby Steps: Stroll on, dudes, toward RCTs (randomized carriage trials)!

Alternative Research Methodologies Gaining Scientific Respectability

• Nonequivalent control group designs based on comprehensive matching (Aickin, 2009; Shadish, 2010)
• Regression discontinuity analysis (Shadish, 2010)
• Single-case time-series designs (Clay, 2010; Kratochwill & Levin, 2010)

Aickin, M. (2009, Jan. 1). A simulation study of the validity and efficiency of design-adaptive allocation to two groups in the regression situation. International Journal of Biostatistics, 5(1): Article 19. Retrieved from www.ncbi.nlm.nih.gov/pmc/articles/PMC2827888/

Clay, R. A. (2010, Sept.). Randomized clinical trials have their place, but critics argue that researchers would get better results if they also embraced other methodologies. Monitor on Psychology, 53-55.

Kratochwill, T. R., & Levin, J. R. (2010). Enhancing the scientific credibility of single-case intervention research: Randomization to the rescue. Psychological Methods, 15, 122-144.

Shadish, W. (2010, Aug.). A quiet revolution: The empirical program of quasi-experimentation. Paper presented at the annual meeting of the American Psychological Association, San Diego.
