- Number of slides: 50

CPSC 503 Computational Linguistics: Discourse and Dialog (Lecture 14)

Giuseppe Carenini

3/19/2018, CPSC 503 Winter 2008

Finish from (22/10)

- Word Sense Disambiguation
- Word Similarity
- Semantic Role Labeling

Semantic Role Labeling: Example

Some roles: Employer, Employee, Task, Position

- In 1979, singer Nancy Wilson HIRED him to open her nightclub act.
- Castro has swallowed his doubts and HIRED Valenzuela as a cook in his small restaurant.

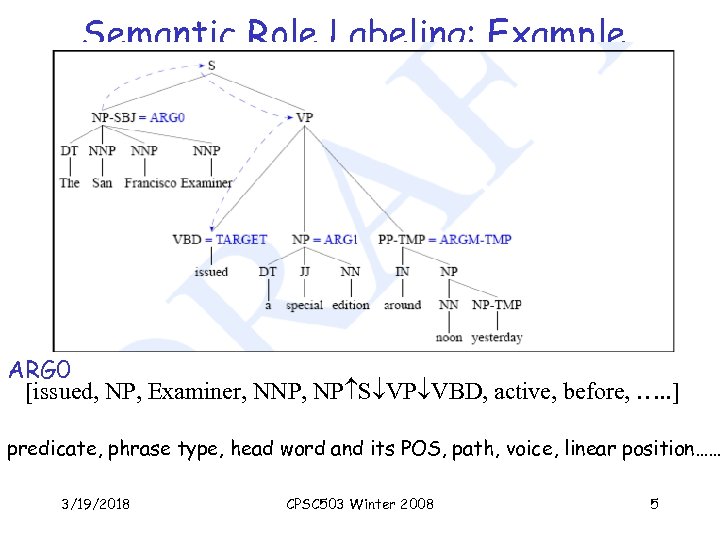

Supervised Semantic Role Labeling

Typically framed as a classification problem [Gildea, Jurafsky 2002]

- Train a classifier that, for each predicate, determines for each syntactic constituent which semantic role (if any) it plays with respect to the predicate
- Train on a corpus annotated with relevant constituent features

These include: predicate, phrase type, head word and its POS, path, voice, linear position, and many others

Semantic Role Labeling: Example

ARG0: [issued, NP, Examiner, NNP, NP↑S↓VP↓VBD, active, before, …]

Features, in order: predicate, phrase type, head word and its POS, path, voice, linear position, …

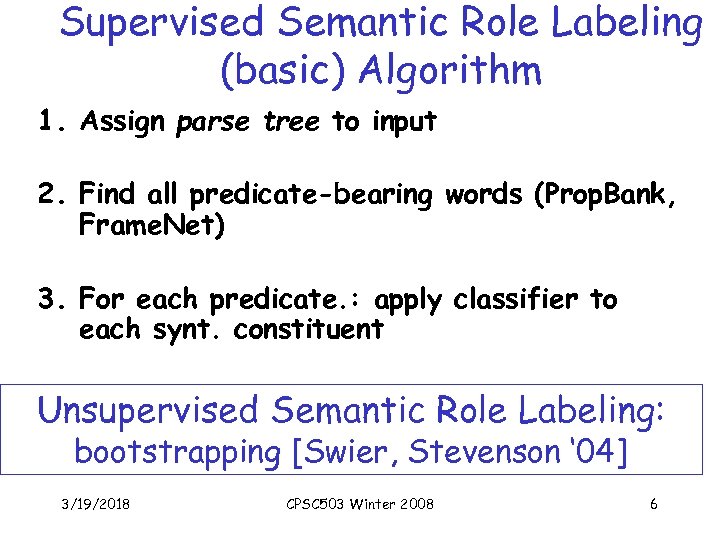

Supervised Semantic Role Labeling: (basic) Algorithm

1. Assign parse tree to input
2. Find all predicate-bearing words (PropBank, FrameNet)
3. For each predicate: apply classifier to each syntactic constituent

Unsupervised Semantic Role Labeling: bootstrapping [Swier, Stevenson ’04]
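The feature-extraction part of step 3 can be sketched as follows. This is a minimal illustration, not the Gildea and Jurafsky implementation: the `Node` class, the toy parse, and the helper names are all assumptions made for the example, and the classifier itself is left out. It computes the predicate, phrase type, path, and linear position features for each candidate constituent.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)  # identity-based equality: two nodes are equal only if they are the same object
class Node:
    label: str
    children: List["Node"] = field(default_factory=list)
    word: Optional[str] = None                       # set only on preterminals (POS nodes)
    parent: Optional["Node"] = field(default=None, repr=False)

    def __post_init__(self):
        for child in self.children:
            child.parent = self


def ancestors(node):
    """Return [node, parent, grandparent, ..., root]."""
    chain = []
    while node is not None:
        chain.append(node)
        node = node.parent
    return chain


def path_feature(constituent, predicate):
    """Labels going up from the constituent to the lowest common ancestor, then down to the predicate."""
    up, down = ancestors(constituent), ancestors(predicate)
    common = next(n for n in up if n in down)
    up_labels = [n.label for n in up[: up.index(common) + 1]]
    down_labels = [n.label for n in reversed(down[: down.index(common)])]
    return "↑".join(up_labels) + "".join("↓" + label for label in down_labels)


def preterminals(node):
    """Left-to-right list of the POS nodes under `node`."""
    if node.word is not None:
        return [node]
    return [leaf for child in node.children for leaf in preterminals(child)]


def features(root, constituent, predicate):
    order = preterminals(root)
    first = preterminals(constituent)[0]
    position = "before" if order.index(first) < order.index(predicate) else "after"
    return [predicate.word, constituent.label, path_feature(constituent, predicate), position]


# Hypothetical toy parse of "The Examiner issued a special edition"
subject = Node("NP", [Node("DT", word="The"), Node("NNP", word="Examiner")])
verb = Node("VBD", word="issued")
obj = Node("NP", [Node("DT", word="a"), Node("JJ", word="special"), Node("NN", word="edition")])
root = Node("S", [subject, Node("VP", [verb, obj])])

vectors = [features(root, c, verb) for c in (subject, obj)]
```

Each vector in `vectors` would then be passed to a trained classifier that outputs a role label (or "no role") for that constituent.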

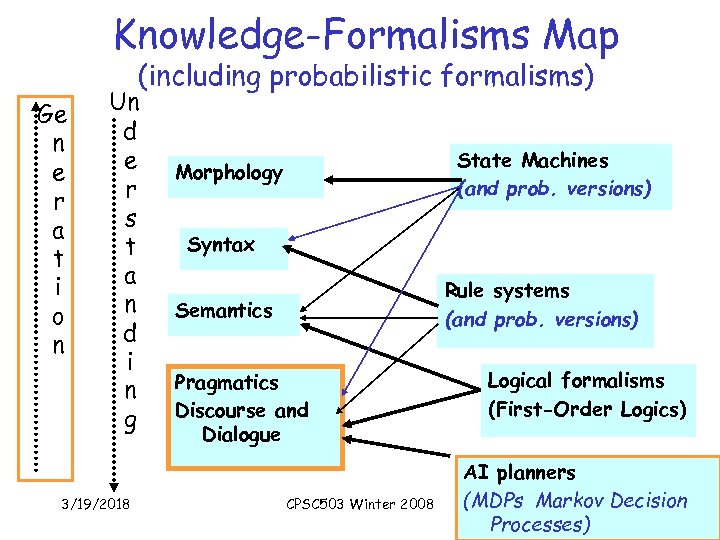

Knowledge-Formalisms Map (including probabilistic formalisms)

- Levels of linguistic knowledge: Morphology, Syntax, Semantics, Pragmatics, Discourse and Dialogue
- Formalisms: State Machines (and prob. versions); Rule systems (and prob. versions); Logical formalisms (First-Order Logics); AI planners (MDPs: Markov Decision Processes)
- Directions: Understanding, Generation

Today 27/10

- Brief Intro Pragmatics
- Discourse
  - Monologue
  - Dialog

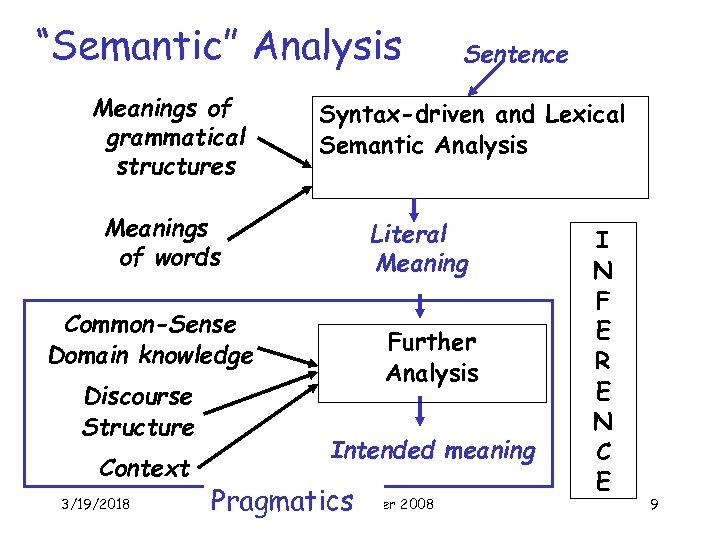

“Semantic” Analysis

- Pipeline: Sentence → Syntax-driven and Lexical Semantic Analysis → Literal Meaning → Further Analysis (INFERENCE) → Intended meaning
- Knowledge sources: meanings of grammatical structures, meanings of words, common-sense domain knowledge, discourse structure, context
- Pragmatics

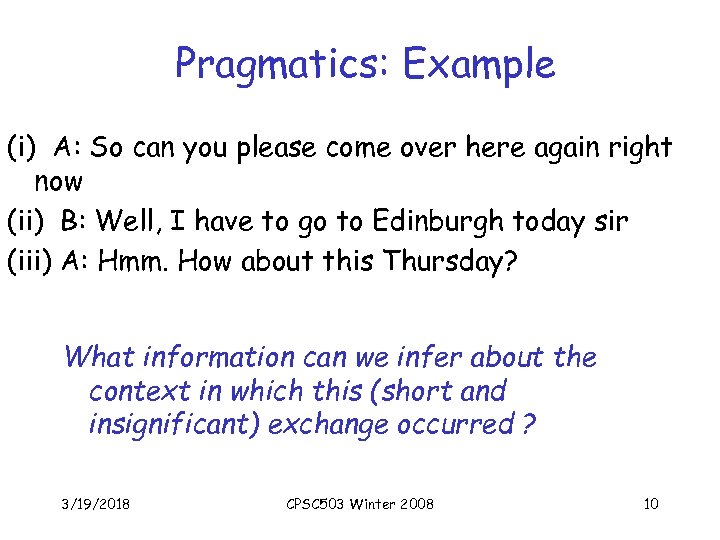

Pragmatics: Example

- (i) A: So can you please come over here again right now
- (ii) B: Well, I have to go to Edinburgh today sir
- (iii) A: Hmm. How about this Thursday?

What information can we infer about the context in which this (short and insignificant) exchange occurred?

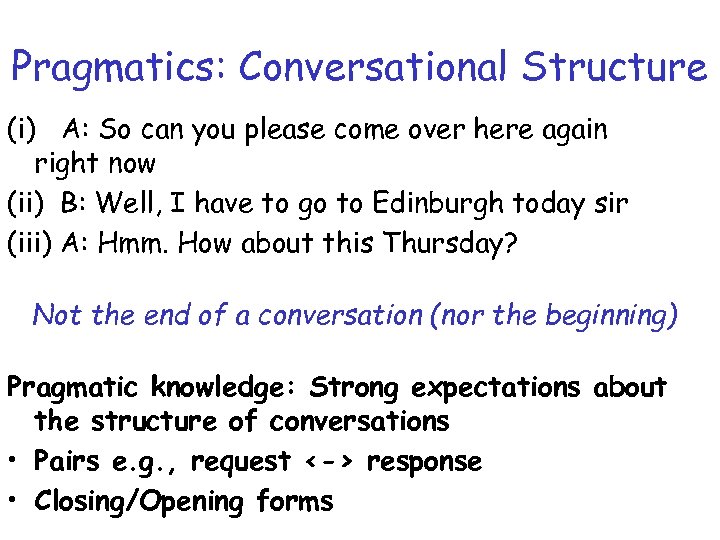

Pragmatics: Conversational Structure

- (i) A: So can you please come over here again right now
- (ii) B: Well, I have to go to Edinburgh today sir
- (iii) A: Hmm. How about this Thursday?

Not the end of a conversation (nor the beginning)

Pragmatic knowledge: strong expectations about the structure of conversations

- Pairs, e.g., request <-> response
- Closing/Opening forms

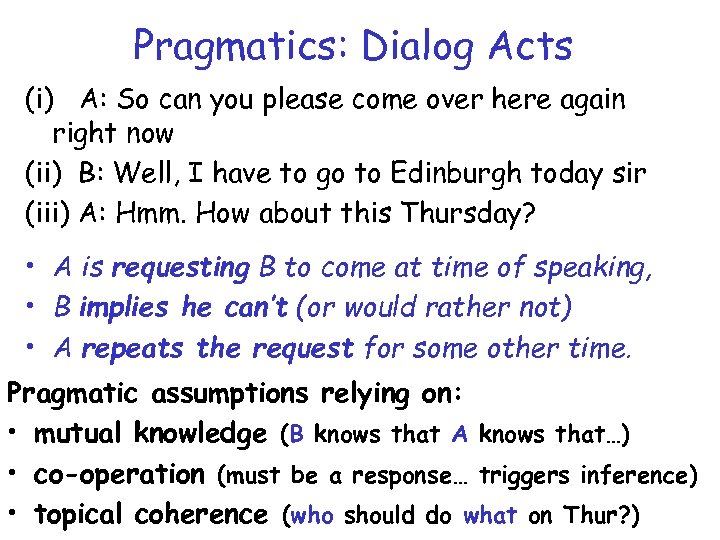

Pragmatics: Dialog Acts

- (i) A: So can you please come over here again right now
- (ii) B: Well, I have to go to Edinburgh today sir
- (iii) A: Hmm. How about this Thursday?

What the exchange conveys:

- A is requesting B to come at time of speaking
- B implies he can’t (or would rather not)
- A repeats the request for some other time

Pragmatic assumptions relying on:

- mutual knowledge (B knows that A knows that…)
- co-operation (must be a response… triggers inference)
- topical coherence (who should do what on Thur.?)

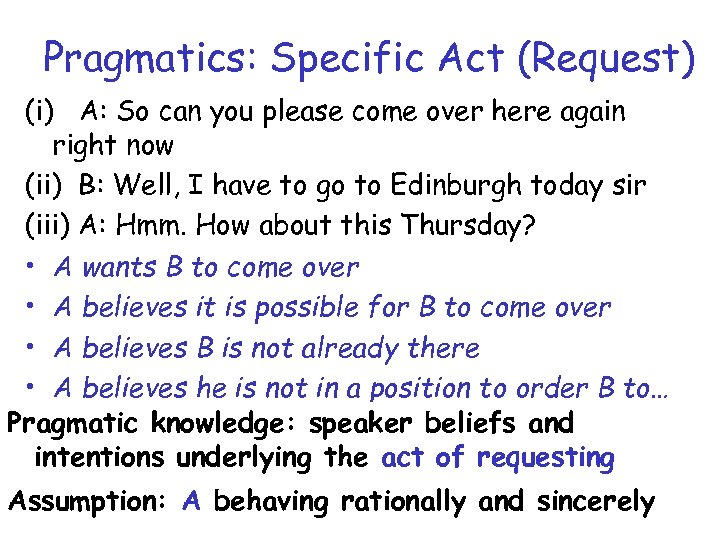

Pragmatics: Specific Act (Request)

- (i) A: So can you please come over here again right now
- (ii) B: Well, I have to go to Edinburgh today sir
- (iii) A: Hmm. How about this Thursday?

What the request implies:

- A wants B to come over
- A believes it is possible for B to come over
- A believes B is not already there
- A believes he is not in a position to order B to…

Assumption: A behaving rationally and sincerely

Pragmatic knowledge: speaker beliefs and intentions underlying the act of requesting

Pragmatics: Deixis

- (i) A: So can you please come over here again right now
- (ii) B: Well, I have to go to Edinburgh today sir
- (iii) A: Hmm. How about this Thursday?

What the deictic expressions imply:

- A assumes B knows where A is
- Neither A nor B is in Edinburgh
- The day on which the exchange is taking place is not Thur., nor Wed. (or at least, so A believes)

Pragmatic knowledge: references to space and time with respect to the space and time of speaking

Today 27/10

- Brief Intro Pragmatics
- Discourse
  - Monologue
  - Dialog

Discourse: Monologue

- Monologues as sequences of “sentences” have structure (like sentences as sequences of words)
- Tasks: Text Segmentation and Rhetorical (discourse) parsing and generation
- Key discourse phenomenon: referring expressions (what they denote may depend on previous discourse)
  - Task: Coreference resolution

Discourse/Text Segmentation (1): UNSUPERVISED

- State of the art:
  - linear (unable to identify hierarchical structure)
  - subtopics, passages
- Key idea: lexical cohesion (vs. coherence)
  - “There is not water on the moon. Andromeda is covered by the moon.” (the two sentences share the word “moon”, so they are lexically cohesive, without forming a coherent text)
- Discourse segments tend to be lexically cohesive
- Cohesion score drops on segment boundaries
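The idea that the cohesion score drops at segment boundaries can be sketched with a bag-of-words cosine between adjacent sentences. This is a simplified illustration under stated assumptions, not a published algorithm: the threshold value, the tokenizer, the lack of stop-word removal, and the sample sentences are all chosen just for the example.

```python
import math
import re
from collections import Counter


def bag(sentence):
    """Lower-cased bag of words (no stemming or stop-word removal in this sketch)."""
    return Counter(re.findall(r"[a-z]+", sentence.lower()))


def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def boundaries(sentences, threshold=0.1):
    """Indices i such that a segment boundary is placed just before sentences[i]."""
    bags = [bag(s) for s in sentences]
    return [i + 1 for i in range(len(bags) - 1) if cosine(bags[i], bags[i + 1]) < threshold]


# Hypothetical four-sentence text with a topic shift in the middle
text = [
    "The moon has no water",
    "Water on the moon is frozen",
    "Stocks fell sharply today",
    "Investors sold stocks today",
]
cuts = boundaries(text)
```

Here the only adjacent pair with no shared words is the second and third sentence, so the single boundary falls before the third sentence. Practical systems typically compare larger blocks of text and look for local minima rather than using a fixed threshold.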

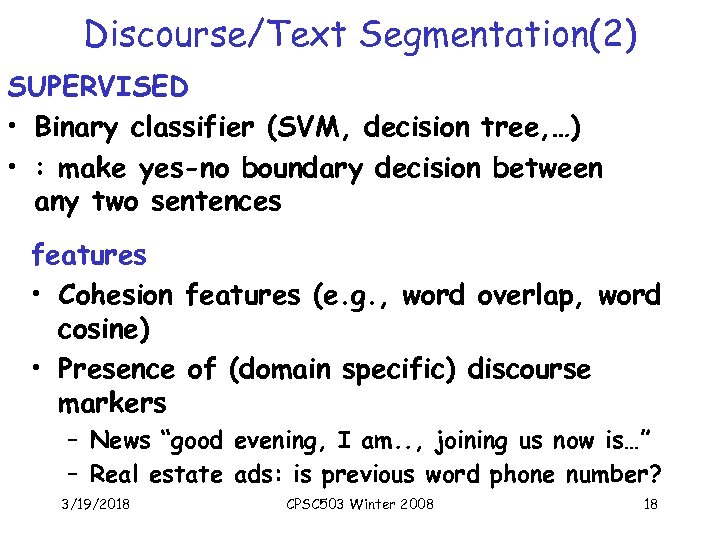

Discourse/Text Segmentation (2): SUPERVISED

- Binary classifier (SVM, decision tree, …): make a yes-no boundary decision between any two sentences

Features:

- Cohesion features (e.g., word overlap, word cosine)
- Presence of (domain-specific) discourse markers
  - News: “good evening, I am…”, “joining us now is…”
  - Real estate ads: is the previous word a phone number?

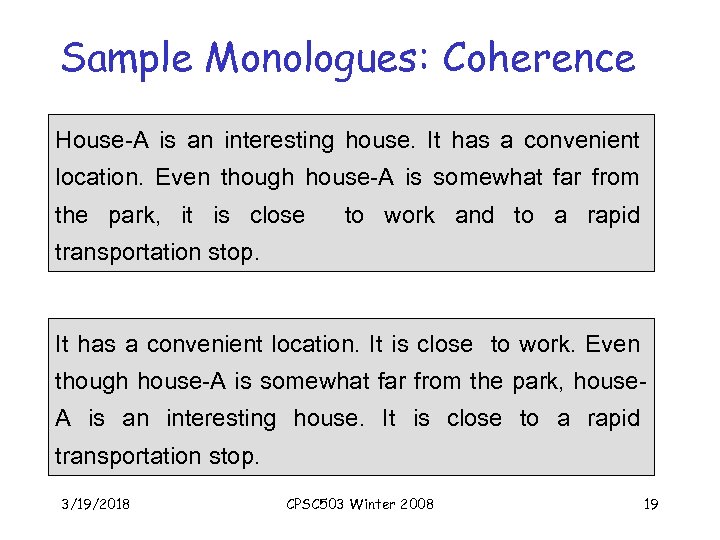

Sample Monologues: Coherence

House-A is an interesting house. It has a convenient location. Even though house-A is somewhat far from the park, it is close to work and to a rapid transportation stop.

It has a convenient location. It is close to work. Even though house-A is somewhat far from the park, house-A is an interesting house. It is close to a rapid transportation stop.

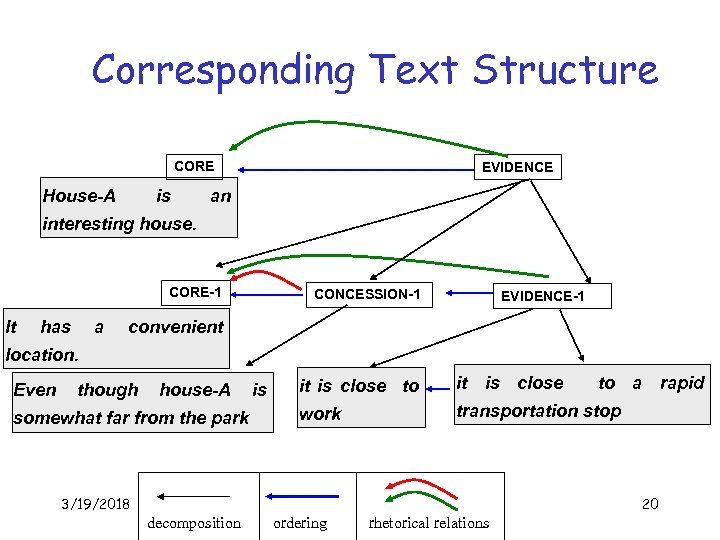

Corresponding Text Structure

- CORE: House-A is an interesting house.
- EVIDENCE
  - CORE-1: It has a convenient location.
  - CONCESSION-1: Even though house-A is somewhat far from the park
  - EVIDENCE-1: it is close to work; it is close to a rapid transportation stop

Aspects of text structure: decomposition, ordering, rhetorical relations

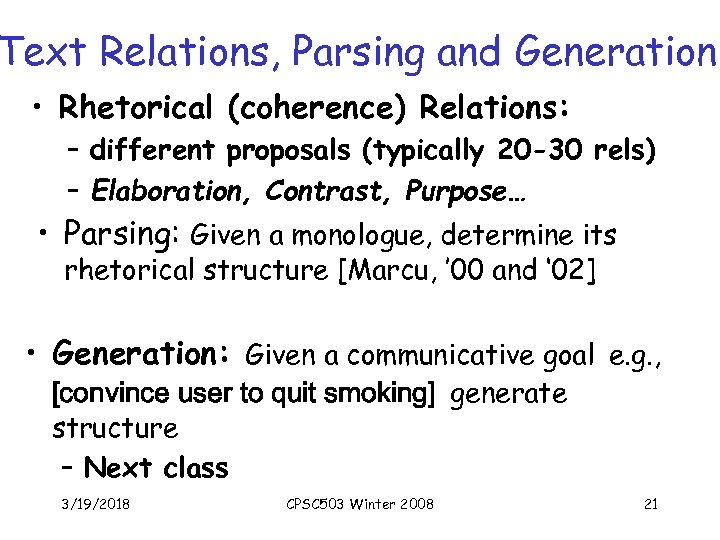

Text Relations, Parsing and Generation

- Rhetorical (coherence) Relations:
  - different proposals (typically 20-30 relations)
  - Elaboration, Contrast, Purpose…
- Parsing: given a monologue, determine its rhetorical structure [Marcu ’00 and ’02]
- Generation: given a communicative goal, e.g., [convince user to quit smoking], generate structure
  - Next class

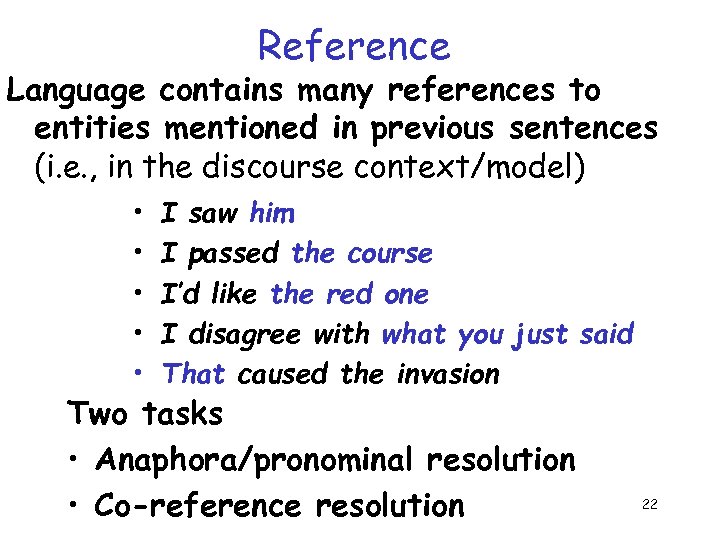

Reference

Language contains many references to entities mentioned in previous sentences (i.e., in the discourse context/model)

- I saw him
- I passed the course
- I’d like the red one
- I disagree with what you just said
- That caused the invasion

Two tasks

- Anaphora/pronominal resolution
- Co-reference resolution

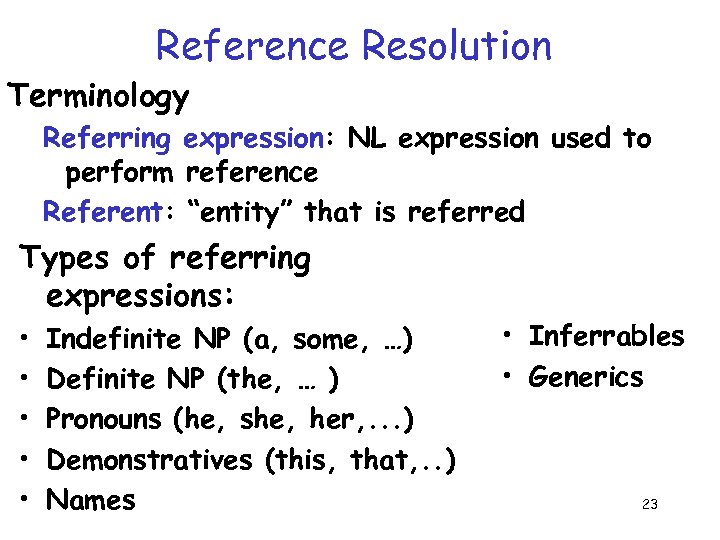

Reference Resolution Terminology

- Referring expression: NL expression used to perform reference
- Referent: “entity” that is referred to

Types of referring expressions:

- Indefinite NP (a, some, …)
- Definite NP (the, …)
- Pronouns (he, she, her, …)
- Demonstratives (this, that, …)
- Names
- Inferrables
- Generics

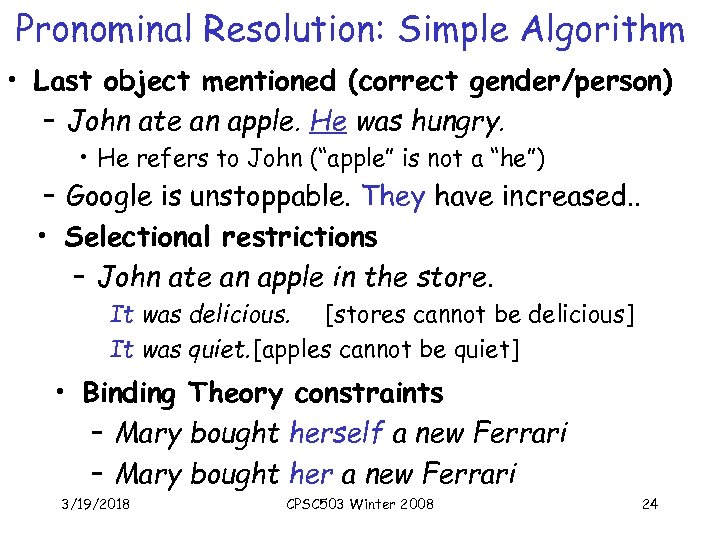

Pronominal Resolution: Simple Algorithm

- Last object mentioned (correct gender/person)
  - John ate an apple. He was hungry.
    - He refers to John (“apple” is not a “he”)
  - Google is unstoppable. They have increased…
- Selectional restrictions
  - John ate an apple in the store.
    - It was delicious. [stores cannot be delicious]
    - It was quiet. [apples cannot be quiet]
- Binding Theory constraints
  - Mary bought herself a new Ferrari
  - Mary bought her a new Ferrari
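The "last object mentioned with matching gender/number" rule can be sketched as below. This is a minimal sketch: the `Mention` record, the pronoun table, and the hand-assigned gender and number values are assumptions for illustration. Selectional restrictions and binding constraints are not modelled.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Mention:
    text: str
    gender: str  # "m", "f", or "n" (hand-assigned here; a real system would need a lexicon)
    number: str  # "sg" or "pl"


# Pronoun -> (required gender or None for "any", required number)
PRONOUNS = {
    "he": ("m", "sg"),
    "she": ("f", "sg"),
    "it": ("n", "sg"),
    "they": (None, "pl"),
}


def resolve(pronoun: str, mentions: List[Mention]) -> Optional[Mention]:
    """Return the most recent earlier mention that agrees with the pronoun, if any."""
    gender, number = PRONOUNS[pronoun.lower()]
    for mention in reversed(mentions):
        if mention.number == number and (gender is None or mention.gender == gender):
            return mention
    return None


# "John ate an apple. He was hungry."
discourse = [Mention("John", "m", "sg"), Mention("an apple", "n", "sg")]
he = resolve("He", discourse)
it = resolve("it", discourse)

# "Google is unstoppable. They have increased..."
they = resolve("They", [Mention("Google", "n", "sg")])
```

With strict number agreement, `they` finds no antecedent for "Google", which shows why the Google example on the slide is a problem for this simple rule.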

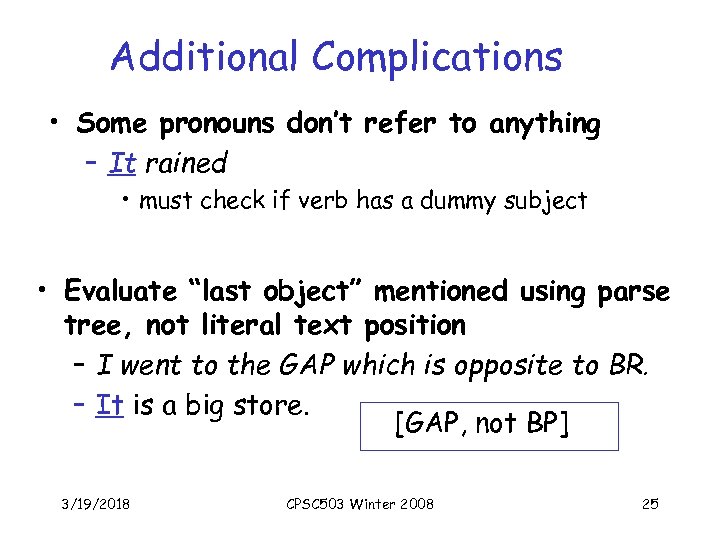

Additional Complications

- Some pronouns don’t refer to anything
  - It rained
    - must check if verb has a dummy subject
- Evaluate “last object” mentioned using parse tree, not literal text position
  - I went to the GAP which is opposite to BR.
  - It is a big store. [GAP, not BR]

Focus

- John is a good student
- He goes to all his tutorials
- He helped Sam with CS4001
- He wants to do a project for Prof. Gray

He refers to John (not Sam)

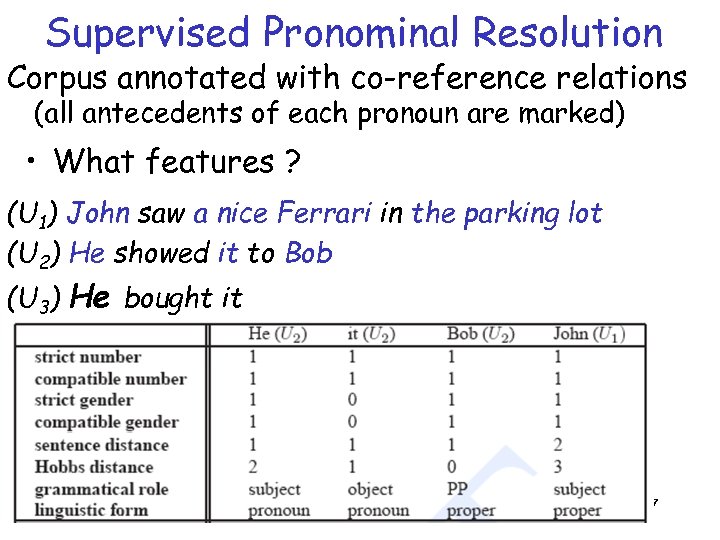

Supervised Pronominal Resolution

Corpus annotated with co-reference relations (all antecedents of each pronoun are marked)

- What features?

Example:

- (U1) John saw a nice Ferrari in the parking lot
- (U2) He showed it to Bob
- (U3) He bought it

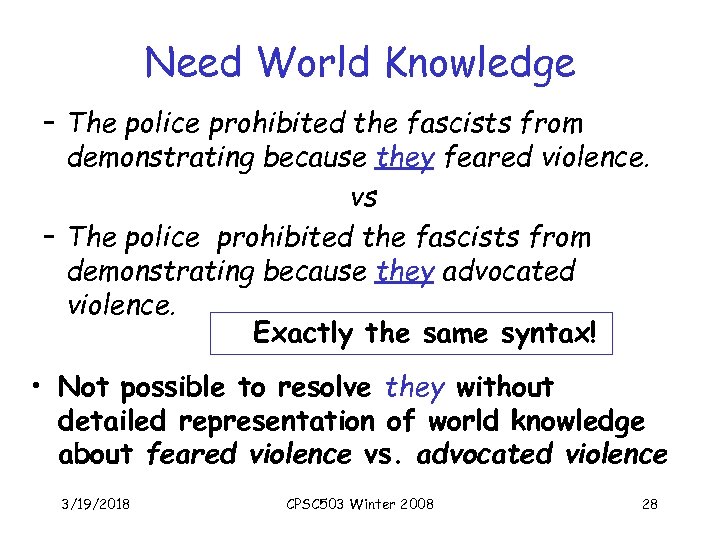

Need World Knowledge

- The police prohibited the fascists from demonstrating because they feared violence.
- vs. The police prohibited the fascists from demonstrating because they advocated violence.

Exactly the same syntax!

- Not possible to resolve “they” without a detailed representation of world knowledge about feared violence vs. advocated violence

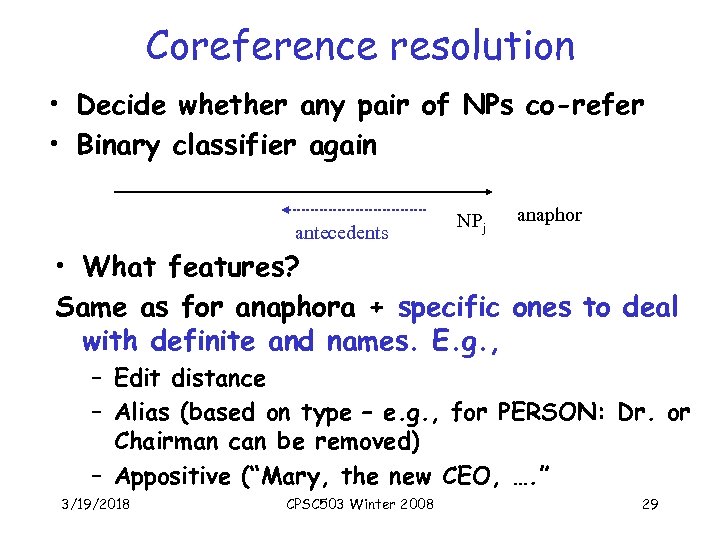

Coreference resolution

- Decide whether any pair of NPs co-refer
- Binary classifier again, applied to (antecedent, anaphor NPj) pairs
- What features? Same as for anaphora, plus specific ones to deal with definite NPs and names. E.g.,
  - Edit distance
  - Alias (based on type, e.g., for PERSON: Dr. or Chairman can be removed)
  - Appositive (“Mary, the new CEO, …”)
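Two of the name-oriented features listed above, edit distance and alias, can be sketched as a feature function over an NP pair. The title list, the function names, and the example strings are assumptions for illustration. The appositive feature needs syntactic information and is omitted.

```python
# Hypothetical list of titles that may be dropped when comparing PERSON names
TITLES = {"dr.", "dr", "mr.", "mr", "ms.", "ms", "chairman"}


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance (unit cost for insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = cur
    return prev[-1]


def strip_titles(name: str) -> str:
    return " ".join(tok for tok in name.split() if tok.lower() not in TITLES)


def pair_features(np_i: str, np_j: str) -> dict:
    """Features for one candidate (antecedent, anaphor) pair."""
    return {
        "edit_distance": edit_distance(np_i.lower(), np_j.lower()),
        "alias": strip_titles(np_i).lower() == strip_titles(np_j).lower(),
    }


feats = pair_features("Dr. Smith", "Smith")
```

A binary classifier would take such a dictionary (together with the anaphora features) for every NP pair and decide whether the pair co-refers.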

Today 27/10

- Brief Intro Pragmatics
- Discourse
  - Monologue
  - Dialog

Discourse: Dialog

- Most fundamental form of language use
- First kind we learn as children

Dialog can be seen as a sequence of communicative actions of different kinds (dialog acts) (DAMSL 1997; ~20)

Example:

- (i) A: So can you please come over here again right now [ACTION-DIRECTIVE]
- (ii) B: Well, I have to go to Edinburgh today sir [REJECT-PART]
- (iii) A: Hmm. How about this Thursday [ACTION-DIRECTIVE]
- (iv) B: OK [ACCEPT]

Dialog: two key tasks

- (1) Dialog act interpretation: identify the user dialog act
- (2) Dialog management: task (1), plus deciding what to say and when

Dialog Act Interpretation

- Which dialog act is a given utterance?
- Surface form is not sufficient! E.g., “I’m having problems with the homework”
  - Statement: prof. should make a note of this, perhaps make homework easier next year
  - Directive: prof. should help student with the homework
  - Information request: prof. should give student the solution

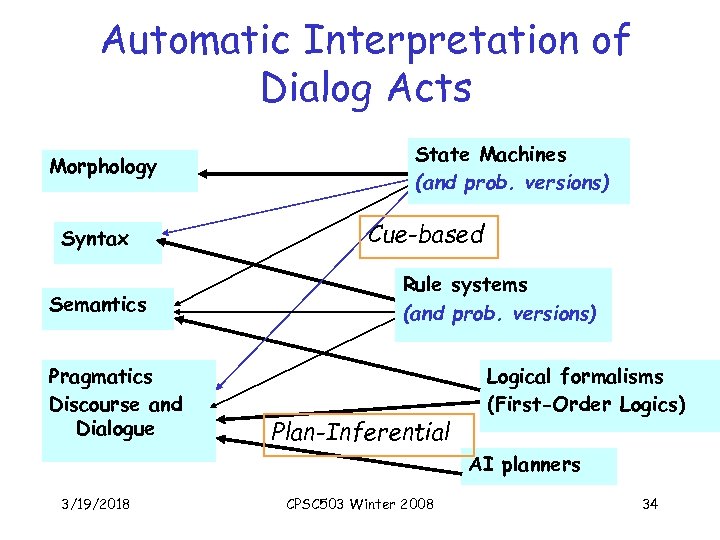

Automatic Interpretation of Dialog Acts

- Approaches: Cue-based, Plan-Inferential
- Levels of linguistic knowledge: Morphology, Syntax, Semantics, Pragmatics, Discourse and Dialogue
- Formalisms: State Machines (and prob. versions); Rule systems (and prob. versions); Logical formalisms (First-Order Logics); AI planners

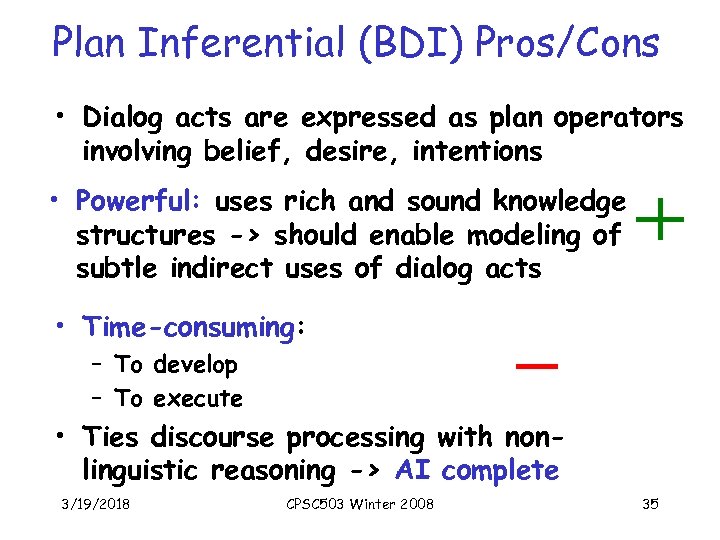

Plan Inferential (BDI) Pros/Cons

- Dialog acts are expressed as plan operators involving belief, desire, intentions
- Powerful: uses rich and sound knowledge structures -> should enable modeling of subtle indirect uses of dialog acts
- Time-consuming:
  - To develop
  - To execute
- Ties discourse processing with nonlinguistic reasoning -> AI-complete

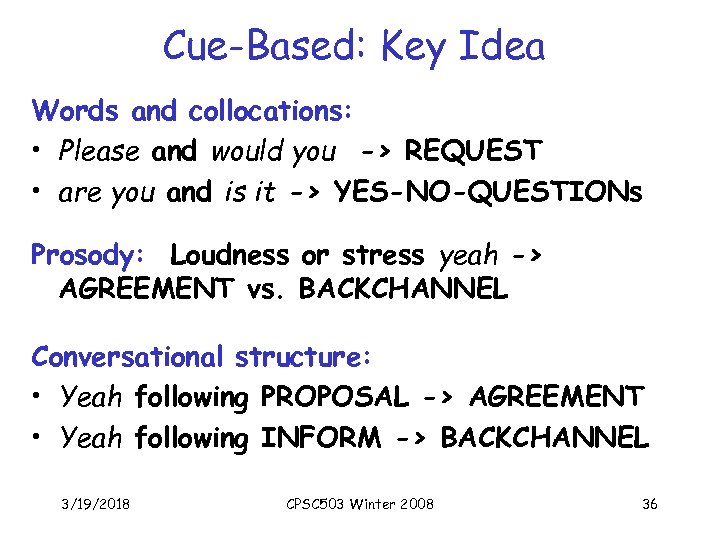

Cue-Based: Key Idea

Words and collocations:

- “Please” and “would you” -> REQUEST
- “are you” and “is it” -> YES-NO-QUESTIONs

Prosody:

- Loudness or stress of “yeah” -> AGREEMENT vs. BACKCHANNEL

Conversational structure:

- “Yeah” following PROPOSAL -> AGREEMENT
- “Yeah” following INFORM -> BACKCHANNEL

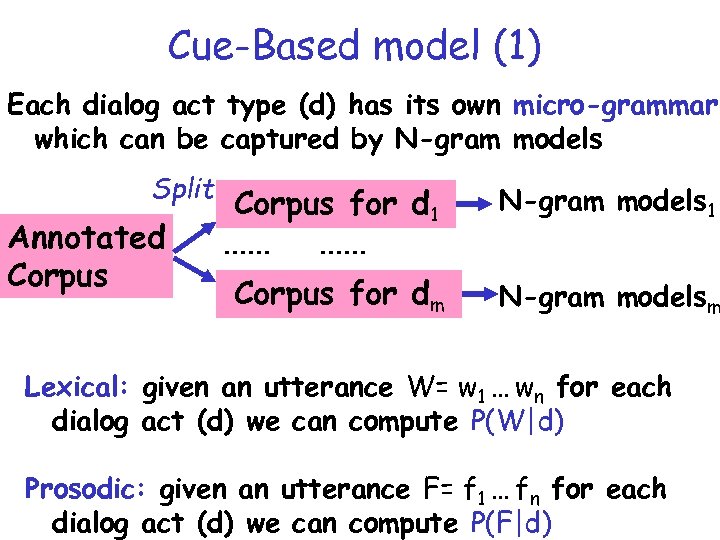

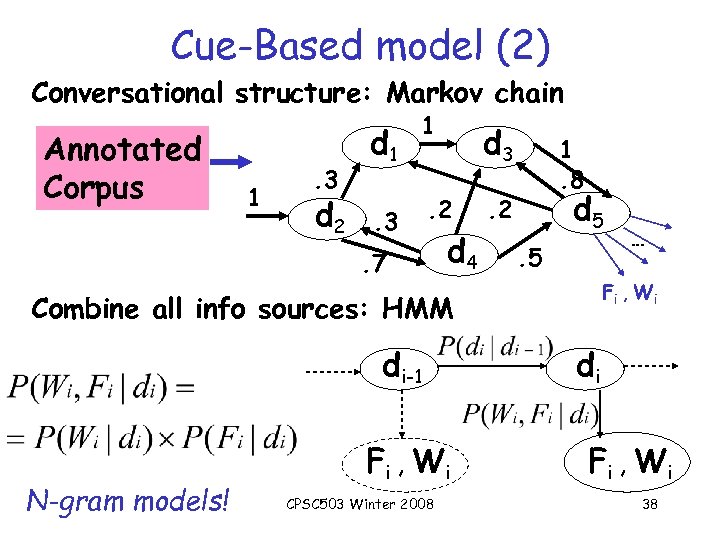

Cue-Based model (1)

Each dialog act type (d) has its own micro-grammar, which can be captured by N-gram models

- Split the annotated corpus by dialog act: corpus for d1, …, corpus for dm
- Train one set of N-gram models per corpus: N-gram models 1, …, N-gram models m

Lexical: given an utterance W = w1 … wn, for each dialog act (d) we can compute P(W|d)

Prosodic: given an utterance F = f1 … fn, for each dialog act (d) we can compute P(F|d)

Cue-Based model (2)

- Conversational structure: Markov chain over dialog acts (d1 … d5), with transition probabilities estimated from the annotated corpus
- Combine all info sources: HMM with the dialog acts (… di-1, di …) as hidden states, each emitting the observed Fi, Wi, scored with the N-gram models!
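One common way to write the combination in symbols is shown below. This is a sketch that assumes, as a simplification not stated on the slide, that the words W_i and the prosodic features F_i of utterance i are conditionally independent given its dialog act d_i, and that the dialog acts form a first-order Markov chain:

$$
D^{*} = \arg\max_{d_1 \dots d_N} \prod_{i=1}^{N} P(d_i \mid d_{i-1}) \, P(W_i \mid d_i) \, P(F_i \mid d_i)
$$

Here P(d_i | d_{i-1}) comes from the Markov chain over dialog acts, P(W_i | d_i) and P(F_i | d_i) come from the per-act N-gram models, and P(d_1 | d_0) stands for the initial distribution over dialog acts.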

Cue-Based model Summary

- Start from annotated corpus (each utterance labeled with appropriate dialog act)
- For each dialog act type (e.g., REQUEST), build lexical and phonological N-grams
- Build Markov chain for dialog acts (to express conversational structure)
- Combine Markov chain and N-grams into an HMM
- Now (sequences of sequences) … can be computed with …
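The combination of a dialog-act Markov chain with per-act word models can be sketched as a small HMM decoded with the Viterbi algorithm. All probabilities below are invented for illustration rather than estimated from a corpus. The lexical models are unigrams rather than higher-order N-grams, and prosodic features are left out.

```python
import math

ACTS = ["PROPOSAL", "INFORM", "AGREEMENT", "BACKCHANNEL"]

# Hypothetical P(word | act): one unigram "micro-grammar" per dialog act
WORD = {
    "PROPOSAL": {"let's": 0.3, "meet": 0.3, "thursday": 0.2},
    "INFORM": {"i": 0.3, "am": 0.2, "busy": 0.2, "thursday": 0.1},
    "AGREEMENT": {"yeah": 0.5, "ok": 0.4},
    "BACKCHANNEL": {"yeah": 0.5, "uh-huh": 0.4},
}
UNSEEN = 0.01  # floor probability for words a model has not seen

# Hypothetical Markov chain over dialog acts
START = {a: 0.25 for a in ACTS}
UNIFORM = {a: 0.25 for a in ACTS}
TRANS = {
    "PROPOSAL": {"PROPOSAL": 0.1, "INFORM": 0.1, "AGREEMENT": 0.7, "BACKCHANNEL": 0.1},
    "INFORM": {"PROPOSAL": 0.1, "INFORM": 0.1, "AGREEMENT": 0.1, "BACKCHANNEL": 0.7},
    "AGREEMENT": UNIFORM,
    "BACKCHANNEL": UNIFORM,
}


def log_lik(words, act):
    """log P(W | act) under the act's unigram model."""
    return sum(math.log(WORD[act].get(w, UNSEEN)) for w in words)


def viterbi(utterances):
    """Most probable dialog-act sequence for a list of tokenized utterances."""
    best = {a: (math.log(START[a]) + log_lik(utterances[0], a), [a]) for a in ACTS}
    for words in utterances[1:]:
        new = {}
        for a in ACTS:
            score, path = max((best[p][0] + math.log(TRANS[p][a]), best[p][1]) for p in ACTS)
            new[a] = (score + log_lik(words, a), path + [a])
        best = new
    return max(best.values())[1]


after_proposal = viterbi([["let's", "meet", "thursday"], ["yeah"]])
after_inform = viterbi([["i", "am", "busy", "thursday"], ["yeah"]])
```

In both dialogs the second utterance is the same word, and its word likelihood is identical under AGREEMENT and BACKCHANNEL. Only the transition probabilities separate the two readings, which is the conversational-structure cue from the key-idea slide.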

Dialog Managers in Conversational Agents

- Examples: airline travel info system, restaurant/movie guide, email access by phone
- Tasks
  - Control flow of dialogue (turn-taking)
  - What to say/ask and when

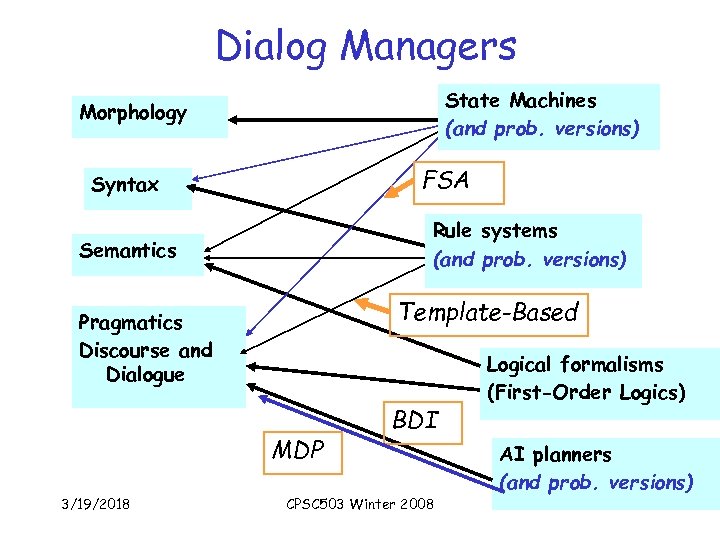

Dialog Managers

- Types of dialog manager: FSA, Template-Based, MDP, BDI
- Levels of linguistic knowledge: Morphology, Syntax, Semantics, Pragmatics, Discourse and Dialogue
- Formalisms: State Machines (and prob. versions); Rule systems (and prob. versions); Logical formalisms (First-Order Logics); AI planners (and prob. versions)

27/10: Probably stop here

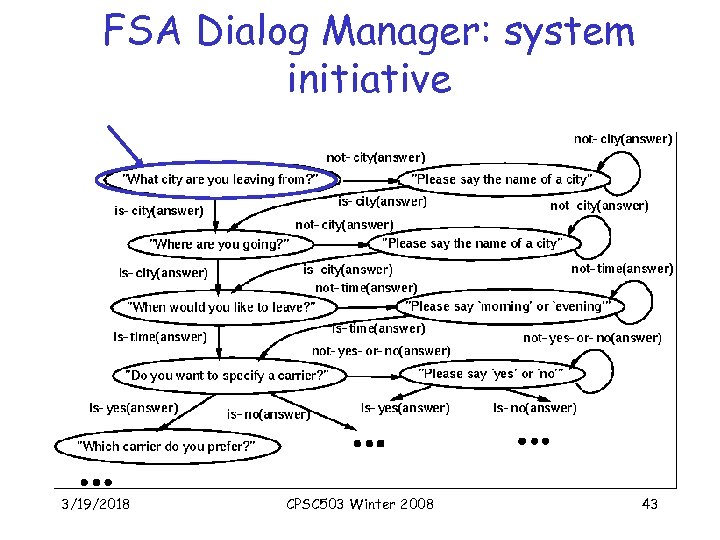

FSA Dialog Manager: system initiative

- xxx

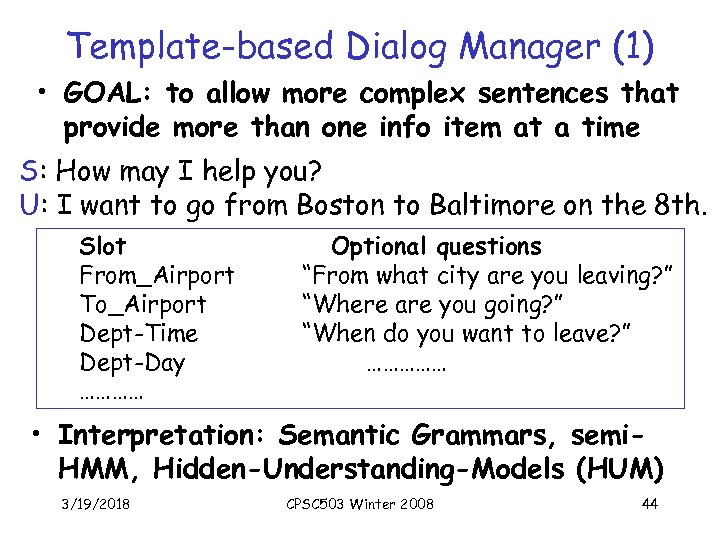

Template-based Dialog Manager (1)

- GOAL: to allow more complex sentences that provide more than one info item at a time
  - S: How may I help you?
  - U: I want to go from Boston to Baltimore on the 8th.

| Slot | Optional question |
| --- | --- |
| From_Airport | “From what city are you leaving?” |
| To_Airport | “Where are you going?” |
| Dept-Time | “When do you want to leave?” |
| Dept-Day | … |
| … | … |

- Interpretation: Semantic Grammars, semi-HMM, Hidden-Understanding-Models (HUM)
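A frame with slots and optional questions can be sketched as follows. The regular expressions stand in for the interpretation component (semantic grammar, semi-HMM, or HUM) and are an assumption made for the example, as is the question text for the day slot.

```python
import re

# Slot -> question asked only if the slot is still empty.
# The first two questions come from the slide; the Dept-Day question is a hypothetical placeholder.
QUESTIONS = {
    "From_Airport": "From what city are you leaving?",
    "To_Airport": "Where are you going?",
    "Dept-Day": "What day do you want to leave?",
}

# Toy patterns standing in for a real semantic grammar (city = capitalized word)
PATTERNS = {
    "From_Airport": re.compile(r"\bfrom ([A-Z][a-z]+)"),
    "To_Airport": re.compile(r"\bto ([A-Z][a-z]+)"),
    "Dept-Day": re.compile(r"\bon the (\d+(?:st|nd|rd|th))"),
}


def new_frame():
    return {slot: None for slot in QUESTIONS}


def update(frame, utterance):
    """Fill every empty slot for which the utterance provides a value."""
    for slot, pattern in PATTERNS.items():
        match = pattern.search(utterance)
        if match and frame[slot] is None:
            frame[slot] = match.group(1)
    return frame


def next_question(frame):
    """First question whose slot is still empty, or None when the frame is complete."""
    for slot, question in QUESTIONS.items():
        if frame[slot] is None:
            return question
    return None


full = update(new_frame(), "I want to go from Boston to Baltimore on the 8th.")
partial = update(new_frame(), "I want to go to Baltimore")
```

A single user turn can fill several slots at once, so the system skips any question whose slot is already filled. This is the difference from a system-initiative FSA that asks every question in a fixed order.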

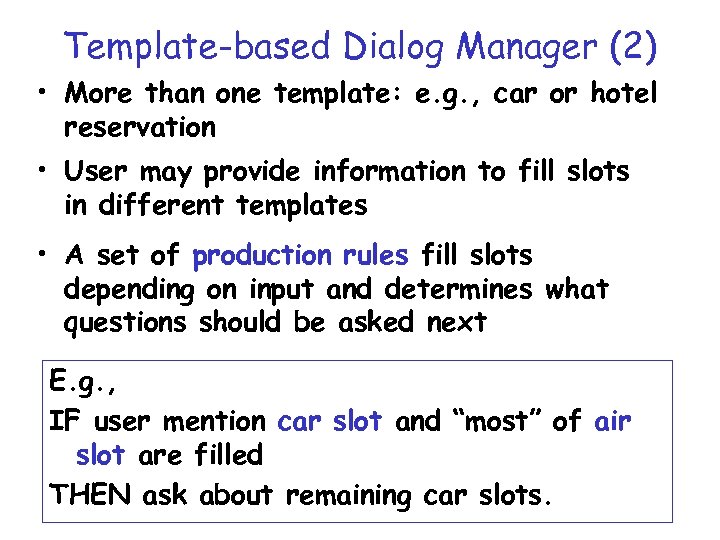

Template-based Dialog Manager (2)

- More than one template: e.g., car or hotel reservation
- User may provide information to fill slots in different templates
- A set of production rules fills slots depending on the input and determines what questions should be asked next
  - E.g., IF the user mentions a car slot and “most” of the air slots are filled THEN ask about the remaining car slots.
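The example rule can be sketched as a small function over two templates. The slot names and the reading of “most” as "more than half" are assumptions made for the illustration.

```python
def most_filled(template, fraction=0.5):
    """True if more than `fraction` of the template's slots have a value."""
    filled = sum(value is not None for value in template.values())
    return filled > fraction * len(template)


def empty_slots(template):
    return [slot for slot, value in template.items() if value is None]


def slots_to_ask(templates, mentioned_slot):
    """IF the user mentions a car slot and most air slots are filled
    THEN ask about the remaining car slots; otherwise keep working on the air template."""
    if mentioned_slot in templates["car"] and most_filled(templates["air"]):
        return empty_slots(templates["car"])
    return empty_slots(templates["air"])


# Hypothetical templates part-way through a dialog
templates = {
    "air": {"from": "Boston", "to": "Baltimore", "day": None},
    "car": {"pickup_city": "Baltimore", "car_type": None},
}
ask_now = slots_to_ask(templates, "pickup_city")

early = {
    "air": {"from": "Boston", "to": None, "day": None},
    "car": {"pickup_city": "Baltimore", "car_type": None},
}
ask_early = slots_to_ask(early, "pickup_city")
```

A full system would hold many such rules and apply them after every user turn.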

Markov Decision Processes [’02]

- Common formalism in AI to model an agent interacting with its environment
- States / Actions / Rewards
- Application to dialog:
  - States: slot in frame currently worked on, ASR confidence value, number of questions about slot, …
  - Actions: question types, confirmation types
  - Rewards: user feedback, task completion rate
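How a dialog policy falls out of states, actions, and rewards can be sketched with value iteration on a toy MDP for a single slot. Every state name, probability, and reward below is invented for illustration, and the discount factor is an assumption. Real systems estimate these quantities from dialog data.

```python
# MDP[state][action] = (expected immediate reward, {next_state: probability})
# States track the ASR confidence for one slot; all numbers are hypothetical.
MDP = {
    "unknown": {"ask": (-1.0, {"low_conf": 0.5, "high_conf": 0.5})},
    "low_conf": {
        "confirm": (-1.0, {"high_conf": 0.8, "unknown": 0.2}),
        "submit": (0.0, {"done": 1.0}),   # often wrong, so the expected task reward is 0
    },
    "high_conf": {
        "confirm": (-1.0, {"high_conf": 1.0}),
        "submit": (8.0, {"done": 1.0}),   # usually right
    },
    "done": {},  # terminal state: no actions
}


def q_value(state, action, values, gamma):
    reward, transitions = MDP[state][action]
    return reward + gamma * sum(p * values[s] for s, p in transitions.items())


def value_iteration(gamma=0.95, sweeps=200):
    """Return (state values, greedy policy) after a fixed number of sweeps."""
    values = {s: 0.0 for s in MDP}
    for _ in range(sweeps):
        values = {
            s: max((q_value(s, a, values, gamma) for a in MDP[s]), default=0.0)
            for s in MDP
        }
    policy = {
        s: max(MDP[s], key=lambda a: q_value(s, a, values, gamma))
        for s in MDP
        if MDP[s]
    }
    return values, policy


values, policy = value_iteration()
```

Under these numbers the resulting policy confirms a low-confidence value before submitting it and submits a high-confidence value directly: each extra question costs a little, while a wrong submission costs more.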

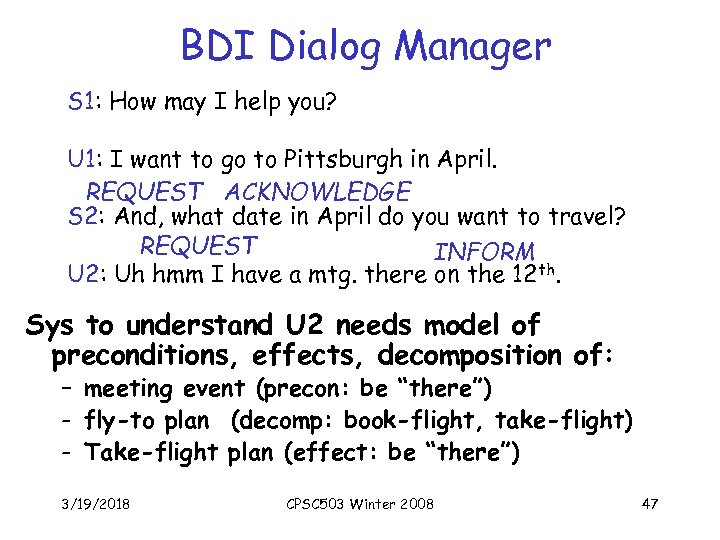

BDI Dialog Manager

- S1: How may I help you?
- U1: I want to go to Pittsburgh in April. [REQUEST]
- S2: And, what date in April do you want to travel? [ACKNOWLEDGE, REQUEST]
- U2: Uh hmm I have a mtg. there on the 12th. [INFORM]

To understand U2, the system needs a model of the preconditions, effects, and decomposition of:

- meeting event (precon: be “there”)
- fly-to plan (decomp: book-flight, take-flight)
- take-flight plan (effect: be “there”)

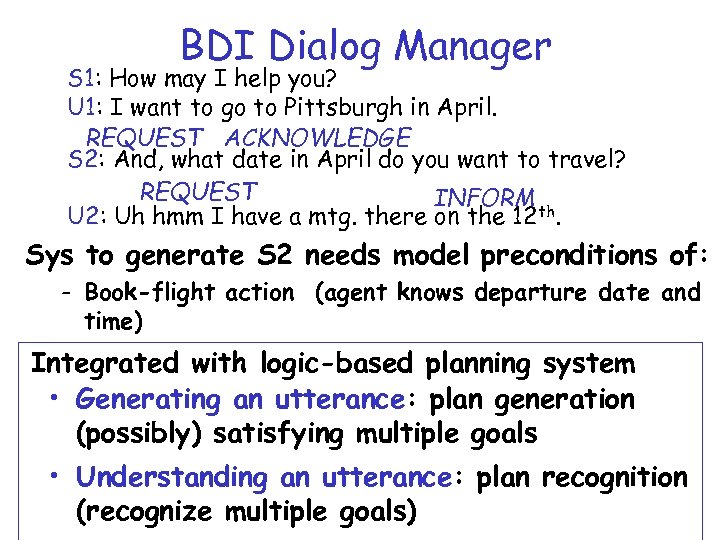

BDI Dialog Manager

- S1: How may I help you?
- U1: I want to go to Pittsburgh in April. [REQUEST]
- S2: And, what date in April do you want to travel? [ACKNOWLEDGE, REQUEST]
- U2: Uh hmm I have a mtg. there on the 12th. [INFORM]

To generate S2, the system needs a model of the preconditions of:

- book-flight action (agent knows departure date and time)

Integrated with logic-based planning system

- Generating an utterance: plan generation (possibly satisfying multiple goals)
- Understanding an utterance: plan recognition (recognize multiple goals)

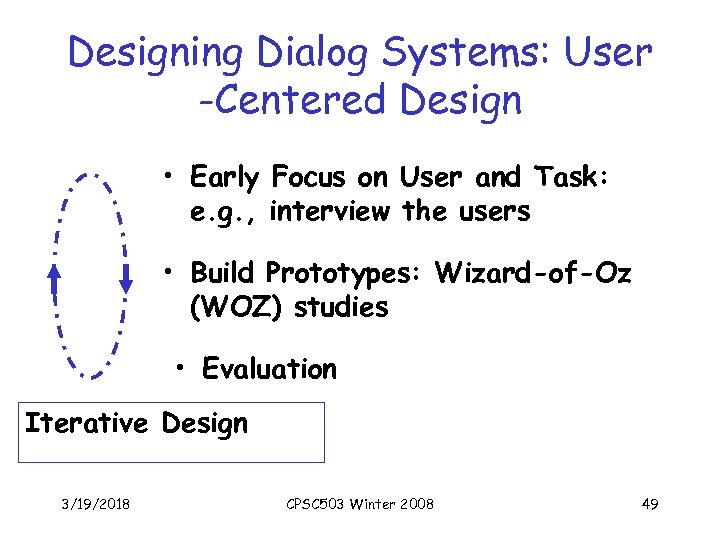

Designing Dialog Systems: User-Centered Design

- Early Focus on User and Task: e.g., interview the users
- Build Prototypes: Wizard-of-Oz (WOZ) studies
- Evaluation
- Iterative Design

Next Time: Natural Language Generation

- Read handout on NLG
- Lecture will be about an NLG system that I developed and tested