- Number of slides: 105

CPE 619
Workloads: Types, Selection, Characterization

Aleksandar Milenković
The LaCASA Laboratory
Electrical and Computer Engineering Department
The University of Alabama in Huntsville
http://www.ece.uah.edu/~milenka
http://www.ece.uah.edu/~lacasa

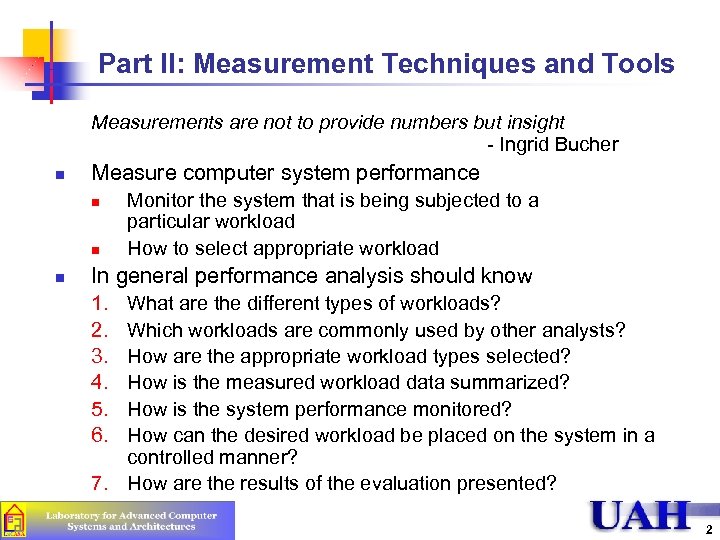

Part II: Measurement Techniques and Tools

"Measurements are not to provide numbers but insight" - Ingrid Bucher

- Measure computer system performance
  - Monitor the system that is being subjected to a particular workload
  - How to select an appropriate workload
- In general, a performance analyst should know
  1. What are the different types of workloads?
  2. Which workloads are commonly used by other analysts?
  3. How are the appropriate workload types selected?
  4. How is the measured workload data summarized?
  5. How is the system performance monitored?
  6. How can the desired workload be placed on the system in a controlled manner?
  7. How are the results of the evaluation presented?

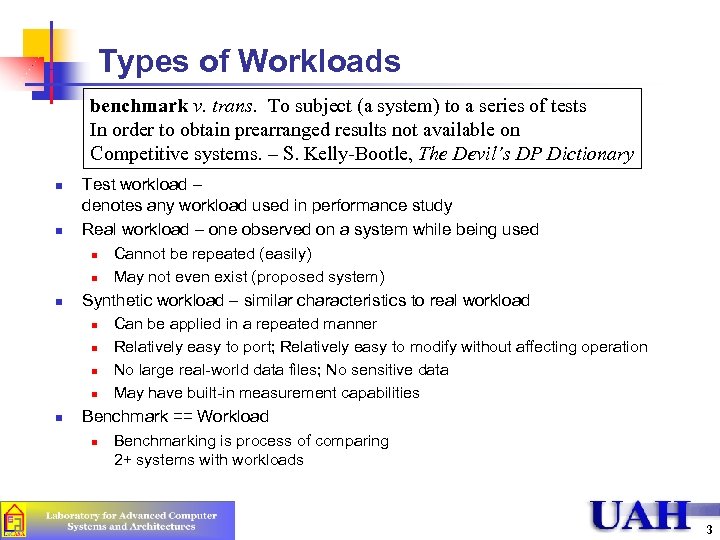

Types of Workloads

"benchmark v. trans. To subject (a system) to a series of tests in order to obtain prearranged results not available on competitive systems." - S. Kelly-Bootle, The Devil's DP Dictionary

- Test workload: any workload used in a performance study
- Real workload: one observed on a system while it is being used
  - Cannot be repeated (easily)
  - May not even exist (proposed system)
- Synthetic workload: similar characteristics to the real workload
  - Can be applied in a repeated manner
  - Relatively easy to port; relatively easy to modify without affecting operation
  - No large real-world data files; no sensitive data
  - May have built-in measurement capabilities
- Benchmark == Workload
  - Benchmarking is the process of comparing 2+ systems with workloads

Test Workloads for Computer Systems
- Addition instructions
- Instruction mixes
- Kernels
- Synthetic programs
- Application benchmarks

Addition Instructions
- Early computers had the CPU as the most expensive component
  - System performance == Processor performance
- CPUs supported few operations; the most frequent one was addition
  - A computer with a faster addition instruction performed better
- Run many addition operations as the test workload
- Problem
  - More operations, not only addition
  - Some are more complicated than others

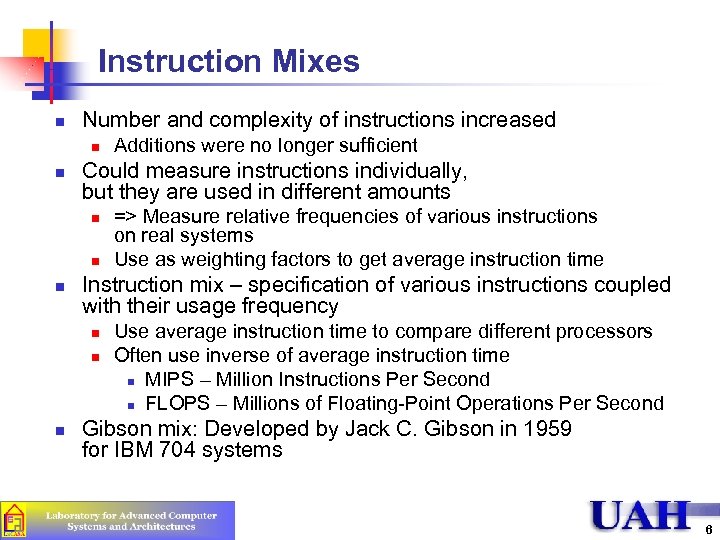

Instruction Mixes
- Number and complexity of instructions increased
  - Additions were no longer sufficient
- Could measure instructions individually, but they are used in different amounts
  - => Measure relative frequencies of various instructions on real systems
  - Use them as weighting factors to get the average instruction time
- Instruction mix: specification of various instructions coupled with their usage frequency
  - Use the average instruction time to compare different processors
  - Often use the inverse of the average instruction time
    - MIPS: Millions of Instructions Per Second
    - MFLOPS: Millions of Floating-Point Operations Per Second
- Gibson mix: developed by Jack C. Gibson in 1959 for IBM 704 systems
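
The weighting step can be sketched in a few lines of Python. The three instruction classes, their frequencies, and their execution times are hypothetical values chosen for illustration; they are not measurements from any real machine.

```python
def average_instruction_time(mix):
    """Weighted average instruction time.

    mix maps an instruction class to (relative frequency, time in microseconds).
    Frequencies are normalized, so percentages or fractions both work.
    """
    total_freq = sum(freq for freq, _ in mix.values())
    return sum(freq * t for freq, t in mix.values()) / total_freq


def mips(avg_time_us):
    """Inverse of the average instruction time, in millions of instructions per second."""
    return 1.0 / avg_time_us


# Hypothetical mix: (frequency in percent, execution time in microseconds)
example_mix = {
    "load_store": (50.0, 2.0),
    "add_sub": (30.0, 1.0),
    "branch": (20.0, 4.0),
}

avg = average_instruction_time(example_mix)
print(f"average instruction time: {avg:.2f} us, rate: {mips(avg):.3f} MIPS")
```

With these made-up numbers the average is 2.1 microseconds per instruction, so two processors could be compared by evaluating the same mix against each processor's own timing table.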

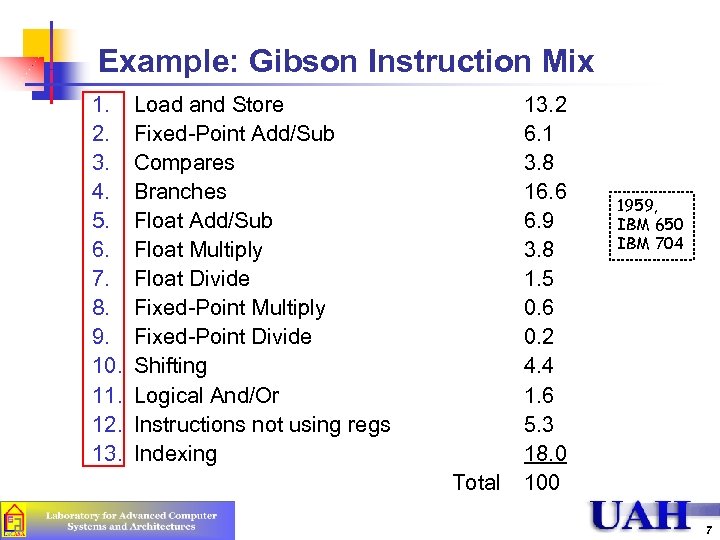

Example: Gibson Instruction Mix (1959; IBM 650, IBM 704)

| No. | Instruction class | Frequency (%) |
|---|---|---|
| 1 | Load and Store | 31.2 |
| 2 | Fixed-Point Add/Sub | 6.1 |
| 3 | Compares | 3.8 |
| 4 | Branches | 16.6 |
| 5 | Float Add/Sub | 6.9 |
| 6 | Float Multiply | 3.8 |
| 7 | Float Divide | 1.5 |
| 8 | Fixed-Point Multiply | 0.6 |
| 9 | Fixed-Point Divide | 0.2 |
| 10 | Shifting | 4.4 |
| 11 | Logical And/Or | 1.6 |
| 12 | Instructions not using registers | 5.3 |
| 13 | Indexing | 18.0 |
| | Total | 100.0 |

Problems with Instruction Mixes
- In modern systems, instruction time is variable, depending upon
  - Addressing modes, cache hit rates, pipelining
  - Interference with other devices during processor-memory access
  - Distribution of zeros in the multiplier
  - Number of times a conditional branch is taken
- Mixes do not reflect special hardware such as page table lookups
- Only represent the speed of the processor
  - Bottleneck may be in other parts of the system

Kernels
- Pipelining, caching, address translation, ... made computer instruction times highly variable
  - Cannot use individual instructions in isolation
  - Instead, use higher-level functions
- Kernel = the most frequent function (kernel = nucleus)
- Commonly used kernels: Sieve, Puzzle, Tree Searching, Ackermann's Function, Matrix Inversion, and Sorting
- Disadvantages
  - Do not make use of I/O devices
  - Ad-hoc selection of kernels (not based on real measurements)

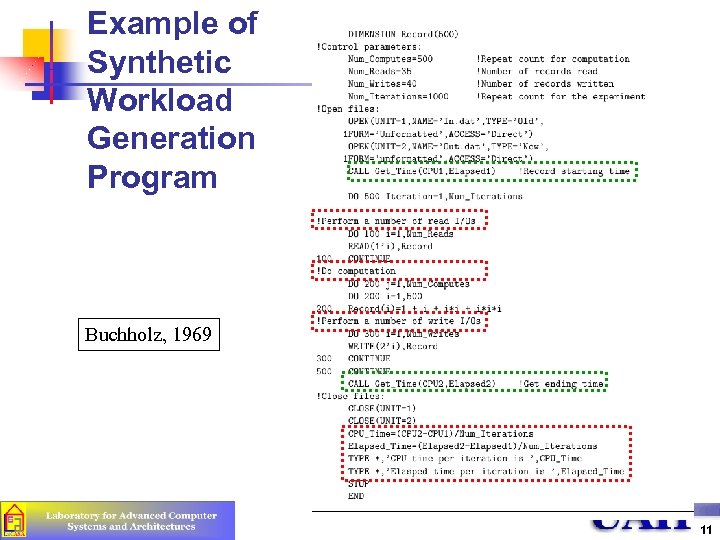

Synthetic Programs
- Proliferation in computer systems, OS emerged, changes in applications
  - No more processing-only apps, I/O became important too
- Use simple exerciser loops
  - Make a number of service calls or I/O requests
  - Compute average CPU time and elapsed time for each service call
  - Easy to port and distribute (Fortran, Pascal)
- First exerciser loop by Buchholz (1969)
  - Called it a synthetic program
- May have built-in measurement capabilities
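
An exerciser loop of this kind can be sketched as follows. This is a minimal illustration, not Buchholz's program: the choice of `os.write` on a temporary file as the service call, the function name `exerciser`, and the default parameters are all assumptions made for the example.

```python
import os
import tempfile
import time


def exerciser(n_calls=200, record_size=512):
    """Issue n_calls write requests and report average times per call.

    Returns (average CPU seconds per call, average elapsed seconds per call).
    """
    payload = b"x" * record_size
    with tempfile.TemporaryFile() as f:
        fd = f.fileno()
        wall_start = time.perf_counter()
        cpu_start = time.process_time()
        for _ in range(n_calls):
            os.write(fd, payload)  # one operating system service call per iteration
        cpu_total = time.process_time() - cpu_start
        wall_total = time.perf_counter() - wall_start
    return cpu_total / n_calls, wall_total / n_calls


cpu_per_call, elapsed_per_call = exerciser()
print(f"CPU time per call:     {cpu_per_call * 1e6:.2f} us")
print(f"elapsed time per call: {elapsed_per_call * 1e6:.2f} us")
```

The measurement is built into the loop itself, which is what makes such programs easy to hand to different vendors and rerun unchanged.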

Example of Synthetic Workload Generation Program (Buchholz, 1969)

Synthetic Programs
- Advantages
  - Quickly developed and given to different vendors
  - No real data files
  - Easily modified and ported to different systems
  - Have built-in measurement capabilities
  - Measurement process is automated
  - Repeated easily on successive versions of the operating systems
- Disadvantages
  - Too small
  - Do not make representative memory or disk references
  - Mechanisms for page faults and disk cache may not be adequately exercised
  - CPU-I/O overlap may not be representative
  - Not suitable for multi-user environments because loops may create synchronizations, which may result in better or worse performance

Application Workloads
- For special-purpose systems, may be able to run representative applications as a measure of performance
  - E.g.: airline reservation
  - E.g.: banking
- Make use of the entire system (I/O, etc.)
- Issues may be
  - Input parameters
  - Multiuser
- Only applicable when specific applications are targeted
  - For a particular industry: Debit-Credit for banks

Benchmarks
- Benchmark = workload
  - Kernels, synthetic programs, and application-level workloads are all called benchmarks
  - Instruction mixes are not called benchmarks
  - Some authors try to restrict the term benchmark to a set of programs taken from real workloads
- Benchmarking is the process of comparing the performance of two or more systems by measurements
- Workloads used in the measurements are called benchmarks

Popular Benchmarks
- Sieve
- Ackermann's Function
- Whetstone
- Linpack
- Dhrystone
- Lawrence Livermore Loops
- SPEC
- Debit-Credit Benchmark
- TPC
- EEMBC

Sieve (1 of 2)
- Sieve of Eratosthenes (finds primes)
- Write down all numbers 1 to n
- Strike out multiples of k for k = 2, 3, 5 ... sqrt(n)
  - In steps of remaining numbers
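
The procedure above can be sketched in Python as below. The function name `sieve` is an assumption for the example, and starting each strike-out at k*k rather than 2k is a common optimization that the slide does not mention.

```python
def sieve(n):
    """Return all primes <= n using the Sieve of Eratosthenes."""
    if n < 2:
        return []
    is_prime = [True] * (n + 1)
    is_prime[0] = is_prime[1] = False
    k = 2
    while k * k <= n:  # only k up to sqrt(n) need to be considered
        if is_prime[k]:  # k survived, so it is prime: strike out its multiples
            for multiple in range(k * k, n + 1, k):
                is_prime[multiple] = False
        k += 1
    return [i for i, flag in enumerate(is_prime) if flag]


print(sieve(30))  # [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
```

As a benchmark kernel, the run time is dominated by the inner strike-out loop, which exercises integer arithmetic and array indexing but no I/O.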

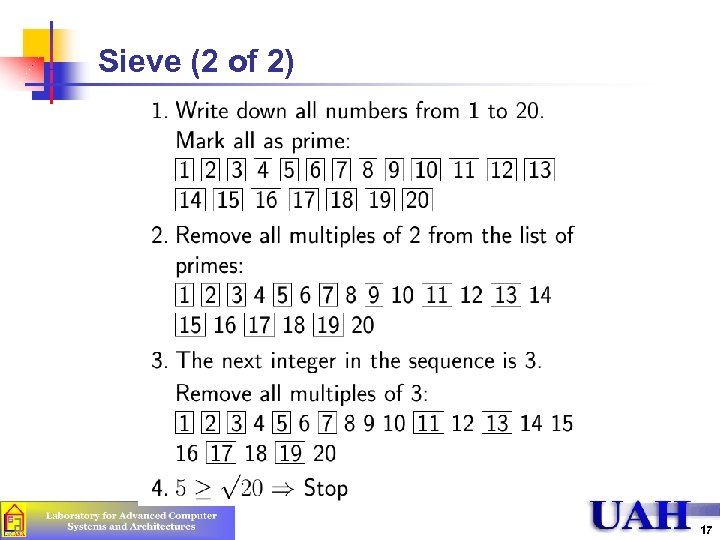

Sieve (2 of 2)

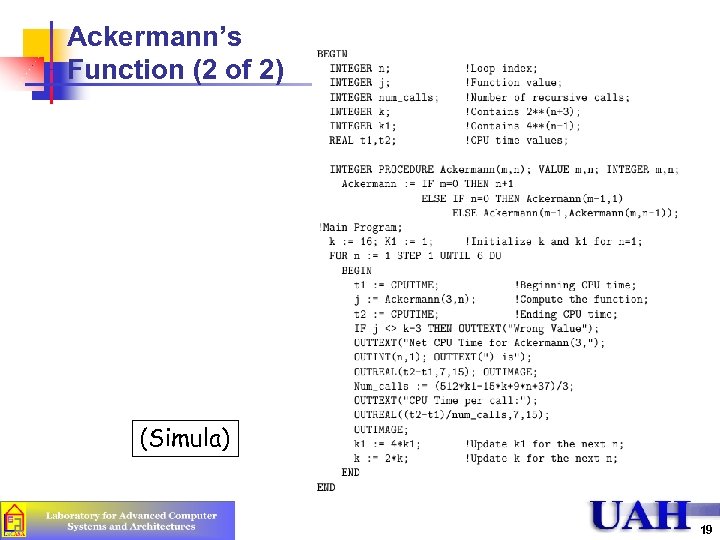

Ackermann's Function (1 of 2)
- Assess efficiency of procedure calling mechanisms
- Ackermann's Function has two parameters, and it is defined recursively
- Benchmark is to call Ackermann(3, n) for values of n = 1 to 6
- Average execution time per call, the number of instructions executed, and the amount of stack space required for each call are used to compare various systems
- Return value is $2^{n+3} - 3$, which can be used to verify the implementation
- Number of calls: $(512 \times 4^{n-1} - 15 \times 2^{n+3} + 9n + 37)/3$
  - Can be used to compute time per call
- Depth is $2^{n+3} - 4$; stack space doubles when n is incremented
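
The return-value and call-count formulas can be checked with a short Python sketch. The slide does not spell out the recursive definition, so the standard two-parameter form is assumed here; the helper names `count_ackermann` and `expected_calls` are made up for the example, and only n = 1 to 4 is run to keep it quick.

```python
import sys

sys.setrecursionlimit(10000)  # the recursion gets deep even for small n


def count_ackermann(m, n):
    """Evaluate Ackermann(m, n); return (value, number of calls made)."""
    calls = 0

    def ack(m, n):
        nonlocal calls
        calls += 1
        if m == 0:
            return n + 1
        if n == 0:
            return ack(m - 1, 1)
        return ack(m - 1, ack(m, n - 1))

    value = ack(m, n)
    return value, calls


def expected_calls(n):
    """Closed form for the number of calls made by Ackermann(3, n)."""
    return (512 * 4 ** (n - 1) - 15 * 2 ** (n + 3) + 9 * n + 37) // 3


for n in range(1, 5):
    value, calls = count_ackermann(3, n)
    assert value == 2 ** (n + 3) - 3
    assert calls == expected_calls(n)
    print(f"n={n}: value={value}, calls={calls}")
```

Dividing a measured run time by the call count gives the time per call that the benchmark reports.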

Ackermann's Function (2 of 2) (Simula)

Whetstone
- Set of 11 modules designed to match observed frequencies in ALGOL programs
  - Array addressing, arithmetic, subroutine calls, parameter passing
  - Ported to Fortran, most popular in C, ...
- Many variations of Whetstone, so take care when comparing results
- Problems: specific kernel
  - Only valid for small, scientific (floating-point) apps that fit in cache
  - Does not exercise I/O

LINPACK
- Developed by Jack Dongarra (1983) at ANL
- Programs that solve dense systems of linear equations
  - Many float adds and multiplies
  - Core is Basic Linear Algebra Subprograms (BLAS), called repeatedly
  - Usually, solve a 100 x 100 system of equations
- Represents mechanical engineering applications on workstations
  - Drafting to finite element analysis
  - High computation speed and good graphics processing
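
To make the workload concrete, here is a rough LINPACK-style sketch in pure Python. It is not the LINPACK code: the solver is a plain Gaussian elimination with partial pivoting written for this example, the function names are hypothetical, and the operation count 2n^3/3 + 2n^2 is the figure conventionally used to report LINPACK rates, which the slide itself does not state.

```python
import random
import time


def solve_dense(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    a = [row[:] for row in a]  # work on copies so the inputs are not modified
    b = b[:]
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(a[i][k]))  # pivot row
        a[k], a[p] = a[p], a[k]
        b[k], b[p] = b[p], b[k]
        for i in range(k + 1, n):
            factor = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= factor * a[k][j]
            b[i] -= factor * b[k]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / a[i][i]
    return x


def linpack_style_mflops(n=100, seed=1):
    """Time one n x n solve; return (MFLOPS estimate, solution vector)."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [sum(row) for row in a]  # chosen so the exact solution is all ones
    start = time.perf_counter()
    x = solve_dense(a, b)
    elapsed = time.perf_counter() - start
    flops = (2.0 / 3.0) * n ** 3 + 2.0 * n ** 2  # assumed conventional count
    return flops / elapsed / 1e6, x


rate, x = linpack_style_mflops()
print(f"approx. {rate:.2f} MFLOPS for a 100 x 100 system")
```

Because the right-hand side is built from row sums, the computed solution should be close to a vector of ones, which gives a simple correctness check alongside the timing.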

Dhrystone
- Pun on Whetstone
- Intent to represent systems programming environments
- Most common was in C, but many versions
- Low nesting depth and few instructions in each call
- Large amount of time copying strings
- Mostly integer performance with no float operations

Lawrence Livermore Loops
- 24 vectorizable, scientific tests
- Floating-point operations
  - Physics and chemistry apps spend about 40-60% of execution time performing floating-point operations
- Relevant for: fluid dynamics, airplane design, weather modeling

SPEC
- Systems Performance Evaluation Cooperative (SPEC) (http://www.spec.org)
  - Non-profit, founded in 1988 by leading HW and SW vendors
- Aim: ensure that the marketplace has a fair and useful set of metrics to differentiate candidate systems
- Product: "fair, impartial and meaningful benchmarks for computers"
  - Initially, focus on CPUs: SPEC89, SPEC92, SPEC95, SPEC CPU2000, SPEC CPU2006
  - Now, many suites are available
  - Results are published on the SPEC web site

SPEC (cont'd)
- Benchmarks aim to test "real-life" situations
  - E.g., SPECweb2005 tests web server performance by performing various types of parallel HTTP requests
  - E.g., SPEC CPU tests CPU performance by measuring the run time of several programs such as the compiler gcc and the chess program crafty
- SPEC benchmarks are written in a platform-neutral programming language (usually C or Fortran); interested parties may compile the code with whatever compiler they prefer for their platform, but may not change the code
  - Manufacturers have been known to optimize their compilers to improve performance on the various SPEC benchmarks

SPEC Benchmark Suites (Current)
- SPEC CPU2006: combined performance of CPU, memory and compiler
  - CINT2006 ("SPECint"): testing integer arithmetic, with programs such as compilers, interpreters, word processors, chess programs, etc.
  - CFP2006 ("SPECfp"): testing floating-point performance, with physical simulations, 3D graphics, image processing, computational chemistry, etc.
- SPECjms2007: Java Message Service performance
- SPECweb2005: PHP and/or JSP performance
- SPECviewperf: performance of an OpenGL 3D graphics system, tested with various rendering tasks from real applications
- SPECapc: performance of several popular 3D-intensive applications on a given system
- SPEC OMP V3.1: for evaluating performance of parallel systems using OpenMP (http://www.openmp.org) applications
- SPEC MPI2007: for evaluating performance of parallel systems using MPI (Message Passing Interface) applications
- SPECjvm98: performance of a Java client system running a Java virtual machine
- SPECjAppServer2004: a multi-tier benchmark for measuring the performance of Java 2 Enterprise Edition (J2EE) technology-based application servers
- SPECjbb2005: evaluates the performance of server-side Java by emulating a three-tier client/server system (with emphasis on the middle tier)
- SPEC MAIL2001: performance of a mail server, testing SMTP and POP protocols
- SPECpower_2008: evaluates the energy efficiency of server systems
- SPEC SFS97_R1: NFS file server throughput and response time

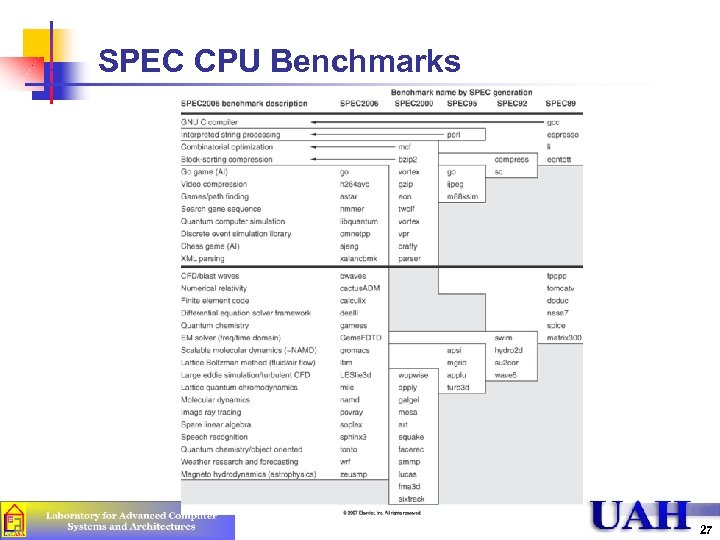

SPEC CPU Benchmarks

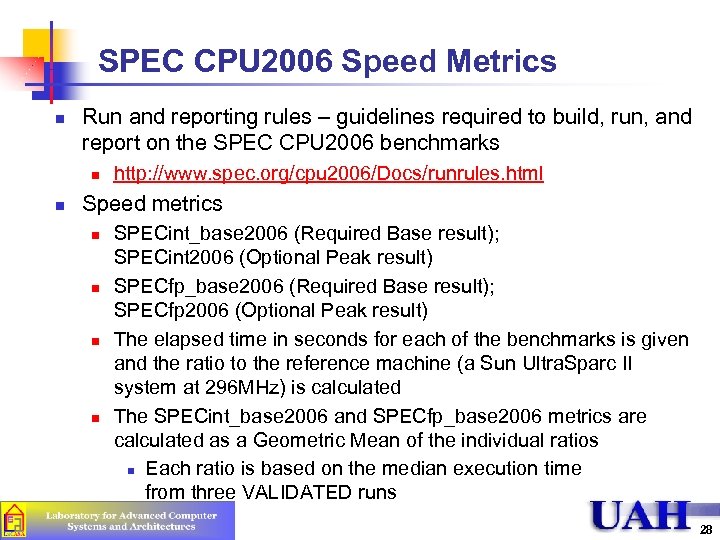

SPEC CPU2006 Speed Metrics
- Run and reporting rules: guidelines required to build, run, and report on the SPEC CPU2006 benchmarks
  - http://www.spec.org/cpu2006/Docs/runrules.html
- Speed metrics
  - SPECint_base2006 (required base result); SPECint2006 (optional peak result)
  - SPECfp_base2006 (required base result); SPECfp2006 (optional peak result)
- The elapsed time in seconds for each of the benchmarks is given, and the ratio to the reference machine (a Sun UltraSPARC II system at 296 MHz) is calculated
- The SPECint_base2006 and SPECfp_base2006 metrics are calculated as a geometric mean of the individual ratios
  - Each ratio is based on the median execution time from three validated runs
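
The calculation described above can be sketched as follows. The benchmark names, reference times, and measured times are invented for illustration and do not come from any SPEC publication; the helper names are likewise assumptions.

```python
import math
import statistics


def spec_ratio(reference_seconds, run_seconds):
    """Ratio of the reference time to the median of the measured run times."""
    return reference_seconds / statistics.median(run_seconds)


def geometric_mean(values):
    """Geometric mean computed through logarithms."""
    values = list(values)
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Hypothetical data: name -> (reference time in s, three measured times in s)
results = {
    "bench_a": (1000.0, [510.0, 500.0, 495.0]),
    "bench_b": (4000.0, [1000.0, 990.0, 1015.0]),
}

ratios = [spec_ratio(ref, runs) for ref, runs in results.values()]
print(f"ratios: {ratios}, overall metric: {geometric_mean(ratios):.3f}")
```

Here the ratios are 2.0 and 4.0, and their geometric mean is about 2.828. A geometric mean keeps the ranking of two systems independent of which machine is chosen as the reference.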

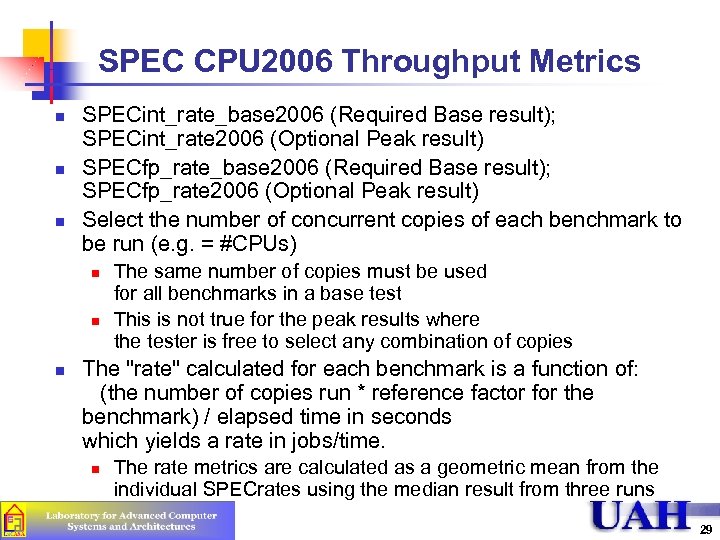

SPEC CPU2006 Throughput Metrics
- SPECint_rate_base2006 (required base result); SPECint_rate2006 (optional peak result)
- SPECfp_rate_base2006 (required base result); SPECfp_rate2006 (optional peak result)
- Select the number of concurrent copies of each benchmark to be run (e.g., = #CPUs)
  - The same number of copies must be used for all benchmarks in a base test
  - This is not true for the peak results, where the tester is free to select any combination of copies
- The "rate" calculated for each benchmark is a function of: (the number of copies run * reference factor for the benchmark) / elapsed time in seconds, which yields a rate in jobs/time
- The rate metrics are calculated as a geometric mean from the individual SPECrates, using the median result from three runs
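
A small sketch of the rate calculation, under stated assumptions: the reference factors and elapsed times are made-up numbers, the function names are hypothetical, and taking the median over the three per-run rates is one reading of "the median result from three runs".

```python
import math
import statistics


def spec_rate(copies, reference_factor, elapsed_seconds):
    """Rate for one run: (copies * reference factor) / elapsed time."""
    return copies * reference_factor / elapsed_seconds


def rate_metric(copies, benchmarks):
    """Geometric mean of the per-benchmark median rates.

    benchmarks maps a name to (reference factor, list of elapsed times in s).
    """
    medians = [
        statistics.median(spec_rate(copies, factor, t) for t in times)
        for factor, times in benchmarks.values()
    ]
    return math.prod(medians) ** (1.0 / len(medians))


# Hypothetical base test: 4 copies of every benchmark, three runs each
benchmarks = {
    "bench_a": (1000.0, [800.0, 810.0, 790.0]),
    "bench_b": (4000.0, [800.0, 805.0, 795.0]),
}

print(f"rate metric: {rate_metric(4, benchmarks):.2f}")
```

With these numbers the median rates are 5.0 and 20.0, giving a metric of 10.0. Passing one `copies` value for all benchmarks mirrors the base-test rule; a peak result would need a per-benchmark copy count instead.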

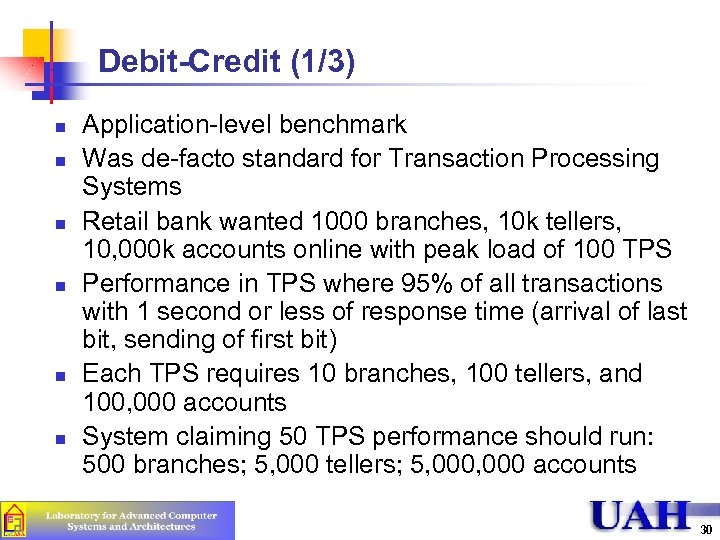

Debit-Credit (1/3)
- Application-level benchmark
- Was the de facto standard for Transaction Processing Systems
- Retail bank wanted 1,000 branches, 10,000 tellers, and 10,000,000 accounts online with a peak load of 100 TPS
- Performance in TPS, where 95% of all transactions have a response time of 1 second or less (from arrival of last bit to sending of first bit)
- Each TPS requires 10 branches, 100 tellers, and 100,000 accounts
- A system claiming 50 TPS performance should run: 500 branches; 5,000 tellers; 5,000,000 accounts
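
The scaling rule is simple arithmetic; the sketch below applies the per-TPS requirements stated on the slide. The function name `debit_credit_scale` is made up for the example.

```python
def debit_credit_scale(claimed_tps):
    """Database size required to claim a given throughput.

    Each TPS requires 10 branches, 100 tellers, and 100,000 accounts.
    """
    return {
        "branches": 10 * claimed_tps,
        "tellers": 100 * claimed_tps,
        "accounts": 100_000 * claimed_tps,
    }


print(debit_credit_scale(50))
# {'branches': 500, 'tellers': 5000, 'accounts': 5000000}
```

At 100 TPS the same rule reproduces the original bank requirement of 1,000 branches, 10,000 tellers, and 10,000,000 accounts.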

Debit-Credit (2/3)

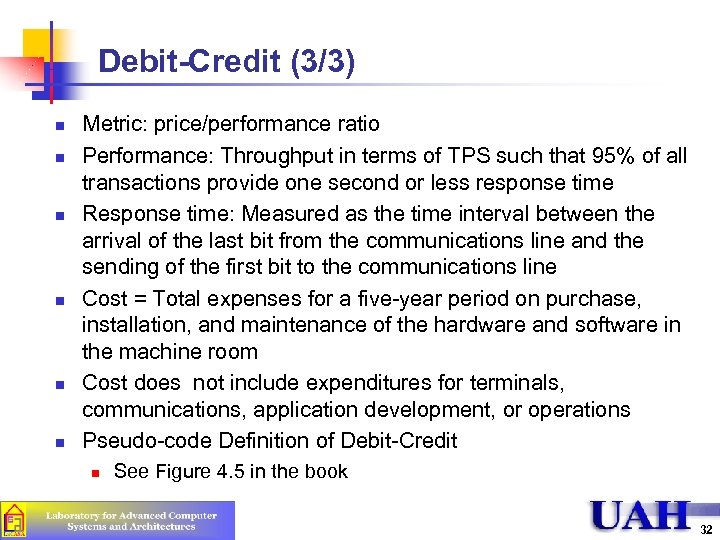

Debit-Credit (3/3)
- Metric: price/performance ratio
- Performance: throughput in terms of TPS such that 95% of all transactions provide one second or less response time
- Response time: measured as the time interval between the arrival of the last bit from the communications line and the sending of the first bit to the communications line
- Cost = total expenses for a five-year period on purchase, installation, and maintenance of the hardware and software in the machine room
- Cost does not include expenditures for terminals, communications, application development, or operations
- Pseudo-code definition of Debit-Credit
  - See Figure 4.5 in the book
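
One way to turn these definitions into a calculation is sketched below. The response-time samples, the cost figure, and the function names are hypothetical, and picking the highest measured load that still satisfies the 95% rule is an assumption about how the rated throughput would be determined from a set of runs.

```python
def meets_response_requirement(response_times, limit=1.0, fraction=0.95):
    """True if at least `fraction` of the response times are <= `limit` seconds."""
    within = sum(1 for t in response_times if t <= limit)
    return within / len(response_times) >= fraction


def price_performance(five_year_cost, measured_runs):
    """Return (rated TPS, cost per TPS).

    measured_runs maps an offered load in TPS to the response times observed.
    The rated TPS is the highest load that meets the response requirement.
    """
    qualifying = [tps for tps, times in measured_runs.items()
                  if meets_response_requirement(times)]
    if not qualifying:
        raise ValueError("no run meets the response time requirement")
    rated_tps = max(qualifying)
    return rated_tps, five_year_cost / rated_tps


# Hypothetical measurements: offered load in TPS -> response times in seconds
runs = {
    40: [0.4] * 20,
    50: [0.6] * 19 + [1.5],      # 95% within one second: qualifies
    60: [0.9] * 17 + [1.4] * 3,  # only 85% within one second: fails
}

print(price_performance(1_000_000, runs))  # (50, 20000.0)
```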

TPC
- Transaction Processing Performance Council (TPC)
  - Mission: create realistic and fair benchmarks for TP
  - For more info: http://www.tpc.org
- Benchmark types
  - TPC-A (1985)
  - TPC-C (1992): complex query environment
  - TPC-H: models ad-hoc decision support (unrelated queries, no local history to optimize future queries)
  - TPC-W: transactional Web benchmark (simulates the activities of a business-oriented transactional Web server)
  - TPC-App: application server and Web services benchmark (simulates activities of a B2B transactional application server operating 24/7)
- Metric: transactions per second, with response time also included (throughput is measured only when response time requirements are met)

EEMBC
- Embedded Microprocessor Benchmark Consortium (EEMBC, pronounced "embassy")
  - Non-profit consortium supported by member dues and license fees
  - Real-world benchmark software helps designers select the right embedded processors for their systems
  - Standard benchmarks and methodology ensure fair and reasonable comparisons
  - EEMBC Technology Center manages development of new benchmark software and certifies benchmark test results
  - For more info: http://www.eembc.com/
- 41 kernels used in different embedded applications
  - Automotive/Industrial
  - Consumer
  - Digital Entertainment
  - Java
  - Networking
  - Office Automation
  - Telecommunications

The Art of Workload Selection

The Art of Workload Selection
- Workload is the most crucial part of any performance evaluation
  - An inappropriate workload will result in misleading conclusions
- Major considerations in workload selection
  - Services exercised by the workload
  - Level of detail
  - Representativeness
  - Timeliness

Services Exercised
- SUT = System Under Test
- CUS = Component Under Study

Services Exercised (cont'd)
- Do not confuse SUT with CUS
- Metrics depend upon SUT: MIPS is OK for two CPUs but not for two timesharing systems
- Workload: depends upon the system
- Examples:
  - CPU: instructions
  - System: transactions
  - Transactions are not good for CPU and vice versa
  - Two systems identical except for CPU
    - Comparing systems: use transactions
    - Comparing CPUs: use instructions
- Multiple services: exercise as complete a set of services as possible

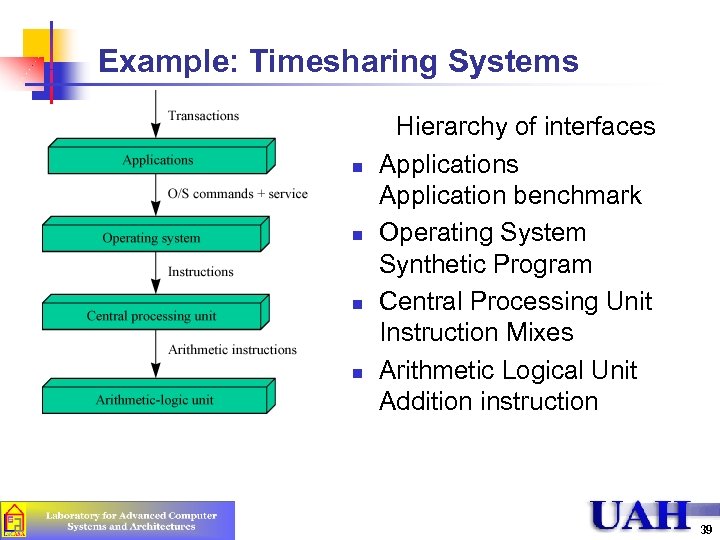

Example: Timesharing Systems
- Hierarchy of interfaces, each with its matching workload
  - Application: application benchmark
  - Operating system: synthetic program
  - Central processing unit: instruction mixes
  - Arithmetic logic unit: addition instruction

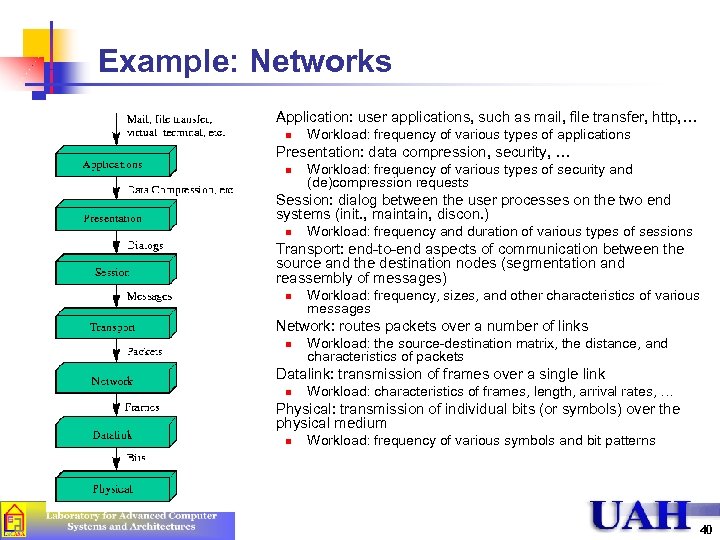

Example: Networks
- Application: user applications, such as mail, file transfer, http, …
  - Workload: frequency of various types of applications
- Presentation: data compression, security, …
  - Workload: frequency of various types of security and (de)compression requests
- Session: dialog between the user processes on the two end systems (initiate, maintain, disconnect)
  - Workload: frequency and duration of various types of sessions
- Transport: end-to-end aspects of communication between the source and the destination nodes (segmentation and reassembly of messages)
  - Workload: frequency, sizes, and other characteristics of various messages
- Network: routes packets over a number of links
  - Workload: the source-destination matrix, the distance, and characteristics of packets
- Datalink: transmission of frames over a single link
  - Workload: characteristics of frames, length, arrival rates, …
- Physical: transmission of individual bits (or symbols) over the physical medium
  - Workload: frequency of various symbols and bit patterns

Example: Magnetic Tape Backup System
- Backup System
  - Services: backup files, backup changed files, restore files, list backed-up files
  - Factors: file-system size, batch or background process, incremental or full backups
  - Metrics: backup time, restore time
  - Workload: a computer system with files to be backed up; vary the frequency of backups
- Tape Data System
  - Services: read/write to the tape, read tape label, auto load tapes
  - Factors: type of tape drive
  - Metrics: speed, reliability, time between failures
  - Workload: a synthetic program generating representative tape I/O requests

Magnetic Tape System (cont’d)
- Tape Drives
  - Services: read record, write record, rewind, find record, move to end of tape, move to beginning of tape
  - Factors: cartridge or reel tapes, drive size
  - Metrics: time for each type of service (for example, time to read a record and to write a record), speed (requests/time), noise, power dissipation
  - Workload: a synthetic program exerciser generating various types of requests in a representative manner
- Read/Write Subsystem
  - Services: read data, write data (as digital signals)
  - Factors: data-encoding technique, implementation technology (CMOS, TTL, and so forth)
  - Metrics: coding density, I/O bandwidth (bits per second)
  - Workload: read/write data streams with varying patterns of bits

Magnetic Tape System (cont’d)
- Read/Write Heads
  - Services: read signal, write signal (electrical signals)
  - Factors: composition, inter-head spacing, gap sizing, number of heads in parallel
  - Metrics: magnetic field strength, hysteresis
  - Workload: read/write currents of various amplitudes, tapes moving at various speeds

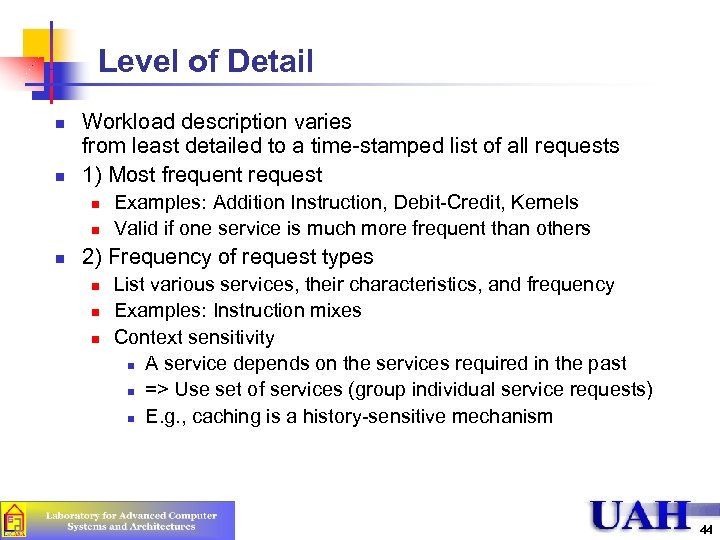

Level of Detail
- Workload description varies from least detailed to a time-stamped list of all requests
- 1) Most frequent request
  - Examples: addition instruction, debit-credit, kernels
  - Valid if one service is much more frequent than others
- 2) Frequency of request types
  - List various services, their characteristics, and frequency
  - Examples: instruction mixes
  - Context sensitivity
    - A service depends on the services required in the past
    - => Use a set of services (group individual service requests)
    - E.g., caching is a history-sensitive mechanism

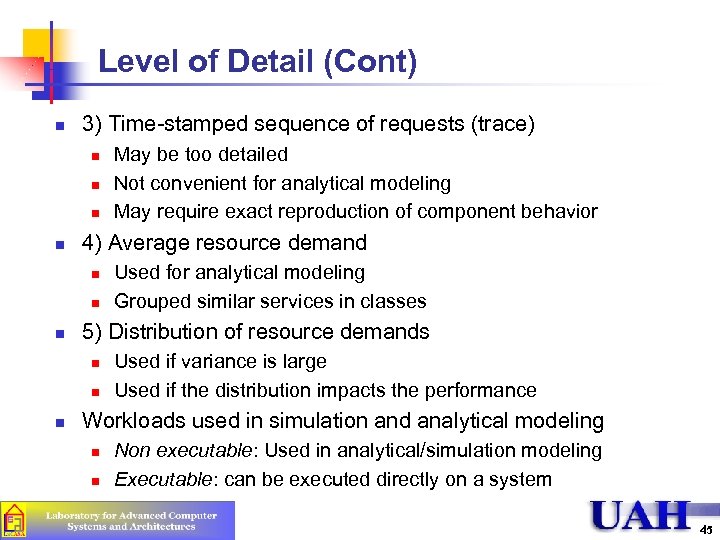

Level of Detail (cont’d)
- 3) Time-stamped sequence of requests (trace)
  - May be too detailed
  - Not convenient for analytical modeling
  - May require exact reproduction of component behavior
- 4) Average resource demand
  - Used for analytical modeling
  - Similar services are grouped in classes
- 5) Distribution of resource demands
  - Used if the variance is large
  - Used if the distribution impacts the performance
- Workloads used in simulation and analytical modeling
  - Non-executable: used in analytical/simulation modeling
  - Executable: can be executed directly on a system

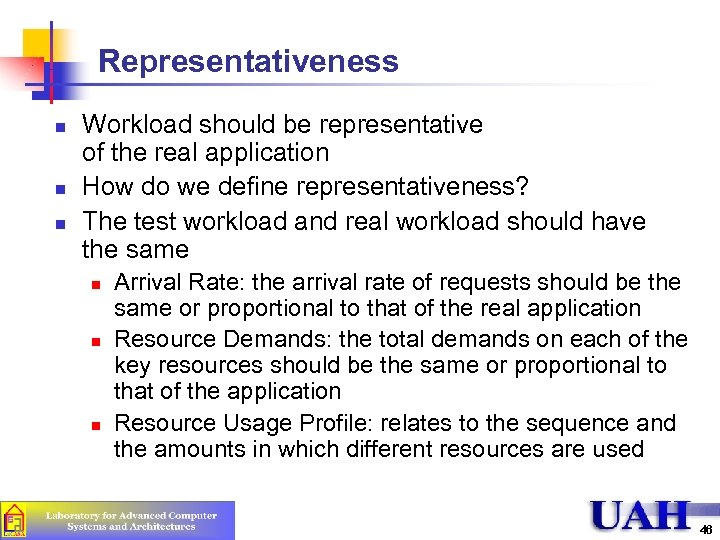

Representativeness
- The workload should be representative of the real application
- How do we define representativeness?
- The test workload and the real workload should have the same
  - Arrival rate: the arrival rate of requests should be the same as or proportional to that of the real application
  - Resource demands: the total demands on each of the key resources should be the same as or proportional to those of the application
  - Resource usage profile: relates to the sequence and the amounts in which different resources are used

Timeliness
- Workloads should follow the changes in usage patterns in a timely fashion
- Difficult to achieve: users are a moving target
- New systems => new workloads
- Users tend to optimize the demand
  - They use those features that the system performs efficiently
  - E.g., fast multiplication => higher frequency of multiplication instructions
- Important to monitor user behavior on an ongoing basis

Other Considerations in Workload Selection
- Loading level: a workload may exercise a system
  - To its full capacity (best case)
  - Beyond its capacity (worst case)
  - At the load level observed in the real workload (typical case)
  - For procurement purposes => typical case
  - For design => best to worst, all cases
- Impact of external components
  - Do not use a workload that makes an external component a bottleneck => all alternatives in the system give equally good performance
- Repeatability
  - The workload should be such that the results can be easily reproduced without too much variance

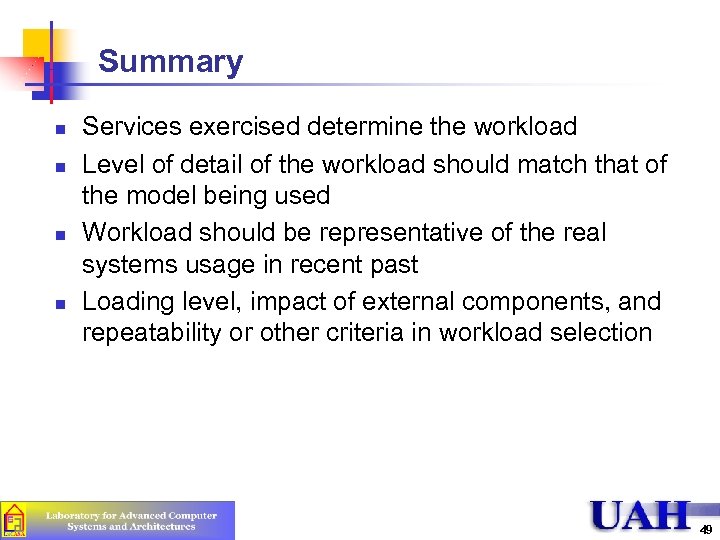

Summary
- Services exercised determine the workload
- Level of detail of the workload should match that of the model being used
- Workload should be representative of the real system's usage in the recent past
- Loading level, impact of external components, and repeatability are other criteria in workload selection

Workload Characterization

Workload Characterization Techniques
Speed, quality, price. Pick any two. – James M. Wallace
- Want a repeatable workload so that systems can be compared under identical conditions
- Hard to do in a real-user environment
- Instead
  - Study the real-user environment
  - Observe key characteristics
  - Develop a workload model
- => Workload characterization

Terminology
- Assume the system provides services
- User (workload component, workload unit): entity that makes service requests at the SUT interface
  - Applications: mail, editing, programming, ...
  - Sites: workload at different organizations
  - User sessions: complete user sessions from login to logout
- Workload parameters: the measured quantities, service requests, and resource demands used to model or characterize the workload
  - Ex: instructions, packet sizes, source or destination of packets, page reference pattern, …

Choosing Parameters
- The workload component should be at the SUT interface
- Each component should represent as homogeneous a group as possible; combining very different users into a site workload may not be meaningful
- Better to pick parameters that depend upon the workload and not upon the system
  - Ex: response time of email is not good, because it depends upon the system
  - Ex: email size is good, because it depends upon the workload
- Several characteristics are of interest
  - Arrival time, duration, quantity of resources demanded
    - Ex: network packet size
  - Parameters should have significant impact (exclude those with little impact)
    - Ex: type of Ethernet card

Techniques for Workload Characterization
- Averaging
- Specifying dispersion
- Single-parameter histograms
- Multi-parameter histograms
- Principal-component analysis
- Markov models
- Clustering

Averaging
- Mean: $\bar{x} = \frac{1}{n} \sum_{i=1}^{n} x_i$
- Standard deviation: $s = \sqrt{\frac{1}{n-1} \sum_{i=1}^{n} (x_i - \bar{x})^2}$
- Coefficient of variation: $\mathrm{C.O.V.} = s / \bar{x}$
- Mode (for categorical variables): most frequent value
- Median: 50th percentile

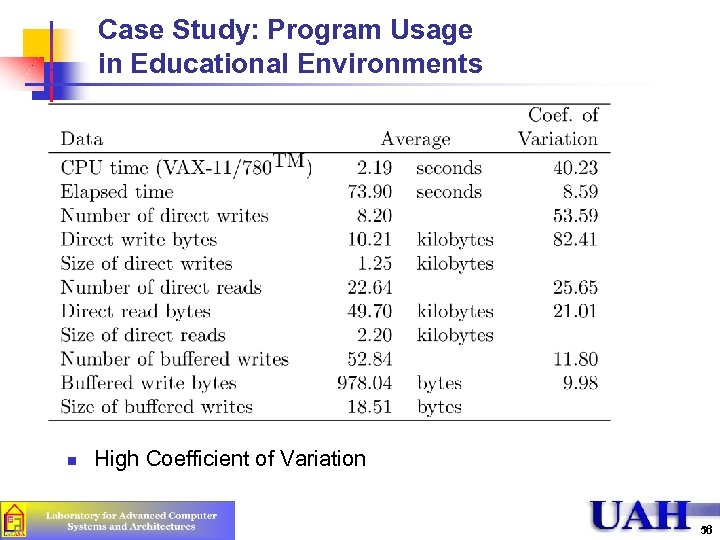
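The averaging measures listed above can be computed with Python's standard `statistics` module. This is a minimal sketch; the CPU times and request types are made-up values chosen so that one long job produces a coefficient of variation above 1.

```python
import statistics

def summarize(values):
    """Return mean, sample standard deviation, C.O.V., and median."""
    mean = statistics.mean(values)
    stdev = statistics.stdev(values)        # sample standard deviation (n - 1)
    return {
        "mean": mean,
        "stdev": stdev,
        "cov": stdev / mean,                # coefficient of variation
        "median": statistics.median(values),
    }

# Hypothetical CPU times (seconds) for five jobs; one long job inflates the C.O.V.
cpu_times = [2.0, 4.0, 4.0, 6.0, 34.0]
stats = summarize(cpu_times)

# The mode is the natural "average" for a categorical parameter.
most_common_type = statistics.mode(["edit", "mail", "edit", "compile"])
```

A coefficient of variation well above 1, as here, suggests that the mean alone is a poor summary of the parameter.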

Case Study: Program Usage in Educational Environments
- High coefficient of variation

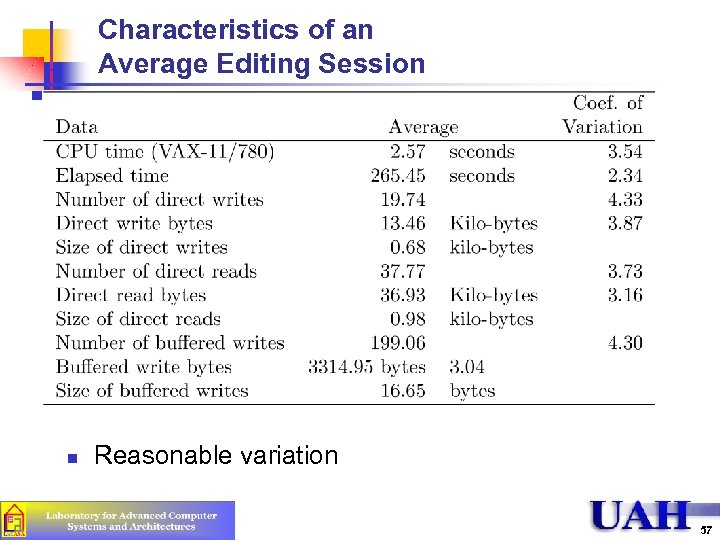

Characteristics of an Average Editing Session
- Reasonable variation

Techniques for Workload Characterization
- Averaging
- Specifying dispersion
- Single-parameter histograms
- Multi-parameter histograms
- Principal-component analysis
- Markov models
- Clustering

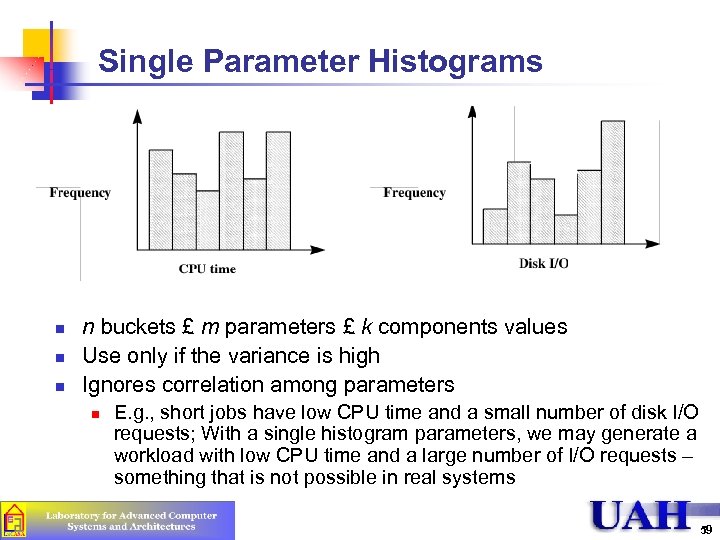

Single-Parameter Histograms
- n buckets × m parameters × k components values
- Use only if the variance is high
- Ignores correlation among parameters
  - E.g., short jobs have low CPU time and a small number of disk I/O requests. With single-parameter histograms, we may generate a workload with low CPU time and a large number of I/O requests, something that is not possible in real systems
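The correlation problem can be seen in a small sketch. The job list below is hypothetical; each parameter is drawn independently from its own observed values, which is what generating a workload from separate single-parameter histograms amounts to.

```python
import random

# Hypothetical jobs as (CPU seconds, disk I/O count); the two grow together.
jobs = [(1, 10), (2, 20), (3, 30), (50, 500), (60, 600)]

def sample_independently(jobs, count, rng):
    """Draw each parameter from its own marginal distribution."""
    cpu_values = [cpu for cpu, _ in jobs]
    io_values = [io for _, io in jobs]
    return [(rng.choice(cpu_values), rng.choice(io_values)) for _ in range(count)]

rng = random.Random(0)
synthetic = sample_independently(jobs, 1000, rng)

# Pairs that never occur in the measured workload, e.g. low CPU with high I/O.
impossible = [pair for pair in synthetic if pair not in jobs]
```

Most generated pairs end up in `impossible`, which is the motivation for multi-parameter histograms.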

Multi-parameter Histograms
- Difficult to plot joint histograms for more than two parameters

Techniques for Workload Characterization
- Averaging
- Specifying dispersion
- Single-parameter histograms
- Multi-parameter histograms
- Principal-component analysis
- Markov models
- Clustering

Principal-Component Analysis
- Goal is to reduce the number of factors
- PCA transforms a number of (possibly) correlated variables into a (smaller) number of uncorrelated variables called principal components

Principal Component Analysis (cont’d)
- Key idea: use a weighted sum of parameters to classify the components
- Let $x_{ij}$ denote the $i$th parameter for the $j$th component: $y_j = \sum_{i=1}^{n} w_i x_{ij}$
- Principal component analysis assigns the weights $w_i$ such that the $y_j$ provide the maximum discrimination among the components
- The quantity $y_j$ is called the principal factor
- The factors are ordered: the first factor explains the highest percentage of the variance

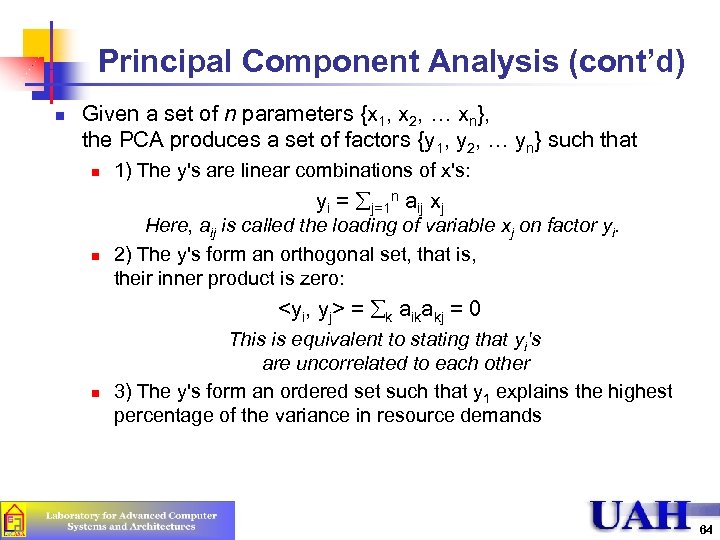

Principal Component Analysis (cont’d)
- Given a set of $n$ parameters $\{x_1, x_2, \ldots, x_n\}$, PCA produces a set of factors $\{y_1, y_2, \ldots, y_n\}$ such that
  - 1) The $y$'s are linear combinations of the $x$'s: $y_i = \sum_{j=1}^{n} a_{ij} x_j$. Here, $a_{ij}$ is called the loading of variable $x_j$ on factor $y_i$.
  - 2) The $y$'s form an orthogonal set, that is, their inner product is zero: $\langle y_i, y_j \rangle = 0$ for $i \neq j$

Finding Principal Factors
- Find the correlation matrix
- Find the eigenvalues of the matrix and sort them in order of decreasing magnitude
- Find the corresponding eigenvectors
- These give the required loadings

Principal Component Analysis Example
- $x_s$: packets sent, $x_r$: packets received

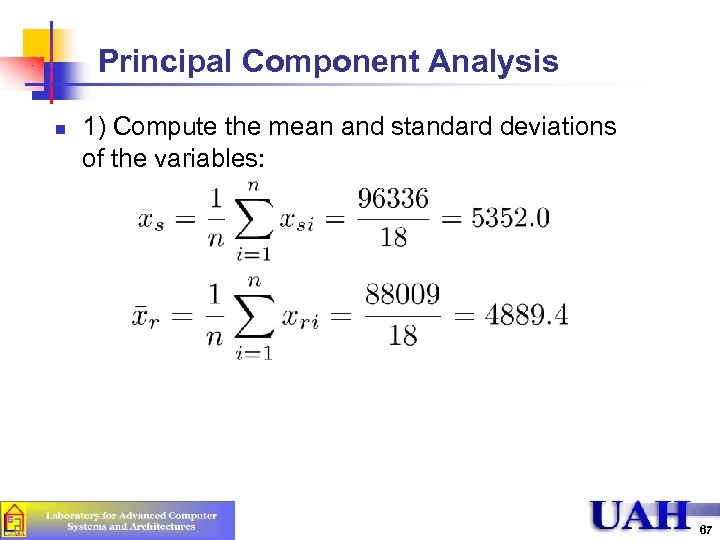

Principal Component Analysis
- 1) Compute the mean and standard deviations of the variables:

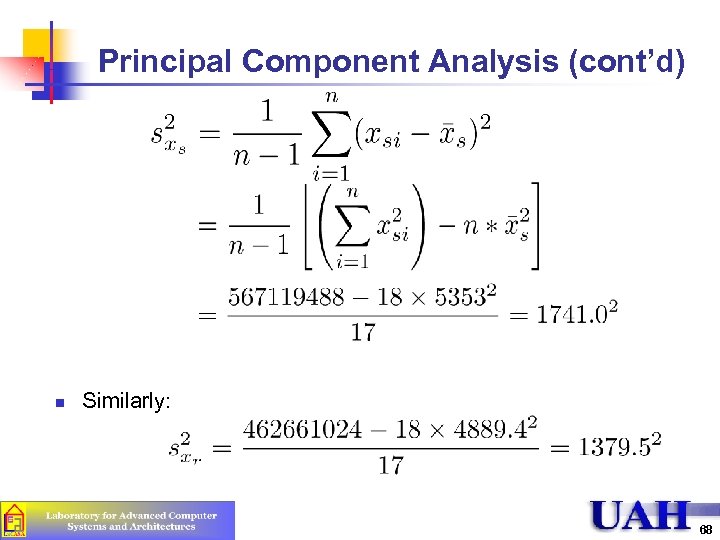

Principal Component Analysis (cont’d)
- Similarly:

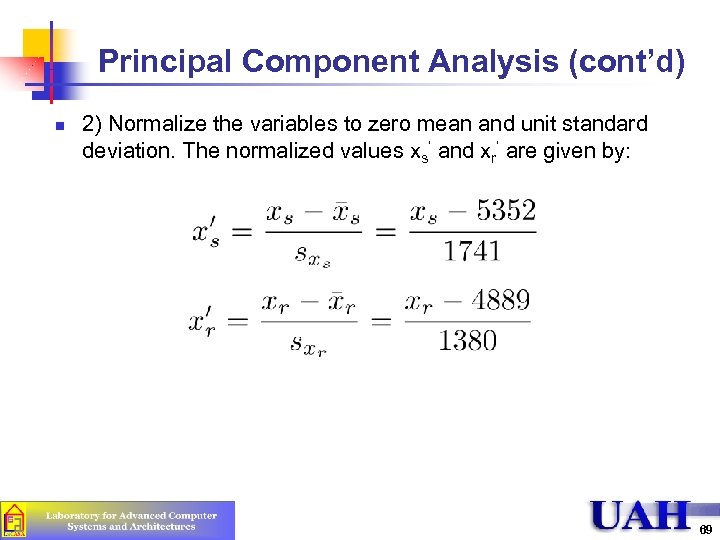

Principal Component Analysis (cont’d)
- 2) Normalize the variables to zero mean and unit standard deviation. The normalized values $x_s'$ and $x_r'$ are given by: $x_s' = (x_s - \bar{x}_s)/s_{x_s}$ and $x_r' = (x_r - \bar{x}_r)/s_{x_r}$

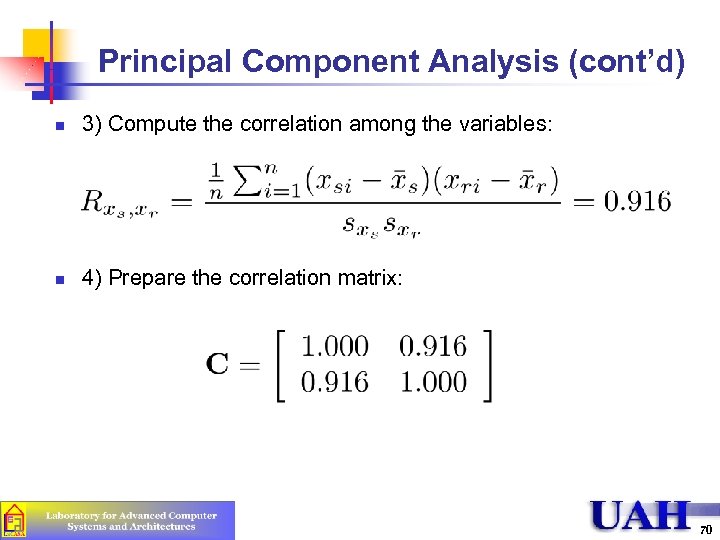

Principal Component Analysis (cont’d)
- 3) Compute the correlation among the variables: $R_{x_s, x_r} = 0.916$ (the value implied by the eigenvalues 1.916 and 0.084 reported for this example)
- 4) Prepare the correlation matrix: $C = \begin{bmatrix} 1 & 0.916 \\ 0.916 & 1 \end{bmatrix}$

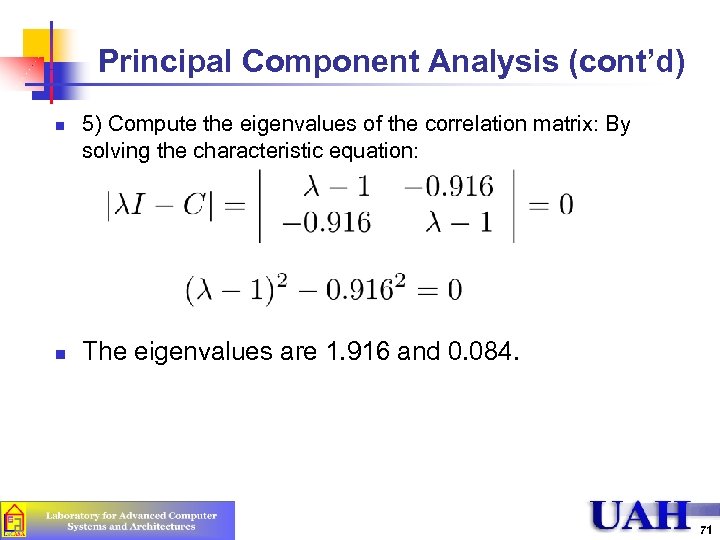

Principal Component Analysis (cont’d)
- 5) Compute the eigenvalues of the correlation matrix by solving the characteristic equation $|\lambda I - C| = 0$. For a 2 × 2 correlation matrix with off-diagonal entry $r$, this is $(\lambda - 1)^2 - r^2 = 0$, so $\lambda = 1 \pm r$
- The eigenvalues are 1.916 and 0.084

Principal Component Analysis (cont’d)
- 6) Compute the eigenvectors of the correlation matrix. The eigenvector $q_1$ corresponding to $\lambda_1 = 1.916$ is defined by the following relationship: $C q_1 = \lambda_1 q_1$, or: $q_{11} = q_{21}$

Principal Component Analysis (cont’d)
- Restricting the length of the eigenvectors to one: $q_1 = \left(\tfrac{1}{\sqrt{2}}, \tfrac{1}{\sqrt{2}}\right)^T$, $q_2 = \left(\tfrac{1}{\sqrt{2}}, -\tfrac{1}{\sqrt{2}}\right)^T$
- 7) Obtain the principal factors by multiplying the eigenvectors by the normalized vectors: $y_1 = (x_s' + x_r')/\sqrt{2}$, $y_2 = (x_s' - x_r')/\sqrt{2}$

Principal Component Analysis (cont’d)
- 8) Compute the values of the principal factors (last two columns)
- 9) Compute the sum and sum of squares of the principal factors
  - The sum must be zero
  - The sums of squares give the percentage of variation explained

Principal Component Analysis (cont’d)
- The first factor explains 32.565/(32.565 + 1.435) or 95.7% of the variation
- The second factor explains only 4.3% of the variation and can, thus, be ignored
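The nine steps can be sketched for the two-variable case in plain Python. This is an illustration under stated assumptions, not the worked example from the slides: the packet counts are hypothetical, the correlation uses the n - 1 divisor to match the sample standard deviation, and the eigenvalue ordering assumes a positive correlation.

```python
import math
import statistics

def pca_two_variables(xs, xr):
    """Principal factors of two variables via the 2x2 correlation matrix."""
    n = len(xs)
    mean_s, mean_r = statistics.mean(xs), statistics.mean(xr)
    sd_s, sd_r = statistics.stdev(xs), statistics.stdev(xr)
    # Step 2: normalize to zero mean and unit standard deviation.
    zs = [(v - mean_s) / sd_s for v in xs]
    zr = [(v - mean_r) / sd_r for v in xr]
    # Step 3: correlation between the normalized variables.
    r = sum(a * b for a, b in zip(zs, zr)) / (n - 1)
    # Steps 5-7: the eigenvalues of [[1, r], [r, 1]] are 1 + r and 1 - r, with
    # unit eigenvectors (1, 1)/sqrt(2) and (1, -1)/sqrt(2).
    y1 = [(a + b) / math.sqrt(2) for a, b in zip(zs, zr)]
    y2 = [(a - b) / math.sqrt(2) for a, b in zip(zs, zr)]
    return r, y1, y2

# Hypothetical packet counts (not the data from the example's table).
sent = [10.0, 20.0, 30.0, 40.0, 50.0]
received = [12.0, 18.0, 33.0, 37.0, 52.0]
r, y1, y2 = pca_two_variables(sent, received)

# Step 9: sums are zero; sums of squares give the variation explained.
ss1, ss2 = sum(v * v for v in y1), sum(v * v for v in y2)
explained_by_first = ss1 / (ss1 + ss2)
```

With these conventions the sum of squares of each factor equals (n - 1) times its eigenvalue, so the first factor explains (1 + r)/2 of the variation.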

Techniques for Workload Characterization
- Averaging
- Specifying dispersion
- Single-parameter histograms
- Multi-parameter histograms
- Principal-component analysis
- Markov models
- Clustering

Markov Models
- Sometimes it is important to have not just the number of each type of request but also the order of requests
- If the next request depends upon the previous request, then a Markov model can be used
  - Actually more general: it applies whenever the next state depends only upon the current state

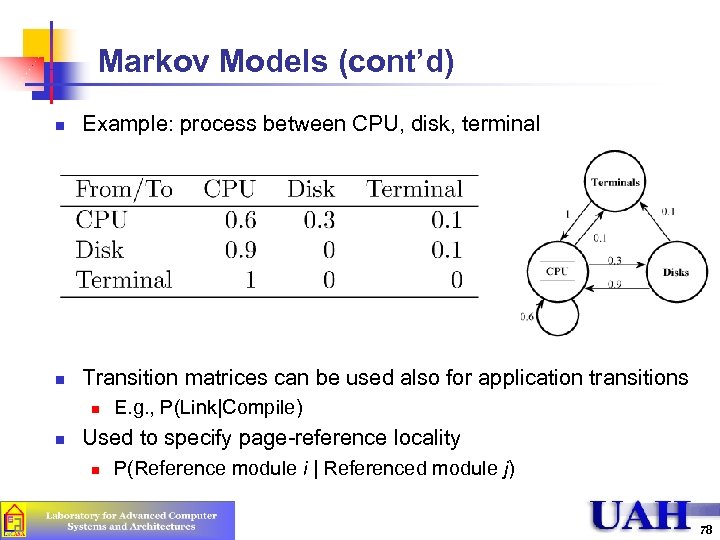

Markov Models (cont’d)
- Example: a process moving between CPU, disk, and terminal
- Transition matrices can also be used for application transitions
  - E.g., P(Link | Compile)
- Used to specify page-reference locality
  - P(Reference module i | Referenced module j)

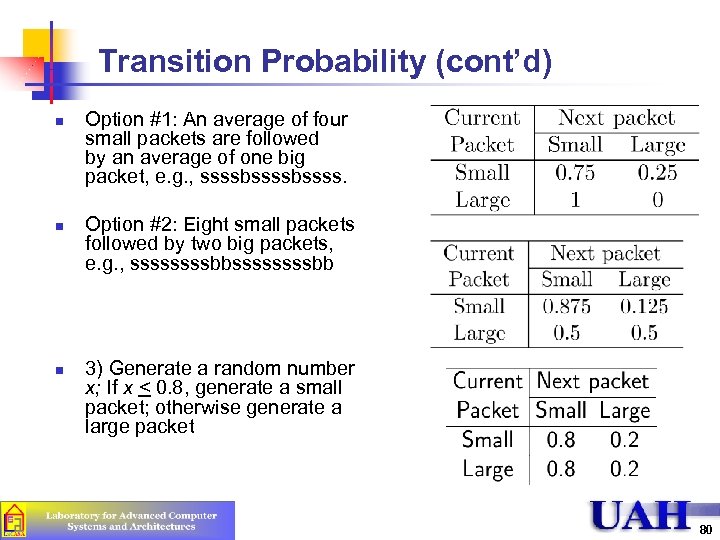
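A transition matrix of this kind can be estimated from a measured trace by counting consecutive pairs. The sketch below uses a made-up trace of a process moving between CPU, disk, and terminal; the state names are illustrative.

```python
from collections import Counter, defaultdict

def transition_matrix(trace):
    """Estimate P(next | current) from consecutive pairs in a trace."""
    counts = defaultdict(Counter)
    for current, nxt in zip(trace, trace[1:]):
        counts[current][nxt] += 1
    return {
        state: {nxt: c / sum(row.values()) for nxt, c in row.items()}
        for state, row in counts.items()
    }

# Hypothetical trace of a process moving between CPU, disk, and terminal.
trace = ["cpu", "disk", "cpu", "terminal", "cpu", "disk", "cpu", "disk", "cpu"]
P = transition_matrix(trace)
```

Here `P["cpu"]` holds the estimated probabilities of visiting the disk or the terminal after a CPU burst.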

Transition Probability
- Given the same relative frequency of requests of different types, it is possible to realize the frequency with several different transition matrices
  - Each matrix may result in a different performance of the system
  - If order is important, measure the transition probabilities directly on the real system
- Example: two packet sizes: small (80%), large (20%)

Transition Probability (cont’d)
- Option #1: an average of four small packets is followed by an average of one big packet, e.g., ssssbssss
- Option #2: eight small packets followed by two big packets, e.g., ssssssssbb
- Option #3: generate a random number x; if x < 0.8, generate a small packet; otherwise generate a large packet
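That the three options share the same 80/20 mix can be checked with the stationary distribution of a two-state chain. This is a sketch under an assumption: option #2 is a fixed pattern, so it is approximated here by a chain with the same average run lengths (8 small, 2 big).

```python
def small_fraction(p_small_to_big, p_big_to_small):
    """Long-run fraction of small packets in a two-state Markov chain."""
    return p_big_to_small / (p_small_to_big + p_big_to_small)

# (P(big | small), P(small | big)) for each option; an average run of k small
# packets corresponds to P(big | small) = 1/k.
options = {
    "runs of 4 small, 1 big": (0.25, 1.0),
    "runs of 8 small, 2 big": (0.125, 0.5),
    "independent draws": (0.2, 0.8),
}
fractions = {name: small_fraction(p, q) for name, (p, q) in options.items()}
```

All three entries of `fractions` come out at 0.8 even though the transition matrices, and hence the burstiness of large packets, differ.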

Techniques for Workload Characterization
- Averaging
- Specifying dispersion
- Single-parameter histograms
- Multi-parameter histograms
- Principal-component analysis
- Markov models
- Clustering

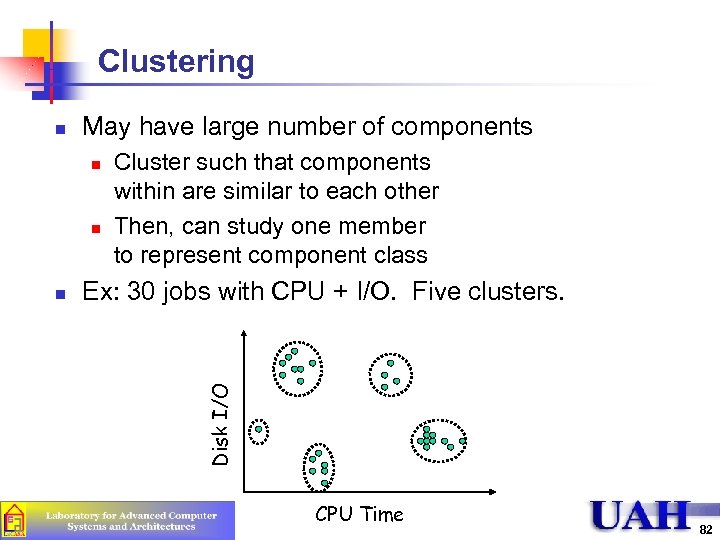

Clustering
- May have a large number of components
  - Cluster such that components within a cluster are similar to each other
  - Then can study one member to represent the component class
- Ex: 30 jobs with CPU + I/O; five clusters (figure: disk I/O versus CPU time)

Clustering Steps
1. Take sample
2. Select parameters
3. Transform, if necessary
4. Remove outliers
5. Scale observations
6. Select distance metric
7. Perform clustering
8. Interpret
9. Change and repeat steps 3-7
10. Select representative components

1) Sampling
- Usually too many components to do clustering analysis
  - That’s why we are doing clustering in the first place!
- Select a small subset
  - If chosen carefully, it will show behavior similar to the rest
- May choose randomly
  - However, if interested in a specific aspect, may choose to cluster only “top consumers”
  - E.g., if interested in a disk, only do clustering analysis on components with high I/O

2) Parameter Selection
- Many components have a large number of parameters (resource demands)
  - Some are important, some are not
  - Remove the ones that do not matter
- Two key criteria: impact on performance and variance
  - If a parameter has no impact, omit it
  - If a parameter has little variance, omit it
- Method: redo the clustering with one less parameter
  - Count the number of components that change cluster membership
  - If not many change, remove the parameter
- Principal component analysis: identify parameters with the highest variance

3) Transformation
- If the distribution is skewed, may want to transform the measure of the parameter
- Ex: one study measured CPU time
  - Two programs taking 1 and 2 seconds are as different as two programs taking 10 and 20 milliseconds
  - => Take the ratio of CPU times and not the difference
- (More in Chapter 15)
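One common way to compare by ratio rather than by difference is a logarithmic transformation, since the difference of logarithms depends only on the ratio. The sketch below assumes that choice; the helper name is illustrative.

```python
import math

def log_distance(a, b):
    """Distance between two CPU times after a logarithmic transformation."""
    return abs(math.log(a) - math.log(b))

# 1 s vs 2 s and 10 ms vs 20 ms differ by the same ratio of 2.
seconds_pair = log_distance(1.0, 2.0)
millis_pair = log_distance(0.010, 0.020)
```

Both pairs are the same distance apart after the transformation, whereas their raw differences (1 s and 0.01 s) differ by a factor of 100.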

4) Outliers
- Data points with extreme parameter values
- Can significantly affect the maximum or minimum (or the mean or variance)
- For normalization (scaling, next), their inclusion or exclusion may significantly affect the outcome
- Only exclude outliers if they do not consume a significant portion of resources
  - E.g., a disk backup may make a number of disk I/O requests and should not be excluded if backup is done frequently (e.g., several times a day); it may be excluded if done once a month

5) Data Scaling
- Final results depend upon relative ranges
  - Typically scale so that relative ranges are equal
  - Different ways of doing this

5) Data Scaling (cont’d)
- Normalize to zero mean and unit variance: $x'_{ik} = (x_{ik} - \bar{x}_k)/s_k$
- Weights: $x'_{ik} = w_k x_{ik}$, with $w_k \propto$ relative importance or $w_k = 1/s_k$
- Range normalization
  - Change from $[x_{\min,k}, x_{\max,k}]$ to $[0, 1]$: $x'_{ik} = (x_{ik} - x_{\min,k})/(x_{\max,k} - x_{\min,k})$
  - Affected by outliers

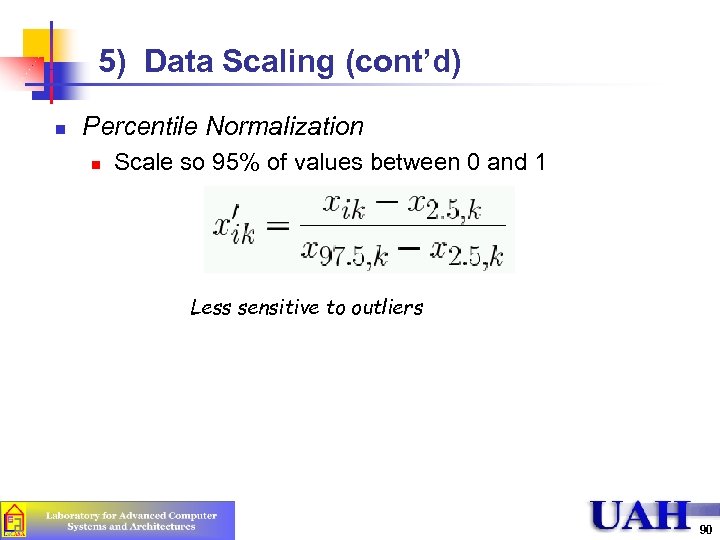

5) Data Scaling (cont’d)
- Percentile normalization
  - Scale so that 95% of the values fall between 0 and 1
  - Less sensitive to outliers
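The scaling methods can be compared on a sample with one outlier. This is a sketch with hypothetical data; mapping the 2.5th and 97.5th percentiles to 0 and 1 is an assumed way of placing 95% of the values in [0, 1], and `statistics.quantiles` requires Python 3.8 or later.

```python
import statistics

def zscore(values):
    """Normalize to zero mean and unit (sample) standard deviation."""
    mean, sd = statistics.mean(values), statistics.stdev(values)
    return [(v - mean) / sd for v in values]

def range_normalize(values):
    """Map [min, max] to [0, 1]; a single outlier compresses everything else."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def percentile_normalize(values, lower=2.5, upper=97.5):
    """Map the [lower, upper] percentiles to [0, 1]; outliers fall outside."""
    cuts = statistics.quantiles(values, n=200, method="inclusive")
    lo, hi = cuts[int(lower * 2) - 1], cuts[int(upper * 2) - 1]
    return [(v - lo) / (hi - lo) for v in values]

# Hypothetical I/O counts: 1..100 plus one extreme outlier.
values = [float(v) for v in range(1, 101)] + [10000.0]
by_range = range_normalize(values)
by_percentile = percentile_normalize(values)
inside = sum(0 <= v <= 1 for v in by_percentile)
```

Under range normalization the 100 ordinary values are squeezed below 0.01, while percentile normalization spreads them across [0, 1] and leaves the outlier above 1.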

6) Distance Metric

- Map each component to a point in n-dimensional space and see which are close to each other
- Euclidean distance between two components $\{x_{i1}, x_{i2}, \ldots, x_{in}\}$ and $\{x_{j1}, x_{j2}, \ldots, x_{jn}\}$
- Weighted Euclidean distance
  - Assign weights $a_k$ to the n parameters
  - Used if the values are not scaled or if the parameters differ significantly in importance

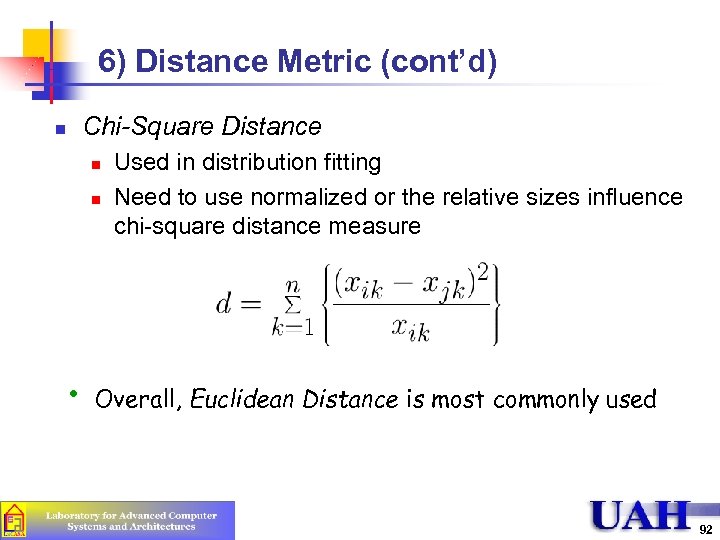
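
The two distances can be sketched as below. The formulas on the slide are in the figure and not in the extracted text, so the weighted form used here (each squared difference multiplied by its weight before taking the square root) is an assumption based on the common definition; the function names and sample points are hypothetical.

```python
import math

def euclidean(x_i, x_j):
    """Euclidean distance between two components with n parameters each."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x_i, x_j)))

def weighted_euclidean(x_i, x_j, weights):
    """Weighted form: each squared difference is multiplied by its weight a_k."""
    return math.sqrt(sum(w * (a - b) ** 2 for w, a, b in zip(weights, x_i, x_j)))

# Hypothetical components: (CPU time, disk I/O count).
p = (2.0, 4.0)
q = (5.0, 8.0)

print(euclidean(p, q))                        # 5.0, a 3-4-5 triangle
print(weighted_euclidean(p, q, (1.0, 0.25)))  # down-weights the second parameter
```

With all weights equal to 1 the weighted form reduces to the plain Euclidean distance.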

6) Distance Metric (cont’d)

- Chi-square distance
  - Used in distribution fitting
  - Need to use normalized values, or the relative sizes influence the chi-square distance measure
- Overall, Euclidean distance is most commonly used
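
A minimal sketch of a chi-square distance, assuming the form that sums $(x_{ik} - x_{jk})^2 / x_{ik}$ over the parameters; the slide does not give the formula in text, so treat this as one common formulation. The function name and the two profiles are hypothetical.

```python
def chi_square_distance(x_i, x_j):
    """Sum over parameters of (x_ik - x_jk)^2 / x_ik; every x_ik must be nonzero."""
    return sum((a - b) ** 2 / a for a, b in zip(x_i, x_j))

# Hypothetical resource-usage profiles, normalized to fractions that sum to 1.
expected = (0.4, 0.4, 0.2)
observed = (0.5, 0.3, 0.2)

d_forward = chi_square_distance(expected, observed)
d_reverse = chi_square_distance(observed, expected)
print(d_forward, d_reverse)
```

In this form the measure is not symmetric, and dividing by $x_{ik}$ is why unnormalized values let the relative sizes dominate the result.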

7) Clustering Techniques

- Goal: partition into groups so that the members of a group are as similar as possible and different groups are as dissimilar as possible
- Statistically, the intra-group variance should be as small as possible, and the inter-group variance should be as large as possible
  - Total variance = intra-group variance + inter-group variance
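
The decomposition can be checked numerically. The sketch below works with sums of squared deviations (dividing each by the number of points gives the corresponding variances); the five points and their grouping into two clusters are assumptions chosen for illustration.

```python
def centroid(points):
    """Component-wise mean of a list of equal-length tuples."""
    n = len(points)
    return tuple(sum(p[k] for p in points) / n for k in range(len(points[0])))

def sq_dist(p, q):
    """Squared Euclidean distance."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

# Hypothetical grouping of five (CPU time, disk I/O) points into two clusters.
clusters = [
    [(2, 4), (3, 5), (1, 6)],
    [(4, 3), (5, 2)],
]

all_points = [p for c in clusters for p in c]
grand = centroid(all_points)

total = sum(sq_dist(p, grand) for p in all_points)
intra = sum(sq_dist(p, centroid(c)) for c in clusters for p in c)
inter = sum(len(c) * sq_dist(centroid(c), grand) for c in clusters)

print(total, intra, inter)   # total equals intra + inter
```

Because the total is fixed by the data, making the intra-group part small automatically makes the inter-group part large.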

7) Clustering Techniques (cont’d)

- Nonhierarchical techniques: start with an arbitrary set of k clusters; move members until the intra-group variance is minimum
- Hierarchical techniques:
  - Agglomerative: start with n clusters and merge
  - Divisive: start with one cluster and divide
- Two popular techniques:
  - Minimum spanning tree method (agglomerative)
  - Centroid method (divisive)

Clustering Techniques: Minimum Spanning Tree Method

1. Start with k = n clusters.
2. Find the centroid of the ith cluster, i = 1, 2, …, k.
3. Compute the inter-cluster distance matrix.
4. Merge the nearest clusters.
5. Repeat steps 2 through 4 until all components are part of one cluster.

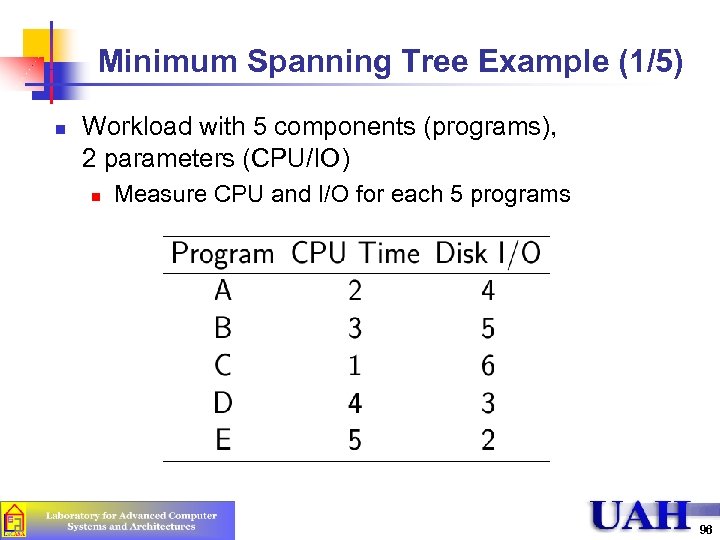
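
The five steps can be sketched as follows. This is an illustrative implementation under stated assumptions, not the course's reference code: distances are Euclidean distances between centroids, only one pair is merged per pass (so tied pairs merge in successive passes), and the names `agglomerate` and `programs` and the measurements are hypothetical.

```python
import math
from itertools import combinations

def centroid(points):
    """Component-wise mean of a list of equal-length tuples."""
    n = len(points)
    return tuple(sum(p[k] for p in points) / n for k in range(len(points[0])))

def agglomerate(data):
    """Merge the two nearest clusters (by centroid distance) until one remains.

    data maps a component name to its parameter tuple.
    Returns the merge history as (cluster_a, cluster_b, distance) tuples.
    """
    clusters = [[name] for name in data]          # step 1: k = n clusters
    history = []
    while len(clusters) > 1:                      # step 5: repeat until one cluster
        cents = [centroid([data[m] for m in c]) for c in clusters]   # step 2
        # Steps 3 and 4: scan all pairwise centroid distances, pick the smallest.
        i, j = min(combinations(range(len(clusters)), 2),
                   key=lambda ij: math.dist(cents[ij[0]], cents[ij[1]]))
        history.append((clusters[i], clusters[j], math.dist(cents[i], cents[j])))
        merged = clusters[i] + clusters[j]
        clusters = [c for idx, c in enumerate(clusters) if idx not in (i, j)] + [merged]
    return history

# Hypothetical measurements: (CPU time, disk I/O) per program.
programs = {"a": (2, 4), "b": (3, 5), "c": (1, 6), "d": (4, 3), "e": (5, 2)}
history = agglomerate(programs)
for left, right, dist in history:
    print(left, "+", right, "at distance", round(dist, 2))
```

The returned history records the distance at which each merge happened, which is the information a dendrogram displays.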

Minimum Spanning Tree Example (1/5)

- Workload with 5 components (programs) and 2 parameters (CPU time, disk I/O)
  - Measure CPU time and disk I/O for each of the 5 programs

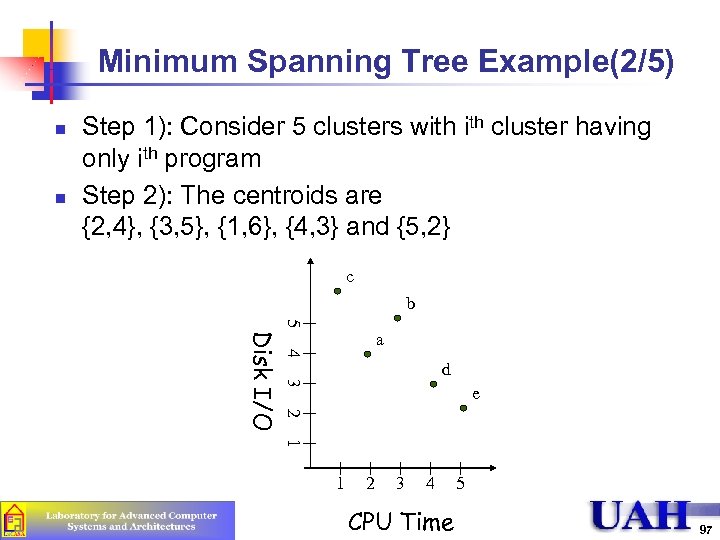

Minimum Spanning Tree Example (2/5)

- Step 1: Consider 5 clusters, with the ith cluster containing only the ith program
- Step 2: The centroids are {2, 4}, {3, 5}, {1, 6}, {4, 3}, and {5, 2}

[Figure: scatter plot of programs a to e; x-axis: CPU Time, y-axis: Disk I/O]

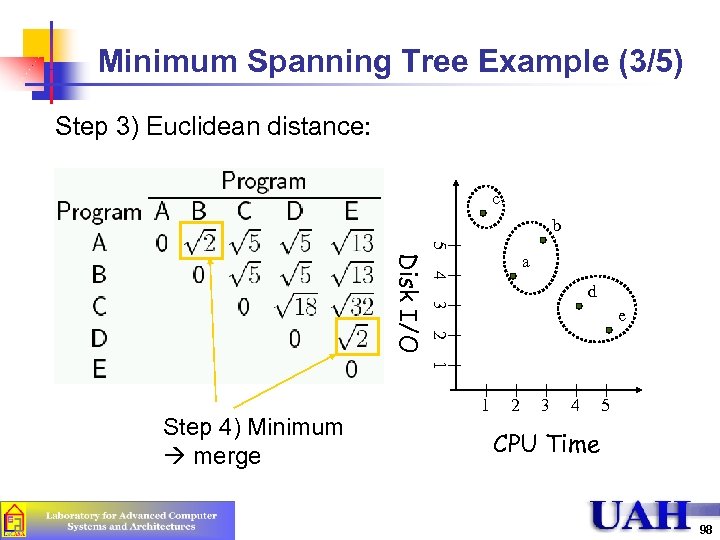

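Minimum Spanning Tree Example (3/5)

- Step 3: Compute the Euclidean distance between each pair of centroids
- Step 4: Merge the clusters that are at the minimum distance

[Figure: scatter plot of programs a to e; x-axis: CPU Time, y-axis: Disk I/O]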

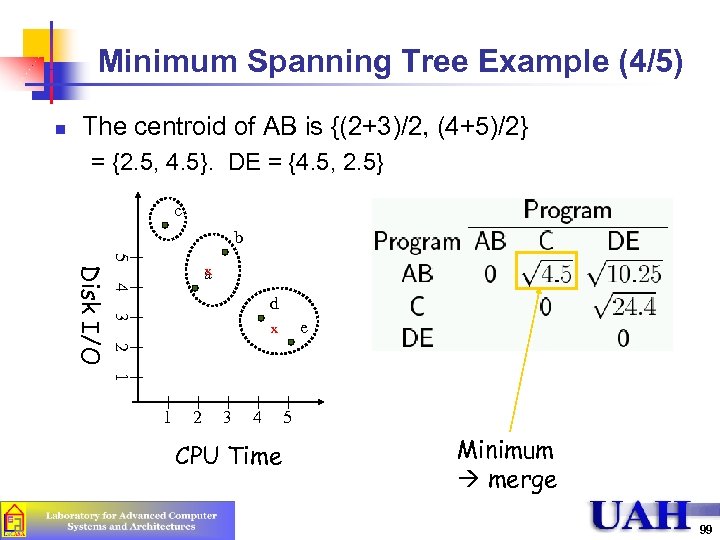

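Minimum Spanning Tree Example (4/5)

- The centroid of AB is {(2+3)/2, (4+5)/2} = {2.5, 4.5}; the centroid of DE is {4.5, 2.5}
- Merge the clusters that are at the minimum distance

[Figure: scatter plot of programs a to e with the new centroids marked x; x-axis: CPU Time, y-axis: Disk I/O]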

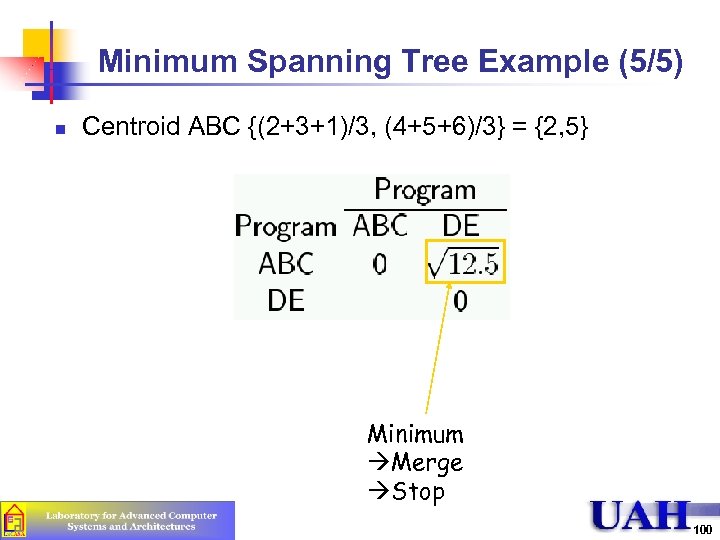

Minimum Spanning Tree Example (5/5)

- The centroid of ABC is {(2+3+1)/3, (4+5+6)/3} = {2, 5}
- Merge the clusters that are at the minimum distance, then stop

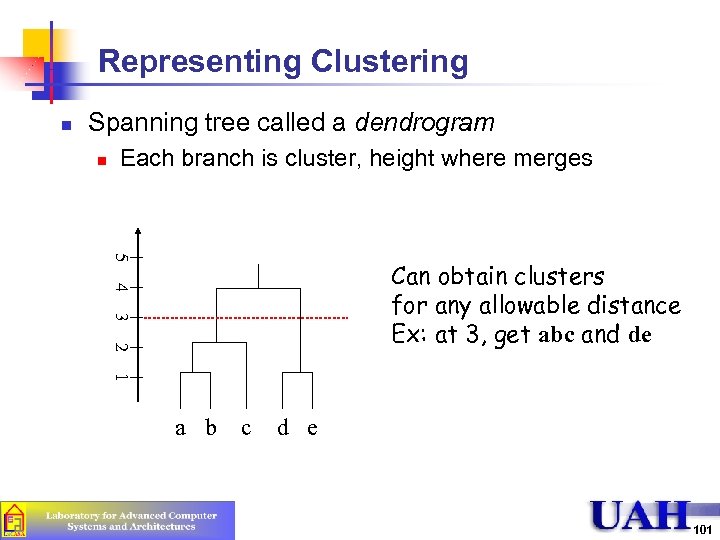

Representing Clustering

- The spanning tree is called a dendrogram
  - Each branch is a cluster; its height is the distance at which it merges
- Can obtain clusters for any allowable distance
  - Ex: at 3, get abc and de

[Figure: dendrogram over components a, b, c, d, e; vertical axis from 1 to 5]
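
Cutting the dendrogram at an allowable distance can be sketched as below: apply only the merges that occur below the cut and read off the resulting groups. The merge distances are computed from the centroids given in the example slides ($\sqrt{2}$, $\sqrt{4.5}$, $\sqrt{12.5}$); the function name `cut` and the history layout are hypothetical.

```python
import math

def cut(names, history, threshold):
    """Apply only the merges that happen below threshold; return the clusters."""
    parent = {name: name for name in names}

    def find(x):
        # Follow parent links up to the representative of x's group.
        while parent[x] != x:
            x = parent[x]
        return x

    for left, right, distance in history:
        if distance < threshold:
            parent[find(left[0])] = find(right[0])

    groups = {}
    for name in names:
        groups.setdefault(find(name), []).append(name)
    return sorted(groups.values())

# Merge history for the five-program example: (cluster, cluster, merge distance).
history = [
    (["a"], ["b"], math.sqrt(2)),
    (["d"], ["e"], math.sqrt(2)),
    (["a", "b"], ["c"], math.sqrt(4.5)),
    (["a", "b", "c"], ["d", "e"], math.sqrt(12.5)),
]

print(cut("abcde", history, 3))   # [['a', 'b', 'c'], ['d', 'e']]
```

A cut at 3 falls between the third merge (about 2.12) and the last one (about 3.54), which reproduces the abc and de grouping from the slide.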

Nearest Centroid Method

1. Start with k = 1.
2. Find the centroid and intra-cluster variance of the ith cluster, i = 1, 2, …, k.
3. Find the cluster with the highest variance and arbitrarily divide it into two clusters, for example:
   - Find the two components that are farthest apart, and assign the other components according to their distance from these points.
   - Place all components below the centroid in one cluster and all components above this hyperplane in the other.
4. Adjust the points in the two new clusters until the inter-cluster distance between the two clusters is maximum.
5. Set k = k + 1. Repeat steps 2 through 4 until k = n.
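
A simplified sketch of the divisive procedure, under several assumptions: the split uses the farthest-pair option from step 3, the adjustment in step 4 is omitted, variance is taken as the mean squared distance to the centroid, the points are distinct, and the loop stops at a caller-chosen `k_target` instead of always running to k = n. All names and data are hypothetical.

```python
import math
from itertools import combinations

def centroid(points):
    """Component-wise mean of a list of equal-length tuples."""
    n = len(points)
    return tuple(sum(p[k] for p in points) / n for k in range(len(points[0])))

def variance(points):
    """Mean squared distance of the points from their centroid."""
    c = centroid(points)
    return sum(math.dist(p, c) ** 2 for p in points) / len(points)

def split(points):
    """Seed with the two farthest-apart points; assign the rest to the nearer seed."""
    s1, s2 = max(combinations(points, 2), key=lambda pq: math.dist(pq[0], pq[1]))
    near1 = [p for p in points if math.dist(p, s1) <= math.dist(p, s2)]
    near2 = [p for p in points if math.dist(p, s1) > math.dist(p, s2)]
    return near1, near2

def divide(points, k_target):
    """Repeatedly split the highest-variance cluster until k_target clusters exist."""
    if not 1 <= k_target <= len(points):
        raise ValueError("k_target must be between 1 and the number of points")
    clusters = [list(points)]                      # step 1: k = 1
    while len(clusters) < k_target:
        worst = max(clusters, key=variance)        # steps 2 and 3
        clusters.remove(worst)
        clusters.extend(split(worst))              # step 5: k = k + 1
    return clusters

# Hypothetical (CPU time, disk I/O) measurements for five programs.
points = [(2, 4), (3, 5), (1, 6), (4, 3), (5, 2)]
two = divide(points, 2)
print(two)
```

Stopping at a chosen k is a convenience for illustration; running to k = n, as the slide describes, yields the full hierarchy.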

Interpreting Clusters

- Clusters with small populations may be discarded
  - If they use few resources
  - If a cluster with 1 component uses 50% of resources, it cannot be discarded!
- Name clusters, often by resource demands
  - Ex: “CPU bound” or “I/O bound”
- Select 1+ components from each cluster as a test workload
  - Can make the number selected proportional to cluster size, total resource demands, or other criteria

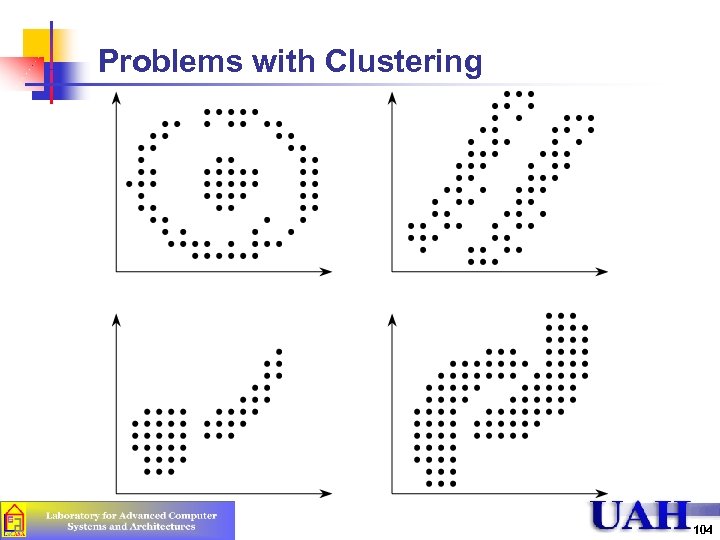

Problems with Clustering

Problems with Clustering (Cont)

- Goal: minimize variance
- The results of clustering are highly variable. No rules for:
  - Selection of parameters
  - Distance measure
  - Scaling
- Labeling each cluster by functionality is difficult
  - In one study, editing programs appeared in 23 different clusters
- Requires many repetitions of the analysis
