6bc4fd0feb8200532d09c1deae05ebcb.ppt

- Number of slides: 34

COSC 4406 Software Engineering

Haibin Zhu, Ph.D., Dept. of Computer Science and Mathematics, Nipissing University, 100 College Dr., North Bay, ON P1B 8L7, Canada, haibinz@nipissingu.ca, http://www.nipissingu.ca/faculty/haibinz

Lecture 12: Product Metrics for Software

McCall’s Triangle of Quality
- Product revision: maintainability, flexibility, testability
- Product transition: portability, reusability, interoperability
- Product operation: correctness, usability, efficiency, integrity, reliability

McCall’s Quality Factors
- Correctness: The extent to which a program satisfies its specification and fulfils the customer’s mission objectives.
- Reliability: The extent to which a program can be expected to perform its intended function with required precision.
- Efficiency: The amount of computing resources and code required by a program to perform its functions.
- Integrity: The extent to which access to software or data by unauthorized persons can be controlled.
- Usability: The effort required to learn, operate, prepare input for, and interpret output of a program.
- Maintainability: The effort required to locate and fix an error in a program.

McCall’s Quality Factors (continued)
- Flexibility: The effort required to modify an operational program.
- Testability: The effort required to test a program to ensure that it performs its intended functions.
- Portability: The effort required to transfer the program from one hardware and/or software system environment to another.
- Reusability: The extent to which a program [or part of the program] can be reused in other applications.
- Interoperability: The effort required to couple one system to another.

A Comment

McCall’s quality factors were proposed in the early 1970s. They are as valid today as they were at that time. It’s likely that software built to conform to these factors will exhibit high quality well into the 21st century, even if there are dramatic changes in technology.

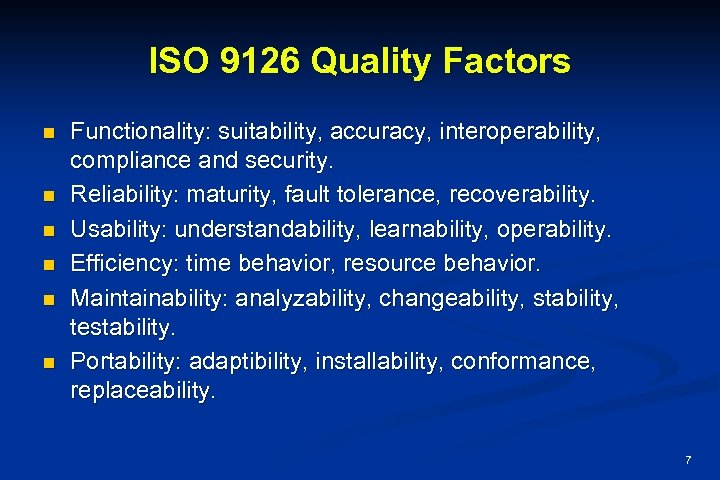

ISO 9126 Quality Factors
- Functionality: suitability, accuracy, interoperability, compliance, and security.
- Reliability: maturity, fault tolerance, recoverability.
- Usability: understandability, learnability, operability.
- Efficiency: time behavior, resource behavior.
- Maintainability: analyzability, changeability, stability, testability.
- Portability: adaptability, installability, conformance, replaceability.

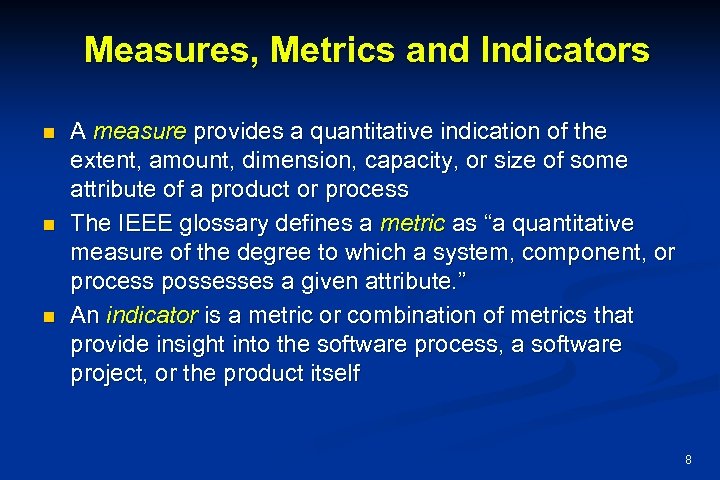

Measures, Metrics and Indicators
- A measure provides a quantitative indication of the extent, amount, dimension, capacity, or size of some attribute of a product or process.
- The IEEE glossary defines a metric as “a quantitative measure of the degree to which a system, component, or process possesses a given attribute.”
- An indicator is a metric or combination of metrics that provides insight into the software process, a software project, or the product itself.

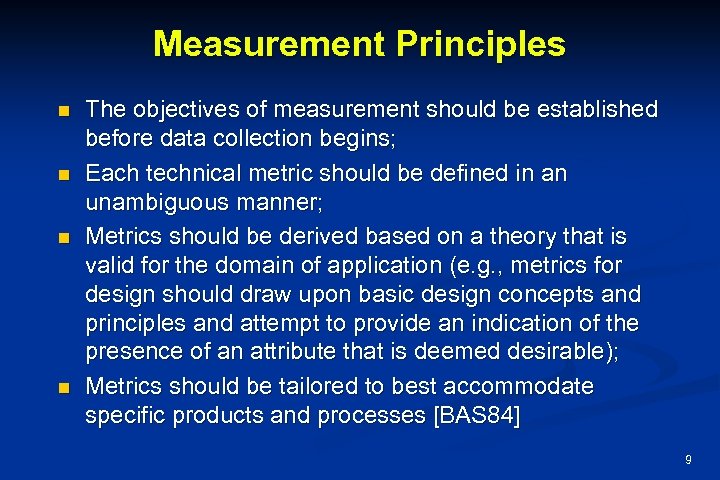

Measurement Principles
- The objectives of measurement should be established before data collection begins.
- Each technical metric should be defined in an unambiguous manner.
- Metrics should be derived based on a theory that is valid for the domain of application (e.g., metrics for design should draw upon basic design concepts and principles and attempt to provide an indication of the presence of an attribute that is deemed desirable).
- Metrics should be tailored to best accommodate specific products and processes [BAS84].

Measurement Process
- Formulation. The derivation of software measures and metrics appropriate for the representation of the software that is being considered.
- Collection. The mechanism used to accumulate data required to derive the formulated metrics.
- Analysis. The computation of metrics and the application of mathematical tools.
- Interpretation. The evaluation of metrics results in an effort to gain insight into the quality of the representation.
- Feedback. Recommendations derived from the interpretation of product metrics are transmitted to the software team.

Goal-Oriented Software Measurement
- The Goal/Question/Metric (GQM) paradigm:
  1) establish an explicit measurement goal that is specific to the process activity or product characteristic that is to be assessed;
  2) define a set of questions that must be answered in order to achieve the goal; and
  3) identify well-formulated metrics that help to answer these questions.
- Goal definition template:
  - Analyze {the name of activity or attribute to be measured}
  - for the purpose of {the overall objective of the analysis}
  - with respect to {the aspect of the activity or attribute that is considered}
  - from the viewpoint of {the people who have an interest in the measurement}
  - in the context of {the environment in which the measurement takes place}.

Metrics Attributes

An effective metric should be:
- Simple and computable. It should be relatively easy to learn how to derive the metric, and its computation should not demand inordinate effort or time.
- Empirically and intuitively persuasive. The metric should satisfy the engineer’s intuitive notions about the product attribute under consideration.
- Consistent and objective. The metric should always yield results that are unambiguous.
- Consistent in its use of units and dimensions. The mathematical computation of the metric should use measures that do not lead to bizarre combinations of units.
- Programming language independent. Metrics should be based on the analysis model, the design model, or the structure of the program itself.
- An effective mechanism for quality feedback. That is, the metric should provide a software engineer with information that can lead to a higher-quality end product.

Collection and Analysis Principles
- Whenever possible, data collection and analysis should be automated.
- Valid statistical techniques should be applied to establish relationships between internal product attributes and external quality characteristics.
- Interpretative guidelines and recommendations should be established for each metric.

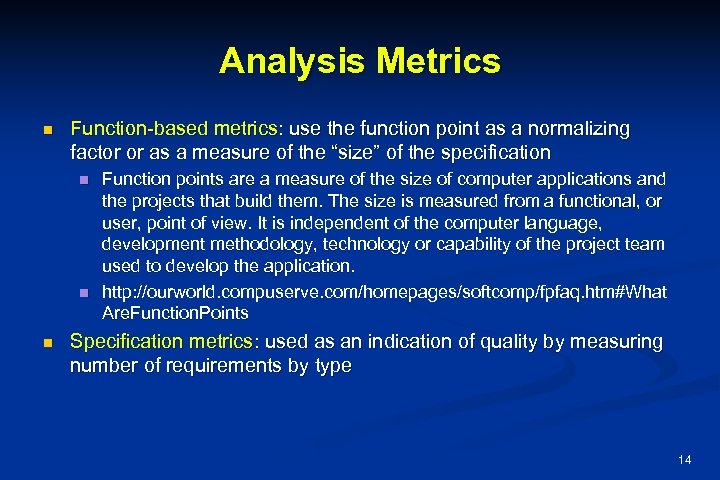

Analysis Metrics
- Function-based metrics: use the function point as a normalizing factor or as a measure of the “size” of the specification.
  - Function points are a measure of the size of computer applications and the projects that build them. The size is measured from a functional, or user, point of view. It is independent of the computer language, development methodology, technology, or capability of the project team used to develop the application. (Source: Function Point FAQ, “What Are Function Points”, http://ourworld.compuserve.com/homepages/softcomp/fpfaq.htm)
- Specification metrics: used as an indication of quality by measuring the number of requirements by type.

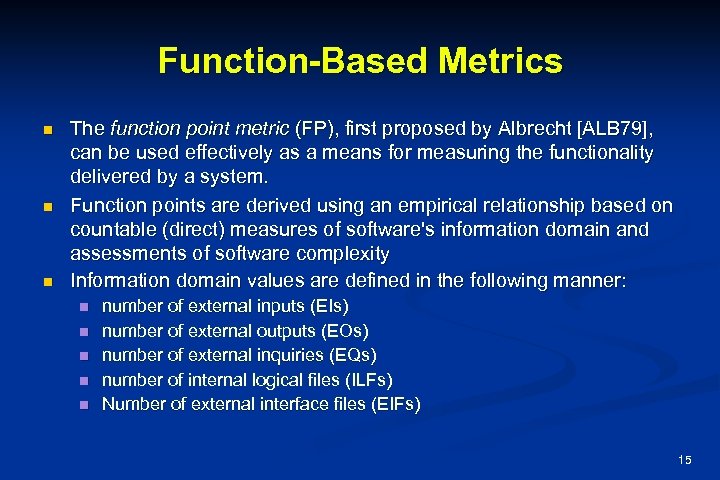

Function-Based Metrics
- The function point (FP) metric, first proposed by Albrecht [ALB79], can be used effectively as a means for measuring the functionality delivered by a system.
- Function points are derived using an empirical relationship based on countable (direct) measures of the software’s information domain and assessments of software complexity.
- Information domain values are defined in the following manner:
  - number of external inputs (EIs)
  - number of external outputs (EOs)
  - number of external inquiries (EQs)
  - number of internal logical files (ILFs)
  - number of external interface files (EIFs)

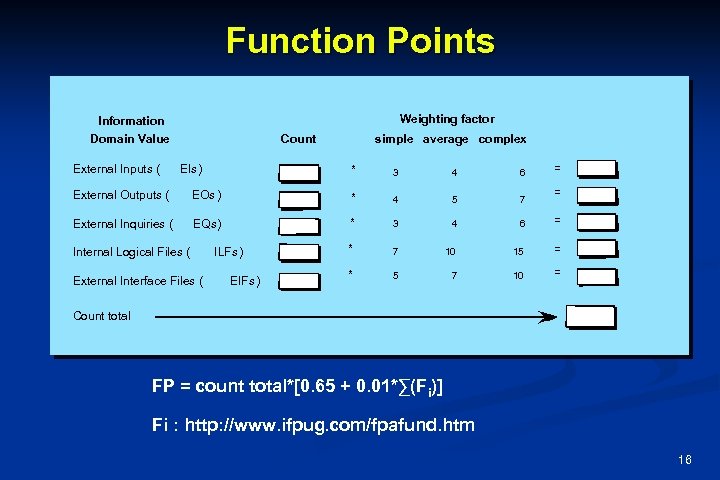

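Function Points

| Information domain value | Count | Simple | Average | Complex | Weighted count |
|---|---|---|---|---|---|
| External Inputs (EIs) | | 3 | 4 | 6 | |
| External Outputs (EOs) | | 4 | 5 | 7 | |
| External Inquiries (EQs) | | 3 | 4 | 6 | |
| Internal Logical Files (ILFs) | | 7 | 10 | 15 | |
| External Interface Files (EIFs) | | 5 | 7 | 10 | |
| Count total | | | | | |

Each weighted count is the count multiplied by the chosen weighting factor (simple, average, or complex), and the count total is the sum of the weighted counts. Then

$FP = \text{count total} \times [0.65 + 0.01 \times \sum F_i]$

where the $F_i$ ($i = 1, \dots, 14$) are the value adjustment factors, each rated 0 to 5 (see http://www.ifpug.com/fpafund.htm).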

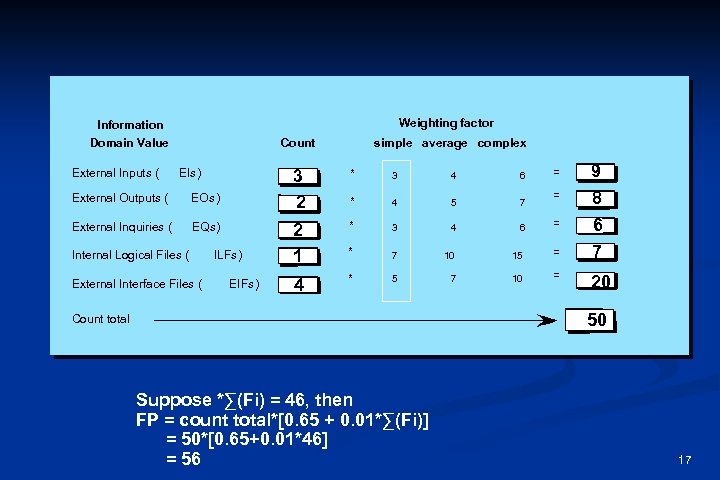

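Example (every item weighted as simple):

| Information domain value | Count | Simple | Average | Complex | Weighted count |
|---|---|---|---|---|---|
| External Inputs (EIs) | 3 | 3 | 4 | 6 | 9 |
| External Outputs (EOs) | 2 | 4 | 5 | 7 | 8 |
| External Inquiries (EQs) | 2 | 3 | 4 | 6 | 6 |
| Internal Logical Files (ILFs) | 1 | 7 | 10 | 15 | 7 |
| External Interface Files (EIFs) | 4 | 5 | 7 | 10 | 20 |
| Count total | | | | | 50 |

Suppose $\sum F_i = 46$. Then

$FP = \text{count total} \times [0.65 + 0.01 \times \sum F_i] = 50 \times [0.65 + 0.01 \times 46] = 50 \times 1.11 = 55.5 \approx 56$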
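As a rough illustration, the function point arithmetic in the worked example can be written out in Python. This is a sketch only: the names `WEIGHTS`, `count_total`, and `function_points` and the dictionary layout are hypothetical, and the check that $\sum F_i$ lies between 0 and 70 simply follows from 14 factors each rated 0 to 5.

```python
# Weighting factors (simple, average, complex) for each information domain value.
WEIGHTS = {
    "EI": (3, 4, 6),     # external inputs
    "EO": (4, 5, 7),     # external outputs
    "EQ": (3, 4, 6),     # external inquiries
    "ILF": (7, 10, 15),  # internal logical files
    "EIF": (5, 7, 10),   # external interface files
}
LEVEL = {"simple": 0, "average": 1, "complex": 2}


def count_total(counts):
    """Sum of count * weight; counts maps (domain value, level) -> count."""
    return sum(n * WEIGHTS[kind][LEVEL[level]] for (kind, level), n in counts.items())


def function_points(total, sum_fi):
    """FP = count total * [0.65 + 0.01 * sum(Fi)]."""
    # 14 adjustment factors, each rated 0..5, so their sum lies in 0..70.
    if not 0 <= sum_fi <= 70:
        raise ValueError("sum of Fi must lie between 0 and 70")
    return total * (0.65 + 0.01 * sum_fi)


# The worked example: every item is weighted as "simple".
example = {
    ("EI", "simple"): 3,
    ("EO", "simple"): 2,
    ("EQ", "simple"): 2,
    ("ILF", "simple"): 1,
    ("EIF", "simple"): 4,
}
total = count_total(example)
fp = function_points(total, 46)
print(total, round(fp, 2))  # 50 55.5
```

The unrounded result is 55.5; the slide reports it rounded to 56.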

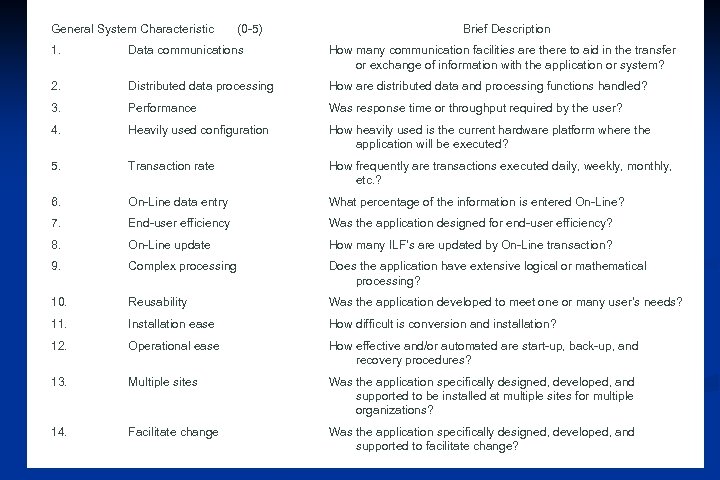

| # | General system characteristic (rated 0 to 5) | Brief description |
|---|---|---|
| 1 | Data communications | How many communication facilities are there to aid in the transfer or exchange of information with the application or system? |
| 2 | Distributed data processing | How are distributed data and processing functions handled? |
| 3 | Performance | Was response time or throughput required by the user? |
| 4 | Heavily used configuration | How heavily used is the current hardware platform where the application will be executed? |
| 5 | Transaction rate | How frequently are transactions executed (daily, weekly, monthly, etc.)? |
| 6 | On-line data entry | What percentage of the information is entered on-line? |
| 7 | End-user efficiency | Was the application designed for end-user efficiency? |
| 8 | On-line update | How many ILFs are updated by on-line transactions? |
| 9 | Complex processing | Does the application have extensive logical or mathematical processing? |
| 10 | Reusability | Was the application developed to meet one or many users’ needs? |
| 11 | Installation ease | How difficult is conversion and installation? |
| 12 | Operational ease | How effective and/or automated are start-up, back-up, and recovery procedures? |
| 13 | Multiple sites | Was the application specifically designed, developed, and supported to be installed at multiple sites for multiple organizations? |
| 14 | Facilitate change | Was the application specifically designed, developed, and supported to facilitate change? |

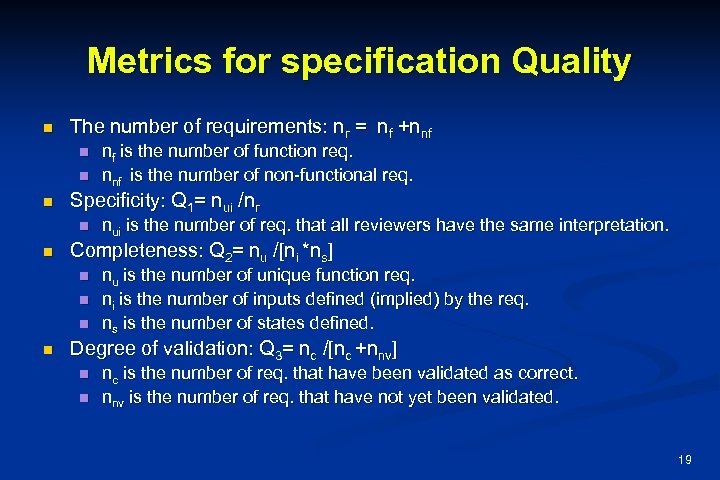

Metrics for Specification Quality
- The number of requirements: $n_r = n_f + n_{nf}$
  - $n_f$ is the number of functional requirements.
  - $n_{nf}$ is the number of non-functional requirements.
- Specificity: $Q_1 = n_{ui} / n_r$
  - $n_{ui}$ is the number of requirements for which all reviewers had the same interpretation.
- Completeness: $Q_2 = n_u / (n_i \times n_s)$
  - $n_u$ is the number of unique functional requirements.
  - $n_i$ is the number of inputs defined (or implied) by the requirements.
  - $n_s$ is the number of states defined.
- Degree of validation: $Q_3 = n_c / (n_c + n_{nv})$
  - $n_c$ is the number of requirements that have been validated as correct.
  - $n_{nv}$ is the number of requirements that have not yet been validated.
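The three specification quality ratios above are plain divisions over counts gathered during a review. A minimal sketch, assuming the counts are already available; the `SpecCounts` class, the `spec_quality` function, and the example numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SpecCounts:
    n_f: int    # functional requirements
    n_nf: int   # non-functional requirements
    n_ui: int   # requirements all reviewers interpreted the same way
    n_u: int    # unique functional requirements
    n_i: int    # inputs defined or implied by the requirements
    n_s: int    # states defined
    n_c: int    # requirements validated as correct
    n_nv: int   # requirements not yet validated


def spec_quality(c: SpecCounts):
    n_r = c.n_f + c.n_nf            # total number of requirements
    q1 = c.n_ui / n_r               # specificity
    q2 = c.n_u / (c.n_i * c.n_s)    # completeness of functional requirements
    q3 = c.n_c / (c.n_c + c.n_nv)   # degree of validation
    return n_r, q1, q2, q3


# Hypothetical counts for a small specification.
counts = SpecCounts(n_f=40, n_nf=10, n_ui=45, n_u=36, n_i=12, n_s=4, n_c=30, n_nv=20)
n_r, q1, q2, q3 = spec_quality(counts)
print(n_r, q1, q2, q3)  # 50 0.9 0.75 0.6
```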

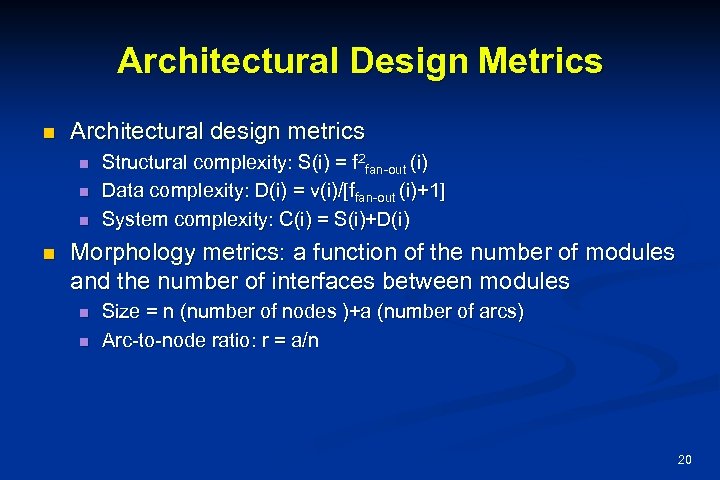

Architectural Design Metrics
- Architectural design metrics:
  - Structural complexity: $S(i) = f_{out}^2(i)$, where $f_{out}(i)$ is the fan-out of module $i$
  - Data complexity: $D(i) = v(i) / [f_{out}(i) + 1]$, where $v(i)$ is the number of input and output variables passed to and from module $i$
  - System complexity: $C(i) = S(i) + D(i)$
- Morphology metrics: a function of the number of modules and the number of interfaces between modules
  - Size $= n + a$, where $n$ is the number of nodes and $a$ is the number of arcs
  - Arc-to-node ratio: $r = a / n$
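The architectural metrics above can be computed from a module call graph. A minimal sketch, assuming the program structure is given as a dictionary from each module to the modules it calls (so fan-out is the list length) and that $v(i)$ has been counted separately; the function names, module names, and counts are hypothetical.

```python
def card_glass(calls, io_vars, module):
    """Return S(i), D(i), C(i) for one module.

    calls maps each module to the modules it invokes (len = fan-out);
    io_vars maps each module to v(i), its number of input/output variables.
    """
    fan_out = len(calls[module])
    s = fan_out ** 2                     # structural complexity
    d = io_vars[module] / (fan_out + 1)  # data complexity
    return s, d, s + d                   # system complexity C(i) = S(i) + D(i)


def morphology(calls):
    """Return (size, arc-to-node ratio); every module must appear as a key."""
    n = len(calls)                                       # nodes (modules)
    a = sum(len(callees) for callees in calls.values())  # arcs (calls)
    return n + a, a / n


# Hypothetical five-module program.
calls = {
    "main": ["read", "process", "write"],
    "read": [],
    "process": ["validate"],
    "write": [],
    "validate": [],
}
io_vars = {"main": 2, "read": 3, "process": 4, "write": 2, "validate": 3}

print(card_glass(calls, io_vars, "main"))     # (9, 0.5, 9.5)
print(card_glass(calls, io_vars, "process"))  # (1, 2.0, 3.0)
print(morphology(calls))                      # (9, 0.8)
```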


Metrics for OO Design-I
- Whitmire [WHI97] describes nine distinct and measurable characteristics of an OO design:
  - Size: defined in terms of four views: population, volume, length, and functionality.
  - Complexity: how classes of an OO design are interrelated to one another.
  - Coupling: the physical connections between elements of the OO design.
  - Sufficiency: “the degree to which an abstraction possesses the features required of it, or the degree to which a design component possesses features in its abstraction, from the point of view of the current application.”
  - Completeness: an indirect implication about the degree to which the abstraction or design component can be reused.

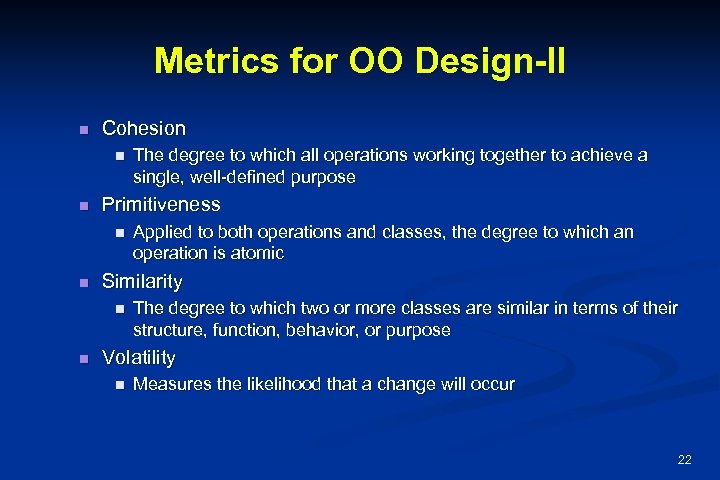

Metrics for OO Design-II
- Cohesion: the degree to which all operations work together to achieve a single, well-defined purpose.
- Primitiveness: applied to both operations and classes, the degree to which an operation is atomic.
- Similarity: the degree to which two or more classes are similar in terms of their structure, function, behavior, or purpose.
- Volatility: measures the likelihood that a change will occur.


Distinguishing Characteristics

Berard [BER95] argues that the following characteristics require that special OO metrics be developed:
- Localization: the way in which information is concentrated in a program.
- Encapsulation: the packaging of data and processing.
- Information hiding: the way in which information about operational details is hidden by a secure interface.
- Inheritance: the manner in which the responsibilities of one class are propagated to another.
- Abstraction: the mechanism that allows a design to focus on essential details.

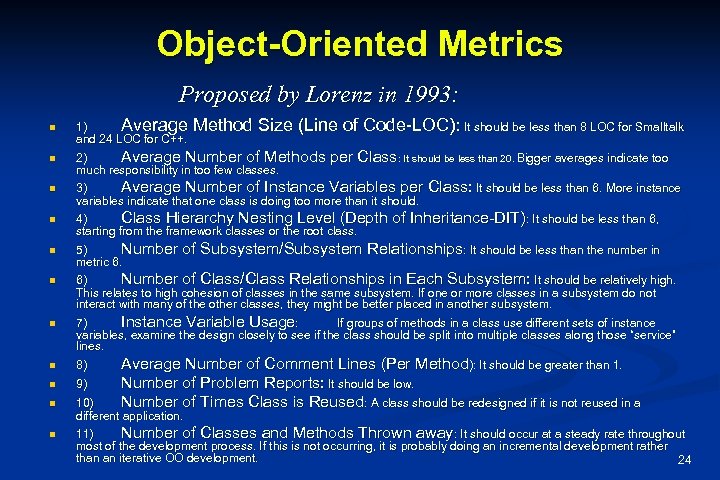

Object-Oriented Metrics Proposed by Lorenz in 1993:
1) Average Method Size (lines of code, LOC): It should be less than 8 LOC for Smalltalk and 24 LOC for C++.
2) Average Number of Methods per Class: It should be less than 20. Bigger averages indicate too much responsibility in too few classes.
3) Average Number of Instance Variables per Class: It should be less than 6. More instance variables indicate that one class is doing more than it should.
4) Class Hierarchy Nesting Level (depth of inheritance, DIT): It should be less than 6, starting from the framework classes or the root class.
5) Number of Subsystem/Subsystem Relationships: It should be less than the number in metric 6.
6) Number of Class/Class Relationships in Each Subsystem: It should be relatively high. This relates to high cohesion of classes in the same subsystem. If one or more classes in a subsystem do not interact with many of the other classes, they might be better placed in another subsystem.
7) Instance Variable Usage: If groups of methods in a class use different sets of instance variables, examine the design closely to see if the class should be split into multiple classes along those “service” lines.
8) Average Number of Comment Lines (per Method): It should be greater than 1.
9) Number of Problem Reports: It should be low.
10) Number of Times Class Is Reused: A class should be redesigned if it is not reused in a different application.
11) Number of Classes and Methods Thrown Away: This should occur at a steady rate throughout most of the development process. If it is not occurring, the project is probably doing incremental development rather than iterative OO development.
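The numeric thresholds in Lorenz’s list (metrics 1 to 4 and 8) lend themselves to an automated check. A minimal sketch, assuming the project averages have already been measured; the `ProjectAverages` class, the `lorenz_warnings` function, and the example values are hypothetical, and only the threshold values come from the list.

```python
from dataclasses import dataclass


@dataclass
class ProjectAverages:
    language: str                    # "smalltalk" or "c++"
    method_loc: float                # metric 1: average method size
    methods_per_class: float         # metric 2
    instance_vars_per_class: float   # metric 3
    nesting_level: int               # metric 4: class hierarchy nesting level
    comment_lines_per_method: float  # metric 8


def lorenz_warnings(p: ProjectAverages):
    """Return the names of the Lorenz thresholds that p does not meet."""
    method_limit = {"smalltalk": 8, "c++": 24}[p.language]
    checks = [
        ("average method size", p.method_loc < method_limit),
        ("methods per class", p.methods_per_class < 20),
        ("instance variables per class", p.instance_vars_per_class < 6),
        ("class hierarchy nesting level", p.nesting_level < 6),
        ("comment lines per method", p.comment_lines_per_method > 1),
    ]
    return [name for name, ok in checks if not ok]


# Hypothetical C++ project averages.
project = ProjectAverages("c++", method_loc=30, methods_per_class=12,
                          instance_vars_per_class=4, nesting_level=3,
                          comment_lines_per_method=2)
print(lorenz_warnings(project))  # ['average method size']
```

Such a check only flags candidates for review; Lorenz presents these values as heuristics, not hard limits.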

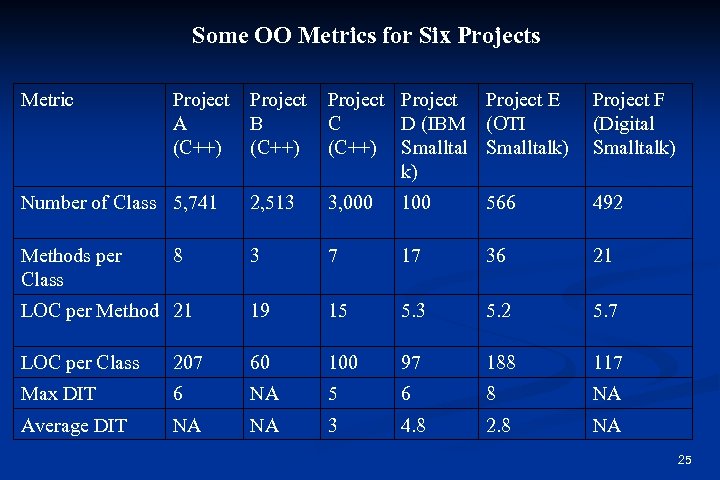

Some OO Metrics for Six Projects

| Metric | Project A (C++) | Project B (C++) | Project C (C++) | Project D (IBM Smalltalk) | Project E (OTI Smalltalk) | Project F (Digital Smalltalk) |
|---|---|---|---|---|---|---|
| Number of classes | 5,741 | 2,513 | 3,000 | 100 | 566 | 492 |
| Methods per class | | 3 | 7 | 17 | 36 | 21 |
| LOC per method | 21 | 19 | 15 | 5.3 | 5.2 | 5.7 |
| LOC per class | 207 | 60 | 100 | 97 | 188 | 117 |
| Max DIT | 6 | NA | 5 | 6 | 8 | NA |
| Average DIT | NA | NA | 3 | 4.8 | 2.8 | NA |

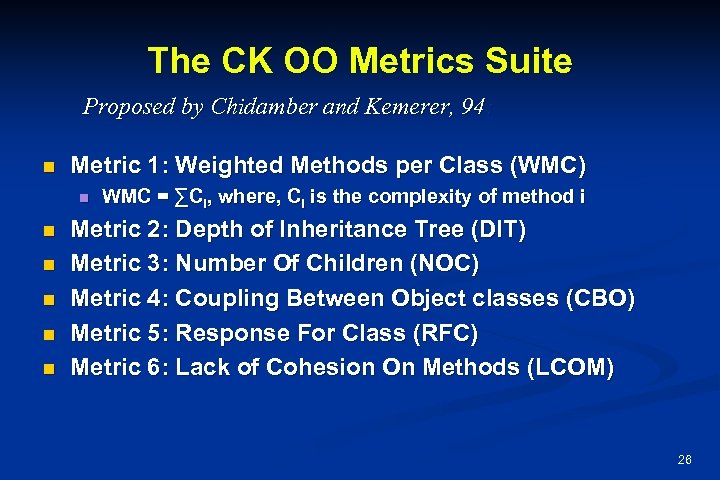

The CK OO Metrics Suite

Proposed by Chidamber and Kemerer, 1994:
- Metric 1: Weighted Methods per Class (WMC)
  - $WMC = \sum_{i=1}^{n} C_i$, where $C_i$ is the complexity of method $i$ and $n$ is the number of methods in the class
- Metric 2: Depth of Inheritance Tree (DIT)
- Metric 3: Number of Children (NOC)
- Metric 4: Coupling Between Object Classes (CBO)
- Metric 5: Response For a Class (RFC)
- Metric 6: Lack of Cohesion in Methods (LCOM)
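Four of the six CK metrics can be computed from a simple model of a class hierarchy. This is a minimal sketch under stated assumptions: single inheritance given as a child-to-parent dictionary, DIT counted so that a root class has depth 0, per-method complexities supplied by hand, and LCOM in the Chidamber and Kemerer form ($P - Q$ if $P > Q$, else 0, where $P$ counts method pairs sharing no instance variables and $Q$ counts pairs sharing at least one). All class, method, and function names are hypothetical; CBO and RFC are omitted because they need call and reference information.

```python
from itertools import combinations


def wmc(method_complexities):
    """WMC = sum of C_i over the methods of a class."""
    return sum(method_complexities.values())


def dit(cls, parent):
    """Depth of inheritance: number of ancestors up to the root (root = 0)."""
    depth = 0
    while parent[cls] is not None:
        cls = parent[cls]
        depth += 1
    return depth


def noc(cls, parent):
    """Number of children: immediate subclasses of cls."""
    return sum(1 for p in parent.values() if p == cls)


def lcom(method_attrs):
    """LCOM = P - Q if P > Q else 0 (pairs of methods and their attribute sets)."""
    p = q = 0
    for a, b in combinations(method_attrs.values(), 2):
        if a & b:
            q += 1  # the pair shares at least one instance variable
        else:
            p += 1  # the pair shares none
    return p - q if p > q else 0


# Hypothetical single-inheritance hierarchy: class -> parent (None = root).
parent = {"Shape": None, "Circle": "Shape", "Polygon": "Shape", "Square": "Polygon"}
# Hypothetical methods of Polygon and the instance variables each one uses.
polygon_attrs = {
    "area": {"points"},
    "perimeter": {"points"},
    "set_color": {"color"},
    "get_color": {"color"},
}
polygon_complexity = {"area": 3, "perimeter": 2, "set_color": 1, "get_color": 1}

print(wmc(polygon_complexity))                      # 7
print(dit("Square", parent), noc("Shape", parent))  # 2 2
print(lcom(polygon_attrs))                          # 2
```

Polygon’s two method groups (geometry and colour) share no instance variables, which is what drives LCOM above zero.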

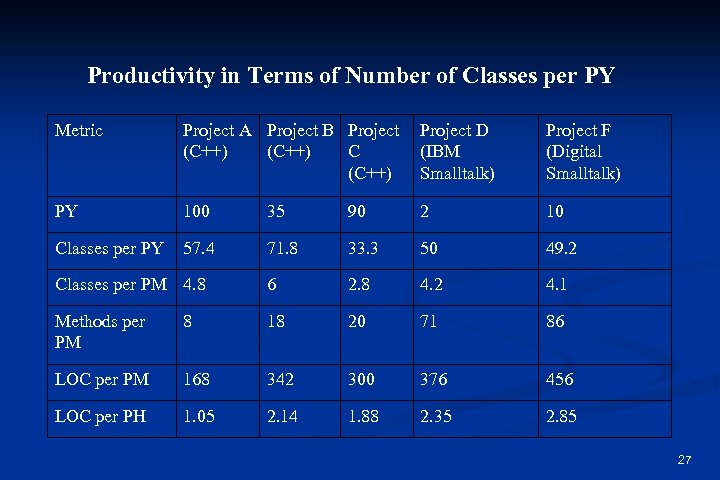

Productivity in Terms of Number of Classes per PY

PY = person-years, PM = person-months, PH = person-hours.

| Metric | Project A (C++) | Project B (C++) | Project C (C++) | Project D (IBM Smalltalk) | Project F (Digital Smalltalk) |
|---|---|---|---|---|---|
| PY | 100 | 35 | 90 | 2 | 10 |
| Classes per PY | 57.4 | 71.8 | 33.3 | 50 | 49.2 |
| Classes per PM | 4.8 | 6 | 2.8 | 4.2 | 4.1 |
| Methods per PM | 8 | 18 | 20 | 71 | 86 |
| LOC per PM | 168 | 342 | 300 | 376 | 456 |
| LOC per PH | 1.05 | 2.14 | 1.88 | 2.35 | 2.85 |
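The productivity rows are derived from the class counts, effort, and size figures of the two tables. A minimal sketch of that derivation, assuming 12 person-months per person-year and 160 person-hours per person-month (values inferred from the ratios in the table, not stated on the slide); the `productivity` function is hypothetical.

```python
PM_PER_PY = 12   # assumed: 12 person-months per person-year
PH_PER_PM = 160  # assumed: 160 person-hours per person-month


def productivity(classes, person_years, methods_per_class, loc_per_method):
    """Derive the productivity rows from size and effort figures."""
    classes_per_py = classes / person_years
    classes_per_pm = classes_per_py / PM_PER_PY
    methods_per_pm = classes_per_pm * methods_per_class
    loc_per_pm = methods_per_pm * loc_per_method
    return {
        "classes_per_py": classes_per_py,
        "classes_per_pm": classes_per_pm,
        "methods_per_pm": methods_per_pm,
        "loc_per_pm": loc_per_pm,
        "loc_per_ph": loc_per_pm / PH_PER_PM,
    }


# Project B (C++): 2,513 classes, 35 PY, 3 methods per class, 19 LOC per method.
for name, value in productivity(2513, 35, 3, 19).items():
    print(f"{name}: {value:.2f}")
```

For Project B this gives about 71.8 classes per PY, 6.0 classes per PM, 18 methods per PM, 341 LOC per PM, and 2.13 LOC per PH, which agrees with the table’s 71.8, 6, 18, 342, and 2.14 to within rounding.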

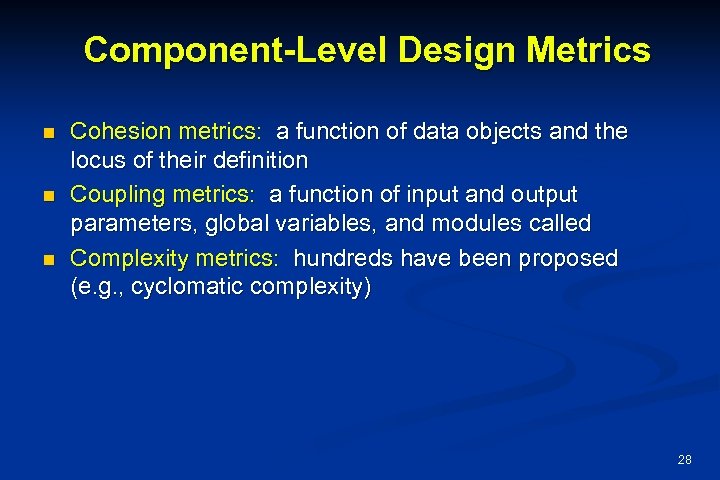

Component-Level Design Metrics
- Cohesion metrics: a function of data objects and the locus of their definition.
- Coupling metrics: a function of input and output parameters, global variables, and modules called.
- Complexity metrics: hundreds have been proposed (e.g., cyclomatic complexity).
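Cyclomatic complexity, the example complexity metric named above, is computed from a component’s flow graph as $V(G) = E - N + 2$ for a single connected graph with $E$ edges and $N$ nodes. A minimal sketch, assuming the flow graph is given as a dictionary from each node to its successors; the function name and the example graph are hypothetical.

```python
def cyclomatic_complexity(flow_graph):
    """V(G) = E - N + 2 for a single connected flow graph.

    flow_graph maps each node to the list of nodes it can transfer control to.
    """
    nodes = set(flow_graph)
    for successors in flow_graph.values():
        nodes.update(successors)
    edges = sum(len(successors) for successors in flow_graph.values())
    return edges - len(nodes) + 2


# Hypothetical flow graph: a while loop (node 2) containing an if/else (node 3).
graph = {
    1: [2],
    2: [3, 7],   # loop test: enter the body or exit
    3: [4, 5],   # if/else
    4: [6],
    5: [6],
    6: [2],      # back to the loop test
    7: [],       # exit
}
print(cyclomatic_complexity(graph))  # 3
```

The result matches the shortcut of counting binary decisions plus one: the loop test and the if/else give 2 + 1 = 3.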

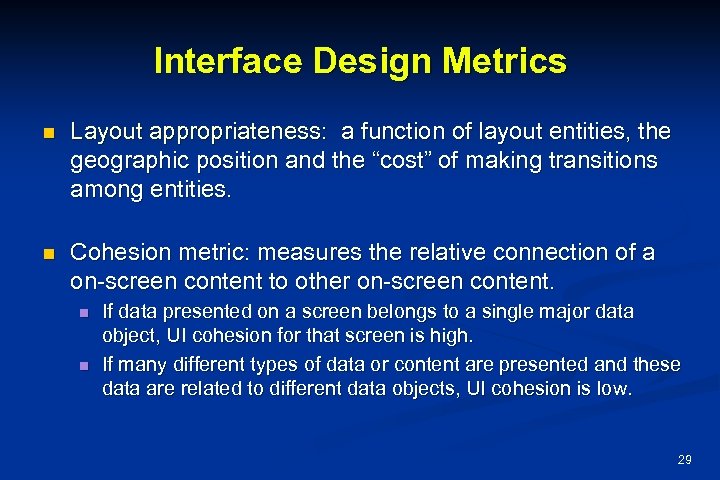

Interface Design Metrics
- Layout appropriateness: a function of layout entities, their geographic position, and the “cost” of making transitions among entities.
- Cohesion metric: measures the relative connection of on-screen content to other on-screen content.
  - If data presented on a screen belongs to a single major data object, UI cohesion for that screen is high.
  - If many different types of data or content are presented and these data are related to different data objects, UI cohesion is low.

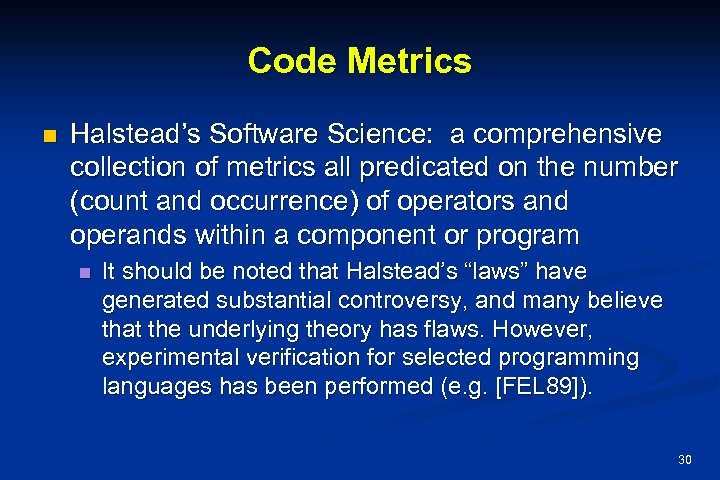

Code Metrics
- Halstead’s Software Science: a comprehensive collection of metrics all predicated on the number (count and occurrence) of operators and operands within a component or program.
  - It should be noted that Halstead’s “laws” have generated substantial controversy, and many believe that the underlying theory has flaws. However, experimental verification for selected programming languages has been performed (e.g., [FEL89]).

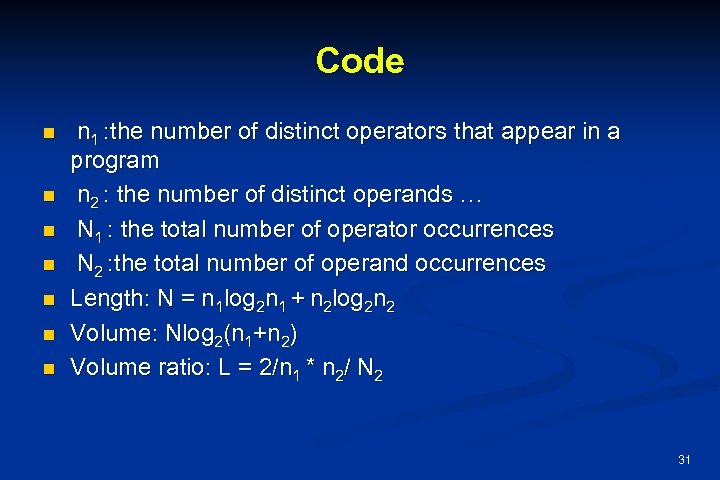

Code
- $n_1$: the number of distinct operators that appear in a program
- $n_2$: the number of distinct operands …
- $N_1$: the total number of operator occurrences
- $N_2$: the total number of operand occurrences
- Length: $N = n_1 \log_2 n_1 + n_2 \log_2 n_2$
- Volume: $V = N \log_2 (n_1 + n_2)$
- Volume ratio: $L = \frac{2}{n_1} \times \frac{n_2}{N_2}$
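The Halstead measures above follow directly from four token counts. A minimal sketch, assuming the program’s tokens have already been classified into operators and operands (classification rules vary by language and are not covered here) and that there is at least one of each. The slide’s $N$ is Halstead’s estimated length; Halstead’s original volume uses the observed length $N_1 + N_2$, so the sketch returns both volumes. The function name and the example tokens are hypothetical.

```python
import math


def halstead(operators, operands):
    """Halstead measures from pre-classified operator and operand tokens."""
    n1, n2 = len(set(operators)), len(set(operands))  # distinct counts
    N1, N2 = len(operators), len(operands)            # total occurrences
    length = n1 * math.log2(n1) + n2 * math.log2(n2)  # N as defined above
    bits = math.log2(n1 + n2)                         # log2 of the vocabulary
    return {
        "n1": n1, "n2": n2, "N1": N1, "N2": N2,
        "length": length,
        "volume": length * bits,               # V = N log2(n1 + n2)
        "volume_observed": (N1 + N2) * bits,   # same, with N = N1 + N2
        "volume_ratio": 2 * n2 / (n1 * N2),    # L = (2 / n1) * (n2 / N2)
    }


# Hypothetical tokens for the statement  a = b + b * c
ops = ["=", "+", "*"]
opnds = ["a", "b", "b", "c"]
m = halstead(ops, opnds)
print(m["n1"], m["n2"], m["N1"], m["N2"], m["volume_ratio"])  # 3 3 3 4 0.5
```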

Metrics for Testing
- Testing effort can also be estimated using metrics derived from Halstead measures.
- Binder [BIN94] suggests a broad array of design metrics that have a direct influence on the “testability” of an OO system:
  - Lack of cohesion in methods (LCOM)
  - Percent public and protected (PAP)
  - Public access to data members (PAD)
  - Number of root classes (NOR)
  - Fan-in (FIN) (multiple inheritance)
  - Number of children (NOC) and depth of the inheritance tree (DIT)

Metrics for Maintenance
- The number of modules:
  - $M_T$: number of modules in the current release
  - $F_c$: number of modules in the current release that have been changed
  - $F_a$: number of modules in the current release that have been added
  - $F_d$: number of modules from the preceding release that have been deleted in the current release
- Software maturity index: $SMI = [M_T - (F_c + F_a + F_d)] / M_T$
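The software maturity index is a single ratio over the module counts defined above. A minimal sketch; the function name and the release figures are hypothetical.

```python
def software_maturity_index(m_t, f_c, f_a, f_d):
    """SMI = [M_T - (F_c + F_a + F_d)] / M_T."""
    if m_t <= 0:
        raise ValueError("M_T must be positive")
    return (m_t - (f_c + f_a + f_d)) / m_t


# Hypothetical release: 120 modules, 10 changed, 6 added, 2 deleted.
print(software_maturity_index(120, 10, 6, 2))  # 0.85
```

As SMI approaches 1.0, the product begins to stabilize.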

Summary
- Software Quality
- Measure, Measurement, and Metrics
- Metrics for Analysis
- Metrics for Design
- Metrics for Source Code
- Metrics for Testing
- Metrics for Maintenance
